Visual Object Tracking Based on the Motion Prediction and Block Search in UAV Videos

Abstract

1. Introduction

- (1) We introduce three evaluation metrics, the average peak-to-correlation energy (APCE), SCR, and TS, which assess the tracking result of each frame. Together, these metrics identify the tracking status and feed this information back to the dynamic template-updating network (DTUN) when tracking subsequent frames. The DTUN adjusts its template strategy according to the tracking status, which allows the tracker to adapt to changes in both the object and the tracking scenario.

- (2) We propose a motion prediction and block search module. When tracking drift occurs under challenging conditions (e.g., occlusion or the object moving out of view), the module first predicts the motion state of the object with a Kalman filter and then uses block search to relocate the object. This module is particularly effective at correcting tracking drift in footage captured from the UAV perspective.

- (3)

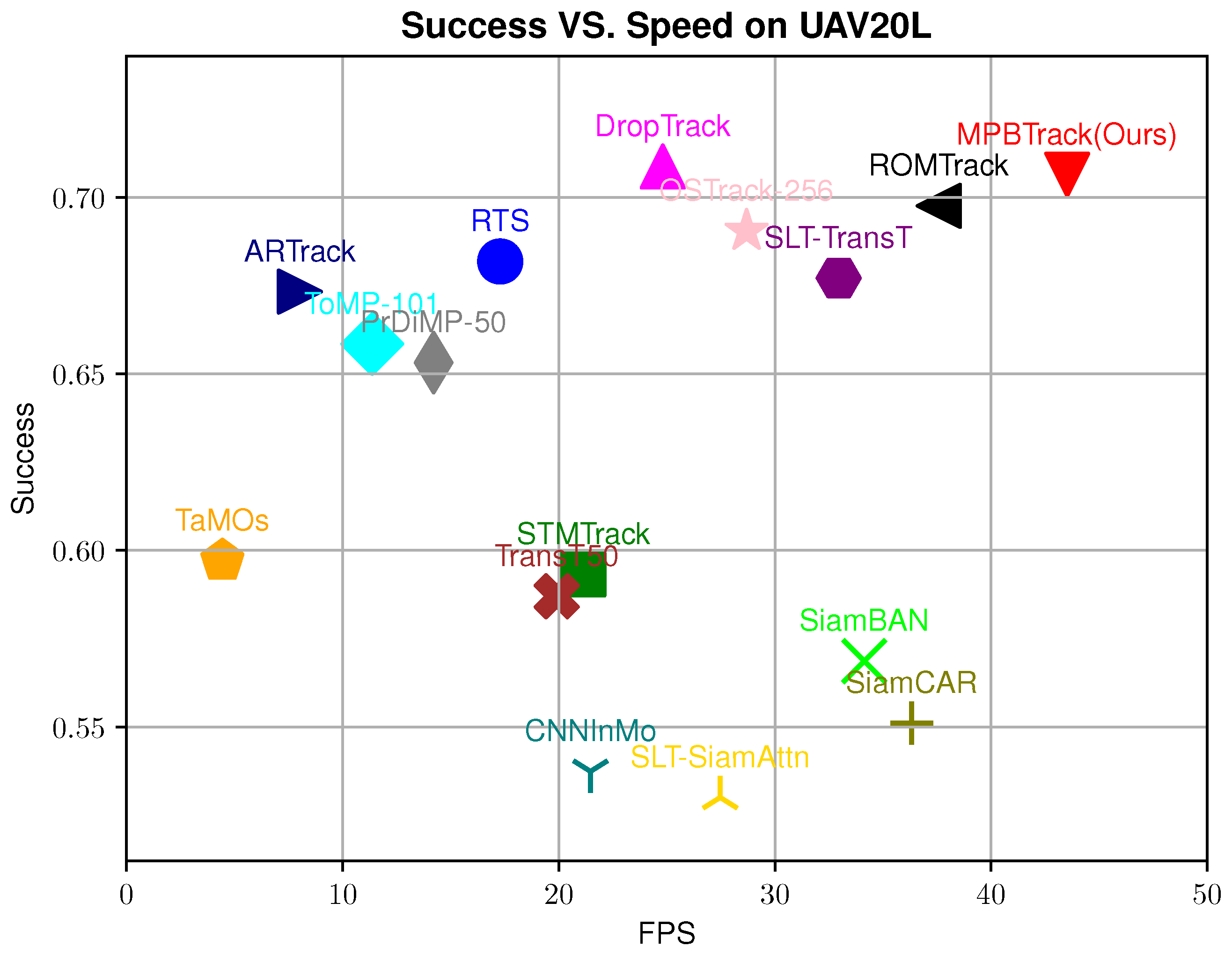

- Our proposed algorithm achieves significant performance improvement on five aerial datasets, UAV20L [15], UAV123 [15], UAVDT [16], DTB70 [17], and VisDrone2018-SOT [18]. In particular, on the long-term tracking dataset UAV20L, our method achieves 19.1% and 20.8% increase in success and precision, respectively, compared to the baseline method, and achieves 43 FPS real-time speed.
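The first contribution relies on per-frame confidence scores. Of the three metrics, only APCE has a standard closed form (introduced by Wang et al. for correlation-filter tracking): $\mathrm{APCE} = |F_{\max} - F_{\min}|^2 / \operatorname{mean}_{w,h}\big((F_{w,h} - F_{\min})^2\big)$, where $F$ is the response map. SCR and TS, and the way the three metrics are combined, are not defined in this text, so the minimal sketch below computes only APCE and pairs it with a hypothetical reliability rule (current APCE compared with a fraction of its running mean). The function names and the `ratio` value are illustrative assumptions, not the authors' settings.

```python
import numpy as np
from typing import Sequence


def apce(response: np.ndarray) -> float:
    """Average peak-to-correlation energy of a 2-D response map.

    APCE = |F_max - F_min|^2 / mean((F - F_min)^2).
    A single sharp peak scores high; a flat or multi-peaked map scores low.
    """
    f_max = float(response.max())
    f_min = float(response.min())
    energy = float(np.mean((response - f_min) ** 2))
    if energy == 0.0:  # constant map: there is no peak at all
        return 0.0
    return (f_max - f_min) ** 2 / energy


def is_reliable(current: float, history: Sequence[float], ratio: float = 0.5) -> bool:
    """Hypothetical rule (assumption): trust the frame if its APCE reaches
    `ratio` times the mean APCE of previously trusted frames."""
    if len(history) == 0:
        return True  # nothing to compare against yet
    return current >= ratio * float(np.mean(history))
```

For a 5×5 map with a single unit peak on a zero background, `apce` returns approximately 25; adding a second, equally high peak halves it to approximately 12.5, which is the kind of drop that would flag a distractor or occlusion.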

2. Related Work

2.1. Object Tracking Algorithm

2.2. Tracking Algorithms in the UAV

3. Proposed Method

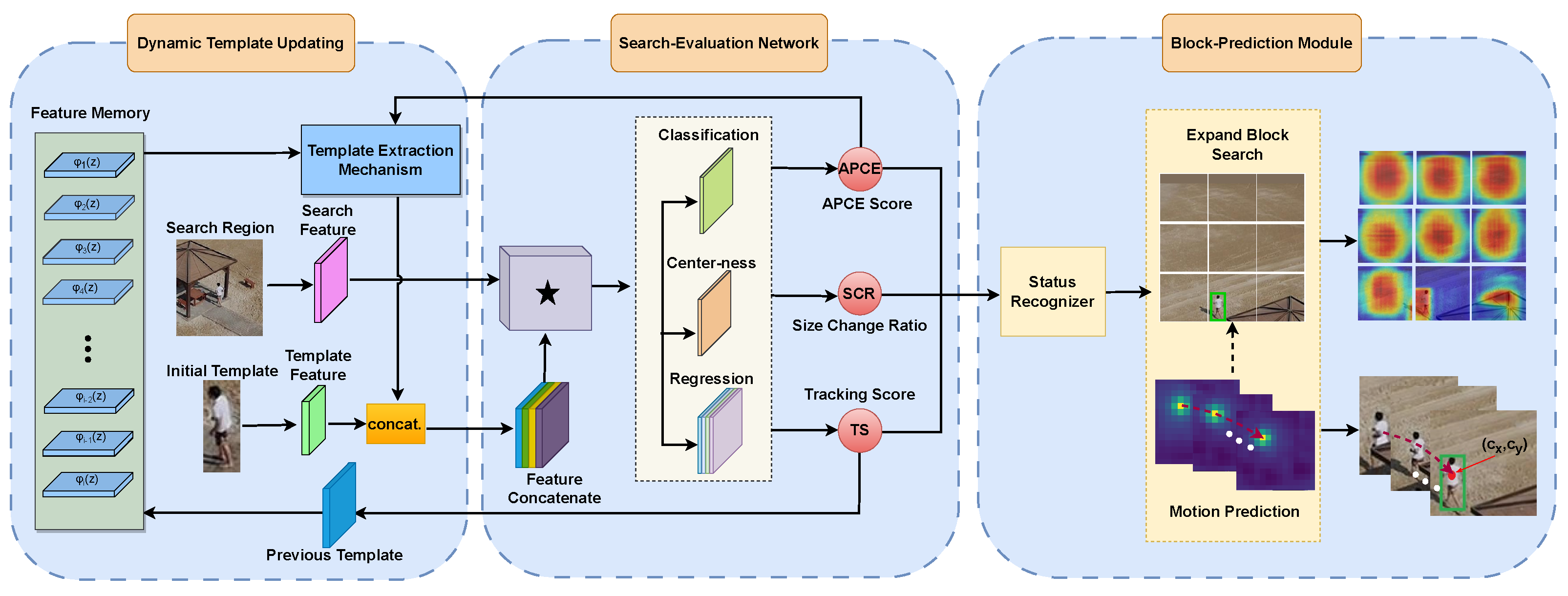

3.1. Overall Framework

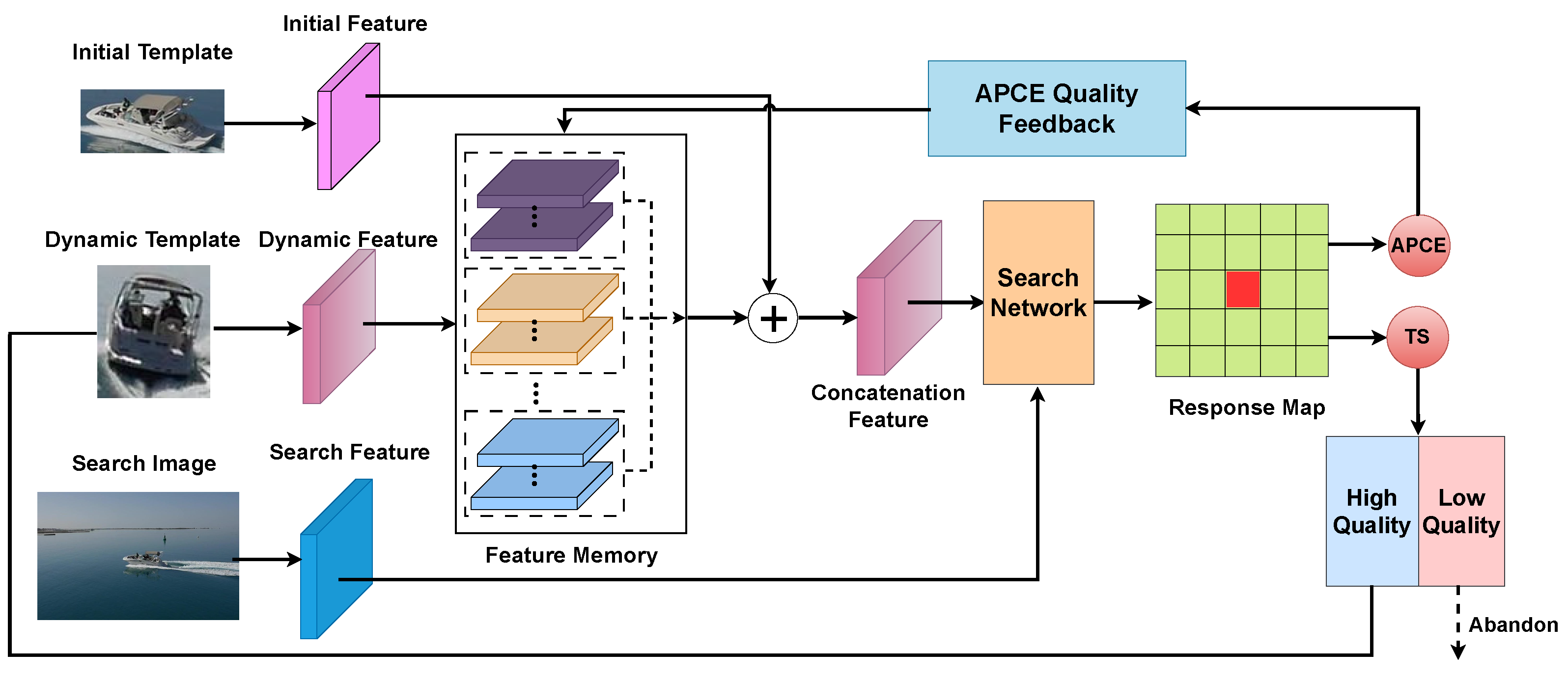

3.2. Dynamic Template Updating Network

3.3. Search–Evaluation Network

3.3.1. Search Subnetwork

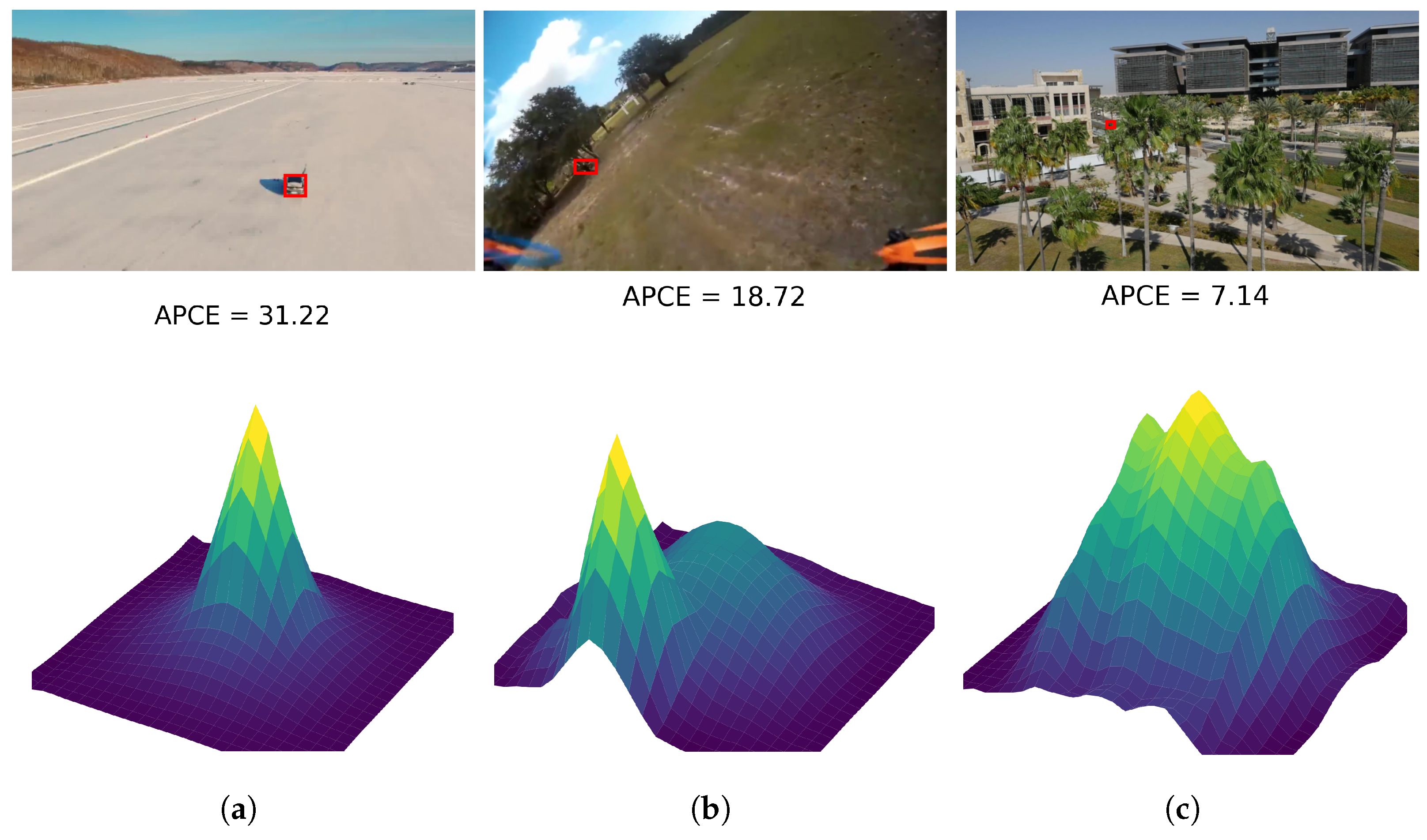

3.3.2. Evaluation Network

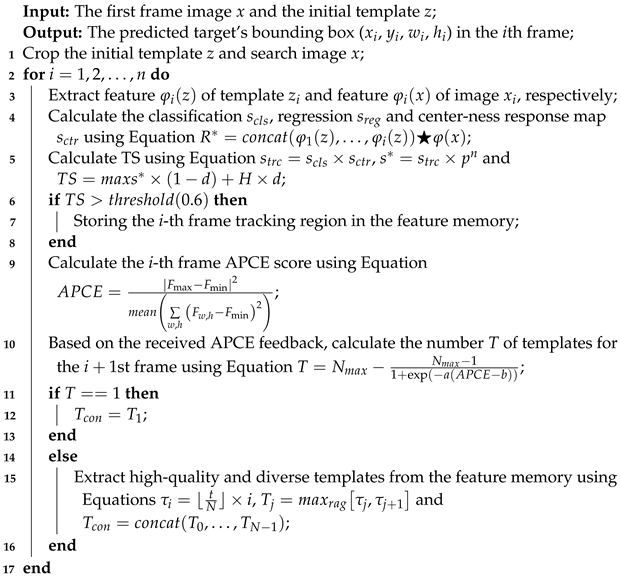

Algorithm 1: Dynamic template-updating network.

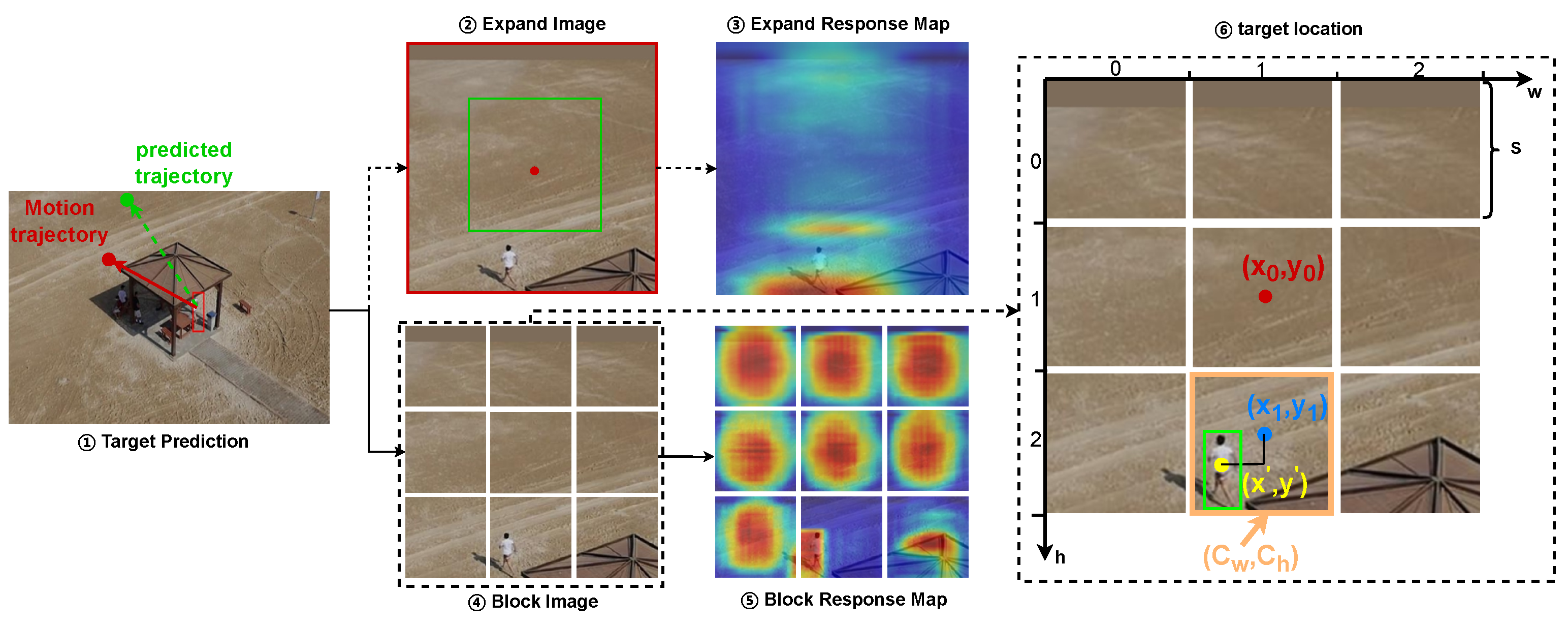

3.4. Block-Prediction Module

3.4.1. Motion Prediction
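The contributions state that, when drift occurs, the object's motion state is first predicted with a Kalman filter. A minimal sketch, assuming a constant-velocity model on the box centre with state $(c_x, c_y, v_x, v_y)$ and hand-picked noise levels `q` and `r`; the paper's actual state vector and covariances are not given here, and the class name `CenterKalman` is hypothetical.

```python
import numpy as np


class CenterKalman:
    """Constant-velocity Kalman filter on the box centre (assumed model).

    State: [cx, cy, vx, vy]; measurement: [cx, cy]; one step = one frame.
    """

    def __init__(self, cx: float, cy: float, q: float = 1e-2, r: float = 1.0) -> None:
        self.x = np.array([cx, cy, 0.0, 0.0])          # start with zero velocity
        self.P = np.eye(4) * 10.0                        # large initial uncertainty
        self.F = np.array([[1.0, 0.0, 1.0, 0.0],         # cx += vx
                           [0.0, 1.0, 0.0, 1.0],         # cy += vy
                           [0.0, 0.0, 1.0, 0.0],
                           [0.0, 0.0, 0.0, 1.0]])
        self.H = np.array([[1.0, 0.0, 0.0, 0.0],         # only the centre is observed
                           [0.0, 1.0, 0.0, 0.0]])
        self.Q = np.eye(4) * q                           # process noise (assumed)
        self.R = np.eye(2) * r                           # measurement noise (assumed)

    def predict(self) -> np.ndarray:
        """Propagate one frame ahead and return the predicted centre."""
        self.x = self.F @ self.x
        self.P = self.F @ self.P @ self.F.T + self.Q
        return self.x[:2].copy()

    def update(self, cx: float, cy: float) -> None:
        """Correct the state with a trusted centre measurement."""
        z = np.array([cx, cy])
        y = z - self.H @ self.x                          # innovation
        S = self.H @ self.P @ self.H.T + self.R          # innovation covariance
        K = self.P @ self.H.T @ np.linalg.inv(S)         # Kalman gain
        self.x = self.x + K @ y
        self.P = (np.eye(4) - K @ self.H) @ self.P
```

While the tracker is judged reliable, each frame calls `predict()` followed by `update()` with the tracked centre; during occlusion or out-of-view periods, calling `predict()` alone extrapolates the last observed motion and supplies a centre around which the object can be searched for.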

3.4.2. Block Search
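The contributions describe block search only as the step that relocates the object after motion prediction. One plausible reading, sketched here purely as an assumption, is to tile a grid of search windows around the predicted centre, score each window with the tracker, and accept the best window only if its score clears a threshold. `make_blocks`, `block_search`, the `grid` size, and the scoring callable are all hypothetical.

```python
from typing import Callable, List, Optional, Tuple

Box = Tuple[int, int, int, int]  # (x0, y0, width, height) in pixels


def make_blocks(cx: float, cy: float, win: int, img_w: int, img_h: int, grid: int = 3) -> List[Box]:
    """Tile a grid x grid neighbourhood of win x win windows centred on (cx, cy).

    Windows are shifted to lie inside the image, duplicates created by that
    clipping are dropped, and the result is ordered nearest-first.
    """
    half = grid // 2
    w, h = min(win, img_w), min(win, img_h)
    blocks: List[Box] = []
    for gy in range(-half, half + 1):
        for gx in range(-half, half + 1):
            x0 = int(round(cx + gx * win - win / 2))
            y0 = int(round(cy + gy * win - win / 2))
            x0 = min(max(x0, 0), img_w - w)      # keep the window inside the image
            y0 = min(max(y0, 0), img_h - h)
            blocks.append((x0, y0, w, h))
    unique = list(dict.fromkeys(blocks))         # order-preserving de-duplication
    unique.sort(key=lambda b: (b[0] + b[2] / 2 - cx) ** 2 + (b[1] + b[3] / 2 - cy) ** 2)
    return unique


def block_search(blocks: List[Box], score: Callable[[Box], float],
                 threshold: float) -> Optional[Tuple[Box, float]]:
    """Score every block; return (best_block, score) if it clears `threshold`, else None."""
    best: Optional[Tuple[Box, float]] = None
    for b in blocks:
        s = score(b)
        if best is None or s > best[1]:
            best = (b, s)
    if best is not None and best[1] >= threshold:
        return best
    return None
```

Ordering blocks nearest-first means that, if scoring were made to stop early, the windows closest to the motion prediction would be examined before distant ones; the threshold keeps the search from latching onto a weak distractor when the object is still out of view.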

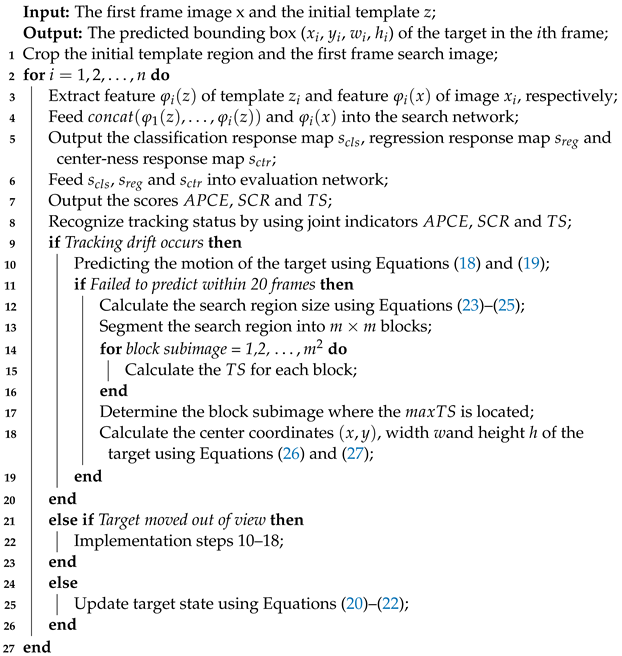

Algorithm 2: The proposed MPBTrack algorithm.
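The control flow outlined in the contributions can be summarized as a hypothetical skeleton: track each frame, judge whether the result is reliable, fall back to motion prediction plus block search when it is not, and refresh the template at a fixed frame interval (the interval ablation reports its best UAV20L and VisDrone2018-SOT results at 20 frames). Every callable below is a placeholder that a real tracker would supply; this is a sketch of the wiring under those assumptions, not the authors' implementation.

```python
from typing import Callable, List, Optional, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x, y, w, h)
Point = Tuple[float, float]


def track_sequence(
    frames: Sequence[object],
    track: Callable[[object], Tuple[Box, bool]],              # box + reliability flag
    predict: Callable[[], Point],                             # e.g. Kalman prediction
    correct: Callable[[Box], None],                           # e.g. Kalman update
    block_search: Callable[[object, Point], Optional[Box]],   # relocation attempt
    update_template: Callable[[object, Box], None],
    interval: int = 20,
) -> List[Optional[Box]]:
    """Hypothetical MPBTrack-style loop; None marks frames where the object is lost."""
    results: List[Optional[Box]] = []
    for idx, frame in enumerate(frames):
        centre = predict()                    # motion prior for this frame
        box, reliable = track(frame)          # regular tracking plus status check
        if not reliable:
            found = block_search(frame, centre)   # try to relocate the object
            if found is not None:
                box, reliable = found, True
        if reliable:
            correct(box)                      # feed the trusted position to the motion model
            if (idx + 1) % interval == 0:     # periodic template refresh
                update_template(frame, box)
            results.append(box)
        else:
            results.append(None)
    return results
```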

4. Experiments

4.1. Experimental Details

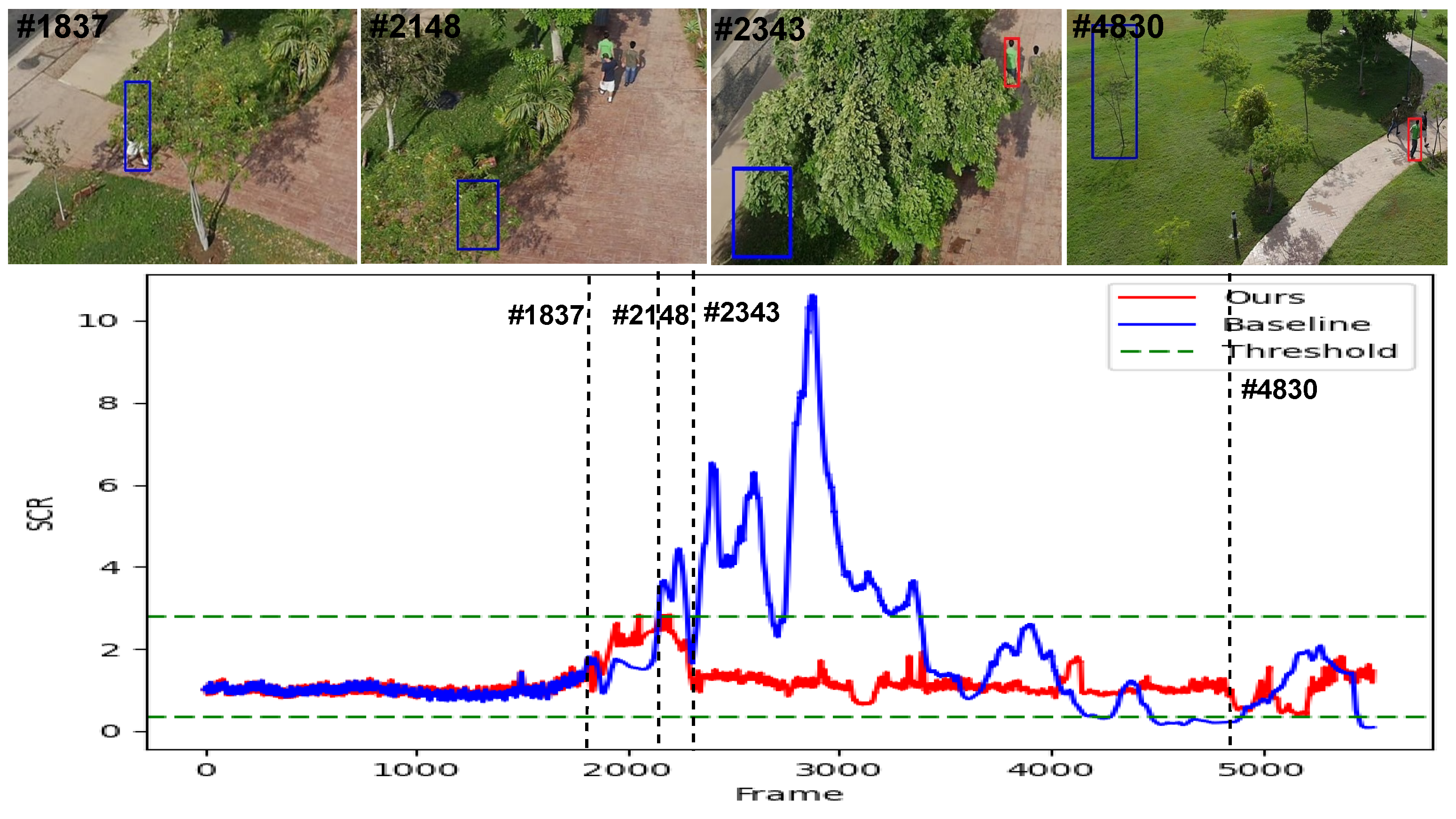

4.2. Ablation Experiments

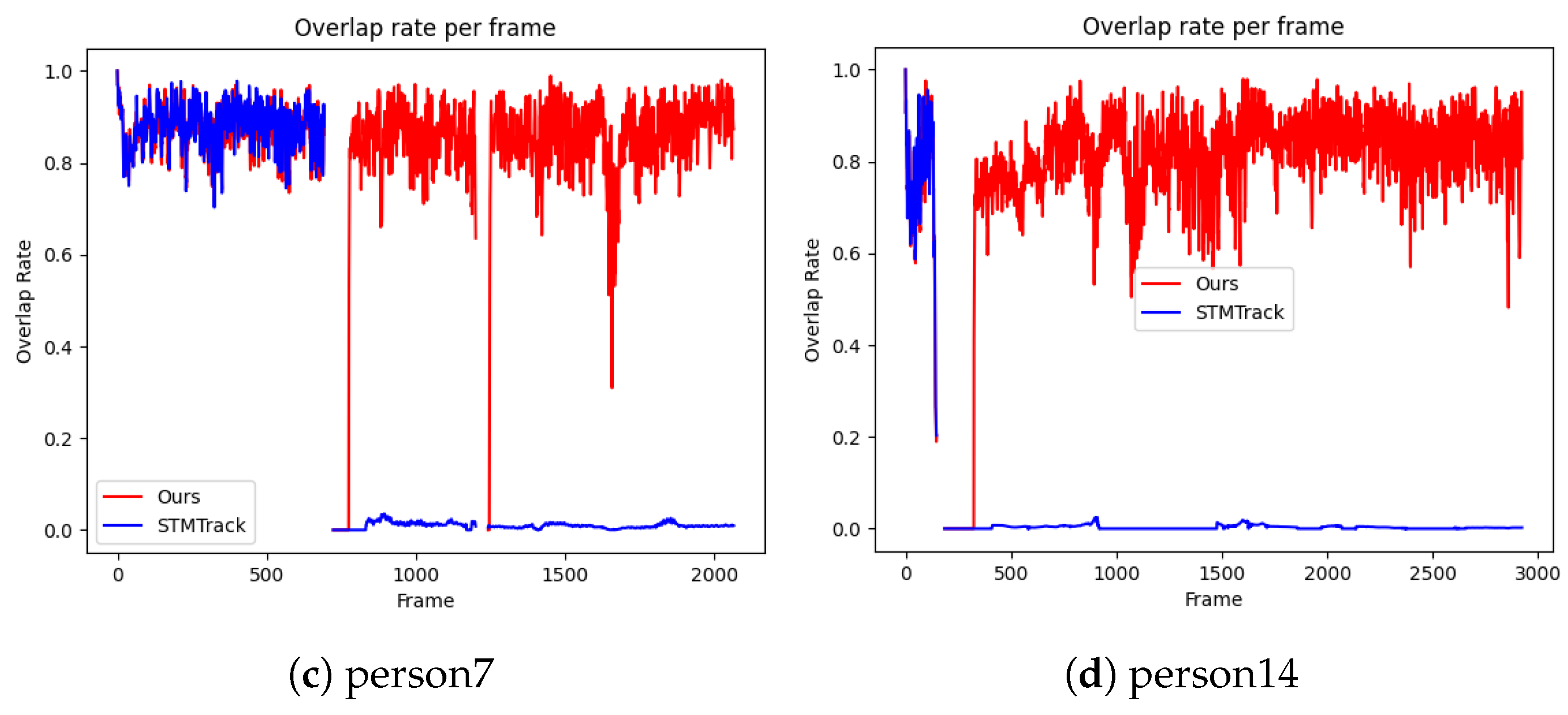

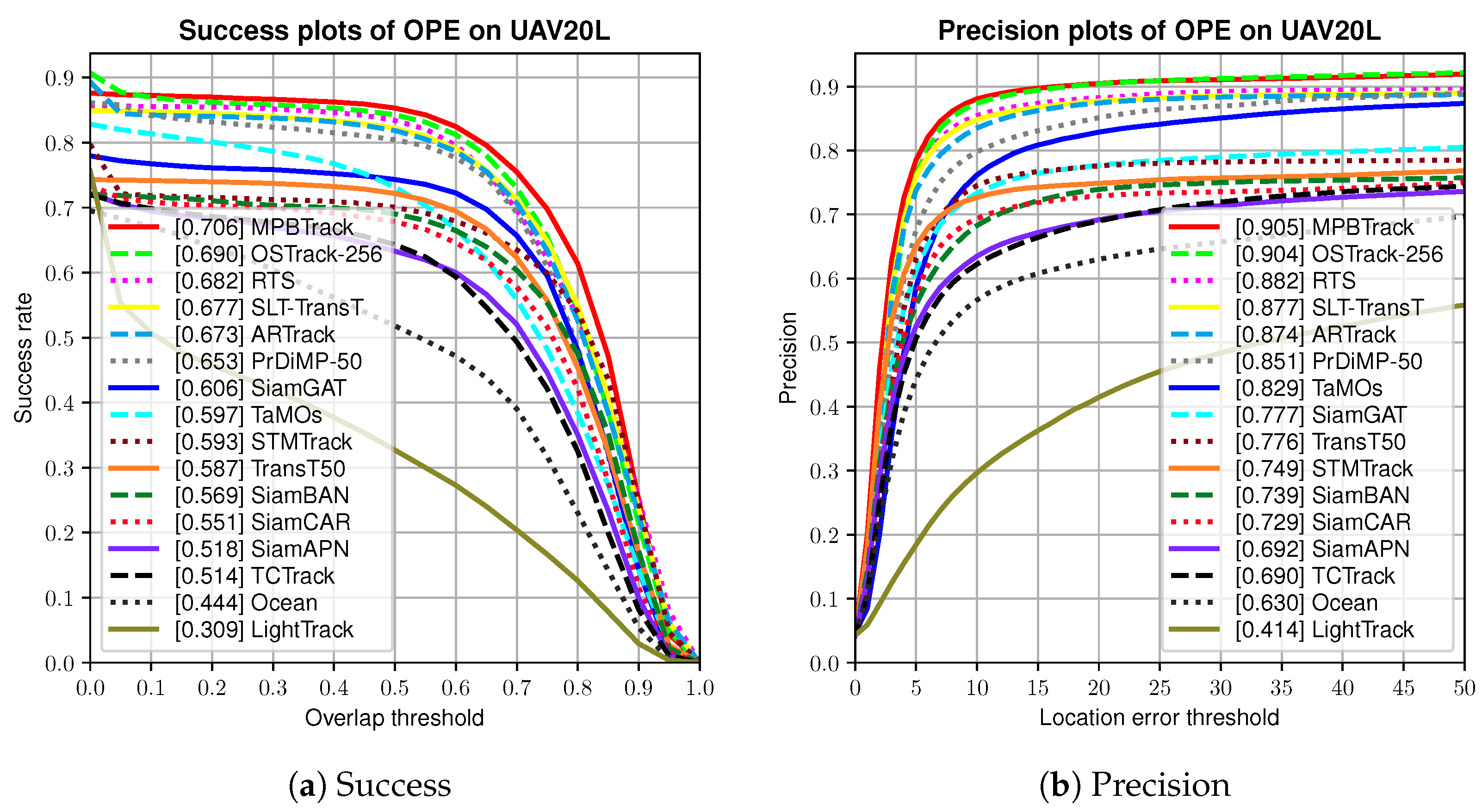

4.3. Experiments on UAV20L Benchmark

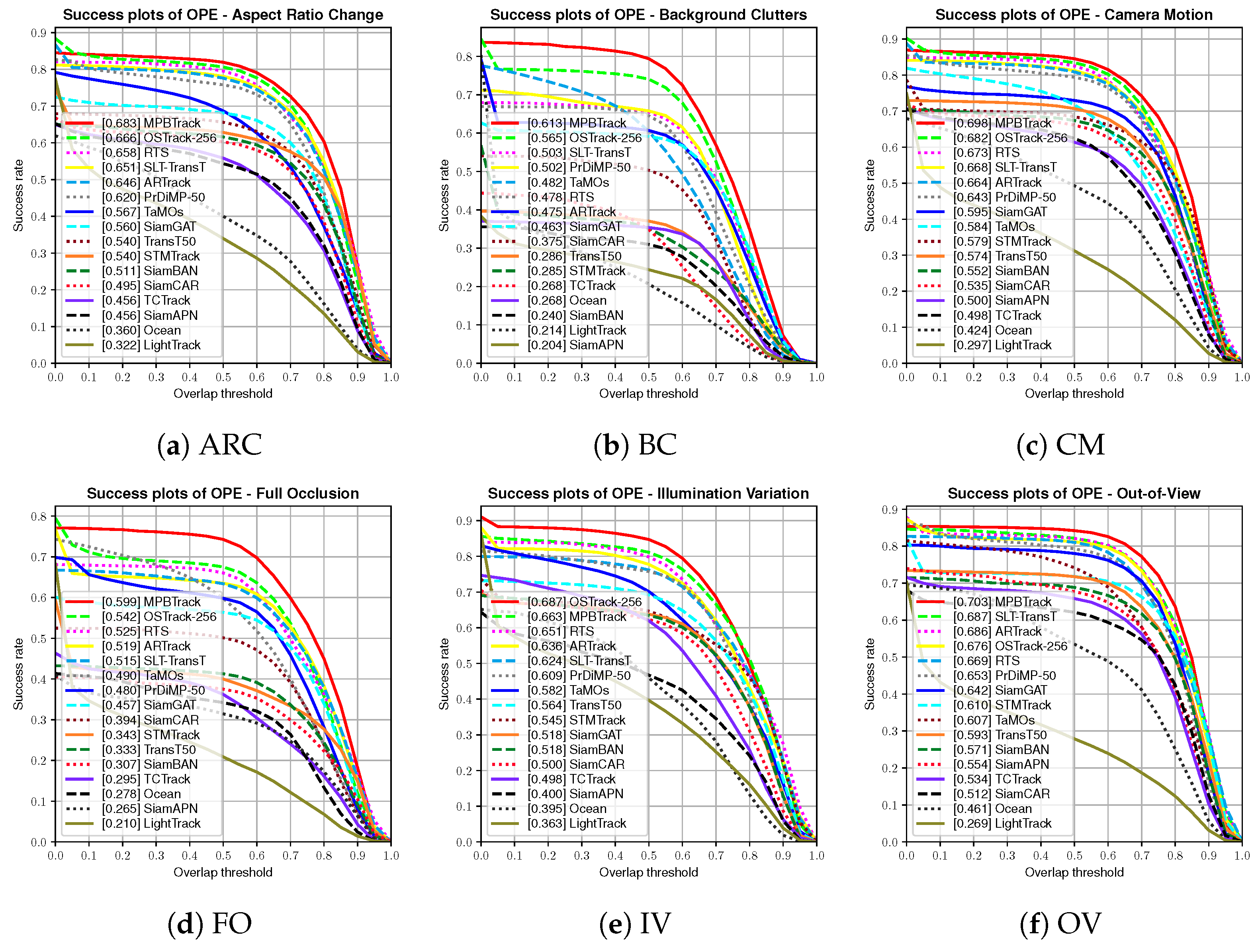

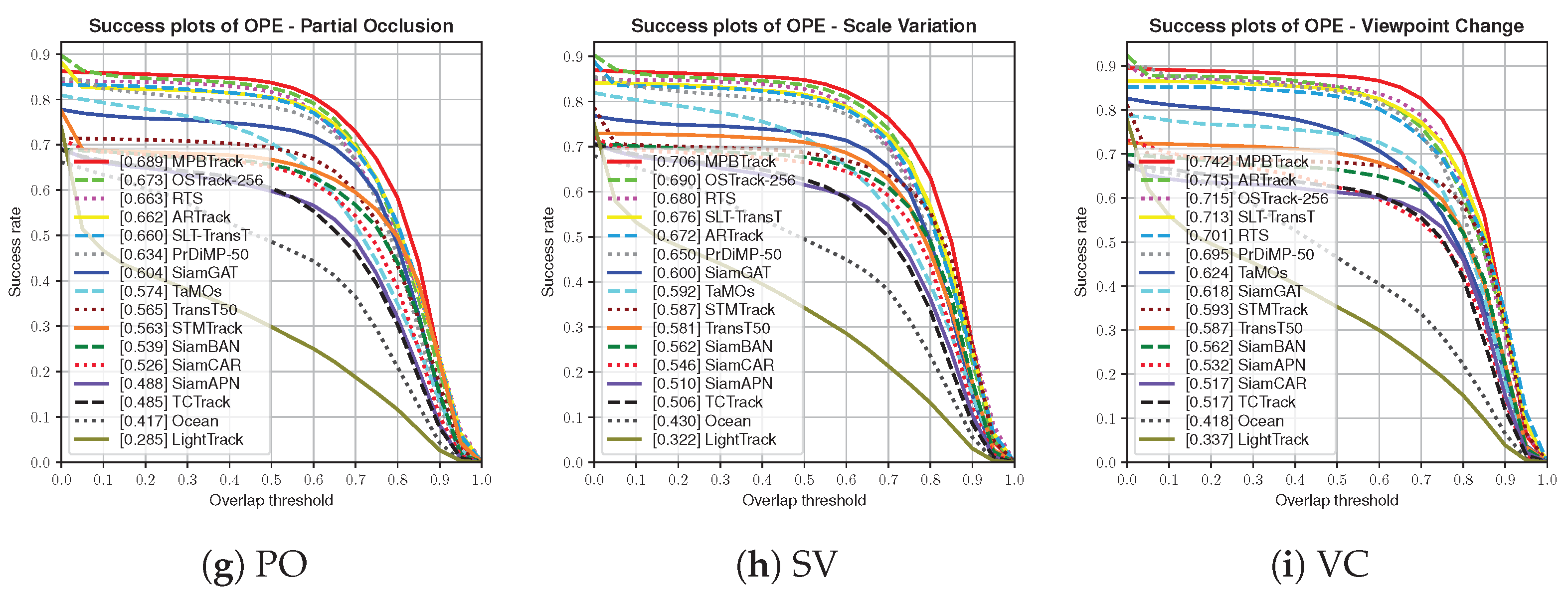

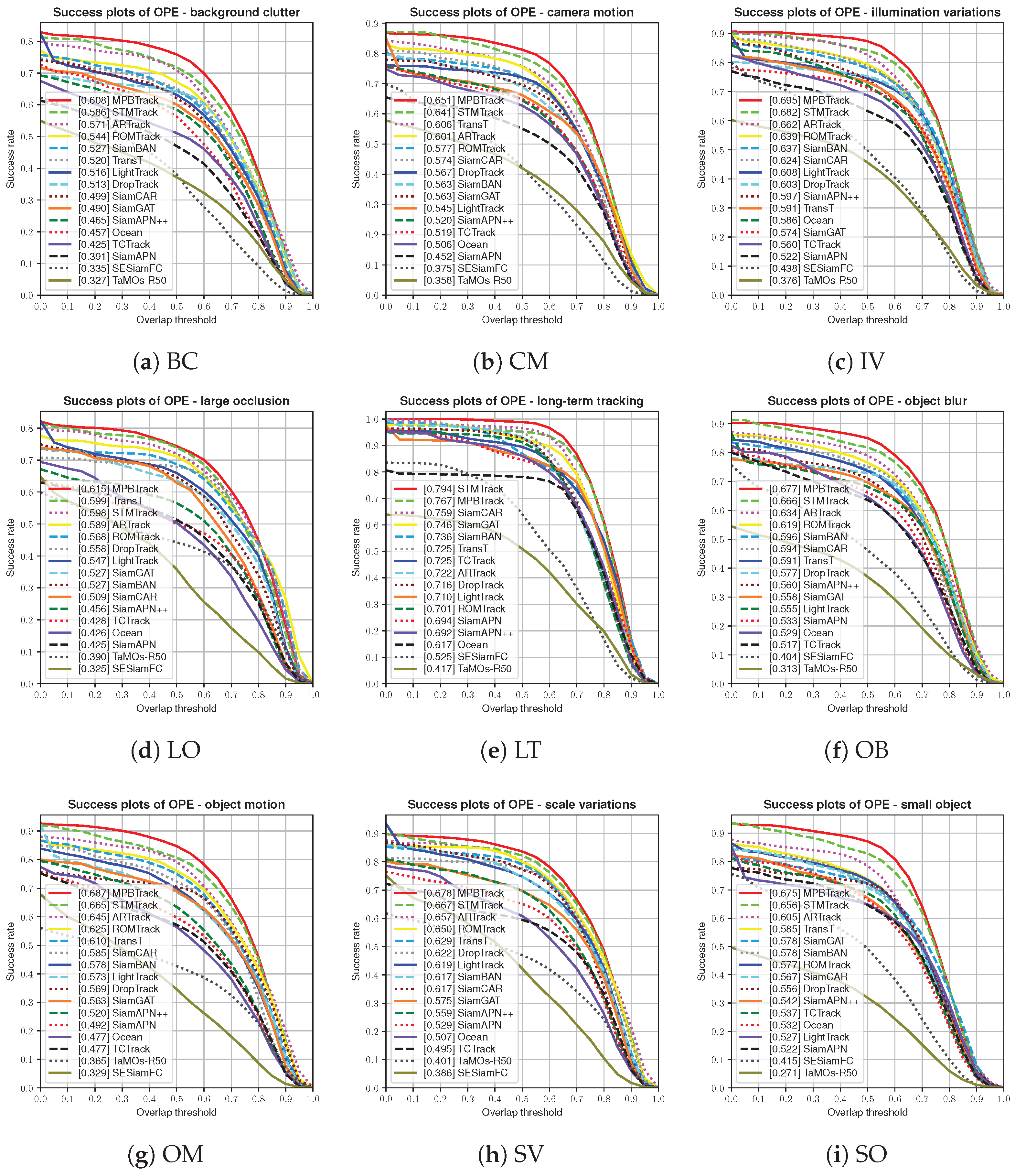

4.4. Experiments on UAV123 Benchmark

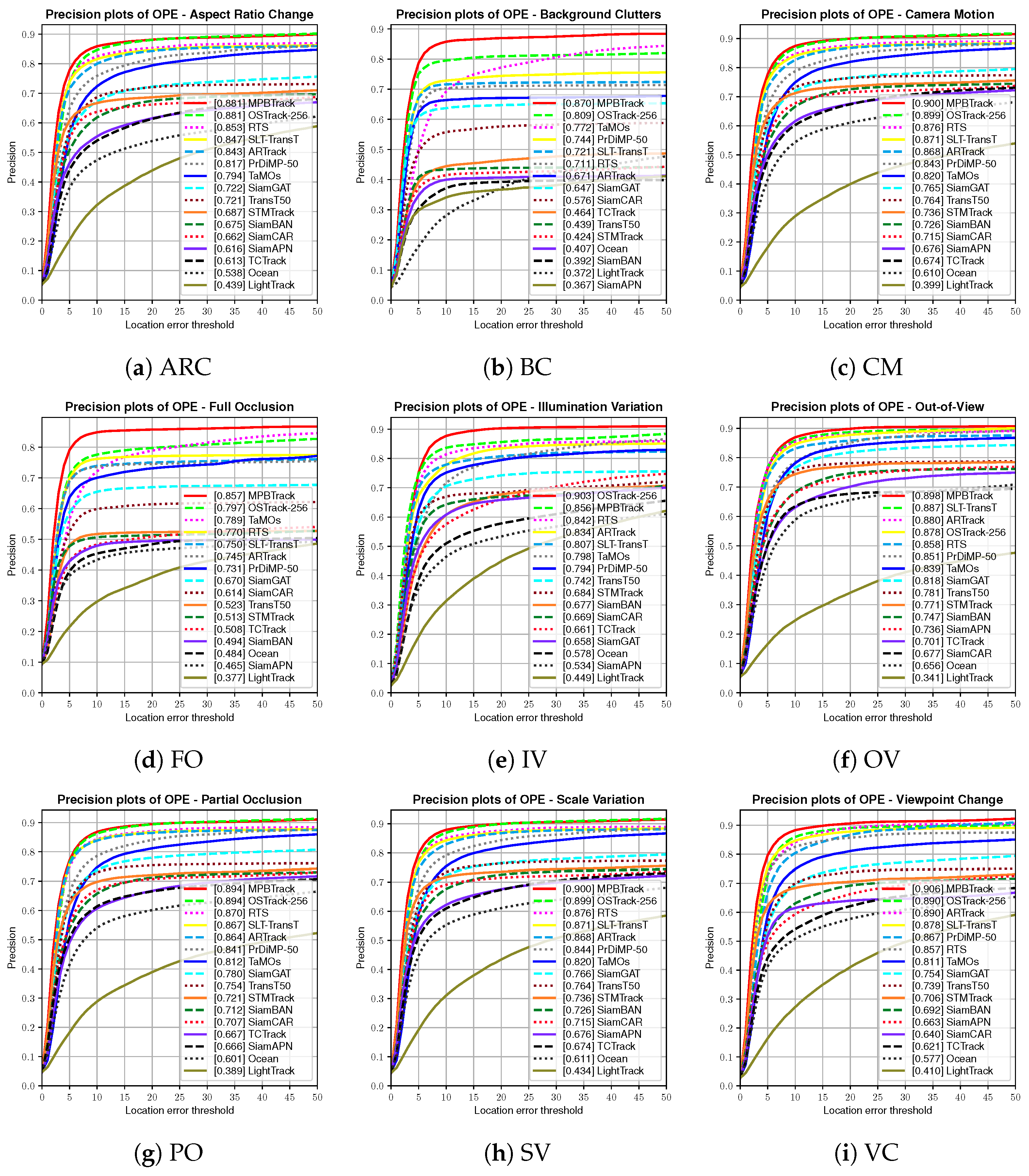

4.5. Experiments on UAVDT Benchmark

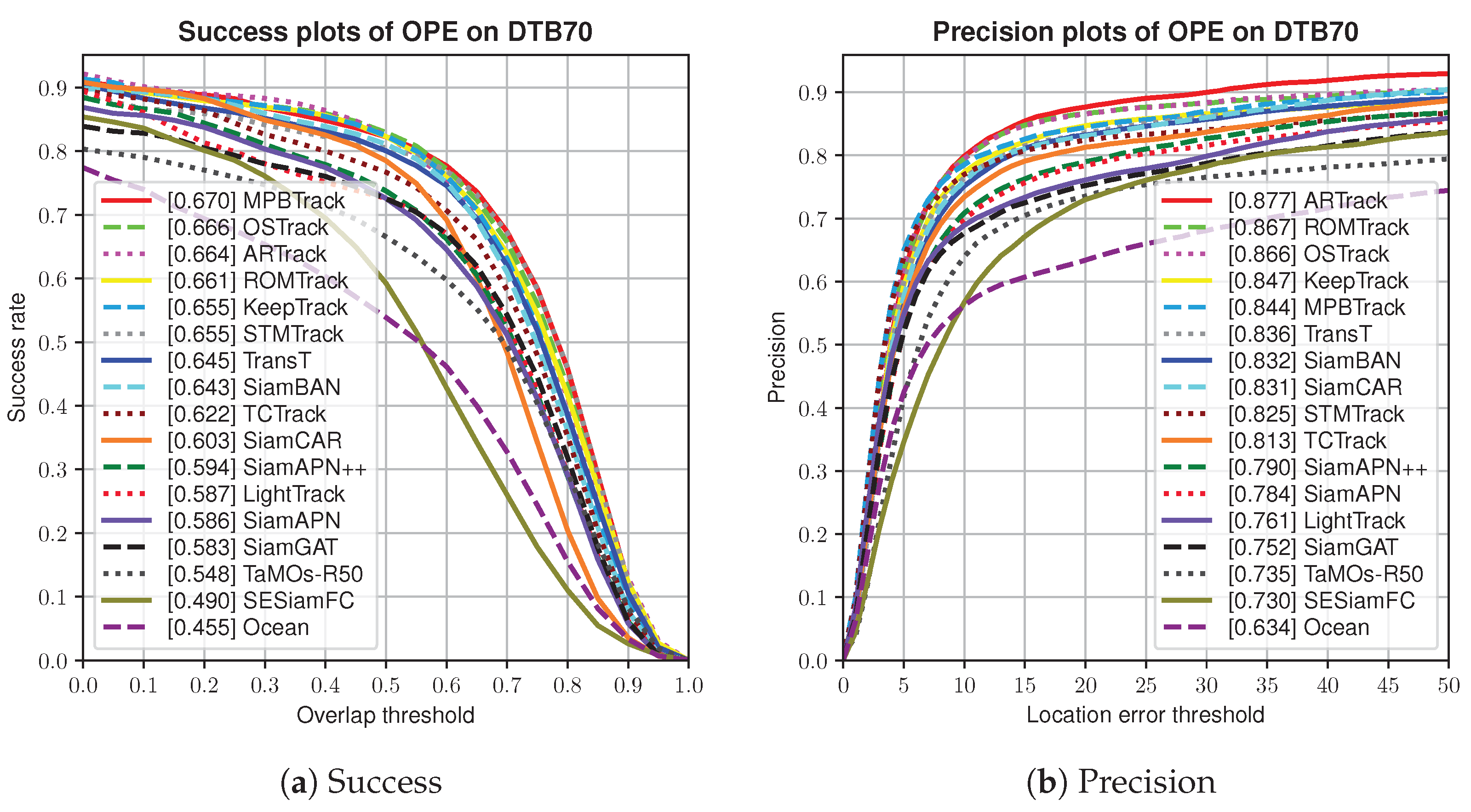

4.6. Experiments on DTB70 Benchmark

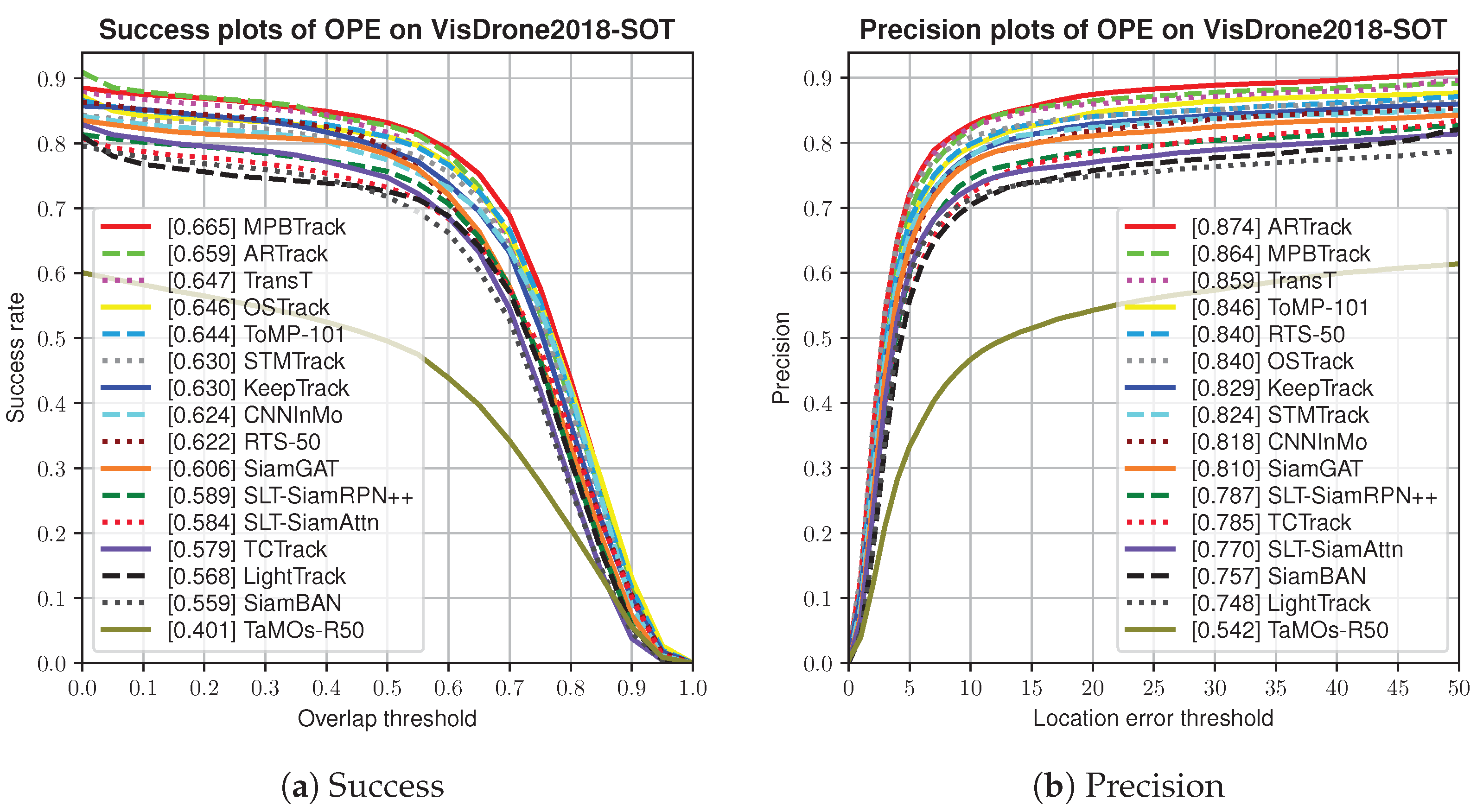

4.7. Experiments on VisDrone2018-SOT Benchmark

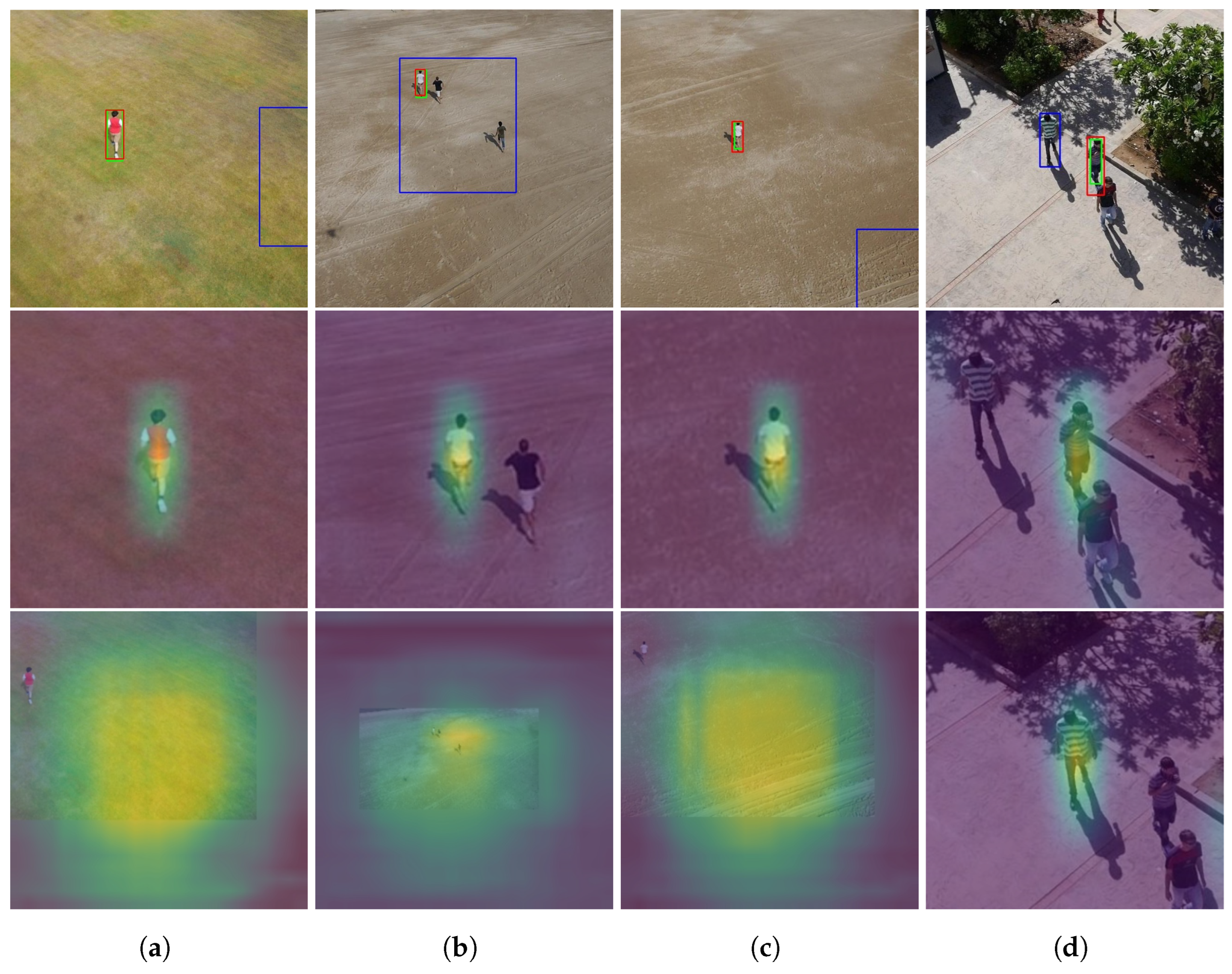

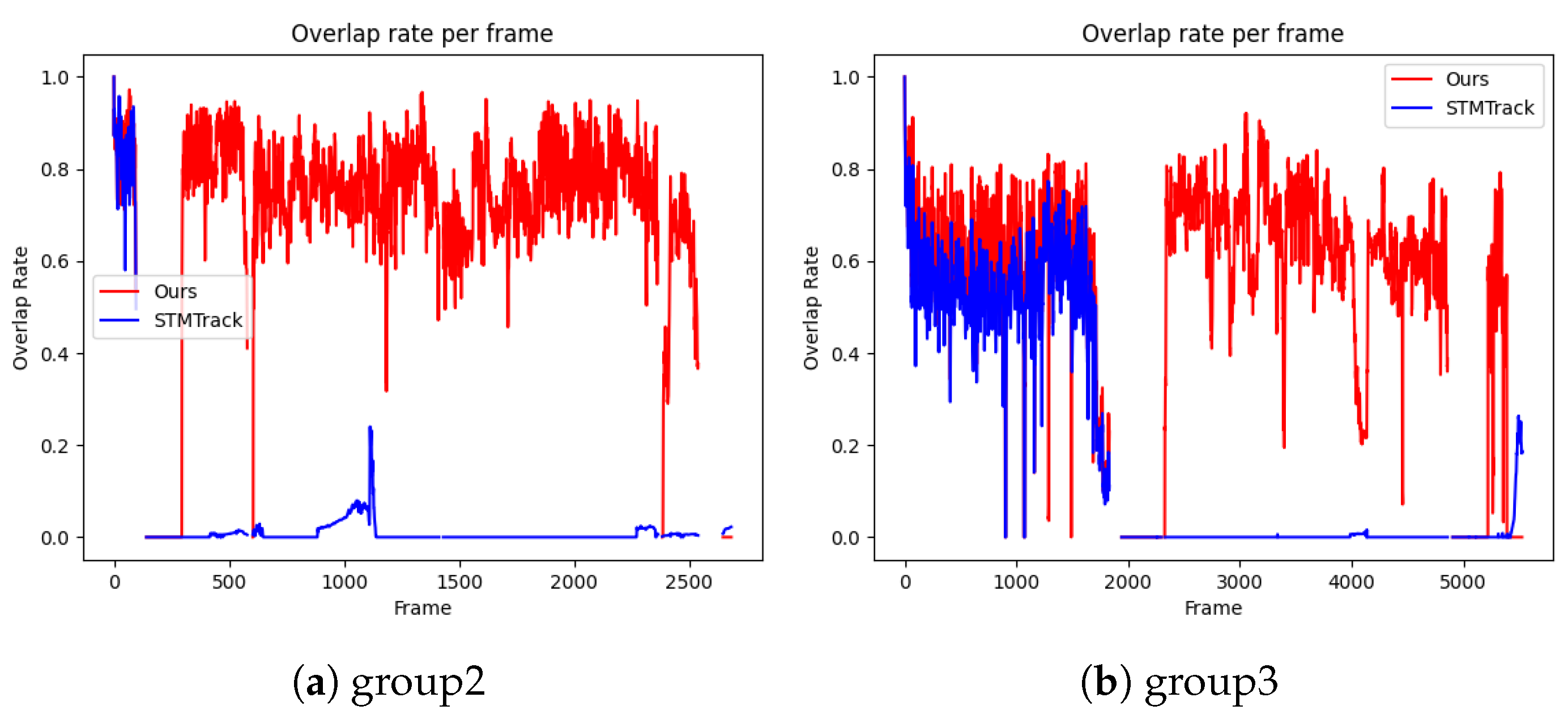

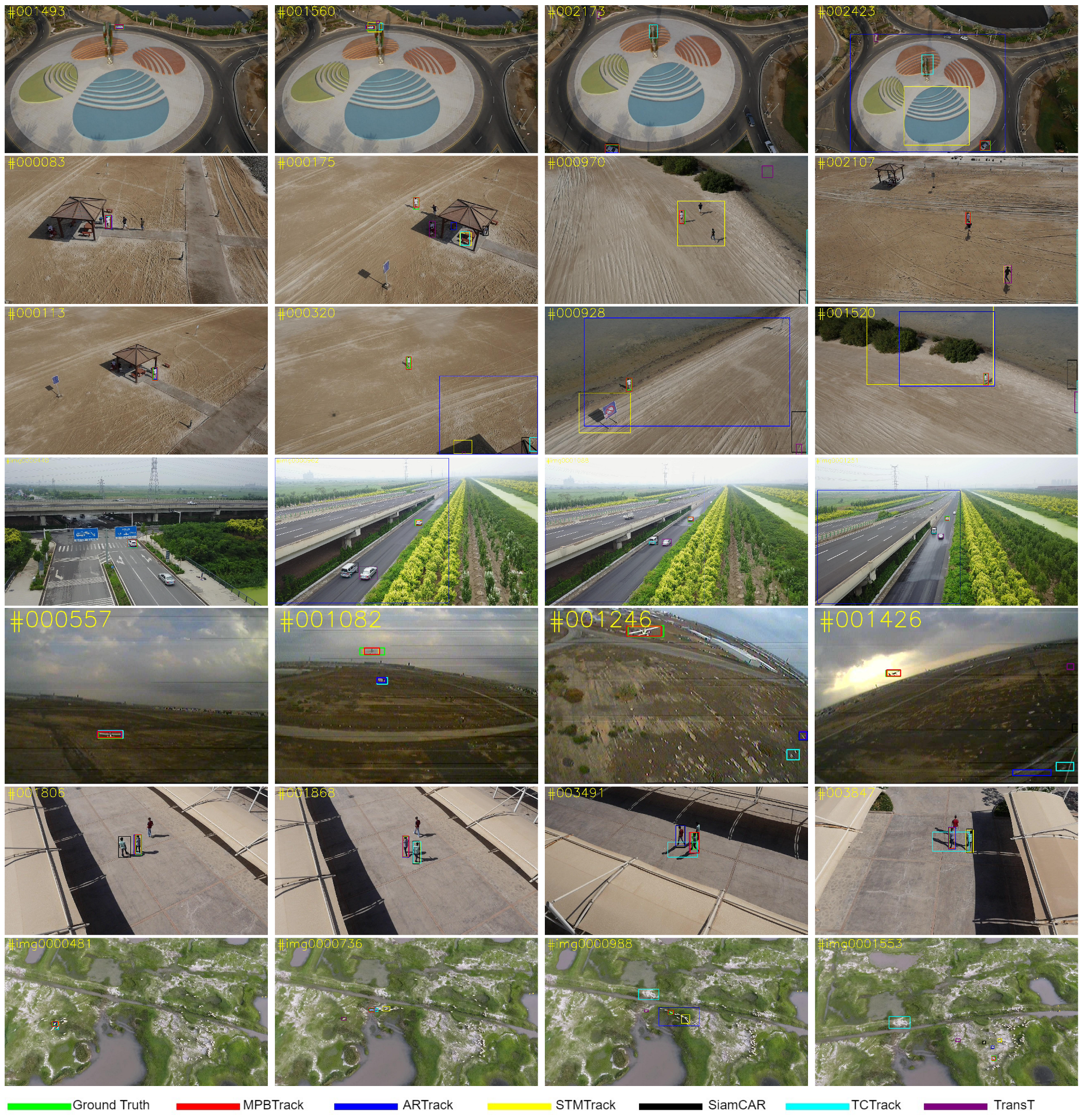

4.8. Qualitative Analysis

- (1) car1: This sequence presents two challenges: occlusion of the object and the object moving out of view. Several trackers drift after the object is occluded, and even more drift after it moves out of the field of view. Our method, by contrast, reacquires the object after both events.

- (2) group2, person14, uav180: These three sequences feature object occlusion. The visualization results show that when the object is occluded, only our tracker continues to track it successfully, whereas the other trackers drift or follow the wrong object for many subsequent frames. This illustrates the advantage of our tracker in long-term tracking under challenging conditions.

- (3) uav1: This sequence combines camera motion, background clutter, and fast object motion. Faced with all three at once, multiple trackers drift. Our tracker, relying on dynamic template updating and block search, remains comparatively robust to these combined conditions.

- (4) group1 and uav93: These two sequences contain similar objects and object occlusion. When the objects occlude one another, the other trackers switch to the wrong object, whereas our tracker continues to follow the correct one.

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- [1] Yeom, S. Thermal Image Tracking for Search and Rescue Missions with a Drone. Drones 2024, 8, 53.

- [2] Han, Y.; Yu, X.; Luan, H.; Suo, J. Event-Assisted Object Tracking on High-Speed Drones in Harsh Illumination Environment. Drones 2024, 8, 22.

- [3] Chen, Q.; Liu, J.; Liu, F.; Xu, F.; Liu, C. Lightweight Spatial-Temporal Contextual Aggregation Siamese Network for Unmanned Aerial Vehicle Tracking. Drones 2024, 8, 24.

- [4] Memon, S.A.; Son, H.; Kim, W.G.; Khan, A.M.; Shahzad, M.; Khan, U. Tracking Multiple Unmanned Aerial Vehicles through Occlusion in Low-Altitude Airspace. Drones 2023, 7, 241.

- [5] Gao, Y.; Gan, Z.; Chen, M.; Ma, H.; Mao, X. Hybrid Dual-Scale Neural Network Model for Tracking Complex Maneuvering UAVs. Drones 2023, 8, 3.

- [6] Kalman, R.E. A new approach to linear filtering and prediction problems. J. Basic Eng. 1960, 82, 35–45.

- [7] Xie, X.; Xi, J.; Yang, X.; Lu, R.; Xia, W. STFTrack: Spatio-Temporal-Focused Siamese Network for Infrared UAV Tracking. Drones 2023, 7, 296.

- [8] Cao, Z.; Fu, C.; Ye, J.; Li, B.; Li, Y. SiamAPN++: Siamese attentional aggregation network for real-time UAV tracking. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021; pp. 3086–3092.

- [9] Fu, Z.; Liu, Q.; Fu, Z.; Wang, Y. STMTrack: Template-free visual tracking with space-time memory networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 13774–13783.

- [10] Guo, D.; Shao, Y.; Cui, Y.; Wang, Z.; Zhang, L.; Shen, C. Graph attention tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 9543–9552.

- [11] Cheng, S.; Zhong, B.; Li, G.; Liu, X.; Tang, Z.; Li, X.; Wang, J. Learning to filter: Siamese relation network for robust tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 4421–4431.

- [12] Wu, Q.; Yang, T.; Liu, Z.; Wu, B.; Shan, Y.; Chan, A.B. DropMAE: Masked autoencoders with spatial-attention dropout for tracking tasks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 14561–14571.

- [13] Lin, L.; Fan, H.; Zhang, Z.; Xu, Y.; Ling, H. SwinTrack: A simple and strong baseline for transformer tracking. Adv. Neural Inf. Process. Syst. 2022, 35, 16743–16754.

- [14] Gao, S.; Zhou, C.; Ma, C.; Wang, X.; Yuan, J. AiATrack: Attention in attention for transformer visual tracking. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 146–164.

- [15] Mueller, M.; Smith, N.; Ghanem, B. A benchmark and simulator for UAV tracking. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 445–461.

- [16] Du, D.; Qi, Y.; Yu, H.; Yang, Y.; Duan, K.; Li, G.; Zhang, W.; Huang, Q.; Tian, Q. The unmanned aerial vehicle benchmark: Object detection and tracking. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 370–386.

- [17] Li, S.; Yeung, D.Y. Visual object tracking for unmanned aerial vehicles: A benchmark and new motion models. In Proceedings of the AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; Volume 31.

- [18] Wen, L.; Zhu, P.; Du, D.; Bian, X.; Ling, H.; Hu, Q.; Liu, C.; Cheng, H.; Liu, X.; Ma, W.; et al. VisDrone-SOT2018: The vision meets drone single-object tracking challenge results. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops, Munich, Germany, 8–14 September 2018.

- [19] Li, B.; Yan, J.; Wu, W.; Zhu, Z.; Hu, X. High performance visual tracking with Siamese region proposal network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8971–8980.

- [20] Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 28, 1–9.

- [21] Guo, D.; Wang, J.; Cui, Y.; Wang, Z.; Chen, S. SiamCAR: Siamese fully convolutional classification and regression for visual tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 6269–6277.

- [22] Paul, M.; Danelljan, M.; Mayer, C.; Van Gool, L. Robust visual tracking by segmentation. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 571–588.

- [23] Zhu, Z.; Wang, Q.; Li, B.; Wu, W.; Yan, J.; Hu, W. Distractor-aware Siamese networks for visual object tracking. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 101–117.

- [24] Yan, B.; Peng, H.; Fu, J.; Wang, D.; Lu, H. Learning spatio-temporal transformer for visual tracking. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 10448–10457.

- [25] Cao, Z.; Fu, C.; Ye, J.; Li, B.; Li, Y. HiFT: Hierarchical feature transformer for aerial tracking. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 15457–15466.

- [26] Ye, B.; Chang, H.; Ma, B.; Shan, S.; Chen, X. Joint feature learning and relation modeling for tracking: A one-stream framework. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 341–357.

- [27] Cao, Z.; Huang, Z.; Pan, L.; Zhang, S.; Liu, Z.; Fu, C. TCTrack: Temporal contexts for aerial tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 14798–14808.

- [28] Li, B.; Fu, C.; Ding, F.; Ye, J.; Lin, F. All-day object tracking for unmanned aerial vehicle. IEEE Trans. Mob. Comput. 2022, 22, 4515–4529.

- [29] Yang, J.; Gao, S.; Li, Z.; Zheng, F.; Leonardis, A. Resource-efficient RGBD aerial tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 13374–13383.

- [30] Luo, Y.; Guo, X.; Dong, M.; Yu, J. RGB-T Tracking Based on Mixed Attention. arXiv 2023, arXiv:2304.04264.

- [31] Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- [32] Wang, M.; Liu, Y.; Huang, Z. Large margin object tracking with circulant feature maps. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4021–4029.

- [33] Muller, M.; Bibi, A.; Giancola, S.; Alsubaihi, S.; Ghanem, B. TrackingNet: A large-scale dataset and benchmark for object tracking in the wild. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 300–317.

- [34] Fan, H.; Lin, L.; Yang, F.; Chu, P.; Deng, G.; Yu, S.; Bai, H.; Xu, Y.; Liao, C.; Ling, H. LaSOT: A high-quality benchmark for large-scale single object tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 5374–5383.

- [35] Huang, L.; Zhao, X.; Huang, K. GOT-10k: A large high-diversity benchmark for generic object tracking in the wild. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 1562–1577.

- [36] Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. ImageNet large scale visual recognition challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

- [37] Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Springer: Berlin/Heidelberg, Germany, 2014; pp. 740–755.

- [38] Mayer, C.; Danelljan, M.; Yang, M.H.; Ferrari, V.; Van Gool, L.; Kuznetsova, A. Beyond SOT: Tracking Multiple Generic Objects at Once. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 1–6 January 2024; pp. 6826–6836.

- [39] Wei, X.; Bai, Y.; Zheng, Y.; Shi, D.; Gong, Y. Autoregressive visual tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 9697–9706.

- [40] Kim, M.; Lee, S.; Ok, J.; Han, B.; Cho, M. Towards sequence-level training for visual tracking. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 534–551.

- [41] Chen, X.; Yan, B.; Zhu, J.; Wang, D.; Yang, X.; Lu, H. Transformer tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 8126–8135.

- [42] Chen, Z.; Zhong, B.; Li, G.; Zhang, S.; Ji, R. Siamese box adaptive network for visual tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 6668–6677.

- [43] Tang, F.; Ling, Q. Ranking-based Siamese visual tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 8741–8750.

- [44] Yan, B.; Peng, H.; Wu, K.; Wang, D.; Fu, J.; Lu, H. LightTrack: Finding lightweight neural networks for object tracking via one-shot architecture search. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 15180–15189.

- [45] Zhang, D.; Zheng, Z.; Jia, R.; Li, M. Visual tracking via hierarchical deep reinforcement learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 2–9 February 2021; Volume 35, pp. 3315–3323.

- [46] Guo, M.; Zhang, Z.; Fan, H.; Jing, L.; Lyu, Y.; Li, B.; Hu, W. Learning target-aware representation for visual tracking via informative interactions. arXiv 2022, arXiv:2201.02526.

- [47] Zhang, Z.; Liu, Y.; Wang, X.; Li, B.; Hu, W. Learn to match: Automatic matching network design for visual tracking. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 13339–13348.

- [48] Cai, Y.; Liu, J.; Tang, J.; Wu, G. Robust object modeling for visual tracking. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 9589–9600.

- [49] Fu, C.; Cao, Z.; Li, Y.; Ye, J.; Feng, C. Siamese anchor proposal network for high-speed aerial tracking. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 510–516.

- [50] Mayer, C.; Danelljan, M.; Bhat, G.; Paul, M.; Paudel, D.P.; Yu, F.; Van Gool, L. Transforming model prediction for tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 8731–8740.

| Module | UAV20L Success | UAV20L Precision | UAV123 Success | UAV123 Precision |
|---|---|---|---|---|
| Baseline | 0.589 | 0.742 | 0.647 | 0.825 |
| Baseline + DTUN | 0.617 | 0.784 | 0.651 | 0.834 |
| Baseline + BPM | 0.683 | 0.875 | 0.651 | 0.841 |
| Baseline + DTUN + BPM | 0.706 | 0.905 | 0.656 | 0.842 |

| Interval Frames | UAV20L Success | UAV20L Precision | UAV20L FPS | VisDrone2018-SOT Success | VisDrone2018-SOT Precision | VisDrone2018-SOT FPS |
|---|---|---|---|---|---|---|
| 10 | 0.697 | 0.893 | 32.4 | 0.637 | 0.822 | 27.9 |
| 15 | 0.700 | 0.898 | 32.6 | 0.637 | 0.823 | 28.3 |
| 20 | 0.706 | 0.905 | 43.5 | 0.665 | 0.864 | 43.4 |
| 25 | 0.689 | 0.811 | 32.8 | 0.633 | 0.818 | 26.7 |

| SCR | 2.0 | 2.4 | 2.8 | 3.2 | 3.6 |
|---|---|---|---|---|---|
| Success | 0.696 | 0.704 | 0.706 | 0.695 | 0.688 |
| Precision | 0.892 | 0.901 | 0.905 | 0.890 | 0.882 |

| Tracker | TaMOs [38] | HiFT [25] | TCTrack [27] | PACNet [45] | SiamCAR [21] | LightTrack [44] | CNNInMo [46] | SiamBAN [42] | SiamRN [11] | AutoMatch [47] | SiamPW-RBO [43] | SiamGAT [10] | STMTrack [9] | MPBTrack |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Succ. | 0.571 | 0.589 | 0.604 | 0.620 | 0.623 | 0.626 | 0.629 | 0.631 | 0.643 | 0.644 | 0.645 | 0.646 | 0.647 | 0.656 |
| Prec. | 0.791 | 0.787 | 0.800 | 0.827 | 0.813 | 0.809 | 0.818 | 0.833 | - | - | - | 0.843 | 0.825 | 0.842 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Sun, L.; Li, X.; Yang, Z.; Gao, D. Visual Object Tracking Based on the Motion Prediction and Block Search in UAV Videos. Drones 2024, 8, 252. https://doi.org/10.3390/drones8060252
