1. Introduction

Rotor drones [

1], known for their vertical takeoff and landing capabilities, exceptional stability during hovering, agile flight maneuvers, and ease of control, have found widespread applications in aerial photography, mapping, logistics, and search and rescue operations [

2]. However, the affordability and accessibility of rotor drones have led to a proliferation of illegal usage driven by curiosity, invasion of privacy, commercial interests, or political motives [

3]. Illegal drones pose a grave threat by infringing upon personal privacy and disrupting social order. Existing measures for managing airspace primarily rely on radio frequency jammers and similar devices to interfere with and neutralize illegal drones [

4]. Nevertheless, the effectiveness of radio frequency jammers is limited due to their shorter range and inability to detect autonomous drones, resulting in decreased efficiency in congested radio frequency areas [

5]. Moreover, using radio frequency jammers in densely populated regions is unsuitable as it may endanger ground personnel when the illegal drones plummet to the ground [

6]. Therefore, the most viable approach to combatting illegal drones lies in autonomously identifying, tracking, and capturing them, while ensuring a stable flight state and transporting them to secure zones. Considering the high mobility characteristic of illegal drones [

7] and maintaining stable flight conditions during the identification, tracking, and capture process poses a formidable challenge.

In recent years, there has been a widespread application of intelligent perception components such as cameras [

8], millimeter-wave radar [

9], and LIDAR [

10] in mobile robots. By incorporating cameras with deep learning-based object detection algorithms like YOLO (You Only Look Once) [

11], SSD (Single Shot MultiBox Detector) [

12], and RetinaNet [

13], these robots have demonstrated exceptional recognition capabilities for shapes and colors, enabling the accurate tracking of illegal unmanned aerial vehicles (drones). Cameras, with their lightweight and compact design, do not compromise the payload capacity of autonomous drones [

14]. When compared to millimeter-wave radar and LIDAR, cameras are the most suitable choice for identifying illegal drones.

Visual servoing applies visual sensors to acquire real-time image information and utilizes these data to accomplish objectives such as target tracking, localization and navigation, posture control, and feedback control [

15]. This technology enables the precise positioning, path planning, posture adjustment, and autonomous control of robots [

16]. Visual servoing encompasses Position-based Visual Servoing (PBVS) and Image-based Visual Servoing (IBVS) [

17]. PBVS involves establishing a mapping relationship between the autonomous drone and the target drone in relation to the inertial coordinate system [

18], requiring GPS information. However, commercial GPS information has a positioning error of up to 1 m, which is larger than the size of the target drones. On the other hand, IBVS directly defines image plane coordinates using image features, rather than task space [

19]. Its fundamental principle involves calculating control variables from the error signal and converting them into the motion space of the autonomous drone. By employing the obtained image error for closed-loop feedback control, the autonomous drone can move towards the target drone and accomplish the tracking process.

Considering the maneuverability of illegal drones, if the position of the illegal drone in the next moment can be predicted in advance during the tracking process, it would be beneficial for the trajectory planning [

20] of the autonomous drone, allowing it to reach the capture position earlier and improve capture efficiency. Currently, trajectory prediction methods mainly include interpolation methods, linear regression, filtering methods, dynamic model methods, and machine learning methods [

21]. The position signal of the illegal drone obtained using computer vision techniques contains noise [

22]. Interpolation methods are sensitive to noisy data or outliers, resulting in prediction deviations. In addition, interpolation methods are usually based on linear or polynomial interpolation principles, making it difficult to reflect complex nonlinear relationships. Linear regression models have a trade-off between bias and variance. When the model complexity is insufficient, underfitting may occur, meaning the model fails to fit the data well. On the other hand, when the model complexity is too high, overfitting may occur, whereby the model performs well on the training data but poorly on new data. Dynamic model methods and machine learning methods both involve solving complex mathematical optimization problems, especially for nonlinear and non-convex problems, which can be time-consuming. Filtering methods mainly include Kalman filtering [

23], particle filtering, etc. Among them, the Extended Kalman Filter (EKF) algorithm [

24], which is an extension of the KF algorithm, can be used for nonlinear models, and is highly acclaimed for its low computational complexity and fewer computational resource requirements. It provides a feasible solution for real-time and efficient state estimation and trajectory prediction.

Configuring an automatically triggered robotic arm beneath the autonomous drone, which closes and grasps the unauthorized drone upon catching up with it, is crucial. However, during this process, the autonomous drone is susceptible to strong interference, which can potentially lead to a crash. Enhancing the robustness of the system is vital to mitigate the risk of a crash. The real-time estimation of external disturbances and compensation for unknown disturbances are key challenges in achieving stable flight for autonomous drones. Time Delay Estimation Control (TDC) is a nonlinear control strategy that effectively addresses unknown disturbances in dynamic control problems with complex nonlinear effects [

25]. TDC utilizes the previous state of the system to estimate the current collective dynamics. It offers a concise and efficient model-free control strategy. TDC is suitable for handling continuous and slow-varying unknown disturbances [

26]. However, when impact signals exist within the system, the control effectiveness of TDC can be weakened. Therefore, combining TDC with other robust control algorithms can compensate for estimation errors and enhance the robustness of the control system.

Sliding mode control is a robust, fast-response, and simple implementation nonlinear control strategy [

27]. It suppresses system parameter disturbances and external disturbances by introducing sliding manifolds and quickly responds to changes in system states [

28]. With advances in sliding mode control theory [

29], Fast Non-Singular Terminal Sliding Mode Control (FNTSM) has emerged in recent years [

30]. It achieves the finite-time convergence of system states and improves control robustness. Therefore, for a class of underactuated drone systems containing time-varying uncertain nonlinearities, unknown, and impulsive disturbances, the complex external disturbances are generalized as an aggregated unknown term. Based on Terminal Sliding Mode Control (TSMC), FNTSM is introduced to construct a composite controller. The traditional PD-type sliding manifold is upgraded to a PID-type sliding manifold, which obtains faster transient response and smaller steady-state error through the integral term [

31]. Furthermore, to avoid the drawback of fixed high gain coefficients that may lead to system oscillations and trigger high-frequency unmodeled terms, a parameter adaptive updating mechanism is introduced into the PID-type sliding manifold to achieve the adaptive tuning of control parameters. The derivative of the control gain is proportional to the sliding manifold, which is beneficial for suppressing the adverse effects of noise. Without changing the structure of the sliding manifold, the increase in gain automatically adjusts with the change of the sliding manifold and accelerates convergence speed when the sliding variable deviates from the sliding manifold.

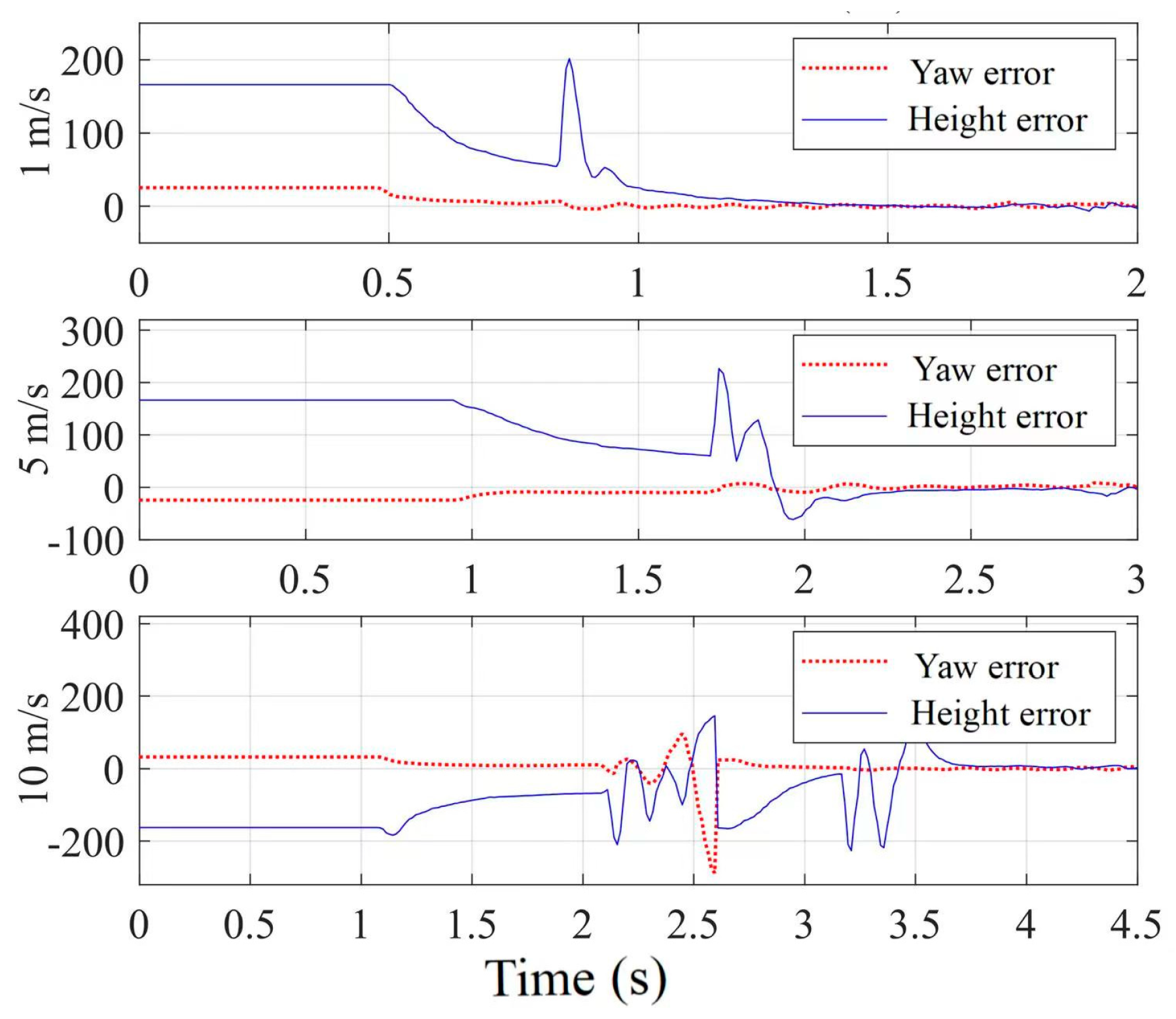

The contributions of this work can be further emphasized as follows: (1) In order to suppress the interference of illegal drones on autonomous drones and maintain stable flight, sliding mode control is introduced into Target Drone Control (TDC), forming a new robust control strategy integrating adaptive PID-type sliding manifold, TDC, and fast non-singular terminal sliding mode, which has strong robustness and characteristics of model-free control. (2) In order to reach the capture position in advance and improve capture efficiency, the EKF algorithm is integrated into IBVS to estimate the future position of the target drone, providing feasible solutions for real-time and efficient state estimation and trajectory prediction. The superiority of the proposed methods is verified through counter drone capture experiments.

The remaining parts of this paper are organized as follows:

Section 2 presents the system design, including the modeling of the counter drone dynamics, IBVS method, EKF trajectory prediction, and controller design.

Section 3 presents hardware-in-the-loop simulation and flight experiments.

Section 4 concludes the paper.

2. System Design

The system diagram in

Figure 1 depicts the remote communication between the ground station and the counter drone, which is equipped with an onboard computer featuring autonomous flight capabilities and intelligent perception using a camera to detect the illegal drone. The proposed airspace management strategy incorporates three critical technologies: IBVS, a trajectory prediction module based on the EKF, and a non-linear robust controller.

The core idea of the counter capture strategy is to integrate IBVS and EKF algorithms to estimate the future position of the illegal drone. The mechanical arm is then used to capture the target at a specified velocity, ultimately bringing the illegal drone to a designated area. Subsequently, key issues such as the dynamic modeling of the counter drone, IBVS, and trajectory prediction will be discussed.

2.1. Counter Drone Dynamics Modeling

The dynamics model of the drone is utilized for designing robust controllers. The counter drone is a quadcopter, which is a nonlinear, underactuated, and strongly coupled dynamic system with four input control signals and six output states. As shown in

Figure 2, the counter drone is considered as a rigid body model, experiencing the lift generated by four motors and its own weight, with three position coordinates and three attitude angles. The figure depicts the world coordinate system

denoted as {

e} and the body coordinate system

denoted as {

b}.

The counter drone dynamics model consists of both position dynamics and attitude dynamics models, with the position dynamics model encompassing horizontal and vertical channel dynamics models [

32].

The horizontal channel dynamics model is established based on the Newton–Euler dynamics theory [

33]:

where

represents the horizontal acceleration in the world coordinate system;

X and

Y represent the positions in the

xe and

ye directions under the world coordinate system, respectively;

and

represent the speeds in the

xe and

ye directions in the world coordinate system, respectively;

;

;

represents the yaw angle;

represents the roll angle;

represents the pitch angle;

represents the horizontal disturbance.

The dynamic model of the vertical channel is established as follows:

where

is the total thrust of the drone;

,

, and

are the displacements, velocities, and accelerations in the z direction in the world coordinate system, respectively; m is the total mass of the drone; g is the acceleration of gravity;

l is the drag coefficient; and Δ

P is the mechanical hand dynamics term.

We define

as follows:

where

can be considered as a lumped uncertainty term that includes counter drone gravity, torque, manipulator dynamics, and external disturbances. Then the above formula can be changed to:

The following content is the posture dynamics model

where

represents the torque of the propeller relative to the body axis;

denotes the rotational inertia about the three axes of the counter drone;

corresponds to the angular velocity in the body coordinate system;

stands for the angular acceleration in the body coordinate system; and

signifies the gyroscopic torque experienced by the drone.

Based on the small perturbation assumption, we obtain

where

.

2.2. Image-Based Visual Servoing Method

During the theoretical analysis phase, two fundamental assumptions are provided.

Assumption 1: The relative position between the counter drone’s body coordinate system and the camera coordinate system remains constant.

Assumption 2: The counter drone can record in real time the center coordinates of the illegal drone, which are acquired via machine vision methodologies, such as YOLO-v5 [34], and can be used to predict its future trajectory center coordinates .

The core idea of IBVS is to achieve the coincidence of the future trajectory center coordinates

of the illegal drone and the center coordinates of the manipulator claw in the pixel coordinate system {

i} [

35]. The relative positional relationships between the camera, camera plane, and the illegal drone are illustrated in

Figure 3. In the figure,

represents the camera coordinate system, and

represents the pixel coordinate system.

The counter drone is equipped with a forward pinhole camera fixed on its lower part, denoted as coordinate system . and represent the image plane axis and the camera focal length, respectively. The quadcopter drone is an underactuated system. To decouple the dynamics of the quadcopter, a virtual camera frame is introduced. The origin of coincides with C, and the axis is always parallel to the axis. Additionally, the definition of the virtual image plane is similar to the definition of the image plane in C.

Assuming the illegal drone is a stationary target point

P, with the world coordinate and the virtual camera frame,

is

and

, respectively. Their transformation equations can be expressed as

where

Differentiating

with respect to time

where the linear velocities of the illegal drone in the framesworld coordinate and virtual camera frame

are denoted as

and

, respectively, with the unit vector

.

Let the coordinates of the illegal drone P in the virtual image plane be labeled as

P. The perspective projection equation in camera frame

is then expressed as:

Substituting the above equation into Equation (11), the visual servoing equation is obtained, and the expression for the illegal drone

P in the virtual image plane is:

where

Through the Jacobian matrix Jv in Equation (13), it is evident that is not related to the pitch and yaw angles of the counter drone. To maintain the stability of the counter drone’s flight, the desired pitch and yaw angles are set to zero.

Equation (13) expresses how the illegal drone moves in the image plane when the camera is in motion. For visual servoing, the focus is on its inverse problem—given the motion of the illegal drone in the camera plane, we determine the camera’s motion.

We then transform Equation (13) into:

The first term of corresponds to the velocity of the drone in the z-axis direction, denoted as B. Under the assumption of small perturbation linearization, the second term represents the angular rate of the desired roll angle. With this, the dynamic deconstruction of the counter drone in visual servoing is concluded.

2.3. Extended Kalman Filter Trajectory Prediction

To reach the interception position in advance, the EKF algorithm is employed to predict the position of the illegal drone in pixel coordinates. The EKF algorithm utilizes Taylor series expansion to linearize the treatment of nonlinear systems, followed by recursive computation and estimation. Its nonlinear expression is given by

In the equation, f(x) and h(x) represent the nonlinear mapping relationship; k is the time step; is the state vector; is the control vector; is the process noise; is the measurement vector; is the measurement noise; k−1 corresponds to the previous time step; ; ; ; ; is the observation error covariance matrix; is the measurement error covariance matrix.

The linearization process is as follows:

The linearized equation for Formula (11) is as follows:

The prediction process comprises a prediction equation and a correction equation.

Correction Equation:

where

;

represents the true value of the visual servoing feedback;

is the a priori estimate; I is the identity matrix.

Directly predicting the coordinates in (

x,

y), we can define:

obtaining

We then define

and obtain

2.4. Controller Design

The goal of controller design is to endow the counter drone with high maneuverability and precision during the interception of the illegal drone, suppress disturbances caused by the illegal drone to the system, and achieve strong robustness.

Following the core idea of IBVS, the tracking error is defined as:

In the equation, and represent the tracking errors in the horizontal and vertical directions between the predicted center and the visual servoing center, respectively. denotes the coordinates of the visual servoing target point, chosen here as the center of the manipulator claw in the camera plane. represents the coordinates of the predicted center.

The drone’s control process is illustrated in

Figure 4. To ensure the precise and rapid tracking of the target center in visual servoing, a controller is designed. We take the vertical channel as an example.

The following sliding mode surface [

20] is chosen

where

;

.

To eliminate oscillations and achieve finite-time convergence, the FTSM reaching law is employed.

where

,

is the sign function;

;

.

The system’s stabilization time is

Since

, considering Equation (4), the control law is designed as follows:

where

.

Due to the inability to establish an accurate mathematical model for the impact disturbance at the moment of interception, the TDE technique is employed for online estimation and real-time compensation of unknown disturbances. This approach enables the acquisition of the nominal model of the controlled object, thereby simplifying the controller structure.

Based on the vertical channel’s dynamic model (4), in conjunction with Equation (27), the TDE technique is utilized to obtain

:

where

t represents the current time, and (

t −

k) corresponds to the current time delayed by

k time. The complete expression of the control law is:

Similarly, the control law’s expression for the horizontal channel is

Taking the vertical channel as an example to demonstrate the stability of the proposed controller, the Lyapunov design is formulated as

The derivative with respect to time is

Taking the derivative of Equation (28) and substituting it into Equation (35), we get

By simultaneously solving Equations (30) and (31), we obtain

For the estimation error of TDE, there always exists a positive number such that is bounded, i.e., .

Substituting Equation (37) into Equation (36), we get:

The above equation is further discussed in the two cases

and

For equation (39), when

,

, the sliding surface can converge to the region in finite time

where

.

For Equation (40), when

,

, the sliding surface can converge to the region in finite time

where

.

Combining Equations (41) and (42), the sliding surface converges in finite time to

where

.