1. Introduction

The rapid development of uncrewed aerial vehicle (UAV) technology has enabled its increased application in civil operations, including modelling of hazardous gas leakage [1,2], infrastructure inspections [3,4], monitoring of morphological changes in coastal areas [5], traffic incident management processes [6], as well as various agricultural activities and related design issues [7].

There is a growing interest in scientific research on the use of drones equipped with thermal cameras in search and rescue (SAR) missions, with a focus on their effectiveness, limitations, and potential technological capabilities. This discussion covers aspects such as the optimal integration of thermal infrared (TIR) technology with UAV platforms, challenges caused by environmental conditions [8], and the accuracy of victim detection [9,10]. Advances in artificial intelligence and machine learning are further expanding the possibilities for automated detection and improved operational efficiency. The discussion is supported by various studies and field tests aimed at improving the use of thermal imaging drones for more effective SAR operations [11,12], especially in challenging conditions such as night-time operations [13,14,15] or difficult terrain [16].

The application of a thermal camera plays a significant role in the use of drones in SAR operations in maritime and coastal areas [17]. The possibility of automated detection considerably enhances the success of these actions. Moreover, the combination of optical and thermal cameras can further increase detection accuracy in maritime environments, especially during daytime searches [18]. It is also important to understand how thermal imaging captures thermal signatures and how they appear in the water, allowing rescuers to conduct a more efficient search [19].

Given the risk of drowning and the faster onset of hypothermia in colder water, rapid detection is crucial. At a water temperature of 4.5 °C, a person can survive for less than an hour (Table 1). Therefore, there is a clear need for fast, reliable, and efficient systems for victim detection and verification during SAR operations [20,21].

Despite many technological innovations over the last decade, maritime accidents continue to pose a significant threat to human lives [23]. Consequently, there is a recognised need to improve maritime SAR operations and the technology used for them [24,25,26].

The use of drones in maritime SAR operations has several advantages over traditional aerial surveillance with SAR aircraft and helicopters, including fast response times, low logistical requirements, cost-effectiveness, and extensive SAR area coverage (through the use of multiple drones). On the other hand, the flight duration of electrically powered drones is limited by battery capacity, which restricts their application to coastal areas, depending on the drone’s propulsion capabilities [17]. Given their relatively low payload capacity, commercially available drones are primarily used for surveillance and detection in maritime SAR operations and are equipped with optical (RGB) and TIR systems.

The detection, recognition, and identification (DRI) requirements based on the Johnson criteria (one line pair for detection, three line pairs for recognition, and six line pairs for identification) are the standard. “Detection” refers to the distance at which a target first becomes visible in the image, “Recognition” refers to the distance at which the object’s class can be determined (whether it is a human, animal, or vehicle), and “Identification” refers to the distance at which objects within the same class can be distinguished [27,28]. These DRI distances assume a 50% success rate, and individual manufacturers of TIR equipment determine their own DRI distances, because the DRI requirements do not take atmospheric parameters into account and are therefore open to independent interpretation.

An alternative approach is the “Pixels on Target” method, which is based on the number of pixels over the critical dimensions of the observed target, subsequently converted into pixels per meter (PPM) values using algorithms. For example, if the target is a person, the standard dimensions are 1.8 × 0.5 m; the corresponding DRI parameters (for humans only) are presented in Table 2 [28].
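As a rough illustration of how line-pair criteria can be expressed as pixel counts, the sketch below classifies the DRI level from the number of pixels across a target’s critical dimension. It assumes that one line pair is sampled by two pixels, which is a common sampling assumption and not a value taken from Table 2; the names `LINE_PAIRS`, `PIXELS_PER_LINE_PAIR`, and `dri_level` are hypothetical.

```python
# Johnson-criteria line pairs as quoted in the text (detection, recognition, identification).
LINE_PAIRS = {"detection": 1, "recognition": 3, "identification": 6}

# Assumption (not from the source): one line pair is sampled by two pixels.
PIXELS_PER_LINE_PAIR = 2


def dri_level(pixels_on_target):
    """Return the most demanding DRI level supported by the given pixel count."""
    level = "none"
    # Dict order runs from the least to the most demanding criterion.
    for name, pairs in LINE_PAIRS.items():
        if pixels_on_target >= pairs * PIXELS_PER_LINE_PAIR:
            level = name
    return level


for px in (1, 2, 6, 12):
    print(px, "pixel(s):", dri_level(px))
```

Manufacturer-specific thresholds, such as those in Table 2, would replace the two-pixel assumption in practice.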

Previous research has shown that these values can vary significantly. The most important parameter affecting the efficiency of the TIR system is the temperature difference between the environment and a person’s body. This makes location and season fundamental parameters for the configuration of the TIR systems if the aim is the automatic detection or machine learning-based analysis of thermal images. The authors of one relevant study [17] concluded that for the detection of a person (0.5 m × 0.5 m) with a TIR reflection of five pixels and a temperature difference of 10 °C, the detection altitude is 397 m above the ground and 119 m for a 3 °C difference. For a TIR reflection of 10 pixels, the altitudes decrease to 199 m (10 °C temperature difference) and 60 m (3 °C temperature difference). The scenarios in which these results were achieved included the detection of a person in the sea, people hidden in vegetation, and areas with canopy cover, among others. The results indicate specificities where grass, soil, vegetation, and other land obstacles have their own thermal reflections that affect the ability to detect the target person. In contrast to the mentioned scenarios, this research focuses solely on locating a person in the sea, which represents a realistic scenario for potential maritime SAR operations, with the fundamental environmental factor being the sea temperature. In this study, the effectiveness of aerial thermal detection in maritime environments is analysed, with a focus on identifying the operational parameters that influence detection efficiency.

2. Methodology

The aim of this research is to determine the capabilities of the TIR system during night-time search operations under weather conditions unfavourable for the TIR system (a sea temperature of 20.7 °C and air temperatures of 23–27 °C), focusing on the maximum operating altitude of a drone. The scenario involves a predefined location with two volunteers in the sea, representing individuals for whom a SAR operation is initiated, and a drone equipped with a TIR system searching for them (Figure 1). This involves testing, in real time or through subsequent analysis, the maximum altitude at which the TIR system can detect the presence of individuals in the sea, i.e., the altitude up to which the TIR system provides images that allow successful detection, recognition, and identification of a person in the sea. One limitation of the research is that it was conducted in late summer at relatively high sea temperatures, which significantly limits the potential of thermal drones.

2.1. Testing Setup

The testing took place in the area of Bajnice, located in Split-Dalmatia County in Croatia. This location was selected because hardly any people are present, which allows the research to be conducted without interruption, and because the drone is legally permitted to reach higher altitudes there.

Two adults were selected as test subjects for this study. This study was conducted according to two scenarios:

Survival suit scenario: One volunteer was dressed in a professional survival suit, which is part of the mandatory Life-Saving Appliances (LSAs) on ships. This represents a realistic component of potential maritime SAR scenarios involving commercial vessels.

Swimsuit scenario: The second volunteer was dressed only in a swimsuit, which is appropriate for the summer period when, in the case of a man overboard (MOB) incident, there is a high probability of individuals being in the sea in such attire for various reasons, and maritime SAR operations being required.

This test setup allowed the analysis of the thermal responses of individuals in realistic situations. To collect precise meteorological data, the Kestrel 500 Weather Meter was used. This atmospheric measuring instrument measures basic meteorological parameters: air temperature, humidity, pressure, wind speed, and direction, among others. The integration of this device into the experimental procedure enabled continuous monitoring and recording of the meteorological conditions.

Since the temperature measurements performed with the uncooled sensors of the TIR system depend on environmental temperature, the time required for the sensors to stabilise, the distance to the subject, and the size of the field of view (FOV) [30], the body temperature of the volunteers was measured with the Extech IR200 IR thermometer.

The DJI Matrice 210 V2 drone (Shenzhen, China), equipped with the DJI Zenmuse XT2 thermal camera (Shenzhen, China) (Figure 2), was used for this study. This drone, with a total weight of approximately 4.8 kg (including two TB55 batteries), offers a maximum flight time of 38 min without additional load. Its maximum speed can reach up to 62 km/h. The drone’s ability to maintain stable flight in wind speeds of up to 12 m/s is particularly important for this research and its stated application. The IP43 rating provides additional protection against water spray, which is useful in this context. According to the manufacturer’s data, this drone has a maximum communication range of 8 km (in obstacle-free and interference-free conditions), which makes it a reliable solution when large areas need to be covered. These specifications make the DJI Matrice 210 V2 a suitable tool for fast and reliable searches in maritime SAR operations, enabling victim detection and verification in real time.

The DJI Zenmuse XT2 (Shenzhen, China) system includes a thermal camera equipped with a 13 mm lens, as well as an optical camera with an 8 mm lens and a resolution of 12 MP. However, since the main objective of this research is to determine the optimal altitude values when using the TIR system to achieve DRI at night, under the given meteorological conditions and sea state, the RGB spectrum was not included in the analysis. The advanced version of this camera used in this study (Table 3) enables thermal imaging with a resolution of 640 × 512 and up to 8× zoom.

2.2. Data Collection and Analysis

Two adult volunteers participated in this study as test subjects. Within this study, one volunteer was dressed in a maritime survival suit, while the other wore only a swimsuit. The results obtained from different altitudes and clothing of the individuals in the sea include atmospheric parameters of the air and sea and the body temperature of the person in the sea. These parameters, along with the technical characteristics of the TIR system, determine the degree of success in achieving DRI outcomes in maritime SAR operations.

During the search, the TIR system attached to the drone pointed vertically downward toward the sea surface, clearly defining the field of view (FOV; Figure 3). The thermal camera has a viewing angle of 45° × 37° (Figure 3, α and β). Throughout the research, the TIR system maintained this vertical position, which means that the area of the FOV changed only with altitude. This approach allowed for precise analysis, considering that the search area was accurately defined.

In both implemented scenarios, the drone with the TIR system recorded the thermal reflection of a person in the sea at various altitudes. A series of images was taken at altitudes of 20, 40, 60, 80, 100, and 120 m to analyse the effect of altitude on DRI efficiency. The drone’s TIR camera system was remotely controlled, and the signal was transmitted to a portable computer, where a manual (by eye) analysis of the thermal images was conducted. The observed differences in the measured temperatures of the head and torso/extremities of the person in the survival suit were also analysed separately, as they significantly affect the surface area and the intensity of the thermal reflection.

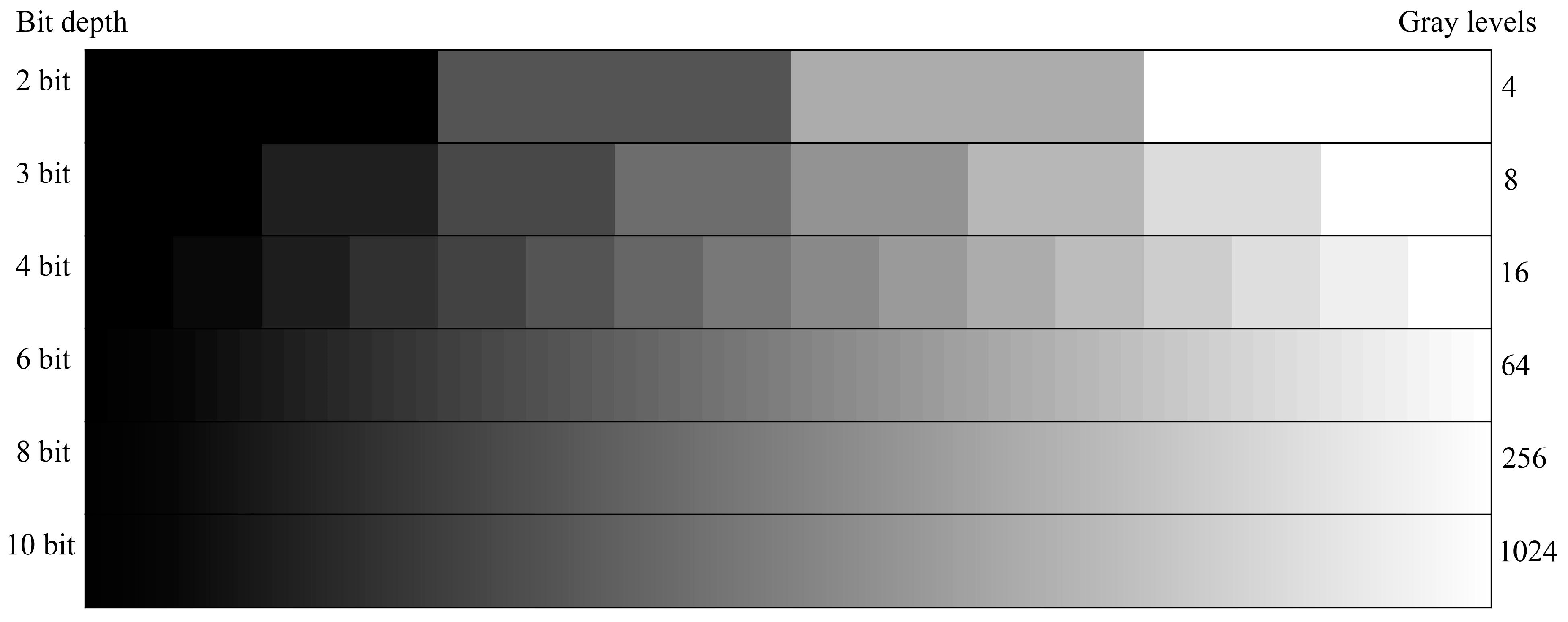

The 13 mm lens of the thermal system, with a fixed aperture of f/1.25, makes it possible to capture a focused image of the entire area beyond the minimum focusing distance of 7.6 cm. The temperature range is displayed in a black-and-white palette, where black represents colder areas and white represents warmer areas. The bit depth of the thermal system is 8 bits, so 256 shades from completely black to completely white can be displayed (Figure 4).

The 8-bit range is sufficient for working with the existing video monitor on the drone’s control unit, as 256 shades allow the human eye to review the images without noticing steps between individual shades of grey. However, there is a limitation when enlarging the image on a larger computer screen. When the image is enlarged, the software selects the shades of grey that most accurately capture the measured thermal range and reproduces them unchanged. With images of higher bit depth (e.g., 10–12 bits), more details can be observed upon zooming in than are captured in an 8-bit image. The full potential of the 8-bit thermal image becomes apparent when zooming in on thermal images and considering the total number of available sensor matrix pixels per square meter. Considering the resolution, the field of view of the thermal system, and the altitude of the drone, the number of available pixels per unit area of the field of view is shown in Figure 5.

The obtained values were achieved with symmetrical x and y axes of the field of view in relation to the altitude of the drone, without any pan or tilt movement of the drone gimbal. Through their analysis, it is possible to determine the PPM parameter that is used for the DRI of a person in the sea. Given the resolution of the thermal system, the number of available pixels per square meter decreases from the initial 1477 (at an altitude of 20 m) to 41 (at the maximum altitude of 120 m). The surface area of the TIR system’s field of view ranges from 221 m² at an altitude of 20 m to 7985 m² at the maximum altitude of 120 m.
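The relationship between altitude, field of view, and pixel density can be sketched as follows. This is a minimal illustration that assumes a nadir-pointing camera over a flat sea surface and a simple pinhole model, using the 640 × 512 resolution and 45° × 37° viewing angle quoted in the text; the function names are hypothetical and the authors’ own calculation may differ in detail.

```python
import math

# Camera parameters quoted in the text.
SENSOR_PX = (640, 512)          # thermal resolution (columns, rows)
VIEW_ANGLE_DEG = (45.0, 37.0)   # viewing angle (alpha, beta)


def footprint_m(altitude_m):
    """Ground footprint (width, height) in metres for a nadir-pointing camera."""
    width = 2.0 * altitude_m * math.tan(math.radians(VIEW_ANGLE_DEG[0] / 2.0))
    height = 2.0 * altitude_m * math.tan(math.radians(VIEW_ANGLE_DEG[1] / 2.0))
    return width, height


def footprint_area_m2(altitude_m):
    """Area of the field of view on the sea surface in square metres."""
    width, height = footprint_m(altitude_m)
    return width * height


def pixels_per_m2(altitude_m):
    """Number of sensor pixels available per square metre of the field of view."""
    return SENSOR_PX[0] * SENSOR_PX[1] / footprint_area_m2(altitude_m)


for altitude in (20, 40, 60, 80, 100, 120):
    print(f"{altitude:>3} m: {footprint_area_m2(altitude):8.0f} m^2, "
          f"{pixels_per_m2(altitude):7.1f} px/m^2")
```

Under these assumptions, the computed values at 20 m and 120 m agree with the figures quoted above to within rounding, and the pixel density falls with the square of the altitude.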

The meteorological parameters of the research location are shown in Figure 6, which indicates a constant sea temperature of 20.7 °C, and air temperature and pressure values that decrease with increasing altitude. The relative humidity of the air increases with altitude, from an initial 44.7% at 20 m to 49% at 120 m. The initial air pressure of 1012.8 hPa (at 20 m) decreases to 1000.8 hPa (at 120 m), as does the air temperature, which drops from an initial 26.2 °C at an altitude of 20 m to 23.8 °C at an altitude of 120 m. An additional parameter considered is the state of the sea, which according to the Douglas Sea Scale [32] was recorded as level 4, indicating wave heights between 1.25 and 2.5 m. It is also important to note that the sea temperature is close to the temperature of a person in the sea. Therefore, with the existing dynamic range (DR) of the thermal system, their temperature difference occupies only a very narrow part of the available shades of grey in the thermal display.

3. Results and Discussion

Broadly speaking, there are two approaches to the interpretation of digital images. The first, photointerpretation, relies on the skill of a human observer (the photointerpreter), while the second relies on machine-assisted interpretation, usually referred to as quantitative analysis [33]. Photointerpretation involves the manual analysis of digital images by a human analyst, where the likelihood of a successful DRI process depends on the experience and skill of the analyst. Computer-conducted quantitative analysis entails analysing the image at the pixel level, grouping the pixels, and creating a map of labels (so-called thematic maps) [34]. Unlike a human analyst, who can usually perform an analysis based on one or just a few images (regardless of the expected DRI success), computer quantitative analysis necessarily uses images from multiple types of sensors, sources, and spectral bands, including the visual spectrum, infrared, thermal, and different radar bands, from ground, satellite, and other remote sensing sources.

3.1. Available Computerised Image Analysis Data

Unlike a human observer, who views an image as a whole and notices details at a large scale, computer analysis of the image is performed at the level of individual pixels, combining all available sources of images, regardless of the source or frequency spectrum. In this process, depending on the frequency spectrum of the image, pixels are grouped with the aim of creating a mathematical model, known as a pixel vector. It contains all available spectral information for each individual pixel in matrix form. Through statistical analysis of the pixel vector, with knowledge of the area occupied by each individual pixel, it is possible to identify what each pixel represents in the observed image. The success of computer analysis of images depends on two basic input factors: the number of input images, which should be as high as possible, and the diversity of the input spectral bands, which should also be as high as possible [34]. This way, sufficient input data are available about each individual pixel within different spectral bands, and these data are subjected to various mathematical transformations with the ultimate goal of recognising shapes, contrasts, patterns, textures, spatial relationships, and orientations. Some of the parameters that undergo these mathematical transformations visually manifest as brightness, contrast, noise, colour composition, light reflection, etc. Given that only a limited number of images in the thermal spectrum were used in this work, it is not possible to provide adequate computerised image analysis or its quantitative metrics; however, some of the methods applicable to the images were used. The histogram is one of the basic indicators of pixel frequency (on an 8-bit grey scale in this case) in an image. The histogram of a thermal image taken from an altitude of 80 m of a subject in a swimsuit (Figure 7a) is shown in Figure 7b.
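How such a histogram is built can be sketched in a few lines. The example below is only an illustration on a small, hypothetical 3 × 4 frame (not data from this study); it counts the pixels on each of the 256 grey levels and reports which part of the grey scale is actually occupied. The function names are assumptions made for this sketch.

```python
def grey_histogram(image):
    """Count how many pixels fall on each of the 256 grey levels (0-255)."""
    counts = [0] * 256
    for row in image:
        for value in row:
            counts[value] += 1
    return counts


def occupied_range(counts):
    """Lowest and highest grey level that actually occur in the image."""
    used = [level for level, n in enumerate(counts) if n > 0]
    return used[0], used[-1]


# Hypothetical low-contrast frame: mid-grey sea with a single brighter target pixel.
frame = [
    [118, 120, 121, 119],
    [120, 205, 122, 120],
    [119, 121, 120, 118],
]

hist = grey_histogram(frame)
low, high = occupied_range(hist)
print("most frequent grey level:", hist.index(max(hist)))
print("occupied grey range:", low, "to", high)
```

A narrow occupied range concentrated around the middle of the scale corresponds to the low-contrast case discussed for Figure 7b.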

The histogram shows the distribution of pixel intensities within the range of 0–255. The left side of the histogram represents the dark pixels, and it is noticeable that the majority of pixels are located around an intensity value of 120, i.e., in the middle of the greyscale spectrum. The total range of the recorded spectrum is narrowed, with neither the darkest nor the brightest tones captured (the upper end lies just above an intensity value of 200, Figure 7). The narrowed shape of the histogram also reflects the image contrast. Contrast is a measure of the difference in brightness between dark and light areas in the image, and the narrow histogram shape indicates low contrast, which results from meteorological and/or lighting conditions on the scene. This highlights the complexity of the DRI process when analysing thermal images of thermally homogeneous objects. Computer analysis also allows for separate analysis of RGB channels. Since the image was originally taken in greyscale (not converted by postprocessing), the visual difference is only available for the blue channel, as shown in Figure 8.

Although the contrast and pixel distribution remain the same, displaying the image in the blue spectrum makes visual identification easier, which is why it is routinely used in manual DRI analysis. Additional basic quantitative parameters are presented in Table 4.

Pixel (specific) values refer to the count of pixels with a certain brightness intensity suitable for DRI analysis, which is individually determined according to the histogram scale and corresponding colour bar.

Additional capabilities of the quantitative computer analysis process enable the modelling of individual image parameters that can be used to facilitate manual DRI analysis, as shown in Figure 9.

Figure 9 systematically shows (a) the original image; (b) the image after the application of the dilation process; and (c) the original image following the application of the exposure rescale intensity algorithm [35]. The figure clearly demonstrates that, by applying different algorithms, the image can be manipulated with the aim of easier detection of the desired object. The mentioned processes can be conducted separately from the comprehensive computer analysis of the image or can be combined.
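The two operations named above can be illustrated with a minimal pure-Python sketch. It is not the library implementation referenced in [35]; it assumes a linear min–max contrast stretch for the intensity rescaling and a 3 × 3 maximum filter for grey-scale dilation, applied to a small hypothetical frame. The function names are chosen for this sketch only.

```python
def rescale_intensity(image, out_min=0, out_max=255):
    """Linearly stretch the occupied grey range to [out_min, out_max]."""
    lo = min(min(row) for row in image)
    hi = max(max(row) for row in image)
    if hi == lo:  # flat image: nothing to stretch
        return [[out_min for _ in row] for row in image]
    scale = (out_max - out_min) / (hi - lo)
    return [[round(out_min + (v - lo) * scale) for v in row] for row in image]


def dilate3x3(image):
    """Grey-scale dilation: each pixel becomes the maximum of its 3 x 3 neighbourhood."""
    rows, cols = len(image), len(image[0])
    out = []
    for r in range(rows):
        out_row = []
        for c in range(cols):
            neighbours = [
                image[rr][cc]
                for rr in range(max(0, r - 1), min(rows, r + 2))
                for cc in range(max(0, c - 1), min(cols, c + 2))
            ]
            out_row.append(max(neighbours))
        out.append(out_row)
    return out


# Hypothetical low-contrast frame with one warm pixel (not data from this study).
frame = [
    [118, 120, 121, 119],
    [120, 205, 122, 120],
    [119, 121, 120, 118],
]

stretched = rescale_intensity(frame)  # warm pixel mapped to 255, coldest to 0
dilated = dilate3x3(frame)            # warm pixel grows into its neighbours
for row in dilated:
    print(row)
```

Stretching widens the grey range around the target, while dilation enlarges the bright region, both of which make a small warm object easier to spot by eye.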

3.2. Photointerpretation

The analysis of thermal images of submerged subjects is carried out separately for scenarios in which the person in the sea is wearing a survival suit or not. In this process, the thermal reflection of the person’s head, extremities, and torso are analysed separately. The recorded night-time temperature of the sea (20.7 °C) falls within the range of the measured temperatures of the person in the sea, with minimal deviations, especially for individuals who are not wearing a survival suit (Figure 10).

The temperature readings of the extremities and torso of the person in the sea (blue line) and the head (black line) are identical (22.5 °C), and they overlap in the graph.

3.2.1. Scenario 1—Submerged Subjects without Survival Suit

When analysing the thermal reflection of the person in the sea without a survival suit, a negative impact of the recorded sea temperature on the thermal image is observed. The thermal display of the person in the sea without a survival suit is characterised by a uniform body temperature (22.5 °C), which deviates from the sea temperature by only 1.8 °C. The research has shown that the applied thermal system, within the recorded weather conditions, is usable from an initial altitude of 20 m up to a maximum of 110 m (Figure 11a,b).

At altitudes above 110 m, the thermal system does not register the reflection of the submerged subject. Although the extremities and the head have the same temperature, at the maximum altitude, the only part of the body that emits thermal radiation with sufficient intensity is the head area of the observed person in the sea. The PPM parameter, which enables the DRI of the submerged subject, decreases with increasing altitude. At the same time, its relative proportion in the total number of available pixels per unit area increases from a minimum of 2.2% at 20 m to 6.14% at 110 m (Figure 12).

Within the existing weather parameters, the system no longer registers thermal reflection at altitudes above 110 m. The number of detected pixels, forming the basis for the DRI of people in the sea, ranges from 33 (at an altitude of 20 m) to 3 (at 110 m). At the maximum altitude, this satisfies the presumed number of pixels for the initial detection phase. Additionally, around the spatial arrangement of the observed pixels, an adjacent, wider area of pixels in partially darker shades of grey was recorded, thus facilitating the silhouette observation of the submerged subject. Although these enable the recognition phase to be performed with higher accuracy, they are not included in the PPM parameter, as the additional pixels can only be identified after the primary, brighter pixels have been detected.
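The relative proportions quoted for this scenario can be reproduced approximately from the detected pixel counts and the pixel density of the field of view. The sketch below assumes the same nadir pinhole geometry as the camera specification (640 × 512 pixels, 45° × 37° viewing angle) and treats the proportion as detected pixels divided by available pixels per square meter; the function names are hypothetical.

```python
import math


def pixels_per_m2(altitude_m, sensor_px=(640, 512), view_deg=(45.0, 37.0)):
    """Available sensor pixels per square metre for a nadir-pointing camera."""
    width = 2.0 * altitude_m * math.tan(math.radians(view_deg[0] / 2.0))
    height = 2.0 * altitude_m * math.tan(math.radians(view_deg[1] / 2.0))
    return sensor_px[0] * sensor_px[1] / (width * height)


def detected_share_percent(detected_px, altitude_m):
    """Detected pixels as a percentage of the available pixels per square metre."""
    return 100.0 * detected_px / pixels_per_m2(altitude_m)


# Detected pixel counts quoted in the text for the subject without a survival suit.
for altitude, detected in ((20, 33), (110, 3)):
    print(f"{altitude:>3} m: {detected_share_percent(detected, altitude):.2f} %")
```

Although the absolute number of detected pixels falls with altitude, the pixel density falls faster, which is why the relative proportion rises.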

3.2.2. Scenario 2—Submerged Subject with Survival Suit

The detection of a submerged subject in a survival suit was carried out under the same weather conditions and sea state. One characteristic of a survival suit is that it retains body heat, extending the time a person can spend in the sea. As it covers the entire body except for the head (face), the thermal reflection differs considerably from that of a submerged subject without a survival suit. The measured temperatures of the covered extremities and torso of the person in the survival suit vary minimally from 18.5 °C to 18.7 °C, while the temperature in the head (face) area remains constant at 29 °C (Figure 10). Considering the recorded sea temperature of 20.7 °C, the temperatures of the person in the survival suit achieve a significantly greater temperature difference, resulting in a higher value of the PPM parameter. This allows for DRI even at an altitude of 120 m (Figure 13a,b).

The distinctiveness of the thermal reflection of a person in the sea with a survival suit compared with a person without a survival suit is reflected in the number and intensity of the observed pixels. At lower altitudes, in addition to the thermal reflection of the head, a limited thermal reflection in the area of the lower extremities is visible (depending on the position of the submerged subject in the sea, as well as the air and sea temperatures). At higher altitudes, both the number of observable pixels and their intensity increase significantly. Since the survival suit reduces the area of the body emitting heat, the exposed part of the body (face) emits a more intense thermal reflection. At the same time, the area immediately around the submerged subject is recorded in the darker part of the spectrum. In this way, the silhouette of the person in the sea is outlined by pixels of a wider DR. At altitudes above 100 m, detection is possible only based on the thermal reflection pixels from the head area (see Appendix A, Figures A1–A7).

The PPM parameter and its relation to the total number of available pixels are greater than for a person in the sea without a survival suit (Figure 14).

The graph shows that the value of the PPM parameter, which enables the DRI phases of a person in the sea, does not decrease significantly over a limited range of altitudes, which can be explained by the influence of the survival suit on retaining heat and decreasing the area of thermal radiation. The number of PPM pixels ranges from 80 (at 20 m) to a minimum of 4 (at the final altitude of 120 m, for the detection phase). This enables the direct identification phase up to 60 m, the recognition phase up to 80 m, and the detection phase up to 120 m (see Appendix A).

3.3. Camera Movement and DRI Capabilities

Considering the capabilities of the equipment used and the activities during an actual SAR operation, a further simulation of the camera system’s capabilities was conducted. The change in the image parameter values necessary for successful DRI under camera motion, i.e., camera tilt, was analysed. Unlike the permanently perpendicular and self-stabilising camera position used in the research, during the simulation the camera changed from its initial position (0°) to a maximum tilt of 70°, where 0° represents the perpendicular position used in the research. The purpose of the simulation is to determine the change in the camera’s field of view (with a constant viewing angle of 45° × 37°) and the number of visible pixels/m² with camera motion and simultaneous change in altitude. The results are shown in Figure 15 and Figure 16.

The number of available pixels/m² ranges from a maximum of 1477 (at 20 m altitude, 0° tilt) to 0.74 (at 120 m altitude, 70° tilt). Given that acceptable DRI results require a defined minimum resolution (standard person dimension 1.8 × 0.5 m) [28], or the acceptance of a value of five pixels for the TIR reflection of an average person (0.5 × 0.5 m) [17], it is evident that not all altitudes or tilt values are acceptable. More detailed results of the critical range of camera movement are shown in Figure 16.

The number of available pixels/m² does not meet the basic DRI requirements above a camera tilt of 62° for drone altitudes above 80 m. Altitudes from 100 m to 120 m could potentially utilise up to the maximum camera tilt of approximately 40°. Although the parameter of available pixel count/m² is satisfactory, when viewed through the lens of the potential PPM parameter’s success rate and the total number of available pixels per unit area (Figure 12 and Figure 14), caution is necessary when using the drone camera’s tilt function, depending on the selected altitude. A camera tilt up to 40° at all altitudes does not diminish the achievement of acceptable pixel count values (Appendix A, Figure A8). Since atmospheric conditions and sea state were not considered here, the simulation results should be taken as guidelines and regarded as a theoretical basis for future research.

4. Conclusions

Thermal reflections of a person in the sea with and without a survival suit differ in the number of observable pixels, their spectrum (contrast), and the shape they represent. The PPM parameter achieved in this study includes only the brightest pixels, i.e., those closest to the brightest part of the spectrum. It should also be noted that, unlike previous similar studies, the body position of a person in the sea may not always be horizontal. While a person in a survival suit maintains a horizontal position with minimal movement in the sea, a person without a survival suit can change their position, reducing the visible surface and thermal reflection. Additionally, along with the survival suit, the temperature of the surrounding sea is a key factor. Without a survival suit, a person in the sea emits heat more evenly from a larger surface area, reducing the intensity of the recorded pixels, and thereby decreasing the effective altitude for maritime SAR thermal drone operations. With a survival suit, the surface area of heat emission is significantly reduced, increasing its intensity, which the thermal system images as a more noticeable cluster of much brighter pixels. Depending on the position of the person in the sea, at lower and medium altitudes (achieved in this study with the equipment used), additional thermal atmospheric reflection from non-immersed parts of the survival suit can also be recorded. Another key factor for the successful identification of a person in the sea is the resolution and bit depth of the thermal system. An 8-bit system is sufficient for reliable detection of larger thermal differences; however, in warmer seas, where the temperature can fluctuate greatly, the thermal difference between a person in the sea and the environment is minimal. A 10-bit system, for example, would provide 1024 shades of grey, four times as many as the 8-bit system used in this research.
It can be assumed that such a system would achieve a similar proportion of the PPM parameter to the total number of available pixels as the existing thermal system (Figure 12 and Figure 14), and in that case, the thermal images with a higher bit depth would provide better contrast. This improvement could lead to a better ability to detect persons in the sea. The survival suit reduces the surface area of heat emission and raises the intensity of the remaining body emission, resulting in a thermal image with greater contrast (within the available dynamic range). Without a survival suit, the reduced contrast between the person in the sea and the surrounding sea becomes more noticeable. In both cases, a system with a higher bit depth would record a larger number of shades of grey within the available black-and-white spectrum. This would smooth the transitions between adjacent pixels, making the silhouette of the submerged subjects better distinguished from the surroundings, and therefore more recognisable. However, it is important to point out that the greatest benefit from increasing resolution and bit depth is realised during computerised image analysis, as this significantly increases the number of input pixel vectors. A pixel vector represents a single matrix record of a pixel in a format processed by the computer, while a human observer views the image as a whole, not at the pixel level. Due to the limitations of the human eye, increasing bit depth and resolution only partially enhances the usability of such images in manual DRI analysis. In manual analysis, more information can be visually perceived by zooming in on details. Thus, a wider range of shades facilitates the DRI process for a trained analyst’s eye.
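The effect of bit depth on the available grey levels can be illustrated with a short calculation. The sketch below assumes, purely for illustration, that the thermal display maps a fixed temperature span linearly onto the full grey scale; the 20 °C span is a hypothetical value, not a setting of the camera used here, while the 1.8 °C difference is the one measured for the subject without a survival suit.

```python
def grey_levels(bit_depth):
    """Number of distinct grey shades available at a given bit depth."""
    return 2 ** bit_depth


def levels_across_difference(delta_t_c, span_c, bit_depth):
    """Grey-level steps separating two temperatures delta_t_c apart,
    assuming a linear mapping of span_c degrees onto the full grey scale."""
    return delta_t_c / span_c * (grey_levels(bit_depth) - 1)


SPAN_C = 20.0    # hypothetical temperature span shown on the display
DELTA_T_C = 1.8  # body-to-sea difference measured without a survival suit

for bits in (8, 10, 12):
    steps = levels_across_difference(DELTA_T_C, SPAN_C, bits)
    print(f"{bits:>2}-bit: {grey_levels(bits):>4} shades, "
          f"about {steps:.0f} grey steps across {DELTA_T_C} °C")
```

Under this simplified mapping, a higher bit depth spreads the same small temperature difference over proportionally more grey steps, which is the benefit for computerised analysis described above.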

The uniformity of the sea temperature can be an advantage if the temperature difference between the person in the sea and the surrounding sea is sufficient. In this study, the value of the sea temperature is very close to the measured body temperatures, making the possibility of automatic detection of a person in the sea much more difficult and significantly complicating manual detection of a person without a survival suit. On the other hand, the survival suit’s property to retain heat results in an increased thermal reflection of the face as the only uncovered body part, thereby achieving a more contrasted thermal image. The research has shown that in such an environment, the maximum altitude allowing DRI ends at about 60 m (see Appendix A, Figure A3a,b). At higher altitudes, successful recognition/identification phases require heightened attention from SAR operators and careful analysis of thermal images. In this way, under the given meteorological conditions, the sea parameters, and the used TIR system, identification is possible up to an altitude of 110–120 m (see Figure A5, Figure A6 and Figure A7), without camera rotation. This makes sea temperature a key factor in the success of DRI in maritime SAR operations, as in a uniform maritime environment there are no other subjects whose thermal reflection would interfere with or mask the reflection of a person in the sea. In addition, a favourable thermal difference increases the possibility for automatic detection of the person being searched for, which is more pronounced in the winter months and areas with colder seas.