1. Introduction

Emergency rescue scenarios primarily target natural disasters such as fires, floods, earthquakes, and landslides, as well as public safety incidents such as urban counter-terrorism, hostage rescues, and explosive disposal. Typically, natural disasters present complex conditions including communication outages, power interruptions, road damage, and extreme weather, while safety incidents face challenges such as intelligence fog, rapidly changing situations, complex public opinion, and crowd gatherings. Therefore, efficient intelligence collection and upload, as well as command decision distribution, become critical to solving these problems. Drones, with their plug-and-play capability and high flexibility, can effectively expand the boundaries of intelligence collection and deploy airborne base stations to achieve the agile deployment of dedicated rescue networks and have thus become a focal point for researchers [1]. However, drones in rescue scenarios often encounter various environmental disturbances and challenges [2]. Not only do they need to serve as relay nodes to establish network links, but they also need to support the overall system in achieving computationally intensive tasks such as disaster data integration, intelligence situation analysis, and intelligent auxiliary decision-making. In addition, the payload carried by the drones during the execution of related tasks also contributes to the total energy consumption of the drones.

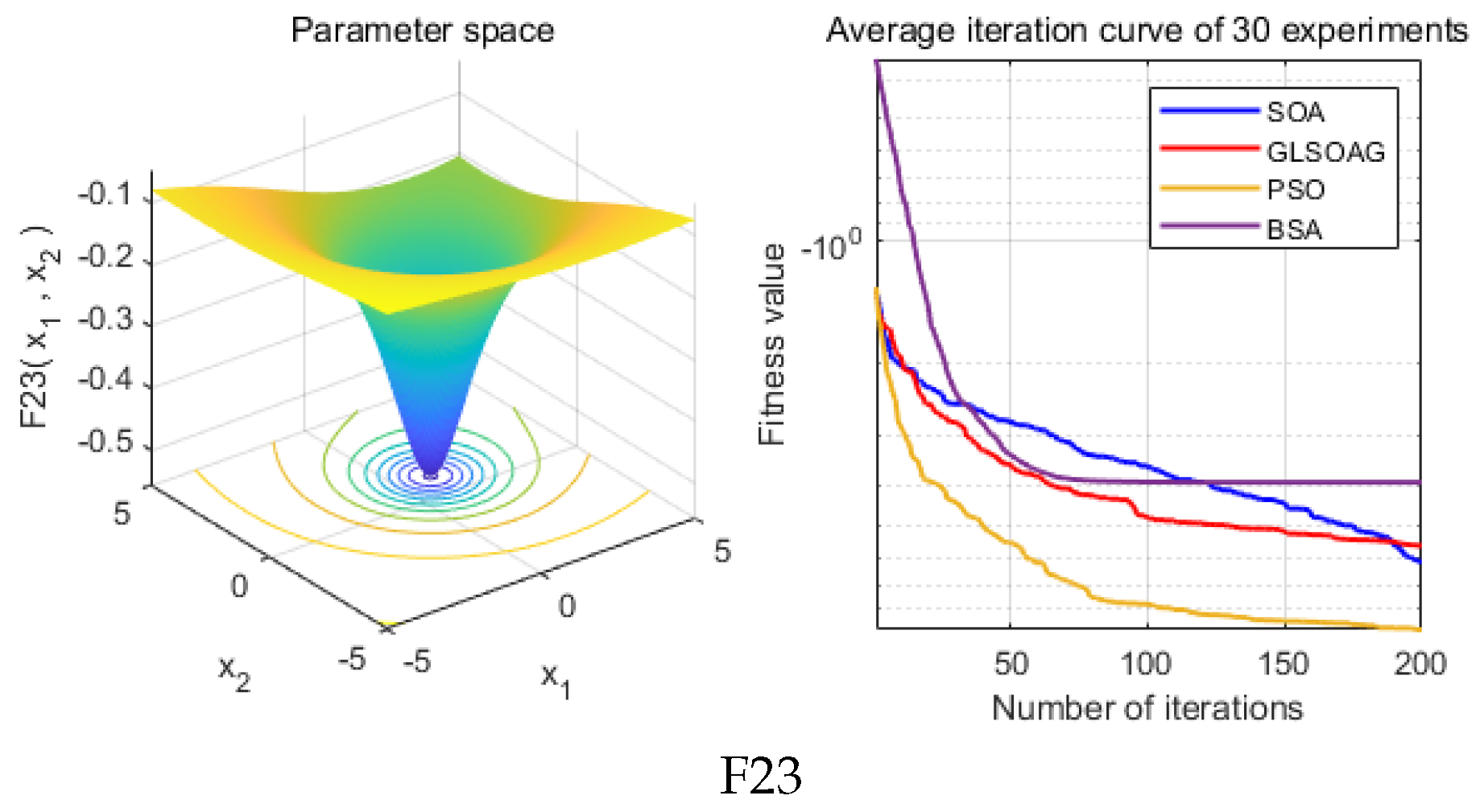

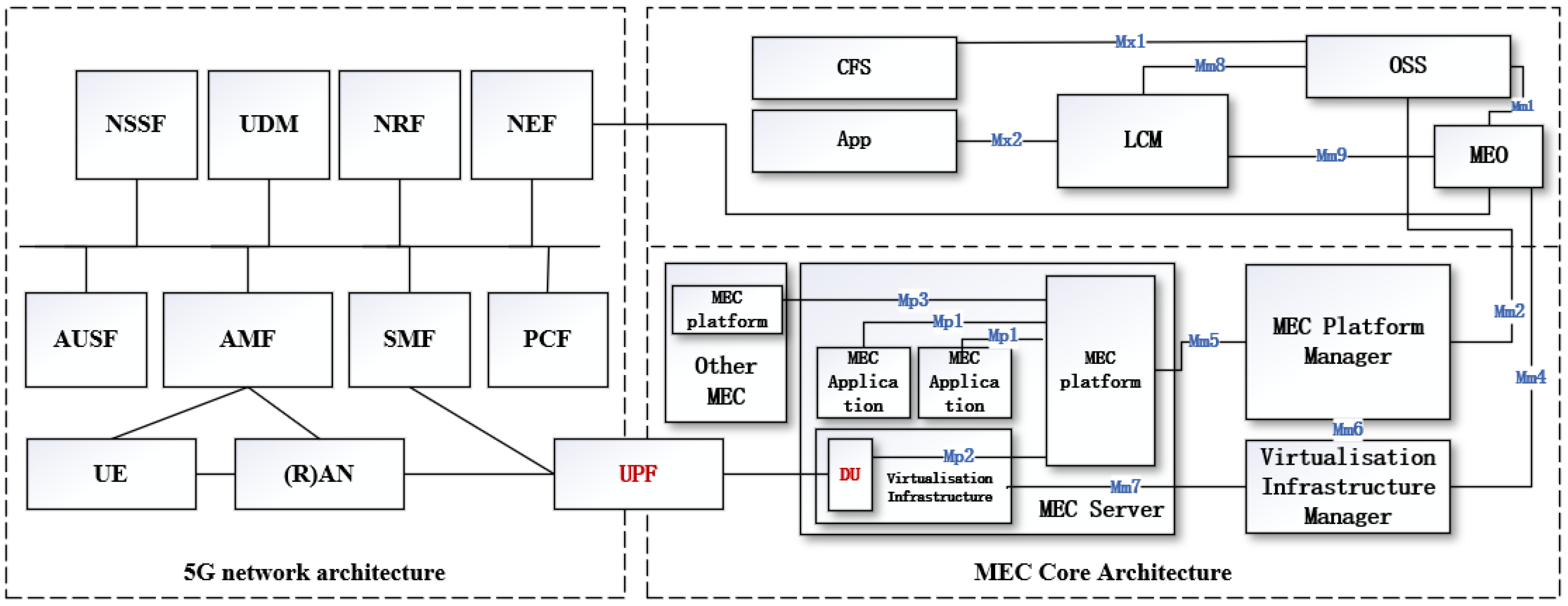

To this end, we comprehensively consider the needs of latency-sensitive and compute-intensive tasks in emergency rescue scenarios, addressing issues such as limited reconnaissance boundaries, complex data fusion, and heterogeneous communication networks. We propose a layered network architecture that is structurally simple and elastically powerful. Using 5G networks [3] as the backbone, it provides a dedicated communication network with large bandwidth, low latency, and high reliability. Mobile Edge Computing (MEC) [4] servers are deployed at both fixed and mobile nodes to offer a localized computing platform for intelligence data processing. This significantly improves the efficiency of drone control and data transmission, reduces the difficulty of data fusion processing, and extends the boundaries of drone search and rescue operations, as well as intelligence reconnaissance. Furthermore, to tackle the contradiction between the limited local processing resources for computational tasks and the higher latency associated with remote processing, we use the Seagull Optimization Algorithm (SOA) [5] as a baseline and propose the Global Learning Seagull Algorithm for Gaussian Mapping (GLSOAG). Corresponding task offloading strategies are established to enable the system to effectively utilize computing resources, balance task delivery latency with system energy consumption, and adapt to emergency rescue operations under complex conditions. The main contributions of this paper are as follows:

Taking into account current issues in emergency rescue scenarios, such as limited reconnaissance boundaries, heterogeneous communication networks, complex data fusion, high task latency, and limited equipment endurance, a hierarchical network architecture based on 5G MEC is designed. Using the characteristics of 5G, including large bandwidth, low latency, and high reliability, MEC servers with the corresponding computing power are deployed as needed. This gives the system architecture plug-and-play flexibility, a simple network, broad applicability, and elastic computing power, effectively alleviating the aforementioned pain points. It not only improves efficiency but also reduces the safety risks for rescue personnel.

The Global Learning Seagull Algorithm for Gaussian Mapping (GLSOAG) is proposed. Firstly, the population is initialized using Gaussian mapping. Secondly, the position update of the original Seagull Optimization Algorithm is changed to a global learning approach, allowing individual seagulls to learn not only from the current optimal individual’s position but also from the historical optimal positions and then update their positions by utilizing a random weight synthesis selection. Finally, a hybrid reverse learning mechanism is designed, which involves lens reverse learning for the optimal seagull and random reverse learning for individuals at the worst positions. Compared to other algorithms, the GLSOAG achieves advantages in convergence speed, optimization accuracy, and stability.

For the designed hierarchical network architecture, the Tammer decomposition method is introduced to decompose the multi-objective optimization problems of the system. Meanwhile, a task offloading strategy suitable for the target system is proposed, and based on the GLSOAG, joint optimization of system energy consumption and latency cost is performed. This effectively reduces the task delivery latency in rescue scenarios and optimizes the total system energy consumption.

2. Research Background and Motivation

Currently, in practical emergency rescue scenarios, three methods are predominantly used to establish dedicated networks: mesh self-organizing networks [6], cluster communications, and specialized network devices. First, biological infrared detection devices, acoustic sensors, and other IoT sensing devices are connected via a mesh self-organizing network. Then, a small number of aggregation nodes are deployed within the network, through which each IoT sub-node transmits the detected data to the upper-level network. Second, in scenarios where communication and power supply are interrupted, mobile communication networks usually cannot operate normally; therefore, cluster base stations are often set up on-site to handle single calls, group calls, and cluster communications for all networked walkie-talkies. Third, terminals carried by rescue personnel can effectively monitor the location, vital signs, and behavioral posture of the rescue personnel, connecting through the lightweight MQTT (Message Queuing Telemetry Transport) network.

Although these devices and systems provide good service individually, as the requirements of rescue missions increase, the quantity and variety of required equipment grow and the network standards diverge, making it difficult for different subsystems to integrate effectively within the same rescue scenario. This poses serious challenges to disaster data fusion, efficient information dissemination, and disaster situation analysis. In response to these challenges, the existing research mainly follows three technical approaches: first, optimizing at the self-organizing network protocol level to improve network compatibility, reliability, and security [7,8]; second, focusing on the algorithmic level for drone payload functions to enrich system capabilities and broaden mission scenarios [9,10]; third, working on the network architecture level to enhance the efficiency of drone mission execution as a whole [11,12]. While these efforts largely meet the needs of emergency rescue missions, the protocol-level work tends to overlook the latency sensitivity of rescue tasks, and the algorithmic-level work is somewhat specialized, catering to specific domains such as visual or radar signals and neglecting the common needs of data fusion. Network architecture-level work has a certain degree of generality but often rests on restrictive assumptions, whereas real-world scenarios are multifaceted. Motivated by this, we design a general system architecture that is network-simple, flexibly modular, and elastically powerful, propose the GLSOAG, and conduct joint optimization of energy consumption and latency, two key factors constraining rescue missions. Simulation experiments show that, under different task loads, the GLSOAG-based method can achieve certain advantages compared to other methods.

In short, as rescue missions demand ever more numerous and varied devices running on different network standards, the components of a system deployed in the same rescue scenario are difficult to make compatible, which complicates disaster data fusion and disaster situation analysis and prediction. For this reason, building on a thorough study of 5G and edge computing system architectures, we design a system architecture with a simple network, flexible modular assembly, and elastic computing power.

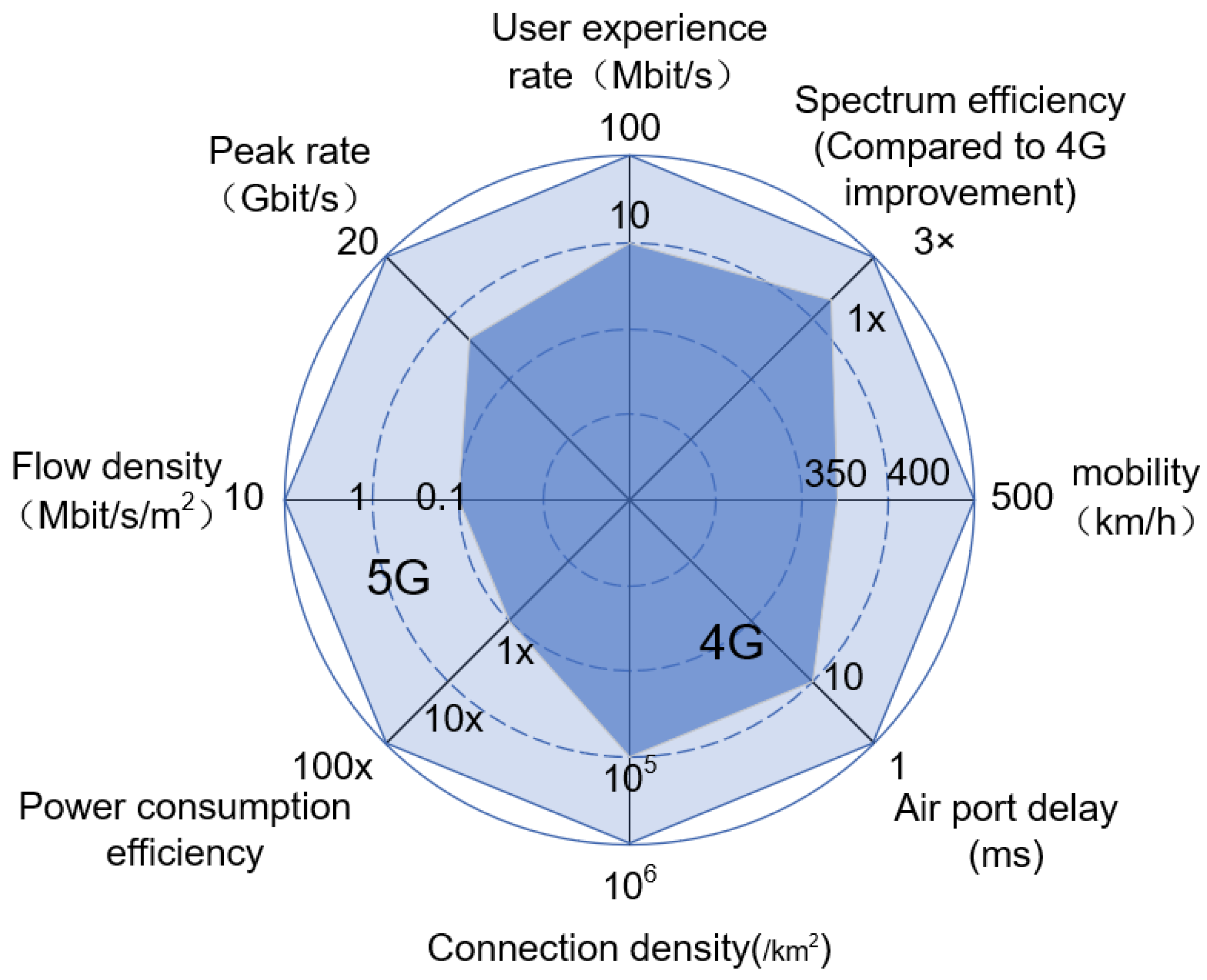

As shown in Figure 1, 5G mobile communication networks and edge computing can be integrated in the User Plane Function (UPF). Based on this theoretical foundation, in the system architecture designed in this paper, MEC servers are uniformly co-located with macro base stations, micro base stations, or vehicle-mounted base stations. This avoids transmission latency between servers and network access, enhancing the efficiency of task processing. Additionally, within the dedicated network area, devices either connect directly via 5G or, from the IoT aggregation nodes, access the network through a protocol converter deployed on the base station side. This preliminarily realizes the concept of a unified network for rescue missions.

5. Method

5.1. System Architecture Design

5.1.1. Demand Analysis

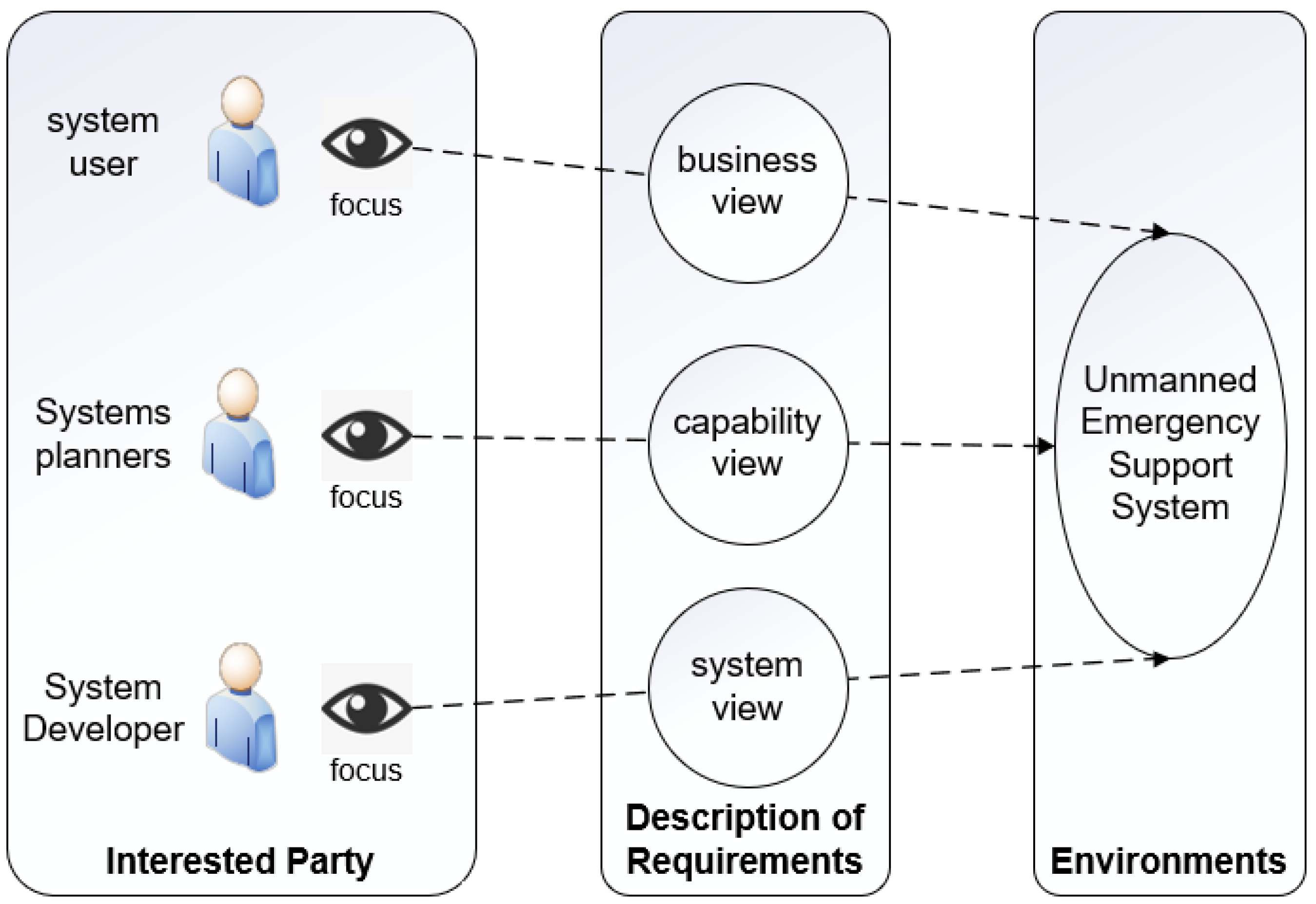

To take advantage of the smart devices carried by UAVs, unmanned vehicles, and other equipment in the mission loop, and driven by the requirements of emergency rescue missions, we introduce the multi-view methodology from the field of systems engineering [22]. It describes the target system from the perspectives of three types of stakeholders, namely, system developers, system planners, and users, and yields a requirements analysis framework on which the target system is designed.

Figure 4 shows the multi-view model, in which the three categories of stakeholders are mapped to the system view, capability view, and business view. From these, the system requirements, capability requirements, and business requirements are derived, respectively, which together form the requirements analysis framework shown in Table 1.

5.1.2. System Architecture

Based on the requirements analysis framework, we first outline the key elements of a system that meets the mission requirements. As the backbone network, 5G provides a mobile network with large bandwidth, low latency, and high reliability that accommodates highly mobile networked devices such as UAVs. At the same time, 5G network slicing provides differentiated network support for the different payloads of the same UAV and gives multiple services logically dedicated networks. With MEC servers as the computing entity at each layer node, the system is suitable for the edge deployment of most common software and large models, and meets the needs of data fusion and situation analysis. The remaining equipment accesses the 5G private network through protocol converters and other transitional means, so that the various types of intelligent terminals in the emergency and disaster relief region can join the network.

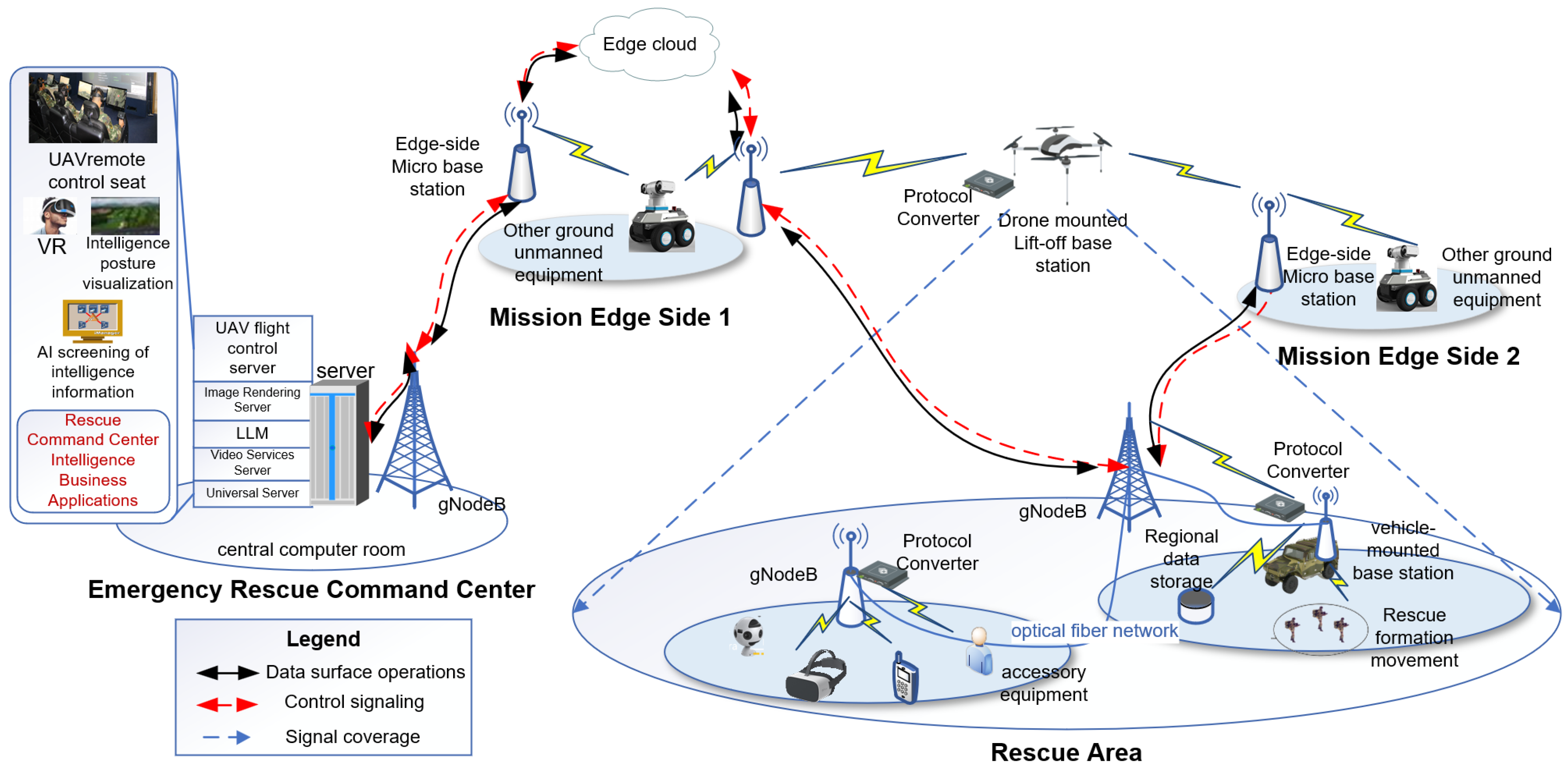

As shown in Figure 5, a 5G macro base station, a central cloud server, and various business applications are deployed on the side of the emergency rescue command center. The 5G macro base station provides wireless network coverage for the command center and connects over multiple hops of fiber optic cable to the micro base station on the edge side of the mission, returning data from the edge and distributing the processing results. The central cloud server hosts drone flight control, AI large models, image rendering, video rectification, preset algorithms, and other general emergency rescue business applications. On the edge side of the mission, 5G micro base stations and Mobile Edge Computing servers are deployed, and the edge cloud is established by emergency communication vehicles or existing communication nodes. It carries drone control signaling in the control plane of the 5G network, carries drone business data streams in the user plane, and allocates network resources to the various types of services based on 5G network slicing. Data in the rescue area are offloaded to the edge cloud for lightweight data processing. In the rescue area, a 5G private network is constructed based on the existing network information infrastructure in the field. If the network is interrupted, the communication vehicle carries a vehicle-mounted base station, the drone carries an airborne base station, and the rescuer carries a backpack base station to form the network, providing high-speed network access for intelligent devices, drones, unmanned vehicles, and other equipment in the rescue area. Because network standards differ, some specialized rescue devices access the network through protocol converters deployed on the micro base station side, which ensures that all devices in the mission area are in the same mission-dedicated network.

5.1.3. System Function

Rescue areas usually deploy drones, unmanned vehicles, robotic dogs, and vital-sign detection devices according to mission needs and field environments, with drones and other devices receiving remote control signaling over the control plane of the 5G network. Meanwhile, the collected intelligence data are transmitted over the user plane: imagery, audio, acoustic patterns, geographic environment, and other information from the rescue area are offloaded within the local private network and shunted, as needed and according to preset rules, to the local area, the edge, or the rear center. Delay-sensitive or simple tasks are solved and distributed locally in the rescue area via the on-board server to provide information support for on-site rescue tasks. Lightweight computing tasks are shunted to the edge side for processing through the private network, and the results are then distributed to the rescue area through the 5G downlink. Large-scale tasks or data fusion tasks, such as disaster situation analysis, situation prediction, public opinion judgment, and risk assessment, are transmitted back to the rear command center and processed using the pre-trained large language model and ample computing resources, and the results are distributed to the edge side or the rescue area via the downlink.

5.2. Feasibility Analysis

5.2.1. Application Feasibility

On the rescue area side, the UAV carries different mission payloads, such as an airborne base station, infrared detection device, or aerial photography equipment, to perform tasks such as disaster intelligence reconnaissance, emergency communication, and material protection as required. The drones are connected to each other through a self-organizing network, and an aggregation node is selected according to local conditions to connect to the 5G base station (gNodeB) and access the 5G network. At the same time, intelligent devices, VR/AR terminals, IoT terminals, and other equipment in the mission area access the 5G network through the protocol converter on the base station side to support application services for the various rescue missions. On the edge side of the mission, MEC servers are co-located with the base station to simplify deployment, and on-demand networking is used to build an edge cloud for deploying AI large models and unmanned equipment control servers. The edge cloud is connected to the 5G macro base station in the rear command center through the fiber optic cable network, and devices in the emergency rescue command center all access the network wirelessly. The emergency rescue command center is located in the fixed rear, where computing, storage, and power resources are sufficient and can be reliably guaranteed. It therefore facilitates the deployment of emergency rescue services such as drone control applications, AI large models, image rendering, and video rectification, and supports business applications such as intelligence processing based on large models, situation analysis and visualization, the cooperative control of drones, and VR/AR remote support.

5.2.2. Technical Feasibility

The implementation at the technical level mainly faces three difficulties.

Difficulty 1. Edge deployment of AI large models. Current approach: ➀ Deploying lightweight and large models based on server Docker environment but with limited resource utilization. ➁ By using large-scale model compression techniques such as quantization, pruning, or knowledge distillation, the model size can be compressed to achieve lightweight deployment, but the accuracy of the model will inevitably decrease. Our solution utilizes cloud and edge collaborative deployment, offloading lightweight tasks such as latency sensitive ones to edge servers for processing, thus avoiding the latency and security risks associated with multi-hop backhaul. When the task exceeds the load of a single machine, it is diverted to other edge servers for processing based on heuristic algorithms. Complex tasks are transmitted back to the central cloud for processing, and the inference results are distributed to the end of the rescue site through the 5G downstream network.

Difficulty 2. The fine-tuning and alignment of large models under low resource conditions. When computing resources are insufficient, large models often struggle to make effective inferences and cannot serve users properly. This article proposes a resource optimization strategy based on heuristic algorithms for a three-layer computing resource network architecture, which can effectively integrate idle resources in the network and enable large models to effectively utilize parallel training strategies such as data parallelism or model parallelism to achieve fine-tuning and alignment.

Difficulty 3. Heterogeneous data fusion. As a transitional strategy, protocol converters are used for heterogeneous networks, and 5G slicing separates the different business networks. In the future, as semiconductor technology develops, more intelligent terminal chips will support heterogeneous network access and have a certain amount of computing power, making the network more concise and efficient. Our research group has accumulated relevant research and field-testing experience on the above difficulties in earlier work.

5.3. Problem Modeling

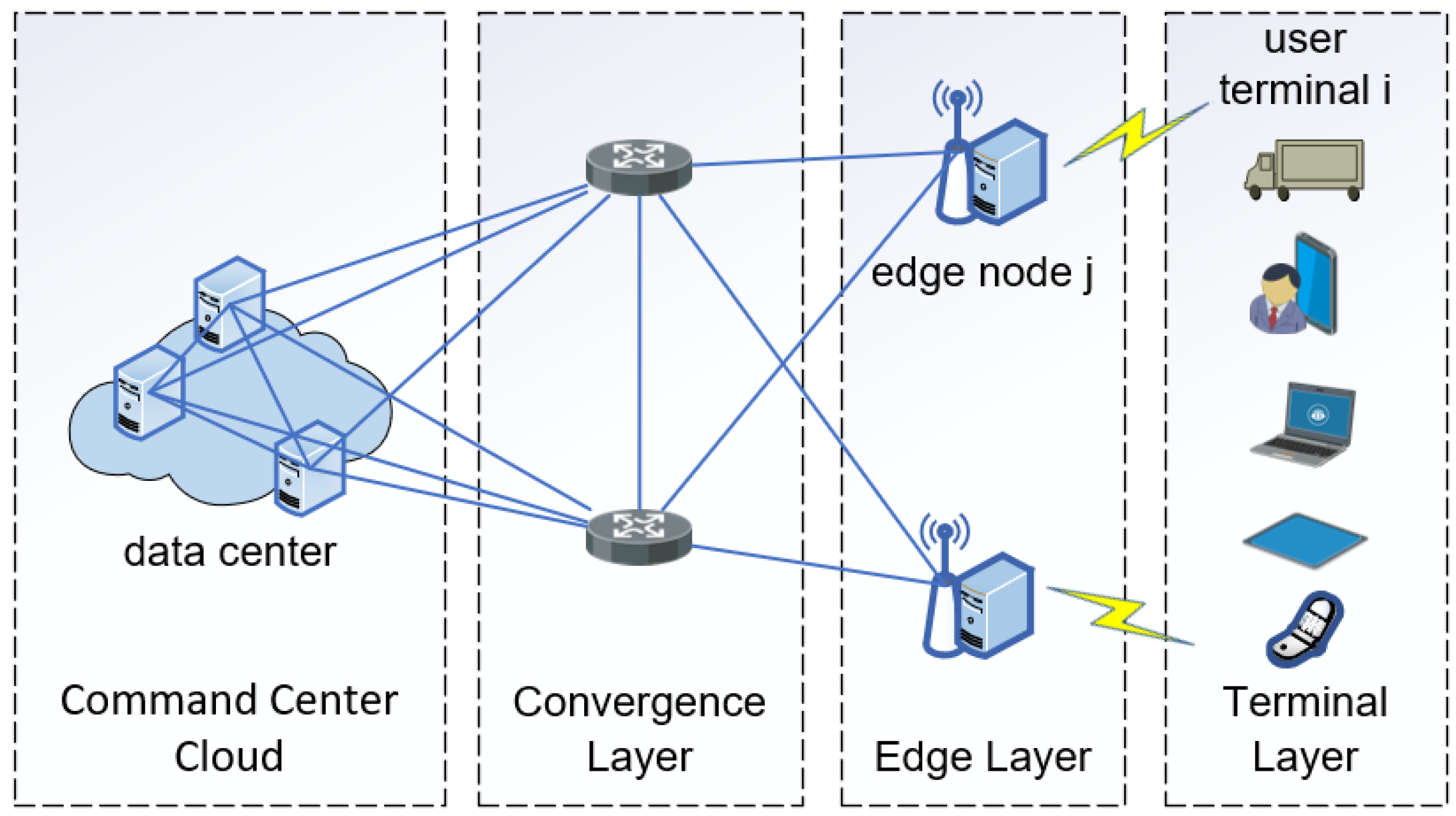

The system architecture shown in Figure 5 is designed according to the common characteristics of most emergency response and disaster relief scenarios, and generalizes across geographic environments, meteorological conditions, communication resources, and computational resources. In specific emergency rescue tasks, the system can be disassembled and expanded as needed to meet the actual task needs. To facilitate the modeling of the target system, the system architecture shown in Figure 5 is simplified and abstracted into the model shown in Figure 6.

The emergency rescue center mainly handles large-scale tasks, such as disaster situation analysis, situation prediction, public opinion judgment, risk assessment, etc., which require data fusion, intelligence correlation, and situation analysis based on pre-trained large language models, and rely on large computing and storage resources. However, the rescue command center has multi-hop fiber-optic cable network transmission from the rescue site, so the up-linking of intelligence data and the downstreaming of processing results require a certain delay overhead. Therefore, when faced with a large intelligence analysis task, or when there are not enough computing and storage resources on the edge side of the task, the intelligence data are transmitted back to the emergency rescue center for processing.

The mission edge side mainly deals with lightweight tasks, such as data aggregation, multi-modal intelligence alignment, content distribution, remote information relay, etc. The mission edge side can usually rely on the existing information and communication infrastructure, or motorized communication security vehicles to build an edge cloud, which is flexible in siting and easy to set up, and is therefore closer to the mission area and avoids a lot of the transmission latency. When the computing and storage resources on the edge side are sufficient and the task requires low latency, the rescue intelligence data will be shunted to the edge side of the task for processing.

The rescue region mainly handles simple tasks, for example, the aggregation and processing of life detection data, IoT sensing node data, and geographic information data, as well as the on-site control of drones, unmanned vehicles, or robot dogs. Therefore, when the task volume is small and the equipment in the rescue task area can autonomously complete simple task processing, the task is processed locally. The meaning of each symbol in this model is given in Table 2.

5.3.1. Rescue Area Model

The rescue region, as the end of the system’s extension to the emergency rescue mission, typically deploys a large number of drones, unmanned vehicles, robot dogs, and IoT sensing devices, so the arithmetic resources of these devices are expressed in terms of clock cycle frequencies

. The latency of the rescue task to be processed by the devices in the field can be expressed as

The energy consumption under the same conditions can be expressed as

In Equation (

2),

is the energy coefficient determined by the computing chip architecture of the device used in the emergency rescue mission [

23], which is proportional to the computing power of the device, considering that most of the devices and equipment in the emergency rescue scenarios are mostly mobile networks or IoT terminals, with limited computational power, so according to the method in the [

24], the energy coefficient of the device on the rescue region side is taken as

.

When facing complex tasks such as rescue intelligence fusion, multi-modal data alignment, UAV obstacle avoidance optimization, etc., the terminal device’s own arithmetic and storage resources are difficult to support, and problems such as high energy consumption, high latency, and short endurance will occur, seriously affecting the efficiency of the rescue mission. Therefore, energy consumption and time delay are taken as the main indicators of actual task performance and user experience. In addition, in order to truly reflect the differentiated needs of rescue site tasks, the optimization weights of delay and energy consumption are flexibly adjusted, the delay weight factor

is introduced, and the total system overhead in the rescue area is expressed by

. In actual emergency rescue tasks, flexible adjustment is made according to different types of application services and optimization needs. For delay-sensitive tasks, such as hostage rescue, threat judgment, etc., the delay weight factor

is further expanded to obtain better optimization of delay indicators, and the energy consumption weight factor is

. The optimization process can be expressed as
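As a hedged illustration of the quantities described in this subsection, the sketch below computes the local delay, local energy, and weighted overhead for one task. It assumes the forms commonly used in the MEC literature: delay equals workload divided by clock frequency, energy equals an energy coefficient times workload times frequency squared, and the energy weight is one minus the delay weight. The names `cycles`, `freq_hz`, `kappa`, and `delay_weight` are illustrative and are not the paper's symbols.

```python
def local_cost(cycles, freq_hz, kappa, delay_weight):
    """Return (delay, energy, weighted cost) for on-device execution.

    All parameter names are illustrative assumptions, not the paper's symbols.
    """
    if not 0.0 <= delay_weight <= 1.0:
        raise ValueError("delay_weight must lie in [0, 1]")
    delay = cycles / freq_hz                 # seconds: workload / clock frequency
    energy = kappa * cycles * freq_hz ** 2   # joules: assumed dynamic-power model
    # Assumed: the energy weight is the complement of the delay weight.
    cost = delay_weight * delay + (1.0 - delay_weight) * energy
    return delay, energy, cost


# Hypothetical task: 1e9 CPU cycles on a 1 GHz device; kappa is chosen for illustration only.
delay, energy, cost = local_cost(1e9, 1e9, 1e-27, delay_weight=0.7)
print(delay, energy, cost)
```

Raising `delay_weight` toward 1 makes the cost track delay almost exclusively, which matches the text's treatment of delay-sensitive tasks such as hostage rescue.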

When the rescue task exceeds the computing capacity of the rescue equipment, overloading occurs. The computation task must then be transmitted over the uplink to the MEC server at the task edge layer for processing. Both 5G and 4G LTE networks adopt orthogonal frequency division multiple access, so data can be transmitted in parallel and without interference between the subcarriers of the uplink channel. Thus, the transmission rate of the user terminal

i in sub-band

is

In Equation (

4),

is the sub-band bandwidth of the upstream transmission of the rescue device,

is the transmit power of the rescue device

i,

is the channel gain between the rescue device

i and the edge node

j, and

denotes the background noise variance, the offloading transmission delay of the device

i can be expressed as
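The uplink rate and offloading delay described here can be sketched as follows, assuming the usual Shannon-capacity form for an interference-free sub-band (bandwidth, transmit power, channel gain, and noise variance are the quantities the text lists). The function and parameter names are hypothetical.

```python
import math


def uplink_rate(bandwidth_hz, tx_power_w, channel_gain, noise_power_w):
    """Achievable rate (bit/s) on one interference-free uplink sub-band."""
    snr = tx_power_w * channel_gain / noise_power_w
    return bandwidth_hz * math.log2(1.0 + snr)


def offload_delay(data_bits, rate_bps):
    """Time (s) to upload a task of data_bits at the given uplink rate."""
    return data_bits / rate_bps


# Hypothetical numbers: 1 MHz sub-band, SNR of 3, 4 Mbit task.
rate = uplink_rate(1e6, 1.0, 3.0, 1.0)
print(rate, offload_delay(4e6, rate))
```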

5.3.2. Task Edge Layer Model

In the task edge layer model, the entire process of diverting rescue tasks to the task edge layer is divided into three steps: upstream transmission, task solving, and result distribution. After the rescue task has been processed and analyzed, the result is encoded and compressed to a smaller scale before being distributed. Because the downlink rate in 5G networks is orders of magnitude higher than the uplink rate, and the encoded result is small, the delay of downstream distribution can be ignored. Therefore, the total time delay for rescue tasks to be diverted to the task edge layer is

where

is the time for task

i to complete processing at the MEC platform, and the MEC server allocates the corresponding computational resources for task

i.

, and thus there is

When the rescue task is diverted from the rescue area to the task edge layer, the energy consumption is mainly generated by the rescue equipment, which can be calculated from the rescue equipment transmit power:

Because the MEC servers and the rear rescue center cloud servers are deployed at mobile communication base stations, their power supply is guaranteed. Therefore, the total energy consumption of a task diverted from the rescue area to the task edge layer only needs to include the transmission energy consumption of the rescue equipment, while for a task diverted from the task edge layer to the rescue command center layer, neither the transmission nor the computation energy needs to be considered. The total cost of task allocation to the MEC servers is therefore

Based on the foregoing, the total overhead of the system for processing computational tasks is the weighted sum of the overheads of each layer:
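A minimal sketch of the edge-layer cost and the system-wide total follows. It assumes, per the text, that downlink delay is ignored, that only the device's transmission energy counts, and that each task is either processed locally or offloaded (a 0/1 decision). All names are illustrative, and the per-task summation is one plausible reading of "weighted sum of the overheads of each layer".

```python
def edge_cost(data_bits, cycles, rate_bps, tx_power_w, mec_freq_hz, delay_weight):
    """Weighted delay/energy cost of offloading one task to an MEC server."""
    t_up = data_bits / rate_bps        # uplink transmission time
    t_exec = cycles / mec_freq_hz      # processing time on the MEC share
    delay = t_up + t_exec              # downlink delay ignored, as in the text
    energy = tx_power_w * t_up         # only the device's transmit energy counts
    return delay_weight * delay + (1.0 - delay_weight) * energy


def total_cost(decisions, local_costs, edge_costs):
    """Sum per-task costs; decision 0 = process locally, 1 = offload to edge."""
    return sum(
        edge if choice == 1 else local
        for choice, local, edge in zip(decisions, local_costs, edge_costs)
    )


# Hypothetical two-task example with precomputed local costs.
c_edge = edge_cost(4e6, 1e9, 2e6, 0.5, 1e10, delay_weight=0.5)
print(total_cost([0, 1], [1.0, 3.0], [2.0, c_edge]))
```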

5.4. Problem Posing

To further optimize the performance of the unmanned emergency support system and make full use of the existing computational and storage resources in the system, this paper is driven by the rescue mission requirements and aims to reduce delay and energy consumption. Based on the Global Learning Seagull Algorithm for Gaussian Mapping (GLSOAG), it jointly optimizes the rescue task diversion strategy, system communication, and computational resource allocation. The weighted sum of delay and energy consumption is expressed as the offloading overhead; therefore, from Equation (10), in order to reduce the total offloading overhead of the target system, problem Q can be obtained:

In the above model,

represents the set of offloading decisions at the task edge layer. The constraints in Equations (

13)–(

17)are modeling the channel resource planning, representing the channel resources acquired by the UAV when it shunts the collected data to the superior node, which indicates that communication channels are allocated to the terminal devices at the time of offloading and that the sum of the sub-bandwidths does not exceed the total bandwidth; (

14) and (

15) are modeling the computational power planning process in the target system, which indicates that the tasks diverted to the edge node are allocated to the reasonable computational resources; and (

13) corresponds to the task diversion policy, indicating that there is a total of

implementation per layer when offloading is performed at the terminal and edge layers.

Data triage is a nonlinearly constrained 0–1 programming problem, whereas in the two subproblems of communication and computing resource allocation decomposed above, the vast majority of the variables are linearly related to the emergency rescue mission and the data triage; the communication and computing resource planning problems are MINLP (mixed-integer nonlinear programming) problems [25]. However, the emergency rescue task, as well as the distributed deployment of the large model in the system, is a coupled, system-level problem whose parts cannot simply be optimized in isolation, and thus the original problem must first be decoupled and split.

5.5. Problem Breakdown

In stochastic or multi-objective optimization problems, commonly used methods include the Lyapunov method [26], MOEA/D (Multi-Objective Evolutionary Algorithm based on Decomposition) [27], and the Tammer decomposition method [28]. These methods decompose a complex problem into multiple equivalent subproblems with decoupled variables, which are then solved here by the GLSOAG. Thus, Equation (12) is decomposed as

Let

correspond to the task diversion problem in parentheses, then there is

Meanwhile, problem Q can be formulated as

In this way, problem Q is decomposed into a computational power allocation and a triage execution problem, and the constraints of the two subproblems are decoupled. In addition, it can be further simplified by substituting the decision variables , the offloading decision variables in the rescue task region , and the task triage variables in the task edge layer . Finally, the problem is transformed into a convex optimization problem and solved using the GLSOAG.
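For orientation, the general shape of a Tammer-style decomposition can be written as a nested minimization. The notation below is assumed for illustration only: X stands for the 0–1 triage (offloading) decisions, F for the resource allocation variables, and V for the total offloading overhead. Which block of variables sits in the inner problem is also an assumption here.

```latex
% Assumed notation: X = triage/offloading decisions, F = resource allocation,
% V(X, F) = total offloading overhead of problem Q.
\min_{X,\,F} V(X, F) \;=\; \min_{X} \Big( \min_{F} V(X, F) \Big)

% Inner problem solved for fixed X, outer problem over X alone:
V^{*}(X) = \min_{F} V(X, F), \qquad Q:\ \min_{X} V^{*}(X)
```

The point of the nesting is that the constraints on X and on F separate, so each subproblem carries only its own constraints.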

6. Algorithmic Improvements

6.1. Ideas for Improvement

The original seagull algorithm has the advantages of an intuitive biological inspiration, few parameters, and good adaptability. However, its convergence speed degrades considerably when the search space is high-dimensional or large. Moreover, the seagull algorithm converges prematurely under some specific conditions. In addition, the performance of the algorithm is sensitive to its parameters, and setting them requires a certain amount of engineering trial and error. The relevant influencing factors are shown in Table 3.

In addition, multiple parameter combinations can be traversed by grid search, random search, or Bayesian optimization to determine the final parameter settings according to the actual problem. To this end, we first use Gaussian chaotic mapping to initialize the seagull algorithm population and improve population diversity. Secondly, we optimize and adjust the population position update method to global learning. By letting seagulls learn from both the current generation’s best seagull and the historical best seagull, and introducing random learning weights, the seagull position is updated by considering the advantages of both. Finally, after each iteration update is completed, the optimal seagull individual is subjected to lens reverse learning, and the worst performing seagull individual is subjected to random reverse learning to enhance their ability to jump out of the local optima and avoid algorithm premature convergence.

6.2. Gaussian Map

A chaotic map is a deterministic map that arises in nonlinear dynamical systems. Its trajectories exhibit stochastic-looking behavior but are neither periodic nor convergent to a fixed point. It is often used in place of random number generation to improve the efficiency of algorithms with the help of chaotic properties. The core idea is to use the unpredictability of chaotic behavior to map the parameters into the value domain of the chaotic space, and then map the results back to the variable space of the optimization problem through a linear transformation. A variety of chaotic maps are currently used in optimization techniques, such as the logistic map [29], the sinusoidal map [30], and the Gauss map [31]. The Gauss mapping used in this paper is defined as follows:

Since the original seagull algorithm has concise parameters and an intuitive model and is easy to apply, combining it with the ergodic and stochastic properties of Gauss mapping can significantly expand the exploration range of the algorithm. Therefore, using Gauss mapping instead of the traditional random initialization method not only increases the diversity of the population but also helps the algorithm avoid falling into local optima, which in turn improves the overall optimization ability.
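A minimal sketch of Gauss-map population initialization is given below. It assumes the form of the Gauss (mouse) map commonly used in the metaheuristics literature, x_{k+1} = 0 if x_k = 0 and (1 / x_k) mod 1 otherwise, followed by a linear rescaling to the search bounds. The reseeding step for orbits that collapse to zero is an implementation choice of this sketch, not something stated in the text.

```python
import random


def gauss_map(x):
    """One step of the Gauss (mouse) map on [0, 1)."""
    return 0.0 if x == 0.0 else (1.0 / x) % 1.0


def gauss_init(pop_size, lower, upper, seed=None):
    """Generate pop_size individuals by iterating the Gauss map per dimension."""
    rng = random.Random(seed)
    state = [rng.uniform(0.01, 0.99) for _ in lower]  # chaotic state per dimension
    population = []
    for _ in range(pop_size):
        state = [gauss_map(v) for v in state]
        # Assumption of this sketch: reseed any component whose orbit hit 0.
        state = [v if v > 0.0 else rng.uniform(0.01, 0.99) for v in state]
        population.append(
            [lo + v * (hi - lo) for v, lo, hi in zip(state, lower, upper)]
        )
    return population


pop = gauss_init(5, lower=[-1.0, 0.0], upper=[1.0, 10.0], seed=1)
print(pop[0])
```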

6.3. Seagull Algorithm

6.3.1. Global Search

Global search simulates seagull migration. Migration is essentially a process of exploring new areas; when the seagull group finds an area with food and resources, it stays there. By simulating this process, SOA can balance the algorithm’s exploration and exploitation abilities. There are three prerequisites for this process:
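As an illustration only: in the commonly published SOA formulation, the migration step combines collision avoidance, movement toward the best neighbor, and staying close to the best seagull. The Python sketch below follows that general formulation rather than this paper's own equations. The names `migration_distance`, `fc`, and `rd`, and the default `fc = 2`, are assumptions taken from the general SOA literature.

```python
import random


def migration_distance(position, best_position, t, max_iter, fc=2.0, rng=random):
    """Distance between a seagull and the best seagull after the migration step."""
    # Collision avoidance: factor a decreases linearly from fc to 0 over the run.
    a = fc - t * (fc / max_iter)
    # Movement toward the best neighbor: b balances exploration and exploitation.
    rd = rng.random()
    b = 2.0 * a * a * rd
    # Staying close to the best seagull: |a * P + b * (P_best - P)| per dimension.
    return [abs(a * p + b * (pb - p)) for p, pb in zip(position, best_position)]
```

Because `a` shrinks with the iteration counter `t`, early iterations take large exploratory steps and late iterations take small ones, which is one way the exploration and exploitation balance can be realized.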

6.3.2. Local Search

Local search simulates seagull predation behavior. When a gull finds a food resource, it continuously adjusts its attack angle and flight speed to ensure the success rate of prey capture. During this period, the gulls round up prey through spiral maneuvers, represented in the x, y, and z dimensions as follows:

$$x = r\cos\theta, \qquad y = r\sin\theta, \qquad z = r\theta, \qquad r = u\,e^{\theta v}$$

where r is the radius of the spiral, $\theta$ is the angle at which the gulls attack, in the interval $[0, 2\pi]$, and u and v are the spiral coefficients. The gull attack position is then obtained from these spiral components together with the position of the best seagull.
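The spiral attack can be sketched in Python as follows. The sketch assumes the commonly published SOA form, in which the spiral components scale the migration distance and the result is offset by the position of the best seagull. Here `theta` denotes the attack angle. The function names, the defaults u = v = 1, and the `distance` argument (the migration distance produced by the global search step) are assumptions for illustration.

```python
import math
import random


def spiral_components(theta, u=1.0, v=1.0):
    """Return (r, x, y, z) of the spiral for attack angle theta."""
    r = u * math.exp(theta * v)  # spiral radius grows with the angle
    return r, r * math.cos(theta), r * math.sin(theta), r * theta


def attack_position(distance, best_position, u=1.0, v=1.0, rng=random):
    """New seagull position: distance * x * y * z + best position, per dimension."""
    theta = rng.uniform(0.0, 2.0 * math.pi)  # random attack angle in [0, 2*pi]
    _, x, y, z = spiral_components(theta, u, v)
    return [d * x * y * z + pb for d, pb in zip(distance, best_position)]
```

When the migration distance is zero, the seagull lands exactly on the best position, which illustrates how the attack step concentrates the population around the current best solution.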

6.4. Attack Strategy Improvement

The update of the seagull positions introduces a global learning approach, in which seagulls learn from both the current generation’s best seagull and the best seagull found so far, and random learning weights combine the two when the seagull positions are updated, as shown in the following equation:

where the first learning weight is a random number in [0, 1], the other two learning weights are sets of random numbers in [0, 1] with the same dimensions as the seagulls, and the two reference positions are the historical optimum and the optimum for the current generation.

6.5. Hybrid Reverse Learning

6.5.1. Hybrid Reverse Learning Strategy

The hybrid reverse learning strategy is a common technique in optimization algorithms. It enhances the algorithm’s global search and its ability to jump out of local optima by generating the opposite of the current solution, evaluating its quality, and keeping the better of the two. In this paper, we introduce a small-hole imaging reverse learning mechanism that improves the algorithm’s search efficiency and convergence accuracy by inverting the feasible solutions during the iteration, and we provide a dynamic adjustment mechanism so that the algorithm adaptively balances its exploration and exploitation capabilities.

6.5.2. Small-Hole Imaging Reverse Learning Strategy

The small-hole imaging reverse learning strategy is a learning method inspired by the small-hole (pinhole) imaging principle in optics, and it is commonly used in optimization algorithms to improve their search efficiency and ability to jump out of local optima. The basic idea is to guide the algorithm toward under-searched regions of the solution space by constructing a “reverse solution” relative to the current feasible solution. This approach increases the diversity of solutions and facilitates global search, and thus improves the algorithm’s optimization efficiency.

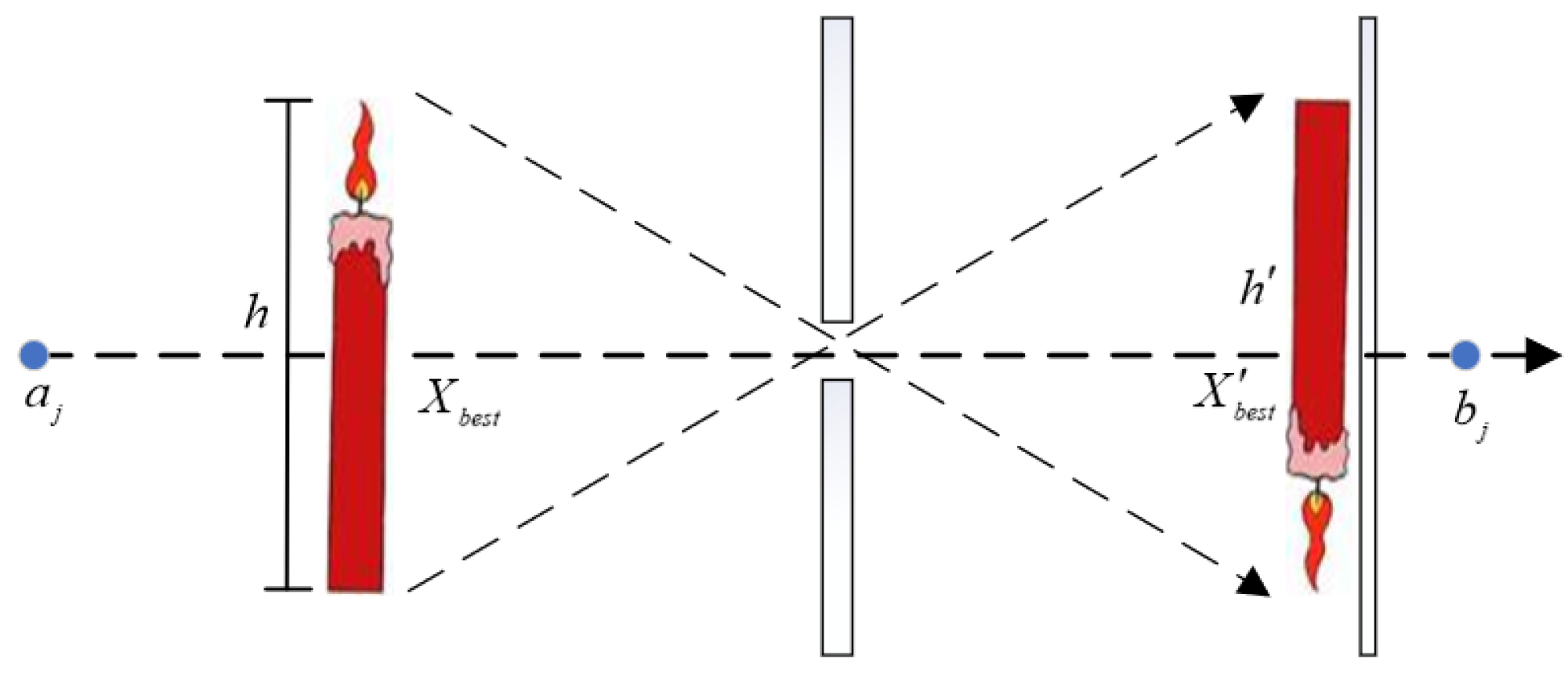

As shown in Figure 7, in the small-hole imaging reverse learning model, the height of the flame is h. Its projection on the horizontal axis is assumed to be the global optimal location, the effective interval of the horizontal axis represents the boundary of the solution space, and the small hole is located at the midpoint o. Under small-hole imaging, the flame produces an inverted image on the receiving screen, and the location of this image is taken as the reverse of the optimal location. The process can be expressed as follows:

Let the ratio of the flame height to the image height be n; transforming the relation above gives the expression

From the above equation, it can be seen that when n = 1, small-hole imaging reverse learning degenerates to conventional reverse learning, so the reverse point is the fixed point given by the conventional reverse learning mapping, which may still be far from the global optimum. For this reason, the position of the receiving screen is adjusted to search for the location of the global optimum. The concept of the best and worst individuals in evolutionary algorithms is introduced into SOA, and when the worst seagull individual is trapped in a local optimum, the small-hole imaging reverse learning mechanism is used to help it jump out of the local optimum.
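A minimal Python sketch of small-hole imaging reverse learning is given below. It assumes the form commonly used in the literature, $x^{*} = \frac{a+b}{2} + \frac{a+b}{2n} - \frac{x}{n}$, where $[a, b]$ is the boundary of the solution space in each dimension and n is the ratio of the flame height to the image height. These symbols and the function name `pinhole_reverse` are assumptions, since the expression may be written differently in the paper. With n = 1, the formula reduces to the conventional reverse point a + b − x, consistent with the observation above.

```python
def pinhole_reverse(x, lower, upper, n=1.0):
    """Small-hole imaging reverse point of x within [lower, upper], per dimension.

    n is the assumed ratio of flame height to image height; n = 1 gives the
    conventional reverse point lower + upper - x.
    """
    reverse = []
    for xi, a, b in zip(x, lower, upper):
        mid = (a + b) / 2.0
        reverse.append(mid + (a + b) / (2.0 * n) - xi / n)
    return reverse
```

Since the expression equals the midpoint plus (midpoint − x) / n, larger values of n pull the reverse point toward the center of the interval, so adjusting n changes where the reverse solution lands.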

6.5.3. Optimal–Worst Reverse Learning Policy

The worst-positioned seagulls tend to affect the convergence accuracy and speed of the algorithm, so a random reverse learning strategy is applied to diversify their spatial positions and obtain stronger global search performance, which can be expressed as follows:

In the above equation, the position being reversed is the spatial location of the global worst seagull, and the weighting coefficient is a random number in the interval [0, 1]. During optimization, position selection is carried out through Equations (34) and (36) in turn, and the global optimal solution is updated after comparing the fitness values before and after the reverse learning. Other reverse learning mechanisms, such as elite reverse learning, which applies reverse learning to m individuals, can effectively improve the efficiency of the early-stage search but constrain local exploitation and slow the convergence of the algorithm. In this paper, the two extreme individuals of the seagull population are processed in parallel and the solution space boundary in the model is dynamically adjusted to follow the focus of the search, so the algorithm’s convergence speed and accuracy are effectively improved.
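The random reverse learning of the worst individual, followed by the fitness comparison, can be sketched as below. The reverse point uses one commonly cited form, ub + r · (lb − x) with r drawn from [0, 1]. This form is an assumption and may not match the paper's Equation (36). The function names and the minimization convention are also hypothetical.

```python
import random


def random_reverse(x_worst, lower, upper, rng=random):
    """Random reverse point of the worst individual: ub + r * (lb - x), r in [0, 1)."""
    r = rng.random()
    return [ub + r * (lb - x) for x, lb, ub in zip(x_worst, lower, upper)]


def keep_better(current, candidate, fitness):
    """Greedy selection for a minimization problem: keep the lower-fitness position."""
    return candidate if fitness(candidate) < fitness(current) else current
```

A usage sketch: compute `candidate = random_reverse(worst, lower, upper)`, then replace the worst seagull with `keep_better(worst, candidate, fitness)` so that the reverse point is accepted only when it improves the fitness value.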

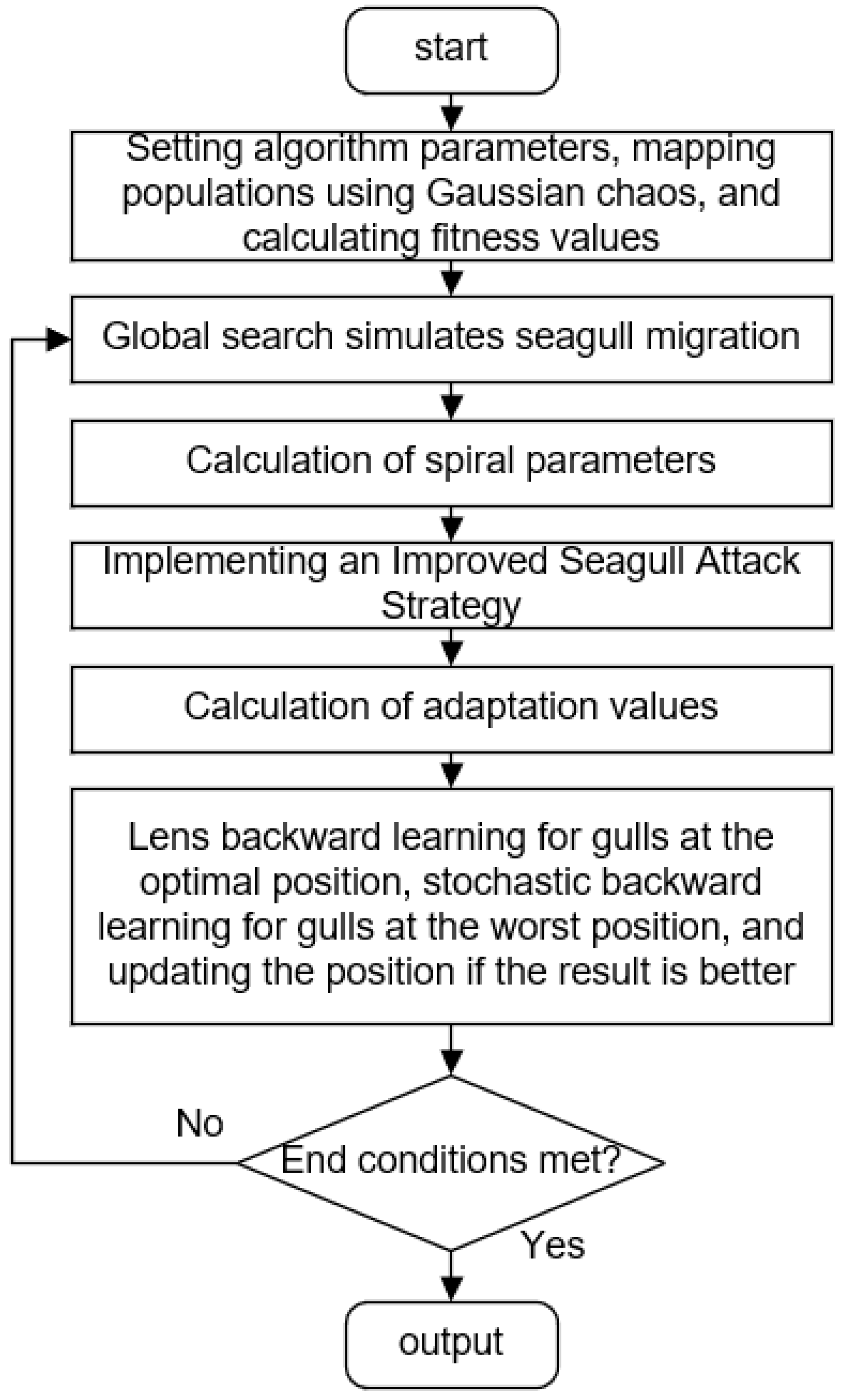

6.6. Algorithmic Process

The computational flow of the Global Learning Seagull Algorithm for Gaussian Mapping (GLSOAG) proposed in this paper is shown in Figure 8.

7. Experimentation and Analysis

7.1. Algorithm Performance Validation

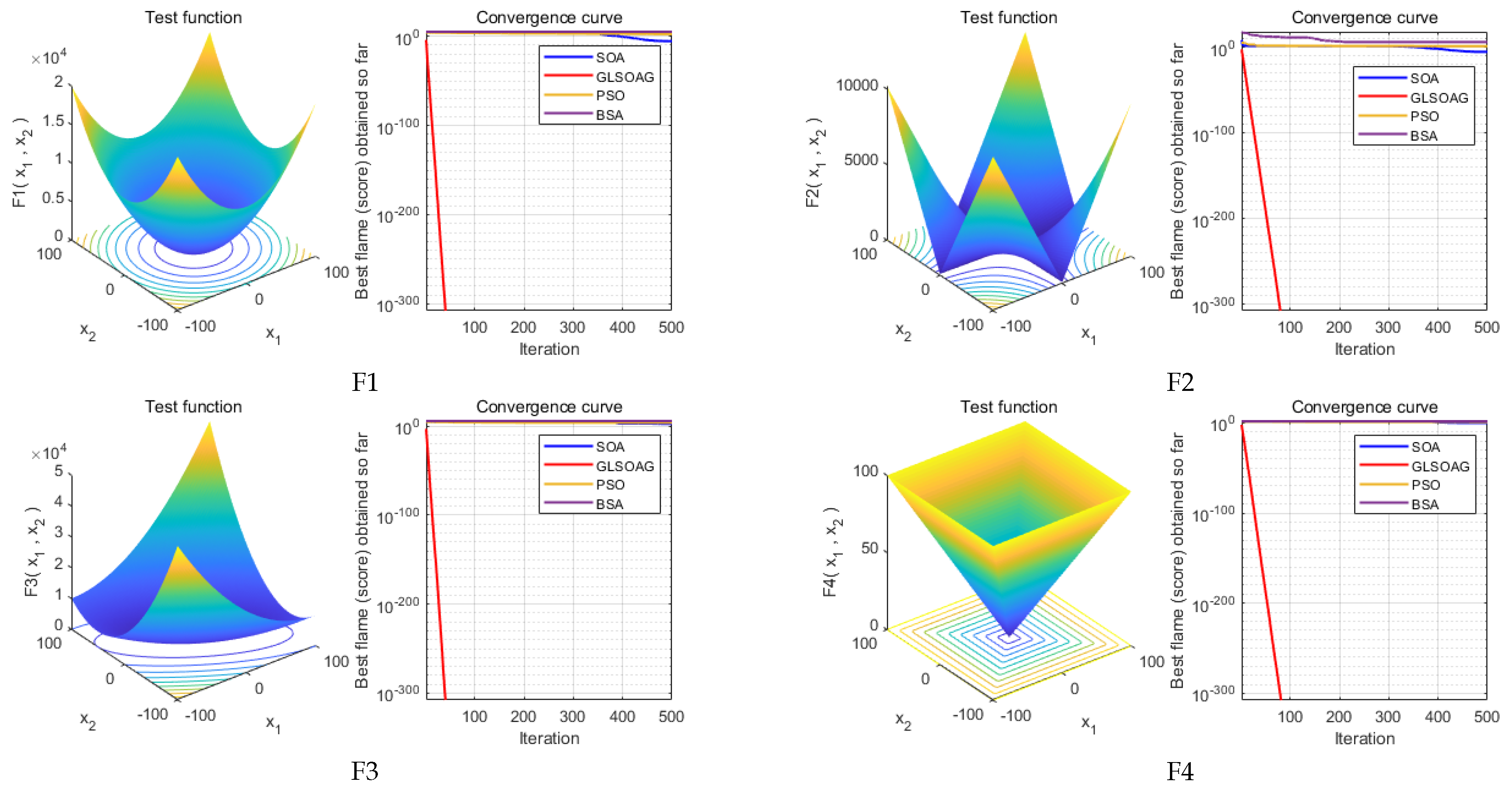

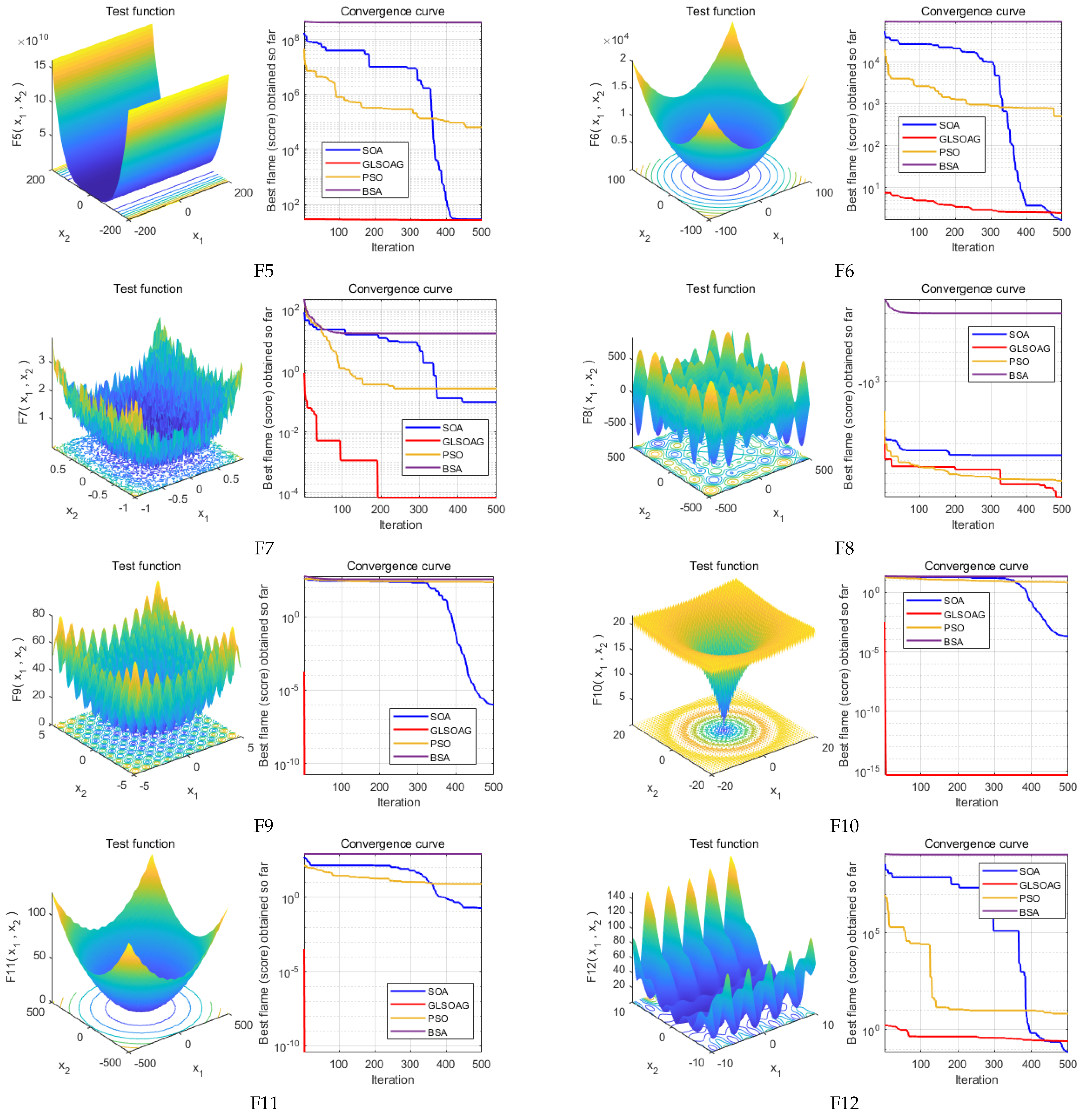

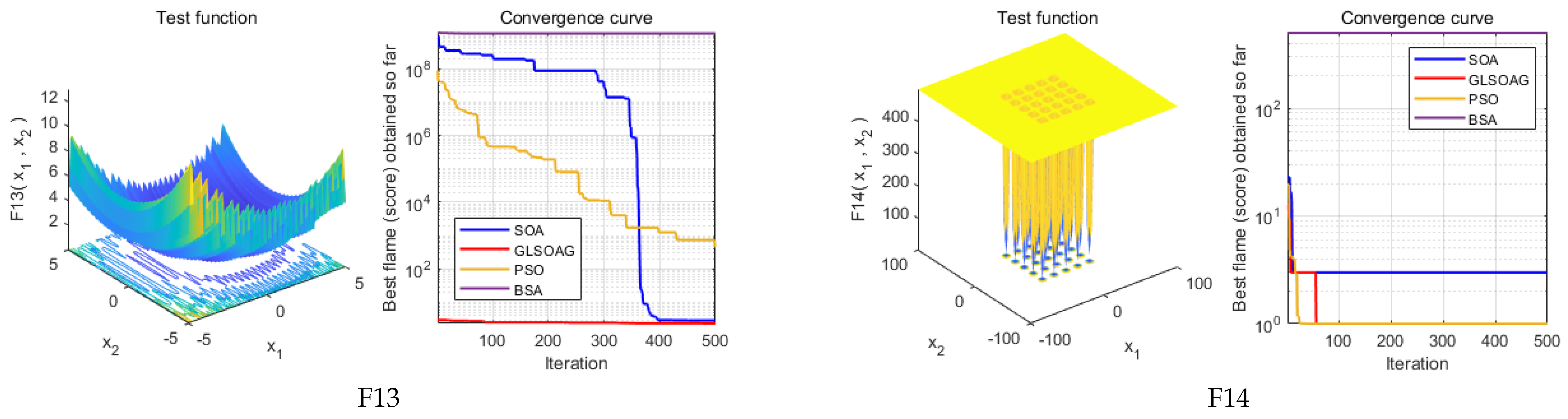

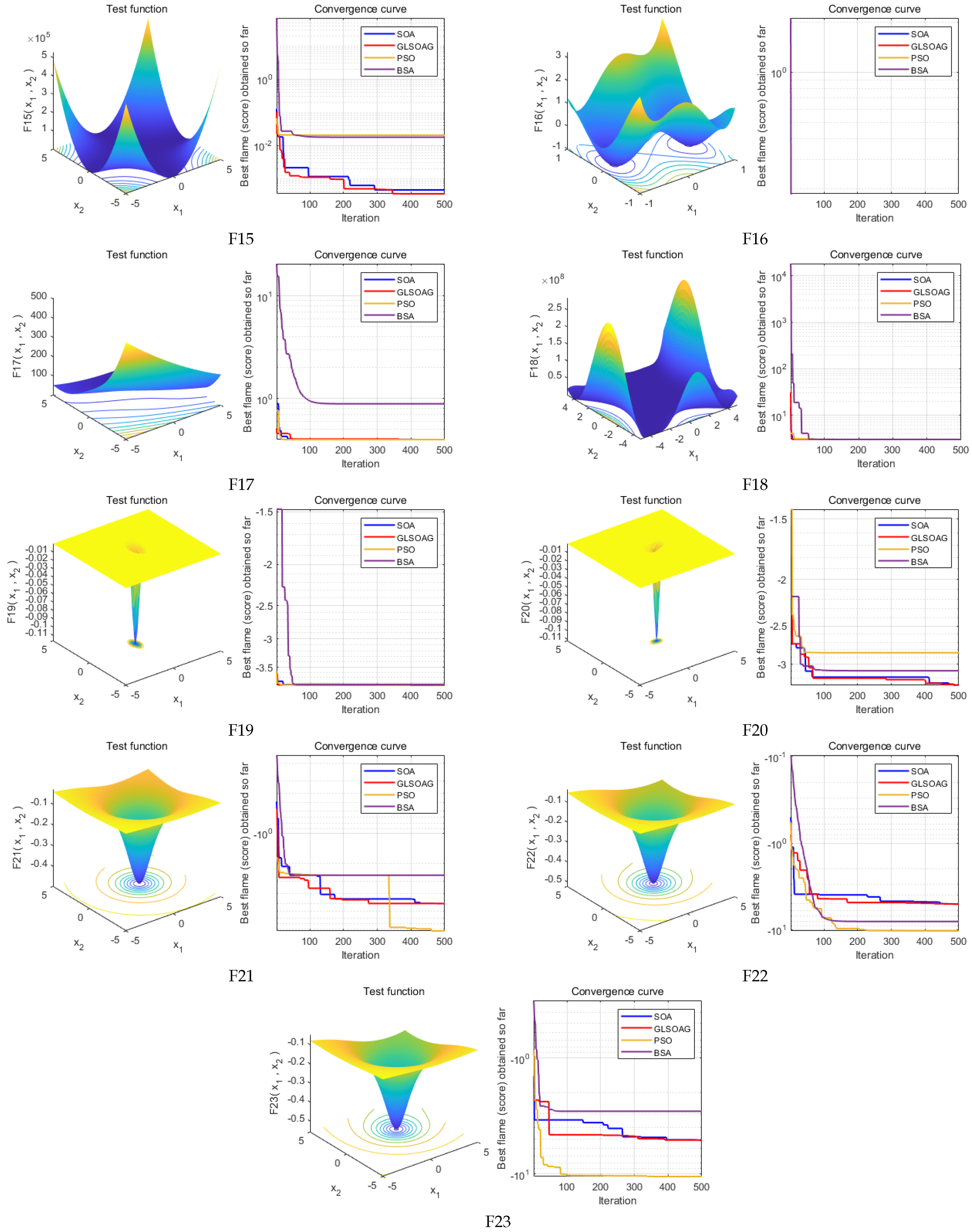

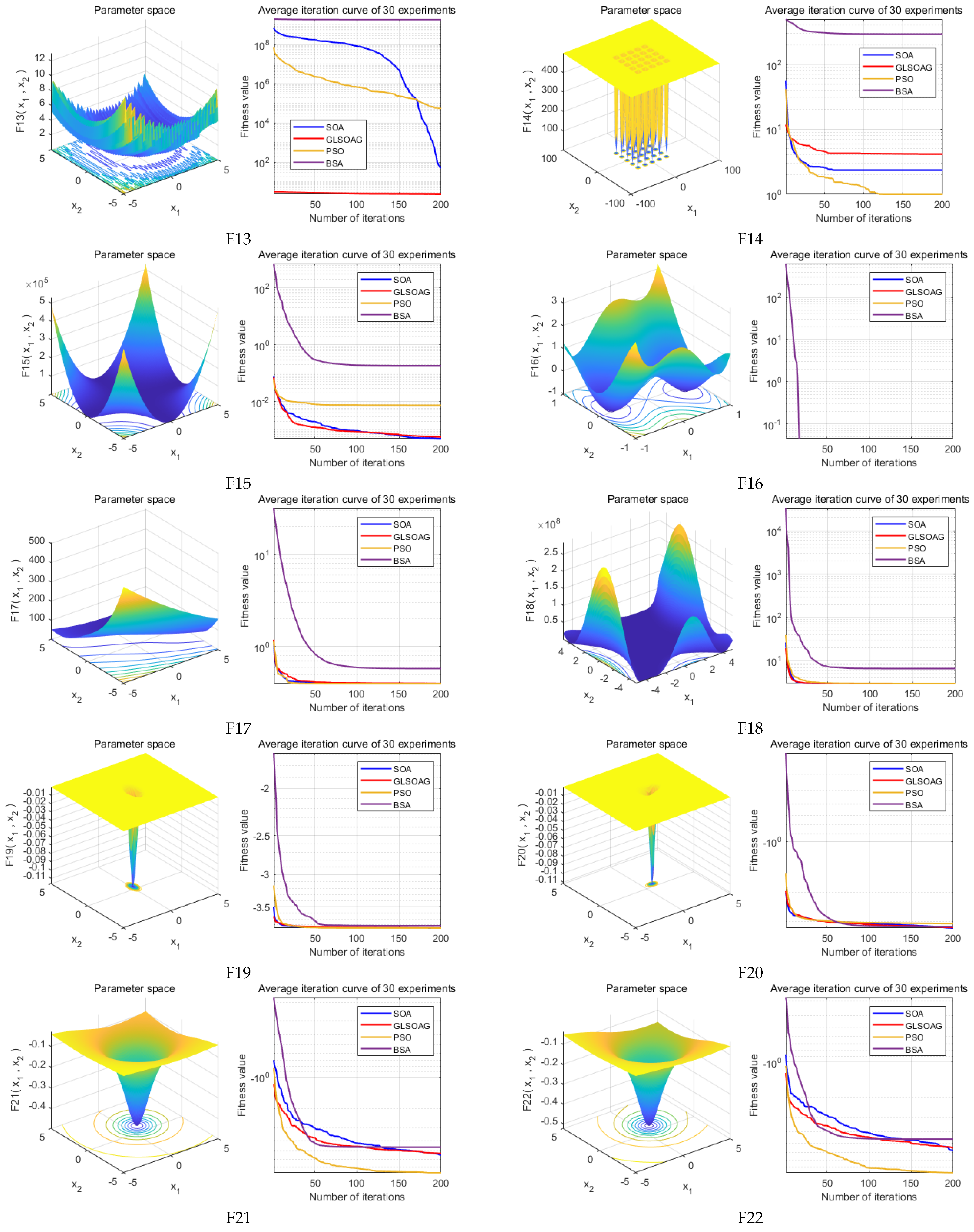

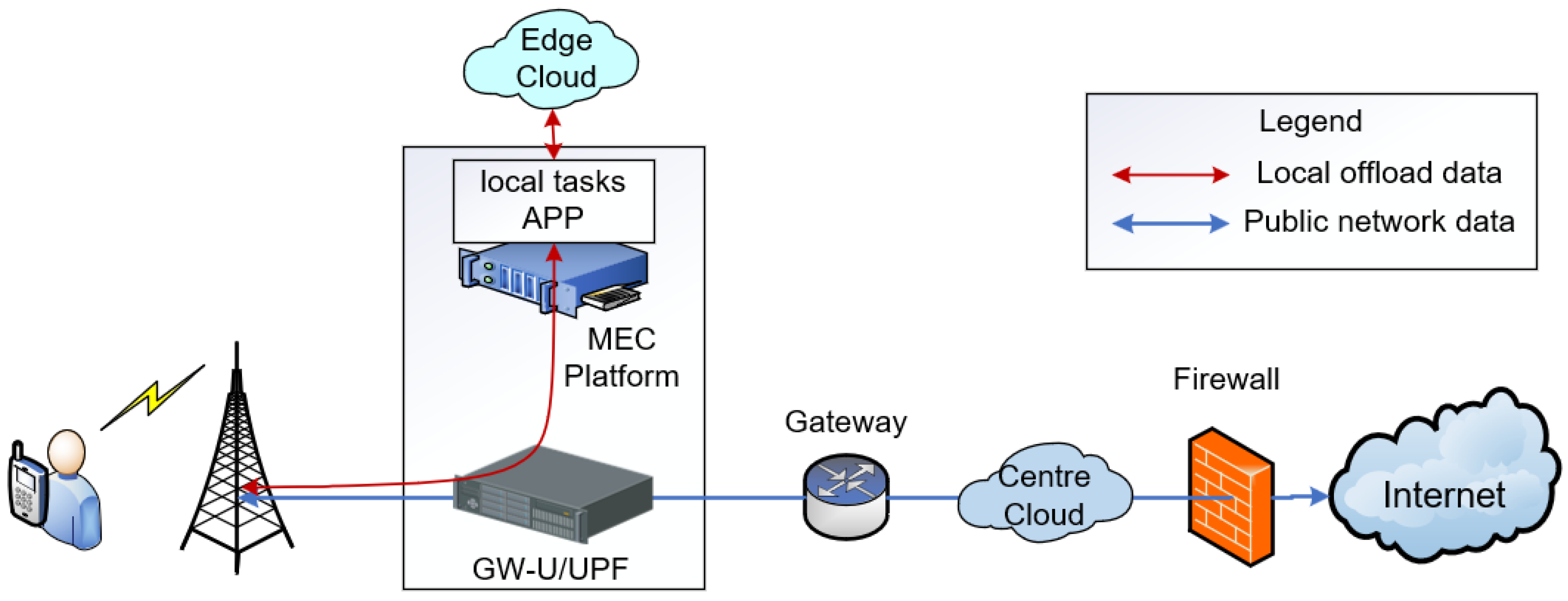

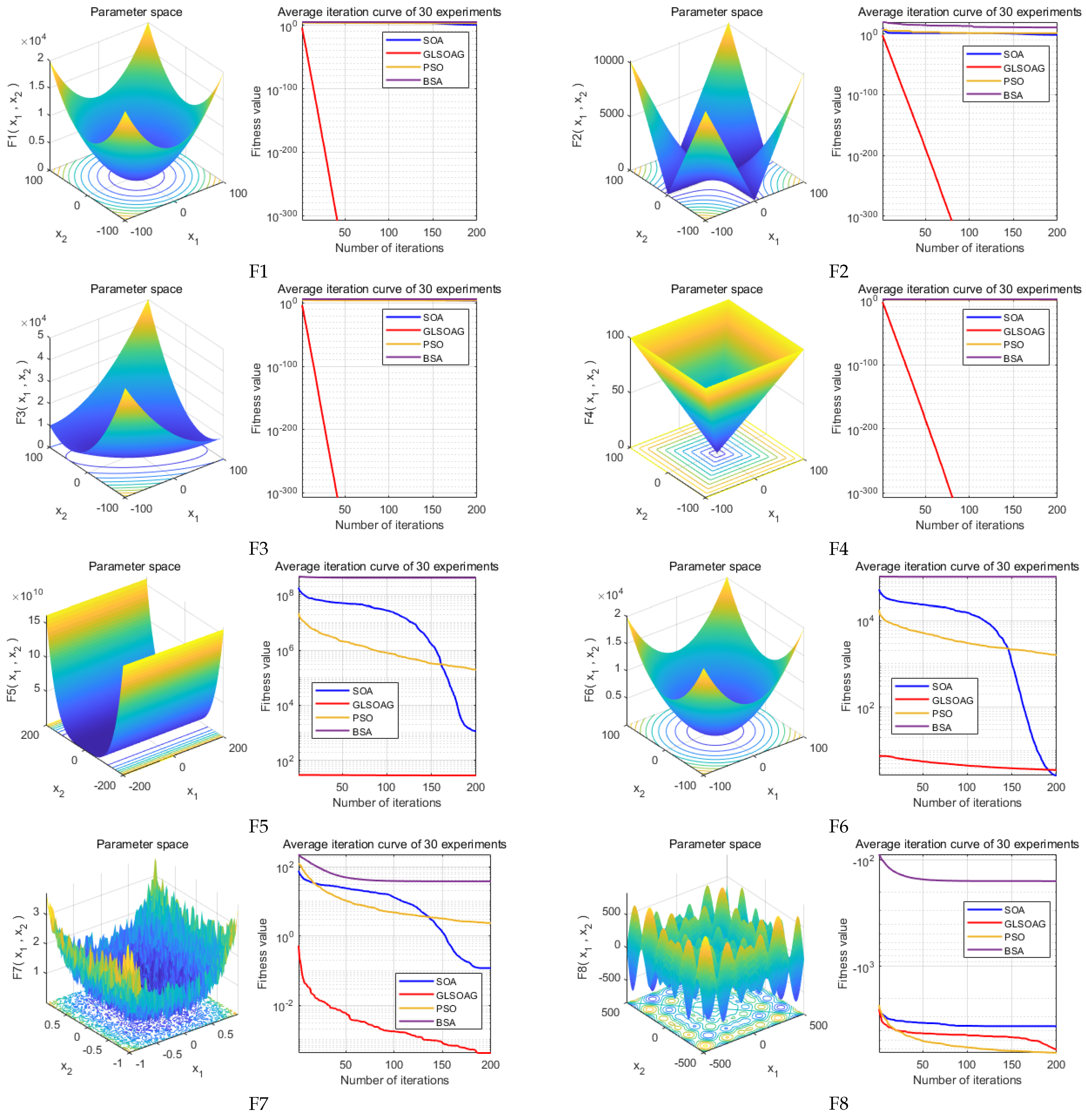

In this section, the performance of the GLSOAG is verified and comparatively analyzed. The GLSOAG proposed in this paper is compared with the classical SOA, the classical PSO, and BSA. Simulation experiments are conducted for the four algorithms on the classical IEEE CEC2005 test function set [32] shown in Table 4, and the algorithm iteration results are recorded in Table 5. In addition, the test function images and algorithm convergence curves are compared in Figure 9 to visualize the advantages of the algorithms in convergence speed and convergence accuracy. Due to space constraints, this section only reports simulation experiments on test functions F1 to F12 from IEEE CEC2005; the results for test functions F13 to F23 are presented in Appendix B. Furthermore, Appendix A presents the simulation results of running the four algorithms once on all test functions with a maximum of 500 iterations, to provide a more aggressive performance comparison of the algorithms.

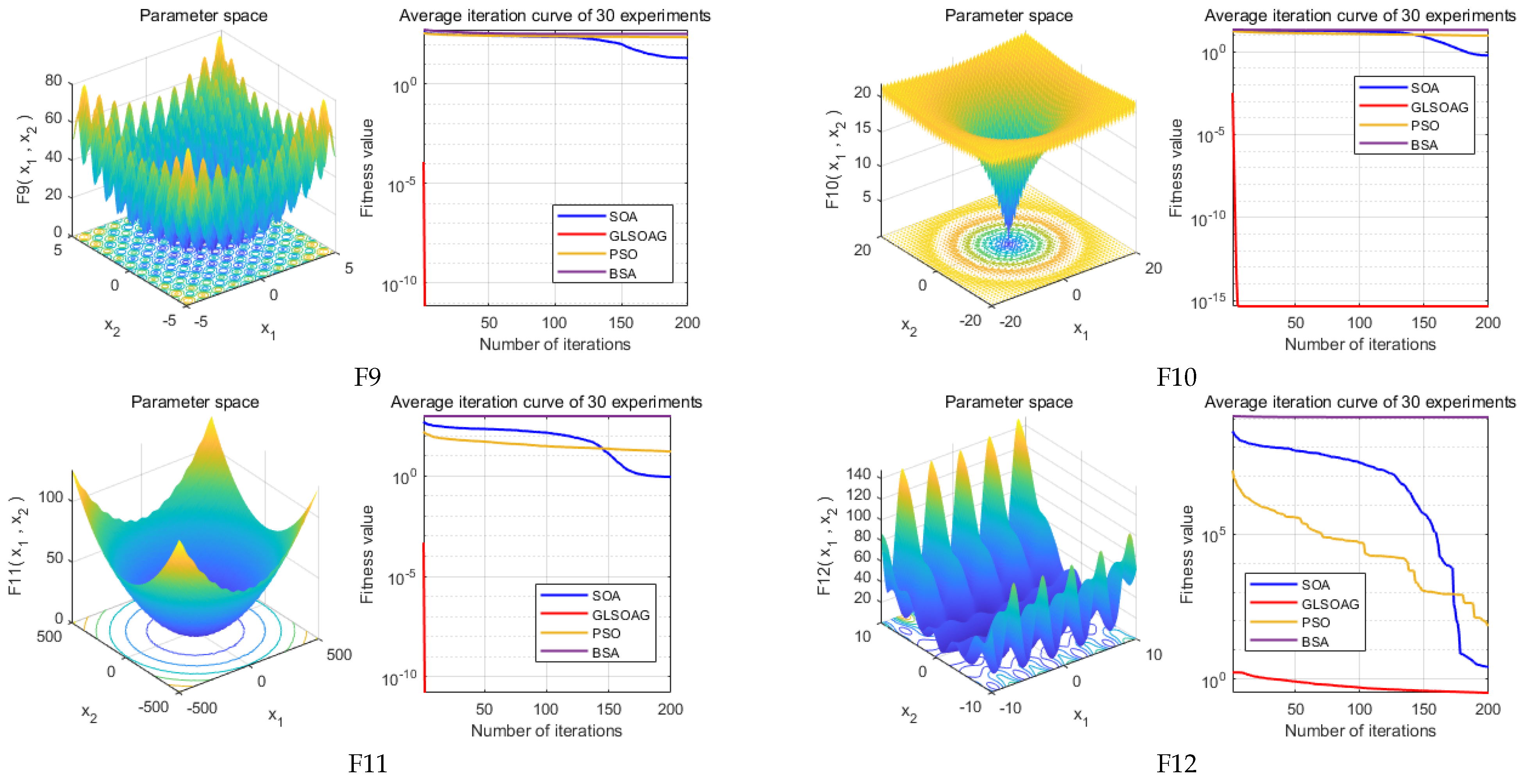

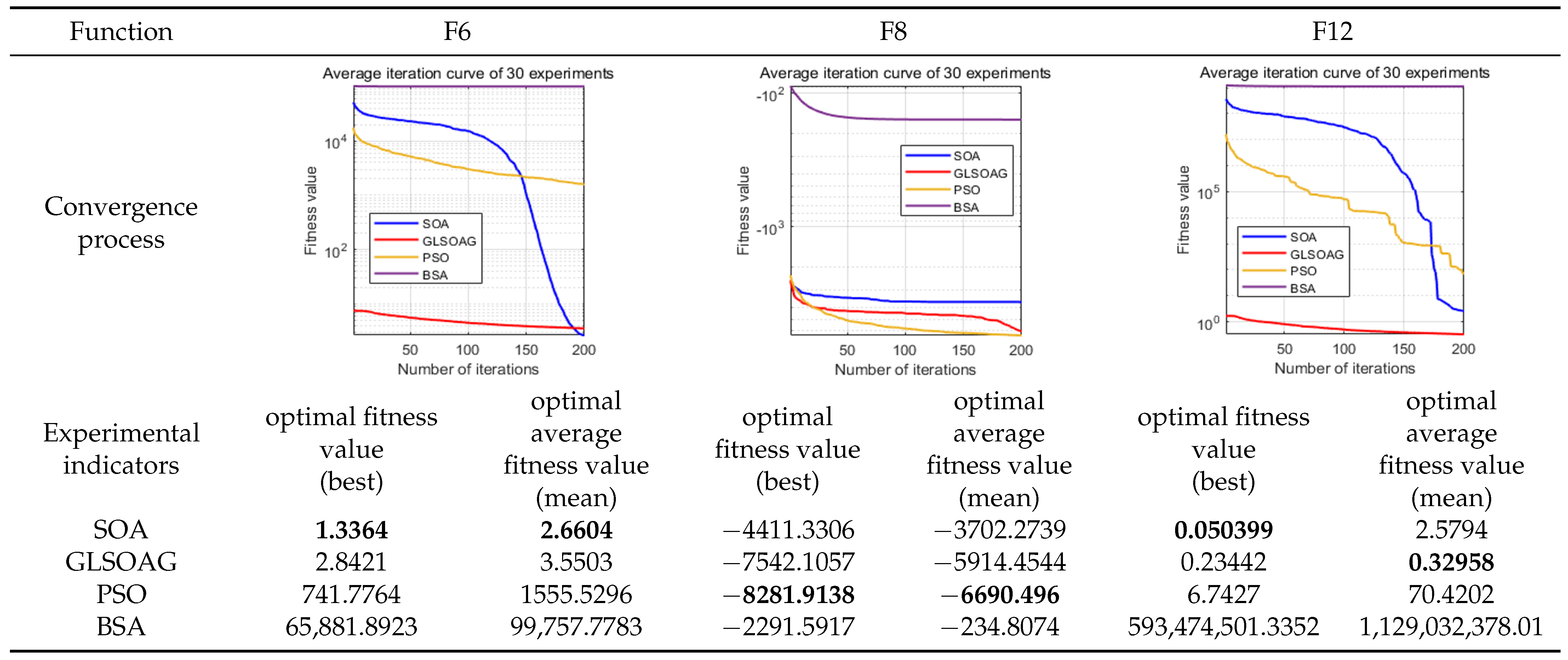

7.1.1. Algorithm Convergence Accuracy Comparison

Figure 10 shows the experimental results of the algorithms on F6, F8, and F12. On F6, the GLSOAG is far ahead of the other algorithms for most of the run, but it shows a slight accuracy degradation in the late stage, after about 190 iterations, and is overtaken by SOA at the end of convergence. Although SOA achieves a slight advantage in the optimal fitness value, the difference between its optimal fitness value and the average fitness value corresponding to the optimal solution is larger than that of the GLSOAG by 0.6158, which indicates that, viewed comprehensively, the GLSOAG is still superior to SOA. On F8, the GLSOAG is far ahead of BSA, but its performance is close to that of SOA and PSO: it leads at the beginning and end of the iteration, and its convergence performance ranks second according to the experimental values. In addition, the GLSOAG achieves a clear lead on test functions F1 to F5, F7, and F9 to F12. Convergence accuracy needs to be judged by combining the optimal fitness value (best) and the average fitness value corresponding to the optimal solution (mean). The former determines the best performance the algorithm can achieve, and the latter determines the consistency of the optimal solution over multiple runs. The closer the two are, the better the convergence performance and the higher the accuracy of the algorithm. It is worth noting that the optimal fitness value of the GLSOAG on F12 is slightly higher than that of SOA by $1.84021 \times 10^{-1}$, but the difference between the optimal fitness value and the mean fitness value of the GLSOAG is smaller than that of SOA by 2.433841. Overall, except for the sub-optimal result on F8 with a slight difference, the GLSOAG has a certain advantage on all other test functions. The maximum accuracy difference amounts to $1 \times 10^{300}$.

7.1.2. Algorithm Convergence Speed Comparison

Figure 9 illustrates the optimization graphs for the 12 test functions. Based on the average curves over 30 consecutive rounds of experiments with 200 iterations per round, the GLSOAG converges ahead of BSA on test function F8 but at a rate comparable to that of PSO and SOA; it gains an advantage in the early part of the iteration but then proceeds at a sub-optimal rate of convergence. In addition, on the remaining test functions, the GLSOAG takes the lead in convergence speed by a large margin early in the iteration.

7.1.3. Computation Complexity Comparison

Although the algorithm execution time is affected by factors such as the hardware, compiler, operating system, programming language, and input data set, it can reflect the complexity of the algorithm to a certain extent under a specific hardware and software environment. Table 6 shows the execution time of the four algorithms on test functions F1 to F12. The GLSOAG proposed in this paper does not achieve an advantage in the longitudinal comparison with PSO and BSA, because those algorithms have fewer parameters and simpler iteration mechanisms, but the overall difference is kept below 1 s. The average difference between the GLSOAG and SOA in the horizontal comparison is even smaller, indicating that the improvement on SOA does not introduce excessive time overhead.

7.1.4. Algorithm Stability Comparison

The standard deviations of the experimental results show that, except for a sub-optimal standard deviation on function F8, the GLSOAG achieved the smallest standard deviation on all tested functions, with an advantage of orders of magnitude. For example, the GLSOAG differed from the maximum standard deviation by 6.4512 on F4 and by 1.1818 on F10. This reflects that the GLSOAG has strong stability.
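The accuracy and stability indicators discussed in this section (best, mean, and standard deviation over repeated runs) can be computed as in the following sketch. It assumes a minimization problem and takes one final fitness value per independent run. The function name `summarize_runs` and the `gap` field (mean minus best) are hypothetical names introduced for illustration.

```python
import statistics


def summarize_runs(final_fitness_per_run):
    """Summarize repeated runs of one algorithm on one test function."""
    best = min(final_fitness_per_run)                # best performance reached
    mean = statistics.fmean(final_fitness_per_run)   # consistency across runs
    std = statistics.stdev(final_fitness_per_run)    # sample standard deviation (needs >= 2 runs)
    return {"best": best, "mean": mean, "std": std, "gap": mean - best}
```

A smaller `gap` means the best and mean values are closer, and a smaller `std` means the runs are more stable. Both readings match how the indicators are interpreted in the comparison above.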

7.1.5. Results and Discussion

In summary, in most cases, the GLSOAG has significant advantages in convergence accuracy, convergence speed, and algorithmic stability, and its algorithmic complexity is within tolerance. The improvement strategies proposed in this paper effectively improve the global exploration ability of the algorithm in the early stage, mitigate premature convergence, and maintain the stability of the algorithm while meeting the need to handle high-dimensional complex problems.

7.2. Validation of Resource Optimization Methods

7.2.1. Environment Setup and Parameterization

In this section, the system optimization model developed based on the GLSOAG is simulated and validated in order to demonstrate the usability of the designed unmanned emergency support system and the effectiveness of the system resource optimization method. The simulation parameters are set as shown in Table 7.

7.2.2. System Architecture Performance Validation

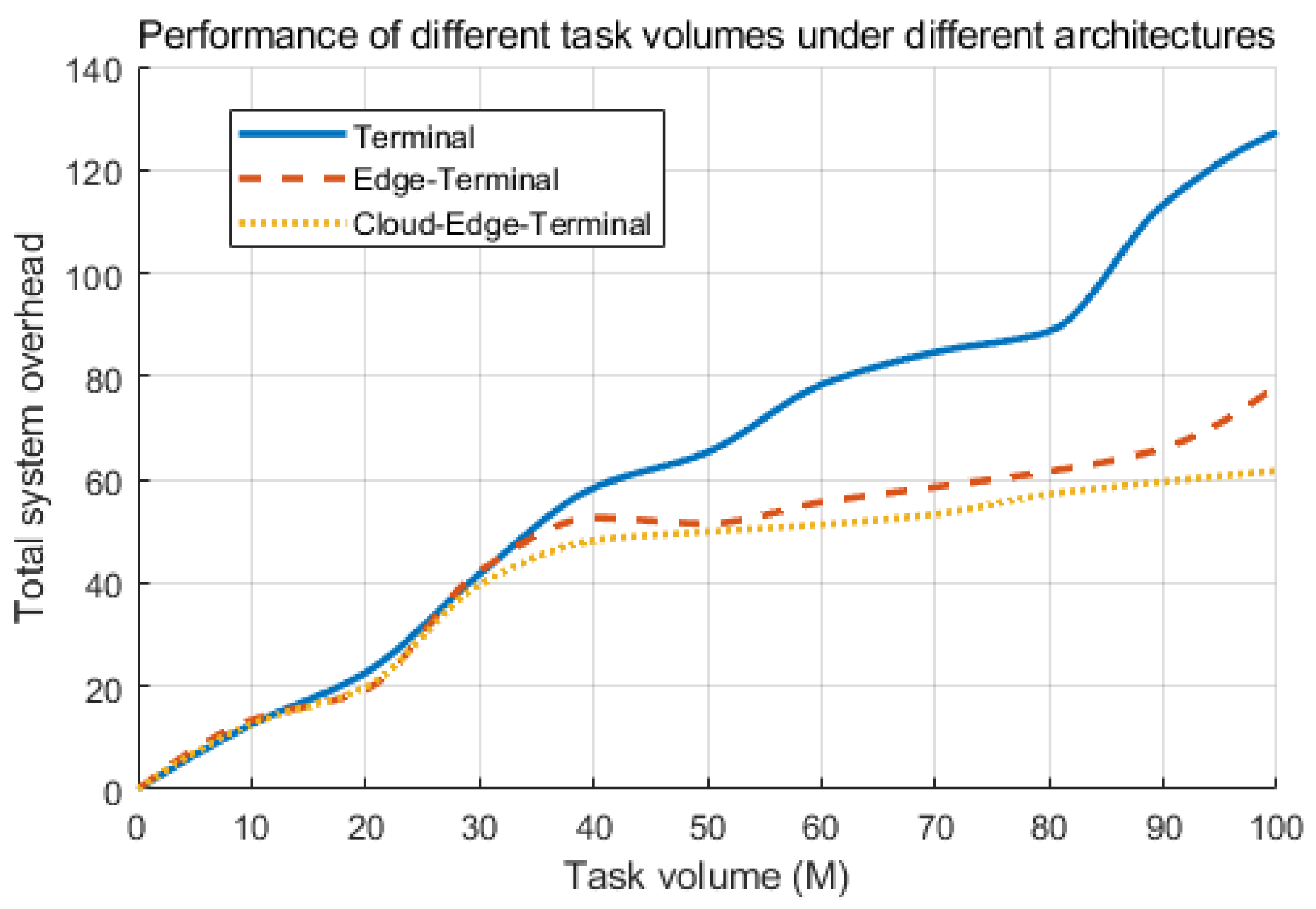

First, a validation experiment is conducted on the architecture of the “Unmanned Emergency Support System”. Different task volumes are run in three network modes, namely, “terminal side”, “terminal–edge side”, and “center–edge–terminal side”, based on a random offloading strategy. As shown in Figure 11, the total overhead of the three architectures is roughly the same in the initial stage of the task volume increase. As the task volume continues to increase, the low-computing-power terminal equipment cannot meet the overhead of large-model inference tasks and the system becomes overloaded, which makes the latency and energy consumption increase rapidly. The “terminal–edge” collaborative architecture, relying on the computing power of the MEC server, handles medium-sized inference tasks better. The “center–edge–terminal” architecture has no significant advantage for a small number of tasks and exhibits performance jitter slightly greater than that of the “terminal–edge” architecture, because the experiment is based on a random offloading strategy; however, it has significant advantages for large-scale tasks.

7.2.3. The Effect of the Number of Iterations on System Overhead

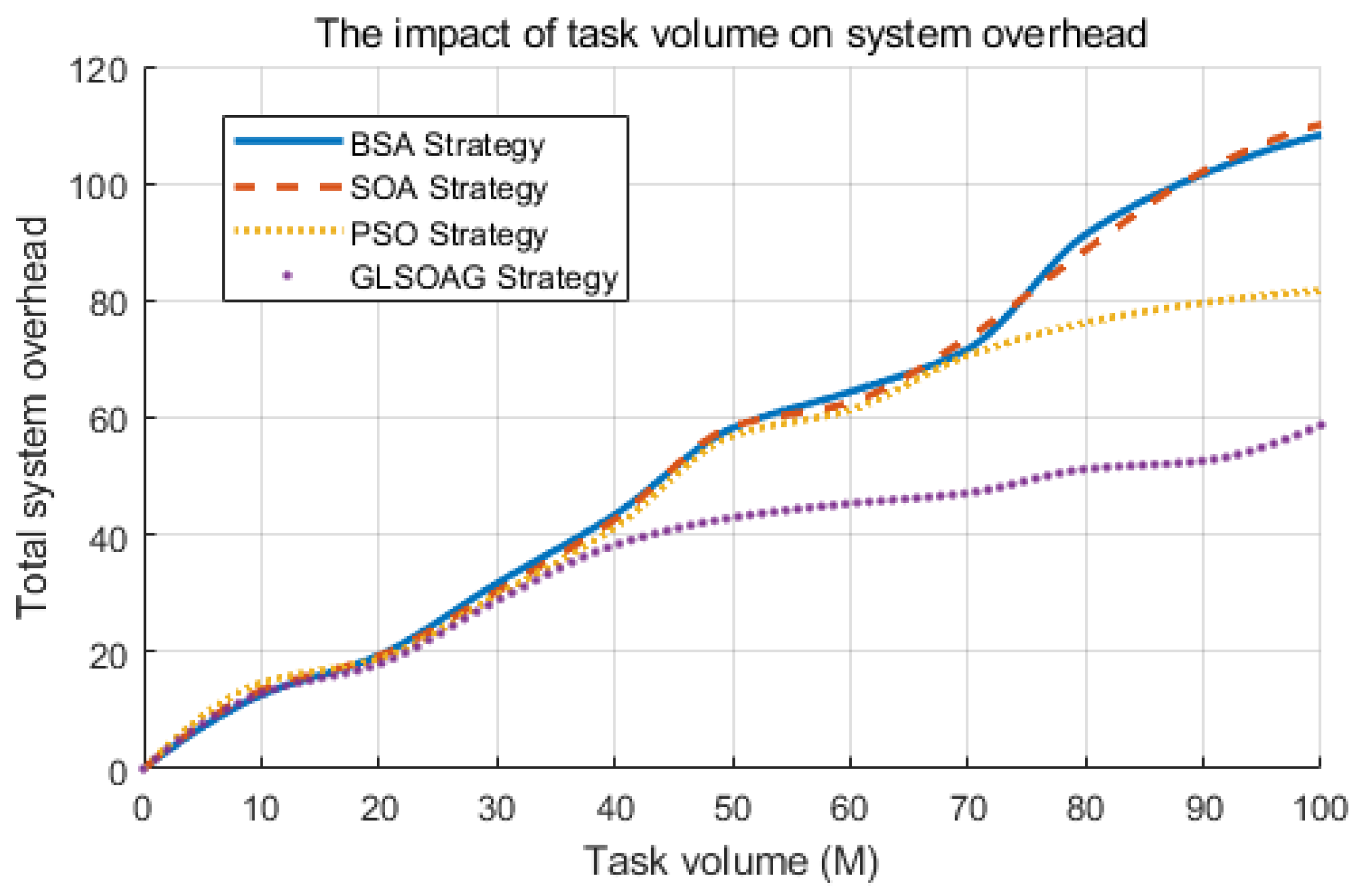

Figure 12 shows the convergence of the SOA, GLSOAG, PSO, and BSA strategies. At the beginning of the iteration, the convergence of SOA, PSO, and BSA is gentle overall, while the GLSOAG converges faster and approaches the optimal solution around the 180th iteration, because the GLSOAG uses Gaussian mapping to initialize the population, which makes its early-stage global exploration more efficient. In addition, the four algorithms show slight oscillations at 10–60 iterations before resuming convergence, indicating that all of them have good global search capabilities. Overall, the resource optimization method based on the GLSOAG has a significant advantage over the methods based on SOA, PSO, and BSA in terms of convergence speed and accuracy.

7.2.4. Impact of Number of Tasks on System Overhead

Figure 13 compares the changes in total system overhead of the four algorithms as the task volume increases. When the task volume is small, the difference between the four algorithms is not significant because the terminal side where the task area is located can meet the task processing demand. As the task volume increases and the terminal devices in the task area approach full capacity, the advantage of the GLSOAG is still not obvious. As the task volume continues to increase and large-volume tasks such as intelligence analysis exceed the processing capacity of the edge-side server, the task data need to be offloaded to the center cloud. The GLSOAG maintains its advantage in convergence speed and accuracy in the face of complex multi-dimensional problems, and the advantage gradually expands when the task volume is greater than 40.

7.3. Analysis and Discussion

On the one hand, the simulation results of the above four offloading strategies show that the resource optimization strategy based on the GLSOAG achieves a certain advantage among similar algorithms: it effectively reduces the system energy consumption and delay overhead, and thereby improves the execution efficiency of emergency rescue tasks. On the other hand, across task scenarios with different task volumes, our approach has no obvious advantage when the task volume is small. This is because lightweight tasks do not need to be offloaded to the edge side and can be processed locally on the device, so they do not use most of the components of the system we designed and the system cannot be fully utilized. However, when the task volume increases, the emergency rescue system based on the GLSOAG shows its superiority, which indicates that the system we designed and the optimization method have a certain degree of generality.

In addition, as the amount of emergency rescue equipment increases, the demand for data fusion processing increases further. By adding MEC nodes with different computing power, the system can be flexibly expanded, and our optimization method remains applicable. As a whole, the emergency rescue system we designed has good generality and scalability, and our proposed resource optimization strategy based on the GLSOAG performs well.

8. Conclusions

Emergency rescue scenarios involve complex climatic and terrain environments and are characterized by complex rescue equipment networks, diverse intelligence data modalities, limited system survey boundaries, and a lack of algorithmic support for situational analysis. UAVs also face a variety of complex uncertainties in rescue scenarios, for example, communication link failure and malicious attacks [33]. For this reason, this paper first designs a system architecture with elastic computing power for the mission requirements, which introduces 5G network and MEC technology to provide UAVs with network support that separates the control and user planes, expands the system survey boundary, and improves the intelligence detection capability. Second, the Global Learning Seagull Algorithm for Gaussian Mapping (GLSOAG) is proposed, which achieves a substantial improvement over SOA, PSO, and BSA in terms of convergence accuracy, speed, and stability. Finally, based on GLSOAG-driven system resource optimization, the effectiveness and reliability of the resource optimization method are verified through simulation experiments, which show that the system designed in this paper is feasible to a certain degree and that the proposed resource optimization method can effectively support computational tasks of various scales in emergency rescue scenarios and enhance the effectiveness of emergency rescue tasks.