Use of Unmanned Aerial Vehicles for Monitoring Pastures and Forages in Agricultural Sciences: A Systematic Review

Abstract

1. Introduction

2. Methodology

3. Findings and Discussion

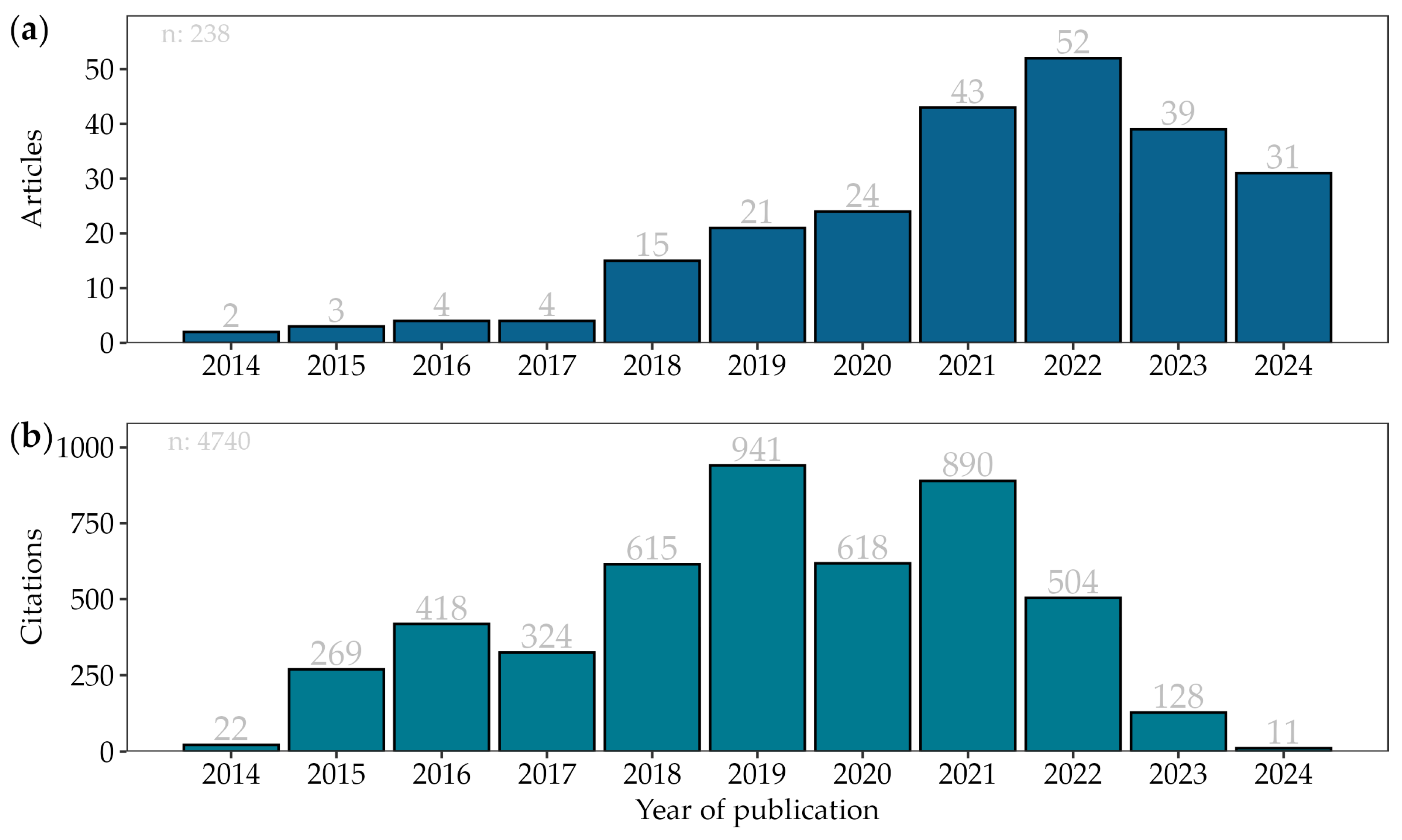

3.1. Scientometric Analysis

3.2. Image Processing

3.2.1. Radiometric Correction

3.2.2. Geometric Correction

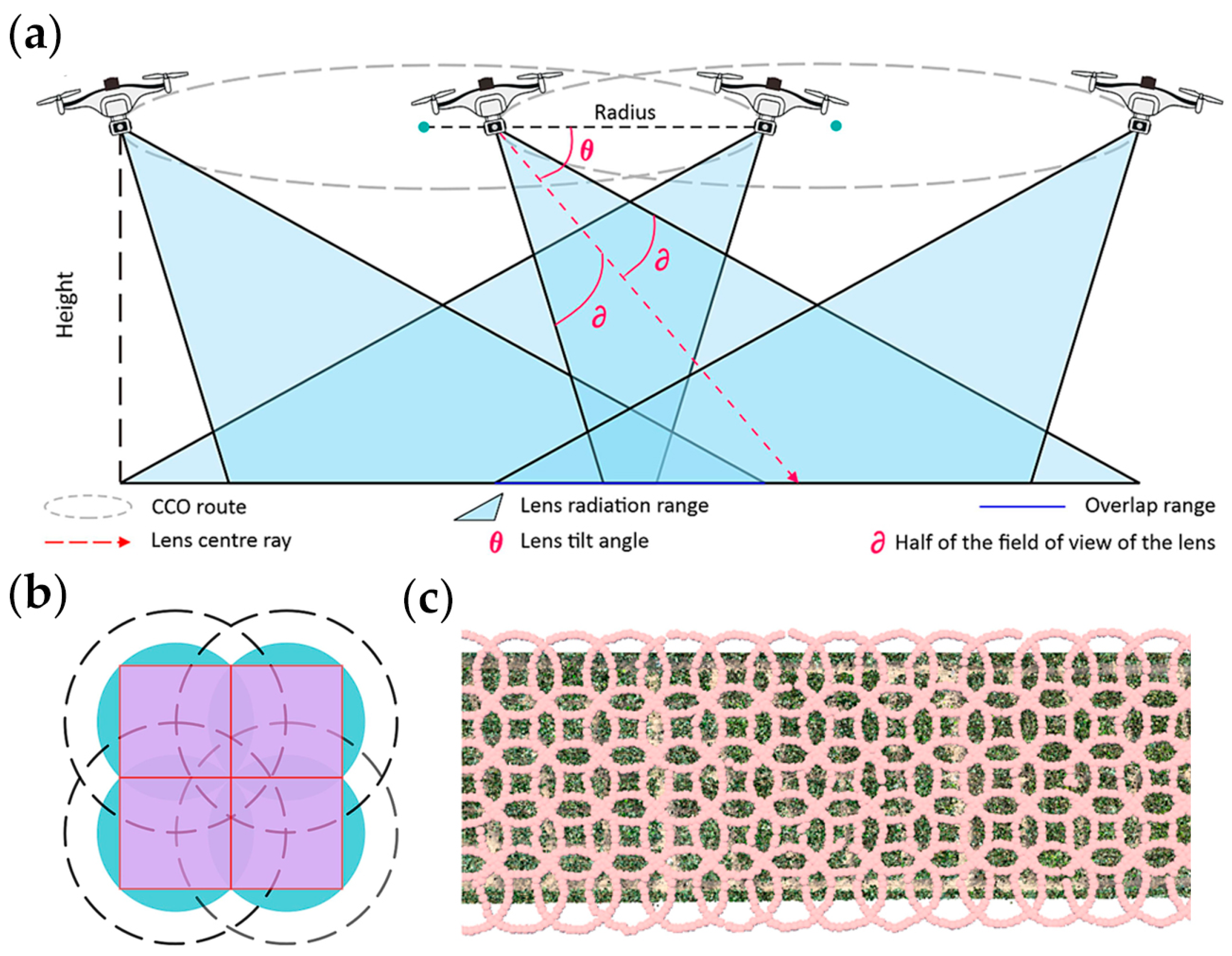

3.3. Methods for Image Feature Extraction

3.3.1. Vegetation Index (VIs)

3.3.2. Texture Analysis

3.3.3. Color Space

3.3.4. Three-Dimensional (3D) Point Clouds

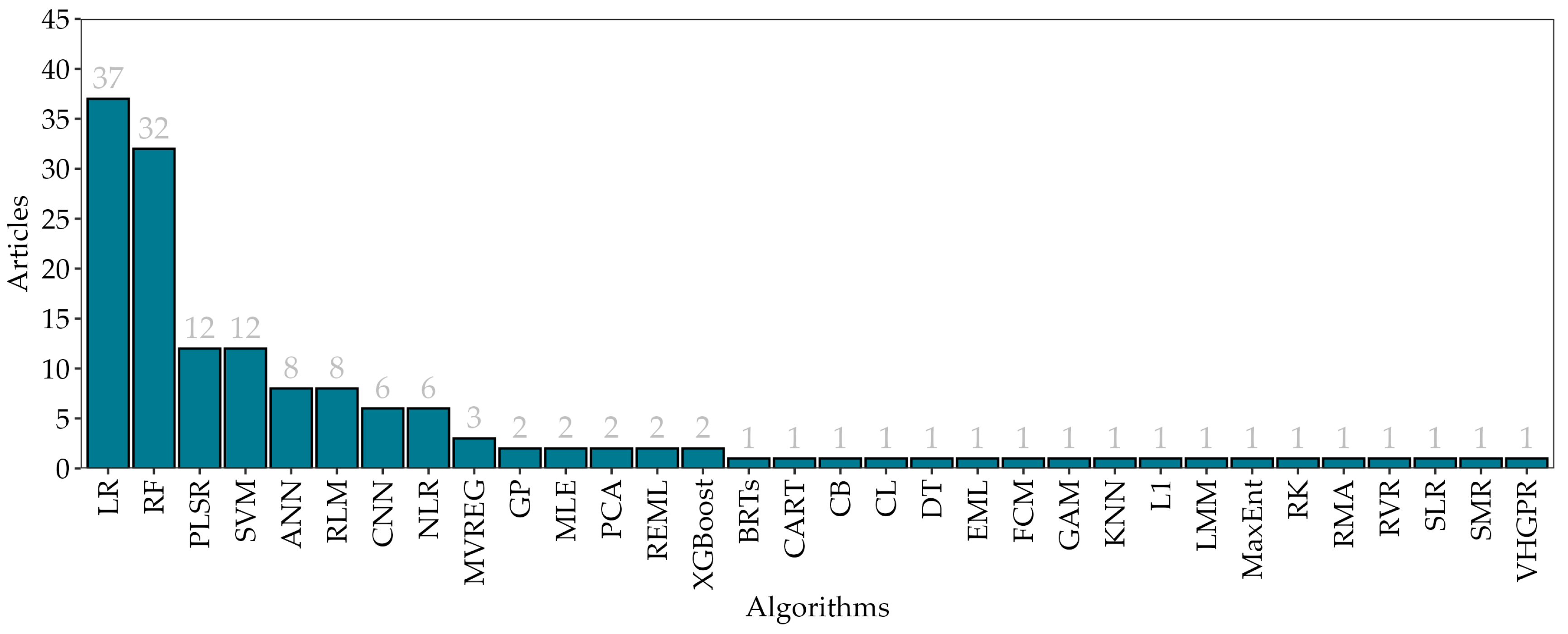

3.4. Machine Learning

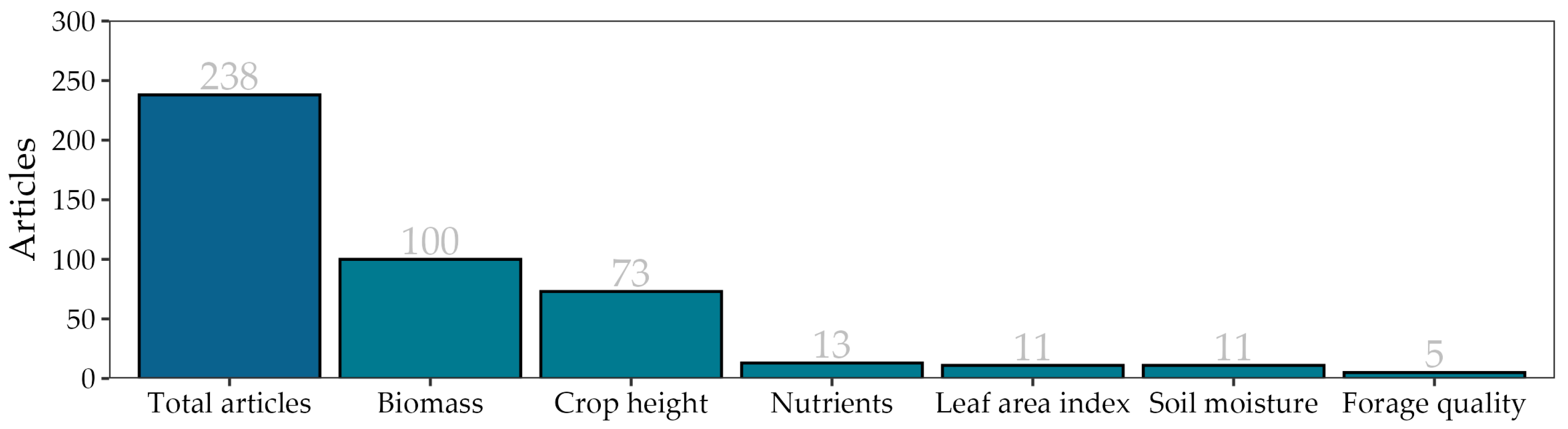

3.5. Applications of UAVs in Pastures and Forage Crops

3.5.1. Biomass and Crop Height

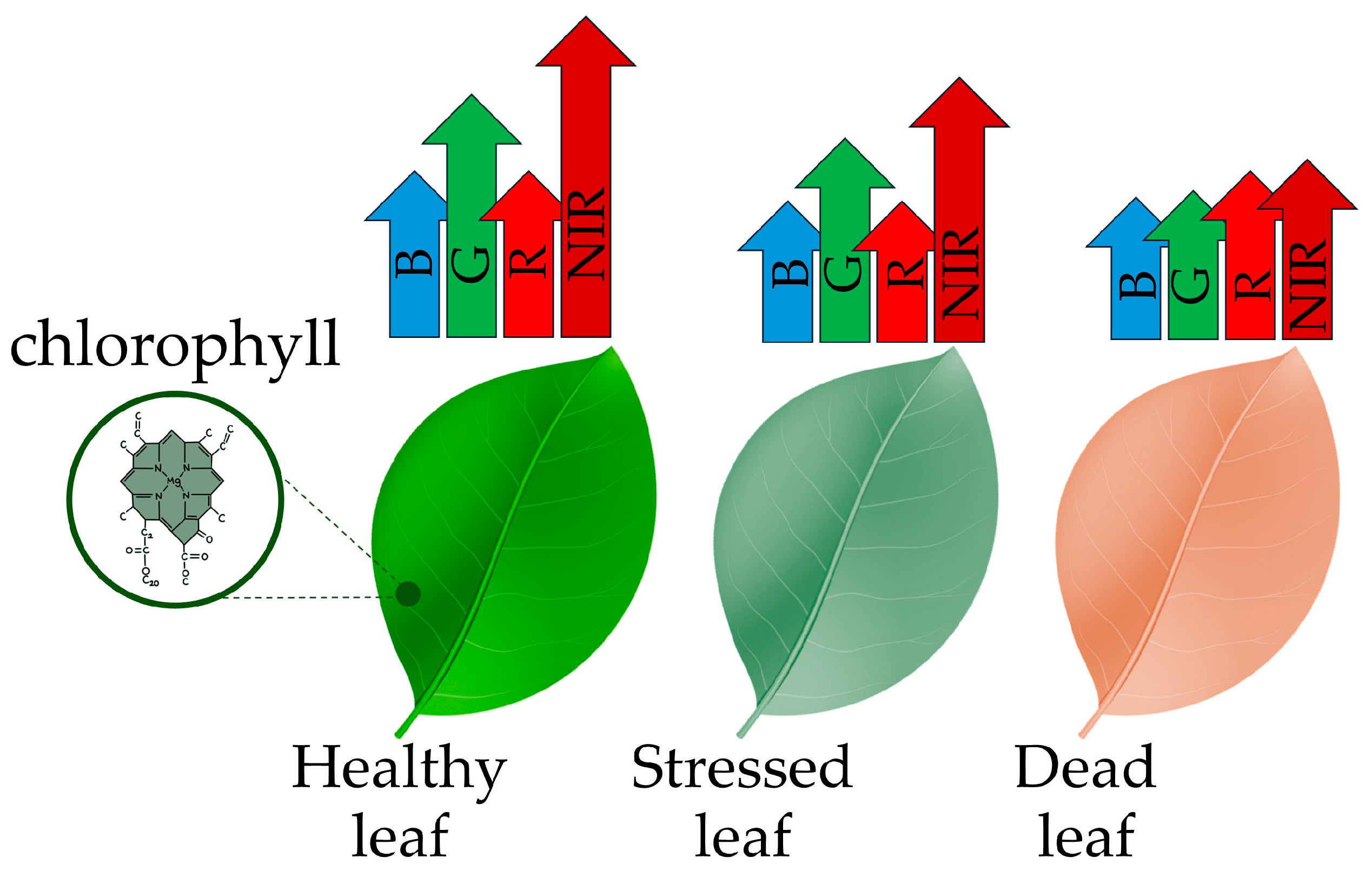

3.5.2. Chlorophyll

3.5.3. Leaf Area Index (LAI)

3.5.4. Nutrients

3.5.5. Soil Moisture (SM)

3.5.6. Forage Quality

3.5.7. Challenges in the Use of UAVs

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- da Rocha Fernandes, M.H.M.; de Souza FernandesJunior, J.; Adams, J.M.; Lee, M.; Reis, R.A.; Tedeschi, L.O. Using Sentinel-2 Satellite Images and Machine Learning Algorithms to Predict Tropical Pasture Forage Mass, Crude Protein, and Fiber Content. Sci. Rep. 2024, 14, 8704. [Google Scholar] [CrossRef]

- Sanyaolu, M.; Sadowski, A. The Role of Precision Agriculture Technologies in Enhancing Sustainable Agriculture. Sustainability 2024, 16, 6668. [Google Scholar] [CrossRef]

- Papakonstantinou, G.I.; Voulgarakis, N.; Terzidou, G.; Fotos, L.; Giamouri, E.; Papatsiros, V.G. Precision Livestock Farming Technology: Applications and Challenges of Animal Welfare and Climate Change. Agriculture 2024, 14, 620. [Google Scholar] [CrossRef]

- Sishodia, R.P.; Ray, R.L.; Singh, S.K. Applications of Remote Sensing in Precision Agriculture: A Review. Remote Sens. 2020, 12, 3136. [Google Scholar] [CrossRef]

- Řezník, T.; Lukas, V.; Charvát, K.; Křivánek, Z.; Kepka, M.; Herman, L.; Řezníková, H. Disaster Risk Reduction in Agriculture through Geospatial (Big) Data Processing. ISPRS Int. J. Geo-Inf. 2017, 6, 238. [Google Scholar] [CrossRef]

- Manoj, K.N.; Shekara, B.G.; Sridhara, S.; Jha, P.K.; Prasad, P.V.V. Biomass Quantity and Quality from Different Year-Round Cereal–Legume Cropping Systems as Forage or Fodder for Livestock. Sustainability 2021, 13, 9414. [Google Scholar] [CrossRef]

- Blaix, C.; Chabrerie, O.; Alard, D.; Catterou, M.; Diquelou, S.; Dutoit, T.; Lacoux, J.; Loucougaray, G.; Michelot-Antalik, A.; Pacé, M.; et al. Forage Nutritive Value Shows Synergies with Plant Diversity in a Wide Range of Semi-Natural Grassland Habitats. Agric. Ecosyst. Environ. 2023, 347, 108369. [Google Scholar] [CrossRef]

- Tlahig, S.; Neji, M.; Atoui, A.; Seddik, M.; Dbara, M.; Yahia, H.; Nagaz, K.; Najari, S.; Khorchani, T.; Loumerem, M. Genetic and Seasonal Variation in Forage Quality of Lucerne (Medicago sativa L.) for Resilience to Climate Change in Arid Environments. J. Agric. Food Res. 2024, 15, 100986. [Google Scholar] [CrossRef]

- Fraser, M.D.; Vallin, H.E.; Roberts, B.P. Animal Board Invited Review: Grassland-Based Livestock Farming and Biodiversity. Animal 2022, 16, 100671. [Google Scholar] [CrossRef]

- Cheng, M.; McCarl, B.; Fei, C. Climate Change and Livestock Production: A Literature Review. Atmosphere 2022, 13, 140. [Google Scholar] [CrossRef]

- Cabrita, A.R.J.; Valente, I.M.; Monteiro, A.; Sousa, C.; Miranda, C.; Almeida, A.; Cortez, P.P.; Castro, C.; Maia, M.R.G.; Trindade, H.; et al. Environmental Conditions Affect the Nutritive Value and Alkaloid Profiles of Lupinus Forage: Opportunities and Threats for Sustainable Ruminant Systems. Heliyon 2024, 10, e28790. [Google Scholar] [CrossRef]

- Vermelho, A.B.; Moreira, J.V.; Teixeira Akamine, I.; Cardoso, V.S.; Mansoldo, F.R.P. Agricultural Pest Management: The Role of Microorganisms in Biopesticides and Soil Bioremediation. Plants 2024, 13, 2762. [Google Scholar] [CrossRef]

- Subhashree, S.N.; Igathinathane, C.; Akyuz, A.; Borhan, M.; Hendrickson, J.; Archer, D.; Liebig, M.; Toledo, D.; Sedivec, K.; Kronberg, S.; et al. Tools for Predicting Forage Growth in Rangelands and Economic Analyses—A Systematic Review. Agriculture 2023, 13, 455. [Google Scholar] [CrossRef]

- Carella, A.; Fischer, B.; Massenti, P.T.; Lo Bianco, R.; Carella, A.; Tomas, P.; Massenti, R.; Lo Bianco, R. Continuous Plant-Based and Remote Sensing for Determination of Fruit Tree Water Status. Horticulturae 2024, 10, 516. [Google Scholar] [CrossRef]

- Cárceles Rodríguez, B.; Durán-Zuazo, V.H.; Soriano Rodríguez, M.; García-Tejero, I.F.; Gálvez Ruiz, B.; Cuadros Tavira, S. Conservation Agriculture as a Sustainable System for Soil Health: A Review. Soil Syst. 2022, 6, 87. [Google Scholar] [CrossRef]

- Wang, X.; Yan, S.; Wang, W.; Liubing, Y.; Li, M.; Yu, Z.; Chang, S.; Hou, F. Monitoring Leaf Area Index of the Sown Mixture Pasture through UAV Multispectral Image and Texture Characteristics. Comput. Electron. Agric. 2023, 214, 108333. [Google Scholar] [CrossRef]

- Lu, B.; He, Y.; Liu, H.H.T. Mapping Vegetation Biophysical and Biochemical Properties Using Unmanned Aerial Vehicles-Acquired Imagery. Int. J. Remote Sens. 2018, 39, 5265–5287. [Google Scholar] [CrossRef]

- Avneri, A.; Aharon, S.; Brook, A.; Atsmon, G.; Smirnov, E.; Sadeh, R.; Abbo, S.; Peleg, Z.; Herrmann, I.; Bonfil, D.J.; et al. UAS-Based Imaging for Prediction of Chickpea Crop Biophysical Parameters and Yield. Comput. Electron. Agric. 2023, 205, 107581. [Google Scholar] [CrossRef]

- Júnior, G.D.N.A.; da Silva, T.G.F.; de Souza, L.S.B.; de Araújo, G.G.L.; de Moura, M.S.B.; Alves, C.P.; da Silva Salvador, K.R.; de Souza, C.A.A.; de Assunção Montenegro, A.A.; da Silva, M.J. Phenophases, Morphophysiological Indices and Cutting Time in Clones of the Forage Cacti under Controlled Water Regimes in a Semiarid Environment. J. Arid Environ. 2021, 190, 104510. [Google Scholar] [CrossRef]

- Rueda-Ayala, V.P.; Peña, J.M.; Höglind, M.; Bengochea-Guevara, J.M.; Andújar, D. Comparing UAV-Based Technologies and RGB-D Reconstruction Methods for Plant Height and Biomass Monitoring on Grass Ley. Sensors 2019, 19, 535. [Google Scholar] [CrossRef]

- Grüner, E.; Astor, T.; Wachendorf, M. Biomass Prediction of Heterogeneous Temperate Grasslands Using an SfM Approach Based on UAV Imaging. Agronomy 2019, 9, 54. [Google Scholar] [CrossRef]

- Pan, T.; Ye, H.; Zhang, X.; Liao, X.; Wang, D.; Bayin, D.; Safarov, M.; Okhonniyozov, M.; Majid, G. Estimating Aboveground Biomass of Grassland in Central Asia Mountainous Areas Using Unmanned Aerial Vehicle Vegetation Indices and Image Textures—A Case Study of Typical Grassland in Tajikistan. Environ. Sustain. Indic. 2024, 22, 100345. [Google Scholar] [CrossRef]

- Fan, X.; Kawamura, K.; Xuan, T.D.; Yuba, N.; Lim, J.; Yoshitoshi, R.; Minh, T.N.; Kurokawa, Y.; Obitsu, T. Low-Cost Visible and near-Infrared Camera on an Unmanned Aerial Vehicle for Assessing the Herbage Biomass and Leaf Area Index in an Italian Ryegrass Field. Grassl. Sci. 2018, 64, 145–150. [Google Scholar] [CrossRef]

- López-Calderón, M.J.; Estrada-ávalos, J.; Rodríguez-Moreno, V.M.; Mauricio-Ruvalcaba, J.E.; Martínez-Sifuentes, A.R.; Delgado-Ramírez, G.; Miguel-Valle, E. Estimation of Total Nitrogen Content in Forage Maize (Zea mays L.) Using Spectral Indices: Analysis by Random Forest. Agriculture 2020, 10, 451. [Google Scholar] [CrossRef]

- Zhu, X.; Yang, Q.; Chen, X.; Ding, Z. An Approach for Joint Estimation of Grassland Leaf Area Index and Leaf Chlorophyll Content from UAV Hyperspectral Data. Remote Sens. 2023, 15, 2525. [Google Scholar] [CrossRef]

- Geipel, J.; Bakken, A.K.; Jørgensen, M.; Korsaeth, A. Forage Yield and Quality Estimation by Means of UAV and Hyperspectral Imaging. Precis. Agric. 2021, 22, 1437–1463. [Google Scholar] [CrossRef]

- Chen, A.; Xu, C.; Zhang, M.; Guo, J.; Xing, X.; Yang, D.; Xu, B.; Yang, X. Cross-Scale Mapping of above-Ground Biomass and Shrub Dominance by Integrating UAV and Satellite Data in Temperate Grassland. Remote Sens. Environ. 2024, 304, 114024. [Google Scholar] [CrossRef]

- Messina, G.; Modica, G. Applications of UAV Thermal Imagery in Precision Agriculture: State of the Art and Future Research Outlook. Remote Sens. 2020, 12, 1491. [Google Scholar] [CrossRef]

- Xu, C.; Zhao, D.; Zheng, Z.; Zhao, P.; Chen, J.; Li, X.; Zhao, X.; Zhao, Y.; Liu, W.; Wu, B.; et al. Correction of UAV LiDAR-Derived Grassland Canopy Height Based on Scan Angle. Front. Plant Sci. 2023, 14, 1108109. [Google Scholar] [CrossRef]

- Yepes-Nuñez, J.J.; Urrútia, G.; Romero-García, M.; Alonso-Fernández, S. The PRISMA 2020 Statement: An Updated Guideline for Reporting Systematic Reviews. Rev. Esp. Cardiol. 2021, 74, 790–799. [Google Scholar] [CrossRef]

- Jenerowicz, A.; Wierzbicki, D.; Kedzierski, M. Radiometric Correction with Topography Influence of Multispectral Imagery Obtained from Unmanned Aerial Vehicles. Remote Sens. 2023, 15, 2059. [Google Scholar] [CrossRef]

- Jakob, S.; Zimmermann, R.; Gloaguen, R. The Need for Accurate Geometric and Radiometric Corrections of Drone-Borne Hyperspectral Data for Mineral Exploration: MEPHySTo—A Toolbox for Pre-Processing Drone-Borne Hyperspectral Data. Remote Sens. 2017, 9, 88. [Google Scholar] [CrossRef]

- Lu, B.; He, Y. Species Classification Using Unmanned Aerial Vehicle (UAV)-Acquired High Spatial Resolution Imagery in a Heterogeneous Grassland. ISPRS J. Photogramm. Remote Sens. 2017, 128, 73–85. [Google Scholar] [CrossRef]

- Kim, J.I.; Kim, T.; Shin, D.; Kim, S.H. Fast and Robust Geometric Correction for Mosaicking UAV Images with Narrow Overlaps. Int. J. Remote Sens. 2017, 38, 2557–2576. [Google Scholar] [CrossRef]

- Zhu, H.; Huang, Y.; An, Z.; Zhang, H.; Han, Y.; Zhao, Z.; Li, F.; Zhang, C.; Hou, C. Assessing Radiometric Calibration Methods for Multispectral UAV Imagery and the Influence of Illumination, Flight Altitude and Flight Time on Reflectance, Vegetation Index and Inversion of Winter Wheat AGB and LAI. Comput. Electron. Agric. 2024, 219, 108821. [Google Scholar] [CrossRef]

- Xue, B.; Ming, B.; Xin, J.; Yang, H.; Gao, S.; Guo, H.; Feng, D.; Nie, C.; Wang, K.; Li, S. Radiometric Correction of Multispectral Field Images Captured under Changing Ambient Light Conditions and Applications in Crop Monitoring. Drones 2023, 7, 223. [Google Scholar] [CrossRef]

- Daniels, L.; Eeckhout, E.; Wieme, J.; Dejaegher, Y.; Audenaert, K.; Maes, W.H. Identifying the Optimal Radiometric Calibration Method for UAV-Based Multispectral Imaging. Remote Sens. 2023, 15, 2909. [Google Scholar] [CrossRef]

- Jiang, J.; Zhang, Q.; Wang, W.; Wu, Y.; Zheng, H.; Yao, X.; Zhu, Y.; Cao, W.; Cheng, T. MACA: A Relative Radiometric Correction Method for Multiflight Unmanned Aerial Vehicle Images Based on Concurrent Satellite Imagery. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–14. [Google Scholar] [CrossRef]

- Poncet, A.M.; Knappenberger, T.; Brodbeck, C.; Fogle, M.; Shaw, J.N.; Ortiz, B.V. Multispectral UAS Data Accuracy for Different Radiometric Calibration Methods. Remote Sens. 2019, 11, 1917. [Google Scholar] [CrossRef]

- Andrade, O.B.d.; Montenegro, A.A.d.A.; Silva Neto, M.A.d.; Sousa, L.d.B.d.; Almeida, T.A.B.; de Lima, J.L.M.P.; Carvalho, A.A.d.; Silva, M.V.d.; Medeiros, V.W.C.d.; Soares, R.G.F.; et al. UAV-Based Classification of Intercropped Forage Cactus: A Comparison of RGB and Multispectral Sample Spaces Using Machine Learning in an Irrigated Area. AgriEngineering 2024, 6, 509–525. [Google Scholar] [CrossRef]

- Wang, Y.; Yang, Z.; Khan, H.A.; Kootstra, G. Improving Radiometric Block Adjustment for UAV Multispectral Imagery under Variable Illumination Conditions. Remote Sens. 2024, 16, 3019. [Google Scholar] [CrossRef]

- Cao, S.; Danielson, B.; Clare, S.; Koenig, S.; Campos-Vargas, C.; Sanchez-Azofeifa, A. Radiometric Calibration Assessments for UAS-Borne Multispectral Cameras: Laboratory and Field Protocols. ISPRS J. Photogramm. Remote Sens. 2019, 149, 132–145. [Google Scholar] [CrossRef]

- Nigon, T.; Paiao, G.D.; Mulla, D.J.; Fernández, F.G.; Yang, C. The Influence of Aerial Hyperspectral Image Processing Workflow on Nitrogen Uptake Prediction Accuracy in Maize. Remote Sens. 2021, 14, 132. [Google Scholar] [CrossRef]

- Honkavaara, E.; Hakala, T.; Markelin, L.; Rosnell, T.; Saari, H.; Mäkynen, J. A Process for Radiometric Correction of UAV Image Blocks. Photogramm. Fernerkund. Geoinf. 2012, 2012, 115–127. [Google Scholar] [CrossRef] [PubMed]

- Khadka, N.; Teixeira Pinto, C.; Leigh, L.; Petropoulos, G.P.; Pavlides, A.; Nocerino, E. Detection of Change Points in Pseudo-Invariant Calibration Sites Time Series Using Multi-Sensor Satellite Imagery. Remote Sens. 2021, 13, 2079. [Google Scholar] [CrossRef]

- Mei, A.; Bassani, C.; Fontinovo, G.; Salvatori, R.; Allegrini, A. The Use of Suitable Pseudo-Invariant Targets for MIVIS Data Calibration by the Empirical Line Method. ISPRS J. Photogramm. Remote Sens. 2016, 114, 102–114. [Google Scholar] [CrossRef]

- Ryadi, G.Y.I.; Syariz, M.A.; Lin, C.H. Relaxation-Based Radiometric Normalization for Multitemporal Cross-Sensor Satellite Images. Sensors 2023, 23, 5150. [Google Scholar] [CrossRef]

- Liu, K.; Ke, T.; Tao, P.; He, J.; Xi, K.; Yang, K. Robust Radiometric Normalization of Multitemporal Satellite Images Via Block Adjustment without Master Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 6029–6043. [Google Scholar] [CrossRef]

- Redana, M.; Lancaster, L.T.; Chong, X.Y.; Lip, Y.Y.; Gibbins, C. An Open-Source Method for Producing Reliable Water Temperature Maps for Ecological Applications Using Non-Radiometric Sensors. Remote Sens. Appl. Soc. Environ. 2024, 34, 101184. [Google Scholar] [CrossRef]

- Malbéteau, Y.; Johansen, K.; Aragon, B.; Al-Mashhawari, S.K.; McCabe, M.F. Overcoming the Challenges of Thermal Infrared Orthomosaics Using a Swath-Based Approach to Correct for Dynamic Temperature and Wind Effects. Remote Sens. 2021, 13, 3255. [Google Scholar] [CrossRef]

- Aragon, B.; Johansen, K.; Parkes, S.; Malbeteau, Y.; Al-mashharawi, S.; Al-amoudi, T.; Andrade, C.F.; Turner, D.; Lucieer, A.; McCabe, M.F. A Calibration Procedure for Field and UAV-Based Uncooled Thermal Infrared Instruments. Sensors 2020, 20, 3316. [Google Scholar] [CrossRef] [PubMed]

- Virtue, J.; Turner, D.; Williams, G.; Zeliadt, S.; McCabe, M.; Lucieer, A. Thermal Sensor Calibration for Unmanned Aerial Systems Using an External Heated Shutter. Drones 2021, 5, 119. [Google Scholar] [CrossRef]

- Tunca, E.; Köksal, E.S.; Çetin Taner, S. Calibrating UAV Thermal Sensors Using Machine Learning Methods for Improved Accuracy in Agricultural Applications. Infrared Phys. Technol. 2023, 133, 104804. [Google Scholar] [CrossRef]

- de Oca, A.M.; Flores, G. A UAS Equipped with a Thermal Imaging System with Temperature Calibration for Crop Water Stress Index Computation. In Proceedings of the 2021 International Conference on Unmanned Aircraft Systems (ICUAS), Athens, Greece, 15–18 June 2021; pp. 714–720. [Google Scholar]

- Kelly, J.; Kljun, N.; Olsson, P.O.; Mihai, L.; Liljeblad, B.; Weslien, P.; Klemedtsson, L.; Eklundh, L. Challenges and Best Practices for Deriving Temperature Data from an Uncalibrated UAV Thermal Infrared Camera. Remote Sens. 2019, 11, 567. [Google Scholar] [CrossRef]

- Han, Y.; Tarakey, B.A.; Hong, S.J.; Kim, S.Y.; Kim, E.; Lee, C.H.; Kim, G. Calibration and Image Processing of Aerial Thermal Image for UAV Application in Crop Water Stress Estimation. J. Sens. 2021, 2021, 5537795. [Google Scholar] [CrossRef]

- Matese, A.; Di Gennaro, S.F. Practical Applications of a Multisensor UAV Platform Based on Multispectral, Thermal and RGB High Resolution Images in Precision Viticulture. Agriculture 2018, 8, 116. [Google Scholar] [CrossRef]

- Rocchini, D.; Di Rita, A. Relief Effects on Aerial Photos Geometric Correction. Appl. Geogr. 2005, 25, 159–168. [Google Scholar] [CrossRef]

- Santana, L.S.; Ferraz, G.A.E.S.; Marin, D.B.; Barbosa, B.; Dos Santos, L.M.; Ferraz, P.F.P.; Conti, L.; Camiciottoli, S.; Rossi, G. Influence of Flight Altitude and Control Points in the Georeferencing of Images Obtained by Unmanned Aerial Vehicle. Eur. J. Remote Sens. 2021, 54, 59–71. [Google Scholar] [CrossRef]

- Zhang, K.; Okazawa, H.; Hayashi, K.; Hayashi, T.; Fiwa, L.; Maskey, S. Optimization of Ground Control Point Distribution for Unmanned Aerial Vehicle Photogrammetry for Inaccessible Fields. Sustainability 2022, 14, 9505. [Google Scholar] [CrossRef]

- Dai, W.; Zheng, G.; Antoniazza, G.; Zhao, F.; Chen, K.; Lu, W.; Lane, S.N. Improving UAV-SfM Photogrammetry for Modelling High-Relief Terrain: Image Collection Strategies and Ground Control Quantity. Earth Surf. Process. Landf. 2023, 48, 2884–2899. [Google Scholar] [CrossRef]

- Villanueva, J.K.S.; Blanco, A.C. Optimization of Ground Control Point (GCP) Configuration for Unmanned Aerial Vehicle (UAV) Survey Using Structure from Motion (SFM). Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, 42, 167–174. [Google Scholar] [CrossRef]

- Gomes Pessoa, G.; Caceres Carrilho, A.; Takahashi Miyoshi, G.; Amorim, A.; Galo, M. Assessment of UAV-Based Digital Surface Model and the Effects of Quantity and Distribution of Ground Control Points. Int. J. Remote Sens. 2021, 42, 65–83. [Google Scholar] [CrossRef]

- Liu, X.; Lian, X.; Yang, W.; Wang, F.; Han, Y.; Zhang, Y. Accuracy Assessment of a UAV Direct Georeferencing Method and Impact of the Configuration of Ground Control Points. Drones 2022, 6, 30. [Google Scholar] [CrossRef]

- Martínez-Carricondo, P.; Agüera-Vega, F.; Carvajal-Ramírez, F.; Mesas-Carrascosa, F.J.; García-Ferrer, A.; Pérez-Porras, F.J. Assessment of UAV-Photogrammetric Mapping Accuracy Based on Variation of Ground Control Points. Int. J. Appl. Earth Obs. Geoinf. 2018, 72, 1–10. [Google Scholar] [CrossRef]

- James, M.R.; Antoniazza, G.; Robson, S.; Lane, S.N. Mitigating Systematic Error in Topographic Models for Geomorphic Change Detection: Accuracy, Precision and Considerations beyond off-Nadir Imagery. Earth Surf. Process. Landf. 2020, 45, 2251–2271. [Google Scholar] [CrossRef]

- Ulvi, A. The Effect of the Distribution and Numbers of Ground Control Points on the Precision of Producing Orthophoto Maps with an Unmanned Aerial Vehicle. J. Asian Archit. Build. Eng. 2021, 20, 806–817. [Google Scholar] [CrossRef]

- Stott, E.; Williams, R.D.; Hoey, T.B. Ground Control Point Distribution for Accurate Kilometre-Scale Topographic Mapping Using an RTK-GNSS Unmanned Aerial Vehicle and SfM Photogrammetry. Drones 2020, 4, 55. [Google Scholar] [CrossRef]

- Tomaštík, J.; Mokroš, M.; Surový, P.; Grznárová, A.; Merganič, J. UAV RTK/PPK Method—An Optimal Solution for Mapping Inaccessible Forested Areas? Remote Sens. 2019, 11, 721. [Google Scholar] [CrossRef]

- Cho, J.M.; Lee, B.K. GCP and PPK Utilization Plan to Deal with RTK Signal Interruption in RTK-UAV Photogrammetry. Drones 2023, 7, 265. [Google Scholar] [CrossRef]

- Famiglietti, N.A.; Cecere, G.; Grasso, C.; Memmolo, A.; Vicari, A. A Test on the Potential of a Low Cost Unmanned Aerial Vehicle RTK/PPK Solution for Precision Positioning. Sensors 2021, 21, 3882. [Google Scholar] [CrossRef]

- Jain, A.; Mahajan, M.; Saraf, R. Standardization of the Shape of Ground Control Point (GCP) and the Methodology for Its Detection in Images for UAV-Based Mapping Applications. In Proceedings of the Advances in Computer Vision; Arai, K., Kapoor, S., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 459–476. [Google Scholar]

- Santos, W.M.d.; Costa, C.d.J.P.; Medeiros, M.L.d.S.; Jardim, A.M.d.R.F.; Cunha, M.V.d.; Dubeux Junior, J.C.B.; Jaramillo, D.M.; Bezerra, A.C.; Souza, E.J.O.d. Can Unmanned Aerial Vehicle Images Be Used to Estimate Forage Production Parameters in Agroforestry Systems in the Caatinga? Appl. Sci. 2024, 14, 4896. [Google Scholar] [CrossRef]

- Carneiro, F.M.; Angeli Furlani, C.E.; Zerbato, C.; Candida de Menezes, P.; da Silva Gírio, L.A.; Freire de Oliveira, M. Comparison between Vegetation Indices for Detecting Spatial and Temporal Variabilities in Soybean Crop Using Canopy Sensors. Precis. Agric. 2020, 21, 979–1007. [Google Scholar] [CrossRef]

- Pereira, J.A.; Vélez, S.; Martínez-Peña, R.; Castrillo, D. Beyond Vegetation: A Review Unveiling Additional Insights into Agriculture and Forestry through the Application of Vegetation Indices. J 2023, 6, 421–436. [Google Scholar] [CrossRef]

- El-Hendawy, S.E.; Al-Suhaibani, N.A.; Elsayed, S.; Hassan, W.M.; Dewir, Y.H.; Refay, Y.; Abdella, K.A. Potential of the Existing and Novel Spectral Reflectance Indices for Estimating the Leaf Water Status and Grain Yield of Spring Wheat Exposed to Different Irrigation Rates. Agric. Water Manag. 2019, 217, 356–373. [Google Scholar] [CrossRef]

- Ali, A.; Martelli, R.; Lupia, F.; Barbanti, L. Assessing Multiple Years’ Spatial Variability of Crop Yields Using Satellite Vegetation Indices. Remote Sens. 2019, 11, 2384. [Google Scholar] [CrossRef]

- Amaral, L.R.; Oldoni, H.; Baptista, G.M.M.; Ferreira, G.H.S.; Freitas, R.G.; Martins, C.L.; Cunha, I.A.; Santos, A.F. Remote Sensing Imagery to Predict Soybean Yield: A Case Study of Vegetation Indices Contribution. Precis. Agric. 2024, 25, 2375–2393. [Google Scholar] [CrossRef]

- Wang, R.; Tuerxun, N.; Zheng, J. Improved Estimation of SPAD Values in Walnut Leaves by Combining Spectral, Texture, and Structural Information from UAV-Based Multispectral Image. Sci. Hortic. 2024, 328, 112940. [Google Scholar] [CrossRef]

- Liu, H.Q.; Huete, A. A Feedback Based Modification of the NDVI to Minimize Canopy Background and Atmospheric Noise. IEEE Trans. Geosci. Remote Sens. 1995, 33, 457–465. [Google Scholar] [CrossRef]

- Huete, A.R. A Soil-Adjusted Vegetation Index (SAVI). Remote Sens. Environ. 1988, 25, 295–309. [Google Scholar] [CrossRef]

- Brenner, C.; Zeeman, M.; Bernhardt, M.; Schulz, K. Estimation of Evapotranspiration of Temperate Grassland Based on High-Resolution Thermal and Visible Range Imagery from Unmanned Aerial Systems. Int. J. Remote Sens. 2018, 39, 5141–5174. [Google Scholar] [CrossRef]

- Gée, C.; Denimal, E. RGB Image-Derived Indicators for Spatial Assessment of the Impact of Broadleaf Weeds on Wheat Biomass. Remote Sens. 2020, 12, 2982. [Google Scholar] [CrossRef]

- Liang, H.; Lee, S.C.; Bae, W.; Kim, J.; Seo, S. Towards UAVs in Construction: Advancements, Challenges, and Future Directions for Monitoring and Inspection. Drones 2023, 7, 202. [Google Scholar] [CrossRef]

- Cottrell, B.; Kalacska, M.; Arroyo-Mora, J.P.; Lucanus, O.; Inamdar, D.; Løke, T.; Soffer, R.J. Limitations of a Multispectral UAV Sensor for Satellite Validation and Mapping Complex Vegetation. Remote Sens. 2024, 16, 2463. [Google Scholar] [CrossRef]

- Shamaoma, H.; Chirwa, P.W.; Ramoelo, A.; Hudak, A.T.; Syampungani, S. The Application of UASs in Forest Management and Monitoring: Challenges and Opportunities for Use in the Miombo Woodland. Forests 2022, 13, 1812. [Google Scholar] [CrossRef]

- Marques, P.; Pádua, L.; Sousa, J.J.; Fernandes-Silva, A. Advancements in Remote Sensing Imagery Applications for Precision Management in Olive Growing: A Systematic Review. Remote Sens. 2024, 16, 1324. [Google Scholar] [CrossRef]

- Stuart, M.B.; McGonigle, A.J.S.; Willmott, J.R. Hyperspectral Imaging in Environmental Monitoring: A Review of Recent Developments and Technological Advances in Compact Field Deployable Systems. Sensors 2019, 19, 3071. [Google Scholar] [CrossRef]

- Akbar, S.; Abdolmaleki, M.; Ghadernejad, S.; Esmaeili, K. Applying Knowledge-Based and Data-Driven Methods to Improve Ore Grade Control of Blast Hole Drill Cuttings Using Hyperspectral Imaging. Remote Sens. 2024, 16, 2823. [Google Scholar] [CrossRef]

- Woebbecke, D.M.; Meyer, G.E.; Von Bargen, K.; Mortensen, D.A. Color Indices for Weed Identification Under Various Soil, Residue, and Lighting Conditions. Trans. ASAE 1995, 38, 259–269. [Google Scholar] [CrossRef]

- Tucker, C.J. Red and Photographic Infrared Linear Combinations for Monitoring Vegetation. Remote Sens. Environ. 1979, 8, 127–150. [Google Scholar] [CrossRef]

- Gitelson, A.A.; Kaufman, Y.J.; Stark, R.; Rundquist, D. Novel Algorithms for Remote Estimation of Vegetation Fraction. Remote Sens. Environ. 2002, 80, 76–87. [Google Scholar] [CrossRef]

- Rouse, J.W.; Haas, R.H.; Schell, J.A.; Deering, D.W. Monitoring Vegetation Systems in the Great Plains with ERTS. NASA Spec. Publ. 1973, 351, 309. [Google Scholar]

- Gitelson, A.; Kaufman, Y.; Merzlyak, M.N. Use of a Green Channel in Remote Sensing of Global Vegetation from EOS-MODIS. Remote Sens. Environ. 1996, 58, 289–298. [Google Scholar] [CrossRef]

- Gitelson, A.; Merzlyak, M.N. Spectral Reflectance Changes Associated with Autumn Senescence of Aesculus hippocastanum L. and Acer platanoides L. Leaves. Spectral Features and Relation to Chlorophyll Estimation. J. Plant Physiol. 1994, 143, 286–292. [Google Scholar] [CrossRef]

- Jordan, C.F. Derivation of Leaf-Area Index from Quality of Light on the Forest Floor. Ecology 1969, 50, 663–666. [Google Scholar] [CrossRef]

- Broge, N.H.; Leblanc, E. Comparing Prediction Power and Stability of Broadband and Hyperspectral Vegetation Indices for Estimation of Green Leaf Area Index and Canopy Chlorophyll Density. Remote Sens. Environ. 2001, 76, 156–172. [Google Scholar] [CrossRef]

- Penuelas, J.; Filella, I.; Biel, C.; Serrano, L.; Save, R. The Reflectance at the 950–970 Nm Region as an Indicator of Plant Water Status. Int. J. Remote Sens. 1993, 14, 1887–1905. [Google Scholar] [CrossRef]

- Merzlyak, M.N.; Gitelson, A.A.; Chivkunova, O.B.; Rakitin, V.Y. Non-Destructive Optical Detection of Pigment Changes during Leaf Senescence and Fruit Ripening. Physiol. Plant. 1999, 106, 135–141. [Google Scholar] [CrossRef]

- Gamon, J.A.; Serrano, L.; Surfus, J.S. The Photochemical Reflectance Index: An Optical Indicator of Photosynthetic Radiation Use Efficiency across Species, Functional Types, and Nutrient Levels. Oecologia 1997, 112, 492–501. [Google Scholar] [CrossRef]

- Sellami, M.H.; Albrizio, R.; Čolović, M.; Hamze, M.; Cantore, V.; Todorovic, M.; Piscitelli, L.; Stellacci, A.M. Selection of Hyperspectral Vegetation Indices for Monitoring Yield and Physiological Response in Sweet Maize under Different Water and Nitrogen Availability. Agronomy 2022, 12, 489. [Google Scholar] [CrossRef]

- Lu, D.; Batistella, M. Exploring TM Image Texture and Its Relationships with Biomass Estimation in Rondônia, Brazilian Amazon. Acta Amaz. 2005, 35, 249–257. [Google Scholar] [CrossRef]

- Sun, G.; Zhang, Y.; Chen, H.; Wang, L.; Li, M.; Sun, X.; Fei, S.; Xiao, S.; Yan, L.; Li, Y.; et al. Improving Soybean Yield Prediction by Integrating UAV Nadir and Cross-Circling Oblique Imaging. Eur. J. Agron. 2024, 155, 127134. [Google Scholar] [CrossRef]

- Liu, T.; Zhu, S.; Yang, T.; Zhang, W.; Xu, Y.; Zhou, K.; Wu, W.; Zhao, Y.; Yao, Z.; Yang, G.; et al. Maize Height Estimation Using Combined Unmanned Aerial Vehicle Oblique Photography and LIDAR Canopy Dynamic Characteristics. Comput. Electron. Agric. 2024, 218, 108685. [Google Scholar] [CrossRef]

- Grüner, E.; Astor, T.; Wachendorf, M. Prediction of Biomass and N Fixation of Legume–Grass Mixtures Using Sensor Fusion. Front. Plant Sci. 2021, 11, 603921. [Google Scholar] [CrossRef]

- Zhu, X.; Bi, Y.; Du, J.; Gao, X.; Zhang, T.; Pi, W.; Zhang, Y.; Wang, Y.; Zhang, H. Research on Deep Learning Method Recognition and a Classification Model of Grassland Grass Species Based on Unmanned Aerial Vehicle Hyperspectral Remote Sensing. Grassl. Sci. 2023, 69, 3–11. [Google Scholar] [CrossRef]

- Benco, M.; Hudec, R.; Kamencay, P.; Zachariasova, M.; Matuskal, S. An Advanced Approach to Extraction of Colour Texture Features Based on GLCM. Int. J. Adv. Robot. Syst. 2014, 11, 104. [Google Scholar] [CrossRef]

- Wu, Y.; Ma, J.; Zhang, W.; Sun, L.; Liu, Y.; Liu, B.; Wang, B.; Chen, Z. Rapid Evaluation of Drought Tolerance of Winter Wheat Cultivars under Water-Deficit Conditions Using Multi-Criteria Comprehensive Evaluation Based on UAV Multispectral and Thermal Images and Automatic Noise Removal. Comput. Electron. Agric. 2024, 218, 108679. [Google Scholar] [CrossRef]

- Liu, Y.; Fan, Y.; Feng, H.; Chen, R.; Bian, M.; Ma, Y.; Yue, J.; Yang, G. Estimating Potato Above-Ground Biomass Based on Vegetation Indices and Texture Features Constructed from Sensitive Bands of UAV Hyperspectral Imagery. Comput. Electron. Agric. 2024, 220, 108918. [Google Scholar] [CrossRef]

- Vyas, R.; Kanumuri, T.; Sheoran, G.; Dubey, P. Co-Occurrence Features and Neural Network Classification Approach for Iris Recognition. In Proceedings of the 2017 Fourth International Conference on Image Information Processing (ICIIP), Shimla, India, 21–23 December 2017; Institute of Electrical and Electronics Engineers Inc.: Shimla, India, 2017; pp. 1–6. [Google Scholar]

- Wang, F.; Yi, Q.; Hu, J.; Xie, L.; Yao, X.; Xu, T.; Zheng, J. Combining Spectral and Textural Information in UAV Hyperspectral Images to Estimate Rice Grain Yield. Int. J. Appl. Earth Obs. Geoinf. 2021, 102, 102397. [Google Scholar] [CrossRef]

- Ganesan, P.; Sajiv, G. User Oriented Color Space for Satellite Image Segmentation Using Fuzzy Based Techniques. In Proceedings of the 2017 International Conference on Innovations in Information, Embedded and Communication Systems (ICIIECS), Coimbatore, India, 17–18 March 2017; Institute of Electrical and Electronics Engineers Inc.: Coimbatore, India, 2017; pp. 1–6. [Google Scholar]

- Ganesan, P.; Sathish, B.S.; Vasanth, K.; Sivakumar, V.G.; Vadivel, M.; Ravi, C.N. A Comprehensive Review of the Impact of Color Space on Image Segmentation. In Proceedings of the 2019 5th International Conference on Advanced Computing & Communication Systems (ICACCS), Coimbatore, India, 15–16 March 2019; Institute of Electrical and Electronics Engineers Inc.: Coimbatore, India, 2019; pp. 962–967. [Google Scholar]

- Gracia-Romero, A.; Kefauver, S.C.; Vergara-Díaz, O.; Zaman-Allah, M.A.; Prasanna, B.M.; Cairns, J.E.; Araus, J.L. Comparative Performance of Ground vs. Aerially Assessed Rgb and Multispectral Indices for Early-Growth Evaluation of Maize Performance under Phosphorus Fertilization. Front. Plant Sci. 2017, 8, 309121. [Google Scholar] [CrossRef]

- Weerasuriya, C.; Ng, S.H.; Woods, W.; Johnstone, T.; Vitta, P.; Hugrass, L.; Juodkazis, S. Feasibility of Magneto-Encephalography Scan under Color-Tailored Illumination. Appl. Sci. 2023, 13, 2988. [Google Scholar] [CrossRef]

- Niu, Y.; Han, W.; Zhang, H.; Zhang, L.; Chen, H. Estimating Maize Plant Height Using a Crop Surface Model Constructed from UAV RGB Images. Biosyst. Eng. 2024, 241, 56–67. [Google Scholar] [CrossRef]

- Liu, Y.; You, H.; Tang, X.; You, Q.; Huang, Y.; Chen, J. Study on Individual Tree Segmentation of Different Tree Species Using Different Segmentation Algorithms Based on 3D UAV Data. Forests 2023, 14, 1327. [Google Scholar] [CrossRef]

- Fei, S.; Xiao, S.; Li, Q.; Shu, M.; Zhai, W.; Xiao, Y.; Chen, Z.; Yu, H.; Ma, Y. Enhancing Leaf Area Index and Biomass Estimation in Maize with Feature Augmentation from Unmanned Aerial Vehicle-Based Nadir and Cross-Circling Oblique Photography. Comput. Electron. Agric. 2023, 215, 108462. [Google Scholar] [CrossRef]

- Zhao, X.; Su, Y.; Hu, T.; Cao, M.; Liu, X.; Yang, Q.; Guan, H.; Liu, L.; Guo, Q. Analysis of UAV Lidar Information Loss and Its Influence on the Estimation Accuracy of Structural and Functional Traits in a Meadow Steppe. Ecol. Indic. 2022, 135, 108515. [Google Scholar] [CrossRef]

- Taugourdeau, S.; Diedhiou, A.; Fassinou, C.; Bossoukpe, M.; Diatta, O.; N’Goran, A.; Auderbert, A.; Ndiaye, O.; Diouf, A.A.; Tagesson, T.; et al. Estimating Herbaceous Aboveground Biomass in Sahelian Rangelands Using Structure from Motion Data Collected on the Ground and by UAV. Ecol. Evol. 2022, 12, e8867. [Google Scholar] [CrossRef]

- Hasheminasab, S.M.; Zhou, T.; Habib, A. GNSS/INS-Assisted Structure from Motion Strategies for UAV-Based Imagery over Mechanized Agricultural Fields. Remote Sens. 2020, 12, 351. [Google Scholar] [CrossRef]

- Xiao, S.; Ye, Y.; Fei, S.; Chen, H.; Zhang, B.; Li, Q.; Cai, Z.; Che, Y.; Wang, Q.; Ghafoor, A.Z.; et al. High-Throughput Calculation of Organ-Scale Traits with Reconstructed Accurate 3D Canopy Structures Using a UAV RGB Camera with an Advanced Cross-Circling Oblique Route. ISPRS J. Photogramm. Remote Sens. 2023, 201, 104–122. [Google Scholar] [CrossRef]

- Wazid, M.; Das, A.K.; Chamola, V.; Park, Y. Uniting Cyber Security and Machine Learning: Advantages, Challenges and Future Research. ICT Express 2022, 8, 313–321. [Google Scholar] [CrossRef]

- Bulagang, A.F.; Weng, N.G.; Mountstephens, J.; Teo, J. A Review of Recent Approaches for Emotion Classification Using Electrocardiography and Electrodermography Signals. Inform. Med. Unlocked 2020, 20, 100363. [Google Scholar] [CrossRef]

- Guido, R.; Ferrisi, S.; Lofaro, D.; Conforti, D. An Overview on the Advancements of Support Vector Machine Models in Healthcare Applications: A Review. Information 2024, 15, 235. [Google Scholar] [CrossRef]

- Khan, F.; Albalawi, O. Analysis of Fat Big Data Using Factor Models and Penalization Techniques: A Monte Carlo Simulation and Application. Axioms 2024, 13, 418. [Google Scholar] [CrossRef]

- Tufail, S.; Riggs, H.; Tariq, M.; Sarwat, A.I. Advancements and Challenges in Machine Learning: A Comprehensive Review of Models, Libraries, Applications, and Algorithms. Electronics 2023, 12, 1789. [Google Scholar] [CrossRef]

- Drogkoula, M.; Kokkinos, K.; Samaras, N. A Comprehensive Survey of Machine Learning Methodologies with Emphasis in Water Resources Management. Appl. Sci. 2023, 13, 12147. [Google Scholar] [CrossRef]

- Zhao, Y.; Sun, Y.; Lu, X.; Zhao, X.; Yang, L.; Sun, Z.; Bai, Y. Hyperspectral Retrieval of Leaf Physiological Traits and Their Links to Ecosystem Productivity in Grassland Monocultures. Ecol. Indic. 2021, 122, 107267. [Google Scholar] [CrossRef]

- Giraldo, R.A.D.; De León, M.Á.; Castillo, Á.R.; López, O.P.; Rocha, E.C.; Asprilla, W.P. Estimation of Forage Availability and Parameters Associated to the Nutritional Quality of Urochloa humidicola Cv Llanero Based on Multispectral Images. Trop. Grasslands-Forrajes Trop. 2023, 11, 61–74. [Google Scholar] [CrossRef]

- De Rosa, D.; Basso, B.; Fasiolo, M.; Friedl, J.; Fulkerson, B.; Grace, P.R.; Rowlings, D.W. Predicting Pasture Biomass Using a Statistical Model and Machine Learning Algorithm Implemented with Remotely Sensed Imagery. Comput. Electron. Agric. 2021, 180, 105880. [Google Scholar] [CrossRef]

- Freitas, R.G.; Pereira, F.R.S.; Dos Reis, A.A.; Magalhães, P.S.G.; Figueiredo, G.K.D.A.; do Amaral, L.R. Estimating Pasture Aboveground Biomass under an Integrated Crop-Livestock System Based on Spectral and Texture Measures Derived from UAV Images. Comput. Electron. Agric. 2022, 198, 107122. [Google Scholar] [CrossRef]

- Singh, A.K.; Kumar, P.; Ali, R.; Al-Ansari, N.; Vishwakarma, D.K.; Kushwaha, K.S.; Panda, K.C.; Sagar, A.; Mirzania, E.; Elbeltagi, A.; et al. An Integrated Statistical-Machine Learning Approach for Runoff Prediction. Sustainability 2022, 14, 8209. [Google Scholar] [CrossRef]

- P Fernandes, A.C.; R Fonseca, A.; Pacheco, F.A.L.; Sanches Fernandes, L.F. Water Quality Predictions through Linear Regression—A Brute Force Algorithm Approach. MethodsX 2023, 10, 102153. [Google Scholar] [CrossRef]

- Eilbeigi, S.; Tavakkolizadeh, M.; Masoodi, A.R. Nonlinear Regression Prediction of Mechanical Properties for SMA-Confined Concrete Cylindrical Specimens. Buildings 2022, 13, 112. [Google Scholar] [CrossRef]

- Ranstam, J.; Cook, J.A. LASSO Regression. Br. J. Surg. 2018, 105, 1348. [Google Scholar] [CrossRef]

- Rocks, J.W.; Mehta, P. Bias-Variance Decomposition of Overparameterized Regression with Random Linear Features. Phys. Rev. E 2022, 106, 025304. [Google Scholar] [CrossRef] [PubMed]

- Speiser, J.L.; Miller, M.E.; Tooze, J.; Ip, E. A Comparison of Random Forest Variable Selection Methods for Classification Prediction Modeling. Expert Syst. Appl. 2019, 134, 93–101. [Google Scholar] [CrossRef] [PubMed]

- Cheng, L.; Chen, X.; De Vos, J.; Lai, X.; Witlox, F. Applying a Random Forest Method Approach to Model Travel Mode Choice Behavior. Travel Behav. Soc. 2019, 14, 1–10. [Google Scholar] [CrossRef]

- Sutradhar, A.; Akter, S.; Shamrat, F.M.J.M.; Ghosh, P.; Zhou, X.; Bin Idris, M.Y.I.; Ahmed, K.; Moni, M.A. Advancing Thyroid Care: An Accurate Trustworthy Diagnostics System with Interpretable AI and Hybrid Machine Learning Techniques. Heliyon 2024, 10, e36556. [Google Scholar] [CrossRef]

- Pereira, F.R.d.S.; de Lima, J.P.; Freitas, R.G.; Dos Reis, A.A.; Amaral, L.R.d.; Figueiredo, G.K.D.A.; Lamparelli, R.A.C.; Magalhães, P.S.G. Nitrogen Variability Assessment of Pasture Fields under an Integrated Crop-Livestock System Using UAV, PlanetScope, and Sentinel-2 Data. Comput. Electron. Agric. 2022, 193, 106645. [Google Scholar] [CrossRef]

- Akhiat, Y.; Manzali, Y.; Chahhou, M.; Zinedine, A. A New Noisy Random Forest Based Method for Feature Selection. Cybern. Inf. Technol. 2021, 21, 10–28. [Google Scholar] [CrossRef]

- Mentch, L.; Zhou, S. Randomization as Regularization: A Degrees of Freedom Explanation for Random Forest Success. J. Mach. Learn. Res. 2020, 21, 1–36. [Google Scholar]

- Probst, P.; Wright, M.N.; Boulesteix, A.L. Hyperparameters and Tuning Strategies for Random Forest. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2019, 9, e1301. [Google Scholar] [CrossRef]

- Kim, H.; Ko, K. Improving Forecast Accuracy of Financial Vulnerability: PLS Factor Model Approach. Econ. Model. 2020, 88, 341–355. [Google Scholar] [CrossRef]

- Bratković, K.; Luković, K.; Perišić, V.; Savić, J.; Maksimović, J.; Adžić, S.; Rakonjac, A.; Matković Stojšin, M. Interpreting the Interaction of Genotype with Environmental Factors in Barley Using Partial Least Squares Regression Model. Agronomy 2024, 14, 194. [Google Scholar] [CrossRef]

- Al Marouni, Y.; Bentaleb, Y. State of Art of PLS Regression for Non Quantitative Data and in Big Data Context. In Proceedings of the 4th International Conference on Networking, Information Systems & Security, Kenitra, Morocco, 1–2 April 2021; Association for Computing Machinery: New York, NY, USA, 2021. [Google Scholar]

- Hou, Y.Y.; Li, J.; Chen, X.B.; Ye, C.Q. A Partial Least Squares Regression Model Based on Variational Quantum Algorithm. Laser Phys. Lett. 2022, 19, 095204. [Google Scholar] [CrossRef]

- Metz, M.; Abdelghafour, F.; Roger, J.M.; Lesnoff, M. A Novel Robust PLS Regression Method Inspired from Boosting Principles: RoBoost-PLSR. Anal. Chim. Acta 2021, 1179, 338823. [Google Scholar] [CrossRef] [PubMed]

- Alnaqbi, A.J.; Zeiada, W.; Al-Khateeb, G.; Abttan, A.; Abuzwidah, M. Predictive Models for Flexible Pavement Fatigue Cracking Based on Machine Learning. Transp. Eng. 2024, 16, 100243. [Google Scholar] [CrossRef]

- Feng, L.; Zhang, Z.; Ma, Y.; Du, Q.; Williams, P.; Drewry, J.; Luck, B. Alfalfa Yield Prediction Using UAV-Based Hyperspectral Imagery and Ensemble Learning. Remote Sens. 2020, 12, 2028. [Google Scholar] [CrossRef]

- Costa, L.S.; Sano, E.E.; Ferreira, M.E.; Munhoz, C.B.R.; Costa, J.V.S.; Rufino Alves Júnior, L.; de Mello, T.R.B.; da Cunha Bustamante, M.M. Woody Plant Encroachment in a Seasonal Tropical Savanna: Lessons about Classifiers and Accuracy from UAV Images. Remote Sens. 2023, 15, 2342. [Google Scholar] [CrossRef]

- Adugna, T.; Xu, W.; Fan, J. Comparison of Random Forest and Support Vector Machine Classifiers for Regional Land Cover Mapping Using Coarse Resolution FY-3C Images. Remote Sens. 2022, 14, 574. [Google Scholar] [CrossRef]

- Lin, X.; Chen, J.; Lou, P.; Yi, S.; Qin, Y.; You, H.; Han, X. Improving the Estimation of Alpine Grassland Fractional Vegetation Cover Using Optimized Algorithms and Multi-Dimensional Features. Plant Methods 2021, 17, 96. [Google Scholar] [CrossRef]

- Araya, S.N.; Fryjoff-Hung, A.; Anderson, A.; Viers, J.H.; Ghezzehei, T.A. Advances in Soil Moisture Retrieval from Multispectral Remote Sensing Using Unoccupied Aircraft Systems and Machine Learning Techniques. Hydrol. Earth Syst. Sci. 2021, 25, 2739–2758. [Google Scholar] [CrossRef]

- Vilar, P.; Morais, T.G.; Rodrigues, N.R.; Gama, I.; Monteiro, M.L.; Domingos, T.; Teixeira, R.F.M. Object-Based Classification Approaches for Multitemporal Identification and Monitoring of Pastures in Agroforestry Regions Using Multispectral Unmanned Aerial Vehicle Products. Remote Sens. 2020, 12, 814. [Google Scholar] [CrossRef]

- Taye, M.M. Understanding of Machine Learning with Deep Learning: Architectures, Workflow, Applications and Future Directions. Computers 2023, 12, 91. [Google Scholar] [CrossRef]

- Fan, F.L.; Xiong, J.; Li, M.; Wang, G. On Interpretability of Artificial Neural Networks: A Survey. IEEE Trans. Radiat. Plasma Med. Sci. 2021, 5, 741–760. [Google Scholar] [CrossRef] [PubMed]

- Taye, M.M. Theoretical Understanding of Convolutional Neural Network: Concepts, Architectures, Applications, Future Directions. Computation 2023, 11, 52. [Google Scholar] [CrossRef]

- Wang, Y.-H.; Su, W.-H.; Wang, Y.-H.; Su, W.-H. Convolutional Neural Networks in Computer Vision for Grain Crop Phenotyping: A Review. Agronomy 2022, 12, 2659. [Google Scholar] [CrossRef]

- Krichen, M. Convolutional Neural Networks: A Survey. Computers 2023, 12, 151. [Google Scholar] [CrossRef]

- Kuzudisli, C.; Bakir-Gungor, B.; Bulut, N.; Qaqish, B.; Yousef, M. Review of Feature Selection Approaches Based on Grouping of Features. PeerJ 2023, 11, e15666. [Google Scholar] [CrossRef]

- Khaire, U.M.; Dhanalakshmi, R. Stability of Feature Selection Algorithm: A Review. J. King Saud Univ.—Comput. Inf. Sci. 2022, 34, 1060–1073. [Google Scholar] [CrossRef]

- Bertolini, R.; Finch, S.J.; Nehm, R.H. Enhancing Data Pipelines for Forecasting Student Performance: Integrating Feature Selection with Cross-Validation. Int. J. Educ. Technol. High. Educ. 2021, 18, 44. [Google Scholar] [CrossRef]

- Lu, B.; He, Y.; Liu, H. Investigating Species Composition in a Temperate Grassland Using Unmanned Aerial Vehicle-Acquired Imagery. In Proceedings of the 2016 4th International Workshop on Earth Observation and Remote Sensing Applications (EORSA), Guangzhou, China, 4–6 July 2016; Institute of Electrical and Electronics Engineers Inc.: Piscataway Township, NJ, USA, 2016; pp. 107–111. [Google Scholar]

- Lin, X.; Chen, J.; Wu, T.; Yi, S.; Chen, J.; Han, X. Time-Series Simulation of Alpine Grassland Cover Using Transferable Stacking Deep Learning and Multisource Remote Sensing Data in the Google Earth Engine. Int. J. Appl. Earth Obs. Geoinf. 2024, 131, 103964. [Google Scholar] [CrossRef]

- Raiaan, M.A.K.; Sakib, S.; Fahad, N.M.; Al Mamun, A.; Rahman, M.A.; Shatabda, S.; Mukta, M.S.H. A Systematic Review of Hyperparameter Optimization Techniques in Convolutional Neural Networks. Decis. Anal. J. 2024, 11, 100470. [Google Scholar] [CrossRef]

- Elgeldawi, E.; Sayed, A.; Galal, A.R.; Zaki, A.M. Hyperparameter Tuning for Machine Learning Algorithms Used for Arabic Sentiment Analysis. Informatics 2021, 8, 79. [Google Scholar] [CrossRef]

- Setiadi, D.R.I.M.; Susanto, A.; Nugroho, K.; Muslikh, A.R.; Ojugo, A.A.; Gan, H.S. Rice Yield Forecasting Using Hybrid Quantum Deep Learning Model. Computers 2024, 13, 191. [Google Scholar] [CrossRef]

- Angelakis, D.; Ventouras, E.C.; Kostopoulos, S.; Asvestas, P. Comparative Analysis of Deep Learning Models for Optimal EEG-Based Real-Time Servo Motor Control. Eng 2024, 5, 1708–1736. [Google Scholar] [CrossRef]

- Kaliappan, J.; Bagepalli, A.R.; Almal, S.; Mishra, R.; Hu, Y.C.; Srinivasan, K. Impact of Cross-Validation on Machine Learning Models for Early Detection of Intrauterine Fetal Demise. Diagnostics 2023, 13, 1692. [Google Scholar] [CrossRef]

- Jan, M.S.; Hussain, S.; e Zahra, R.; Emad, M.Z.; Khan, N.M.; Rehman, Z.U.; Cao, K.; Alarifi, S.S.; Raza, S.; Sherin, S.; et al. Appraisal of Different Artificial Intelligence Techniques for the Prediction of Marble Strength. Sustainability 2023, 15, 8835. [Google Scholar] [CrossRef]

- Szeghalmy, S.; Fazekas, A. A Comparative Study of the Use of Stratified Cross-Validation and Distribution-Balanced Stratified Cross-Validation in Imbalanced Learning. Sensors 2023, 23, 2333. [Google Scholar] [CrossRef]

- Allgaier, J.; Pryss, R. Cross-Validation Visualized: A Narrative Guide to Advanced Methods. Mach. Learn. Knowl. Extr. 2024, 6, 1378–1388. [Google Scholar] [CrossRef]

- Wan, L.; Liu, Y.; He, Y.; Cen, H. Prior Knowledge and Active Learning Enable Hybrid Method for Estimating Leaf Chlorophyll Content from Multi-Scale Canopy Reflectance. Comput. Electron. Agric. 2023, 214, 108308. [Google Scholar] [CrossRef]

- Chang, Y.; Le Moan, S.; Bailey, D. RGB Imaging Based Estimation of Leaf Chlorophyll Content. In Proceedings of the 2019 International Conference on Image and Vision Computing New Zealand (IVCNZ), Dunedin, New Zealand, 2–4 December 2019; IEEE Computer Society: Dunedin, New Zealand, 2019; pp. 1–6. [Google Scholar]

- Zhang, Y.W.; Wang, T.; Guo, Y.; Skidmore, A.; Zhang, Z.; Tang, R.; Song, S.; Tang, Z. Estimating Community-Level Plant Functional Traits in a Species-Rich Alpine Meadow Using UAV Image Spectroscopy. Remote Sens. 2022, 14, 3399. [Google Scholar] [CrossRef]

- Cockson, P.; Landis, H.; Smith, T.; Hicks, K.; Whipker, B.E. Characterization of Nutrient Disorders of Cannabis sativa. Appl. Sci. 2019, 9, 4432. [Google Scholar] [CrossRef]

- Noulas, C.; Torabian, S.; Qin, R. Crop Nutrient Requirements and Advanced Fertilizer Management Strategies. Agronomy 2023, 13, 2017. [Google Scholar] [CrossRef]

- Casamitjana, M.; Torres-Madroñero, M.C.; Bernal-Riobo, J.; Varga, D. Soil Moisture Analysis by Means of Multispectral Images According to Land Use and Spatial Resolution on Andosols in the Colombian Andes. Appl. Sci. 2020, 10, 5540. [Google Scholar] [CrossRef]

- Lu, F.; Sun, Y.; Hou, F. Using UAV Visible Images to Estimate the Soil Moisture of Steppe. Water 2020, 12, 2334. [Google Scholar] [CrossRef]

- Sang, Y.; Yu, S.; Lu, F.; Sun, Y.; Wang, S.; Ade, L.; Hou, F. UAV Monitoring Topsoil Moisture in an Alpine Meadow on the Qinghai–Tibet Plateau. Agronomy 2023, 13, 2193. [Google Scholar] [CrossRef]

- Brenner, C.; Thiem, C.E.; Wizemann, H.D.; Bernhardt, M.; Schulz, K. Estimating Spatially Distributed Turbulent Heat Fluxes from High-Resolution Thermal Imagery Acquired with a UAV System. Int. J. Remote Sens. 2017, 38, 3003–3026. [Google Scholar] [CrossRef]

- Zhang, W.; Yi, S.; Qin, Y.; Sun, Y.; Shangguan, D.; Meng, B.; Li, M.; Zhang, J. Effects of Patchiness on Surface Soil Moisture of Alpine Meadow on the Northeastern Qinghai-Tibetan Plateau: Implications for Grassland Restoration. Remote Sens. 2020, 12, 4121. [Google Scholar] [CrossRef]

- Morgan, B.E.; Caylor, K.K. Estimating Fine-Scale Transpiration From UAV-Derived Thermal Imagery and Atmospheric Profiles. Water Resour. Res. 2023, 59, e2023WR035251. [Google Scholar] [CrossRef]

- Nobre, I.d.S.; Araújo, G.G.L.d.; Santos, E.M.; Carvalho, G.G.P.d.; de Albuquerque, I.R.R.; Oliveira, J.S.d.; Ribeiro, O.L.; Turco, S.H.N.; Gois, G.C.; Silva, T.G.F.d.; et al. Cactus Pear Silage to Mitigate the Effects of an Intermittent Water Supply for Feedlot Lambs: Intake, Digestibility, Water Balance and Growth Performance. Ruminants 2023, 3, 121–132. [Google Scholar] [CrossRef]

- Wijesingha, J.; Astor, T.; Schulze-Brüninghoff, D.; Wengert, M.; Wachendorf, M. Predicting Forage Quality of Grasslands Using UAV-Borne Imaging Spectroscopy. Remote Sens. 2020, 12, 126. [Google Scholar] [CrossRef]

- Xia, G.S.; Datcu, M.; Yang, W.; Bai, X. Information Processing for Unmanned Aerial Vehicles (UAVs) in Surveying, Mapping, and Navigation. Geo-Spat. Inf. Sci. 2018, 21, 1. [Google Scholar] [CrossRef]

- Wu, B.; Zhang, M.; Zeng, H.; Tian, F.; Potgieter, A.B.; Qin, X.; Yan, N.; Chang, S.; Zhao, Y.; Dong, Q.; et al. Challenges and Opportunities in Remote Sensing-Based Crop Monitoring: A Review. Natl. Sci. Rev. 2023, 10, nwac290. [Google Scholar] [CrossRef] [PubMed]

- Karmakar, P.; Teng, S.W.; Murshed, M.; Pang, S.; Li, Y.; Lin, H. Crop Monitoring by Multimodal Remote Sensing: A Review. Remote Sens. Appl. Soc. Environ. 2024, 33, 101093. [Google Scholar] [CrossRef]

- Lambertini, A.; Mandanici, E.; Tini, M.A.; Vittuari, L. Technical Challenges for Multi-Temporal and Multi-Sensor Image Processing Surveyed by UAV for Mapping and Monitoring in Precision Agriculture. Remote Sens. 2022, 14, 4954. [Google Scholar] [CrossRef]

- Sproles, E.A.; Mullen, A.; Hendrikx, J.; Gatebe, C.; Taylor, S. Autonomous Aerial Vehicles (AAVs) as a Tool for Improving the Spatial Resolution of Snow Albedo Measurements in Mountainous Regions. Hydrology 2020, 7, 41. [Google Scholar] [CrossRef]

- Puppala, H.; Peddinti, P.R.T.; Tamvada, J.P.; Ahuja, J.; Kim, B. Barriers to the Adoption of New Technologies in Rural Areas: The Case of Unmanned Aerial Vehicles for Precision Agriculture in India. Technol. Soc. 2023, 74, 102335. [Google Scholar] [CrossRef]

- Askerbekov, D.; Garza-Reyes, J.A.; Roy Ghatak, R.; Joshi, R.; Kandasamy, J.; Luiz de Mattos Nascimento, D. Embracing Drones and the Internet of Drones Systems in Manufacturing—An Exploration of Obstacles. Technol. Soc. 2024, 78, 102648. [Google Scholar] [CrossRef]

- Rakholia, R.; Tailor, J.; Prajapati, M.; Shah, M.; Saini, J.R. Emerging Technology Adoption for Sustainable Agriculture in India—A Pilot Study. J. Agric. Food Res. 2024, 17, 101238. [Google Scholar] [CrossRef]

- Bai, A.; Kovách, I.; Czibere, I.; Megyesi, B.; Balogh, P. Examining the Adoption of Drones and Categorisation of Precision Elements among Hungarian Precision Farmers Using a Trans-Theoretical Model. Drones 2022, 6, 200. [Google Scholar] [CrossRef]

- Parmaksiz, O.; Cinar, G. Technology Acceptance among Farmers: Examples of Agricultural Unmanned Aerial Vehicles. Agronomy 2023, 13, 2077. [Google Scholar] [CrossRef]

- Tsiamis, N.; Efthymiou, L.; Tsagarakis, K.P. A Comparative Analysis of the Legislation Evolution for Drone Use in OECD Countries. Drones 2019, 3, 75. [Google Scholar] [CrossRef]

- Merz, M.; Pedro, D.; Skliros, V.; Bergenhem, C.; Himanka, M.; Houge, T.; Matos-Carvalho, J.P.; Lundkvist, H.; Cürüklü, B.; Hamrén, R.; et al. Autonomous UAS-Based Agriculture Applications: General Overview and Relevant European Case Studies. Drones 2022, 6, 128. [Google Scholar] [CrossRef]

- Ayamga, M.; Tekinerdogan, B.; Kassahun, A. Exploring the Challenges Posed by Regulations for the Use of Drones in Agriculture in the African Context. Land 2021, 10, 164. [Google Scholar] [CrossRef]

| Description | Results |

|---|---|

| Time period | 2014–2024 * |

| Articles | 238 |

| Journals | 93 |

| Authors | 1086 |

| Author appearances | 1529 |

| Authors of single-authored documents | 0 |

| N° of citations | 4740 |

| References | 12,250 |

| Author keywords | 936 |

| Annual growth rate | 31.50% |

| International co-authorship | 13% |

| Documents per author | 0.219 |

| Authors per document | 6.43 |

| Citations per document | 19.92 |

| Articles per year | 21.64 |

| Sensor | Vegetation Indices | Calculation Formula | Reference |

|---|---|---|---|

| RGB | Excess Green Vegetation Index (EXG) | [90] | |

| Red Chromatic Coordinate Index (RCC) | [90] | ||

| Green Chromatic Coordinate Index (GCC) | [90] | ||

| Blue Chromatic Coordinate Index (BCC) | [90] | ||

| Normalized Green Red Difference Index (NGRDI) | [91] | ||

| Visible Atmospherically Resistant Index (VARI) | [92] | ||

| Multispectral | Normalized Difference Vegetation Index (NDVI) | [93] | |

| Green Normalized Difference Vegetation Index (GNDVI) | [94] | ||

| Normalized Difference Red Edge Index (NDRE) | [95] | ||

| Ratio Vegetation Index (RVI) | [96] | ||

| Triangular Vegetation Index (TVI) | [97] | ||

| Soil-adjusted Vegetation Index (SAVI) | [81] | ||

| Hyperspectral * | Normalized Difference Vegetation Index (NDVI) | [93] | |

| Water Band Index (WBI) | [98] | ||

| Normalized Difference Red Edge Index (NDRE) | [95] | ||

| Soil-Adjusted Vegetation Index (SAVI) | [81] | ||

| Plant Senescence Reflectance Index (PSRI) | [99] | ||

| Structure Insensitive Pigment Index (SIPI) | [100] |

| Metrics | Formula |

|---|---|

| Mean (ME) | |

| Variance (VA) | |

| Homogeneity (HO) | |

| Contrast (CO) | |

| Dissimilarity (DI) | |

| Entropy (EN) | |

| Second Moment (SM) | |

| Correlation (CC) |

| Color Space | Components |

|---|---|

| CIEXYZ | Y: luminance, Z: blue stimulation, and X: linear combination of cone response curves chosen to be non-negative |

| CIELab | L: luminance, a and b: chrominance |

| CIELuv | L: luminance, u and v: chrominance |

| CIELch | L: luminance, C: chrominance, and h: hue angle |

| CMY | C: cyan, M: magenta, and Y: yellow |

| HSV | H: hue, S: saturation, and V: value |

| HSL | H: hue, S: saturation, and L: luminance |

| HSI | H: hue, S: saturation, and I: intensity |

| I1I2I3 | I1: luminance, I2 and I3: chrominance |

| YIQ | Y: luminance, I and Q: chrominance |

| YUV | Y: luminance, U and V: chrominance |

| YCbCr | Y: luminance, Cb and Cr: chrominance |

| LMS | L: long, M: medium, and S: short light wavelengths |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Santos, W.M.d.; Martins, L.D.C.d.S.; Bezerra, A.C.; Souza, L.S.B.d.; Jardim, A.M.d.R.F.; Silva, M.V.d.; Souza, C.A.A.d.; Silva, T.G.F.d. Use of Unmanned Aerial Vehicles for Monitoring Pastures and Forages in Agricultural Sciences: A Systematic Review. Drones 2024, 8, 585. https://doi.org/10.3390/drones8100585

Santos WMd, Martins LDCdS, Bezerra AC, Souza LSBd, Jardim AMdRF, Silva MVd, Souza CAAd, Silva TGFd. Use of Unmanned Aerial Vehicles for Monitoring Pastures and Forages in Agricultural Sciences: A Systematic Review. Drones. 2024; 8(10):585. https://doi.org/10.3390/drones8100585

Chicago/Turabian StyleSantos, Wagner Martins dos, Lady Daiane Costa de Sousa Martins, Alan Cezar Bezerra, Luciana Sandra Bastos de Souza, Alexandre Maniçoba da Rosa Ferraz Jardim, Marcos Vinícius da Silva, Carlos André Alves de Souza, and Thieres George Freire da Silva. 2024. "Use of Unmanned Aerial Vehicles for Monitoring Pastures and Forages in Agricultural Sciences: A Systematic Review" Drones 8, no. 10: 585. https://doi.org/10.3390/drones8100585

APA StyleSantos, W. M. d., Martins, L. D. C. d. S., Bezerra, A. C., Souza, L. S. B. d., Jardim, A. M. d. R. F., Silva, M. V. d., Souza, C. A. A. d., & Silva, T. G. F. d. (2024). Use of Unmanned Aerial Vehicles for Monitoring Pastures and Forages in Agricultural Sciences: A Systematic Review. Drones, 8(10), 585. https://doi.org/10.3390/drones8100585