Assessment of UAS Photogrammetry and Planet Imagery for Monitoring Water Levels around Railway Tracks

Abstract

1. Introduction

2. Materials and Methods

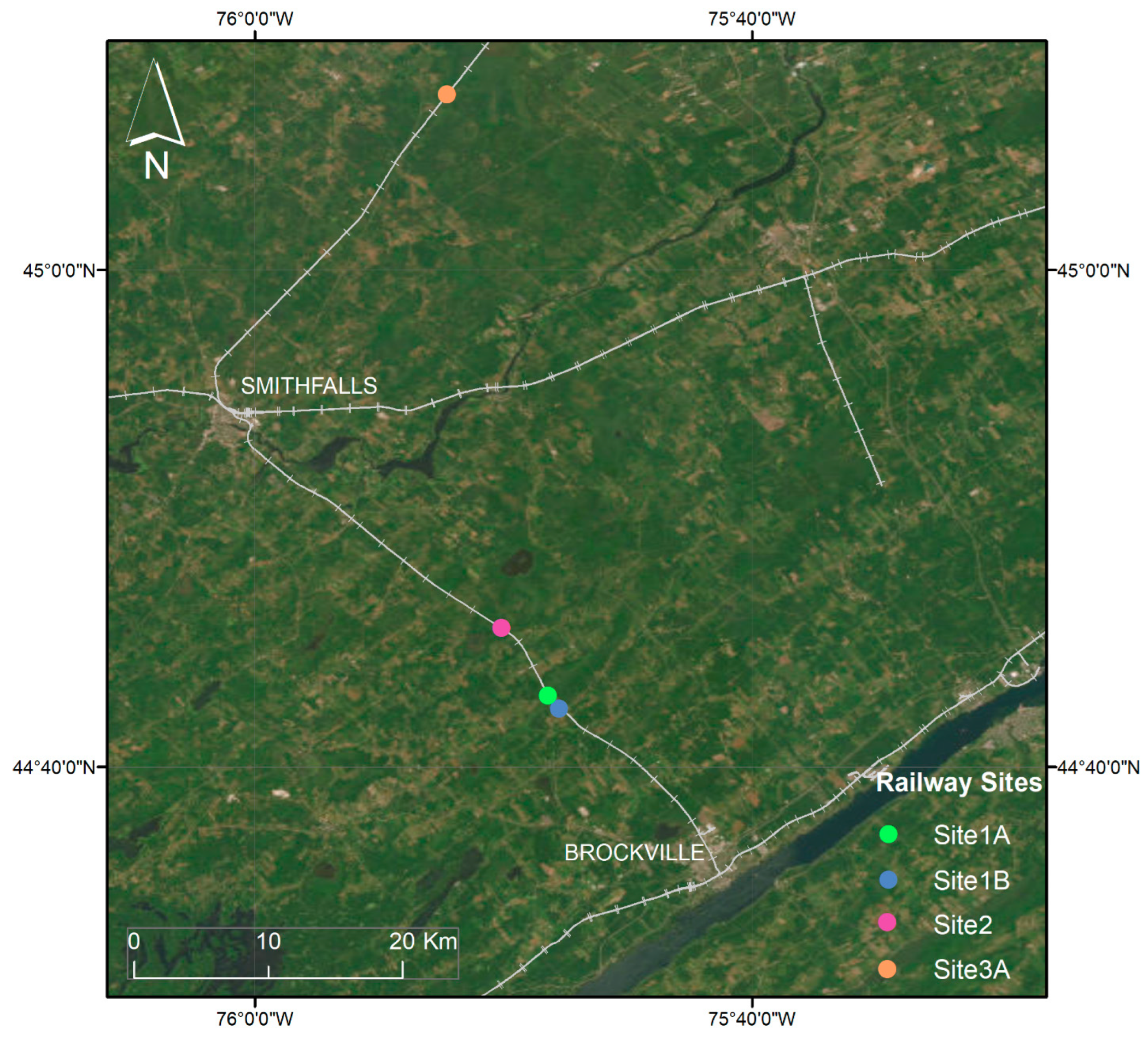

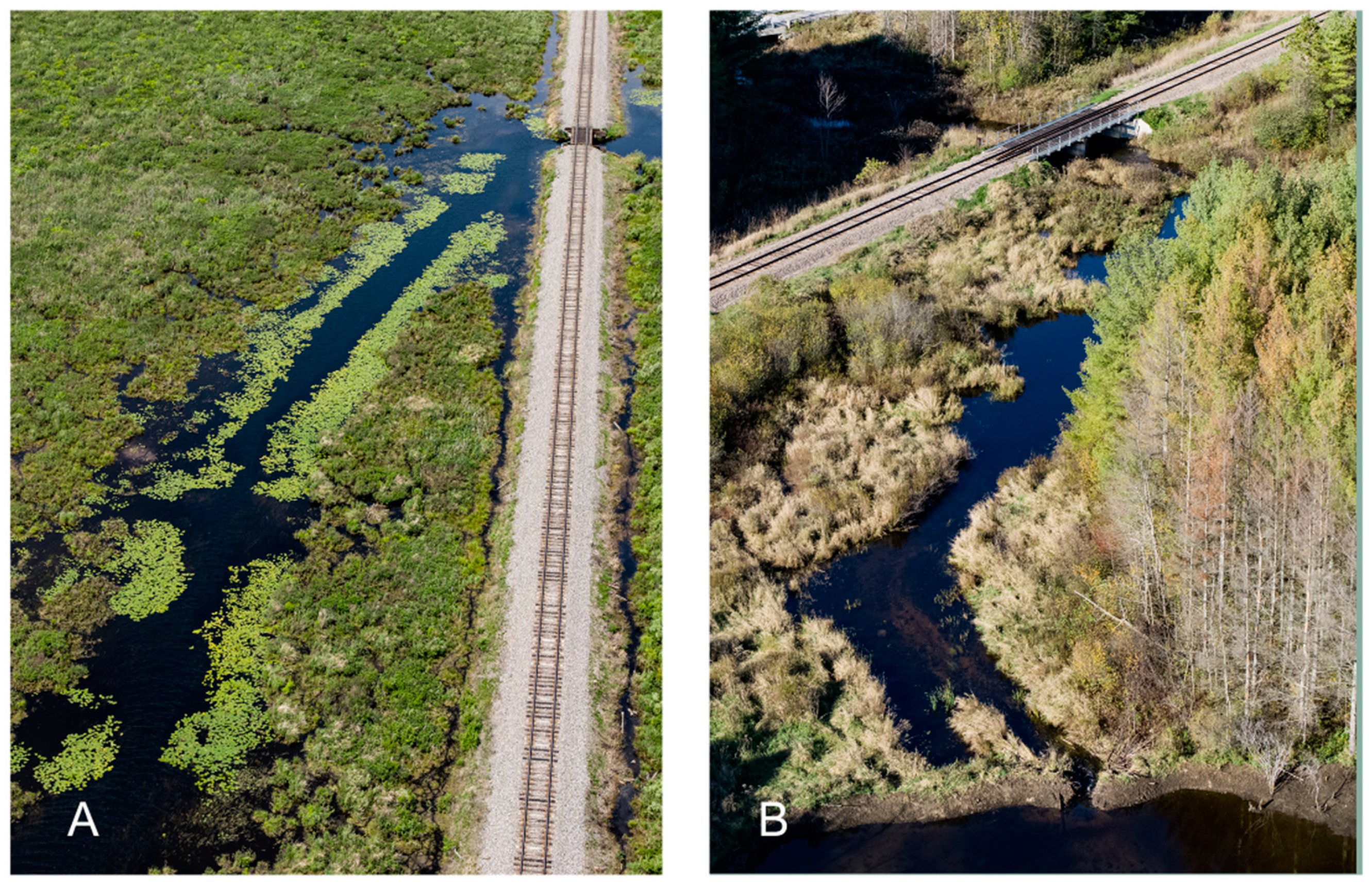

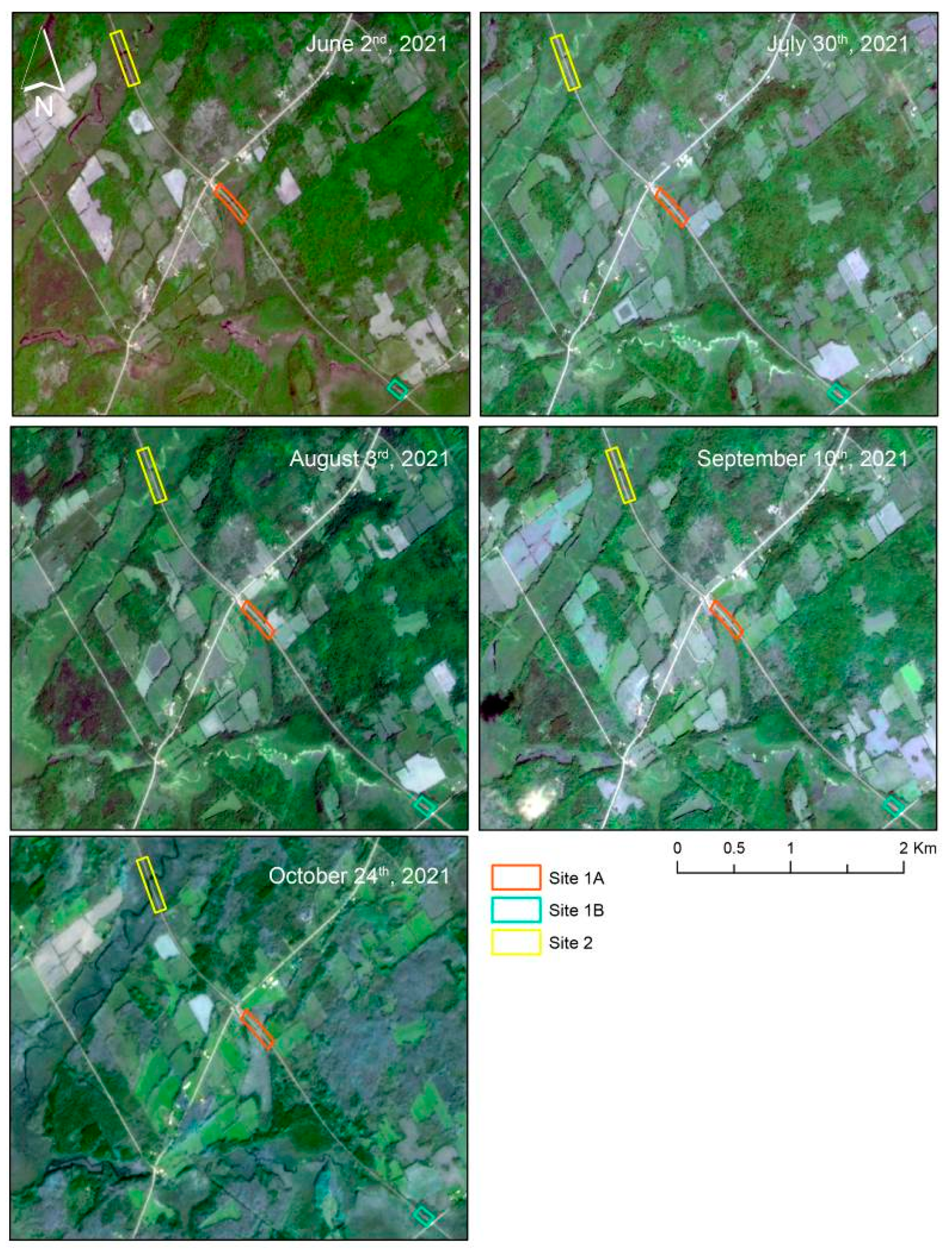

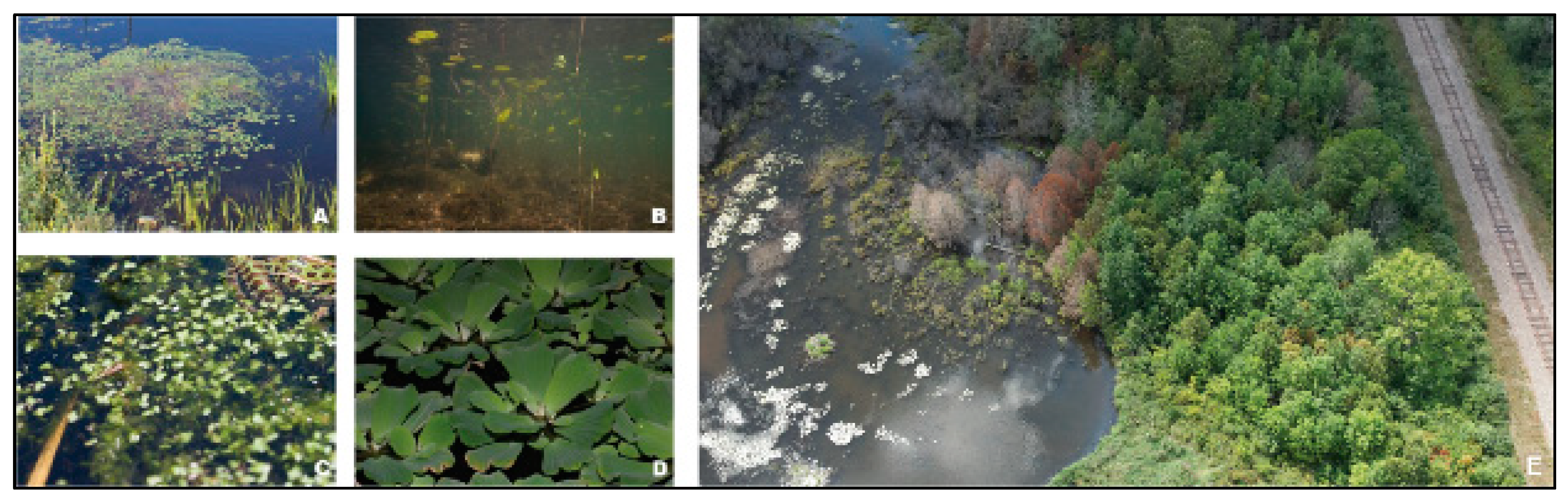

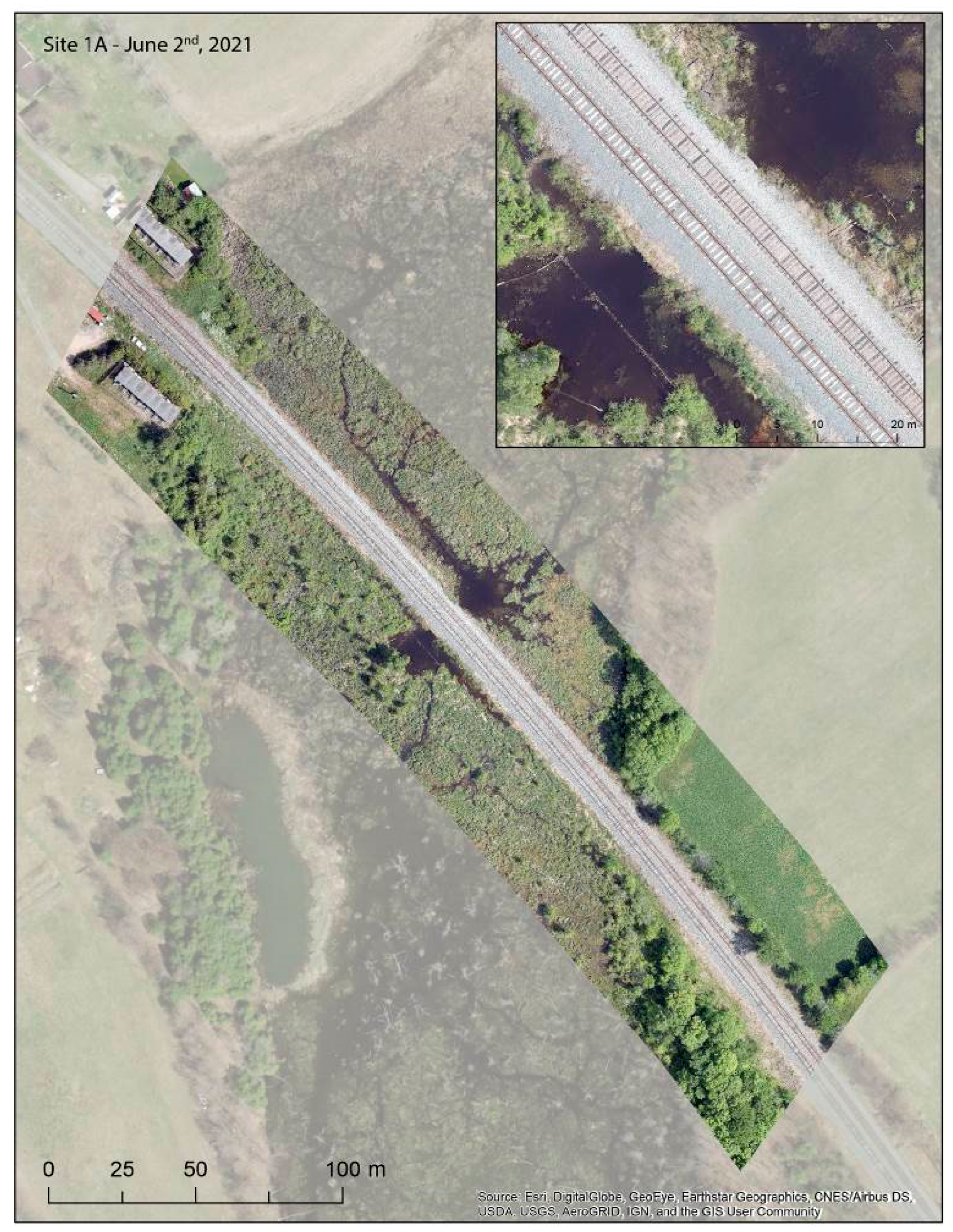

2.1. Test Sites and Flight Plan Considerations

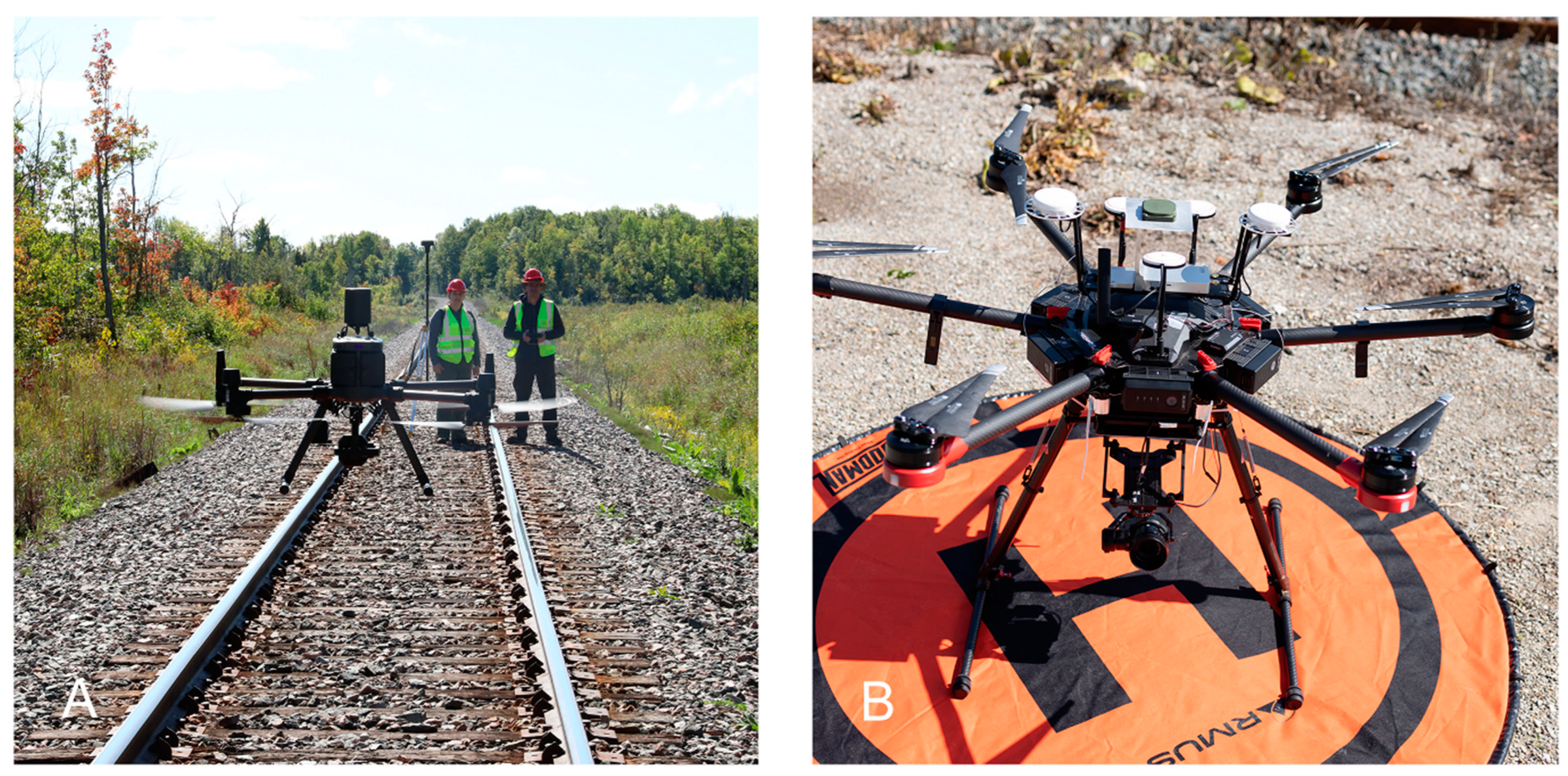

2.2. UAS and Sensors

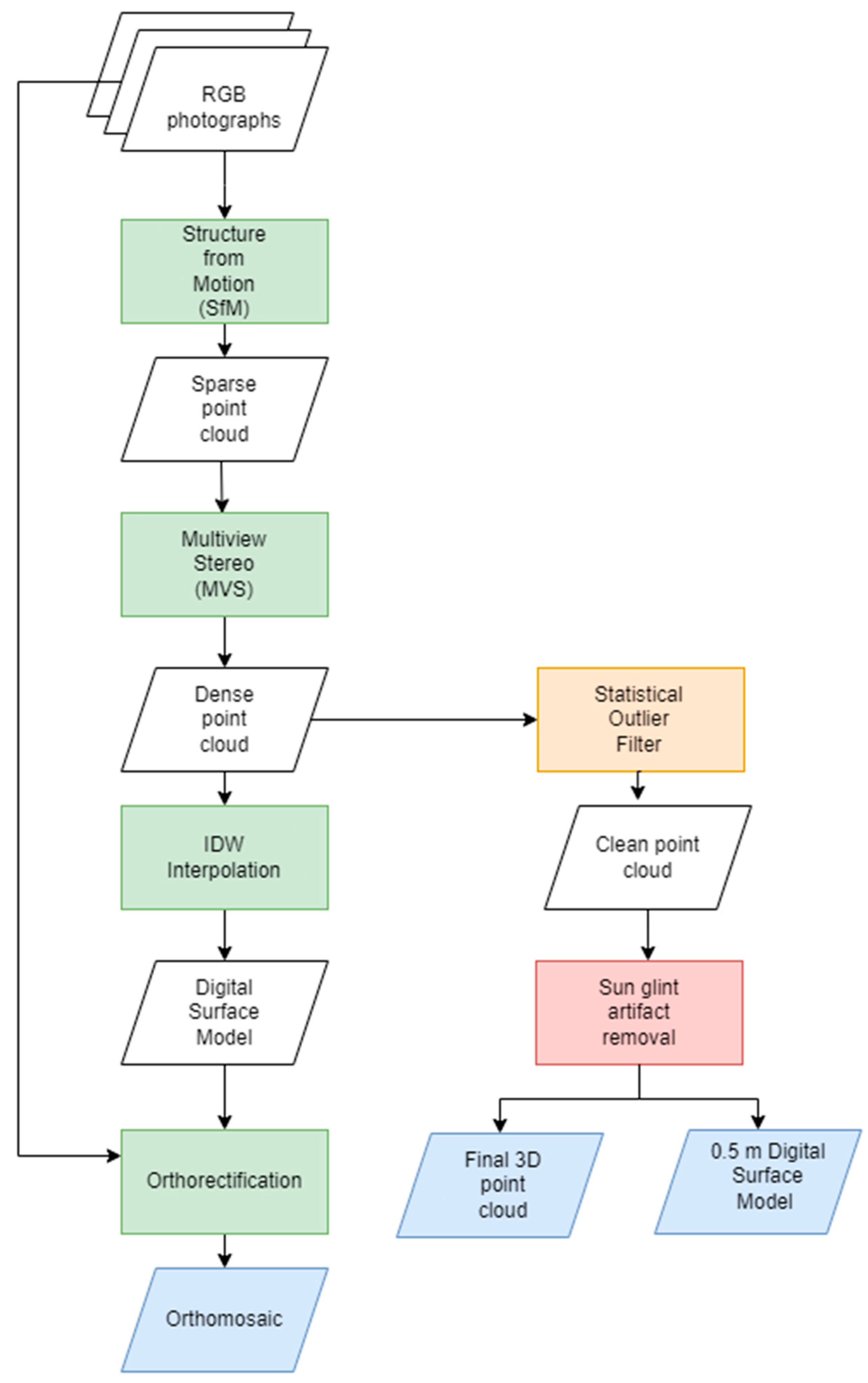

2.3. UAS Structure-from-Motion Multiview Stereo Photogrammetry

2.4. Satellite Imagery

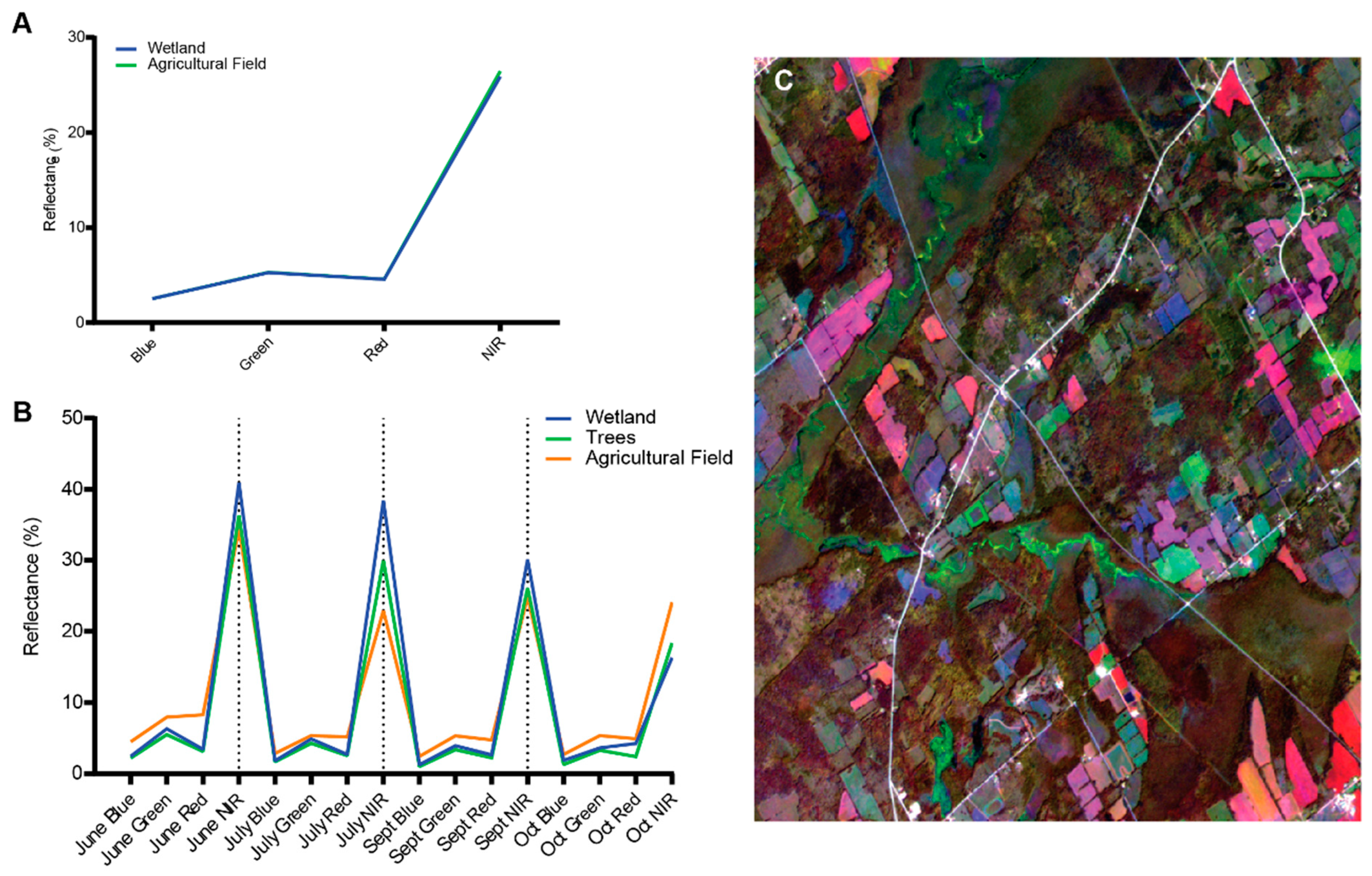

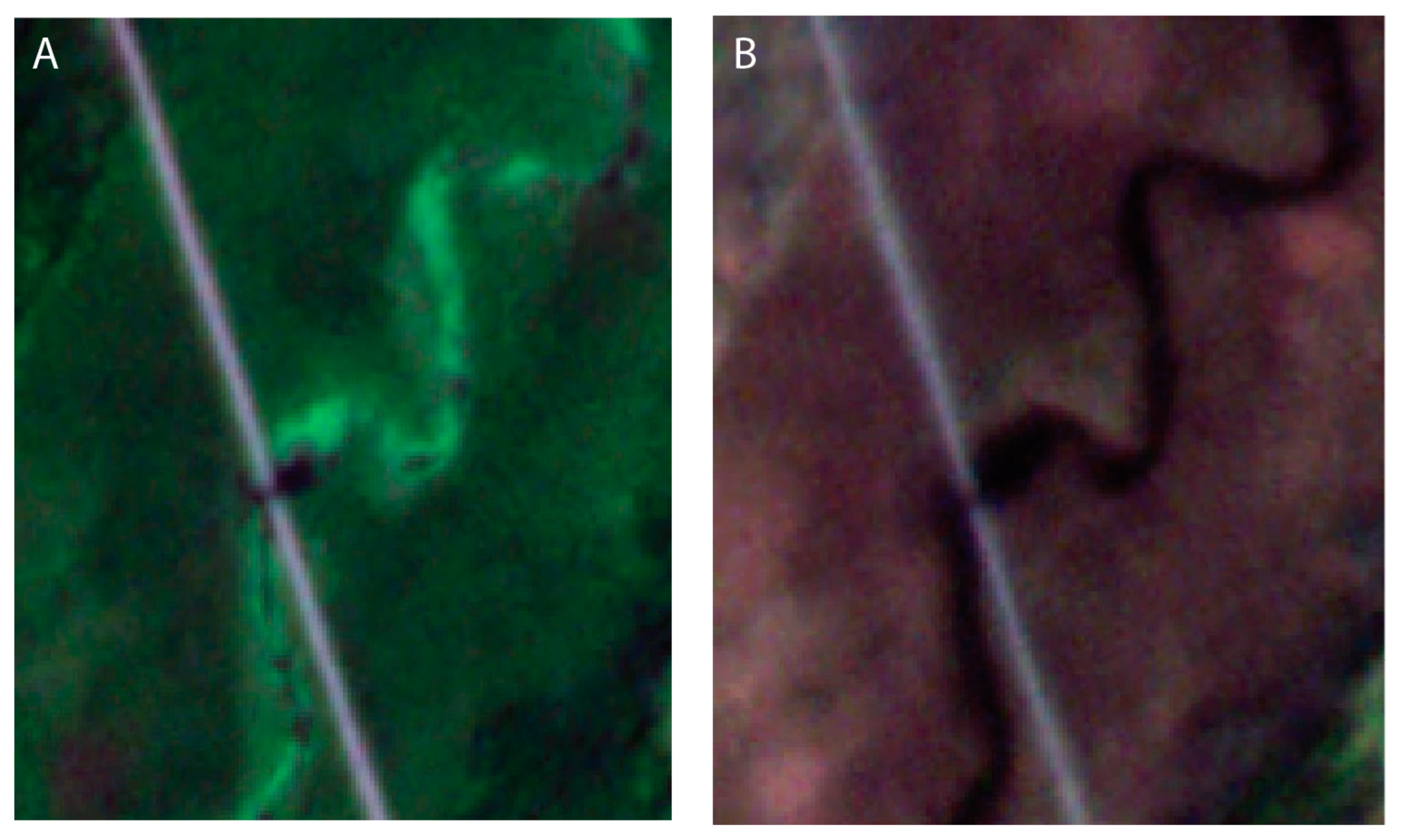

2.5. Water Classification from UAS Orthomosaics and Satellite Imagery

2.5.1. UAS Orthomosaic Classification

2.5.2. Satellite Image Classification

“…wetlands where standing or gently moving waters occur seasonally or persist for long periods, leaving the subsurface continuously waterlogged. The water may also be present as a subsurface flow of mineralized water. The water table may drop seasonally below the rooting zone of the vegetation, creating aerated conditions at the surface. Their substrate consists of mixtures of mineral and organic materials, and peat deposited may be present. The vegetation may consist of dense coniferous or deciduous forest, or tall shrub thickets”.

2.6. Analysis

3. Results

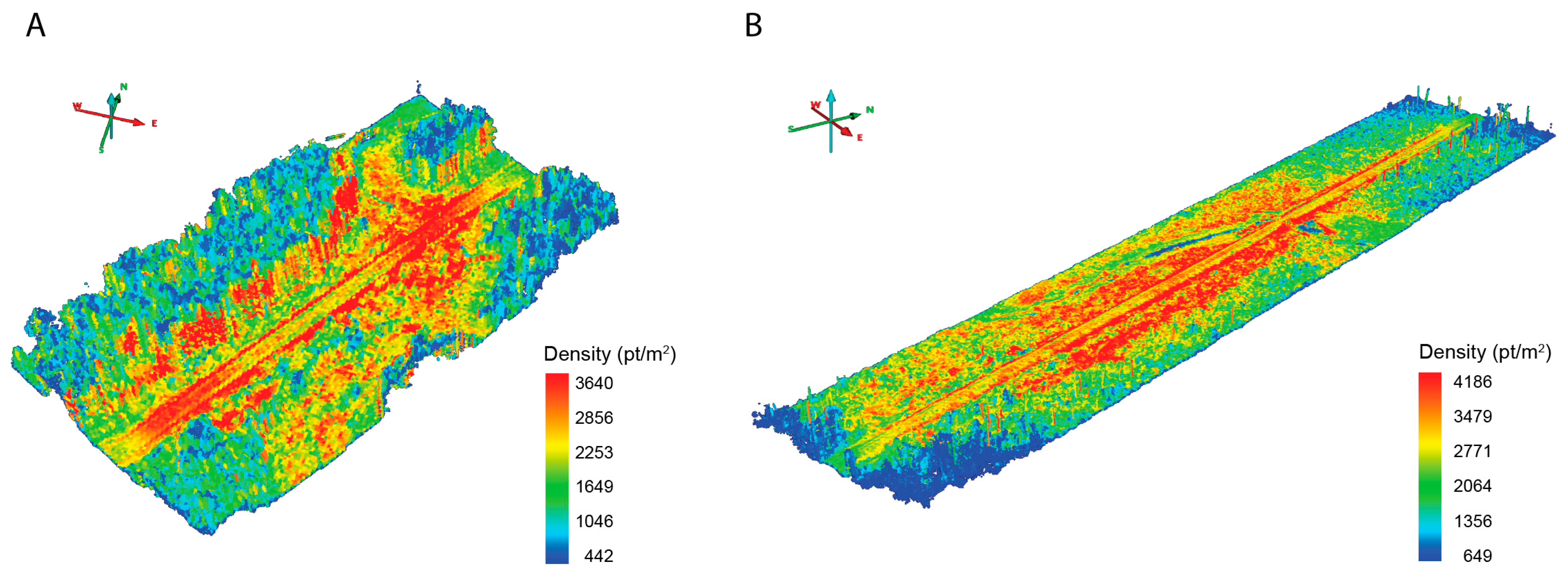

3.1. UAS Data Acquisition

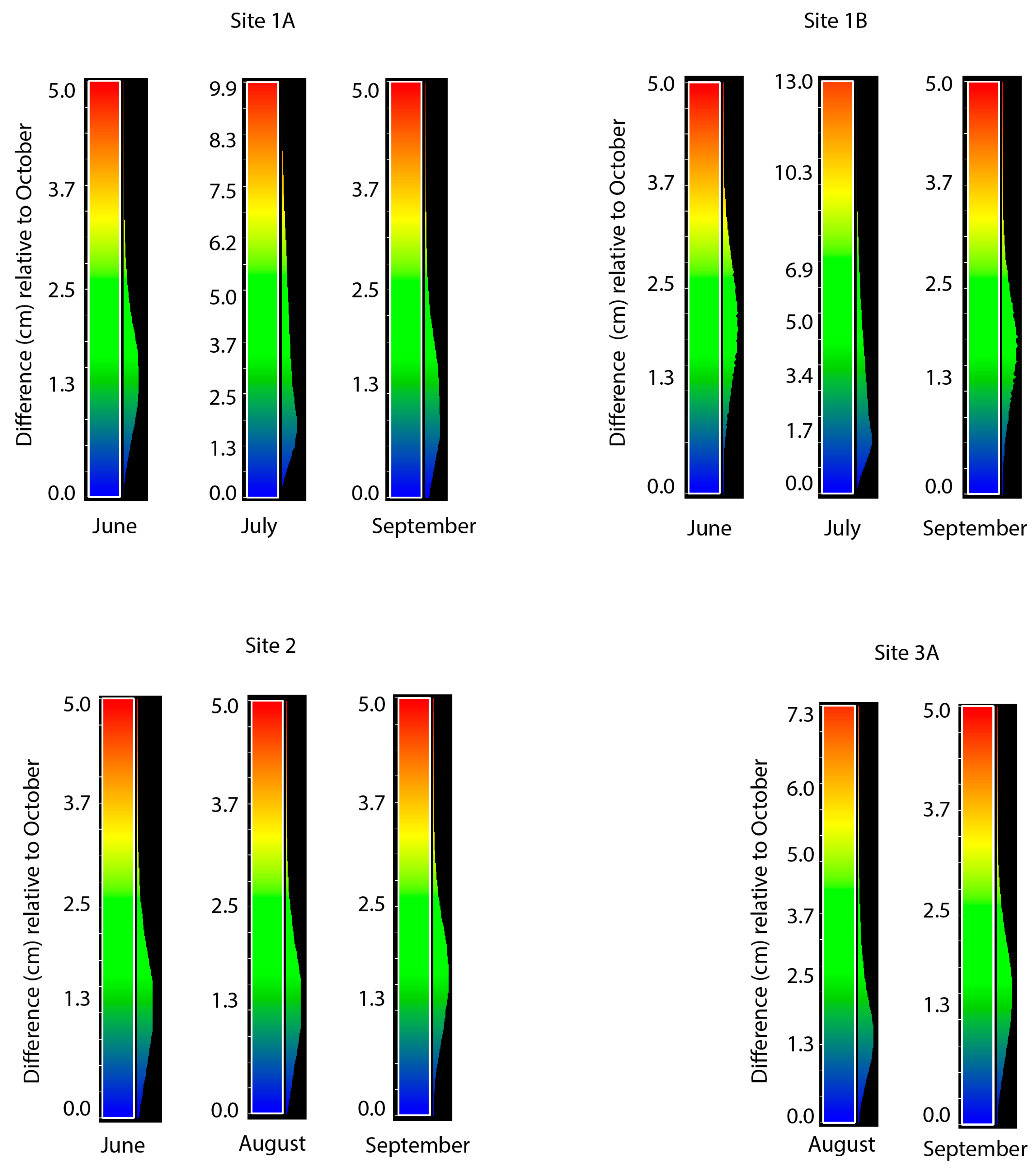

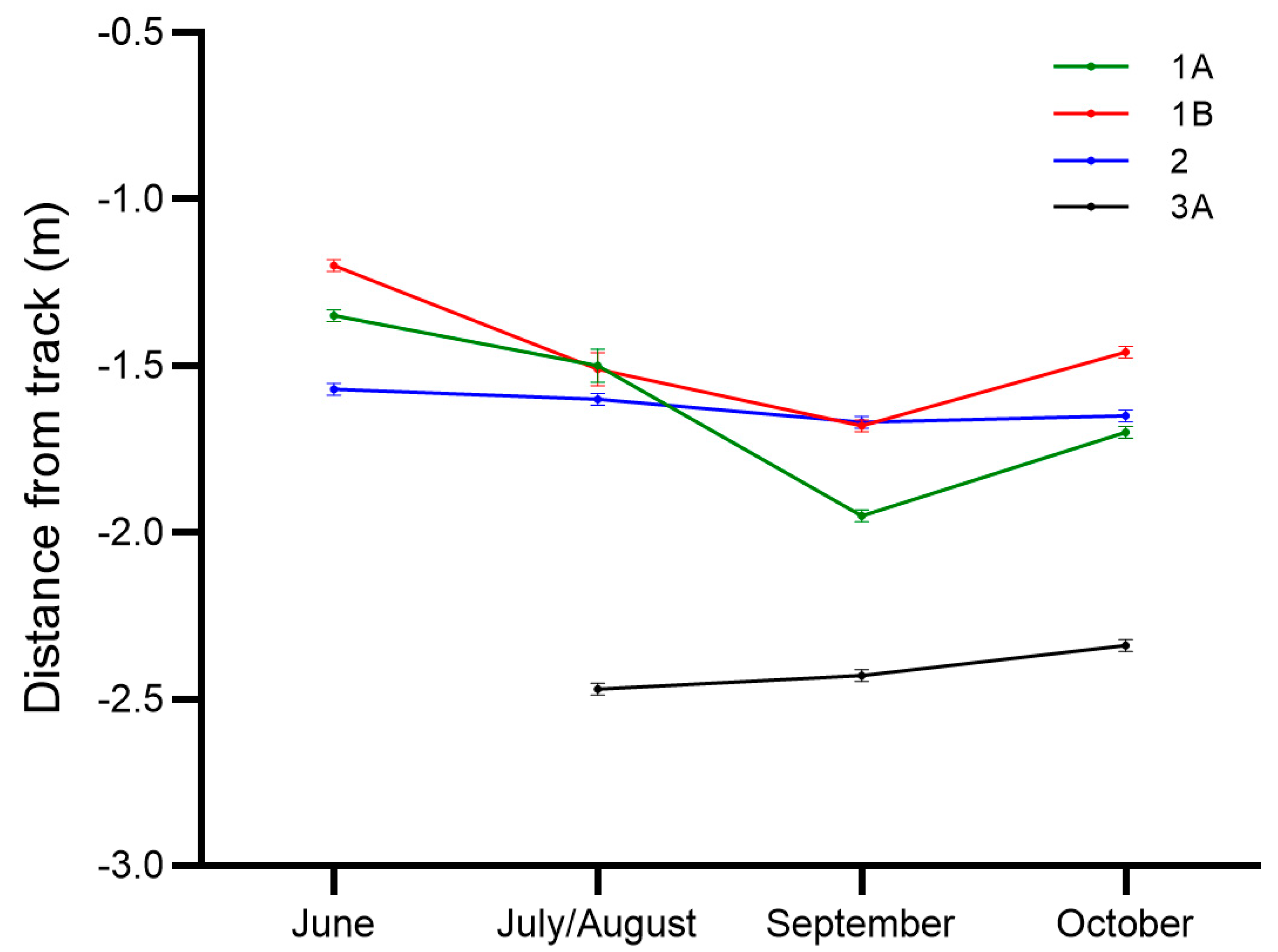

3.2. Water Area and Water Level

3.2.1. UAS Orthomosaics and 3D Point Clouds

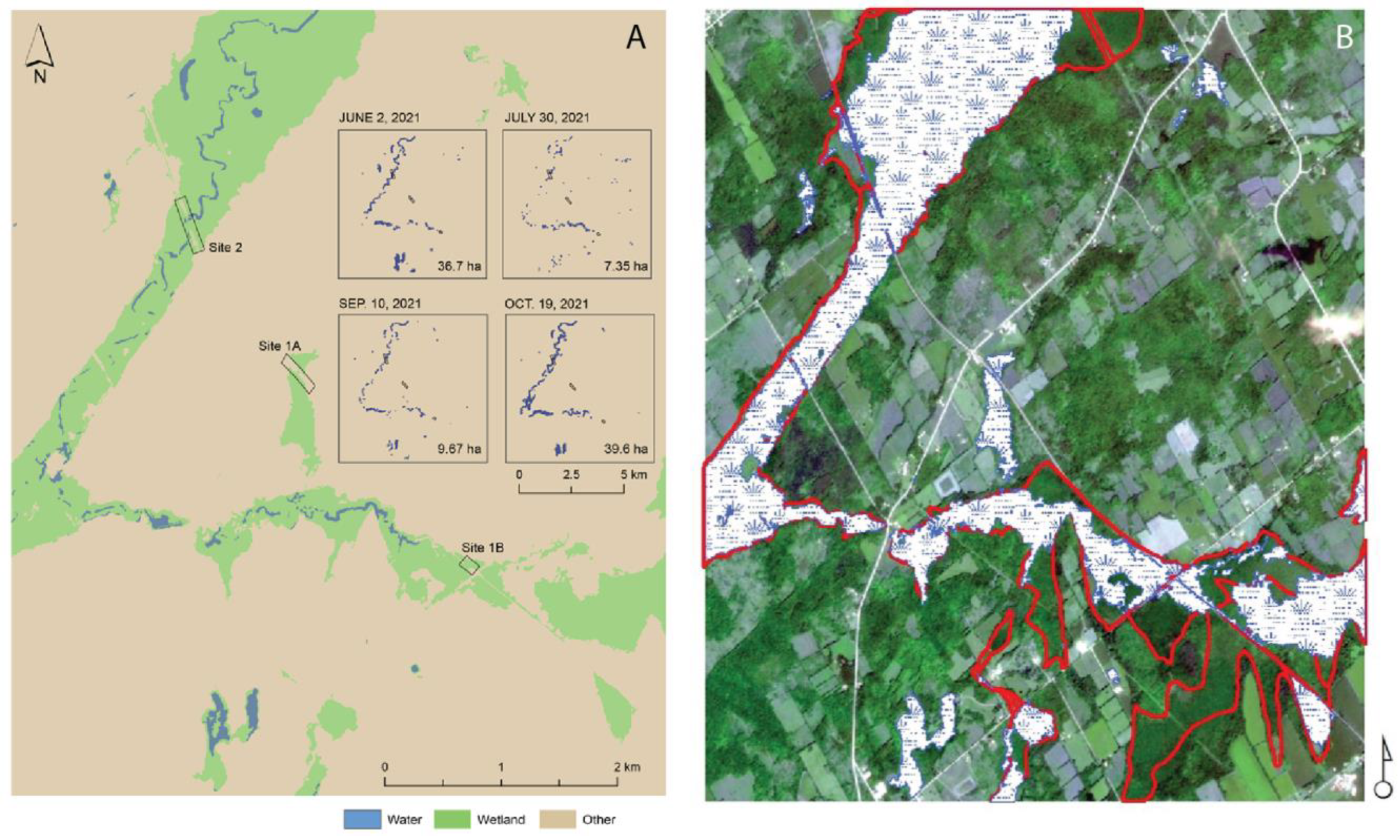

3.2.2. Satellite Image Classification

4. Discussion

5. Conclusions

- Improving the safety of railway operations by providing solutions based on emerging technologies that can identify problematic sources of water that are difficult to detect with current methods.

- Improving the fluidity of train traffic by reducing track downtime, using automated methods as an alternative to time-consuming and labor-intensive visual inspections.

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Trenberth, K.E. Changes in precipitation with climate change. Clim. Res. 2011, 47, 123–138.

- Nemry, F.O.; Demirel, H. Impacts of Climate Change on Transport: A Focus on Road and Rail Transport Infrastructures; European Commission: Brussels, Belgium, 2012.

- Palin, E.J.; Stipanovic Oslakovic, I.; Gavin, K.; Quinn, A. Implications of climate change for railway infrastructure. WIREs Clim. Change 2021, 12, e728.

- Hansen, J.; Sato, M.; Ruedy, R.; Lo, K.; Lea, D.W.; Medina-Elizade, M. Global temperature change. Proc. Natl. Acad. Sci. USA 2006, 103, 14288–14293.

- Meehl, G.A.; Arblaster, J.M.; Tebaldi, C. Understanding future patterns of increased precipitation intensity in climate model simulations. Geophys. Res. Lett. 2005, 32, L18719.

- Fischer, E.M.; Knutti, R. Anthropogenic contribution to global occurrence of heavy-precipitation and high-temperature extremes. Nat. Clim. Change 2015, 5, 560–564.

- Rahmstorf, S. Rising hazard of storm-surge flooding. Proc. Natl. Acad. Sci. USA 2017, 114, 11806–11808.

- Roghani, A.; Mammeri, A.; Siddiqui, A.J.; Abdulrazagh, P.H.; Hendry, M.T.; Pulisci, R.M.; Canadian Rail Research, L. Using emerging technologies for monitoring surface water near railway tracks. In Proceedings of the Canadian & Cold Regions Rail Research Conference 2021 (CCRC 2021), Virtual Event, 9 November 2021; pp. 106–114.

- Esmaeeli, N.; Sattari, F.; Lefsrud, L.; Macciotta, R. Critical Analysis of Train Derailments in Canada through Process Safety Techniques and Insights into Enhanced Safety Management Systems. Transp. Res. Rec. 2022, 2676, 603–625.

- Transportation Safety Board of Canada. Railway Investigation Report R09H0006. 2009. Available online: https://www.tsb-bst.gc.ca/eng/rapports-reports/rail/2009/r09h0006/r09h0006.html (accessed on 10 March 2023).

- Pickens, A.H.; Hansen, M.C.; Stehman, S.V.; Tyukavina, A.; Potapov, P.; Zalles, V.; Higgins, J. Global seasonal dynamics of inland open water and ice. Remote Sens. Environ. 2022, 272, 112963.

- Cooley, S.W.; Smith, L.C.; Ryan, J.C.; Pitcher, L.H.; Pavelsky, T.M. Arctic-Boreal Lake Dynamics Revealed Using CubeSat Imagery. Geophys. Res. Lett. 2019, 46, 2111–2120.

- Kalacska, M.; Chmura, G.L.; Lucanus, O.; Bérubé, D.; Arroyo-Mora, J.P. Structure from motion will revolutionize analyses of tidal wetland landscapes. Remote Sens. Environ. 2017, 199, 14–24.

- Kalacska, M.; Lucanus, O.; Arroyo-Mora, J.P.; Laliberté, É.; Elmer, K.; Leblanc, G.; Groves, A. Accuracy of 3D Landscape Reconstruction without Ground Control Points Using Different UAS Platforms. Drones 2020, 4, 13.

- Cadieux, N. Shapefile to DJI Pilot KML Conversion Tool. 2023. Available online: https://borealisdata.ca/dataset.xhtml?persistentId=doi:10.5683/SP3/W1QMQ9 (accessed on 10 March 2023).

- DJI. A3-Pro User Manual; DJI: Shenzhen, China, 2016. Available online: https://dl.djicdn.com/downloads/a3/en/A3_and_A3_Pro_User_Manual_en_160520.pdf (accessed on 15 May 2022).

- Kalacska, M.; Arroyo-Mora, J.P.; Lucanus, O. Comparing UAS LiDAR and Structure-from-Motion Photogrammetry for Peatland Mapping and Virtual Reality (VR) Visualization. Drones 2021, 5, 36.

- Gómez-Gutiérrez, Á.; Sanjosé-Blasco, J.J.; Lozano-Parra, J.; Berenguer-Sempere, F.; de Matías-Bejarano, J. Does HDR Pre-Processing Improve the Accuracy of 3D Models Obtained by Means of two Conventional SfM-MVS Software Packages? The Case of the Corral del Veleta Rock Glacier. Remote Sens. 2015, 7, 10269–10294.

- Seitz, S.M.; Curless, B.; Diebel, J.; Scharstein, D.; Szeliski, R. A Comparison and Evaluation of Multi-View Stereo Reconstruction Algorithms. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, New York, NY, USA, 17–22 June 2006.

- Frazier, A.E.; Hemingway, B.L. A Technical Review of Planet Smallsat Data: Practical Considerations for Processing and Using PlanetScope Imagery. Remote Sens. 2021, 13, 3930.

- Hay, G.J.; Castilla, G. Geographic Object-Based Image Analysis (GEOBIA): A new name for a new discipline. In Geographic Object-Based Image Analysis (GEOBIA): A New Name for a New Discipline; Blaschke, T., Lang, S., Hay, G.J., Eds.; Object-Based Image Analysis; Lecture Notes in Geoinformation and Cartography; Springer: Berlin/Heidelberg, Germany, 2008.

- Karlson, M.; Reese, H.; Ostwald, M. Tree Crown Mapping in Managed Woodlands (Parklands) of Semi-Arid West Africa Using WorldView-2 Imagery and Geographic Object Based Image Analysis. Sensors 2014, 14, 22643–22669.

- Kim, M.; Warner, T.; Madden, M.; Atkinson, D. Multi-scale GEOBIA with very high spatial resolution digital aerial imagery: Scale, texture and image objects. Int. J. Remote Sens. 2011, 32, 2825–2850.

- Đurić, N.; Pehani, P.; Oštir, K. Application of In-Segment Multiple Sampling in Object-Based Classification. Remote Sens. 2014, 6, 12138–12165.

- Lucanus, O.; Kalacska, M.; Arroyo-Mora, J.P.; Sousa, L.; Carvalho, L.N. Before and After: A Multiscale Remote Sensing Assessment of the Sinop Dam, Mato Grosso, Brazil. Earth 2021, 2, 303–330.

- National Wetlands Working Group. In Wetlands of Canada. Ecological Land Classification Series; Sustainable Development Branch, Environment Canada: Ottawa, ON, Canada, 1988; p. 452.

- Toriumi, F.Y.; Bittencourt, T.N.; Futai, M.M. UAV-based inspection of bridge and tunnel structures: An application review. Rev. IBRACON De Estrut. E Mater. 2023, 16, e16103.

- Yoon, H.; Shin, J.; Spencer, B.F., Jr. Structural Displacement Measurement Using an Unmanned Aerial System. Comput.-Aided Civ. Infrastruct. Eng. 2018, 33, 183–192.

- Máthé, K.; Buşoniu, L. Vision and Control for UAVs: A Survey of General Methods and of Inexpensive Platforms for Infrastructure Inspection. Sensors 2015, 15, 14887–14916.

- Whiteside, T.G.; Bartolo, R.E. Mapping Aquatic Vegetation in a Tropical Wetland Using High Spatial Resolution Multispectral Satellite Imagery. Remote Sens. 2015, 7, 11664–11694.

- Gao, Y.; Hu, Z.; Wang, Z.; Shi, Q.; Chen, D.; Wu, S.; Gao, Y.; Zhang, Y. Phenology Metrics for Vegetation Type Classification in Estuarine Wetlands Using Satellite Imagery. Sustainability 2023, 15, 1373.

- Knisely, T. Just How Weather Resistant is the Matrice 300 RTK? Available online: https://enterprise-insights.dji.com/blog/matrice-300-weather-resistance (accessed on 10 July 2021).

- Transportation Safety Board of Canada. Rail Transportation Safety Investigation Report R18W0237. 2018; p. 52. Available online: https://www.bst-tsb.gc.ca/eng/rapports-reports/rail/2018/r18w0237/r18w0237.html (accessed on 10 March 2023).

- Neumayer, M.; Teschemacher, S.; Schloemer, S.; Zahner, V.; Rieger, W. Hydraulic Modeling of Beaver Dams and Evaluation of Their Impacts on Flood Events. Water 2020, 12, 300.

- Zhang, W.; Hu, B.; Brown, G.; Meyer, S. Beaver pond identification from multi-temporal and multi-sourced remote sensing data. Geo-Spat. Inf. Sci. 2023, 1–15.

- European Commission. Inspection Drones for Ensuring Safety in Transport Infrastructures. Available online: https://cordis.europa.eu/project/id/861111 (accessed on 20 July 2023).

- Fakhraian, E.; Aghezzaf, E.-H.; Semanjski, S.; Semanjski, I. Overview of European Union Guidelines and Regulatory Framework for Drones in Aviation in the Context of the Introduction of Automatic and Autonomous Flight Operations in Urban Air Mobility. In Proceedings of the DATA ANALYTICS 2022: The Eleventh International Conference on Data Analytics, Valencia, Spain, 13–17 November 2022; pp. 1–7.

- Fang, S.X.; O’Young, S.; Rolland, L. Development of Small UAS Beyond-Visual-Line-of-Sight (BVLOS) Flight Operations: System Requirements and Procedures. Drones 2018, 2, 13.

| Date | Sampling Period | Sites | Acquisition Mode | System | Base Station | Altitude (m) |
|---|---|---|---|---|---|---|
| June 2 | 1 | 1A, 1B, 2 | Smart oblique | M300 + P1 | RS2 & Smartnet NTRIP | 100 |
| July 30 | 2 | 1A, 1B | Double grid | M600P + X5 | DRTK1 * | 50 |
| August 6 | 2 | 2, 3A | Smart oblique | M300 + P1 | DRTK2, RS2 & Smartnet NTRIP | 80 |
| September 9 | 3 | 1A, 1B | Smart oblique | M300 + P1 | DRTK2, RS2 & Smartnet NTRIP | 80 |
| September 10 | 3 | 2, 3A | Smart oblique | M300 + P1 | DRTK2, RS2 & Smartnet NTRIP | 80 |
| October 19 | 4 | 1A, 1B, 2, 3A | Smart oblique | M300 + P1 | DRTK2, RS2 & Smartnet NTRIP | 80 |
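The flight table is ordered by date; reading it by site instead makes the revisit pattern easier to see (which sampling periods each site was flown in, and with which platform). The sketch below is a minimal Python illustration under that framing; the `FLIGHTS` list and the `visits_by_site` helper are hypothetical names, and the rows are simply transcribed from the table (base station and acquisition mode omitted).

```python
from collections import defaultdict

# Flight log transcribed from the acquisition table above:
# (date, sampling period, sites flown, platform + sensor, altitude in m).
FLIGHTS = [
    ("June 2", 1, ["1A", "1B", "2"], "M300 + P1", 100),
    ("July 30", 2, ["1A", "1B"], "M600P + X5", 50),
    ("August 6", 2, ["2", "3A"], "M300 + P1", 80),
    ("September 9", 3, ["1A", "1B"], "M300 + P1", 80),
    ("September 10", 3, ["2", "3A"], "M300 + P1", 80),
    ("October 19", 4, ["1A", "1B", "2", "3A"], "M300 + P1", 80),
]


def visits_by_site(flights):
    """Invert the date-ordered log into {site: [(period, date, system, altitude), ...]}."""
    visits = defaultdict(list)
    for date, period, sites, system, altitude in flights:
        for site in sites:
            visits[site].append((period, date, system, altitude))
    return dict(visits)


if __name__ == "__main__":
    for site, rows in sorted(visits_by_site(FLIGHTS).items()):
        summary = ", ".join(f"P{p} {d} ({s}, {a} m)" for p, d, s, a in rows)
        print(f"Site {site}: {summary}")
```

Run as a script, this lists four visits (one per sampling period) for Sites 1A, 1B and 2, and three for Site 3A, which was first flown in period 2. It also shows that the July 30 visits to Sites 1A and 1B are the only ones flown with the M600P + X5 at 50 m.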

| | Infrastructure Reference | Vegetation Reference | Water Reference | User’s Accuracy (%) |
|---|---|---|---|---|
| Infrastructure classification | 22 | 0 | 0 | 100 |
| Vegetation classification | 0 | 84 | 0 | 100 |
| Water classification | 0 | 5 | 33 | 86.8 |
| Producer’s accuracy (%) | 100 | 94.3 | 100 | OA (%) = 96.5 |

| | Wetland Reference | Other Reference | User’s Accuracy (%) |
|---|---|---|---|
| Wetland classification | 52 | 1 | 98.1 |
| Other classification | 23 | 81 | 77.8 |
| Producer’s accuracy (%) | 69.3 | 98.7 | OA (%) = 84.7 |
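Both accuracy tables follow the usual confusion-matrix convention: rows are the classification, columns the reference, user's accuracy is the diagonal count divided by the row total, producer's accuracy is the diagonal count divided by the column total, and overall accuracy (OA) is the diagonal sum divided by the grand total. The sketch below recomputes these figures in plain Python; `accuracy_report` and the matrix variable names are hypothetical, and the counts are copied from the two tables above.

```python
def accuracy_report(matrix, labels):
    """Per-class user's/producer's accuracy and overall accuracy, all in percent.

    matrix[i][j] counts samples classified as labels[i] whose reference class is
    labels[j] (rows = classification, columns = reference).
    """
    n = len(labels)
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[k][k] for k in range(n))
    # User's accuracy: correct / row total (reliability of each mapped class).
    users = {labels[i]: 100.0 * matrix[i][i] / sum(matrix[i]) for i in range(n)}
    # Producer's accuracy: correct / column total (share of each reference class recovered).
    producers = {
        labels[j]: 100.0 * matrix[j][j] / sum(matrix[i][j] for i in range(n))
        for j in range(n)
    }
    return users, producers, 100.0 * correct / total


THREE_CLASS_LABELS = ["Infrastructure", "Vegetation", "Water"]
THREE_CLASS_MATRIX = [[22, 0, 0], [0, 84, 0], [0, 5, 33]]

WETLAND_LABELS = ["Wetland", "Other"]
WETLAND_MATRIX = [[52, 1], [23, 81]]

if __name__ == "__main__":
    for name, m, labels in [
        ("Infrastructure/vegetation/water", THREE_CLASS_MATRIX, THREE_CLASS_LABELS),
        ("Wetland/other", WETLAND_MATRIX, WETLAND_LABELS),
    ]:
        users, producers, oa = accuracy_report(m, labels)
        print(name)
        print("  user's:    ", {k: round(v, 1) for k, v in users.items()})
        print("  producer's:", {k: round(v, 1) for k, v in producers.items()})
        print(f"  OA: {oa:.1f}")
```

The recomputed OA values (96.5 and 84.7) and most per-class values match the tables. Three values come out 0.1 higher when rounded (94.4, 77.9 and 98.8 against the listed 94.3, 77.8 and 98.7; for example 84/89 = 94.38%), which suggests the tables truncate rather than round; the difference does not change any interpretation.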

| | No. Photos | Area Orthomosaic (ha) | Total No. Points, 3D Cloud (millions) | Avg. Point Density (pts/m²) | Point Cloud File Size (GB) |
|---|---|---|---|---|---|
| Site 1A | | | | | |
| 2 June 2021 | 659 | 3.11 | 53.8 | 1664 | 1.37 |
| 30 July 2021 | 605 | 3.11 | 35.9 | 1140 | 1.19 |
| 9 September 2021 | 1017 | 3.11 | 120.0 | 3709 | 3.98 |
| 19 October 2021 | 1019 | 3.11 | 115.6 | 3574 | 4.74 |
| Site 1B | | | | | |
| 2 June 2021 | 360 | 1.44 | 26.9 | 1786 | 0.89 |
| 30 July 2021 | 405 | 1.44 | 16.7 | 1106 | 0.55 |
| 9 September 2021 | 485 | 1.44 | 37.7 | 2501 | 1.25 |
| 19 October 2021 | 483 | 1.44 | 37.6 | 2495 | 1.25 |
| Site 2 | | | | | |
| 2 June 2021 | 908 | 4.88 | 95.5 | 1900 | 3.92 |
| 6 August 2021 | 1262 | 4.88 | 119.7 | 2381 | 4.91 |
| 10 September 2021 | 1576 | 4.88 | 136.9 | 2722 | 5.61 |
| 19 October 2021 | 1499 | 4.88 | 135.1 | 2687 | 4.75 |
| Site 3A | | | | | |
| 6 August 2021 | 529 | 1.79 | 33.2 | 1781 | 1.10 |
| 10 September 2021 | 590 | 1.79 | 45.1 | 2418 | 1.50 |
| 19 October 2021 | 589 | 1.79 | 50.8 | 2724 | 2.08 |
| Total | 11,986 | 43.09 | | | 39.09 |
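The Total row only fills three columns, so it is worth checking which columns it sums. The minimal sketch below (hypothetical `ROWS`, `column_totals` and `photos_by_site` names; values transcribed from the table, point density omitted) adds up the photo count, orthomosaic area and point cloud file size over all flights and tallies photos per site.

```python
from collections import defaultdict

# (site, date, photos, orthomosaic area in ha, points in millions, file size in GB),
# transcribed from the UAS data table above.
ROWS = [
    ("1A", "2 June 2021", 659, 3.11, 53.8, 1.37),
    ("1A", "30 July 2021", 605, 3.11, 35.9, 1.19),
    ("1A", "9 September 2021", 1017, 3.11, 120.0, 3.98),
    ("1A", "19 October 2021", 1019, 3.11, 115.6, 4.74),
    ("1B", "2 June 2021", 360, 1.44, 26.9, 0.89),
    ("1B", "30 July 2021", 405, 1.44, 16.7, 0.55),
    ("1B", "9 September 2021", 485, 1.44, 37.7, 1.25),
    ("1B", "19 October 2021", 483, 1.44, 37.6, 1.25),
    ("2", "2 June 2021", 908, 4.88, 95.5, 3.92),
    ("2", "6 August 2021", 1262, 4.88, 119.7, 4.91),
    ("2", "10 September 2021", 1576, 4.88, 136.9, 5.61),
    ("2", "19 October 2021", 1499, 4.88, 135.1, 4.75),
    ("3A", "6 August 2021", 529, 1.79, 33.2, 1.10),
    ("3A", "10 September 2021", 590, 1.79, 45.1, 1.50),
    ("3A", "19 October 2021", 589, 1.79, 50.8, 2.08),
]


def column_totals(rows):
    """Sum photos, orthomosaic area (ha) and file size (GB) over every flight."""
    photos = sum(r[2] for r in rows)
    area_ha = round(sum(r[3] for r in rows), 2)
    size_gb = round(sum(r[5] for r in rows), 2)
    return photos, area_ha, size_gb


def photos_by_site(rows):
    """Number of photos collected per site across all sampling dates."""
    counts = defaultdict(int)
    for site, _date, photos, *_rest in rows:
        counts[site] += photos
    return dict(counts)


if __name__ == "__main__":
    print(column_totals(ROWS))
    print(photos_by_site(ROWS))
```

The sums reproduce the Total row (11,986 photos, 43.09 ha, 39.09 GB), consistent with the row totalling the photo, area and file-size columns while leaving the point count and density columns blank. Note that 43.09 ha counts each site once per flight; the distinct area of the four sites is 3.11 + 1.44 + 4.88 + 1.79 = 11.22 ha.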

| | Site 1A | Site 1B | Site 2 | Site 3A |
|---|---|---|---|---|
| June | 0.15 | 0.25 | 0.79 | - |
| July/August | 0.09 | 0.17 | 0.58 | 0.08 |
| September | 0.02 | 0.20 | 0.56 | 0.09 |
| October | 0.07 | 0.17 | 0.75 | 0.11 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Arroyo-Mora, J.P.; Kalacska, M.; Roghani, A.; Lucanus, O. Assessment of UAS Photogrammetry and Planet Imagery for Monitoring Water Levels around Railway Tracks. Drones 2023, 7, 553. https://doi.org/10.3390/drones7090553

Arroyo-Mora JP, Kalacska M, Roghani A, Lucanus O. Assessment of UAS Photogrammetry and Planet Imagery for Monitoring Water Levels around Railway Tracks. Drones. 2023; 7(9):553. https://doi.org/10.3390/drones7090553

Chicago/Turabian Style: Arroyo-Mora, Juan Pablo, Margaret Kalacska, Alireza Roghani, and Oliver Lucanus. 2023. "Assessment of UAS Photogrammetry and Planet Imagery for Monitoring Water Levels around Railway Tracks." Drones 7, no. 9: 553. https://doi.org/10.3390/drones7090553

APA Style: Arroyo-Mora, J. P., Kalacska, M., Roghani, A., & Lucanus, O. (2023). Assessment of UAS Photogrammetry and Planet Imagery for Monitoring Water Levels around Railway Tracks. Drones, 7(9), 553. https://doi.org/10.3390/drones7090553