Stockpile Volume Estimation in Open and Confined Environments: A Review

Abstract

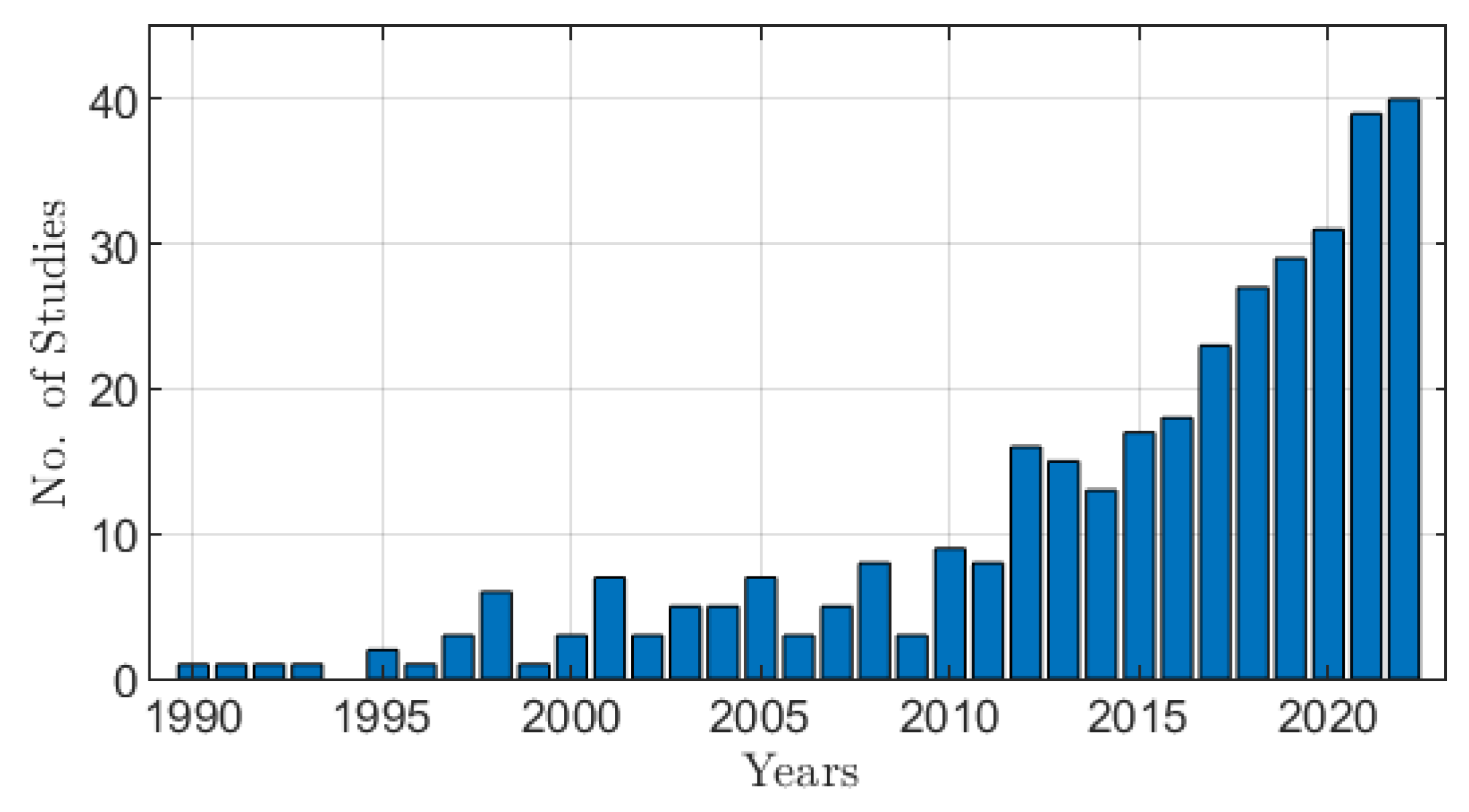

1. Introduction

1.1. Background

1.2. Research Approach

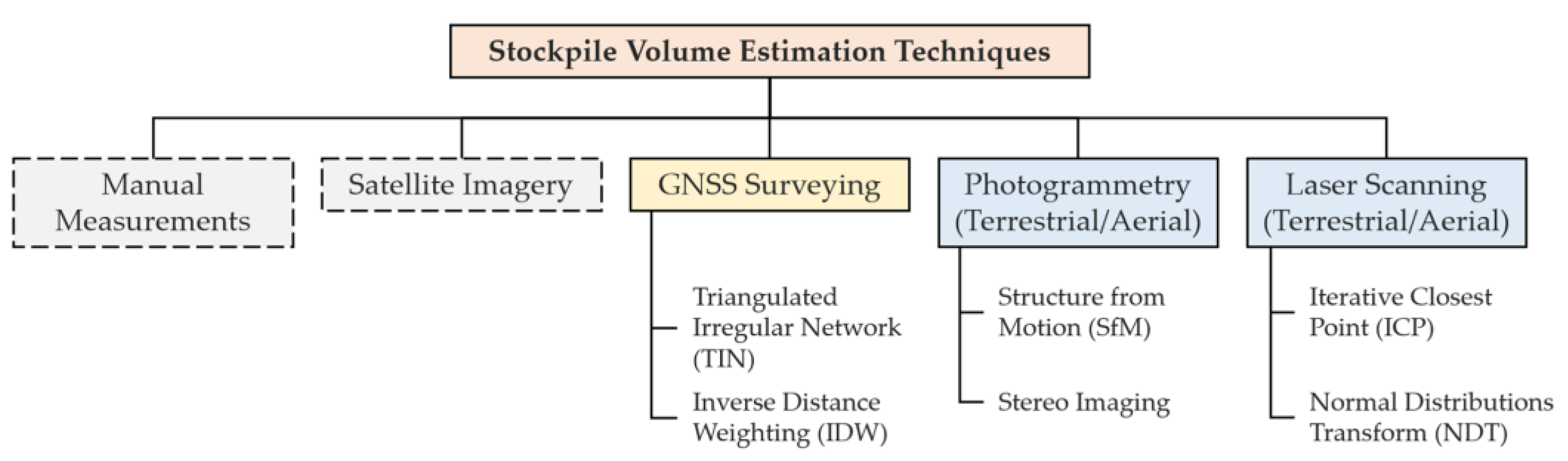

2. Techniques for Estimating Stockpile Volumes

2.1. Overview

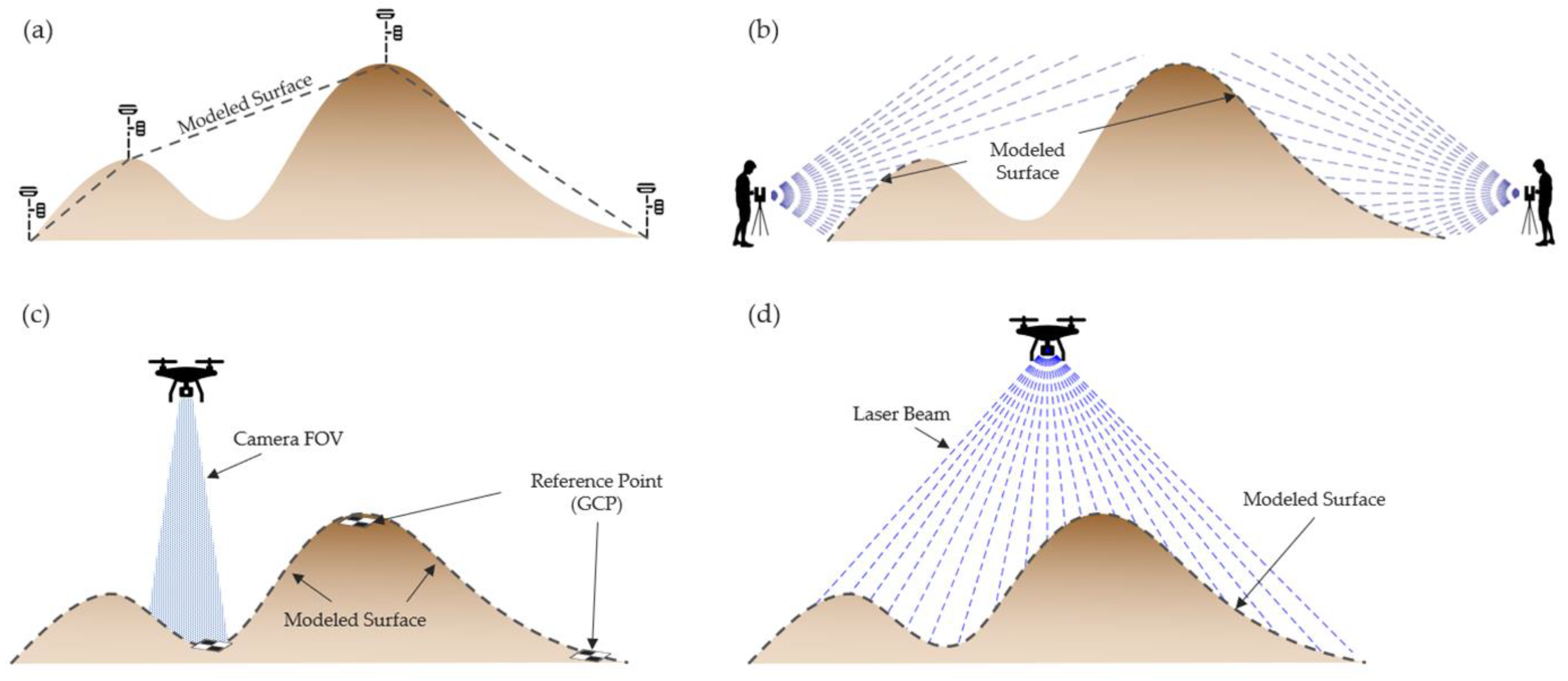

2.2. Manual Measurements

2.3. Satellite Imagery

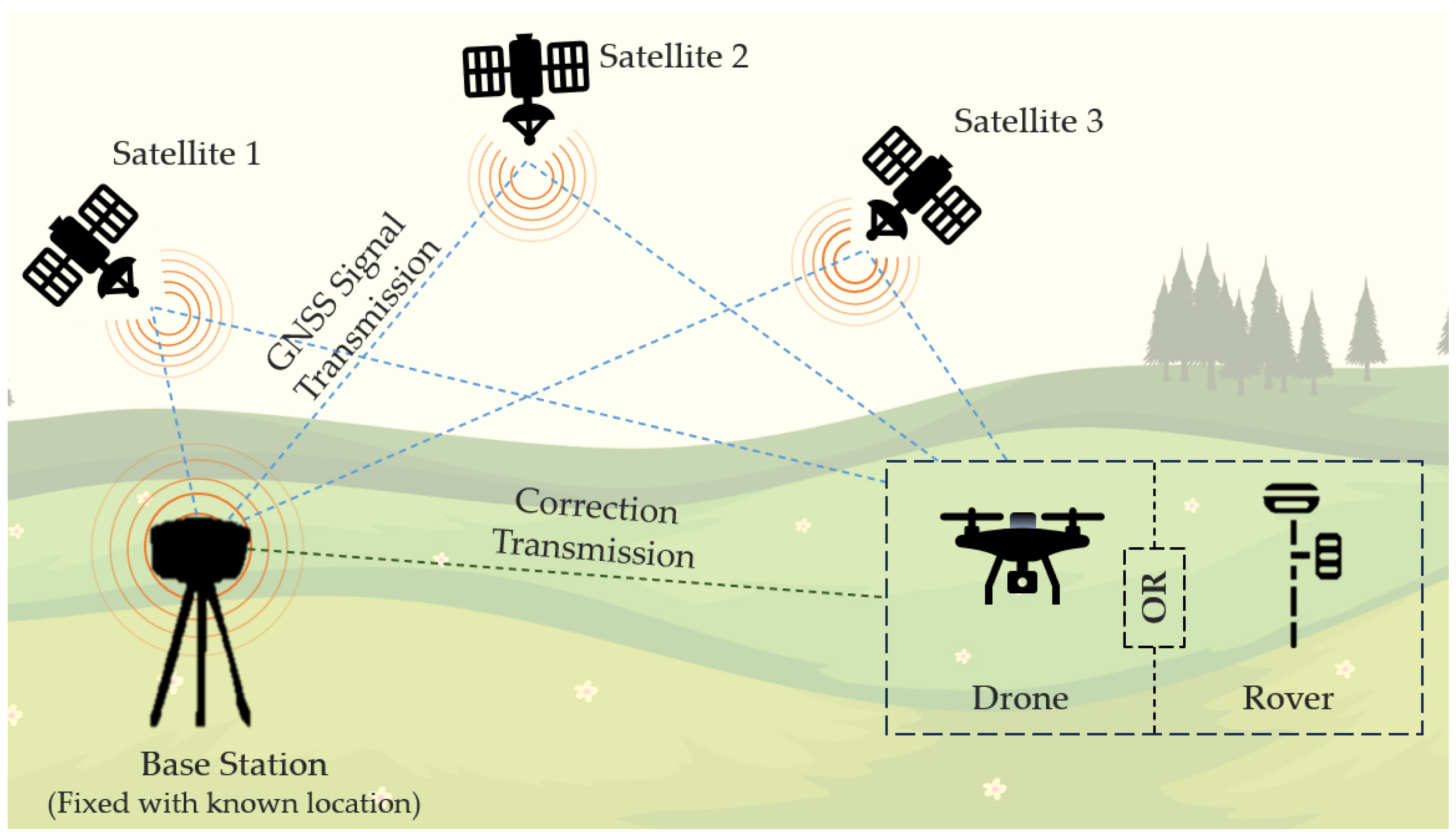

2.4. GNSS Surveying

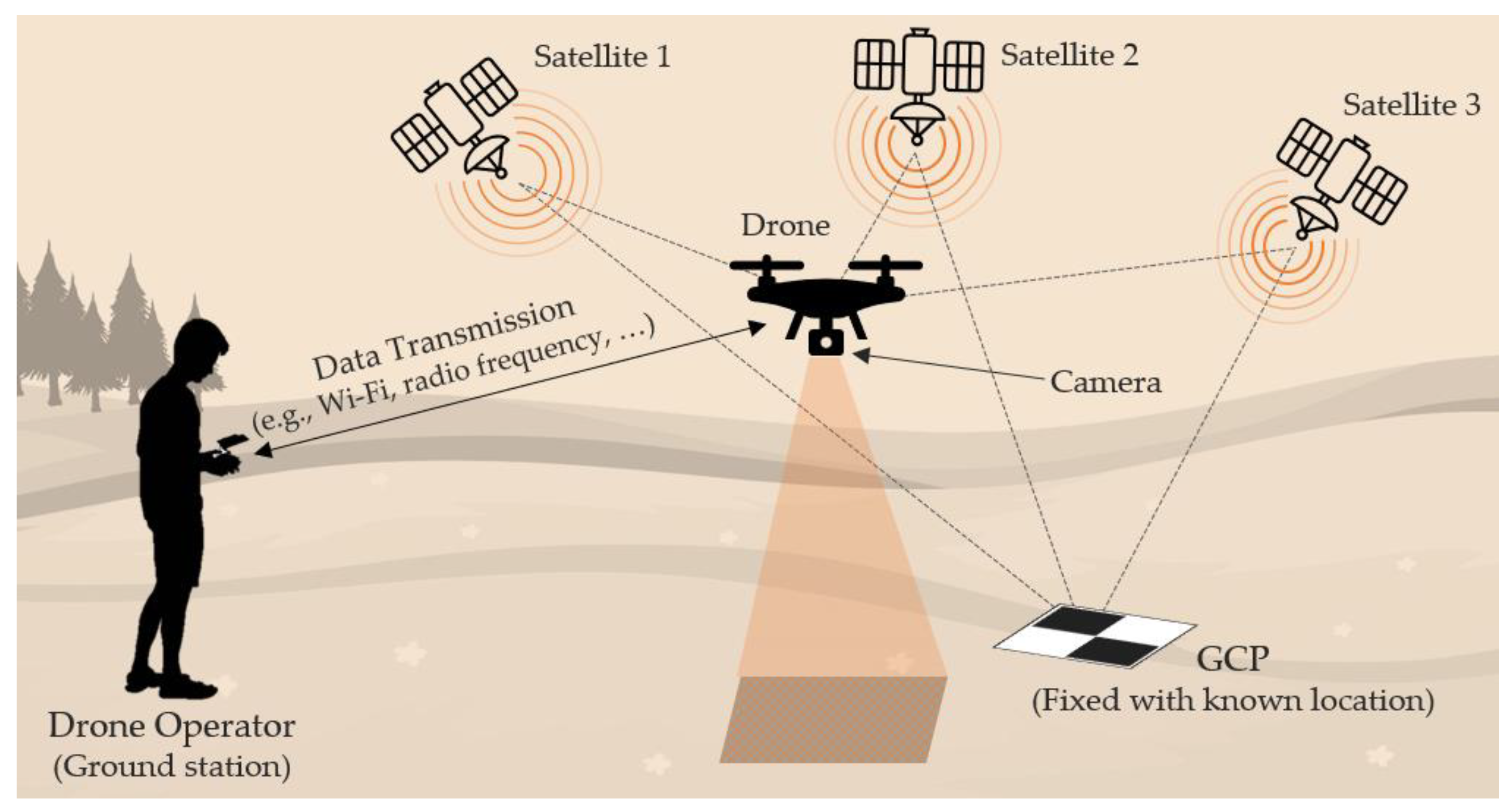

2.5. Photogrammetry Surveying

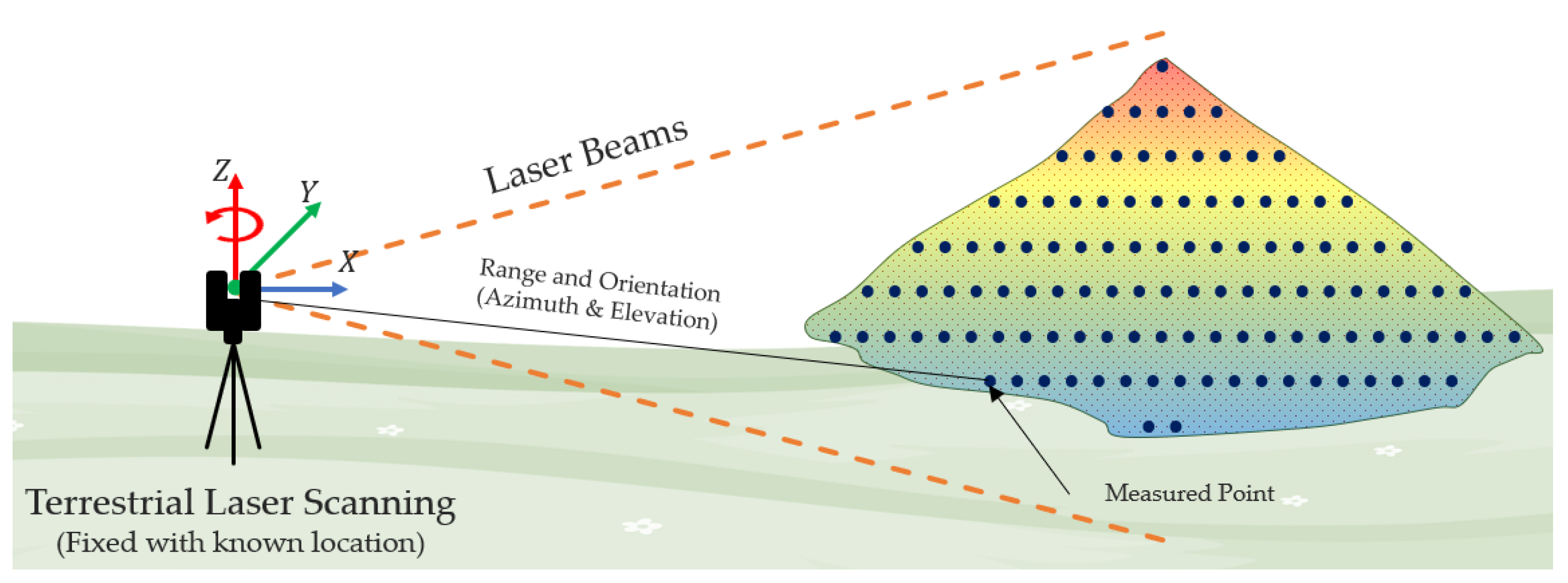

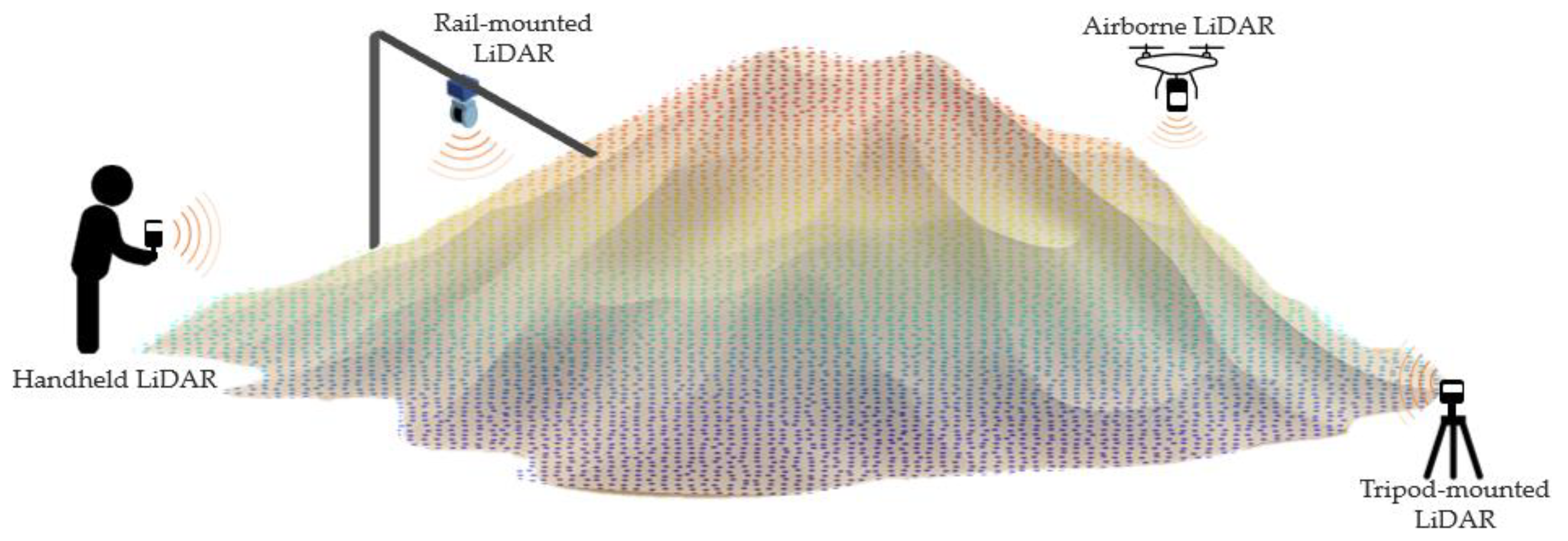

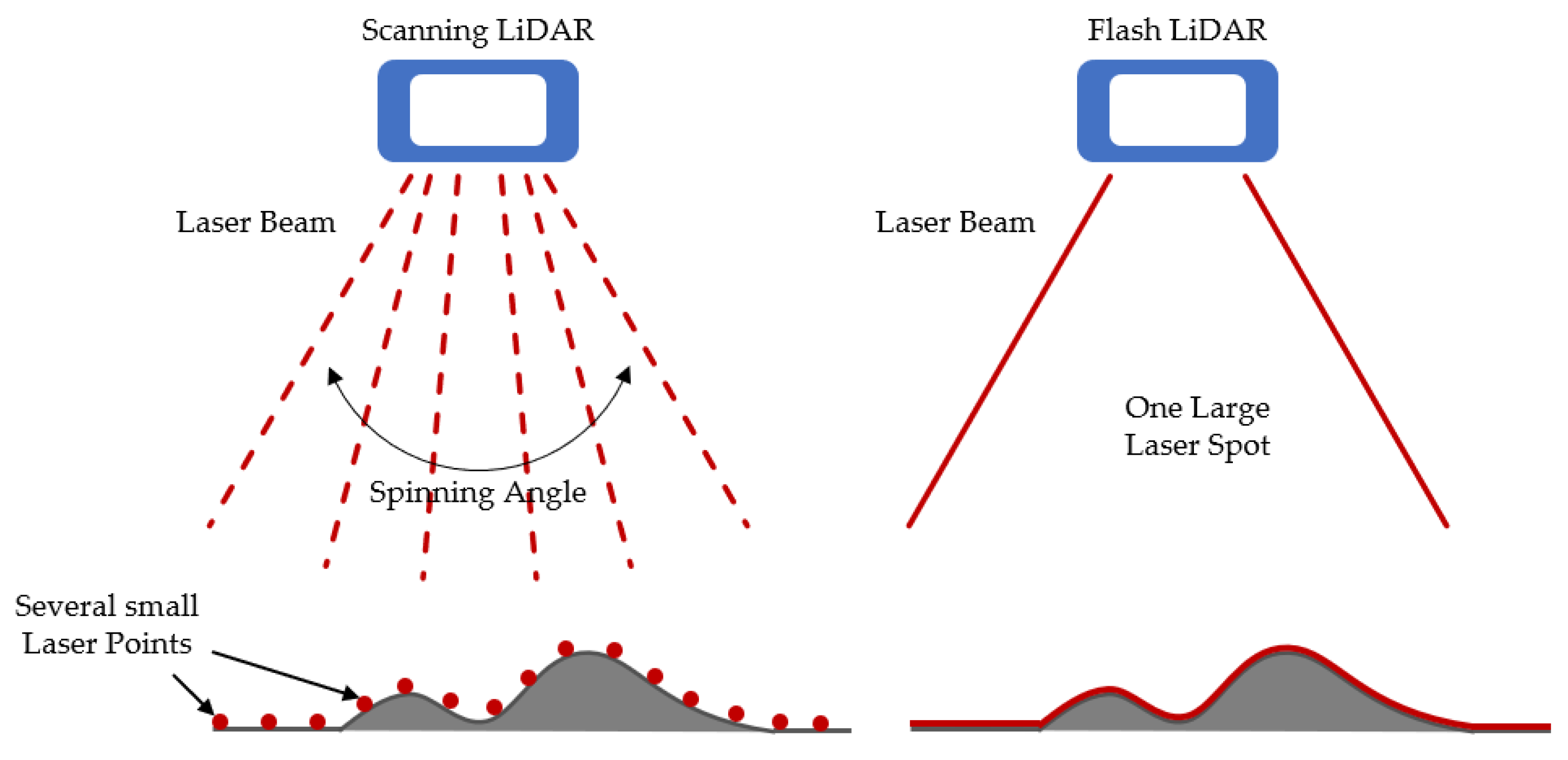

2.6. Terrestrial Laser Scanning (TLS) and Airborne LiDARs

3. Outdoor Stockpile Volume Estimation

3.1. Overview

3.2. Drone Photogrammetry

| Application | Surveyed Area [m²] | Country | Drone/Platform | Image-Processing Software | Reference Volume [m³] | Volumetric Error [%] | Ground Truth | Reference |
|---|---|---|---|---|---|---|---|---|
| Open sand quarry | ≈145,000 | Italy | DJI Phantom 4 | Agisoft Metashape | ≈45,000 | - | - | [6] |
| Stockpiles | ≈3000 | Malaysia | DJI Phantom 4 Pro | Agisoft Photoscan | 6441 | 2.6 | Manual measuring | [61] |
| Stockpiles | 1530 | Nigeria | DJI Mavic Air | Agisoft Metashape Pro | 2750 | 2.3 | Mill machine | [56] |
| Foundation pit | 61,000 | China | DJI Phantom 4 RTK | Bentley ContextCapture | - | - | - | [62] |
| Stockpiles | 463 | Estonia | DJI Phantom 4 Pro | Agisoft Photoscan | 722.5 | 1.1 | TLS | [57] |
| Stockpiles | 463 | Estonia | Aibotix Aibot X6 | Agisoft Photoscan | 722.5 | 0.7 | TLS | [57] |
| Stockpiles | 394 | Estonia | DJI Phantom 4 Pro | Agisoft Photoscan | 674 | 2.3 | TLS | [57] |
| Stockpiles | 345 | USA | KongCopter FQ700X8 PRO + lens | Agisoft Metashape | 487.1 | 1.4 | TLS | [58] |
| Stockpiles | 345 | USA | KongCopter FQ700X8 PRO + lens | Bentley ContextCapture | 487.1 | 1.7 | TLS | [58] |
| Stockpiles | 345 | USA | KongCopter FQ700X8 PRO + lens | PixElement | 487.1 | 1.1 | TLS | [58] |
| Earthwork | ≈70,000 | Korea | VTOL fixed wing | Agisoft Photoscan | ≈104,000 | 1.3 | Design drawing | [59] |
| Earthwork | ≈70,000 | Korea | Multi-rotor drone | Agisoft Photoscan | ≈104,000 | 1.3 | Design drawing | [59] |
| Dam | 45,840 | Kurdistan-Iraq | DJI Phantom 4 Pro | Agisoft Photoscan Pro | 352,551 | <0.3 | GNSS-RTK | [60] |
| Stockpiles | 393.9 | - | Custom drone and custom ground robot | Visual-SLAM | 470.2 | 0.3 | Dense reconstruction | [63] |
| Landfill | 53,628 | - | Custom drone and custom ground robot | Visual-SLAM | 250,057 | 0.3 | Dense reconstruction | [63] |
| Landfill | 82,000 | Russia | DJI Phantom 3 Pro | Agisoft Metashape | 406.7 | 0.8 | TLS | [38] |
| Landfill | 82,000 | Russia | X-FLY (specialized) | Agisoft Metashape | 406.7 | 0.9 | TLS | [38] |
| Landfill | 119,000 | Russia | DJI Phantom 3 Pro | DroneDeploy | 358 | 4.9 (no GCP) | TLS | [38] |
| Landfill | 119,000 | Russia | X-FLY (specialized) | DroneDeploy | 358 | 2.1 (no GCP) | TLS | [38] |
| Open pit quarry | ≈20,000 | Malawi | DJI Phantom 3 | Pix4D mapper 2.2 Pro | 265.4 | 2.6 | Mill | [64] |
| Charcoal heaps | 80,000 | Brazil | DJI Phantom 3 | Agisoft Metashape Pro | 1,796,223 | 6.5 | GNSS-RTK | [54] |
| Stockpiles | ≈15,000 | - | DJI Phantom 4 Pro | Agisoft Photomodeler | 63,688 | <0.1 | Mill and GNSS-RTK | [65] |
| Fly ash stockpiles | - | USA | DJI Air 2S | Pix4D Mapper | 0.14 | 53 | Known | [55] |
| Fly ash stockpiles | 1400 | USA | DJI Air 2S | Pix4D Mapper | 1649.1 | 11.9 | Mill | [55] |
| Earthwork | 20,000 | Korea | DJI Inspire 1 | Agisoft Metashape | 147,316.2 | 2.1 | GNSS-RTK | [52] |
| Construction site | 100,000 | Korea | DJI Phantom 4 Pro | Pix4D Mapper | 354,399 | 0.7 | GNSS-RTK | [66] |
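
The volumetric errors in the table above are reported relative to each study's ground-truth volume. Assuming the common unsigned definition e = |V_est - V_ref| / V_ref × 100 (an assumption here, since individual studies may define the metric slightly differently), a reported percentage can be converted back into an absolute deviation in m³, which is often easier to judge for inventory purposes. The minimal sketch below does this for three rows of the table. The function names are hypothetical, and because the sign of the error is not tabulated, it returns both candidate estimates.

```python
def volumetric_error_pct(v_est, v_ref):
    """Unsigned volumetric error as a percentage of the reference volume (assumed definition)."""
    if v_ref <= 0:
        raise ValueError("reference volume must be positive")
    return abs(v_est - v_ref) / v_ref * 100.0


def implied_estimates(v_ref, err_pct):
    """Return the two estimated volumes consistent with an unsigned percentage error."""
    delta = v_ref * err_pct / 100.0
    return v_ref - delta, v_ref + delta


# (reference volume [m3], volumetric error [%], reference) copied from the table above
rows = [
    (6441.0, 2.6, "[61]"),
    (722.5, 1.1, "[57]"),
    (354399.0, 0.7, "[66]"),
]

for v_ref, err, ref in rows:
    low, high = implied_estimates(v_ref, err)
    print(f"{ref}: {err}% of {v_ref:,.1f} m3 -> estimate in {low:,.1f} .. {high:,.1f} m3")
```

For example, the 1.1% error reported in [57] corresponds to roughly ±8 m³ on a 722.5 m³ stockpile, whereas the 0.7% error in [66] corresponds to about ±2480 m³ on a 354,399 m³ site. A small percentage does not necessarily mean a small absolute discrepancy.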

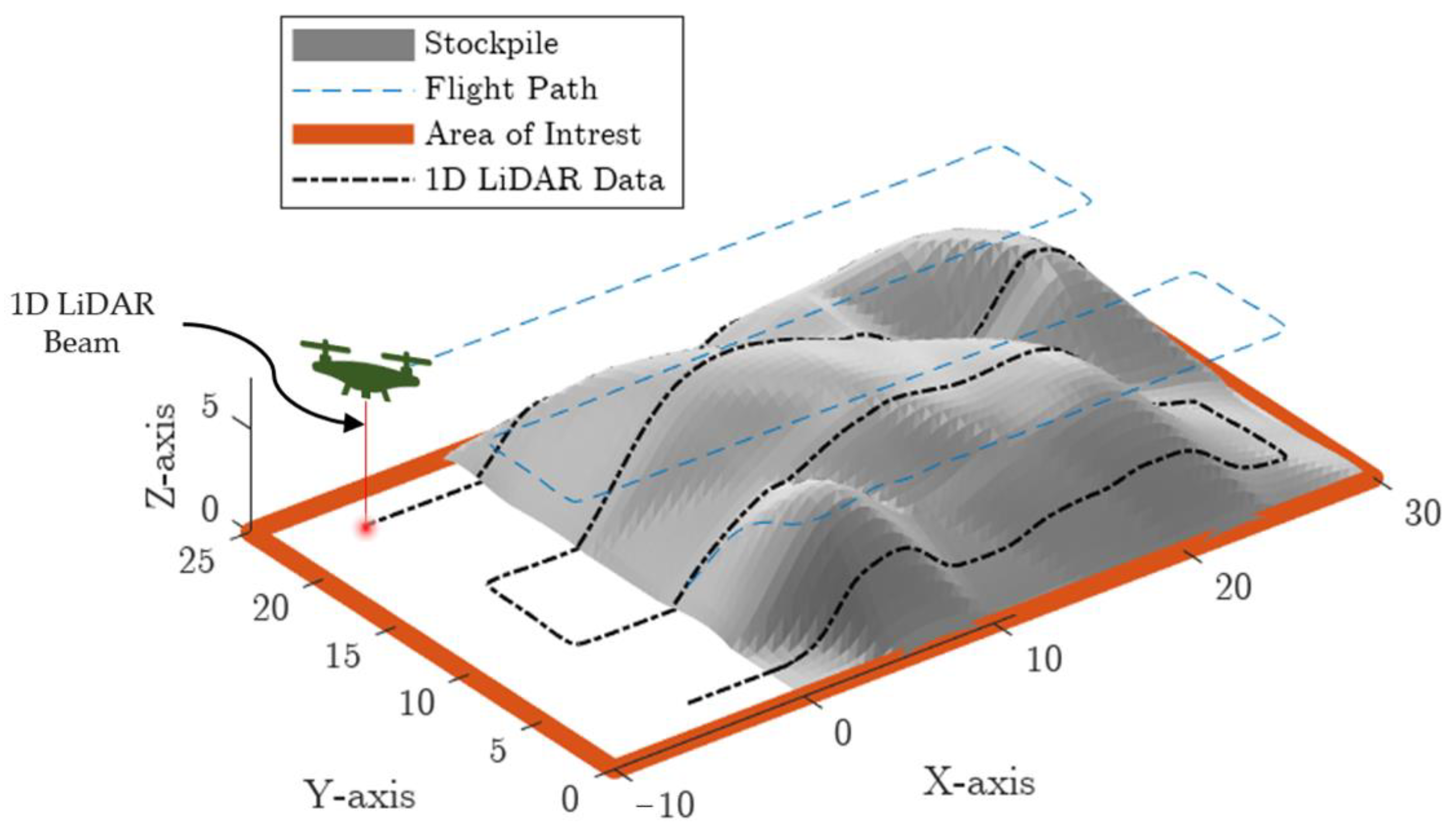

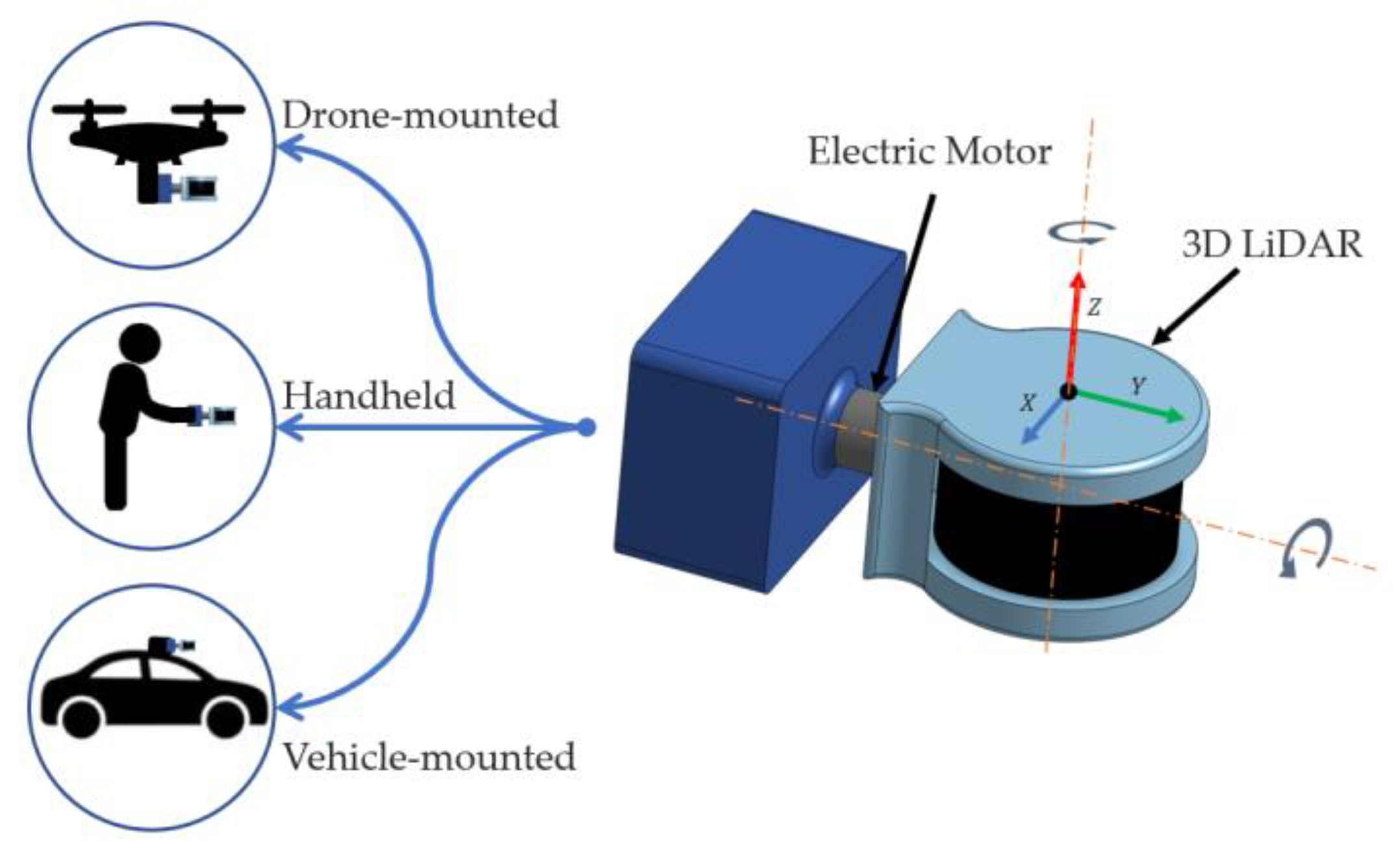

3.3. LiDAR Surveying

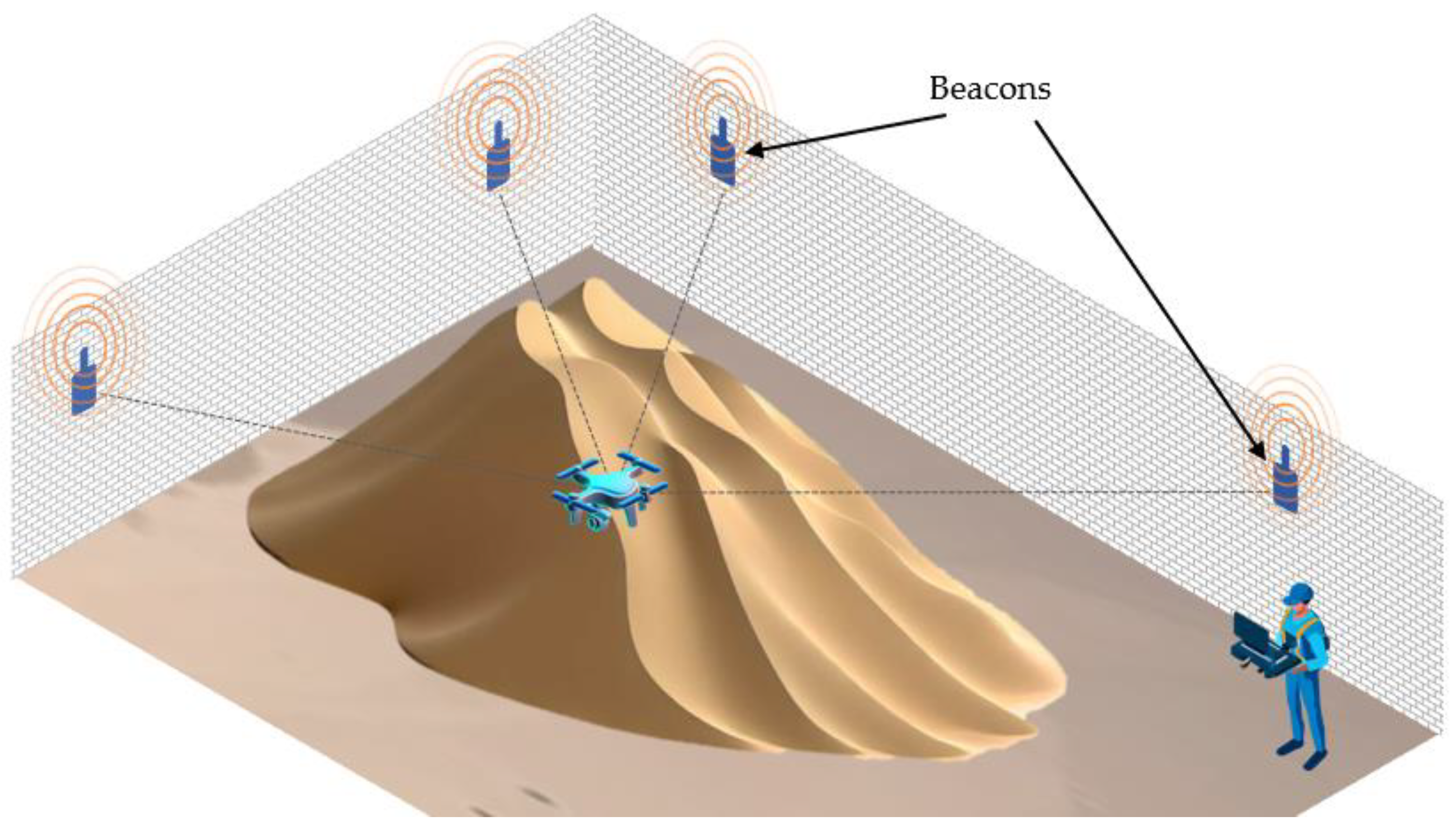

4. Indoor Stockpile Volume Estimation

4.1. Overview

4.2. Challenges to Missions in Confined Spaces

4.3. Present-Day Industrial and Commercial Solutions

4.4. Optimizing Drone Solutions

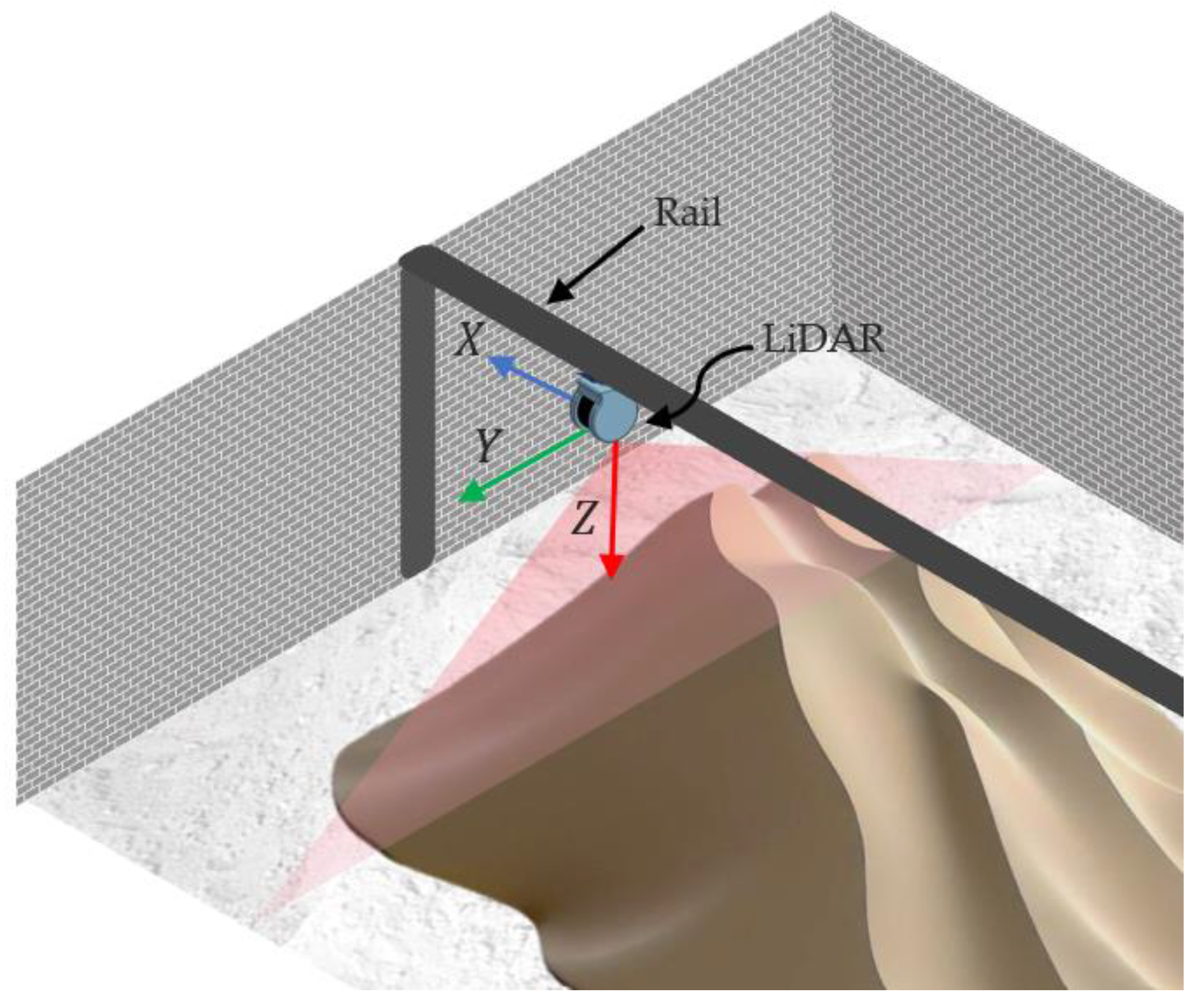

4.5. Sensors Integrated on Rail Platforms

4.6. Tripod-Mounted LiDAR Sensors

4.7. Summary of Indoor Solutions for Stockpile Volume Estimation

5. Discussion and Remarks

5.1. Summary of Techniques

5.2. Photogrammetry- vs. Laser-Based Techniques in Outdoor Surveying Missions

5.3. Future Directions in Indoor Surveying Missions

5.4. Prospective Developments in Aerial Stockpile Scanning for Confined Spaces

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Alsayed, A.; Yunusa-Kaltungo, A.; Quinn, M.K.; Arvin, F.; Nabawy, M.R.A. Drone-Assisted Confined Space Inspection and Stockpile Volume Estimation. Remote Sens. 2021, 13, 3356.
2. Liu, J.; Hasheminasab, S.M.; Zhou, T.; Manish, R.; Habib, A. An Image-Aided Sparse Point Cloud Registration Strategy for Managing Stockpiles in Dome Storage Facilities. Remote Sens. 2023, 15, 504.
3. Alsayed, A.; Nabawy, M.R.; Yunusa-Kaltungo, A.; Arvin, F.; Quinn, M.K. Towards Developing an Aerial Mapping System for Stockpile Volume Estimation in Cement Plants. In Proceedings of the AIAA Scitech 2021 Forum, Virtual Event, 11–15 & 19–21 January 2021; American Institute of Aeronautics and Astronautics: Reston, VA, USA, 2021; pp. 1–8.
4. Dang, T.; Tranzatto, M.; Khattak, S.; Mascarich, F.; Alexis, K.; Hutter, M. Graph-Based Subterranean Exploration Path Planning Using Aerial and Legged Robots. J. Field Robot. 2020, 37, 1363–1388.
5. Cao, D.; Zhang, B.; Zhang, X.; Yin, L.; Man, X. Optimization Methods on Dynamic Monitoring of Mineral Reserves for Open Pit Mine Based on UAV Oblique Photogrammetry. Measurement 2023, 207, 112364.
6. Vacca, G. UAV Photogrammetry for Volume Calculations. A Case Study of an Open Sand Quarry. In Computational Science and Its Applications—ICCSA 2022 Workshops; Lecture Notes in Computer Science (including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Cham, Switzerland, 2022; Volume 13382, pp. 505–518.
7. Xiao, H.; Jiang, N.; Chen, X.; Hao, M.; Zhou, J. Slope Deformation Detection Using Subpixel Offset Tracking and an Unsupervised Learning Technique Based on Unmanned Aerial Vehicle Photogrammetry Data. Geol. J. 2023, 58, 2342–2352.
8. Bircher, A.; Kamel, M.; Alexis, K.; Oleynikova, H.; Siegwart, R. Receding Horizon Path Planning for 3D Exploration and Surface Inspection. Auton. Robot. 2018, 42, 291–306.
9. Yin, H.; Tan, C.; Zhang, W.; Cao, C.; Xu, X.; Wang, J.; Chen, J. Rapid Compaction Monitoring and Quality Control of Embankment Dam Construction Based on UAV Photogrammetry Technology: A Case Study. Remote Sens. 2023, 15, 1083.
10. Näsi, R.; Mikkola, H.; Honkavaara, E.; Koivumäki, N.; Oliveira, R.A.; Peltonen-Sainio, P.; Keijälä, N.-S.; Änäkkälä, M.; Arkkola, L.; Alakukku, L. Can Basic Soil Quality Indicators and Topography Explain the Spatial Variability in Agricultural Fields Observed from Drone Orthomosaics? Agronomy 2023, 13, 669.
11. Korpela, I.; Polvivaara, A.; Hovi, A.; Junttila, S.; Holopainen, M. Influence of Phenology on Waveform Features in Deciduous and Coniferous Trees in Airborne LiDAR. Remote Sens. Environ. 2023, 293, 113618.
12. Blistan, P.; Jacko, S.; Kovanič, Ľ.; Kondela, J.; Pukanská, K.; Bartoš, K. TLS and SfM Approach for Bulk Density Determination of Excavated Heterogeneous Raw Materials. Minerals 2020, 10, 174.
13. Westoby, M.J.; Brasington, J.; Glasser, N.F.; Hambrey, M.J.; Reynolds, J.M. ‘Structure-from-Motion’ Photogrammetry: A Low-Cost, Effective Tool for Geoscience Applications. Geomorphology 2012, 179, 300–314.
14. Azpúrua, H.; Saboia, M.; Freitas, G.M.; Clark, L.; Agha-mohammadi, A.; Pessin, G.; Campos, M.F.M.; Macharet, D.G. A Survey on the Autonomous Exploration of Confined Subterranean Spaces: Perspectives from Real-Word and Industrial Robotic Deployments. Rob. Auton. Syst. 2023, 160, 104304.
15. Jaboyedoff, M.; Oppikofer, T.; Abellán, A.; Derron, M.-H.; Loye, A.; Metzger, R.; Pedrazzini, A. Use of LIDAR in Landslide Investigations: A Review. Nat. Hazards 2012, 61, 5–28.
16. Colomina, I.; Molina, P. Unmanned Aerial Systems for Photogrammetry and Remote Sensing: A Review. ISPRS J. Photogramm. Remote Sens. 2014, 92, 79–97.
17. Sliusar, N.; Filkin, T.; Huber-Humer, M.; Ritzkowski, M. Drone Technology in Municipal Solid Waste Management and Landfilling: A Comprehensive Review. Waste Manag. 2022, 139, 1–16.
18. Livers, B.; Lininger, K.B.; Kramer, N.; Sendrowski, A. Porosity Problems: Comparing and Reviewing Methods for Estimating Porosity and Volume of Wood Jams in the Field. Earth Surf. Process. Landf. 2020, 45, 3336–3353.
19. Deliry, S.I.; Avdan, U. Accuracy of Unmanned Aerial Systems Photogrammetry and Structure from Motion in Surveying and Mapping: A Review. J. Indian Soc. Remote Sens. 2021, 49, 1997–2017.
20. Malang, C.; Charoenkwan, P.; Wudhikarn, R. Implementation and Critical Factors of Unmanned Aerial Vehicle (UAV) in Warehouse Management: A Systematic Literature Review. Drones 2023, 7, 80.
21. Berra, E.F.; Peppa, M.V. Advances and Challenges of UAV SFM MVS Photogrammetry and Remote Sensing: Short Review. In Proceedings of the 2020 IEEE Latin American GRSS & ISPRS Remote Sensing Conference (LAGIRS), Santiago, Chile, 22–26 March 2020; pp. 533–538.
22. Son, S.W.; Kim, D.W.; Sung, W.G.; Yu, J.J. Integrating UAV and TLS Approaches for Environmental Management: A Case Study of a Waste Stockpile Area. Remote Sens. 2020, 12, 1615.
23. Yakar, M.; Yilmaz, H.M. Using in volume computing of digital close range photogrammetry. In Proceedings of the XXIst ISPRS Congress, Beijing, China, 3–11 July 2008.
24. d’Autume, M.; Perry, A.; Morel, J.-M.; Meinhardt-Llopis, E.; Facciolo, G. Stockpile Monitoring Using Linear Shape-from-Shading on Planetscope Imagery. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, 2, 427–434.
25. Gitau, F.; Maghanga, J.K.; Ondiaka, M.N. Spatial Mapping of the Extents and Volumes of Solid Mine Waste at Samrudha Resources Mine, Kenya: A GIS and Remote Sensing Approach. Model. Earth Syst. Environ. 2022, 8, 1851–1862.
26. Mari, R.; de Franchis, C.; Meinhardt-Llopis, E.; Facciolo, G. Automatic Stockpile Volume Monitoring Using Multi-View Stereo from Skysat Imagery. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Brussels, Belgium, 11–16 July 2021; pp. 4384–4387.
27. Schmidt, B.; Malgesini, M.; Turner, J.; Reinson, J. Satellite Monitoring of a Large Tailings Storage Facility. In Proceedings of the Tailings and Mine Waste Conference (2015: Vancouver, B.C.), Vancouver, Canada, 31 October 2015.
28. Sivitskis, A.J.; Lehner, J.W.; Harrower, M.J.; Dumitru, I.A.; Paulsen, P.E.; Nathan, S.; Viete, D.R.; Al-Jabri, S.; Helwing, B.; Wiig, F.; et al. Detecting and Mapping Slag Heaps at Ancient Copper Production Sites in Oman. Remote Sens. 2019, 11, 3014.
29. Li, T.; Zhang, H.; Gao, Z.; Chen, Q.; Niu, X. High-Accuracy Positioning in Urban Environments Using Single-Frequency Multi-GNSS RTK/MEMS-IMU Integration. Remote Sens. 2018, 10, 205.
30. Lewicka, O.; Specht, M.; Stateczny, A.; Specht, C.; Brčić, D.; Jugović, A.; Widźgowski, S.; Wiśniewska, M. Analysis of GNSS, Hydroacoustic and Optoelectronic Data Integration Methods Used in Hydrography. Sensors 2021, 21, 7831.
31. Famiglietti, N.A.; Cecere, G.; Grasso, C.; Memmolo, A.; Vicari, A. A Test on the Potential of a Low Cost Unmanned Aerial Vehicle RTK/PPK Solution for Precision Positioning. Sensors 2021, 21, 3882.
32. Du, M.; Li, H.; Roshanianfard, A. Design and Experimental Study on an Innovative UAV-LiDAR Topographic Mapping System for Precision Land Levelling. Drones 2022, 6, 403.
33. Aber, J.S.; Marzolff, I.; Ries, J.B. Photogrammetry. In Small-Format Aerial Photography; Elsevier: Amsterdam, The Netherlands, 2010; pp. 23–39.
34. Szeliski, R. Computer Vision; Springer: London, UK, 2011; ISBN 978-1-84882-934-3.
35. Dong, Z.; Liang, F.; Yang, B.; Xu, Y.; Zang, Y.; Li, J.; Wang, Y.; Dai, W.; Fan, H.; Hyyppä, J.; et al. Registration of Large-Scale Terrestrial Laser Scanner Point Clouds: A Review and Benchmark. ISPRS J. Photogramm. Remote Sens. 2020, 163, 327–342.
36. Cheng, L.; Chen, S.; Liu, X.; Xu, H.; Wu, Y.; Li, M.; Chen, Y. Registration of Laser Scanning Point Clouds: A Review. Sensors 2018, 18, 1641.
37. Xiong, L.; Wang, G.; Bao, Y.; Zhou, X.; Wang, K.; Liu, H.; Sun, X.; Zhao, R. A Rapid Terrestrial Laser Scanning Method for Coastal Erosion Studies: A Case Study at Freeport, Texas, USA. Sensors 2019, 19, 3252.
38. Filkin, T.; Sliusar, N.; Huber-Humer, M.; Ritzkowski, M.; Korotaev, V. Estimation of Dump and Landfill Waste Volumes Using Unmanned Aerial Systems. Waste Manag. 2022, 139, 301–308.
39. PS, R.; Jeyan, M.L. Mini Unmanned Aerial Systems (UAV)—A Review of the Parameters for Classification of a Mini UAV. Int. J. Aviat. Aeronaut. Aerosp. 2020, 7, 5.
40. Abdelrahman, M.M.; Elnomrossy, M.M.; Ahmed, M.R. Development of Mini Unmanned Air Vehicles. In Proceedings of the 13th International Conference on Aerospace Sciences & Aviation Technology, Cairo, Egypt, 26–28 May 2009.
41. Goraj, Z.; Cisowski, J.; Frydrychewicz, A.; Grendysa, W.; Jonas, M. Mini UAV Design and Optimization for Long Endurance Mission. In Proceedings of the ICAS Secretariat-26th Congress of International Council of the Aeronautical Sciences 2008, ICAS, Anchorage, AK, USA, 14–19 September 2008; Volume 3, pp. 2636–2647.
42. Wood, R.; Finio, B.; Karpelson, M.; Ma, K.; Pérez-Arancibia, N.; Sreetharan, P.; Tanaka, H.; Whitney, J. Progress on ‘Pico’ Air Vehicles. Int. J. Rob. Res. 2012, 31, 1292–1302.
43. Petricca, L.; Ohlckers, P.; Grinde, C. Micro- and Nano-Air Vehicles: State of the Art. Int. J. Aerosp. Eng. 2011, 2011, 214549.
44. Floreano, D.; Wood, R.J. Science, Technology and the Future of Small Autonomous Drones. Nature 2015, 521, 460–466.
45. Nabawy, M.R.A.; Marcinkeviciute, R. Scalability of Resonant Motor-Driven Flapping Wing Propulsion Systems. R. Soc. Open Sci. 2021, 8, 210452.
46. Shearwood, T.R.; Nabawy, M.R.A.; Crowther, W.J.; Warsop, C. A Novel Control Allocation Method for Yaw Control of Tailless Aircraft. Aerospace 2020, 7, 150.
47. Shearwood, T.R.; Nabawy, M.R.A.; Crowther, W.J.; Warsop, C. Coordinated Roll Control of Conformal Finless Flying Wing Aircraft. IEEE Access 2023, 11, 61401–61411.
48. Shearwood, T.R.; Nabawy, M.R.; Crowther, W.J.; Warsop, C. Directional Control of Finless Flying Wing Vehicles—An Assessment of Opportunities for Fluidic Actuation. In Proceedings of the AIAA Aviation 2019 Forum, Dallas, TX, USA, 17–21 June 2019; American Institute of Aeronautics and Astronautics: Reston, VA, USA, 2019.
49. Shearwood, T.R.; Nabawy, M.R.; Crowther, W.J.; Warsop, C. Three-Axis Control of Tailless Aircraft Using Fluidic Actuators: MAGMA Case Study. In Proceedings of the AIAA AVIATION 2021 Forum, Virtual Event, 2–6 August 2021; American Institute of Aeronautics and Astronautics: Reston, VA, USA, 2021.
50. Hassanalian, M.; Abdelkefi, A. Classifications, Applications, and Design Challenges of Drones: A Review. Prog. Aerosp. Sci. 2017, 91, 99–131.
51. Shahmoradi, J.; Talebi, E.; Roghanchi, P.; Hassanalian, M. A Comprehensive Review of Applications of Drone Technology in the Mining Industry. Drones 2020, 4, 34.
52. Lee, K.; Lee, W.H. Earthwork Volume Calculation, 3D Model Generation, and Comparative Evaluation Using Vertical and High-Oblique Images Acquired by Unmanned Aerial Vehicles. Aerospace 2022, 9, 606.
53. Kim, Y.H.; Shin, S.S.; Lee, H.K.; Park, E.S. Field Applicability of Earthwork Volume Calculations Using Unmanned Aerial Vehicle. Sustainability 2022, 14, 9331.
54. de Carvalho, L.M.E.; Melo, A.; Umbelino, G.J.M.; Mund, J.-P.; dos Santos, J.G.; Rosette, J.; Silveira, D.; Gorgens, E.B. Charcoal Heaps Volume Estimation Based on Unmanned Aerial Vehicles. South. For. A J. For. Sci. 2021, 83, 303–309.
55. Kuinkel, M.S.; Zhang, C.; Liu, P.; Demirkesen, S.; Ksaibati, K. Suitability Study of Using UAVs to Estimate Landfilled Fly Ash Stockpile. Sensors 2023, 23, 1242.
56. Ajayi, O.G.; Ajulo, J. Investigating the Applicability of Unmanned Aerial Vehicles (UAV) Photogrammetry for the Estimation of the Volume of Stockpiles. Quaest. Geogr. 2021, 40, 25–38.
57. Kokamägi, K.; Türk, K.; Liba, N. UAV Photogrammetry for Volume Calculations. Agron. Res. 2020, 18, 2087–2102.
58. Mora, O.E.; Chen, J.; Stoiber, P.; Koppanyi, Z.; Pluta, D.; Josenhans, R.; Okubo, M. Accuracy of Stockpile Estimates Using Low-Cost sUAS Photogrammetry. Int. J. Remote Sens. 2020, 41, 4512–4529.
59. Cho, S.; Lim, J.H.; Lim, S.B.; Yun, H.C. A Study on DEM-Based Automatic Calculation of Earthwork Volume for BIM Application. J. Korean Soc. Surv. Geod. Photogramm. Cartogr. 2020, 38, 131–140.
60. Idrees, A.; Heeto, F. Evaluation of UAV-Based DEM for Volume Calculation. J. Univ. Duhok 2020, 23, 11–24.
61. Rohizan, M.H.; Ibrahim, A.H.; Abidin, C.Z.C.; Ridwan, F.M.; Ishak, R. Application of Photogrammetry Technique for Quarry Stockpile Estimation. IOP Conf. Ser. Earth Environ. Sci. 2021, 920, 012040.
62. Jiang, Y.; Liang, W.; Geng, P. Application Research on Slope Deformation Monitoring and Earthwork Calculation of Foundation Pits Based on UAV Oblique Photography. IOP Conf. Ser. Earth Environ. Sci. 2020, 580, 012053.
63. Liu, S.; Yu, J.; Ke, Z.; Dai, F.; Chen, Y. Aerial–Ground Collaborative 3D Reconstruction for Fast Pile Volume Estimation with Unexplored Surroundings. Int. J. Adv. Robot. Syst. 2020, 17, 172988142091994.
64. Matsimbe, J.; Mdolo, W.; Kapachika, C.; Musonda, I.; Dinka, M. Comparative Utilization of Drone Technology vs. Traditional Methods in Open Pit Stockpile Volumetric Computation: A Case of Njuli Quarry, Malawi. Front. Built Environ. 2022, 8, 1037487.
65. Mantey, S.; Aduah, M.S. Comparative Analysis of Stockpile Volume Estimation Using UAV and GPS Techniques. Ghana Min. J. 2021, 21, 1–10.
66. Park, H.C.; Rachmawati, T.S.N.; Kim, S. UAV-Based High-Rise Buildings Earthwork Monitoring—A Case Study. Sustainability 2022, 14, 10179.
67. Matsuura, Y.; Heming, Z.; Nakao, K.; Qiong, C.; Firmansyah, I.; Kawai, S.; Yamaguchi, Y.; Maruyama, T.; Hayashi, H.; Nobuhara, H. High-Precision Plant Height Measurement by Drone with RTK-GNSS and Single Camera for Real-Time Processing. Sci. Rep. 2023, 13, 6329.
68. Liu, Z.; Dai, Z.; Zeng, Q.; Liu, J.; Liu, F.; Lu, Q. Detection of Bulk Feed Volume Based on Binocular Stereo Vision. Sci. Rep. 2022, 12, 9318.
69. Putra, C.A.; Wahyu Syaifullah, J.; Adila, M. Approximate Volume of Sand Materials Stockpile Based on Structure From Motion (SFM). In Proceedings of the 2020 6th Information Technology International Seminar (ITIS), Surabaya, Indonesia, 14–16 October 2020; pp. 135–139.
70. Liu, J.; Xu, W.; Guo, B.; Zhou, G.; Zhu, H. Accurate Mapping Method for UAV Photogrammetry Without Ground Control Points in the Map Projection Frame. IEEE Trans. Geosci. Remote Sens. 2021, 59, 9673–9681.
71. He, H.; Chen, T.; Zeng, H.; Huang, S. Ground Control Point-Free Unmanned Aerial Vehicle-Based Photogrammetry for Volume Estimation of Stockpiles Carried on Barges. Sensors 2019, 19, 3534.
72. Tucci, G.; Gebbia, A.; Conti, A.; Fiorini, L.; Lubello, C. Monitoring and Computation of the Volumes of Stockpiles of Bulk Material by Means of UAV Photogrammetric Surveying. Remote Sens. 2019, 11, 1471.
73. Zhang, L.; Grift, T.E. A LIDAR-Based Crop Height Measurement System for Miscanthus Giganteus. Comput. Electron. Agric. 2012, 85, 70–76.
74. Siebers, M.; Edwards, E.; Jimenez-Berni, J.; Thomas, M.; Salim, M.; Walker, R. Fast Phenomics in Vineyards: Development of GRover, the Grapevine Rover, and LiDAR for Assessing Grapevine Traits in the Field. Sensors 2018, 18, 2924.
75. Foldager, F.; Pedersen, J.; Skov, E.; Evgrafova, A.; Green, O. LiDAR-Based 3D Scans of Soil Surfaces and Furrows in Two Soil Types. Sensors 2019, 19, 661.
76. Carabassa, V.; Montero, P.; Alcañiz, J.M.; Padró, J.-C. Soil Erosion Monitoring in Quarry Restoration Using Drones. Minerals 2021, 11, 949.
77. Liu, J.; Liu, X.; Lv, X.; Wang, B.; Lian, X. Novel Method for Monitoring Mining Subsidence Featuring Co-Registration of UAV LiDAR Data and Photogrammetry. Appl. Sci. 2022, 12, 9374.
78. Amaglo, W.Y. Volume Calculation Based on LiDAR Data. Master’s Thesis, Royal Institute of Technology, Stockholm, Sweden, 2021.
79. Forte, M.; Neto, P.; Thé, G.; Nogueira, F. Altitude Correction of an UAV Assisted by Point Cloud Registration of LiDAR Scans. In Proceedings of the 18th International Conference on Informatics in Control, Automation and Robotics - ICINCO, Online Streaming, 6–8 July 2021; SciTePress: Setúbal, Portugal, 2021; pp. 485–492, ISBN 978-989-758-522-7.
80. Zhang, W.; Yang, D. Lidar-Based Fast 3D Stockpile Modeling. In Proceedings of the 2019 International Conference on Intelligent Computing, Automation and Systems (ICICAS), Chongqing, China, 6–8 December 2019; pp. 703–707.
81. Zhang, W.; Yang, D.; Li, Y.; Xu, W. Portable 3D Laser Scanner for Volume Measurement of Coal Pile. In Lecture Notes in Electrical Engineering; Springer: Singapore, 2020; Volume 517, pp. 340–347.
82. Niskanen, I.; Immonen, M.; Hallman, L.; Mikkonen, M.; Hokkanen, V.; Hashimoto, T.; Kostamovaara, J.; Heikkilä, R. Using a 2D Profilometer to Determine Volume and Thickness of Stockpiles and Ground Layers of Roads. J. Transp. Eng. Part B Pavements 2023, 149, 04022074.
83. Bayar, G. Increasing Measurement Accuracy of a Chickpea Pile Weight Estimation Tool Using Moore-Neighbor Tracing Algorithm in Sphericity Calculation. J. Food Meas. Charact. 2021, 15, 296–308.
84. Zhao, Q.; Wu, Y.; Li, X.; Xu, J.; Meng, Q. A Method of Measuring Stacked Objects Volume Based on Laser Sensing. Meas. Sci. Technol. 2017, 28, 105002.
85. de Croon, G.; de Wagter, C. Challenges of Autonomous Flight in Indoor Environments. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 1003–1009.
86. Dissanayake, M.W.M.G.; Newman, P.; Clark, S.; Durrant-Whyte, H.F.; Csorba, M. A Solution to the Simultaneous Localization and Map Building (SLAM) Problem. IEEE Trans. Robot. Autom. 2001, 17, 229–241.
87. Huang, B.; Zhao, J.; Liu, J. A Survey of Simultaneous Localization and Mapping with an Envision in 6G Wireless Networks. arXiv 2019, arXiv:1909.05214.
88. Papachristos, C.; Khattak, S.; Mascarich, F.; Dang, T.; Alexis, K. Autonomous Aerial Robotic Exploration of Subterranean Environments Relying on Morphology–Aware Path Planning. In Proceedings of the 2019 International Conference on Unmanned Aircraft Systems (ICUAS), Atlanta, GA, USA, 11–14 June 2019; pp. 299–305.
89. Phillips, T.G.; Guenther, N.; McAree, P.R. When the Dust Settles: The Four Behaviors of LiDAR in the Presence of Fine Airborne Particulates. J. Field Robot. 2017, 34, 985–1009.
90. Ryde, J.; Hillier, N. Performance of Laser and Radar Ranging Devices in Adverse Environmental Conditions. J. Field Robot. 2009, 26, 712–727.
91. GeoSLAM Stockpile Volumes: Laser Technology & Software for Mining. Available online: https://geoslam.com/solutions/stockpile-volumes/ (accessed on 28 February 2023).
92. Flyability Elios 3-Digitizing the Inaccessible. Available online: https://www.flyability.com/elios-3 (accessed on 22 August 2022).
93. Emesent. Hovermap ST. Available online: https://www.emesent.com/hovermap-st/ (accessed on 28 February 2023).
94. Jones, E.; Sofonia, J.; Canales, C.; Hrabar, S.; Kendoul, F. Applications for the Hovermap Autonomous Drone System in Underground Mining Operations. J. South Afr. Inst. Min. Met. 2020, 120, 49–56.
95. BinMaster 3DlevelScanner. Available online: https://www.binmaster.com/products/product/3dlevelscanner (accessed on 28 February 2023).
96. ABB VM3D 3D Volumetric Laser Scanner System. Available online: www.abb.com/myvm3d (accessed on 28 February 2023).
97. Kumar, C.; Mathur, Y.; Jannesari, A. Efficient Volume Estimation for Dynamic Environments Using Deep Learning on the Edge. In Proceedings of the 2022 IEEE International Parallel and Distributed Processing Symposium Workshops (IPDPSW), Lyon, France, 30 May–3 June 2022; pp. 995–1002.
98. Gago, R.M.; Pereira, M.Y.A.; Pereira, G.A.S. An Aerial Robotic System for Inventory of Stockpile Warehouses. Eng. Rep. 2021, 3, e12396.
99. Alsayed, A.; Nabawy, M.R.A.; Yunusa-Kaltungo, A.; Quinn, M.K.; Arvin, F. An Autonomous Mapping Approach for Confined Spaces Using Flying Robots. In Towards Autonomous Robotic Systems; Fox, C., Gao, J., Ghalamzan Esfahani, A., Saaj, M., Hanheide, M., Parsons, S., Eds.; Springer International Publishing: Cham, Switzerland, 2021; pp. 326–336.
100. Alsayed, A.; Nabawy, M.R.; Yunusa-Kaltungo, A.; Quinn, M.K.; Arvin, F. Real-Time Scan Matching for Indoor Mapping with a Drone. In Proceedings of the AIAA SCITECH 2022 Forum, Virtual Event, 3–7 January 2022; American Institute of Aeronautics and Astronautics: Reston, VA, USA, 2022.
101. Alsayed, A.; Nabawy, M.R.A. Indoor Stockpile Reconstruction Using Drone-Borne Actuated Single-Point LiDARs. Drones 2022, 6, 386.
102. Alsayed, A.; Nabawy, M.R.; Arvin, F. Autonomous Aerial Mapping Using a Swarm of Unmanned Aerial Vehicles. In Proceedings of the AIAA AVIATION 2022 Forum, Virtual Event, 27 June–1 July 2022; American Institute of Aeronautics and Astronautics: Reston, VA, USA, 2022.
103. Zhao, S.; Lu, T.-F.; Koch, B.; Hurdsman, A. Stockpile Modelling Using Mobile Laser Scanner for Quality Grade Control in Stockpile Management. In Proceedings of the 2012 12th International Conference on Control Automation Robotics & Vision (ICARCV), Guangzhou, China, 5–7 December 2012; pp. 811–816.
104. Xu, Z.; Lu, X.; Xu, E.; Xia, L. A Sliding System Based on Single-Pulse Scanner and Rangefinder for Pile Inventory. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.
105. Chang, D.; Lu, H.; Mi, W. Bulk Terminal Stockpile Automatic Modeling Based on 3D Scanning Technology. In Proceedings of the 2010 International Conference on Future Information Technology and Management Engineering, Changzhou, China, 9–10 October 2010; Volume 1, pp. 67–70.
106. Zhang, X.; Ou, J.; Yuan, Y.; Shang, Y.; Yu, Q. Projection-Aided Videometric Method for Shape Measurement of Large-Scale Bulk Material Stockpile. Appl. Opt. 2011, 50, 5178–5184.
107. Mahlberg, J.A.; Manish, R.; Koshan, Y.; Joseph, M.; Liu, J.; Wells, T.; McGuffey, J.; Habib, A.; Bullock, D.M. Salt Stockpile Inventory Management Using LiDAR Volumetric Measurements. Remote Sens. 2022, 14, 4802.
108. Manish, R.; Hasheminasab, S.M.; Liu, J.; Koshan, Y.; Mahlberg, J.A.; Lin, Y.-C.; Ravi, R.; Zhou, T.; McGuffey, J.; Wells, T.; et al. Image-Aided LiDAR Mapping Platform and Data Processing Strategy for Stockpile Volume Estimation. Remote Sens. 2022, 14, 231.
109. de Lima, D.P.; Costa, G.H. On the Stockpiles Volume Measurement Using a 2D Scanner. In Proceedings of the 2021 5th International Symposium on Instrumentation Systems, Circuits and Transducers (INSCIT), Campinas, Brazil, 23–27 August 2021; pp. 1–5.
110. Li, N.; Ho, C.P.; Xue, J.; Lim, L.W.; Chen, G.; Fu, Y.H.; Lee, L.Y.T. A Progress Review on Solid-State LiDAR and Nanophotonics-Based LiDAR Sensors. Laser Photon. Rev. 2022, 16, 2100511.
111. Lauter, C. Velodyne’s ‘$100 Lidar’ Is Reborn with an Improved Field of View. Available online: https://www.geoweeknews.com/news/velodyne-s-100-lidar-is-reborn-with-an-improved-field-of-view (accessed on 28 February 2023).
112. Digiflec Velabit. Available online: https://digiflec.com/velabit/ (accessed on 28 February 2023).
113. Gyagenda, N.; Hatilima, J.V.; Roth, H.; Zhmud, V. A Review of GNSS-Independent UAV Navigation Techniques. Rob. Auton. Syst. 2022, 152, 104069.
114. Kunhoth, J.; Karkar, A.G.; Al-Maadeed, S.; Al-Ali, A. Indoor Positioning and Wayfinding Systems: A Survey. Hum.-Centric Comput. Inf. Sci. 2020, 10, 1–41.
115. Marvelmind Robotics Precise (±2 cm) Indoor Positioning System. Available online: https://marvelmind.com/ (accessed on 28 February 2023).
116. Nooploop Precise Positioning, Enabling Industry. Available online: https://www.nooploop.com/en/ (accessed on 7 June 2023).

| Application | Surveyed Area [m²] | Country | Platform | Scanning Payload | Reference Volume [m³] | Volumetric Error [%] | Ground Truth | Reference |
|---|---|---|---|---|---|---|---|---|
| Quarrying site | 12,300 | Sweden | Drone (Matrice 600 Pro) | 3D LiDAR (Velodyne Ultra Puck) | 366.75 | 0.7 | TLS | [78] |
| Coal stockpiles | 200,000 | Brazil | Drone (DJI Matrice 100) | 3D LiDAR (SICK LD-MRS420201) | - | - | - | [79] |
| Coal stockpiles | 1400 | UK | Custom quadcopter | 1D LiDAR (Benewake TFmini) | - | - | - | [1] |
| Gypsum pile | 62.5 | UK | Custom quadcopter | 1D LiDAR (Benewake TFmini) | 3 | 2.4 | Truck load | [1] |
| Coal piles | - | China | Handheld | 2D LiDAR (SICK LD-MRS400001) | 3000–30,000 | <0.8 | - | [80,81] |
| Several stockpiles on barges | 728 | China | Handheld | 3D LiDAR (Velodyne HDL-32E) | 9437 | 1.6 | Manual measurement | [71] |
| Soil stockpile | 35 | Finland | Excavator | Solid-state 2D profilometer/LiDAR | 9.4 | 1.5–5.5 | Bucket count | [82] |
| Chickpea pile | 0.07 | Turkey | Rail | 2D LiDAR (Hokuyo URG-04LX-UG01) | 0.00375 | 0.5 | Known weight | [83] |
| Stacked boxes | - | China | Rail | 3D LiDAR (SICK LMS511-10100 PRO) | ≈0.16 | ≈3 | Known volume | [84] |
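
Most of the LiDAR studies in the table above produce a point cloud of the pile surface, from which a volume is then computed. A widely used approach (assumed here; the cited works use a variety of methods and software) is to bin points into a regular horizontal grid, keep one height per cell, and sum the heights above a base surface multiplied by the cell area. The minimal sketch below assumes a flat, horizontal base at a known elevation `base_z` and keeps the highest point in each cell. Empty cells are skipped rather than interpolated, so sparse scans will tend to underestimate. `grid_volume` is a hypothetical helper, not code from any cited work.

```python
import math
from collections import defaultdict


def grid_volume(points, cell_size, base_z):
    """Volume above a flat base plane from an iterable of (x, y, z) points.

    Points are binned into square cells of side `cell_size`; each cell's height
    is its highest z minus `base_z` (clipped at zero), and the volume is the sum
    of cell heights times the cell area. Cells with no points contribute nothing.
    """
    if cell_size <= 0:
        raise ValueError("cell_size must be positive")
    top = defaultdict(lambda: -math.inf)  # highest z seen in each (i, j) cell
    for x, y, z in points:
        key = (math.floor(x / cell_size), math.floor(y / cell_size))
        top[key] = max(top[key], z)
    cell_area = cell_size * cell_size
    return sum(max(z - base_z, 0.0) for z in top.values()) * cell_area


# Synthetic check: a cone of radius 5 m and height 3 m, sampled once per cell centre.
r, h, cs = 5.0, 3.0, 0.1
n = round(r / cs)
cone = []
for i in range(-n, n):
    for j in range(-n, n):
        x, y = (i + 0.5) * cs, (j + 0.5) * cs
        z = max(h * (1.0 - math.hypot(x, y) / r), 0.0)
        cone.append((x, y, z))

est = grid_volume(cone, cs, base_z=0.0)
exact = math.pi * r**2 * h / 3.0  # analytic cone volume
print(f"grid: {est:.2f} m3, analytic: {exact:.2f} m3")
```

Shrinking `cell_size` trades computation time for accuracy. Real scans would also need the base surface to be fitted to the surrounding floor or ground rather than assumed flat.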

| Challenge | Description |
|---|---|
| GPS Signal | GPS signal loss affects robot/drone localization; potential solutions include SLAM algorithms combined with sensors that remain effective for localization and mapping in GPS-denied environments. |
| Narrow Clearances and Obstructions | Space constraints between the storage ceiling and stockpile peaks, as exemplified in Figure 8, require careful mission planning. |
| Stockpile Texture Variations | Both uniform and highly textured surfaces challenge visual and LiDAR systems: uniform surfaces lack distinct features for recognition, while highly textured surfaces may cause uncertainty in identifying unique features. |
| Darkness and Dust | Darkness and dust significantly degrade visual-based approaches; LiDAR, in contrast, exhibits less localization drift and operates well in poor visibility. |
| Sensor Weight | Most 3D LiDAR sensors are heavy, requiring larger carrying platforms for aerial missions, which are in turn harder to fly within confined spaces. |

| Solution | Application | Surveyed Area [m²] | Country | Platform | Scanning Payload | Reference Volume [m³] | Volumetric Error [%] | Ground Truth | Reference |
|---|---|---|---|---|---|---|---|---|---|
| Drone | Sand pile | - | USA | Tarot 650 Sport quadcopter | ZED Mini stereo camera | 4–25 | 1.2–9.8 | Known volume | [97] |
| Drone | Stockpiles | 5320 | USA | DJI Matrice 100 quadrotor | 3D LiDAR (Velodyne VLP-16 Puck Lite) | 306.5 | 2.2 | Known volume | [98] |
| Drone | Stockpiles (simulation) | 1040 | UK | Generic quadrotor | 3D LiDAR (rotary) | 2118 | 3 | Known volume | [99,100] |
| Drone | Stockpiles (simulation) | 2400 | UK | Generic quadrotor | 1D LiDAR (actuated) | 7193–8677 | 1.6 | Known volume | [101] |
| Drone | Stockpiles (simulation) | 1200 | UK | Multi-agent quadcopter | 1D LiDAR (depth) | 2422 | 0.2–1.5 | Known volume | [102] |
| Rail Platform | Small gravel pile | 0.32 | Australia | Rail | 2D LiDAR (SICK LMS200) | 0.03 | 0.6–3.8 | Known volume | [103] |
| Rail Platform | Ore pile | 2250 | China | Rail | Two 2D LiDARs (SICK LMS-141) | 711–1573 | 1 | GeoSLAM scanner | [104] |
| Rail Platform | Bulk terminal stockpile | 1,729,000 | China | Stacker reclaimer | 3D LiDAR | - | - | - | [105] |
| Rail Platform | Bulk material stockpile | 3 | China | Rail | Two cameras and a laser projector | - | - | - | [106] |
| Tripod-Mounted | Salt stockpile management | - | USA | Tripod/boom lift | Camera (GoPro Hero 9 RGB) and two 3D LiDARs (Velodyne VLP-16) | 1648 | 0.1 | Bucket count | [107] |
| Tripod-Mounted | Salt stockpile management | - | USA | Tripod | Camera (GoPro Hero 9 RGB) and two 3D LiDARs (Velodyne VLP-16) | 1438 | 0.0 | TLS | [108] |
| Tripod-Mounted | Salt stockpile management | - | USA | Tripod | Camera (GoPro Hero 9 RGB) and two 3D LiDARs (Velodyne VLP-16) | 968 | 3.2 | TLS | [108] |
| Tripod-Mounted | Small grain piles | 0.15 | Brazil | Tripod | 2D LiDAR (OMD30M-R2000) | - | 19.6 | Known volume | [109] |
| Tripod-Mounted | Small grain piles | 24.8 | Brazil | Tripod | 2D LiDAR (OMD30M-R2000) | - | 1.5 | Known volume | [109] |
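
Several drone entries in the table above, such as [101], use a 1D (single-point) LiDAR, which returns only one range per reading. Turning such readings into a surface requires the beam direction and the sensor position at the moment of each measurement. The minimal sketch below shows only that geometric step, under assumed conventions: a world frame with z pointing up, a sensor position already known from the platform's localization, and a beam direction given by a pan (azimuth) angle and a tilt (depression) angle. The function `range_to_point` and all sample readings are hypothetical; this is not the reconstruction pipeline of the cited works, which must also account for the drone's attitude.

```python
import math


def range_to_point(sensor_xyz, range_m, pan_deg, tilt_deg):
    """Convert one single-point LiDAR range reading into a 3D world point.

    Assumed (hypothetical) conventions:
    - world frame with z pointing up; sensor_xyz is the sensor position in it;
    - pan_deg is the beam azimuth about the world z axis, measured from +x;
    - tilt_deg is the depression angle below horizontal (90 = straight down);
    - platform roll and pitch are ignored (assumed already compensated).
    """
    pan = math.radians(pan_deg)
    tilt = math.radians(tilt_deg)
    horizontal = range_m * math.cos(tilt)  # beam length projected onto the x-y plane
    sx, sy, sz = sensor_xyz
    return (
        sx + horizontal * math.cos(pan),
        sy + horizontal * math.sin(pan),
        sz - range_m * math.sin(tilt),  # positive tilt points the beam downwards
    )


# Hypothetical readings from one hover position: (range [m], pan [deg], tilt [deg]).
sensor = (0.0, 0.0, 6.0)
readings = [(4.0, 0.0, 90.0), (5.0, 0.0, 60.0), (6.5, 90.0, 45.0)]
cloud = [range_to_point(sensor, r, p, t) for r, p, t in readings]
for point in cloud:
    print(tuple(round(c, 2) for c in point))
```

Accumulating such points over an actuator sweep, and over several hover positions, yields a point cloud that can then be gridded into a surface for volume computation.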

| Techniques | Advantages | Disadvantages |
|---|---|---|
| GNSS | | |
| Drone Photogrammetry | | |
| TLS | | |
| Airborne LiDAR | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Alsayed, A.; Nabawy, M.R.A. Stockpile Volume Estimation in Open and Confined Environments: A Review. Drones 2023, 7, 537. https://doi.org/10.3390/drones7080537