1. Introduction

Vertical take-off and landing (VTOL) aircraft, such as helicopters and drones, use propulsion devices such as propellers to move while hovering in the air. Unlike fixed-wing aircraft, which are propelled in only one direction, VTOL aircraft can move in six directions (up, down, left, right, forward, and backward) or rotate about three axes (longitudinal, lateral, and vertical) while hovering in place. These rotations are referred to as roll (rotation about the longitudinal axis), pitch (rotation about the lateral axis), and yaw (rotation about the vertical axis). VTOL aircraft are typically operated through four controls [1,2,3]: (1) moving vertically by controlling the rotation speed of the propellers, (2) moving forward and backward by tilting the body through pitch rotation, (3) moving left and right by tilting the body through roll rotation, and (4) rotating about the vertical axis through yaw rotation. Because VTOL aircraft can hover precisely using propellers, they are used effectively in military operations, observation and surveillance, firefighting, and so forth [4]. In particular, multi-propeller VTOL aircraft can take off and land vertically and fly stably and smoothly by precisely controlling their propellers, and they can respond quickly to emergencies through sudden stops or sharp turns; they are therefore expected to serve as a means of transportation in urban air mobility (UAM), the next generation of urban transportation.

The existing method of controlling VTOL aircraft, which move in four ways (up–down movement, front–back movement, left–right movement, and yaw rotation) in 3D space, requires nonintuitive eye–hand coordination because the mapping between the movement of the body and the interface is unnatural [5,6,7,8]. In a helicopter, the collective control (moved up and down with the left hand) is used to move the body up and down, the cyclic control (moved front–back and left–right with the right hand) is used to move the body front–back and left–right, and two rudder pedals operated with both feet control yaw rotation. Because the helicopter's movement directions are mapped to the pilot's arms and legs, the pilot needs good eye–hand coordination to control the helicopter's movements precisely while moving quickly in the air [9]. The eye–hand coordination problem is more complex for drones, and one of the main causes is the pilot's point of view. A helicopter pilot sits inside the aircraft, so the direction of the pilot's control input matches the direction of the helicopter's movement. Drones, however, are controlled by an operator from an external (third-person) perspective. Therefore, when the drone changes its heading through yaw rotation, the control direction and the drone's movement direction become mismatched (e.g., after the drone rotates 90 degrees clockwise, pushing the joystick upward to move the drone forward makes it move to the operator's right rather than forward) [10]. Even apart from problems related to perspective, usability issues have been reported regarding eye–hand coordination that arise from the unnatural mapping between the drone's movement and the drone–control interface. A drone is generally controlled using a device with two joysticks: the left joystick, manipulated with the left hand, moves the drone forward, backward, leftward, and rightward, while the right joystick, manipulated with the right hand, controls the drone's altitude (by pushing the joystick upward and downward) and yaw rotation (by pushing the joystick leftward and rightward). Both joysticks are manipulated in the same manner (i.e., two-dimensional (2D) control), but the mapping of the joystick's upward, downward, leftward, and rightward directions to the drone's movement differs between the two joysticks. For example, pushing the left joystick to the left makes the drone fly leftward, whereas pushing the right joystick to the left makes the drone rotate counterclockwise. Therefore, owing to the lack of an intuitive mapping between the direction of the drone's movement and the way the controller is manipulated, cognitive errors can occur while controlling the drone, and developing eye–hand coordination takes considerable practice [2,10,11,12].
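The yaw mismatch described above can be made concrete with a small worked sketch. It encodes the two-joystick layout described in this paragraph and then rotates the resulting forward/right command by the drone's heading into the operator's frame. The axis conventions (forward/right in the operator's frame, heading measured clockwise from the operator's forward direction, stick deflections in [-1, 1]) and the function names are assumptions chosen for illustration, not part of the study.

```python
import math
from typing import Tuple


def body_command(left_stick: Tuple[float, float],
                 right_stick: Tuple[float, float]) -> Tuple[float, float, float, float]:
    """Map stick deflections (x = right, y = up, each in [-1, 1]) to
    (forward, right, up, yaw_rate) in the drone's body frame.

    Assumed layout, following the text: the left stick moves the drone
    forward/backward (up/down) and leftward/rightward (left/right); the right
    stick changes altitude (up/down) and yaw (left = counterclockwise).
    """
    lx, ly = left_stick
    rx, ry = right_stick
    return (ly, lx, ry, rx)  # positive yaw_rate = clockwise, viewed from above


def body_to_operator(forward: float, right: float, heading_deg: float) -> Tuple[float, float]:
    """Rotate a horizontal body-frame command into the operator's frame.

    heading_deg is the drone's heading, measured clockwise from the
    operator's forward direction (hypothetical convention).
    """
    psi = math.radians(heading_deg)
    op_forward = forward * math.cos(psi) - right * math.sin(psi)
    op_right = forward * math.sin(psi) + right * math.cos(psi)
    return (round(op_forward, 9), round(op_right, 9))  # round off float noise


# Push the left stick straight up, intending to fly "forward".
fwd, rgt, _, _ = body_command(left_stick=(0.0, 1.0), right_stick=(0.0, 0.0))
print(body_to_operator(fwd, rgt, heading_deg=0))   # (1.0, 0.0): moves away from the operator
print(body_to_operator(fwd, rgt, heading_deg=90))  # (0.0, 1.0): moves to the operator's right
```

The same stick input yields a different motion in the operator's view once the heading changes, which is the mismatch the operator must resolve mentally.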

Previous studies have mainly focused on interfaces for intuitive drone control, but experimental studies analyzing drone-control performance in terms of the usability of the drone–control interface (i.e., the mapping between the drone's movements and the interface) are lacking. To address the difficulty of two-handed drone control, more intuitive one-handed controllers have been studied from the perspective of eye–hand coordination [2,12,13], and related products have also been introduced to the market (e.g., Shift, this is engineering Inc., Seongnam, Republic of Korea; FT Aviator, Fluidity Technologies Inc., Houston, TX, USA). Previous studies [14,15,16,17,18,19] have proposed methods using hand gestures to verify the assumption that one-handed controllers or hand-gesture-based control approaches can provide intuitive control over the up–down, front–back, and left–right movements of drones in three-dimensional (3D) space. However, few studies have quantitatively compared the usability of these intuitive drone-control methods with that of two-handed controllers.

This study compares the performance of drone control using two different methods by experimentally analyzing the effect of the mapping between the manipulation and the movement of a drone. This study implemented an interface that allows the drone to be controlled intuitively by hand gestures using Leap Motion (Ultraleap Inc., Mountain View, CA, USA), a hand-gesture recognition device. With 20 participants, the usability of the Leap Motion (3D controller) was compared with that of a commonly used drone-control device (a gamepad-type device with two joysticks; 2D controller) in terms of the drone's movement trajectory. The drone's trajectory was measured using a motion analysis system, and the distance traveled, the movement time, and the smoothness of the trajectory were calculated from the trajectory data. Because the mapping between the direction of interface operation and the direction of the drone's movement becomes unnatural under yaw rotation [7,8,13], this study analyzed only the remaining three of the drone's four movements (up–down, front–back, and left–right).
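The distance and time measures can be sketched as follows, assuming that the motion-capture output for one section is a list of timestamped 3D positions in seconds and meters. The sample format, the function names, and the toy trajectory are hypothetical; how the start and end of each section were determined is not described in this excerpt.

```python
import math
from typing import Sequence, Tuple

# Assumed sample format: (t [s], x [m], y [m], z [m]).
Sample = Tuple[float, float, float, float]


def path_length(samples: Sequence[Sample]) -> float:
    """Distance traveled: sum of straight-line distances between consecutive positions."""
    return sum(math.dist(a[1:], b[1:]) for a, b in zip(samples, samples[1:]))


def movement_time(samples: Sequence[Sample]) -> float:
    """Movement time: elapsed time between the first and last samples of the section."""
    return samples[-1][0] - samples[0][0]


# Hypothetical three-sample trajectory: 5 m in the x–y plane, then 12 m along z.
section = [(0.0, 0.0, 0.0, 0.0), (1.0, 3.0, 4.0, 0.0), (2.5, 3.0, 4.0, 12.0)]
print(path_length(section))    # 17.0
print(movement_time(section))  # 2.5
```

Because segment lengths are summed, measurement jitter inflates the distance, so real motion-capture data would usually be filtered first; whether the study did so is not stated here.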

3. Results

Figure 3 illustrates the trajectories of the drone measured by the motion-capture system when it was controlled by the 20 participants using the gamepad (blue lines) and the Leap Motion (red lines). The top view is shown for the A and C sections, and the front view for the B section. In the A section, the drone followed a straighter trajectory with the Leap Motion than with the gamepad.

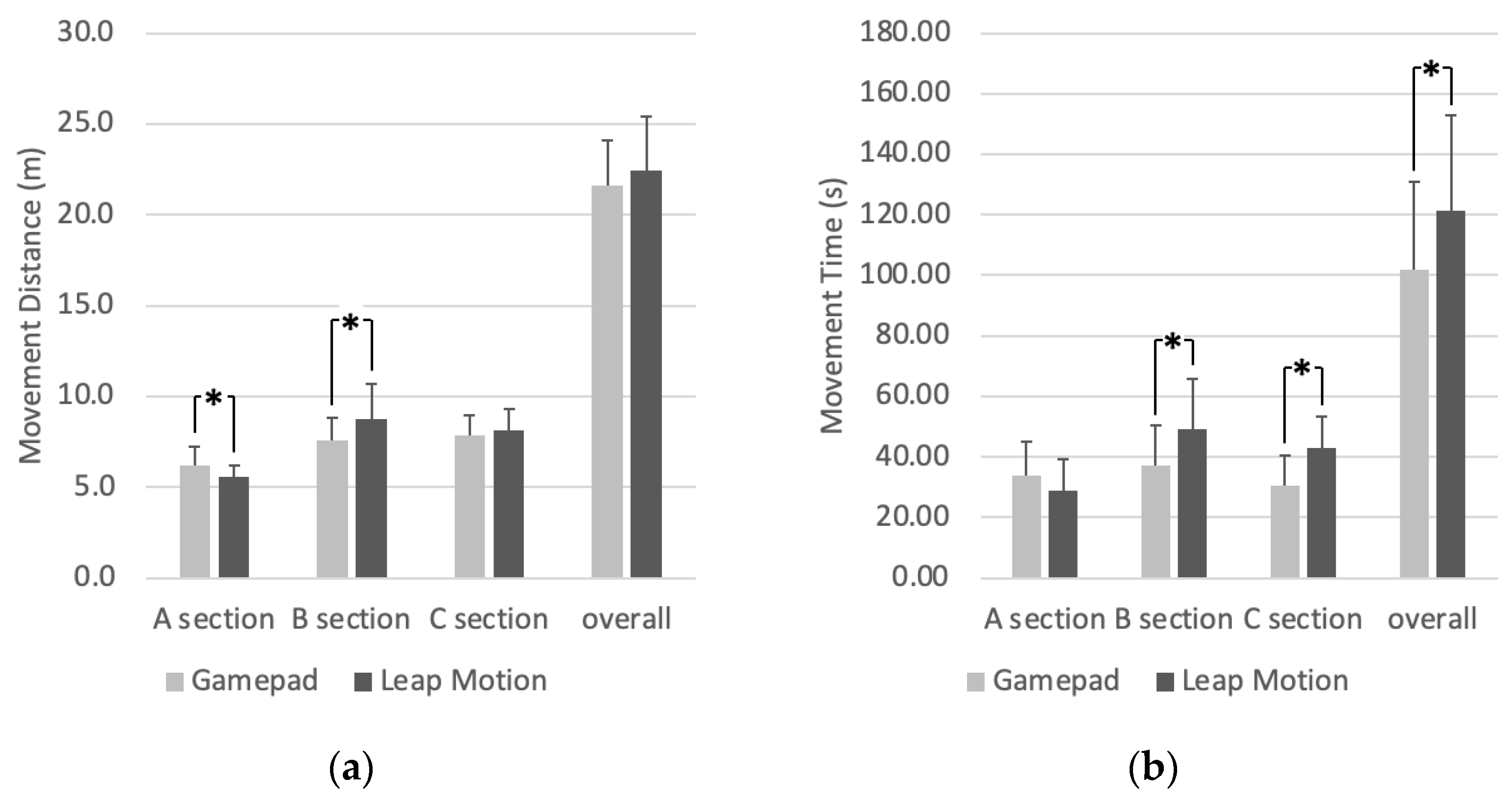

The average movement distances produced by the gamepad and the Leap Motion differed by section (Figure 4a and Table 2). In the A section, the average movement distance produced by the Leap Motion was significantly shorter than that produced by the gamepad (mean difference = 0.6 m, p-value = 0.04). In contrast, in the B section, the Leap Motion produced a significantly longer average movement distance (mean difference = 1.2 m, p-value = 0.03). In the C section, the Leap Motion also produced a longer average movement distance (mean difference = 0.3 m), though the difference was not significant (p-value = 0.49). For the average movement distance over all three sections, no significant difference was observed (mean difference = 0.8 m, p-value = 0.35).

Overall, the average movement time was longer with the Leap Motion than with the gamepad (Figure 4b). In the A section, the average movement time with the Leap Motion was shorter (mean difference = 4.9 s), though the difference was not statistically significant (p-value = 0.16). In contrast, the B and C sections showed significantly longer average movement times with the Leap Motion, with mean differences of 12.0 s and 12.4 s, respectively (p-value = 0.02 and p-value < 0.001). The average movement time across all sections was also longer with the Leap Motion than with the gamepad (mean difference = 19.5 s; p-value = 0.048).

The trajectory smoothness, defined as the accumulated angle change divided by the movement distance, differed between the two interfaces depending on the section (Figure 5 and Table 2). Because the measure accumulates angle change, it takes a high value when the drone makes sharp turns; a lower value therefore indicates a smoother trajectory. In the A and B sections, the Leap Motion produced a smoother trajectory on average, with a smaller accumulated angle change. Significant differences were observed in the X- and Z-axes in the A section and in the Z-axis in the B section. In contrast, in the C section, the Leap Motion produced a less smooth trajectory on average, with significant differences from the gamepad in the Y- and Z-axes. Across all sections, the overall average smoothness indicated a smoother trajectory for the Leap Motion than for the gamepad on every axis except the Y-axis, although none of these overall differences were significant. The Y-axis exception arose because two of the twenty participants using the Leap Motion made unnecessary Y-axis movements in the C section. These participants were not treated as outliers; their data were included in the results.
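The smoothness measure can be illustrated with a minimal sketch that sums the turning angle between consecutive displacement vectors of a 3D trajectory and divides the total by the path length. This is one assumed reading of the definition: the per-axis (X, Y, Z) variant reported above, any filtering of the motion-capture data, and the angle units used in the study are not specified in this excerpt, and the function names are hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in meters (assumed)


def _sub(b: Point, a: Point) -> Point:
    return (b[0] - a[0], b[1] - a[1], b[2] - a[2])


def turning_angle_deg(u: Point, v: Point) -> float:
    """Angle between two non-zero displacement vectors, in degrees."""
    dot = sum(ui * vi for ui, vi in zip(u, v))
    cos_theta = dot / (math.hypot(*u) * math.hypot(*v))
    # Clamp to [-1, 1] to guard against floating-point round-off before acos.
    cos_theta = max(-1.0, min(1.0, cos_theta))
    return math.degrees(math.acos(cos_theta))


def smoothness(points: Sequence[Point]) -> float:
    """Accumulated angle change (degrees) divided by path length (meters).

    Higher values mean sharper or more frequent turns, i.e., a less smooth path.
    """
    steps = [_sub(b, a) for a, b in zip(points, points[1:])]
    steps = [s for s in steps if math.hypot(*s) > 0.0]  # drop stationary samples
    distance = sum(math.hypot(*s) for s in steps)
    if distance == 0.0:
        return 0.0
    accumulated = sum(turning_angle_deg(u, v) for u, v in zip(steps, steps[1:]))
    return accumulated / distance


straight = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
corner = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0)]
print(smoothness(straight))  # 0.0
print(smoothness(corner))    # 45.0 (one 90-degree turn over 2 m)
```

Normalizing by distance lets sections of different lengths be compared, but it also means a long path with the same turns scores as smoother, which is worth keeping in mind when reading section-by-section differences.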

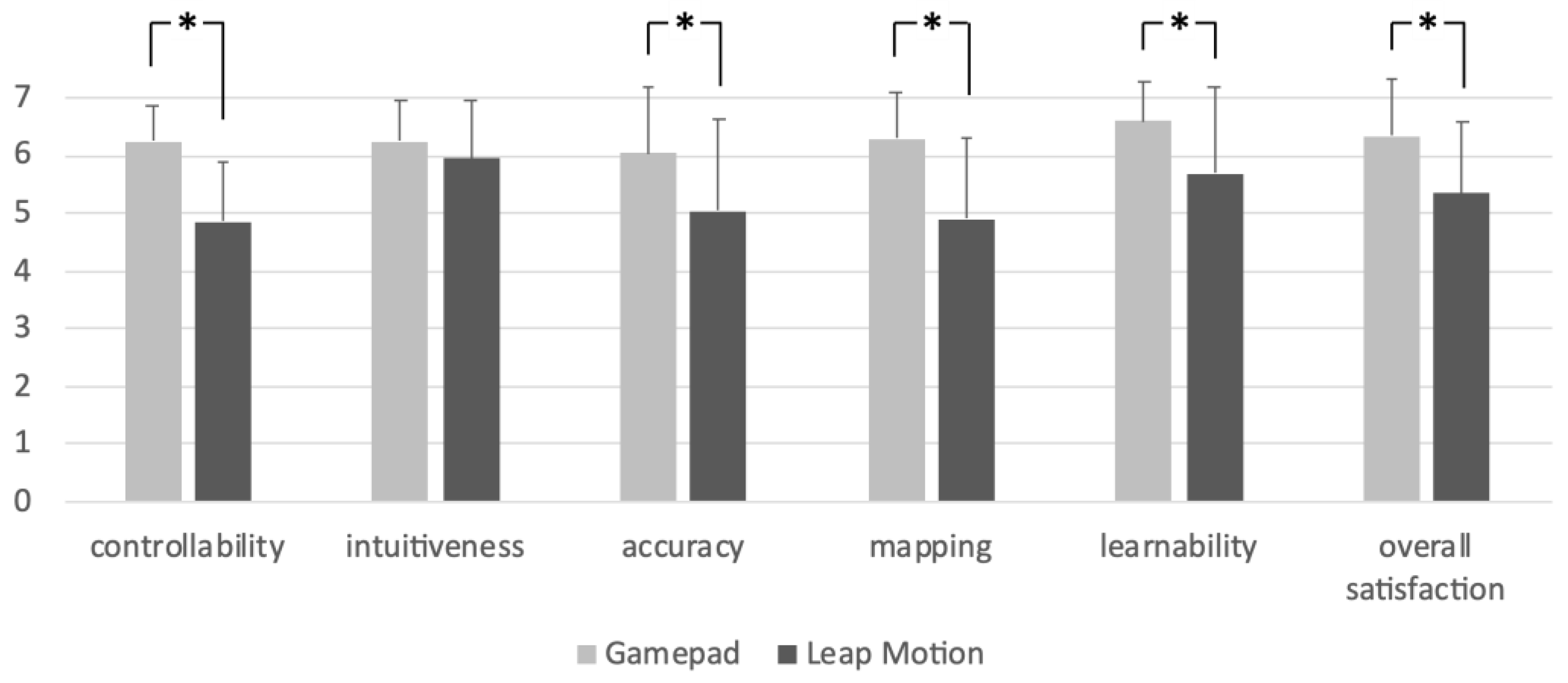

Lastly, in the subjective evaluation, the gamepad interface scored 5% to 29% higher on average than the Leap Motion (Figure 6 and Table 2). The differences were statistically significant at α = 0.05 for most usability measures (controllability, accuracy, mapping, and learnability) but not for intuitiveness.

4. Discussion

This study aimed to compare the performance of two interfaces, the 2D (gamepad) and the 3D (Leap Motion), in controlling a VTOL aircraft. The results suggest that the 3D control method, an intuitive interface for controlling an object in three-dimensional space, was superior in several critical aspects. First, by providing a more natural and ergonomic control experience, it can reduce the operator's cognitive fatigue and thereby decrease cognitive errors. It may also respond more quickly than the two-joystick interface, which highlights the ability of an intuitive interface to respond rapidly to changing circumstances, a capability that is particularly important for the safe operation of a VTOL aircraft. Second, the 3D method can improve the smoothness of the drone's movement, because the drone is operated by following natural human motion. Lastly, the 3D interface can provide a more immersive experience for the operator, offering a better visualization of the VTOL aircraft's movements in 3D space. Based on these findings, an intuitive 3D interface (e.g., Leap Motion) with a clear control-manipulation mapping would be not only a more effective and efficient solution but also a more intuitive and safer solution for controlling VTOL aircraft than 2D interfaces [6,13].

The results of this study provide insights into the advantages of using a 3D controller, such as the Leap Motion, for controlling VTOL aircraft in 3D space. The Leap Motion interface demonstrated several benefits in terms of mapping and control. By mapping the drone's movements to the user's natural directional gestures, the Leap Motion interface allows for more intuitive and precise control. This natural mapping resulted in smoother control and smaller angle changes during both the straight (A section) and up–down (B section) movements. Previous studies have also noted that mapping the drone's direction to the control inputs allows intuitive and precise control of the drone. Kim and Chang (2021) developed a handheld controller that the user holds and moves in three dimensions to control the drone, and found that it provided more natural control than the gamepad, resulting in fewer failures, such as collisions, in drone-control tasks [13]. Di Vincenzo et al. (2022) argued that using hand gestures enables faster and more accurate control of the drone in terms of eye–hand coordination [20]. A 3D controller that effectively maps the direction of manipulation to the movement of the drone enhances the overall user experience and facilitates more accurate control of the drone, which is particularly important in scenarios that require precise control and smooth movements. These advantages highlight the potential of 3D controllers to enhance the control capabilities of VTOL aircraft and to open up new applications in various industries and domains.

Using the Leap Motion to control a drone also has certain limitations compared with using the gamepad. One limitation is the slower speed of control with the Leap Motion, which can be attributed to the users' unfamiliarity with, and difficulty in, manipulating the drone's movements using hand gestures within a limited range in the air. This resulted in lower subjective usability scores, indicating challenges in user adaptation and proficiency with the Leap Motion interface. In particular, for intuitiveness, there was a discrepancy between the users' subjective opinions of the control method and the actual drone-control results: the better trajectory smoothness suggests that the Leap Motion interface was more intuitive for controlling the drone, whereas participants rated it as less intuitive in the subjective satisfaction ratings. Moreover, the performance of the Leap Motion was hindered in the zigzag movements (C section) by users' lack of proficiency, leading to suboptimal control characterized by sharp turns and unnecessary up–down movements. Participants also reported that the Leap Motion was not easy to learn and use, especially for novice users, as it lacks physical feedback mechanisms [6,13,20]. These limitations highlight the need for further exploration and for the development of effective, user-friendly interfaces to enhance the control of VTOL aircraft. It is crucial to optimize the speed, accuracy, and user familiarity of gesture-based drone control to improve overall performance and usability. To address these limitations, future research could explore physical devices that provide intuitive control of the drone in 3D space through handheld or manipulative actions in six directions. Different types of hand gestures should also be considered. In this study, the drone was controlled using the Leap Motion by extending the palm and moving it in six directions. However, the limited space for arm movement above the Leap Motion sometimes caused the hand to move beyond its sensing range, leading to control errors. Previous studies have explored alternative methods for drone control that do not require extensive arm movement, such as wrist rotations (e.g., abduction, adduction, flexion, extension, and rotation) or specific hand shapes (e.g., clenched fist, thumb-up, pointing, and V gesture) [3,17].
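The palm-gesture mapping and its sensing-range problem can be made concrete with a position-to-velocity sketch: the palm's offset from a neutral point above the sensor is converted into a per-axis speed command with a dead zone, and the drone is commanded to hover when no hand is detected or the palm leaves an assumed tracking volume. The neutral position, dead zone, full-scale offset, tracking radius, maximum speed, and axis convention are all hypothetical values, not the parameters used in this study, and the function works on a palm position already read from the sensor rather than calling any Leap Motion SDK function.

```python
import math
from typing import Optional, Tuple

# Assumed convention: (right, up, forward) offsets in millimeters.
Vec3 = Tuple[float, float, float]

NEUTRAL_MM: Vec3 = (0.0, 200.0, 0.0)  # hypothetical neutral palm position above the sensor
DEAD_ZONE_MM = 20.0                   # hypothetical: small offsets are ignored so the drone can hover
FULL_SCALE_MM = 100.0                 # hypothetical: offset at which the command saturates
TRACKING_RADIUS_MM = 250.0            # hypothetical: beyond this, tracking is treated as unreliable


def axis_command(offset_mm: float) -> float:
    """Map a 1D palm offset to a normalized command in [-1, 1] with a dead zone."""
    magnitude = abs(offset_mm)
    if magnitude <= DEAD_ZONE_MM:
        return 0.0
    scaled = (magnitude - DEAD_ZONE_MM) / (FULL_SCALE_MM - DEAD_ZONE_MM)
    return math.copysign(min(scaled, 1.0), offset_mm)


def palm_to_velocity(palm_mm: Optional[Vec3], max_speed_mps: float = 0.5) -> Vec3:
    """Return a (right, up, forward) velocity command in m/s; hover if the hand is lost."""
    if palm_mm is None:
        return (0.0, 0.0, 0.0)  # no hand detected: hover in place
    offsets = [p - n for p, n in zip(palm_mm, NEUTRAL_MM)]
    if math.hypot(*offsets) > TRACKING_RADIUS_MM:
        return (0.0, 0.0, 0.0)  # palm outside the assumed tracking radius: hover
    right, up, forward = (max_speed_mps * axis_command(o) for o in offsets)
    return (right, up, forward)


print(palm_to_velocity((60.0, 200.0, 0.0)))   # (0.25, 0.0, 0.0)
print(palm_to_velocity((300.0, 200.0, 0.0)))  # (0.0, 0.0, 0.0)
```

Falling back to hover, rather than holding the last command, is one conservative way to handle a hand drifting out of range; the dead zone serves a similar purpose for small, unintended hand tremors.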

This study is limited to analyzing the influence of eye–hand coordination (specifically, mapping) on the control of hovering objects by novice users. It does not compare various controllers or analyze hovering objects other than drones; thus, its results should not be hastily generalized. Furthermore, advanced control laws used in real-world drone operations were not considered. By focusing on simply moving the drone in the vertical, horizontal, and lateral directions, this study aimed to analyze the impact of eye–hand coordination on the smoothness and precision of hovering-object control. Therefore, factors other than the drone's directional control were held constant in the experiment to eliminate unexpected influences on the results. In addition, this study analyzed the effects of control methods only on participants without prior drone-operating experience, so that previous drone-control experience would not bias the results; participants familiar with drone control through various methods may show different results.

Future research should address the limitations of this study and further explore the best interfaces for controlling VTOL aircraft. One potential area of investigation is yaw rotation, which was excluded from this study because it makes the mapping between the direction of interface operation and the direction of the drone's movement unnatural. Yaw rotation could be included in the interface mapping to assess how it affects the operator's performance and experience. This could be achieved by combining hand gestures with body movements, such as rotating the head or body toward the drone's heading. Alternatively, a VR device could be used to offer users a first-person perspective, and wrist abduction/adduction could be naturally mapped to yaw rotation for controlling the drone [3,6,21]. Additionally, the comparison could be extended to include more devices and methods, such as touchscreen [22], hand-gesture [3,16,17,23,24], body-gesture [5], electromyography [21], gaze [20,25,26], brain-signal [1], and speech [16,24] interfaces. This would provide a more comprehensive understanding of the strengths and weaknesses of different interfaces for controlling VTOL aircraft. Moreover, as previous studies emphasize the importance of training for safe drone operation [27,28], future research needs to investigate learnability as well as eye–hand coordination.

The findings of this study have important implications for the design and development of intuitive interfaces for controlling various hovering objects. The superiority of the Leap Motion in reducing cognitive fatigue, providing better performance, enabling quicker responses in emergency situations, and offering a more immersive experience suggests that an intuitive interface could be useful in various domains. These domains could include the control of VTOL aircraft such as drones and helicopters, underwater vehicles, construction robots, robot arms in manufacturing settings, and spacecraft, as well as dangerous tasks such as bomb disposal. A precise mapping between the interface and the object's movement is critical for handling such tasks successfully and requires further exploration.