Enhancing Data Discretization for Smoother Drone Input Using GAN-Based IMU Data Augmentation

Abstract

1. Introduction

2. Materials and Methods

2.1. Description of Materials and Equipment

2.2. Experimental Methodology

- (1) Data preprocessing: The input data, consisting of real data samples, were preprocessed to ensure compatibility with the GAN model. Preprocessing included data normalization, feature extraction, and other necessary data transformations.

- (2) Training setup: The GAN model was initialized with hyperparameters such as the learning rate, batch size, and number of training iterations. These values were chosen based on prior knowledge and experimentation to achieve the best results.

- (3) Training loop: Training alternated between updating the discriminator and updating the generator. In each iteration, a batch of real data samples was randomly selected, and the generator produced a corresponding batch of synthetic samples. The discriminator was then trained on both batches to improve its ability to distinguish real from generated samples. Subsequently, the generator was updated based on the discriminator’s feedback so that its samples more closely resembled the real data.

- (4)

- (5)

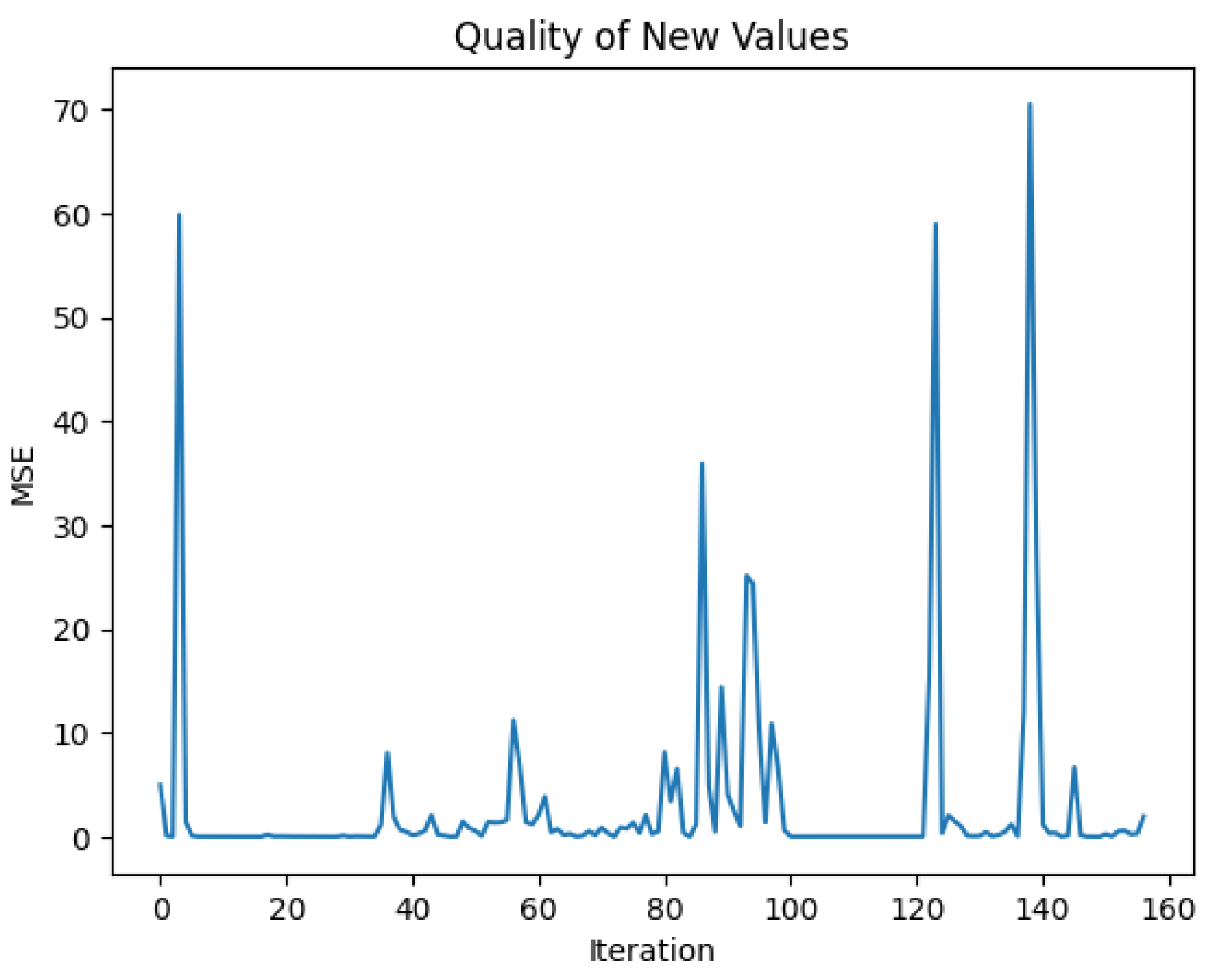

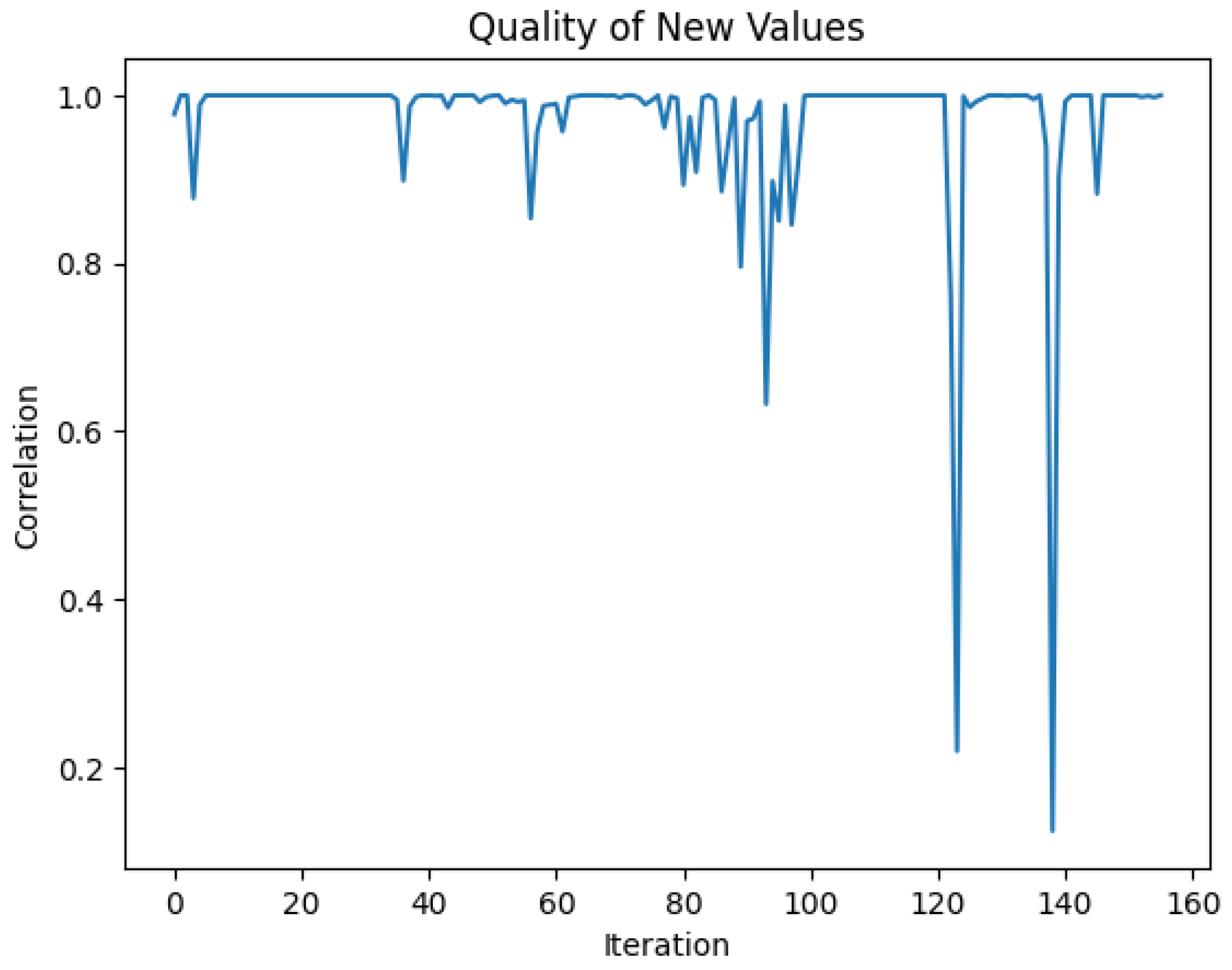

- Model evaluation: After training, the GAN model was evaluated by generating new samples with the trained generator and assessing their quality and similarity to the real data. Metrics such as the mean squared error, the structural similarity index, and other relevant measures were used to assess the model’s performance.
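The preprocessing and alternating-update steps described in this list can be illustrated with a deliberately small sketch. It is not the architecture used in the study: the generator `G(z) = a*z + b` and the discriminator `D(x) = sigmoid(w*x + c)` are hypothetical one-dimensional stand-ins with hand-written gradients, and the learning rate, batch size, and step count are illustrative values only.

```python
import math
import random


def standardize(samples):
    """Scale samples to zero mean and unit variance (one common normalization)."""
    n = len(samples)
    mean = sum(samples) / n
    std = math.sqrt(sum((s - mean) ** 2 for s in samples) / n) or 1.0
    return [(s - mean) / std for s in samples], mean, std


def sigmoid(t):
    """Logistic function written to avoid overflow for large |t|."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(real_data, steps=2000, batch_size=32, lr=0.01, seed=0):
    """Alternate discriminator and generator updates on 1-D data."""
    rng = random.Random(seed)
    a, b = 1.0, -1.0  # generator parameters (assumed toy model), offset on purpose
    w, c = 0.1, 0.0   # discriminator parameters (assumed toy model)
    for _ in range(steps):
        # Draw a random batch of real samples and a matching batch of fakes.
        real = [rng.choice(real_data) for _ in range(batch_size)]
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch_size)]
        fake = [a * z + b for z in noise]

        # Discriminator step: minimize -log D(real) - log(1 - D(fake)).
        gw = gc = 0.0
        for x in real:
            d = sigmoid(w * x + c)
            gw -= (1.0 - d) * x
            gc -= (1.0 - d)
        for x in fake:
            d = sigmoid(w * x + c)
            gw += d * x
            gc += d
        w -= lr * gw / batch_size
        c -= lr * gc / batch_size

        # Generator step: minimize -log D(G(z)) using the updated discriminator.
        ga = gb = 0.0
        for z in noise:
            d = sigmoid(w * (a * z + b) + c)
            dx = -(1.0 - d) * w  # gradient of the loss w.r.t. the fake sample
            ga += dx * z
            gb += dx
        a -= lr * ga / batch_size
        b -= lr * gb / batch_size
    return (a, b), (w, c)


if __name__ == "__main__":
    source = random.Random(42)
    raw = [source.gauss(3.0, 5.0) for _ in range(500)]  # synthetic stand-in data
    scaled, mean, std = standardize(raw)
    generator, discriminator = train_toy_gan(scaled)
    print("generator (a, b):", generator)
    print("discriminator (w, c):", discriminator)
```

A toy model of this kind is useful only for seeing the order of operations; such simple adversarial games often oscillate rather than converge, so the final parameters should not be read as a quality measure.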

3. Results

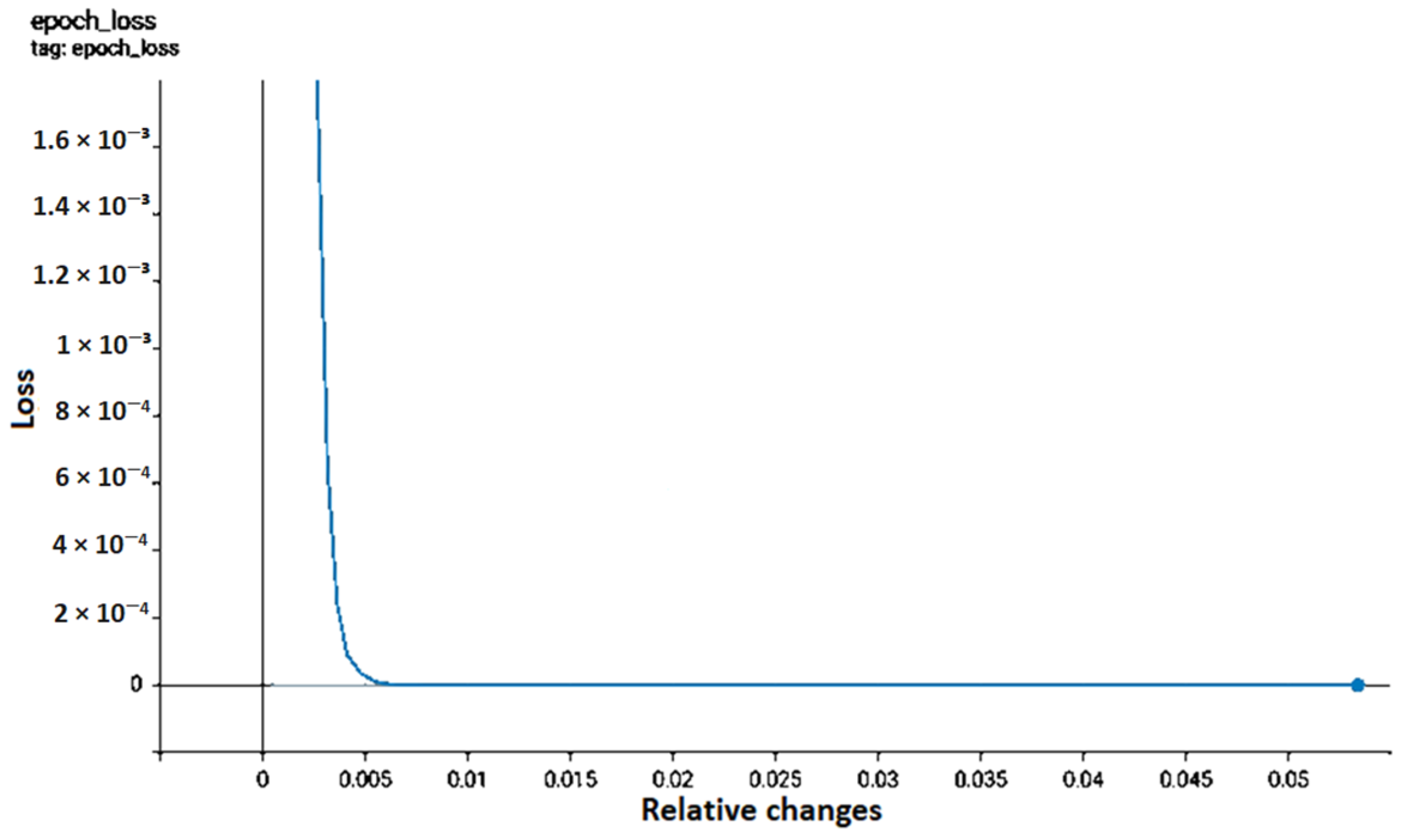

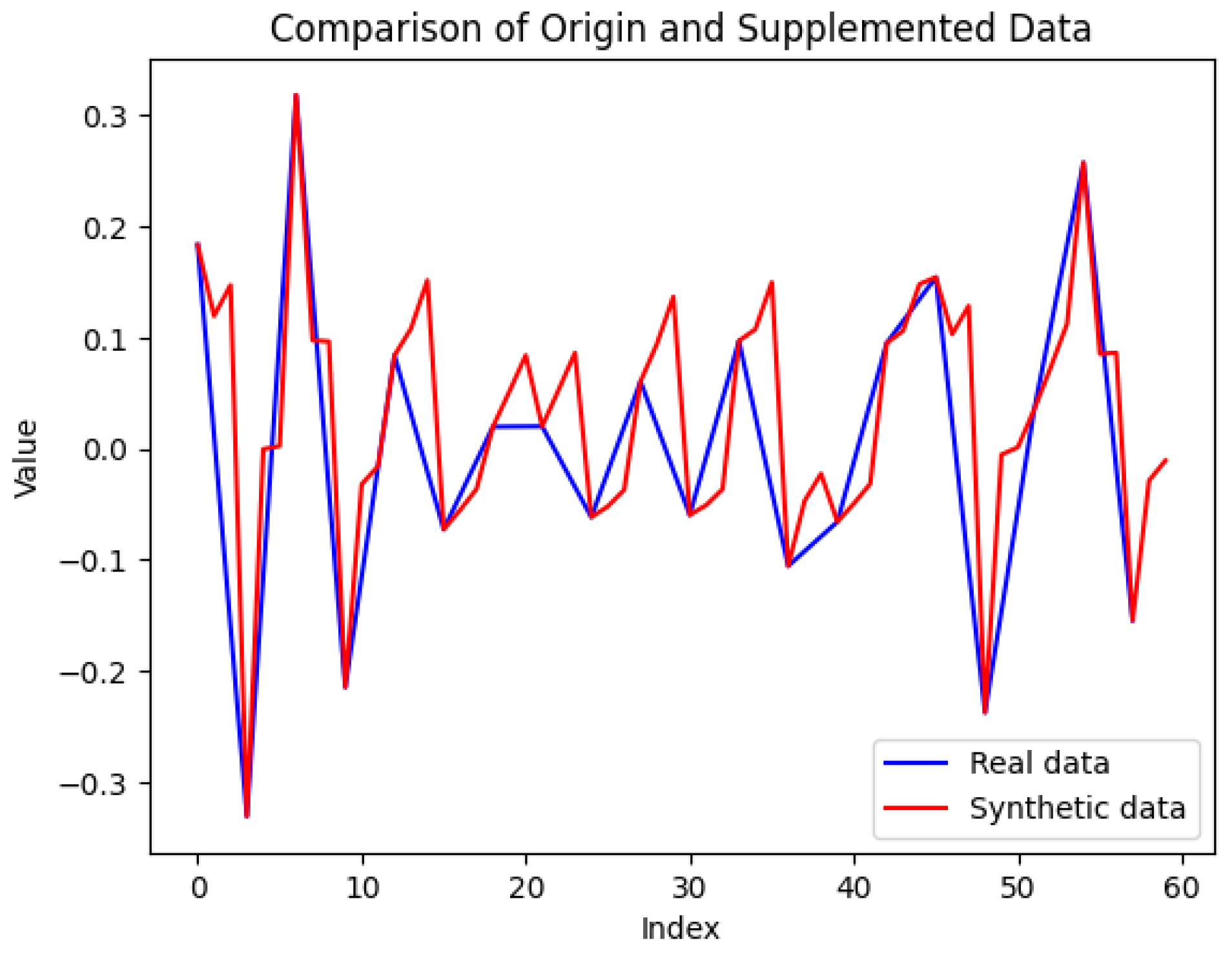

3.1. GAN-Based Generation of Synthetic IMU Data

3.2. Improvement in Data Discretization Process

Comparison of Results

- Root mean square deviation (RMSD): The RMSD is the square root of the mean squared difference between the discretized values obtained from the real and synthetic data. A lower RMSD indicates better agreement between the two datasets.

- Correlation coefficient: The correlation coefficient quantifies the linear relationship between the discretized values derived from real and synthetic data. A higher correlation coefficient indicates closer agreement in the discretization patterns.

- Information loss: Information loss was computed as the reduction in entropy between the original continuous data and the discretized data. A lower information loss signifies better preservation of the original data characteristics during discretization.
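The three comparison metrics above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the evaluation code used in the study: the uniform binning in `discretize` is a hypothetical discretizer, and the entropy of the continuous signal is approximated by a fine histogram (`fine_bins`), which is one possible estimator among several.

```python
import math
from collections import Counter


def discretize(values, lo, hi, n_bins):
    """Map each value to a uniform bin index in [0, n_bins - 1] (assumed scheme)."""
    width = (hi - lo) / n_bins
    return [min(max(int((v - lo) / width), 0), n_bins - 1) for v in values]


def rmsd(xs, ys):
    """Root mean square deviation between two equal-length sequences."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(xs, ys)) / len(xs))


def pearson(xs, ys):
    """Pearson correlation coefficient (undefined if either input is constant)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def entropy_bits(symbols):
    """Shannon entropy, in bits, of the empirical symbol distribution."""
    n = len(symbols)
    return -sum((k / n) * math.log2(k / n) for k in Counter(symbols).values())


def information_loss(values, lo, hi, fine_bins, coarse_bins):
    """Entropy drop from a fine histogram (proxy for the continuous data)
    to the coarser discretization actually used."""
    fine = entropy_bits(discretize(values, lo, hi, fine_bins))
    coarse = entropy_bits(discretize(values, lo, hi, coarse_bins))
    return fine - coarse


if __name__ == "__main__":
    real = [0.1, 0.9, 1.7, 2.4, 3.2, 3.9]
    synthetic = [0.2, 1.1, 1.6, 2.6, 3.0, 3.8]
    real_bins = discretize(real, 0.0, 4.0, 8)
    synth_bins = discretize(synthetic, 0.0, 4.0, 8)
    print("RMSD:", rmsd(real_bins, synth_bins))
    print("correlation:", pearson(real_bins, synth_bins))
    print("information loss (bits):", information_loss(real, 0.0, 4.0, 64, 8))
```

When `fine_bins` is a multiple of `coarse_bins`, each coarse bin is a union of fine bins, so the computed loss cannot be negative.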

3.3. Impact of Improved Data Discretization on Drone Operation

- Flight stability: The improved data discretization enhanced the stability of drone flights. Compared with the standard discretization approach, the drone showed fewer oscillations and fluctuations in roll, pitch, and yaw angles during different flight maneuvers. The improvement was most evident when the PID controller coefficients were deliberately adjusted to make the drone less stable: in such cases, the drone remained stable for 15% longer with the improved data discretization enabled.

- Control accuracy: Control accuracy refers to the precision of the drone’s response to control inputs. With the improved data discretization, the drone showed smaller deviations from the desired setpoints.

- Smoothness of drone movements: The overall smoothness of drone movements, including transitions between flight modes or maneuvers, was assessed. The improved data discretization led to smoother transitions, with more fluid and continuous trajectories and fewer jerky or abrupt movements.
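The text does not state how these three criteria were quantified. The sketch below shows one plausible set of measures, all of them assumptions rather than the study’s definitions: the standard deviation of an attitude angle as an oscillation measure, the mean absolute deviation from the setpoint as a control-accuracy measure, and the mean squared jerk (third finite difference of position) as a smoothness measure. All function names are hypothetical.

```python
import math


def oscillation_std(angles):
    """Standard deviation of an attitude angle trace; lower means steadier flight."""
    n = len(angles)
    mean = sum(angles) / n
    return math.sqrt(sum((a - mean) ** 2 for a in angles) / n)


def mean_abs_error(measured, setpoints):
    """Mean absolute deviation of the measured response from the setpoints."""
    return sum(abs(m - s) for m, s in zip(measured, setpoints)) / len(measured)


def mean_squared_jerk(positions, dt):
    """Mean squared third finite difference of position; lower means smoother motion."""
    jerks = [
        (positions[i + 3] - 3 * positions[i + 2] + 3 * positions[i + 1] - positions[i]) / dt ** 3
        for i in range(len(positions) - 3)
    ]
    return sum(j * j for j in jerks) / len(jerks)


if __name__ == "__main__":
    roll = [0.0, 1.5, -1.2, 0.8, -0.5, 0.2]   # illustrative roll angles, degrees
    target = [0.0] * len(roll)                # hover setpoint
    path = [0.0, 0.1, 0.4, 0.9, 1.7, 2.4]     # illustrative positions, metres
    print("oscillation:", oscillation_std(roll))
    print("tracking error:", mean_abs_error(roll, target))
    print("mean squared jerk:", mean_squared_jerk(path, dt=0.1))
```

Computing the same measures on flights with the standard and the improved discretization would give a like-for-like comparison of the two approaches.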

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Data | Mean | Variance |
|---|---|---|
| Real data | 3.3104 | 24.3055 |
| Synthetic | 3.2809 | 23.5582 |
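As a quick reading aid for the table above, the relative gap between the real and synthetic statistics can be computed directly from the reported values. The helper name `relative_difference` is hypothetical; the numbers are taken from the table.

```python
def relative_difference(reference, value):
    """Absolute difference expressed as a fraction of the reference value."""
    return abs(value - reference) / abs(reference)


# Values copied from the table of real versus synthetic statistics.
real = {"mean": 3.3104, "variance": 24.3055}
synthetic = {"mean": 3.2809, "variance": 23.5582}

gaps = {name: relative_difference(real[name], synthetic[name]) for name in real}

if __name__ == "__main__":
    for name, gap in gaps.items():
        print(f"{name}: {gap:.2%} relative difference")
```

With these values the synthetic mean differs from the real mean by about 0.9%, and the variance by about 3.1%.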

| Metric | Value |
|---|---|
| Mean correlation coefficient | 0.9716 |
| Min. correlation | 0.1245 |
| Max. correlation | 0.9999 |
| Mean RMSE | 3.1614 |
| Min. RMSE | 0.0012 |
| Max. RMSE | 70.4663 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Petrenko, D.; Kryvenchuk, Y.; Yakovyna, V. Enhancing Data Discretization for Smoother Drone Input Using GAN-Based IMU Data Augmentation. Drones 2023, 7, 463. https://doi.org/10.3390/drones7070463