1. Introduction

Pedestrian tracking and re-identification systems based on machine learning have emerged as a significant solution for various safety and security applications [1,2]. These systems use a mapping function that is trained to embed images into a compact space, such as the unit hypersphere in Euclidean space [3,4]. The primary objective of this embedding is to map images depicting the same person to nearby feature points and images depicting different people to distant feature points. In real-world scenarios, however, situational changes within a single scene, such as differences in pedestrian position, orientation, and occlusion, can degrade the effectiveness of the embedding. Robust pedestrian re-identification systems must therefore handle such variations. Additionally, the embedding should not rely on clothing appearance, since individuals may wear different clothing over periods spanning days or weeks.

Pedestrian re-identification is a challenging computer vision task whose goal is to recognize a pedestrian across multiple camera views. It has been a topic of intense research over the last decade because of its importance in applications such as surveillance and forensics [5,6,7]. Traditional approaches rely on hand-crafted features and metrics to match individuals across cameras [8,9]. With recent advances in deep learning, however, learning-based pedestrian re-identification systems have gained significant popularity and outperform these traditional methods. They use deep neural networks to learn discriminative feature representations from images and use these representations to match individuals across camera views. Despite considerable progress, pedestrian re-identification remains challenging, particularly in real-world settings, due to variations in lighting, pose, viewpoint, and occlusion.

Recent advances in deep learning have revolutionized the field of re-ID [10,11]. Deep learning-based approaches, particularly those based on Convolutional Neural Networks (CNNs), have demonstrated remarkable performance on public benchmarks. The core idea behind these approaches is to learn a discriminative embedding that maps images of the same pedestrian to nearby points in the embedding space and images of different pedestrians to distant points. The embedding is learned end-to-end by jointly optimizing a classification loss and a triplet loss; the latter requires an anchor image to be closer to an image of the same identity than to an image of a different identity by at least a margin, thereby shrinking intra-class distances relative to inter-class distances. However, deep learning-based approaches remain prone to overfitting and struggle to detect Out-Of-Distribution (OOD) data, that is, data that differ significantly from the data the model was trained on. Since detecting OOD data is crucial for the robustness of trained models in real-world scenarios, more robust deep learning-based pedestrian re-identification systems are needed.
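To make the triplet objective concrete, the following is a minimal NumPy sketch of a generic hinge triplet loss on L2-normalized embeddings. It is not the loss used by any specific cited work; the function names and the margin value of 0.2 are illustrative assumptions.

```python
import numpy as np


def l2_normalize(x: np.ndarray, eps: float = 1e-12) -> np.ndarray:
    """Project each row vector onto the unit hypersphere."""
    return x / np.maximum(np.linalg.norm(x, axis=-1, keepdims=True), eps)


def triplet_loss(anchor: np.ndarray, positive: np.ndarray, negative: np.ndarray,
                 margin: float = 0.2) -> float:
    """Mean hinge triplet loss on L2-normalized embeddings of shape (N, d).

    Row i of `anchor` and `positive` share an identity; row i of `negative`
    belongs to a different identity. The margin value is illustrative only.
    """
    a, p, n = (l2_normalize(v) for v in (anchor, positive, negative))
    d_ap = np.sum((a - p) ** 2, axis=1)  # squared distance to the same-ID sample
    d_an = np.sum((a - n) ** 2, axis=1)  # squared distance to the other-ID sample
    # Zero loss once the negative is farther than the positive by at least `margin`.
    return float(np.mean(np.maximum(d_ap - d_an + margin, 0.0)))
```

A well-separated triplet (positive close to the anchor, negative on the opposite side of the sphere) yields zero loss, whereas a swapped triplet is penalized by the full distance gap plus the margin.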

The performance of many machine learning-based tracking and re-identification applications is significantly influenced by the image acquisition system used, such as static cameras, and by the associated cost of data collection. Unmanned Aerial Vehicles (UAVs) have recently emerged as a viable alternative for monitoring public spaces, offering a low-cost means of data collection while covering broad and hard-to-reach regions [12,13,14,15]. Advances in UAV technology have greatly benefited multi-object tracking (MOT), particularly pedestrian tracking and re-identification, by offering a practical way to deal with occlusion, moving cameras, and difficult-to-reach locations. Compared to static cameras, UAVs are considerably more flexible, since their position and viewing direction can be adjusted in three-dimensional (3D) space.

In a previous study [16], we presented a solution to the problem of pedestrian tracking and re-identification from aerial devices. Our approach modeled each identity as a von Mises–Fisher (vMF) distribution, inspired by a methodology proposed in [17] for image classification and retrieval. Specifically, we learned a compact embedding for each identity on a unit sphere using a base CNN encoder.

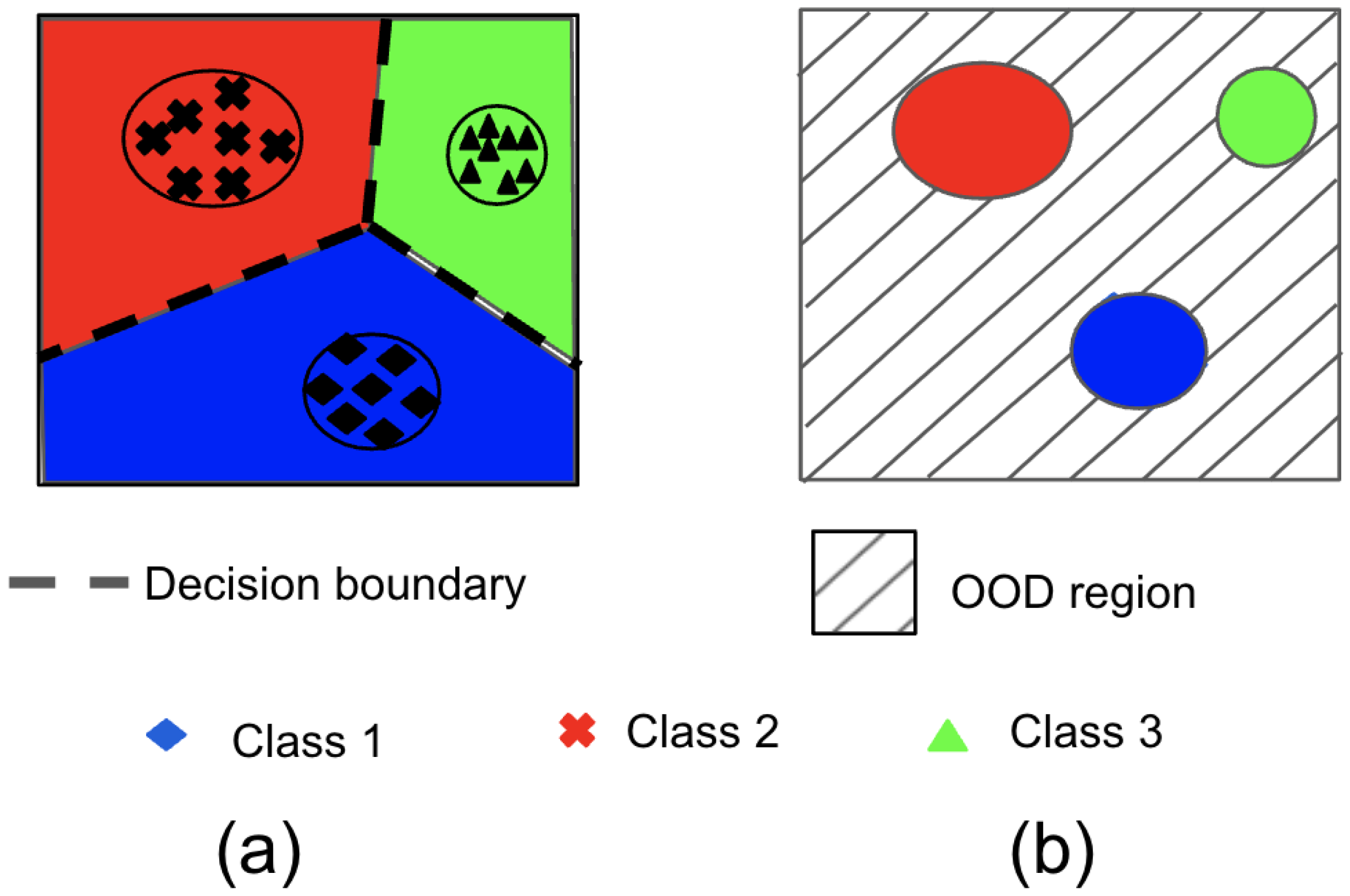

Figure 1 illustrates one of the biggest limitations of deep learning classification models: detecting Out-Of-Distribution data. Because the embeddings are learned only from In-Distribution (ID) data, they may fail to represent, and therefore to detect, data that differ significantly from the training data. Consequently, deep learning models can struggle to identify and handle novel or anomalous inputs, which is a significant challenge in many real-world applications.

In an open-world environment, a deployed model is highly likely to encounter new pedestrians that it has not learned to recognize; these fall into the OOD category. The model is also expected to encounter objects other than humans, which are likewise OOD data points. The OOD data points can thus be classified into two types of shifts: non-semantic shifts, such as new pedestrians, and semantic shifts, such as objects that differ from humans. The definition of semantic shifts is drawn from [18]. Therefore, the detection of OOD data is crucial for ensuring the robustness of trained models in real-world scenarios.

One possible approach to OOD detection is to train a deep learning classifier to distinguish between ID and OOD data using real-world OOD data points. However, obtaining or generating sufficient real-world OOD data points in the high-dimensional pixel space is challenging and often intractable. To address this issue, a recent study [19] proposed a framework called Virtual Outlier Synthesis (VOS), which synthesizes virtual outliers in the embedding space in an online manner. VOS relies on the compact embedding of samples from the same class: it models each class with a class-conditional multivariate Gaussian distribution and draws virtual outliers from its low-likelihood regions. This reliance on compact class embeddings is consistent with the objective of the vMF method.
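The outlier synthesis step can be sketched as follows, assuming class-conditional Gaussians with a shared (tied) covariance estimated from ID embeddings, and keeping the lowest-likelihood candidates as virtual outliers. The function names, the small ridge term, and the candidate and keep counts are illustrative assumptions rather than the exact VOS implementation.

```python
import numpy as np


def fit_class_gaussians(feats: np.ndarray, labels: np.ndarray, num_classes: int):
    """Class means and one shared (tied) covariance estimated from embeddings (N, d)."""
    d = feats.shape[1]
    means = np.stack([feats[labels == k].mean(axis=0) for k in range(num_classes)])
    centered = feats - means[labels]                   # subtract each sample's class mean
    cov = centered.T @ centered / len(feats) + 1e-4 * np.eye(d)  # small ridge for stability
    return means, cov


def sample_virtual_outliers(mean: np.ndarray, cov: np.ndarray, n_candidates: int = 10_000,
                            n_keep: int = 10, rng=None) -> np.ndarray:
    """Draw candidates around one class mean and keep those with the lowest Gaussian likelihood."""
    rng = np.random.default_rng() if rng is None else rng
    cand = rng.multivariate_normal(mean, cov, size=n_candidates)
    diff = cand - mean
    maha = np.einsum("ij,jk,ik->i", diff, np.linalg.inv(cov), diff)  # squared Mahalanobis distance
    # For a fixed Gaussian, the lowest log-density means the largest Mahalanobis distance.
    idx = np.argsort(maha)[-n_keep:]
    return cand[idx]
```

Because the kept samples lie on the fringe of each identity's cluster, they act as "near" outliers that a novelty head can learn to separate from genuine ID embeddings.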

Recognizing pedestrians and detecting OOD objects are both important for building robust and efficient surveillance systems. The combination of the vMF method and the VOS framework is therefore a promising way to improve both pedestrian re-identification and OOD detection. Building upon our previous research, we propose an integrated end-to-end learning framework that uses the vMF method to model each ID and VOS to synthesize virtual outliers, enabling the simultaneous recognition of individuals and detection of OOD objects. The vMF method provides a compact embedding for pedestrian recognition, while the VOS framework synthesizes virtual outliers for effective OOD detection. With this framework, we aim to contribute to robust and efficient surveillance systems by addressing challenges faced by machine learning-based person re-identification systems, particularly when UAVs are used for data collection.

The present paper makes the following key contributions:

A novel end-to-end framework for re-identifying pedestrians and detecting OOD instances from aerial devices.

The first method, to the best of our knowledge, that leverages online virtual outlier synthesis to address OOD detection in pedestrian re-identification.

The structure of this paper is as follows.

Section 2 provides an overview of background and related work.

Section 3 reviews the preliminaries related to the vMF method and VOS, providing a basic introduction to directional statistics along with a review of the VOS framework.

Section 4 describes the dataset used as a case study.

Section 5 presents our online pedestrian re-identification and OOD detection framework.

Section 6 presents the experimental results.

Finally, Section 8 concludes with a summary of our contributions and potential future work.

2. Related Work

2.1. Pedestrian Re-Identification

Research on pedestrian re-identification spans several dimensions, ranging from feature-based [20] to metric-based [21] approaches and from hand-crafted features to deeply learned features [22,23]. In this paper, we focus on three recent and relevant sub-areas of pedestrian re-identification.

Person re-identification is commonly formulated as a set-matching problem between two pedestrian sets, namely the probe and gallery sets. In the closed-world setting, every person is assumed to appear in both sets and all pedestrian identities are known beforehand, so the task reduces to finding one-to-one matching pairs. The open-world setting relaxes this assumption: the identities of pedestrians in the probe set are not necessarily known in advance and may be absent from the gallery, which adds an additional layer of complexity. In this work, we focus on the open-world person re-identification problem, where the gallery set is assumed to be known.

The generalized-view re-identification problem involves learning discriminative features from two different views obtained by two distinct, stationary cameras [24,25]. However, collecting, annotating, and matching data from two separate cameras can be expensive in practical scenarios.

In recent years, there has been growing interest in pedestrian re-identification from drones, leading to the development of new benchmark datasets [14,26]. Drones offer a novel tool for data acquisition, particularly for video surveillance and analysis. They present new opportunities and challenges for pedestrian detection, tracking, and re-identification, and help overcome some of the limitations of static cameras.

2.2. Out-Of-Distribution Detection

The Out-Of-Distribution (OOD) problem may be treated within a classification setting, where $\mathcal{D}_{\text{in}} = \{(\mathbf{x}_i, y_i)\}_{i=1}^{N}$ denotes the ID data, with $\mathbf{x}_i \in \mathcal{X}$ and $y_i \in \mathcal{Y} = \{1, \dots, C\}$ for $C$ classes. The data $\mathcal{D}_{\text{in}}$ are drawn from a distribution $P_{\text{in}}(\mathbf{x}, y)$. Many classification models, including deep neural networks, are trained on such datasets to predict class probabilities. When the model is deployed in the open world, however, it encounters at inference time data $\mathcal{D}_{\text{out}}$ drawn from a different distribution $P_{\text{out}}$, where $P_{\text{out}} \neq P_{\text{in}}$. A direct approach would be to sample training examples from $P_{\text{out}}$; however, sampling from the high-dimensional pixel space is complex and impractical.

The disparity between $P_{\text{in}}$ and $P_{\text{out}}$ can be classified into two primary categories, namely semantic and non-semantic shifts. A semantic shift arises when a novel class appears in $\mathcal{D}_{\text{out}}$, whereas a non-semantic shift arises when objects from a class present in $\mathcal{D}_{\text{in}}$ appear differently from those observed during training. The latter type is akin to an anomaly detection setting. To function effectively in production, the model must detect both types of shift.

In recent years, many studies have addressed the detection of OOD samples in a classification setting. These studies commonly use a scoring function $G(\mathbf{x})$ that assigns high scores to In-Distribution (ID) data points and low scores to OOD samples; thresholding this score yields a binary ID/OOD decision. The scoring function can be learned using energy models, linear transformations of deep neural network outputs, or a combination of both techniques.
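One widely used scoring function, and the one VOS builds on, is based on the free energy of the classifier logits, $E(\mathbf{x}) = -T \log \sum_{k} \exp(f_k(\mathbf{x})/T)$, where lower energy indicates ID-like inputs. The minimal sketch below uses the negative energy as the score; the temperature $T = 1$ and the threshold value are illustrative assumptions, and in practice the threshold is tuned experimentally.

```python
import numpy as np


def energy(logits: np.ndarray, T: float = 1.0) -> np.ndarray:
    """Free energy E(x) = -T * logsumexp(f(x) / T) per row; lower energy suggests ID data."""
    z = logits / T
    m = z.max(axis=1, keepdims=True)  # subtract the row maximum for numerical stability
    return -T * (m.squeeze(1) + np.log(np.exp(z - m).sum(axis=1)))


def is_in_distribution(logits: np.ndarray, threshold: float, T: float = 1.0) -> np.ndarray:
    """Binary decision: ID when the negative energy score is at least `threshold`."""
    return -energy(logits, T) >= threshold
```

A confident logit vector such as `[10, 0, 0]` has energy close to -10 and passes a threshold of 5, while a flat vector such as `[0.1, 0, 0]` has energy close to -1.13 and is rejected as OOD.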

2.3. Anomaly Detection

Anomaly detection refers to the identification of unusual events, items, or observations that deviate significantly from expected behaviors or patterns. In computer vision, outliers, noise, and novel objects are detected as anomalies relative to the distribution of known objects. This problem is often encountered in industrial applications where images of normal samples are easy to acquire, but the expected variations of defects are difficult and costly to specify. Such scenarios are often framed as Out-Of-Distribution (OOD) detection problems, in which a model must distinguish samples drawn from the training data distribution from those lying outside its support. Existing work on anomaly detection is predominantly based on learning compact visual representations in a latent space using auto-encoders and GANs. Unsupervised methods built on pre-trained CNNs, such as PatchCore, SPADE, and PaDiM, are widely used in industrial applications.

Pedestrian re-identification can be framed as an anomaly detection problem, where the task is to identify pedestrians from the known training data distribution and to detect pedestrians that do not belong to this distribution as outliers or anomalies. These anomalous pedestrians could arise due to a variety of reasons such as novel viewpoints, changes in illumination, or occlusions.

2.4. Deep Metric Learning

In numerous machine learning applications, such as multi-object tracking (MOT), it is crucial to establish a measure of similarity among data objects. Metric learning aims to learn a mapping function that quantifies this similarity. Specifically, the objective of metric learning is to minimize the distance between data points belonging to the same category while maximizing the distance between data points from different categories.

In recent years, deep learning has demonstrated remarkable performance in various machine learning tasks, including image classification, image embedding, and MOT. The superior representational power of deep learning in extracting highly abstract non-linear features has given rise to a new research area known as Deep Metric Learning (DML) [17,27,28,29]. DML aims to learn a mapping function that quantifies the similarity between data points, minimizing the distance between data points from the same category and maximizing the distance between data points from different categories.

Multiple Object Tracking (MOT) has been enhanced by the success of Deep Metric Learning (DML), which involves training a neural network to extract features and learn a similarity measure between object instance patches.

4. Dataset Description

Pedestrian tracking and re-identification have attracted significant interest owing to their wide-ranging applications, including surveillance systems and traffic control. Nevertheless, tracking and re-identifying individuals remains challenging because of the limitations of the acquisition system, particularly when stationary cameras are used. UAVs have recently emerged as a promising alternative for monitoring public areas, as they offer an inexpensive means of data collection while effectively covering large and remote areas that may otherwise be inaccessible.

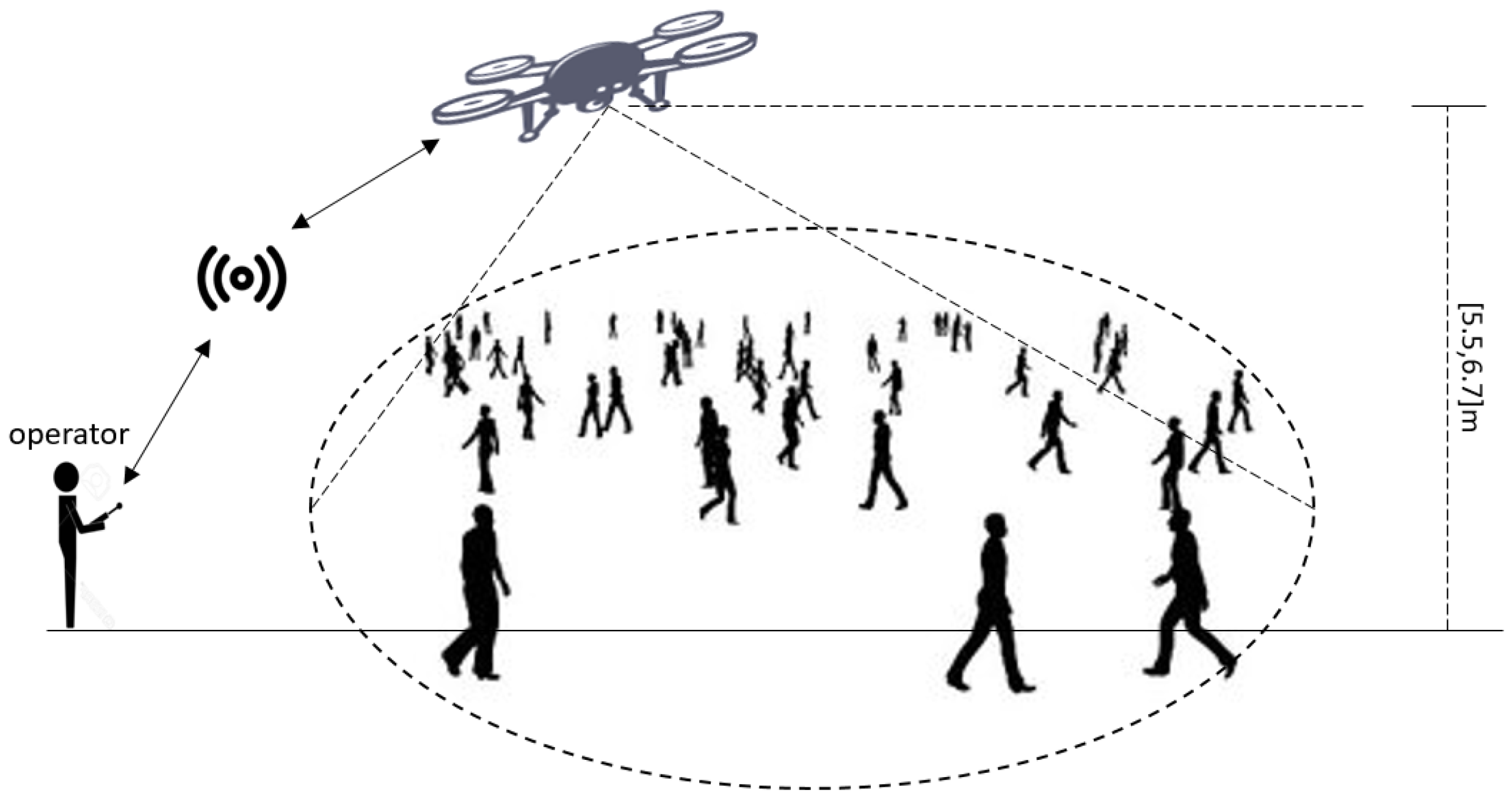

The P-DESTRE dataset, developed by researchers from the University of Beira Interior (Portugal) and the JSS Science and Technology University (India), is a fully annotated dataset for detecting, tracking, re-identifying, and searching pedestrians from aerial devices [26]. The data were collected using “DJI Phantom 4” drones, piloted by humans at altitudes ranging from 5.5 to 6.7 m, that recorded walking volunteers. Figure 2 illustrates how the data were acquired, and Table 1 provides a statistical summary. The dataset comprises 75 videos recorded at 30 frames per second (FPS), containing a total of 318,745 annotated instances of 269 different IDs. The image resolution is

pixels.

The primary distinguishing feature of the P-DESTRE dataset is the pedestrian search challenge, where data are collected over long periods with constant ID labels across observations. This characteristic distinguishes it from comparable datasets, making it an excellent case study for training and evaluating frameworks for pedestrian tracking and re-identification from aerial devices. In this context, re-identification techniques cannot rely on clothing appearance-based features, which is a key property that distinguishes search from the less challenging re-identification problem.

The P-DESTRE dataset was used for all experiments and provides a unique and valuable resource for research on pedestrian tracking and re-identification from aerial devices. In future work, we plan to investigate additional aerial datasets.

5. Methodology

In our prior publication [16], we introduced a method founded on directional statistics that learns a compact representation for each identity (ID) on the unit hypersphere. Each ID was modeled as a von Mises–Fisher (vMF) distribution, giving a collection of vMF distributions parameterized by $\{(\boldsymbol{\mu}_k, \kappa)\}_{k=1}^{C}$, where $\|\boldsymbol{\mu}_k\|_2 = 1$ is the mean direction of the $k$-th ID and $\kappa$ is the concentration parameter. The learning procedure for this method is detailed in Section 3. The aim of this method was to track and re-identify a set of pre-defined pedestrians. In an open-world scenario, such as a security environment, encountering new pedestrians that are not present in the dataset is highly probable. The model must therefore be able to detect such pedestrians as Out-Of-Distribution (OOD).

The scoring functions proposed for discriminating between ID and OOD data rely chiefly on compact representations of the ID objects, and they encourage the CNN to embed each class within a compact cluster.

This observation motivates exploring the potential synergy between the vMF-based model and OOD scoring functions. Inspired by the two works presented in the previous section, we propose a framework that merges both approaches.

5.1. Out-Of-Distribution Pedestrian Based on von Mises–Fisher Distribution

5.1.1. Learning

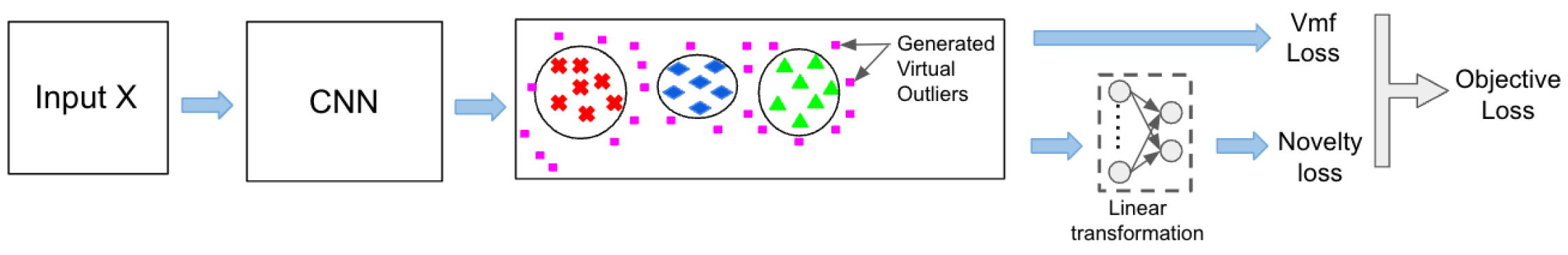

We propose an end-to-end learning framework for pedestrian re-identification and novelty detection that is as simple to train as a conventional model with a softmax loss. The framework consists of three main components: a CNN-based visual feature representation, the VOS method for detecting Out-Of-Distribution (OOD) pedestrians, and the generation of hard virtual outliers by sampling from the embedding space. We posit that sampling from the embedding space can not only aid in detecting novelty but also help build a more robust model. We generate hard virtual outliers on the assumption that the score function will help learn a more compact embedding for each identity while simultaneously detecting OOD pedestrians as non-semantic shifts.

Figure 3 illustrates the proposed framework during the training phase.

Once the hard virtual outliers are generated, two parallel heads are computed. The first head computes the von Mises–Fisher (vMF) loss using the embeddings of the identification. The second head computes the novelty loss over the binary output of the linear transformation. This loss aims to distinguish between virtual outliers and identification embeddings. The objective loss is then computed by taking a weighted sum of the two losses.

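In the VOS framework, the uncertainty (novelty) loss is defined as a binary logistic (sigmoid) loss:

$$\mathcal{L}_{\text{uncertainty}} = \mathbb{E}_{\mathbf{v} \sim \mathcal{V}}\left[-\log \frac{1}{1 + \exp\left(-\phi\left(E(\mathbf{v}; \theta); \omega\right)\right)}\right] + \mathbb{E}_{\mathbf{x} \sim \mathcal{D}_{\text{in}}}\left[-\log \frac{\exp\left(-\phi\left(E(\mathbf{x}; \theta); \omega\right)\right)}{1 + \exp\left(-\phi\left(E(\mathbf{x}; \theta); \omega\right)\right)}\right],$$

where $\mathcal{V}$ is the set of synthesized virtual outliers, $\phi$ is the classification head for novelty detection with weights $\omega$, $E$ is the energy score function, and $\theta$ denotes the weights of the CNN base encoder.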

To train this framework end-to-end, we combine the two losses into a single training objective:

$$\mathcal{L} = \mathcal{L}_{\text{vMF}} + \beta \, \mathcal{L}_{\text{uncertainty}},$$

where $\beta$ is the weight of the uncertainty loss. Algorithm 2 summarizes the steps of the learning framework.

| Algorithm 2 The learning algorithm. |
| --- |
| Input: ID data , queue size for Gaussian density estimation, weight for uncertainty regularization, and . |
| Output: pedestrian re-identification parameterized by , and novelty detector parameterized by |
| While train do: |
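The weighted objective can be sketched numerically as below. This is a minimal sketch under stated assumptions: the novelty head $\phi$ is replaced by a hypothetical scalar affine map `w * E + b` (VOS uses a small learnable network), the energies are given as precomputed arrays, and the default `beta` is an illustrative value, not the one used in our experiments.

```python
import numpy as np


def softplus(x: np.ndarray) -> np.ndarray:
    """Numerically stable log(1 + exp(x))."""
    return np.logaddexp(0.0, x)


def uncertainty_loss(energy_id: np.ndarray, energy_outlier: np.ndarray,
                     w: float = 1.0, b: float = 0.0) -> float:
    """Binary logistic loss on phi(E) = w * E + b (a hypothetical affine stand-in for the head).

    Virtual outliers are pushed towards sigmoid(phi(E)) -> 1 and ID embeddings
    towards sigmoid(phi(E)) -> 0, matching the convention that ID data has low energy.
    """
    phi_id = w * energy_id + b
    phi_out = w * energy_outlier + b
    # -log sigmoid(phi) = softplus(-phi) and -log(1 - sigmoid(phi)) = softplus(phi)
    return float(np.mean(softplus(-phi_out)) + np.mean(softplus(phi_id)))


def total_loss(vmf_loss: float, unc_loss: float, beta: float = 0.1) -> float:
    """Weighted objective: vMF loss plus beta times the uncertainty loss."""
    return vmf_loss + beta * unc_loss
```

When ID energies are low and outlier energies high, the uncertainty term is close to zero; swapping them makes it large, which is the signal that shapes the embedding during training.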

5.1.2. Inference

The inference process for a given pedestrian input involves two main steps. The first step uses the OOD scoring function, $G(\mathbf{x})$, to determine whether the pedestrian is OOD. This decision is made by comparing the score to a threshold chosen according to the experimental settings.

Once a pedestrian input is classified as ID, the framework uses the vMF model in the second step to re-identify the pedestrian. Re-identification measures the similarity between the input embedding on the unit sphere and the learned mean direction $\boldsymbol{\mu}_k$ of each pedestrian ID, computed as the cosine similarity between the two. The input pedestrian is then assigned to the ID whose mean direction has the highest cosine similarity with its embedding.
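The two-step inference can be sketched as follows: reject inputs whose negative energy falls below a threshold, then assign the remaining ones to the nearest mean direction by cosine similarity. The label `-1` for rejected inputs, the function names, and the use of temperature 1 are hypothetical choices made for this illustration.

```python
import numpy as np

OOD = -1  # hypothetical label used here for rejected (OOD) inputs


def neg_energy(logits: np.ndarray) -> np.ndarray:
    """-E(x) = logsumexp over the per-identity logits (temperature 1)."""
    m = logits.max(axis=1, keepdims=True)
    return (m + np.log(np.exp(logits - m).sum(axis=1, keepdims=True))).squeeze(1)


def reidentify(embeddings: np.ndarray, mean_dirs: np.ndarray, logits: np.ndarray,
               threshold: float) -> np.ndarray:
    """Two-step inference: OOD rejection by thresholding -E(x), then nearest mean direction."""
    z = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    mu = mean_dirs / np.linalg.norm(mean_dirs, axis=1, keepdims=True)
    cos_sim = z @ mu.T                    # (N, C) cosine similarities to each mean direction
    ids = np.argmax(cos_sim, axis=1)      # most similar identity for every input
    return np.where(neg_energy(logits) >= threshold, ids, OOD)
```

Computing the identity for every input and masking afterwards keeps the code vectorized; a deployed system could skip the similarity search for rejected inputs.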

6. Experiments

In this section, we summarize the experiments conducted to assess the performance of the proposed framework. All experiments followed the same procedure: the framework was trained on the training set of the In-Distribution (ID) dataset, denoted as $\mathcal{D}_{\text{in}}^{\text{train}}$, and evaluated on the union of the ID test set, $\mathcal{D}_{\text{in}}^{\text{test}}$, and the Out-Of-Distribution (OOD) dataset, $\mathcal{D}_{\text{out}}$. The ID and OOD datasets must not share any person identities.

The ID and OOD datasets can be set up in two distinct ways. First, two separate datasets with no common person identities can be selected as the ID and OOD datasets, respectively. Second, a single dataset can be divided into ID and OOD subsets by splitting the identities of each of its training, validation, and testing sets with a predetermined ratio.

During both the training and validation phases, the framework has no access to the OOD dataset. Model performance was monitored on the ID validation set and the online-generated virtual outliers, which were used to select the model checkpoint and tune the framework's hyper-parameters. During the testing phase, we used both the test set of $\mathcal{D}_{\text{in}}$ and $\mathcal{D}_{\text{out}}$ to evaluate the model's long-term re-identification and OOD detection capabilities.

6.1. Two Different Dataset Settings

6.1.1. ID Dataset

The P-DESTRE dataset (http://p-destre.di.ubi.pt/download.html, accessed on 5 March 2023) was used as the ID data in the pedestrian re-identification experiments. A thorough description of the P-DESTRE dataset can be found in Section 4. For the experiments, the data were randomly divided into 5 folds, each consisting of a 50% learning set, a 10% gallery set, and a 40% query set. Evaluating over several random splits gives a fairer picture of performance than a single split and shows how much the results vary across data partitions, while the models are always tested on unseen data. Detailed information on this split is provided at http://p-destre.di.ubi.pt/pedestrian_detection_splits.zip (accessed on 5 March 2023).

6.1.2. OOD Dataset

As the OOD dataset, we used CUHK03-NP, a widely used pedestrian re-identification benchmark containing 14,097 images of 1467 identities [22] (https://github.com/zhunzhong07/person-re-ranking/blob/master/CUHK03-NP/README.md, accessed on 5 March 2023). All splits were used in the evaluation as $\mathcal{D}_{\text{out}}$. The dataset was collected from two camera views at the Chinese University of Hong Kong, and its 1467 identities are captured in a multi-shot manner. It has become a popular choice for evaluating person re-identification algorithms because of its scale and its challenging conditions, such as occlusions and viewpoint changes.

Table 2 summarises the dataset properties.

6.1.3. Training Details

From each image, the bounding boxes are cropped and scaled to patches of dimension

pixels. For preprocessing, the input patches are normalized using the mean and standard deviation computed on the ImageNet dataset. The feature extractor architecture consists of two parts: a base model and a header. As the base model, we used Wide ResNet-50 (WRN) [34], with a header consisting of two fully connected pooling layers (FPL) of sizes

neurons. The feature extractor was trained for 50 epochs with a batch size of 64, and the embedding space has 128 dimensions. We used the Adam optimizer, with a learning rate starting at

and decreasing by a factor of

every 25 training epochs. We set the concentration parameter of the learning algorithm, $\kappa$, to 15, as this value produced the best results in our experiments. We update the mean directions after every epoch.
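The text does not state how the mean directions are re-estimated after each epoch. A natural choice, and the maximum-likelihood estimate of a vMF mean direction, is the normalized sum of the unit-norm embeddings of each identity; the sketch below assumes this rule, and the function name is hypothetical.

```python
import numpy as np


def update_mean_directions(embeddings: np.ndarray, labels: np.ndarray, num_ids: int) -> np.ndarray:
    """Re-estimate each identity's mean direction as the normalized sum of its unit-norm embeddings."""
    z = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    sums = np.zeros((num_ids, z.shape[1]))
    np.add.at(sums, labels, z)                 # unbuffered per-identity sum of unit vectors
    norms = np.linalg.norm(sums, axis=1, keepdims=True)
    return sums / np.maximum(norms, 1e-12)     # identities without samples stay at zero
```

Normalizing each embedding before summing means every sample votes equally for its identity's direction, regardless of the raw feature magnitude.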

For the VOS-related hyper-parameters, we used 500 samples per identity to estimate the class-conditional Gaussians. We set . We sampled 1000 virtual outliers from the embedding space. We also set . The linear transformation consists of two layers of neurons; the first layer has as many nodes as there are identities, so that the energy score can be computed per identity as designed in VOS.

6.1.4. Two Datasets Settings

To evaluate the framework's ability to detect Out-Of-Distribution (OOD) samples, we used the Area Under the Precision/Recall Curve (AUC-PR). We chose this metric for two reasons. First, it is more informative than ROC-based metrics on imbalanced data, and the ratio of ID to OOD samples is expected to be imbalanced in an open-world scenario. Second, it focuses on performance on the positive class, which in our case is the ID class. The results in Table 3 summarize the performance of the pedestrian tracking and re-identification framework in terms of AUC-PR, using P-DESTRE as the ID dataset and CUHK03-NP as the OOD dataset. With a Wide Residual Network (WRN) backbone, the framework achieved an AUC-PR of 63.10% ± 1.64% (mean ± standard deviation). This suggests that the framework detects OOD samples reasonably well; a comparison with existing OOD detection methods would help put this result into perspective.
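For reference, AUC-PR is often estimated by average precision (AP), the mean of the precision values at the rank of each positive sample. The sketch below computes AP with ID as the positive class and a higher score meaning "more ID-like" (for example, the negative energy). The paper does not state which estimator was used; AP is one common choice and can differ slightly from a trapezoidal estimate of the area.

```python
import numpy as np


def average_precision(scores, is_id) -> float:
    """Average precision (a step-wise AUC-PR estimate) with ID as the positive class.

    scores: higher means more ID-like; is_id: boolean ground-truth labels.
    Ties in `scores` are broken by sort order, which is a simplification.
    """
    order = np.argsort(-np.asarray(scores, dtype=float), kind="stable")
    hits = np.asarray(is_id, dtype=bool)[order]   # labels ranked from most to least ID-like
    tp = np.cumsum(hits)
    precision_at_k = tp / np.arange(1, len(hits) + 1)
    return float(precision_at_k[hits].sum() / max(hits.sum(), 1))
```

For scores `[0.9, 0.8, 0.3, 0.1]` with labels `[ID, OOD, ID, OOD]`, the precision at the two ID ranks is 1 and 2/3, giving an AP of 5/6.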

6.2. One Dataset Setting

Another way to evaluate the framework is to use a single pedestrian dataset divided into ID and OOD subsets by identity. Detecting OOD data points is expected to be easier in the two-dataset setting than in the one-dataset setting, because differences between the two image acquisition setups, such as camera type, viewing angle, and lighting, can make the ID and OOD embeddings easier to separate. Although detecting OOD data from a different setup is important, it is equally important to test the model on ID and OOD data from the same acquisition setup, which we believe is more likely to occur in an open-world environment. Moreover, detecting non-semantic shifts (new pedestrians) within the same setup is more challenging, since the model has to rely on features such as face and body characteristics rather than clothing appearance.

Because the P-DESTRE dataset is randomly divided into 5 folds, we divided each fold into two datasets, ID and OOD, based on identity. The division ratio is for ID and OOD, respectively. Note that this division is performed separately for each fold.
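A per-fold identity split of this kind can be sketched as below. Since the exact ratio is not recoverable from the text, it is exposed as a parameter (`id_ratio`); the function name and the fixed seed are hypothetical choices for reproducibility.

```python
import numpy as np


def split_identities(identity_ids: np.ndarray, id_ratio: float, seed: int = 0):
    """Randomly partition the unique identities of one fold into disjoint ID and OOD sets."""
    ids = np.unique(identity_ids)                 # one entry per identity, regardless of image count
    rng = np.random.default_rng(seed)
    ids = rng.permutation(ids)
    n_id = int(round(id_ratio * len(ids)))
    return set(ids[:n_id].tolist()), set(ids[n_id:].tolist())
```

Splitting on unique identities rather than on images guarantees that no person appears in both the ID and OOD subsets, which is the requirement stated for the evaluation protocol.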

The training details are the same as in the two-dataset setting, except that we lowered the value of to . This is because distinguishing ID from OOD data in this setting is more challenging and requires harder virtual outliers to learn a better score function, $G(\mathbf{x})$.

We evaluated the model in the same way as in the two-dataset setting. Table 4 shows the performance of the framework with the WRN backbone on P-DESTRE in the one-dataset setting, measured by the Area Under the Precision-Recall Curve (AUC-PR) and reported as mean ± standard deviation over multiple runs. The framework achieves an AUC-PR of 55.19% ± 3.02%, which indicates moderate performance in identifying instances of the positive (ID) class, with some variance between runs. This is lower than the 63.10% ± 1.64% obtained in the two-dataset setting, consistent with the one-dataset setting being the harder of the two.

6.3. Long-Term Pedestrian Re-Identification

The effectiveness of our re-identification method can be evaluated using two methods. Firstly, we calculate the nearest mean direction of the IDs using Equation

. Secondly, we assess top-N recall (Rank-N accuracy) and mean average precision (mAP), the metrics commonly used in the field. Table 5 compares the ArcFace with COSAM method against the proposed vMF identifier, with and without the VOS framework, in terms of mAP, Rank-1 accuracy, Rank-20 accuracy, and Mean Direction accuracy. The vMF identifier outperforms ArcFace with COSAM on all reported metrics: it achieves an mAP of 40.85% ± 3.42% versus 34.90% ± 6.43%, and Rank-1 and Rank-20 accuracies of 63.81% ± 4.50% and 88.61% ± 8.50%, respectively, versus 49.88% ± 8.01% and 70.10% ± 11.25%. It also reaches a Mean Direction accuracy of 64.45% ± 3.90%, outperforming ArcFace with COSAM on this metric as well.

These results suggest that the vMF-based feature extractor learns robust features that help recognize a person rather than their clothing appearance. Furthermore, integrating the vMF method with the VOS framework leads to even better re-identification performance, as the combination pushes the embedding of each identity to be more compact and more distinct from other identities and from Out-Of-Distribution samples.
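The Rank-N and mAP metrics can be computed from a query-to-gallery distance matrix as in the generic sketch below. Benchmark-specific protocol details (for example, filtering same-camera gallery entries) are not modeled, queries without any true match in the gallery are skipped, and the function name is hypothetical.

```python
import numpy as np


def rank_k_and_map(dist: np.ndarray, query_ids: np.ndarray, gallery_ids: np.ndarray,
                   ks=(1, 20)):
    """CMC Rank-k accuracy and mAP from a (num_query, num_gallery) distance matrix."""
    order = np.argsort(dist, axis=1)                      # gallery indices, nearest first
    matches = gallery_ids[order] == query_ids[:, None]    # (Q, G) boolean, sorted by distance
    matches = matches[matches.any(axis=1)]                # keep queries with at least one true match
    first_hit = matches.argmax(axis=1)                    # rank index of the first correct match
    cmc = {k: float(np.mean(first_hit < k)) for k in ks}
    aps = []
    for row in matches:
        tp = np.cumsum(row)
        precision = tp / np.arange(1, len(row) + 1)
        aps.append(precision[row].sum() / row.sum())      # average precision for this query
    return cmc, float(np.mean(aps))
```

Rank-k only checks whether the first correct match appears within the top k, whereas mAP also rewards ranking every correct gallery image of that identity early, which is why the two metrics can diverge.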

6.4. Analysis

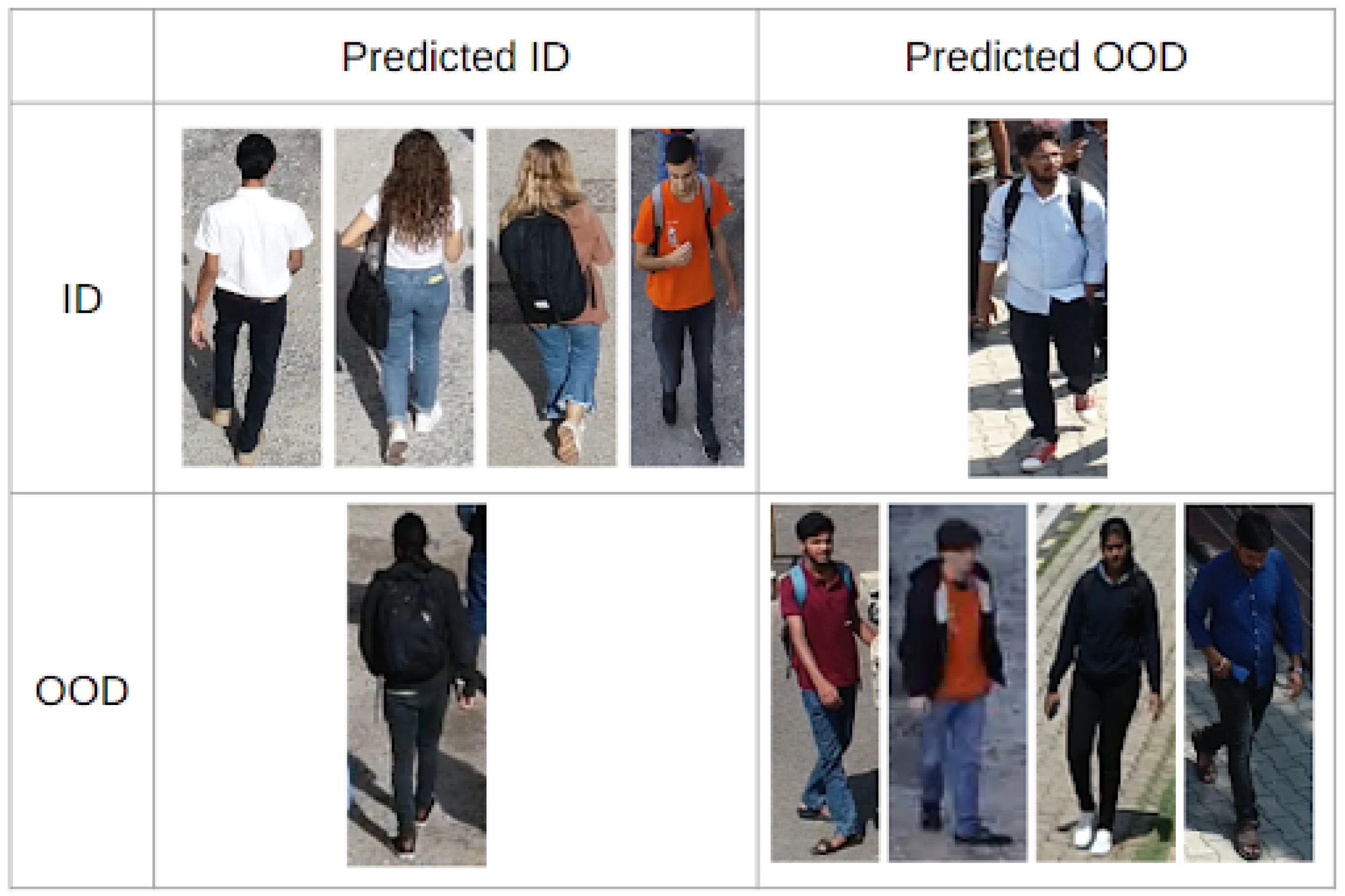

Figure 4 presents a binary confusion matrix of ID and OOD instances, comparing the model's predictions with the ground truth. Examining the wrong predictions more closely, we observed that these instances were frequently mapped close to the decision boundary of the learned energy-based score.

Among OOD instances wrongly predicted as ID, we noted that images showing an individual from the back were often predicted as ID. Conversely, when the same individual was captured from the front, the model more often correctly predicted the image as OOD.

In the instance where ID was predicted as OOD, our analysis suggested that this was a limitation of the trained model. Further improvements in the model are needed to better distinguish between similar-looking identities.