Method of 3D Voxel Prescription Map Construction in Digital Orchard Management Based on LiDAR-RTK Boarded on a UGV

Abstract

1. Introduction

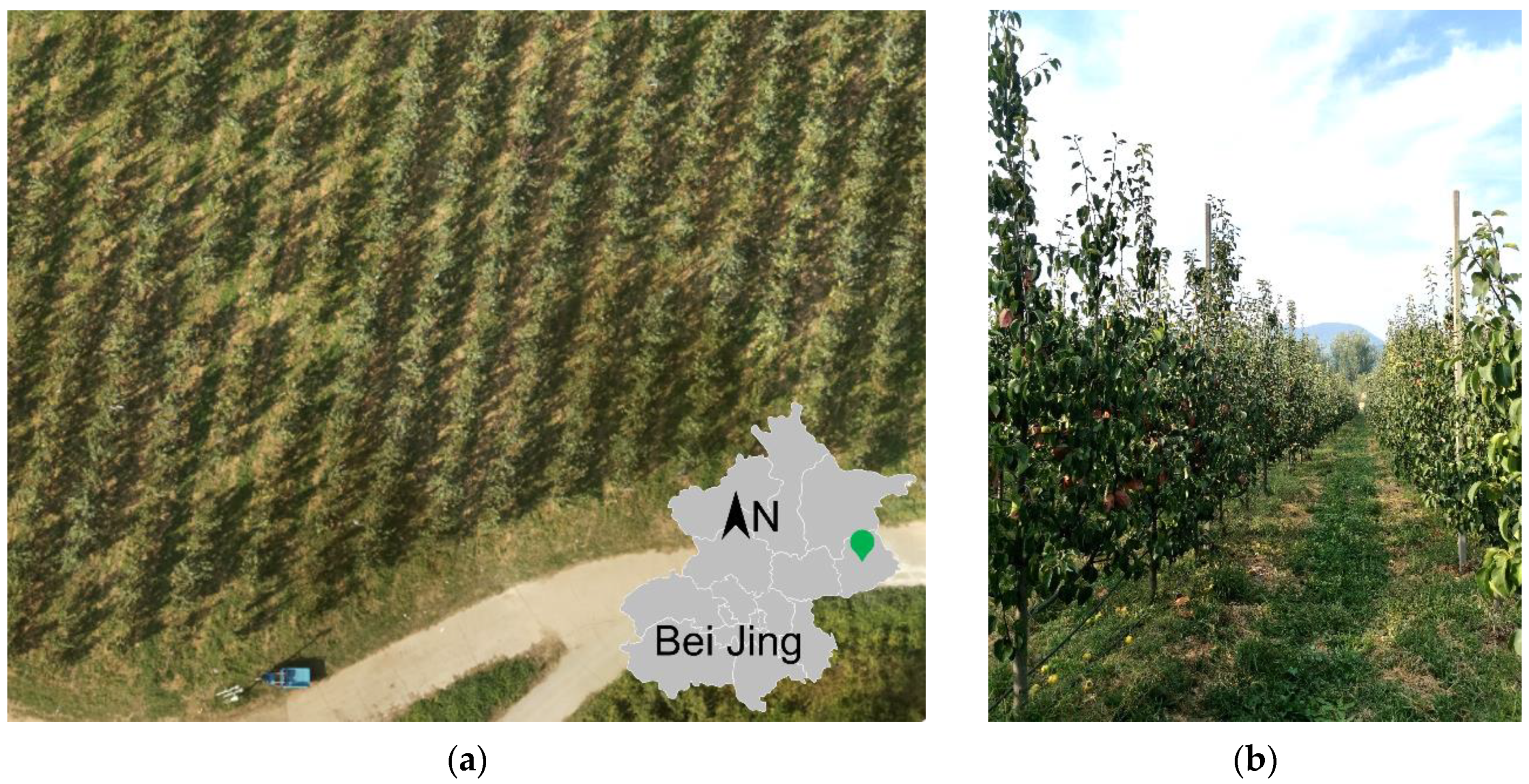

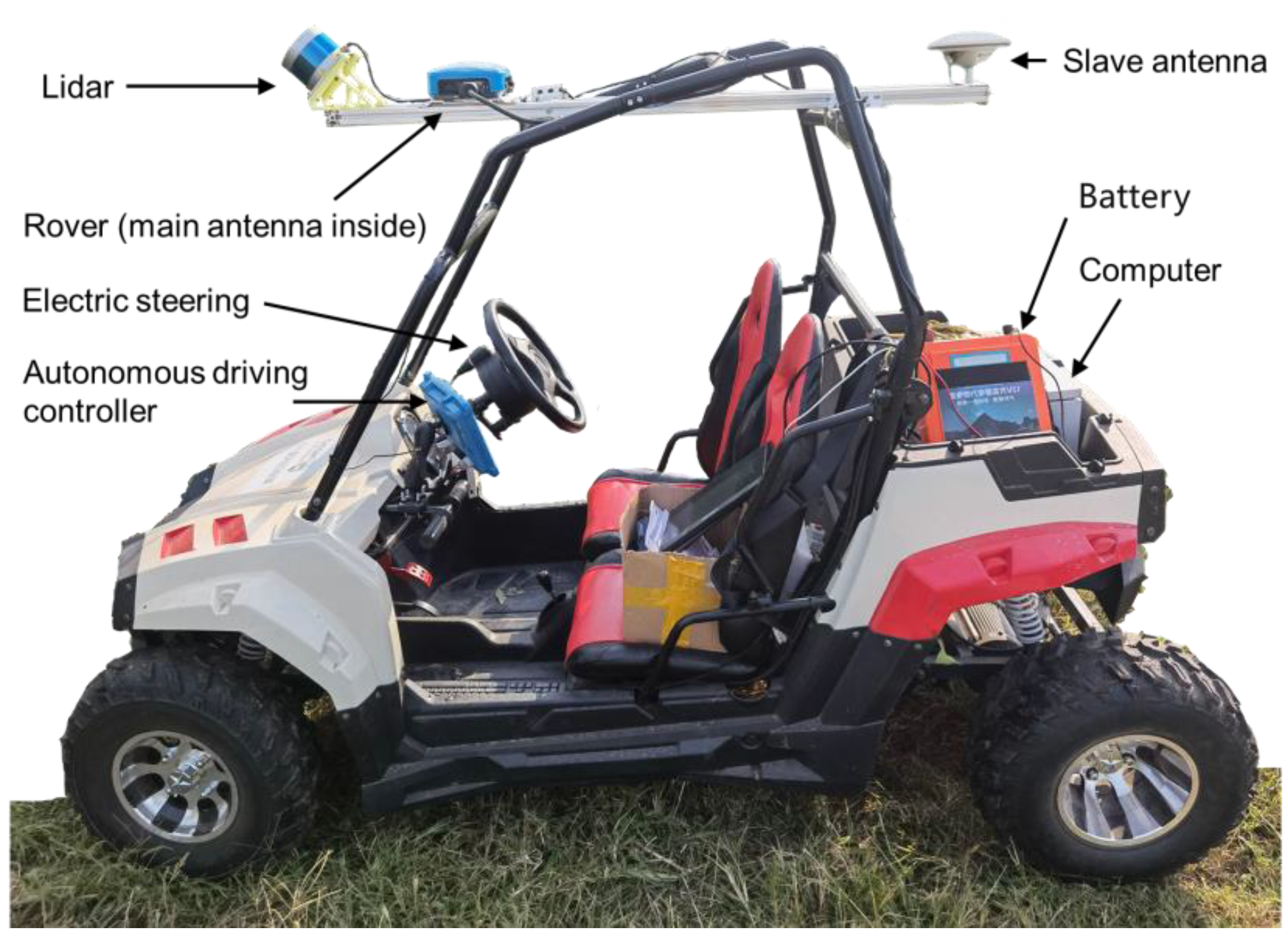

2. Materials and Methods

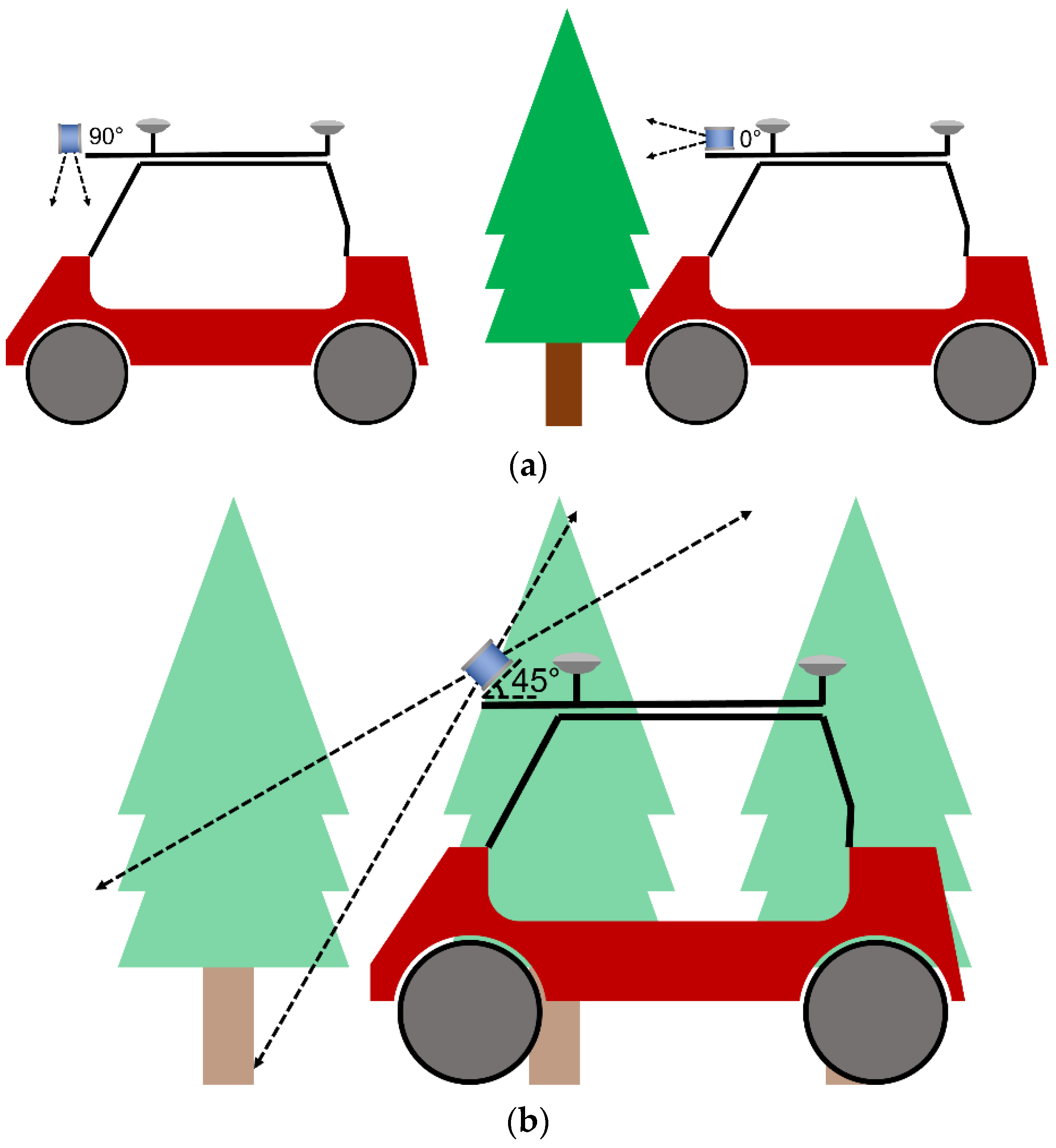

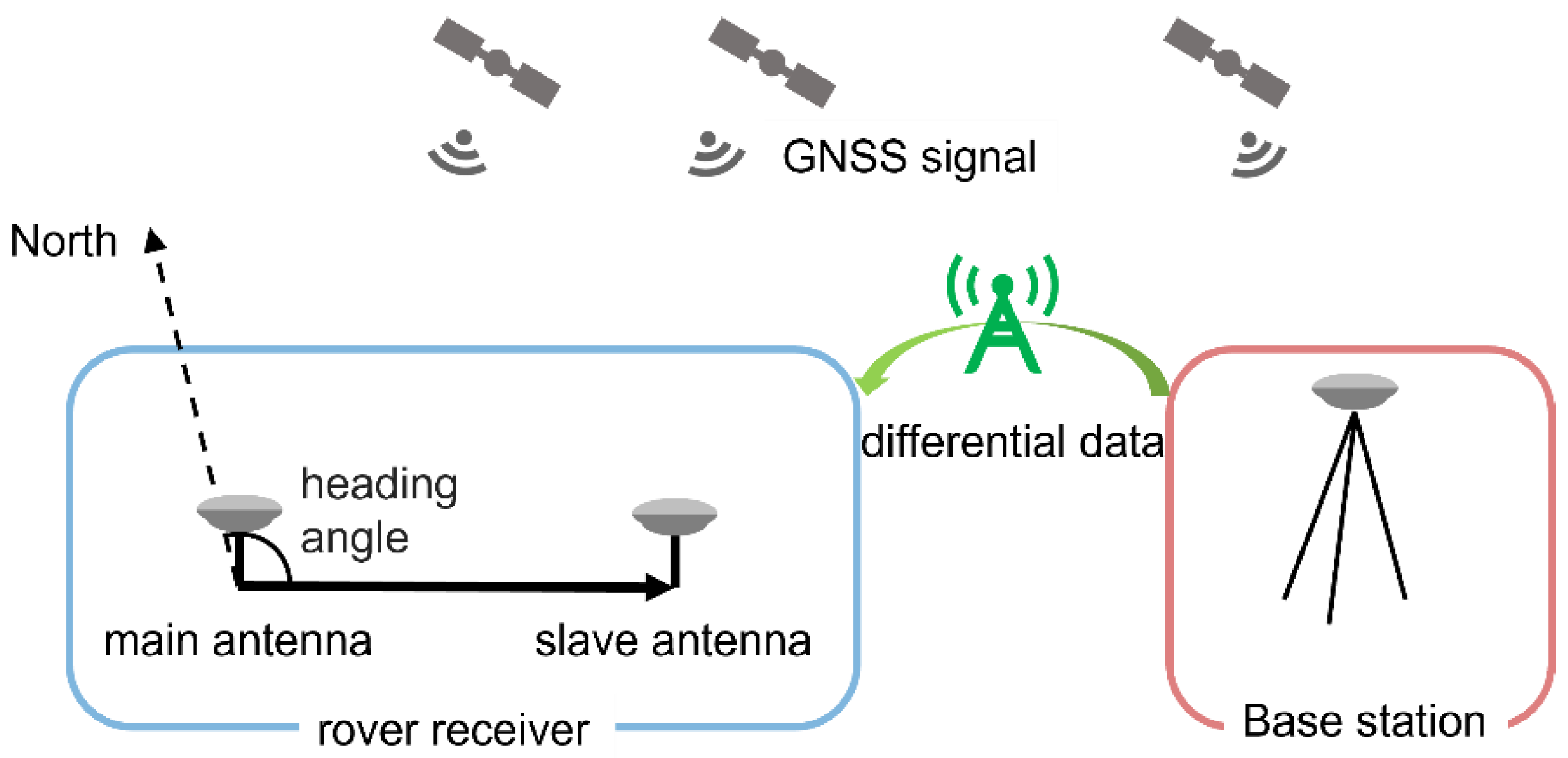

2.1. Design of the LiDAR-RTK Fusion Data Acquisition System

2.2. Voxel Prescription Map Generation

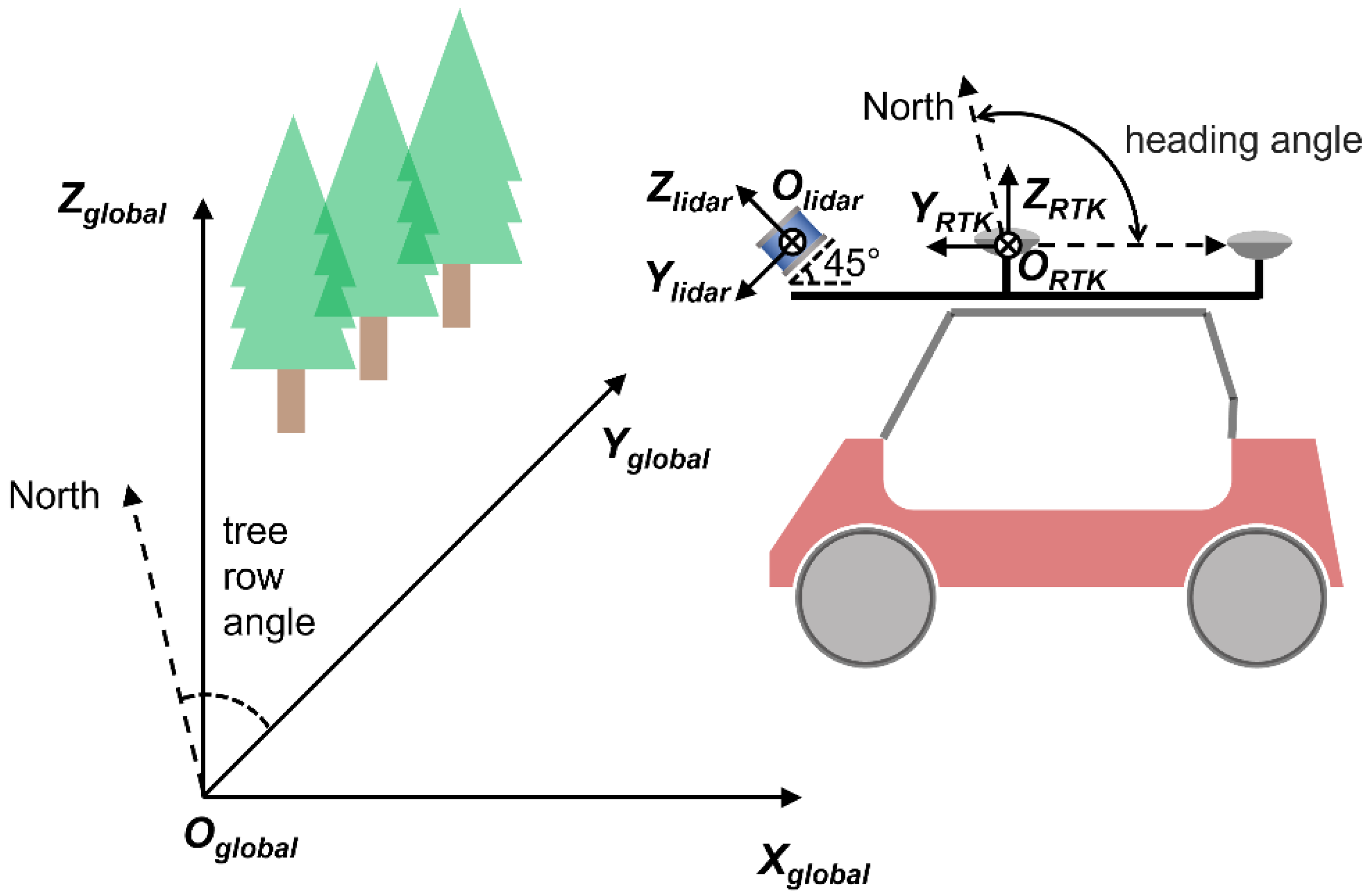

2.2.1. Establish the Coordinate System

2.2.2. Point Cloud Pre-Processing

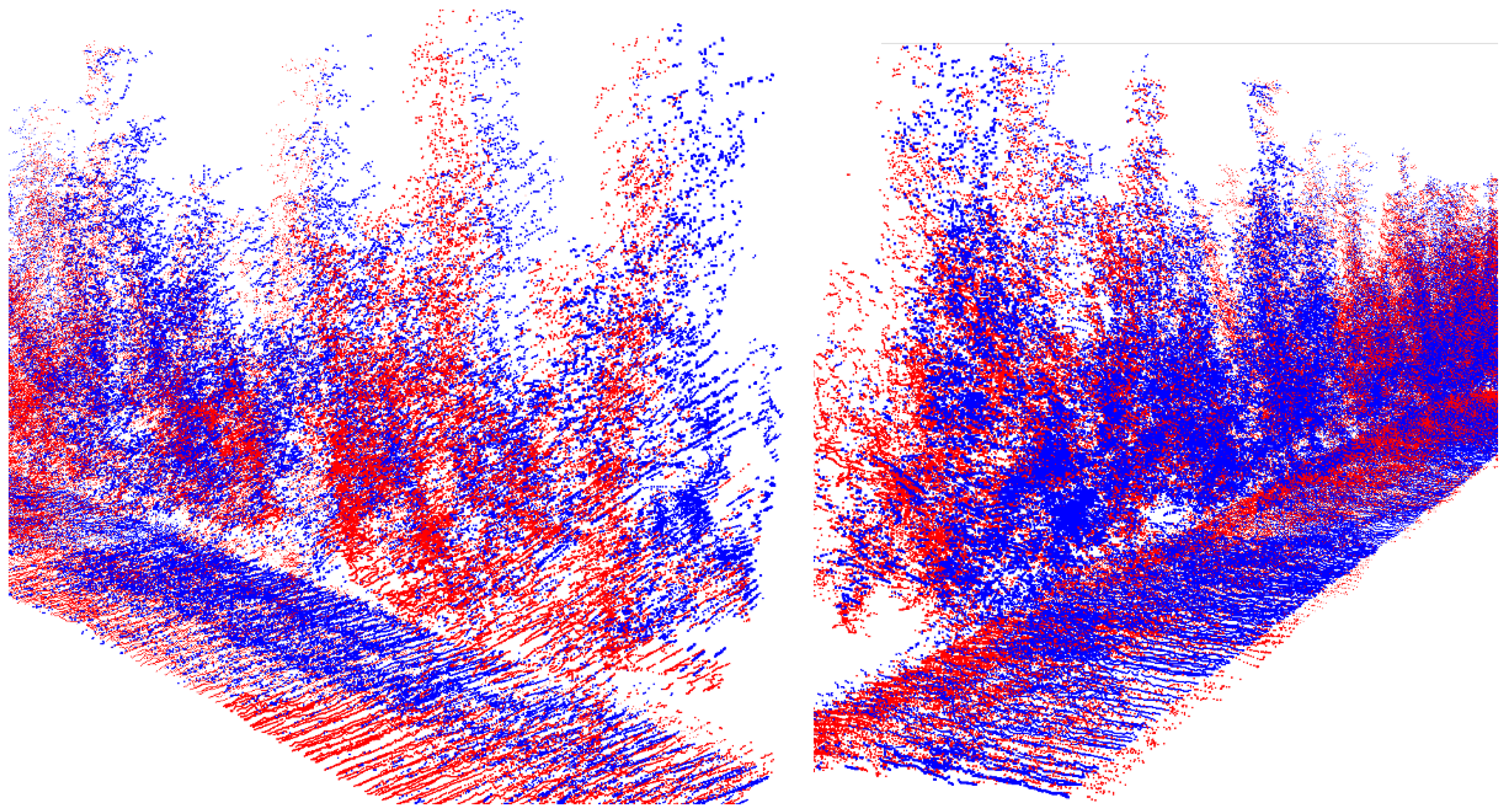

2.2.3. Point Cloud Registration

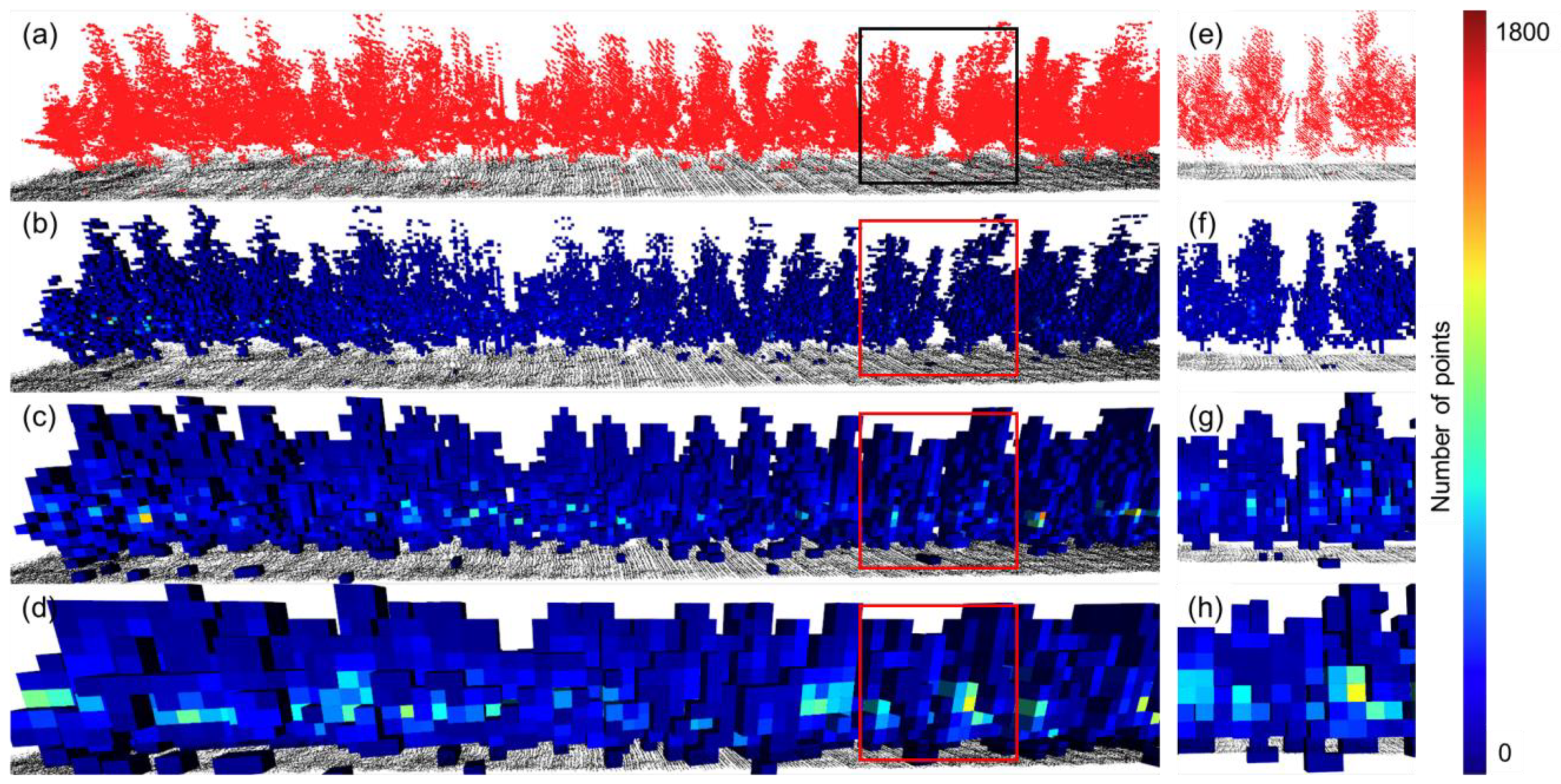

2.2.4. Generating Voxel Prescription Map

2.3. Accuracy Analysis

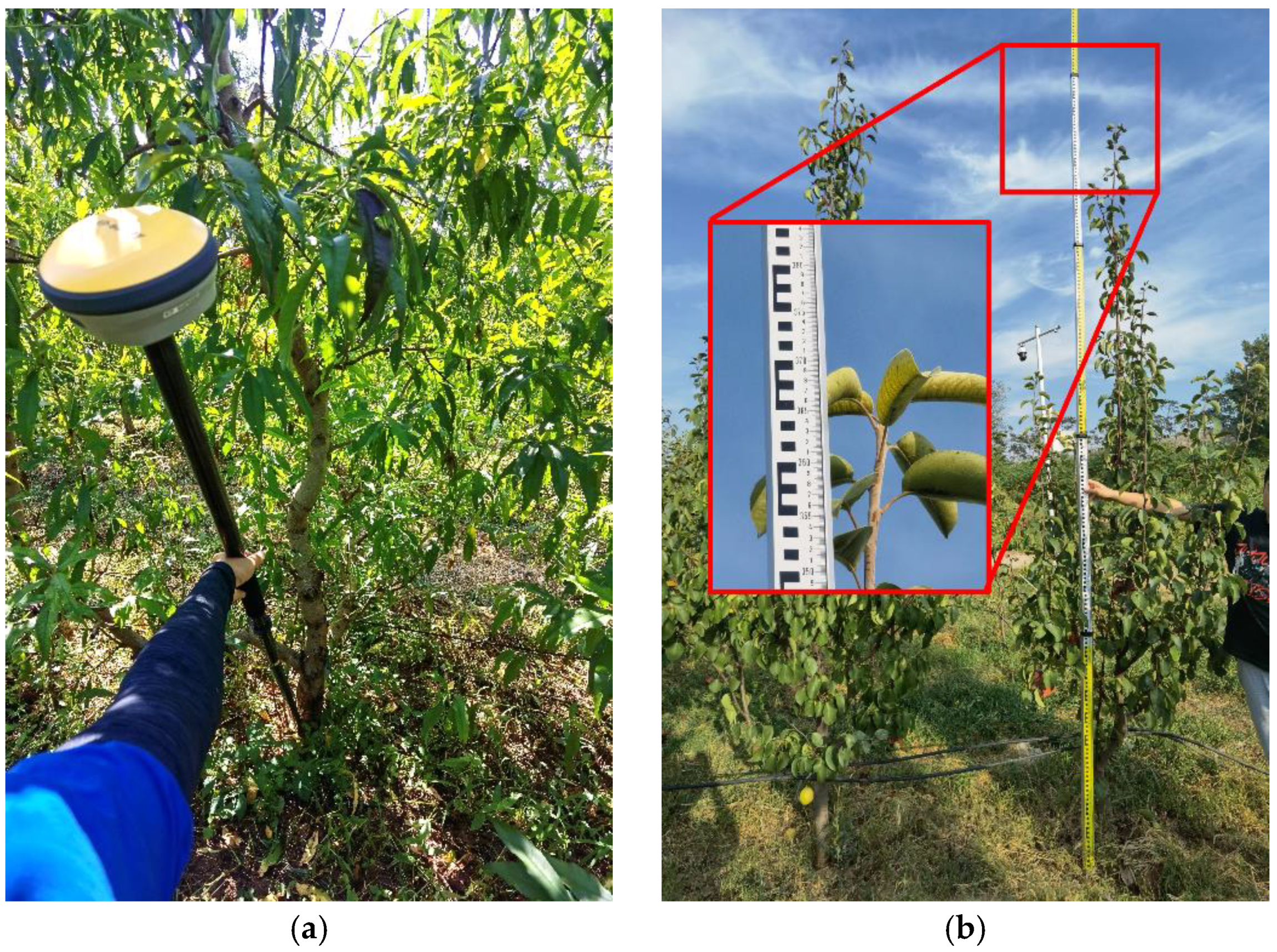

2.3.1. Ground Measurements

2.3.2. Statistical Analysis

3. Results

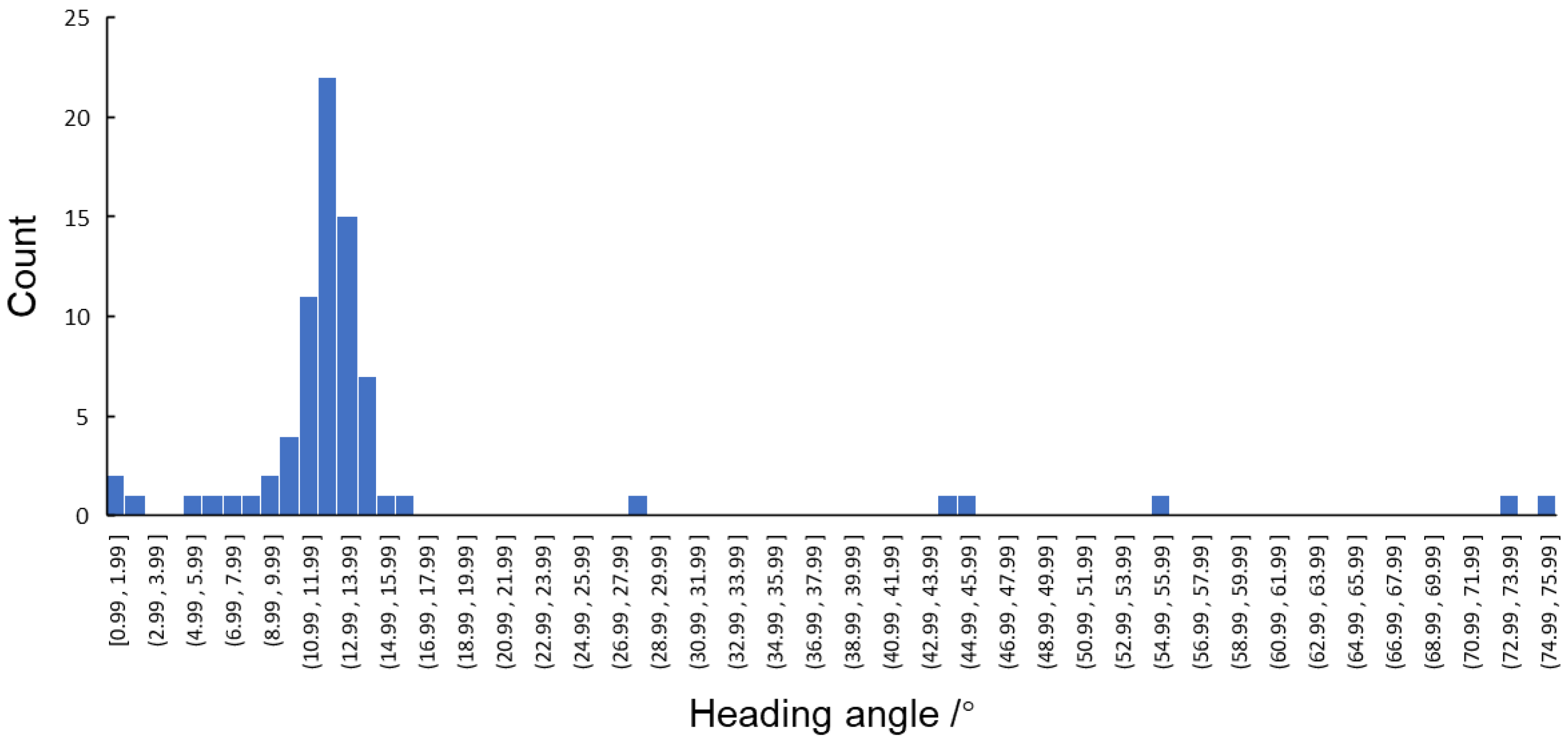

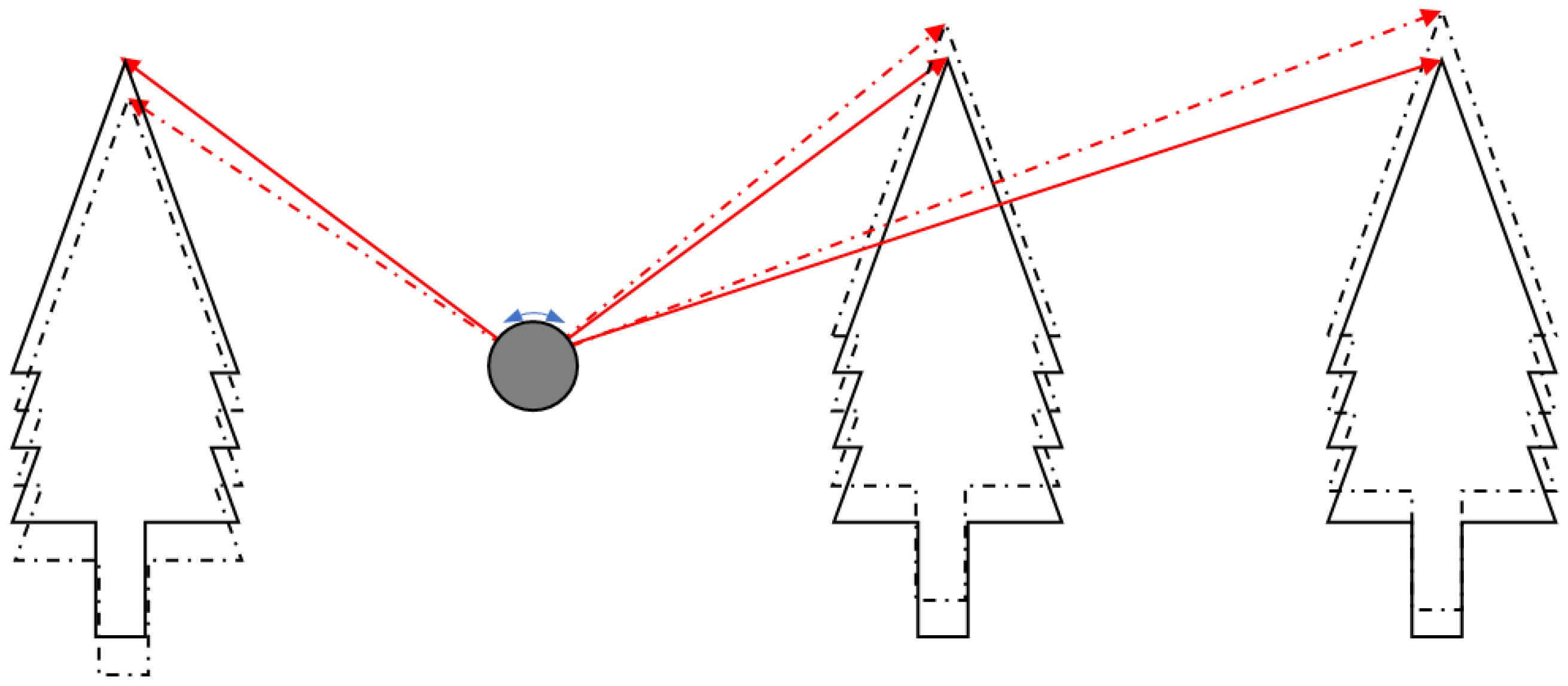

3.1. Measurement of Tree Row Angles

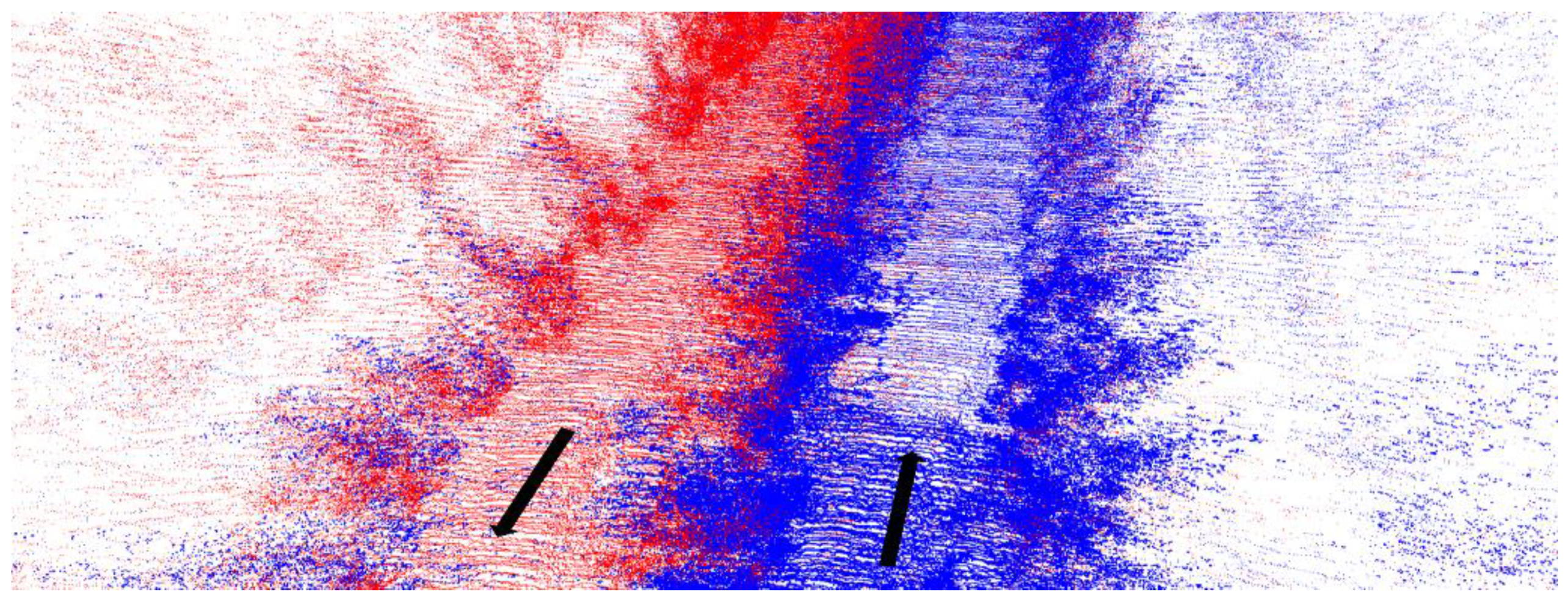

3.2. Visualization of Point Cloud and Voxel Prescription Map

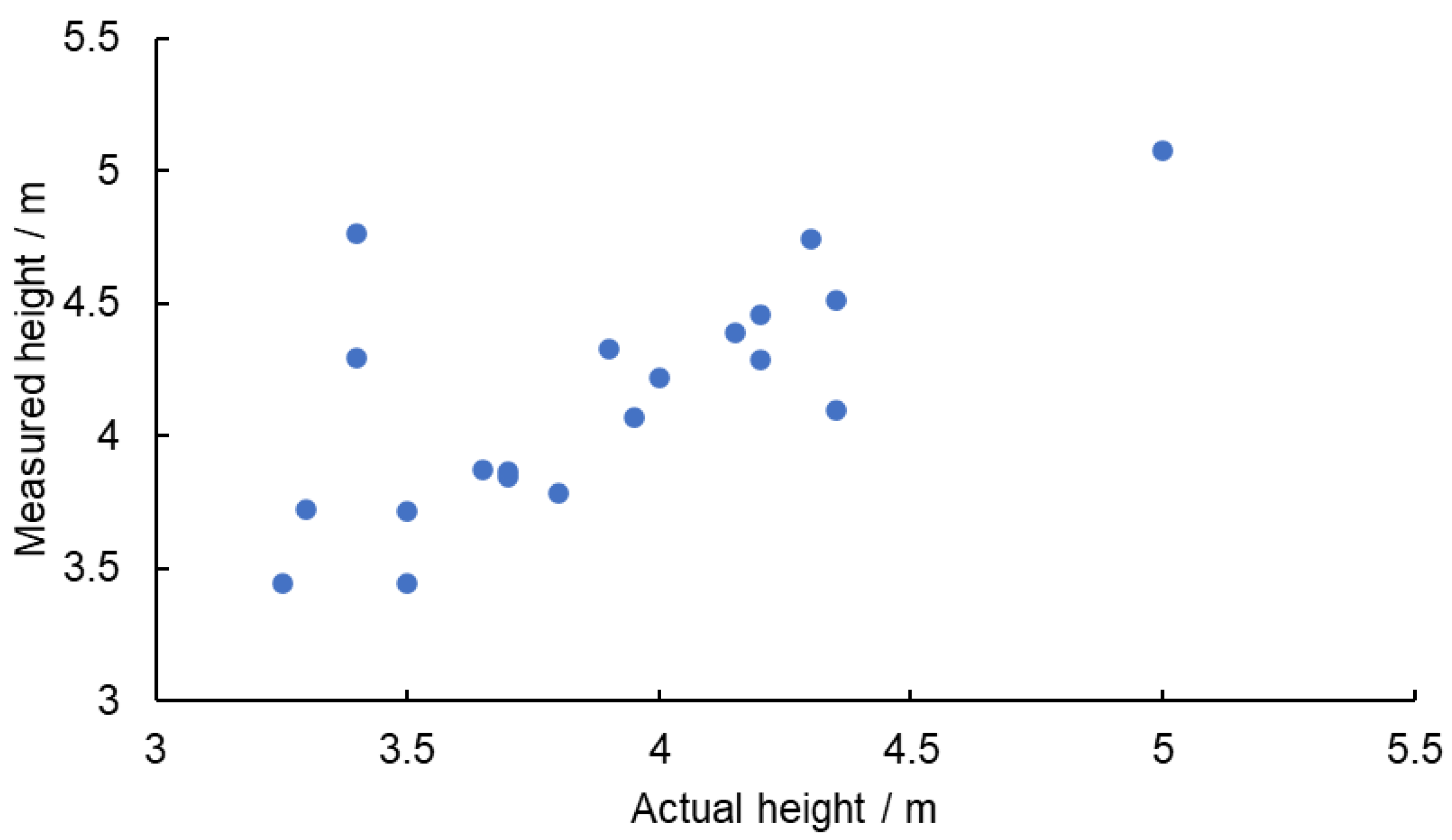

3.3. Accuracy of Tree Height Measurements

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Measurement | 1 | 2 | 3 | 4 | 5 | 6 | Mean | Standard Deviation |
|---|---|---|---|---|---|---|---|---|
| Actual tree row angle/° | 12.90 | 12.71 | 12.60 | 12.49 | 13.03 | 12.77 | 12.75 | 0.198 |
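As a quick consistency check on the tabulated statistics, the short sketch below recomputes the mean and standard deviation of the six tree row angle readings with Python's standard `statistics` module. The text does not state whether the sample (n - 1) or population (n) form was used, so both are computed here; treating this as the sample form is an assumption.

```python
import statistics

# Six repeated readings of the actual tree row angle, in degrees,
# copied from the table of tree row angle measurements.
angles_deg = [12.90, 12.71, 12.60, 12.49, 13.03, 12.77]

# Arithmetic mean of the readings.
mean_deg = statistics.mean(angles_deg)

# Sample standard deviation (divides by n - 1). Assumption: this is the
# form reported in the table; the source does not say which was used.
sd_sample = statistics.stdev(angles_deg)

# Population standard deviation (divides by n), shown for comparison.
sd_population = statistics.pstdev(angles_deg)

print(f"mean          = {mean_deg:.2f} deg")
print(f"sample SD     = {sd_sample:.3f} deg")
print(f"population SD = {sd_population:.3f} deg")
```

With the rounded values as tabulated, the mean reproduces 12.75° and the sample standard deviation comes to about 0.196°, close to the reported 0.198°; the small gap may come from rounding of the individual readings. The population form gives about 0.179°, so the reported figure is most consistent with the sample form.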

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Han, L.; Wang, S.; Wang, Z.; Jin, L.; He, X. Method of 3D Voxel Prescription Map Construction in Digital Orchard Management Based on LiDAR-RTK Boarded on a UGV. Drones 2023, 7, 242. https://doi.org/10.3390/drones7040242


Chicago/Turabian Style: Han, Leng, Shubo Wang, Zhichong Wang, Liujian Jin, and Xiongkui He. 2023. "Method of 3D Voxel Prescription Map Construction in Digital Orchard Management Based on LiDAR-RTK Boarded on a UGV." Drones 7, no. 4: 242. https://doi.org/10.3390/drones7040242
