Analytical Framework for Sensing Requirements Definition in Non-Cooperative UAS Sense and Avoid

Abstract

1. Introduction

- Definition of field of view and surveillance rate requirements in azimuth and elevation as a function of the aircraft speeds and of any trajectory constraints;

- Derivation of the sensitivity of closest point of approach estimates to sensing accuracy parameters, expressed through approximated derivatives;

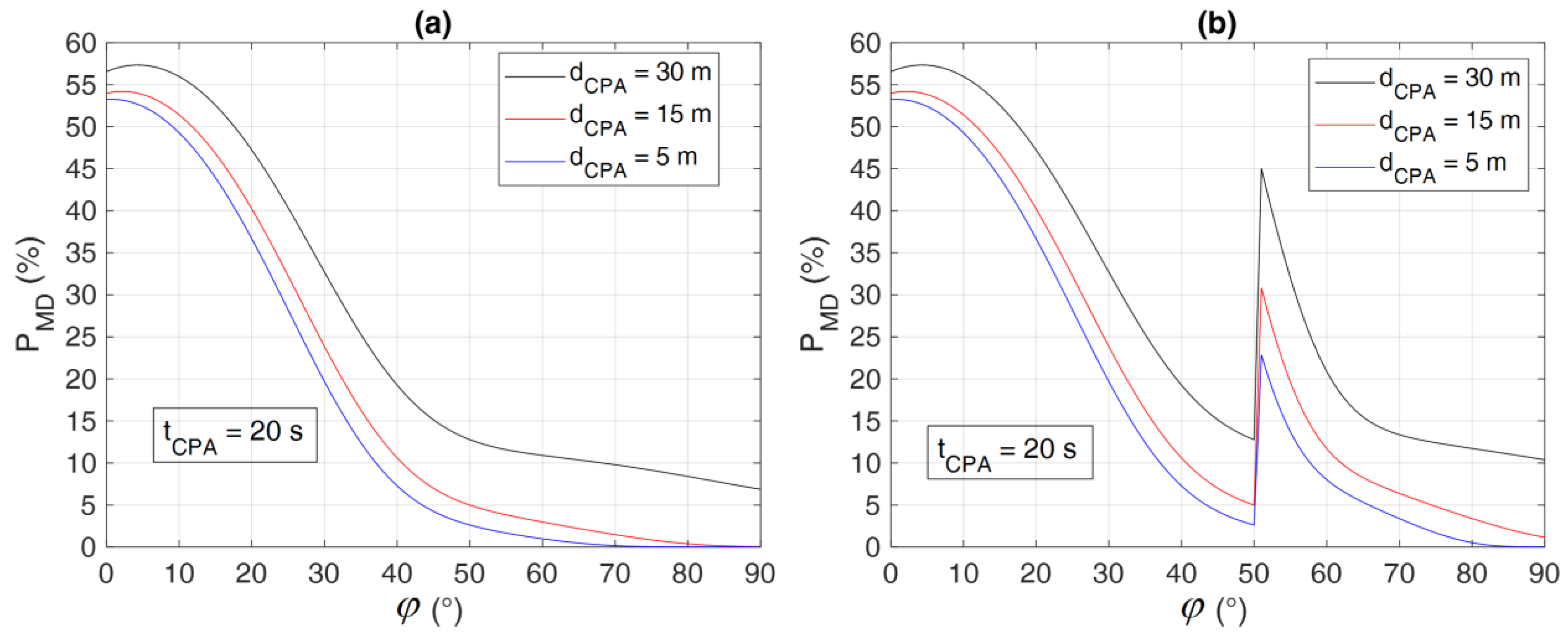

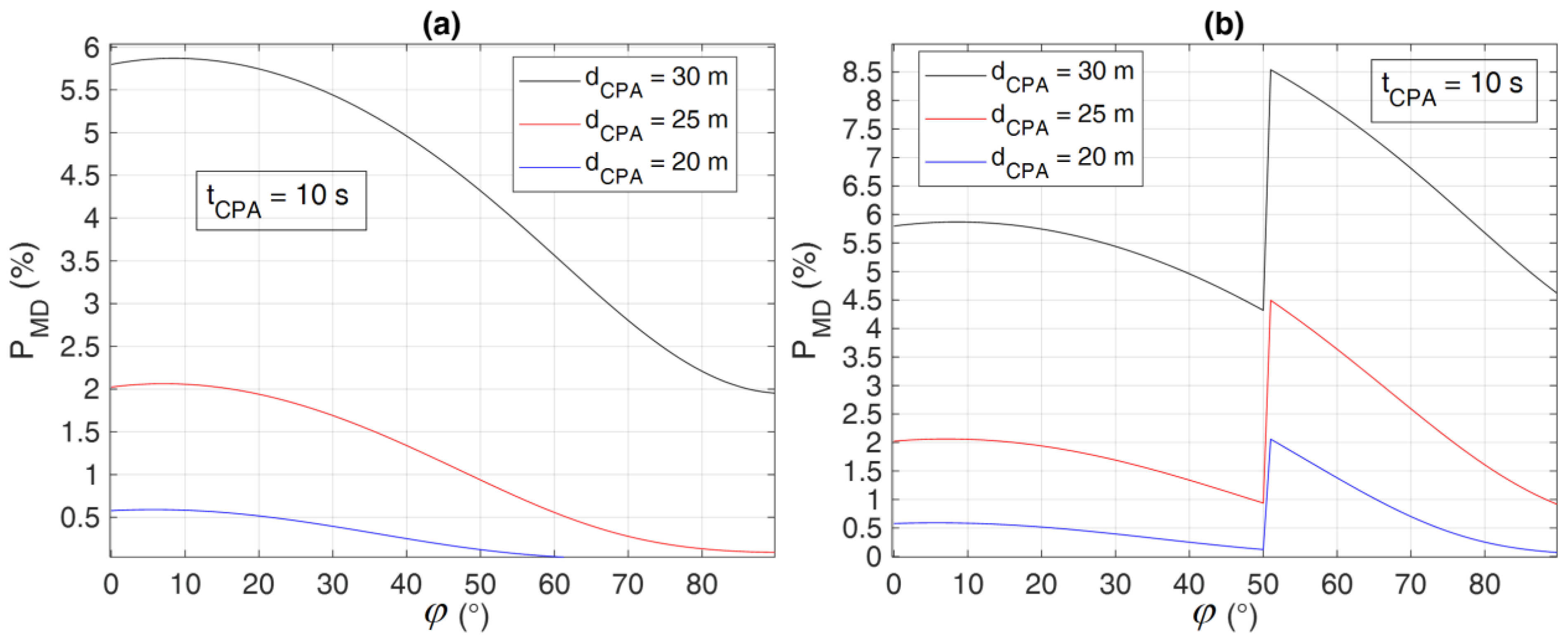

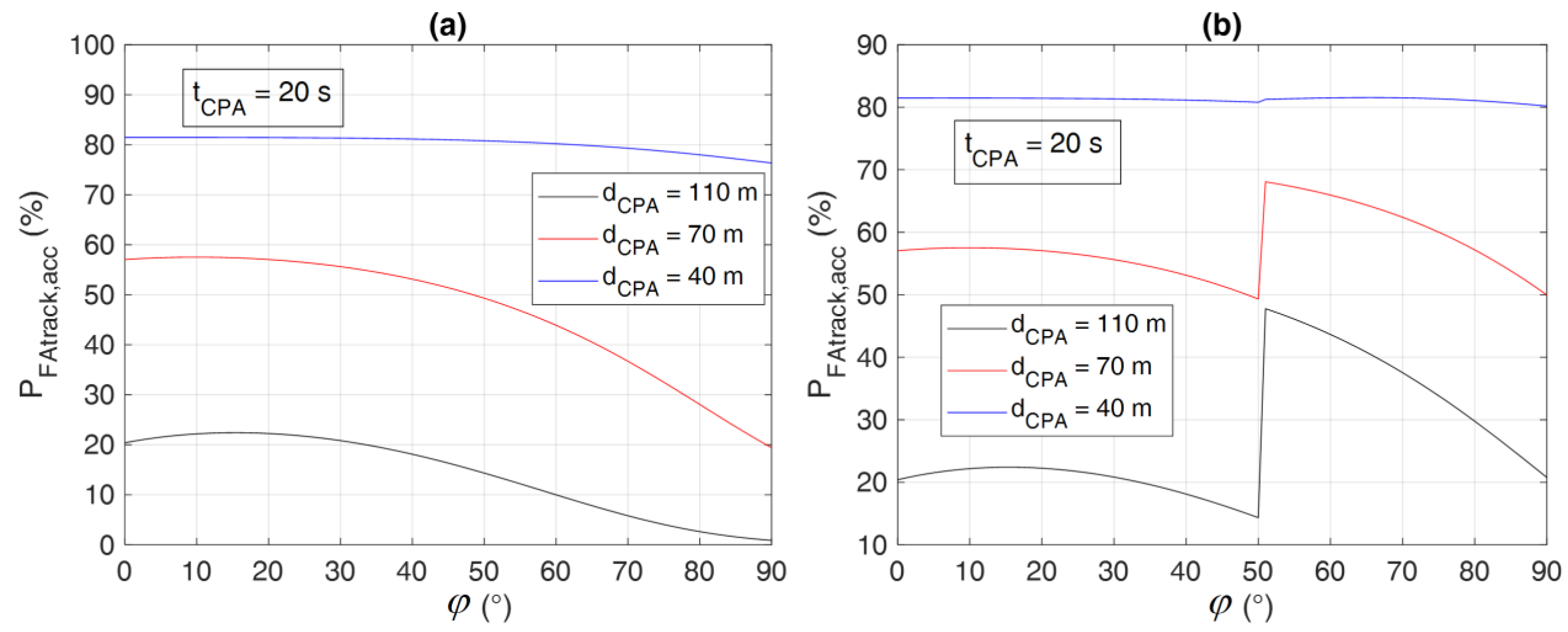

- Evaluation of the dependency of missed and false conflict detection probabilities on sensing accuracy and declaration range, for different conflict geometries;

- Validation of the above theoretical derivations through ad hoc numerical simulations.

2. Methodology for Integrated SAA Requirements Definition

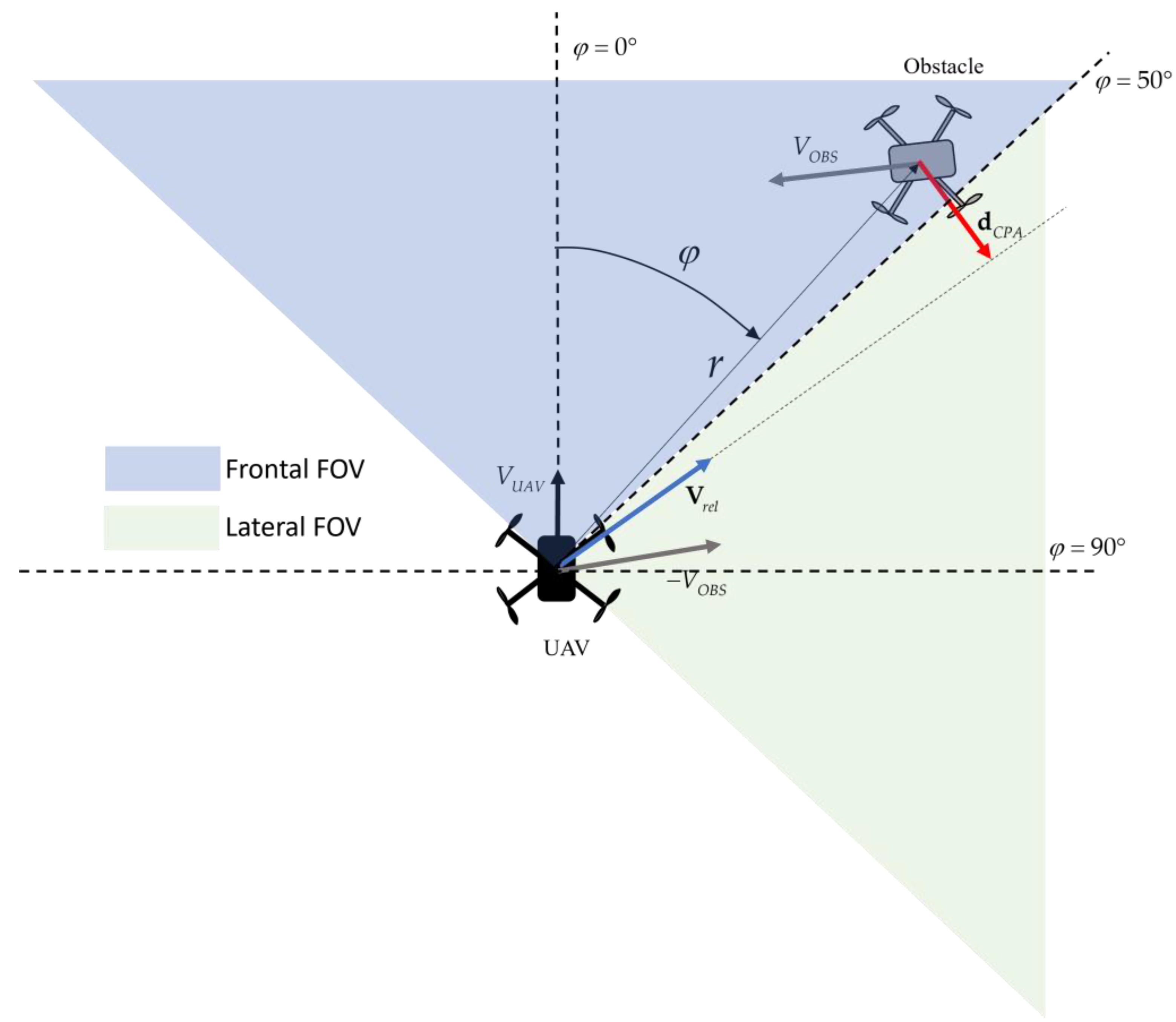

2.1. Field of View-Related Effects and Constraints

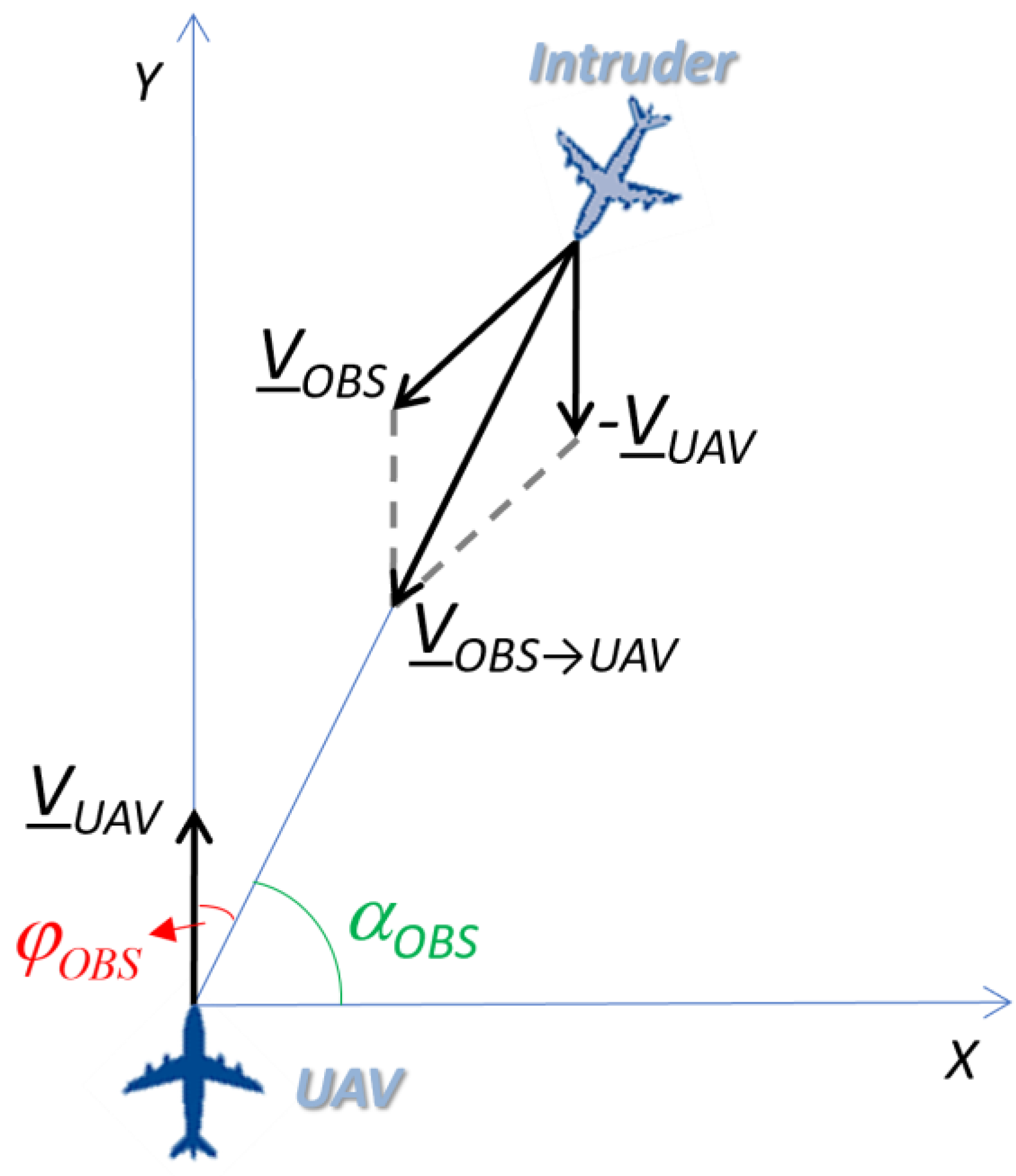

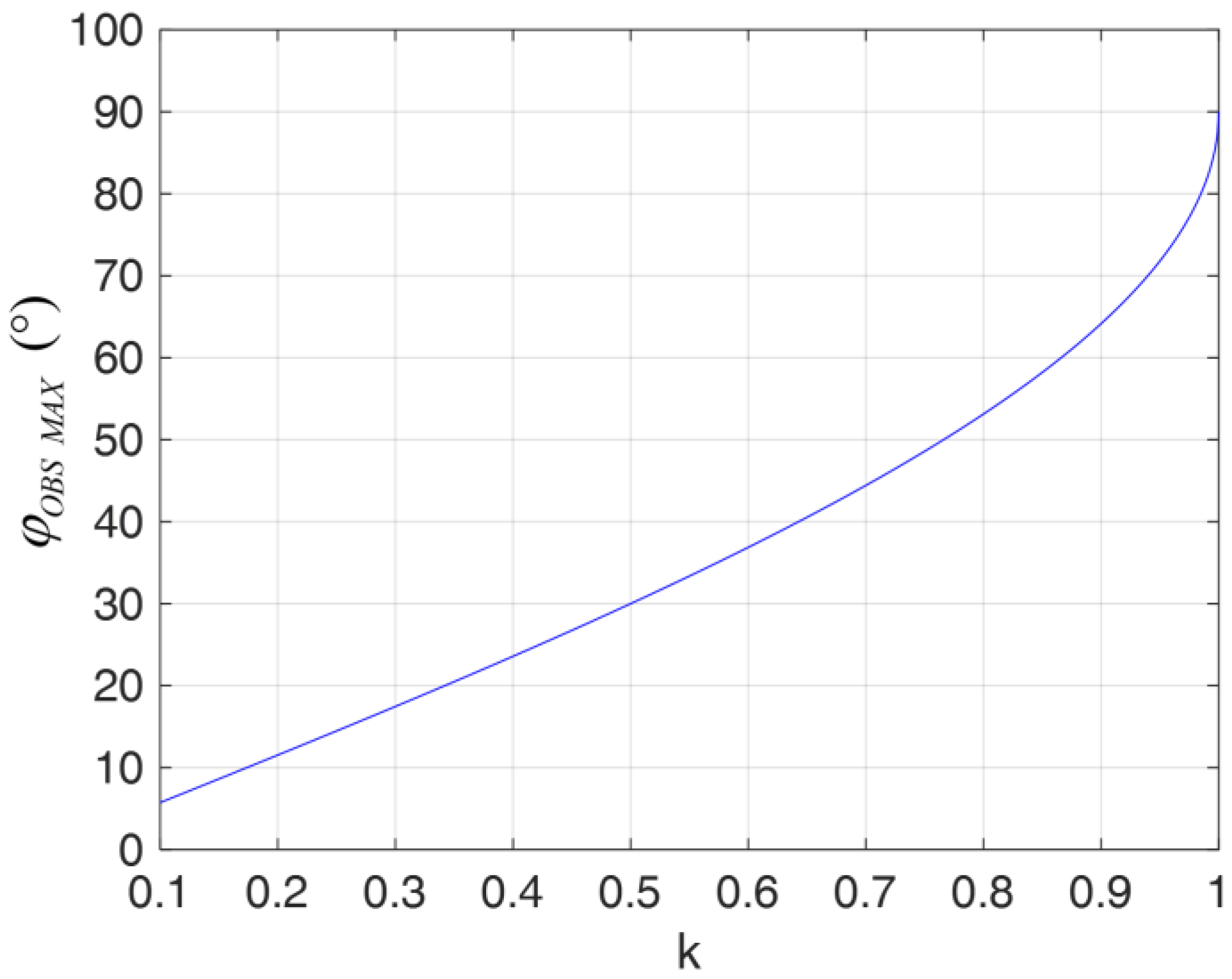

2.1.1. Azimuth

2.1.2. Elevation

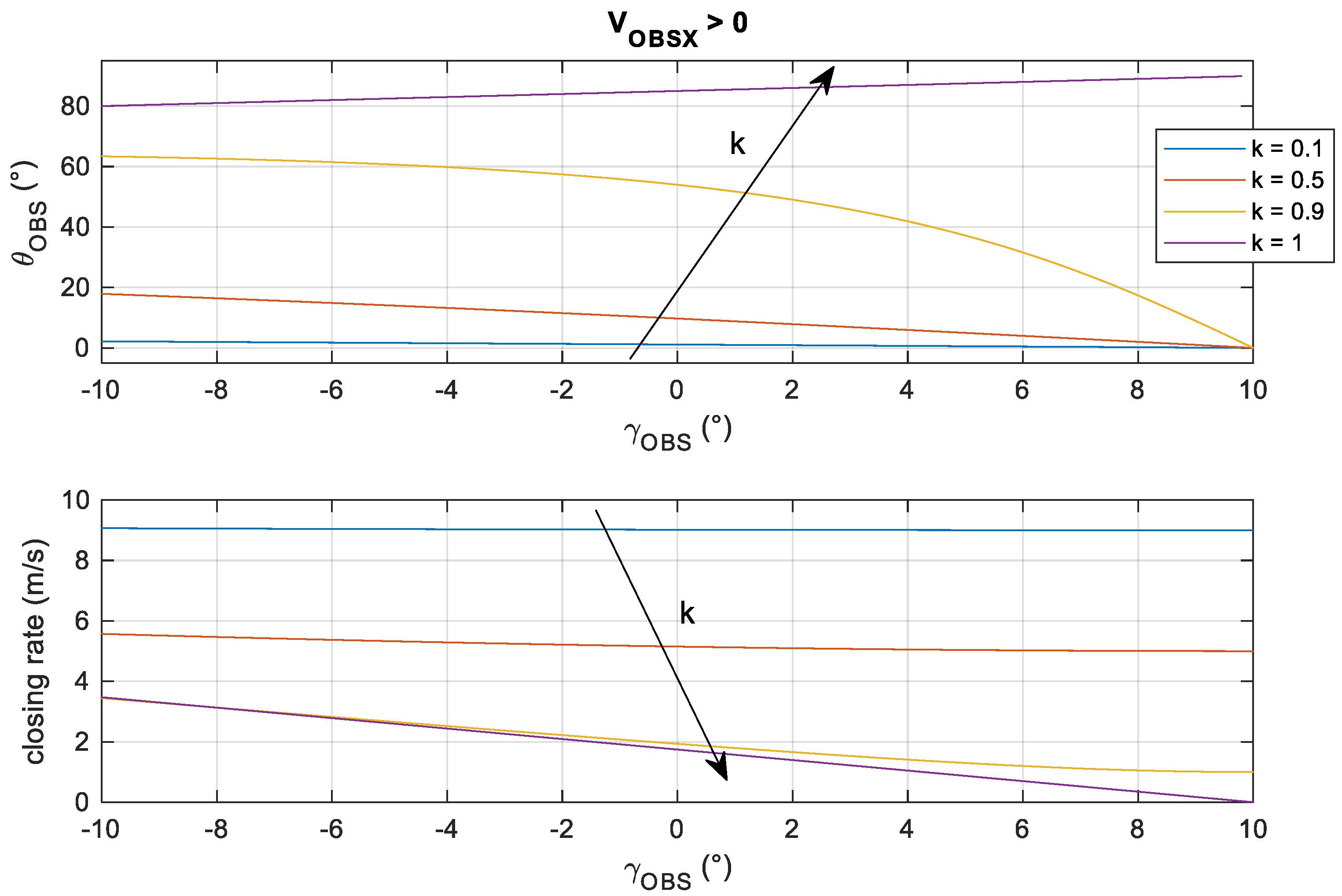

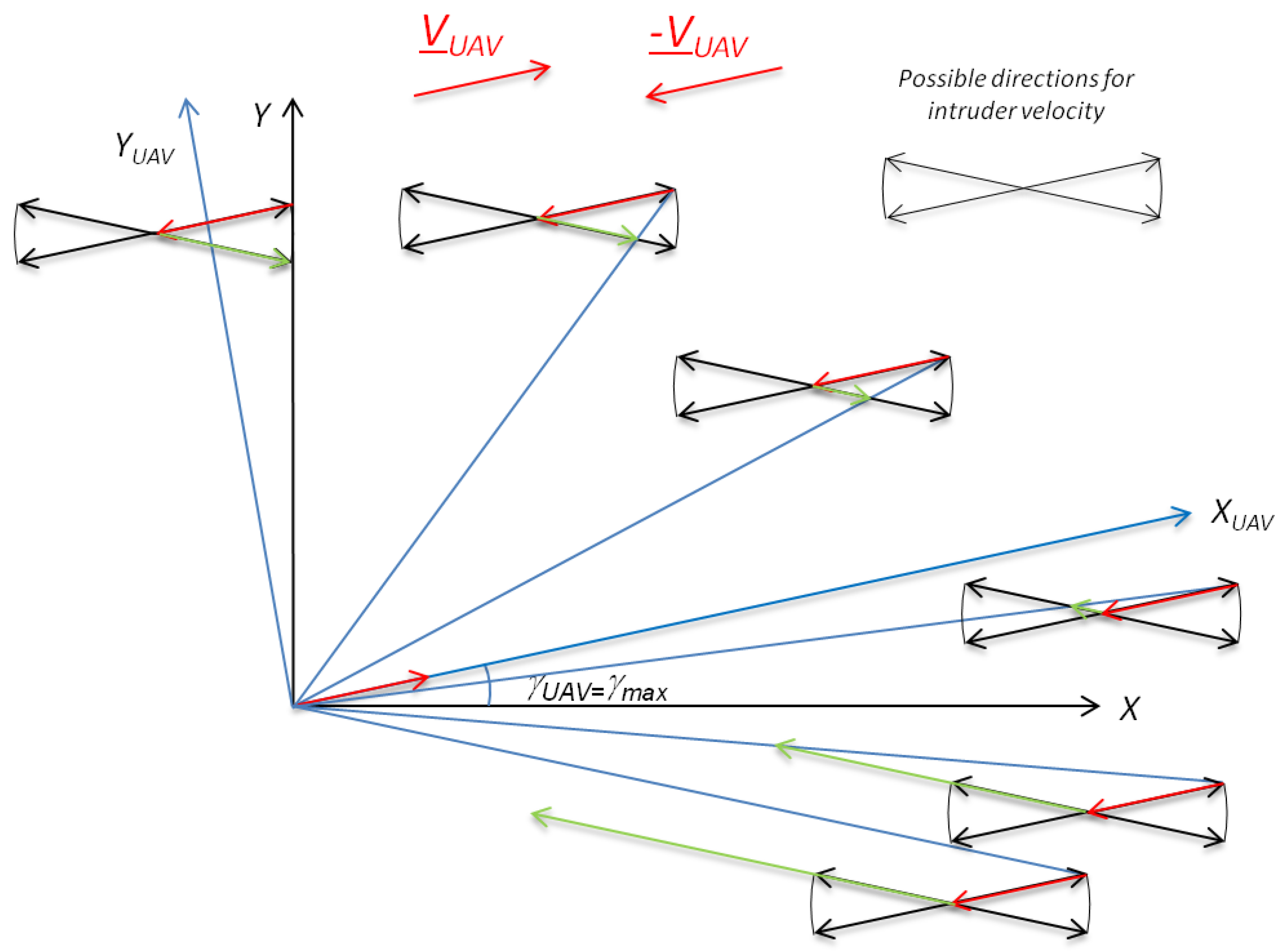

- Fast encounters, i.e., corresponding to approaching geometries (VOBS,X < 0, so the UAV and the intruder move along opposite directions);

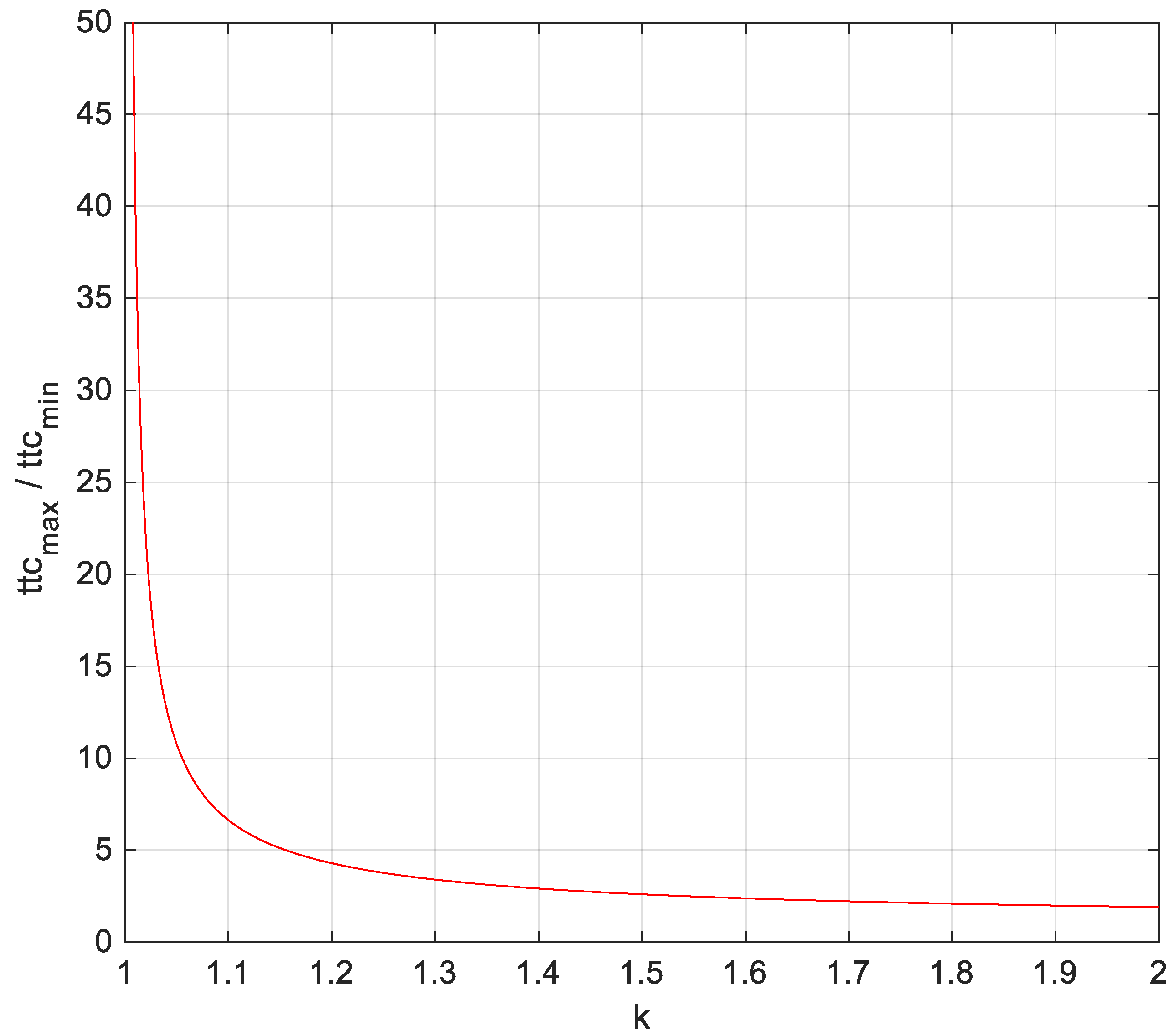

- Slow encounters, i.e., corresponding to converging trajectories (VOBS,X > 0, so the UAV and intruder move along the same direction).

- If all the aircraft have the same flight path angle limits (i.e., γOBS is limited in the range [−10°,10°] in the diagrams), and the ownship is climbing with the maximum flight path angle, then fast encounters correspond to negative elevation angles, while slow encounters occur for positive elevation angles.

- In the fast encounter case, the required FOV in elevation can be limited for any velocity ratio k by constraining the flight path angles. In fact, since θOBS functions are monotonic (see Figure 5), the largest negative obstacle elevation corresponds to the largest positive flight path angle for the obstacle. In particular, for |γOBSmax| = |γUAVmax| = γmax, the limit obstacle elevation angle (i.e., characterized by the maximum absolute value) can be obtained from (21) as follows.

- Consequently, θOBS,abs-max tends to 0 for vanishing k (fixed obstacles), to γmax for k = 1, and to 2γmax if k approaches ∞.

- Slow encounters (Figure 6) are generated only up to k ≃1 (i.e., for obstacles slower than the ownship). In these cases, θOBS,abs-max can be significantly larger than γmax. For k << 1, as in the fast encounter case, θOBS,abs-max vanishes (fixed obstacles). When k increases, the range of elevation angles in which collision geometries are generated increases, while the closing rate decreases. For equal velocities (k = 1), collision conditions can be generated at very large elevation angles (close to 90°), which correspond to quasi-parallel converging trajectories and thus very small closure rates. These conditions can be handled by selecting proper sensors with a relatively small detection range used only for surveilling the airspace above or below the aircraft. Moreover, extremely small range rate values could be considered of low impact in terms of collision risk. Constraining only flight path angles may not allow a reduction of the vertical FOV unless proper rules are also established for aircraft speeds (e.g., defining limited speed ranges can be useful to reduce closure rates that correspond to large elevation angles). These results have an immediate intuitive interpretation that derives from the flight path angle limits and the velocity ratio. A graphical representation to visualize some of the above-described collision conditions is provided in Figure 7.
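The limiting values quoted above for fast encounters (0, γmax, and 2γmax) can be checked with a short planar sketch. It is not the paper's Equation (21): it assumes motion in a vertical plane, an ownship climbing at +γmax, an obstacle flying towards it with flight path angle +γmax, and an elevation angle measured from the ownship velocity direction. The function name and the closed form are illustrative.

```python
import math


def fast_encounter_limit_elevation(k: float, gamma_max_deg: float) -> float:
    """Absolute limit elevation (deg) of a colliding obstacle, fast encounter.

    Assumptions (not taken from the paper): vertical-plane motion, ownship
    climbing at +gamma_max, obstacle approaching at +gamma_max, and elevation
    measured from the ownship velocity direction. k is the obstacle-to-ownship
    speed ratio.
    """
    g = math.radians(gamma_max_deg)
    # Velocities normalised by the ownship speed (x forward, z up).
    v_uav = (math.cos(g), math.sin(g))
    v_obs = (-k * math.cos(g), k * math.sin(g))
    # On a collision course the line of sight is parallel to the velocity of
    # the ownship relative to the obstacle.
    rel = (v_uav[0] - v_obs[0], v_uav[1] - v_obs[1])
    los_angle = math.atan2(rel[1], rel[0])
    return abs(math.degrees(los_angle - g))


for ratio in (0.0, 0.5, 1.0, 2.0, 1e9):
    print(ratio, round(fast_encounter_limit_elevation(ratio, 10.0), 3))
```

Under these assumptions the result grows monotonically with k, from 0° for a fixed obstacle to γmax at k = 1 and towards 2γmax for very fast obstacles.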

2.2. Effect of Non-Cooperative Sensing Performance on CPA Estimation Accuracy

- The analysis of the order of magnitude (based on the conditions of a near-collision encounter) is applied to the exact expressions of the derivatives of dCPA,hor and dCPA,ver.

- First, approximated expressions for dCPA,hor and dCPA,ver are obtained under the assumption of near-collision encounters. Second, the corresponding derivatives are computed. Finally, the different contributions are compared based on their order of magnitude.

- As tracking algorithms usually work in stabilized coordinates and account for ownship motion, navigation unit performance may impact tracking uncertainty. This may be especially true for small UAS, which can be equipped with very high angular resolution sensors (e.g., LiDAR) and relatively low-performance inertial units. Also, sensors are assumed to be aligned with the ownship velocity. In the case of strapdown installation and non-negligible attitude angles, the final uncertainty on stabilized coordinates may combine sensor uncertainties in azimuth and elevation.

- Tracking uncertainty also includes the measurement rate and the probability of detection, which together impact the effective valid measurement rate when firm tracking is achieved.
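An order-of-magnitude check of the angular-rate contribution can be sketched as follows. It is a leading-order approximation, not the paper's expressions (42) and (43): in a near-collision encounter the time to closest approach is roughly −R divided by the range rate, and an angular-rate error produces a cross-range velocity error of about R times that error. Elevation-dependent factors are ignored. The inputs are taken from the tables of this document (R = 1000 m, a range rate of −50 m/s), and the 0.2°/s and 0.1°/s entries are assumed here to be the azimuth-rate and elevation-rate uncertainties.

```python
import math


def angular_rate_contribution(range_m: float, range_rate_mps: float,
                              sigma_rate_deg_s: float) -> float:
    """Leading-order dCPA uncertainty (m) caused by an angular-rate error.

    Assumes a near-collision encounter, so t_CPA ~ -R / R_dot and the miss
    distance error is roughly (cross-range velocity error) * t_CPA.
    Elevation-dependent factors are deliberately ignored in this sketch.
    """
    if range_rate_mps >= 0:
        raise ValueError("the intruder must be closing (negative range rate)")
    t_cpa = -range_m / range_rate_mps
    cross_range_velocity_error = range_m * math.radians(sigma_rate_deg_s)
    return cross_range_velocity_error * t_cpa


hor = angular_rate_contribution(1000.0, -50.0, 0.2)
ver = angular_rate_contribution(1000.0, -50.0, 0.1)
print(f"horizontal ~ {hor:.1f} m, vertical ~ {ver:.1f} m")
# prints: horizontal ~ 69.8 m, vertical ~ 34.9 m
```

These figures are of the same order as the simulated values reported in the document (68.44 m and 34.17 m), which is consistent with the angular-rate terms dominating the error budget in this geometry.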

2.3. Integrated Range-Accuracy Requirements

- The intruder has not been declared yet. The corresponding probability is given by 1 − PFT.

- The intruder has been declared and its relative dynamics lead to a conflict, but tracking errors and decision-making criteria do not lead to positive conflict detection. The corresponding probability is the product of PFT and an additional term depending on the tracking accuracy (PMD,track_acc).

- A false intruder is declared, and its estimated kinematics generate a positive conflict detection. The overall probability is the product of the probability of false track generation (Pfalse_track) and the probability that this false track corresponds to a conflict (Pfalse_track_conflict). Pfalse_track depends on the settings adopted for the detection and tracking algorithms, and on the operating environment. Pfalse_track_conflict can be assumed constant and mainly depends on the adopted conflict detection criteria.

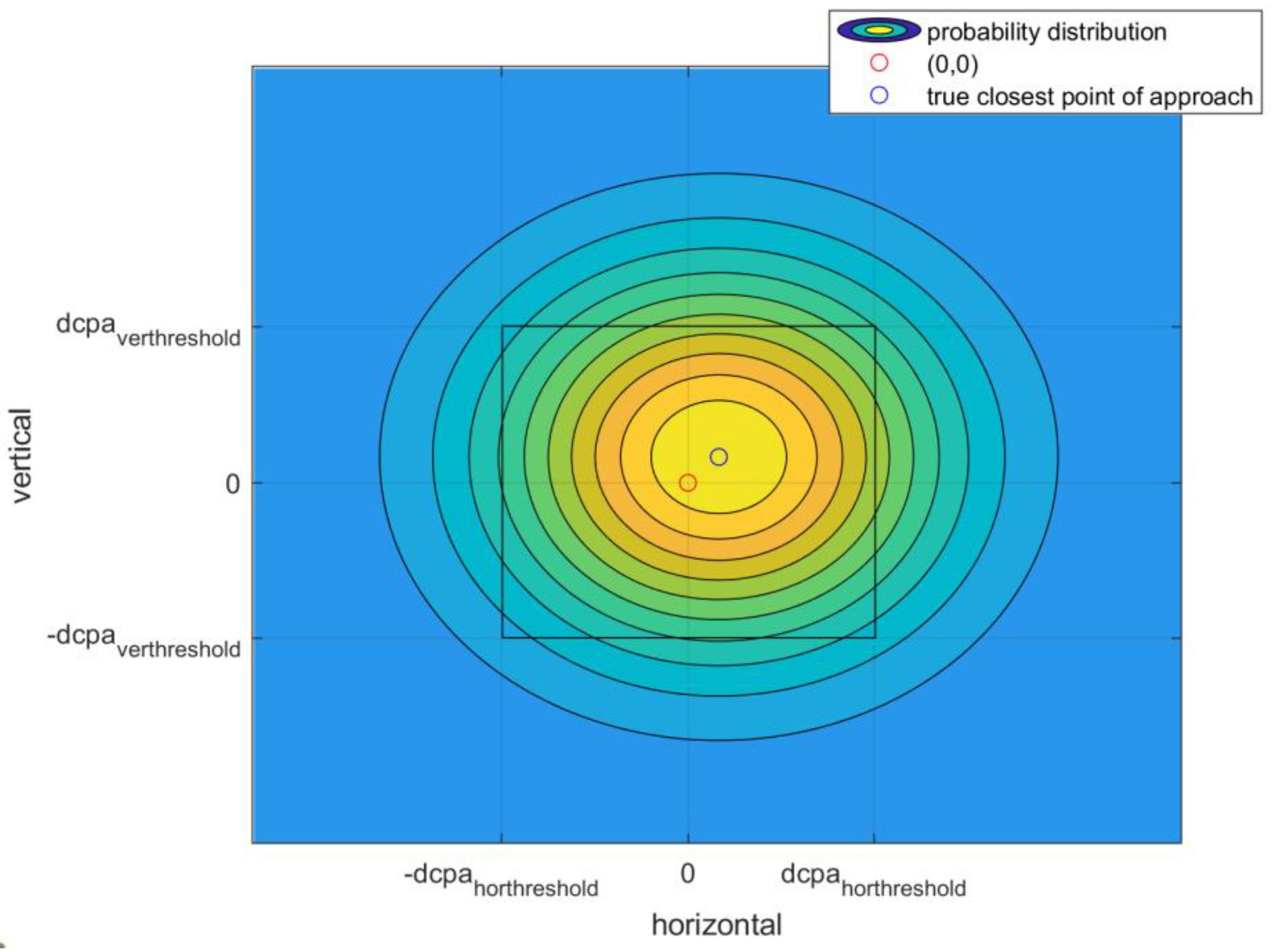

- The intruder has been declared and its relative dynamics do not lead to a conflict, but tracking errors and decision-making criteria lead to positive conflict detection. The corresponding probability is the product of PFT and an additional term depending on the tracking accuracy (PFA,track_acc). Again, this latter term can be estimated as a two-dimensional integral based on the components of the dCPA.
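The way these contributions combine can be sketched in a few lines. The sketch rests on several assumptions that are not stated in the text: the listed cases are treated as additive contributions, the errors on the horizontal and vertical dCPA components are modelled as independent Gaussians (so that the two-dimensional integral factorises), and a conflict is declared when both estimated components fall below hypothetical thresholds. All function and parameter names are illustrative.

```python
import math


def gaussian_interval_probability(mean: float, sigma: float, half_width: float) -> float:
    """P(|x| < half_width) for x ~ N(mean, sigma^2)."""
    def cdf(z: float) -> float:
        return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    return cdf((half_width - mean) / sigma) - cdf((-half_width - mean) / sigma)


def conflict_declared_probability(d_hor, d_ver, sig_hor, sig_ver, thr_hor, thr_ver):
    """Probability that both estimated dCPA components fall below thresholds.

    Assumes independent Gaussian errors, so the 2-D integral is a product
    of two 1-D integrals (an assumption of this sketch).
    """
    return (gaussian_interval_probability(d_hor, sig_hor, thr_hor)
            * gaussian_interval_probability(d_ver, sig_ver, thr_ver))


def missed_detection_probability(p_ft: float, p_md_track_acc: float) -> float:
    # Intruder not yet declared, or declared but the conflict is not detected.
    return (1.0 - p_ft) + p_ft * p_md_track_acc


def false_alarm_probability(p_ft, p_fa_track_acc, p_false_track, p_false_track_conflict):
    # False-track contribution plus real, non-conflicting track contribution
    # (summed here as an assumption of this sketch).
    return p_false_track * p_false_track_conflict + p_ft * p_fa_track_acc


# Hypothetical numbers: a true conflict (zero miss distance) seen with noisy estimates.
p_detect = conflict_declared_probability(0.0, 0.0, 30.0, 15.0, 50.0, 25.0)
print(missed_detection_probability(p_ft=0.95, p_md_track_acc=1.0 - p_detect))
```

With this structure, the dependence on declaration range enters through PFT, while the dependence on sensing accuracy enters through the standard deviations of the dCPA components.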

3. Numerical Analyses

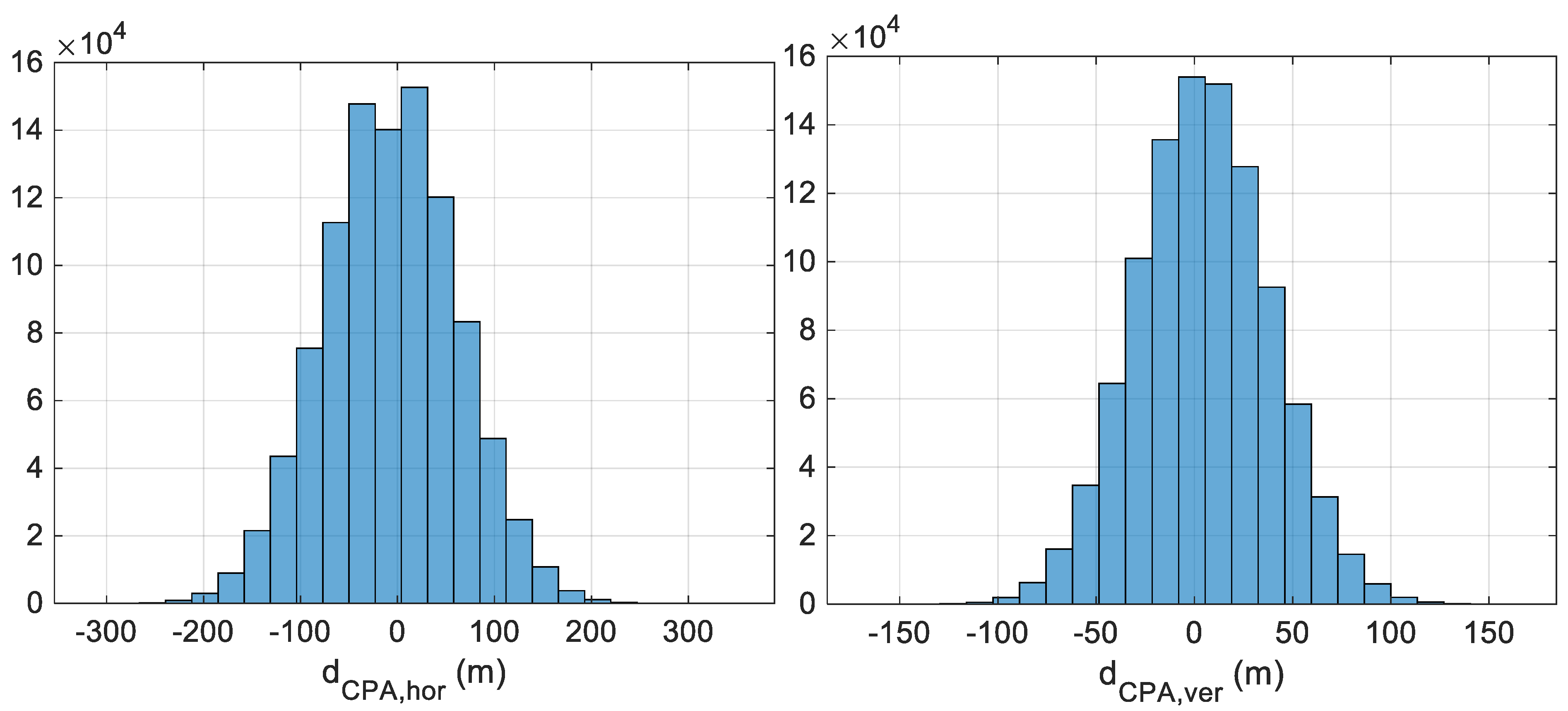

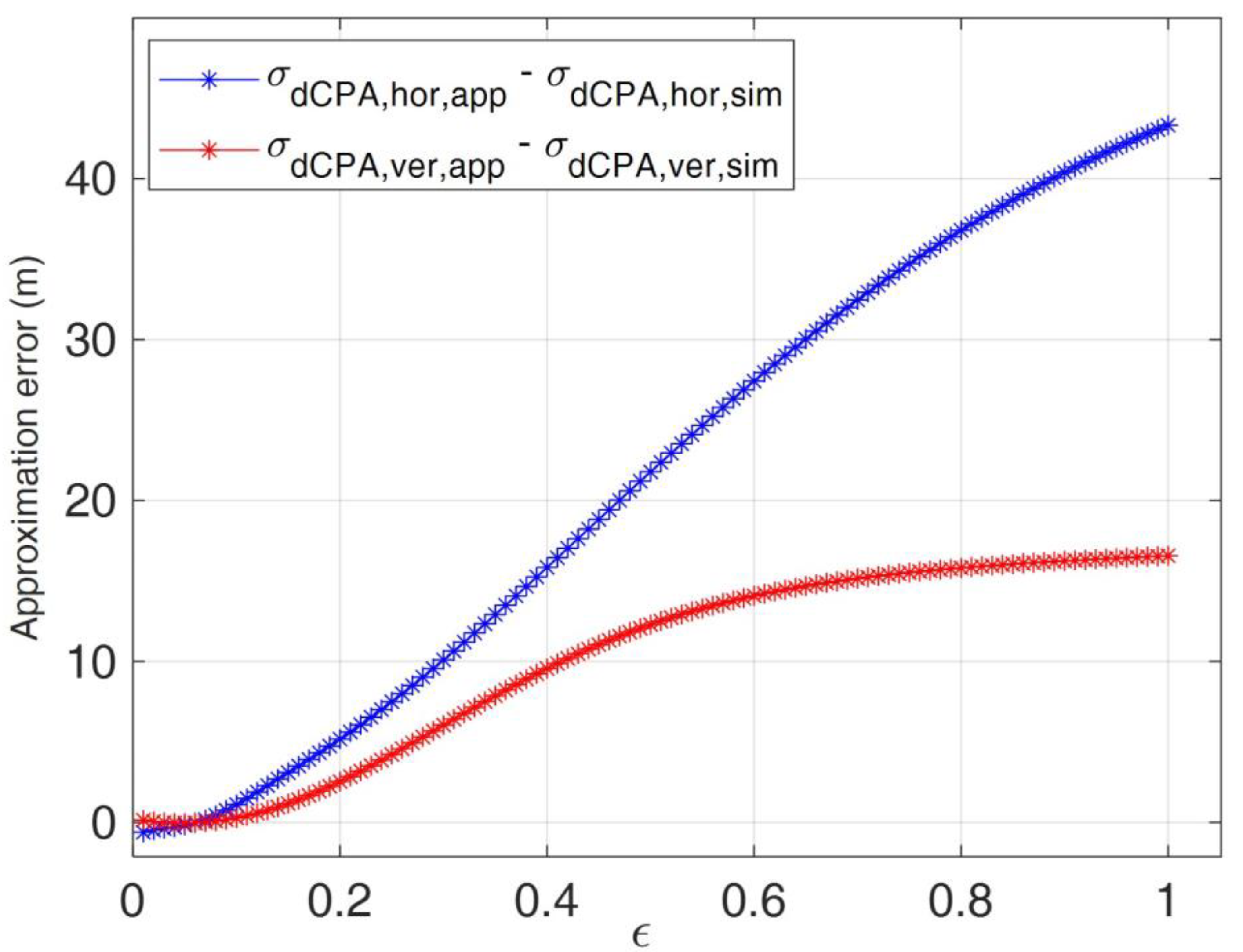

3.1. Statistical Consistency

3.2. Evaluation of Conflict Detection Performance

- tCPA is varied between 0 s and 50 s;

- dCPA is varied between 0 m and 120 m;

- φ is varied between −90° and 90°.
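One possible way to turn a (tCPA, dCPA, φ) triple into initial conditions for a horizontal encounter is sketched below. It is not necessarily the procedure used in the paper: it assumes straight-line relative motion, an ownship frame with the x axis along the ownship velocity, and a closing speed (magnitude of the relative velocity) supplied as a hypothetical input. The second function recomputes the closest point of approach, which gives a quick consistency check.

```python
import math


def horizontal_encounter(t_cpa: float, d_cpa: float, azimuth_deg: float,
                         closing_speed: float):
    """Relative position and velocity of the intruder in the ownship frame.

    closing_speed is the (positive) magnitude of the relative velocity, a
    hypothetical input. Returns ((x, y), (vx, vy)).
    """
    rng = math.hypot(d_cpa, closing_speed * t_cpa)
    if rng == 0.0:
        raise ValueError("t_cpa and d_cpa cannot both be zero")
    phi = math.radians(azimuth_deg)
    pos = (rng * math.cos(phi), rng * math.sin(phi))
    # Angle between the relative velocity and the direction back to the ownship.
    offset = math.asin(d_cpa / rng)
    heading = phi + math.pi + offset
    vel = (closing_speed * math.cos(heading), closing_speed * math.sin(heading))
    return pos, vel


def closest_point_of_approach(pos, vel):
    """Time to CPA and distance at CPA for straight-line relative motion."""
    speed_sq = vel[0] ** 2 + vel[1] ** 2
    t = -(pos[0] * vel[0] + pos[1] * vel[1]) / speed_sq
    d = math.hypot(pos[0] + vel[0] * t, pos[1] + vel[1] * t)
    return t, d


pos, vel = horizontal_encounter(t_cpa=20.0, d_cpa=60.0, azimuth_deg=30.0,
                                closing_speed=50.0)
t_check, d_check = closest_point_of_approach(pos, vel)
print(round(t_check, 6), round(d_check, 6))
```

Sweeping the three inputs over the ranges listed above, with step sizes chosen by the analyst, would then produce the set of encounter geometries to be simulated.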

4. Conclusions

- The size of the field of view to be monitored in the horizontal plane (azimuth) can be reduced when the ownship is faster than the obstacle; when this is not true, for a given obstacle declaration range, it is possible to adapt the surveillance rate to the variation of time to collision within the FOV.

- As concerns the vertical plane (elevation), imposing constraints on flight path angles allows for a reduction of the required FOV in elevation in the case of “fast encounters” (approaching trajectories) for any velocity ratio. In the case of “slow encounters” (converging trajectories), constraining only flight path angles does not allow a reduction of the vertical FOV to be surveilled unless proper intervals are also established for aircraft speeds. When limited speed ranges are defined, the conflicts generated at large elevation angles are characterized by low closure rates.

- Regarding the dependence of closest point of approach estimates on sensing accuracy in near-collision conditions, for a given tCPA, the highest sensitivity concerns the uncertainties on azimuth and elevation angular rates.

- The variation of closure rate within the field of view (i.e., its reduction at increasing azimuth angles) changes the declaration range requirement as well as the impact of sensing errors, which can be exploited to relax, on a statistically consistent basis, the sensing requirements.

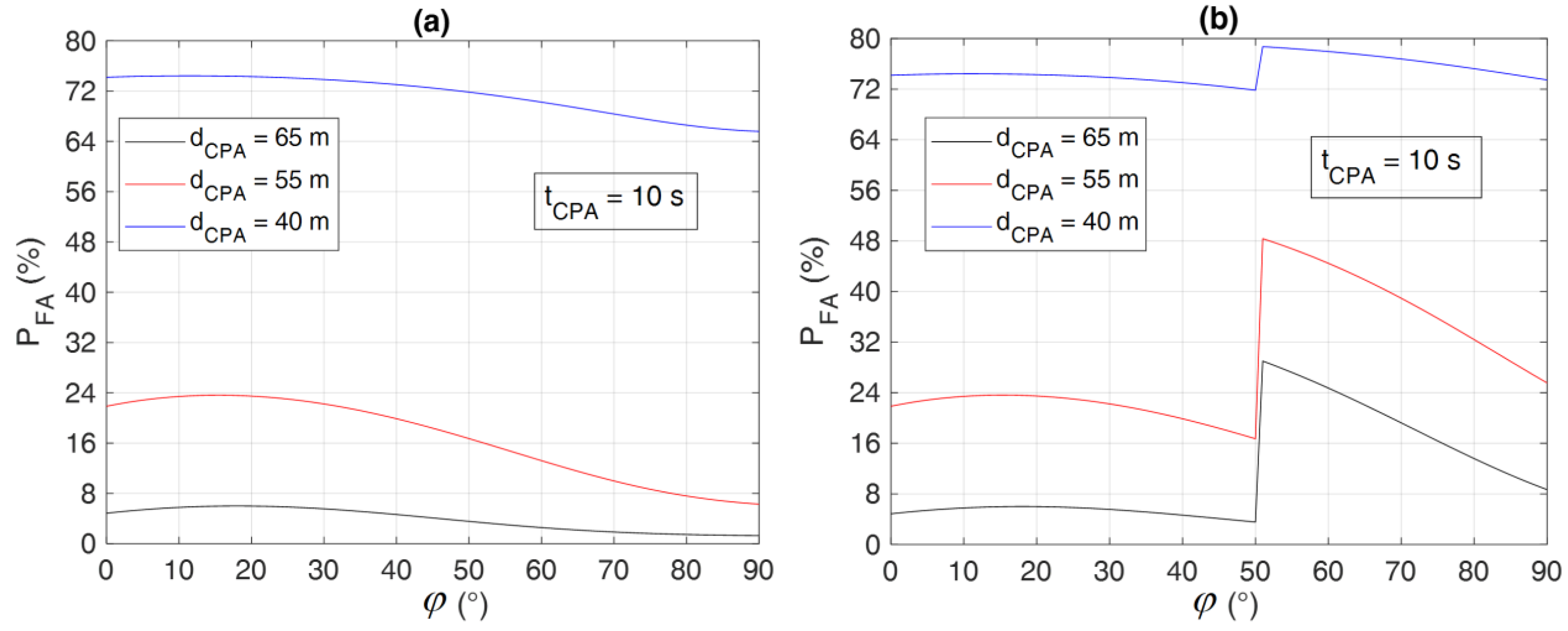

- Numerical simulations of conflict conditions in horizontal scenarios quantitatively demonstrated that a “low-level” radar (i.e., with limited declaration range and azimuth rate accuracy) can be used to monitor lateral encounters (i.e., with intruder azimuth angles larger than 50°) with comparable performance in terms of missed and false conflict detection probabilities to a “high-level” radar handling frontal and quasi-frontal encounters.


| Horizontal Derivative | Approximated Value from (42) | O(-) | Vertical Derivative | Approximated Value from (43) | O(-) |
|---|---|---|---|---|---|
| 0 | 0 | 0 | 0 | | |
| 0 | 0 | | | | |

| R (m) | φ (°) | θ (°) | dR/dt (m/s) | dφ/dt (°/s) | dθ/dt (°/s) |
|---|---|---|---|---|---|
| 1000 | 50 | 10 | −50 | 0.02 | 0.01 |

| σR (m) | σφ (°) | σθ (°) | σdR/dt (m/s) | σdφ/dt (°/s) | σdθ/dt (°/s) |
|---|---|---|---|---|---|
| 10 | 2 | 1 | 1 | 0.2 | 0.1 |

| σdCPA,hor,sim (m) | σdCPA,ver,sim (m) | σdCPA,hor,app (m) | σdCPA,ver,app (m) |
|---|---|---|---|
| 68.44 | 34.17 | 67.72 | 34.38 |

| Intruder Track. State Estimation Uncertainty | SAARADAR-1: 0° ≤ φ < 90°, “High-Level” Radar | SAARADAR-2: 0° ≤ φ < 50°, “High-Level” Radar | SAARADAR-2: 50° ≤ φ < 90°, “Low-Level” Radar |
|---|---|---|---|
| (m) | 3.25 | 3.25 | 3.25 |
| (m/s) | 0.900 | 0.900 | 0.900 |
| (°/s) | 0.30 | 0.30 | 0.60 |
| (m) | 400 | 400 | 300 |
| (m) | 50 | 50 | 40 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Fasano, G.; Opromolla, R. Analytical Framework for Sensing Requirements Definition in Non-Cooperative UAS Sense and Avoid. Drones 2023, 7, 621. https://doi.org/10.3390/drones7100621
