Abstract

Real-time object detection based on UAV remote sensing is required in many different scenarios. Over the past 20 years, with the development of unmanned aerial vehicles (UAVs), remote sensing technology, deep learning, and edge computing, research on UAV real-time object detection has become increasingly important across different fields. However, because real-time UAV object detection is a comprehensive task involving hardware, algorithms, and other components, its complete implementation is often overlooked. Although there is a large body of literature on real-time object detection based on UAV remote sensing, little attention has been paid to its workflow. This paper systematically reviews previous studies on UAV real-time object detection in terms of application scenarios, hardware selection, real-time detection paradigms, detection algorithms and their optimization technologies, and evaluation metrics. Through visual and narrative analyses, the review answers all of the proposed research questions. Real-time object detection is most in demand in scenarios such as emergency rescue and precision agriculture. Multi-rotor UAVs and RGB images attract the most interest in applications, and real-time detection mainly relies on edge computing with documented processing strategies. GPU-based edge computing platforms are widely used, and deep learning algorithms are preferred for real-time detection. Meanwhile, algorithm optimization needs to focus on deployment to resource-limited computing platforms, for example through lightweight convolutional layers. In addition to accuracy, speed, latency, and energy are equally important evaluation metrics. Finally, this paper thoroughly discusses the challenges of sensor-, edge computing-, and algorithm-related lightweight technologies in real-time object detection. It also discusses the prospective impact of future developments in autonomous UAVs and communications on UAV real-time object detection.

1. Introduction

Unmanned aerial vehicles (UAVs) have been widely used in different scenarios, such as agriculture [1], urban traffic [2], and search and rescue [3]. A recent review by Nex et al. [4] has revealed that the majority of published papers related to UAVs are focused on remote sensing studies [5]. This is largely due to the ease of use, flexibility, and relatively moderate costs of UAVs, which have made them a popular instrument in the remote sensing domain [4].

The emergence of more advanced algorithms will enable the realization of autonomous UAVs [4]. Real-time object detection based on UAV remote sensing is an important perception task for achieving fully autonomous UAVs. In this paper, the term ‘object’ refers to instances of visual objects of a particular class in remote sensing imagery [6]. Any remote sensing application consists of two distinct processes: data acquisition and data analysis [7]. Although rapidly acquiring UAV remote sensing imagery is an essential first step, extracting valuable information from the raw imagery is equally critical for real-time remote sensing, because it enables the interpretation and description of the targets being measured in a particular scene. Traditional data processing has matured, with well-established workflow solutions [4]. However, users’ demand for autonomous UAVs requires a shift in data processing from offline to real time. The ultimate goal of real-time object detection is to enable real-time sensing and reasoning through the automatic, rapid, and precise processing of remotely sensed UAV data. Because imagery is processed as it arrives rather than accumulated for later analysis, real-time object detection also reduces the demand on storage and on physical and virtual memory in resource-limited hardware. Real-time object detection based on UAV remote sensing is therefore significant for rapidly extracting useful information from remote sensing images [8].

UAV technology is at the intersection of many domains, and research in its neighbouring fields influences the processing and utilization of remote sensing data [4]. Applications that leverage the outputs of remote sensing data strongly rely on the detection of one or multiple domain-related objects [9]. Object detection has been widely investigated since the beginning of computer vision [9,10], as it serves as a basis for many other computer vision tasks [6]. As a result, object detection is one of the most critical underlying tasks for processing UAV remote sensing imagery [9]. Object detection has been defined as the procedure of determining the class to which an object instance belongs and estimating the object’s location by outputting a bounding box or the silhouette around the object [11]. In UAV remote sensing research, the definition and application of object detection can be broader. For example, in a soybean leaf disease recognition study [12], the output of the task was not a bounding box but rather colour masks indicating healthy and diseased leaves. In computer vision research, this type of output has a more precise name: segmentation, or more specifically, semantic segmentation. Similar situations also occur in tasks such as classification [13,14] and monitoring [15,16].

The development of autonomous UAVs that analyze data in real time is an emerging trend in UAV data processing [4]. As users’ needs evolve beyond manual post-processing, many UAV remote sensing applications now have a high demand for real-time image detection. For example, in search and rescue scenarios, integrating real-time object detection into a UAV-based emergency warning system can help rescue workers better tackle the situation [17]. In precision agriculture, generating weed maps onboard in real time is essential for weed control tasks and can reduce the time gap between image collection and herbicide treatment [18]. Several previous reviews have also emphasized the importance of real-time object detection. For example, real-time object detection can support emergency responses [7], and UAV real-time object detection is also required for safety reconnaissance and surveillance [19]. However, most of these articles considered only the algorithmic side of real-time object detection and ignored how the algorithms can be deployed on UAVs [20]. Other components needed for a complete UAV real-time object detection workflow, including software and hardware technologies based on embedded systems, have not been fully considered in previous studies and need further investigation [10]. One review [21] investigated hardware, algorithms, and paradigms for real-time object detection but was limited to precision agriculture. Another review [22] provided a well-summarized and categorized overview of real-time UAV applications, as well as relevant datasets, but did not further discuss how to implement real-time object detection based on UAVs. From a technological perspective, better-performing miniaturized hardware and the surge of deep learning algorithms have both had a positive impact on UAV remote sensing data processing [4]. It is therefore worth reviewing and investigating how these new technologies are applied in UAV real-time object detection tasks.

In summary, most of the aforementioned studies focused on investigating real-time detection algorithms and paid less attention to algorithm deployment, which can be critical to implementing UAV object detection successfully in practice. Although some reviews did discuss solutions for deploying UAV real-time object detection algorithms on embedded hardware, they were limited to a specific domain.

This study aims to fill the gaps in the previous work that paid limited attention to onboard UAV real-time object detection implementation. Furthermore, the entire UAV real-time object detection process will be summarized as a hardware and software framework that can be used for subsequent onboard real-time processing. The hardware and software framework can serve as a reference for researchers working in the UAV real-time data processing field and drive them towards the development of more efficient autonomous UAV systems. Therefore, the first research objective of this paper is to summarize the application scenarios and the targets of real-time object detection tasks based on UAV remote sensing in order to demonstrate the necessity of real-time detection. The second research objective is to investigate the current implementation of real-time object detection based on UAV remote sensing to observe the impact of sensors, computing platforms, algorithms, and computing paradigms on real-time object detection tasks in terms of accuracy, speed, and other critical evaluation metrics.

The rest of the paper is organized as follows. Section 2 presents and describes the steps and methodology of our systematic literature review. Then, a general analysis of this systematic review study is provided in Section 3. In Section 4, the quantitative results are visualized and discussed according to the proposed research questions, and Section 5 discusses the current challenges and future opportunities of UAV real-time object detection. Finally, Section 6 concludes the paper.

2. Methodology

This study adopted the guidelines proposed by Kitchenham [23] to undertake a systematic literature review, and the results are reported and visualized accordingly. Following the original guidelines [24], a review protocol was developed at the beginning of the study to specify the reviewing methods and reduce bias. The steps of this protocol are documented below.

2.1. Research Questions

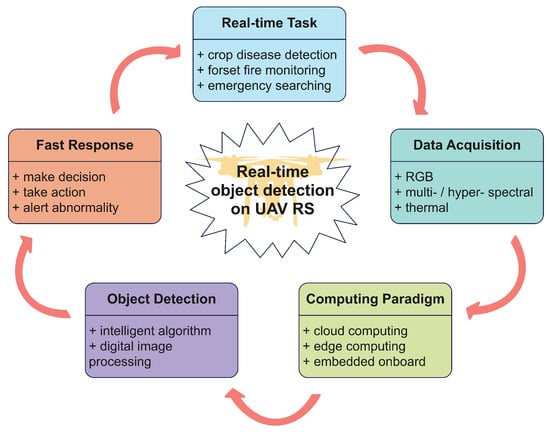

In order to address the problems of real-time object detection based on UAV remote sensing, the complete workflow of real-time object detection tasks in UAV remote sensing needs to be clearly demonstrated. Figure 1 summarizes the process of real-time object detection based on UAVs seen in previous studies [25,26,27] by drawing a concept map to illustrate each step.

Figure 1.

The concept map of real-time object detection based on UAV remote sensing. The closed loop illustrates the complete autonomous workflow, where each node represents one step of real-time object detection.

The complete workflow shown in Figure 1 includes five steps. The starting point is the real-time requirement of specific tasks in different application scenarios, so determining the targets and task scenarios is critical for selecting UAVs, sensors, and algorithms. Then, in the data acquisition phase, the chosen UAVs are equipped with different sensors to collect data according to the task’s needs. Because the remotely sensed data need to be processed in real time, selecting a computing platform is essential for onboard processing, and the computing paradigm for implementing real-time object detection must be established on the chosen platform. Meanwhile, in the object detection phase, the core detection algorithms need to be deployed on the platform. The choice of communication methods determines how data and results are transmitted within the workflow.

Merely obtaining detection results has limited significance in practical applications, because the essence of real-time detection lies in rapid response. Hence, the outcomes of real-time object detection serve as crucial inputs to activities such as decision making, system alerts, or machinery operations. It is these downstream actions that give real-time detection its practical value.

The closed loop in Figure 1 describes the complete autonomous workflow of UAV real-time object detection. In line with the research objectives, this review focuses on advanced real-time object detection based on remote sensing technology, including application scenarios, hardware selection, algorithm design, and implementation methods. The research questions of this paper are accordingly proposed as follows.

RQ1. What are the application scenarios and tasks of real-time object detection based on UAV remote sensing?

RQ2. What types of UAV platforms and sensors are used for different real-time object detection applications?

RQ3. Which types of paradigms were used in real-time object detection based on UAV remote sensing?

RQ4. What commonly used computing platforms can support the real-time detection of UAV remote sensing based on edge computing?

RQ5. What algorithms were used for real-time detection based on UAV remote sensing?

RQ6. Which improved methods were used in real-time detection algorithms for UAV remote sensing?

RQ7. How can we evaluate real-time detection based on UAV remote sensing regarding accuracy, speed, and energy consumption?

These seven RQs are formulated based on the first four phases outlined in the concept map. Rapid response is also a pivotal stage in the overall workflow, since it is the action taken on the outputs of real-time detection. The final phase is therefore explored in Section 5, as part of the future prospects for achieving autonomous UAV capabilities.

2.2. Search Process

The literature search was conducted on 7 February 2023, and relevant journal and conference articles were retrieved from two databases (Web of Science and Scopus). The review topic was divided into three aspects: platform, task, and processing method. To find more literature that encompassed the ‘detection’ task, the terms for the ‘Task’ aspect were extended to other tasks that involve detection, for example, identification, monitoring, segmentation, and classification. Based on these terms, a list of synonyms, abbreviations, and alternative spellings was drawn up, as shown in Table 1.

Table 1.

The list of search terms based on three aspects.

The search strings were constructed from the above terms using the Boolean operators ‘AND’ and ‘OR’, and wildcards were used to improve matching. The search strings in Table 2 were executed separately in the search engines of the two databases.
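To make the AND/OR structure concrete, the following Python sketch assembles a query from per-aspect synonym lists: synonyms within an aspect are joined with OR, and the aspects are joined with AND. The term lists are illustrative placeholders, not the actual contents of Table 1, and the quoting rule (quote multi-word phrases) is an assumption; database-specific field tags are left out.

```python
# Illustrative term lists only; the review's actual terms are given in Table 1.
ASPECTS = {
    "platform": ["UAV*", "drone*", "unmanned aerial vehicle*"],
    "task": ["detect*", "identif*", "monitor*", "segment*", "classif*"],
    "processing": ["real-time", "real time", "onboard"],
}

def quote(term: str) -> str:
    # Multi-word phrases are quoted so that they are matched as a phrase.
    return f'"{term}"' if " " in term else term

def build_query(aspects):
    # Synonyms inside one aspect are OR-ed; the aspect groups are AND-ed.
    groups = ["(" + " OR ".join(quote(t) for t in terms) + ")" for terms in aspects.values()]
    return " AND ".join(groups)

print(build_query(ASPECTS))
```

Each additional aspect narrows the result set, while each additional synonym widens it, which is why extending the ‘Task’ terms retrieves more candidate papers.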

Table 2.

The search strings for two databases.

2.3. Inclusion and Exclusion Criteria

The search results were imported into the reference management software EndNote. Following automated de-duplication, the remaining documents were filtered manually to select the final set of literature for review. For this purpose, a set of inclusion and exclusion criteria based on the research questions was applied, mainly by reading titles and abstracts. The full text was read when a decision could not be made clearly from the title and abstract.
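EndNote's duplicate detection is its own implementation; purely as an illustration, a common approach matches records on a normalized DOI and falls back to a normalized title. The Python sketch below, including the record layout and the example DOIs, is hypothetical.

```python
import re

def normalize_title(title: str) -> str:
    # Lower-case and keep only letters and digits, so punctuation and spacing
    # differences between databases do not prevent a match.
    return re.sub(r"[^a-z0-9]", "", title.lower())

def deduplicate(records):
    """Keep the first copy of each paper, matching on DOI or normalized title."""
    seen_dois, seen_titles, unique = set(), set(), []
    for rec in records:
        doi = (rec.get("doi") or "").strip().lower()
        title = normalize_title(rec.get("title", ""))
        if (doi and doi in seen_dois) or (title and title in seen_titles):
            continue  # a copy of this paper has already been kept
        if doi:
            seen_dois.add(doi)
        if title:
            seen_titles.add(title)
        unique.append(rec)
    return unique

# Hypothetical records exported from two databases
records = [
    {"source": "WOS", "doi": "10.1000/xyz1", "title": "Real-Time Detection on UAVs"},
    {"source": "Scopus", "doi": "10.1000/XYZ1", "title": "Real-time detection on UAVs."},
    {"source": "Scopus", "doi": "", "title": "Onboard Weed Mapping"},
]
print(len(deduplicate(records)))  # 2
```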

Peer-reviewed articles on the following topics that had full texts written in English were included:

- Articles that discussed real-time object detection tasks or algorithms that are applied to UAV remote sensing;

- Articles that specifically mentioned onboard real-time processing and used optical sensors.

The exclusion criteria are shown as follows in Table 3.

Table 3.

The table of exclusion criteria during literature selection.

2.4. Data Extraction

The corresponding evidence was extracted from the retrieved papers to answer the proposed questions properly. For this purpose, we designed and created a data extraction table, including general and detailed information. The general information contained the publication year and the source of the literature. The detailed information included the application categories (scenario, task, and target), hardware components (UAV, sensor, computing platform, and communication), real-time detection method (implementation paradigm, algorithm, and improvement method), and assessment and evaluation metrics (accuracy, speed, latency, and energy). The data were extracted by carefully reading and reviewing the selected papers.
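One way to keep the extraction table consistent is to give each row a fixed schema. The Python dataclass below mirrors the fields listed above; the field names and types are our own assumptions, and `None` marks information that a paper does not report.

```python
from dataclasses import dataclass, field
from typing import List, Optional

@dataclass
class ExtractionRecord:
    # General information
    year: int
    source: str                      # journal or conference name
    # Application categories
    scenario: str
    task: str
    target: str
    # Hardware components (None when a paper does not report them)
    uav: Optional[str] = None
    sensor: Optional[str] = None
    computing_platform: Optional[str] = None
    communication: Optional[str] = None
    # Real-time detection method
    paradigm: Optional[str] = None
    algorithm: Optional[str] = None
    improvements: List[str] = field(default_factory=list)
    # Assessment and evaluation metrics
    accuracy: Optional[float] = None
    speed_fps: Optional[float] = None
    latency_ms: Optional[float] = None
    energy_j: Optional[float] = None

    def reported_metrics(self) -> List[str]:
        """Names of the evaluation metrics this paper actually reports."""
        names = ["accuracy", "speed_fps", "latency_ms", "energy_j"]
        return [name for name in names if getattr(self, name) is not None]

# Hypothetical row: a paper that reports detection speed only
rec = ExtractionRecord(year=2021, source="Remote Sensing", scenario="agriculture",
                       task="detection", target="weed", speed_fps=25.0)
print(rec.reported_metrics())  # ['speed_fps']
```

Making unreported values explicit is what allows statements such as "speed, latency, and energy are reported less often than accuracy" to be counted rather than estimated.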

2.5. Data Synthesis

Data synthesis involves collecting and summarizing the results of the included primary studies [24]. In this review study, the results are discussed in detail through descriptive synthesis, and a quantitative synthesis is presented through data visualization to answer the relevant research questions.

3. Analysis of Selected Publications

3.1. Study Selection

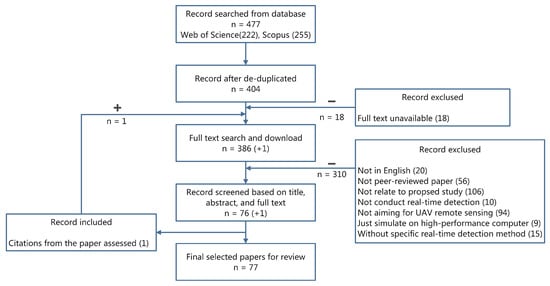

The flow-chart diagram in Figure 2 shows the process of publication selection. A total of 477 records were obtained from the search, of which 222 were from WOS and 255 were from Scopus. After duplicates were removed, 404 documents remained, 18 of which had no available full text. The remaining 386 documents were screened by reviewing titles, abstracts, and full texts, and 310 were excluded based on the exclusion criteria. In addition, one paper cited by the assessed papers that could contribute to answering the research questions was added to the final database after being evaluated against the inclusion and exclusion criteria. Finally, 77 publications were included in our review study.
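The selection counts are internally consistent, which can be checked with a short Python tally. The number of duplicates (73) is not stated in the text but follows from 477 minus 404.

```python
wos, scopus = 222, 255
retrieved = wos + scopus                  # 477 records in total
after_dedup = 404
duplicates = retrieved - after_dedup      # 73 duplicates removed
screened = after_dedup - 18               # 386 documents with available full text
excluded = 310
added_from_citations = 1
included = screened - excluded + added_from_citations

print(retrieved, duplicates, screened, included)  # 477 73 386 77
```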

Figure 2.

The process of publication selection.

3.2. Overview of Reviewed Publications

This subsection analyzes the general information contained in the 77 papers and provides an overview of the papers reviewed.

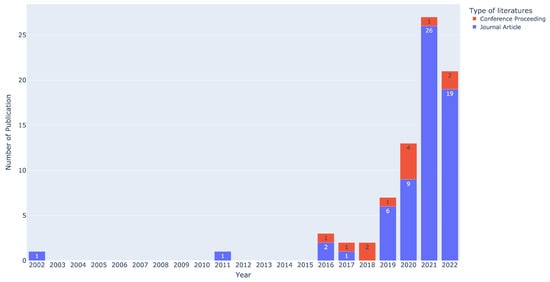

Figure 3 illustrates that the need for real-time object detection was raised by researchers as early as 2002. In that study [28], the authors used a UAV to track a target and keep it in the middle of the image, ensuring real-time performance by combining a successive-step search with a multi-block search method. However, the number of relevant studies did not begin to increase dramatically until 2019, reaching 27 in 2021. Although the number decreased in 2022, it remained above 20, and the overall trend has been upward in recent years. This indicates that real-time object detection remains of significant interest in the field of UAV remote sensing.

Figure 3.

The year of publication and the distribution of literature type.

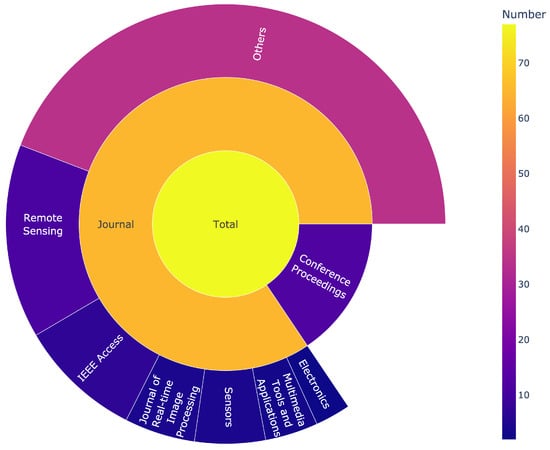

In terms of literature sources, the majority of the literature comes from journals rather than conference proceedings. Figure 4 illustrates the names and the number of journals that have at least two papers, and the remaining journals with only one document are summarized in the ‘Other’ category. All conferences included only one selected paper. Therefore, they are presented as a whole in Figure 4, called ‘Conferences proceedings’.

Figure 4.

Distribution of selected publications in specific journals (Remote Sensing, IEEE Access, Journal of Real-Time Image Processing, Sensors, Multimedia Tools and Applications, and Electronics) or conference proceedings. Only journals with two or more publications are mentioned. Additional publications are presented in the ‘Other’ category.

The results indicate that publications on UAV real-time object detection appeared in 40 different journals and 12 different conference proceedings. Of these, 46 journals or conference proceedings included only one paper, showing that the research is relatively scattered across various fields, for example, electronics [29,30], multimedia applications [31,32,33], and transportation engineering [34,35,36]. The most popular journal is Remote Sensing, with 11 papers, followed by IEEE Access with 7 papers and by Sensors and the Journal of Real-Time Image Processing with 4 each. This suggests that research on UAV real-time object detection has been concentrated in remote sensing and real-time image processing.
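The venue counts can be cross-checked in the same way. Since every conference contributed a single paper, the 46 single-paper venues are 12 conferences plus 34 journals, which leaves 6 multi-paper journals and matches the six journals named in Figure 4. Subtracting the papers of the four journals with stated counts gives the combined total for the remaining two journals; their individual counts are not given in the text.

```python
journals, conferences = 40, 12
single_paper_venues = 46      # journals or proceedings with exactly one paper
total_papers = 77

venues = journals + conferences                              # 52 distinct venues
multi_paper_venues = venues - single_paper_venues            # 6
single_paper_journals = single_paper_venues - conferences    # 34 (each conference has one paper)

stated = {"Remote Sensing": 11, "IEEE Access": 7, "Sensors": 4,
          "Journal of Real-Time Image Processing": 4}
# Papers left for the two remaining multi-paper journals in Figure 4
remaining = total_papers - single_paper_venues - sum(stated.values())

print(venues, multi_paper_venues, single_paper_journals, remaining)  # 52 6 34 5
```

A remainder of 5 is consistent with both remaining journals having at least two papers each.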

4. Results

In this section, we analyze the 77 selected publications to address the previously formulated research questions using both quantitative analysis and descriptive review.

4.1. Application Scenarios and Tasks of Real-Time Object Detection

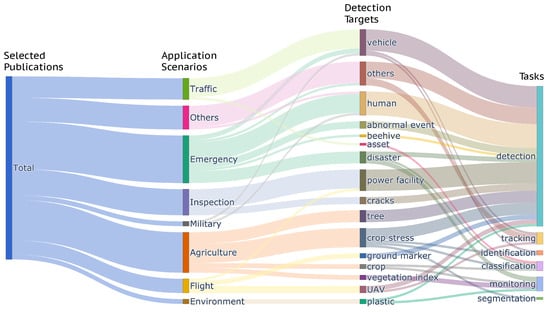

As UAV usability expands across diverse applications, the research focus has shifted towards real-time object detection using UAV remote sensing. This literature review encompasses the scope of real-time object detection scenarios, targets, and task categorizations, as depicted in Figure 5.

Figure 5.

This Sankey diagram of the selected literature illustrates the connectivity among real-time detection scenarios, detection targets, and tasks. Proceeding from left to right, the diagram categorizes and summarizes application scenarios present in the selected literature, the diverse detection targets within varying application contexts, and the tasks executed for each specific target. The width of the flow between nodes signifies the amount of literature pertaining to each node.

We identified seven major scenarios of UAV real-time detection, and Figure 5 illustrates the specific detection targets and tasks within each. Papers focused on algorithmic aspects have been categorized under the ‘Other’ category [33,37,38,39,40,41,42,43,44].
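In a Sankey diagram such as Figure 5, the width of each link is the number of papers flowing between two nodes. A minimal Python sketch of that aggregation from extraction records is shown below; the three-level tuple layout and the toy records are hypothetical, not the review's data. A paper with several targets or tasks would contribute one tuple per combination.

```python
from collections import Counter

def sankey_links(rows):
    """Count papers per link of a scenario -> target -> task Sankey diagram.

    Node names are prefixed with their level so that a target and a task
    sharing the same label remain distinct nodes.
    """
    links = Counter()
    for scenario, target, task in rows:
        links[(f"scenario:{scenario}", f"target:{target}")] += 1
        links[(f"target:{target}", f"task:{task}")] += 1
    return dict(links)

# Hypothetical toy records (scenario, target, task), not the reviewed papers
papers = [
    ("emergency", "human", "detection"),
    ("emergency", "human", "tracking"),
    ("agriculture", "weed", "detection"),
    ("traffic", "vehicle", "detection"),
]
for (src, dst), width in sankey_links(papers).items():
    print(f"{src} -> {dst}: {width}")
```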

Emergency scenarios appear 20 times in the selection, reflecting the need for real-time responsiveness in critical situations. The Sankey diagram in Figure 5 shows that, in emergency scenarios, the primary detected targets are humans [45,46,47,48,49,50,51,52], natural disasters [53,54,55,56,57], and abnormal crowd events [31,58], all of which require swift responses to avoid casualties. A further subset of detection targets comprises vehicles [28,59] and ships [60], both of which can be exposed to dangerous conditions. UAV real-time object detection is a viable solution to these challenges; in particular, providing emergency services during a crisis event is vital for rescue missions [45].

Agriculture is the second most prominent application scenario, with seventeen relevant papers. Precision agriculture emphasizes the fast and accurate detection of crop stress, including crop diseases [61,62,63], pests [64], and surrounding weeds [18,65,66,67], as a means to reduce pesticide dependency and promote sustainable farming practices [65]. Real-time information enables swift responses to the spread of diseases, pests, and weeds, thereby minimizing the impact of crop stress. Real-time techniques are also applied to vegetation indices [68,69] and crop monitoring [70,71], supporting monitoring systems and informed decision making. This scenario also includes tree detection [72,73,74,75,76], which is often used for the real-time identification of tree canopies, for example in precision spraying [75] and in fruit yield estimation through counting [73].

Traffic scenarios also include numerous real-time detection applications, with vehicles as the primary detection target. One key task is traffic flow analysis, which requires real-time detection to derive vehicle speed and traffic density [34,77,78]. Real-time vehicle detection is also used to distinguish the different vehicle types on roads, such as cars, trucks, and excavators [36,67,79,80], and has also been applied to tracking [81,82].

In the inspection scenario, real-time detection assumes a pivotal role in assessing power facility components [29,83,84,85,86,87,88] and detecting surface cracks in bridges and buildings [35,89,90]. These applications are intricately linked to power transmission, building health, and personnel safety.

Among the less common application scenarios is flight planning, where real-time detection is primarily used to ensure UAV flight safety by surveying surrounding targets such as other drones [30,91,92]. In addition, some studies detect specific targets for UAV flight testing [93,94,95].

In environmental protection scenarios, studies [96,97] underscore the urgency of real-time detection. In particular, marine plastics move quickly, so they must be detected immediately to enable prompt interventions.

In terms of task distribution, most studies (55) focus on detection tasks. Although some tasks differ from object detection, such as monitoring [56] and tracking [81], object detection is the foundational step in visual recognition [11]. Hence, the remaining studies also require real-time object detection.

4.2. UAV Platforms and Sensors for Different Real-Time Detection Applications

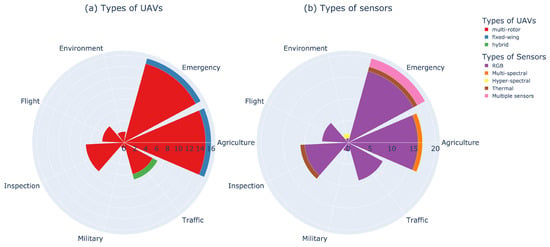

UAVs can be categorized based on various criteria, including purpose, size, load, and power [21]. The most distinguishing factor is often their aerodynamic characteristics, which lead to the classification into fixed-wing, multi-rotor, and hybrid UAVs [98]. Excluding studies that did not specify the UAV type, we tallied the UAV types used across the different real-time object detection scenarios. The results are presented in Figure 6a.

Figure 6.

(a) Types of UAVs and (b) types of sensors used in different real-time object detection scenarios derived from the selected set of 77 publications.

Fixed-wing UAVs have fixed wings and rely on forward airspeed to generate lift [98]. However, they cannot move backward, hover, or rotate in place [99], and they have demanding take-off and landing requirements [21]. Our review shows that only two studies used fixed-wing UAVs for real-time object detection applications: one in emergencies [28] and one in agriculture [76].

In contrast, real-time detection applications are dominated by multi-rotor UAVs [85,93,94], which is mainly attributed to their low manufacturing cost [99]. The overwhelming majority of the reviewed studies use this type. Multi-rotor UAVs use multiple rotors to generate lift [98], which enables vertical landing, agile maneuvers, and quick take-offs. Furthermore, their inherent hovering capability makes multi-rotor UAVs especially well suited to aerial photography [4]. Fixed-wing UAVs, by contrast, must keep moving forward during image capture, so they require sensors with fast shutters to counteract the resulting motion blur when no forward-motion compensation is available.
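The motion-blur argument can be made quantitative with a standard estimate: blur in pixels is the ground distance travelled during the exposure divided by the ground sampling distance (GSD). The speed and GSD values below are hypothetical and only illustrate the scaling; a hovering multi-rotor, with ground speed near zero, largely avoids the problem.

```python
def motion_blur_px(ground_speed_mps: float, exposure_s: float, gsd_m_per_px: float) -> float:
    """Blur in pixels = ground distance covered during the exposure / GSD."""
    return ground_speed_mps * exposure_s / gsd_m_per_px

# Hypothetical fixed-wing case: 20 m/s cruise speed, 2 cm/pixel GSD
for shutter_s in (1 / 250, 1 / 1000, 1 / 2000):
    blur = motion_blur_px(20.0, shutter_s, 0.02)
    print(f"1/{round(1 / shutter_s)} s -> {blur:.1f} px")  # about 4, 1 and 0.5 px
```

Halving the exposure time halves the blur, which is why faster shutters are the usual remedy when the platform cannot slow down.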

The third UAV category is hybrid-wing UAVs, which combine fixed-wing and multi-rotor technologies. These hybrids use their rotors for vertical take-off and landing and their fixed wings for extended linear coverage. Hybrid-wing UAVs are rarely used in the reviewed studies; they appear only once, in a real-time traffic scenario for asset detection [80].

The remote sensing sensors employed in real-time object detection include RGB [53,64,71,73], multi-spectral [74], hyperspectral [96], and thermal infrared [48,84] sensors, as well as the fusion of multiple sensors [50,60]. As Figure 6b shows, RGB sensors are the predominant sensor type for real-time object detection in UAV-based remote sensing. Most studies in our review appear to treat RGB as the default sensor for real-time object detection, owing to its high resolution, light weight, low cost, and ease of use.

Multi- and hyper-spectral cameras can capture spectral information from objects of interest [100,101], and each has unique characteristics. Multi-spectral sensors offer centimeter-level remote sensing data with several spectral bands, typically blue, green, red, red-edge, and near-infrared. In contrast, hyper-spectral sensors provide a multitude of narrow bands extending from ultraviolet to longwave [21]. The application of multi-spectral and hyper-spectral sensors in non-real-time object detection based on UAV remote sensing has been extensively explored in various domains, for instance, precision agriculture [102,103], landslide monitoring [104], and power line inspection [105]. However, our review found few studies employing spectral sensors where real-time object detection is required: only two, covering the detection of tree canopies using multi-spectral cameras [74] and the detection of macroplastics in the marine environment using hyper-spectral cameras [96].

Two of the reviewed studies used thermal infrared cameras for their temperature sensitivity. One focused on detecting photovoltaic panels [84]; the other aimed to identify individuals with elevated body temperatures, a potential indicator of COVID-19 infection [48].

Two further studies reported applications that fuse multiple sensors. In [50], thermal sensors were used to identify individuals in need of rescue, while an RGB sensor was used simultaneously for precise localization. In [60], an RGB camera was used to detect vessel morphology, while thermal infrared imaging was used to pinpoint heat emissions from engines.

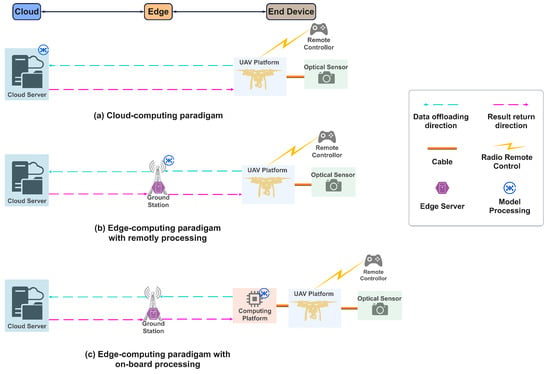

4.3. Two Paradigms for Real-Time Detection

Real-time object detection means that a UAV equipped with sensors and processors can quickly process the collected data and reliably deliver the required information. Our review identified three kinds of paradigms used for real-time object detection: embedded systems, cloud computing, and edge computing. The majority of studies (n = 72) adopted the edge computing paradigm, 4 studies reported using embedded systems, and only one study adopted a cloud computing solution.

Cloud computing is a computing paradigm that provides on-demand services to end-users through a pool of computing resources, including storage, computing, data, and applications [106]. The cloud computing paradigm is shown in Figure 7a. In our review, the advantages of this paradigm are reflected in [73]. First, the ground station stores the images collected by the UAV and streams them to the cloud, where powerful computing and storage resources can process massive volumes of UAV remote sensing data. Second, end-users can access the cloud services from anywhere at any time. In addition, the fault-tolerance mechanism of cloud computing ensures the high reliability of its processing and analysis services, and the cloud center’s ability to dynamically allocate or release resources according to user demand is a further strength.

Figure 7.

The paradigm of (a) cloud computing, (b) edge computing with remote processing, and (c) edge computing with onboard processing.

However, cloud computing has drawbacks for real-time object detection on UAVs. These mainly stem from the growing volume of data generated at the edge: network bandwidth is limited, which makes it difficult to upload the data to the cloud for processing and analysis [107].
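The bandwidth problem is easy to quantify for uncompressed video. The Python sketch below computes the raw bit rate for an assumed 1080p RGB stream at 30 frames per second; the resolution and frame rate are illustrative, not taken from the reviewed studies. Real links carry compressed video at far lower rates, but lossy compression costs computation and image quality, so the raw figure indicates the scale of data an edge device produces.

```python
def raw_video_bitrate(width: int, height: int, channels: int,
                      bits_per_channel: int, fps: float) -> float:
    """Uncompressed video bit rate in bits per second."""
    return width * height * channels * bits_per_channel * fps

# Assumed example: 1920 x 1080 RGB, 8 bits per channel, 30 frames per second
bps = raw_video_bitrate(1920, 1080, 3, 8, 30)
print(f"{bps / 1e9:.2f} Gbit/s")  # 1.49 Gbit/s
```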

Edge computing can effectively solve this problem. There are many definitions of edge computing [106,107,108], but their common core is that edge computing provides cloud services and an IT service environment to users at the edge of the network. Its goal is to provide computing, storage, and network bandwidth services close to the end-devices or end-users, thereby reducing latency, ensuring efficient network operation and service delivery, and improving the user experience.

For real-time UAV object detection, the edge end is usually the UAV that generates an enormous volume of data. Based on the above concept of edge computing, we can divide edge computing into two forms:

- In the first form, the edge end cannot perform large computations; it processes only part of the data and then offloads the rest to the edge server (ground station) for processing. Figure 7b shows this form of edge computing. In this case, the so-called ground station is a generic term, usually for a comparatively high-performance computing platform such as a laptop [59], a mobile phone [48,51], or a single-board computer [57].

- In the second form, the edge end is also the edge server and can process data while collecting them. This paradigm is shown in Figure 7c. Many studies in our review adopt this form [31,34,58,84,94]; they integrate sensors and embedded computing platforms onto the UAV and treat them as a whole, which constitutes the edge end. During the flight, images are captured and processed simultaneously to obtain the detection results.

It is worth mentioning that in some studies, real-time object detection based on UAV remote sensing is one part of a complete system [31], so the results are normally uploaded to the cloud center. However, the edge computing for real-time detection ends at the computing platform or ground station that provides the required information; the subsequent tasks are not discussed here.

Edge computing relies on embedded systems, and, as noted above, edge-end computing platforms are built from embedded systems. However, some early real-time target detection studies [58,82] used embedded devices whose performance could not meet the standards required for edge computing, so they were placed in a separate category.

4.4. Computing Platforms Used for Edge Computing

The analysis in the previous section shows that real-time object detection is primarily implemented through edge computing, so the computing platforms used for real-time edge computing are important and can guide hardware selection in subsequent studies. GPU-based computing platforms, in particular, provide more computing power for the deep learning algorithms that are popular in image processing. Table 4 lists three typical GPU-based computing platforms that are frequently used in edge computing applications in the selected literature.

Table 4.

List of commonly used GPU-based computing platforms. The number of ‘+’ symbols represents the level of performance, with more ‘+’ indicating stronger performance. The critical parameters, namely the central processing unit (CPU), graphics processing unit (GPU), memory, power, and AI performance, are compared, together with the price of each platform. Typical applications from the selected literature are also listed.

The Nvidia Jetson TX2 is the most commonly used computing platform, possibly because it offers a relatively balanced combination of processing power, power consumption, and price. The widespread use of GPU-based computing platforms also reflects the development of artificial intelligence, especially deep learning, which has become the predominant approach in image recognition and makes GPU platforms attractive for their parallel computing performance.

Field programmable gate arrays (FPGAs) are rarely used in the reviewed studies, with only one mention in the literature [69], although their reprogrammability and low power consumption make them well suited to edge computing applications. Other, non-GPU accelerators have also been reported, such as the tensor processing unit (TPU), which is optimized for tensor computations [74], and the Intel Neural Compute Stick, which is based on a vision processing unit (VPU) [31,75]. Some CPU-based computing platforms, such as the Raspberry Pi series, have also been reviewed. Although the Raspberry Pi is normally used as a comparison item in benchmark studies, it has also served as the core computing platform in some studies. For example, an insulator detection study ran a lightweight algorithm on a Raspberry Pi 4B to achieve real-time detection [87].

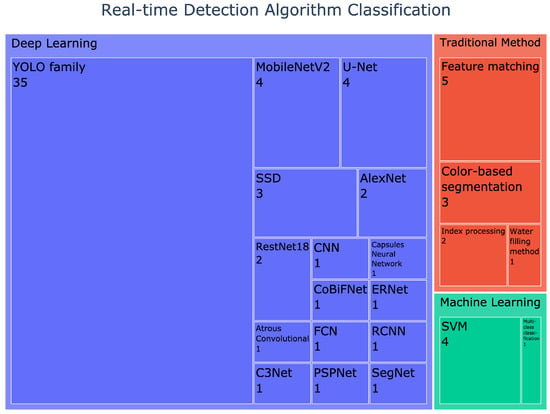

4.5. Real-Time Object Detection Algorithms

Based on our review of the selected literature, three categories of algorithms have been employed for real-time detection in unmanned aerial vehicle (UAV) remote sensing: traditional methods, machine learning, and deep learning. Previous surveys [8,12,21] have indicated that deep learning stands as a pivotal approach for achieving real-time detection in UAV remote sensing owing to its widespread application in image detection. The findings of this study further corroborate this assertion. With the exception of one reference that does not explicitly outline a specific algorithm, among the remaining literature, 60 works utilized deep learning algorithms, 5 employed machine learning methods, and 11 adopted conventional approaches. Figure 8 illustrates the distribution of specific algorithms utilized within each category.

Figure 8.

Classification of different real-time detection algorithms. The tree map shows the specific algorithms in each category and the number of uses.

The so-called “traditional methods” predominantly refer to approaches grounded in digital image processing that analyze characteristics such as the morphology and colour of the target object. Articles using feature-matching methods [28,72,81,93,109] fall into this category, including a geometric approach for matching morphological features [93]. Colour-based segmentation methods [57,73,86] have also been reported. Studies using non-RGB sensors incorporate signal processing techniques, such as the water-filling method [84]. Other studies compute vegetation indices [68,69] from the required spectral bands and derive the detection results from them.
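
As an illustration of the vegetation-index approach, the sketch below computes the excess green index (ExG = 2g − r − b on sum-normalized chromatic coordinates), a common RGB-only index, and thresholds it into a vegetation mask. The choice of ExG, the function names, and the threshold of 0.1 are assumptions for illustration; the selected studies [68,69] may use different indices and band combinations.

```python
def excess_green(r, g, b):
    """Excess green index computed on chromatic (sum-normalized) coordinates."""
    total = r + g + b
    if total == 0:
        return 0.0  # black pixel: no colour information
    rn, gn, bn = r / total, g / total, b / total
    return 2 * gn - rn - bn


def vegetation_mask(image, threshold=0.1):
    """Mark pixels whose ExG exceeds an (assumed) threshold as vegetation.

    image: list of rows, each row a list of (R, G, B) tuples.
    """
    return [[excess_green(*px) > threshold for px in row] for row in image]


# Hypothetical 2x2 image: two green pixels, one soil-like pixel, one grey pixel.
image = [
    [(40, 160, 30), (120, 100, 80)],
    [(90, 90, 90), (30, 140, 40)],
]
mask = vegetation_mask(image)
```

Because the index is a handful of arithmetic operations per pixel, this kind of method is cheap enough to run at high frame rates on modest hardware.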

Regarding machine learning, most studies used the support vector machine (SVM) algorithm [37,60,79,110]. Because an SVM alone usually does not meet real-time requirements, these studies commonly modified it or combined it with additional methods to speed it up. For example, the bag of features approach, which originates from the bag of words model, was used to simplify the model [79]. Research using multi-class classification has also been documented [96].
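
A bag of features representation turns a variable number of local descriptors into one fixed-length vector: each descriptor is assigned to its nearest "visual word" in a codebook, and the word counts are normalized into a histogram. The sketch below assumes a codebook has already been learned (for example, by k-means) and uses hypothetical 2-D descriptors; it is not the exact pipeline of [79].

```python
import math


def nearest_word(descriptor, codebook):
    """Index of the codebook entry (visual word) closest in Euclidean distance."""
    return min(range(len(codebook)), key=lambda k: math.dist(descriptor, codebook[k]))


def bof_histogram(descriptors, codebook):
    """L1-normalized histogram of visual-word occurrences for one image."""
    counts = [0] * len(codebook)
    for d in descriptors:
        counts[nearest_word(d, codebook)] += 1
    n = len(descriptors)
    return [c / n for c in counts] if n else counts


# Hypothetical codebook with two visual words and four local descriptors.
codebook = [(0, 0), (10, 10)]
descriptors = [(1, 1), (0, 2), (2, 0), (9, 9)]
hist = bof_histogram(descriptors, codebook)
```

The resulting histogram can be passed to a linear SVM, whose prediction then costs a single dot product per image.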

In recent years, deep learning has found extensive application in image detection tasks. However, it also imposes significant demands on computational and storage resources, rendering its deployment in real-time tasks challenging [21]. As a result, there is often a need to streamline deep learning models. In our investigation, we identified 4 articles [42,54,63,77] utilising custom-designed networks, while the remaining 56 references optimized pre-existing models for application in real-time detection tasks within UAV remote sensing.

Deep learning-based object detection algorithms have been categorized into two-stage and one-stage algorithms [111]. Although two-stage detection was successful in the early stages, its speed has been an important challenge. A one-stage object detection algorithm passes the entire image through a CNN once and predicts boxes and class scores on a fixed grid, rather than first generating region proposals and classifying each of them separately. Among the literature optimizing pre-existing models, most studies favoured one-stage models. Notably, the YOLO (You Only Look Once) model, in its various versions, accounted for a substantial portion, with a total of 35 articles; the versions included YOLOv3-Tiny [94], YOLOv4 [43], and YOLOv5 [97], among others. Another one-stage detection algorithm, SSD (Single Shot MultiBox Detector) [40], featured in 3 articles. Two studies employed two-stage detection algorithms, specifically R-CNNs (regions with convolutional neural networks) [83]. Several other deep learning architectures, including lightweight ones, have also been used for real-time object detection, namely MobileNetV2 [36,41,52,95], U-Net [66,67,74,76], ResNet18 [38,65], AlexNet [18,44], FCN [58], and SegNet [71].
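
To make the grid idea concrete, the sketch below decodes one raw prediction from an S×S output grid into a bounding box, following the YOLOv2/v3-style parameterization (sigmoid offsets inside a cell, exponential scaling of an anchor box). The grid size, anchor values, and the use of image-normalized coordinates are illustrative assumptions; the YOLO versions used in the reviewed studies differ in their details.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def decode_cell(tx, ty, tw, th, t_obj, row, col, grid_size, anchor_w, anchor_h):
    """Decode one YOLO-style prediction into (cx, cy, w, h, objectness).

    Coordinates are normalized to [0, 1] relative to the image; anchor sizes
    are assumed to be given in the same normalized units.
    """
    cx = (col + sigmoid(tx)) / grid_size  # box centre x: cell index plus offset
    cy = (row + sigmoid(ty)) / grid_size  # box centre y
    w = anchor_w * math.exp(tw)           # width rescales the anchor
    h = anchor_h * math.exp(th)           # height rescales the anchor
    return cx, cy, w, h, sigmoid(t_obj)


# Hypothetical example: all-zero raw outputs in cell (row=3, col=6) of a 13x13 grid.
box = decode_cell(0.0, 0.0, 0.0, 0.0, 0.0, row=3, col=6, grid_size=13,
                  anchor_w=0.2, anchor_h=0.3)
```

Because every cell is decoded the same way, one forward pass yields all candidate boxes, which are then typically filtered by an objectness threshold and non-maximum suppression.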

4.6. Technologies Used for Improving UAV Real-Time Object Detection Algorithms

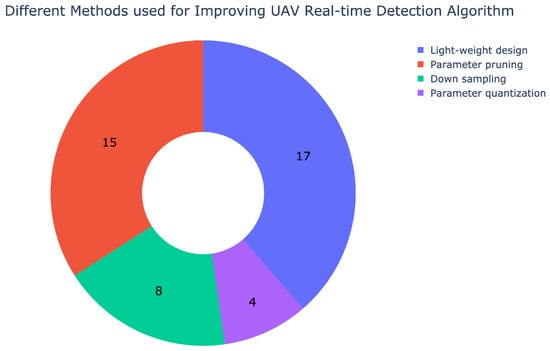

In pursuit of better performance, deep learning models are often designed to be deeper and more complex, which inevitably increases computing latency. In real-time tasks, however, accuracy is not the only criterion, so these architectures need further refinement to suit the resource-constrained environment of unmanned aerial vehicles (UAVs). Among the 60 articles employing deep learning models, our analysis found that 42 optimized the speed of UAV real-time detection, and 36 of these proposed algorithmic optimisations. Figure 9 illustrates the frequency of the different optimisation methods mentioned across the 33 articles discussing algorithmic improvements.

Figure 9.

Number of uses of improving methods for real-time UAV detection algorithms.

The remaining 6 articles did not use algorithmic optimisations. Instead, they improved the overall speed of the real-time detection system through system-level strategies, such as refining how results are output in conjunction with the GPU hardware architecture. For instance, they output only the portions of the image containing detected objects, thereby reducing transmission overhead [61,80].
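
The effect of outputting only detected regions can be estimated with simple arithmetic. The sketch below crops the pixels inside detection boxes and compares the raw payload with that of the full frame. The frame size, box coordinates, and the uncompressed 3-bytes-per-pixel assumption are hypothetical and are not taken from [61,80].

```python
def crop(frame, box):
    """Return the sub-image inside box = (x0, y0, x1, y1), end-exclusive."""
    x0, y0, x1, y1 = box
    return [row[x0:x1] for row in frame[y0:y1]]


def payload_bytes(width, height, bytes_per_pixel=3):
    """Raw (uncompressed) size of an RGB region in bytes."""
    return width * height * bytes_per_pixel


# Hypothetical 1920x1080 frame with two detections of 128x128 and 64x64 pixels.
frame_w, frame_h = 1920, 1080
boxes = [(100, 200, 228, 328), (1500, 600, 1564, 664)]
full = payload_bytes(frame_w, frame_h)
cropped = sum(payload_bytes(x1 - x0, y1 - y0) for x0, y0, x1, y1 in boxes)
```

Under these assumptions, the cropped payload is about 1% of the full frame, so the saving grows as detections become sparser.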

The essence of lightweight design lies in replacing bulky convolution filters with compact ones. Specifically, a larger convolution filter is replaced by multiple smaller ones stacked in sequence, or by factorized operations such as the depthwise separable convolutions used in MobileNet, to achieve a comparable outcome at a lower cost. In our investigation, this approach is commonly applied to the backbone network of the YOLO algorithm, yielding promising results such as a smaller model and faster inference. In [75], ShuffleNetV2 replaced the backbone network of YOLOv3-Tiny. In [90], MobileNetV2 replaced the DarkNet53 backbone of YOLOv3. Moreover, in [43,56,88], MobileNetV3 replaced the CSPDarkNet53 backbone of YOLOv4.
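
The savings from compact filters can be checked by counting weights. The sketch below compares a standard 3×3 convolution with a depthwise separable one (MobileNet style) and a single 5×5 convolution with two stacked 3×3 convolutions covering the same receptive field. Bias terms are ignored, and the channel count of 256 is a hypothetical value.

```python
def conv_params(k, c_in, c_out):
    """Weights of a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k, c_in, c_out):
    """Depthwise k x k conv (one filter per input channel) + 1x1 pointwise conv."""
    return k * k * c_in + c_in * c_out


c = 256  # hypothetical channel count (input = output)
standard_3x3 = conv_params(3, c, c)
separable_3x3 = depthwise_separable_params(3, c, c)
single_5x5 = conv_params(5, c, c)
two_3x3 = 2 * conv_params(3, c, c)  # same 5x5 receptive field
```

With these values, the separable layer needs roughly 8.7 times fewer weights than the standard 3×3 layer, and two stacked 3×3 layers need about 28% fewer weights than one 5×5 layer.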

Deep learning networks contain redundant parameters, which not only increase the size of the model but also slow down its execution. Pruning the parameters of deep learning models to achieve higher processing speeds is therefore another way to make these models lighter [21]. For instance, studies have reported parameter pruning in the fully connected layers [83], as well as pruning applied to every layer of the model [64,82]. Other studies prune further by reducing the number of channels in convolutional layers [38,55,111,112].
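
Two common, generic pruning criteria are sketched below: unstructured magnitude pruning, which zeroes the smallest-magnitude weights, and structured channel pruning, which keeps the output filters with the largest L1 norm. The sparsity level, the number of kept filters, and the toy weights are hypothetical; the reviewed studies may use other criteria and usually fine-tune the network after pruning.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the given fraction of weights with the smallest magnitude."""
    n_prune = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


def prune_channels(filters, keep):
    """Keep the `keep` output filters with the largest L1 norm (structured pruning).

    filters: list of filters, each flattened to a list of weights.
    Returns the indices of the kept filters, in their original order.
    """
    norms = [sum(abs(w) for w in f) for f in filters]
    ranked = sorted(range(len(filters)), key=lambda i: norms[i], reverse=True)
    return sorted(ranked[:keep])


pruned = magnitude_prune([0.5, -0.05, 0.3, 0.01, -0.8], sparsity=0.4)
kept = prune_channels([[0.1, 0.1], [1.0, -1.0], [0.5, 0.0], [0.0, -0.9]], keep=2)
```

Channel pruning removes whole filters, so the remaining smaller dense layer runs faster on ordinary hardware, whereas unstructured sparsity usually needs sparse-aware kernels to translate into speed-ups.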

The purpose of parameter quantisation is to reduce the size of trained models for storage and transmission. Rather than designing smaller model architectures, it represents parameters with lower-bit fixed-point numbers. By lowering numerical precision, this approach reduces model storage space and significantly decreases the required computational resources. In [74], model parameters were quantized into 8-bit integers, enabling real-time execution with an acceptable loss of accuracy. Using TensorRT on GPU hardware also enables model quantisation for accelerated inference, an approach employed in [35,40,94].
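
The sketch below shows per-tensor symmetric 8-bit quantisation: a single scale maps the largest absolute weight to 127, each weight is rounded to an integer code, and dequantising multiplies back by the scale. The weight values are hypothetical, and this is a generic scheme rather than the exact procedure of [74] or of TensorRT.

```python
def quantize_symmetric_int8(values):
    """Per-tensor symmetric quantization of floats to int8 codes in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Map integer codes back to approximate float values."""
    return [c * scale for c in codes]


weights = [0.42, -1.27, 0.003, 0.9]  # hypothetical FP32 weights
codes, scale = quantize_symmetric_int8(weights)
restored = dequantize(codes, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Each code fits in one byte instead of four for FP32, a 4× storage reduction, and the rounding error per weight is at most half the scale.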

Furthermore, downsampling images to a lower resolution [49,70] can also effectively reduce the computational load and thus increase inference speed. In our review, downsampling is often combined with other optimisation techniques for better outcomes: in [58], it is paired with lightweight design, and in [54,55,64], it is combined with parameter pruning.
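
Because the cost of a convolution scales with the number of output positions, halving both image dimensions cuts its multiply-accumulate (MAC) count by about four. The sketch below counts MACs for one hypothetical layer at two resolutions and includes a simple 2×2 average-pooling downsampler for grey-scale images; the layer shape and resolutions are assumptions for illustration.

```python
def conv_macs(height, width, k, c_in, c_out, stride=1):
    """Multiply-accumulate operations of one k x k convolution ('same' padding)."""
    out_h, out_w = height // stride, width // stride
    return out_h * out_w * k * k * c_in * c_out


def downsample_2x(image):
    """Halve resolution by averaging non-overlapping 2x2 blocks (list of rows)."""
    return [
        [(image[r][c] + image[r][c + 1] + image[r + 1][c] + image[r + 1][c + 1]) / 4
         for c in range(0, len(image[0]) - 1, 2)]
        for r in range(0, len(image) - 1, 2)
    ]


full = conv_macs(720, 1280, 3, 3, 32)  # hypothetical first layer at full resolution
half = conv_macs(360, 640, 3, 3, 32)   # same layer after 2x downsampling
```

The same factor applies to every convolutional layer, which is why downsampling pairs well with other optimisations, at the cost of losing small objects.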

4.7. UAV Real-Time Object Detection Evaluation

Real-time object detection based on UAVs is evaluated in four aspects. Accuracy is an important metric for evaluating detection algorithms, but in real-time tasks, detection speed is also essential. In addition, latency accounts for the communication delay, computation delay, and other delays during the process from image acquisition to output. Last but not least, energy consumption is important, especially in practical operations.

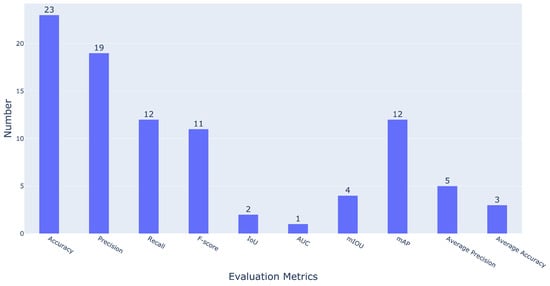

4.7.1. Accuracy

According to our review, ten accuracy-related evaluation metrics were used in the literature. As Figure 10 shows, accuracy was the most frequently used. Accuracy is defined as the ratio of the number of correctly predicted samples to the total number of predicted samples. Although 23 studies used accuracy as an evaluation criterion for model performance, it is often criticized because it does not reflect the proportion of true positives and can be dominated by the majority class, so it is questionable whether the detection results are comprehensive. Precision, recall, F-score, and mAP, which appear more than 10 times, better present model performance by distinguishing true positives from false positives and false negatives. These are the most commonly used metrics for object detection, and several other metrics were also used to evaluate models to some extent.
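
The weakness of accuracy under class imbalance is easy to see with counts. The sketch below computes precision, recall, and F1 from true positive (TP), false positive (FP), and false negative (FN) counts, and accuracy only when true negatives (TN) are defined. The counts describe a hypothetical scene where background dominates.

```python
def detection_metrics(tp, fp, fn, tn=None):
    """Precision, recall and F1 from counts; accuracy only if TN is defined."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    metrics = {"precision": precision, "recall": recall, "f1": f1}
    if tn is not None:
        metrics["accuracy"] = (tp + tn) / (tp + fp + fn + tn)
    return metrics


# Hypothetical imbalanced case: 20 targets among 1000 evaluated samples.
m = detection_metrics(tp=5, fp=5, fn=15, tn=975)
```

Here accuracy is 0.98 even though only a quarter of the targets were found, which recall (0.25) and F1 (about 0.33) expose immediately.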

Figure 10.

The number of studies using different metrics for accuracy.

The average accuracy is the mean of the per-class recall in multi-object detection; compared with accuracy, it better reflects the impact of class imbalance. AUC is the area under the receiver operating characteristic (ROC) curve. Because an ROC curve is not easy to construct for object detection, average precision (AP), the area under the precision/recall curve, is used instead, and mAP is its mean over classes. Meanwhile, the intersection over union (IoU) is a simple measure for object detection: the area of the intersection of the ground truth and the prediction divided by the area of their union. The mIoU is its average over classes.
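
The sketch below computes IoU for axis-aligned boxes and an all-point interpolated AP from ranked detections. It assumes each detection has already been matched to the ground truth (for example, counted as a true positive when IoU ≥ 0.5, a common convention); benchmark protocols such as PASCAL VOC and COCO differ in interpolation and matching details, and the example scores are hypothetical.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x0, y0, x1, y1)."""
    ix0, iy0 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix1, iy1 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix1 - ix0) * max(0.0, iy1 - iy0)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def average_precision(scores, is_tp, num_gt):
    """All-point interpolated AP: area under the precision/recall curve.

    scores: confidence of each detection; is_tp: whether it matched a ground
    truth object; num_gt: number of ground-truth objects.
    """
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for i in order:
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Make precision non-increasing when read from high recall to low recall.
    for j in range(len(precisions) - 2, -1, -1):
        precisions[j] = max(precisions[j], precisions[j + 1])
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


ap = average_precision([0.9, 0.8, 0.7], [True, False, True], num_gt=2)
```

Averaging `average_precision` over classes gives mAP.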

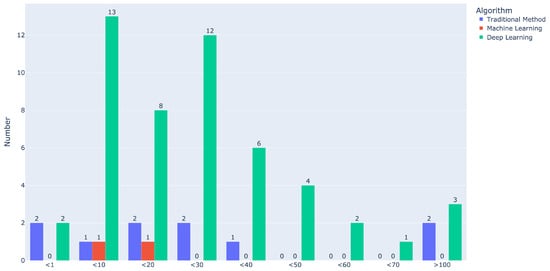

4.7.2. Speed

As this is a study of real-time target detection, speed is of prime importance, and the computation time of target detection can be considered the most time-consuming part of the whole task. Of the reviewed studies, 63 reported detection speed, using different units. For easy comparison, all units were converted to frame rate in frames per second (FPS), the most common metric in the literature; a higher frame rate indicates a faster speed and shorter processing time. When a study reported speeds for more than one experimental setting, the shortest processing time was used to represent its result. The detection speed was divided into 9 categories, and the distribution is shown in Figure 11.
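
The normalization described above can be sketched as follows: per-frame times in seconds or milliseconds are converted to FPS, and a study is represented by its fastest setting. The unit labels and the example reports are hypothetical.

```python
UNIT_TO_SECONDS = {"s": 1.0, "ms": 1e-3}


def to_fps(value, unit):
    """Convert a reported speed to frames per second.

    unit: 'fps', or a per-frame time in 's' or 'ms'.
    """
    if unit == "fps":
        return float(value)
    return 1.0 / (value * UNIT_TO_SECONDS[unit])


def study_fps(reports):
    """Represent a study by its fastest reported setting (shortest time per frame)."""
    return max(to_fps(v, u) for v, u in reports)


# Hypothetical study reporting three experimental settings.
reports = [(45, "ms"), (0.1, "s"), (18, "fps")]
best = study_fps(reports)
```

In this example, 45 ms per frame (about 22.2 FPS) is the fastest setting and would represent the study.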

Figure 11.

Detection speed interval distribution. The detection speed was divided into 9 categories: less than 1 FPS; up to 10, 20, 30, 40, 50, 60, and 70 FPS; and more than 100 FPS. The figures outside the bars indicate the number of studies in each speed interval. The blue, red, and green bars represent the literature that used traditional methods, machine learning, and deep learning, respectively.

Although this comparison does not account for factors such as input image size, hardware capabilities, algorithm complexity, network conditions, or other potential influences on detection speed, it shows that most applications achieve detection speeds of 1 to 30 frames per second (FPS). Detection speeds within this range can therefore generally be considered to meet the real-time processing demands of most scenarios. Faster detection is desirable in practice, but higher speed often sacrifices some detection accuracy, for example, when simpler algorithms are used. Two studies that used traditional methods reported more than 60 FPS; both computed vegetation indices from RGB camera images. One obtained 311 FPS at low image resolution [69], and the other achieved 107 FPS through an optimized FPGA hardware/software co-design [68], which is rather specific and cannot serve as a general approach for every application.

4.7.3. Latency

Based on the review results, few studies discuss latency in real-time object detection. In the cloud computing paradigm, latency mainly refers to the transmission time from the end device to the cloud center. In our database, edge computing paradigms accounted for 95.8% of the studies, and most of these adopted onboard processing, so the latency between the edge end and the edge server has received little attention.

However, one study [88] indicated that offloading computation to the edge server can reduce the computing delay. The authors stated that the total delay consists of the delay of UAV data transmission to the edge server, the UAV computing delay, and the edge server computing delay. Another study [80] considered different communication methods, comparing the transfer time over Wi-Fi and 4G.
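
The three-component delay decomposition can be sketched as a simple sequential model in which a fraction α of the task (and of its input data) is offloaded. The parameter names and values (data size, link rate, processor throughput) are hypothetical, and the formula follows the decomposition stated in the text rather than the exact formulation of [88].

```python
def total_delay(alpha, data_bits, workload_ops, link_bps, uav_ops_per_s, server_ops_per_s):
    """Total delay (s) = transmission + UAV computing + edge-server computing.

    alpha: fraction of the task offloaded to the edge server (0 = all onboard).
    """
    transmission = alpha * data_bits / link_bps
    uav_compute = (1 - alpha) * workload_ops / uav_ops_per_s
    server_compute = alpha * workload_ops / server_ops_per_s
    return transmission + uav_compute + server_compute


# Hypothetical frame: 8 Mbit of image data and 20 GOP of inference work.
params = dict(data_bits=8e6, workload_ops=20e9, link_bps=50e6,
              uav_ops_per_s=100e9, server_ops_per_s=1e12)
onboard = total_delay(0.0, **params)
offloaded = total_delay(1.0, **params)
```

With a 50 Mbit/s link, offloading (0.18 s) beats onboard processing (0.2 s); at 20 Mbit/s, the transmission term alone grows to 0.4 s and onboard processing wins, which is why the choice of link, such as Wi-Fi versus 4G, matters.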

4.7.4. Energy Consumption

Energy consumption is an easily overlooked evaluation metric: in our review, just 6 studies (< 10%) reported it. Because detection missions are carried out on UAVs, the UAV battery must simultaneously power the flight, the computing platform, and data transmission. However, this energy is not easy to calculate. In [88], a data transmission energy consumption model, a UAV computing energy consumption model, and a UAV flight energy consumption model were developed to estimate the total energy consumption of the whole real-time object detection task.
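
A minimal way to combine the three components is to multiply each average power draw by the time it is active. The sketch below assumes constant average powers and that the computing platform runs for the entire flight; all values are hypothetical and far simpler than the models developed in [88].

```python
def mission_energy_j(flight_power_w, compute_power_w, tx_power_w, flight_time_s, tx_time_s):
    """Total energy (J) = flight + onboard computing + data transmission."""
    flight = flight_power_w * flight_time_s
    compute = compute_power_w * flight_time_s  # platform assumed on for the whole flight
    transmission = tx_power_w * tx_time_s
    return flight + compute + transmission


# Hypothetical 20-minute mission with 10 minutes of active data transmission.
total = mission_energy_j(flight_power_w=250, compute_power_w=15, tx_power_w=5,
                         flight_time_s=1200, tx_time_s=600)
total_wh = total / 3600
```

Under these assumptions, the computing platform adds about 6% to the flight energy, energy that would otherwise extend flight time.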

5. Discussion

In this survey, 77 studies were included in a systematic review of real-time object detection based on UAV remote sensing. These studies covered different application scenarios and were analyzed in terms of hardware selection, algorithm development, and improvement to achieve real-time detection over the past 20 years. In summary, real-time object detection is a technology that is increasingly in demand in UAV remote sensing because of its advantages related to higher detection efficiency and faster response times. In this section, the research challenges and the outlook for realizing UAV real-time object detection are discussed.

5.1. Current Challenges

5.1.1. Sensor Usage for UAV Real-Time Object Detection

Four types of sensors and one sensor combination that can be used on UAVs, namely RGB, multi-spectral, hyper-spectral, and thermal sensors, as well as the combination of RGB and thermal sensors, have shown promising performance in various real-time object detection applications. Based on the analysis of the deployed sensors, their challenges in real-time applications are discussed further here. Although the choice of sensor for a UAV real-time object detection task depends partly on the targets and scenarios being measured, RGB sensors are used in most studies because of their smaller data size, easier setup, and simpler image processing. The lower price of RGB cameras is another reason to choose them, especially in some large-scale applications [113]. However, it is important to note that the spectral information provided by RGB images is limited.

Multi-spectral and hyper-spectral cameras can obtain more band information than RGB cameras; hyper-spectral imagery in particular has fine spectral resolution, although this also means a larger data volume and increased computational complexity. According to our investigation, only two studies performed real-time object detection using hyper-spectral data from UAVs. One of them [96] aimed to detect plastics in the ocean by selecting three feature bands, including bands sensitive to non-plastics, PE polymers, and PET polymers. The authors combined the data from these three bands as training data and used a linear classifier for supervised learning to obtain the plastic detection algorithm. The advantage of the linear classifier is that it requires very few computing resources, making real-time detection feasible on lightweight computing platforms mounted on drones. However, the authors also emphasized the disadvantages of this approach: unlike deep learning algorithms, which require more computing resources, it cannot extract features automatically and needs a large amount of pre-processing. The other study [114] focused on ship detection in the maritime environment and proposed a complete solution for UAV-based real-time hyper-spectral detection. The solution includes a hyper-spectral system with a camera and control board, a positioning system with a Global Positioning System (GPS) receiver and an inertial measurement unit (IMU), and a data processing system with a CPU. The authors used a processing method called hyper-spectral derivative anomaly detection (HYDADE), which analyzes peak values in the spectral response by computing the first and second derivatives of each pixel's spectrum and comparing them with an adaptive threshold to detect ships.
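
The derivative-plus-adaptive-threshold idea can be illustrated with a much simpler scheme. The sketch below is not an implementation of HYDADE: it scores each pixel by the largest absolute second derivative of its spectrum (sharp spectral peaks score high), ignores the first derivative that HYDADE also uses, and flags pixels above a scene-adaptive threshold of mean + k·std, with k = 3 assumed. The synthetic spectra are hypothetical.

```python
import statistics


def second_derivative(spectrum):
    """Discrete second derivative along the band axis."""
    return [spectrum[i - 1] - 2 * spectrum[i] + spectrum[i + 1]
            for i in range(1, len(spectrum) - 1)]


def peak_score(spectrum):
    """Largest absolute curvature of a pixel's spectrum."""
    return max(abs(v) for v in second_derivative(spectrum))


def detect_anomalies(pixels, k=3.0):
    """Flag pixels whose score exceeds the adaptive threshold mean + k * std."""
    scores = [peak_score(s) for s in pixels]
    threshold = statistics.fmean(scores) + k * statistics.pstdev(scores)
    return [score > threshold for score in scores]


# Hypothetical scene: 100 pixels with smooth (linear) spectra over 10 bands,
# except pixel 42, which has a sharp peak at band 5.
pixels = [[0.1 * b for b in range(10)] for _ in range(100)]
pixels[42][5] += 1.0
flags = detect_anomalies(pixels)
```

Such per-pixel operations need no training and little memory, which is what makes simple spectral methods attractive on constrained onboard processors.
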
These two cases show that, to achieve real-time detection, researchers have employed relatively simple algorithms to process hyper-spectral data within the limited computing resources available on UAVs. Notably, only a few algorithms are currently available for the real-time processing of hyper-spectral images. In other scenarios, especially agriculture, hyper-spectral offline processing has been widely used in applications such as disease detection [102] and crop identification [115], but its real-time application remains limited. Given the potential of deep learning in edge computing, its integration is expected to play a significant role in real-time detection, and it may be worthwhile to explore real-time detection algorithms for hyper-spectral images based on neural network architectures.

Furthermore, the enormous volume of hyper-spectral data not only hinders real-time processing on low-performance embedded processors but also makes data transmission challenging in the edge computing environment. Compressing hyper-spectral data to enable their real-time transmission is one solution [116]. However, the limited performance of the onboard computing platform also constrains the compression algorithm, given the high data rate of hyper-spectral sensors. The authors of [116] therefore listed several requirements for an onboard compression algorithm that enables real-time hyper-spectral transmission: low computational cost, a high compression ratio, error resilience, and a high level of parallelism to take advantage of low-power graphics processing units (LPGPUs) for faster compression. These requirements are very similar to those of the space environment for satellites [117]. The review in [118] introduced different hyper-spectral image compression algorithms for remote sensing and concluded that exploring parallel and hardware implementations helps reduce computation time and power and improve performance. From the algorithm optimization perspective, the work in [119], based on HyperLCA [120], a compression algorithm for satellites, reuses the information extracted from one compressed hyper-spectral frame to avoid repeated computation in subsequent frames. That study also demonstrated that the proposed algorithm could run on a Jetson Xavier NX onboard a UAV to compress, in real time, hyper-spectral data captured by a Specim FX10 camera and transmit them to ground stations at up to 200 FPS.

On the other hand, lossless and near-lossless compression have received limited research attention [118], and it is difficult to attain compression ratios better than 4:1 with lossless compression alone [121]. In the medical field, some research proposed combining image size and encoding algorithms for lossless image compression [122]. Therefore, real-time transmission of hyper-spectral data requires lossy compression to meet the compression ratios imposed by the acquisition data rate and the transmission bandwidth, while the quality of the hyper-spectral data received by the edge server (or ground station) must still satisfy the minimum standards required by the tasks [116]. Similarly, when the edge computing paradigm transmits data from other sensors, such as RGB and multi-spectral sensors, to edge servers for detection and computation, the impact of compression on data quality (resolution) should also be considered.
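
The compression ratio imposed by the acquisition data rate and the link bandwidth can be worked out directly. The sketch below assumes a push-broom hyper-spectral sensor; the pixels per line, band count, bit depth, line rate, and link bandwidth are hypothetical values, not the specifications of any particular camera or radio.

```python
def raw_data_rate_bps(pixels_per_line, bands, bits_per_sample, lines_per_s):
    """Raw output rate of a push-broom hyper-spectral sensor in bits per second."""
    return pixels_per_line * bands * bits_per_sample * lines_per_s


def required_compression_ratio(data_rate_bps, link_bps):
    """Minimum ratio (raw:compressed) for real-time transmission over the link."""
    return data_rate_bps / link_bps


# Hypothetical sensor and downlink.
rate = raw_data_rate_bps(pixels_per_line=1024, bands=200, bits_per_sample=12, lines_per_s=100)
ratio = required_compression_ratio(rate, link_bps=20e6)
```

Under these assumptions, the sensor produces about 246 Mbit/s and needs roughly 12.3:1 compression, well beyond the approximately 4:1 attainable with lossless compression alone, which is why lossy compression becomes necessary.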

In terms of multi-spectral sensors, although their data are less complex to process than hyper-spectral data, some algorithms slow down quickly as the number of processed bands increases or their parameters change [123]. In one selected study [74], the authors successfully trained a deep learning network to detect trees using single-band spectral images. This indicates that multi-spectral sensors face a challenge similar to that of hyper-spectral sensors: detection relies on knowing the spectral signature of the targets of interest to perform spectral matching, rather than fully exploiting the richer spectral information of multi-spectral or hyper-spectral data to detect specific targets.

5.1.2. Edge Computing Paradigm for UAV Real-Time Object Detection

In this review, edge computing, rather than cloud computing, is the most commonly used paradigm for real-time detection, as reported in our analysis of real-time detection paradigms. Cloud computing needs to upload large amounts of raw data to the cloud, which places great demands on network bandwidth. Although 5G can provide large bandwidth and low latency for quick transmission between the data-generating end and the cloud center [124], 5G technology was not the first choice for object detection in the UAV remote sensing applications we investigated. A possible reason is that network capacity is measured as bandwidth per cubic meter, and in UAV remote sensing applications, the number of 5G base stations and of connected devices across a large space may limit 5G performance [124]. Thus, despite some success with 5G in object detection outside the UAV remote sensing scenario, this problem persists to some extent [125]. The advantage of edge computing is that it allows more functions to be deployed on end devices at the edge so that they can process the data they generate, thus easing the problems of offloading computation-intensive tasks and of latency [126].

There are two kinds of edge computing paradigms. One is to deploy a local edge server (ground station) for UAV information processing (Figure 7b), and the other one is to implement a UAV-onboard real-time embedded platform (Figure 7c).

Undoubtedly, the ideal situation for UAV real-time object detection tasks is onboard computation, which avoids transferring raw sensor imagery, a common source of transmission pressure in computer vision-based object detection. Although embedded edge computing platforms with GPU acceleration are available in industry and have been validated in different UAV real-time detection scenarios, their computing power is still not comparable to that of professional-level computing platforms. Much research has therefore focused on optimizing algorithms [56,65,85], most commonly by compressing computer vision models based on deep neural networks. In [127], the authors pointed out that onboard processing could greatly increase the energy consumption of the UAV, limiting its practicality. In particular, the battery packs of commercial UAVs are intended for propulsion [128]; once part of this power is allocated to onboard processing and data transmission, the flight time is significantly reduced, which in turn lowers the overall efficiency of the detection tasks [129]. An external battery can power the computing platform exclusively, but it increases the UAV's payload and thus also reduces flight time.

In this review, only 7 studies considered computation offloading. Computation offloading can reduce energy consumption and computing resource requirements by a large margin, and it is a current research area in mobile edge computing [130]. Some studies indicated that offloading is a feasible solution for real-time tasks that takes both energy consumption and processing speed into account [125,127,129]. There are four offloading types: binary offloading, partial offloading, hierarchical architectures, and distributed computing [126]. The appropriate type depends on the UAV's flight time and the computational capacity of both the UAV-aided edge server and the ground edge server, and [131] pointed out that energy consumption and task completion time are the most crucial performance metrics for designing an offloading algorithm. The included literature in this review did not discuss the offloading technology applied in UAVs. One challenge, mentioned in [132], is that the ground edge server may not have sufficient energy to execute an offloaded task. Another issue is offloading delay, raised in [131], which has several causes, such as obstacles, low-frequency channels, and task size; the constant innovations in neural networks also cannot be ignored [126]. Additionally, service latency could affect UAV real-time object detection, as mentioned in [131]: higher latency causes system overhead, which severely degrades offloading performance.
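
Binary offloading can be sketched as a per-task choice between running entirely onboard and running entirely on the edge server, picking whichever minimizes a weighted cost of completion time and UAV energy, the two metrics highlighted by [131]. The weights, the `Option` structure, and all numbers are hypothetical; practical offloading algorithms also account for channel state, server energy [132], and other constraints.

```python
from dataclasses import dataclass


@dataclass
class Option:
    name: str
    time_s: float    # task completion time
    energy_j: float  # energy drawn from the UAV battery


def weighted_cost(option, time_weight, energy_weight):
    return time_weight * option.time_s + energy_weight * option.energy_j


def binary_offload(local, remote, time_weight=1.0, energy_weight=0.1):
    """Pick the option (all onboard vs. all offloaded) with the lower weighted cost."""
    return min((local, remote), key=lambda o: weighted_cost(o, time_weight, energy_weight))


# Hypothetical per-frame figures.
local = Option("onboard", time_s=0.20, energy_j=3.0)   # e.g. 15 W for 0.2 s
remote = Option("offload", time_s=0.18, energy_j=0.8)  # e.g. 5 W radio for 0.16 s
choice = binary_offload(local, remote)
```

Changing the weights shifts the decision: a mission that values battery life weights energy more heavily, while a latency-critical one weights time more heavily.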

5.1.3. Lightweight Real-Time Object Detection Algorithms Based on UAVs

Real-time object detection algorithms run on resource-limited edge computing platforms, so the models need to be relatively lightweight, reducing both their reliance on computational resources and their inference time to meet real-time requirements. In [6], the authors highlighted that improving the detection time of detectors has been challenging, and they provided an overview of speed-up techniques, including feature map shared computation, cascaded detection, network pruning and quantization, lightweight network design, and numerical acceleration. In our review of UAV real-time object detection, the most commonly used methods to improve algorithm speed are network pruning [54,56,65,112], quantization [67,82,92], and lightweight network design.

Network pruning is an essential technique for reducing both memory size and bandwidth; it removes redundancy in a network and its parameters, reducing computation without a significant impact on accuracy. In [133], the authors demonstrated that 90% of all weights in a ResNet-50 network trained on ImageNet could be pruned with an accuracy loss of less than 3%. However, in [134], the authors noted that although network pruning sometimes progressively improves accuracy by escaping local minima, accuracy gains are better realized by switching to a better architecture [135]. Meanwhile, performance can also be bottlenecked by the structure itself, and neural architecture search and knowledge distillation are options for further compression [136]. From a practical point of view, the limited resources of embedded devices cannot accommodate large networks, so in [137], the authors pointed out that pruning should take into account the deployment environment, target device, and speed/accuracy trade-offs to meet the needs of the constrained environment.

Network quantization compresses a neural network by reducing the precision of its values, i.e., by converting FP32 numbers to lower-bit representations, which can accelerate inference [136]. However, the same literature notes that quantization usually causes an accuracy loss because information is lost during the quantization process. In UAV real-time object detection tasks, it is common practice to adopt a compact network as the initial model; unfortunately, the accuracy drop during quantization is especially pronounced in compact networks. Advanced quantization techniques [136], including asymmetric quantization [40] and calibration-based quantization [64], can mitigate this loss. In practice, 8-bit quantization is widely used, and the reviewed studies [67,74] achieved good trade-offs between accuracy and compression in real-time object detection.
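The following minimal sketch shows 8-bit asymmetric (affine) quantization of a single value range: a scale and an integer zero point map the observed range [x_min, x_max] onto the unsigned integers 0 to 255. The range is taken from a small, hypothetical calibration set using simple min/max statistics, which is only one of several calibration strategies; the function names are illustrative and not taken from any particular framework.

```python
def asymmetric_qparams(x_min: float, x_max: float, num_bits: int = 8) -> tuple[float, int]:
    """Scale and zero point mapping [x_min, x_max] onto unsigned integers [0, 2^b - 1]."""
    x_min, x_max = min(x_min, 0.0), max(x_max, 0.0)  # make sure 0.0 is exactly representable
    q_max = 2 ** num_bits - 1
    scale = (x_max - x_min) / q_max if x_max > x_min else 1.0
    zero_point = int(round(-x_min / scale))
    return scale, zero_point


def quantize(x: float, scale: float, zero_point: int, num_bits: int = 8) -> int:
    """Map a real value to its integer code, clamped to the representable range."""
    q = int(round(x / scale)) + zero_point
    return max(0, min(2 ** num_bits - 1, q))


def dequantize(q: int, scale: float, zero_point: int) -> float:
    """Recover an approximate real value from its integer code."""
    return (q - zero_point) * scale


if __name__ == "__main__":
    calibration = [-1.0, 0.5, 2.0]  # hypothetical activations from a calibration set
    scale, zp = asymmetric_qparams(min(calibration), max(calibration))
    for x in calibration:
        q = quantize(x, scale, zp)
        print(x, "->", q, "->", round(dequantize(q, scale, zp), 4))
```

Values inside the calibrated range are reconstructed with an error of at most half a scale step, so a wide or outlier-dominated range directly increases the quantization error.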

5.1.4. Other Challenges for Real-Time Object Detection on UAV Remote Sensing

Since the implementation of real-time object detection for UAVs is a complex task, there are still some neglected issues that need to be taken into account.

During UAV detection, remote sensing images are usually collected by executing pre-planned missions. Mature UAVs have stable flight control systems and navigate autonomously using active or passive sensors [4]. However, as real-time target detection pushes UAVs deeper into the environment rather than only detecting from high altitudes, two issues need to be considered: whether increasingly complex autonomous navigation and obstacle avoidance strategies compete with target detection for onboard computational resources, and how to cope with the resulting increase in energy consumption.

For UAV real-time target detection, the collected data need to be read onboard. However, commercial UAV manufacturers usually do not open-source their flight control, image transmission, or other systems due to copyright considerations, and only a few UAV series expose a limited API. For example, the studies in [138,139] used the SDKs provided by DJI. This makes secondary development considerably more difficult when the required information is not directly available. Open-source flight control software, such as PX4 Autopilot (https://px4.io/ (accessed on 6 June 2023)), is an alternative, but considerable design expertise is required to ensure its stability, safety, and legality. This may account for the scarcity of practical, operational implementations of real-time object detection on UAVs found in this review.

5.2. Future Outlook

5.2.1. Autonomous UAV Real-Time Object Detection

As illustrated in Figure 1, in the concept of real-time object detection based on UAV remote sensing, once the computing platform has executed the detection models, outputting the results and responding to them in real time makes the detection task more automatic and forms a closed loop. Autonomous UAV tasks aim to reduce human operation, so that humans only need to issue commands to deploy, monitor, and terminate UAV missions. The UAV can conduct remote sensing according to a specified mission plan and run its algorithms automatically to output results, which can then be sent to humans, machines, or systems for quick decisions and responses. In [140], the authors used UAVs to identify infected plants and linked the results to a system that then decided which chemical to spray and in what exact amount. In a simulation study, researchers developed automatic collaboration between UAVs that detect garbage pollution on water surfaces and unmanned surface vehicles (USVs) that collect the garbage [141]. Such work helps establish more reliable frameworks for collaborative platforms between UAVs and other systems or robots (air-to-air and air-to-ground) in real-time detection scenarios [142], which may further bridge the gap between UAVs and manned aerial and terrestrial surveys [4].
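The closed loop described above can be sketched as a decision step that turns onboard detections into response commands. Everything in this sketch is hypothetical: the `Detection` fields, the class labels, the confidence threshold, and the command format are illustrative assumptions loosely modeled on the spraying [140] and garbage-collection [141] examples, not the interfaces used in those studies.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """One detection produced onboard (hypothetical fields)."""
    label: str
    confidence: float
    lat: float
    lon: float


# Hypothetical mapping from a detected class to the response task it should trigger.
RESPONSES = {
    "infected_plant": "spray",           # e.g., notify a spraying system
    "floating_garbage": "dispatch_usv",  # e.g., send a USV to collect the garbage
}


def decide_actions(detections: list[Detection], min_confidence: float = 0.5) -> list[dict]:
    """Convert confident detections of known classes into response commands."""
    actions = []
    for det in detections:
        task = RESPONSES.get(det.label)
        if task is None or det.confidence < min_confidence:
            continue  # unknown class or not confident enough to act on
        actions.append({"task": task, "lat": det.lat, "lon": det.lon})
    return actions


if __name__ == "__main__":
    frame_detections = [
        Detection("infected_plant", 0.91, 43.10, 111.20),
        Detection("floating_garbage", 0.35, 43.11, 111.21),  # below the threshold
    ]
    print(decide_actions(frame_detections))
```

In a deployment, the returned commands would be forwarded to the responding system (a sprayer controller, a USV, or a human operator), with detection and decision running once per frame.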

In fact, the demand for UAV real-time object detection stems from the demand for real-time response: a real-time detection result is of little value on its own. Humans need to act on real-time detection results to reduce losses and improve efficiency. Achieving this requires supporting technologies, including multi-machine collaborative work [143,144], knowledge graphs [145], natural language processing [146], and blockchain [147].

5.2.2. Communication in Real-Time Object Detection Based on UAV Remote Sensing

As discussed in the previous section, edge computing is well suited to achieving real-time object detection. Beyond improving lightweight models and offloading techniques, however, the development of communication technologies cannot be ignored.

5G is limited by its target user-experienced data rates of up to 100 Mbps for the downlink and 50 Mbps for the uplink [125]. 6G requires significant improvements over 5G in performance metrics such as bandwidth, latency, and coverage, extending 5G's ultra-low-latency, massive-connectivity, and ultra-large-bandwidth scenarios to achieve higher peak transmission rates [148]. Benefiting from the large bandwidth and high transmission rate of 6G mobile networks, UAVs can collect data efficiently in a shorter period, which also mitigates the problem of insufficient collection time caused by the short battery life of UAVs. In addition, the challenge of real-time detection cannot be solved solely by reducing latency and increasing network bandwidth; technologies such as pre-training and online learning also need to be considered.
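A back-of-the-envelope calculation shows why link bandwidth matters when frames are offloaded rather than processed onboard. The sketch below computes the transmission time of one image at the 5G uplink and downlink rates quoted above; the 2 MB image size is a hypothetical assumption, and protocol overhead, contention, and radio conditions are ignored.

```python
def transmission_time_s(payload_bytes: int, rate_mbps: float) -> float:
    """Seconds to send a payload over a link of rate_mbps megabits per second (overhead ignored)."""
    bits = payload_bytes * 8
    return bits / (rate_mbps * 1e6)


if __name__ == "__main__":
    frame_bytes = 2_000_000  # hypothetical 2 MB compressed aerial image
    for rate in (50, 100):   # 5G uplink and downlink targets cited in the text [125]
        print(rate, "Mbps:", round(transmission_time_s(frame_bytes, rate), 3), "s")
```

At 50 Mbps, a 2 MB image takes about 0.32 s to transmit, so the uplink alone would cap offloading at roughly three such frames per second before any inference or return-trip latency is added.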

These discussion points indicate that it is essential to support machine learning and deep learning algorithms at the edge, and future generations of wireless communication systems may help solve this issue. Communication channels in the THz and optical frequency regimes that exploit modulation schemes capable of 10 b/symbol could achieve data rates of around 100 Tb/s; under these assumptions, they could provide links that allow massive computations to be conducted remotely from the device or machine undertaking real-time processing at the edge of the network [149].
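For an uncoded single-channel link, the data rate is the symbol rate multiplied by the number of bits per symbol. Applying this relation to the figures in [149] shows what they imply, under the simplifying assumption that coding overhead and parallel channels are ignored:

```latex
R = R_s \cdot b, \qquad b = \log_2 M
\quad\Rightarrow\quad
R_s = \frac{R}{b} = \frac{100~\text{Tb/s}}{10~\text{b/symbol}} = 10^{13}~\text{symbols/s},
\qquad M = 2^{10} = 1024
```

Here R is the data rate, R_s the symbol rate, b the number of bits per symbol, and M the number of constellation points.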

However, some challenges also need to be considered, such as how to enable interaction between the data generated by UAVs in the airspace network and other heterogeneous networks, and how to address the shortage of spectrum resources caused by massive UAV access to 6G networks. Additionally, although automatic battery replacement technology exists for UAVs, it does not solve the root problem of short battery life [150].

6. Conclusions

This study reviewed the recent literature on real-time object detection based on UAV remote sensing. We investigated advances in aerial real-time object detection and comprehensively considered different aspects, including application scenarios, hardware usage, algorithm deployment, and evaluation metrics. Accordingly, we presented the current research status and challenges and proposed a basis and suggestions for future research.

We adopted a systematic literature review approach, first defining a concept map for real-time object detection by UAV remote sensing and then, based on the concept map, formulating and analyzing seven research questions (RQs).

The review findings address all seven research questions. Real-time object detection research is most common in emergency rescue, precision agriculture, traffic, and public facility inspection scenarios (RQ1). Multi-rotor UAVs are the most-used platforms because their ability to hover makes it easy to acquire remote sensing images. Although thermal and spectral sensors have appeared in studies, they have not yet become mainstream for real-time detection, being limited by the form and size of their image data; thus, RGB images are studied more in UAV remote sensing (RQ2). Most real-time detection adopts the edge computing paradigm, and studies using the onboard processing strategy far outnumber those using the computation offloading strategy (RQ3). GPU-based edge computing platforms are widely used for real-time object detection in UAV remote sensing images, although some applications use CPUs, VPUs, TPUs, or FPGAs according to their requirements (RQ4). With the widespread use of deep learning, it has become the first choice for real-time object detection tasks (RQ5), so it is vital to optimize these algorithms for deployment on resource-constrained computing platforms. Optimization can be carried out, for example, by using lightweight convolutional layers, pruning parameters, applying low-rank factorization, and incorporating knowledge distillation (RQ6). Finally, regarding evaluation metrics, latency and energy deserve as much attention as accuracy and speed (RQ7).
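As a minimal sketch of how the runtime metrics in RQ7 relate, the function below derives mean latency, throughput, and energy per frame from measured per-frame latencies and an average power reading. It assumes frames are processed one at a time (with pipelining, throughput can exceed the reciprocal of latency), and the measurements in the example are hypothetical.

```python
from statistics import mean


def summarize_runtime(latencies_ms: list[float], avg_power_w: float) -> dict[str, float]:
    """Mean latency, throughput, and energy per frame, assuming sequential frame processing."""
    mean_latency_ms = mean(latencies_ms)
    fps = 1000.0 / mean_latency_ms                          # frames per second
    energy_per_frame_j = avg_power_w * mean_latency_ms / 1000.0  # W x s = J
    return {"latency_ms": mean_latency_ms, "fps": fps, "energy_j_per_frame": energy_per_frame_j}


if __name__ == "__main__":
    # Hypothetical measurements on an edge device drawing 10 W on average.
    print(summarize_runtime([40.0, 50.0, 60.0], avg_power_w=10.0))
    # prints: {'latency_ms': 50.0, 'fps': 20.0, 'energy_j_per_frame': 0.5}
```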

In conclusion, this paper has highlighted the challenges and key issues associated with implementing real-time object detection for UAV remote sensing. Through a comprehensive analysis of sensor usage, edge computing, and model compression, this paper has identified areas that require further research and development. Future research needs to explore real-time processing solutions for multi-spectral and hyper-spectral images and investigate the possibilities of hardware and algorithms under different edge computing paradigms. As mentioned in this paper, bridging multiple disciplines, such as robotics and remote sensing, is crucial to fostering innovation and progress in real-time UAV remote sensing, and collaborative research efforts that connect different fields of study can further leverage the latest technologies for the benefit of society.

Author Contributions

Conceptualization, Z.C., L.K. and J.V.; methodology, Z.C.; formal analysis, Z.C.; investigation, Z.C.; data curation, Z.C.; writing—original draft preparation, Z.C.; writing—review and editing, Z.C., L.K., J.V. and W.W.; visualization, Z.C.; supervision, L.K., J.V. and W.W.; project administration, L.K., J.V. and W.W.; funding acquisition, L.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Key R&D Program of China (2021ZD0110901) and the Science and Technology Planning Project of the Inner Mongolia Autonomous Region (2021GG0341).

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Yang, X.; Smith, A.; Bourchier, R.; Hodge, K.; Ostrander, D.; Houston, B. Mapping flowering leafy spurge infestations in a heterogeneous landscape using unmanned aerial vehicle Red-Green-Blue images and a hybrid classification method. Int. J. Remote Sens. 2021, 42, 8930–8951.

- Feng, J.; Wang, J.; Qin, R. Lightweight detection network for arbitrary-oriented vehicles in UAV imagery via precise positional information encoding and bidirectional feature fusion. Int. J. Remote Sens. 2023, 44, 1–30.

- Alsamhi, S.H.; Shvetsov, A.V.; Kumar, S.; Shvetsova, S.V.; Alhartomi, M.A.; Hawbani, A.; Rajput, N.S.; Srivastava, S.; Saif, A.; Nyangaresi, V.O. UAV computing-assisted search and rescue mission framework for disaster and harsh environment mitigation. Drones 2022, 6, 154.

- Nex, F.; Armenakis, C.; Cramer, M.; Cucci, D.A.; Gerke, M.; Honkavaara, E.; Kukko, A.; Persello, C.; Skaloud, J. UAV in the advent of the twenties: Where we stand and what is next. ISPRS J. Photogramm. Remote Sens. 2022, 184, 215–242.

- Chabot, D. Trends in drone research and applications as the Journal of Unmanned Vehicle Systems turns five. J. Unmanned Veh. Syst. 2018, 6, vi–xv.

- Zou, Z.; Chen, K.; Shi, Z.; Guo, Y.; Ye, J. Object detection in 20 years: A survey. Proc. IEEE 2023, 111, 257–276.

- Aposporis, P. Object detection methods for improving UAV autonomy and remote sensing applications. In Proceedings of the 2020 IEEE/ACM International Conference on Advances in Social Networks Analysis and Mining (ASONAM), Virtual, 7–10 December 2020; pp. 845–853.

- Ghaffarian, S.; Valente, J.; Van Der Voort, M.; Tekinerdogan, B. Effect of attention mechanism in deep learning-based remote sensing image processing: A systematic literature review. Remote Sens. 2021, 13, 2965.

- Cazzato, D.; Cimarelli, C.; Sanchez-Lopez, J.L.; Voos, H.; Leo, M. A survey of computer vision methods for 2d object detection from unmanned aerial vehicles. J. Imaging 2020, 6, 78.

- Osco, L.P.; Junior, J.M.; Ramos, A.P.M.; de Castro Jorge, L.A.; Fatholahi, S.N.; de Andrade Silva, J.; Matsubara, E.T.; Pistori, H.; Gonçalves, W.N.; Li, J. A review on deep learning in UAV remote sensing. Int. J. Appl. Earth Obs. Geoinf. 2021, 102, 102456.

- Pathak, A.R.; Pandey, M.; Rautaray, S. Application of deep learning for object detection. Procedia Comput. Sci. 2018, 132, 1706–1717.

- Tetila, E.C.; Machado, B.B.; Menezes, G.K.; Oliveira, A.d.S.; Alvarez, M.; Amorim, W.P.; Belete, N.A.D.S.; Da Silva, G.G.; Pistori, H. Automatic recognition of soybean leaf diseases using UAV images and deep convolutional neural networks. IEEE Geosci. Remote Sens. Lett. 2019, 17, 903–907.

- Feng, Q.; Liu, J.; Gong, J. UAV remote sensing for urban vegetation mapping using random forest and texture analysis. Remote Sens. 2015, 7, 1074–1094.

- Nevalainen, O.; Honkavaara, E.; Tuominen, S.; Viljanen, N.; Hakala, T.; Yu, X.; Hyyppä, J.; Saari, H.; Pölönen, I.; Imai, N.N.; et al. Individual tree detection and classification with UAV-based photogrammetric point clouds and hyperspectral imaging. Remote Sens. 2017, 9, 185.

- Byun, S.; Shin, I.K.; Moon, J.; Kang, J.; Choi, S.I. Road traffic monitoring from UAV images using deep learning networks. Remote Sens. 2021, 13, 4027.

- Sherstjuk, V.; Zharikova, M.; Sokol, I. Forest fire-fighting monitoring system based on UAV team and remote sensing. In Proceedings of the 2018 IEEE 38th International Conference on Electronics and Nanotechnology (ELNANO), Kyiv, Ukraine, 22–24 April 2018; pp. 663–668.