Relative Localization within a Quadcopter Unmanned Aerial Vehicle Swarm Based on Airborne Monocular Vision

Abstract

1. Introduction

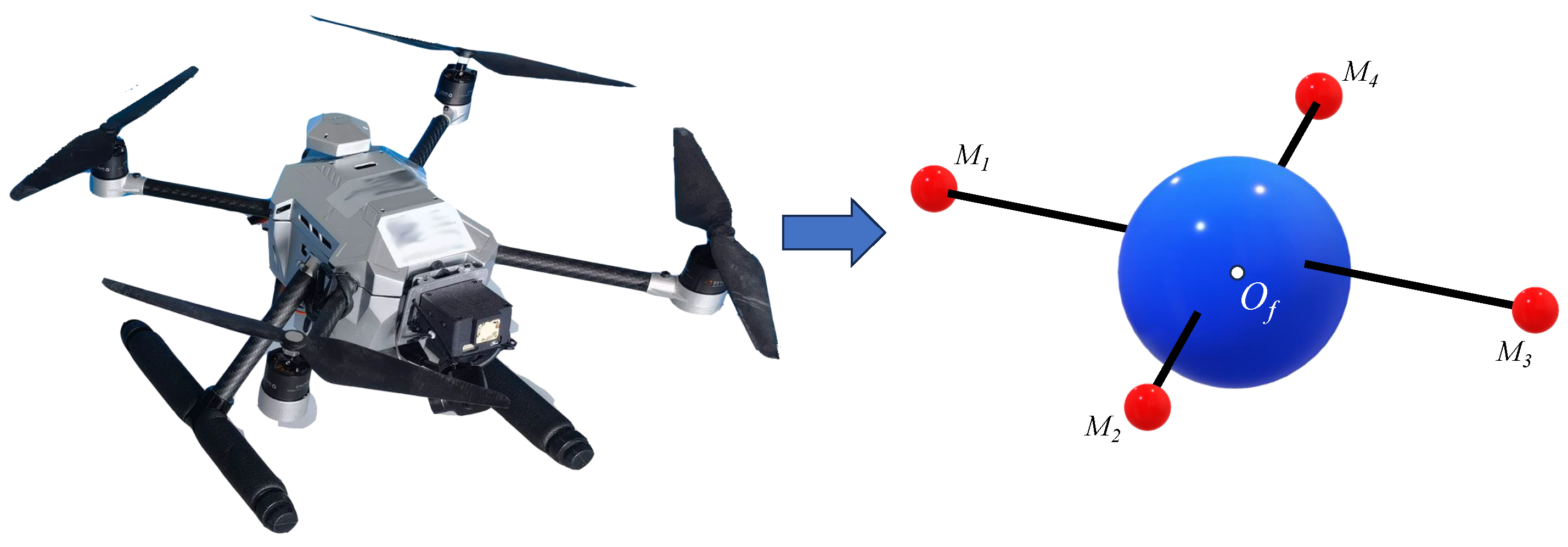

- We propose using only the rotor motors as the basis for localization, and we use the deep-learning-based YOLOv8-pose keypoint detection algorithm to detect UAVs and their motors quickly and accurately. Unlike other sources of visual localization information, this approach imposes no additional conditions, and data acquisition is more direct and precise.

- We derive an algorithm for the PnP (Perspective-n-Point) problem that is better suited to this application, based on the 2D image-plane coordinates of the rotor motors and the shape features of the UAV. By exploiting the geometric features of the UAV, the algorithm is less complex than classical algorithms and is both faster and more accurate.

- For the multi-solution problem of P3P, we propose a new scheme that determines the unique correct solution from pose information rather than with the traditional reprojection method, which allows visual relative localization to proceed when some motors are occluded. The proposed method removes this limitation of classical methods and reduces the amount of data required for visual localization.

2. Related Work

2.1. Monocular Visual Localization

2.2. Target and Keypoint Detection

2.3. Solving the PnP Problem

3. Detection of UAVs and Motors

3.1. Detection Model Training

3.2. Sequencing of Motor Keypoints

Algorithm 1. Sorting the four motors
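Algorithm 1 concerns putting the four detected motor keypoints into a consistent order. As a generic illustration only, and not a reproduction of Algorithm 1, one common way to order four image points is by their angle around the centroid. The function name `sort_keypoints_cyclic` and the sample coordinates are hypothetical.

```python
import math


def sort_keypoints_cyclic(points):
    """Order 2D points by increasing atan2 angle around their centroid.

    `points` is a list of (x, y) image coordinates. The result is a cyclic
    ordering; which motor comes first still depends on the chosen convention.
    """
    cx = sum(x for x, _ in points) / len(points)
    cy = sum(y for _, y in points) / len(points)
    return sorted(points, key=lambda p: math.atan2(p[1] - cy, p[0] - cx))


# Four made-up keypoints given in scrambled order.
motors = [(1.0, 1.0), (-1.0, -1.0), (1.0, -1.0), (-1.0, 1.0)]
ordered = sort_keypoints_cyclic(motors)
```

A purely angular ordering does not identify which motor is the front-left one; resolving that requires additional information such as the UAV heading, which this sketch does not model.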

4. Relative Position Solution Method

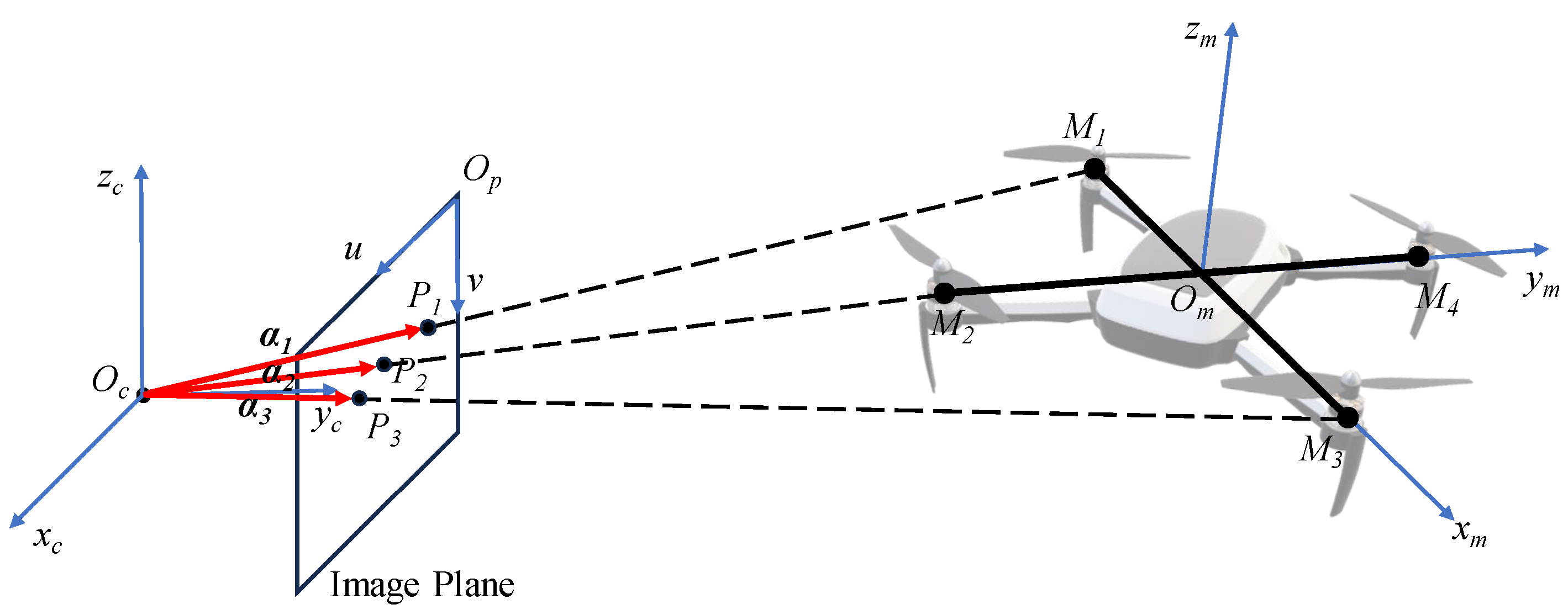

4.1. Problem Model

4.2. Improved Solution Scheme for the P3P Problem

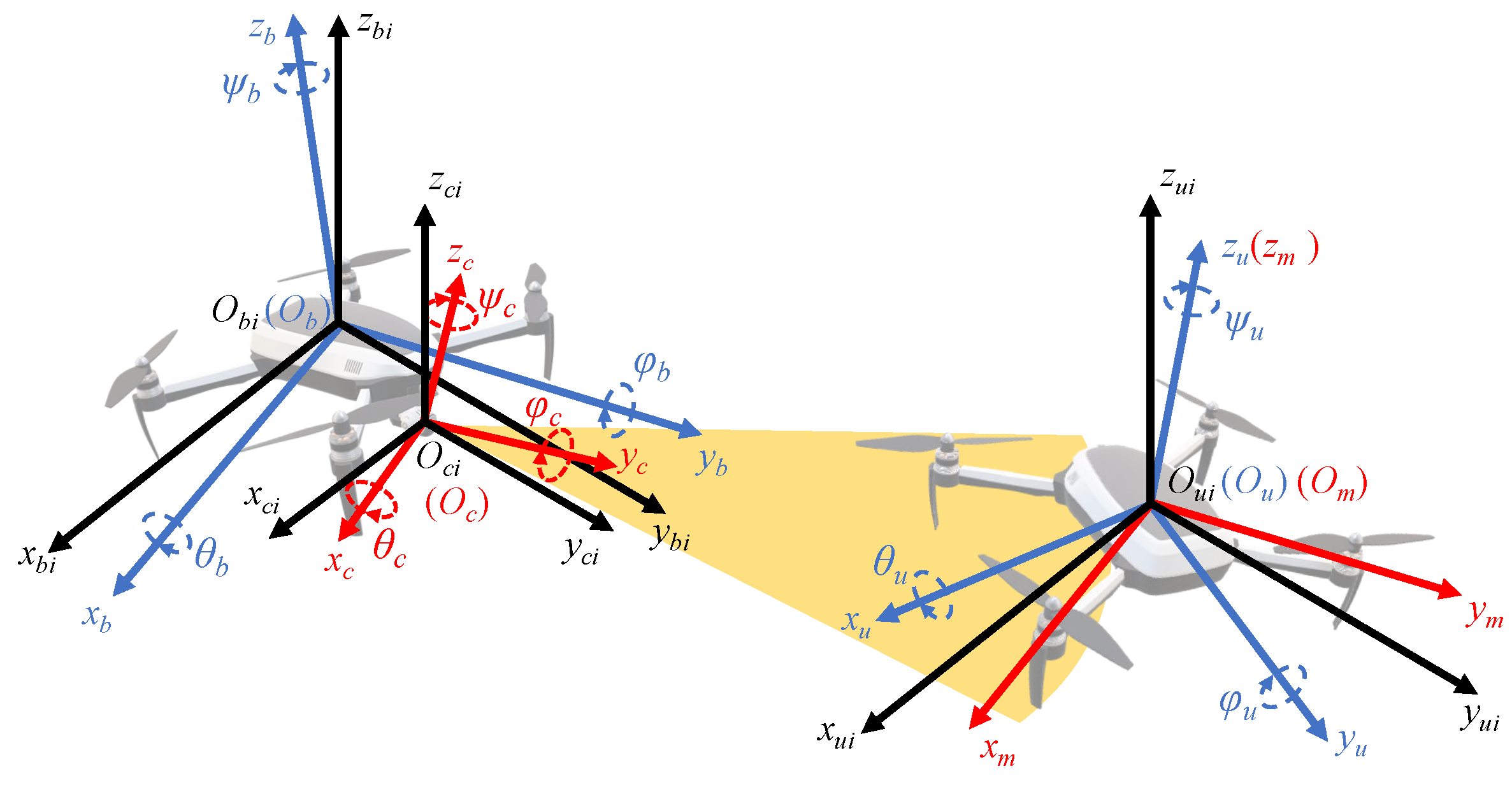
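As a point of reference for this section, the classical P3P formulation (not the improved scheme itself) relates the unknown distances from the camera optical center $O$ to three reference points $A$, $B$, $C$ through the law of cosines. The symbols $a$, $b$, $c$ for the distances and $\alpha$, $\beta$, $\gamma$ for the angles between viewing rays are names assumed here for illustration:

```latex
\begin{aligned}
|AB|^2 &= a^2 + b^2 - 2ab\cos\gamma, & \gamma &= \angle AOB, \\
|BC|^2 &= b^2 + c^2 - 2bc\cos\alpha, & \alpha &= \angle BOC, \\
|AC|^2 &= a^2 + c^2 - 2ac\cos\beta,  & \beta  &= \angle AOC,
\end{aligned}
\qquad a = |OA|,\quad b = |OB|,\quad c = |OC|.
```

The angles follow from the pixel coordinates and the camera intrinsics, and the side lengths are known from the UAV geometry. The system can admit up to four valid solutions for $(a, b, c)$, which is the multi-solution problem that a P3P solver must resolve.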

4.3. Conversion of Coordinate Systems

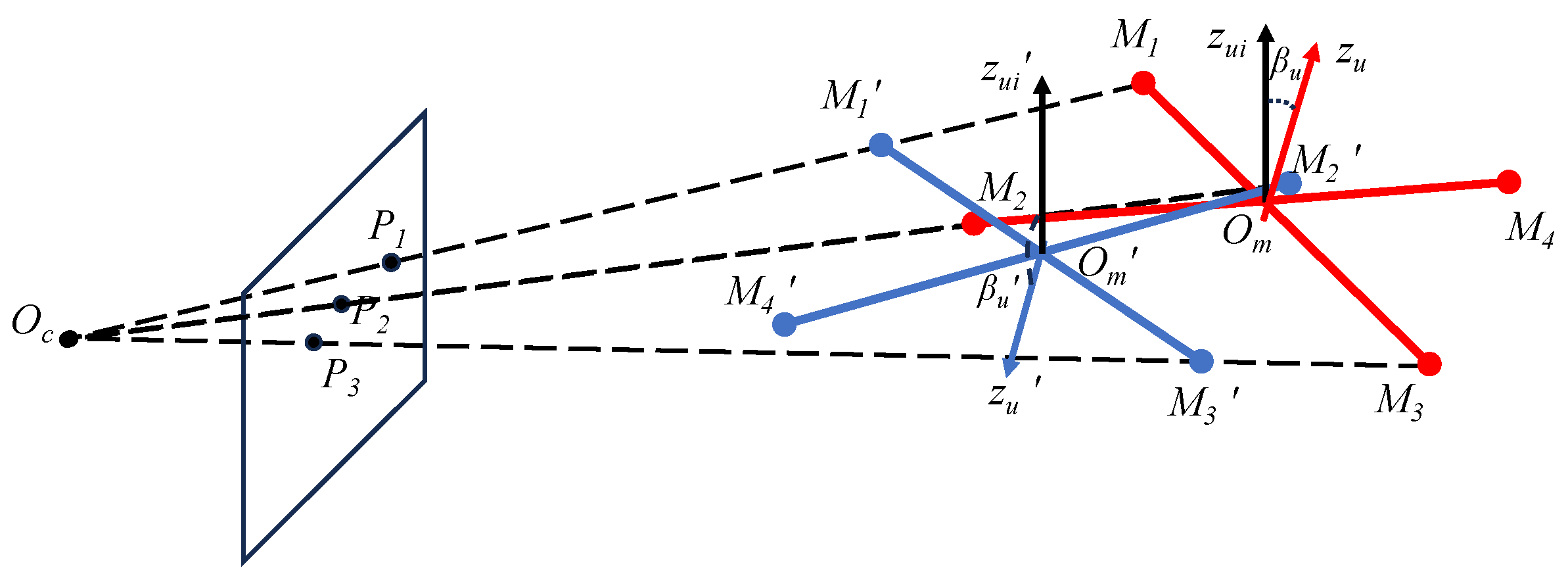

4.4. Determination of Correct Solution

Algorithm 2. Determining the correct solution

4.5. Four Motors Detected

4.6. Two Motors Detected

5. Experimental Results and Analysis

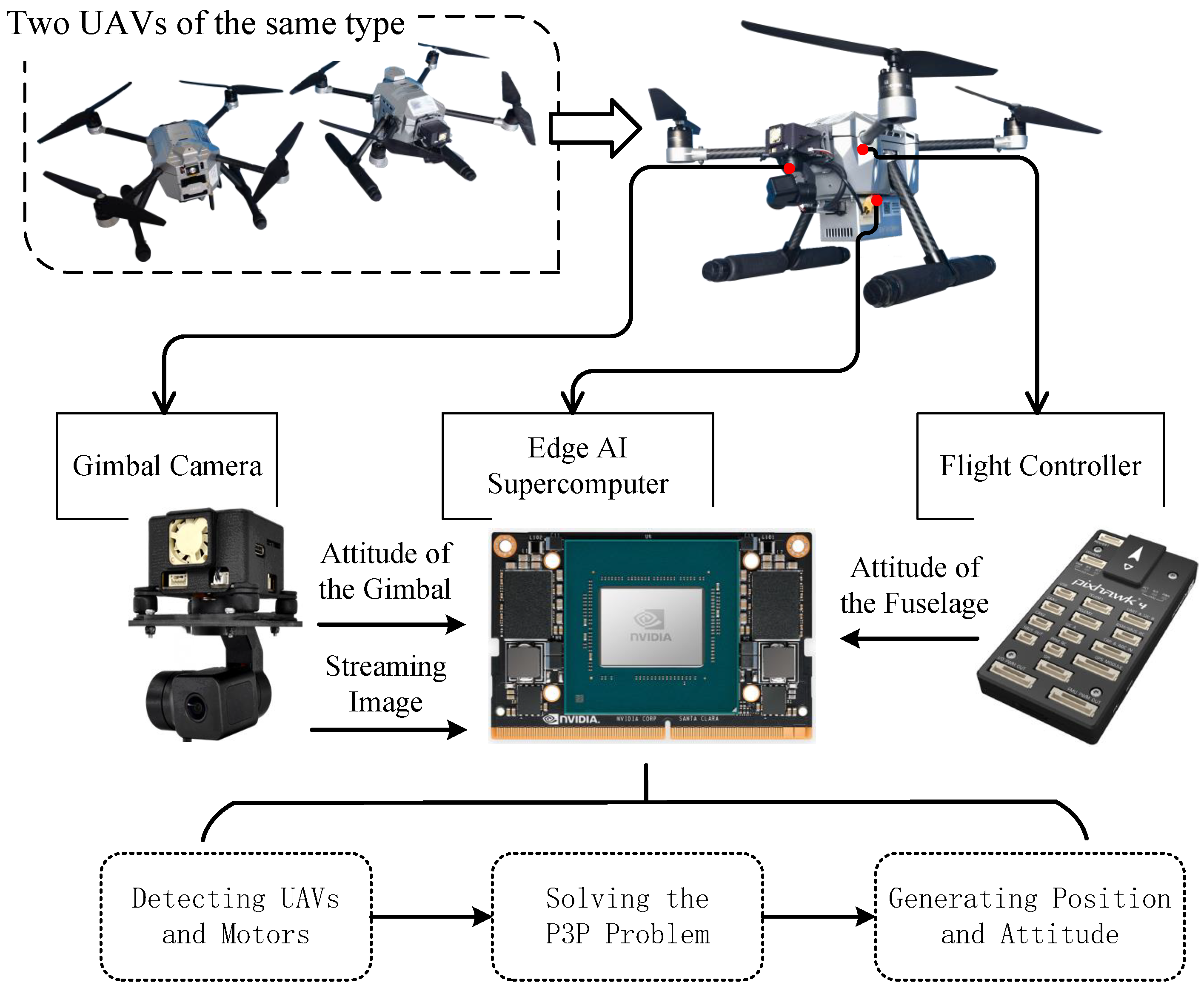

5.1. Experiment Platform

5.2. Detection Performance Experiment

5.3. Relative Localization Simulation Experiment

5.3.1. Simulation Model

5.3.2. Execution Speed

5.3.3. Computational Accuracy

5.3.4. System Experiment

6. Conclusions

- Our study validates the feasibility of detecting UAV motors accurately and in real time with the YOLOv8-pose keypoint detection algorithm.

- Our PnP solution algorithm, derived from the geometric features of the UAV, proved to be faster and more stable than the Gao’s, IM, and AP3P algorithms used for comparison.

- We propose, for the first time, a fast scheme that resolves the PnP multi-solution problem based on the plausibility of the UAV attitude, and we validate it through a large number of randomized experiments. The scheme remains stable when the visual information is incomplete.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| The set of points corresponding to all values of i. | |

| Coordinates in the specified coordinate system. | |

| The spatial coordinate system with O as the origin and , and as the positive directions of the coordinate axes. | |

| The angle between the rays and with O as the vertex. | |

| Matrices, including vectors. | |

| A vector with A as the starting point and B as the ending point. | |

| The displacement matrix of the -coordinate system with respect to the -coordinate system. | |

| The rotation matrix of the -coordinate system with respect to the -coordinate system. | |

| Multiply matrix with matrix . | |

| The transpose of the matrix. | |

| The modulus of the vector. |

| Algorithm | Time [ms] | Time relative to ours |
|---|---|---|
| Ours | 0.534 | 1.00 |
| Gao’s | 1.845 | 3.46 |
| IM | 2.614 | 4.90 |
| AP3P | 0.722 | 1.35 |
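The last column of the timing table is each algorithm's execution time divided by the time of the proposed algorithm. The short check below recomputes it from the reported times; the variable names are illustrative.

```python
# Execution times in milliseconds, copied from the table above.
times_ms = {"Ours": 0.534, "Gao's": 1.845, "IM": 2.614, "AP3P": 0.722}

# Ratio of each time to the proposed algorithm's time, rounded to 2 decimals.
baseline = times_ms["Ours"]
ratios = {name: round(t / baseline, 2) for name, t in times_ms.items()}

for name, ratio in ratios.items():
    print(f"{name}: {ratio:.2f}")
```

The recomputed ratios match the tabulated values: the proposed algorithm runs roughly 3.5 times faster than Gao’s, 4.9 times faster than IM, and 1.35 times faster than AP3P.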

| [cm] | | |
|---|---|---|
| 0.5 | | |
| 1.0 | | |
| 1.5 | | |

| [cm] | Ours | Gao’s | IM | AP3P |
|---|---|---|---|---|
| 0.5 | 0.015 | 0.019 | 0.242 | 0.239 |
| 1.0 | 0.024 | 0.029 | 0.251 | 0.239 |
| 1.5 | 0.030 | 0.036 | 0.252 | 0.240 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Si, X.; Xu, G.; Ke, M.; Zhang, H.; Tong, K.; Qi, F. Relative Localization within a Quadcopter Unmanned Aerial Vehicle Swarm Based on Airborne Monocular Vision. Drones 2023, 7, 612. https://doi.org/10.3390/drones7100612