A Modified YOLOv4 Deep Learning Network for Vision-Based UAV Recognition

Abstract

1. Introduction

1.1. Challenges in Drone Recognition

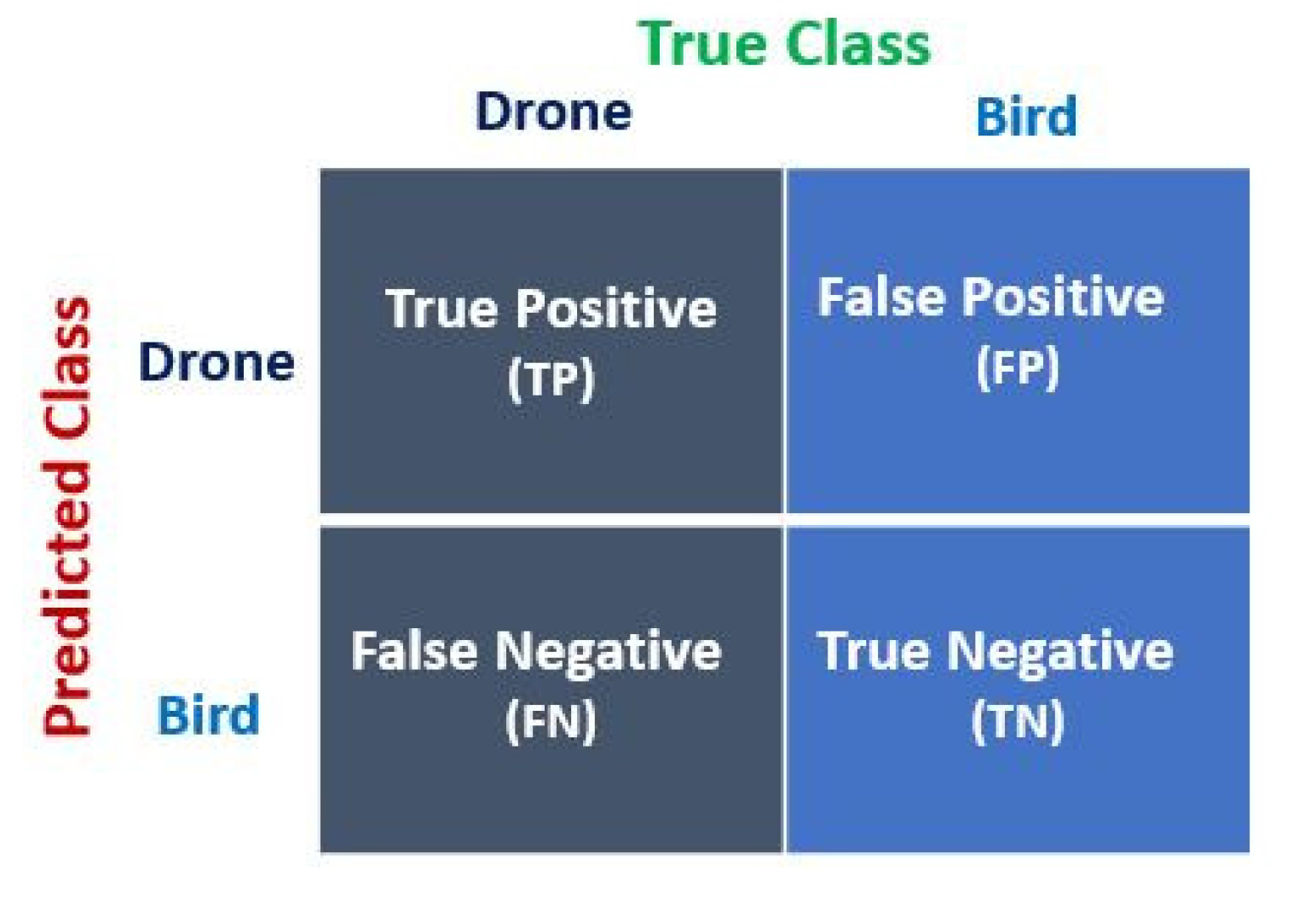

1.1.1. Confusion of Drones and Birds

1.1.2. Crowded Background

1.1.3. Small Drone Size

2. State of the Art Work

3. Related Works

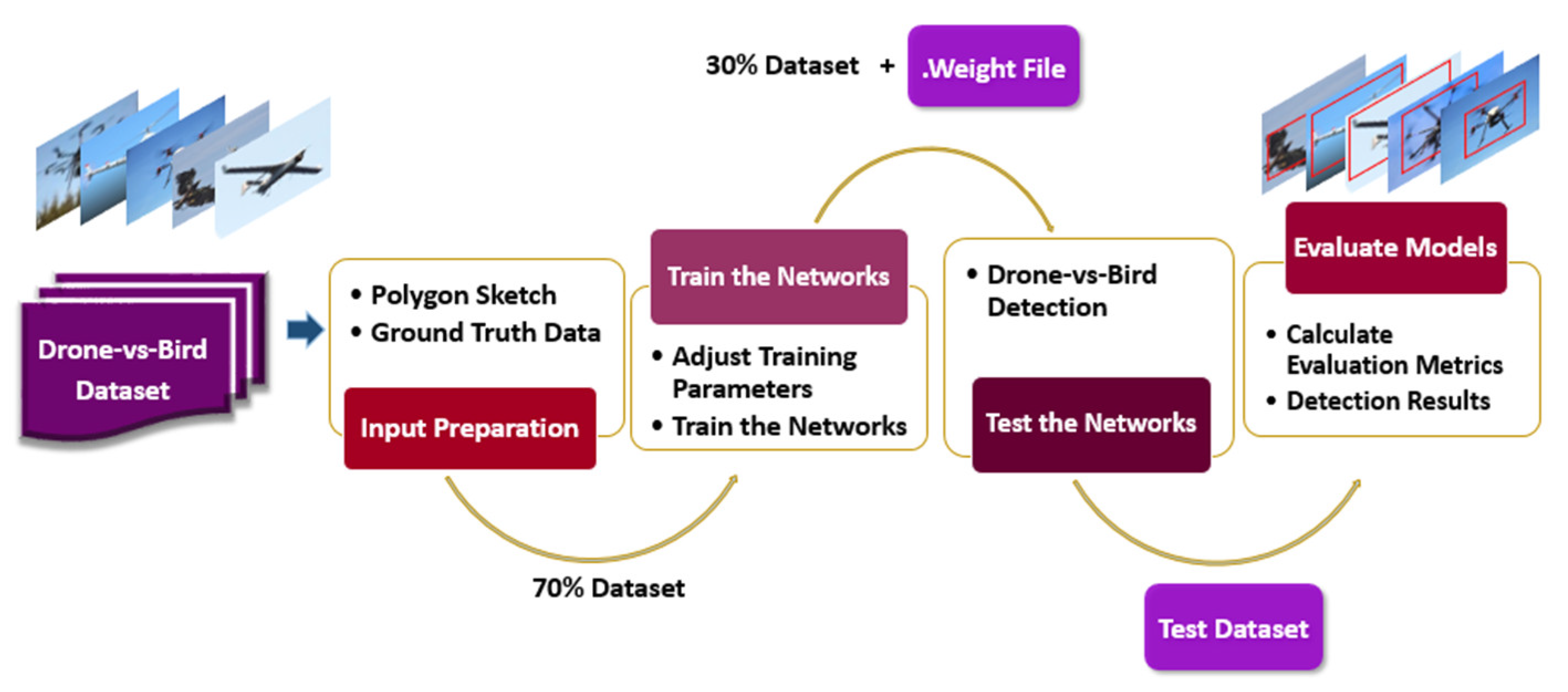

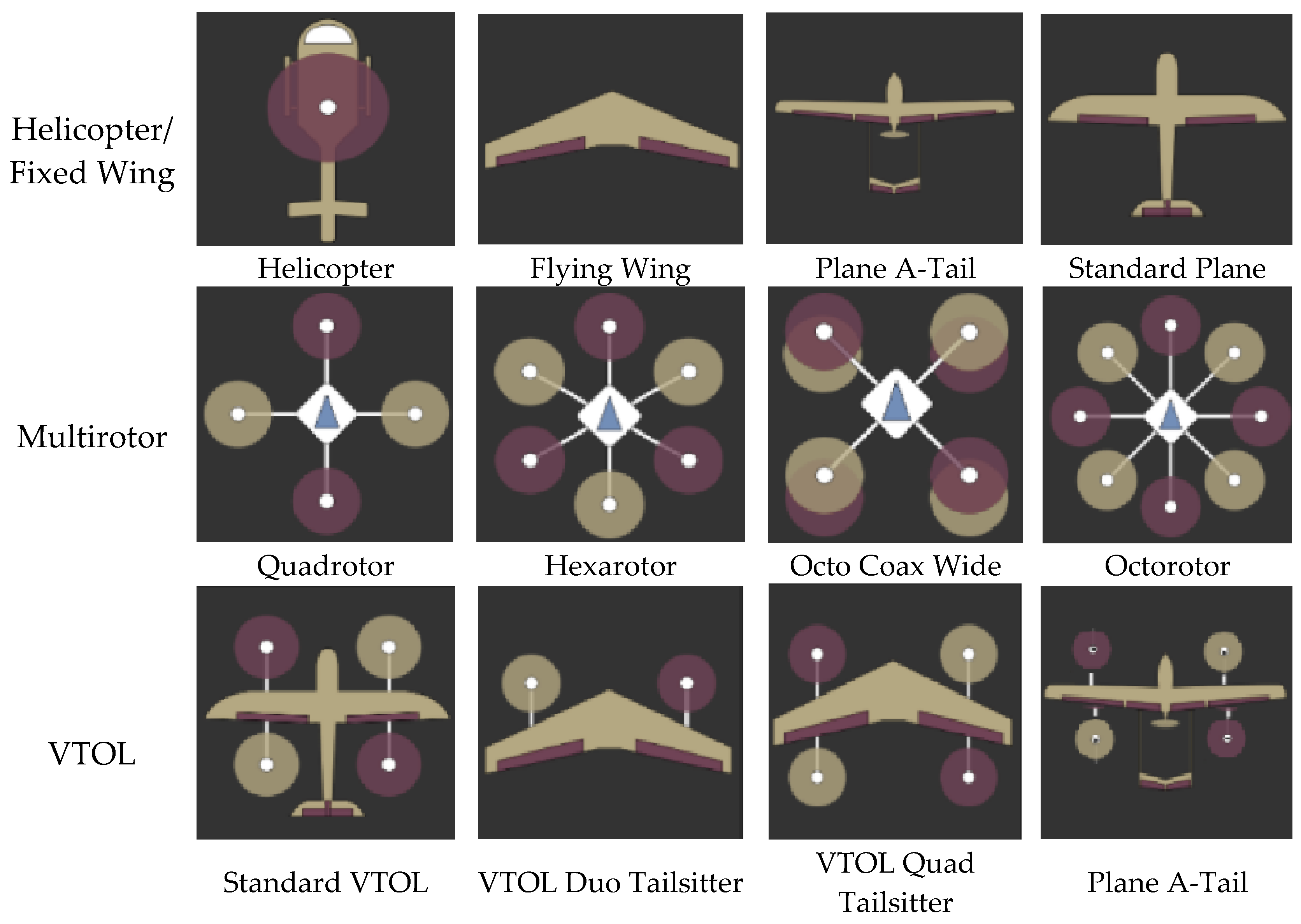

4. Methodology

4.1. Input Preparation

4.2. Train the Networks

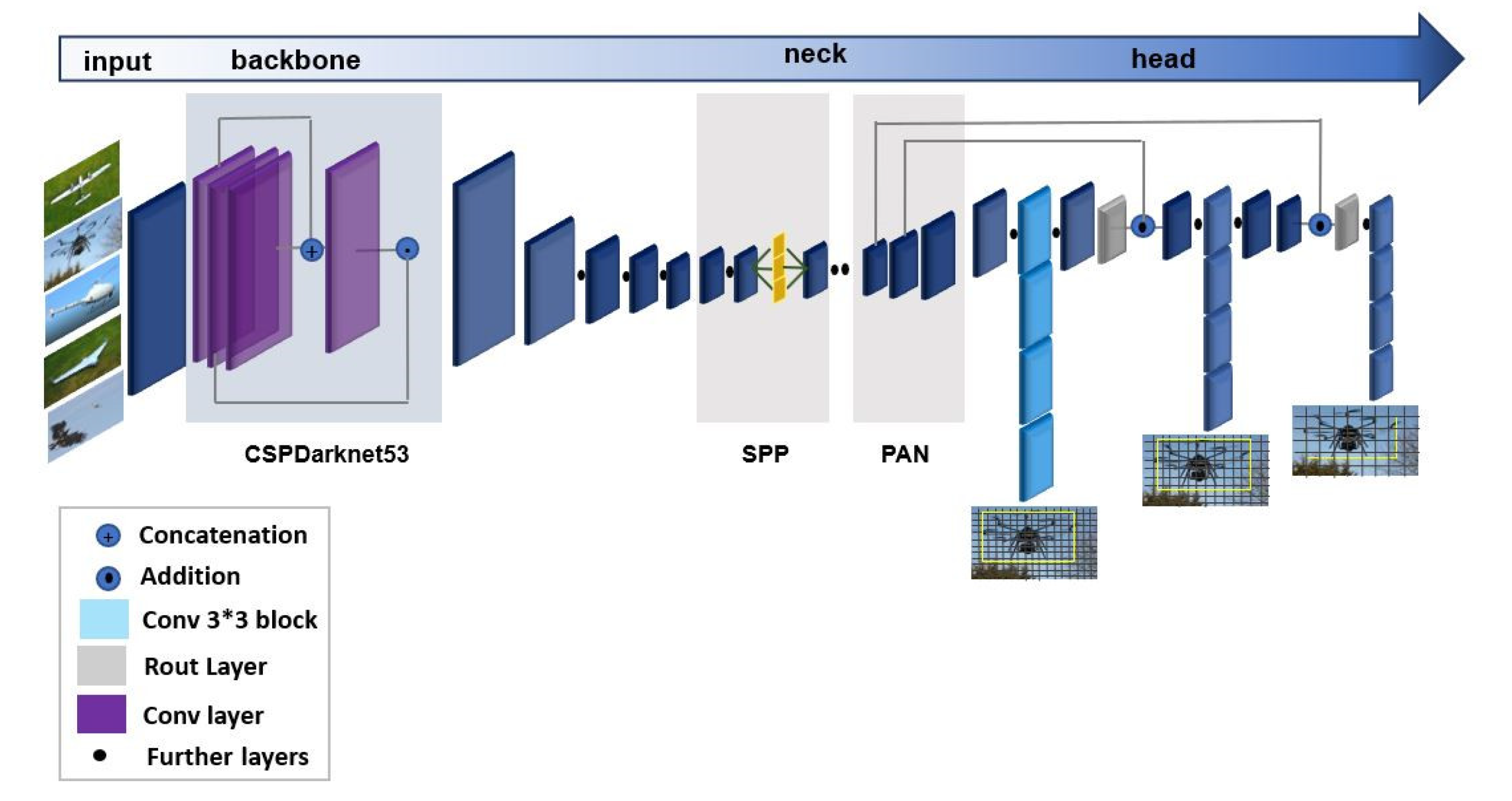

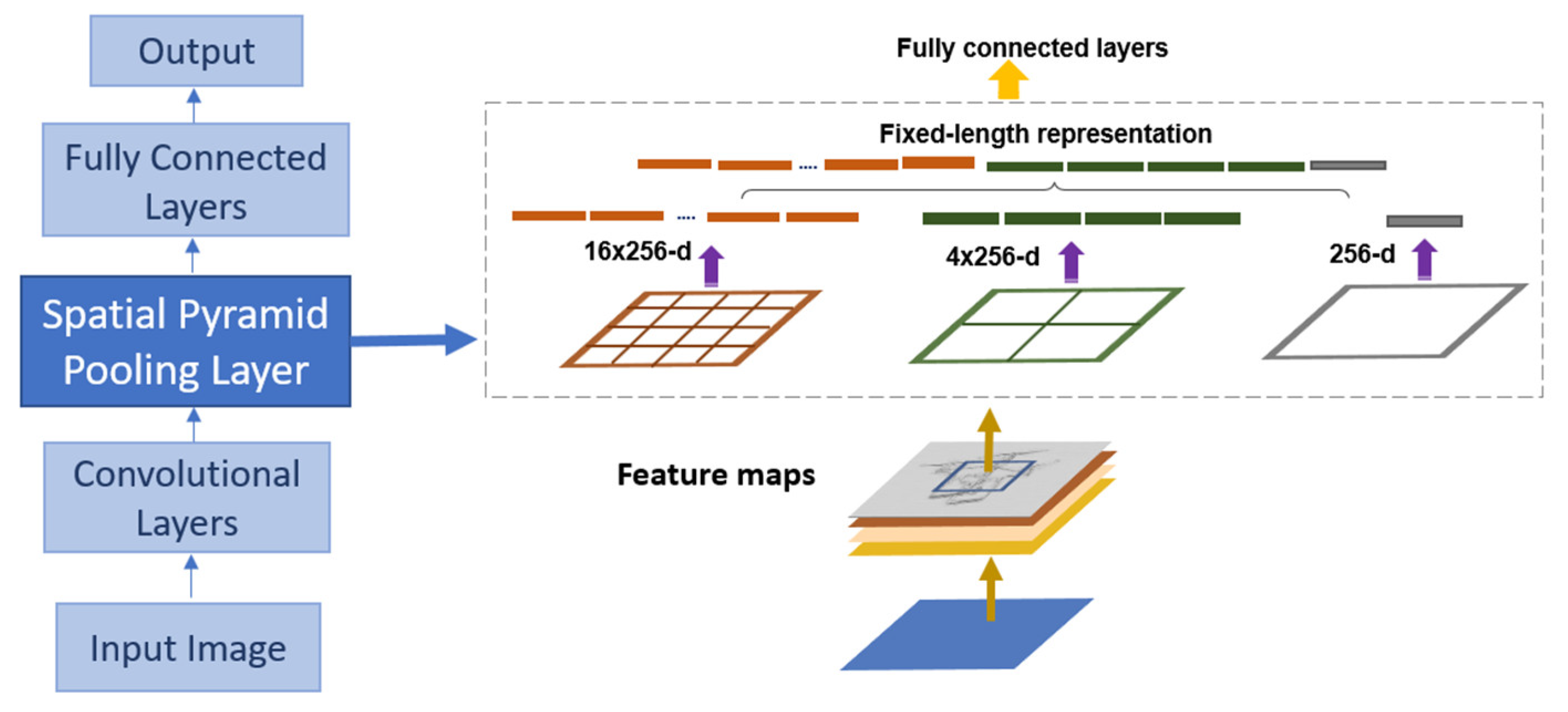

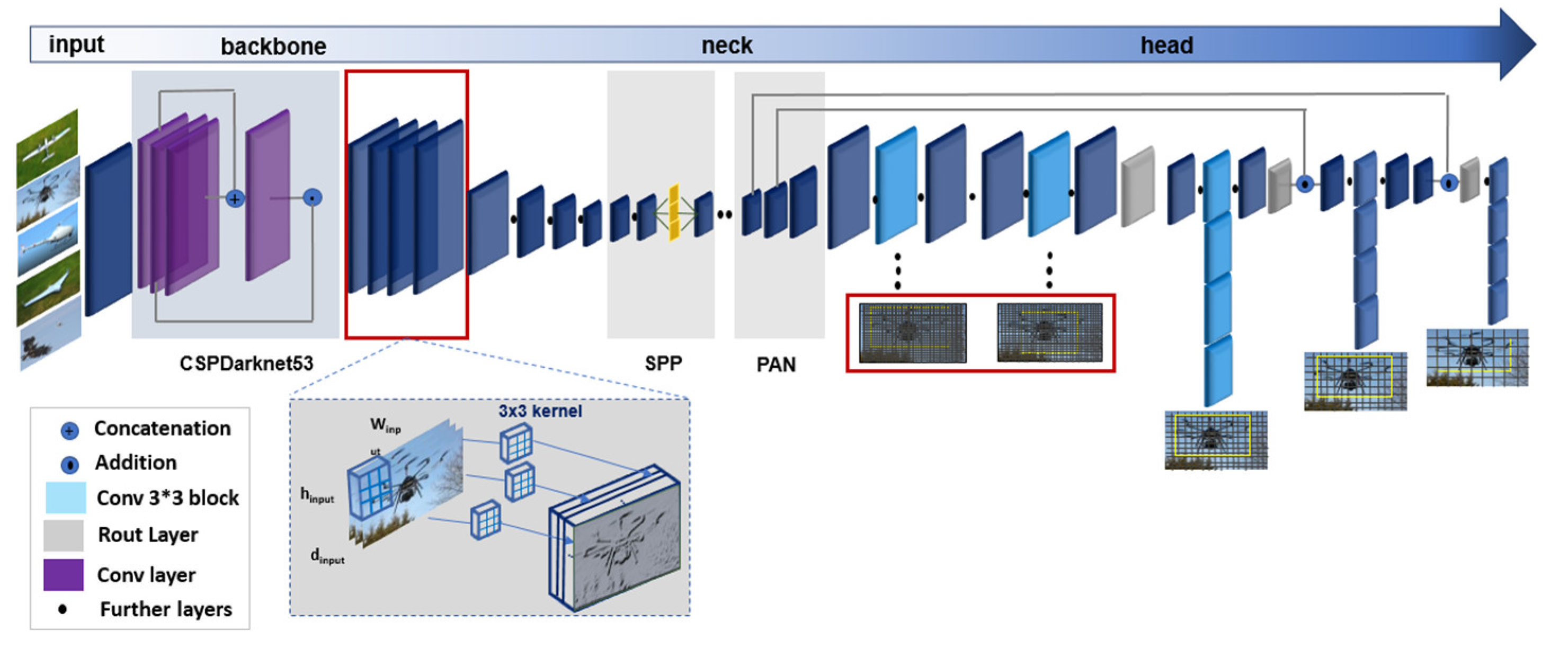

4.2.1. YOLOv4 Deep Learning Network Architecture

4.2.2. The Modified YOLOv4 Deep Learning Network Architecture

4.3. Test the Networks

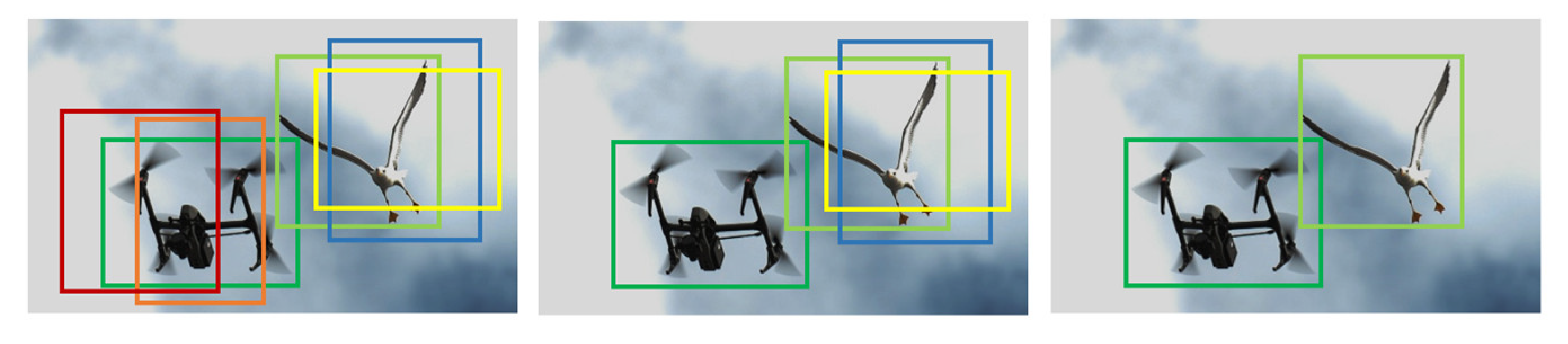

1. Select the predicted bounding box with the highest confidence level;

2. Calculate the IoU (intersection over union) between the selected box and each of the other boxes (Equation (1));

3. Remove boxes whose overlap with the selected box exceeds the default IoU threshold of 7%;

4. Repeat steps 1–3 for the remaining boxes.
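The suppression steps listed above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the corner-based box format `(x_min, y_min, x_max, y_max)`, the function names `iou` and `non_max_suppression`, and the threshold passed in the usage example are all assumptions made for the sketch.

```python
from typing import List, Tuple

# Assumed box format for this sketch: (x_min, y_min, x_max, y_max).
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes: List[Box], scores: List[float],
                        iou_threshold: float) -> List[int]:
    """Return the indices of the boxes kept after greedy suppression."""
    # Candidate indices, sorted by descending confidence.
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    while order:
        # Step 1: select the remaining box with the highest confidence.
        best = order.pop(0)
        keep.append(best)
        # Steps 2-3: compute IoU with the selected box and drop every box
        # whose overlap exceeds the threshold.
        order = [i for i in order
                 if iou(boxes[best], boxes[i]) <= iou_threshold]
        # Step 4: the loop repeats on whatever is left.
    return keep


# Hypothetical example: two heavily overlapping boxes and one distant box.
example_boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
example_scores = [0.9, 0.8, 0.7]
kept = non_max_suppression(example_boxes, example_scores, iou_threshold=0.5)
```

The threshold is left as a parameter so that the same routine can be tried with whatever value a given configuration uses.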

4.4. Evaluation Metrics

5. Experiments and Results

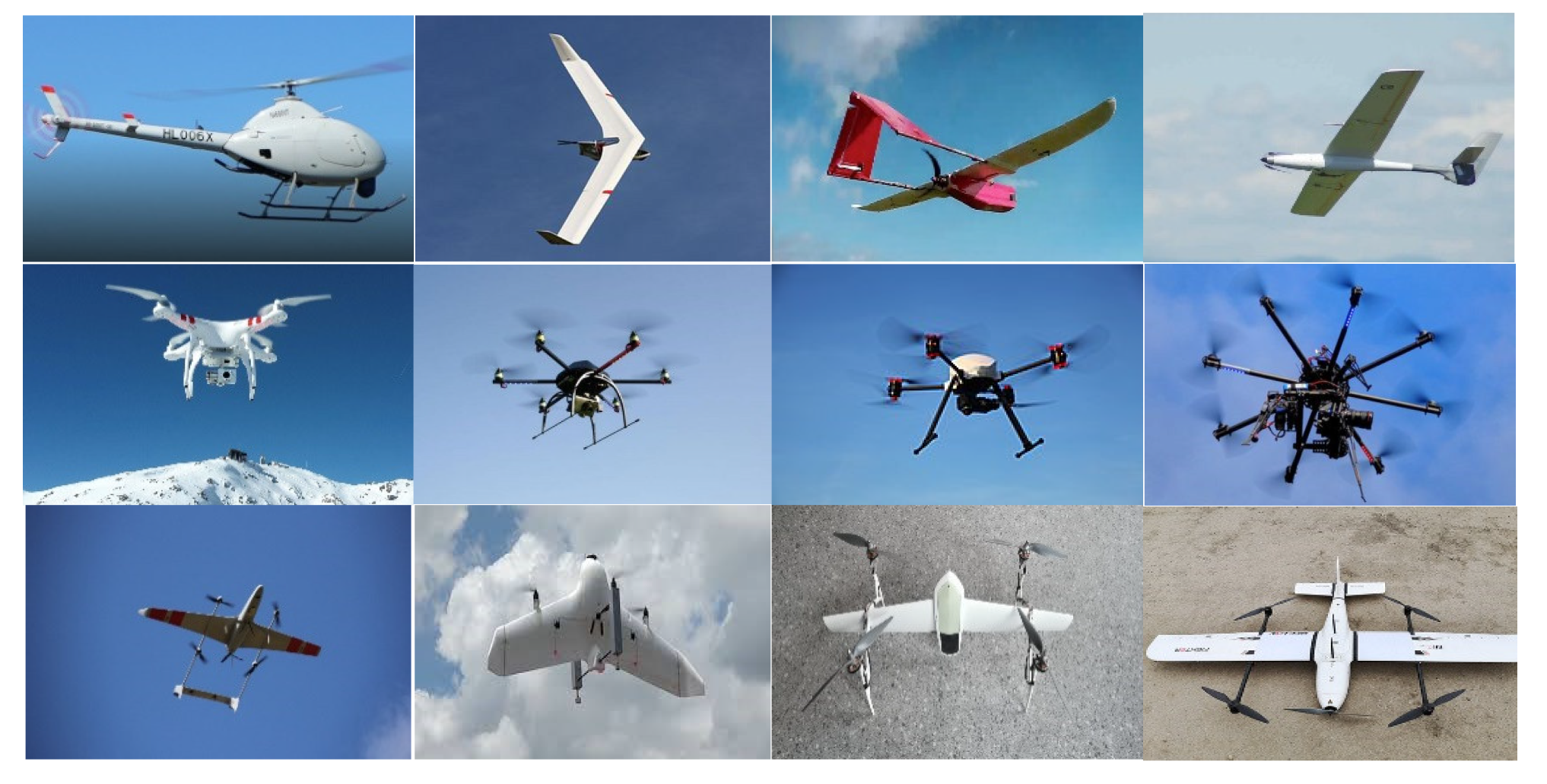

5.1. Data Preparation

5.2. Model Implementation and Training Results

- The input image size was set to 160 × 160;

- The subdivision and the batch parameters were changed to 1 and 64, respectively (these settings were made to avoid errors due to lack of memory);

- The learning rate was changed to 0.0005;

- The step parameter was changed to 24,000 and 27,000 (80% and 90% of the number of iterations, respectively);

- The number of filters in the three convolutional layers preceding the YOLO layers was changed to 30, according to the number of classes.
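The last two settings follow from simple arithmetic, sketched below. The sketch assumes the usual Darknet convention of `filters = (classes + 5) * 3` for the layers feeding each YOLO head, five classes (the five categories in the results table), and a total of 30,000 iterations, which is inferred here from 24,000 being stated as 80% of the iterations. The helper names are hypothetical.

```python
from typing import List, Sequence


def yolo_head_filters(num_classes: int, anchors_per_scale: int = 3) -> int:
    """Filters in the convolutional layer feeding a YOLO layer.

    Each anchor predicts 4 box coordinates, 1 objectness score and one
    score per class, hence (num_classes + 5) values per anchor.
    """
    return (num_classes + 5) * anchors_per_scale


def lr_steps(max_iterations: int,
             percentages: Sequence[int] = (80, 90)) -> List[int]:
    """Iterations at which the learning rate is stepped down."""
    return [max_iterations * p // 100 for p in percentages]


# Assumed values: 5 classes and 30,000 iterations (inferred, see lead-in).
filters = yolo_head_filters(num_classes=5)
steps = lr_steps(max_iterations=30_000)
print(filters, steps)  # prints: 30 [24000, 27000]
```

Under these assumptions the computed values match the 30 filters and the 24,000 and 27,000 steps listed above.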

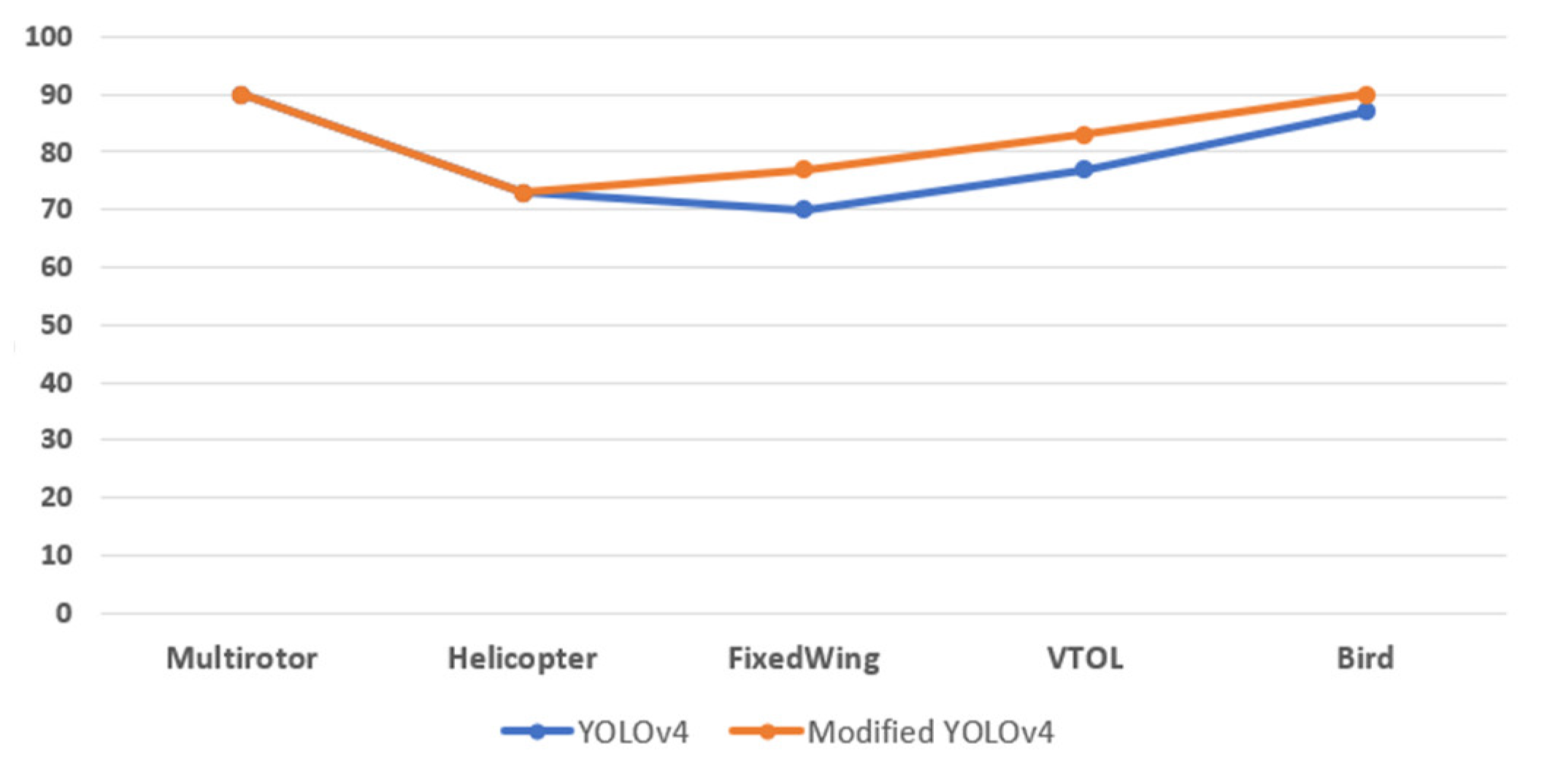

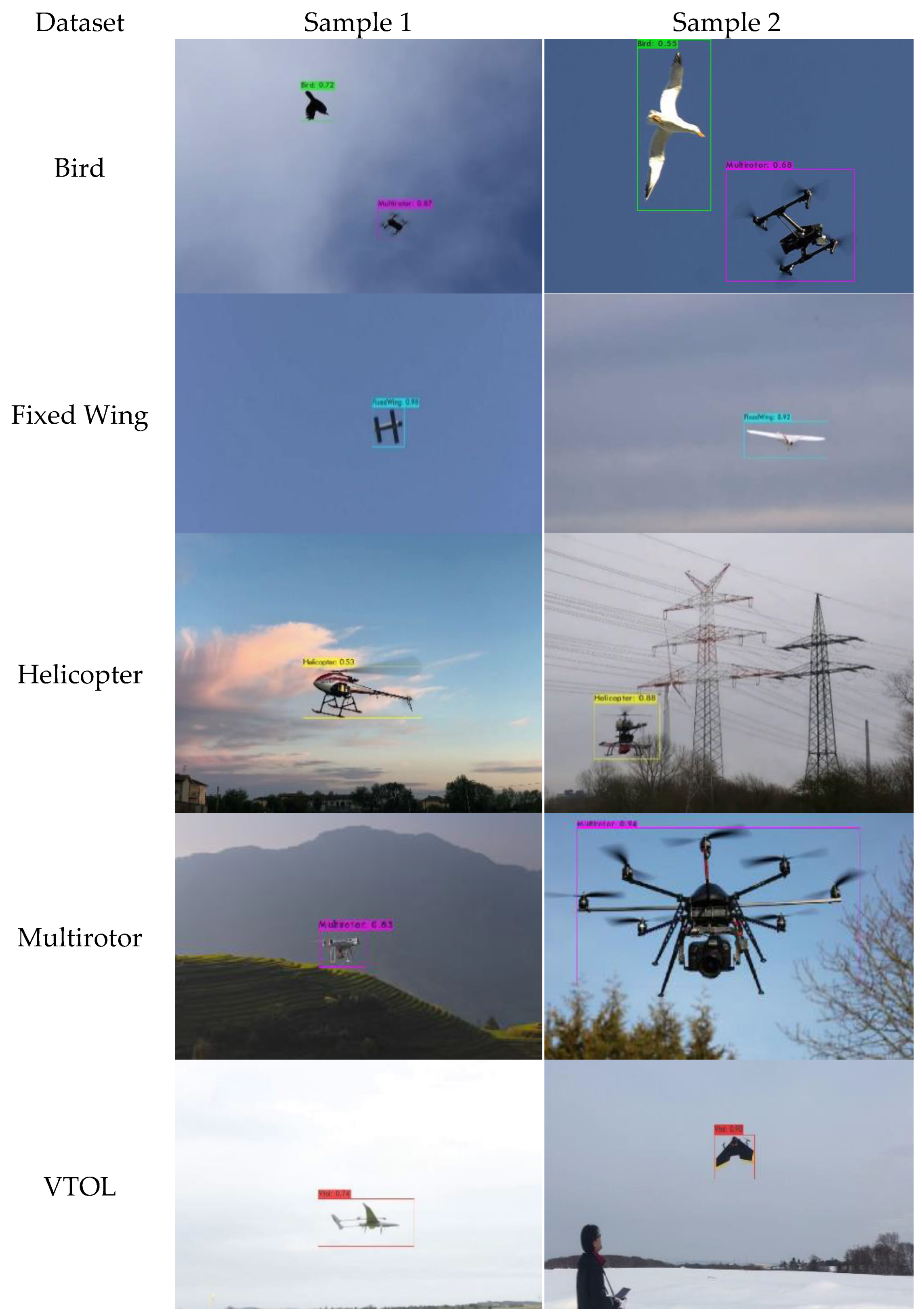

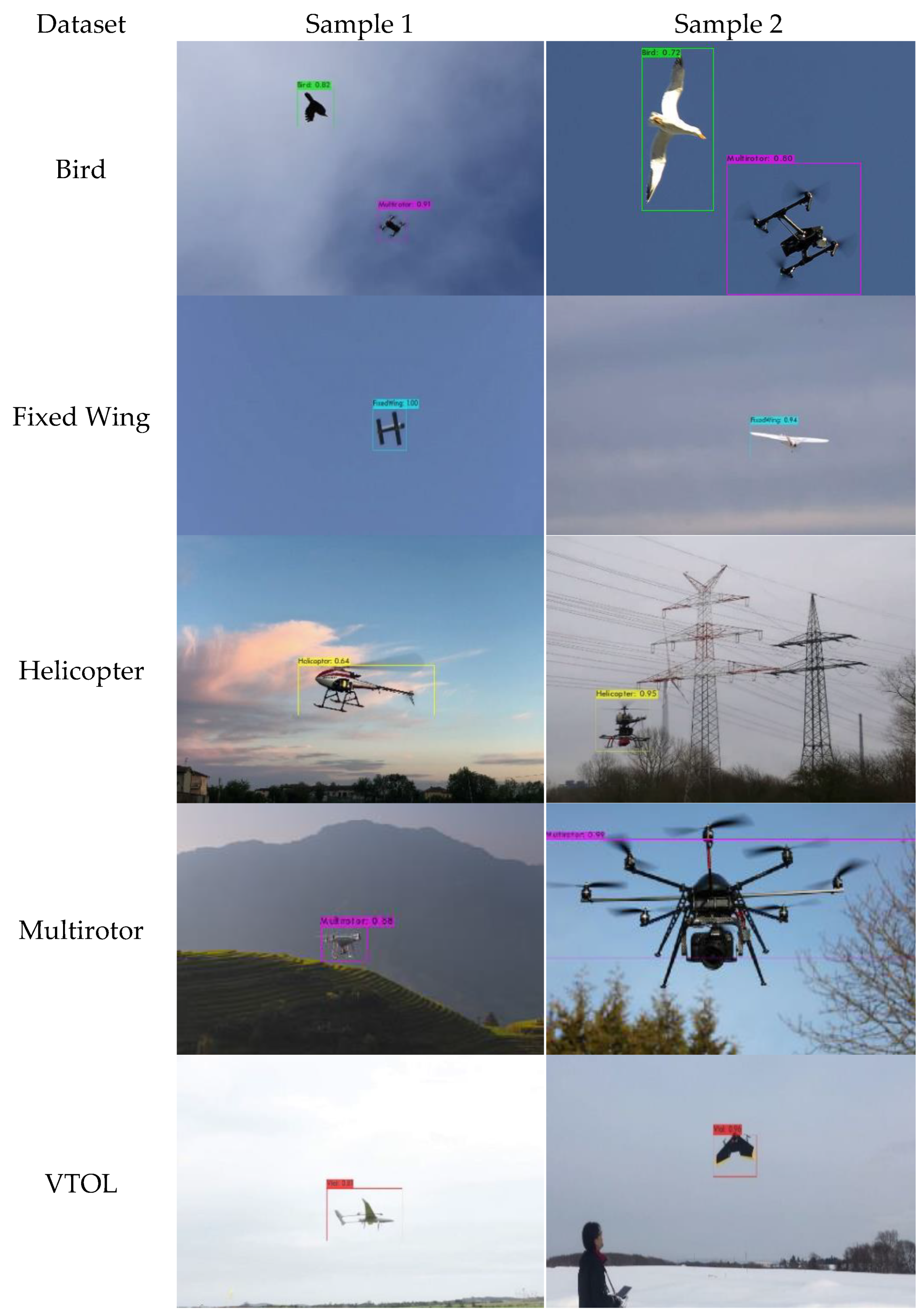

5.3. Evaluation of the YOLOv4 and Modified YOLOv4 Models

5.4. Addressing the Challenges in the Modified YOLOv4 Model

6. Discussion

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Dataset | Model | Num of Images | Precision % | Recall % | F1-Score % |
|---|---|---|---|---|---|
| Bird | YOLOv4 | 1570 | 81 | 87 | 84 |
| Bird | Modified YOLOv4 | 1570 | 87 | 90 | 89 |
| Fixed Wing | YOLOv4 | 1570 | 88 | 70 | 78 |
| Fixed Wing | Modified YOLOv4 | 1570 | 88 | 77 | 82 |
| Helicopter | YOLOv4 | 1570 | 81 | 73 | 77 |
| Helicopter | Modified YOLOv4 | 1570 | 88 | 73 | 80 |
| Multirotor | YOLOv4 | 1570 | 77 | 90 | 83 |
| Multirotor | Modified YOLOv4 | 1570 | 79 | 90 | 84 |
| VTOL | YOLOv4 | 1570 | 72 | 77 | 74 |
| VTOL | Modified YOLOv4 | 1570 | 74 | 83 | 78 |
| Total | YOLOv4 | 7850 | 80 | 79 | 79 |
| Total | Modified YOLOv4 | 7850 | 83 | 83 | 83 |
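As a rough consistency check on the table above, the F1-score column can be recomputed from the precision and recall columns. The sketch assumes the standard definition of F1 as the harmonic mean of precision and recall; the function name `f1_score` and the selection of rows are illustrative only.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (same units as the inputs)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (dataset, model, precision %, recall %, reported F1 %) copied from the table.
rows = [
    ("Bird", "YOLOv4", 81, 87, 84),
    ("Fixed Wing", "YOLOv4", 88, 70, 78),
    ("Fixed Wing", "Modified YOLOv4", 88, 77, 82),
    ("Total", "Modified YOLOv4", 83, 83, 83),
]

for dataset, model, p, r, reported in rows:
    recomputed = f1_score(p, r)
    print(f"{dataset:<10} {model:<16} "
          f"recomputed={recomputed:.1f} reported={reported}")
```

Because the published precision and recall are already rounded to whole percentages, a recomputed value can differ from the reported one by about a point in some rows.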

| Dataset | Model | Num of Images | Accuracy % | mAP % | IoU % |
|---|---|---|---|---|---|
| Total | YOLOv4 | 7850 | 79 | 79 | 80 |
| Total | Modified YOLOv4 | 7850 | 83 | 83 | 84 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Dadrass Javan, F.; Samadzadegan, F.; Gholamshahi, M.; Ashatari Mahini, F. A Modified YOLOv4 Deep Learning Network for Vision-Based UAV Recognition. Drones 2022, 6, 160. https://doi.org/10.3390/drones6070160