sUAS Monitoring of Coastal Environments: A Review of Best Practices from Field to Lab

Abstract

1. Introduction

2. Previous Reviews of sUAS Monitoring of Coastal Environments

2.1. sUAS Regulations

2.2. Cameras and Platforms

2.3. Calibration Procedures

2.4. Validation Procedures

2.5. Literature Review Gaps

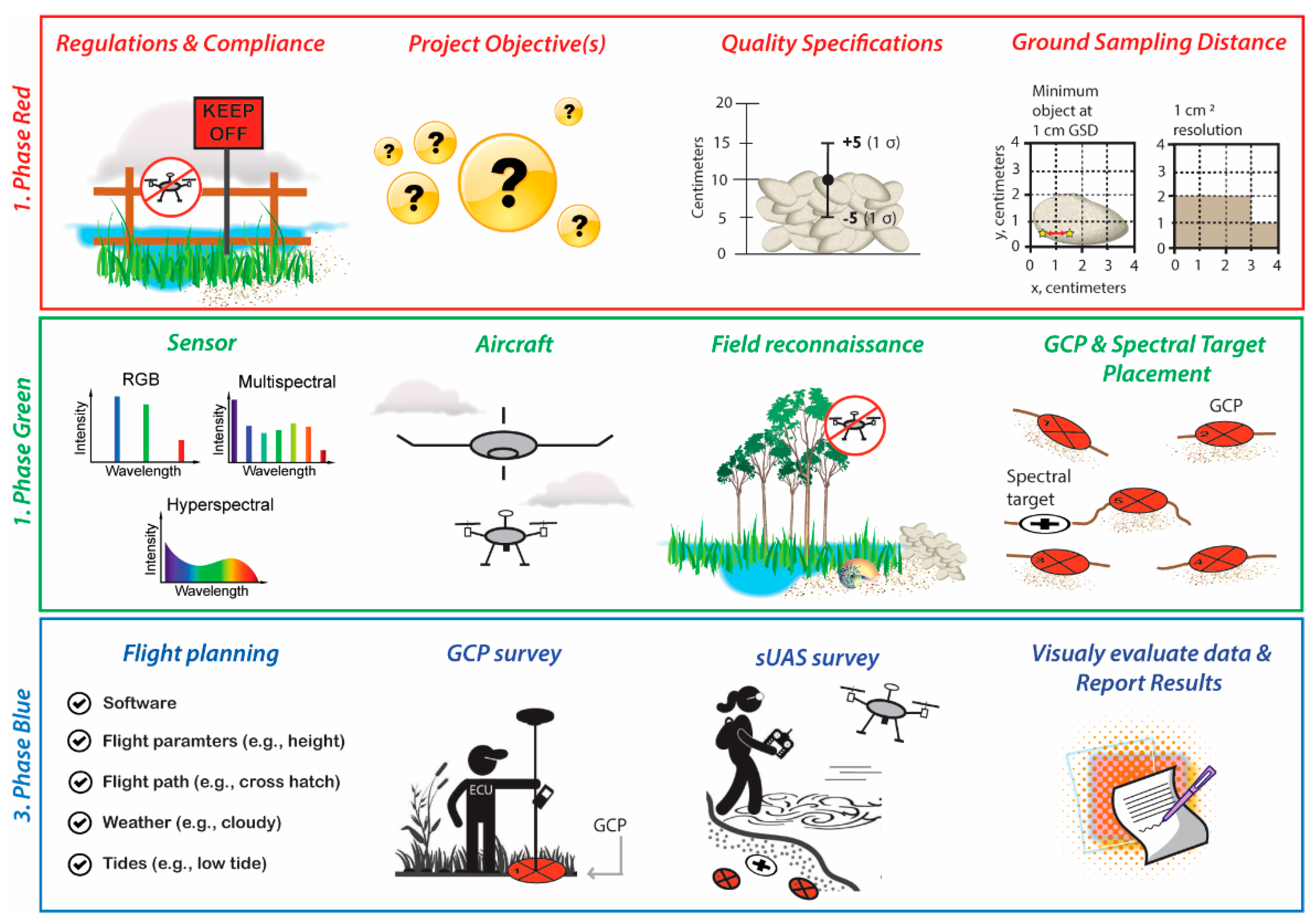

3. sUAS Photo-Based Surveys of Coastal Environments: Best Practices

3.1. sUAS Photo-Based Surveys

3.2. sUAS Photo-Based Processing of Dense Point Clouds, DEMs and Orthomosaics

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Levin, L.A.; Boesch, D.F.; Covich, A.; Dahm, C.; Erséus, C.; Ewel, K.C.; Kneib, R.T.; Moldenke, A.; Palmer, M.A.; Snelgrove, P.; et al. The function of marine critical transition zones and the importance of sediment biodiversity. Ecosystems 2001, 4, 430–451.

- Matthews, M.W. A current review of empirical procedures of remote sensing in inland and near-coastal transitional waters. Int. J. Remote Sens. 2011, 32, 6855–6899.

- Klemas, V.V. Coastal and environmental remote sensing from unmanned aerial vehicles: An overview. J. Coast. Res. 2015, 31, 1260–1267.

- Kislik, C.; Dronova, I.; Kelly, M. UAVs in support of algal bloom research: A review of current applications and future opportunities. Drones 2018, 2, 35.

- Adade, R.; Aibinu, A.M.; Ekumah, B.; Asaana, J. Unmanned Aerial Vehicle (UAV) applications in coastal zone management—A review. Environ. Monit. Assess. 2021, 193, 1–12.

- Oleksyn, S.; Tosetto, L.; Raoult, V.; Joyce, K.E.; Williamson, J.E. Going Batty: The challenges and opportunities of using drones to monitor the behavior and habitat use of rays. Drones 2021, 5, 12.

- Morgan, G.R.; Hodgson, M.E.; Wang, C.; Schill, S.R. Unmanned aerial remote sensing of coastal vegetation: A review. Ann. GIS 2022, 1–15.

- Ridge, J.; Seymour, A.; Rodriguez, A.B.; Dale, J.; Newton, E.; Johnston, D.W. Advancing UAS Methods for Monitoring Coastal Environments. In Proceedings of the AGU Fall Meeting, New Orleans, LA, USA, 11–15 December 2017.

- Johnston, D.W. Unoccupied Aircraft Systems in Marine Science and Conservation. Annu. Rev. Mar. Sci. 2019, 11, 439–463.

- Windle, A.E.; Poulin, S.K.; Johnston, D.W.; Ridge, J.T. Rapid and accurate monitoring of intertidal Oyster Reef Habitat using unoccupied aircraft systems and structure from motion. Remote Sens. 2019, 11, 2394.

- Rees, A.F.; Avens, L.; Ballorain, K.; Bevan, E.; Broderick, A.C.; Carthy, R.R.; Christianen, M.J.A.; Duclos, G.T.; Heithaus, M.R.; Johnston, J.W.; et al. The potential of unmanned aerial systems for sea turtle research and conservation: A review and future directions. Endang. Species Res. 2018, 35, 81–100.

- Schofield, G.; Esteban, N.; Katselidis, K.A.; Hays, G.C. Drones for research on sea turtles and other marine vertebrates—A review. Biol. Conserv. 2019, 238, 108214.

- Kandrot, S.; Hayes, S.; Holloway, P. Applications of Uncrewed Aerial Vehicles (UAV) Technology to Support Integrated Coastal Zone Management and the UN Sustainable Development Goals at the Coast. Estuaries Coast. 2021, 1–20.

- Khedmatgozar Dolati, S.S.; Caluk, N.; Mehrabi, A.; Khedmatgozar Dolati, S.S. Non-destructive testing applications for steel bridges. Appl. Sci. 2021, 11, 9757.

- Garcia-Soto, C.; Seys, J.J.C.; Zielinski, O.; Busch, J.A.; Luna, S.I.; Baez, J.C.; Domegan, C.; Dubsky, K.; Kotynska-Zielinska, I.; Loubat, P.; et al. Marine Citizen Science: Current State in Europe and New Technological Developments. Front. Mar. Sci. 2021, 8, 621472.

- Nowlin, M.B.; Roady, S.E.; Newton, E.; Johnston, D.W. Applying unoccupied aircraft systems to study human behavior in marine science and conservation programs. Front. Mar. Sci. 2019, 6, 567.

- Beaucage, P.; Glazer, A.; Choisnard, J.; Yu, W.; Bernier, M.; Benoit, R.; Lafrance, G. Wind assessment in a coastal environment using synthetic aperture radar satellite imagery and a numerical weather prediction model. Can. J. Remote Sens. 2007, 33, 368–377.

- Seier, G.; Hodl, C.; Abermann, J.; Schottl, S.; Maringer, M.; Hofstadler, D.N.; Probstl-Haider, U.; Lieb, G.H. Unmanned aircraft systems for protected areas: Gadgetry or necessity? J. Nat. Conserv. 2021, 64, 126078.

- Ciaccio, F.; Troisi, S. Monitoring marine environments with Autonomous Underwater Vehicles: A bibliometric analysis. Res. Eng. 2021, 9, 100205.

- Lally, H.; O’Connor, I. Can drones be used to conduct water sampling in aquatic environments? A review. Sci. Total Environ. 2019, 20, 569–575.

- Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334.

- Griffiths, D.; Burningham, H. Comparison of pre- and self-calibrated camera calibration models for UAS-derived nadir imagery for a SfM application. Prog. Phys. Geog. 2019, 43, 215–235.

- O’Connor, P.L.; Smith, M.J.; James, M.R. Cameras and settings for aerial surveys in the geosciences: Optimising image data. Prog. Phys. Geog. 2017, 41, 325–344.

- Cooper, H.M.; Wasklewicz, T.; Zhu, Z.; Lewis, W.; Lecompte, K.; Heffentrager, M.; Smaby, R.; Brady, J.; Howard, R. Evaluating the ability of multi-sensor techniques to capture topographic complexity. Sensors 2021, 21, 2105.

- Cruzan, M.B.; Weinstein, B.G.; Grasty, M.R.; Kohrn, B.F.; Hendrickson, E.C.; Arredondo, T.M.; Thompson, P.G. Small unmanned aerial vehicles (micro-UAVs, drones) in plant ecology. Appl. Plant Sci. 2016, 4, 1600041.

- Tmušic, G.; Salvator, M.; Helge, A.; James, M.R.; Goncalves, G.; Ben-Dor, E.; Brook, A.; Polinova, M.; Arranz, J.J.; Mészáros, J.; et al. Current practices in UAS-based environmental monitoring. Remote Sens. 2020, 12, 1001.

- Rodriguez, A.B.; Fodrie, F.J.; Ridge, J.T.; Lindquist, N.L.; Theuerkauf, E.J.; Coleman, S.E.; Grabowski, J.H.; Brodeur, M.C.; Gittman, R.K.; Keller, D.A.; et al. Oyster reefs can outpace sea-level rise. Nat. Clim. Change 2014, 4, 493–497.

- Singh, K.K.; Frazier, A.E. A meta-analysis and review of unmanned aircraft system (UAS) imagery for terrestrial applications. Int. J. Remote Sens. 2018, 39, 5078–5098.

- Aasen, H.; Bolten, A. Multi-temporal high-resolution imaging spectroscopy with hyperspectral 2D imagers—From theory to application. Remote Sens. Environ. 2018, 205, 374–389.

- Roth, L.; Hund, A.; Aasen, H. PhenoFly Planning Tool: Flight planning for high-resolution optical remote sensing with unmanned aerial systems. Plant Methods 2018, 14, 116.

- Assmann, J.J.; Kerby, J.T.; Cunliffe, A.M.; Myers-Smith, I.H. Vegetation monitoring using multispectral sensors—Best practices and lessons learned from high latitudes. J. Unmanned Veh. Syst. 2019, 7, 54–75.

- Smith, G.M.; Milton, E.J. The use of the empirical line method to calibrate remotely sensed data to reflectance. Int. J. Remote Sens. 1999, 20, 2653–2662.

- Iqbal, F.; Lucieer, A.; Barry, K. Simplified radiometric calibration for UAS-mounted multispectral sensor. Eur. J. Remote Sens. 2018, 51, 301–313.

- Conte, P.; Girelli, V.A.; Mandanici, E. Structure from Motion for aerial thermal imagery at city scale: Pre-processing, camera calibration, accuracy assessment. ISPRS J. Photogramm. Remote Sens. 2018, 146, 320–333.

- Agisoft LLC. Agisoft Metashape User Manual; Professional Edition, Version 1.8; Agisoft LLC: St. Petersburg, Russia, 2022.

- Granshaw, S.I. Bundle adjustment methods in engineering photogrammetry. Photogram. Rec. 1980, 56, 181–207.

- James, M.R.; Robson, S.; Smith, M.W. 3-D uncertainty-based topographic change detection with structure-from-motion photogrammetry: Precision maps for ground control and directly georeferenced surveys. Earth Surf. Process. Landf. 2017, 42, 1769–1788.

- Wackrow, R.; Chandler, J.H. Minimizing systematic error surfaces in digital elevation models using oblique convergent imagery. Photogram. Rec. 2011, 26, 16–31.

- James, M.R.; Robson, S. Straightforward reconstruction of 3D surfaces and topography with a camera: Accuracy and geoscience application. J. Geophys. Res. 2012, 117, F03017.

- Agisoft LLC. Metashape Python Reference, Release 1.8.2; Agisoft LLC: St. Petersburg, Russia, 2022.

- Rupnik, E.; Daakir, M.; Deseiligny, P. MicMac—A free, open-source solution for photogrammetry. Open Geospat. Data Softw. Stand. 2017, 2, 14.

- Pinton, D.; Canestrelli, A.; Wilkinson, B.; Ifju, P.; Ortega, A. Estimating ground elevation and vegetation characteristics in coastal salt marshes using UAV-based LiDAR and digital aerial photogrammetry. Remote Sens. 2021, 13, 4506.

- Wang, D.; Xing, S.; He, Y.; Yu, J.; Xu, Q.; Li, P. Evaluation of new lightweight UAV-borne topo-bathymetric LiDAR for shallow water bathymetry and object detection. Sensors 2022, 22, 1379.

| Reference | Regulations | Sensors | Platforms | Collection | Calibration | Validation | Processing | Software | Challenges | Benefits | Applications |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Morgan et al. (2022) [7] | □ | √ | √ | √ | √ | √ | □ | □ | √ | √ | □ |
| Adade et al. (2021) [5] | □ | √ | √ | □ | □ | √ | □ | √ | √ | □ | √ |
| Kandrot et al. (2021) [13] | √ | □ | √ | □ | □ | √ | □ | □ | √ | □ | √ |
| Oleksyn et al. (2021) [6] | √ | □ | □ | □ | □ | □ | □ | □ | √ | √ | √ |
| Ridge and Johnston (2020) [8] | □ | √ | √ | □ | □ | □ | □ | □ | √ | √ | √ |
| Johnston (2019) [9] | √ | √ | √ | □ | √ | □ | □ | □ | √ | □ | √ |
| Schofield et al. (2019) [12] | □ | □ | □ | □ | □ | □ | □ | □ | □ | √ | √ |
| Kislik et al. (2018) [4] | √ | √ | √ | □ | √ | √ | □ | √ | √ | √ | √ |
| Rees et al. (2018) [11] | √ | □ | □ | √ | □ | □ | □ | □ | √ | √ | √ |
| Klemas (2015) [3] | √ | □ | √ | □ | □ | □ | □ | □ | √ | √ | √ |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Guan, S.; Sirianni, H.; Wang, G.; Zhu, Z. sUAS Monitoring of Coastal Environments: A Review of Best Practices from Field to Lab. Drones 2022, 6, 142. https://doi.org/10.3390/drones6060142
