UAV Mapping and 3D Modeling as a Tool for Promotion and Management of the Urban Space

Abstract

1. Introduction

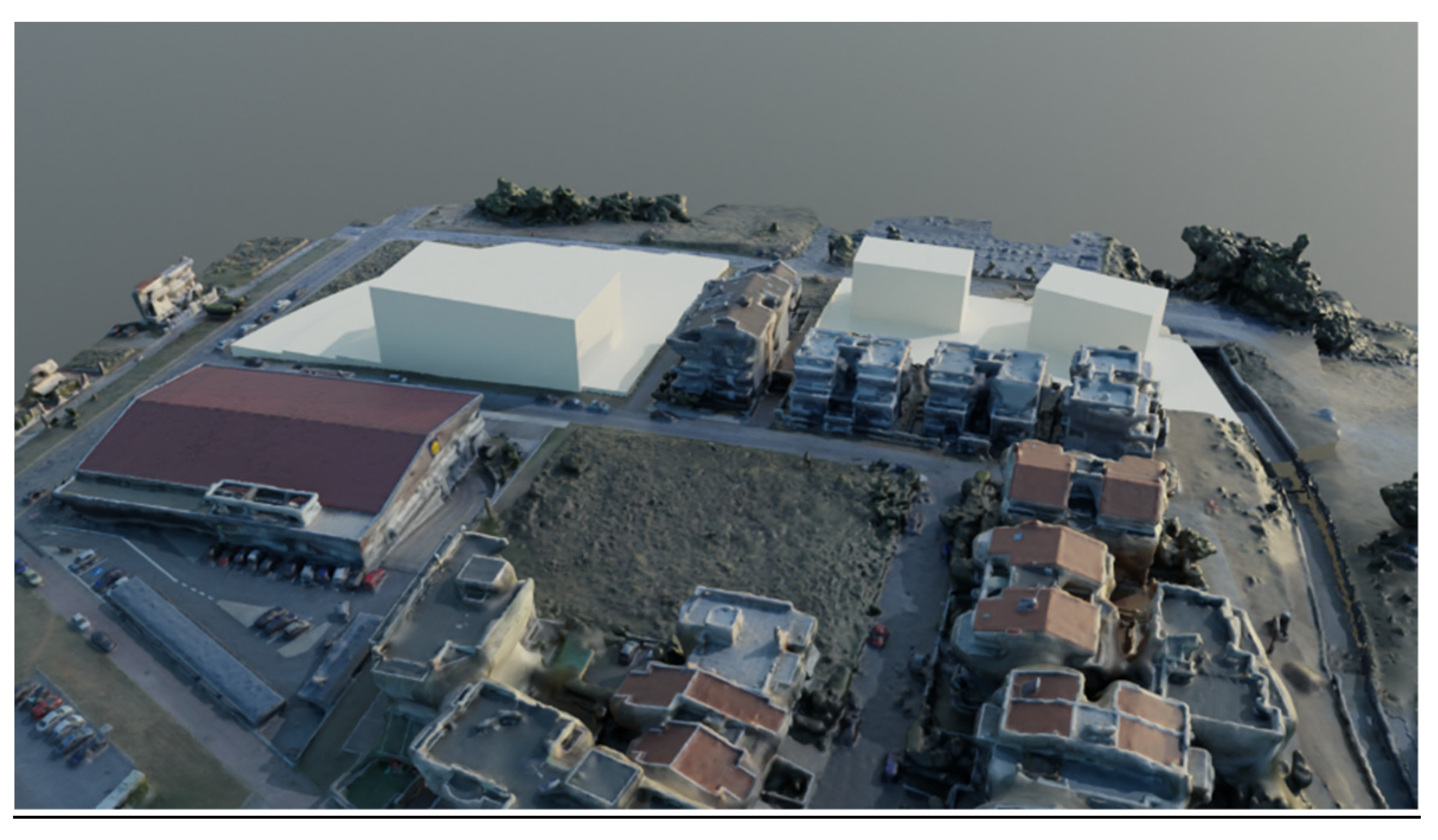

1.1. Mapping in the Urban Space

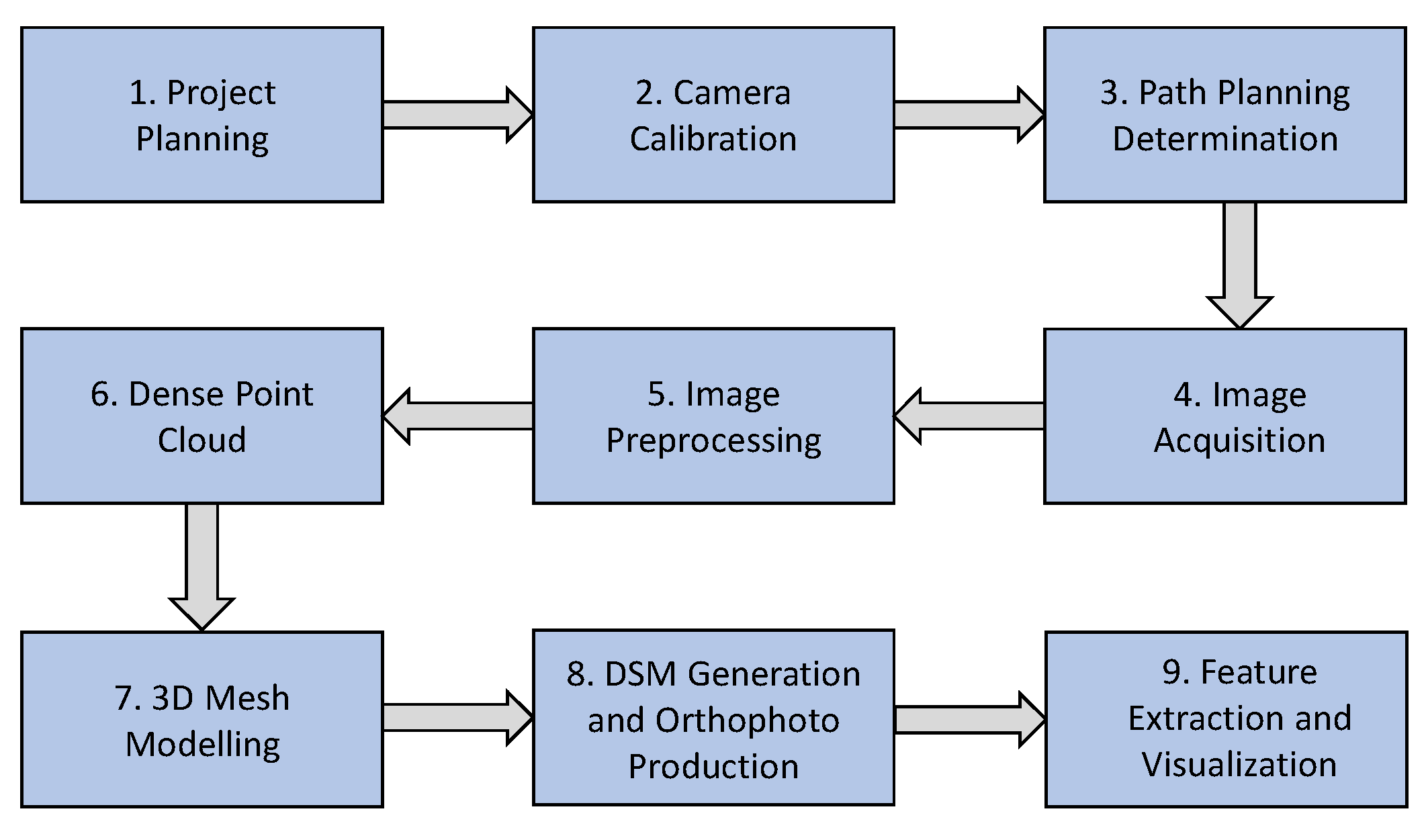

1.2. A Methodological Framework

2. Materials and Methods

2.1. Study Area

2.2. The UAV System

2.3. Planning and Acquisition of Aerial Images

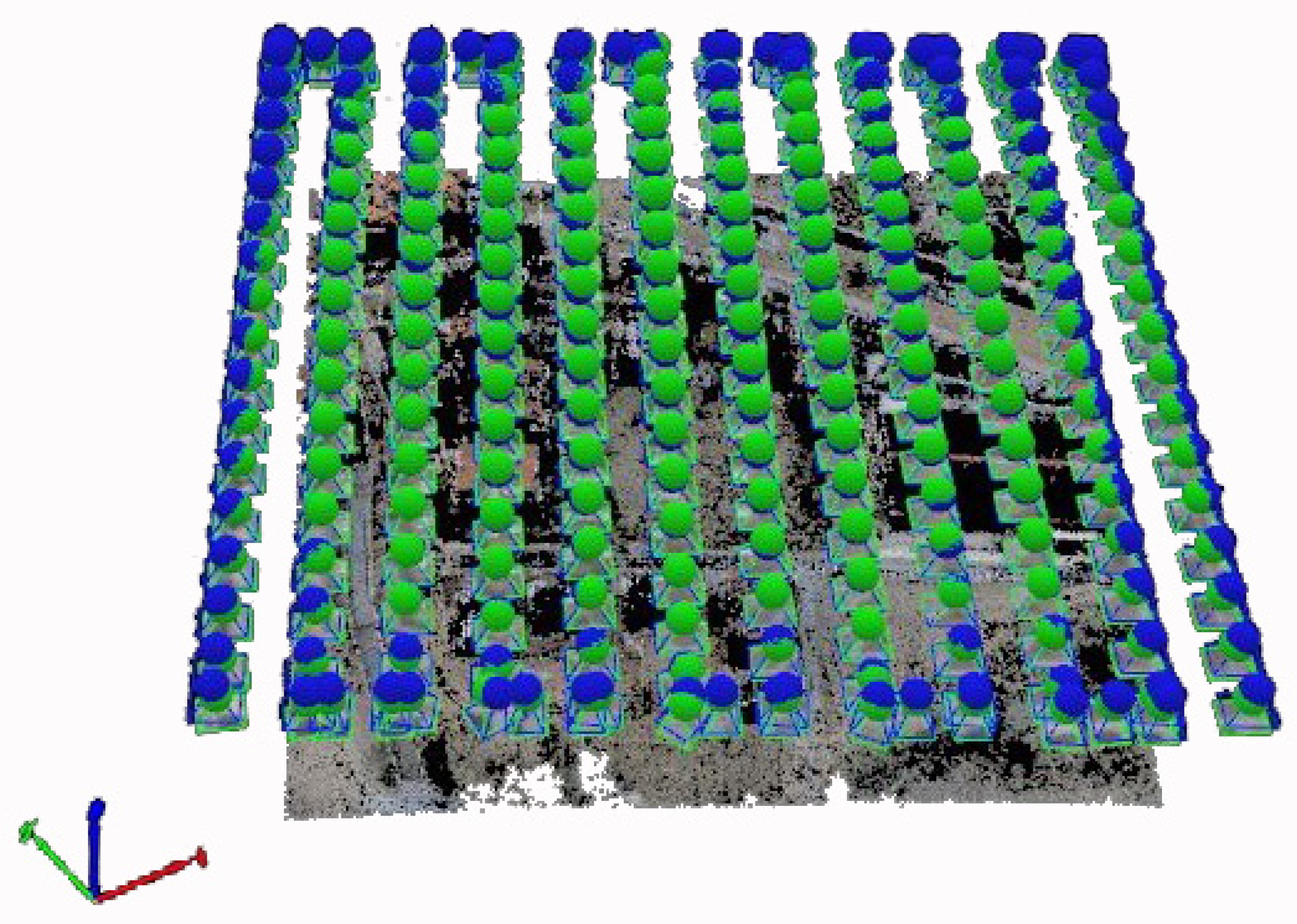

2.4. Data Extraction and Surface Reconstruction

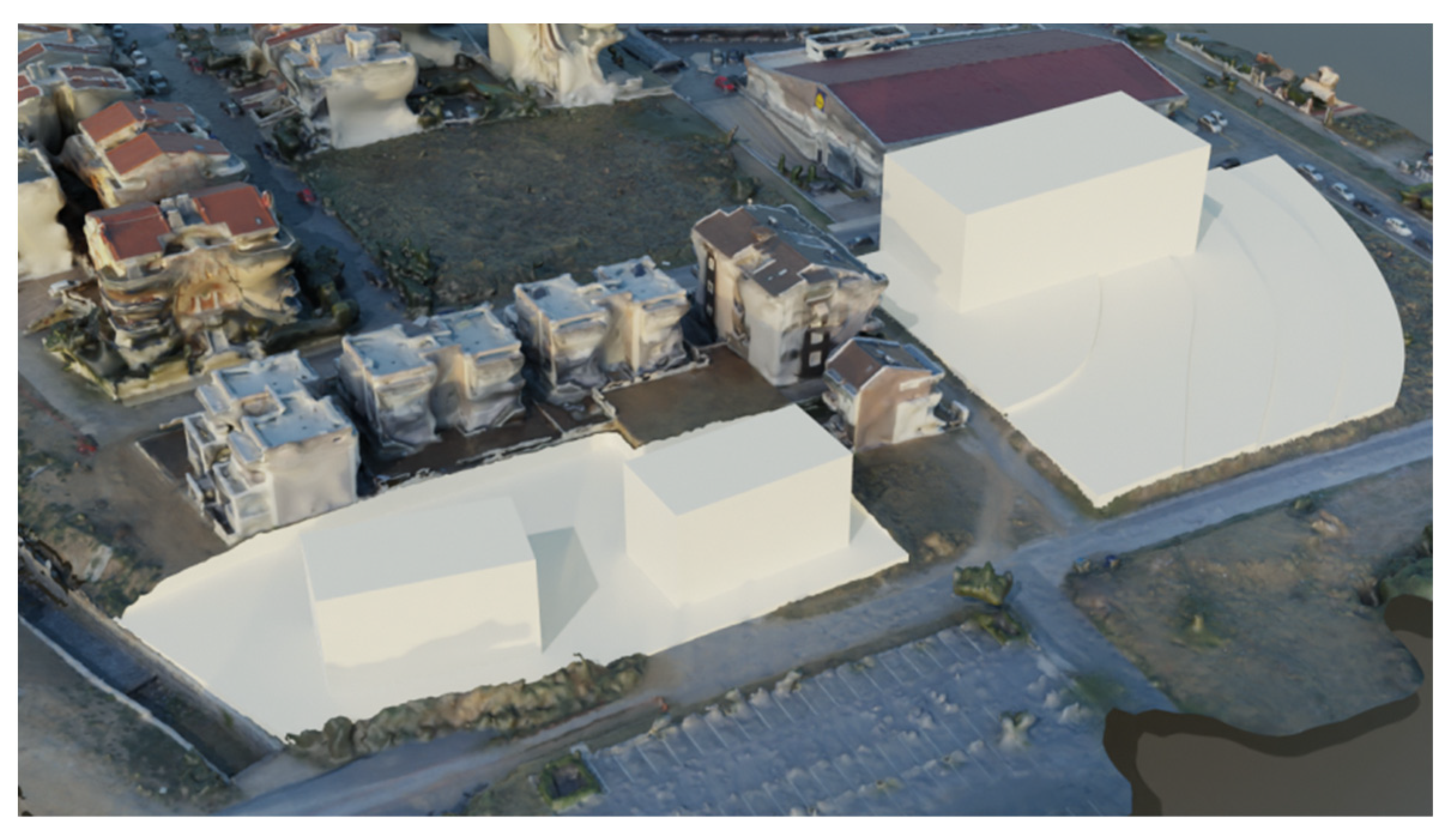

2.5. Texture Mapping and Rendering

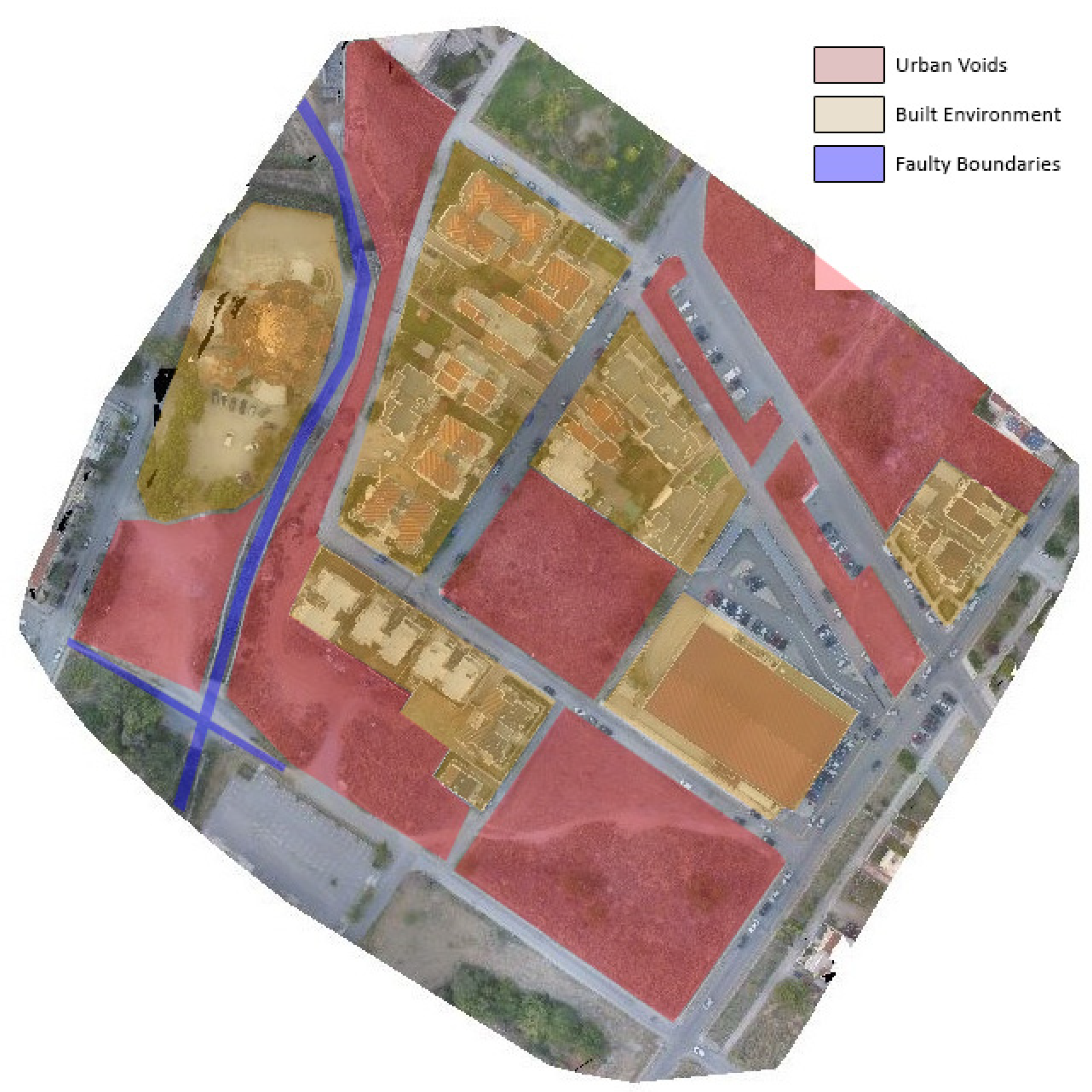

3. Results

4. Discussion and Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Characteristics | Platform |
|---|---|
| UAV model | DJI Phantom 4 Pro |
| Take-off weight | 1388 g |
| Max. flight time | 30 min |
| **Characteristics** | **Sensors** |
| Camera sensor | 1″ CMOS |
| Resolution | 20 MP |
| Lens | FOV 84°, 24 mm (35 mm format equivalent), f/2.8 to f/11 |
| Max. video recording resolution | 4K at 60 fps |
| Operating frequency | 2.4 GHz/5.8 GHz |
| Geolocation | On-board GPS |

| Parameters | Values |
|---|---|
| Camera model | DJI FC300C |
| F-stop | f/2.8 |
| Focal length | 4 mm |
| Image dimensions | 4000 × 3000 px |
| GSD (ground sampling distance) | 2.56 cm/px |
| Horizontal and vertical resolution | 72 dpi |
| Bit depth | 24 bit |
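The GSD listed in the parameter table depends on the sensor width S_w, the focal length f, the image width W in pixels and the flying height H through the standard photogrammetric relation GSD = (S_w × H) / (f × W). The following is a minimal Python sketch of that relation and its inverse, assuming a nadir-pointing camera over flat terrain. The function names and the example camera values (13.2 mm sensor width, 8.8 mm focal length, 5472 px image width, as commonly quoted for a 1″ sensor) are illustrative assumptions, not the flight or camera parameters used in this study.

```python
"""Ground sampling distance (GSD) for a nadir image over flat terrain."""


def ground_sampling_distance(sensor_width_mm: float, focal_length_mm: float,
                             image_width_px: int, flight_height_m: float) -> float:
    """Return the GSD in cm/px.

    GSD = (sensor width x flight height) / (focal length x image width).
    The millimetre units of sensor width and focal length cancel, so the
    ratio is in metres per pixel before the final conversion.
    """
    if min(sensor_width_mm, focal_length_mm, image_width_px, flight_height_m) <= 0:
        raise ValueError("all inputs must be positive")
    gsd_m_per_px = (sensor_width_mm * flight_height_m) / (focal_length_mm * image_width_px)
    return gsd_m_per_px * 100.0  # metres -> centimetres


def flight_height_for_gsd(sensor_width_mm: float, focal_length_mm: float,
                          image_width_px: int, target_gsd_cm: float) -> float:
    """Invert the GSD relation: flying height (m) needed for a target GSD (cm/px)."""
    if min(sensor_width_mm, focal_length_mm, image_width_px, target_gsd_cm) <= 0:
        raise ValueError("all inputs must be positive")
    return (target_gsd_cm / 100.0) * focal_length_mm * image_width_px / sensor_width_mm


if __name__ == "__main__":
    # Hypothetical camera values for a 1-inch sensor (assumed, not from this study).
    SENSOR_WIDTH_MM = 13.2
    FOCAL_LENGTH_MM = 8.8
    IMAGE_WIDTH_PX = 5472
    gsd = ground_sampling_distance(SENSOR_WIDTH_MM, FOCAL_LENGTH_MM, IMAGE_WIDTH_PX, 100.0)
    print(f"{gsd:.2f} cm/px")  # 2.74 cm/px
    # Illustrative inversion only: height giving 2.56 cm/px with the assumed camera.
    height = flight_height_for_gsd(SENSOR_WIDTH_MM, FOCAL_LENGTH_MM, IMAGE_WIDTH_PX, 2.56)
    print(f"{height:.1f} m")  # 93.4 m
```

Because GSD scales linearly with flying height, halving the height halves the GSD (finer detail) at the cost of more images to cover the same area; the inverse function is the form typically used when planning a flight for a target resolution.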

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Skondras, A.; Karachaliou, E.; Tavantzis, I.; Tokas, N.; Valari, E.; Skalidi, I.; Bouvet, G.A.; Stylianidis, E. UAV Mapping and 3D Modeling as a Tool for Promotion and Management of the Urban Space. Drones 2022, 6, 115. https://doi.org/10.3390/drones6050115
