The Time of Day Is Key to Discriminate Cultivars of Sugarcane upon Imagery Data from Unmanned Aerial Vehicle

Abstract

1. Introduction

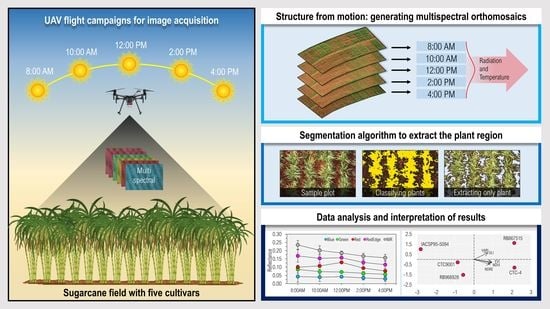

2. Material and Methods

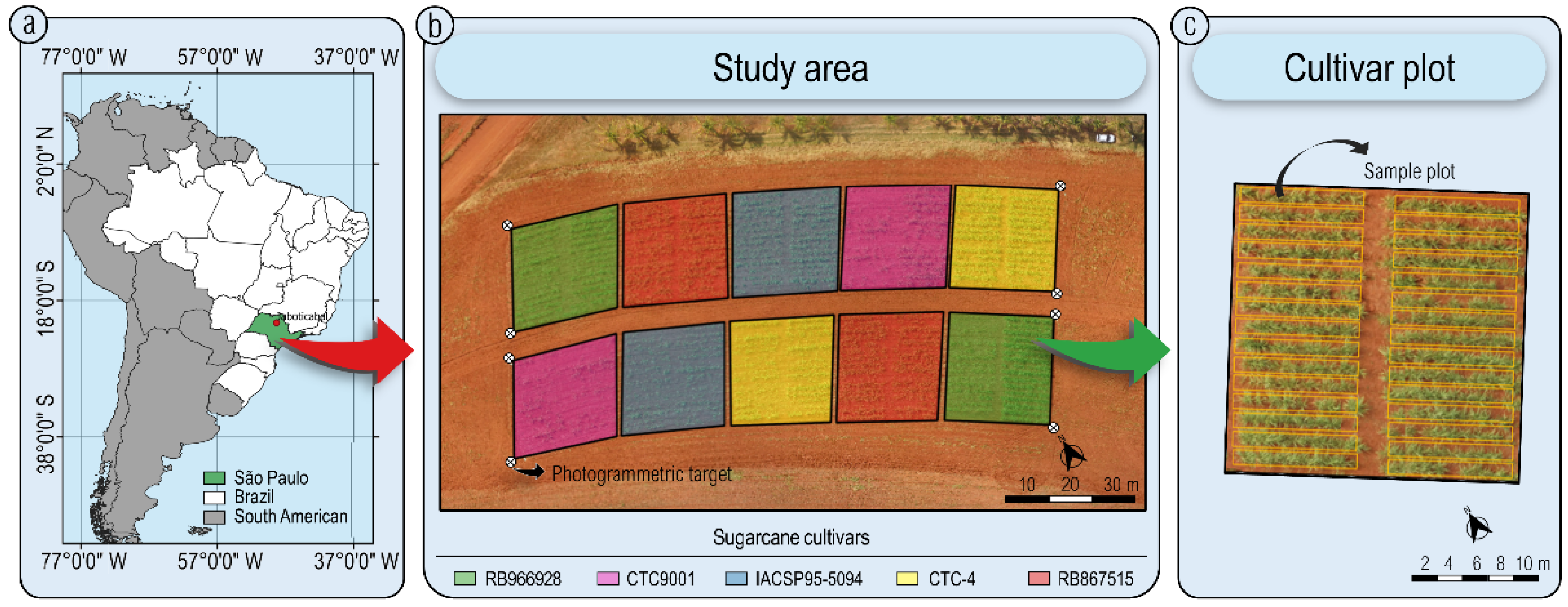

2.1. Study Area

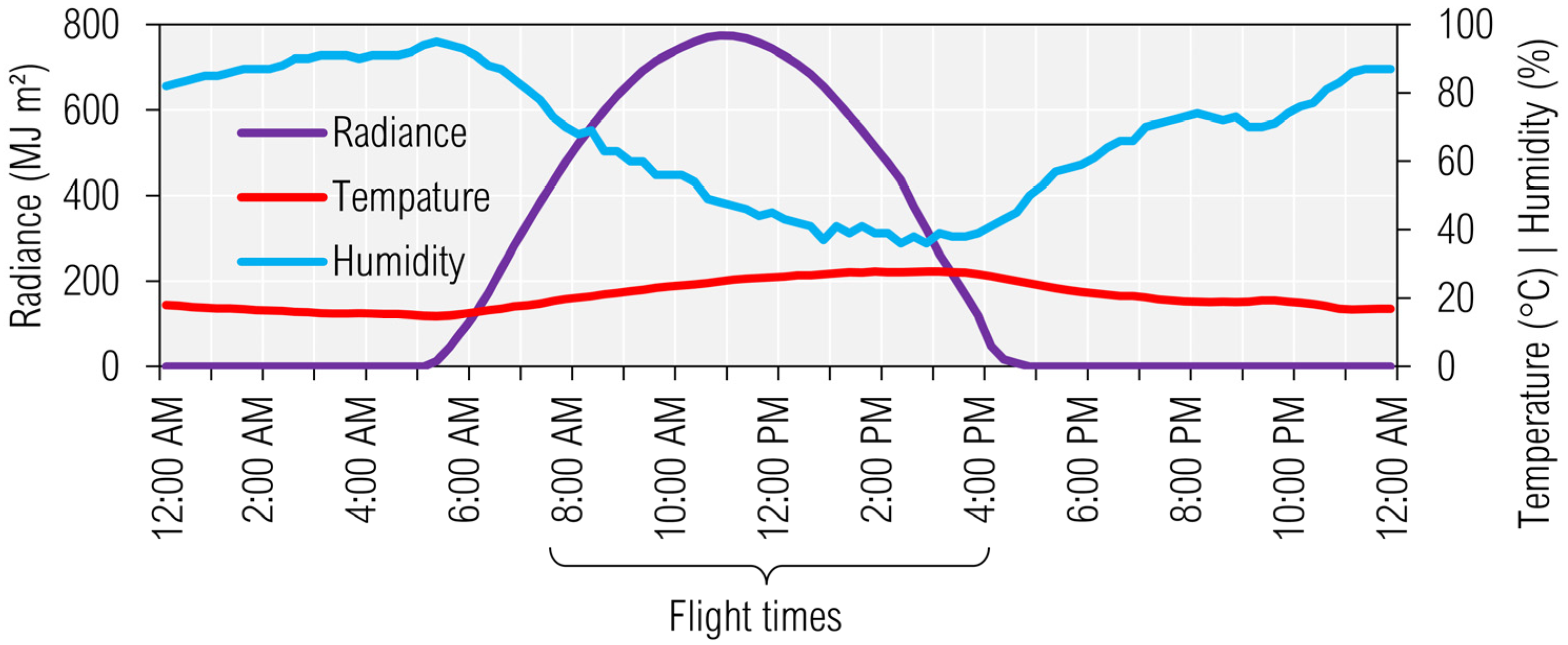

2.2. Data-Acquisition Platform’s Description

2.3. Structure from Motion Photogrammetric Processing

2.4. Image Segmentation

2.5. Spectral Data Extraction

2.6. Data Analysis

3. Results

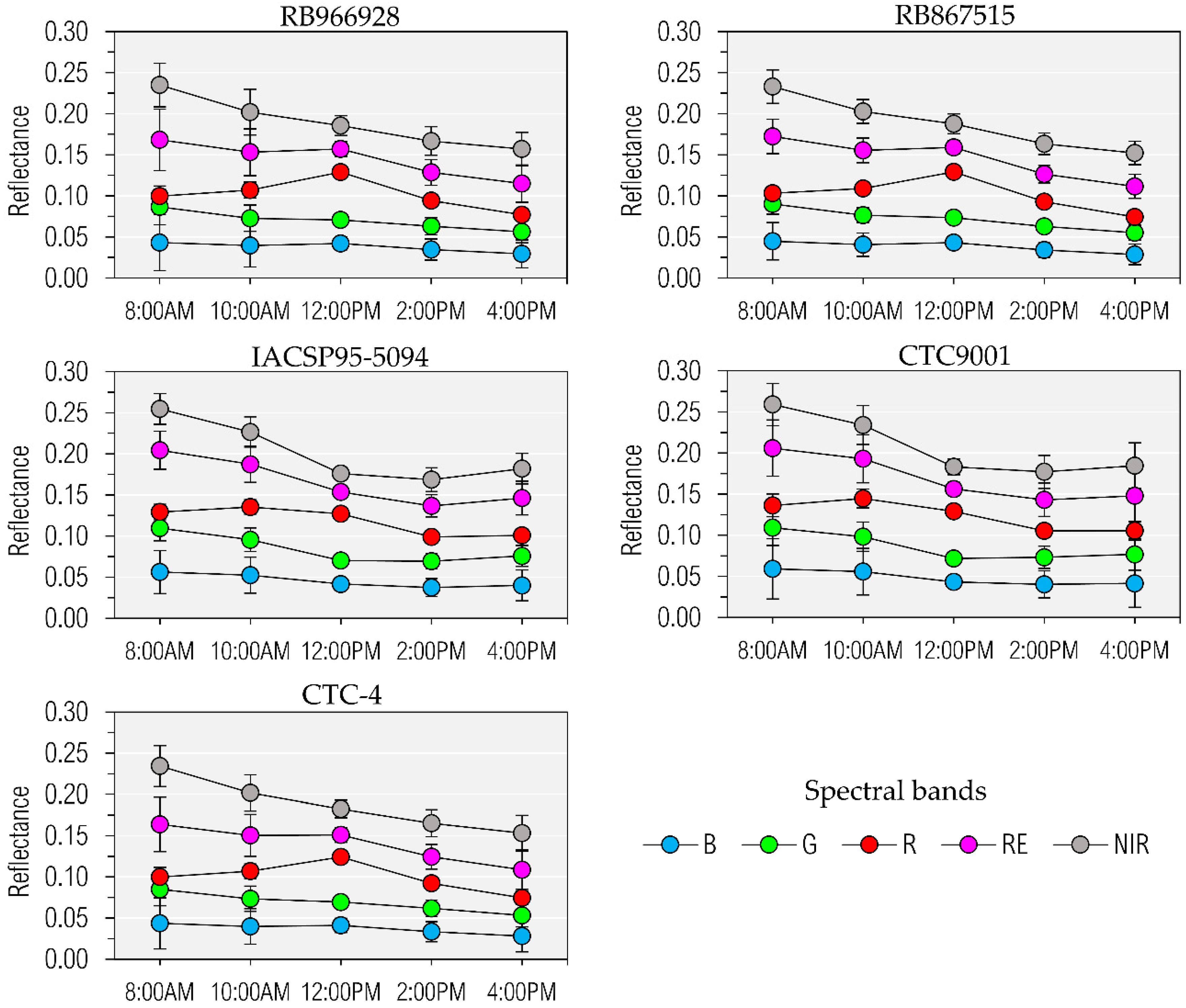

3.1. Spectral Variation throughout the Day

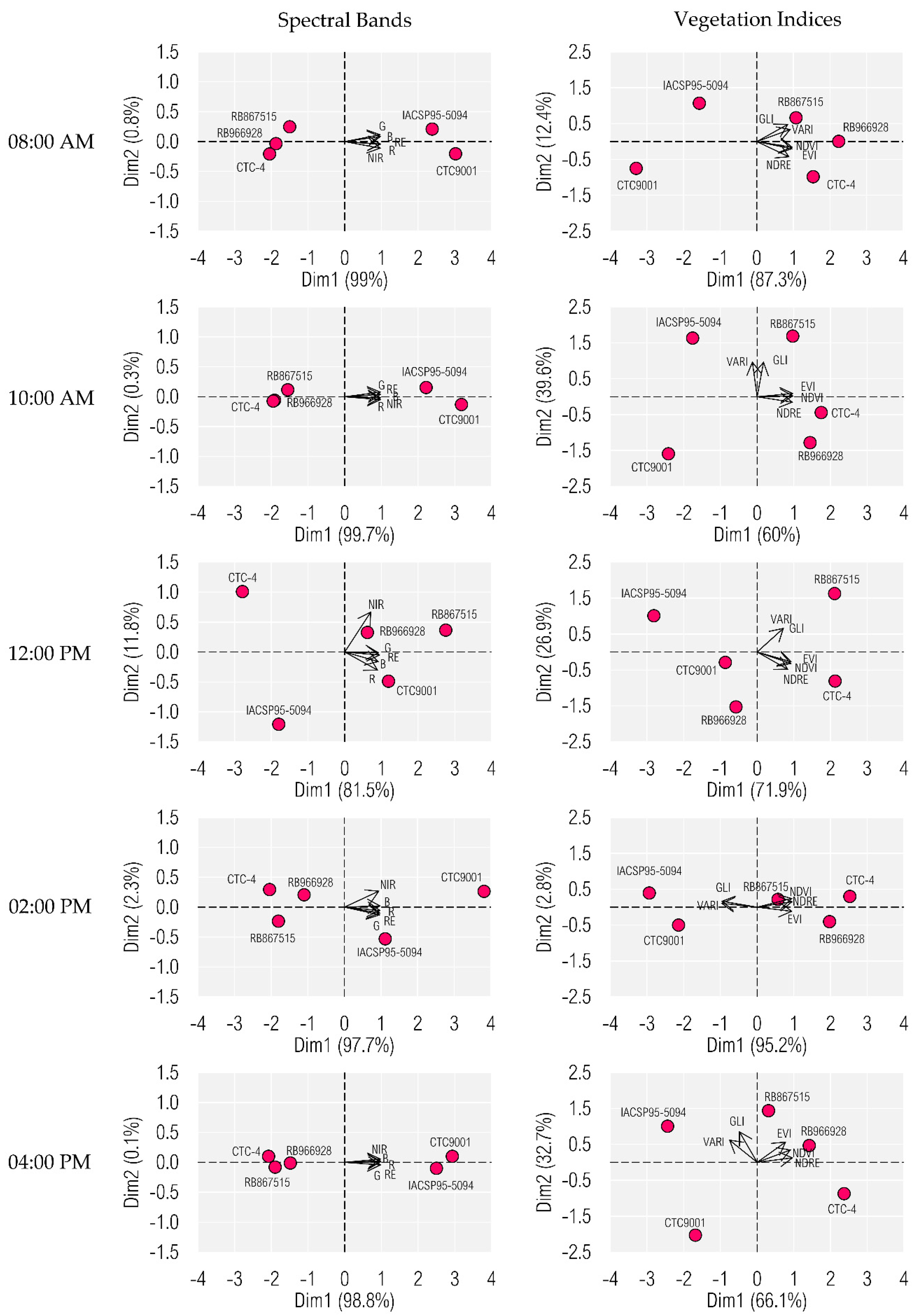

3.2. Discriminating on PCA for Sugarcane Cultivars upon Spectral Bands and Vegetation Indices

4. Discussion

4.1. Spectral Variation throughout the Day

4.2. Discrimination of Cultivars upon Spectral Bands and VIs

4.3. The Value of this Study to Advance the Field of UAVs for Sugarcane and the Ways Forward

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| DEM | digital elevation model |
| EVI | enhanced vegetation index |
| GLI | green leaf index |
| HTP | high-throughput phenotyping |
| NDRE | normalized difference red edge index |
| NDVI | normalized difference vegetation index |
| PAR | photosynthetically active radiation |
| PCA | principal component analysis |
| PBRs | plant breeders' rights |
| RDA | redundancy analysis |
| SfM | structure from motion |
| UAVs | unmanned aerial vehicles |
| VARI | visible atmospherically resistant index |
| VIs | vegetation indices |


| Band Number | Band Region | Center Wavelength (nm) | Bandwidth FWHM (nm) |
|---|---|---|---|
| 1 | Blue | 475 | 20 |
| 2 | Green | 560 | 20 |
| 3 | Red | 668 | 10 |
| 4 | RedEdge | 717 | 10 |
| 5 | NIR | 840 | 40 |
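The center wavelength and FWHM in the table imply an approximate passband for each channel. The sketch below works that arithmetic out, assuming a symmetric passband around the center (an assumption, since the table does not give the filter shape); the names `BANDS` and `band_edges` are illustrative only.

```python
# Band centers and FWHM (nm) copied from the table above.
BANDS = {
    "Blue": (475, 20),
    "Green": (560, 20),
    "Red": (668, 10),
    "RedEdge": (717, 10),
    "NIR": (840, 40),
}


def band_edges(center_nm, fwhm_nm):
    """Return (lower, upper) half-maximum edges in nm.

    Assumes the passband is symmetric around its center wavelength.
    """
    half_width = fwhm_nm / 2
    return center_nm - half_width, center_nm + half_width


if __name__ == "__main__":
    for name, (center, fwhm) in BANDS.items():
        lower, upper = band_edges(center, fwhm)
        print(f"{name}: {lower:.0f}-{upper:.0f} nm")
```

Under this assumption the Red (663 to 673 nm) and RedEdge (712 to 722 nm) passbands do not overlap, which is what allows red edge indices to carry information distinct from the red band.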

| Flight Start | Flight End | Flight Altitude (m) | Number of Images | Side Overlap (%) | Front Overlap (%) | GSD (cm) | Sensor Inclination (°) |
|---|---|---|---|---|---|---|---|
| 8:01 AM | 8:12 AM | 30 | 1955 | 80 | 70 | 2.25 | 90 |
| 10:03 AM | 10:13 AM | 30 | 1960 | 80 | 70 | 2.15 | 90 |
| 12:00 PM | 12:11 PM | 30 | 1965 | 80 | 70 | 2.13 | 90 |
| 2:02 PM | 2:12 PM | 30 | 1965 | 80 | 70 | 2.06 | 90 |
| 4:00 PM | 4:10 PM | 30 | 1950 | 80 | 70 | 2.14 | 90 |
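The GSD column stays close to 2 cm for every flight because altitude was held at a nominal 30 m. As a rough illustration of why, the sketch below applies the standard pinhole relation between altitude and GSD for a nadir-pointing camera. The focal length and pixel pitch in the usage example are hypothetical placeholders, not sensor specifications reported here, and `gsd_cm` is an illustrative name.

```python
def gsd_cm(altitude_m, focal_length_mm, pixel_pitch_um):
    """Ground sampling distance in cm per pixel for a nadir-pointing camera.

    Uses the pinhole relation GSD = altitude * pixel_pitch / focal_length,
    ignoring terrain relief and lens distortion.
    """
    pixel_pitch_m = pixel_pitch_um * 1e-6
    focal_length_m = focal_length_mm * 1e-3
    return altitude_m * pixel_pitch_m / focal_length_m * 100.0


if __name__ == "__main__":
    # Hypothetical optics: 5.4 mm focal length, 3.75 um pixel pitch.
    print(f"GSD at 30 m: {gsd_cm(30, 5.4, 3.75):.2f} cm")
```

Because GSD scales linearly with height above the canopy, small differences in effective flying height or canopy elevation between flights would be enough to produce the spread seen in the table.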

| VI | Nomenclature | Equation |
|---|---|---|
| NDVI | Normalized Difference Vegetation Index | |
| NDRE | Normalized Difference Red Edge Index | |
| EVI | Enhanced Vegetation Index | |
| VARI | Visible Atmospherically Resistant Index | |
| GLI | Green Leaf Index | |
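The five indices listed in the table are commonly computed from per-band reflectance. The sketch below uses their widely cited standard forms, which is an assumption: the coefficients may differ from the equations applied in this study. Function and argument names are illustrative, and inputs are taken to be reflectances on a 0 to 1 scale.

```python
def ndvi(nir, red):
    """Normalized Difference Vegetation Index (standard form)."""
    return (nir - red) / (nir + red)


def ndre(nir, rededge):
    """Normalized Difference Red Edge Index (standard form)."""
    return (nir - rededge) / (nir + rededge)


def evi(nir, red, blue):
    """Enhanced Vegetation Index with the usual coefficients (2.5, 6, 7.5, 1)."""
    return 2.5 * (nir - red) / (nir + 6.0 * red - 7.5 * blue + 1.0)


def vari(green, red, blue):
    """Visible Atmospherically Resistant Index (standard form)."""
    return (green - red) / (green + red - blue)


def gli(green, red, blue):
    """Green Leaf Index (standard form)."""
    return (2.0 * green - red - blue) / (2.0 * green + red + blue)


if __name__ == "__main__":
    # Made-up reflectances for a green canopy pixel, for illustration only.
    blue, green, red, rededge, nir = 0.04, 0.08, 0.05, 0.20, 0.45
    print(f"NDVI={ndvi(nir, red):.3f}  NDRE={ndre(nir, rededge):.3f}")
    print(f"EVI={evi(nir, red, blue):.3f}  VARI={vari(green, red, blue):.3f}")
    print(f"GLI={gli(green, red, blue):.3f}")
```

The functions use only arithmetic operators, so they should also work elementwise on NumPy arrays of band reflectance. Pixels where a denominator is zero would need to be masked beforehand.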

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Barbosa Júnior, M.R.; Tedesco, D.; Carreira, V.d.S.; Pinto, A.A.; Moreira, B.R.d.A.; Shiratsuchi, L.S.; Zerbato, C.; Silva, R.P.d. The Time of Day Is Key to Discriminate Cultivars of Sugarcane upon Imagery Data from Unmanned Aerial Vehicle. Drones 2022, 6, 112. https://doi.org/10.3390/drones6050112
