The Use of Drones to Determine Rodent Location and Damage in Agricultural Crops

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Area

2.2. Rodent Trapping

2.3. Rodent Burrows

2.4. Crop Biomass and Yield Sampling

2.5. Drone-Based Remote Sensing

2.6. Data Analyses

2.7. Statistical Processing

3. Results

3.1. Rodent Trapping

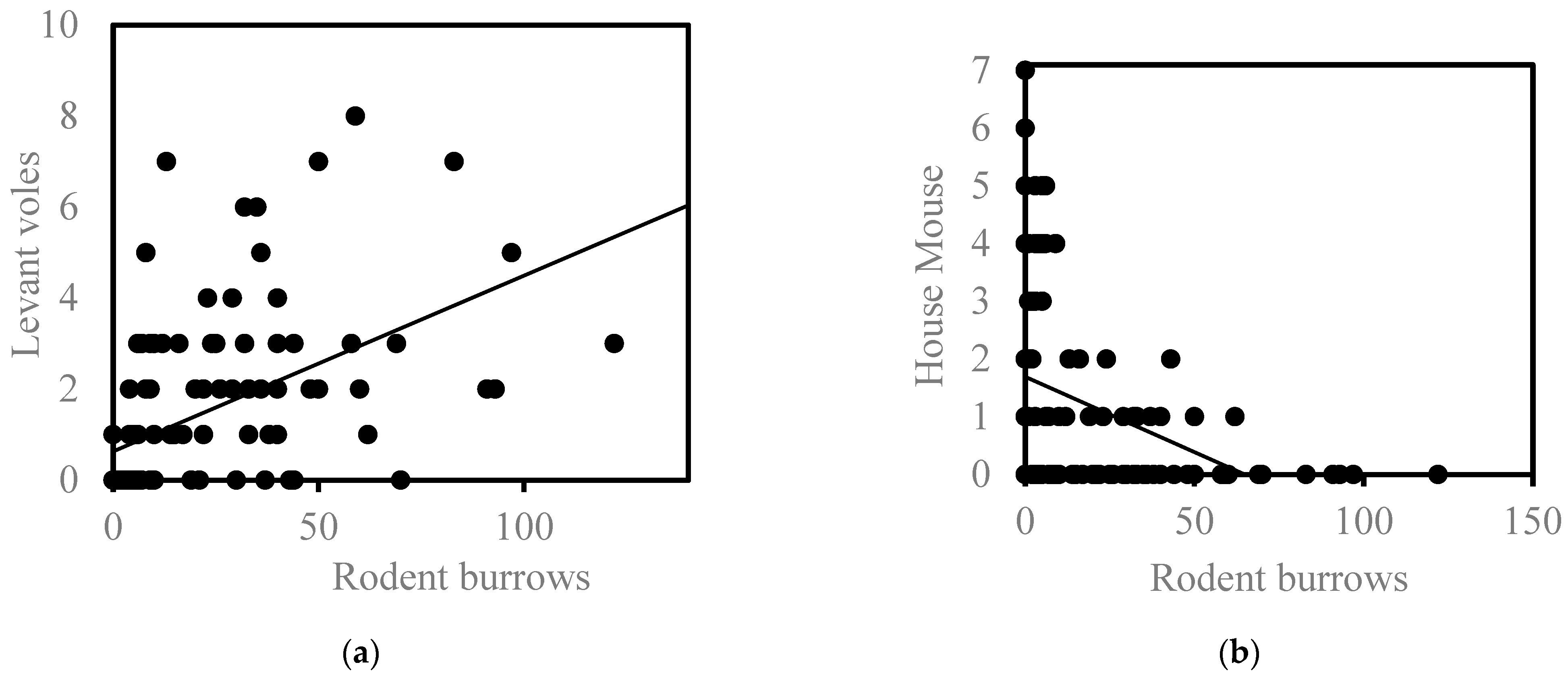

3.2. Relationship between the Number of Rodent Burrows and the Number of Rodents Trapped

3.3. Relationship between Crop Yield and Biomass

3.4. Relationship of Rodent Burrow Number to NDVI and Biomass

4. Discussion

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Surface | MSE (mW m⁻² sr⁻¹ nm⁻¹) | SAM (rad) |
|---|---|---|
| SVC* black 100% | 52 | 0.01 |
| SVC grey 50% | 34 | 0.004 |
| SVC grey 25% | 22 | 0.006 |
| SVC grey 17% | 18 | 0.009 |
| SVC white 100% (natural gravel) | 42 | 0.016 |
| Crop | 64 | 0.03 |
| Soil | 24 | 0.007 |

\* SVC: supervised vicarious calibration.
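
The table above reports agreement between paired spectra as mean squared error (MSE) and spectral angle (SAM). The text does not state how these were computed. The following is a minimal sketch that assumes the standard definitions:

* MSE averages the squared band-by-band differences.
* SAM is the angle θ = arccos(t·r / (‖t‖‖r‖)) between the two spectra, each treated as a vector.

The function names and example spectra are hypothetical, not values from the study.

```python
import math
from typing import Sequence


def _check(reference: Sequence[float], estimate: Sequence[float]) -> None:
    """Both spectra must be non-empty and sampled at the same bands."""
    if len(reference) != len(estimate) or len(reference) == 0:
        raise ValueError("spectra must be non-empty and have the same number of bands")


def mse(reference: Sequence[float], estimate: Sequence[float]) -> float:
    """Mean squared error: average of squared band-by-band differences."""
    _check(reference, estimate)
    return sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)


def spectral_angle(reference: Sequence[float], estimate: Sequence[float]) -> float:
    """Spectral Angle Mapper (SAM): angle in radians between two spectra as vectors."""
    _check(reference, estimate)
    dot = sum(r * e for r, e in zip(reference, estimate))
    norm_r = math.sqrt(sum(r * r for r in reference))
    norm_e = math.sqrt(sum(e * e for e in estimate))
    if norm_r == 0 or norm_e == 0:
        raise ValueError("SAM is undefined for an all-zero spectrum")
    cos_theta = dot / (norm_r * norm_e)
    # Clamp rounding error so acos never receives a value outside [-1, 1].
    return math.acos(max(-1.0, min(1.0, cos_theta)))


# Hypothetical three-band radiance spectra (not measurements from the study).
reference = [10.0, 20.0, 30.0]
brighter = [20.0, 40.0, 60.0]  # same spectral shape, twice the radiance

print(mse(reference, brighter))             # 1400/3, about 466.67
print(spectral_angle(reference, brighter))  # close to 0: the shape is unchanged
```

SAM depends only on the direction of the spectral vector. A uniform change in brightness therefore leaves SAM near zero while MSE grows. The two metrics capture different kinds of error, which is why they are commonly reported together.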

| | MAE (×10⁻³) | MAPE (%) |
|---|---|---|
| GAN model | 18.27 | 1.83 |
| NDVI | 19.45 | |
| Biomass | 22.83 | |
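
The sketch below assumes that the MAE and MAPE in the second table follow their standard definitions:

* MAE is the mean absolute difference between observed and predicted values.
* MAPE expresses each absolute difference as a percentage of the observed value, then averages.

It also assumes that the 10⁻³ scaling in the MAE column header means the tabulated MAE was multiplied by 1000. The function names and NDVI values are hypothetical, invented for illustration.

```python
from typing import Sequence


def mae(observed: Sequence[float], predicted: Sequence[float]) -> float:
    """Mean absolute error, in the units of the input values."""
    if len(observed) != len(predicted) or len(observed) == 0:
        raise ValueError("inputs must be non-empty and the same length")
    return sum(abs(o - p) for o, p in zip(observed, predicted)) / len(observed)


def mape(observed: Sequence[float], predicted: Sequence[float]) -> float:
    """Mean absolute percentage error, in percent; observed values must be non-zero."""
    if len(observed) != len(predicted) or len(observed) == 0:
        raise ValueError("inputs must be non-empty and the same length")
    if any(o == 0 for o in observed):
        raise ValueError("MAPE is undefined when an observed value is zero")
    return 100.0 * sum(abs((o - p) / o) for o, p in zip(observed, predicted)) / len(observed)


# Hypothetical observed vs. predicted NDVI values (not data from the study).
observed_ndvi = [0.50, 0.60, 0.80]
predicted_ndvi = [0.52, 0.57, 0.80]

# Scale MAE by 1e3 to match the assumed x10^-3 convention of the table.
print(f"MAE  = {mae(observed_ndvi, predicted_ndvi) * 1e3:.2f} x 10^-3")
print(f"MAPE = {mape(observed_ndvi, predicted_ndvi):.2f} %")
```

MAE stays in the units of the quantity being predicted. MAPE is unitless, so it allows comparison across variables with different scales, such as NDVI and biomass. However, MAPE becomes unstable when observed values approach zero.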


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Keshet, D.; Brook, A.; Malkinson, D.; Izhaki, I.; Charter, M. The Use of Drones to Determine Rodent Location and Damage in Agricultural Crops. Drones 2022, 6, 396. https://doi.org/10.3390/drones6120396
