1. Introduction

Bridges are core infrastructure directly tied to public safety and require continuous maintenance and investment at the government level. Effective bridge maintenance means preventing damage by taking precautions against the factors that shorten a bridge's service life. Missing the critical window for maintenance can impose a heavy economic burden for repair and performance improvement, so it is economical to respond early when defects, damage, or deterioration are found through bridge inspection. Recently, concerns about bridge safety have grown owing to the aging and collapse of bridges, and large budgets have been invested to address them. In the U.S., the MAP-21 (Moving Ahead for Progress in the 21st Century) [1] policy was established in 2012 to manage infrastructure based on performance evaluation, and the FAST Act (Fixing America’s Surface Transportation Act) [2] was enacted in December 2015. Building on MAP-21, the FAST Act consolidated transportation investment programs to reduce redundancy and increase efficiency, and it increased investment in maintaining existing infrastructure. In Japan, maintenance guidelines for improving the safety and performance of bridges were developed through the ‘Management Council for Infrastructure Strategy’ in 2013, and the ‘Infrastructure Systems Export Strategy’ [3] was proposed in 2014 to maintain bridges. In the UK and Germany, the ‘National Infrastructure Plan (2010)’ [4] and ‘The 2030 Federal Transport Infrastructure Plan (2016)’ [5], respectively, were established for infrastructure improvement and management. In Korea, the ‘Special Act on the Safety Control of Public Structures’ was established in 1997 to conduct safety inspections and maintenance of bridges. Thus, many countries are investing in infrastructure management, and studies on efficient bridge maintenance are under way.

Recently, the most active research in the field of bridge maintenance is to evaluate the condition of the bridge quickly and accurately using an unmanned aerial vehicle (UAV) and imaging devices. The unmanned aircraft system (UAS) which consists of a UAV, an imaging device for damage collection, and sensors for system operation, has many advantages and is expected to solve limitations of the conventional manpower-based bridge inspection method. In other words, a UAS makes the bridge inspection process safer and faster and makes it possible to efficiently manage the bridge. A UAS can approach the top of the tower, cables, and spot of members, which are difficult to reach, without vehicle control. In addition, collected images can be detected and objectively quantified using image processing techniques (IPTs) and deep learning to ensure the reliability of bridge inspection. The main advantage of IPTs is the ability to detect and classify most of the damages (e.g., crack, corrosion, and efflorescence) on the surface of the bridge. Damage detection using IPTs has been mainly studied for cracks, which are the most structurally influential indicators of concrete bridges among various damages. Cracks on the image represent a sharp change of image brightness and can be detected mathematically through edge-detection techniques [

6,

7]. In order to identify cracks in bridges, Abdel-Qader et al. [

8] compared the performance by applying four edge-detection techniques, which are fast Haar transform (FHT), fast Fourier transform, Sobel, and Canny. Hutchinson et al. [

9] detected the crack by statistically finding the optimal parameter set of the edge-detection algorithms and Nguyen et al. [

10] proposed edge detection using 2D geometric features for cracks with tree-like branches. However, the traditional methods using IPTs require designing filters or finding optimal parameters for detecting cracks. Moreover, there is a limitation in that it is difficult to quickly analyze a large number of images collected from a vision sensor with a UAV. Therefore, recently, deep learning-based damage detections that can classify specific objects and estimate their location in the images without image filters and parameters have been widely used. Deep learning has already been found to exceed the performance of IPTs in detecting objects and estimating their location using images. Vision-based structure damage detection using deep architecture is accompanied by advances in the field of deep learning. Initially, convolutional neural networks (CNNs) using sliding windows were used for crack detection [

11,

12]. However, since CNN requires high computational costs, it was difficult to detect quickly. In addition, early research studies were time-consuming and required the cost to collect enough damage images for the training of deep learning. To overcome this problem, a deep learning technique based on transfer learning that can update a new object using a well-trained network from a large image set has been proposed [

13]. After that, various damages were considered, and different deep learning networks were used depending on the purposes (i.e., high accuracy, fast speed, low computational cost, segmentation, etc.) [

14,

15,

16,

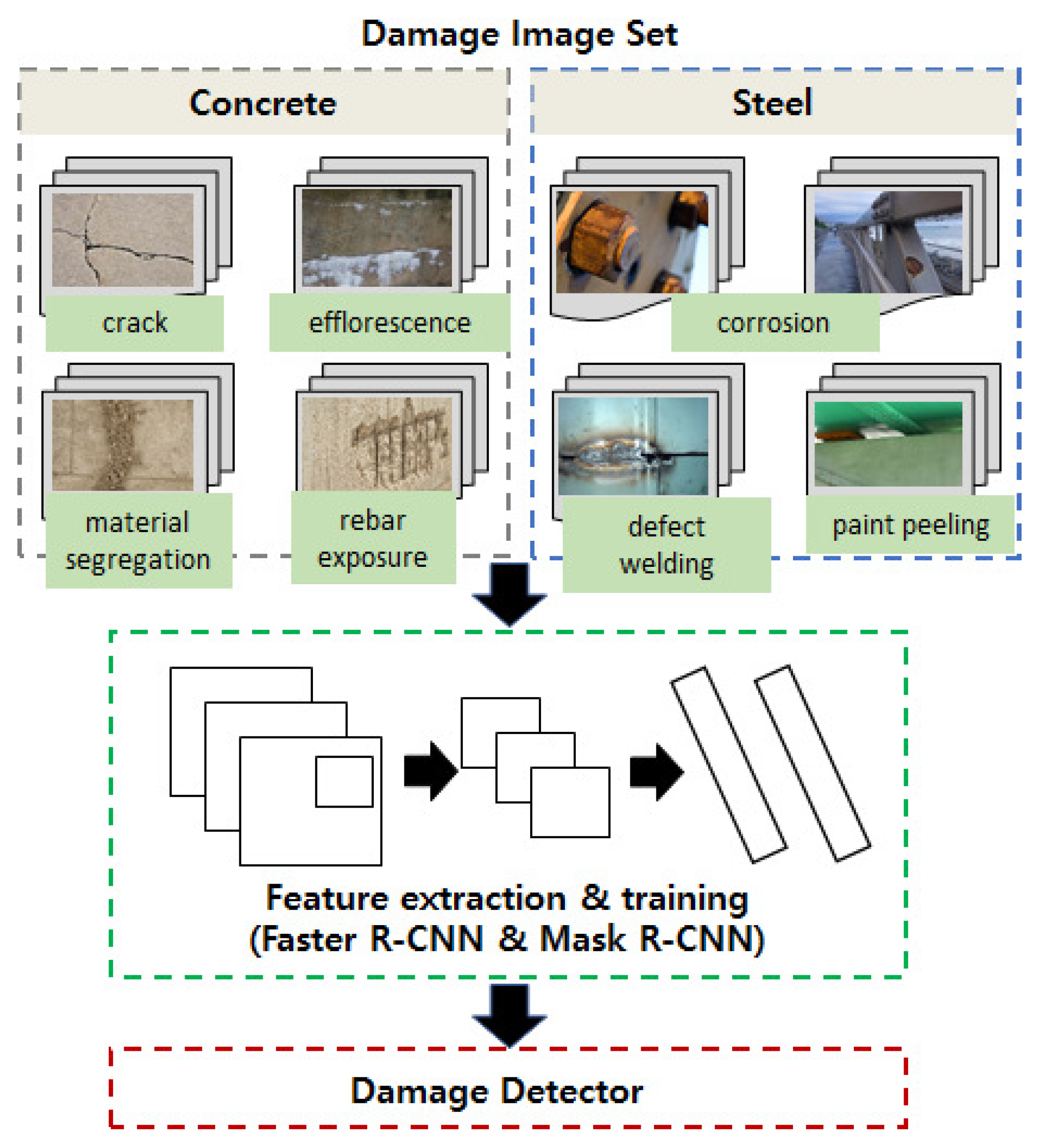

17].
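To make the edge-detection idea concrete, the following is a minimal sketch (not the method of any cited study) that applies the Sobel operator to a grayscale image held in a NumPy array and thresholds the gradient magnitude. The synthetic image, the helper names `filter3x3` and `sobel_edges`, and the `threshold` value are hypothetical choices for illustration.

```python
import numpy as np

# 3x3 Sobel kernels: SOBEL_X responds to horizontal intensity changes, SOBEL_Y to vertical ones.
SOBEL_X = np.array([[-1, 0, 1],
                    [-2, 0, 2],
                    [-1, 0, 1]], dtype=float)
SOBEL_Y = SOBEL_X.T


def filter3x3(img: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Cross-correlate img with a 3x3 kernel over the valid region (output is 2 px smaller)."""
    h, w = img.shape
    out = np.zeros((h - 2, w - 2))
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * img[dy:dy + h - 2, dx:dx + w - 2]
    return out


def sobel_edges(img: np.ndarray, threshold: float) -> np.ndarray:
    """Boolean edge map: True where the Sobel gradient magnitude exceeds threshold.

    `threshold` is a hand-tuned parameter; border pixels are left False.
    """
    gx = filter3x3(img, SOBEL_X)
    gy = filter3x3(img, SOBEL_Y)
    edges = np.zeros(img.shape, dtype=bool)
    edges[1:-1, 1:-1] = np.hypot(gx, gy) > threshold
    return edges


# Synthetic example: bright concrete (200) with a one-pixel-wide dark crack (50) in column 5.
img = np.full((10, 10), 200.0)
img[:, 5] = 50.0
edges = sobel_edges(img, threshold=100.0)
print(np.where(edges.any(axis=0))[0].tolist())  # columns flagged as edges: [4, 6]
```

The edge map flags the two flanks of the dark line (columns 4 and 6) rather than its centerline, and a different `threshold` would change which pixels are flagged; this dependence on hand-tuned parameters is the limitation of IPT-based methods noted above.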

The need for efficient bridge maintenance and the improved performance of image analysis have spurred research on UAS-based bridge inspection, and pilot tests have been conducted in many countries [18,19,20,21,22]. In early studies, the UAS approached a specific point where damage was suspected, and only a single image was captured with a vision sensor. Kim et al. [23] used a commercial drone to inspect an aging concrete bridge following a general procedure (i.e., background model generation through a pre-flight, crack detection using deep learning, crack quantification using IPTs, and damage display). In recent years, bridge inspection studies have tackled more complex and difficult tasks using UASs equipped with various sensors, and in doing so they have faced major challenges that were not anticipated in early UAS-based inspections. Jung et al. [24] divided UAV-based bridge inspection procedures into pre-inspection, main inspection, and post-inspection phases and considered the role of each phase. Other UAS-based bridge inspection studies include UAV location estimation in GPS-shaded areas, 3D background model generation, and image quality improvement [25,26,27]. However, few studies have directly compared the results of manpower-based and UAS-based bridge inspection. Yoon et al. [28] compared UAS-based bridge inspection results with human visual inspection results using three-dimensional image coordinates, but focused only on comparing damage locations.

This paper presents the results of UAS-based bridge inspection and condition assessment conducted in a research project. The UAS-based bridge inspection technologies developed in the project form the basis of the UAS-based Bridge Management System (U-BMS), which is intended for the government's bridge management department to perform bridge inspection tasks and make decisions. On the hardware side, the project developed a UAV for bridge inspection, a ground control system (GCS), an autonomous flying platform using various sensors (i.e., IMU, GPS, camera, and 3D LiDAR), and an IoT-based image coordinate estimation system. On the software side, it developed damage detection and classification using IPTs and deep learning, 3D model generation using the point cloud method, image quality evaluation and improvement, and bridge condition assessment techniques. This paper focuses on the bridge inspection results rather than on the hardware.

This paper makes the following contributions to UAS-based bridge inspection and condition assessment. The primary contribution is that all stages of the UAV-based bridge inspection procedure, from planning to condition assessment, were carried out. As UAV-based bridge inspection has attracted attention, many studies have been conducted and component technologies have been developed; however, limitations remain in putting these technologies into practical use on in-service bridges [24,29,30,31]. This research project addressed these challenges through field tests, which is significant for automating and integrating the bridge inspection procedure. Another contribution is the comparison of UAS-based automatic bridge inspection results with human visual inspection results. Efficient bridge management requires objective structural damage detection. In this study, damage on the target members of the bridge was measured, and the UAS-based automatic damage detection results and appearance damage maps were compared with existing visual inspection results. This comparison confirmed that the UAS-based bridge inspection and condition assessment method yields objective results, and such results are expected to support the practical UAS-based inspection of structures in the future. In short, the novelty of this study lies in deriving results for the entire inspection process by performing field tests for each stage of the UAS-based inspection procedure, and in confirming its performance against human-based inspection results. Finally, this study suggests a method to manage UAS-based bridge inspection data and utilize inspection history.

The rest of this paper is organized as follows. Section 2 briefly describes the UAS-based bridge inspection procedure and the key techniques considered in this study. Section 3 presents the results of the pilot test. Section 4 discusses the comparison with manpower-based inspection. Finally, Section 5 presents the conclusions.

3. Field Test

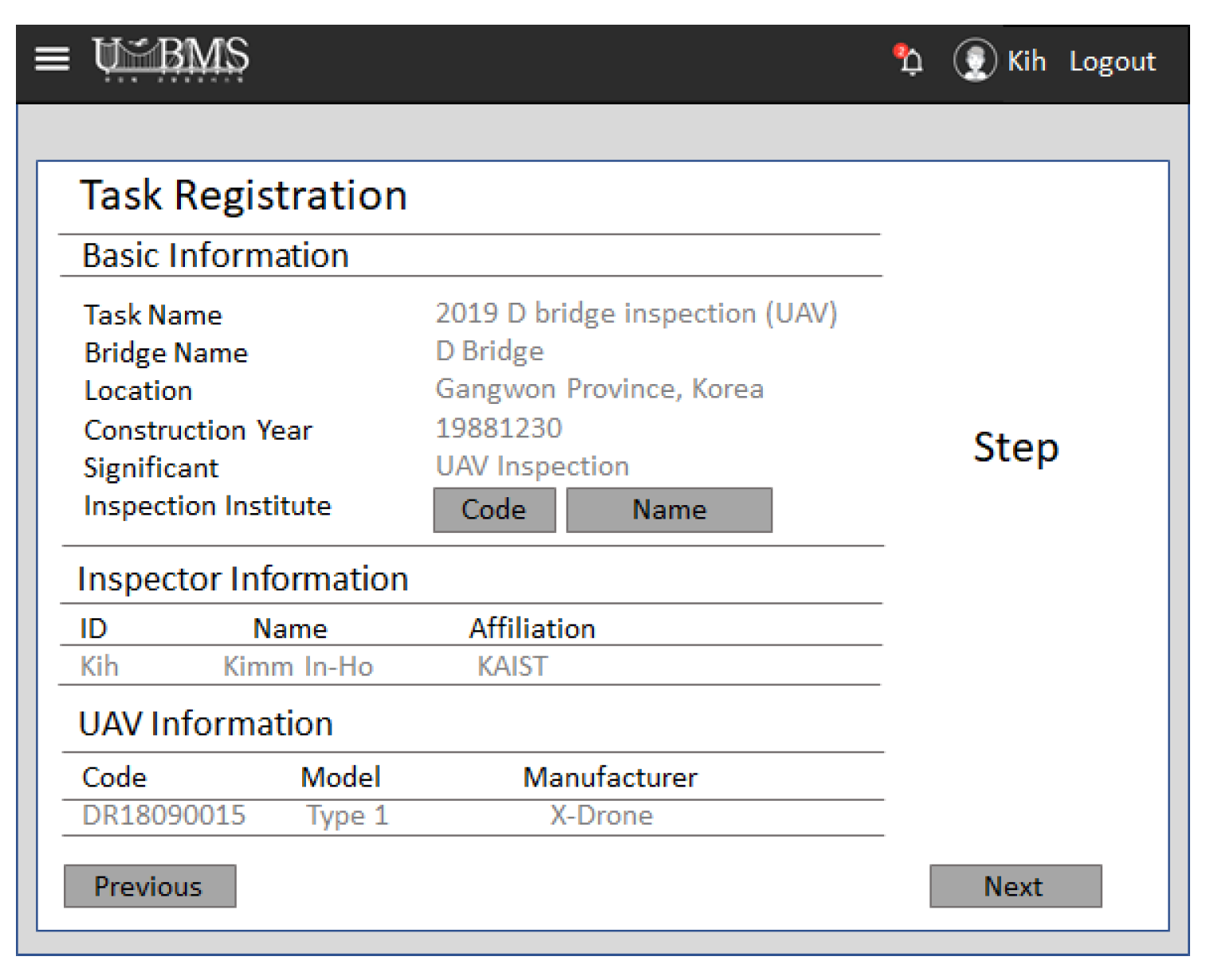

This section discusses the results of the field test performed in this study. Considering accessibility and safety, the D Bridge in Gangwon Province, Korea, was selected as the target bridge, as shown in Figure 6. The D Bridge combines 10 steel box girders and 10 PSC box girders, with a width of 11.0 m and a total length of 2000 m. The test-bed spans are where the PSC and steel box girders connect, as shown in the figure, and the corresponding piers (i.e., piers 29–31) were targeted. Tasks are defined in the U-BMS, where basic task information (i.e., target bridge, date, and inspection type), inspector and UAS information, and the inspection scope can be entered, as shown in Figure 7. The preliminary information analysis and preparation step is carried out according to whether bridge information exists, and the inspection scenario and flight path are planned.

The D Bridge was constructed approximately 30 years ago, but it is a well-managed B-grade bridge whose records include appearance inspection results. Although drawings and damage information for the bridge existed, a preliminary flight was performed to obtain a 3D/2D model of the bridge, assuming that no bridge information was available. In the preliminary flight, images for 3D model generation were collected with longitudinal and lateral overlap, and, as shown in Figure 8, a 3D point cloud-based background model of the bridge was generated using Pix4D. The 3D point cloud technique extracts and matches feature points from geo-tagged images containing UAS information (i.e., location and pose), and the 3D model is then built by meshing and texturing. Digitizing the 3D/2D background model of the bridge is essential for managing data and making decisions in the U-BMS, and the model can also be used to draw a bridge damage map. The final step of the pre-inspection phase is preparation for the main inspection, in which the UAS-based bridge inspection scenario and flight path are confirmed. If a record of a past UAS-based bridge inspection exists in the U-BMS, it can be reused or modified; otherwise, a new record must be created. Lastly, the UAS and the flight path are set up before the main flight. UAS setup includes checking the sensors installed on the UAV for flight and setting up the camera. In this study, a high-resolution full-frame mirrorless camera (Sony alpha 9) with a ZEISS Batis 85 mm f/1.8 lens was used. The camera and lens were selected by considering the minimum pixel size achievable when the distance between the target member and the UAS is 2 to 3 m. In addition, JPEG and RAW files were stored simultaneously on a high-speed SD memory card so that image quality could be improved later. For mapping and localization, the 3D point cloud map obtained with a 3D LiDAR during the preliminary flight was loaded into the Robot Operating System (ROS). A 3D path was then designated considering the size of the UAS and obstacles. Finally, flight preparation is completed after the inspection path is transmitted to the mini PC mounted on the UAS over the LTE network. The main flight proceeds according to the UAV location estimation technique described in Section 2.2 and the optimal path set in advance.
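The camera and lens choice can be checked with the usual pinhole-camera relation: the object-space size of one pixel equals the pixel pitch multiplied by the working distance and divided by the focal length. The sketch below assumes nominal full-frame values of 35.6 mm sensor width and 6000 pixels across for the camera; these figures, and the function name `pixel_size_mm`, are assumptions rather than values reported in this study.

```python
def pixel_size_mm(sensor_width_mm: float, image_width_px: int,
                  focal_length_mm: float, distance_mm: float) -> float:
    """Object-space size of one pixel (mm/pixel) for a fronto-parallel target, pinhole model."""
    pixel_pitch_mm = sensor_width_mm / image_width_px
    return pixel_pitch_mm * distance_mm / focal_length_mm


# Assumed nominal full-frame sensor: 35.6 mm wide, 6000 px across; 85 mm lens as in the text.
for distance_m in (2.0, 3.0):
    gsd = pixel_size_mm(35.6, 6000, 85.0, distance_m * 1000.0)
    print(f"{distance_m:.0f} m -> {gsd:.3f} mm/pixel")
```

Under these assumptions it prints about 0.140 mm/pixel at 2 m and 0.209 mm/pixel at 3 m, so a 0.3 mm crack spans roughly two pixels at the nearer distance and under one and a half at the farther one, which illustrates why the standoff distance matters.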

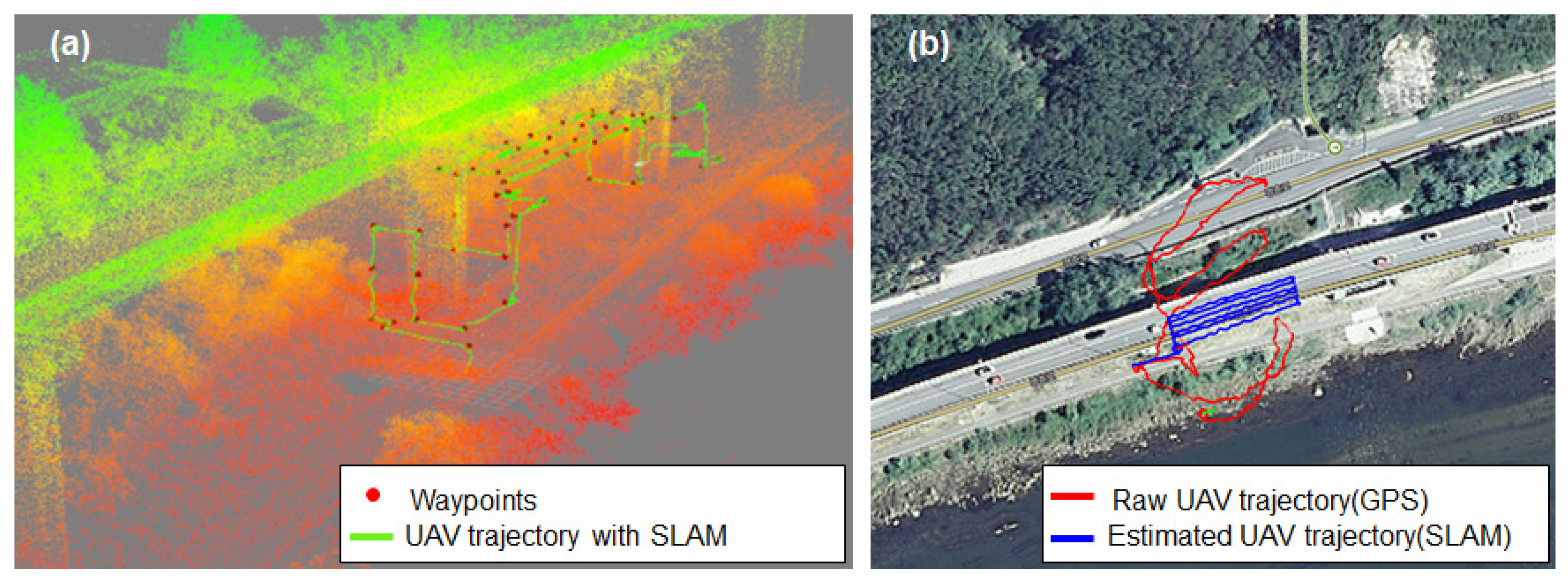

Figure 9 shows the UAV trajectory in the field test. Figure 9a confirms, based on the 3D point cloud map, that the UAV flew along the predefined waypoints. In general, GPS reception is poor beneath a bridge deck, as the red line in Figure 9b shows. Nevertheless, the UAV flew stably under the deck because its location was estimated using SLAM.

Figure 9b compares the raw GPS trajectory with the location estimated by SLAM under the deck slab of the D Bridge. Where GPS reception is good, the raw GPS data and the SLAM-based location estimates are similar, and the error is small even when the pre-built graph is used. Under the bridge, however, GPS shading occurs, so the red line (raw GPS) deviates from the flight path, whereas the blue line (SLAM-based location estimate) shows the UAV stably on the flight path.
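The agreement visible in Figure 9b can be summarized numerically as the horizontal distance between raw GPS fixes and SLAM estimates taken at the same timestamps. This is a minimal sketch assuming both trajectories are already time-synchronized and expressed in one local metric frame; the function name and the coordinates are hypothetical. Because the SLAM output is itself an estimate, the numbers measure disagreement between the two sources, not absolute error.

```python
import numpy as np


def trajectory_deviation(gps_xy: np.ndarray, est_xy: np.ndarray) -> tuple[float, float]:
    """RMSE and maximum horizontal distance (m) between paired GPS fixes and estimated positions.

    Assumes both (N, 2) arrays are time-synchronized (row i = same timestamp) and
    expressed in the same local metric frame, e.g. east/north in meters.
    """
    d = np.linalg.norm(gps_xy - est_xy, axis=1)
    return float(np.sqrt(np.mean(d ** 2))), float(d.max())


# Hypothetical values: SLAM estimates along a straight path, GPS with 0.1 m noise in open sky
# and multi-meter errors under the deck.
slam = np.array([[0.0, 0.0], [1.0, 0.0], [2.0, 0.0], [3.0, 0.0]])
gps_open = slam + np.array([[0.1, 0.0], [0.0, 0.1], [-0.1, 0.0], [0.0, -0.1]])
gps_under = slam + np.array([[0.0, 0.5], [1.5, 2.0], [0.0, 3.0], [-4.0, 0.0]])
print(trajectory_deviation(gps_open, slam))
print(trajectory_deviation(gps_under, slam))
```

Reporting both RMSE and maximum deviation separates a uniformly noisy segment from one with a few large jumps, which is typical of intermittent GPS shading.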

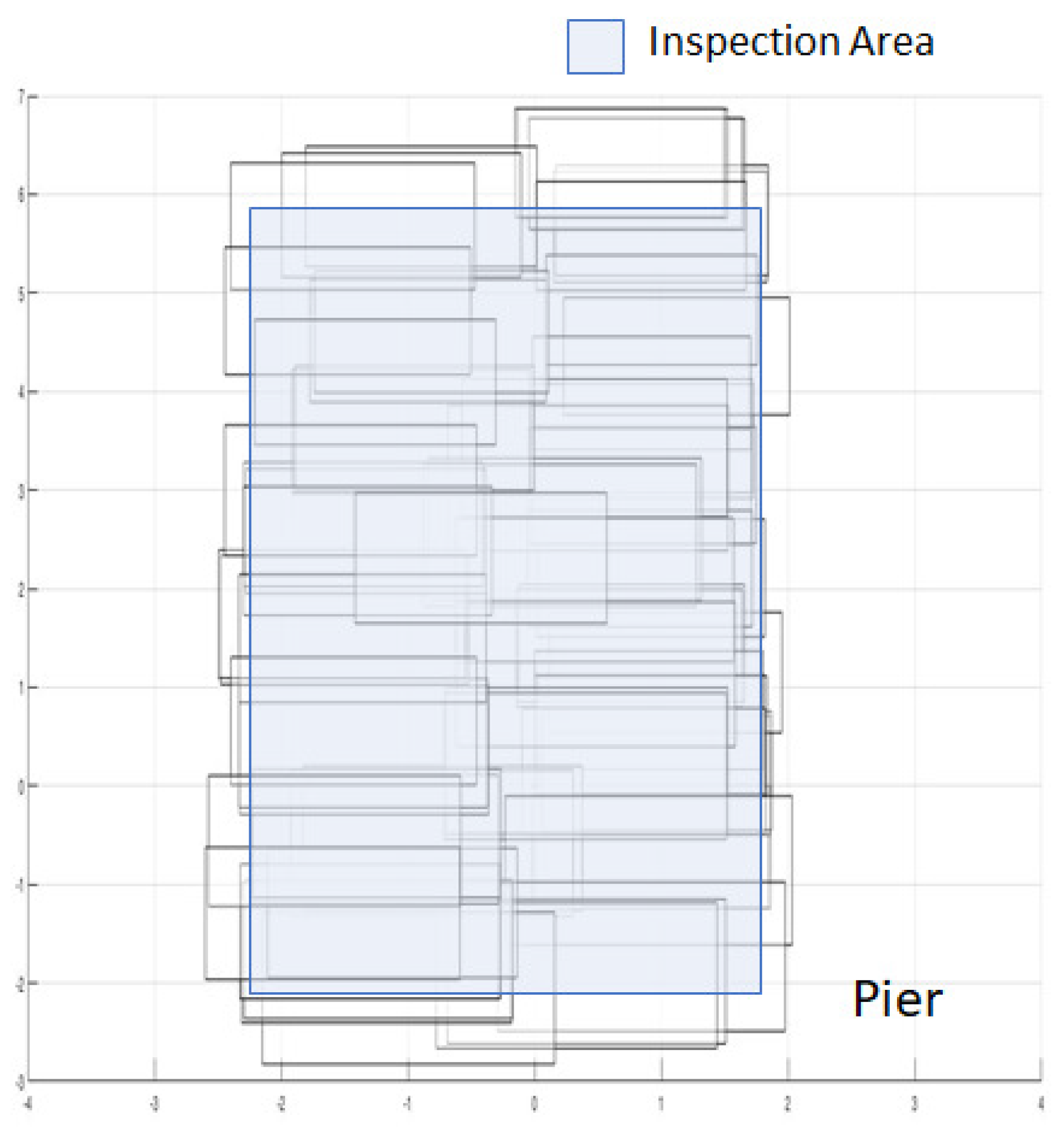

As the final step of the main inspection phase, the missing area detection and image quality assessment described in Section 2.3 were performed on-site. Most UAS-based bridge inspection studies do not consider this step. If a missing area or a low-quality image in a critical inspection area is found only after returning from the field, a proper evaluation cannot be performed and the inspection must be repeated. Therefore, this process needs to be carried out on-site. In the experiment, considering the stable flight speed of the UAV, the camera frame rate was set to 10 frames per second (FPS), and the overlap between consecutive frames was 50%.
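The 10 FPS frame rate and 50% overlap together bound the flight speed. Along the flight direction, consecutive frames are separated by v / FPS, so the forward overlap is 1 − v / (FPS × footprint length). The sketch below assumes the 23.8 mm side of a full-frame sensor lies along the flight direction, an 85 mm lens, and a 2 m standoff; these values and the helper names are illustrative assumptions.

```python
def footprint_m(sensor_dim_mm: float, focal_length_mm: float, distance_m: float) -> float:
    """Length (m) of the imaged area along one sensor axis for a fronto-parallel surface."""
    return sensor_dim_mm * distance_m / focal_length_mm


def max_speed_mps(footprint_along_m: float, fps: float, overlap: float) -> float:
    """Highest speed (m/s) that still gives `overlap` (0-1) between consecutive frames."""
    return (1.0 - overlap) * fps * footprint_along_m


def overlap_at_speed(footprint_along_m: float, fps: float, speed_mps: float) -> float:
    """Forward overlap (0-1) between consecutive frames at a given speed (negative = gap)."""
    return 1.0 - speed_mps / (fps * footprint_along_m)


# Assumptions: the 23.8 mm sensor side lies along the flight direction, 85 mm lens, 2 m standoff.
along = footprint_m(23.8, 85.0, 2.0)
print(f"footprint along track: {along:.2f} m")  # 0.56 m
print(f"max speed for 50% overlap at 10 FPS: {max_speed_mps(along, 10, 0.5):.1f} m/s")  # 2.8 m/s
```

Under these assumptions the along-track footprint is 0.56 m and the speed limit is 2.8 m/s; flying slower only increases overlap, so the limit is an upper bound rather than a target speed.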

Figure 10 shows the result of missing area detection using 270 images of the front face of the pier. Performing missing area detection on-site, based on image coordinate estimation, confirmed that the UAS-based inspection covered the target inspection area. If a missing area is detected, a supplementary flight is performed to cover the inspection range. Then, image quality assessment is conducted on the images improved using IPTs.
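Missing area detection can be pictured as a coverage check on the target face. In the minimal sketch below, the face is divided into square cells, each image's estimated footprint is given as a rectangle in face coordinates (in practice it would come from image coordinate estimation), and any cell whose center is not inside some footprint is reported as missing. The rectangle simplification, the 0.1 m cell size, the function name, and the example geometry are hypothetical.

```python
import numpy as np


def find_missing_cells(face_w: float, face_h: float, footprints, cell: float = 0.1) -> np.ndarray:
    """Boolean grid (rows = y, cols = x) that is True where no image footprint covers the cell.

    footprints: iterable of (x0, y0, x1, y1) rectangles in face coordinates (m). Real
    footprints are quadrilaterals; axis-aligned rectangles are a simplification.
    """
    # round() guards against float noise in ratios such as 2.0 / 0.1
    nx = int(np.ceil(round(face_w / cell, 9)))
    ny = int(np.ceil(round(face_h / cell, 9)))
    xc = (np.arange(nx) + 0.5) * cell          # cell-center x coordinates
    yc = (np.arange(ny) + 0.5) * cell          # cell-center y coordinates
    X, Y = np.meshgrid(xc, yc)                 # both shaped (ny, nx)
    covered = np.zeros((ny, nx), dtype=bool)
    for x0, y0, x1, y1 in footprints:
        covered |= (X >= x0) & (X <= x1) & (Y >= y0) & (Y <= y1)
    return ~covered


# Hypothetical 2.0 m x 1.0 m pier face with two images leaving a 0.2 m vertical gap.
missing = find_missing_cells(2.0, 1.0, [(0.0, 0.0, 0.9, 1.0), (1.1, 0.0, 2.0, 1.0)])
print(f"uncovered fraction: {missing.mean():.2f}")
print("uncovered columns:", np.where(missing.any(axis=0))[0].tolist())
```

In the example, 10% of the face is uncovered, in grid columns 9 and 10 (x between 0.9 m and 1.1 m), which is where a supplementary flight would be directed.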

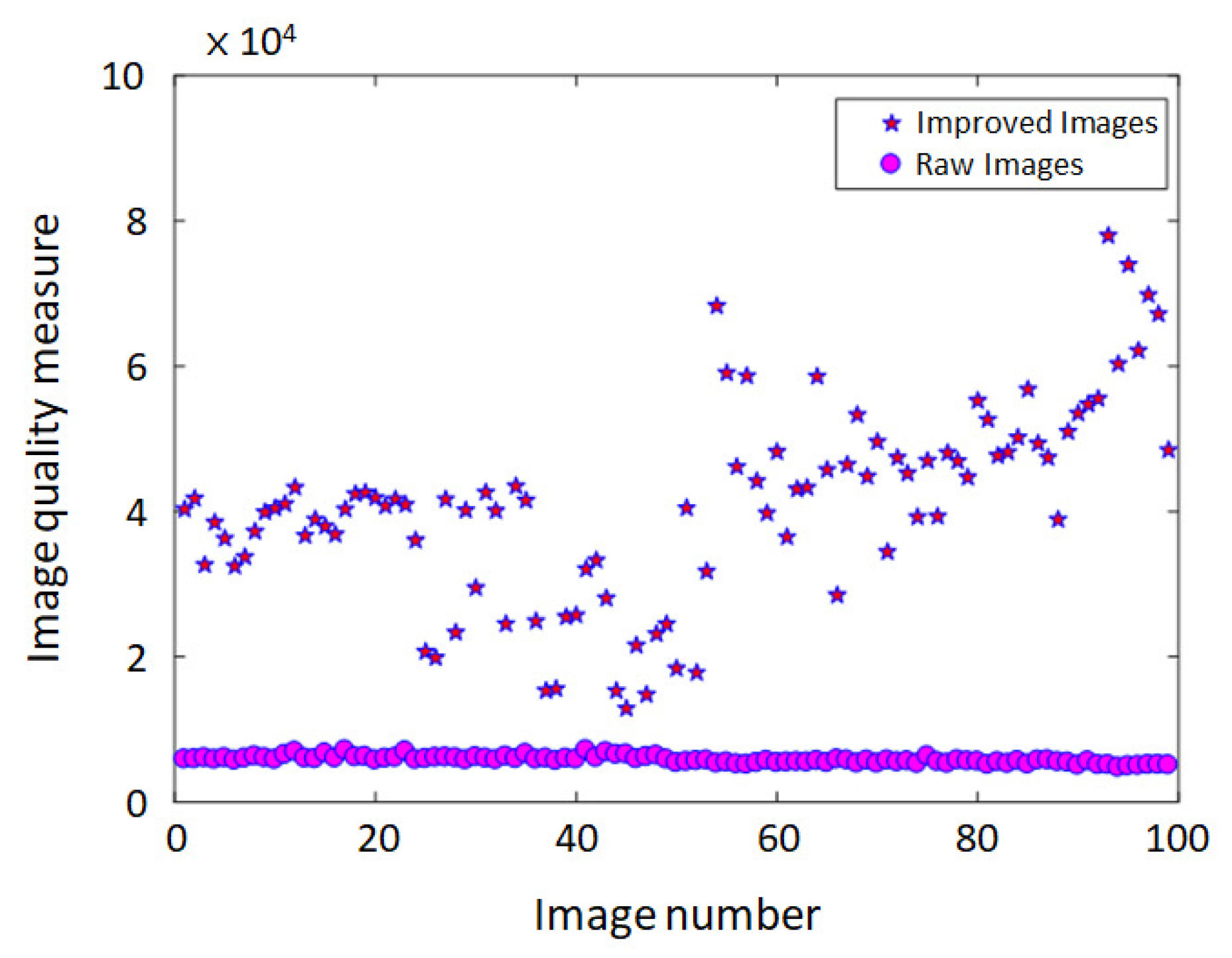

Figure 11 shows the image quality assessment results for 100 images acquired by the UAS. Image enhancement in this step addressed motion blur, illumination, and noise, which frequently occur in UAS-based bridge inspection images. Using basic image processing algorithms, the image quality improved by a factor of three or more compared with the original images. However, some images do not appear to have improved much, because the image quality index developed in this study mainly reflects motion blur caused by UAS vibration. For these images, the index did not register an improvement, but visual checks confirmed that their quality had improved. These results demonstrate the need for more diverse indices when evaluating the quality of UAS-acquired images.
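The quality index developed in this study is not specified here, so the sketch below swaps in a widely used proxy: the variance of the Laplacian, which drops as an image loses high-frequency detail through blur. It is a minimal NumPy-only illustration with a simulated horizontal motion blur on a synthetic texture; the function names and parameters are hypothetical and are not the study's index.

```python
import numpy as np

# 4-neighbor Laplacian kernel.
LAPLACIAN = np.array([[0, 1, 0],
                      [1, -4, 1],
                      [0, 1, 0]], dtype=float)


def laplacian_variance(img: np.ndarray) -> float:
    """Sharpness proxy: variance of the Laplacian response over the valid region (higher = sharper)."""
    h, w = img.shape
    resp = np.zeros((h - 2, w - 2))
    for dy in range(3):
        for dx in range(3):
            resp += LAPLACIAN[dy, dx] * img[dy:dy + h - 2, dx:dx + w - 2]
    return float(resp.var())


def horizontal_motion_blur(img: np.ndarray, length: int) -> np.ndarray:
    """Simulate linear horizontal motion blur by averaging `length` horizontally shifted copies."""
    h, w = img.shape
    out = np.zeros((h, w - length + 1))
    for k in range(length):
        out += img[:, k:k + w - length + 1]
    return out / length


rng = np.random.default_rng(0)
sharp = rng.uniform(0.0, 255.0, size=(64, 64))   # synthetic textured surface
blurred = horizontal_motion_blur(sharp, length=9)
print(f"sharp: {laplacian_variance(sharp):.0f}, blurred: {laplacian_variance(blurred):.0f}")
```

Like the index described above, a blur-only measure of this kind says little about illumination or noise corrections, which is consistent with the observation that some visibly improved images did not score higher.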

After the data for damage detection and bridge condition evaluation are secured on-site, the post-inspection phase is carried out in the office. It consists of image quality improvement, automatic damage detection, damage expression and management, and bridge condition grading. First, an image processing algorithm is used to improve images that received an appropriate score in the image quality evaluation step, and deep learning-based automatic damage detection is then performed to detect and quantify damage on the bridge surface. As mentioned in Section 2, six damage classes were considered for concrete and five for steel. However, no notable damage other than minor corrosion was found in the steel box span considered in this study. This reflects Korea's strict standards and management of damage to steel box bridges; moreover, cracks in steel are difficult to detect because they appear bright and shiny, being filled with microcracks [37]. Cracks in the steel box are therefore very difficult to detect even by visual inspection, and special equipment is used. Accordingly, this paper focuses on the PSC girders and piers when comparing visual and UAV-based inspections. Two deep learning algorithms were used to detect surface damage. First, Faster R-CNN was used to check the extent of two-way cracks and damage; because this method outputs a bounding box for each detected object, the area of the damaged region can be calculated from the box. Second, Mask R-CNN was used; it outputs each detected object as a mask, so the corresponding pixels can be counted. Using the image coordinates and pixel size from Step 6, the number of pixels spanning the width and length of the masked damage can be determined, and the detected damage can thus be quantified in millimeters. In this study, the RoI was set to detect a crack width of 200 mm, and the pixel size was obtained from the distance between the UAS and the target structure. The trained networks detected various types of damage that can occur in bridges, including very small damage.
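The mask-based quantification described above can be sketched as follows, assuming one binary mask per detected damage instance and a uniform object-space pixel size in mm/pixel. The paper does not state how width and length are measured, so this sketch takes length along the principal axis of the mask pixels and mean width as area divided by length; these choices and the function name `quantify_mask` are assumptions.

```python
import numpy as np


def quantify_mask(mask: np.ndarray, pixel_mm: float) -> dict:
    """Approximate area, length, and mean width (mm) of one damage instance from its mask.

    Assumes a uniform object-space pixel size `pixel_mm` (mm/pixel). Length is measured
    along the principal axis of the mask pixels; mean width = area / length.
    """
    ys, xs = np.nonzero(mask)
    if xs.size < 2:
        raise ValueError("mask needs at least two pixels")
    area_mm2 = xs.size * pixel_mm ** 2
    pts = np.column_stack([xs, ys]).astype(float)
    pts -= pts.mean(axis=0)
    # Principal direction: eigenvector of the largest eigenvalue of the 2x2 covariance.
    _, vecs = np.linalg.eigh(np.cov(pts.T))
    proj = pts @ vecs[:, -1]
    length_mm = (proj.max() - proj.min() + 1.0) * pixel_mm   # +1 counts the end pixel
    return {"area_mm2": area_mm2, "length_mm": length_mm,
            "mean_width_mm": area_mm2 / length_mm}


mask = np.zeros((100, 100), dtype=bool)
mask[50:52, 10:90] = True        # hypothetical crack mask: 2 px wide, 80 px long
print(quantify_mask(mask, pixel_mm=0.15))
```

For area-type damage such as efflorescence, `area_mm2 / 1e6` gives the area in m². The principal-axis length suits fairly straight cracks; a curved or branching crack would need a skeleton-based length instead.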

4. Validation and Discussions

To compare the UAS-based inspection with the manpower-based appearance inspection on the target bridge, a manpower-based inspection was performed using a ladder truck, as shown in Figure 12. The manpower inspection team consisted of a ladder truck driver and two bridge inspection experts, who inspected the bridge visually. The UAS-based inspection was performed by a UAS pilot and a co-pilot.

To compare the two inspection methods, both were applied to the same members, and the area above the pier where the railing structure was installed was excluded from the inspection for safety reasons.

Figures 13 and 14 show examples of human visual inspection and UAS-based bridge inspection results for the concrete piers and the steel box spans, respectively. In the figures, the gray area indicates the area excluded from inspection, the green boxes indicate damage that matches the human inspection results, and the blue boxes indicate damage that was not found by the human inspection but was additionally detected by the UAS-based inspection. The UAS-based inspection found all the damage detected by the human visual inspection and additionally detected efflorescence. The additional efflorescence was detected because the color of the concrete surface had changed as efflorescence progressed, which bridge inspection experts confirmed. This shows that the progression of damage can be detected through UAS-based bridge inspection. In addition, as shown in Figure 13, the detailed shape of a crack, which is difficult to record with the conventional visual inspection method, can be represented. The objective of this study was to quantify cracks of 0.3 mm, which correspond to grade C in the relative condition grading of concrete bridges. This requires keeping the UAS close to the structure, which results in a small field of view (FOV), so it is difficult to capture the shape of a long crack in a single image. Moreover, extracting feature points from multiple images and stitching them is not easy. To solve this problem, the damage was connected across images using the coordinates of the four vertices of each image; the inset in the UAS-based inspection results in Figure 13 shows the result. In the steel box girder, corrosion of steel and bolts, paint peeling, and steel member deformation were detected, as shown in Figure 14. However, because steel member deformation was not within the damage detection scope of this study, it does not appear in the UAS-based inspection results. Neither inspection method detected cracks in the steel bridge. A crack found in a steel bridge is judged to be a significant structural defect that prevents the bridge from remaining in service, so most steel bridges in service are practically free of cracks. Even if cracks were present, fine cracks would be difficult to detect with general vision-based object detection, and cracks beneath the paint coating of steel members cannot be detected at all. To solve this problem, an attached-type UAV equipped with a laser module and a thermal imaging camera was developed in this project to apply active thermography. However, those results are omitted because the attached-type UAS is beyond the scope of this paper.
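Connecting damage across images through the four image vertices can be illustrated with a planar homography: if the surface coordinates of an image's four corners are known, every pixel in that image can be mapped onto the member surface, and damage from neighboring images then shares one coordinate frame. This sketch assumes a planar member face, distortion-free images, and corner coordinates ordered top-left, top-right, bottom-right, bottom-left; it is an illustration rather than the project's implementation, and the helper names and coordinates are hypothetical.

```python
import numpy as np


def homography_from_corners(img_w: float, img_h: float, surface_corners) -> np.ndarray:
    """3x3 homography mapping pixel (u, v) to member-surface coordinates (X, Y).

    surface_corners: surface coordinates of the image corners in the order
    top-left, top-right, bottom-right, bottom-left (pixel corners (0,0), (w,0), (w,h), (0,h)).
    """
    pixel_corners = [(0.0, 0.0), (img_w, 0.0), (img_w, img_h), (0.0, img_h)]
    A, b = [], []
    for (u, v), (X, Y) in zip(pixel_corners, surface_corners):
        # X * (h31 u + h32 v + 1) = h11 u + h12 v + h13, and likewise for Y (h33 fixed to 1).
        A.append([u, v, 1.0, 0.0, 0.0, 0.0, -u * X, -v * X])
        b.append(X)
        A.append([0.0, 0.0, 0.0, u, v, 1.0, -u * Y, -v * Y])
        b.append(Y)
    h = np.linalg.solve(np.array(A, dtype=float), np.array(b, dtype=float))
    return np.append(h, 1.0).reshape(3, 3)


def pixels_to_surface(Hm: np.ndarray, uv: np.ndarray) -> np.ndarray:
    """Map an (N, 2) array of pixel coordinates to (N, 2) surface coordinates."""
    pts = np.column_stack([uv, np.ones(len(uv))]) @ Hm.T
    return pts[:, :2] / pts[:, 2:3]


W_PX, H_PX = 6000, 4000
# Hypothetical corner coordinates (m) of two images overlapping by 50% horizontally.
img1 = [(0.00, 0.0), (0.84, 0.0), (0.84, 0.56), (0.00, 0.56)]
img2 = [(0.42, 0.0), (1.26, 0.0), (1.26, 0.56), (0.42, 0.56)]
H1 = homography_from_corners(W_PX, H_PX, img1)
H2 = homography_from_corners(W_PX, H_PX, img2)
# The same crack point, seen at the right edge of image 1 and the middle of image 2.
p1 = pixels_to_surface(H1, np.array([[6000.0, 2000.0]]))
p2 = pixels_to_surface(H2, np.array([[3000.0, 2000.0]]))
print(p1, p2)
```

Both points land at (0.84, 0.28) m, so a crack leaving the right edge of one image can be joined to its continuation in the next image without feature matching or stitching.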

In the damage map results, the damage to the steel box girder and the concrete span did not significantly affect the bridge damage grade. Therefore, only the damage detected on the pier was quantified, as shown in Table 2. In the table, damage that matches the manpower inspection results is labeled with the same number as in Figure 13, damage additionally detected in the UAS test is labeled with a letter, and the crack width (mm) and length (mm) are denoted by cw and L, respectively. As the table shows, the human visual inspection results are approximate values given to one decimal place, whereas the UAS-based crack inspection results are more detailed. In addition, the UAS-based inspection revealed four more damages (i.e., ⓐ, ⓑ, ⓒ, and ⓓ) than the visual inspection for the same pier: one additional crack at the bottom of the pier and three efflorescences. Because efflorescence is evaluated by its area (A, m²) in the bridge condition assessment, its area was calculated by multiplying the number of pixels in the detected mask by the area of a single pixel. As mentioned above, the efflorescence was detected because of discoloration of the concrete surface. Because this efflorescence was confirmed by a bridge inspection expert, the result suggests that the proposed damage detection method can support preventive maintenance, although further study may be needed. The results for the other PSC girders, deck slabs, and piers were similar to those shown in the damage maps and Table 2.

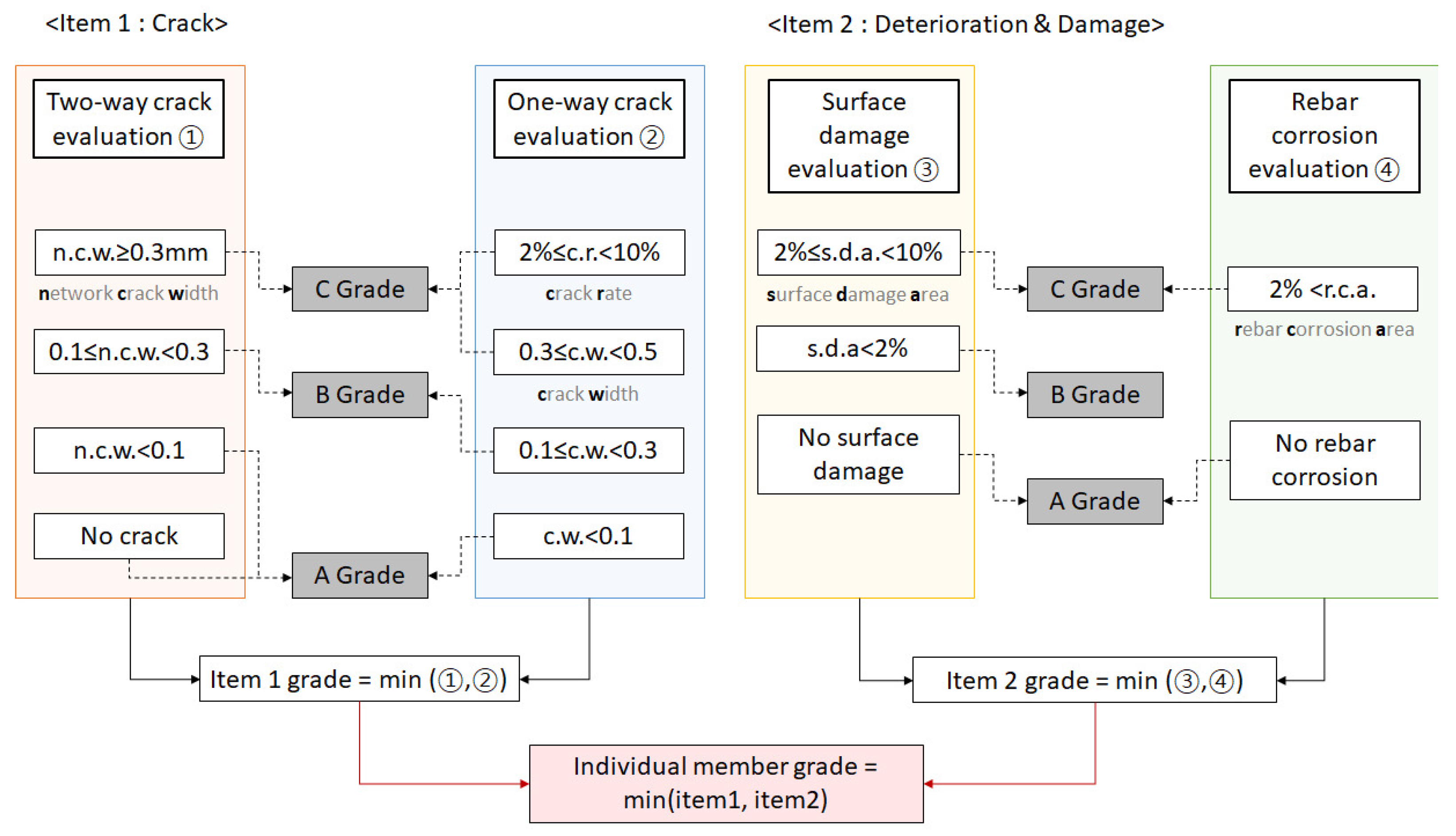

Finally, bridge condition assessment was performed for the area shown in Figure 6. The PSC girder spans piers 29 and 30, and the steel girder spans piers 30 and 31. Table 3 shows the bridge condition grades determined from the damage map results for the target bridge. The PSC girder was graded C because of cracks exceeding 0.3 mm, and the steel girder was graded B because of the corrosion and paint loss area. The widths of the cracks identified in the three piers, including pier 31, whose damage map is shown in Figure 13, were 0.3 mm or less. Nevertheless, piers 30 and 31 were graded C because the surface damage area was between 2% and 10%, as shown in Figure 5. In South Korea, the bridge maintenance manual and regulations require a durability evaluation of the structure to determine the bridge condition grade. Therefore, UAS-based bridge inspection results alone cannot replace the existing manpower-based bridge maintenance system. However, UAS-based inspection can replace visual inspection and is expected to make bridge inspection more efficient.
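The grading described above combines criteria so that the worst grade governs: a member is graded C if either its crack width exceeds 0.3 mm or its surface damage area falls between 2% and 10%. The sketch below encodes only those two thresholds from the text, assumes a five-level A–E scale, and uses a hypothetical `baseline` grade for everything else; it is not the full procedure of the Korean manual, which also requires a durability evaluation.

```python
GRADE_ORDER = "ABCDE"  # assumed five-level scale, A (best) to E (worst)


def member_grade(max_crack_width_mm: float, damage_area_ratio: float, baseline: str = "B") -> str:
    """Worst-of combination of the two C-grade triggers quoted in the text.

    baseline: grade from criteria not modelled here (hypothetical default "B").
    damage_area_ratio: damaged surface area / inspected surface area (0-1).
    """
    if damage_area_ratio > 0.10:
        raise ValueError("area ratios above 10% are outside what this sketch encodes")
    grades = [baseline]
    if max_crack_width_mm > 0.3:           # cracks exceeding 0.3 mm -> C
        grades.append("C")
    if 0.02 <= damage_area_ratio <= 0.10:  # surface damage area of 2-10% -> C
        grades.append("C")
    return max(grades, key=GRADE_ORDER.index)


# Hypothetical inputs, not the measured values behind Table 3:
print(member_grade(0.35, 0.01))  # crack trigger -> C
print(member_grade(0.25, 0.05))  # area trigger -> C
print(member_grade(0.20, 0.01))  # no trigger -> baseline B
```

Taking the worst grade across criteria is what allows a pier with cracks of 0.3 mm or less to be graded C on damaged area alone, as for piers 30 and 31.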

5. Conclusions

This paper compared the results of UAS-based bridge inspection and manpower-based inspection on a test bed. To this end, considering existing bridge inspection procedures, field tests and performance evaluations were performed following the detailed UAS-based bridge inspection procedure. Although UAS-based bridge inspection has received much attention and many studies on key technologies have been conducted, studies performing the entire bridge inspection procedure are lacking; this study addresses that gap. The bridge inspection procedure was divided into pre-inspection, main inspection, and post-inspection phases, and detailed steps were considered for each phase. In the pre-inspection phase, the information necessary for bridge inspection was secured and preparations were made for flight. In the main inspection phase, images of the bridge surface were collected using the UAS, and image coordinate estimation, missing area detection, and image quality evaluation were performed. In the post-inspection phase, image quality improvement, deep learning-based damage detection, and damage quantification were performed. Moreover, the U-BMS was developed to visualize, store, and manage the damage data obtained from the test, and a bridge condition assessment was performed based on the UAS-based inspection results. The UAS was developed to perform inspections in the GPS-shaded area of the bridge, and flight stability was secured through position and attitude estimation. To verify and compare the UAS-based results, a manpower-based inspection was performed by bridge inspection experts. The UAS-based inspection quantified damage with more accurate values than the human inspection and detected damage at an early stage of progression. Because of this difference, some of the piers considered in this study received different condition grades. Actual measurements performed to evaluate the difference between the two results confirmed the feasibility of UAS-based bridge inspection. However, inspection and management are different tasks, and the durability evaluation of a bridge is difficult to perform with a UAS. Nevertheless, because UAS-based inspection is fast, cost-effective, and enables objective damage detection, UAS-based and manpower-based inspection are expected to complement each other.