Abstract

The growing interest in Artificial Intelligence is pervading several domains of technology and robotics research. Only recently has the space community started to investigate deep learning methods and artificial neural networks for space systems. This paper aims at introducing the most relevant characteristics of these topics for spacecraft dynamics control, guidance and navigation. The most common artificial neural network architectures and the associated training methods are examined, trying to highlight the advantages and disadvantages of their employment for specific problems. In particular, the applications of artificial neural networks to system identification, control synthesis and optical navigation are reviewed and compared using quantitative and qualitative metrics. This overview presents the end-to-end deep learning frameworks for spacecraft guidance, navigation and control together with the hybrid methods in which the neural techniques are coupled with traditional algorithms to enhance their performance levels.

1. Introduction

One of the major breakthrough in the last decade in autonomous systems has been the development of an older concept named Artificial Intelligence (AI). This term is vast and addresses several fields of research. Moreover, Artificial Intelligence is a broad term that is often confused with one of its sub-clustering terms. The well-known artificial neural networks (ANNs) are nearly as old as Artificial Intelligence, and they represent a tool, or a model, rather than a method by which to implement AI in autonomous systems. Nearly all deep learning algorithms can be described as particular instances of a standard architecture: the idea is to combine a dataset for specification, a cost function, an optimization procedure and a model, as reported in [1]. Actually, for guidance, navigation and control, using a dataset produces poor results due to distribution mismatching. Even for the case where training is done in a simulated environment but not during deployment, the need to update the dataset using simulated observations and actions during training is justified by the mentioned dataset distribution mismatch, as thoroughly presented in [2]. Additionally, updating the dataset with incremental observations tends to reduce overfitting problems. This survey presents the theoretical basis for the foundational work of [1,3,4,5]. In this overview, the focus is to catch a glimpse of the current trends in the implementation of AI-based techniques in space applications, in particular for what concerns hybrid applications of artificial neural networks and classical algorithms within the domains of guidance, navigation and control. Even though the survey is restricted to these domains, the topic is still very broad, and different perspectives can be found in recent surveys [6,7,8,9,10,11,12]. Most of the analyzed surveys focus on a limited application, deeply investigating the technical solutions for a particular scenario. Table 1 compares the existing works with this manuscript.

Table 1.

Comparison between recent survey works and this review.

The range of applications is from preliminary spacecraft design to mission operations, with an emphasis on guidance and control algorithms coupled with navigation; finally, perturbed dynamics reconstruction and classification of astronomical objects are emerging topics. Due to the very large number of applications, it is the authors’ intent to narrow down the discussion to spacecraft guidance, navigation and control (GNC), and the dynamics reconstruction domain. Nevertheless, besides those falling into the above-mentioned domains, the most promising applications in space of AI-based techniques are mentioned within the discussion of the most common network architectures.

The major contributions of this paper are:

- to introduce the bases of machine learning and deep learning that are rapidly growing within the space community;

- to present a review of the most common artificial neural network architectures used in the space domain, together with emerging techniques that are still theoretical;

- to present specific applications extrapolating the underlying cores of the different algorithms; in particular, the hybrid applications are highlighted, where novel Artificial Intelligence techniques are coupled with traditional algorithms to solve their shortcomings;

- to provide a performance comparison of different neural approaches used in guidance, navigation and control applications that exist in the literature. In general, it is hard to attribute quantitative metrics to such evaluations, since the applicative scenarios reported in the literature are different. The paper attempts to condense the information into a more qualitative comparison.

The paper is structured as follows: Section 2 presents the foundations of machine learning, deep learning and artificial neural networks, together with a brief theoretical overview of the main training approaches; Section 3 presents an overview of the most used artificial neural networks in the spacecraft dynamics identification, navigation, guidance and control applications. Section 4 reports the applications of several artificial neural networks in the context of spacecraft system identification and guidance, navigation and control systems. Finally, Section 5 draws the conclusions of the paper.

2. Machine Learning and Deep Learning

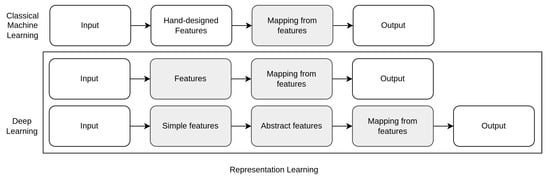

This section provides the theoretical basis of machine learning and deep learning, which are fundamental to understanding the core characteristics of these approaches. The discussion focuses on the domain features that are useful and commonly adopted in specific space-based applications. The research on machine learning (ML) and deep learning (DL) is complex and extremely vast. In order to acquire proper knowledge on the topic, the author suggests referring to [1]. Hereby, only the most relevant concepts are reported in order to contextualize the work developed in the paper. The first important distinction to mark is that between the terms machine learning and deep learning. The highlights of the two approaches are reported in Figure 1.

Figure 1.

Differences between machine learning and deep learning [1].

Machine Learning learns to map input to output given a certain world representation (features) hand-crafted for each task.

Deep learning is a particular kind of machine learning that aims at representing the world as a nested hierarchy of concepts, which are self-detected by the deep learning architecture itself.

The paradigm of ML and DL is to develop algorithms that are data-driven. The information to carry out the task is gathered and derived from either structured or unstructured data. In general, one would have a given experience , which can be easily thought as a set of data . It is possible to divide the algorithms into three different approaches:

- Supervised learning: Given the known outputs , we learn to yield the correct output when new datasets are fed.

- Unsupervised Learning: The algorithms exploit regularities in the data to generate an alternative representation used for reasoning, predicting or clustering.

- Reinforcement Learning: Producing actions that affect the environment and receiving rewards . Reinforcement learning is all about learning what to do (i.e., mapping situations to actions) so as to maximize a numerical reward.

Even though the boundaries between the approaches are often blurred, the focus of this survey is to discuss algorithms that take advantage and inspiration from supervised and reinforcement learning. For this reason, few additional details are provided for such approaches. Tentative clusters of the different learning approaches and their most used algorithms are reported in Table 2.

Table 2.

A comprehensive summary for the different learning approaches.

2.1. Supervised Learning

Supervised learning consists of learning to associate some output with a given input, coherently with the set of examples of inputs and targets [1]. Quite often, the targets are provided by a human supervisor. Nevertheless, supervised learning refers also to approaches in which target states are automatically retrieved by the machine learning model; we use the term this way often throughout this survey. The typical applications of supervised learning are classification and regression. In a few words, classification is the task of assigning a label to a set of input data from among a finite group of labels. The output is a probability distribution of the likelihood of a certain input of belonging to a certain class. On the other hand, regression aims at modeling the relationships between a certain number of features and a continuous target variable. The regression task is largely employed in supervised learning reported in this survey. Supervised learning is applicable to multiple tasks, both offline [13,14] and online [15,16].

2.2. Unsupervised Learning

Unsupervised learning algorithms are fed with a dataset containing many features. The system learns to extrapolate patterns and properties of the structure of this dataset. As reported in [1], in the context of deep learning, the aim is to learn the underlying probability distribution of dataset, whether explicitly as in density estimation or implicitly for tasks such as synthesis or de-noising. Some other unsupervised learning algorithms perform other tasks, such as clustering, which consists of dividing the dataset into separate sets, i.e., clusters of similar experiences and data. The unsupervised learning approach has not yet seen widespread employment in the spacecraft GNC domain.

2.3. Reinforcement Learning

Reinforcement learning is learning what to do, how to map observations to actions, so as to maximize a numerical reward signal. The learner is not told which actions to take, but instead must discover which actions yield the most reward by trying them [5].

One of the challenges that arises in reinforcement learning, and not in other kinds of learning, is the trade-off between exploration and exploitation. The agent typically needs to explore the environment in order to learn a proper optimal policy, which determines the required action in a given perceived state. At the same time, the agent needs to exploit such information to actually carry out the task. In the space domain, only for online deployed applications, the balance must be shifted towards exploitation, for practical reasons. Another distinction that ought to be made is between model-free and model-based reinforcement learning techniques, as shown in Table 3. Model-based methods rely on planning as their primary component, and model-free methods primarily rely on learning. Although there are remarkable differences between these two kinds of methods, there are also great similarities. We call an environmental model whatever information the agent can use to make predictions on what will be the reaction of the environment to a certain action. The environmental model can be known analytically, partially or completely unknown, i.e., to be learned. The model-based algorithms need a representation of the environment. If the agent requires learning the model completely, the exploration is still very important, especially in the first phases of the training. It is worth mentioning that some algorithms start off by mostly exploring, and adaptively trade off exploitation and exploration during optimization, typically ending with very little exploration. For the reasons above, the model-based approach seems to be beneficial in the context of this survey, as it merges the advantages of analytical base models, learning and planning. It is important to report some of the key concepts of reinforcement learning:

- Policy: defines the learning agent’s way of behaving at a given time. Mapping from perceived states of the environment to actions to be taken when in those states.

- Reward: at each time step, the environment sends to the reinforcement learning agent a single number called the reward.

- Value Function: the total amount of reward an agent can expect to accumulate in the future, starting from that state.

Table 3.

Differences between model-based and model-free reinforcement learning. In space, a deterministic representation of a dynamical model is generally available. Nevertheless, some scenarios are unknown (small bodies) or partially known (perturbations).

Table 3.

Differences between model-based and model-free reinforcement learning. In space, a deterministic representation of a dynamical model is generally available. Nevertheless, some scenarios are unknown (small bodies) or partially known (perturbations).

| Model-Free | Model-Based |

|---|---|

| Unknown system dynamics | Learnt system dynamics |

| The agent is not able to make predictions | The agent makes prediction |

| Need for explorations | More sample efficient |

| Lower computational cost | Higher computational cost |

The standard reinforcement learning theory states that an agent is capable of obtaining a policy which provides the mapping between a set of states , where is the set of possible states, for an action , where is the set of possible actions. The dynamics of the agent are basically represented by a transition probability from one state to another at a given time step. In general, the learned policy can be deterministic or stochastic , meaning that the control action follows a conditional probability distribution across the states. Every time the agent performs an action, it receives a reward : the ultimate goal of the agent is to maximize the accumulated discounted reward from a given time step k to the end of the horizon N, which could be in an infinite horizon. The coefficient is the discount rate, which determines how much more current rewards are to be preferred to future rewards. As mentioned, the value function is the total amount of reward an agent can expect to accumulate in the future, in a given state. Note that the value function is obviously associated with a policy:

In most of the reinforcement learning applications, a very important concept is the action-value function :

The remarkable difference between it and the value function is the fact that the action-value function tells you the expected cumulative reward at a certain state, given a certain action. The optimal policy is the one that maximizes the value function . In general, an important remark is that reinforcement learning was originally developed for discrete Markov decision processes. This limitation, which is not solved for many RL methods, implies the necessity of discretizing the problem into a system with a finite number of actions. This is sometimes hard to grasp in a domain in which the variables are typically continuous in space and time (think about the states or the control action) or often discretized in time for implementation. Thus, the application of reinforcement learning requires smart ways to treat the problem and dedicated recasting of the problem itself. The reinforcement learning problem has been tackled using several approaches, which can be divided into two main categories: the policy-based methods and the value-based methods. The former ones search for the policy that behaves correctly in a specific environment [17,18,19,20,21,22]; the latter ones try to value the utility of taking a particular action in a specific state of the environment [23,24]. A common categorization adopted in the literature for identifying the different methods is described below:

- Value-based methods: These methods seek to find optimal value function V and action-value function Q, from which the optimal policy is directly derived. The value-based methods evaluate states and actions. Value-based methods are, for instance, Q-learning, DQN and SARSA [24].

- Policy-based methods: They are methods whose aim is to search for the optimal policy directly, which provides a feasible framework for continuous control. The most employed policy-based methods are: advantage actor+critic, cross-entropy methods, deep deterministic policy gradient and proximal policy optimization [17,18,19,20,21,22].

An additional distinction in reinforcement learning is on-policy and off-policy. On-policy methods attempt to evaluate or improve the policy that is used to make decisions during training, whereas off-policy methods evaluate or improve a policy different from the one used to generate the data, i.e., the experience.

A thorough review, beyond the scope of this paper, is necessary to survey the methods and approaches of reinforcement learning and deep reinforcement learning to space. Some very promising examples were developed in [18,19,20,23,24], and the most active topics in space applications are reviewed in Section 4.3.

2.4. Artificial Neural Networks

Artificial neural networks represent nonlinear extensions to the linear machine learning (or deep learning) models presented in Section 2. A thorough description of artificial neural networks is far beyond the scope of this work. Hereby, the set of concepts necessary to understand the work is reported. In particular, the universal approximation theory is described, which forms the foundation for all the algorithms developed in this paper. The most significant categorization of deep neural networks is into feedforward and recurrent networks. Deep feedforward networks, also often called multilayer perceptrons (MLPs), are the most common deep learning models. The feedforward network is designed to approximate a given function f. According to the task to execute, the input is mapped to an output value. For instance, for a classifier, the network maps an input x to a category y. A feedforward network defines a mapping and learns the values of the parameters w (weights) that result in the best function approximation. These models are called feedforward because information flows from the input layer, through the intermediate ones, up to the output y. Feedback connections are not present in which outputs of the model are fed back as input to the network itself. When feedforward neural networks are extended to include feedback connections, they are called recurrent neural networks.

The essence of deep learning, and machine learning also, is learning world structures from data. All the algorithms falling into the aforementioned categories are data-driven. This means that, despite the possibility of exploiting an analytical representation of the environment, the algorithms need to be fed with structures of data to perform the training. The learning process can be defined as the algorithm by which the free parameters of a neural network are adapted through a process of stimulation by the environment in which it works. The type of learning is the set of instructions for how the parameters are changed, as explained in Section 2. Typically, the following sequence is followed:

- the environment stimulates the neural network;

- the neural network makes changes to the free parameters;

- the neural network responds in a new way according to the new structure.

As one might easily expect, there are several learning algorithms that can consequently be split into different types. It is possible to divide the supervised learning philosophy into batch and incremental learning [4]. Batch learning is suitable for the spatial distribution of data in a stationary environment, meaning that there is no significant time correlation of data, and the environment reproduces itself identically in time. Thus, for such applications, it is possible to gather the data into a whole batch that is presented to the learner simultaneously. Once the training has been successfully completed, the neural networks should be able to capture the underlying statistical behavior of the stationary environment. This kind of statistical memory is used to make predictions exploiting the batch dataset that was presented. This does not mean that batch learning is not capable of transferring knowledge to unseen environments or adapting to real-time applications, as shown in [18,25]. On the other hand, in several applications, the environment is non-stationary, meaning that information signals coming from the environment may vary with time. Batch learning in then inadequate, as there are no means to track and adapt to the varying environmental stimuli. Hence, for on-board learning applications, it is favorable to employ what is called incremental learning (or online or continuous learning) in which the neural network constantly adapts its free parameters to the incoming information in a real-time fashion, as proposed in [15,16,26,27,28,29,30].

2.4.1. Universal Approximation Theorem

The universal approximation theorem takes the following classical form [4]. Let be a non-constant, bounded and continuous function (called the activation function). Let denote the m-dimensional unit hypercube . The space of real-valued continuous functions on is denoted by . Then, given any and any function , there exist an integer N, real constants and real vectors for , such that we may define:

As an approximate realization of the function f,

For all .

2.4.2. Training Algorithms

The basis for most of the supervised learning algorithms is represented by back-propagation. In general, finding the weights of an artificial neural network means determining the optimal set of variables that minimizes a given loss function. Given structured data, comprising input x and target t, one can define the loss function at the output of neuron j for the datum presented:

where is the output value of the output neuron. It is possible to extend this definition to derive a mean indication of the loss function for the complete output layer. We can define a total energy error of the network for the presented input–target pair:

where is the set of output neurons of the network. As stated, the total energy error of the network represents the loss function to be minimized during training. Indeed, this function is dependent on all the free parameters of the network, synaptic weights and biases. In order to minimize the energy error function, we need to find those weights that vanish the derivative of the function itself and minimize the argument:

Closed-form solutions are practically never available, thus it is common practice to use iterative algorithms that make use of the derivative of the error function to converge to the optimal value. The back-propagation algorithm is basically a smart way to compute those derivatives, which can then be employed using traditional minimization algorithms, such as [31,32]:

- Batch gradient descent;

- Stochastic gradient descent;

- Conjugate gradient;

- Newton and quasi-Newton methods;

- Levenberg–Marquardt;

- Backpropagation through time.

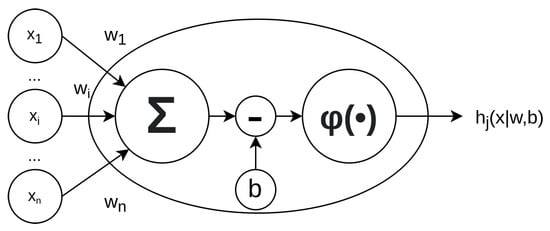

A slightly different approach, highly tailored to the specific application, is the training through Lyapunov stability-based methods, which will be discussed for the particular application of dynamics reconstruction. Let us consider a simple method that can be applied specifically to sequential learning, in the most common network architecture, but easily extended to batch learning. With reference to Figure 2, the induced local field of neuron j, which is the input of the activation function at neuron j, can be expressed as:

where is the set of neurons that share a connection with layer j and is the bias term of neuron j. The output of a neuron is the result of the application of the activation function to the local field :

Figure 2.

Elementary artificial neuron architecture.

In gradient-based approaches, the correction to the synaptic weights is performed according to the direction identified by the partial derivatives (i.e., gradient), which can be calculated according to the chain rule as:

Hence, the update to the synaptic weights is calculated as a gradient descent step in the weight space using the derivative of Equation (10):

where is the tunable learning-rate parameter. The back-propagation algorithm entails two passages through the network: the forward pass and the backward pass. The former evaluates the output of the network and the function signal of each neuron. The weights are unaltered during the forward pass. The backward pass starts from the output layer by passing the loss function back to the input layer, calculating the local gradient for each neuron.

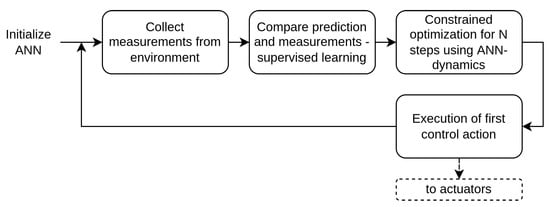

2.4.3. Incremental Learning

Incremental learning stands for the process of updating the weights each time a pair of input–target () is presented. The two mentioned passes are executed at each step. This is the mode utilized for an online application where the training process can potentially never stop, as the data keep on being presented to the network. In incremental learning, often referred to online learning, the system is trained continuously as new data instances become available. They could be clustered in mini-batches or come as datum by datum. Online learning systems are tuned to set how fast they should adapt to incoming data: typically, such a parameter is referred to as learning rate [33]. A high learning rate means that the system reacts immediately to new data, by adapting itself quickly. However, a high learning rate means that the system will also tend to forget and replace the old data. On the other hand, a low learning rate makes the system more stiff, meaning that it will learn more slowly. Additionally, the system will be less sensitive to noise present in the new data or to mini-batches containing non-representative data points, such as outliers. In addition, Lyapunov-based methods are very suitable for incremental learning due to their inherent step-wise trajectory evaluation of the stability of the learning rule. Two examples of incremental learning system are shown in Figure 3 and Figure 4.

Figure 3.

Schematics of online incremental learning for spacecraft guidance [27].

Figure 4.

Example of incremental learning for spacecraft navigation [29].

2.4.4. Batch Learning

Batch learning algorithms execute the weight updates only after all the input–target data are presented to the network [1,33]. One complete presentation of the training dataset is typically called an epoch. Hence, after each epoch it is possible to define an average energy error function, which replaces Equation (6) in the back-propagation algorithm:

The forward and backward passes are performed after each epoch. In batch learning, the system is not capable of learning while running. The training dataset consists of all the available data. This generally takes a lot of time and computational effort, given the typical dataset sizes. For this reason, the batch learning is generally performed on the ground. The system that is trained with batch learning first learns offline and then is deployed and runs without updating itself: it just applies what it has learned.

2.4.5. Overfitting and Online Sampling

In machine learning, a very common issue encountered in a wrong training process is overfitting. In general terms, overfitting refers to the behavior of a model to perform well on the training data, without generalizing correctly. Complex models, such as deep neural networks, are capable of extracting underlying patterns in the data, but if the dataset is not chosen coherently, the model will most likely form non-existing patterns, or simply patterns that are not useful for generalization [1,33]. The main causes of overfitting can be:

- the training dataset is too noisy;

- the training dataset is too small, which causes sampling noise;

- the training set includes uninformative features.

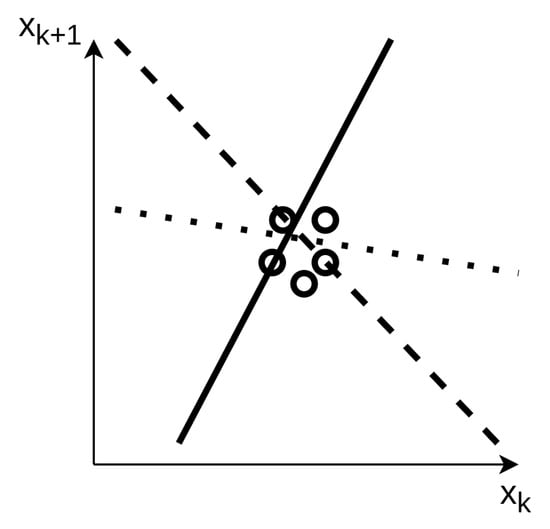

For instance, in dynamics reconstruction, a high sampling frequency of the state and action is not beneficial for the training. Suppose the first batch of data for learning is very much localized in a given portion of space, say, . Several hyper-surfaces approximate the given transition between and . For the sake of explanation, Figure 5 demonstrates the concept in a fictitious 1D model identification. The learning data are enclosed in a restricted region; hence, several curves yield a low loss function in the back-propagation algorithm, but the model is definitely not suitable for generalization. The limitation of the dataset to a bounded and restricted region is not beneficial for identification of dynamics. Especially in a preliminary learning process, this would drive the neural network to a wrong convergence point.

Figure 5.

1D dataset for incremental online learning [27].

3. Types of Artificial Neural Networks

This section provides insights on the most used architecture in the space domain. The reader is suggested to refer to [1,3] for a comprehensive outlook on the core working principles of neural networks. This survey targets the application of artificial neural networks in space systems; thus, only relevant architectures, namely, those actually investigated and implemented in spacecraft GNC systems, are detailed. A summary of the most common neural network architectures is reported in Table 4.

Table 4.

Most popular activation functions used in MLP.

3.1. Feed-Forward Networks

Feedforward neural networks (FFNN) are the oldest and most common network architecture, and they form the fundamental basis for most of the deep learning models. The term feedforward refers to the information flow that the network possesses: the network is evaluated starting from to the output . The network generates an acyclic graph. Two important design parameters to take into account when designing a neural network are:

- Depth: Typical neural networks are actually nested evaluations of different functions, commonly named input, hidden and output layers. In practical applications, low-level features of the dataset are captured by the initial layers up to high-level features learned in the subsequent layer, all the way to the output layer.

- Width: Each layer is generally a vector valued function. The size of this vector valued function, represented by the number of neurons, is the width of the model or layer.

Feedforward networks are a conceptual stepping stone on the path to recurrent networks [1,3,4].

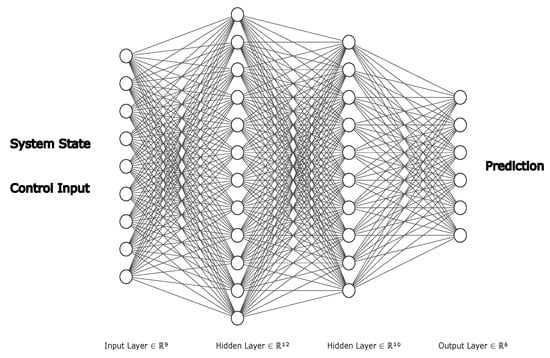

3.1.1. Multilayer Perceptron

The multilayer perceptron is the most used deep model that is developed to build an approximation of a given function [1,3,4]. The network defines the mapping between input and output and learns the optimal values of the weights that yield the best function approximation. The elementary unit of the MLP is the neuron. With reference to Figure 2, the induced local field of neuron j, which is the input of the activation function at neuron j, can be expressed as:

where is the set of neurons that share a connection with layer j; is the bias term of neuron j. The output of a neuron is the result of the application of the activation function to the local field :

The activation function (also known as unit function or transfer function) performs a non-linear transformation of the input state. The most common activation functions are reported in Table 5. Among the most commonly used, at least in spacecraft related applications, are the hyperbolic tangent and the ReLu unit. The softmax function is basically an indirect normalization: it maps a n-dimensional vector x into a normalized n-dimensional output vector. Hence, the output vector values represent probabilities for each of the input elements. The softmax function is often used in the final output layer of a network; therefore, it is generally different from the activation functions used in each hidden layer. For the sake of completeness, a perceptron is originally defined as a neuron that has the Heaviside function as the activation function. An example of an MLP is reported in Section 4. The MLP has been successfully applied in classification, regression and function approximations.

Table 5.

Summary of most common ANN architecture used in space dynamics, guidance, navigation and control domain. The training types are supervised (S), unsupervised (U) and reinforcement learning (R).

3.1.2. Radial-Basis Function Neural Network

A radial-basis-function neural network is a single-layer shallow network whose neurons are Gaussian functions. This network architecture possesses a quick learning process, which makes it suitable for online dynamics identification and reconstruction. The highlights of the mathematical expression of the RBFNN are reported here for clarity. For a generic state input , the components of the output vector of the network are:

In a compact form, the output of the network can be expressed as:

where for , is the trained weight matrix and is the vector containing the output of the radial basis functions, evaluated at the current system state. The RBF network learns to designate the input to a center, and the output layer combines the outputs of the radial basis function and weight parameters to perform classification or inference. Radial basis functions are suitable for classification, function approximation and time series prediction problems. Typically, the RBF network has a simpler structure and a much faster training process with respect to MLP, due to the inherent capability of approximating nonlinear functions using shallow architecture. As one could note, the main difference in the RBFNN with respect to the MLP is that the kernel is a nonlinear function of the information flow: in other words, the actual input to the layer is the nonlinear radial function evaluated at the input data , most commonly Gaussian ones. The most used radial-basis functions that can be used and that are found in space applications are [15,29,39]:

where r is the distance from the origin, c is the center of the RBF, is a control parameter to tune the smoothness of the basis function and is a generic bias. The number of neurons is application-dependent, and it shall be selected by trading off the training time and approximation [29], especially for incremental learning applications. The same consideration holds for the parameters , which impact the shape of the Gaussian functions. A high value for sharpens the Gaussian bell-shape, whereas a low value spreads it on the real space. On the one hand, a narrow Gaussian function increases the responsiveness of the RBF network; on the other hand, in the case of limited overlapping of the neuronal functions due to overly narrow Gaussian bells, the output of the network vanishes. Hence, ideally, the parameter is selected based on the order of magnitude of the exponential argument in the Gaussian function. The output of the neural network hidden layer, namely, the radial functions evaluation, is normalized:

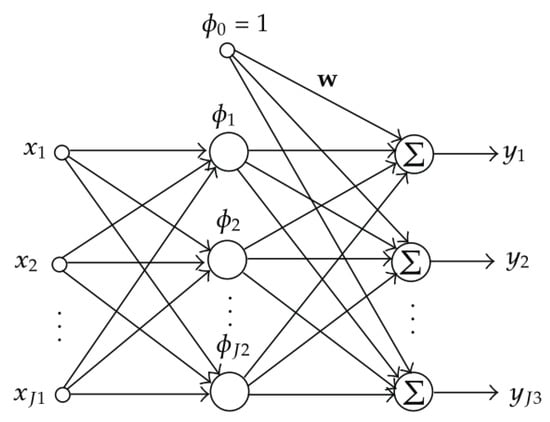

The classic RBF network presents an inherent localized characteristic, whereas the normalized RBF network exhibits good generalization properties, which decreases the curse of dimensionality that occurs with classic RBFNN [39]. A schematic of a RBFNN is reported in Figure 6.

Figure 6.

Architecture of the RBF network. The input, hidden and output layers have J1, J2 and J3 neurons, respectively [39].

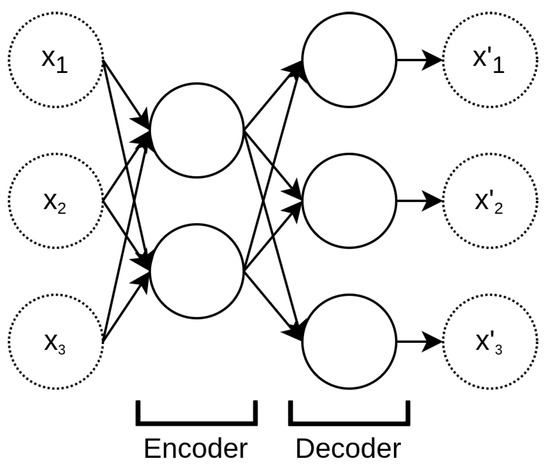

3.1.3. Autoencoders

The autoencoder is a particular feedforward neural network trained using unsupervised learning. The autoencoder learns to reproduce the unit mapping from a certain information input vector to itself. The topological constraint dictates that the number of neurons in the next layer must be lower than the previous one. Such a constraint forces the network to learn a description of the input vector that belongs to the lower-dimensional space of the subsequent layers without losing information. The amount of information lost while encoding a downsizing the input vector is measured by the fitting discrepancy between the input and the reconstructed vector [31,32,40]. The desired lower-dimensional vector concentrating the information contained in the input vector is the layer at which the network starts growing again; see Figure 7. It is important to note that the structure of an autoencoder is exactly the same as the MLP, with the additional constraint of having the same numbers of input and output nodes.

Figure 7.

Basic autoencoder structure.

The autoencoders are widely used for unsupervised applications: typically, they are used for denoising, dimensionality reduction and data representation learning.

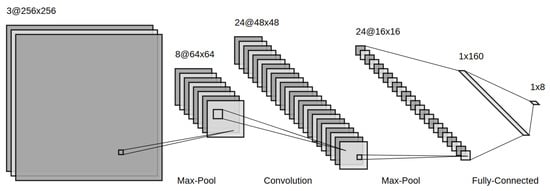

3.1.4. Convolutional Neural Networks

Feedforward networks are of extreme importance to machine learning applications in the space domain. A specialized kind of feedforward network, often referred as a stand-alone type, is the convolutional neural network (CNN) [11]. Convolutional networks are specifically tailored for image processing; for instance, CNNs are used for object recognition, image segmentation and classification. The main reason why traditional feedforward networks are not suitable for handling images is due to the fact that one image can be thought of as a large matrix array. The number of weights, or parameters, to efficiently process large two-dimensional images (or three if more image channels are involved) quickly explodes as the image resolution grows. In general, given a network of width W and depth D, the number of parameters for a fully connected network is . For instance, a low resolution image has a width of , by simply unrolling the image into a 1D array: this means that . A high resolution image, e.g., , quickly reaches . This shortcoming results in complex training procedures, very much subject to overfitting. The convolutional neural network paradigm stands for the idea of reducing the number of parameters starting from the main assumptions:

- Low-level features are local;

- Features are translationally invariant;

- High-level features are composed of low-level features.

Such assumptions allow a reduction in the number of parameters while achieving better generalization and improved scalability to large datasets. Indeed, instead of using fully connected layers, a CNN uses local connectivity between neurons; i.e., a neuron is only connected to nearby neurons in the next layer [32]. The basic components of a convolutional neural network are:

- Convolutional layer: the convolutional layer is core of the CNN architecture. The convolutional layer is built up by neurons which are not connected to every single neuron from the previous layer but only to those falling inside their receptive field. Such architecture allows the network to identify low-level features in the very first hidden layer, whereas high-level features are combined and identified at later stages in the network. A neuron’s weight can be thought of as a small image, called the filter or convolutional kernel, which is the size of the receptive field. The convolutional layer mimics the convolution operation of a convolutional kernel on the input layer to produce an output layer, often called the feature map. Typically, the neurons that belong to a given convolutional layer all share the same convolutional kernel: this is referred to as parameter sharing in the literature. For this reason, the element-wise multiplication of each neuron’s weight by its receptive field is equivalent to a pure convolution in which the kernel slides across the input layer to generate the feature map. In mathematical terms, a convolutional layer, with convolutional kernel , operating on the previous layer (being either an intermediate feature map or the input image), performs the following operation:where is the position of the output feature map.

- Activation layer: An activation function is utilized as a decision gate that aids the learning process of intricate patterns. The selection of an appropriate activation function can accelerate the learning process [11]. The most common activation functions are the same as those used for the MLP and are presented in Table 4.

- Pooling layer: The objective of a pooling layer is to sub-sample the input image or the previous layer in order to reduce the computational load, the memory usage and the number of parameters, which prevents overfitting while training [11,33]. The pooling layer works exactly with the same principle of the receptive field. However, a pooling neuron has no weights; hence, it aggregates the inputs by calculating the maximum or the average within the receptive field as output.

- Fully-connected layer: Similarly to MLP as for traditional CNN architectures, a fully connected layer is often added right before the output layer to further capture non-linear relationships of the input features [11,32]. The same considerations discussed for MLP hold for CNN fully connected layers.

An example of a CNN architecture is shown in Figure 8.

Figure 8.

Example CNN architecture with convolutional, max-pooling and fully connected layers.

3.2. Recurrent Neural Networks

Recurrent neural networks comprise all the architectures that present at least one feedback loop in their layer interactions [1]. A subdivision that is seldom used is between finite and infinite impulse recurrent networks. The former is given by a directed acyclic graph (DAG) that can be unrolled in time and replaced with a feedforward neural network. The latter is a directed cyclic graph (DCG) that cannot be unrolled and replaced similarly [32]. Recurrent neural networks have the capability of handling time-series data efficiently. The connections between neurons form a directed graph, which allows internal state memory. This enables the network to exhibit temporal dynamic behaviors.

3.2.1. Layer-Recurrent Neural Network

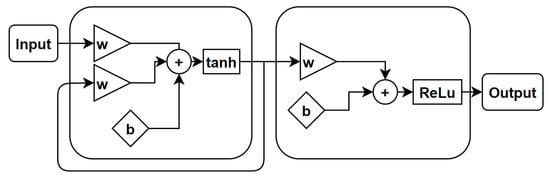

The core of the layer-recurrent neural network (LRNN) is similar to that of the standard MLP [1]. This means that the same considerations for model depth, width and activation functions hold in the same manner. The only addition is that in the LRNN, there is a feedback loop with a single delay around each layer of the network, except for the last layer. A schematic of the LRNN is sketched in Figure 9.

Figure 9.

Schematic of a layer-recurrent neural network. The feedback loop is a tap-delayed signal rout.

3.2.2. Nonlinear Autoregressive Exogenous Model

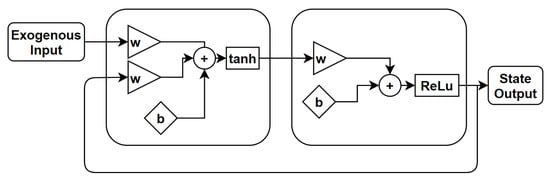

The nonlinear autoregressive exogenous model is an extension of the LRNN that uses the feedback coming from the output layer [41]. The LRNN owns dynamics only at the input layer. The nonlinear autoregressive network with exogenous inputs (NARX) is a recurrent dynamic network with feedback connections enclosing several layers of the network. The NARX model is based on the linear ARX model, which is commonly used in time-series modeling. The defining equation for the NARX model is

where y is the network output and u is the exogenous input, as shown in Figure 10. Basically, it means that the next value of the dependent output signal y is regressed on previous values of the output signal and previous values of an independent (exogenous) input signal. It is important to remark that, for a one tap-delay NARX, the defining equation takes the form of an autonomous dynamical system.

Figure 10.

Schematic of a nonlinear autoregressive exogenous model. The feedback loop is a tap-delayed signal rout [27].

3.2.3. Hopfield Neural Network

The formulation of the network was due to Hopfield [42], but the formulation by Abe [43] is reportedly the most suited for combinatorial optimization problems [44], which are of great interest in the space domain. For this reason, here the most recent architecture is reported. A schematic of the network architecture is shown in Figure 11.

Figure 11.

The Hopfield neural network structure [44].

In synthesis, the dynamics of the i-th out of N neurons is written as:

where is the total input of the i-th neuron; and are parameters corresponding, respectively, to the synaptic efficiency associated with the connection from neuron j to neuron i and the bias of the neuron i. The term is basically the equivalent of the activation function:

where is a user-defined coefficient, and is the user-defined amplitude of the activation function. The recurrent structure of the network entails the dynamics of the neurons; hence, it would be more correct to refer to and as functions of time or any other independent variable. An important property of the network, which will be further discussed in the application for parameter identification, is that the Lyapunov stability theorem can be used to guarantee its stability. Indeed, since a Lyapunov function exists, the only possible long-term behavior of the neurons is to asymptotically approach a point that belongs to the set of fixed points, meaning where , being the Lyapunov function of the system, in the form:

where the right-hand term is expressed in a compact form, with , the vector of s neuron states, and , the bias vector. A remarkable property of the network is that the trajectories always remain within the hypercube as long as the initial values belong to the hypercube too [44,45]. For implementation purposes, the discrete version of the HNN is employed, as was done in [44,46].

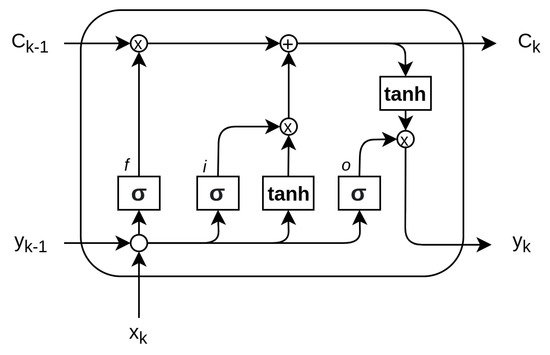

3.2.4. Long Short-Term Memory

The long-short term memory network is a type of recurrent neural network widely used for making predictions based on times series data. LSTM, first proposed by Hochreiter [47], is a powerful extension of the standard RNN architecture because it solves the issue of vanishing gradients, which often occur in network training. In general, the repeating module in a standard RNN contains a single layer. This means that if the RNN is unrolled, you can replicate the recurrent architecture by juxtaposing a single layer of nuclei. LSTMs can also be unrolled, but the repeating module owns four interacting layers or gates. The basic LSTM architecture is shown in Figure 12.

Figure 12.

The core components of the LSTM are the cell (C), the input gate (i), the output gate (o) and the forget gate (f) [47].

The core idea is that the cell state lets the information flow: it is modified by the three gates, composed of a sigmoid neural net layer and a point-wise multiplication operation. The sigmoid layer of each gate outputs a value that defines how much of the core information is let through. The basic components of the LSTM network are summarized here:

- Cell state (C): The cell state is the core element. It conveys information through different time steps. It is modified by linear interactions with the gates.

- Forget gate (f): The forget gate is used to decide which information to let through. It looks at the input and output of the previous step and yields a number for each element of the cell state. In compact form:

- Input gate (i): The input gate is used to decide what piece of information to include in the cell state. The sigmoid layer is used to decide on which value to update, whereas the describes the entities for modification, namely, the values. It then generates a new estimate for the cell state :

- Memory gate: The memory gate multiplies the old cell state with the output of the forget gate and adds it to the output of the input gate. Often, the memory gate is not reported as a stand-alone gate, due to the fact that it represents a modification of the cell state itself, without a proper sigmoid layer:

- Output gate: The output gate is the final step that delivers the actual output of the network , a filtered version of the cell state. The layer operations read:

In contrast to deep feedforward neural networks, having a recurrent architecture, LSTMs contain feedback connections. Moreover, LSTMs are well suited not only for processing single data points, such as input vectors, but efficiently and effectively handle sequences of data. For this reason, LSTMs are particularly useful for analyzing temporal series and recurrent patterns.

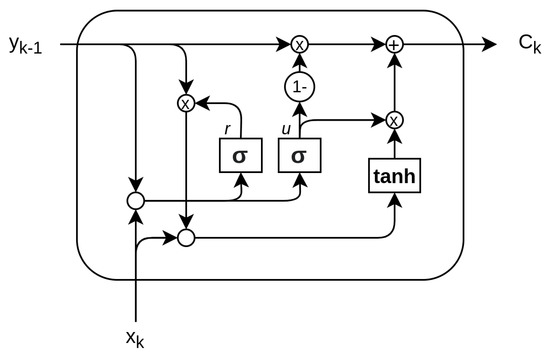

3.2.5. Gated Recurrent Unit

The gated recurrent unit (GRU) was proposed by Cho [48] to make each recurrent unit adaptively capture dependencies of different time scales. Similarly to the LSTM unit, the GRU has gating units that modulate the flow of information inside the unit, but without having a separate memory cells [48,49]. The basic components of GRU share similarities with LSTM. Traditionally, different names are used to identify the gates:

- Update gate (u): The update gate defines how much the unit updates its value or content. It is a simple layer that performs:

- Reset gate r: The reset gate effectively makes the unit process the input sequence, allowing it to forget the previously computed state:

The output of the network is calculated through a two-step update, entailing a candidate update activation calculated in the activation h layer and the output :

A schematic of GRU network is reported in Figure 13.

Figure 13.

The core components of the GRU are the reset gate (r) and the update gate (u) coupled with the activation output composed of the layer.

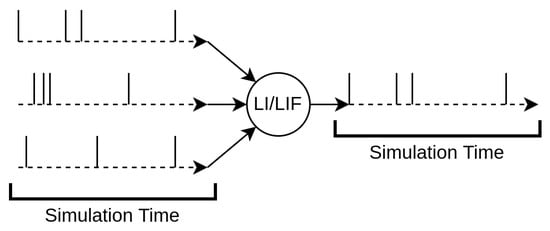

3.3. Spiking Neural Networks

Spiking neural networks (SNN) are becoming increasingly interesting to the space domain due to their low-power and energy efficiency. Indeed, small satellite missions entail low-computational-power devices and in general lower system power budgets. For this reason, SNNs represent a promising candidate for the implementation of neural-based algorithms used for many machine learning applications: among those, the scene classification task is of primary importance for the space community. SNNs are the third generation of artificial neural networks (ANNs), where each neuron in the network uses discrete spikes to communicate in an event-based manner. SNNs have the potential advantage of achieving better energy efficiency than their ANN counterparts. While generally a loss of accuracy in SNN models is reported, new algorithms and training techniques can help with closing the gap in accuracy performance while keeping the low-energy profile. Spiking neural networks (SNNs) are inspired by information processing in biology. The main difference is that neurons in ANNs are mostly non-linear but continuous function evaluations that operate synchronously. On the other hand, biological neurons employ asynchronous spikes that signal the occurrence of some characteristic events by digital and temporally precise action potentials. In recent years, researchers from the domains of machine learning, computational neuroscience, neuromorphic engineering and embedded systems design have tried to bridge the gap between the big success of DNNs in AI applications and the promise of spiking neural networks (SNNs) [50,51,52]. The large spike sparsity and simple synaptic operations (SOPs) in the network enable SNNs to outperform ANNs in terms of energy efficiency. Nevertheless, the accuracy performance, especially in complex classification tasks, is still superior for deep ANNs. In the space domain, the SNNs are at the earliest stage of research: mission designers strive to create algorithms characterized by great computational efficiency and low power applications; hence, the SNNs represent an interesting opportunity that ought to be mentioned in this review, although they are not yet applied to guidance, navigation and control applications. Finally, SNNs on neuromorphic hardware exhibit favorable properties such as low power consumption, fast inference and event-driven information processing. This makes them interesting candidates for the efficient implementation of deep neural networks particularly utilized in image classification.

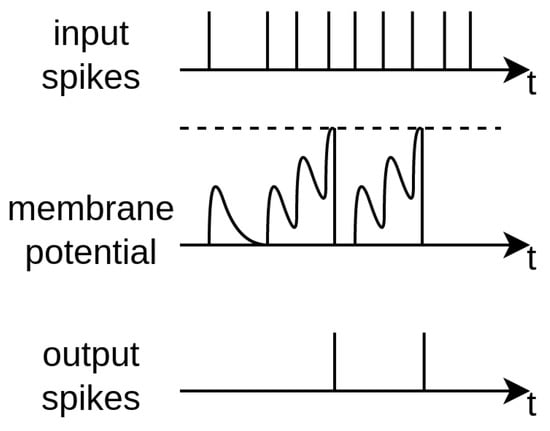

The most peculiar feature of SNNs is that the neurons possess temporal dynamics: typically, an electrical analogy is used to describe their behavior. Each neuron has a voltage potential that builds up depending on the input current that it receives. The input current is generally triggered by the spikes the neuron receives. A schematic of the neuron parameters can be seen in Figure 14 and Figure 15. There are numerous neural architectures that combine these notions into a set of mathematical equations; nevertheless, the two most common alternatives are the integrate-and-fire neuron and the leaky-integrate-and-fire neuron.

Figure 14.

Architecture of a simple spiking neuron. Spikes are received as inputs, which are then either integrated or summed depending on the neuron model. The output spikes are generated when the internal state of the neuron reaches a given threshold.

Figure 15.

An illustrative schematic depicting the membrane potential reaching the threshold, which generates output spikes [51].

3.3.1. Types of Neurons

- Integrate and fire (IF): The IF neuron model assumes that spike initiation is governed by a voltage threshold. When the synaptic membrane reaches and exceeds a certain threshold, the neuron fires a spike and the membrane is set back to the resting voltage . In mathematical terms, its simplest form reads:

- Leaky integrate and fire (LIF): The LIF neuron is a slightly modified version of the IF neuron model. Indeed, it entails an exponential decrease in membrane potential when not excited. The membrane charges and discharges exponentially in response to injected current. The differential equation governing such behavior can be written as:where is the leak conductance and V is again the membrane potential with respect to the rest value.

As mentioned, the list is not extensive, and the reader is suggested to refer to [53] for a comprehensive review of neuron models.

3.3.2. Coding Schemes

The transition between dense data and sparse spiking patterns requires a coding mechanism for input coding and output decoding. For what concerns the input coding, the data can be transformed from dense to sparse spikes in different ways, among which the most used are:

- Rate coding: it converts the input intensity into a firing rate or spike count;

- Temporal (or latency) coding: it converts the input intensity to a spike time or relative spike time.

Similarly, in output decoding, the data can be transformed from sparse spikes to network output (such as classification class) in different ways, among which the most used are:

- Rate coding: it selects the output neuron with the highest firing rate, or spike count, as the predicted class;

- Temporal (or latency) coding: it selects the output neuron that fires first, or before a given threshold time, as the predicted class

Roughly speaking, the current literature agrees on specific advantages for both the coding techniques. On one hand, the rate coding is more error tolerant given the reduced sparsity of the neuron activation. Moreover, the accuracy and learning convergence have shown superior results in rate-based applications so far. On the other hand, given the inherent sparsity of the encoding-decoding scheme, latency-based approaches tend to outperform the rate-based architectures in inference, training speed and, above all, power consumption.

4. Applications in Space

This section provides an overview of the space domain tasks that are currently being investigated by the research community. The paper highlights the characteristics that are peculiar to GNC algorithms, referencing other domains’ literature when needed. In addition, a short paragraph on the challenges of dataset availability and data validation is presented.

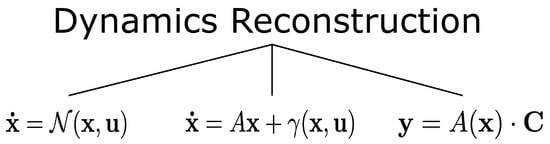

4.1. Identification of Neural Spacecraft Dynamics

The capability of using an ANN to approximate the underlying dynamics of a spacecraft is used to enhance the on-board model accuracy and flexibility to provide the spacecraft with a higher degree of autonomy. There are different approaches in the literature that could be adopted to tackle the system identification and dynamics reconstruction task, as show in Figure 16. In the following section, the three analyzed methods are described; recall the universal approximation theorem reported in Section 2.

Figure 16.

Identification of system dynamics: different approaches to reconstructing system dynamical behavior.

4.1.1. Fully Neural Dynamics Learning

The dynamical model of a system delivers the derivative of the system state, given the actual system state and external input. Such an input–output structure can be fully approximated by an artificial neural network model. The dynamics are entirely encapsulated in the weights and biases of the network . The neural network is stimulated by the actual state and the external output. In turn, the time derivative of the state, or simply the system state at the next discretization step, is yielded as output, as shown in Figure 17:

Figure 17.

Dynamical reconstruction as a neural network model.

The method relies on the universal approximation theorem, since it is based on the assumption that there exists an ANN that approximates the dynamical function with a predefined approximation error. The training set is simply composed of input–output pairs, where the input is a stacked vector of system states and control vectors.

The result is that the full dynamics is encapsulated into a neural network model that can be used to generate prediction of future states [16,54]. The dynamics reconstruction based solely on artificial neural networks largely benefits by the employment of recurrent neural networks, rather than simpler feedforward networks. Indeed, literature, although rather poor, employ Recurrent Neural Networks to perform the task [25,27,54]. The recurrent architecture owns an inherently more complex structure but maintains a brief evaluation time make it a suitable architecture for on-board applications. As mentioned, Recurrent Networks have the capability of handling time-series data efficiently because the connections between neurons form a directed graph, which allows an internal state memory. This enables the network to exhibit temporal dynamic behaviors. When dealing with dynamics identification, it is crucial to exploit the temporal evolution of the states; hence, RNN shows superior performances with respect to MLP. In [16], the different propagation of the feedforward (MLP) and recurrent neural networks, initialized equally and trained in the same scenario, are compared. The prediction position and velocity accuracy, compared with the analytical nonlinear J2-perturbed model, demonstrates the superior performance in dynamics reconstruction by employing recurrent architectures.

The methodology reported so far relied on FFNN or RNN that are based on the function approximation of the state-space representation of the dynamics. They do not explicitly reconstruct the definition of the Markov problem. An alternative is to use a predictive autoencoder (AEs), a modified version of traditional autoencoders, which is able to learn a nonlinear model in a state-space representation from a dataset comprising input–output tuples [40].

A tentative qualitative summary of the most used ANN architecture for system identification is reported in Table 6. The learning time represents the effectiveness of the incremental learning performed on-board; hence, it is estimated with reference to the characteristic time of the motion, for instance, the orbital period.

Table 6.

Summary of typical and most successful neural networks for fully neural dynamics learning.

4.1.2. Dynamical Uncertainties and Disturbance Reconstruction

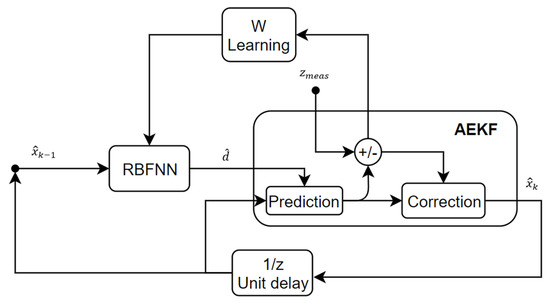

The second method uses the capability of the artificial neural networks to approximate an unknown function. In particular, it is wise to exploit all analytical knowledge we may have of the environment. Nevertheless, most of the time, the analytical models encompass linearization and do not model perturbations, either because they are analytically complex or are simply unknown. In view of future space missions involving other bodies, such as asteroids that produce gravitational perturbations which are highly uncertain and are subjected to unknown physical influences, the capability of ANNs to estimate the states of the satellites can also be used to estimate the uncertainties that could be analyzed further while understanding the physical phenomena. To improve the performance of the navigation or the control, neural networks are employed to compensate for the effects of the modeling error, external disturbance and nonlinearities. Most of the researchers focused their efforts on coupling the neural network with a controller to take into account modeling errors, as found in [34,35,38,59]. In this field, the ANN is used to compensate for external disturbance [35], or to compensate for model inversion [59] or spacecraft uncertain properties [34]. Nevertheless, besides eliminating spacecraft uncertainties used in the controller, the system identification can be used also to enhance disturbance estimation and navigation overall, as in [29,30]. In [29], a radial-basis-function-neural-network-aided adaptive extended Kalman filter (RBFNN-AEKF) for state and disturbance estimation was developed. The neural network estimates the unmodeled terms which are fed into the EKF as an additional term to the state and covariance prediction step. The approach proposed by Harl et al. [30], the modified state observer, allows for the estimation of uncertainties in nonlinear dynamics, and in addition, providing estimates of the system states. The observer structure contains neural networks whose outputs are the uncertainties in the system. In brief, the methods can be summarized into three key-points:

- The ANN is used to learn and output an estimate of the disturbance or mismodeled terms that is used in the guidance, navigation and control to deliver a better state, disturbance or error estimate.

- The ANN learning is fully performed incrementally online. This means that no prior knowledge or learning has to be performed beforehand. This dramatically increases the flexibility of the approach.

- The ANN learning does not replace the GNC system, but it rather enhances it and makes it more robust.

Regardless of the application, the goal of this dynamics reconstruction approach is to reconstruct a perturbation term, whatever the source is. It is remarkable that one could derive an underlying architecture that is common to all the mentioned researches. The idea is to have an observer and a supervised learning method that makes use of the Lyapunov stability theorem to guarantee convergence of the estimation. In general, the simplest form of each algorithm can be developed as follows. Let us assume the actual system dynamics are described by the following set of non-linear differential equations:

where d is the external disturbance term. The actual system dynamics can be rewritten as:

where the term d captures all the non-linearities together with the disturbances external to the system, namely, . The state observer can be constructed as follows:

where is estimated using the ANN system that we are deploying and is the observer gain matrix. The observer error dynamics can be derived as:

The neural network learning, meaning the weights update rule, generally relies on the observer error dynamics [29,30,59], targeting convergence and stability of the weights matrix and the error e. Let us assume that we want to use radial-basis-function neural network: when invoking the universal approximation theorem for neural networks, we can assume there exists an ideal approximation of the disturbance term d:

where is a bounded arbitrary approximation error. Consequently, the error in estimation can be written as:

By adding and subtracting the term and performing few mathematical manipulations, Equation (39) can be expressed as:

where and the bounded term . The aim of the learning rule is to drive the dynamics error to zero, and also to force the weights to converge to the ideal ones. Namely:

If we reason for a single channel, i.e., the single state approach, by introducing i = 1:6, the following Lyapunov function can be constructed to derive the stable learning rule:

In order to fulfill the hypothesis of the Lyapunov stability theorem, the weights update rule is derived to drive the derivative in Equation (42) . Recalling from Equation (40) that , the expression for a single-state weight update rule:

In compact form, we can express the weights matrix update rule:

The reader may want to modify the presented core algorithm into a more sophisticated architecture. Nevertheless, the literature currently adopts this as a consolidated approach. A tentative qualitative summary of the most used ANN architecture for a disturbance dynamics term reconstruction is reported in Table 7. As previously mentioned, the learning time represents the effectiveness of the incremental learning performed on-board; hence, it is estimated with reference to the characteristic time of the motion, for instance, the orbital period.

Table 7.

Summary of typical and most successful neural networks for dynamics uncertainty learning.

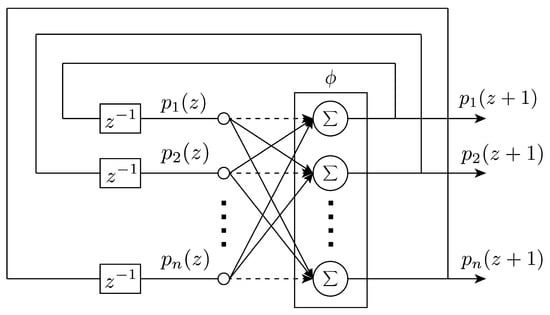

4.1.3. System Identification through Reconstruction of Parameters

The third method has been developed under the framework of parameter reconstruction. Basically, the artificial neural network is employed to refine the uncertain parameters of a given dynamical model. This method is particularly suitable when the uncertain environment influences primal system constants (e.g., inertia parameters, spherical harmonics and drag coefficients). Differently from the disturbance approximation, the analytical framework is here known, and only characteristic values of system parameters are reconstructed. Moreover, given the physical knowledge of the parameters to be reconstructed, the method has a very promising scientific outcome. For instance, the gravity expansion of asteroids and planets can be approximated online while flying, delivering a rough shape reconstruction of the body. Nevertheless, many applications overlap with the disturbance reconstruction approach, in which the disturbance function to approximate is a constant parameter, as assumed in many estimation algorithms, such as the well-established Kalman Filter. For this reason, many researchers used MLP neural networks to carry out the task. For instance, Chu et al. [36] proposed a deep network MLP to estimate inertia parameters. The angular rates and control torques of combined spacecraft are set as the input of a deep neural network model, and conversely, the inertia tensor is then set as the output. Training the MLP model refers to the process of extracting a higher abstract feature, i.e., the inertia tensor [36,37]. Another approach to solve the parametric reconstruction is to use a recurrent neural network. In particular, Hopfield neural networks are investigated in [44,45,61]. The core of the algorithm is the following: if the model is reformulated into linear-in-parameter form, the identification problem can be reformulated as an optimization problem:

In particular, when defining a general prediction error , the resulting combinatorial optimization problem is [44]:

where typically correspond to measurements, is the linear-in-parameter matrix and is the estimated parameter vector [44]. Other examples entail the usage of BPANN, another name for MLP, for the Earth or other bodies gravity field approximation, as presented in [62,63]. A tentative qualitative summary of the most used ANN architecture for parametric dynamics reconstruction is reported in Table 8.

Table 8.

Summary of typical and most successful neural networks for parametric learning. The learning times for the MLP applications are not available.

4.2. Convolutional Neural Networks for Vision-Based Navigation

Convolutional neural networks are the best candidates with which to process visual data. For this reason, CNNs have quickly become of great interest to vision-based navigation designers. In particular, CNNs have been employed in end-to-end or hybrid approaches for spacecraft navigation, mainly in two scenarios: proximity operations during a close approach with uncooperative targets and planetary or asteroid landing. The algorithm architecture typically relies on a CNN that either segments or classifies images, whose output is fed to the navigation system, composed of estimation and sensor fusion algorithms.

4.2.1. CNN for Pose Estimation

CNNs have been recently becoming a promising solution for the pose estimation and initialization of target spacecraft. Most of the algorithms used in spacecraft GNC are hybrid approaches and make use of artificial neural networks only partially. Nevertheless, end-to-end methods are currently being explored. A targeted review of CNNs for pose estimation is reported in [64]. Generally, in a CNN-based method, a pre-training is necessary to develop a model capable of performing regression or classification to estimate the pose. The monocular image is then fed to such a model online to retrieve the pose. Depending on the selected architecture adopted to solve for the relative pose, these methods can either rely on a wire-frame 3D model of the target spacecraft or solely on the 2D images used in the training, and hence they can either be referred to as non-model based or model-based [64]. One solution to the problem of satellite pose estimation was provided by [65]. It is a typical approach for model-based hybrid architecture, exploiting object detection networks and requiring 3D models of the spacecraft. It consists of a monocular pose estimation technique composed of different steps:

- An object detection network is used to identify a bounding box surrounding the target spacecraft. Typical CNN architectures are HRNet and Faster RCNN.

- A second regressive network is used to predict the position of the landmark features utilized during training.

- A traditional PnP problem was solved using 2D–3D correspondences to retrieve the camera pose.

Another approach is the end-to-end pose estimation architecture presented in [66]. The approach entails a feature extraction method using a pre-trained CNN (modified ResNet 50) backbone. CNN features are fed into a sub-sampling convolution for compression. The generated intermediate feature vectors are then used as input to two separate branches: the first branch uses a shallow MLP that regresses the 3D locations of the features. The second branch uses probabilistic orientation soft classification to generate an orientation estimate. Sharma et al. [67] proposed a three-branch architecture. The first branch of the CNN bootstraps a state-of-the-art object detection algorithm to detect a 2D bounding box around the target spacecraft in the input image. The region inside the 2D bounding box is then used by the other two branches of the CNN to determine the relative attitude by initially classifying the input region into discrete coarse attitude labels before regressing to a finer estimate. The SPN method then uses a novel Gauss–Newton algorithm to estimate the relative position by using the constraints imposed by the detected 2D bounding box and the estimated relative attitude. Recently, the approach presented in [68] replicates the three-module structure entailing the object detection, keypoint regression and single image pose estimation in three different neural networks. The first module uses an object detection network to generate a 2D bounding box. Such task is executed by a CNN YOLOv3 with a MobileNetv2 backbone, which are well-established CNN architectures. The bounding box output is used in the second module to extract a region of interest from the input image. The cropped image is fed to a keypoint regression network (KRN) which regresses locations of known surface keypoints of the target. Eventually, a traditional PnP solver is used to retrieve the single-image pose estimation. Given the usage of PnP algorithms, the estimated 2D keypoints require the corresponding 3D locations, meaning that a 3D model of the spacecraft is needed. Nevertheless, a potential solution to the model unavailability is the 3D keypoints recovery preparatory step. Finally, Pasqualetto et al. [69] investigated the potentials of using a hourglass neural network to extract the corners of a target spacecraft prior to the pose estimation. In this method, the output of neural network is a set of so called heatmaps around the features used in the offline training. The coordinates of each heatmap’s peak intensity characterize the predicted feature location; the intensity indicates the confidence of locating the corresponding keypoint at this position.

4.2.2. CNNs for Planetary and Asteroid Landing

The use of AI in scenarios for the moon or planetary landing is still at an early stage, and few works exist in the literature concerning image-based navigation with AI. The presented solutions are all based on a supervised learning approach. Images with different lighting and surface viewing conditions are used for training. One idea is to substitute classical IP algorithms, providing a first estimate of the lander state (e.g., pose or altitude and position), which can be later refined by means of a navigation filter. Similar approaches have been implemented in the robotics field, where by pre-training on a large dataset, the methods estimate the absolute camera pose in a scene. In a landing scenario, the knowledge of the landing area can be exploited, if available. Therefore, the convolutional neural network can be trained with an appropriate dataset of synthetic images of the landing area at different relative poses and in different illumination conditions. The CNN is used to extract features that are then passed to a fully connected layer, which performs a regression and directly outputs the absolute camera pose. The regression task can be executed by an LSTM. The CNN-LSTM has proven excellent performance and is very well developed for image processing and model prediction. The use of a recurrent network brings the advantage of also retrieving time-series information. This can allow also estimating the velocity of the lander. According to an extensive review of the applications, one can make a general distinction between two macro-methods:

- Hybrid approaches: they utilize CNNs for processing images, extracting features and classifying or regressing the state at the initial condition, but they are always coupled with traditional image processing or a navigation algorithm (e.g., PnP and feature tracking).

- End-to-end approaches: they are developed to complete the whole visual odometry pipeline, from the image input to the state estimate output.

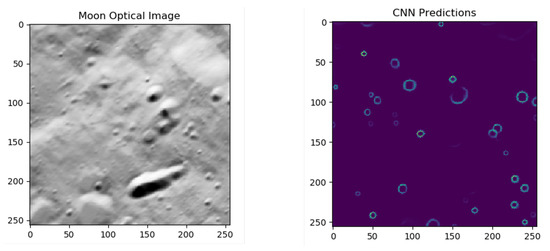

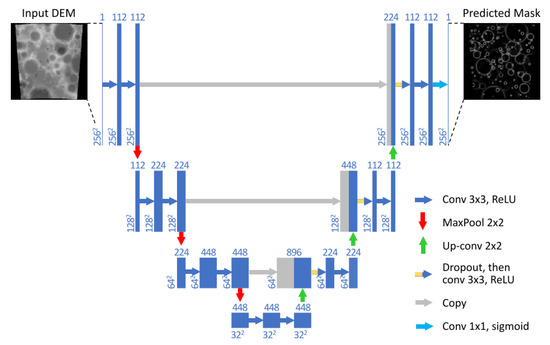

As an interesting example, although not directly applied to space systems, the technique presented in [70] relies on end-to-end learning for estimating the pose of a UAV during landing. In particular, the global position and orientation of the robot are the final output of the AI architecture. The AI system processes two kinds of inputs: images and measurements from an IMU. The architecture comprises a CNN that takes as input streams of images and acts as a feature extractor. Such a CNN is built starting from ResNet18, pre-trained on the ImageNet dataset. An LSTM processes the IMU measurements, which are available at a higher frequency than images. An intermediate fully connected layer fuses the inertial and visual features coming from the CNN and the LSTM. Then, such vector is passed to the core LSTM, along with the previous hidden state, allowing one to model the dynamics and connections between sequences of features. Finally, a fully connected layer maps the feature to the desired pose output. Similarly, the architecture proposed by Furfaro et al. [25] comprises a CNN and an LSTM. The final output of the AI system is a thrust profile to control the spacecraft landing. The CNN’s input consists of three subsequent static images. This choice is motivated by the need of retrieving some dynamical information [25]. The whole visual odometry pipeline has been learned completely in the work by Wang [71]. The approach proposed in [71] exploits a deep learning system based on a monocular visual odometry (VO) algorithm to estimate poses from raw RGB images. Since it is trained and deployed in an end-to-end manner, it infers poses directly from a sequence of raw RGB images without adopting any module in the conventional VO pipeline. The AI system comprises the convolutional neural network that automatically learns effective feature representation for the visual odometry problem, but also a recurrent network, which implicitly models sequential dynamics and relations. The final output is the absolute pose of the vehicle. This architecture differs from the one presented in [70], because here two consecutive frames are stacked together and only images are considered as inputs. A hybrid approach specifically developed for lunar landing was presented by [72] and re-adapted by [14,73,74]. The approach is based on the work by Silburt et al. [75]: a deep learning approach was used to identify lunar craters; in particular, a Unet-CNN, shown in Figure 18, was used for input images’ segmentation, as shown in Figure 19. Some traditional navigation strategies are based on lunar crater matching; therefore, an AI method was investigated as part of a hybrid approach, as in [72,76,77], where a RANSAC-based nearest neighbor algorithm was used for matching the detected craters to database ones. The advantage of such a hybrid approach is to combine a crater detection method that is robust to illumination conditions and the reliability of a traditional pose estimation pipeline. An example of an input image and an output mask is shown in Figure 18. This is a powerful technique for absolute navigation where database objects can be used for learning. The state estimation requires a navigation filter or feature post-processing, such as computation of an essential matrix or retrieval of relative vectors, to complete the navigation pipeline. A qualitative summary of the most promising methods is reported in Table 9.

Figure 18.

Input image and output mask from the CNN [14].

Figure 19.

Convolutional neural network (CNN) architecture, based on Unet [75,79]. Boxes represent cross-sections of sets of square feature maps.

Table 9.

Summary of neural-aided methods for optical navigation for planetary and lunar landing. IP stands for image processing and represents the necessity for an hybrid method to be implemented (e.g., matching and PnP).

A very interesting application of an ANN to planetary landing is the autonomous collision avoidance presented by Lunghi et al. [78]. An MLP is fed with an image of the landing area. The neural system outputs a hazard map, which is then exploited to select the best target, in terms of safety, guidance constraints and scientific interest. The ANN’s generalization properties allow the system to correctly operate also in conditions not explicitly considered during calibration.