Abstract

Explosive ordnance disposal (EOD) robots can replace humans working in hazardous environments and thereby protect personnel, so they have been widely developed and deployed. However, existing EOD robots adapt poorly to their environment: they typically serve a single function, act slowly, and have a limited field of view. To overcome these shortcomings and address the uncertainty of bomb disposal on the firing range, we have developed an intelligent bomb disposal system that integrates autonomous unmanned aerial vehicle (UAV) navigation, deep learning, and other technologies. On the hardware side, we design an actuator consisting of a winch device and a mechanical gripper, mounted under a six-rotor UAV, to grasp unexploded ordnance (UXO). An integrated dual-vision Pan-Tilt-Zoom (PTZ) pod monitors and photographs the site where live munitions are thrown. On the software side, the ground station uses the YOLOv5 algorithm to detect grenades in real-time video and accurately locate their landing points. The operator remotely controls the UAV to grasp, transfer, and destroy grenades. Explosive disposal experiments show that the system is feasible, with high recognition accuracy and strong maneuverability. Compared with traditional explosive disposal, the system gives decision-makers accurate information on the location of the grenade while reducing the risk of casualties during disposal.

1. Introduction

As practical military training continues to intensify, proper grenade throwing has become essential [1]. Because of variations in manufacturing, jolting during storage and transportation, and mishandling during training, a live grenade is not guaranteed to explode after it is thrown, and a dud becomes a UXO. The risk is especially high when training new recruits, who are unfamiliar with the throwing procedure and are often tense and fearful. The probability of UXO in actual training therefore cannot be underestimated, and a UXO produced in training can explode at any time, which not only disrupts training but also seriously threatens personal safety [2].

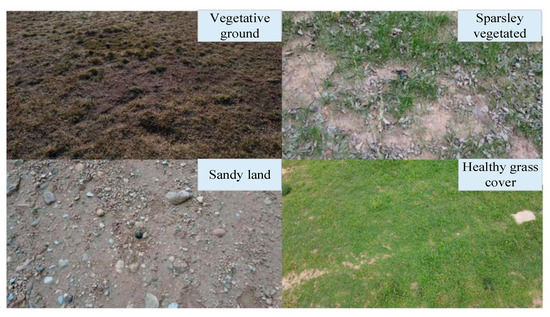

Currently, most unexploded ordnance detection and disposal is performed manually or with EOD vehicles. The ordnance is destroyed, defused, or relocated depending on the on-site situation and the risk involved [3]. There are two main forms of explosive disposal: direct detonation and rendering the explosive ineffective. Both are slow and can leave the ordnance incompletely detonated. Because grenades come in many types and forms, there is also great uncertainty about the disposal scheme, the required equipment, and the key technology for handling the explosives. Live-ammunition training currently uses mostly the all-plastic hand grenade without steel balls, which is small and dark in color. The ground of the grenade-throwing area is uneven, and its color resembles that of the grenade. Moreover, the training area contains a large number of explosive fragments, which further complicates the search for unexploded ordnance. The area surrounding the grenade throwing site is shown in Figure 1.

Figure 1. Environment of grenade throwing site.

In recent years, computer vision and UAV technology have become increasingly integrated, removing basic technical barriers to UAV perception, secondary development, and application [4,5]. To meet the practical needs of explosive search and disposal, we have developed an intelligent EOD system based on a six-rotor UAV and deep learning. The system can detect, recognize, and seize unexploded ordnance without personnel approaching it. The UAV surveys and films the site with a high-resolution camera. The onboard transmitter sends the captured video as a digital signal, and the ground station runs detection on the received video to accurately locate the UXO. A small winch device and a mechanical gripper are installed at the base of the six-rotor UAV. The operator remotely controls the system to grab, transport, and destroy the UXO. Throughout removal and destruction, personnel remain well outside the kill radius of the explosive, which significantly reduces casualties and is important for army security and stability. Compared with conventional EOD robots, the system offers accurate target recognition, rapid action, high mobility, and ease of operation. It overcomes the shortcomings of traditional land EOD robots, such as slow action, limited field of view, and poor adaptability to the environment. The main contributions of this paper are as follows:

(1) An intelligent EOD system based on a six-rotor UAV was developed. Compared with the traditional blasting method, it reduces the involvement of personnel, shortens the blasting time, avoids direct contact between personnel and UXOs, and makes the blasting work safer and more efficient.

(2) YOLOv5, a deep neural network detector, is adopted as the target recognition method. It recognizes UXOs automatically during patrol, completes the recognition task accurately and efficiently, and provides decision-makers in the field with accurate information about the location of the UXOs during difficult EOD operations.

(3) Based on the UAV safety and security requirements, a mechanical gripper with a corrugated inner edge was developed to firmly grip UXOs, and a winch device was developed to complete the lifting of the mechanical gripper and ensure that the UAV is outside the kill radius of the UXO’s explosion during the blast removal process.

2. Related Work

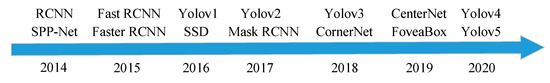

In some military shooting ranges or training grounds, weapon performance tests are often carried out, and UXOs and various projectile bodies inevitably appear, which seriously threatens the safety of personnel. Existing explosive removal and transfer work is carried out as two separate processes. Professionals wearing special EOD suits carry out detection in the explosion area with a hand-held detector, and multi-dimensional scanning must be repeated many times during detection. This method of detection and transfer has two defects: (1) during detection and transfer, professionals must wear explosion-proof suits weighing about 10 kg, yet their safety still cannot be fully guaranteed, which easily causes great psychological pressure; (2) the process is not flexible enough, because transfer can begin only after the detection personnel leave, resulting in a long turnaround time for EOD. If special tools can recognize UXOs automatically and machines can grasp them for centralized destruction, the danger can be effectively reduced or eliminated. Most general-purpose EOD robots are land robots, which are strongly affected by the harsh environment of the shooting range and can easily overturn [6,7,8,9]. In addition, EOD vehicles move with difficulty in rugged and mountainous areas with complex terrain. If a UAV can be used to search for UXOs in EOD work, it can ensure personnel safety and handle emergencies efficiently. UAVs offer flexible mobility and quick inspection, which can greatly improve the efficiency of EOD. Using UAVs to search for UXOs and other dangerous goods can save considerable cost and better protect personnel [10,11,12,13,14].

At present, the common target detection methods used on UAVs fall into two groups: two-stage and single-stage target detection algorithms [15,16,17]. Faster R-CNN is a representative two-stage algorithm. First, a convolutional neural network extracts features from the input image, and a region proposal network (RPN) generates candidate boxes efficiently, which helps balance the proportion of positive and negative samples. The candidate boxes are then classified, and their locations are regressed [18,19,20]. The 'you only look once' (YOLO) series is representative of single-stage algorithms. This kind of model skips candidate-box extraction, predicts target location and class directly in an end-to-end manner, and turns target detection into a regression problem solved by a single network, which fundamentally improves detection speed [21,22,23,24,25]. Although two-stage algorithms are highly accurate, they are slow, which makes it difficult for them to meet real-time requirements in practical applications. The development of deep-learning-based target detection is shown in Figure 2.

Figure 2. Development history of deep learning detection algorithms.

In recent years, with the wide application of deep neural networks, target detection algorithms have grown more complex and their models larger, which challenges the computing power of hardware and places higher demands on the computing speed, reliability, and integration of processors [26,27]. The YOLOv1 algorithm, proposed by Redmon J. et al., achieves single-stage, anchor-free detection and uses a convolutional neural network to complete classification and regression at the same time, but its detection accuracy is low [28]. Building on YOLOv1, Redmon J. et al. introduced optimization strategies such as batch normalization (BN) and multi-scale training to obtain YOLOv2, a detection algorithm with better robustness and higher accuracy. The YOLOv3 algorithm adopts the DarkNet53 network structure and introduces multi-scale prediction with nine anchor boxes of different sizes, which greatly improves accuracy while preserving real-time performance [29]. The YOLOv4 network model was proposed by Alexey Bochkovskiy et al. in April 2020. Its main design goal is to balance detection speed and detection accuracy so that the model can be better applied in practical environments [30]. The YOLOv5 network model was first released by Ultralytics in June 2020 [31]. YOLOv5 is considerably more flexible and faster than YOLOv4, balances accuracy and speed better, and is much easier to deploy on hardware. Therefore, the YOLOv5 model was selected to detect UXOs.

To release the application potential of UAVs in explosive search and disposal, protect against hazards more effectively, and reduce the personal safety risk of front-line staff, we suggest accelerating the adoption of UAVs, drawing on the application modes and development experience of UAVs in other fields, and continuing to apply new technology in a targeted way. This will allow front-line staff to contribute more to national and public security while ensuring their own safety.

3. Our Approach

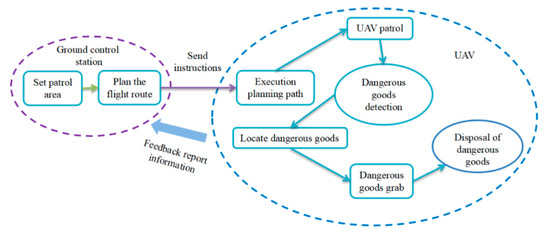

The intelligent EOD system based on a six-rotor UAV was developed with its operating environment and the habits of its users in mind, with the aim of making it reliable, easy to operate, and complete. The workflow of the system is shown in Figure 3. First, the operator delimits the UXO inspection area in the ground station. The ground station plans the flight path for the delimited area and sends the plan to the flight platform, which executes it. During the flight, the UAV uses its onboard dual-vision-integrated PTZ pod to capture images of the patrol area in real time. The ground station runs detection and recognition on these images to help locate the landing point of the UXO, and the operator then controls the UAV to complete the subsequent steps, such as grasping and transferring the dangerous object.

Figure 3. System workflow chart.

3.1. Hardware Design

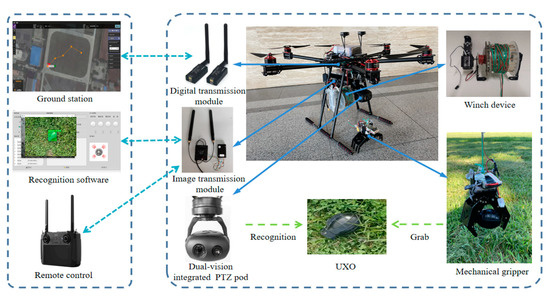

The hardware structure that allows the UAV to grab a grenade and drop it in a preset area is shown in Figure 4. The system uses a PIXHAWK flight control board to control the six-rotor UAV. The supporting external equipment of the UAV includes a safety switch, a remote control receiving module, a power module, a dual GPS (built-in compass) module, etc. The ground station controls and monitors the flight of the UAV, runs the visual image processing algorithm, and completes the automatic recognition and positioning of the grenade. The remote controller controls the flight of the UAV and displays, in real time, the images of the patrol area captured by the dual-vision-integrated PTZ pod. The digital transmission module carries flight control commands between the ground station and the UAV. The global navigation satellite system (GNSS) receiver and the PIXHAWK flight control system estimate the position of the UAV and obtain its current flight speed from the captured satellite data. The winch device raises and lowers the mechanical gripper. The mechanical gripper grasps the UXO and drops it at the designated safe position.

Figure 4. Hardware structure of the system.

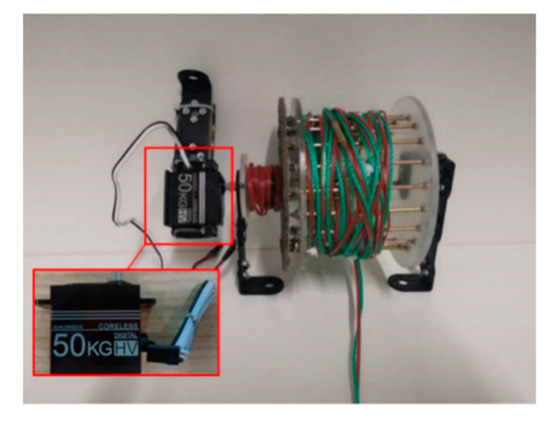

3.1.1. Design of Winch Device

To grab UXOs and drop them in the preset area, we integrated the winch device, mechanical gripper, and dual-vision-integrated PTZ pod with the six-rotor UAV. After the system detects the landing position of the UXO, the operator commands the UAV to hover at a fixed point over the UXO so that it can firmly grasp the UXO and later drop it safely at a specified position while hovering. The winch device is installed on the load platform under the UAV. It consists mainly of a 360° continuous-rotation servo and a rotary table. The servo can be driven forward and in reverse continuously from the model-aircraft remote controller. To prevent the UAV from being damaged by an explosion while grasping a UXO, the hovering UAV is connected to the mechanical gripper by a 5 m suspension wire wound on the winch shaft, and the gripper is raised and lowered by driving the winch shaft forward and in reverse with the servo. To allow remote control of the mechanical gripper, one end of the suspension wire is connected to the remote-control receiver, which receives the control signal for the gripper. The winch device is shown in Figure 5.

Figure 5. Winch device.

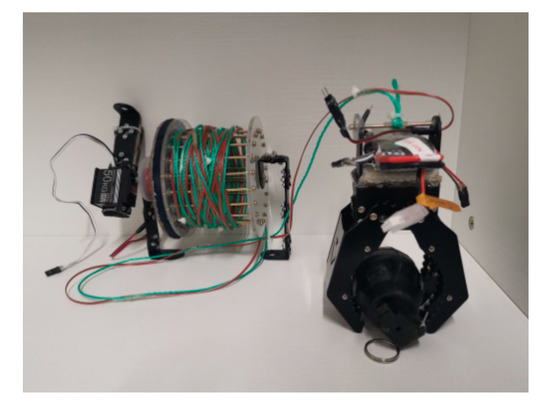

3.1.2. Design of Mechanical Gripper

After measuring and analyzing the size and weight of the grenade to be grabbed, we adopted a closed mechanical gripper made of hard aluminum alloy and glass fiber. The maximum opening of the gripper is 110 mm, and the gripper is 110 mm long and 57 mm wide. The inner edge of the gripper has a wave-shaped profile, so that once the UXO is clamped it is supported from multiple angles and held more stably. To meet the grasping torque requirement, the gripper is driven by a DG-995MG servo with a torque of 25 kgf·cm. Over the 11 cm gripper length, this corresponds to a force at the end of the gripper of about 25 kgf·cm / 11 cm ≈ 2.27 kgf. The force transmitted through the mechanical gripper meets the requirement of firmly grasping a grenade. The mechanical gripper is shown in Figure 6.

Figure 6. Mechanical gripper.
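The servo figures quoted above can be checked with a short calculation. The sketch below treats the 11 cm gripper length as the lever arm and neglects friction and linkage losses, which is a simplifying assumption rather than something stated in the text. Dividing a torque by a length gives a force, so the result is expressed in kgf and, for convenience, in newtons.

```python
KGF_TO_NEWTON = 9.80665  # one kilogram-force in newtons (standard gravity)


def tip_force_kgf(servo_torque_kgf_cm: float, lever_arm_cm: float) -> float:
    """Static force at the gripper tip: servo torque divided by lever arm.

    Assumes a rigid jaw and ignores friction and linkage losses.
    """
    if lever_arm_cm <= 0:
        raise ValueError("lever arm must be positive")
    return servo_torque_kgf_cm / lever_arm_cm


# Values quoted in the text: 25 kgf*cm servo torque, 11 cm gripper length.
force_kgf = tip_force_kgf(25.0, 11.0)
force_newton = force_kgf * KGF_TO_NEWTON
print(f"{force_kgf:.2f} kgf (about {force_newton:.1f} N)")
```

Whether this force is sufficient depends on the grenade mass and on the friction between the wave-shaped jaw and the grenade body, neither of which is quantified here.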

3.1.3. Dual-Vision-Integrated PTZ Pod

The recognition and detection module used in the system is the TSHD10T3 dual-vision-integrated PTZ pod. The TSHD10T3 is a professional dual-vision pod with integrated 10× optical zoom and high-precision two-axis brushless stabilization. The PTZ adopts a high-precision encoder field-oriented control (FOC) scheme and is characterized by high stability, small volume, light weight, and low power consumption. The visible-light camera core uses a low-illumination sensor with 4 million effective pixels. The image is output over an HDMI interface as a 1920 × 1080 pixel high-definition color image at a frame rate of 60 FPS. The pod meets the needs of recognition and supports real-time image recognition. The dual-vision-integrated PTZ pod is shown in Figure 7.

Figure 7. Dual-vision-integrated PTZ pod.

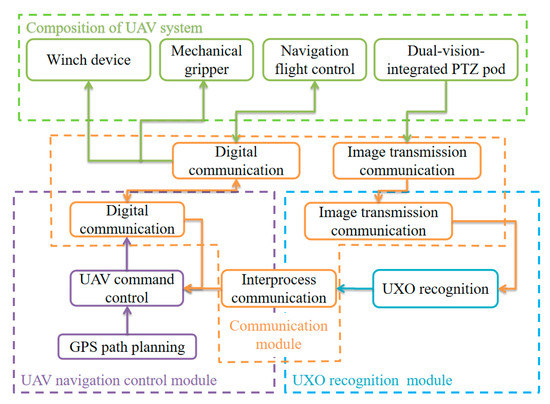

3.2. Software Design

The software architecture of the intelligent EOD system of the six-rotor UAV is shown in Figure 8. The software mainly comprises the UAV control module, the UXO recognition module, and the multi-process information communication module. The UXO recognition module recognizes UXOs. The multi-process information communication module is responsible for timing coordination and information sharing among the software processes of the system, and for coordinating operations such as creating and closing processes so that a blocked process does not crash the software. The biggest advantage of this architecture is its flexibility: the system can add new functional modules at any time, as actual needs arise, by starting a new subprocess that attaches to the shared memory.

Figure 8. System software overall architecture chart.
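The shared-memory idea behind the multi-process communication module can be illustrated with Python's standard `multiprocessing.shared_memory` module. This is a minimal sketch, not the system's actual implementation: the record layout (frame id, box centre, confidence) and the function names are hypothetical, and no locking is shown, which a real multi-process design would need.

```python
import struct
from multiprocessing import shared_memory

# Hypothetical record layout: frame id (uint32) followed by three doubles
# (box centre x, box centre y, confidence), little-endian.
RECORD = struct.Struct("<Iddd")


def create_channel() -> shared_memory.SharedMemory:
    """Create a shared block large enough for one detection record."""
    return shared_memory.SharedMemory(create=True, size=RECORD.size)


def publish(name: str, frame_id: int, cx: float, cy: float, conf: float) -> None:
    """Writer side: attach to the block by name and store one record."""
    shm = shared_memory.SharedMemory(name=name)
    try:
        RECORD.pack_into(shm.buf, 0, frame_id, cx, cy, conf)
    finally:
        shm.close()


def read_latest(name: str) -> tuple:
    """Reader side: attach to the block by name and load the record."""
    shm = shared_memory.SharedMemory(name=name)
    try:
        return RECORD.unpack_from(shm.buf, 0)
    finally:
        shm.close()


# Demonstrated in one process for brevity; a new subprocess would call
# publish() or read_latest() with the same block name.
channel = create_channel()
try:
    publish(channel.name, 42, 208.0, 156.5, 0.91)
    latest = read_latest(channel.name)
finally:
    channel.close()
    channel.unlink()
```

Because readers and writers only need the block name, a new functional module can be added as another subprocess without changing the existing ones, which is the flexibility the architecture aims for.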

3.2.1. UAV Navigation Control Module

The UAV can be flown not only with a remote controller but also through the UAV ground control station. The ground station is the command center of the entire UAV system. Its main functions include mission planning, flight route calibration, etc. The operator delimits the patrol area in the ground station, which plans the flight path within the delimited search area and sends it as a digital signal to the flight platform through the digital transmission device for execution. While the UAV surveys the patrol area in flight, if a UXO is found on the ground or in the grass, the detection and recognition software on the ground station raises a “UXO found” alarm to alert the operator. During the mission, the UAV maintains contact with the ground control station through a wireless data link.
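The text does not say which planning method the ground station uses. As an illustration only, the sketch below generates a back-and-forth ("lawnmower") sweep over a rectangular search area, a common way to cover a delimited region. The function name, the local metric coordinates, and the sweep spacing are all assumptions; converting waypoints to GPS coordinates is left out.

```python
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) in metres, in a local ground frame


def lawnmower_path(x_min: float, y_min: float, x_max: float, y_max: float,
                   spacing: float) -> List[Point]:
    """Return waypoints sweeping the rectangle row by row, alternating direction."""
    if spacing <= 0:
        raise ValueError("spacing must be positive")
    if x_max < x_min or y_max < y_min:
        raise ValueError("rectangle bounds are inverted")
    # Number of sweep rows that fit into the rectangle height.
    n_rows = int((y_max - y_min) / spacing + 1e-9) + 1
    waypoints: List[Point] = []
    for i in range(n_rows):
        y = y_min + i * spacing
        row = [(x_min, y), (x_max, y)]
        if i % 2 == 1:  # reverse every other row to avoid dead transit legs
            row.reverse()
        waypoints.extend(row)
    return waypoints


# Example: a 20 m x 4 m area swept with 2 m between rows.
path = lawnmower_path(0.0, 0.0, 20.0, 4.0, 2.0)
```

In practice the row spacing would be chosen from the camera footprint at the patrol altitude so that adjacent sweeps overlap.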

3.2.2. UXO Detection Module

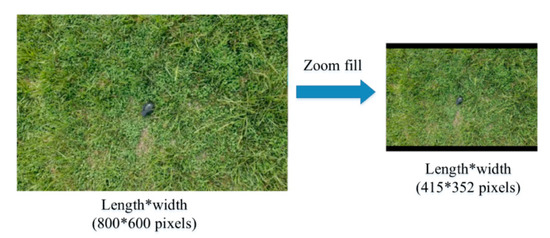

The system adopts the YOLOv5 detection model to detect UXOs in both visible-light and infrared imagery, which greatly reduces the restrictions on where and when the equipment can be used and allows the system to operate around the clock. The model does not rely on a high-performance computing platform and is well suited to the task of UXO detection. YOLOv5 is the latest real-time target detection algorithm of the YOLO series. It inherits the advantages of the YOLOv4 algorithm and optimizes the backbone network to improve the accuracy of small target detection. Mosaic data augmentation is applied to the input of YOLOv5: images are randomly scaled, randomly cropped, and randomly arranged before being spliced together, which improves the detection of small targets. In addition, the algorithm integrates adaptive anchor box calculation: in each training run, the optimal anchor box values for the given training set are computed adaptively. During training, the network outputs predicted boxes based on the initial anchor boxes, compares them with the ground-truth boxes to compute the difference, and then updates the network parameters iteratively through backpropagation. Since the training images cannot be guaranteed to have the same size, the input images are uniformly scaled to a standard size, as shown in Figure 9, before being sent to the detection network [32]. The adaptive image scaling algorithm reduces the black borders added at the top and bottom of the image, which reduces the amount of computation during inference and therefore increases detection speed.

Figure 9. Adaptive scaling.
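The effect of adaptive scaling can be seen from the padding arithmetic alone. The sketch below is written in the spirit of the letterbox step found in public YOLOv5 code, but it is a simplified illustration: the function name is hypothetical, and the target size of 416 and the stride of 32 are assumptions chosen for the example.

```python
from typing import Tuple


def letterbox_shape(width: int, height: int, target: int = 416,
                    stride: int = 32) -> Tuple[Tuple[int, int], Tuple[int, int]]:
    """Return ((resized_w, resized_h), (pad_w, pad_h)) for adaptive scaling.

    The image is resized with its aspect ratio preserved so that its longer
    side equals `target`. Instead of padding the shorter side all the way up
    to `target`, only the remainder modulo `stride` is padded.
    """
    scale = min(target / width, target / height)
    resized_w, resized_h = round(width * scale), round(height * scale)
    pad_w = (target - resized_w) % stride
    pad_h = (target - resized_h) % stride
    return (resized_w, resized_h), (pad_w, pad_h)


# An 800 x 600 frame: plain square padding would add 104 rows of border,
# whereas adaptive scaling adds only 8.
resized, padding = letterbox_shape(800, 600)
padded_size = (resized[0] + padding[0], resized[1] + padding[1])
```

For this example the network input shrinks from 416 × 416 to 416 × 320, which is where the saving in computation comes from.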

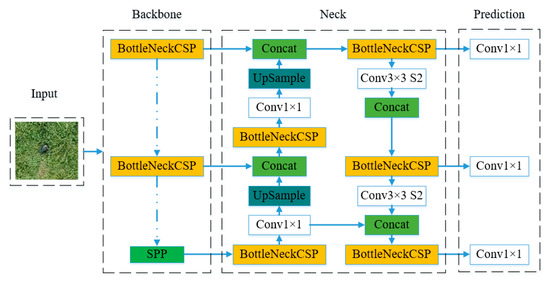

The YOLOv5 network structure is divided into input, backbone, neck, and prediction according to the processing stage [33,34]. The network structure is shown in Figure 10.

Figure 10. YOLOv5 network structure diagram.

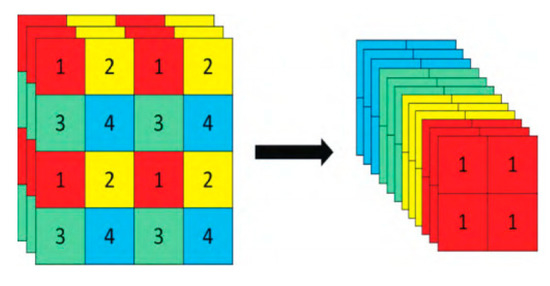

The input stage completes basic processing tasks such as data augmentation, adaptive image scaling, and anchor box calculation. The feature extraction network of YOLOv5 applies down-sampling and convolution to the input image and obtains feature maps at different scales together with the feature information. The feature maps of deep layers are sparse and represent spatial geometric features poorly, but their receptive fields are wide and they capture high-level semantic information. By contrast, the feature maps of shallow layers are dense, so they retain strong spatial geometric detail but have a small receptive field and weak semantic representation ability. Combining the high-level semantic information of the deep layers with the geometric information of the shallow layers improves the detection performance of the network. If no down-sampling were carried out in the network and feature maps at the same scale as the input image were used for calculation, the number of parameters and the amount of computation would increase sharply, the hardware requirements would rise, and engineering application would become difficult. Therefore, we reduce the image size before feeding the image into YOLOv5. The network structure of YOLOv5 mainly includes a feature extraction network, a feature fusion network, and a detection network. The feature extraction network serves as the backbone for target detection and extracts feature information from images. The backbone includes a focus structure and a CSP structure. The key step of the focus structure is slicing, as shown in Figure 11. For example, an original image of 416 × 416 × 3 enters the focus structure and is transformed into a feature map of 208 × 208 × 12 by the slicing operation. A convolution with 32 kernels then transforms it into a feature map of 208 × 208 × 32 [35].

Figure 11. Slicing operation of YOLOv5 algorithm.
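The slicing step can be reproduced in a few lines of NumPy. The sketch below assumes a channel-first layout (C, H, W) and even image dimensions; the function name is illustrative, and the 32-kernel convolution that follows the slicing is not included.

```python
import numpy as np


def focus_slice(image: np.ndarray) -> np.ndarray:
    """Rearrange a (C, H, W) image into (4C, H/2, W/2) by pixel interleaving.

    Every second pixel is taken in each spatial direction, giving four
    half-resolution copies that are stacked along the channel axis.
    No pixel value is discarded.
    """
    _, height, width = image.shape
    if height % 2 or width % 2:
        raise ValueError("height and width must be even")
    return np.concatenate(
        [
            image[:, 0::2, 0::2],  # even rows, even columns
            image[:, 1::2, 0::2],  # odd rows, even columns
            image[:, 0::2, 1::2],  # even rows, odd columns
            image[:, 1::2, 1::2],  # odd rows, odd columns
        ],
        axis=0,
    )


# A dummy 3 x 416 x 416 image becomes a 12 x 208 x 208 feature map.
image = np.arange(3 * 416 * 416, dtype=np.int64).reshape(3, 416, 416)
sliced = focus_slice(image)
```

The spatial resolution is halved while the channel count is quadrupled, so the information content is unchanged and the subsequent convolution works on a smaller grid.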

The main function of the neck network in the target detection network is to fuse the features extracted by the backbone network, improve the model’s detection ability for targets of varying scales, and diversify the features learned by the network. The neck feature fusion network uses a PANet pyramid structure to realize the fusion of different scales, which aids in the detection of small targets and the recognition of targets of the same size and different scales. Predictions include bounding box loss function and non-maximum suppression (NMS), which is used to classify and locate targets. The loss function used in YOLOv5 is defined as:

$$
\mathrm{IoU} = \frac{|A \cap B|}{|A \cup B|}, \qquad \mathrm{GIoU} = \mathrm{IoU} - \frac{|C \setminus (A \cup B)|}{|C|}, \qquad L_{\mathrm{GIoU}} = 1 - \mathrm{GIoU}
$$

where $A$ is the ground-truth box, $B$ is the predicted box, and $C$ is the smallest rectangle enclosing $A$ and $B$. Using $L_{\mathrm{GIoU}}$ as the loss function in YOLOv5 effectively solves the problem that arises when the bounding boxes do not overlap. In the post-processing stage of target detection, NMS is applied to the many candidate boxes, i.e., the predicted box with the locally maximal score is retained and overlapping boxes with lower scores are discarded to obtain the optimal target box.
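Both ideas in this paragraph can be sketched in plain Python. The code assumes the loss meant here is the generalized IoU (GIoU) loss, consistent with the GIoU curves reported for training, and it uses a basic greedy NMS with an assumed IoU threshold of 0.5. Boxes are axis-aligned `(x1, y1, x2, y2)` tuples; all function names are illustrative.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def area(box: Box) -> float:
    """Area of a box; degenerate or inverted boxes count as zero."""
    return max(0.0, box[2] - box[0]) * max(0.0, box[3] - box[1])


def iou_and_giou(a: Box, b: Box) -> Tuple[float, float]:
    """Return (IoU, GIoU) for two boxes with positive area."""
    inter = area((max(a[0], b[0]), max(a[1], b[1]),
                  min(a[2], b[2]), min(a[3], b[3])))
    union = area(a) + area(b) - inter
    if union <= 0:
        raise ValueError("boxes must have positive area")
    iou = inter / union
    # Smallest rectangle enclosing both boxes.
    enclosing = area((min(a[0], b[0]), min(a[1], b[1]),
                      max(a[2], b[2]), max(a[3], b[3])))
    return iou, iou - (enclosing - union) / enclosing


def giou_loss(truth: Box, pred: Box) -> float:
    """GIoU loss: 0 for a perfect match, up to 2 for distant boxes."""
    return 1.0 - iou_and_giou(truth, pred)[1]


def nms(boxes: Sequence[Box], scores: Sequence[float],
        iou_threshold: float = 0.5) -> List[int]:
    """Greedy NMS: keep the best-scoring box, drop boxes overlapping it."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        if all(iou_and_giou(boxes[i], boxes[k])[0] <= iou_threshold for k in keep):
            keep.append(i)
    return keep


# Disjoint boxes have IoU = 0, yet the GIoU loss still reflects their distance.
loss_far = giou_loss((0, 0, 1, 1), (2, 0, 3, 1))
kept = nms([(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)], [0.9, 0.8, 0.7])
```

For non-overlapping boxes the plain IoU is zero regardless of how far apart they are, whereas the enclosing-box term keeps the loss informative, which is the property the text refers to.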

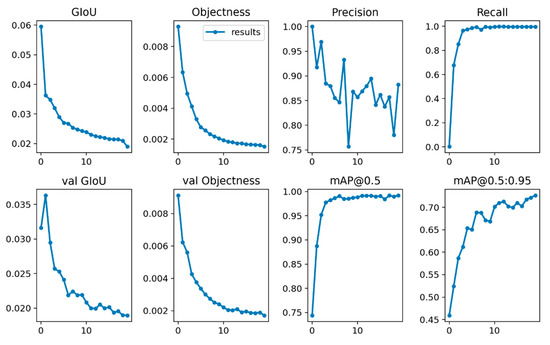

The grenade model was trained with the PyTorch framework on an Intel(R) Core(TM) i9-9940X CPU and four NVIDIA RTX 2080 Ti GPUs. To better capture the characteristics of the data and improve model performance and generalization, training uses the cosine annealing algorithm, with 50 epochs and a batch size of 32. The visualization results of the model training are shown in Figure 12. The x-axis in the figure represents the epoch. Objectness is the mean objectness loss; the smaller the value, the more accurate the target detection. Precision indicates the proportion of predicted targets that are correct. Recall is the proportion of all true targets that are correctly predicted. Val GIoU indicates the GIoU loss on the validation set. Val Objectness is the objectness loss on the validation set. mAP@0.5 means that, with the intersection over union (IoU) threshold set to 0.5, the average precision (AP) of each category is calculated and then averaged over all categories to obtain the mean average precision (mAP). mAP@0.5:0.95 is the mAP averaged over AP values calculated at IoU thresholds from 0.5 to 0.95 in steps of 0.05 (0.5, 0.55, 0.6, 0.65, 0.7, 0.75, 0.8, 0.85, 0.9, 0.95). The curves indicate that the trained model performs well and satisfies the accuracy requirements of real-time target detection.

Figure 12. Training visualization results.
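Two of the quantities mentioned above are easy to make concrete. The sketch below shows a textbook cosine annealing schedule over the 50 epochs stated in the text and the list of IoU thresholds behind mAP@0.5:0.95. The maximum and minimum learning rates are placeholder assumptions, since the text does not give them, and the exact schedule used in training may differ in detail.

```python
import math
from typing import List, Sequence


def cosine_annealing_lr(epoch: int, total_epochs: int = 50,
                        lr_max: float = 0.01, lr_min: float = 0.0001) -> float:
    """Learning rate decaying from lr_max to lr_min along half a cosine wave.

    lr_max and lr_min are illustrative values, not taken from the paper.
    """
    if not 0 <= epoch <= total_epochs:
        raise ValueError("epoch out of range")
    cosine = 1.0 + math.cos(math.pi * epoch / total_epochs)
    return lr_min + 0.5 * (lr_max - lr_min) * cosine


# IoU thresholds 0.50, 0.55, ..., 0.95 used for mAP@0.5:0.95.
IOU_THRESHOLDS: List[float] = [round(0.5 + 0.05 * i, 2) for i in range(10)]


def map_over_thresholds(map_per_threshold: Sequence[float]) -> float:
    """Average the mAP values computed at each IoU threshold."""
    if len(map_per_threshold) != len(IOU_THRESHOLDS):
        raise ValueError("expected one mAP value per IoU threshold")
    return sum(map_per_threshold) / len(map_per_threshold)


schedule = [cosine_annealing_lr(epoch) for epoch in range(51)]
```

The schedule starts at the maximum rate, passes through the midpoint at epoch 25, and reaches the minimum at epoch 50, so large steps early in training give way to fine adjustments near the end.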

4. Experimental Results and Discussion

To evaluate the feasibility and practicality of the intelligent EOD system of the six-rotor UAV, we carried out a real-world UXO capture test. The physical system is shown in Figure 13.

Figure 13. Intelligent EOD system based on six-rotor UAV.

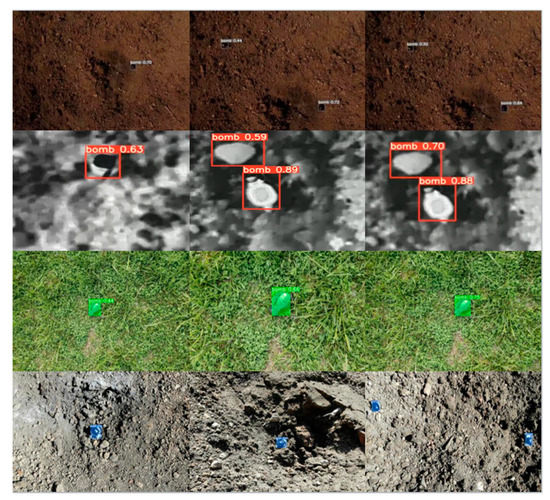

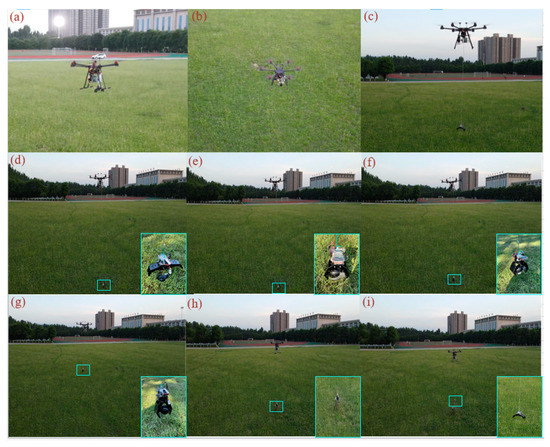

The system planned the UXO search area in the ground station and sent the corresponding flight commands to the UAV over the digital transmission link to control the movement of the six-rotor UAV. The dual-vision-integrated PTZ pod carried by the UAV captured images of the patrol area in real time, and the image transmission sending end processed and transmitted the video signal. The image transmission receiving end received the video signal and passed the data to the ground station. The ground station ran detection and recognition on the real-time video to locate the UXO, and the operator then used the platform to complete the follow-up steps, i.e., grasping and transfer. Taking a grenade UXO as an example, with the UAV flying at an altitude of 3 m, the detection and recognition results of the system in a crater, at night, and on soil, grass, and gravel are shown in Figure 14. The figure shows that the algorithm used in this paper achieves high accuracy in real scenes and provides useful auxiliary positioning for the subsequent capture and processing of the UXO.

Figure 14. UXO detection and recognition results in each environment.
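To show how a detection in the image can assist positioning, the sketch below converts the pixel centre of a bounding box into a ground offset from the point directly beneath the camera. It rests on several assumptions not stated in the text: the camera points straight down, the ground is flat, pixels are square, lens distortion is ignored, and the horizontal field of view (which changes with zoom) is a placeholder value. The function name is hypothetical.

```python
import math
from typing import Tuple


def pixel_to_ground_offset(px: float, py: float,
                           altitude_m: float = 3.0,
                           image_size: Tuple[int, int] = (1920, 1080),
                           hfov_deg: float = 60.0) -> Tuple[float, float]:
    """Ground offset (metres) of a pixel from the image centre.

    Assumes a nadir-pointing pinhole camera over flat ground. hfov_deg is an
    assumed horizontal field of view, not a specification of the pod.
    Positive dx is to the right in the image, positive dy is downwards.
    """
    width, height = image_size
    ground_width_m = 2.0 * altitude_m * math.tan(math.radians(hfov_deg) / 2.0)
    metres_per_pixel = ground_width_m / width
    dx = (px - width / 2.0) * metres_per_pixel
    dy = (py - height / 2.0) * metres_per_pixel
    return dx, dy


# With a 90 degree field of view at 3 m altitude, the frame spans 6 m of ground,
# so a box centred 480 px right of the image centre lies about 1.5 m away.
offset = pixel_to_ground_offset(1440.0, 540.0, altitude_m=3.0, hfov_deg=90.0)
```

An estimate of this kind is only a rough aid for the operator, who performs the final alignment of the gripper manually.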

When the system recognizes a UXO, the ground station triggers an alarm. The UAV pilot then controls the winch device and the mechanical gripper through the model remote controller to grasp the UXO accurately. Throughout this process, the operator remains at a safe distance, which greatly reduces the probability of casualties during explosive removal. The workflow of the intelligent EOD system of the six-rotor UAV is shown in Figure 15. First, the UAV patrols the target area for UXOs. Second, if a UXO is found, the winch device lowers the mechanical gripper. Third, the mechanical gripper firmly grasps the UXO. Fourth, the UAV transfers the UXO and places it in the explosion-proof barrel. Fifth, the UAV flies away and the EOD work is completed.

Figure 15. System EOD workflow. (a) UAV take off. (b) Patrol UXO. (c) UAV hover. (d) Lowering of mechanical gripper. (e) Holding of a UXO. (f) Raising of mechanical gripper. (g) Transferring UXO. (h) Placing of a UXO in an explosion-proof barrel. (i) UAV flying away.

5. Conclusions

Traditional methods of explosive search and disposal rely on hand-held detectors or explosive-detection dogs to find UXOs, which are then destroyed, dismantled, or transferred on-site according to the actual situation. The basic principle is that movable UXOs are transferred first. However, the transfer process can easily cause casualties. To address this problem, this paper designs an intelligent EOD system based on a six-rotor UAV. The system is a highly integrated UAV platform with comprehensive functions. By combining autonomous UAV navigation, deep learning, and other technologies, it completes intelligent explosive detection and EOD tasks safely and efficiently. The experimental results show that the system is practical and can support the wider adoption of UAV-based EOD. In the future, the system could also be applied in areas such as emergency early warning, battlefield reconnaissance, material sampling in dangerous areas, disaster relief, and express delivery.

Author Contributions

Conceptualization, X.Y., J.F. and R.L.; methodology, J.F. and R.L.; software, F.G.; investigation, Q.L. and J.F.; resources, X.Y.; writing—original draft preparation, J.F. and R.L.; writing—review and editing, X.Y., J.F. and Q.L.; visualization, J.F. and J.Z.; supervision, J.F. and X.Y.; project administration, X.Y.; funding acquisition, X.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the National Natural Science Foundation of China under Grant 61806209, in part by the Natural Science Foundation of Shaanxi Province under Grant 2020JQ-490, in part by the Aeronautical Science Fund under Grant 201851U8012. (Corresponding author: Ruitao Lu).

Data Availability Statement

The datasets used or analysed during the current study are available from the corresponding author on reasonable request.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Mei, Z.; Mei, C.; Tao, Y.; Yao, L. Study of Risk Factors, Organization Method, and Risk Assessment in Troops’ Live Hand Grenade Training. In Proceedings of the Lecture Notes in Electrical Engineering, Lisbon, Portugal, 29–31 July 2016; pp. 481–487. [Google Scholar]

- Medda, A.; Funk, R.; Ahuja, K.; Kamimori, G. Measurements of Infrasound Signatures From Grenade Blast during Training. Mil. Med. 2021, 186, 523–528. [Google Scholar] [CrossRef]

- Potter, A.W.; Hunt, A.P.; Pryor, J.L.; Pryor, R.R.; Stewart, I.B.; Gonzalez, J.A.; Xu, X.; Waldock, K.A.; Hancock, J.W.; Looney, D.P. Practical method for determining safe work while wearing explosive ordnance disposal suits. Saf. Sci. 2021, 141, 105328. [Google Scholar] [CrossRef]

- Barisic, A.; Car, M.; Bogdan, S. Vision-based system for a real-time detection and following of UAV. In Proceedings of the 2019 Workshop on Research, Education and Development of Unmanned Aerial Systems (RED UAS), Cranfield, UK, 25–27 November 2019; pp. 156–159. [Google Scholar] [CrossRef]

- Xi, J.; Wang, C.; Yang, X.; Yang, B. Limited-Budget Output Consensus for Descriptor Multiagent Systems With Energy Constraints. IEEE Trans. Cybern. 2020, 50, 4585–4598. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Deng, S.; Cai, H.; Li, K.; Cheng, Y.; Ni, Y.; Wang, Y. The Design and Analysis of a Light Explosive Ordnance Disposal Manipulator. In Proceedings of the 2018 2nd International Conference on Robotics and Automation Sciences (ICRAS), Wuhan, China, 23–25 June 2018; pp. 1–5. [Google Scholar]

- Gao, F.; Zhang, H.; Li, Y.; Deng, W. Research on target recognition and path planning for EOD robot. Int. J. Comput. Appl. Technol. 2018, 57, 325. [Google Scholar] [CrossRef]

- Lee, H.J.; Ryu, J.-K.; Kim, J.; Shin, Y.J.; Kim, K.-S.; Kim, S. Design of modular gripper for explosive ordinance disposal robot manipulator based on modified dual-mode twisting actuation. Int. J. Control Autom. Syst. 2016, 14, 1322–1330.

- Xiong, W.; Chen, Z.; Wu, H.; Xu, G.; Mei, H.; Ding, T.; Yang, Y. Fetching trajectory planning of explosive ordnance disposal robot. Ferroelectrics 2018, 529, 67–79.

- Akhloufi, M.; Couturier, A.; Castro, N. Unmanned Aerial Vehicles for Wildland Fires: Sensing, Perception, Cooperation and Assistance. Drones 2021, 5, 15.

- Jo, Y.-I.; Lee, S.; Kim, K.H. Overlap Avoidance of Mobility Models for Multi-UAVs Reconnaissance. Appl. Sci. 2020, 10, 4051.

- Shafique, A.; Mehmood, A.; Elhadef, M. Detecting Signal Spoofing Attack in UAVs Using Machine Learning Models. IEEE Access 2021, 9, 93803–93815.

- Grogan, S.; Pellerin, R.; Gamache, M. The use of unmanned aerial vehicles and drones in search and rescue operations-a survey. Prolog 2018, 2018, 1–13.

- Ding, X.; Guo, P.; Xu, K.; Yu, Y. A review of aerial manipulation of small-scale rotorcraft unmanned robotic systems. Chin. J. Aeronaut. 2019, 32, 200–214.

- Lu, R.; Yang, X.; Li, W.; Fan, J.; Li, D.; Jing, X. Robust Infrared Small Target Detection via Multidirectional Derivative-Based Weighted Contrast Measure. IEEE Geosci. Remote Sens. Lett. 2020, 1, 1–5.

- Lu, R.; Yang, X.; Jing, X.; Chen, L.; Fan, J.; Li, W.; Li, D. Infrared Small Target Detection Based on Local Hypergraph Dissimilarity Measure. IEEE Geosci. Remote Sens. Lett. 2021, 1, 1–5.

- Boudjit, K.; Ramzan, N. Human detection based on deep learning YOLO-v2 for real-time UAV applications. J. Exp. Theor. Artif. Intell. 2021, 2021, 1–18.

- Avola, D.; Cinque, L.; Diko, A.; Fagioli, A.; Foresti, G.; Mecca, A.; Pannone, D.; Piciarelli, C. MS-Faster R-CNN: Multi-Stream Backbone for Improved Faster R-CNN Object Detection and Aerial Tracking from UAV Images. Remote Sens. 2021, 13, 1670.

- Liu, Y.; Yang, F.; Hu, P. Parallel FPN Algorithm Based on Cascade R-CNN for Object Detection from UAV Aerial Images. Laser Optoelectron. Prog. 2020, 57, 201505.

- Yang, Y.; Gong, H.; Wang, X.; Sun, P. Aerial Target Tracking Algorithm Based on Faster R-CNN Combined with Frame Differencing. Aerospace 2017, 4, 32.

- Sadykova, D.; Pernebayeva, D.; Bagheri, M.; James, A. IN-YOLO: Real-Time Detection of Outdoor High Voltage Insulators Using UAV Imaging. IEEE Trans. Power Deliv. 2020, 35, 1599–1601.

- Francies, M.L.; Ata, M.M.; Mohamed, M.A. A robust multiclass 3D object recognition based on modern YOLO deep learning algorithms. Concurr. Comput. Pract. Exp. 2021, 23, e6517.

- Liu, T.; Pang, B.; Zhang, L.; Yang, W.; Sun, X. Sea Surface Object Detection Algorithm Based on YOLO v4 Fused with Reverse Depthwise Separable Convolution (RDSC) for USV. J. Mar. Sci. Eng. 2021, 9, 753.

- Kim, J.; Cho, J. A Set of Single YOLO Modalities to Detect Occluded Entities via Viewpoint Conversion. Appl. Sci. 2021, 11, 6016.

- Zhao, Z.; Han, J.; Song, L. YOLO-Highway: An Improved Highway Center Marking Detection Model for Unmanned Aerial Vehicle Autonomous Flight. Math. Probl. Eng. 2021, 2021, 1–14.

- Mittal, P.; Singh, R.; Sharma, A. Deep learning-based object detection in low-altitude UAV datasets: A survey. Image Vis. Comput. 2020, 104, 104046.

- Diez, Y.; Kentsch, S.; Fukuda, M.; Caceres, M.; Moritake, K.; Cabezas, M. Deep Learning in Forestry Using UAV-Acquired RGB Data: A Practical Review. Remote Sens. 2021, 13, 2837.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

- Jia, W.; Xu, S.; Liang, Z.; Zhao, Y.; Min, H.; Li, S.; Yu, Y. Real-time automatic helmet detection of motorcyclists in urban traffic using improved YOLOv5 detector. IET Image Process. 2021, 15, 3623–3637.

- Yao, J.; Qi, J.; Zhang, J.; Shao, H.; Yang, J.; Li, X. A Real-Time Detection Algorithm for Kiwifruit Defects Based on YOLOv5. Electronics 2021, 10, 1711.

- Yan, B.; Fan, P.; Lei, X.; Liu, Z.; Yang, F. A Real-Time Apple Targets Detection Method for Picking Robot Based on Improved YOLOv5. Remote Sens. 2021, 13, 1619.

- Malta, A.; Mendes, M.; Farinha, T. Augmented Reality Maintenance Assistant Using YOLOv5. Appl. Sci. 2021, 11, 4758.

- Liau, Y.Y.; Ryu, K. Status Recognition Using Pre-Trained YOLOv5 for Sustainable Human-Robot Collaboration (HRC) System in Mold Assembly. Sustainability 2021, 13, 12044.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).