Leaf-Off and Leaf-On UAV LiDAR Surveys for Single-Tree Inventory in Forest Plantations

Abstract

1. Introduction

- Develop a UAV mobile mapping system for forest inventory and conduct rigorous system calibration;

- Assess the relative accuracy of multi-temporal LiDAR point clouds;

- Examine the level of detail captured by UAV LiDAR under different leaf cover scenarios and management practices;

- Develop an individual tree localization and segmentation approach; and

- Conduct exhaustive testing using UAV LiDAR over managed and unmanaged plantations under different leaf cover conditions.

2. Data Acquisition System and Dataset Description

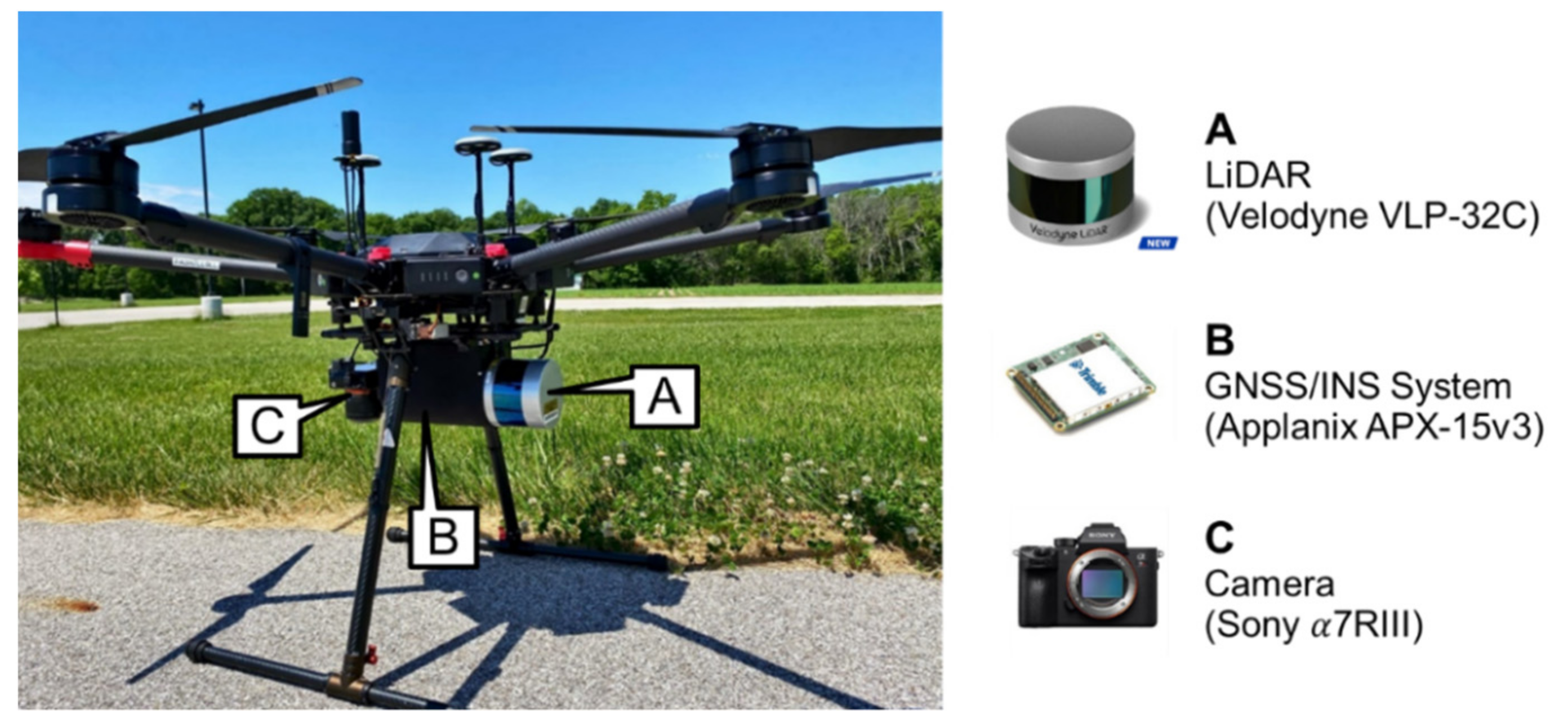

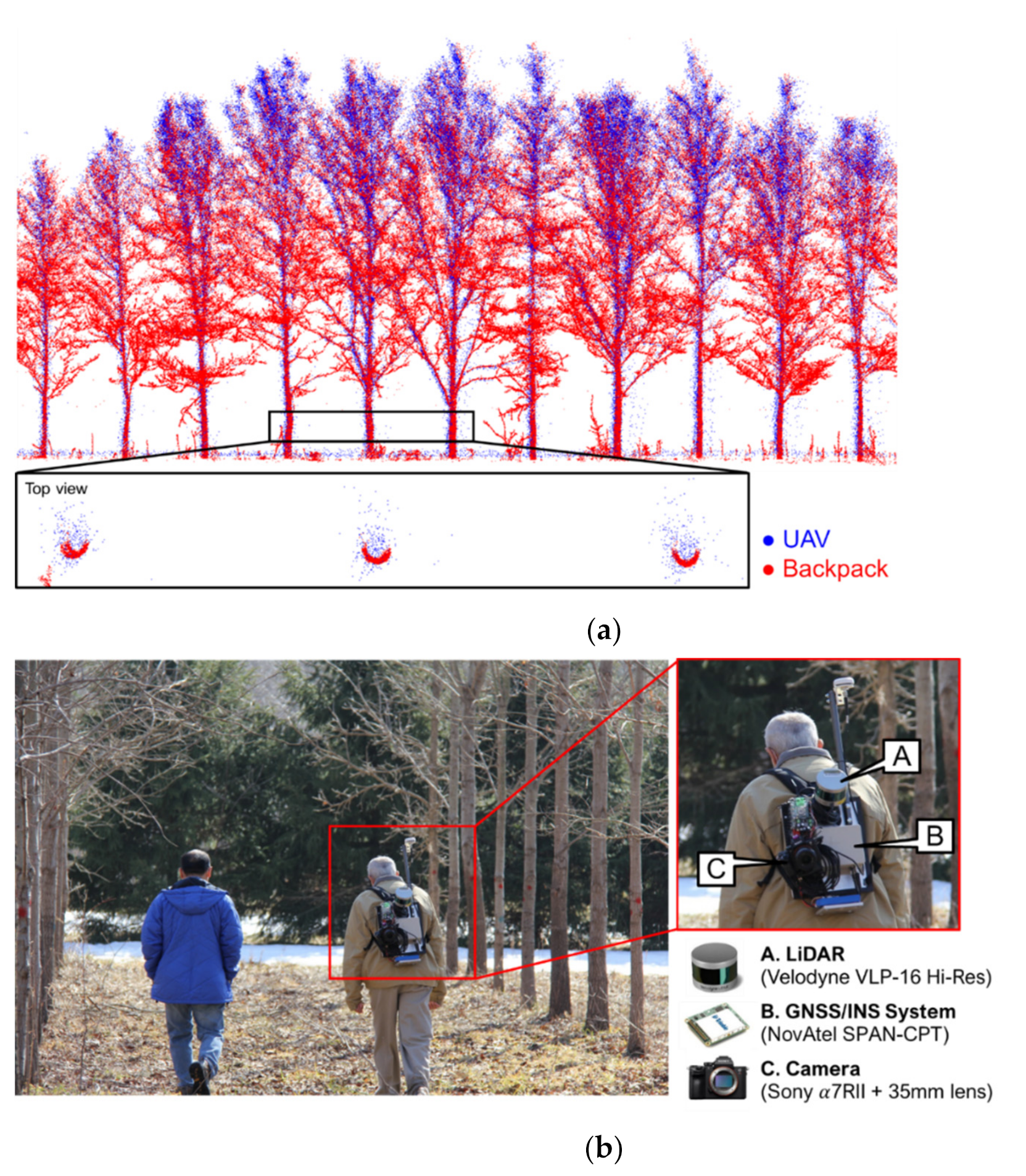

2.1. System Description and Calibration

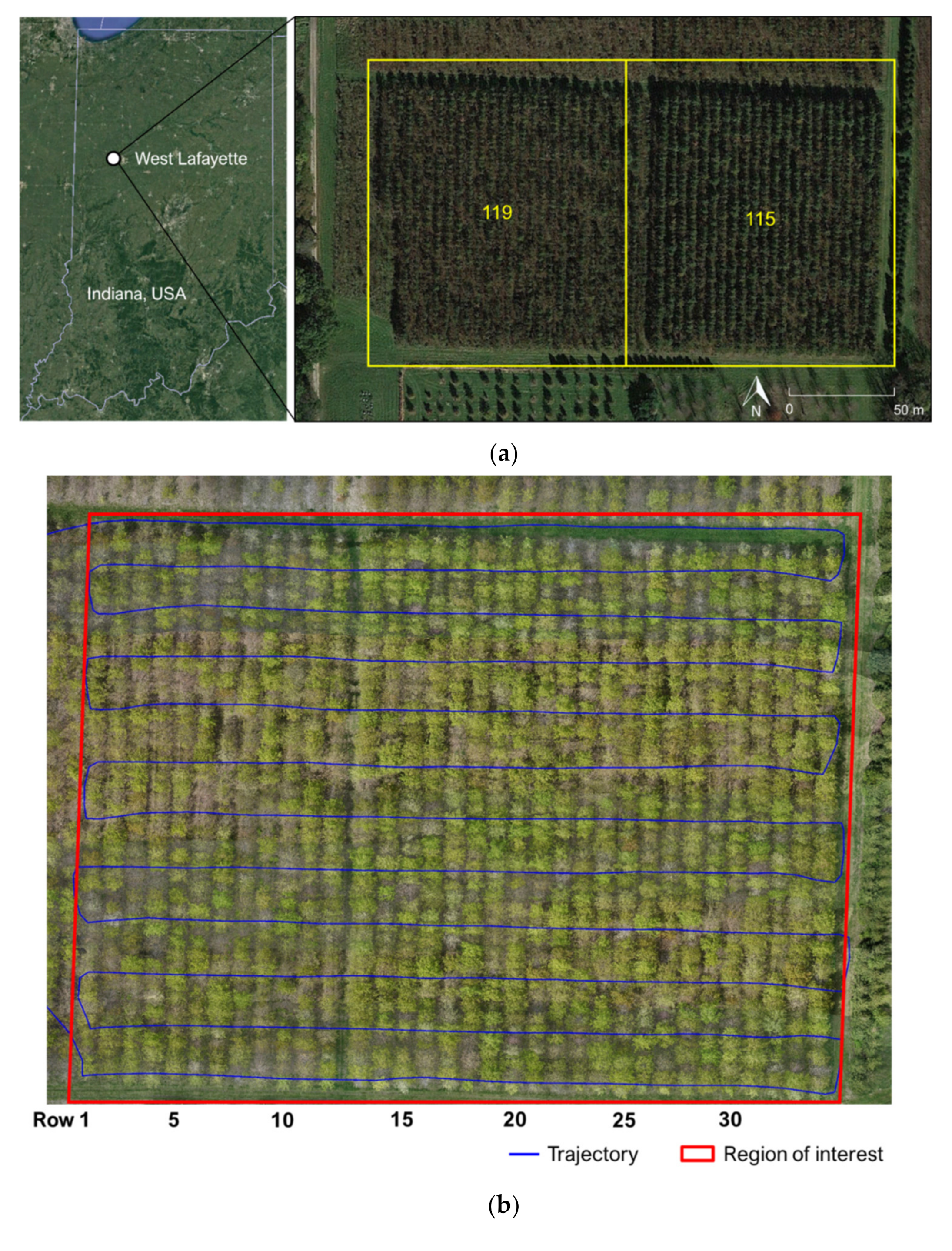

2.2. Study Site and Data Acquisition

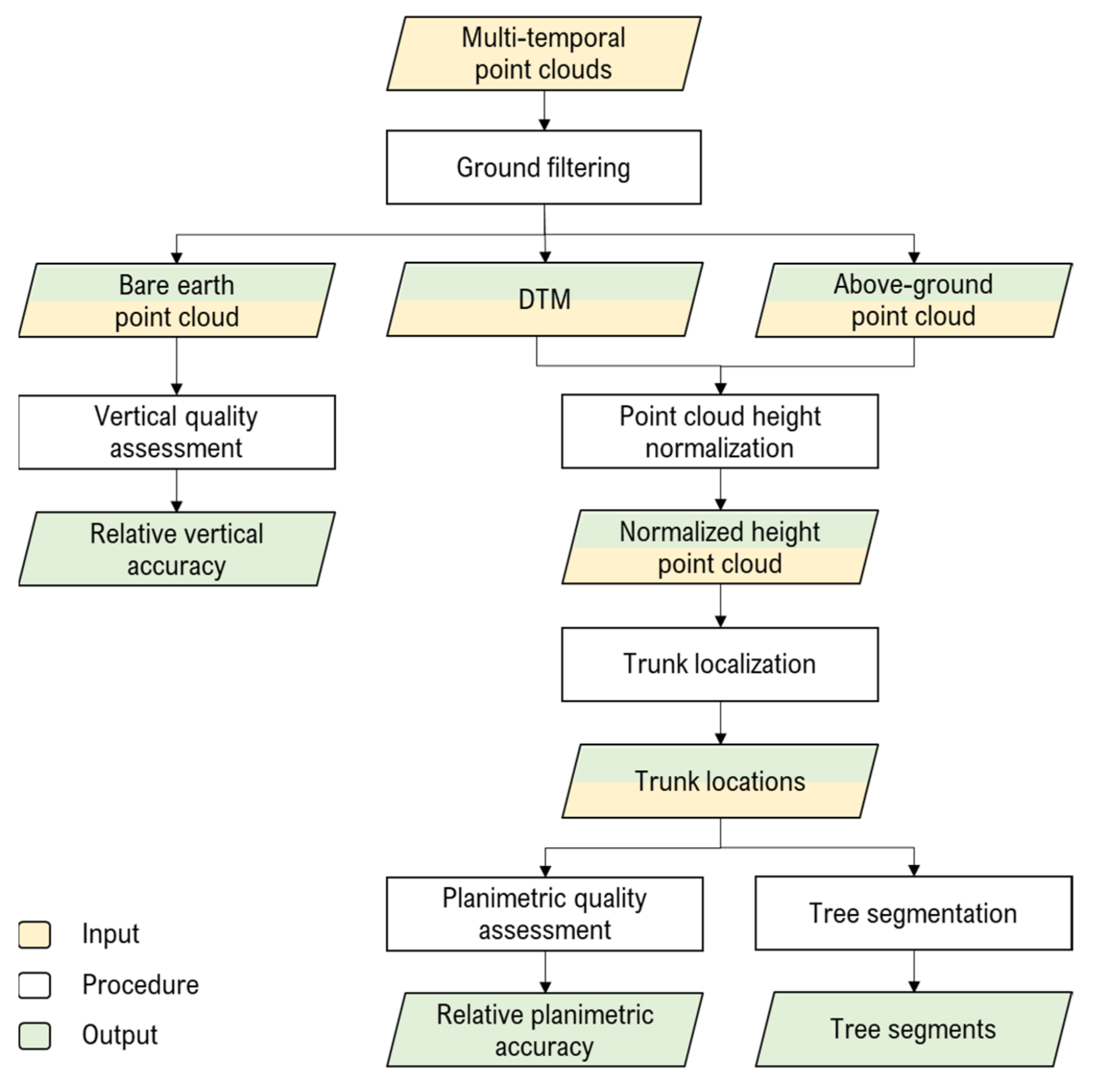

3. Methodology

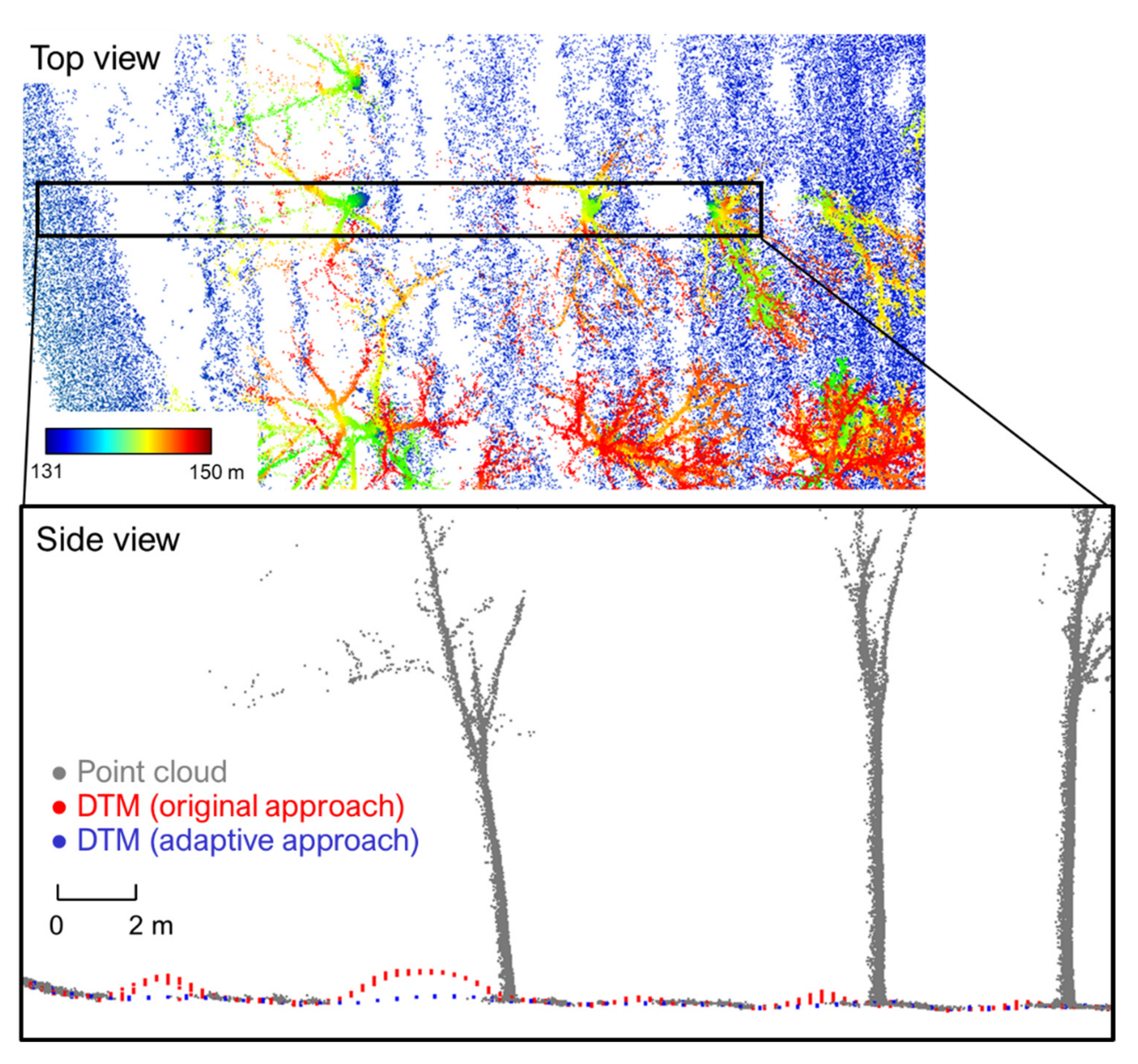

3.1. Ground Filtering and Point Cloud Height Normalization

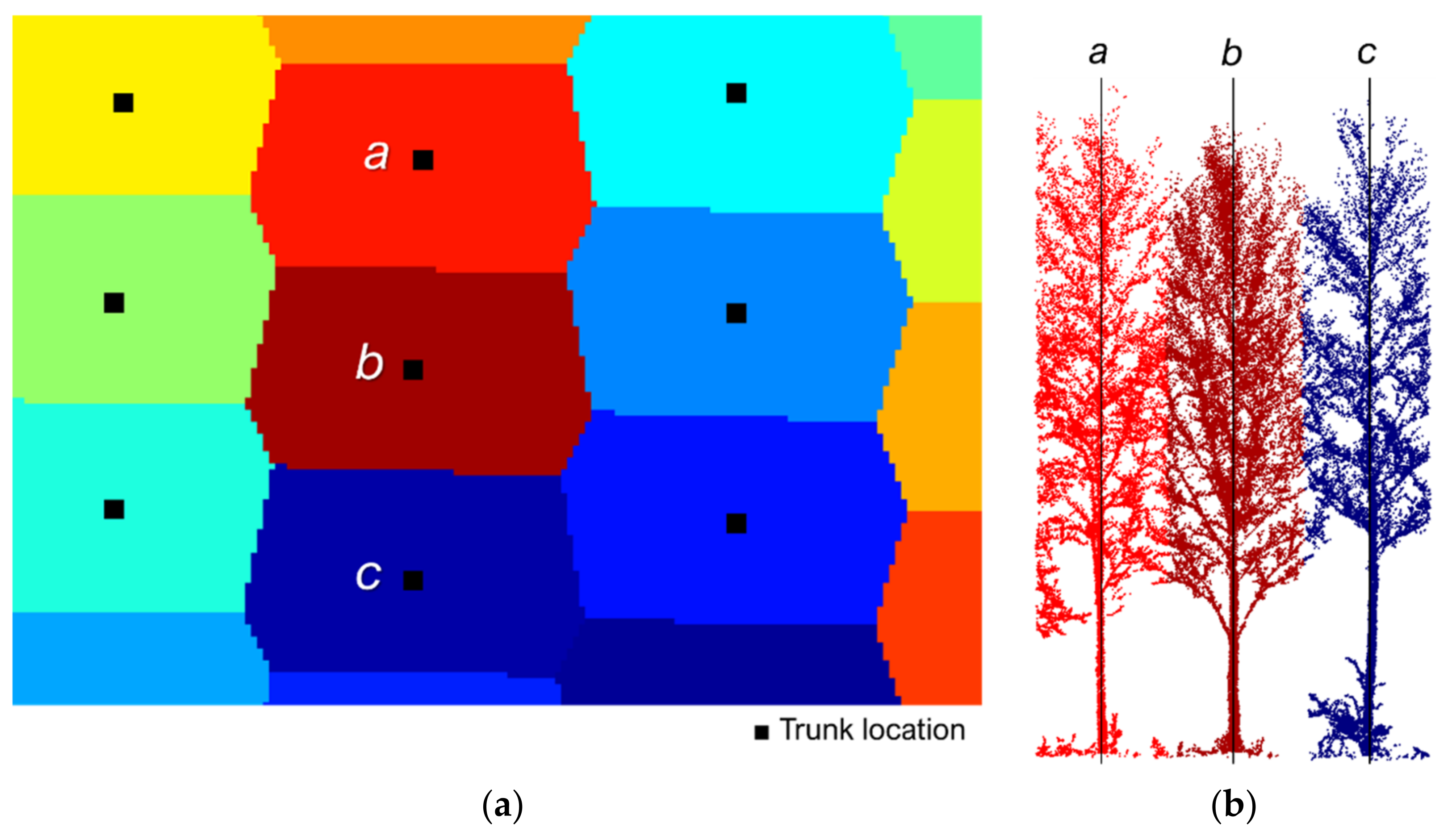

3.2. Tree Localization and Segmentation

3.3. Point Cloud Quality Assessment

4. Experimental Results

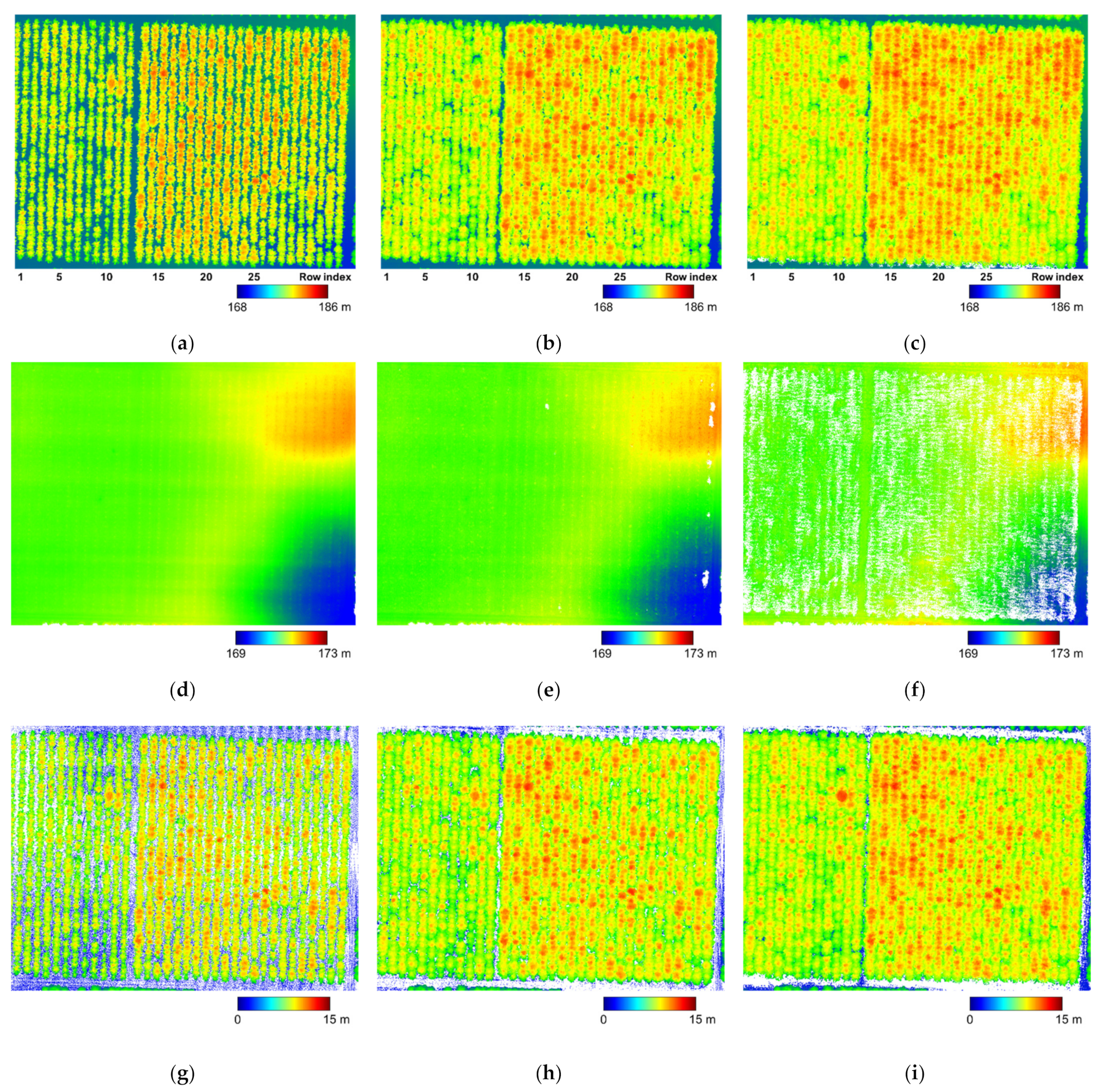

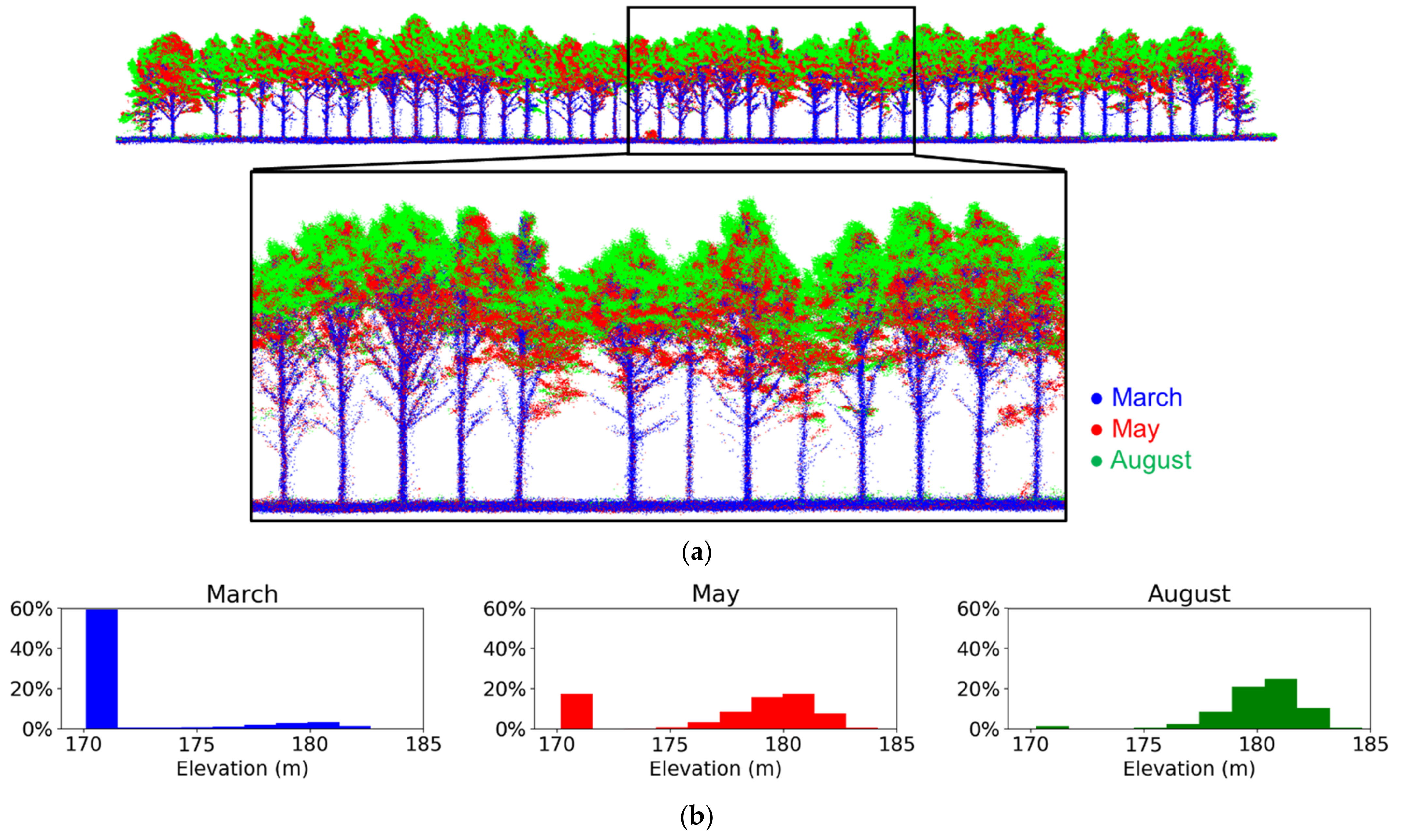

4.1. UAV LiDAR Data under Different Leaf Cover Scenarios

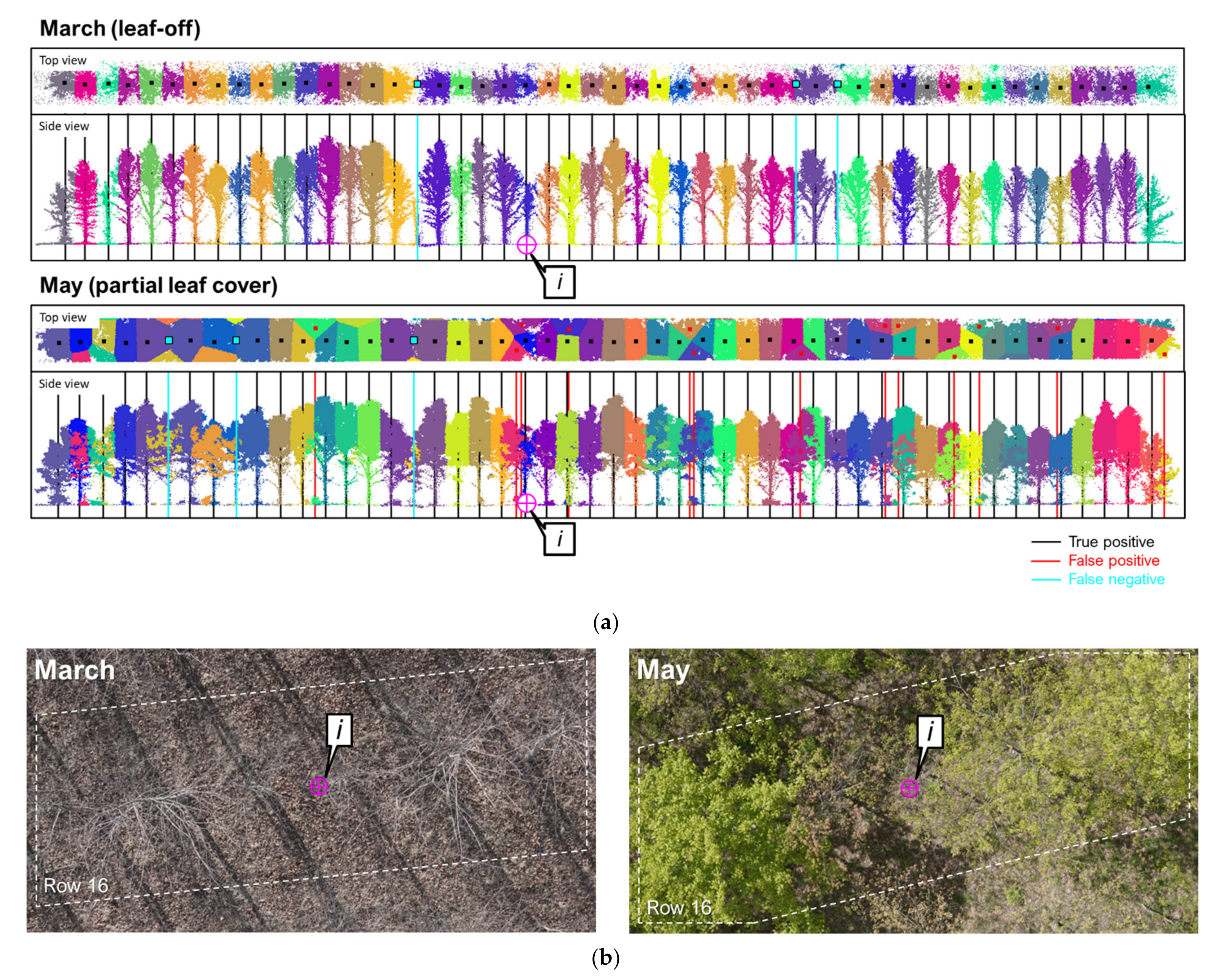

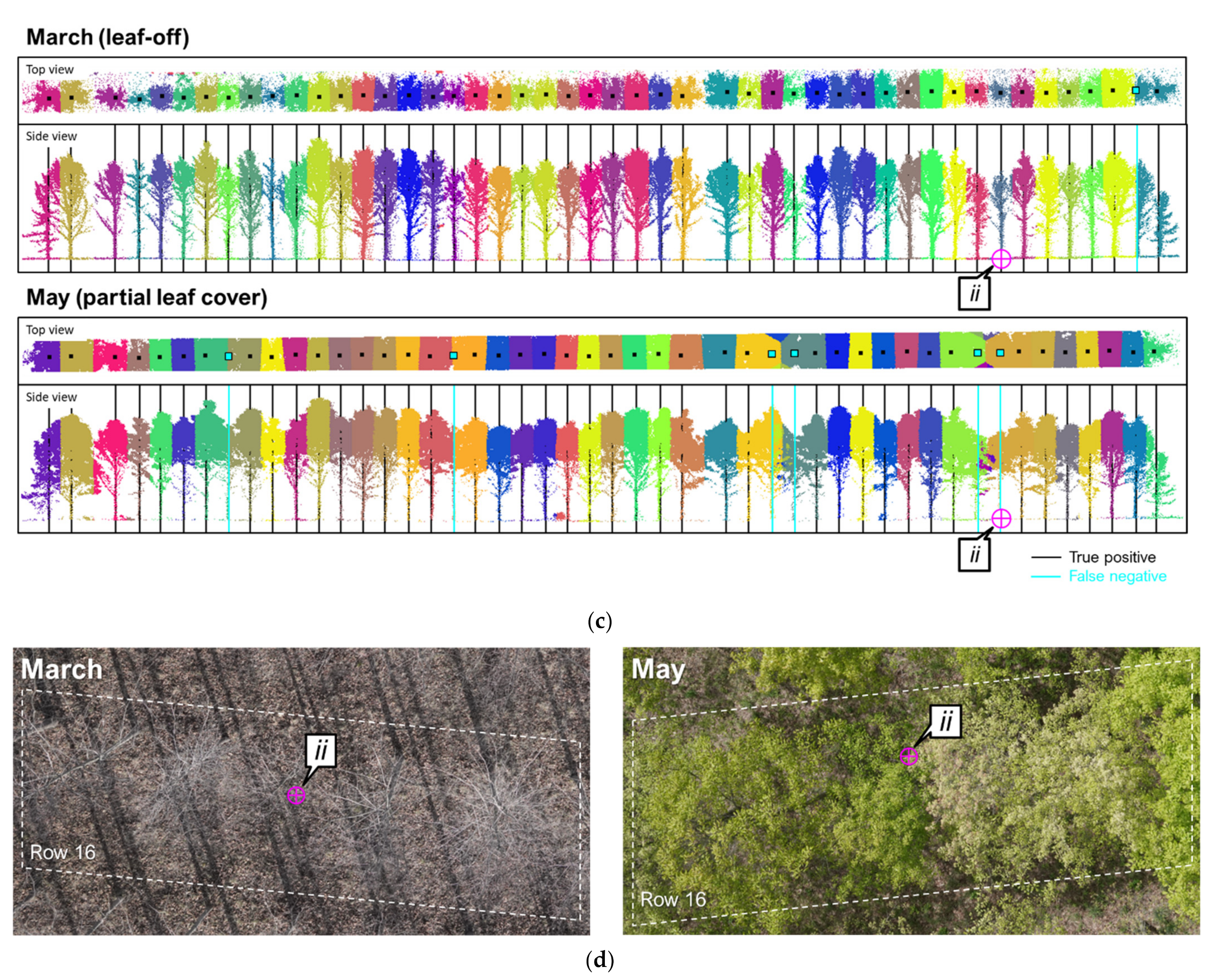

4.2. Tree Localization and Segmentation Results for Different Leaf Cover Scenarios

4.3. Quantitative Relative Quality Assessment of Multi-Temporal Point Clouds

5. Discussion

- Rigorous system calibration ensures the quality of multi-temporal LiDAR point clouds. It is also key to reconstructing a wide swath across the flying direction, which yields a high sidelap percentage and, in turn, a high point density in the derived point cloud.

- The proposed trunk localization approach uses both point density and height. Compared with DBSCAN, which relies solely on point density, it is more reliable when dealing with noisy and sparse point clouds.
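
The contrast between a density-only criterion and a combined density-and-height criterion can be illustrated with a minimal sketch. This is not the authors' algorithm and it is not DBSCAN: it simply bins height-normalized points into square XY cells and compares two selection rules. The function names, the cell size, and both thresholds (`min_count`, `min_height`) are hypothetical choices made for illustration, and the point data are synthetic.

```python
from collections import defaultdict


def cell_statistics(points, cell_size):
    """Bin (x, y, height) points into square XY cells.

    Returns {cell_index: (point_count, max_height)}.
    """
    stats = defaultdict(lambda: [0, 0.0])
    for x, y, h in points:
        key = (int(x // cell_size), int(y // cell_size))
        stats[key][0] += 1
        stats[key][1] = max(stats[key][1], h)
    return {key: (count, hmax) for key, (count, hmax) in stats.items()}


def trunk_candidates(points, cell_size=0.5, min_count=5, min_height=None):
    """Select cells that pass a density test and, optionally, a height test.

    With min_height=None only point density is used (hypothetical
    density-only rule); otherwise a cell must also contain a point at
    or above min_height.
    """
    stats = cell_statistics(points, cell_size)
    return sorted(
        key
        for key, (count, hmax) in stats.items()
        if count >= min_count and (min_height is None or hmax >= min_height)
    )


# Synthetic, height-normalized points: a tall vertical trunk and a dense low shrub.
trunk = [(0.2, 0.2, 0.5 * i) for i in range(1, 11)]    # heights 0.5 to 5.0
shrub = [(2.2, 2.2, 0.1 + 0.03 * i) for i in range(10)]  # heights below 0.4
points = trunk + shrub

density_only = trunk_candidates(points)                  # keeps both cells
density_and_height = trunk_candidates(points, min_height=2.0)  # keeps the trunk only
```

In this toy setting the dense shrub passes the density-only rule but is rejected once a height condition is added, which is the kind of false positive the bullet above attributes to density-only clustering.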

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| | 13 March 2021 | 11 May 2021 | 2 August 2021 |
|---|---|---|---|
| Number of flight lines | 12 | 12 | 12 |
| Flying height (m) | 40 | 40 | 40 |
| Lateral distance (m) | 11 | 11 | 11 |
| Ground speed (m/s) | 3.5 | 3.5 | 3.5 |
| Sidelap percentage (%) | 95 | 95 | 95 |
| Duration (s) | 650 | 661 | 655 |
| Number of images captured | 451 | 465 | 484 |
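
The lateral distance and sidelap percentage in the flight configuration are linked through the swath width. The sketch below assumes the common definition sidelap = 1 − (lateral distance / swath width). The swath width itself is not listed in the table, so the value computed here is only what that definition implies, not a reported specification. The function names are illustrative.

```python
def implied_swath_width(lateral_distance_m, sidelap):
    """Swath width W satisfying sidelap = 1 - lateral_distance / W (assumed definition)."""
    if not 0 <= sidelap < 1:
        raise ValueError("sidelap must be a fraction in [0, 1)")
    return lateral_distance_m / (1.0 - sidelap)


def sidelap_fraction(lateral_distance_m, swath_width_m):
    """Fraction of a swath that overlaps the neighboring flight line."""
    return 1.0 - lateral_distance_m / swath_width_m


# Values from the flight configuration: 11 m lateral distance, 95% sidelap.
swath = implied_swath_width(11.0, 0.95)  # approximately 220 m under the assumed definition
```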

| Dataset | Total Points (Million) | Bare Earth Points (Million) | Above-Ground Points (Million) | Bare Earth (%) | Above-Ground (%) |
|---|---|---|---|---|---|
| March (leaf-off) | 143.7 | 121.4 | 22.3 | 84 | 16 |
| May (partial leaf cover) | 116.2 | 40.8 | 75.4 | 35 | 65 |
| August (full leaf cover) | 112.6 | 7.5 | 105.1 | 7 | 93 |
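
The percentage columns follow directly from the bare earth and above-ground point counts. A short check, using only the counts listed in the table (the dictionary layout and function name are illustrative):

```python
# Point counts in millions, copied from the table above.
counts = {
    "March (leaf-off)": {"bare_earth": 121.4, "above_ground": 22.3},
    "May (partial leaf cover)": {"bare_earth": 40.8, "above_ground": 75.4},
    "August (full leaf cover)": {"bare_earth": 7.5, "above_ground": 105.1},
}


def class_percentages(bare_earth, above_ground):
    """Return (bare earth %, above-ground %) rounded to whole percent."""
    total = bare_earth + above_ground
    return round(100 * bare_earth / total), round(100 * above_ground / total)


percentages = {name: class_percentages(**c) for name, c in counts.items()}
# March -> (84, 16), May -> (35, 65), August -> (7, 93)
```

The share of bare earth returns drops from 84% to 7% as leaf cover develops, even though the flight configuration is the same on all three dates.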

| Point Cloud | Dataset | 25th Percentile (points/m²) | Median (points/m²) | 75th Percentile (points/m²) |
|---|---|---|---|---|
| Original point cloud | March (leaf-off) | 1000 | 3600 | 5500 |
| Original point cloud | May (partial leaf cover) | 1100 | 2500 | 4500 |
| Original point cloud | August (full leaf cover) | 1000 | 2500 | 4600 |
| Bare earth point cloud | March (leaf-off) | 900 | 3300 | 4900 |
| Bare earth point cloud | May (partial leaf cover) | 600 | 1100 | 1700 |
| Bare earth point cloud | August (full leaf cover) | 100 | 200 | 600 |

| Approach | Metric | March, Plot 119 | March, Plot 115 | March, Total | May, Plot 119 | May, Plot 115 | May, Total | August, Plot 119 | August, Plot 115 | August, Total |
|---|---|---|---|---|---|---|---|---|---|---|
| | Total number of trees | 585 | 1080 | 1665 | 585 | 1080 | 1665 | 585 | 1080 | 1665 |
| Proposed approach | True positive | 567 | 1034 | 1601 | 539 | 923 | 1462 | 0 | 0 | 0 |
| Proposed approach | False positive | 0 | 0 | 0 | 151 | 29 | 180 | 2 | 2 | 2 |
| Proposed approach | False negative | 18 | 46 | 64 | 46 | 157 | 203 | 585 | 1080 | 1665 |
| Proposed approach | Precision | 1.00 | 1.00 | 1.00 | 0.78 | 0.97 | 0.89 | 0.00 | 0.00 | 0.00 |
| Proposed approach | Recall | 0.97 | 0.96 | 0.96 | 0.92 | 0.85 | 0.88 | 0.00 | 0.00 | 0.00 |
| Proposed approach | F1 score | 0.98 | 0.98 | 0.98 | 0.85 | 0.91 | 0.88 | N/A | N/A | N/A |
| DBSCAN | True positive | 584 | 1078 | 1662 | 524 | 853 | 1377 | 8 | 1 | 9 |
| DBSCAN | False positive | 6 | 0 | 6 | 225 | 45 | 270 | 291 | 91 | 382 |
| DBSCAN | False negative | 1 | 2 | 3 | 61 | 227 | 288 | 577 | 1079 | 1656 |
| DBSCAN | Precision | 0.99 | 1.00 | 1.00 | 0.70 | 0.95 | 0.84 | 0.03 | 0.01 | 0.02 |
| DBSCAN | Recall | 1.00 | 1.00 | 1.00 | 0.90 | 0.79 | 0.83 | 0.01 | 0.00 | 0.01 |
| DBSCAN | F1 score | 0.99 | 1.00 | 1.00 | 0.79 | 0.86 | 0.83 | 0.02 | 0.00 | 0.01 |
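
The precision, recall, and F1 rows can be reproduced from the true positive, false positive, and false negative counts with the standard definitions. The helper below is an illustrative sketch, not code from the study. It returns `None` for F1 when both precision and recall are zero, mirroring the N/A entries in the table.

```python
def detection_scores(tp, fp, fn):
    """Return (precision, recall, f1) from detection counts.

    f1 is None when precision and recall are both zero, since the
    harmonic mean is undefined in that case.
    """
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return precision, recall, None
    return precision, recall, 2 * precision * recall / (precision + recall)


# "Total" columns from the table above: (TP, FP, FN).
proposed_march = detection_scores(1601, 0, 64)
proposed_may = detection_scores(1462, 180, 203)
proposed_august = detection_scores(0, 2, 1665)
dbscan_may = detection_scores(1377, 270, 288)

# Rounded to two decimals, proposed_may gives (0.89, 0.88, 0.88)
# and dbscan_may gives (0.84, 0.83, 0.83), matching the table.
```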

| Dataset | Statistic | Plot 119 | | Plot 115 | | Total | |
|---|---|---|---|---|---|---|---|
| March (leaf-off) | Mean | 0.02 | 0.02 | −0.02 | 0.01 | −0.01 | 0.01 |
| March (leaf-off) | Std. Dev. | 0.08 | 0.07 | 0.08 | 0.07 | 0.08 | 0.07 |
| March (leaf-off) | RMSE | 0.08 | 0.08 | 0.08 | 0.07 | 0.08 | 0.07 |
| May (partial leaf cover) | Mean | 0.01 | 0.02 | −0.02 | 0.02 | −0.01 | 0.02 |
| May (partial leaf cover) | Std. Dev. | 0.12 | 0.08 | 0.08 | 0.09 | 0.10 | 0.09 |
| May (partial leaf cover) | RMSE | 0.12 | 0.08 | 0.09 | 0.09 | 0.10 | 0.09 |
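
The three statistics in the table are related: when the standard deviation is taken about the mean with a divisor of n (the population form, which is an assumption here since the table does not state it), RMSE² = mean² + std². The sketch below verifies the identity on a small, made-up list of differences and then applies it to one rounded mean and standard deviation pair from the table.

```python
import math
import statistics


def summarize(differences):
    """Return (mean, population standard deviation, RMSE) of a list of differences."""
    mean = statistics.fmean(differences)
    std = statistics.pstdev(differences)
    rmse = math.sqrt(statistics.fmean([d * d for d in differences]))
    return mean, std, rmse


# Hypothetical differences, used only to demonstrate the identity.
sample = [0.10, -0.05, 0.03, -0.02, 0.04]
mean, std, rmse = summarize(sample)
identity_gap = abs(rmse - math.hypot(mean, std))  # zero up to floating-point error

# Rounded table values for March, Plot 119 (first column): mean 0.02, std 0.08.
rmse_from_table = round(math.hypot(0.02, 0.08), 2)
```

Because the tabulated values are rounded to two decimals, an RMSE recomputed this way can differ from the listed one in the last digit.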

| Reference | Source | Number of Observations | | Parameter | Std. Dev. | Parameter | Std. Dev. |
|---|---|---|---|---|---|---|---|
| March (leaf-off) | May (partial leaf cover) | 1538 | 0.085 | 0.011 | 0.002 | −0.006 | 0.002 |

| Reference | Source | Number of Observations | | Parameter | Std. Dev. |
|---|---|---|---|---|---|
| March (leaf-off) | May (partial leaf cover) | 11,918 | 0.021 | 0.011 | 1.91 |
| March (leaf-off) | August (full leaf cover) | 11,098 | 0.071 | 0.109 | 6.75 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lin, Y.-C.; Liu, J.; Fei, S.; Habib, A. Leaf-Off and Leaf-On UAV LiDAR Surveys for Single-Tree Inventory in Forest Plantations. Drones 2021, 5, 115. https://doi.org/10.3390/drones5040115
