Quantifying the Spatial Variability of Annual and Seasonal Changes in Riverscape Vegetation Using Drone Laser Scanning

Abstract

1. Introduction

2. Materials and Methods

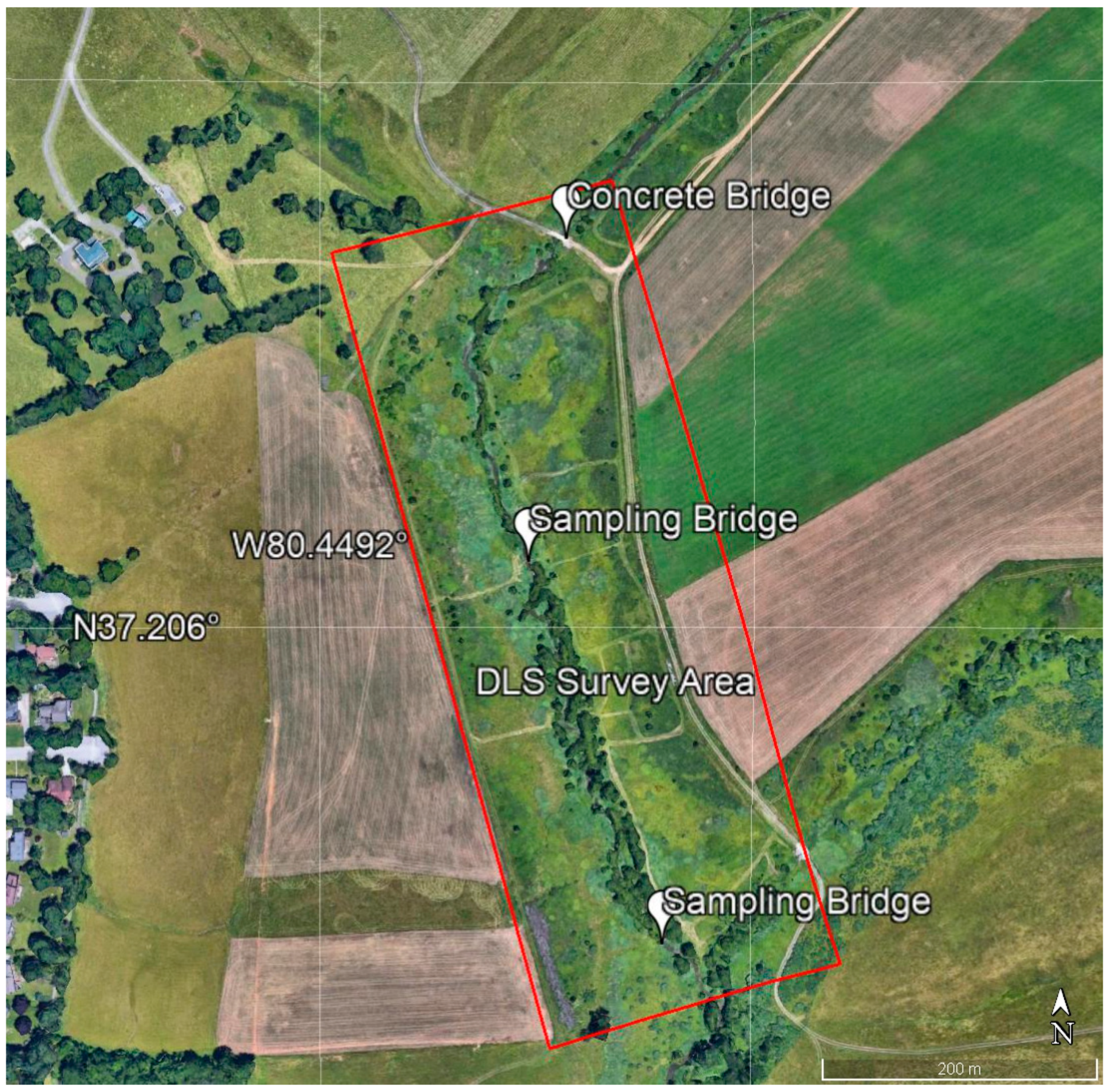

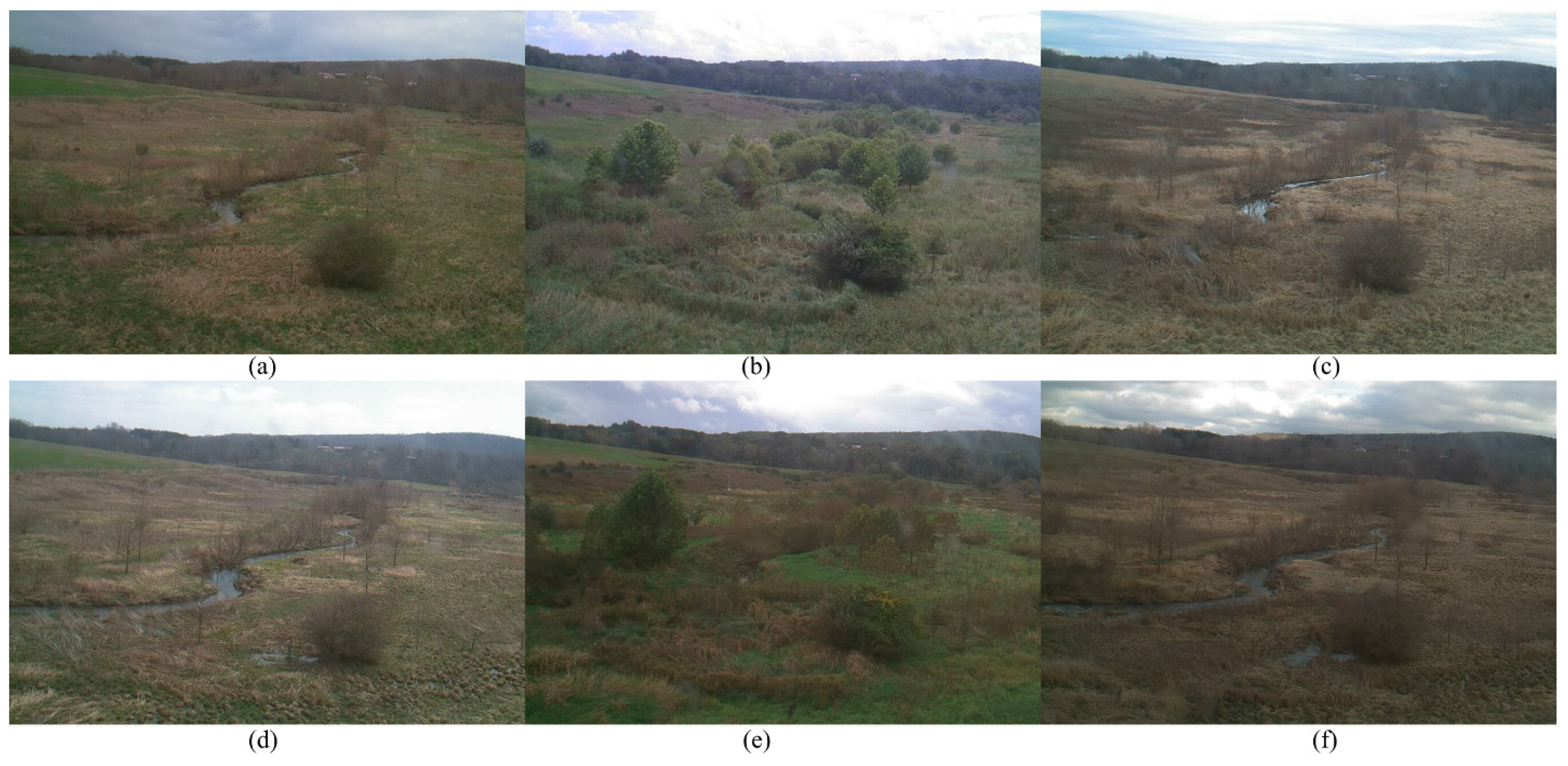

2.1. Study Area

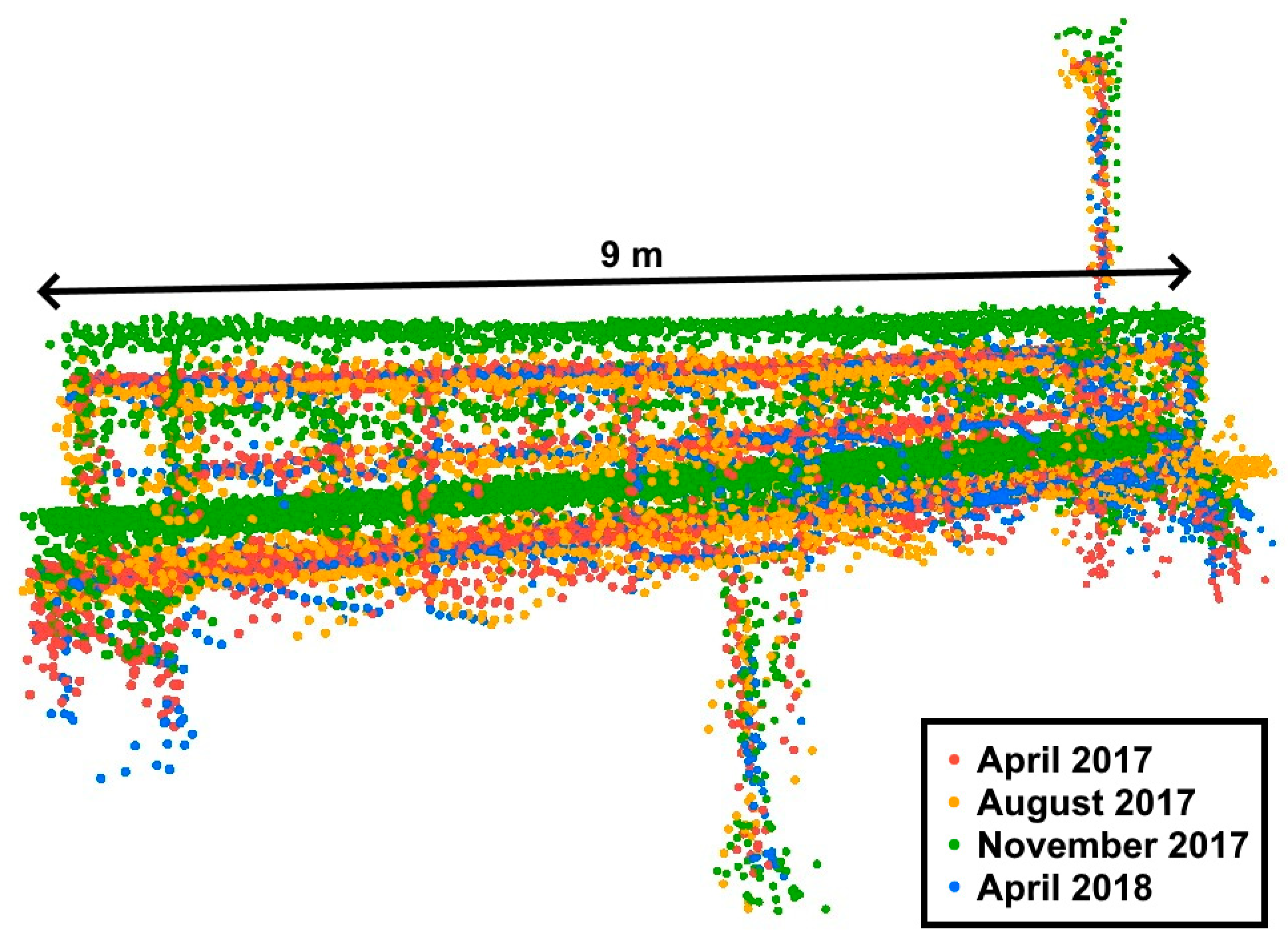

2.2. Lidar Data Collection

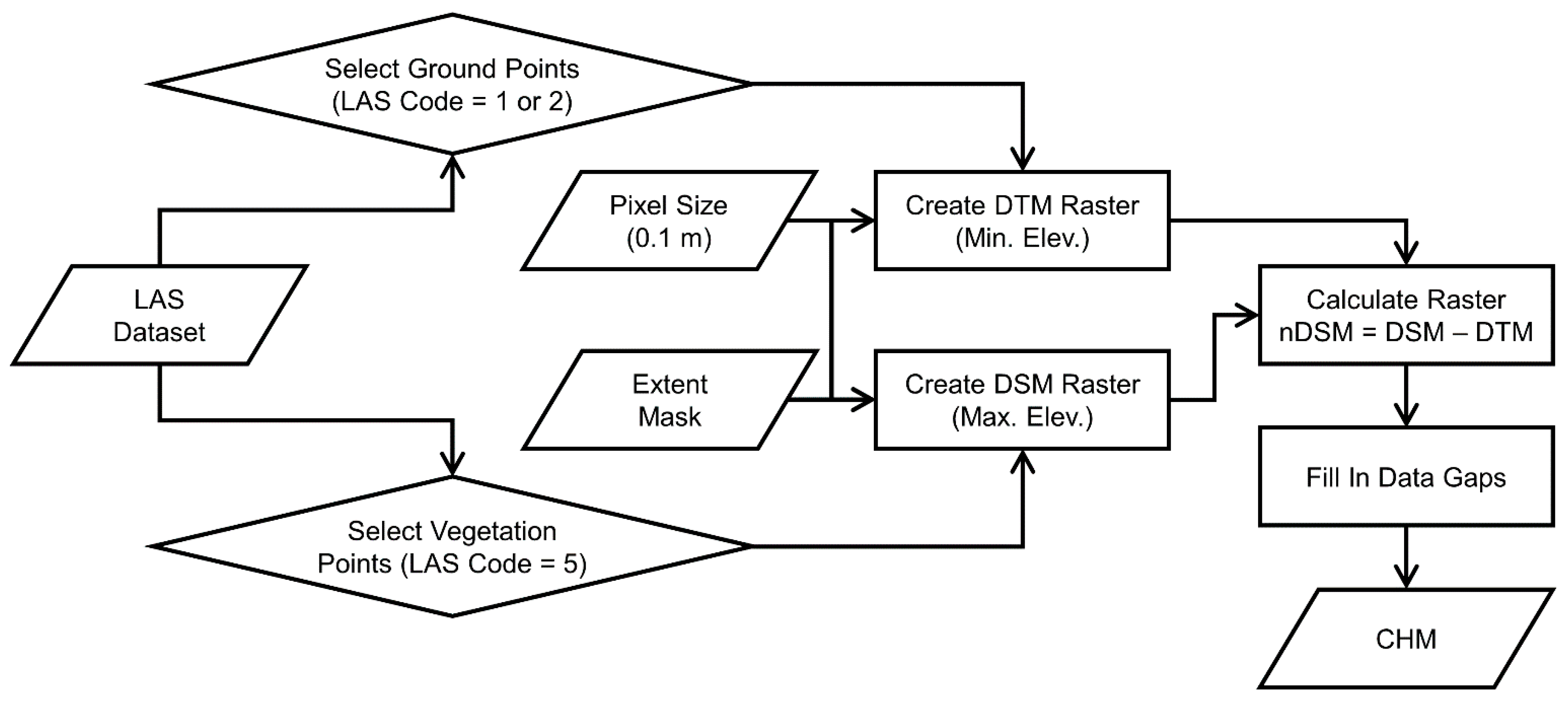

2.3. Lidar Data Preprocessing

2.4. Lidar Data Classification

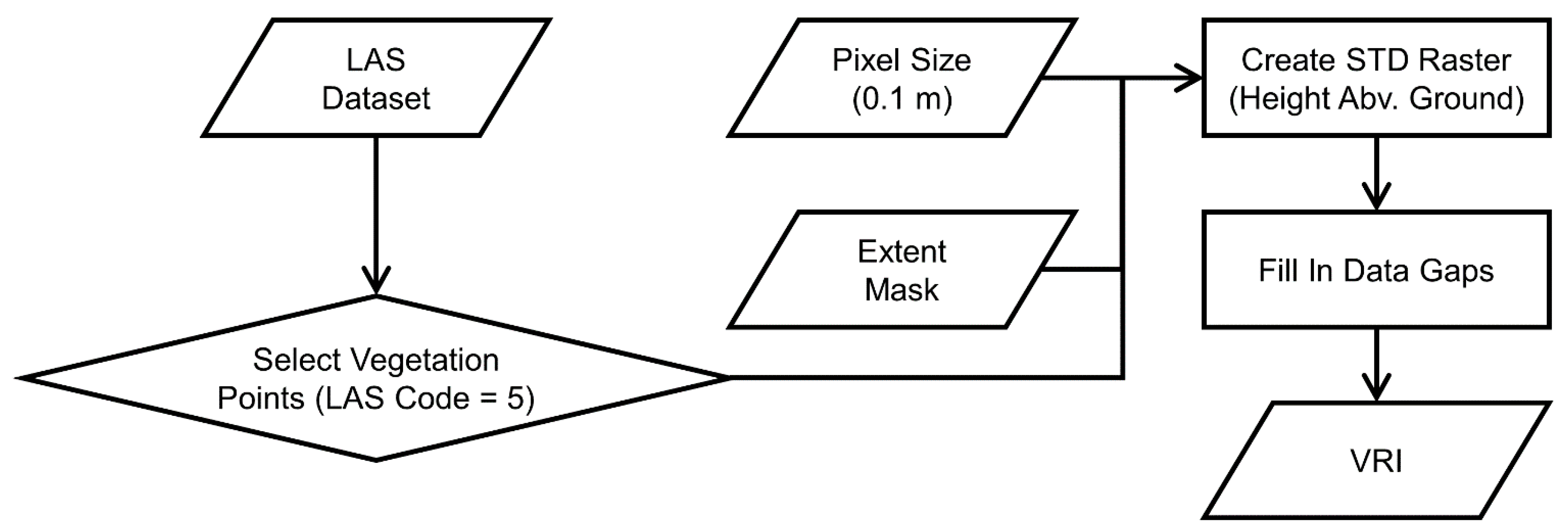

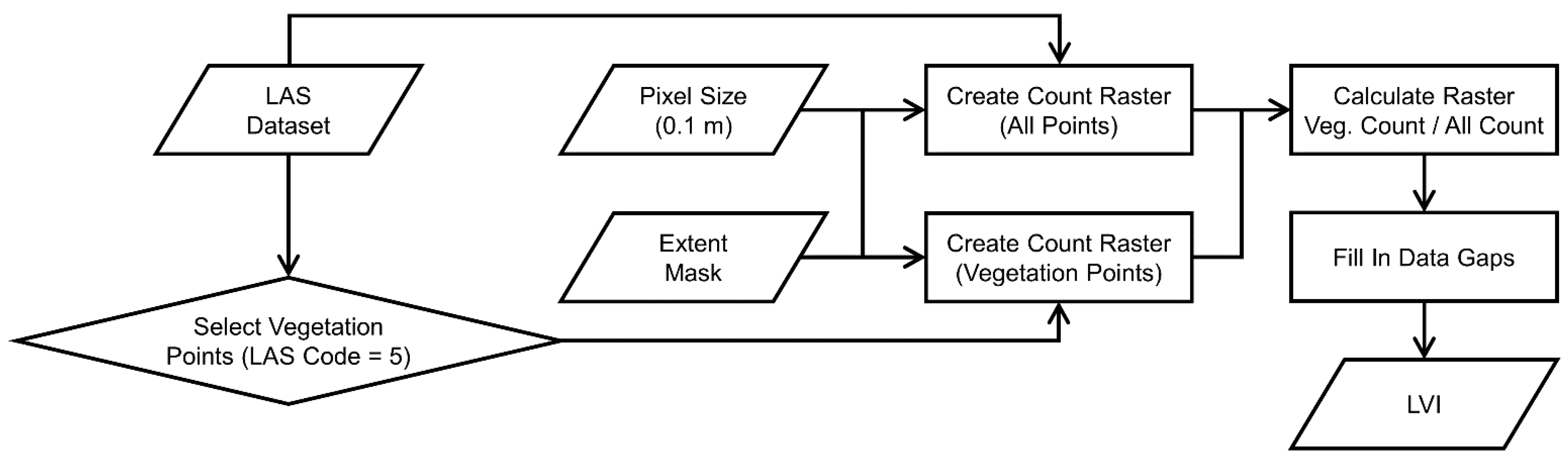

2.5. Lidar Vegetation Metrics

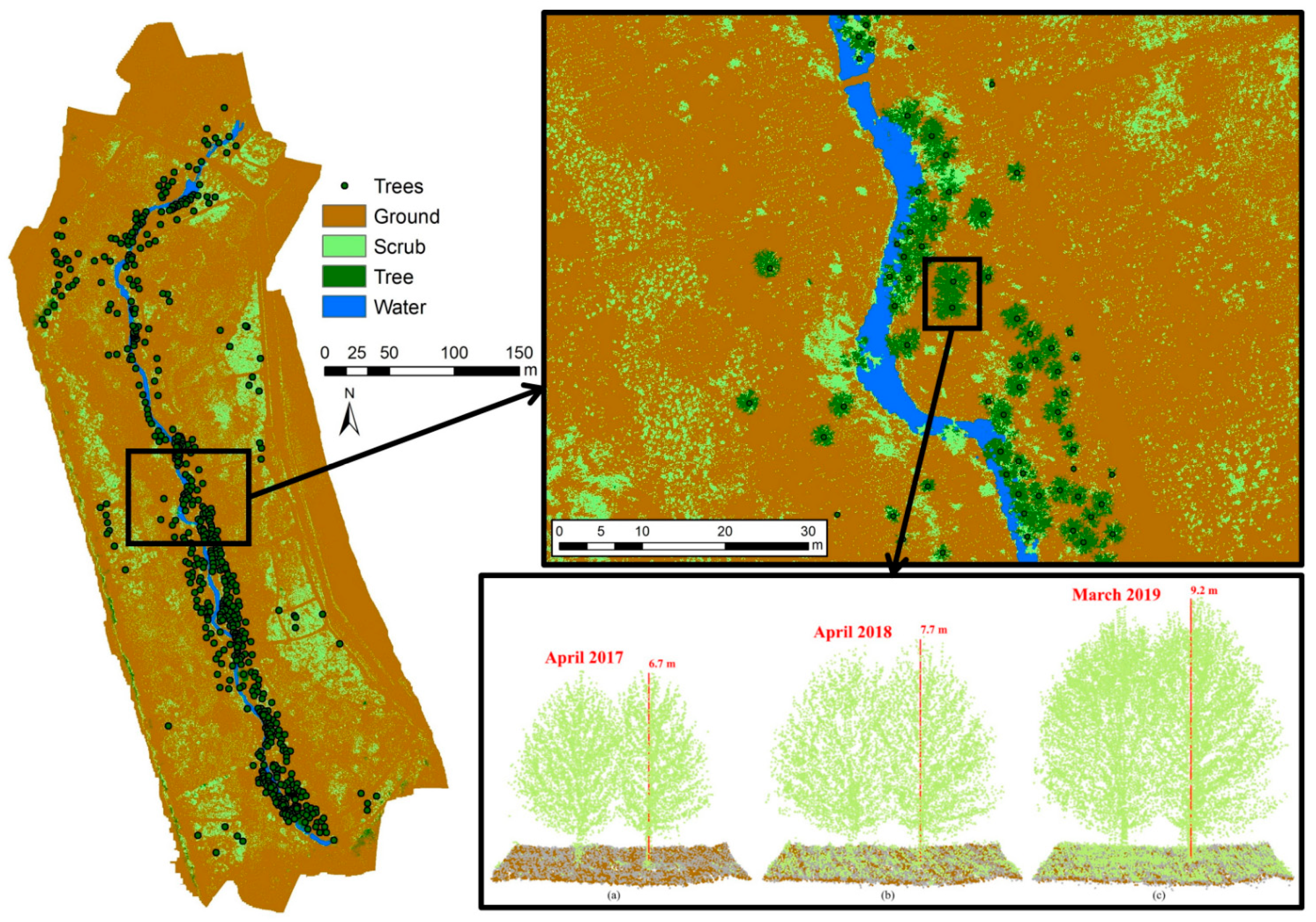

2.6. Vegetation Classification, Distance to Water, and Tree Identification

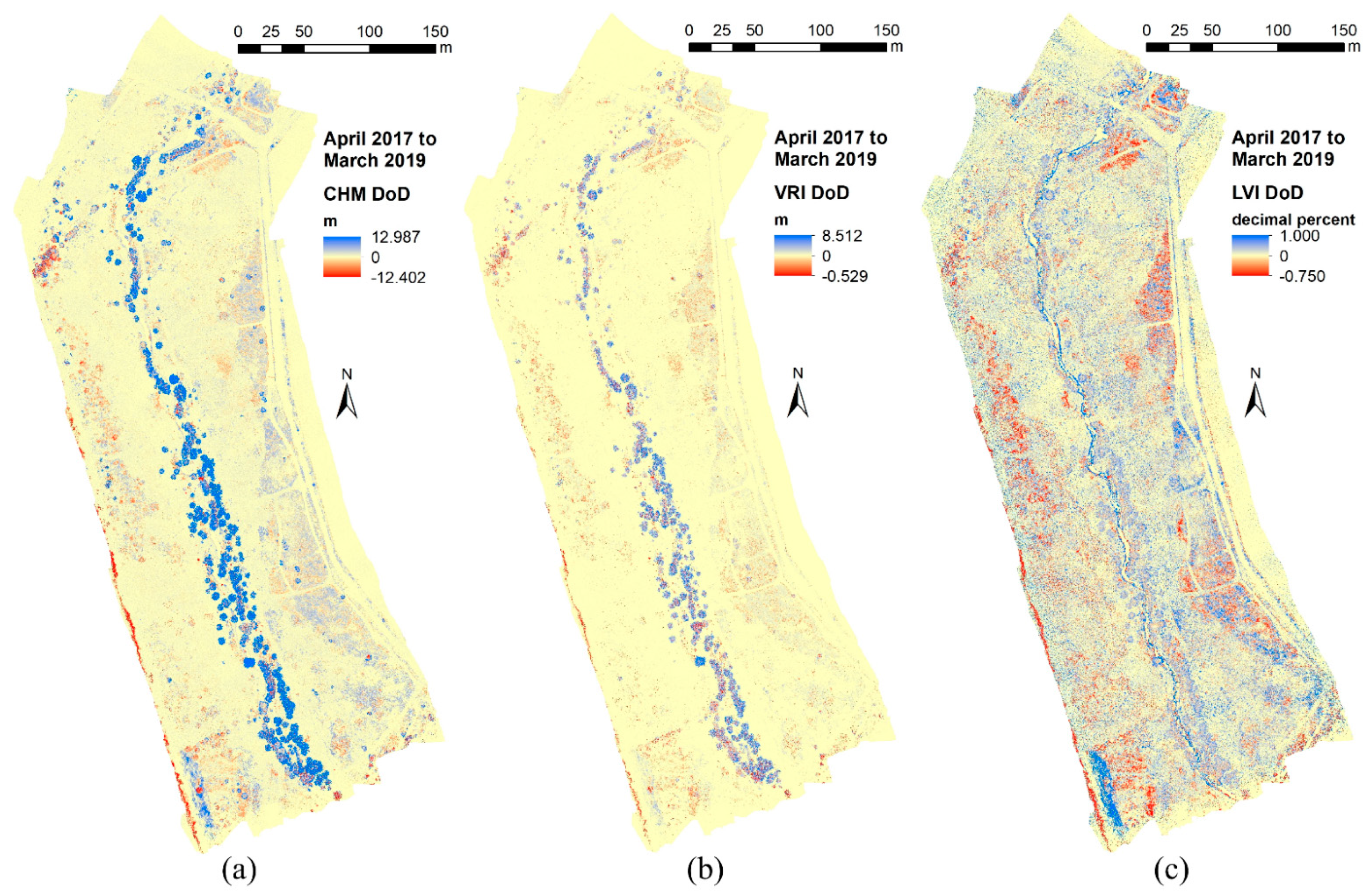

2.7. Annual and Seasonal Change Detection

3. Results

3.1. Point Density Comparison and Elevation Bias between the Six Lidar Scans

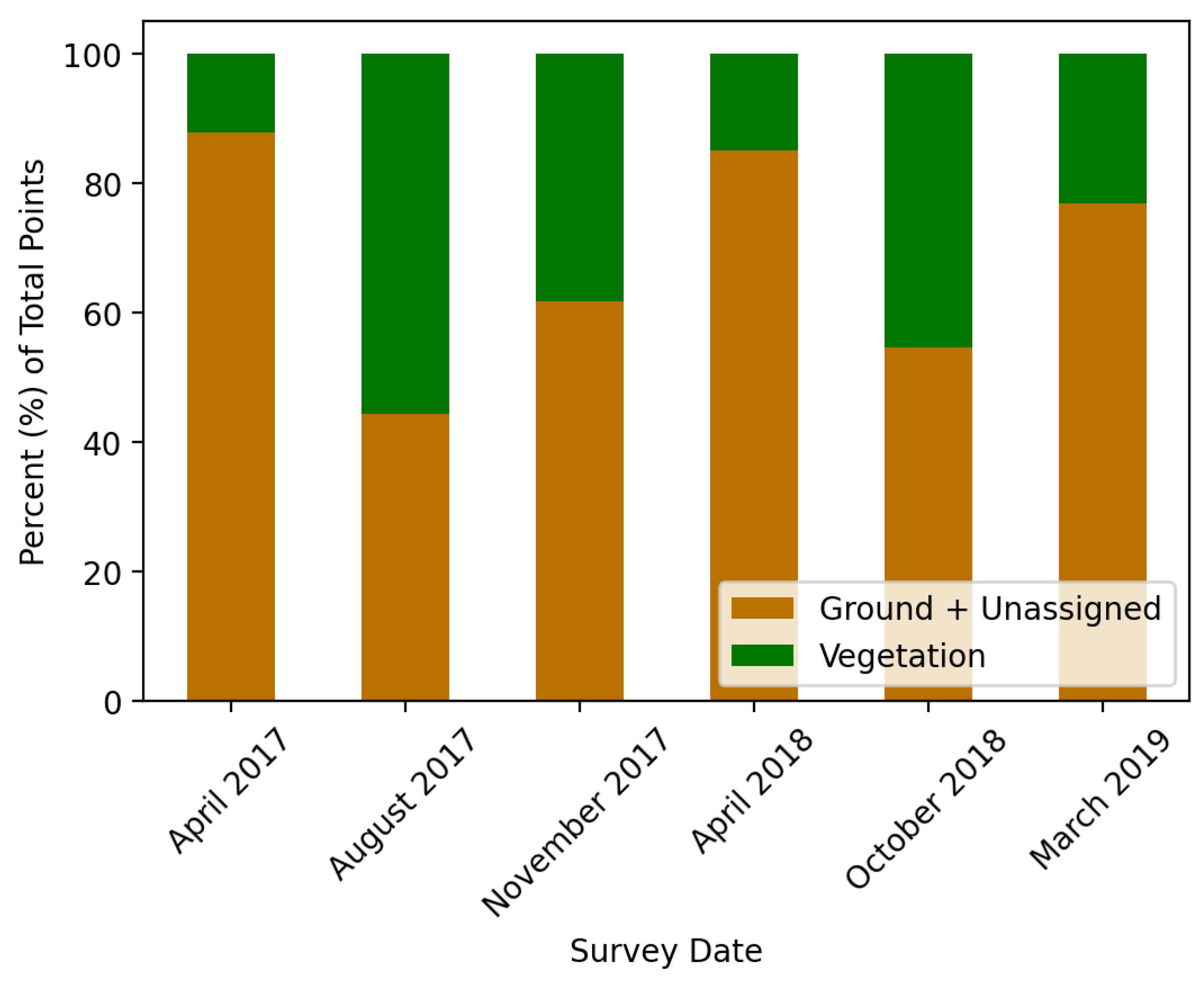

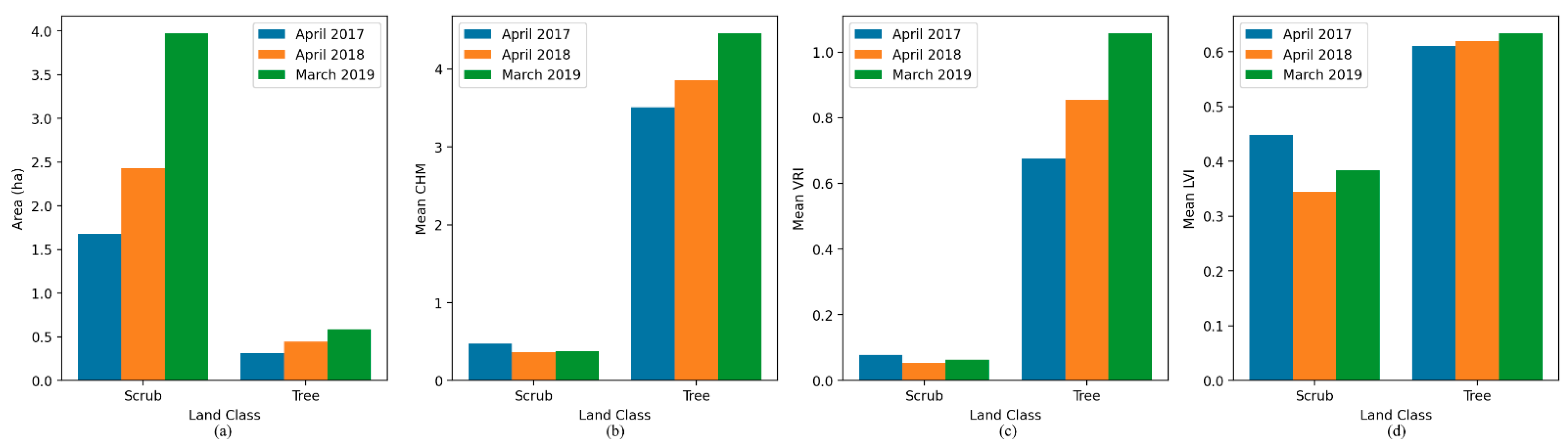

3.2. Annual and Seasonal Change of Lidar Point Classifications

3.3. Annual Change of Maximum Tree Height

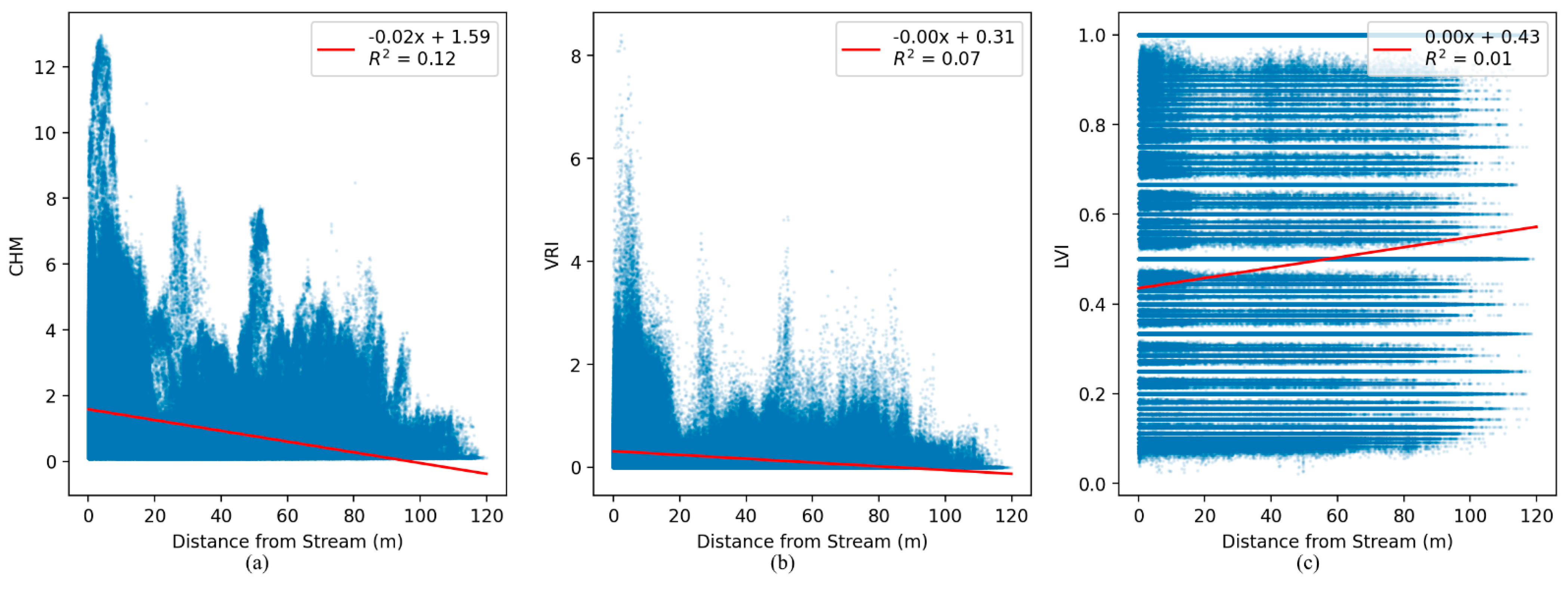

3.4. Baseline Lidar Vegetation Metrics

3.5. Annual Change of Lidar Vegetation Metrics

3.6. Seasonal Change of Lidar Vegetation Metrics

4. Discussion

Limitations with the Current Study and Future Research

5. Conclusions

- Tree areas were characterized mainly by annual change, while scrub areas were dominated by seasonal variability.

- Trees closer to the stream (within 20 m) grew faster than trees farther from the stream (greater than 20 m), although this trend was not observed with scrub.

- Height (CHM) and roughness (VRI) showed very similar trends; the two differed mainly in the scale of each metric between scrub and trees.

- Height (CHM) and roughness (VRI) were more influenced by annual change.

- Density (LVI) was more influenced by seasonal variability.

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Class | Definition |
|---|---|
| Ground | Points most likely representing bare earth topography |
| Unassigned | Points with 0 m < height above ground < 0.1 m |
| Vegetation | Points with 0.1 m < height above ground < 15 m |
| Building | Points identified as human-made or built structures (e.g., bridges or cars) |
| Noise | Points identified as noise (e.g., bird-hits or lidar artifacts) |
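The height thresholds in the class definitions above can be sketched as a simple rule. This is a minimal illustration, not the classification workflow used in the study: the function name and the "Unspecified" label are hypothetical, and ground, building, and noise points are assumed to be identified by other means before this step.

```python
# Thresholds taken from the class table; names are illustrative assumptions.
UNASSIGNED_MAX_M = 0.1
VEGETATION_MAX_M = 15.0


def classify_by_height(height_above_ground_m):
    """Label a non-ground point from its height above ground (in meters)."""
    h = height_above_ground_m
    if 0.0 < h < UNASSIGNED_MAX_M:
        return "Unassigned"
    if UNASSIGNED_MAX_M < h < VEGETATION_MAX_M:
        return "Vegetation"
    # The table uses strict inequalities, so boundary values and heights
    # outside 0-15 m are not assigned a class here.
    return "Unspecified"


if __name__ == "__main__":
    for h in (0.05, 2.0, 20.0):
        print(h, classify_by_height(h))
```

Heights at exactly 0.1 m or 15 m fall outside both strict ranges in the table, so the sketch leaves them unlabeled rather than guessing a convention.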

| Lidar Vegetation Metric | Definition | Value Range | Units |
|---|---|---|---|
| Canopy height model (CHM) | Measure of vegetation height: (veg. elev.) − (ground elev.) | 0 to ~13 | Meters |
| Vegetative roughness index (VRI) | Measure of vegetative roughness: st. dev. of vegetation height | 0 to ~9 | Meters |
| Lidar vegetation index (LVI) | Measure of vegetation density: (count veg.)/(count all points) | 0 to 1 | Decimal fraction |
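The three metric definitions above can be illustrated for a single raster cell. This is a sketch under stated assumptions: the function name is hypothetical, the cell's CHM value is taken as the maximum vegetation height, and the population standard deviation is used for VRI; the table itself does not specify either choice.

```python
from statistics import pstdev


def cell_metrics(veg_heights_m, n_all_points):
    """Compute CHM, VRI, and LVI for one raster cell.

    veg_heights_m: heights above ground (m) of the vegetation points in the cell,
                   i.e. (veg. elev.) - (ground elev.) for each point.
    n_all_points:  count of all lidar points in the cell (any class).
    """
    if n_all_points == 0:
        return None  # no data in this cell
    if not veg_heights_m:
        return {"chm": 0.0, "vri": 0.0, "lvi": 0.0}
    chm = max(veg_heights_m)                 # assumption: tallest return per cell
    vri = pstdev(veg_heights_m)              # assumption: population st. dev.
    lvi = len(veg_heights_m) / n_all_points  # vegetation count / all points
    return {"chm": chm, "vri": vri, "lvi": lvi}


if __name__ == "__main__":
    # Hypothetical cell: two vegetation points out of four points in total.
    print(cell_metrics([1.0, 3.0], 4))
```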

| Scan Date | Point Count | Point Density (All Returns/m²) | Pulse Density (First Returns/m²) |
|---|---|---|---|
| April 2017 | 41,661,008 | 502.43 | 492.39 |
| August 2017 | 42,148,141 | 508.30 | 501.89 |
| November 2017 | 42,389,739 | 511.21 | 490.65 |
| April 2018 | 42,994,259 | 518.51 | 501.61 |
| October 2018 | 42,584,883 | 513.57 | 500.07 |
| March 2019 | 51,135,652 | 616.69 | 590.30 |
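Point density is the point count divided by the scanned area, so the counts and densities above imply a common extent. The sketch below back-calculates that area from the tabulated values; the area itself is not stated in this section and the result is an inference, and the variable and function names are illustrative.

```python
# (point count, point density in all returns per m^2), copied from the table.
SCANS = {
    "April 2017": (41_661_008, 502.43),
    "August 2017": (42_148_141, 508.30),
    "November 2017": (42_389_739, 511.21),
    "April 2018": (42_994_259, 518.51),
    "October 2018": (42_584_883, 513.57),
    "March 2019": (51_135_652, 616.69),
}


def implied_area_m2(point_count, density_per_m2):
    """Area implied by density = count / area, i.e. area = count / density."""
    return point_count / density_per_m2


AREAS = {date: implied_area_m2(n, d) for date, (n, d) in SCANS.items()}

if __name__ == "__main__":
    for date, area in AREAS.items():
        print(f"{date}: {area:,.0f} m^2")
```

All six scans give nearly the same implied area, which is consistent with the densities having been computed over one fixed extent.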

| Scan Date | Mean Z Bias (m), Bridge DEMs | Mean Z Bias (m), Ground DTMs |
|---|---|---|
| April 2017 | Baseline | Baseline |
| August 2017 | −0.02 | 0.25 |
| November 2017 | −0.01 | 0.06 |
| April 2018 | 0.01 | −0.01 |
| October 2018 | −0.02 | 0.17 |
| March 2019 | −0.03 | −0.03 |
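A mean elevation bias relative to a baseline scan can be sketched as the average cell-by-cell difference between two co-registered rasters. This is only an illustration: the function name is hypothetical, the rasters are assumed to be flattened to equal-length lists with `None` marking no-data cells, and the section does not describe how the tabulated values were actually computed.

```python
def mean_z_bias(scan_elev_m, baseline_elev_m):
    """Mean of (scan - baseline) over cells where both rasters have data."""
    if len(scan_elev_m) != len(baseline_elev_m):
        raise ValueError("rasters must have the same number of cells")
    diffs = [
        s - b
        for s, b in zip(scan_elev_m, baseline_elev_m)
        if s is not None and b is not None
    ]
    if not diffs:
        raise ValueError("no overlapping cells with data")
    return sum(diffs) / len(diffs)


if __name__ == "__main__":
    # Hypothetical elevations (m); the third cell has no data in the later scan.
    baseline = [10.0, 10.0, 12.0]
    later = [10.25, 10.25, None]
    print(mean_z_bias(later, baseline))
```

A positive value means the later surface sits above the baseline on average; a negative value means it sits below.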

| Vegetation Metric | Scrub Areas: Annual Trend (R²) | Scrub Areas: Seasonal Variability (NRMSE) | Tree Areas: Annual Trend (R²) | Tree Areas: Seasonal Variability (NRMSE) |
|---|---|---|---|---|
| Height (CHM) | 0.16 | 17.8% | 0.70 | 5.1% |
| Roughness (VRI) | 0.08 | 25.6% | 0.70 | 9.2% |
| Density (LVI) | 0.08 | 23.1% | 0.00 | 17.8% |
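One way to read the two statistics above is as the fit and the scatter around a linear trend through the scan dates. The sketch below computes R² from an ordinary least-squares line and an NRMSE from the residuals; normalizing the RMSE by the mean of the observations is an assumption, since this section does not state the normalization used, and all names and sample values are hypothetical.

```python
from math import sqrt


def trend_r2_and_nrmse(t, y):
    """Fit y = slope * t + intercept by least squares; return (R^2, NRMSE).

    NRMSE is the RMSE of the residuals divided by the mean of y
    (an assumed normalization).
    """
    n = len(t)
    if n != len(y) or n < 2:
        raise ValueError("need two or more paired observations")
    t_mean = sum(t) / n
    y_mean = sum(y) / n
    sxx = sum((ti - t_mean) ** 2 for ti in t)
    ss_tot = sum((yi - y_mean) ** 2 for yi in y)
    if sxx == 0 or ss_tot == 0 or y_mean == 0:
        raise ValueError("degenerate input: constant t, constant y, or zero mean")
    slope = sum((ti - t_mean) * (yi - y_mean) for ti, yi in zip(t, y)) / sxx
    intercept = y_mean - slope * t_mean
    ss_res = sum((yi - (slope * ti + intercept)) ** 2 for ti, yi in zip(t, y))
    r2 = 1.0 - ss_res / ss_tot
    nrmse = sqrt(ss_res / n) / y_mean
    return r2, nrmse


if __name__ == "__main__":
    # Hypothetical metric values at six scan times (years since the first scan).
    years = [0.0, 0.33, 0.58, 1.0, 1.5, 1.92]
    metric = [2.0, 2.6, 2.2, 2.4, 3.0, 2.7]
    print(trend_r2_and_nrmse(years, metric))
```

Under this reading, a high R² with a low NRMSE indicates a metric driven mostly by year-over-year change, while a low R² with a high NRMSE indicates one dominated by seasonal swings.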

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Resop, J.P.; Lehmann, L.; Hession, W.C. Quantifying the Spatial Variability of Annual and Seasonal Changes in Riverscape Vegetation Using Drone Laser Scanning. Drones 2021, 5, 91. https://doi.org/10.3390/drones5030091
