1. Introduction

Multirotor drones are widely used in many applications [1]. A multirotor can hover at a fixed position or fly a programmed path while capturing video from a distance. This form of capture is cost-effective and does not require highly trained personnel.

A thermal imaging camera produces an image by detecting infrared (IR) radiation emitted by objects [2,3]. Because the thermal imaging camera uses the objects’ temperature instead of their visible properties, no illumination is required, and consistent imaging day and night is possible. Thermal imaging can also see through many visible obscurants, such as smoke, dust, haze, and light foliage [4]. The long-wavelength (LW) IR band (8–14 μm) has less atmospheric attenuation, and most of the radiation emitted by the human body falls within this band [5]. This allows an LWIR thermal imaging camera to detect human activity day and night. A multirotor equipped with a thermal imaging camera can be used for search and rescue missions in hazardous areas as well as for security and surveillance [6,7]. It is also useful for applications such as wildlife monitoring and agricultural and industrial inspection [8,9]. However, compared to visible-light images, thermal images have lower resolution and provide no texture or color information. The image quality can vary with the climate and surrounding objects. Moreover, the unpredictable motion of the platform poses more challenges than a fixed camera [10]. Thus, suitable intelligent image processing is required to overcome the shortcomings of aerial thermal imaging and retain its advantages.

A growing number of studies address people detection using thermal images captured by a drone [11,12,13,14,15], although no studies have addressed people tracking with the same configuration. In [11], the temperature difference was estimated using the spatial gray level co-occurrence matrix, but humans were captured at a relatively short distance. In [12], a two-stage hot-spot detection approach was proposed to recognize a person with a moving thermal camera, but the dataset was not obtained from a drone. In [13], an autonomous unmanned helicopter platform was used to detect humans with thermal and color imagery. Human and fire detection was studied with optical and thermal sensors from high-altitude unmanned aerial vehicle images [14]. However, in [13] and [14], thermal and visual images were used together for detection. A multi-level method was applied to thermal images obtained by a multirotor for people segmentation [15].

Non-human object tracking from drones using an LWIR thermal camera can be found in [16,17]. In [16], a boat was captured and tracked with the Kalman filter and a constant-velocity motion model. A colored-noise measurement model was adopted to track a small vessel [17]. However, in [16,17], a fixed-wing drone capable of maintaining stable flight was used at sea, which is a high-detection, low-false-alarm environment.

Human detection and tracking using stationary thermal imaging cameras have been studied in [18,19,20,21,22]. A contour-based background subtraction was presented to extract foreground objects [18]. A local adaptive thresholding method was used for pedestrian detection [19]. In [20], humans and animals were detected in difficult weather conditions using YOLO. People were detected and tracked from an aerial thermal view based on the particle filter [21]. The Kalman filter with multi-level segmentation was adopted to track people in thermal images [22]. Various targets in a thermal image database were tracked by a weighted correlation filter [23].

Multiple targets are tracked simultaneously by estimating their kinematic states, such as position, velocity, and acceleration [24]. The interacting multiple model (IMM) estimator, which uses multiple Kalman filters, has been developed [25] and successfully applied to track multiple highly maneuvering targets [26]. Recently, multiple moving vehicles were successfully tracked by a flying multirotor with a visual camera [27]. Data association, which assigns measurements to tracks, is also an important task for tracking multiple targets in a cluttered environment. The nearest neighbor (NN) measurement–track association is the most computationally efficient and has been successfully applied to precision target tracking [27,28,29].

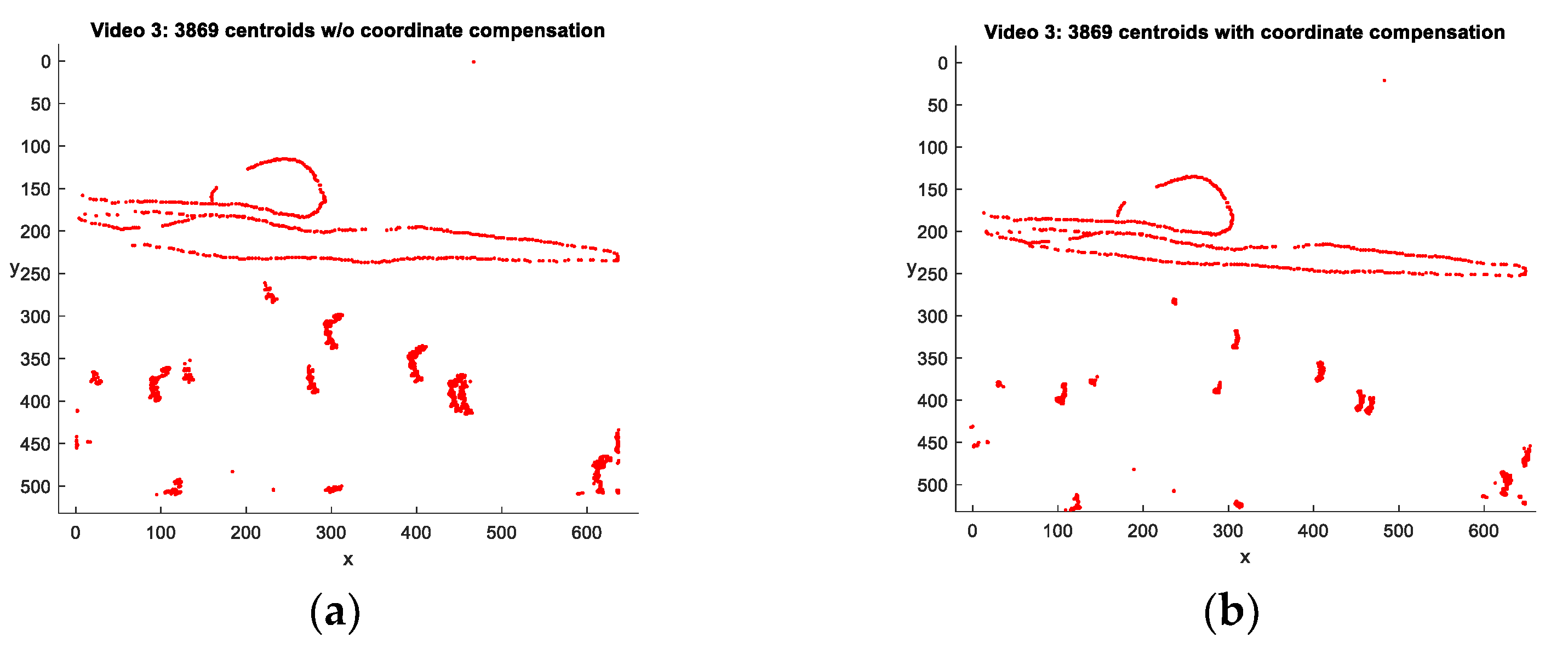

This paper addresses moving people detection and tracking with IR thermal video captured by a multirotor. First, to account for the unstable motion of the drone, global matching is performed to compensate the coordinates of each frame [30]. Each frame of the video is then analyzed through k-means clustering [31,32] and morphological operations. Incorrectly segmented areas are eliminated using object size and shape information based on squareness and rectangularity [33,34]. In each frame, the centroid of each segmented area is taken as the measured position and passed to the tracking stage.

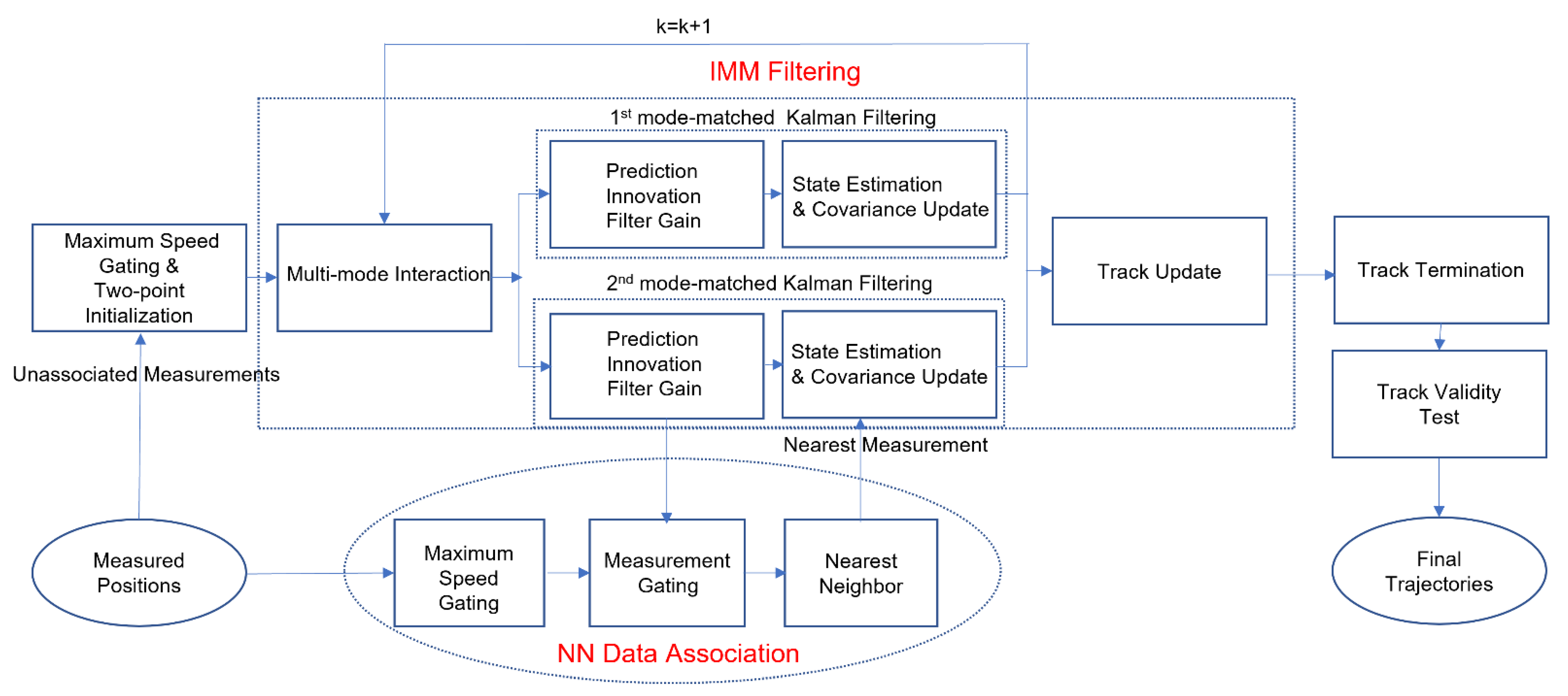

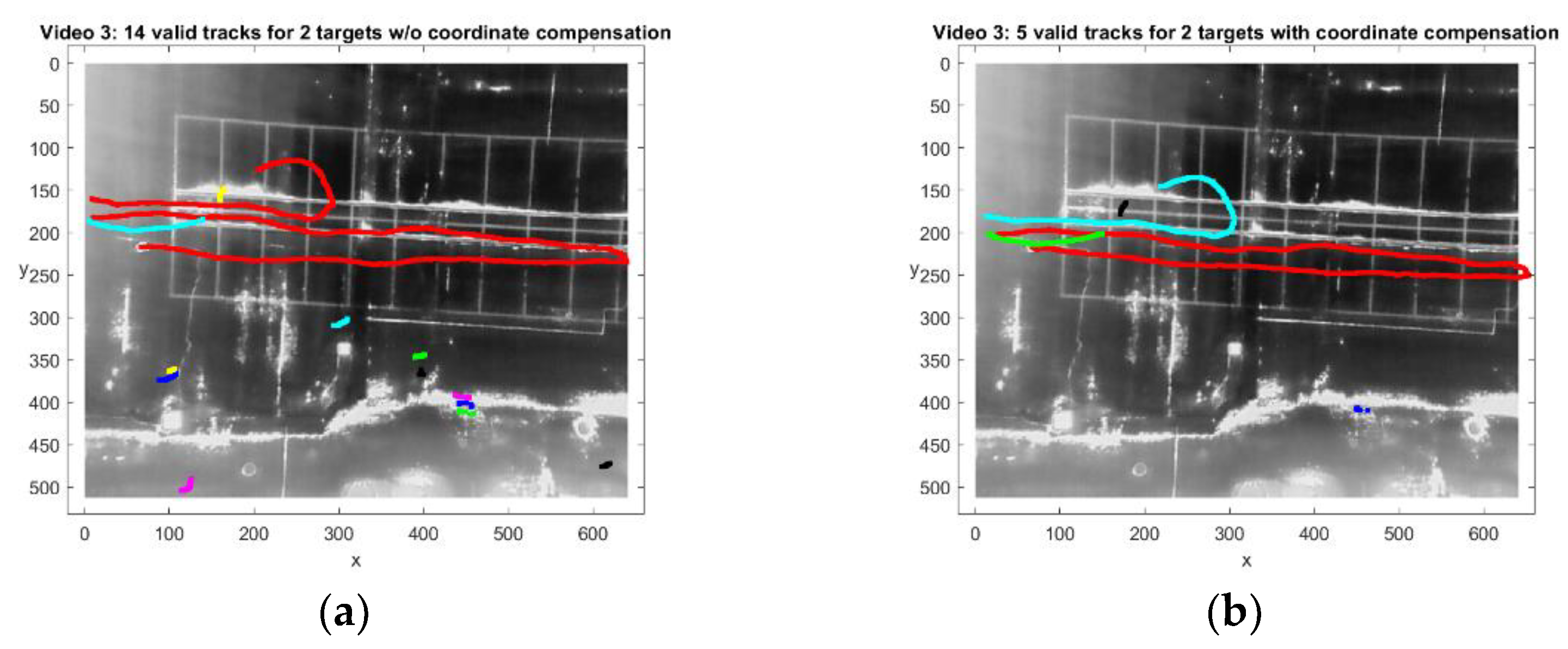

The tracking is performed by the IMM filter to estimate the kinematic state of the target. A track is initialized by the two-point differential initialization following the maximum speed gating. For measurement-to-track association, speed gating and position gating are applied sequentially to exclude measurements outside the validation region. Then, the NN association assigns the closest valid measurement to each track. A track is terminated if either of two criteria is satisfied: the maximum number of updates without a valid measurement is reached, or the target speed falls below a minimum. Even a stationary object can establish a track because of the drone’s turbulence-induced motion. The minimum target speed is set to eliminate false tracks caused by stationary objects in heavy false alarm environments. Finally, a validity test is performed to check the continuity of the track [27].
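The initialization, gating, association, and termination steps described above can be sketched as follows. This is a minimal illustration and not the paper's implementation: the function names and parameters are hypothetical, position gating is simplified to a fixed Euclidean radius around the predicted position (a statistical validation region based on the filter covariance may be used in practice), and the IMM filter itself is omitted.

```python
import math
from typing import List, Optional, Tuple

Point = Tuple[float, float]  # (x, y) position in meters


def two_point_init(z_prev: Point, z_curr: Point, dt: float,
                   max_speed: float) -> Optional[Tuple[float, float, float, float]]:
    """Start a track from two consecutive measurements.

    Returns the initial state (x, vx, y, vy), or None when the implied
    speed exceeds max_speed (maximum speed gating).
    """
    vx = (z_curr[0] - z_prev[0]) / dt
    vy = (z_curr[1] - z_prev[1]) / dt
    if math.hypot(vx, vy) > max_speed:
        return None
    return (z_curr[0], vx, z_curr[1], vy)


def nn_associate(predicted: Point, last_position: Point,
                 measurements: List[Point], dt: float,
                 max_speed: float, gate_radius: float) -> Optional[int]:
    """Return the index of the nearest measurement that passes both gates."""
    best_index, best_dist = None, float("inf")
    for i, z in enumerate(measurements):
        # Speed gate: reject measurements that imply an impossible jump.
        speed = math.hypot(z[0] - last_position[0], z[1] - last_position[1]) / dt
        if speed > max_speed:
            continue
        # Position gate (assumed here to be a fixed radius around the prediction).
        dist = math.hypot(z[0] - predicted[0], z[1] - predicted[1])
        if dist > gate_radius:
            continue
        if dist < best_dist:
            best_index, best_dist = i, dist
    return best_index


def should_terminate(missed_updates: int, speed: float,
                     max_missed: int, min_speed: float) -> bool:
    """Terminate on too many missed updates or on a too-slow target.

    Whether the missed-update limit is inclusive is an assumption.
    """
    return missed_updates >= max_missed or speed < min_speed
```

For example, with a predicted position of (1.0, 0.0) and candidate measurements at (5.0, 5.0), (1.2, 0.1), and (0.9, 0.0), the first candidate fails the speed gate for a 3 m/s limit and the third is selected as the nearest valid measurement.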

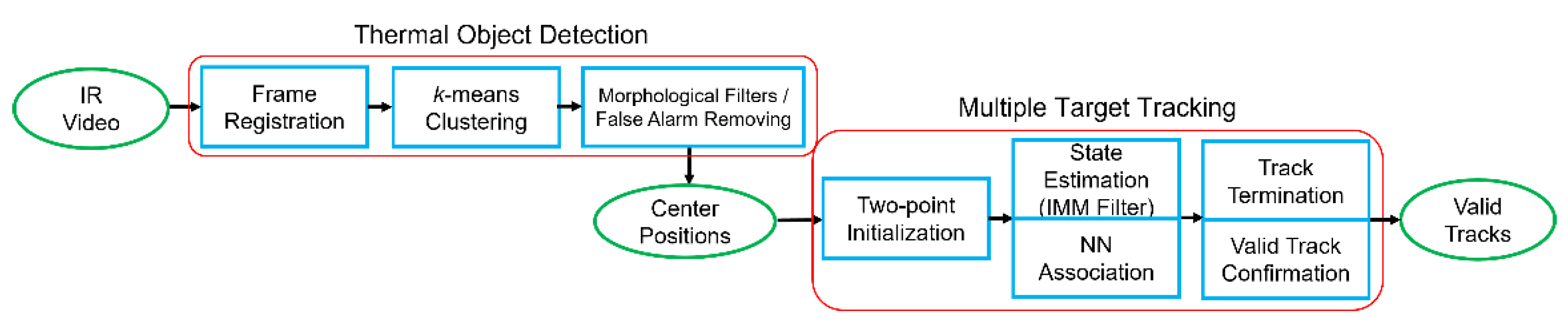

Figure 1 shows a block diagram of detecting and tracking people with thermal video captured by a multirotor.

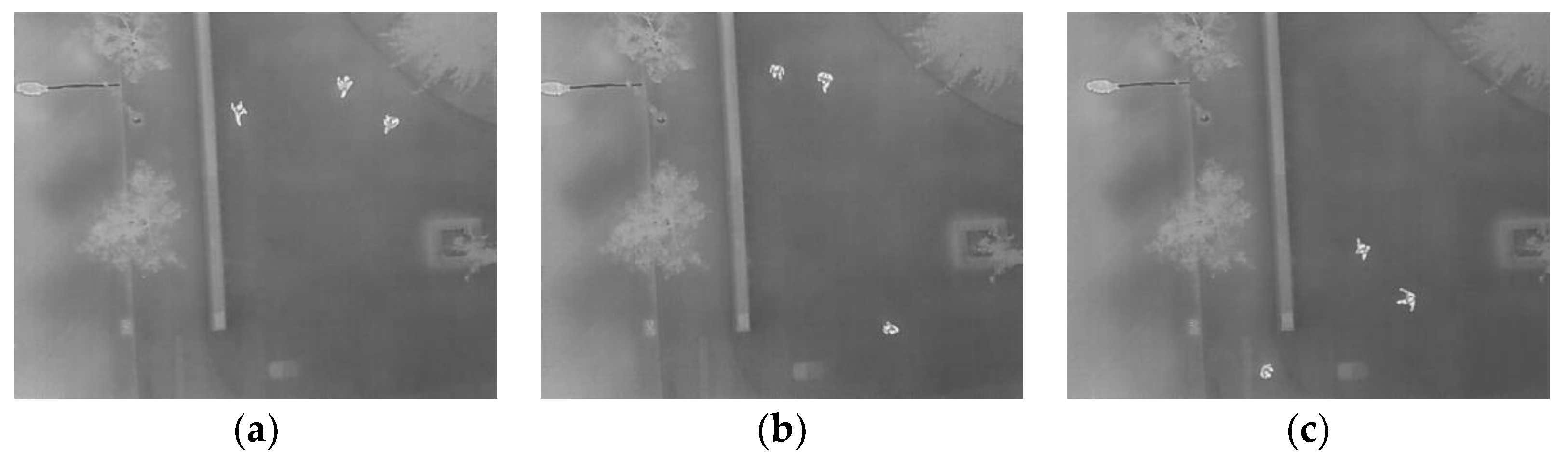

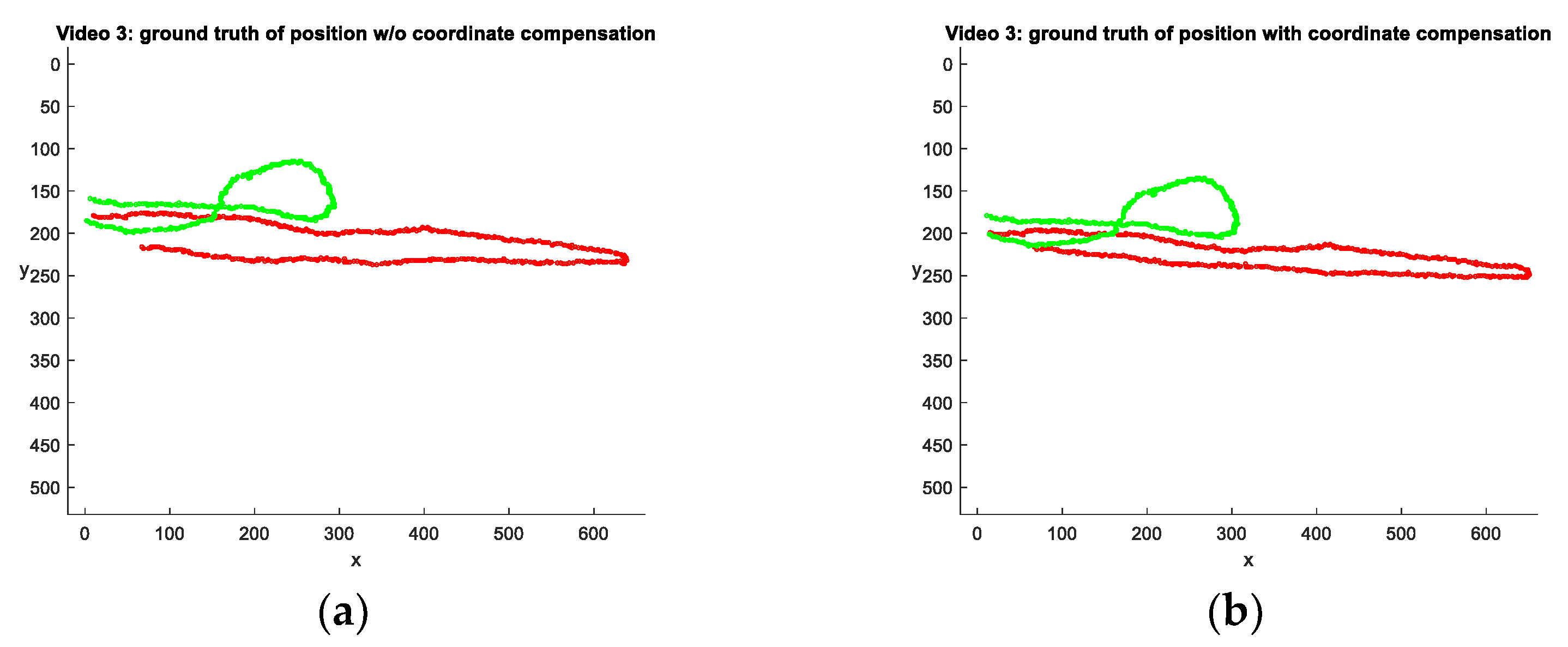

In the experiment, a drone hovering at a fixed position captures three IR thermal videos. The thermal camera operates in the LWIR band, which is suitable for detecting objects on surfaces. The first and second videos (Videos 1 and 2) were captured on a winter night from altitudes of 30 m and 45 m, respectively. A total of eight people walk or run for 40 s in Video 1, and three hikers walk, stand, or sit in the mountains for 30 s in Video 2. The hikers in Video 2 were covered by leaves. The third video (Video 3) was captured on a windy summer day from an altitude of 100 m; two people walk against a complex background for 50 s. The average detection rates are about 91.4%, 91.8%, and 79.8% for Videos 1–3, respectively. The false alarm rates are 1.08, 0.28, and 6.36 per frame for Videos 1–3, respectively. The average position and velocity root mean square errors (RMSEs) are 0.077 m and 0.528 m/s for Video 1, 0.06 m and 0.582 m/s for Video 2, and 0.177 m and 1.838 m/s for Video 3. Three segmented tracks are generated on one target in Video 3, but the number of false tracks is reduced from 10 to 1 by the proposed termination scheme. A two-stage scheme of detection and tracking has been proposed and successfully applied to various thermal videos. To the best of my knowledge, this is the first study to track people with a thermal imaging camera mounted on a small drone.

The rest of the paper is organized as follows. Object detection based on k-means clustering is presented in Section 2. Target tracking with the IMM filter and the track termination criteria is described in Section 3. Section 4 demonstrates the experimental results. The conclusion follows in Section 5.

2. People Detection in Thermal Images

This section describes object detection in IR thermal images. The human detection in IR images consists of coordinate compensation between the reference frame and other frames,

k-means clustering, morphological operations, and false alarm removing based on the size and shape of the target. The coordinates are corrected to compensate for unstable motion of the platform. The global matching between two frames is performed by minimizing the sum of absolute difference (SAD) as follows,

where

I1 and

Ik are the first and the

k-th frame,

Sx and

Sy are the image sizes in the

x and

y directions, respectively, and

NK is the total number of frames. The coordinates of the frame

Ik are compensated by

. Then, the

k-means clustering is performed to group the pixels in each frame into multiple clusters to minimize the following cost function:

where

Nc is the number of clusters,

Cj is the pixel set of the

j-th cluster, and

μj is the mean of pixel intensities in the

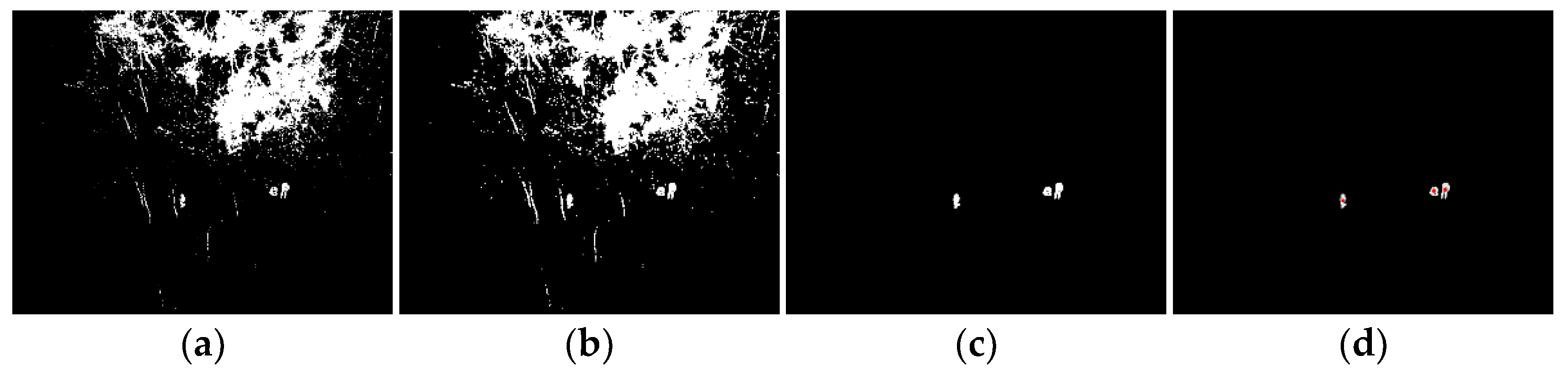

j-th cluster. The pixels in the cluster with the largest mean are labeled as areas of alternate (white) objects that produce a binary image. The morphological operations, closing (dilation and erosion) is applied to the binary image. The dilation filter connects fragmented areas of one object. The erosion filter removes very small clutters and recovers the dilated boundary. Finally, the object area is tested for size and two shape properties. One is the ratio between the minor and major axes of the basic rectangle, which measures squareness, and the other is the ratio between the object size and the basic rectangle size, which measures rectangularity [

33,

34]. The basic rectangle is defined as the smallest rectangle that includes the object. Therefore, four parameters are utilized to eliminate false alarms: maximum (

θmax) and minimum (

θmin) size of the basic rectangle in the imaging plane, the minimum squareness, and the minimum rectangularity.
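As an illustration of the first two steps, the sketch below searches integer shifts for the SAD minimum and then runs a one-dimensional k-means on pixel intensities. It is a simplified reading of the method under stated assumptions rather than the implementation used in the paper: the search window `max_shift`, the normalization of the SAD by the overlap area, the evenly spaced initial cluster means, and all function names are assumptions made for this example.

```python
from typing import List, Tuple

Image = List[List[float]]  # row-major grayscale image, indexed image[y][x]


def sad_shift(ref: Image, frame: Image, max_shift: int) -> Tuple[int, int]:
    """Return the shift (px, py) that best aligns frame(x + px, y + py) with ref(x, y).

    Assumes max_shift is smaller than both image dimensions.
    """
    h, w = len(ref), len(ref[0])
    best, best_cost = (0, 0), float("inf")
    for py in range(-max_shift, max_shift + 1):
        for px in range(-max_shift, max_shift + 1):
            total, count = 0.0, 0
            # Only pixels where both images are defined contribute.
            for y in range(max(0, -py), min(h, h - py)):
                for x in range(max(0, -px), min(w, w - px)):
                    total += abs(ref[y][x] - frame[y + py][x + px])
                    count += 1
            cost = total / count  # assumed normalization by overlap area
            if cost < best_cost:
                best, best_cost = (px, py), cost
    return best


def kmeans_1d(values: List[float], n_clusters: int,
              n_iter: int = 50) -> Tuple[List[int], List[float]]:
    """Cluster scalar intensities; returns (label per value, cluster means)."""
    lo, hi = min(values), max(values)
    # Assumed initialization: means spread evenly over the intensity range.
    means = [lo + (hi - lo) * (j + 0.5) / n_clusters for j in range(n_clusters)]
    labels = [0] * len(values)
    for _ in range(n_iter):
        labels = [min(range(n_clusters), key=lambda j: (v - means[j]) ** 2)
                  for v in values]
        new_means = []
        for j in range(n_clusters):
            members = [v for v, lab in zip(values, labels) if lab == j]
            # An empty cluster keeps its previous mean.
            new_means.append(sum(members) / len(members) if members else means[j])
        if new_means == means:
            break
        means = new_means
    return labels, means


def segment_hottest(image: Image, n_clusters: int) -> List[List[int]]:
    """Binary mask (1 = object) of the pixels in the cluster with the largest mean."""
    flat = [v for row in image for v in row]
    labels, means = kmeans_1d(flat, n_clusters)
    hottest = max(range(n_clusters), key=lambda j: means[j])
    w = len(image[0])
    return [[1 if labels[y * w + x] == hottest else 0 for x in range(w)]
            for y in range(len(image))]
```

The exhaustive shift search is quadratic in `max_shift` and is meant only to make the cost function concrete; a practical implementation would typically vectorize it or use a coarse-to-fine search.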

Figure 2 illustrates object imaging and a basic rectangle with its major and minor axes. The selection process for the number of clusters and the four parameters will be described in Section 4.
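The morphological closing and the size and shape tests of this section can be sketched as below. Several details are assumptions made for illustration: the structuring element is a 3 × 3 square, the basic rectangle is taken to be the axis-aligned bounding box (the paper may use a rectangle oriented along the object's major axis), its size is measured as area in pixels, and the function names are hypothetical.

```python
from typing import List, Tuple

Mask = List[List[int]]          # binary image, mask[y][x] in {0, 1}
Pixels = List[Tuple[int, int]]  # (x, y) coordinates of one segmented object


def _filter3x3(mask: Mask, combine) -> Mask:
    """Apply `combine` (any or all) over each pixel's 3x3 neighborhood.

    The window is clipped at the image border.
    """
    h, w = len(mask), len(mask[0])
    return [[1 if combine(mask[yy][xx]
                          for yy in range(max(0, y - 1), min(h, y + 2))
                          for xx in range(max(0, x - 1), min(w, x + 2))) else 0
             for x in range(w)] for y in range(h)]


def closing(mask: Mask) -> Mask:
    """Dilation followed by erosion with an assumed 3x3 square element."""
    dilated = _filter3x3(mask, any)
    return _filter3x3(dilated, all)


def shape_measures(pixels: Pixels) -> Tuple[int, float, float]:
    """Return (basic rectangle size, squareness, rectangularity)."""
    xs = [x for x, _ in pixels]
    ys = [y for _, y in pixels]
    width = max(xs) - min(xs) + 1
    height = max(ys) - min(ys) + 1
    rect_size = width * height                               # area in pixels (assumed)
    squareness = min(width, height) / max(width, height)     # minor / major axis
    rectangularity = len(pixels) / rect_size                 # object size / rectangle size
    return rect_size, squareness, rectangularity


def passes_shape_test(pixels: Pixels, min_size: int, max_size: int,
                      min_squareness: float, min_rectangularity: float) -> bool:
    """Keep an object only if it satisfies all four parameters."""
    rect_size, squareness, rectangularity = shape_measures(pixels)
    return (min_size <= rect_size <= max_size
            and squareness >= min_squareness
            and rectangularity >= min_rectangularity)
```

With these definitions, a long thin streak fails the squareness test and a sparse diagonal blob fails the rectangularity test, while a compact block of plausible size is kept.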