Unmanned Aerial Vehicles for Wildland Fires: Sensing, Perception, Cooperation and Assistance

Abstract

1. Introduction

2. Fire Assistance

3. Sensing Instruments

3.1. Infrared Spectrum

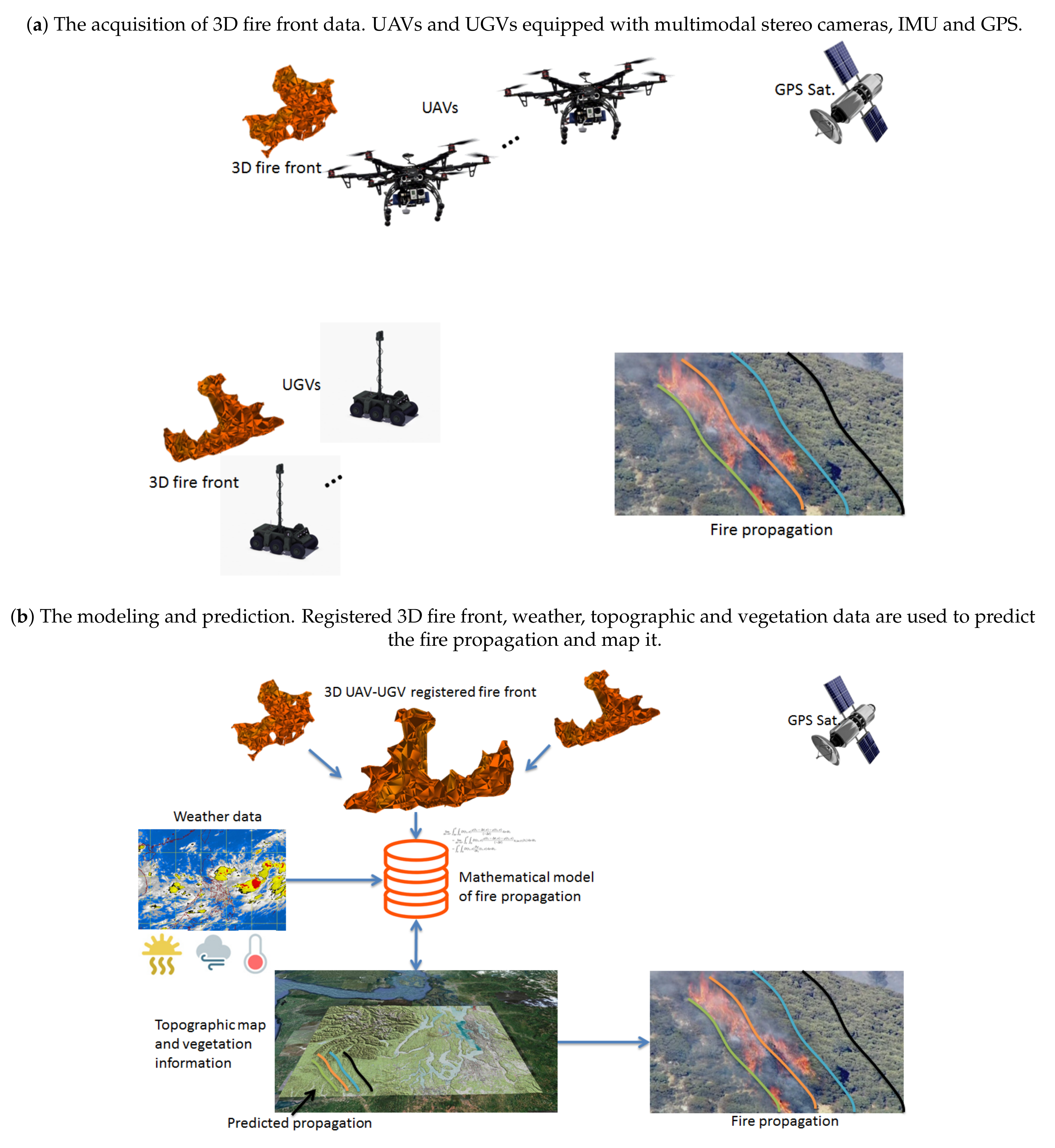

3.2. Visible Spectrum

3.3. Multispectral Cameras

3.4. Other Sensors

4. Fire Detection and Segmentation

4.1. Fire Segmentation

4.1.1. Color Segmentation

4.1.2. Motion Segmentation

4.2. Fire Detection and Features Extraction

4.3. Considerations in UAV Applications

5. Wildland Fire Datasets

6. Fire Geolocation and Fire Modeling

7. Coordination Strategy

7.1. Single UAV

7.2. Centralized

7.3. Decentralized

8. Cooperative Autonomous Systems for Wildland Fires

9. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest

References

- Jolly, W.; Cochrane, M.; Freeborn, P.; Holden, Z.; Brown, T.; Williamson, G.; Bowman, D. Climate-induced variations in global wildfire danger from 1979 to 2013. Nat. Commun. 2015, 6, 7537.

- Kelly, R.; Chipman, M.; Higuera, P.; Stefanova, I.; Brubaker, L.; Hu, F. Recent burning of boreal forests exceeds fire regime limits of the past 10,000 years. Proc. Natl. Acad. Sci. USA 2013, 110.

- Amadeo, K. Wildfire Facts, Their Damage, and Effect on the Economy. 2019. Available online: https://www.thebalance.com/wildfires-economic-impact-4160764 (accessed on 30 December 2019).

- Westerling, A.L.; Hidalgo, H.G.; Cayan, D.R.; Swetnam, T.W. Warming and Earlier Spring Increase Western U.S. Forest Wildfire Activity. Science 2006, 313, 940–943.

- Insurance Information Institute. Facts + Statistics: Wildfires. Available online: https://www.iii.org/fact-statistic/facts-statistics-wildfires (accessed on 30 December 2019).

- U.S. Global Change Research Program. Climate Science Special Report: Fourth National Climate Assessment. 2017. Available online: https://science2017.globalchange.gov/chapter/8/ (accessed on 30 December 2019).

- Chien, S.; Doubleday, J.; Mclaren, D.; Davies, A.; Tran, D.; Tanpipat, V.; Akaakara, S.; Ratanasuwan, A.; Mandl, D. Space-based sensorweb monitoring of wildfires in Thailand. In Proceedings of the 2011 IEEE International Geoscience and Remote Sensing Symposium, Vancouver, BC, Canada, 24–29 July 2011; pp. 1906–1909.

- Chiaraviglio, N.; Artés, T.; Bocca, R.; López, J.; Gentile, A.; Ayanz, J.S.M.; Cortés, A.; Margalef, T. Automatic fire perimeter determination using MODIS hotspots information. In Proceedings of the 2016 IEEE 12th International Conference on e-Science, Baltimore, MD, USA, 24–27 October 2016; pp. 414–423.

- Fukuhara, T.; Kouyama, T.; Kato, S.; Nakamura, R.; Takahashi, Y.; Akiyama, H. Detection of small wildfire by thermal infrared camera with the uncooled microbolometer array for 50-kg class satellite. IEEE Trans. Geosci. Remote Sens. 2017, 55, 4314–4324.

- Yoon, I.; Noh, D.K.; Lee, D.; Teguh, R.; Honma, T.; Shin, H. Reliable wildfire monitoring with sparsely deployed wireless sensor networks. In Proceedings of the 2012 IEEE 26th International Conference on Advanced Information Networking and Applications, Fukuoka, Japan, 26–29 March 2012; pp. 460–466.

- Tan, Y.K.; Panda, S.K. Self-autonomous wireless sensor nodes with wind energy harvesting for remote sensing of wind-driven wildfire spread. IEEE Trans. Instrum. Meas. 2011, 60, 1367–1377.

- Lin, H.; Liu, X.; Wang, X.; Liu, Y. A fuzzy inference and big data analysis algorithm for the prediction of forest fire based on rechargeable wireless sensor networks. Sustain. Comput. Informatics Syst. 2018, 18, 101–111.

- Marder, J. NASA Tracks Wildfires From Above to Aid Firefighters Below. 2019. Available online: https://www.nasa.gov/feature/goddard/2019/nasa-tracks-wildfires-from-above-to-aid-firefighters-below (accessed on 30 December 2019).

- Bumberger, J.; Remmler, P.; Hutschenreuther, T.; Toepfer, H.; Dietrich, P. Potentials and Limitations of Wireless Sensor Networks for Environmental. In Proceedings of the AGU Fall Meeting, San Francisco, CA, USA, 9–13 December 2013; Volume 2013.

- Nex, F.; Remondino, F. Preface: Latest Developments, Methodologies, and Applications Based on UAV Platforms. Drones 2019, 3, 26.

- Ollero, A.; de Dios, J.M.; Merino, L. Unmanned aerial vehicles as tools for forest-fire fighting. For. Ecol. Manag. 2006, 234, S263.

- Ambrosia, V.; Wegener, S.; Zajkowski, T.; Sullivan, D.; Buechel, S.; Enomoto, F.; Lobitz, B.; Johan, S.; Brass, J.; Hinkley, E. The Ikhana unmanned airborne system (UAS) western states fire imaging missions: From concept to reality (2006–2010). Geocarto Int. 2011, 26, 85–101.

- Hinkley, E.A.; Zajkowski, T. USDA forest service—NASA: Unmanned aerial systems demonstrations—Pushing the leading edge in fire mapping. Geocarto Int. 2011, 26, 103–111.

- Yuan, C.; Youmin, Z.; Zhixiang, L. A survey on technologies for automatic forest fire monitoring, detection, and fighting using unmanned aerial vehicles and remote sensing techniques. Can. J. For. Res. 2015, 45, 783–792.

- Skorput, P.; Mandzuka, S.; Vojvodic, H. The use of Unmanned Aerial Vehicles for forest fire monitoring. In Proceedings of the 2016 International Symposium ELMAR, Zadar, Croatia, 12–14 September 2016; pp. 93–96.

- Restas, A. Forest Fire Management Supporting by UAV Based Air Reconnaissance Results of Szendro Fire Department, Hungary. In Proceedings of the 2006 First International Symposium on Environment Identities and Mediterranean Area, Corte-Ajaccio, France, 10–13 July 2006; pp. 73–77.

- Laszlo, B.; Agoston, R.; Xu, Q. Conceptual approach of measuring the professional and economic effectiveness of drone applications supporting forest fire management. Procedia Eng. 2018, 211, 8–17.

- Bailon-Ruiz, R.; Lacroix, S. Wildfire remote sensing with UAVs: A review from the autonomy point of view. In Proceedings of the 2020 International Conference on Unmanned Aircraft Systems (ICUAS), Athens, Greece, 1–4 September 2020; pp. 412–420.

- Twidwell, D.; Allen, C.R.; Detweiler, C.; Higgins, J.; Laney, C.; Elbaum, S. Smokey comes of age: Unmanned aerial systems for fire management. Front. Ecol. Environ. 2016, 14, 333–339.

- Qin, H.; Cui, J.Q.; Li, J.; Bi, Y.; Lan, M.; Shan, M.; Liu, W.; Wang, K.; Lin, F.; Zhang, Y.F.; et al. Design and implementation of an unmanned aerial vehicle for autonomous firefighting missions. In Proceedings of the 2016 12th IEEE International Conference on Control and Automation (ICCA), Kathmandu, Nepal, 1–3 June 2016; pp. 62–67.

- Aydin, B.; Selvi, E.; Tao, J.; Starek, M.J. Use of fire-extinguishing balls for a conceptual system of drone-assisted wildfire fighting. Drones 2019, 3, 17.

- Johnson, K. DJI R&D Head Dreams of Drones Fighting Fires by the Thousands in ‘Aerial Aqueduct’. 2019. Available online: https://venturebeat.com/2019/04/20/dji-rd-head-dreams-of-drones-fighting-fires-by-the-thousands-in-aerial-aqueduct/ (accessed on 30 December 2019).

- Watts, A.; Ambrosia, V.; Hinkley, E. Unmanned Aircraft Systems in Remote Sensing and Scientific Research: Classification and Considerations of Use. Remote Sens. 2012, 4, 1671–1692.

- Abdullah, Q.A. iGEOG 892 Geospatial Applications of Unmanned Aerial Systems (UAS): Classification of the Unmanned Aerial Systems. Available online: https://www.e-education.psu.edu/geog892/node/5 (accessed on 5 August 2020).

- Martínez-de Dios, J.; Merino, L.; Ollero, A.; Ribeiro, L.M.; Viegas, X. Multi-UAV experiments: Application to forest fires. In Multiple Heterogeneous Unmanned Aerial Vehicles; Springer: Berlin/Heidelberg, Germany, 2007; pp. 207–228.

- Ghamry, K.; Kamel, M.; Zhang, Y. Multiple UAVs in forest fire fighting mission using particle swarm optimization. In Proceedings of the 2017 International Conference on Unmanned Aircraft Systems (ICUAS), Miami, FL, USA, 13–16 June 2017; pp. 1404–1409.

- Casbeer, D.; Kingston, D.; Beard, R.; McLain, T. Cooperative forest fire surveillance using a team of small unmanned air vehicles. Int. J. Syst. Sci. 2006, 37, 351–360.

- Martins, A.; Almeida, J.; Almeida, C.; Figueiredo, A.; Santos, F.; Bento, D.; Silva, H.; Silva, E. Forest fire detection with a small fixed wing autonomous aerial vehicle. IFAC 2007, 40, 168–173.

- Merino, L.; Caballero, F.; Martínez-de Dios, J.; Ollero, A. Cooperative fire detection using Unmanned Aerial Vehicles. In Proceedings of the 2005 IEEE International Conference on Robotics and Automation, Barcelona, Spain, 18–22 April 2005; pp. 1884–1889.

- Merino, L.; Caballero, F.; Martínez-de Dios, J.; Ferruz, J.; Ollero, A. A cooperative perception system for multiple UAVs: Application to automatic detection of forest fires. J. Field Robot. 2006, 23, 165–184.

- Sujit, P.; Kingston, D.; Beard, R. Cooperative forest fire monitoring using multiple UAVs. In Proceedings of the 2007 46th IEEE Conference on Decision and Control, New Orleans, LA, USA, 12–14 December 2007; pp. 4875–4880.

- Alexis, K.; Nikolakopoulos, G.; Tzes, A.; Dritsas, L. Coordination of helicopter UAVs for aerial forest-fire surveillance. In Applications of Intelligent Control to Engineering Systems; Springer: Dordrecht, The Netherlands, 2009; Volume 39, Chapter 7; pp. 169–193.

- Bradley, J.; Taylor, C. Georeferenced mosaics for tracking fires using unmanned miniature air vehicles. J. Aerosp. Comput. Inf. Commun. 2011, 8, 295–309.

- Kumar, M.; Cohen, K.; Homchaudhuri, B. Cooperative control of multiple uninhabited aerial vehicles for monitoring and fighting wildfires. J. Aerosp. Comput. Inf. Commun. 2011, 8, 1–16.

- Martínez-de Dios, J.; Merino, L.; Caballero, F.; Ollero, A. Automatic forest-fire measuring using ground stations and Unmanned Aerial Systems. Sensors 2011, 11, 6328–6353.

- Pastor, E.; Barrado, C.; Royo, P.; Santamaria, E.; Lopez, J.; Salami, E. Architecture for a helicopter-based unmanned aerial systems wildfire surveillance system. Geocarto Int. 2011, 26, 113–131.

- Belbachir, A.; Escareno, J.; Rubio, E.; Sossa, H. Preliminary results on UAV-based forest fire localization based on decisional navigation. In Proceedings of the 2015 Workshop on Research, Education and Development of Unmanned Aerial Systems (RED-UAS), Cancún, Mexico, 23–25 November 2015; pp. 377–382.

- Karma, S.; Zorba, E.; Pallis, G.C.; Statheropoulos, G.; Balta, I.; Mikedi, K.; Vamvakari, J.; Pappa, A.; Chalaris, M.; Xanthopoulos, G.; et al. Use of unmanned vehicles in search and rescue operations in forest fires: Advantages and limitations observed in a field trial. Int. J. Disaster Risk Reduct. 2015, 13, 307–312.

- Merino, L.; Caballero, F.; Martínez-de Dios, J.R.; Maza, I.; Ollero, A. An unmanned aircraft system for automatic forest fire monitoring and measurement. J. Intell. Robot. Syst. 2012, 65, 533–548.

- Merino, L.; Martínez-de Dios, J.; Ollero, A. Cooperative unmanned aerial systems for fire detection, Monitoring, and Extinguishing. In Handbook of Unmanned Aerial Vehicles; Springer: Dordrecht, The Netherlands, 2015; Chapter 112; pp. 2693–2722.

- Ghamry, K.; Zhang, Y. Cooperative control of multiple UAVs for forest fire monitoring and detection. In Proceedings of the 2016 12th IEEE/ASME International Conference on Mechatronic and Embedded Systems and Applications (MESA), Auckland, New Zealand, 29–31 August 2016; pp. 1–6.

- Ghamry, K.; Zhang, Y. Fault-tolerant cooperative control of multiple UAVs for forest fire detection and tracking mission. In Proceedings of the 2016 3rd Conference on Control and Fault-Tolerant Systems (SysTol), Barcelona, Spain, 7–9 September 2016; pp. 133–138.

- Sun, H.; Song, G.; Wei, Z.; Zhang, Y.; Liu, S. Bilateral teleoperation of an unmanned aerial vehicle for forest fire detection. In Proceedings of the 2017 IEEE International Conference on Information and Automation (ICIA), Macau, China, 18–20 July 2017; pp. 586–591.

- Yuan, C.; Liu, Z.; Zhang, Y. Fire detection using infrared images for UAV-based forest fire surveillance. In Proceedings of the 2017 International Conference on Unmanned Aircraft Systems (ICUAS), Miami, FL, USA, 13–16 June 2017; pp. 567–572.

- Yuan, C.; Liu, Z.; Zhang, Y. UAV-based forest fire detection and tracking using image processing techniques. In Proceedings of the 2015 International Conference on Unmanned Aircraft Systems (ICUAS), Denver, CO, USA, 9–12 June 2015; pp. 639–643.

- Yuan, C.; Liu, Z.; Zhang, Y. Vision-based forest fire detection in aerial images for firefighting using UAVs. In Proceedings of the 2016 International Conference on Unmanned Aircraft Systems, Arlington, VA, USA, 7–10 June 2016; pp. 1200–1205.

- Yuan, C.; Ghamry, K.; Liu, Z.; Zhang, Y. Unmanned aerial vehicle based forest fire monitoring and detection using image processing technique. In Proceedings of the 2016 IEEE Chinese Guidance, Navigation and Control Conference (CGNCC), Nanjing, China, 12–14 August 2016; pp. 1870–1875.

- Yuan, C.; Liu, Z.; Zhang, Y. Aerial images-based forest fire detection for firefighting using optical remote sensing techniques and unmanned aerial vehicles. J. Intell. Robot. Syst. 2017, 88, 635–654.

- Lin, Z.; Liu, H.H.; Wotton, M. Kalman filter-based large-scale wildfire monitoring with a system of UAVs. IEEE Trans. Ind. Electron. 2018, 66, 606–615.

- Wardihani, E.; Ramdhani, M.; Suharjono, A.; Setyawan, T.A.; Hidayat, S.S.; Helmy, S.W.; Triyono, E.; Saifullah, F. Real-time forest fire monitoring system using unmanned aerial vehicle. J. Eng. Sci. Technol. 2018, 13, 1587–1594.

- Zhao, Y.; Ma, J.; Li, X.; Zhang, J. Saliency Detection and Deep Learning-Based Wildfire Identification in UAV Imagery. Sensors 2018, 18, 712.

- Pham, H.; La, H.; Feil-Seifer, D.; Deans, M. A distributed control framework for a team of unmanned aerial vehicles for dynamic wildfire tracking. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 6648–6653.

- Pham, H.X.; La, H.M.; Feil-Seifer, D.; Deans, M.C. A distributed control framework of multiple unmanned aerial vehicles for dynamic wildfire tracking. IEEE Trans. Syst. Man Cybern. Syst. 2018, 50, 1537–1548.

- Julian, K.D.; Kochenderfer, M.J. Distributed wildfire surveillance with autonomous aircraft using deep reinforcement learning. J. Guid. Control. Dyn. 2019, 42, 1768–1778.

- Jiao, Z.; Zhang, Y.; Xin, J.; Mu, L.; Yi, Y.; Liu, H.; Liu, D. A deep learning based forest fire detection approach using UAV and YOLOv3. In Proceedings of the 2019 1st International Conference on Industrial Artificial Intelligence (IAI), Shenyang, China, 23–25 July 2019; pp. 1–5.

- Jiao, Z.; Zhang, Y.; Mu, L.; Xin, J.; Jiao, S.; Liu, H.; Liu, D. A YOLOv3-based Learning Strategy for Real-time UAV-based Forest Fire Detection. In Proceedings of the 2020 Chinese Control And Decision Conference (CCDC), Hefei, China, 22–24 August 2020; pp. 4963–4967.

- Seraj, E.; Gombolay, M. Coordinated control of UAVs for human-centered active sensing of wildfires. In Proceedings of the 2020 American Control Conference (ACC), Denver, CO, USA, 1–3 July 2020; pp. 1845–1852.

- Lin, Z.; Liu, H. Enhanced cooperative filter for wildfire monitoring. In Proceedings of the 2015 54th IEEE Conference on Decision and Control (CDC), Osaka, Japan, 15–18 December 2015; pp. 3075–3080.

- Allison, R.; Johnston, J.; Craig, G.; Jennings, S. Airborne optical and thermal remote sensing for wildfire detection and monitoring. Sensors 2016, 16, 1310.

- Johnston, J.; Wooster, M.; Lynham, T. Experimental confirmation of the MWIR and LWIR grey body assumption for vegetation fire flame emissivity. Int. J. Wildland Fire 2014, 23, 463–479.

- Ball, M. FLIR Unveils MWIR Thermal Camera Cores for Drone Applications. 2018. Available online: https://www.unmannedsystemstechnology.com/2018/12/new-mwir-thermal-camera-cores-launched-for-drone-applications/ (accessed on 30 December 2019).

- Esposito, F.; Rufino, G.; Moccia, A.; Donnarumma, P.; Esposito, M.; Magliulo, V. An integrated electro-optical payload system for forest fires monitoring from airborne platform. In Proceedings of the 2007 IEEE Aerospace Conference, Big Sky, MT, USA, 3–10 March 2007; pp. 1–13.

- National Aeronautics and Space Administration. Ikhana UAV Gives NASA New Science and Technology Capabilities. 2007. Available online: https://www.nasa.gov/centers/dryden/news/NewsReleases/2007/07-12.html (accessed on 30 December 2019).

- Ghamry, K.; Kamel, M.; Zhang, Y. Cooperative forest monitoring and fire detection using a team of UAVs-UGVs. In Proceedings of the 2016 International Conference on Unmanned Aircraft Systems, Arlington, VA, USA, 7–10 June 2016; pp. 1206–1211.

- Fairchild, M.D. Color Appearance Models, 3rd ed.; John Wiley & Sons Ltd.: Chichester, West Sussex, UK, 2013.

- Otsu, N. A Threshold Selection Method from Gray-Level Histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.

- Lei, S.; Fangfei, S.; Teng, W.; Leping, B.; Xinguo, H. A new fire detection method based on the centroid variety of consecutive frames. In Proceedings of the 2017 2nd International Conference on Image, Vision and Computing (ICIVC), Chengdu, China, 2–4 June 2017; pp. 437–442.

- Wang, T.; Shi, L.; Yuan, P.; Bu, L.; Hou, X. A new fire detection method based on flame color dispersion and similarity in consecutive frames. In Proceedings of the 2017 Chinese Automation Congress (CAC), Jinan, China, 20–22 October 2017; pp. 151–156.

- Chou, K.; Prasad, M.; Gupta, D.; Sankar, S.; Xu, T.; Sundaram, S.; Lin, C.; Lin, W. Block-based feature extraction model for early fire detection. In Proceedings of the 2017 IEEE Symposium Series on Computational Intelligence (SSCI), Hawaii, HI, USA, 27 November–1 December 2017; pp. 1–8.

- Shi, L.; Long, F.; Zhan, Y.; Lin, C. Video-based fire detection with spatio-temporal SURF and color features. In Proceedings of the 2016 12th World Congress on Intelligent Control and Automation (WCICA), Guilin, China, 12–15 June 2016; pp. 258–262.

- Abdullah, M.; Wijayanto, I.; Rusdinar, A. Position estimation and fire detection based on digital video color space for autonomous quadcopter using odroid XU4. In Proceedings of the 2016 International Conference on Control, Electronics, Renewable Energy and Communications (ICCEREC), Bandung, Indonesia, 13–15 September 2016; pp. 169–173.

- Steffens, C.; Botelho, S.; Rodrigues, R. A texture driven approach for visible spectrum fire detection on mobile robots. In Proceedings of the 2016 XIII Latin American Robotics Symposium and IV Brazilian Robotics Symposium (LARS/SBR), Recife, Brazil, 8–12 October 2016; pp. 257–262.

- Choi, J.; Choi, J.Y. Patch-based fire detection with online outlier learning. In Proceedings of the 2015 12th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Karlsruhe, Germany, 25–28 August 2015; pp. 1–6.

- Poobalan, K.; Liew, S. Fire detection based on color filters and Bag-of-Features classification. In Proceedings of the 2015 IEEE Student Conference on Research and Development (SCOReD), Kuala Lumpur, Malaysia, 13–14 December 2015; pp. 389–392.

- Zhang, H.; Zhang, N.; Xiao, N. Fire detection and identification method based on visual attention mechanism. Opt. Int. J. Light Electron Opt. 2015, 126, 5011–5018.

- Toulouse, T.; Rossi, L.; Akhloufi, M.; Celik, T.; Maldague, X. Benchmarking of wildland fire colour segmentation algorithms. IET Image Process. 2015, 9, 1064–1072.

- Verstockt, S.; Kypraios, I.; Potter, P.; Poppe, C.; Walle, R. Wavelet-based multi-modal fire detection. In Proceedings of the 19th European Signal Processing Conference, Barcelona, Spain, 29 August–2 September 2011; pp. 903–907.

- Kyrkou, C.; Theocharides, T. Deep-Learning-Based Aerial Image Classification for Emergency Response Applications Using Unmanned Aerial Vehicles. arXiv 2019, arXiv:1906.08716.

- Lee, W.; Kim, S.; Lee, Y.; Lee, H.; Choi, M. Deep neural networks for wild fire detection with unmanned aerial vehicle. In Proceedings of the 2017 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 8–10 January 2017; pp. 252–253.

- Akhloufi, M.A.; Tokime, R.B.; Elassady, H. Wildland fires detection and segmentation using deep learning. In Proceedings of the SPIE 10649, Pattern Recognition and Tracking XXIX, International Society for Optics and Photonics, Orlando, FL, USA, 15–19 April 2018; Volume 10649.

- Toulouse, T.; Rossi, L.; Campana, A.; Celik, T.; Akhloufi, M. Computer vision for wildfire research: An evolving image dataset for processing and analysis. Fire Saf. J. 2017, 92, 188–194.

- Bruhn, A.; Weickert, J.; Schnörr, C. Lucas/Kanade meets Horn/Schunck: Combining local and global optic flow methods. Int. J. Comput. Vis. 2005, 61, 211–231.

- Asatryan, D.; Hovsepyan, S. Method for fire and smoke detection in monitored forest areas. In Proceedings of the 2015 Computer Science and Information Technologies (CSIT), Yerevan, Armenia, 28 September–2 October 2015; pp. 77–81.

- Yuan, C.; Liu, Z.; Zhang, Y. Learning-based smoke detection for unmanned aerial vehicles applied to forest fire surveillance. J. Intell. Robot. Syst. 2018, 93, 337–349.

- Duong, H.; Tinh, D.T. An efficient method for vision-based fire detection using SVM classification. In Proceedings of the 2013 International Conference on Soft Computing and Pattern Recognition (SoCPaR), Hanoi, Vietnam, 15–18 December 2013; pp. 190–195.

- Zhou, Q.; Yang, X.; Bu, L. Analysis of shape features of flame and interference image in video fire detection. In Proceedings of the 2015 Chinese Automation Congress (CAC), Wuhan, China, 27–29 November 2015; pp. 633–637.

- Chen, X.; Zhang, X.; Zhang, Q. Fire alarm using multi-rules detection and texture features classification in video surveillance. In Proceedings of the 2014 7th International Conference on Intelligent Computation Technology and Automation, Changsha, China, 25–26 October 2014; pp. 264–267.

- Chino, D.; Avalhais, L.; Rodrigues, J.; Traina, A. BoWFire: Detection of fire in still images by integrating pixel color and texture analysis. In Proceedings of the 2015 28th SIBGRAPI Conference on Graphics, Patterns and Images, Salvador, Brazil, 26–29 August 2015; pp. 95–102.

- Chi, R.; Lu, Z.M.; Ji, Q.G. Real-time multi-feature based fire flame detection in video. IET Image Process. 2017, 11, 31–37.

- Favorskaya, M.; Pyataeva, A.; Popov, A. Verification of smoke detection in video sequences based on spatio-temporal local binary patterns. Procedia Comput. Sci. 2015, 60, 671–680.

- Bay, H.; Ess, A.; Tuytelaars, T.; Gool, L.V. Speeded-Up Robust Features (SURF). Comput. Vis. Image Underst. 2008, 110, 346–359.

- Ojala, T.; Pietikäinen, M.; Harwood, D. A comparative study of texture measures with classification based on featured distributions. Pattern Recognit. 1996, 29, 51–59.

- Avalhais, L.; Rodrigues, J.; Traina, A. Fire detection on unconstrained videos using color-aware spatial modeling and motion flow. In Proceedings of the 2016 IEEE 28th International Conference on Tools with Artificial Intelligence (ICTAI), San Jose, CA, USA, 6–8 November 2016; pp. 913–920.

- Li, K.; Yang, Y. Fire detection algorithm based on CLG-TV optical flow model. In Proceedings of the 2016 2nd IEEE International Conference on Computer and Communications (ICCC), Chengdu, China, 14–17 October 2016; pp. 1381–1385.

- Harris, C.; Stephens, M. A combined corner and edge detector. In Proceedings of the Fourth Alvey Vision Conference, Manchester, UK, 31 August–2 September 1988; pp. 147–152.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1097–1105.

- Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Ko, B.; Jung, J.; Nam, J. Fire detection and 3D surface reconstruction based on stereoscopic pictures and probabilistic fuzzy logic. Fire Saf. J. 2014, 68, 61–70.

- Foggia, P.; Saggese, A.; Vento, M. Real-time fire detection for video-surveillance applications using a combination of experts based on color, shape, and motion. IEEE Trans. Circuits Syst. Video Technol. 2015, 25, 1545–1556.

- Buemi, A.; Giacalone, D.; Naccari, F.; Spampinato, G. Efficient fire detection using fuzzy logic. In Proceedings of the 2016 IEEE 6th International Conference on Consumer Electronics—Berlin (ICCE-Berlin), Berlin, Germany, 5–7 September 2016; pp. 237–240.

- Cai, M.; Lu, X.; Wu, X.; Feng, Y. Intelligent video analysis-based forest fires smoke detection algorithms. In Proceedings of the 2016 12th International Conference on Natural Computation, Fuzzy Systems and Knowledge Discovery (ICNC-FSKD), Changsha, China, 13–15 August 2016; pp. 1504–1508.

- Zhao, Y.; Tang, G.; Xu, M. Hierarchical detection of wildfire flame video from pixel level to semantic level. Expert Syst. Appl. 2015, 42, 4097–4104.

- Stadler, A.; Windisch, T.; Diepold, K. Comparison of intensity flickering features for video based flame detection algorithms. Fire Saf. J. 2014, 66, 1–7.

- Barmpoutis, P.; Dimitropoulos, K.; Grammalidis, N. Real time video fire detection using spatio-temporal consistency energy. In Proceedings of the 2013 10th IEEE International Conference on Advanced Video and Signal Based Surveillance, Krakow, Poland, 27–30 August 2013; pp. 365–370.

- Wang, H.; Finn, A.; Erdinc, O.; Vincitore, A. Spatial-temporal structural and dynamics features for Video Fire Detection. In Proceedings of the 2013 IEEE Workshop on Applications of Computer Vision (WACV), Clearwater, FL, USA, 15–17 January 2013; pp. 513–519.

- Kim, S.; Kim, T. Fire detection using the brownian correlation descriptor. In Proceedings of the 2016 IEEE International Conference on Consumer Electronics-Asia (ICCE-Asia), Seoul, Korea, 26–28 October 2016; pp. 1–4.

- Székely, G.; Rizzo, M. Brownian distance covariance. Ann. Appl. Stat. 2009, 3, 1236–1265.

- Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2564–2571.

- Steffens, C.; Rodrigues, R.; Botelho, S. An unconstrained dataset for non-stationary video based fire detection. In Proceedings of the 2015 12th Latin American Robotics Symposium and 2015 3rd Brazilian Symposium on Robotics (LARS-SBR), Uberlandia, Brazil, 29–31 October 2015; pp. 25–30.

- Pignaton de Freitas, E.; da Costa, L.A.L.F.; Felipe Emygdio de Melo, C.; Basso, M.; Rodrigues Vizzotto, M.; Schein Cavalheiro Corrêa, M.; Dapper e Silva, T. Design, Implementation and Validation of a Multipurpose Localization Service for Cooperative Multi-UAV Systems. In Proceedings of the 2020 International Conference on Unmanned Aircraft Systems (ICUAS), Athens, Greece, 1–4 September 2020; pp. 295–302.

- Phan, C.; Liu, H. A cooperative UAV/UGV platform for wildfire detection and fighting. In Proceedings of the 2008 Asia Simulation Conference—7th International Conference on System Simulation and Scientific Computing, Beijing, China, 10–12 October 2008; pp. 494–498.

- Viguria, A.; Maza, I.; Ollero, A. Distributed Service-Based Cooperation in Aerial/Ground Robot Teams Applied to Fire Detection and Extinguishing Missions. Adv. Robot. 2010, 24, 1–23.

- Akhloufi, M.A.; Castro, N.A.; Couturier, A. UAVs for wildland fires. In Proceedings of the SPIE 10643, Autonomous Systems: Sensors, Vehicles, Security, and the Internet of Everything. International Society for Optics and Photonics, Orlando, FL, USA, 15–19 April 2018; Volume 10643.

- Akhloufi, M.A.; Toulouse, T.; Rossi, L. Multiple spectrum vision for wildland fires. In Proceedings of the SPIE 10661, Thermosense: Thermal Infrared Applications XL. International Society for Optics and Photonics, Orlando, FL, USA, 15–19 April 2018; Volume 10661, p. 1066105.

- Toulouse, T.; Rossi, L.; Akhloufi, M.A.; Pieri, A.; Maldague, X. A multimodal 3D framework for fire characteristics estimation. Meas. Sci. Technol. 2018, 29, 025404.

- Akhloufi, M.; Toulouse, T.; Rossi, L.; Maldague, X. Multimodal three-dimensional vision for wildland fires detection and analysis. In Proceedings of the 2017 Seventh International Conference on Image Processing Theory, Tools and Applications (IPTA), Montreal, QC, Canada, 28 November–1 December 2017; pp. 1–6.

- Akhloufi, M.; Toulouse, T.; Rossi, L.; Maldague, X. Three-dimensional infrared-visible framework for wildland fires. In Proceedings of the 14th International Workshop on Advanced Infrared Technology and Applications (AITA), Quebec City, QC, Canada, 27–29 September 2017; pp. 65–69.

| Authors | Year | Validation |
|---|---|---|
| Casbeer et al. [32] | 2006 | Simulation |
| Martins et al. [33] | 2007 | Simulation |
| Merino et al. [30,34,35] | 2007 | Practical |
| Sujit et al. [36] | 2007 | Simulation |
| Alexis et al. [37] | 2009 | Simulation |
| Ambrosia et al. [17] | 2011 | Practical |
| Bradley and Taylor [38] | 2011 | Near practical |
| Hinkley and Zajkowski [18] | 2011 | Practical |
| Kumar et al. [39] | 2011 | Simulation |
| Martínez-de Dios et al. [40] | 2011 | Practical |
| Pastor et al. [41] | 2011 | None |
| Belbachir et al. [42] | 2015 | Simulation |
| Karma et al. [43] | 2015 | Practical |
| Merino et al. [44,45] | 2015 | Practical |
| Ghamry and Zhang [46,47] | 2016 | Simulation |
| Ghamry et al. [31] | 2017 | Simulation |
| Sun et al. [48] | 2017 | Near practical |
| Yuan et al. [49] | 2017 | Simulation |
| Yuan et al. [50,51,52,53] | 2017 | Near practical |
| Lin et al. [54] | 2018 | Simulation |
| Wardihani et al. [55] | 2018 | Practical |
| Zhao et al. [56] | 2018 | Simulation |
| Pham et al. [57,58] | 2018 | Simulation |
| Julian and Kochenderfer [59] | 2019 | Simulation |
| Aydin et al. [26] | 2019 | Near practical |
| Jiao et al. [60,61] | 2020 | Near practical |
| Seraj and Gombolay [62] | 2020 | Simulation |

| Authors | Sensing Mode | Tasks |
|---|---|---|
| Casbeer et al. [32] | IR | Monitoring |
| Martins et al. [33] | NIR, Visual | Detection |
| Merino et al. [30,34,35] | IR, Visual | Detection, Monitoring |
| Sujit et al. [36] | Not specified | Monitoring |
| Alexis et al. [37] | Not specified | Monitoring |
| Ambrosia et al. [17] | Multispectral | Detection, Diagnosis |
| Bradley and Taylor [38] | IR | Detection |
| Hinkley and Zajkowski [18] | IR | Monitoring |
| Kumar et al. [39] | IR | Monitoring, Fighting |
| Martínez-de Dios et al. [40] | IR, Visual | Monitoring, Diagnosis |
| Pastor et al. [41] | IR, Visual | Detection, Monitoring |
| Belbachir et al. [42] | Temperature | Detection |
| Karma et al. [43] | Not specified | Monitoring |
| Merino et al. [44,45] | IR, Visual | Detection, Monitoring |
| Ghamry and Zhang [46,47] | Not specified | Detection, Monitoring |
| Ghamry et al. [31] | Not specified | Fighting |
| Sun et al. [48] | Visual | Detection, Monitoring |
| Yuan et al. [49] | IR | Detection |
| Yuan et al. [50,51,52,53] | Visual | Detection |
| Lin et al. [54] | Temperature | Monitoring |
| Wardihani et al. [55] | Temperature | Detection |
| Zhao et al. [56] | Visual | Detection |
| Pham et al. [57,58] | IR, Visual | Monitoring |
| Julian and Kochenderfer [59] | Not specified | Monitoring |
| Aydin et al. [26] | IR, Visual | Fighting |
| Jiao et al. [60,61] | Visual | Detection |
| Seraj and Gombolay [62] | Visual | Monitoring |

| Authors | Autonomy | Organization | Coordination |
|---|---|---|---|
| Casbeer et al. [32] | Autonomous | Multiple UAV | Decentralized |
| Martins et al. [33] | Autonomous | Single UAV | None |
| Merino et al. [30,34,35] | Autonomous | Multiple UAV | Centralized |
| Sujit et al. [36] | Autonomous | Multiple UAV | Decentralized |
| Alexis et al. [37] | Autonomous | Multiple UAV | Decentralized |
| Ambrosia et al. [17] | Piloted | Single UAV | None |
| Bradley and Taylor [38] | Piloted | Single UAV | None |
| Hinkley and Zajkowski [18] | Piloted | Single UAV | None |
| Kumar et al. [39] | Autonomous | Multiple UAV | Decentralized |
| Martínez-de Dios et al. [40] | Piloted | Single UAV | None |
| Pastor et al. [41] | Piloted | Single UAV | None |
| Belbachir et al. [42] | Autonomous | Multiple UAV | Centralized |
| Karma et al. [43] | Piloted | Multiple UAV and UGV | Centralized |
| Merino et al. [44,45] | Autonomous | Multiple UAV | Centralized |
| Ghamry and Zhang [46,47] | Autonomous | Multiple UAV | Centralized |
| Ghamry et al. [31] | Autonomous | Multiple UAV | Decentralized |
| Sun et al. [48] | Piloted | Single UAV | None |
| Yuan et al. [49] | Not specified | Single UAV | None |
| Yuan et al. [50,51,52,53] | Not specified | Single UAV | None |
| Lin et al. [54,63] | Autonomous | Multiple UAV | Centralized |
| Wardihani et al. [55] | Near autonomous | Single UAV | None |
| Zhao et al. [56] | Piloted | Single UAV | None |
| Pham et al. [57,58] | Autonomous | Multiple UAV | Decentralized |
| Julian and Kochenderfer [59] | Autonomous | Multiple UAV | Decentralized |
| Aydin et al. [26] | Autonomous | Multiple UAV | Centralized |
| Jiao et al. [60,61] | Not specified | Single UAV | None |
| Seraj and Gombolay [62] | Autonomous | Multiple UAV | Decentralized |

| Spectral Band | Wavelength (µm) |
|---|---|
| Visible | 0.4–0.75 |
| Near Infrared (NIR) | 0.75–1.4 |
| Short Wave IR (SWIR) | 1.4–3 |
| Mid Wave IR (MWIR) | 3–8 |
| Long Wave IR (LWIR) | 8–15 |
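
As a small illustration of how the band limits in the spectral band table can be used, the Python sketch below maps a wavelength to its band and combines it with Wien's displacement law, λ_max = b/T with b ≈ 2897.77 µm·K, to find the band in which an ideal blackbody at a given temperature emits most strongly. The band limits are copied from the table; treating each lower bound as inclusive and each upper bound as exclusive, as well as the names `BANDS`, `band_of` and `peak_wavelength_um`, are assumptions of this sketch. Real flames, smoke and vegetation are not ideal blackbodies, so this is only a first-order approximation.

```python
# Band limits in micrometres, copied from the spectral band table.
# Assumption: lower bound inclusive, upper bound exclusive.
BANDS = [
    ("Visible", 0.4, 0.75),
    ("NIR", 0.75, 1.4),
    ("SWIR", 1.4, 3.0),
    ("MWIR", 3.0, 8.0),
    ("LWIR", 8.0, 15.0),
]

# Wien's displacement constant b, in micrometre-kelvin.
WIEN_B_UM_K = 2897.771955


def band_of(wavelength_um):
    """Return the name of the band containing wavelength_um, or None outside 0.4-15 um."""
    for name, low, high in BANDS:
        if low <= wavelength_um < high:
            return name
    return None


def peak_wavelength_um(temperature_k):
    """Wien's law: wavelength (um) of peak blackbody emission at temperature_k (kelvin)."""
    if temperature_k <= 0:
        raise ValueError("temperature must be positive")
    return WIEN_B_UM_K / temperature_k


if __name__ == "__main__":
    for t in (300, 800, 1000):
        lam = peak_wavelength_um(t)
        print(f"{t} K -> peak at {lam:.2f} um ({band_of(lam)})")
```

With these limits, a 300 K scene peaks near 9.66 µm (LWIR), an 800 K source near 3.62 µm (MWIR), and a 1000 K source near 2.90 µm, at the upper edge of the SWIR range as defined in the table, so ambient-temperature and fire-temperature sources peak in different bands.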

| Input | Statistical Measures | Spatial Features | Temporal Features |
|---|---|---|---|
| Color, IR and radiance images | Mean value, mean difference, color histogram, variance and entropy. | LBP, SURF, shape, convex-hull-to-perimeter ratio, bounding-box-to-perimeter ratio. | Shape and intensity variations, centroid displacement, ROI overlap, fire-to-non-fire transitions, movement gradient histograms and Brownian correlation. |
| Wavelet transform | Mean energy content. | Mean blob energy content. | Diagonal filter difference; high-pass filter zero crossings of the wavelet transform applied to area variation. |
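
Several of the statistical, spatial and temporal features listed in the feature table are straightforward to compute once a fire region has been segmented. The standard-library Python sketch below computes the mean, variance and entropy of the intensities inside a binary fire mask, the displacement of the region centroid between two frames, and a bounding-box-to-perimeter ratio. The function names, the use of one histogram bin per distinct intensity for the entropy, the 4-connected pixel-edge perimeter, and the definition of the ratio as bounding-box perimeter divided by region perimeter are all assumptions made for illustration; the surveyed methods may define these features differently.

```python
import math
from collections import Counter


def _fire_pixels(mask):
    """Coordinates (row, col) of every non-zero pixel in a binary fire mask."""
    pixels = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    if not pixels:
        raise ValueError("mask contains no fire pixels")
    return pixels


def region_statistics(intensity, mask):
    """Statistical measures: mean, variance and Shannon entropy (bits) of the
    intensity values inside the mask, using one histogram bin per distinct value."""
    values = [intensity[r][c] for r, c in _fire_pixels(mask)]
    n = len(values)
    mean = sum(values) / n
    variance = sum((v - mean) ** 2 for v in values) / n
    entropy = sum(-(k / n) * math.log2(k / n) for k in Counter(values).values())
    return mean, variance, entropy


def centroid(mask):
    """Mean (row, col) position of the fire pixels."""
    pixels = _fire_pixels(mask)
    n = len(pixels)
    return sum(r for r, _ in pixels) / n, sum(c for _, c in pixels) / n


def centroid_displacement(mask_prev, mask_curr):
    """Temporal feature: Euclidean shift of the region centroid between two frames, in pixels."""
    (r0, c0), (r1, c1) = centroid(mask_prev), centroid(mask_curr)
    return math.hypot(r1 - r0, c1 - c0)


def perimeter(mask):
    """Number of pixel edges separating a fire pixel from background or the image border."""
    height, width = len(mask), len(mask[0])
    edges = 0
    for r, c in _fire_pixels(mask):
        for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
            rr, cc = r + dr, c + dc
            if not (0 <= rr < height and 0 <= cc < width) or not mask[rr][cc]:
                edges += 1
    return edges


def bbox_to_perimeter_ratio(mask):
    """Spatial feature: bounding-box perimeter divided by region perimeter.
    For a connected region this is at most 1.0 and drops as the boundary
    becomes more indented."""
    pixels = _fire_pixels(mask)
    rows = [r for r, _ in pixels]
    cols = [c for _, c in pixels]
    bbox_perimeter = 2 * ((max(rows) - min(rows) + 1) + (max(cols) - min(cols) + 1))
    return bbox_perimeter / perimeter(mask)


if __name__ == "__main__":
    intensity = [[10, 10, 10, 10],
                 [10, 200, 220, 10],
                 [10, 200, 220, 10],
                 [10, 10, 10, 10]]
    frame1 = [[0, 0, 0, 0],
              [0, 1, 1, 0],
              [0, 1, 1, 0],
              [0, 0, 0, 0]]
    frame2 = [[0, 0, 0, 0],
              [0, 0, 1, 1],
              [0, 0, 1, 1],
              [0, 0, 0, 0]]
    print(region_statistics(intensity, frame1))   # (210.0, 100.0, 1.0)
    print(centroid_displacement(frame1, frame2))  # 1.0
    print(bbox_to_perimeter_ratio(frame1))        # 1.0
```

For a single connected region the ratio reaches 1.0 for compact shapes such as a filled rectangle and decreases as the boundary becomes more indented; a U-shaped region, for example, gives 10/12.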

| Dataset | Description | Wildland Fires | Aerial Footage | Annotations |
|---|---|---|---|---|
| FURG [114] | 14,397 fire frames in 24 videos from static and moving cameras. | No | No | Fire bounding boxes |
| BowFire [93] | 186 fire and non-fire images. | No | No | Fire masks |
| Corsican Fire DB [86] | 500 RGB and 100 multimodal images. | All | Few | Fire masks |
| VisiFire [104] | 14 fire videos, 15 smoke videos, 2 videos containing fire-like objects. | 17 videos | 7 videos | No |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Akhloufi, M.A.; Couturier, A.; Castro, N.A. Unmanned Aerial Vehicles for Wildland Fires: Sensing, Perception, Cooperation and Assistance. Drones 2021, 5, 15. https://doi.org/10.3390/drones5010015
