Cattle Detection Using Oblique UAV Images

Abstract

1. Introduction

2. Material and Methods

2.1. Dataset

2.2. Experimental Setup

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Block Size (pixels) | Number of Images in Each Class (Cattle and Non-Cattle) |
|---|---|
| 224 × 224 | 276 |
| 112 × 112 | 856 |
| 56 × 56 | 1754 |
| 28 × 28 | 3530 |
| 14 × 14 | 8984 |

| Distance | Animals (% of Total) | Blocks with Animals (% of Total) |
|---|---|---|
| 30–50 m | 37 | 65 |
| 50–100 m | 20 | 18 |
| 100–250 m | 43 | 17 |
| over 250 m | - | - |

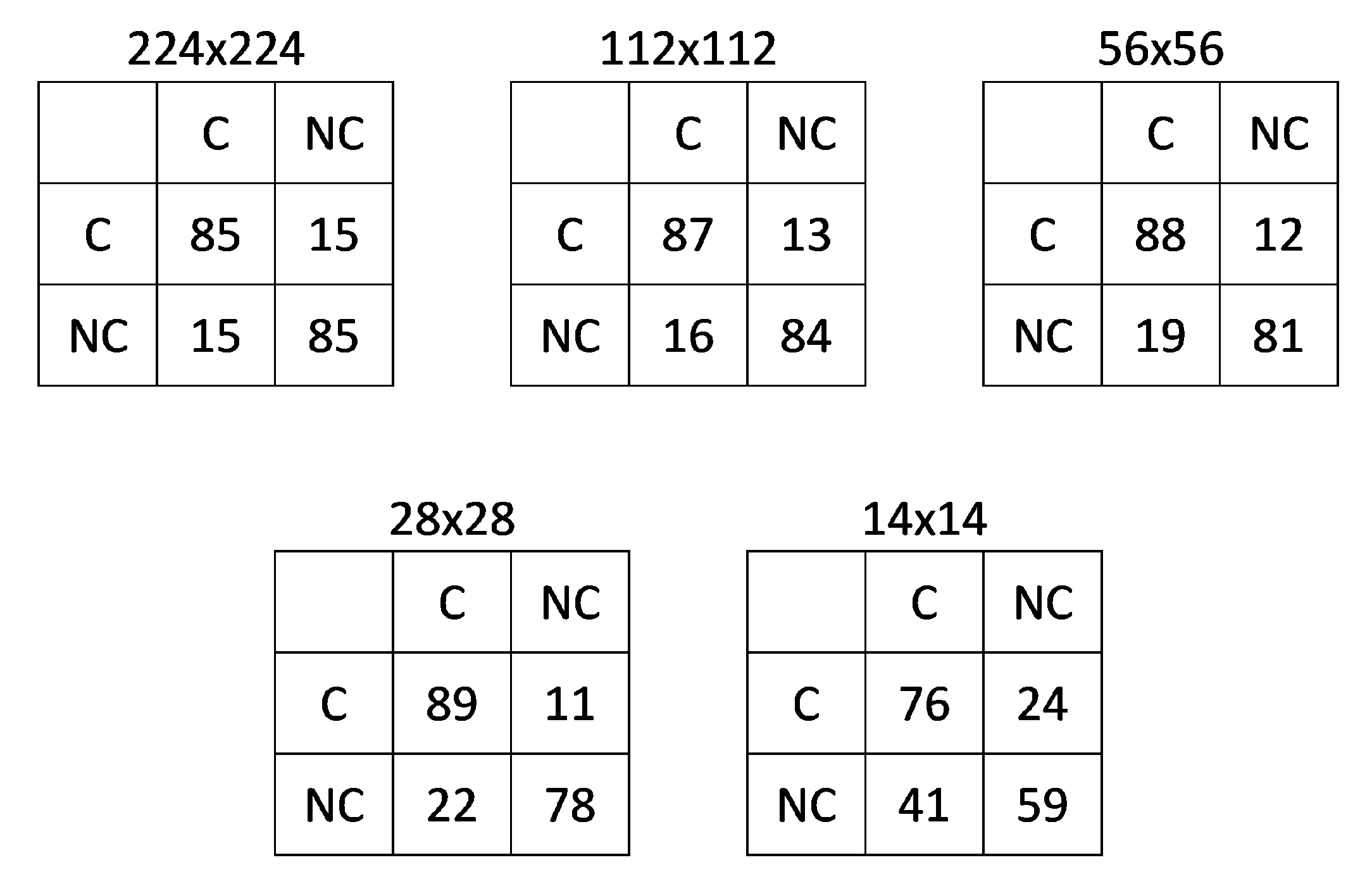

| Block Size | Accuracy | Precision | Recall | F1 Score |
|---|---|---|---|---|
| 224 × 224 | 0.87 | 0.92 | 0.87 | 0.87 |
| | 0.85 | 0.85 | 0.85 | 0.85 |
| | 0.81 | 0.79 | 0.81 | 0.82 |
| 112 × 112 | 0.87 | 0.87 | 0.95 | 0.87 |
| | 0.85 | 0.84 | 0.87 | 0.85 |
| | 0.83 | 0.80 | 0.82 | 0.83 |
| 56 × 56 | 0.85 | 0.84 | 0.90 | 0.86 |
| | 0.84 | 0.82 | 0.88 | 0.85 |
| | 0.84 | 0.81 | 0.87 | 0.84 |
| 28 × 28 | 0.85 | 0.82 | 0.91 | 0.85 |
| | 0.83 | 0.80 | 0.89 | 0.84 |
| | 0.81 | 0.77 | 0.88 | 0.83 |
| 14 × 14 | 0.71 | 0.70 | 0.78 | 0.71 |
| | 0.67 | 0.65 | 0.76 | 0.70 |
| | 0.65 | 0.63 | 0.73 | 0.69 |
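The four column headers in the table above (accuracy, precision, recall, F1 score) are standard binary classification metrics. As a minimal sketch, assuming each block is labelled either cattle or non-cattle and that "cattle" is the positive class, they can be computed from confusion counts as shown here. The `Confusion` class, the `metrics` function, and the example counts are hypothetical and chosen only for illustration; they are not taken from the study.

```python
from dataclasses import dataclass


@dataclass
class Confusion:
    """Confusion counts for a binary cattle / non-cattle block classifier."""
    tp: int  # cattle blocks predicted as cattle
    fp: int  # non-cattle blocks predicted as cattle
    fn: int  # cattle blocks predicted as non-cattle
    tn: int  # non-cattle blocks predicted as non-cattle


def metrics(c: Confusion) -> dict[str, float]:
    """Return accuracy, precision, recall and F1, guarding against division by zero."""
    total = c.tp + c.fp + c.fn + c.tn
    accuracy = (c.tp + c.tn) / total if total else 0.0
    precision = c.tp / (c.tp + c.fp) if (c.tp + c.fp) else 0.0
    recall = c.tp / (c.tp + c.fn) if (c.tp + c.fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


# Hypothetical counts for illustration only (not data from the paper).
example = Confusion(tp=90, fp=15, fn=10, tn=85)
scores = metrics(example)
for name, value in scores.items():
    print(f"{name}: {value:.2f}")
```

With balanced classes, as in the dataset table where both classes contain the same number of images, accuracy is not skewed by class frequency, so it can be read alongside precision and recall without reweighting.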

| Distance (m) | 224 × 224 | 112 × 112 | 56 × 56 | 28 × 28 | 14 × 14 |
|---|---|---|---|---|---|
| 30–50 | 0.88 | 0.89 | 0.90 | 0.89 | 0.72 |
| 50–100 | 0.82 | 0.86 | 0.86 | 0.89 | 0.72 |
| 100–250 | 0.76 | 0.80 | 0.84 | 0.87 | 0.75 |

| Distance (m) | 224 × 224 | 112 × 112 | 56 × 56 | 28 × 28 | 14 × 14 |
|---|---|---|---|---|---|
| 30–50 | 0 | 0 | 0 | 0 | 0 |
| 50–100 | 6 | 2 | 0 | 0 | 11 |
| 100–250 | 25 | 18 | 11 | 5 | 17 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Barbedo, J.G.A.; Koenigkan, L.V.; Santos, P.M. Cattle Detection Using Oblique UAV Images. Drones 2020, 4, 75. https://doi.org/10.3390/drones4040075
