1. Introduction

The ever-advancing field of artificial intelligence is constantly finding new uses in many activities and businesses. Vehicle insurance and expertise companies involve activities such as automobile inspections in which many images are taken to record and verify not only possible damages but also identity, in the form of license plate and vehicle identification number, and usage, like travelled distance. Possible mistakes in acquiring these data can result in problems ranging from insurance fraud allegations to uninsured vehicles being involved in accidents. Experts performing routine activities such as manually typing data in the field are susceptible to these kinds of mistakes. Computer vision techniques, combined with machine learning models, can be used to produce systems that are able to extract this information from images directly. This can have the benefit of speeding up the task of data acquisition by skipping manual typing entirely or, at least, provide a layer of verification by notifying the expert when the data they introduced does not match the automatic reading, indicating there may have been a typing mistake or a problem with how the image was taken. Blurred, poorly illuminated images make for deficient expertise material.

2. Methodology

2.1. License Plate Reading

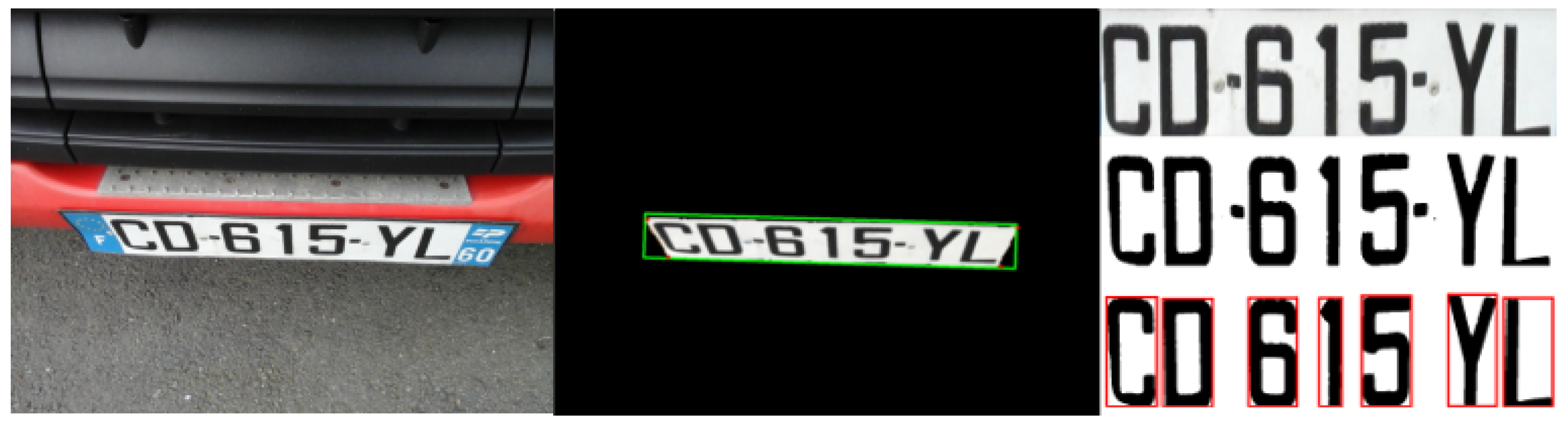

To read license plate numbers, a hybrid system was developed. First, the license plate is localised and segmented from the image using a novel convolutional neural network based on U-net [

1] architecture. From the segmentation map, the four corner points of the license plate rectangle are obtained so a projection transformation can be applied. With this transformation we obtain a flattened, standardised image of just the license plate, from which we can extract the characters using computer vision techniques.

Figure 1 shows a summary of these steps. Each character image is then classified using a convolutional neural network. Finally, the ordered character list is filtered using regular expressions to fix possible errors and classified as a Spanish, French or incorrectly read license plate.

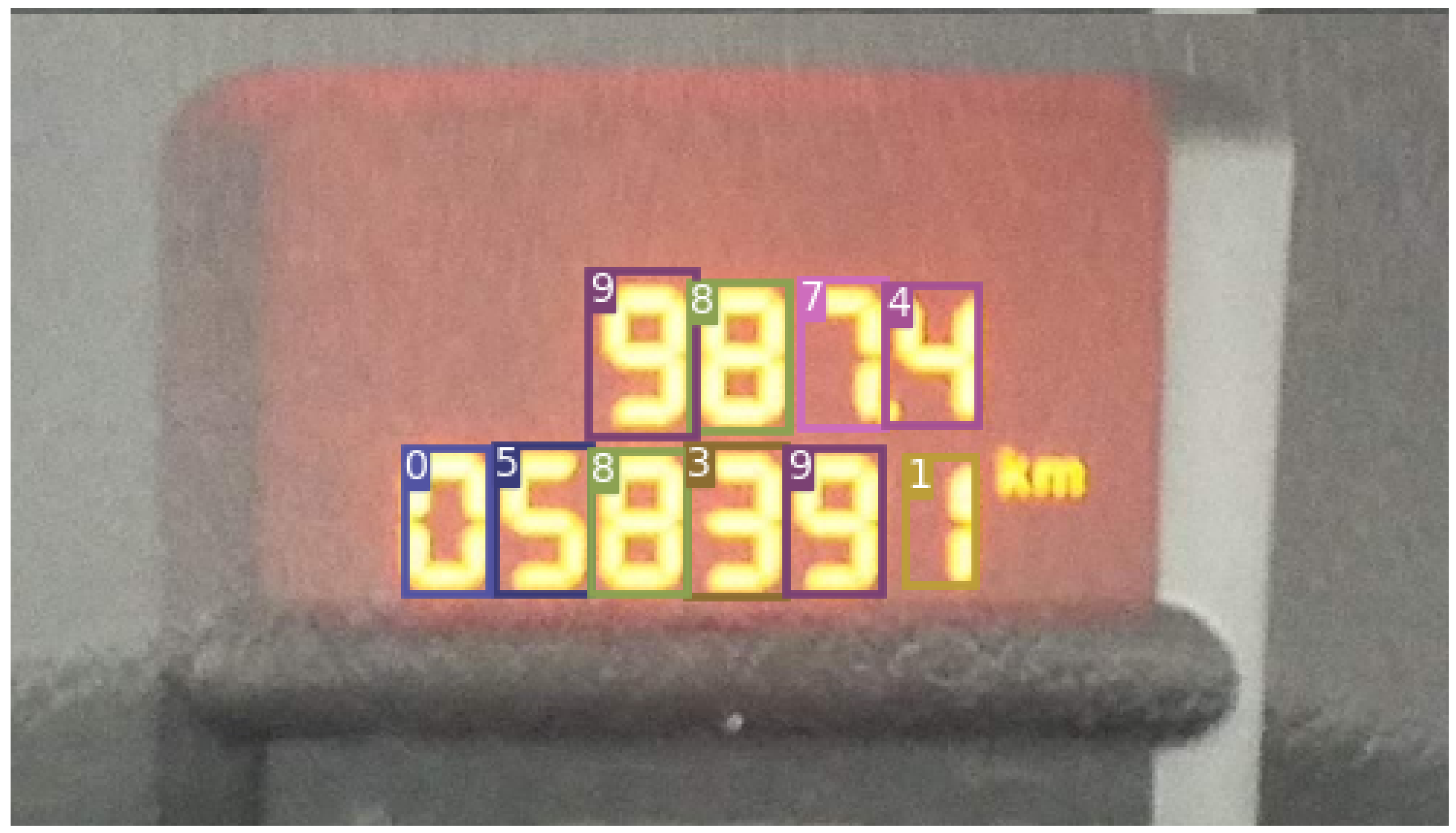

2.2. Odometer Reading

For odometers, a different approach was taken due to the inherent variability of analysing many different dashboards from any car make or model. To read the kilometre count, the user is asked to mark a point in the image over the digits that they want read. This way the system can limit the surface of the image that is analysed, as well as know which transcription is the one of interest, avoiding unnecessary processing and returning only what was requested from an image that may contain irrelevant data from the trip counter to time and date. A standardised window is taken from the image around the marked point and fed to a YOLOv3 [

2] network. This model segments and classifies the numbers found in the image fitting a bounding box for each, as seen on

Figure 2. Next, the user-supplied point is used to determine the extent of the figure of interest, including similar numbers in close proximity. For this, the size and position of already included numbers is used, providing flexibility to compensate for much of the heterogeneity of these images.

3. Results

A test dataset of 251 real images of varying illumination and quality was constructed in order to evaluate license plate reading. Of these, 236 were perfectly transcribed by the system, achieving an accuracy of 94.02%. For the remaining 15 incorrectly read, the system was able to report an image quality problem in 12 of them, while three mistakes, 1.20%, went unnoticed. In total, 100 dashboard images were used to test the odometer reading. The system read 75 of them correctly for an accuracy of 75%.

Funding

This research was co-funded by Ministerio de Ciencia, Innovación y Universidades and the European Union (European Regional Development Fund—ERDF) as part of the RTC-2017-5908-7 research project. We wish to acknowledge the support received from the Centro de Investigación de Galicia CITIC, funded by Consellería de Educación, Universidade e Formación Profesional from Xunta de Galicia and European Union. (European Regional Development Fund - FEDER Galicia 2014-2020 Program) by grant ED431G 2019/01.

Acknowledgments

This work was supported by and used material from project STEPS (RTC-2017-5908-7).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention, Proceedings of the MICCAI 2015, 18th International Conference, Part III, Munich, Germany, 5–9 October 2015; Navab, N., Hornegger, J., Wells, W.M., Frangi, A.F., Eds.; Springer International Publishing: Cham, Switzerland, 2015; pp. 234–241. [Google Scholar]

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.027674. [Google Scholar]

| Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).