Design and Feasibility Evaluation of Self-Reporting Application for Indoor Air Quality and Health Measures

Abstract

1. Introduction

2. Materials and Methods

2.1. Self-Reporting Application

2.2. Feasibility Pilot

2.3. Application Questionnaires

2.4. Application User Experience

3. Results

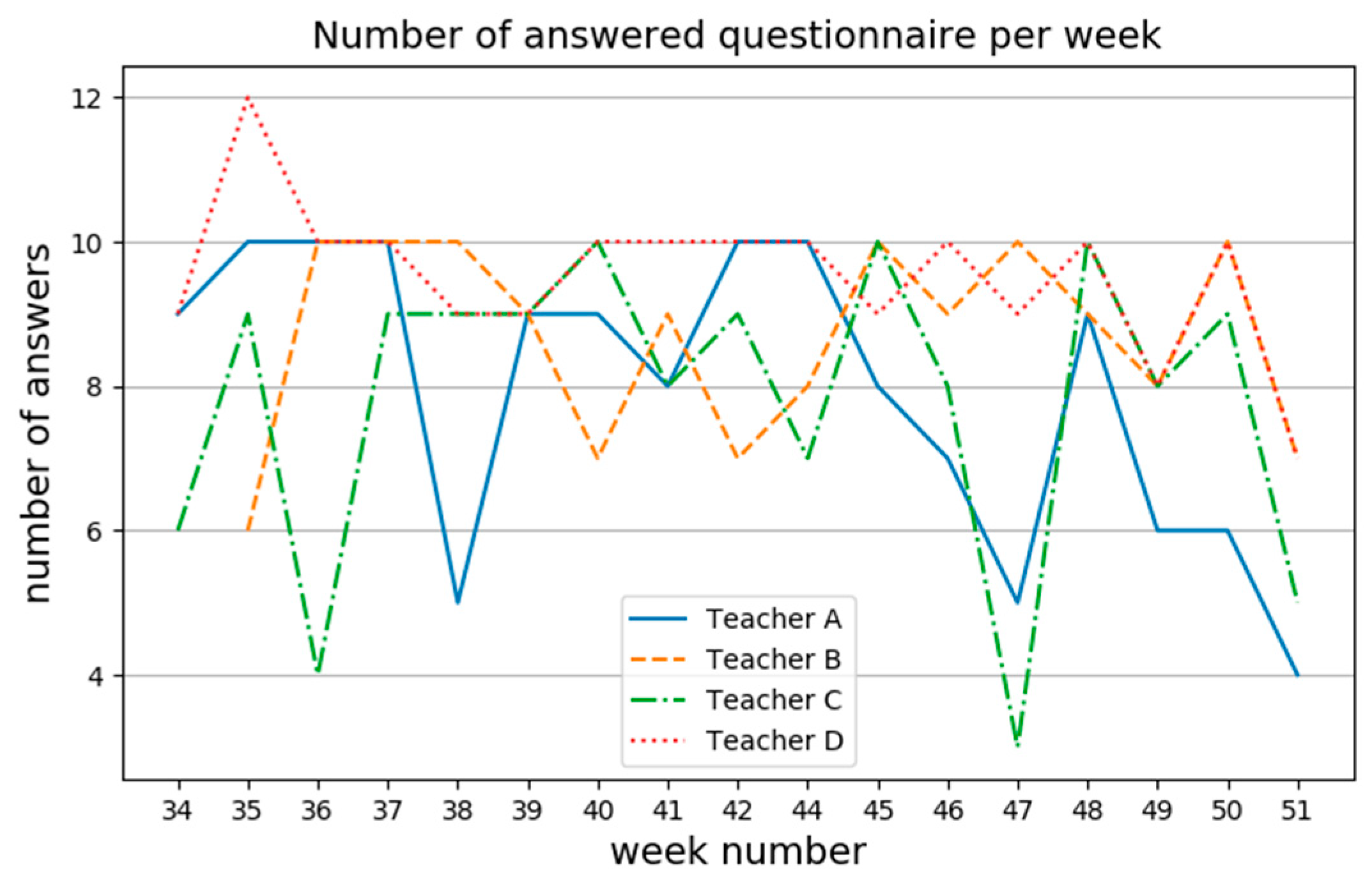

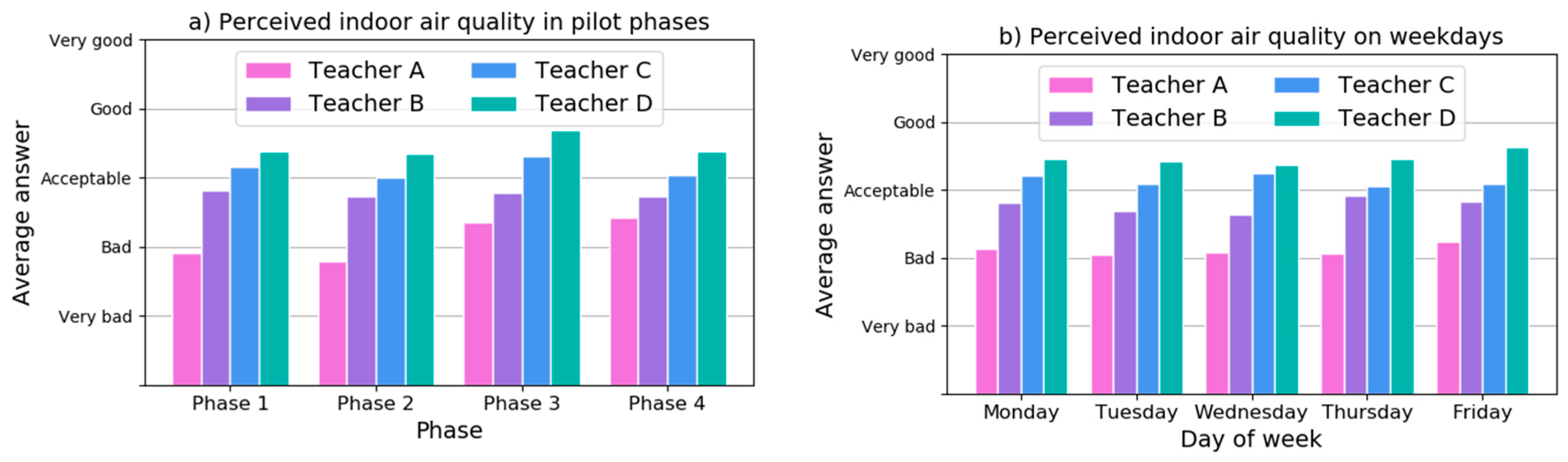

3.1. Self-Reporting Application Answers

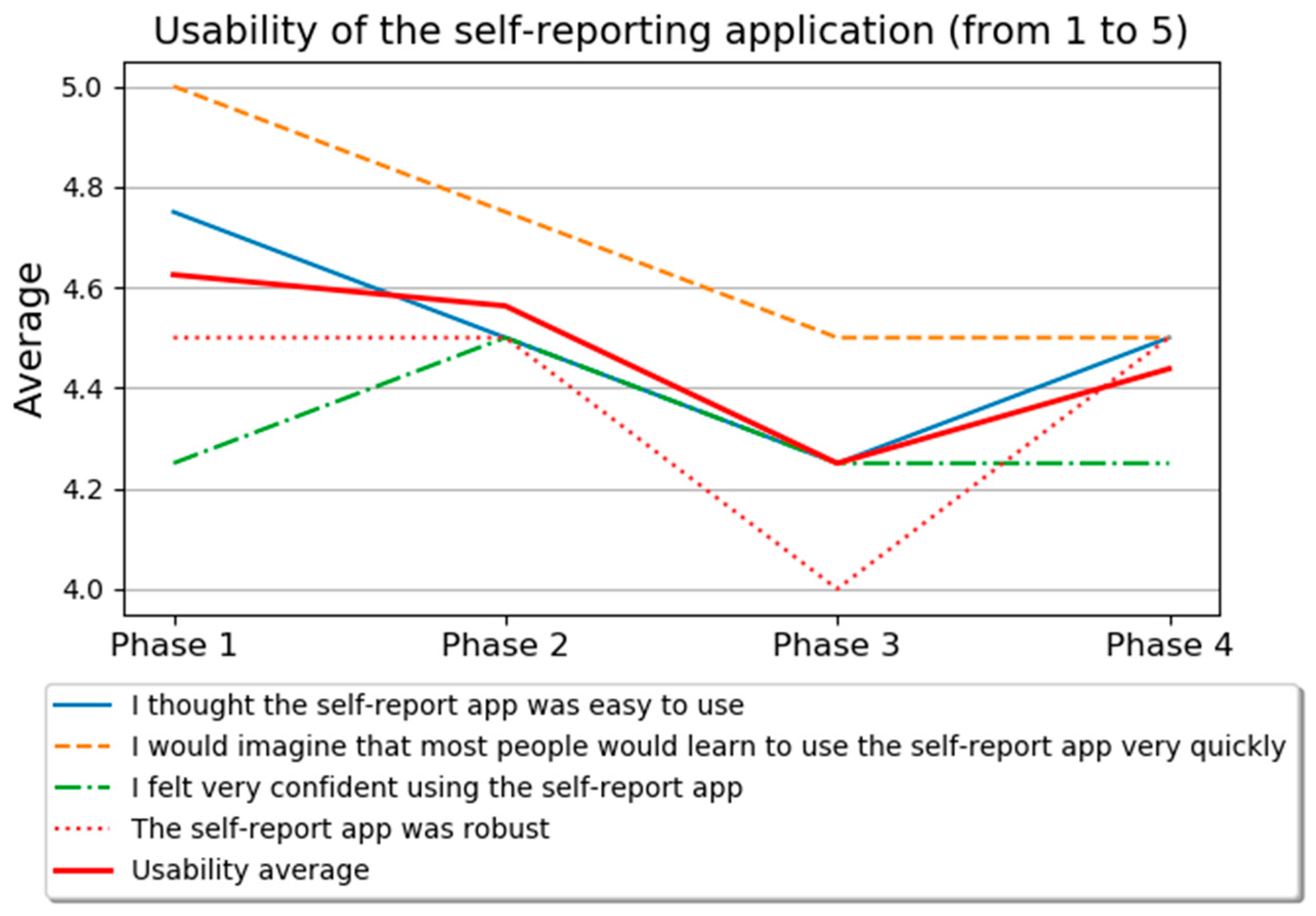

3.2. User Experience Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Question | Answer Alternatives | | | | |
|---|---|---|---|---|---|
| (M1) How rested did you feel in the morning? | Very tired | Quite tired | Neither rested nor tired | Quite rested | Very rested |
| (M2) How would you estimate the indoor air quality at the moment? * | Very bad | Bad | Acceptable | Good | Very good |
| (M3) How would you estimate the freshness of the air at the moment? | Extremely stuffy | Stuffy | Neutral | Fresh | Extremely fresh |
| (M4) How would you estimate the odor intensity at the moment? | Unbearable odor | Strong odor | Moderate odor | Slight odor | No odor |
| (M5) How would you estimate the temperature at the moment? | Hot | Warm | Neutral | Cool | Cold |
| (M6) How would you estimate the air humidity at the moment? | Extremely dry | Dry | Neutral | Humid | Extremely humid |
| (M7) How would you estimate the noise level at the moment? | Extremely noisy | Noisy | Neutral | Quiet | Extremely quiet |
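To analyze the answers numerically, each verbal alternative has to be mapped to an ordinal value. The sketch below assumes, for illustration only, that the five alternatives of each morning item are coded 1 to 5 in the left-to-right order of the table; the coding actually used in the study is not stated here, and the names `MORNING_ITEMS`, `encode` and `encode_response` are hypothetical.

```python
# ASSUMPTION: alternatives are coded 1-5 in the left-to-right order of the table.
# Item IDs and labels follow the morning questionnaire; the names are hypothetical.
MORNING_ITEMS: dict[str, tuple[str, ...]] = {
    "M1": ("Very tired", "Quite tired", "Neither rested nor tired", "Quite rested", "Very rested"),
    "M2": ("Very bad", "Bad", "Acceptable", "Good", "Very good"),
    "M3": ("Extremely stuffy", "Stuffy", "Neutral", "Fresh", "Extremely fresh"),
    "M4": ("Unbearable odor", "Strong odor", "Moderate odor", "Slight odor", "No odor"),
    "M5": ("Hot", "Warm", "Neutral", "Cool", "Cold"),
    "M6": ("Extremely dry", "Dry", "Neutral", "Humid", "Extremely humid"),
    "M7": ("Extremely noisy", "Noisy", "Neutral", "Quiet", "Extremely quiet"),
}


def encode(item: str, label: str) -> int:
    """Return the 1-based ordinal code of an answer label for a morning item."""
    try:
        return MORNING_ITEMS[item].index(label) + 1
    except (KeyError, ValueError) as exc:
        # KeyError: unknown item ID; ValueError: label not among the alternatives.
        raise ValueError(f"unknown item or answer: {item!r}, {label!r}") from exc


def encode_response(answers: dict[str, str]) -> dict[str, int]:
    """Encode one completed morning questionnaire into ordinal codes."""
    return {item: encode(item, label) for item, label in answers.items()}
```

Under this coding the scales are not all oriented the same way: for M4 a higher code means less odor, whereas for M5 a higher code simply means cooler, not better, so averages should be read against each item's own scale.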

| Question | Answer Alternatives | | | | |
|---|---|---|---|---|---|
| (A1) There is positive and negative stress. What kind of stress did you feel today? * | Strong negative stress | Negative stress | Neutral | Positive stress | Strong positive stress |
| (A2) How productively did you work today? | Much less productively than usual | Less productively than usual | As usual | More productively than usual | Much more productively than usual |
| (A3) How would you estimate the indoor air quality at the moment? ** | Very bad | Bad | Acceptable | Good | Very good |
| (A4) Are you bothered at the moment by the indoor air quality of your office? (Indoor air quality is affected by, for example, odor, temperature, air humidity and noise.) | No | Yes (if YES, questions M3–M7 from the morning questionnaire are repeated) | | | |
| (A5) Do you have indoor air quality related symptoms at the moment? (Symptoms can be headache, nausea, breathing problems, etc.) | No | Yes (if YES, eight follow-up questions "Do you have X symptoms at the moment?" are asked, with the answer alternatives not at all, slightly, to some extent, quite a lot, very much; X = head/nose/throat/breathing/eye/skin/nausea/tiredness) | | | |
| (A6) Which of the following actions did you take during the day to improve indoor air quality? | Nothing, Opened a window, Opened a door, Adjusted air conditioning, Adjusted thermostat, Something else | | | | |
| (A7) How would you estimate the calmness/restlessness of the pupils today? | Extremely restless | Restless | Neutral | Calm | Extremely calm |
| (A8) How would you estimate the concentration of the pupils today? | Extremely non-concentrated | Non-concentrated | Neutral | Concentrated | Extremely concentrated |
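The afternoon questionnaire has two branching points: a YES to A4 repeats the morning items M3–M7, and a YES to A5 triggers eight symptom follow-ups. A minimal sketch of the resulting question flow follows. The follow-up identifiers such as `A5_head` and the function name `afternoon_questions` are hypothetical, and placing each block of follow-ups directly after the question that triggers it is an assumption about the app's ordering.

```python
# Hypothetical identifiers for the conditional parts of the afternoon questionnaire.
REPEATED_MORNING_ITEMS = ("M3", "M4", "M5", "M6", "M7")
SYMPTOMS = ("head", "nose", "throat", "breathing", "eye", "skin", "nausea", "tiredness")


def afternoon_questions(bothered_by_iaq: bool, has_symptoms: bool) -> list[str]:
    """Return the ordered question IDs shown, given the answers to A4 and A5.

    ASSUMPTION: follow-ups are inserted right after the question that triggers them.
    """
    questions = ["A1", "A2", "A3", "A4"]
    if bothered_by_iaq:  # A4 answered YES: repeat the morning perception items
        questions.extend(REPEATED_MORNING_ITEMS)
    questions.append("A5")
    if has_symptoms:  # A5 answered YES: one follow-up per symptom type
        questions.extend(f"A5_{symptom}" for symptom in SYMPTOMS)
    questions.extend(["A6", "A7", "A8"])
    return questions
```

A respondent who answers NO to both A4 and A5 therefore sees eight questions, while one who answers YES to both sees 8 + 5 + 8 = 21, which bounds the effort each afternoon report can require.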

| Question | P1 | P2 | P3 | P4 | Scale |
|---|---|---|---|---|---|
| The self-report app was robust. | x | x | x | x | 5-point Likert |
| I thought the self-report app was easy to use. | x | x | x | x | 5-point Likert |
| I would imagine that most people would learn to use the self-report app very quickly. | x | x | x | x | 5-point Likert |
| I felt very confident using the self-report app. | x | x | x | x | 5-point Likert |
| How likely is it that you would recommend the self-report app to a friend or colleague? | x | x | x | x | 11-point |
| I think that I would like to use the self-report app frequently. | x | 5-point Likert | |||
| I found the self-report app unnecessarily complex. | x | 5-point Likert | |||
| I think that I would need the support of a technical person to be able to use the self-report app. | x | 5-point Likert | |||
| I found the various functions in the self-report app were well integrated. | x | 5-point Likert | |||
| I thought there was too much inconsistency in the self-report app. | x | 5-point Likert | |||
| I found the self-report app very cumbersome to use. | x | 5-point Likert | |||
| I needed to learn a lot of things before I could get going with the self-report app. | x | 5-point Likert | |||
| How useful did you find the self-report app? | x | 10-point | |||
| I would use the self-report app in the future. | x | 5-point Likert | |||
| Open feedback ("What did you think about the self-report app? What was good/bad about it?", "Tell us what kind of thoughts or comments you have related to the self-report app.") | x | x | x | x | Open |
| What kind of feedback would you like to give about the usability of the self-report app? | x | Open |
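Ten of the statements above are the items of the System Usability Scale (SUS), and the 11-point recommendation question is the Net Promoter question. No aggregate SUS or NPS value is given here, and with four participants, several items apparently answered by only one of them, such scores would be indicative at best. For reference, a minimal sketch of the standard scoring rules follows; the function names are hypothetical, and the ten SUS responses must be supplied in the canonical SUS item order, which differs from the row order of the table.

```python
# Canonical SUS item order: odd-numbered items are positively worded,
# even-numbered items negatively worded.
SUS_ITEMS = (
    "I think that I would like to use the self-report app frequently.",
    "I found the self-report app unnecessarily complex.",
    "I thought the self-report app was easy to use.",
    "I think that I would need the support of a technical person to be able to use the self-report app.",
    "I found the various functions in the self-report app were well integrated.",
    "I thought there was too much inconsistency in the self-report app.",
    "I would imagine that most people would learn to use the self-report app very quickly.",
    "I found the self-report app very cumbersome to use.",
    "I felt very confident using the self-report app.",
    "I needed to learn a lot of things before I could get going with the self-report app.",
)


def sus_score(responses: list[int]) -> float:
    """Score ten 1-5 SUS answers, given in SUS_ITEMS order, on a 0-100 scale."""
    if len(responses) != len(SUS_ITEMS):
        raise ValueError("SUS needs exactly ten responses")
    if any(r not in range(1, 6) for r in responses):
        raise ValueError("SUS responses must be integers from 1 to 5")
    total = 0
    for index, r in enumerate(responses):
        # Even index = items 1, 3, 5, ... (positive wording): contributes r - 1.
        # Odd index = items 2, 4, 6, ... (negative wording): contributes 5 - r.
        total += (r - 1) if index % 2 == 0 else (5 - r)
    return total * 2.5  # scale the 0-40 raw sum to 0-100


def net_promoter_score(ratings: list[int]) -> float:
    """NPS from 0-10 ratings: % promoters (9-10) minus % detractors (0-6)."""
    if not ratings:
        raise ValueError("at least one rating is required")
    if any(r not in range(0, 11) for r in ratings):
        raise ValueError("ratings must be integers from 0 to 10")
    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    return 100.0 * (promoters - detractors) / len(ratings)
```

Ratings of 7 and 8 count as passives: they enter the denominator but neither group, which is why a handful of responses can swing the NPS strongly.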

| Question | Teacher A average | Teacher A std | Teacher B average | Teacher B std | Teacher C average | Teacher C std | Teacher D average | Teacher D std |
|---|---|---|---|---|---|---|---|---|
| M1 | 1.72 | 0.51 | 2.74 | 0.82 | 2.47 | 0.94 | 3.31 | 0.78 |
| M2/A3 | 2.11 | 0.52 | 2.77 | 0.49 | 3.15 | 0.49 | 3.46 | 0.64 |
| M3 | 2.13 | 0.63 | 2.70 | 0.52 | 2.86 | 0.48 | 2.97 | 0.70 |
| M4 | 4.74 | 0.50 | 4.14 | 0.86 | 4.64 | 0.61 | 4.84 | 0.37 |
| M5 | 2.39 | 0.80 | 3.49 | 0.81 | 3.05 | 0.28 | 3.09 | 0.34 |
| M6 | 1.97 | 0.30 | 2.04 | 0.20 | 3.00 | 0.00 | 2.99 | 0.18 |
| M7 | 3.40 | 0.61 | 3.86 | 0.56 | 4.01 | 0.92 | 3.93 | 0.32 |
| A1 | 2.15 | 0.50 | 2.61 | 0.52 | 2.88 | 0.52 | 3.01 | 0.50 |
| A2 | 2.42 | 0.53 | 3.00 | 0.39 | 3.08 | 0.41 | 3.00 | 0.40 |
| A7 | 2.70 | 0.55 | 2.85 | 0.59 | 2.56 | 0.56 | 2.94 | 0.47 |
| A8 | 2.77 | 0.58 | 2.86 | 0.58 | 2.98 | 0.28 | 3.01 | 0.55 |
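The table reports, per teacher, the average and standard deviation (std) of the coded answers, with the identical indoor air quality question asked in the morning (M2) and in the afternoon (A3) reported as one row. A minimal sketch of that aggregation follows. It assumes 1–5 coding, treats the M2/A3 row as a simple pooling of both samples and uses the sample standard deviation; none of these choices is confirmed by the source, the helper names are hypothetical, and the example answers are invented for illustration, not study data.

```python
from statistics import mean, stdev


def pool_m2_a3(m2: list[int], a3: list[int]) -> list[int]:
    """Pool morning M2 and afternoon A3 answers (same IAQ question) into one sample.

    ASSUMPTION: the M2/A3 row is treated as a plain concatenation of both samples.
    """
    return list(m2) + list(a3)


def summarize(responses: dict[str, list[int]]) -> dict[str, tuple[float, float]]:
    """Return (average, sample std) per question, rounded to two decimals.

    statistics.stdev uses the n - 1 denominator and needs at least two answers;
    use statistics.pstdev instead for the population (n) version.
    """
    return {
        question: (round(float(mean(values)), 2), round(stdev(values), 2))
        for question, values in responses.items()
    }


if __name__ == "__main__":
    # Invented answers for one hypothetical teacher.
    teacher_x = {"M6": [3, 3, 3], "M2/A3": pool_m2_a3([2, 3], [3, 4])}
    print(summarize(teacher_x))  # {'M6': (3.0, 0.0), 'M2/A3': (3.0, 0.82)}
```

A std of exactly 0.00, as in one cell of the table, simply means that every coded answer for that teacher and question was identical.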


© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Similä, H.; Muuraiskangas, S.; Ronkainen, J.; Vehmas, K.; Kallio, J. Design and Feasibility Evaluation of Self-Reporting Application for Indoor Air Quality and Health Measures. Proceedings 2019, 31, 47. https://doi.org/10.3390/proceedings2019031047
