People-as-a-Service Dilemma: Humanizing Computing Solutions in High-Efficiency Applications

Abstract

1. Introduction

2. State of the Art

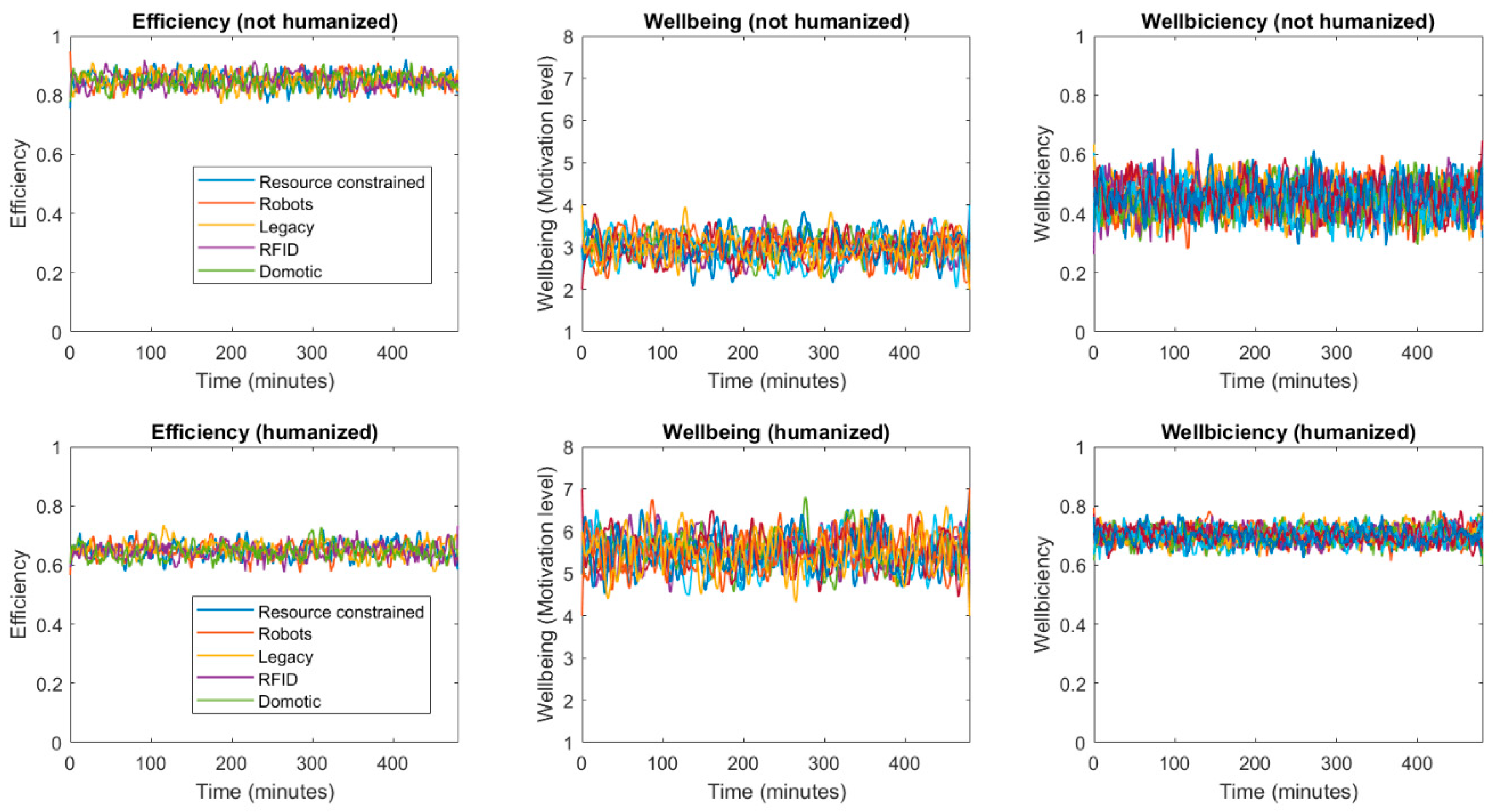

3. Wellbiciency: Humanizing Next-Generation Computing Solutions

3.1. Mathematical Formalization

| Algorithm 1. Mapping function for perceived wellbeing |
|---|
| Input: Realistic wellbeing |
| Output: Perceived wellbeing |
| Create a circular array of positions |
| Insert in |
| if all elements in are equal then |
| else |
| if is lower than then |
| end if |
| end if |
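The control flow of Algorithm 1 can be illustrated with a minimal sketch. The symbols and the branch bodies of the listing did not survive in this copy, so the code below only reproduces the recoverable skeleton: a circular array of fixed size, an insertion step, an all-equal test, and a "lower than" comparison. The class name `WellbeingHistory`, the returned branch labels, and the choice to compare the newest sample against the previous one are all assumptions made for illustration, not the authors' definitions.

```python
from collections import deque


class WellbeingHistory:
    """Fixed-size circular array of recent realistic-wellbeing samples.

    Hypothetical helper: the name and the returned labels are illustrative.
    """

    def __init__(self, positions: int) -> None:
        if positions < 1:
            raise ValueError("positions must be at least 1")
        # deque(maxlen=...) discards the oldest sample once the array is full,
        # which gives circular-array behaviour without manual index handling.
        self._samples = deque(maxlen=positions)

    def insert(self, realistic: float) -> str:
        """Insert a sample and report which branch of the listing would fire."""
        previous = self._samples[-1] if self._samples else None
        self._samples.append(realistic)

        # Branch 1: every stored sample has the same value.
        if all(s == self._samples[0] for s in self._samples):
            return "all_equal"

        # ASSUMPTION: the lost comparison is "newest sample < previous sample".
        # 'previous' is never None here, because a single stored sample always
        # takes the all-equal branch above.
        if realistic < previous:
            return "lower"
        return "not_lower"


history = WellbeingHistory(positions=3)
for value in (0.5, 0.5, 0.3, 0.4):
    print(value, history.insert(value))
```

What each branch assigns to the perceived wellbeing is deliberately left out, since that part of the listing is not recoverable from the text above.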

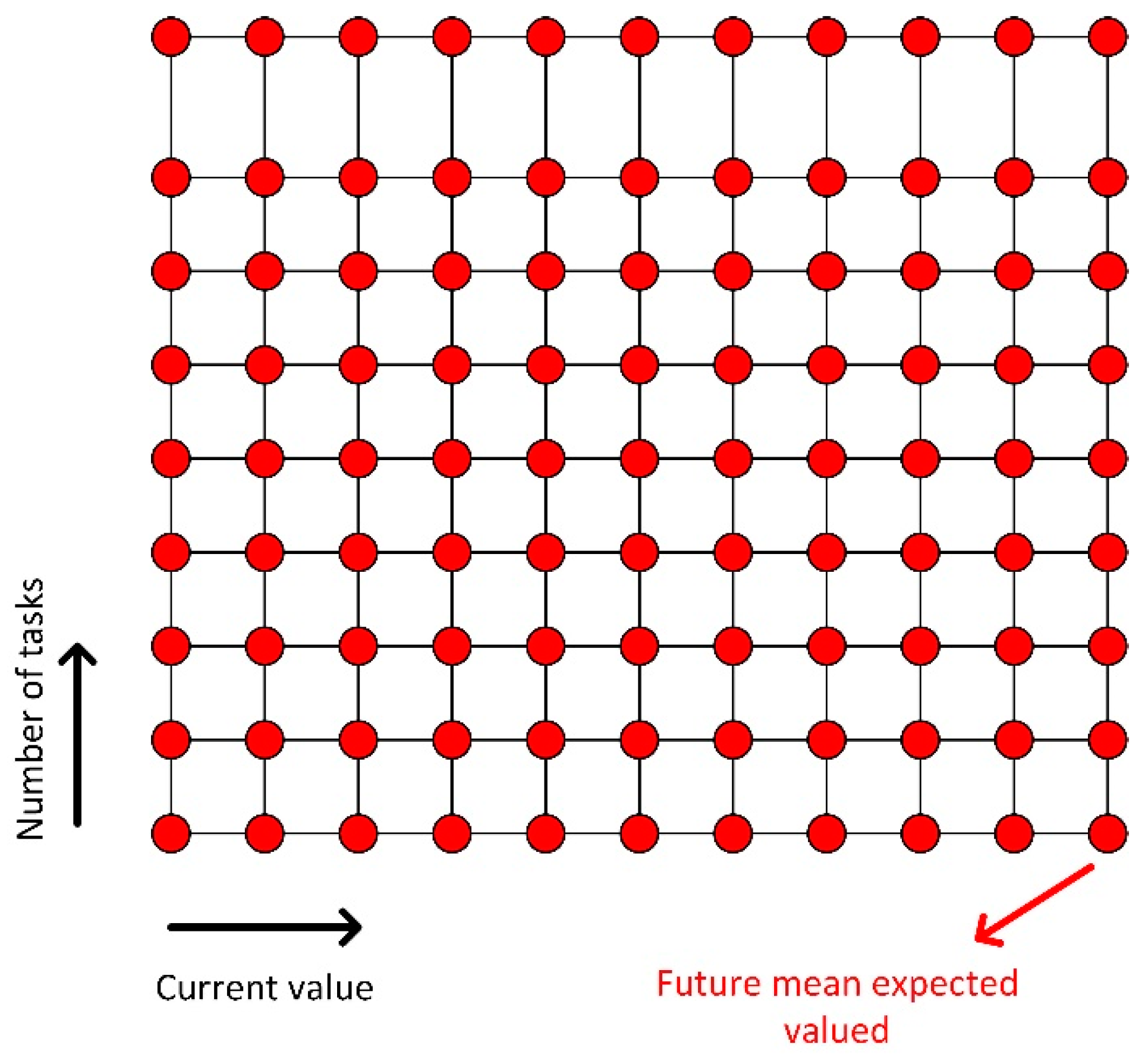

3.2. Proposed Algorithm

| Algorithm 2. Proposed process execution algorithm |
|---|
| Input: Collection of grids, |
| Current values of and |
| Output: Execution scheme |
| Future expected wellbiciency |
| Obtain vectors from grids. |
| for all combinations of do |
| Obtain |
| Obtain cost hypermatrix |
| Add to the indexes of |
| end for |
| is equal to the value of the last element in |
| Order the execution of |

4. Experimental Validation

5. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest


| Name | Description and Comments |
|---|---|
| Linear function. Arithmetic mean. | This function considers the same weight for all components, and small values in any component may be compensated by other indicators. |
| Rational function. Harmonic mean. | This function is greatly affected by small values, which are hardly compensated by high values in other indicators. |
| Polynomial function. Hölder mean. | This function considers the same weight for all components, and small values in any component may be compensated by other indicators. However, as the exponent of the mean goes up, this compensation process becomes more difficult. |
| Logarithm function. Geometric mean. | This function is affected in the same manner by high values and small values. Null values cannot be compensated for, but very high values may compensate for small measures in other indicators. |
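To make the compensation behaviour described in the table concrete, the following sketch implements the four means using their standard textbook definitions. The paper's own formulas are not available in this copy and may be parameterized differently, so the function names, the parameter `p`, and the sample indicator vector are assumptions introduced only for illustration.

```python
import math
from typing import Sequence


def arithmetic_mean(x: Sequence[float]) -> float:
    """Linear aggregation: every indicator carries the same weight."""
    return sum(x) / len(x)


def harmonic_mean(x: Sequence[float]) -> float:
    """Rational aggregation: requires strictly positive indicators."""
    return len(x) / sum(1.0 / v for v in x)


def holder_mean(x: Sequence[float], p: float) -> float:
    """Hoelder (power) mean with exponent p != 0, for positive indicators.

    p = 1 gives the arithmetic mean and p = -1 the harmonic mean.
    """
    if p == 0:
        raise ValueError("p must be non-zero; use geometric_mean instead")
    return (sum(v ** p for v in x) / len(x)) ** (1.0 / p)


def geometric_mean(x: Sequence[float]) -> float:
    """Logarithmic aggregation: a single null indicator forces a null result."""
    if any(v == 0 for v in x):
        return 0.0
    return math.exp(sum(math.log(v) for v in x) / len(x))


# Hypothetical indicator vector: two high values and one small value.
indicators = [0.9, 0.9, 0.1]
print("arithmetic:", arithmetic_mean(indicators))
print("geometric: ", geometric_mean(indicators))
print("harmonic:  ", harmonic_mean(indicators))
```

For any vector of positive indicators the harmonic mean never exceeds the geometric mean, which never exceeds the arithmetic mean, so the printed values show how strongly each function penalizes the single small indicator.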

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Bordel, B.; Alcarria, R.; Hernández, M.; Robles, T. People-as-a-Service Dilemma: Humanizing Computing Solutions in High-Efficiency Applications. Proceedings 2019, 31, 39. https://doi.org/10.3390/proceedings2019031039
