1. Introduction

Coastal ecosystems are complex, dynamic systems influenced by varying micro-climatic, biotic, and abiotic factors. For example, coastal habitat erosion and mobile dunes alter coastal communities and can facilitate the invasion of exotic species [1,2]. Coastal vegetation can act as a barrier against storm tides and reduce vulnerability to sea level rise [2,3]. Mapping coastal habitats is challenging with traditional mapping and ground surveying methods because of the complexity of the landforms and the dynamic micro-topographical features of the habitat [4]. Aerial photography is a popular, cost-effective method for obtaining and analyzing remotely sensed data and a useful tool for determining the characteristics of dune features remotely. Unmanned aerial vehicles (UAVs) have become a popular and cost-effective remote sensing technology, composed of aerial platforms capable of carrying small, lightweight sensors [5]. Discerning features from one another can be difficult when viewing an image with the naked eye, but remote sensing software allows the task to be accomplished with greater accuracy.

Lightweight UAVs carrying multispectral sensors are useful for data acquisition in diverse ecosystems and have the advantages of low cost, high spatial and temporal resolution, and minimal risk [6,7]. UAV imagery can yield a high degree of classification accuracy for heterogeneous landscapes, and has helped solve classification problems in the analysis of informal settlements with topographic features such as irregular buildings and vegetation on sloped terrain [8]. UAV-based hyperspectral images and digital surface models (DSMs) have been used to classify 13 species in a wetland area of Hong Kong [6], to estimate the percent cover of emergent vegetation in a wetland [9], and to discriminate invasive species within coastal dune vegetation [10]. Similar processes were integrated into this study, which used multispectral images taken from a UAV for classification analysis of a larger, more diverse study area.

The Ma-le’l Dunes are located at the upper end of the North Spit of Humboldt Bay and are home to a range of plant and animal species. A preliminary study that identified Ma-le’l Dune features in a selected plot of the UAV orthomosaic image provided the baseline information for this study [10]. That study compared the accuracy of feature extraction and supervised/unsupervised classifications for land-use classes in the dune habitat. Its accuracy assessments showed that the supervised classification had an accuracy of 50%, while feature extraction had an accuracy of 65%. However, the plot selected for the feature extraction captured only an area of the beach and foredunes, while the entire orthomosaic image shows the ocean, beach, foredunes, and middunes. Our goal for this study was to determine which analysis is most accurate in identifying dune features when performed on a larger, more diverse area. The study area was expanded to the entire orthomosaic plot, which is approximately 31 acres. We performed the same classification analyses as in the previous study on the orthomosaic image and on National Agriculture Imagery Program (NAIP) images of the dunes taken in 2012, 2014, and 2016. The methodologies used included remote sensing, geographic information systems, and cartography. The analysis contributes theoretically and empirically to ongoing research in the field of geospatial science.

2. Experiments

A DJI Mavic Pro UAV was flown over a 31-acre plot of the Ma-le’l Dunes in Humboldt County, California, at a height of about 80 m. Mission planning was conducted using the DJI Ground Station Pro application on a tablet. Two missions were flown over the same area: one in an east-west direction with the sensor pointed directly down, and the other in a north-south direction with the sensor pointed at a 15° upward angle. The images were georeferenced into a dense point cloud by estimating the camera positions and calculating elevation.

The UAV images were processed and ortho-mosaicked using Agisoft PhotoScan. The Align Photos Tool was used to find matching points between overlapping images, estimate the camera position of each photo, and build a sparse point cloud model. Markers were placed to optimize the camera position and orientation of the data. This was done by using the Build Mesh Workflow to reconstruct the geometry. Based on the estimated camera positions, the program calculates depth information for each camera, which is combined into a single dense point cloud with the Build Dense Cloud Workflow. A textured polygonal model was created from the dense point cloud to assist with precise marker placement. The Build DEM Workflow was then used to generate a digital elevation model (DEM). The coordinate system was set to WGS 84 UTM Zone 10N. The DEM was then used to build an orthomosaic with the pixel size set to 14 cm. The DEM and orthomosaic were exported as TIFF (.tif) files.

NAIP images from 2012, 2014, and 2016 were downloaded from the United States Geological Survey’s EarthExplorer and clipped to the orthomosaic extent. The four images (three NAIP images and the orthomosaic) were opened in ENVI (64-bit) and used in an Example-Based Feature Extraction Workflow, a Supervised Classification Workflow, and an Unsupervised Classification Workflow. Four classes were defined when classifying the three NAIP images: European beachgrass, shore pine, sand, and other vegetation. A fifth class for water was added when analyzing the orthomosaic image.

The Example-Based Feature Extraction Workflow Tool was used, and the entire image was set as the spatial subset. The object creation segment and merge settings were left at their defaults, and the segmentation and attribute computation process was run. After each image was selected in the workflow, examples of each feature were selected. These features included European beachgrass, shore pine, sand, and other vegetation. The fifth class, water, had to be added for the orthomosaic image because water appeared in the orthomosaic image but not in any of the three NAIP images. After examples had been selected for each feature, a raster was created showing the distribution of each feature class.

The Classification Workflow Tool was used to perform supervised and unsupervised classification on the images. For the supervised classification, approximately 15 training sites for each of the five classes were selected. Spectral Angle Mapper was selected as the classification method in the algorithm tab. The resulting classifications were then refined using the Cleanup Tool to smooth and aggregate the image. The final classified image and statistics were then exported as .img files. For the unsupervised classifications, the same steps were followed, except no training data was used.
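The Spectral Angle Mapper step can be illustrated with a short NumPy sketch. This is not ENVI's implementation; it only shows the underlying idea under stated assumptions: each class is represented by one reference spectrum (for example, the mean of its training sites), and each pixel is assigned to the class whose reference spectrum forms the smallest angle with the pixel's spectrum. The function name `spectral_angle_mapper` and the optional `max_angle` threshold are hypothetical names chosen for this example.

```python
import numpy as np

def spectral_angle_mapper(image, references, max_angle=None):
    """Assign each pixel to the reference spectrum with the smallest spectral angle.

    image:      array of shape (rows, cols, bands)
    references: array of shape (n_classes, bands), e.g. mean training spectra
    max_angle:  optional threshold in radians; pixels whose best angle exceeds
                it are labelled -1 (unclassified)
    """
    pixels = np.asarray(image, dtype=float).reshape(-1, image.shape[-1])
    refs = np.asarray(references, dtype=float)

    # Cosine of the angle between every pixel and every reference spectrum.
    pixel_norms = np.linalg.norm(pixels, axis=1, keepdims=True)
    ref_norms = np.linalg.norm(refs, axis=1, keepdims=True)
    denom = pixel_norms * ref_norms.T
    denom[denom == 0] = np.finfo(float).eps  # avoid division by zero
    cosines = np.clip(pixels @ refs.T / denom, -1.0, 1.0)

    angles = np.arccos(cosines)          # shape (n_pixels, n_classes)
    labels = np.argmin(angles, axis=1)   # class with the smallest angle
    if max_angle is not None:
        labels[angles.min(axis=1) > max_angle] = -1
    return labels.reshape(image.shape[:2])
```

Because the angle depends only on the direction of the spectrum and not on its magnitude, the method is relatively insensitive to overall brightness differences, which may matter on dunes where illumination varies with slope.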

The classified images were opened in ArcMap, and 100 stratified random points were created using the Spatial Analyst Tool: Create Accuracy Assessment Points. The Update Accuracy Assessment Points Tool was used to update the classification of each point to correspond with each of the classified images. The high resolution of each image and previous knowledge from site visits allowed the true class of each point to be identified visually on each image. The classified data and the true class data were then used to create accuracy assessment pivot tables in Excel. The pivot tables displayed how the assessment points were classified and how they compared to their true classes, and allowed the overall accuracy to be calculated. The final maps of the classified images were designed and exported using ArcMap and Adobe Illustrator. These maps were made by laying the NAIP or orthomosaic image under the classification raster and then setting the classification raster to 60% opacity.
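The pivot-table calculation described above can be sketched in Python as a confusion matrix. This is a minimal illustration, not the Excel workflow itself; the function names are hypothetical, and classes are assumed to be encoded as integers starting at 0. Rows hold the true (reference) class and columns hold the classified class.

```python
import numpy as np

def confusion_matrix(true_labels, classified_labels, n_classes):
    """Count assessment points by (true class, classified class)."""
    matrix = np.zeros((n_classes, n_classes), dtype=int)
    for true_cls, mapped_cls in zip(true_labels, classified_labels):
        matrix[true_cls, mapped_cls] += 1
    return matrix

def accuracy_summary(matrix):
    """Return overall, producer's, and user's accuracy from a confusion matrix."""
    matrix = np.asarray(matrix, dtype=float)
    correct = np.diag(matrix)
    overall = correct.sum() / matrix.sum()
    with np.errstate(divide="ignore", invalid="ignore"):
        # Producer's accuracy: correct points / all points truly in the class.
        producers = correct / matrix.sum(axis=1)
        # User's accuracy: correct points / all points mapped to the class.
        users = correct / matrix.sum(axis=0)
    return overall, producers, users
```

Overall accuracy is the share of assessment points on the diagonal; producer's and user's accuracy split that figure by class, which shows where omission and commission errors occur.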

3. Results

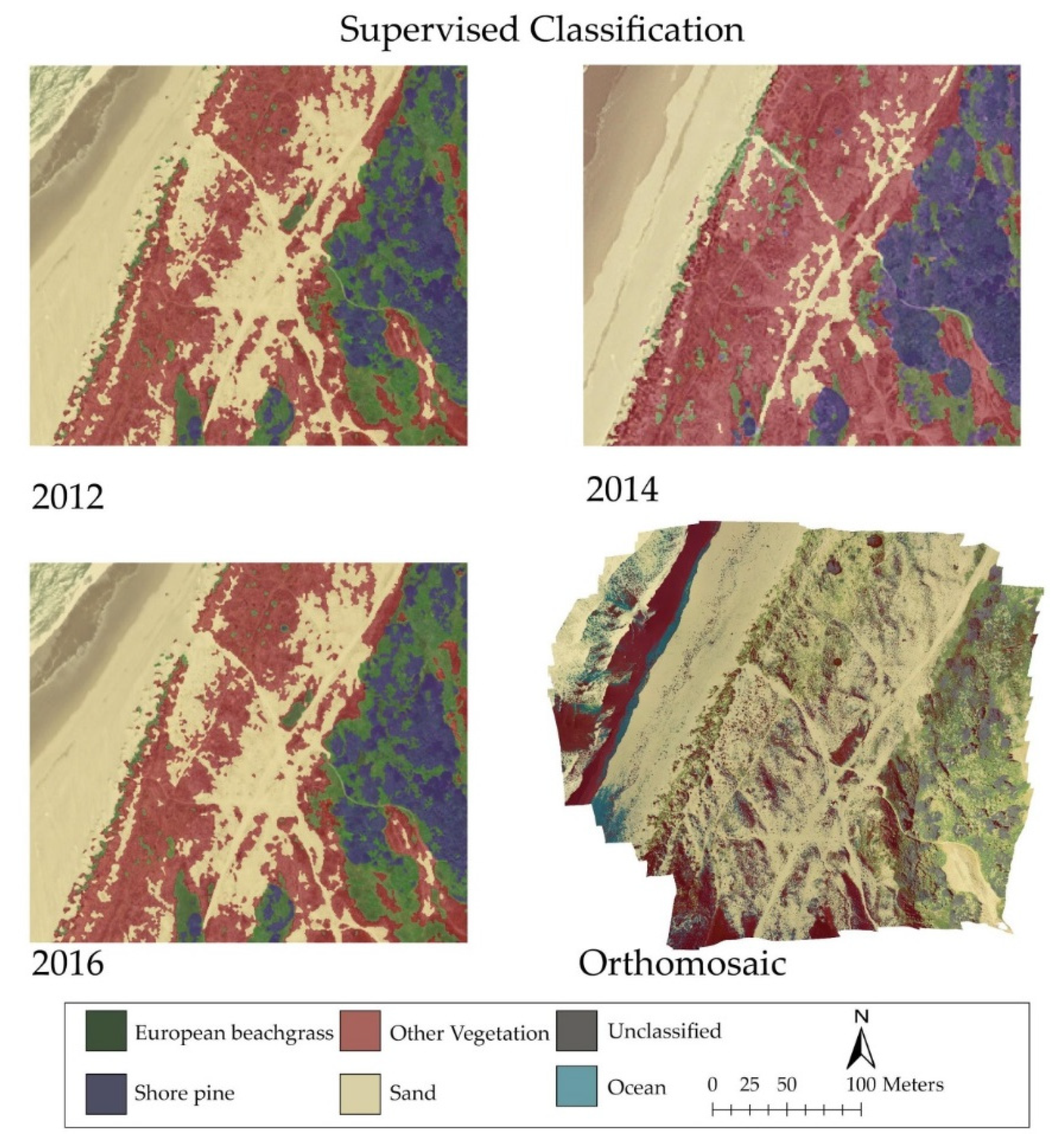

The results of the analysis and accuracy assessments showed that supervised classifications had the highest overall accuracy, followed by unsupervised classifications and then feature extraction (Figure 1, Figure 2 and Figure 3, Table 1), when classifying European beachgrass, shore pine, sand, and other vegetation. In addition, the NAIP imagery classifications yielded a higher overall accuracy than the orthomosaic image classifications across all three methods. The orthomosaic image showed higher topographical variation than the NAIP images, and its supervised and unsupervised classifications resulted in overlapping classes. However, feature extraction did not show a higher variation in image classification, and the accuracy assessment showed an accuracy of only 30% (Figure 3, Table 1).

The overall accuracy of the NAIP supervised classification images was ≥80% for each time step, and we therefore used those images for a time-series analysis of land-use/cover change for 2012–2014, 2014–2016, and 2012–2016 (Table 2). The coefficient of agreement (Kappa) for the classified images was 0.60 for all three time steps. Of the land-use/cover classes, sand, shore pine, and other vegetation showed a producer’s accuracy of ≥86%, while sand and shore pine showed a user’s accuracy of ≥87% between time steps (Table 2). The transition matrix between 2012 and 2014 showed that sand was mainly misclassified as other vegetation, and other vegetation as beach grass. In the 2014–2016 period, misclassification was high between other vegetation and sand, and between shore pine and beach grass. Finally, the entire time period (2012–2016) showed comparatively high misclassification between sand and other vegetation, and between other vegetation and beach grass (Table 2).
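For readers unfamiliar with the two quantities reported here, the following sketch shows how a transition matrix between two classified rasters and a Kappa coefficient can be computed. It assumes integer class codes starting at 0 and two rasters of identical shape; the function names are hypothetical, and the formula used is the standard Cohen's kappa, which may differ in detail from the software output reported in Table 2.

```python
import numpy as np

def cross_tabulate(earlier, later, n_classes):
    """Count pixels by (class in earlier raster, class in later raster)."""
    earlier = np.asarray(earlier).ravel()
    later = np.asarray(later).ravel()
    # Encode each (earlier, later) pair as a single index, then count.
    pair_index = earlier * n_classes + later
    counts = np.bincount(pair_index, minlength=n_classes ** 2)
    return counts.reshape(n_classes, n_classes)

def cohens_kappa(matrix):
    """Cohen's kappa: agreement beyond what is expected by chance."""
    matrix = np.asarray(matrix, dtype=float)
    total = matrix.sum()
    observed = np.trace(matrix) / total
    expected = (matrix.sum(axis=0) * matrix.sum(axis=1)).sum() / total ** 2
    return (observed - expected) / (1.0 - expected)
```

Off-diagonal cells of the cross-tabulation are the class-to-class transitions (or misclassifications) discussed above, and kappa summarizes the diagonal agreement after discounting chance.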

4. Discussion

The results of this analysis indicated that, when applying multispectral imagery to dune ecology, supervised classification is the most accurate method for assigning ecosystem features to separate classes. The resolution of the orthomosaic image was too high for the feature extraction to assign the correct class accurately. The same can be said for the unsupervised classification.

Increasing the number of training sites for the supervised classification and feature extraction could help improve accuracy in future research. Supervised classification worked well in this study, yielding moderate agreement (Kappa of 0.60) between the classified and reference images [11]. Additionally, using assessment points with an equalized stratified random distribution, which would place an equal number of points in each class, could give more insight into the classification accuracy of each method for each selected class. Classifications could also be improved by including topographic data with visual reflectance. Studies have shown that including such data can have a greater influence on classification accuracy than increasing resolution [12]. Images acquired through another source, such as kite aerial photography, could additionally be added and analyzed to observe different color composites.
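The equalized stratified random design suggested above can be sketched as follows. This is an illustration only, not the ArcMap tool used in this study: it assumes the classified image is available as a 2-D array of integer class codes, and the function name and the `seed` parameter are hypothetical.

```python
import numpy as np

def equalized_stratified_points(classified, points_per_class, seed=0):
    """Draw the same number of random pixel locations from every class.

    Returns a dict mapping class code -> list of (row, col) tuples. A class
    with fewer pixels than requested contributes all of its pixels.
    """
    rng = np.random.default_rng(seed)
    classified = np.asarray(classified)
    samples = {}
    for cls in np.unique(classified):
        rows, cols = np.nonzero(classified == cls)
        n = min(points_per_class, rows.size)
        chosen = rng.choice(rows.size, size=n, replace=False)
        samples[int(cls)] = list(zip(rows[chosen].tolist(), cols[chosen].tolist()))
    return samples
```

With a proportional design, small classes receive few points and their producer's and user's accuracies rest on very small samples; an equalized design trades that for an overall accuracy that must be area-weighted to be representative.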

Feature extraction is a valuable classification technique for meter and/or sub-meter resolution images, and our results for NAIP imagery showed moderate accuracy (40%–64%). The UAV orthomosaic image captured micro-topographic features, and its high spectral and spatial resolution (ca. 14 cm) makes it difficult to collect training sites. For example, a single pixel of a NAIP image corresponds to about 51 pixels of the orthomosaic image. Alternative methods, such as superpixel segmentation with the angular difference feature classification method [13], could be useful in yielding higher accuracy for the feature extraction technique. As Cortenbach [10] reported, feature extraction worked well on a small 0.72-acre subset of the study area, reaching 65% accuracy. The preliminary mapping at Ma-le’l Dunes with an orthomosaic image captured linear features such as social trails and coastal shorelines, and developed sea level rise and digital elevation models [10].
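The figure of 51 orthomosaic pixels per NAIP pixel follows from the ratio of pixel areas. The short check below assumes a NAIP ground sample distance of 1 m, which is not stated in the text and is used here only as a plausible value; the 14 cm orthomosaic pixel size is taken from the processing description. The function name is hypothetical.

```python
def fine_pixels_per_coarse_pixel(coarse_size_m, fine_size_m):
    """Number of fine-resolution pixels covering one coarse-resolution pixel."""
    # Pixel area scales with the square of the pixel edge length.
    return (coarse_size_m / fine_size_m) ** 2

# Assumed 1 m NAIP pixel versus the 14 cm orthomosaic pixel.
ratio = fine_pixels_per_coarse_pixel(1.0, 0.14)
print(round(ratio))  # 51
```

Under the same assumption, the linear ratio is about 7, so a feature one NAIP pixel wide spans roughly seven orthomosaic pixels in each direction.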

Unlike the UAV orthomosaic image, NAIP images have four bands, including visible and NIR, which can be used to create NDVI to discriminate vegetation from other features. As a supplement to the UAV, we attempted to use a kite carrying two cameras with RGB and NIR filters to derive NDVI [2,3]. However, due to low wind conditions we could not get the Picavet rig into the air, which was a limitation of this study.
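NDVI is computed per pixel as (NIR − red) / (NIR + red). The sketch below assumes the red and NIR bands have already been read into arrays of the same shape (for 4-band NAIP products these are commonly bands 1 and 4, but the band order should be checked for the file at hand); the function name `ndvi` is chosen for this example.

```python
import numpy as np

def ndvi(nir, red):
    """Normalized Difference Vegetation Index; NaN where NIR + red is zero."""
    nir = np.asarray(nir, dtype=float)
    red = np.asarray(red, dtype=float)
    total = nir + red
    result = np.full(total.shape, np.nan)
    # Divide only where the denominator is non-zero.
    np.divide(nir - red, total, out=result, where=total != 0)
    return result
```

Values range from -1 to 1. Dense green vegetation tends toward high positive values, while bare sand and water tend toward values near or below zero, which is why the index can help separate vegetation from the other dune classes.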

Managing the dune habitat requires an understanding of past land-use changes, anthropogenic activities (e.g., social trails), ongoing ecological alterations such as the invasion of exotic species, and natural environmental issues such as sea level rise and dune movement, so that better management plans can be made to conserve the dunes. UAV orthoimagery, with its high spatial and temporal resolution, is useful for periodically monitoring ongoing changes in order to implement best management practices that mitigate future climatic and/or anthropogenic impacts.

5. Conclusions

The Ma-le’l dune habitats are dynamic and fragile; this study examined how land-use/cover classes changed from 2012 to 2017 with high-resolution images using three classification techniques: supervised, unsupervised, and feature extraction. Of the classification techniques, supervised classification gave the highest overall accuracy for land-use classes (≥80%), with sand and shore pine classified well relative to the other classes. The UAV orthomosaic image captured high topographic variability, which produced numerous fragments and resulted in lower accuracy for the classifications. Therefore, future feature extractions from high-resolution orthomosaic images may require additional steps, such as texture analysis [10] and the angular difference feature classification method [13], to obtain better results. The study can be expanded to support the detailed mapping of European beach grass, a predominant invasive species in the dunes, using high-resolution orthoimagery.