A Deep Convolutional Neural Network to Detect the Existence of Geospatial Elements in High-Resolution Aerial Imagery

Abstract

1. Introduction

2. Material and Methodology

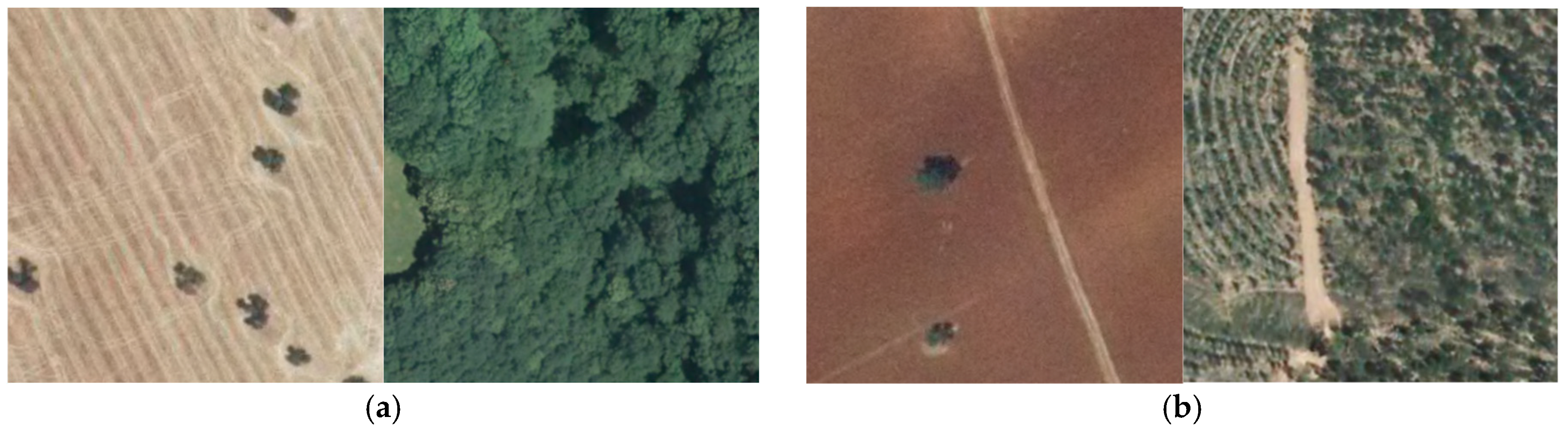

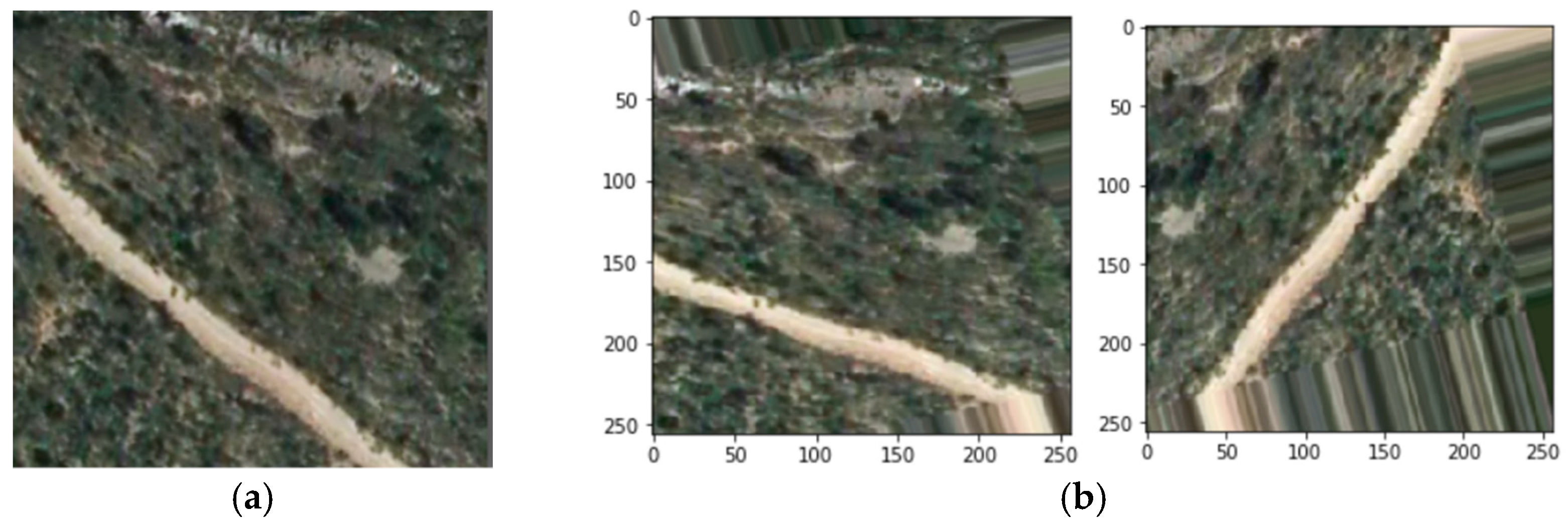

2.1. Data

2.2. Network’s Architecture

2.3. Network’s Training

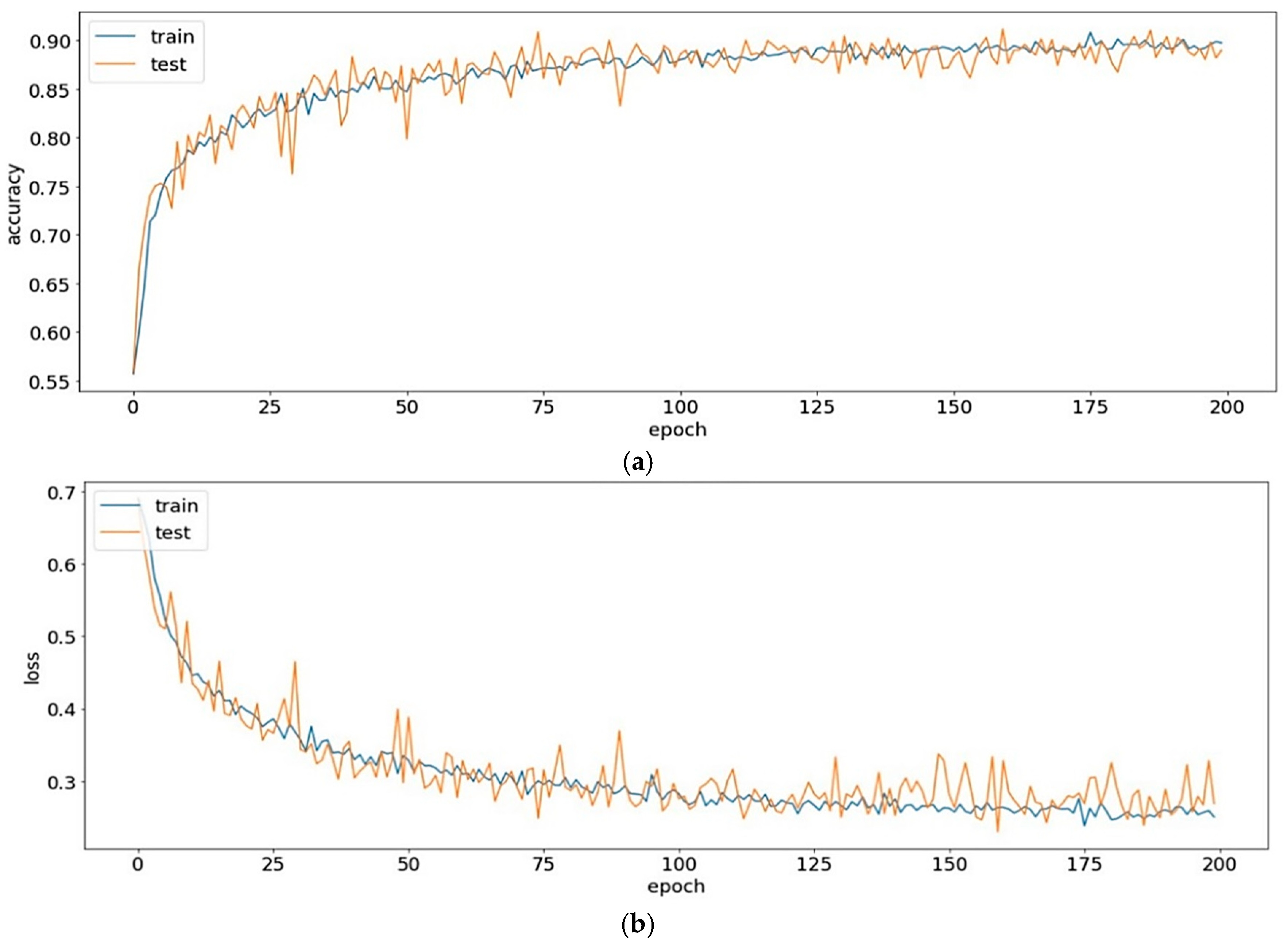

3. Results and Discussion

Funding

Acknowledgments

Conflicts of Interest

References

- Zhu, X.X.; Tuia, D.; Mou, L.; Xia, G.S.; Zhang, L.; Xu, F.; Fraundorfer, F. Deep learning in remote sensing: A review. IEEE Geosci. Remote Sens. Mag. 2017, 5, 8–36.

- Pritt, M.; Chern, G. Satellite Image Classification with Deep Learning. In Proceedings of the IEEE Applied Imagery Pattern Recognition Workshop (AIPR), Washington, DC, USA, 10–12 October 2017; pp. 1–7.

- Camps-Valls, G.; Tuia, D.; Bruzzone, L.; Benediktsson, A. Advances in hyperspectral image classification. IEEE Signal Process. Mag. 2014, 31, 45–54.

- Tuia, D.; Persello, C.; Bruzzone, L. Domain Adaptation for the Classification of Remote Sensing Data: An Overview of Recent Advances. IEEE Geosci. Remote Sens. Mag. 2016, 4, 41–57.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. Proc. ICLR. 2015. Available online: https://arxiv.org/pdf/1409.1556.pdf (accessed on 24 April 2019).

- Bughin, J.; Hazan, E.; Ramaswamy, S.; Chui, M.; Allas, T.; Dahlström, P.; Henke, P.; Trench, M. Artificial Intelligence the Next Digital Frontier? Discussion Paper; McKinsey & Company: New York, NY, USA, 2017.

- Albert, A.; Kaur, J.; González, M. Using Convolutional Networks and Satellite Imagery to Identify Patterns in Urban Environments at a Large Scale. In Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Halifax, NS, Canada, 13–17 August 2017.

- Hu, W.; Huang, Y.; Wei, L.; Zhang, F.; Li, H. Deep convolutional neural networks for hyperspectral image classification. J. Sens. 2015, 2015, 258619.

- Zhang, Z.; Liu, Q.; Wang, Y. Road Extraction by Deep Residual U-Net. IEEE Geosci. Remote Sens. Lett. 2017.

- Luque, B.; Morros, J.R.; Ruiz-Hidalgo, J. Spatio-Temporal Road Detection from Aerial Imagery using CNNs. In Proceedings of the International Conference on Computer Vision Theory and Applications, Porto, Portugal, 27 February 2017.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012.


© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cira, C.-I.; Alcarria, R.; Manso-Callejo, M.-Á.; Serradilla, F. A Deep Convolutional Neural Network to Detect the Existence of Geospatial Elements in High-Resolution Aerial Imagery. Proceedings 2019, 19, 17. https://doi.org/10.3390/proceedings2019019017
