Real-Time Efficiency Prediction in Nonlinear Fractional-Order Systems via Multimodal Fusion

Abstract

1. Introduction

- (1)

- First, we propose three base fusion-based prediction models for real-time efficiency prediction in nonlinear fractional-order partial differential systems: Asymptotic Cross-Fusion, Adaptive-Weight Late Fusion, and Two-Stage Feature Fusion.

- (2)

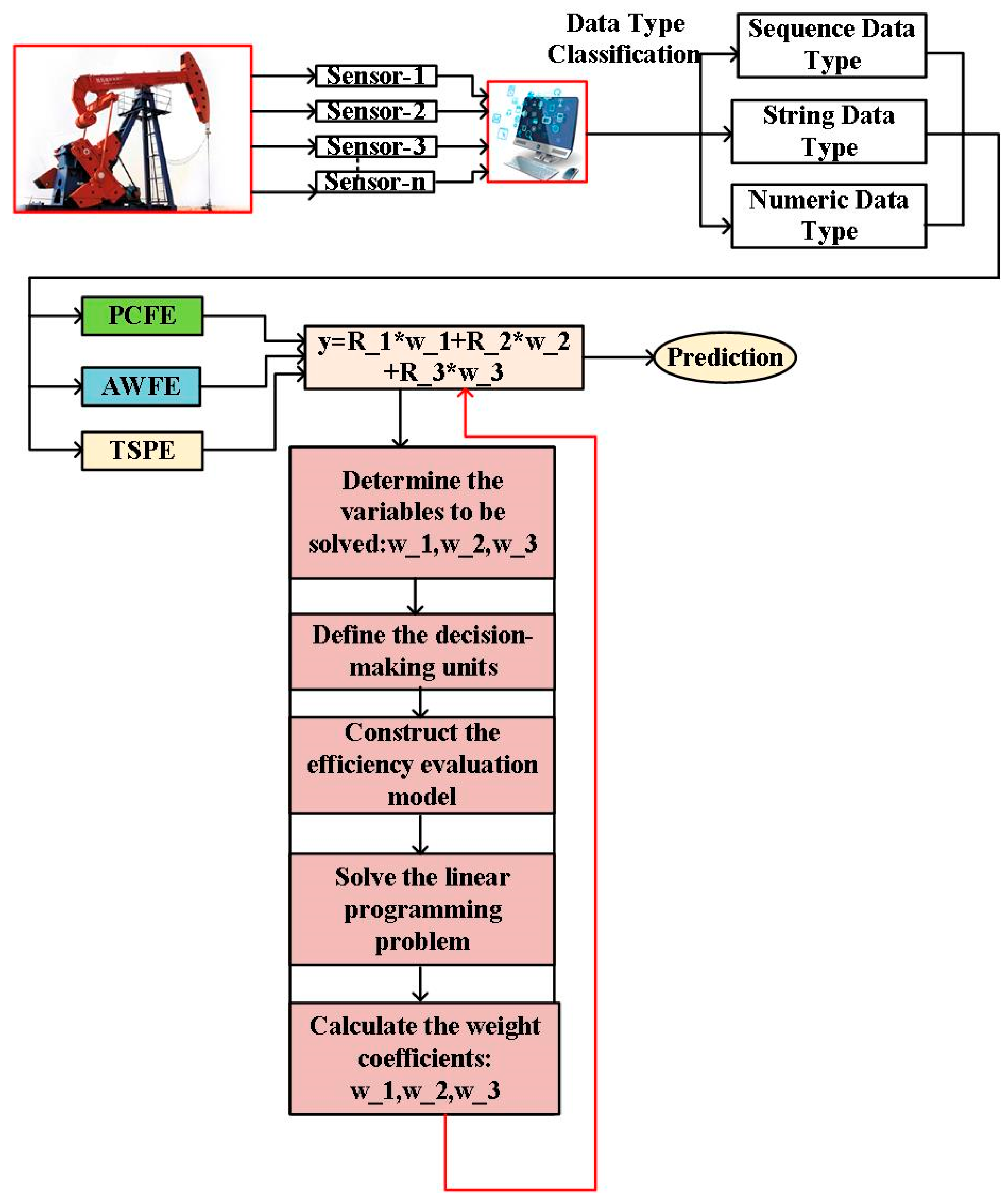

- Then, the development of two ensemble strategies integrating the base prediction models for real-time efficiency prediction in nonlinear fractional-order partial differential systems: a Parallel-Series Cascade strategy and a Data Envelopment Analysis strategy.

- (3)

- Finally, we introduce a multi-strategy ensemble prediction model for real-time efficiency prediction in nonlinear fractional-order partial differential equation systems.

2. Related Work

2.1. Mathematical Models

2.2. Prediction Models of System Efficiency Based on Historical Data

2.3. Multimodal Feature Fusion Networks

3. Basic Definitions

4. Methodology

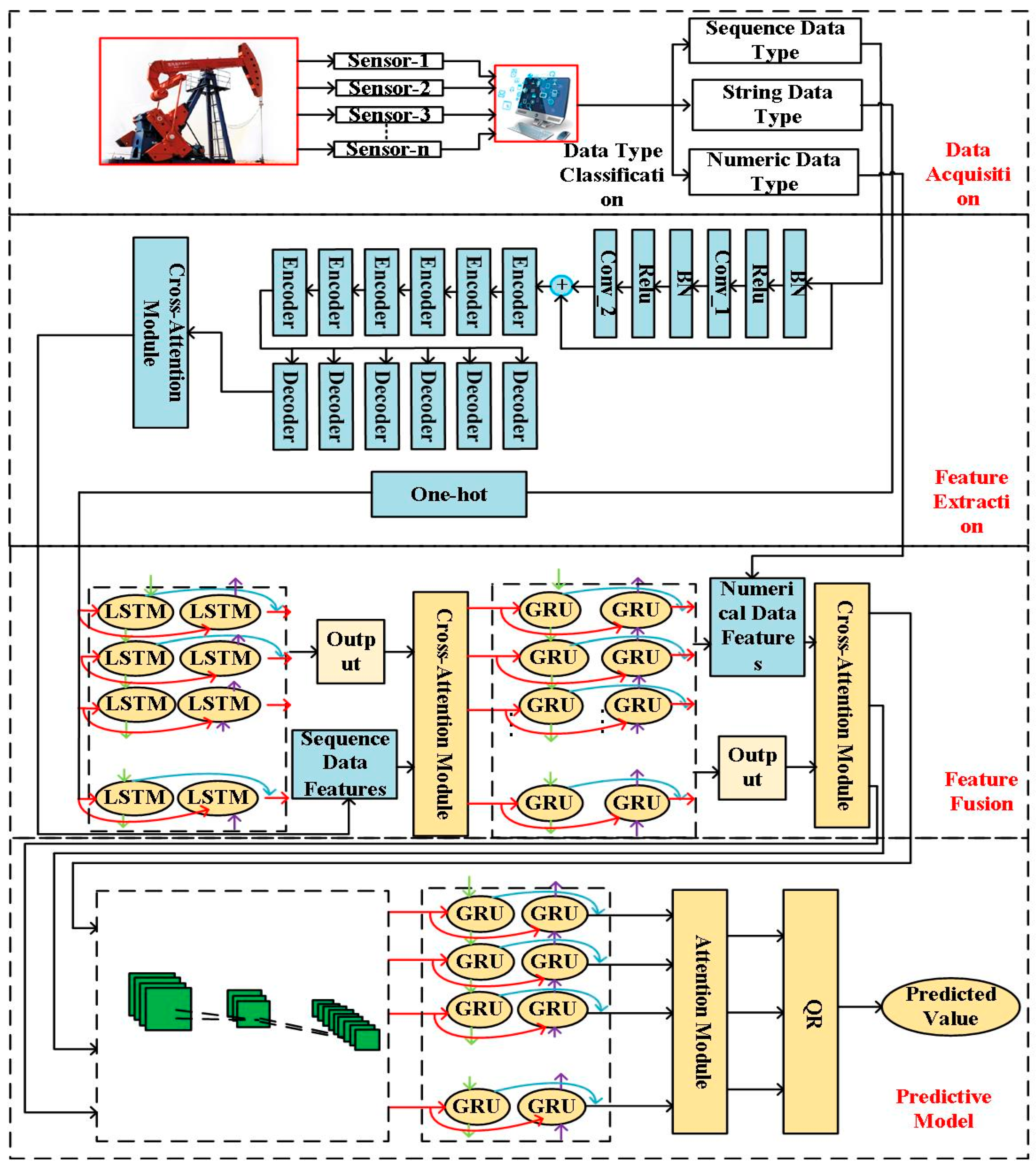

4.1. Prediction Models of Beam Pumping System Efficiency Based on Progressive Cross-Fusion

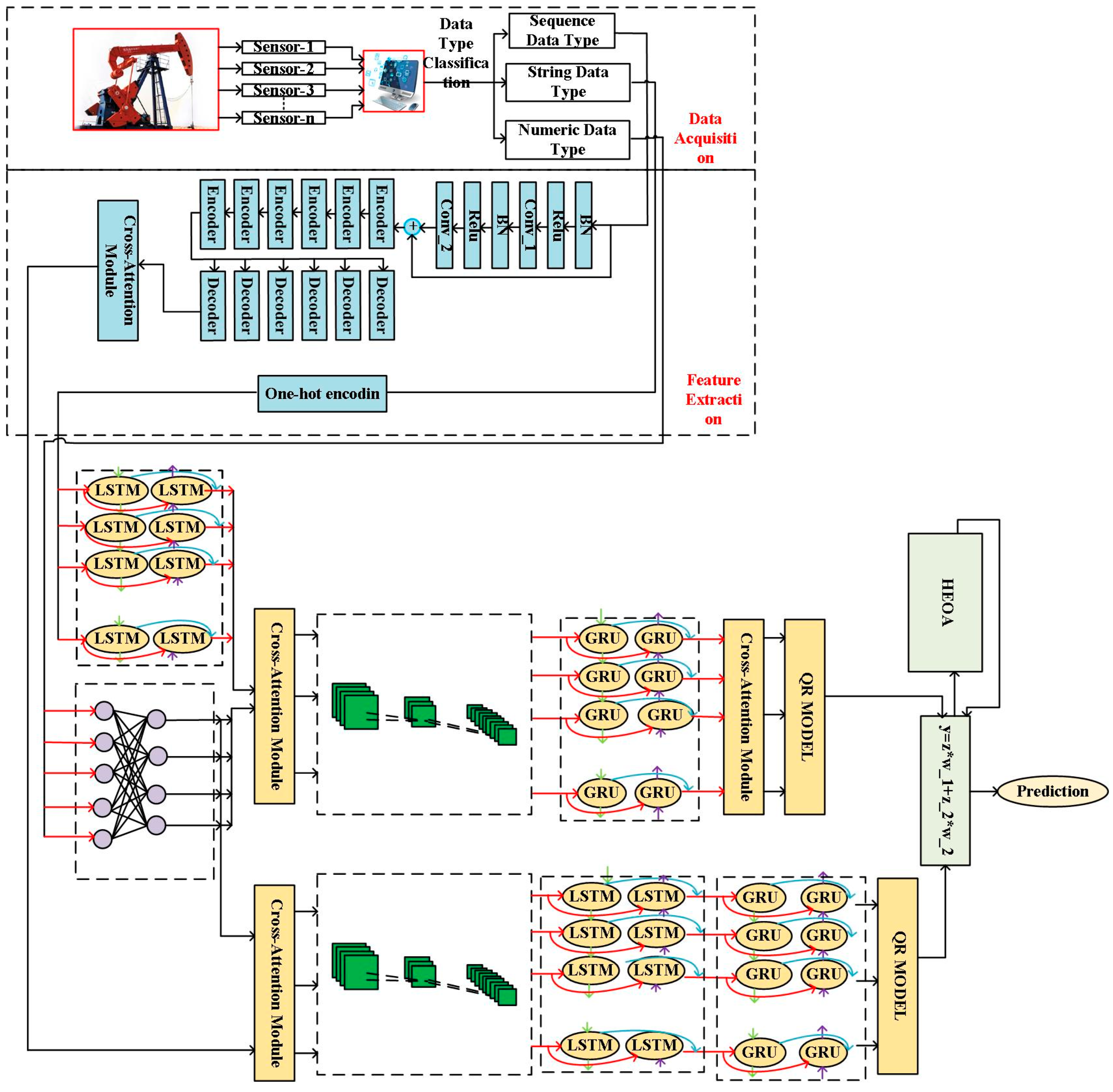

4.2. Prediction Models of Pumping Well System Efficiency Based on Adaptive-Weight Late Fusion

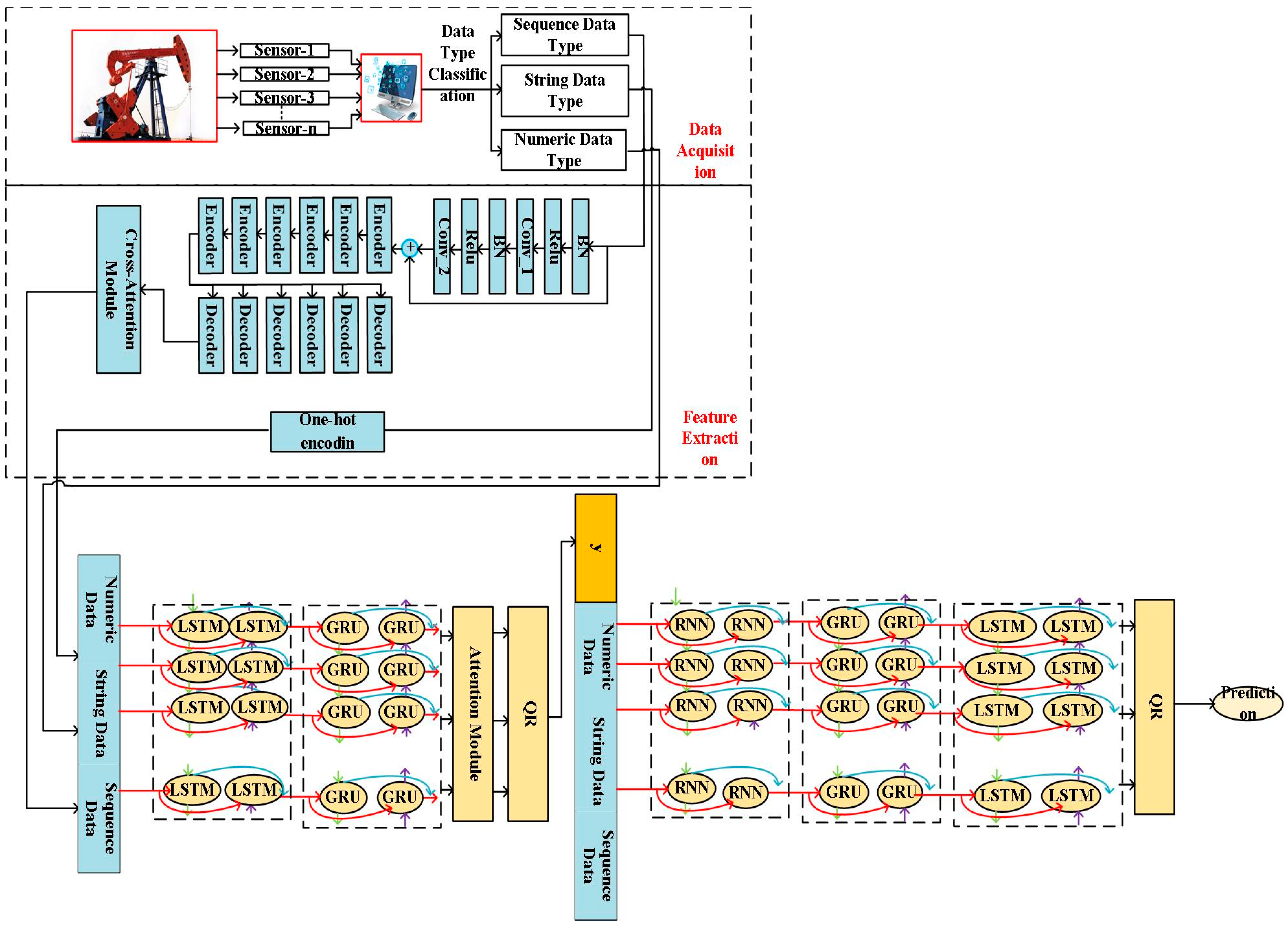

4.3. Prediction Models of Pumping Well System Efficiency Based on Two-Step Progressive Feature Fusion

4.4. Prediction Models of Pumping Well System Efficiency Based on the Parallel-Series Cascade Ensemble Strategy

4.5. Online Prediction Models of Pumping Well System Efficiency Based on the Data Envelopment Analysis Ensemble Strategy

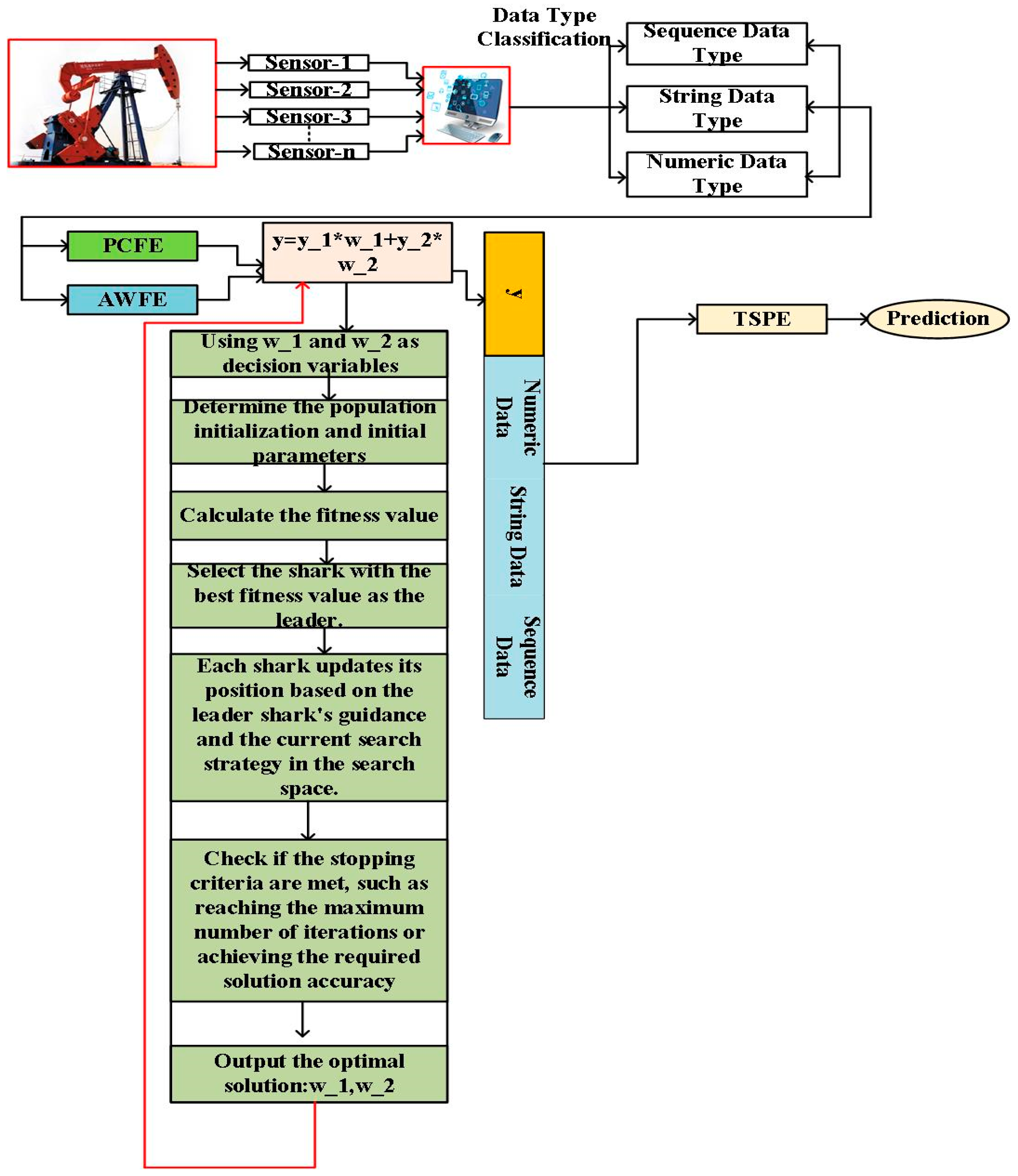

4.6. Online Prediction Models of Pumping Well System Efficiency Based on the Multi-Strategy Ensemble

5. Experiment

5.1. Data Description

5.2. Data Pre-Processing and Evaluation Indicators

5.3. Experimental Details

- (1) PCFE: To validate the accuracy of PCFE, the model was trained with a learning rate of 0.001, 500 training iterations, a batch size of 256, and the Adam optimizer. In the ResNet backbone, the convolutional kernels measured 7 × 7 with a stride of 2 and padding of 3. The Transformer module comprised eight attention heads with an embedding dimension of 8. Both the BiLSTM and BiGRU subnetworks consisted of two hidden layers of 25 units each. In the QRCNN–BiGRU–Attention model, the convolutional kernels measured 16 × 16 with a stride of 1 and no padding, the BiGRU component included two hidden layers of 12 units each, and the attention mechanism used four heads with an attention dimension of 24.

- (2) AWFE: To validate the accuracy of AWFE, the model was trained with a learning rate of 0.001, 100 training iterations, a batch size of 128, and the Adam optimizer. In the ResNet backbone, the convolutional kernels measured 7 × 7 with a stride of 2 and padding of 3. The Transformer module comprised eight attention heads with an embedding dimension of 8. At the data-alignment layer, the BiLSTM consisted of one hidden layer of five units. In the QRCNN–BiGRU–Cross-Attention model, the convolutional kernels measured 16 × 16 with a stride of 2 and padding of 1, the BiGRU featured one hidden layer of 12 units, and the Cross-Attention mechanism employed four attention heads with an embedding dimension of 24. In the QRCNN-BiLSTM-BiGRU variant, the convolutional kernels measured 16 × 16 with a stride of 1 and automatic padding, the BiLSTM comprised one hidden layer of 12 units, and the Cross-Attention module again used four heads with an embedding dimension of 24. Finally, the human evolutionary algorithm was configured with a population size of 50 and 1000 iterations.

- (3) TSPE: To validate the accuracy of TSPE, the model was trained with a learning rate of 0.001, 80 training iterations, a batch size of 128, and the Adam optimizer. The ResNet backbone used 7 × 7 convolutional kernels with a stride of 2 and padding of 3. The Transformer module contained eight attention heads with an embedding dimension of 8. In the QRBiLSTM–BiGRU–Attention model, both the BiLSTM and BiGRU subnetworks comprised two hidden layers of 20 units each. The QRBiRNN-BiGRU-BiLSTM variant featured a single hidden layer of 64 units in each subnetwork (BiRNN, BiLSTM, and BiGRU).

- (4) EPCI: To validate the accuracy of EPCI, the following hyperparameters were adopted: a learning rate of 0.001; 1000 training iterations for PCFE, 100 for AWFE, and 376 for TSPE; and a uniform batch size of 128. All other parameters remained fixed.

- (5) EDEA: To validate the accuracy of EDEA, a learning rate of 0.001 was adopted. Training iterations were set to 500 for PCFE, 100 for AWFE, and 100 for TSPE, with a uniform batch size of 128. In PCFE, both the BiLSTM and BiGRU modules contained two hidden layers of 32 units each. In AWFE, the BiLSTM module of the data-alignment layer used a single hidden layer of 10 units. All other hyperparameters remained unchanged.

- (6) MEIE: To validate the accuracy of the proposed multi-strategy ensemble prediction model for rod pump system efficiency, the final ensemble model (QRBiLSTM–BiGRU–Attention) was configured with a learning rate of 0.001, a batch size of 64, and 500 training iterations. The BiLSTM component contained two hidden layers of 64 units each, while the BiGRU component featured one hidden layer of 64 units. All other hyperparameters remained unchanged.
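The base-model settings listed above can be collected into a small configuration structure, which makes the differences between models easier to compare. The following is a minimal sketch, not the authors' code: the `TrainConfig` class, the `BASE_CONFIGS` dictionary, and the helper `conv_output_size` are hypothetical names, and the input size of 224 is an illustrative assumption. Only the numeric values for items (1) to (3) come from the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    """Training settings for one base model, as reported in items (1)-(3)."""
    learning_rate: float
    iterations: int
    batch_size: int
    optimizer: str = "Adam"


# Values transcribed from the list above; the dictionary layout is hypothetical.
BASE_CONFIGS = {
    "PCFE": TrainConfig(learning_rate=0.001, iterations=500, batch_size=256),
    "AWFE": TrainConfig(learning_rate=0.001, iterations=100, batch_size=128),
    "TSPE": TrainConfig(learning_rate=0.001, iterations=80, batch_size=128),
}


def conv_output_size(size: int, kernel: int, stride: int, padding: int) -> int:
    """Standard output length of a convolution along one spatial axis."""
    return (size + 2 * padding - kernel) // stride + 1


# A 7x7 kernel with stride 2 and padding 3 halves an even-sized input.
# The input size 224 is an assumption for illustration, not a value from the paper.
resnet_out = conv_output_size(224, kernel=7, stride=2, padding=3)
```

With these settings the first ResNet convolution halves the spatial resolution, whereas a 16 × 16 kernel with stride 1 and no padding shrinks each axis by 15 samples.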

5.4. Experimental Results and Analysis

6. Ablation Study

6.1. Ablation Study of the PCFE Prediction Models

6.2. Ablation Study of the AWFE Prediction Models

6.3. Ablation Study of the TSPE Prediction Models

6.4. Ablation Study of the EPCI Prediction Models

6.5. Ablation Study of the EDEA Prediction Models

6.6. Ablation Study of the MEIE Prediction Models

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Components | | Benefits |
|---|---|---|
| Feature Extraction | Residual Networks | By employing multi-layer convolutional stacks with shortcut connections, fine-grained local features across multiple receptive fields are efficiently extracted, while mitigating vanishing gradients and ensuring scalable network depth and performance. |
| | Transformer | This mechanism establishes long-range dependencies, enhancing the model’s perception of global semantic information and compensating for the limited receptive field of pure convolutional networks. |
| | Cross-Attention | Dynamic weighted fusion across features from different modalities or hierarchical levels enables alignment and complementarity of critical features, thereby enhancing the discriminative power of the feature representations. |
| Feature Fusion | BiLSTM | By capturing contextual information in both the forward and backward directions of the input sequence, it can more comprehensively model bidirectional dependencies. |
| | Cross-Attention | By computing attention weights across features from different branches or modalities, it enables the complementary alignment of critical features. |
| | BiGRU | By supporting bidirectional propagation, it preserves robust temporal modeling capability while enhancing training efficiency and generalization. |
| Predictive Model | CNN | It can efficiently capture local spatiotemporal dependencies and feature patterns. |
| | BiGRU | By aggregating information from both the forward and backward directions of the sequence, it fully captures bidirectional dependencies. |
| | Attention | It can establish direct dependencies between all positions in a sequence or feature set, thereby overcoming the limitations of recurrent and convolutional networks in capturing long-range information. |
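The Cross-Attention rows in the table above describe a weighted fusion in which features from one modality attend to features from another. A minimal single-head sketch in NumPy follows. It omits the learned query, key, and value projections that a trained model would use, and the feature shapes and variable names are assumptions for illustration rather than details from the paper.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax along the given axis."""
    shifted = x - x.max(axis=axis, keepdims=True)
    e = np.exp(shifted)
    return e / e.sum(axis=axis, keepdims=True)


def cross_attention(queries: np.ndarray, keys_values: np.ndarray):
    """Single-head scaled dot-product cross-attention without projections.

    queries:     (n_q, d) features of the modality being enriched
    keys_values: (n_k, d) features of the modality being attended to
    Returns the fused features (n_q, d) and the attention weights (n_q, n_k).
    """
    d = queries.shape[-1]
    scores = queries @ keys_values.T / np.sqrt(d)
    weights = softmax(scores, axis=-1)
    return weights @ keys_values, weights


# Hypothetical shapes: 5 sequence steps attend to 3 numeric feature tokens.
rng = np.random.default_rng(0)
seq_features = rng.normal(size=(5, 8))
num_features = rng.normal(size=(3, 8))
fused, attn = cross_attention(seq_features, num_features)
```

Each row of `attn` sums to one, so every fused vector is a convex combination of the attended features, which is what the table means by alignment of complementary features.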

| Components | | Benefits |
|---|---|---|
| Feature Extraction | Residual Networks | By employing multi-layer convolutional stacks with shortcut connections, fine-grained local features across multiple receptive fields are efficiently extracted, while mitigating vanishing gradients and ensuring scalable network depth and performance. |
| | Transformer | This mechanism establishes long-range dependencies, enhancing the model’s perception of global semantic information and compensating for the limited receptive field of pure convolutional networks. |
| | Cross-Attention | Dynamic weighted fusion across features from different modalities or hierarchical levels enables alignment and complementarity of critical features, thereby enhancing the discriminative power of the feature representations. |
| Predictive Model-1 | CNN | It can efficiently capture local spatiotemporal dependencies and feature patterns. |
| | BiGRU | By aggregating information from both the forward and backward directions of the sequence, it fully captures bidirectional dependencies. |
| | Cross-Attention | Dynamic weighted fusion across features from different modalities or hierarchical levels enables alignment and complementarity of critical features, thereby enhancing the discriminative power of the feature representations. |
| Predictive Model-2 | CNN | It can efficiently capture local spatiotemporal dependencies and feature patterns. |
| | BiGRU | By aggregating information from both the forward and backward directions of the sequence, it fully captures bidirectional dependencies. |
| | BiLSTM | By capturing contextual information in both the forward and backward directions of the input sequence, it can more comprehensively model bidirectional dependencies. |

| Components | | Benefits |
|---|---|---|
| Feature Extraction | Residual Networks | By employing multi-layer convolutional stacks with shortcut connections, fine-grained local features across multiple receptive fields are efficiently extracted, while mitigating vanishing gradients and ensuring scalable network depth and performance. |
| | Transformer | This mechanism establishes long-range dependencies, enhancing the model’s perception of global semantic information and compensating for the limited receptive field of pure convolutional networks. |
| | Cross-Attention | Dynamic weighted fusion across features from different modalities or hierarchical levels enables alignment and complementarity of critical features, thereby enhancing the discriminative power of the feature representations. |
| Predictive Model-1 | Attention | It can establish direct dependencies between all positions in a sequence or feature set, thereby overcoming the limitations of recurrent and convolutional networks in capturing long-range information. |
| | BiGRU | By aggregating information from both the forward and backward directions of the sequence, it fully captures bidirectional dependencies. |
| | BiLSTM | By capturing contextual information in both the forward and backward directions of the input sequence, it can more comprehensively model bidirectional dependencies. |
| Predictive Model-2 | BiRNN | By employing both forward and backward hidden states, it comprehensively captures contextual dependencies at both the beginning and end of the sequence. |
| | BiGRU | By aggregating information from both the forward and backward directions of the sequence, it fully captures bidirectional dependencies. |
| | BiLSTM | By capturing contextual information in both the forward and backward directions of the input sequence, it can more comprehensively model bidirectional dependencies. |

| Components | | Benefits |
|---|---|---|
| Models | PCFE | This model seamlessly integrates heterogeneous sensor data through specialized encoders, dynamically fuses multi-scale features via Cross-Attention, and delivers robust real-time efficiency predictions with uncertainty quantification using a GRU–attention–quantile regression pipeline. |
| | AWFE | This model combines specialized encoders for sequence, string, and numeric data with cross-modal attention fusion and ensemble GRU/LSTM predictors, topped by a quantile regression output to deliver robust, real-time efficiency predictions with uncertainty quantification. |
| | TSPE | By employing dedicated encoders for numeric, string, and sequence data with Cross-Attention fusion, and integrating dual-branch GRU/LSTM networks with quantile regression, this model achieves end-to-end multimodal feature fusion, real-time high-precision efficiency prediction, and uncertainty quantification. |
| Weight Optimization | GKSO | By mimicking sharks’ dynamic foraging strategies, GKSO effectively balances exploration and exploitation, reducing the risk of premature convergence to local optima. |
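All three base models in the table above end in a quantile regression output, which is what provides the uncertainty quantification. Quantile regression is usually trained with the pinball (quantile) loss. The sketch below shows that standard loss in NumPy; the function name and the sample efficiency values are illustrative assumptions, and the paper's exact loss formulation is not given in this section.

```python
import numpy as np


def pinball_loss(y_true, y_pred, tau: float) -> float:
    """Mean pinball loss for quantile level tau in (0, 1).

    Under-prediction (y_true > y_pred) is weighted by tau,
    over-prediction by (1 - tau).
    """
    diff = np.asarray(y_true, dtype=float) - np.asarray(y_pred, dtype=float)
    return float(np.mean(np.maximum(tau * diff, (tau - 1.0) * diff)))


# Hypothetical system-efficiency values in percent.
y_true = np.array([22.0, 25.0, 30.0])
y_pred = np.array([20.0, 26.0, 30.0])

loss_median = pinball_loss(y_true, y_pred, tau=0.5)  # half the mean absolute error
loss_upper = pinball_loss(y_true, y_pred, tau=0.9)   # penalizes under-prediction more
```

Training one output per quantile level (for example 0.1, 0.5, and 0.9) yields a prediction interval around the median estimate.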

| Components | | Benefits |
|---|---|---|
| Models | PCFE | This model seamlessly integrates heterogeneous sensor data through specialized encoders, dynamically fuses multi-scale features via Cross-Attention, and delivers robust real-time efficiency predictions with uncertainty quantification using a GRU–attention–quantile regression pipeline. |
| | AWFE | This model combines specialized encoders for sequence, string, and numeric data with cross-modal attention fusion and ensemble GRU/LSTM predictors, topped by a quantile regression output to deliver robust, real-time efficiency predictions with uncertainty quantification. |
| | TSPE | By employing dedicated encoders for numeric, string, and sequence data with Cross-Attention fusion, and integrating dual-branch GRU/LSTM networks with quantile regression, this model achieves end-to-end multimodal feature fusion, real-time high-precision efficiency prediction, and uncertainty quantification. |
| Weight Optimization | DEA | DEA derives weights directly from the data without assuming a specific functional form, allowing each decision-making unit to be evaluated against its own “best-practice” frontier. |

| Components | | Benefits |
|---|---|---|
| Models | EPCI | The model achieves high-precision, robust system efficiency prediction by adaptively calibrating fusion weights with the Shark Optimization Algorithm to perform weighted integration of two complementary base learners. |
| | EDEA | By leveraging Data Envelopment Analysis to optimally compute fusion weights for PCFE, AWFE, and TSPE, this model adaptively integrates three complementary predictors to achieve unbiased, high-accuracy system efficiency forecasts. |
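Both ensemble strategies in the table above reduce to combining base-model predictions with a set of fusion weights; they differ in how the weights are obtained. The sketch below shows only the combination step, assuming the weights are already available. The weight values and predictions are made up for illustration, and neither the optimizer-based nor the DEA-based weight computation is reproduced here.

```python
import numpy as np


def fuse_predictions(predictions, weights) -> np.ndarray:
    """Weighted average of base-model predictions.

    predictions: array-like of shape (n_models, n_samples)
    weights:     array-like of shape (n_models,), non-negative, not all zero
    Weights are normalized to sum to one before fusion.
    """
    preds = np.asarray(predictions, dtype=float)
    w = np.asarray(weights, dtype=float)
    if np.any(w < 0) or w.sum() == 0:
        raise ValueError("weights must be non-negative and not all zero")
    w = w / w.sum()
    return w @ preds


# Hypothetical efficiency predictions (percent) from PCFE, AWFE, and TSPE.
base_preds = [
    [20.0, 30.0],  # PCFE
    [22.0, 28.0],  # AWFE
    [24.0, 32.0],  # TSPE
]
fused = fuse_predictions(base_preds, weights=[2.0, 1.0, 1.0])
```

Normalizing the weights keeps the fused prediction within the range spanned by the base models for every sample.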

| Characteristics | Example | Characteristics | Example | Characteristics | Example |
|---|---|---|---|---|---|
| Rated power of the electric motor | 15 kW | Number of centralizers | 750 | Well inclination angle | 0.43, 0.43, 0.58, … |
| Motor no-load power | 0.57 kW | Pump diameter | 28 mm | Dogleg severity | 0, 0.15, 0.29, … |
| Motor rated efficiency | 88.5% | Stroke frequency | 3 min⁻¹ | Electrical power curve | 13, 13.2, 15.6, … |
| Pump setting depth | 2250 m | Number of rod string grades | 2 | Balancing method | Crank balance |
| Stroke length | 2 m | Equivalent diameter of rod string | 17.474 mm | Pumping unit model | CYJY14-4.8-73HB |
| Balance degree | 95% | Tubing specification | 62 mm | Relative density of natural gas | 0.6 |
| Saturation pressure | 5 MPa | Submergence depth | 0.8 m | Tubing pressure | 0.8 MPa |
| Well fluid density | 815 kg/m³ | Pump clearance grade | 1 | Gas–oil ratio | 25 |
| Well fluid viscosity | 5 mPa·s | Dynamic fluid level | 2250 m | Casing pressure | 0.8 MPa |
| System efficiency | 22.34% | Water cut | 35% | | |

| Methods | R² | |
|---|---|---|
| PCFE | 0.7961 ± 0.0076 | 1.8927 ± 0.0324 |
| AWFE | 0.7627 ± 0.0071 | 2.0835 ± 0.0258 |
| TSPE | 0.7693 ± 0.0085 | 2.0637 ± 0.0966 |
| EPCI | 0.8685 ± 0.00117 | 1.5490 ± 0.0221 |
| EDEA | 0.8581 ± 0.00114 | 1.7357 ± 0.0179 |
| MEIE | 0.9335 ± 0.00103 | 1.2293 ± 0.0073 |
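The first metric column in the table above is the coefficient of determination. As a reminder of how it is computed, here is a minimal NumPy sketch using the standard definition; the sample values are invented for illustration and are not taken from the paper's dataset.

```python
import numpy as np


def r2_score(y_true, y_pred) -> float:
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    ss_res = np.sum((y_true - y_pred) ** 2)         # residual sum of squares
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)  # total sum of squares
    return float(1.0 - ss_res / ss_tot)


# Hypothetical measured and predicted system efficiencies (percent).
measured = [20.0, 22.0, 24.0, 26.0]
predicted = [21.0, 22.0, 24.0, 25.0]
score = r2_score(measured, predicted)
```

A value closer to 1 means the predictions explain more of the variance in the measured efficiency, which is the sense in which the ensemble rows improve on the base models.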

| Model | | |
|---|---|---|
| PCFE | 0.7961 ± 0.0085 | 1.8927 ± 0.0329 |
| ResNet | 0.7564 ± 0.0119 | 2.0369 ± 0.0882 |
| ResNet–Transformer | 0.7746 ± 0.0101 | 1.9464 ± 0.0596 |
| QRCNN | 0.7552 ± 0.0155 | 2.0388 ± 0.1294 |
| QRCNN-BiGRU | 0.7734 ± 0.0103 | 1.9564 ± 0.0731 |

| Model | /% | /% |
|---|---|---|
| ResNet | 2.35 | 4.65 |
| ResNet–Transformer | 2.71 | 2.83 |
| QRCNN | 1.82 | 4.21 |
| QRCNN-BiGRU | 2.85 | 3.37 |

| Model | | |
|---|---|---|
| AWFE | 0.7923 ± 0.0072 | 1.8645 ± 0.0262 |
| ResNet–Transformer | 0.7756 ± 0.0106 | 1.9900 ± 0.0285 |
| QRCNN-GRU-1 | 0.7714 ± 0.0111 | 1.9904 ± 0.0294 |
| QRCNN-1 | 0.75234 ± 0.0146 | 2.1569 ± 0.03127 |
| QRGRU-1 | 0.7544 ± 0.0151 | 2.1369 ± 0.03016 |
| QRCNN-BiLSTM-2 | 0.7743 ± 0.0109 | 1.9901 ± 0.0291 |
| QRBiLSTM-BiGRU-2 | 0.7708 ± 0.0095 | 2.0835 ± 0.0233 |
| QRGRU-2 | 0.7633 ± 0.0146 | 2.1046 ± 0.0332 |

| Model | /% | /% |
|---|---|---|
| ResNet–Transformer | 2.11 | 6.73 |
| QRCNN-GRU-1 | 2.64 | 6.75 |
| QRCNN-1 | 2.47 | 8.37 |
| QRGRU-1 | 2.20 | 7.36 |
| QRCNN-BiLSTM-2 | 2.27 | 6.74 |
| QRBiLSTM-BiGRU-2 | 2.72 | 11.75 |
| QRGRU-2 | 0.97 | 1.01 |

| Model | | |
|---|---|---|
| TSPE | 0.8362 ± 0.0096 | 1.6590 ± 0.0928 |
| ResNet | 0.7485 ± 0.0226 | 2.3321 ± 0.1353 |
| ResNet–Transformer | 0.7693 ± 0.0224 | 2.0637 ± 0.1174 |
| QRBiLSTM-BiGRU-1 | 0.7819 ± 0.0221 | 1.9403 ± 0.1068 |
| QRBiRNN-BiGRU-2 | 0.7715 ± 0.0213 | 2.0224 ± 0.1047 |
| QRBiRNN-BiLSTM-2 | 0.7823 ± 0.0207 | 1.9263 ± 0.1141 |
| QRBiGRU-BiLSTM-2 | 0.7708 ± 0.0191 | 2.1210 ± 0.1219 |
| QRBiLSTM-1 | 0.7706 ± 0.0294 | 2.1222 ± 0.1359 |
| QRBiGRU-1 | 0.7708 ± 0.0327 | 2.0959 ± 0.1446 |
| QRBiRNN-2 | 0.7519 ± 0.0251 | 2.2361 ± 0.1422 |
| QRBiLSTM-2 | 0.7617 ± 0.0304 | 2.2094 ± 0.1863 |
| QRBiGRU-2 | 0.7507 ± 0.0294 | 2.2476 ± 0.1272 |

| Model | /% | /% |
|---|---|---|
| ResNet | 2.71 | 13.01 |
| ResNet–Transformer | 8.00 | 24.40 |
| QRBiLSTM-BiGRU-1 | 6.50 | 16.96 |
| QRBiRNN-BiGRU-2 | 7.74 | 21.90 |
| QRBiRNN-BiLSTM-2 | 6.45 | 16.11 |
| QRBiGRU-BiLSTM-2 | 7.82 | 27.85 |
| QRBiLSTM-1 | 1.45 | 9.38 |
| QRBiGRU-1 | 1.42 | 8.02 |
| QRBiRNN-2 | 2.50 | 10.57 |
| QRBiLSTM-2 | 3.89 | 14.70 |
| QRBiGRU-2 | 2.61 | 5.63 |

| Model | | |
|---|---|---|
| EPCI | 0.8685 ± 0.00119 | 1.5490 ± 0.0243 |
| PCFE | 0.7802 ± 0.00152 | 1.9058 ± 0.1106 |
| AWFE | 0.7849 ± 0.00150 | 1.9608 ± 0.1757 |
| TSPE | 0.7320 ± 0.0016 | 2.2094 ± 0.1033 |

| Model | /% | /% |
|---|---|---|
| PCFE | 10.17 | 23.03 |
| AWFE | 9.63 | 26.59 |
| TSPE | 15.72 | 42.63 |

| Model | | |
|---|---|---|
| EDEA | 0.8581 ± 0.00106 | 1.7357 ± 0.0196 |
| PCFE | 0.7664 ± 0.00164 | 1.9588 ± 0.1114 |
| AWFE | 0.7850 ± 0.00153 | 1.9387 ± 0.1801 |
| TSPE | 0.7566 ± 0.00158 | 2.1500 ± 0.1126 |

| Model | /% | /% |
|---|---|---|
| PCFE | 10.69 | 12.85 |
| AWFE | 8.52 | 11.70 |
| TSPE | 11.83 | 23.87 |

| Model | | |
|---|---|---|
| MEIE | 0.9130 ± 0.00103 | 1.3002 ± 0.0076 |
| EPCI | 0.8609 ± 0.00111 | 1.5742 ± 0.0238 |
| EDEA | 0.8581 ± 0.00109 | 1.7357 ± 0.0191 |

| Model | /% | /% |
|---|---|---|
| EPCI | 5.71 | 6.01 |
| EDEA | 21.07 | 33.50 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ma, B.; Dong, S. Real-Time Efficiency Prediction in Nonlinear Fractional-Order Systems via Multimodal Fusion. Fractal Fract. 2025, 9, 545. https://doi.org/10.3390/fractalfract9080545
