Abstract

This study proposes a novel hybrid model for stock volatility forecasting by integrating directional and temporal dependencies among financial time series and market regime changes into a unified modeling framework. Specifically, we design a novel Hurst Exponent Effective Transfer Entropy Graph Neural Network (H-ETE-GNN) model that captures directional and asymmetric interactions based on Effective Transfer Entropy (ETE), and incorporates regime change detection using the Hurst exponent to reflect evolving global market conditions. To assess the effectiveness of the proposed approach, we compared the forecast performance of the hybrid GNN model with GNN models constructed using Transfer Entropy (TE), Granger causality, and Pearson correlation—each representing different measures of causality and correlation among time series. The empirical analysis was based on daily price data of 10 major country-level ETFs over a 19-year period (2006–2024), collected via Yahoo Finance. Additionally, we implemented recurrent neural network (RNN)-based models such as Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) under the same experimental conditions to evaluate their performance relative to the GNN-based models. The effect of incorporating regime changes was further examined by comparing the model performance with and without Hurst-exponent-based detection. The experimental results demonstrated that the hybrid GNN-based approach effectively captured the structure of information flow between time series, leading to substantial improvements in the forecast performance for one-day-ahead realized volatility. Furthermore, incorporating regime change detection via the Hurst exponent enhanced the model’s adaptability to structural shifts in the market. 
This study highlights the potential of H-ETE-GNN in jointly modeling interactions between time series and market regimes, offering a promising direction for more accurate and robust volatility forecasting in complex financial environments.

1. Introduction

Volatility prediction in financial markets is essential for tasks such as portfolio optimization, risk management, and option pricing. Over the years, significant efforts have been made to develop reliable models that can accurately forecast market volatility. Traditionally, statistical time series models, such as Generalized Autoregressive Conditional Heteroskedasticity (GARCH), have predominantly been used for this purpose [1,2]. While GARCH models have proven effective in many contexts, recent advances have positioned deep learning algorithms at the forefront of volatility prediction [3]. In particular, Long Short-Term Memory (LSTM) models have gained prominence in the financial domain due to their ability to capture long-term dependencies in time series data [4,5]. Although deep learning models learn time series patterns effectively, integrating domain knowledge remains a critical gap, especially in financial markets, where non-stationarity and regime shifts are prevalent [6]. Models that are developed without a proper understanding of the specific characteristics of financial markets are at a higher risk of overfitting, which can undermine the generalizability of their predictive performance [7].

One of the primary challenges in volatility prediction in financial markets is regime changes, or shifts in market states. Markets experience alternating periods of economic growth and recession, each characterized by distinct volatility patterns. These changes can reduce the predictive power of models that do not consider regime changes. Consequently, various approaches that incorporate regime changes have been proposed. In particular, estimation of the time-varying Hurst exponent has received considerable attention as a way to capture long memory and structural changes in financial markets. To detect these changes, Melike and Özgür Ömer introduced a fractal-based GARCH model that accounts for long memory and volatility clustering [8]. Choi utilized the Hurst exponent through multifractal detrended fluctuation analysis (MFDFA) to assess dynamic market efficiency [9], and analyzed time-series variations in dynamic volatility spillovers to identify key points of market state transitions [10]. The Hurst exponent is a valuable tool for quantifying the long memory characteristics and autocorrelation structure of time series data, making it particularly effective in capturing the complex volatility patterns in financial markets [11]. In this context, Morales et al. proposed a dynamic Hurst exponent tool for monitoring unstable periods in financial time series [12]. Trinidad Segovia et al. employed the Hurst exponent via the fractal-based FD4 algorithm to detect volatility clusters [13]. Notably, the Hurst exponent can effectively detect non-stationarity and volatility clustering in markets, aiding in the identification of subtle patterns that traditional statistical models may overlook [14]. Eom et al. empirically investigated the relationship between market efficiency and the predictability of future price changes using the Hurst exponent [15]. 
Furthermore, the dynamic Hurst exponent, which evolves over time, can be used to track changes in financial market conditions in real-time, offering a more refined approach for detecting regime changes [16]. In these studies, wavelet-based estimators, parametric filtering techniques, and detrended fluctuation analysis (DFA/MFDFA) are frequently employed to estimate the time-varying Hurst exponent [17,18,19,20,21], each providing valuable insights into the dynamic structure of financial time series. According to the Fractal Market Hypothesis, the Hurst exponent, which reflects the multi-time scale characteristics of financial markets, plays a crucial role in identifying market instability and structural changes, thereby improving predictive accuracy across diverse market environments [22].

In addition to this perspective, recent literature on volatility modeling has proposed rough volatility models based on fractional Brownian motion (fBm), which suggest that the Hurst exponent is significantly smaller than 0.5 when estimated on log-transformed realized volatility series [23,24,25]. These models challenge the traditional interpretation of long memory and suggest that volatility is rough, exhibiting anti-persistent rather than persistent behavior. Our approach does not adopt the log-transformation of high-frequency realized volatility; instead, we compute the Hurst exponent of daily returns. This choice was motivated by empirical observations that the Hurst exponent of daily returns tends to fluctuate around 0.5, allowing the detection of regime shifts as the market transitions between persistent ($H > 0.5$) and anti-persistent ($H < 0.5$) dynamics. For instance, during turbulent periods such as 2020, H consistently falls below 0.5, aligning with a notable decrease in predictive performance. This suggests that the predictive difficulty associated with anti-persistent regimes, as also reported in the rough volatility literature [24], holds true in our empirical setting. While volatility estimates derived from daily returns are known to exhibit strong serial dependence, which may enhance predictability, we emphasize that this property is shared across all benchmark models used in our evaluation.

While regime changes describe temporal shifts, another important challenge in predicting volatility in financial time series is the structural interdependence among assets, influenced by various stakeholders. Stock prices are not determined in isolation; they are influenced by interactions with other stocks, exchange rates, and other economic factors [26]. The international financial market network is fully connected, strongly globalized, and intricately entangled, consisting of modular structures with similar efficiency dynamics [27]. Consequently, a model that simultaneously accounts for these financial stakeholders is essential, and various approaches have been proposed to address this issue. For example, Baltakys et al. proposed a graph neural network (GNN) approach to predict the trading behavior of socially connected investors, thereby enhancing market surveillance and detecting abnormal transactions [28]. Similarly, Chen and Robert utilized GNNs to model interactions between financial assets, which improves the accuracy of multivariate realized volatility forecasting [29]. Das et al. employed GNNs to model relationships among financial entities, integrating sentiment analysis to capture sentiment dynamics and structural dependencies [30]. Recent studies have increasingly adopted GNNs in financial forecasting, due to their ability to model complex relationships among financial entities. Unlike recurrent neural networks (RNN), GNNs reflect the relationships and structures between entities, making them a powerful tool for analyzing graph-structured data [31]. In particular, a Graph Convolutional Network (GCN), a prominent variation of GNNs, applies convolution operations to graph data to efficiently aggregate and propagate information from neighboring nodes [32]. 
These models are well suited for capturing relationships and interactions in complex network structures, making them particularly useful for analyzing multidimensional, interconnected data such as financial markets [33]. To effectively utilize GNN-based models, information about the interactions between entities is crucial, and various approaches have been developed to infer these relationships. Traditionally, statistical techniques and information theory have been used to measure correlation and causality. For example, Lu et al. presented a Pearson-correlation-based graph attention network that captures internal relationships between electroencephalography signal segments within brain regions to improve sleep stage classification [34]. Similarly, Jia and Benson proposed a framework that enhances GNN regression by modeling residual correlations using a parameterized multivariate Gaussian distribution, leading to improved prediction outcomes [35]. On the other hand, Wein et al. proposed a GNN-based causality network to infer causal relationships in brain networks, improving the accuracy of identifying directed dependencies between brain regions [36]. Zheng et al. developed a Granger causality-inspired graph neural network to model causal relationships in brain networks for interpretable psychiatric diagnosis [37]. Granger causality is a widely used technique for analyzing causality in time series data, assessing whether changes in one variable influence another [38]. However, this method can only determine the presence of information flow, and is limited when quantifying the magnitude or strength of the information transfer. To address this, research has been conducted using mutual information, based on information theory, to assess the quantity of information transferred. While mutual information is useful for measuring the dependence between two variables, it does not capture the directionality of the information flow. 
To overcome this limitation, Transfer Entropy (TE) was proposed; it is gaining attention as a metric that simultaneously estimates the direction and quantity of information transfer [39]. In line with this, Duan et al. presented a GNN that utilizes TE to capture causal relationships in multivariate time series, achieving superior forecasting performance across various real-world datasets [40]. However, although TE quantifies causal relationships by measuring changes in probability distributions, it suffers from finite sample effects and sensitivity to noise, which can degrade the accuracy of estimation [41]. To address these problems, Effective Transfer Entropy (ETE), which provides a more precise measurement of the direction and strength of information transfer, has been introduced [42].

This study proposes a novel model that simultaneously considers the influence of multiple stakeholders, while adapting to regime changes by retraining the graph structure based on the Hurst exponent. First, the model quantifies information flows among ten country exchange-traded funds (ETFs) using effective transfer entropy. Second, it constructs a graph convolutional network utilizing ETE-based graphs to forecast one-day-ahead realized volatility. Third, the model detects regime changes through the Hurst exponent and dynamically retrains the graph when a regime change is identified. In comparison to prior works that relied on linear causality measures such as Granger causality or Pearson correlation, our approach captures nonlinear and directional causality more effectively through the use of ETE. Furthermore, we introduce a novel regime adaptation strategy that leverages the Hurst exponent to avoid unnecessary retraining during stable market trends, and focus updates only when structural changes are detected. The key contributions of this study are threefold. First, we develop a GNN-based forecasting framework that effectively captures nonlinear inter-asset dependencies by constructing adjacency matrices using ETE. Second, we propose a dynamic graph updating mechanism using the Hurst exponent, enabling the model to adapt to long-term memory dynamics and market regime shifts. Third, we empirically demonstrate that our hybrid model significantly outperformed various benchmarks, including GNNs using alternative causality measures and RNN-based models, in forecasting volatility over 19 years of ETF data. These results have practical implications for portfolio managers and risk analysts, as more accurate volatility forecasts can enhance asset allocation, hedging strategies, and option pricing. 
Theoretically, our framework advances the understanding of financial markets by integrating GNNs with nonlinear causality measures and a regime-adaptive mechanism based on the Hurst exponent, which captures varying memory characteristics across different market phases. The novelty of this study lies in being the first to integrate ETE-based graphs to capture collective dynamics among assets, while simultaneously accounting for regime changes using the Hurst exponent. Empirical results indicate that the proposed hybrid model improved the accuracy of realized volatility forecasts. These findings can contribute to a deeper understanding of fractal dynamics in financial markets, offering valuable insights for financial stakeholders.

The remainder of this paper is structured as follows. Section 2 outlines the foundations of the ETE, the causality graph neural network, and the Hurst exponent. Section 3 details the experimental approach and provides a statistical analysis of the financial time-series data. Section 4 presents a comprehensive analysis of forecasting the realized volatility of ETFs. Section 5 interprets the findings and offers conclusions.

2. Methods

In order to forecast stock volatility more accurately, it is essential to consider the relationships between stocks and account for global regime changes. The ETE method was chosen because it effectively captures the directional causality among stocks, which is crucial for constructing a causality network that reflects the dynamic interconnections between different stocks [42]. GNNs can leverage the graph structure of the causality network to incorporate the relationships between different stocks into volatility forecasting [32]. Furthermore, the global stock market experiences episodic regime changes, which can be captured using the Hurst exponent [19]. The data included the daily closing prices of ten national ETFs, collected via Yahoo Finance. Log-returns were calculated, and the Hurst exponent was determined using a sliding window approach for global regime detection.

2.1. Effective Transfer Entropy

To understand ETE, it is essential to begin with the key concept of Mutual Information (MI) from information theory. MI is a measure that quantifies the statistical dependence between two random variables. Specifically, the MI between random variables X and Y, denoted as $I(X;Y)$, is defined as shown in Equation (1).

$$I(X;Y) = H(X) - H(X \mid Y) \tag{1}$$

where $H(X)$ denotes the Shannon entropy of the random variable X, measuring the uncertainty of the probability distribution [43]. The conditional entropy $H(X \mid Y)$ represents the uncertainty of X given Y.
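As a small illustration of Equation (1), the following sketch estimates entropy and MI from discrete symbol sequences using empirical frequencies. The function names and the toy sequences are illustrative assumptions, and base-2 logarithms (bits) are chosen here for convenience.

```python
import math
from collections import Counter


def entropy(symbols):
    """Shannon entropy H(X) in bits of a sequence of discrete symbols."""
    n = len(symbols)
    return -sum((c / n) * math.log2(c / n) for c in Counter(symbols).values())


def conditional_entropy(x, y):
    """H(X|Y) computed as H(X, Y) - H(Y)."""
    return entropy(list(zip(x, y))) - entropy(y)


def mutual_information(x, y):
    """Equation (1): I(X; Y) = H(X) - H(X|Y)."""
    return entropy(x) - conditional_entropy(x, y)


# Toy sequences (illustrative only).
x = [0, 0, 1, 1, 2, 2, 0, 1]
y = [0, 1, 1, 1, 2, 0, 0, 1]
mi_self = mutual_information(x, x)               # equals H(X)
mi_const = mutual_information(x, [0] * len(x))   # a constant Y carries no information
mi_xy = mutual_information(x, y)
mi_yx = mutual_information(y, x)                 # same value as mi_xy: MI has no direction
```

The equality of `mi_xy` and `mi_yx` shows why MI alone cannot indicate which series drives the other.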

This concept of MI is extended to measure the causal relationship between time series, resulting in TE. TE measures the flow of information between two time series X and Y, quantifying the extent to which past values of Y contribute to predicting future values of X, and is defined as shown in Equation (2).

$$TE_{Y \to X}(k, l) = \sum_{i} p\left(x_{t+1}, x_t^{(k)}, y_t^{(l)}\right) \log \frac{p\left(x_{t+1} \mid x_t^{(k)}, y_t^{(l)}\right)}{p\left(x_{t+1} \mid x_t^{(k)}\right)} \tag{2}$$

where $x_t^{(k)}$ and $y_t^{(l)}$ represent the past k and l values of the time series X and Y, respectively. The conditional probability distribution $p(x_{t+1} \mid x_t^{(k)}, y_t^{(l)})$ is used to measure how much better the probability of $x_{t+1}$ can be explained by incorporating the past information of Y along with that of X. Here, the sum is calculated over all possible joint combinations of $(x_{t+1}, x_t^{(k)}, y_t^{(l)})$, with i denoting the index for these joint combinations. In this study, following the approach in a previous work [44], we set the lag parameters k and l to 1, reflecting both theoretical and practical considerations. Theoretically, prior studies noted that stock markets in different time zones may experience delayed information transmission, and incorporating a one-day lag can better capture such cross-market dynamics. Practically, higher lag values exponentially increase the dimensionality of the conditional probability space, leading to greater computational complexity and estimation instability, especially in financial time series with limited data. As a result, TE is simplified as shown in Equation (3).

$$TE_{Y \to X} = \sum_{i} p\left(x_{t+1}, x_t, y_t\right) \log \frac{p\left(x_{t+1} \mid x_t, y_t\right)}{p\left(x_{t+1} \mid x_t\right)} \tag{3}$$

TE is calculated by estimating the conditional probability distribution between the past and future information of a time series. However, financial time series often contain high-frequency noise or extreme values, making it difficult to accurately estimate the continuous distribution. To address this issue, this study applied the Discretized Transfer Entropy method, which estimates the probability mass function through a discretization process [42]. Specifically, the time series segment for which TE is to be calculated is divided into proper intervals based on the maximum and minimum values, and the frequency of data points falling into each interval is measured to construct the probability mass function. Additionally, by constructing the joint probability distribution of the two time series in the same manner, all the conditional probabilities required for TE calculation can be estimated. This approach is useful for more reliably estimating the information flow in financial time series that include nonlinearity and noise. In this study, the number of intervals used for discretization was set to 3, following the approach in a previous study [42], where a ternary partition was adopted to represent the three fundamental types of price movements: significant gain, neutral or stable return, and significant loss. This discretization scheme provides an interpretable symbolic representation of market dynamics, while maintaining computational tractability in the estimation of Transfer Entropy [45].
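The discretization and frequency-counting steps described above can be sketched as follows. This is a minimal plug-in estimator under stated assumptions: equal-width bins between the minimum and maximum, lags k = l = 1, and base-2 logarithms. The function names and the toy data are hypothetical and do not come from the original implementation.

```python
import math
import random
from collections import Counter


def discretize(series, n_bins=3):
    """Map each value to an equal-width bin index between the series min and max."""
    lo, hi = min(series), max(series)
    if hi == lo:
        return [0] * len(series)
    width = (hi - lo) / n_bins
    # The maximum value is clipped into the last bin.
    return [min(int((v - lo) / width), n_bins - 1) for v in series]


def transfer_entropy(source, target, n_bins=3):
    """Plug-in estimate of TE from source to target with lag 1, in bits."""
    x = discretize(target, n_bins)
    y = discretize(source, n_bins)
    n = len(x) - 1
    triples = Counter(zip(x[1:], x[:-1], y[:-1]))   # (x_{t+1}, x_t, y_t)
    pairs_xy = Counter(zip(x[:-1], y[:-1]))         # (x_t, y_t)
    pairs_xx = Counter(zip(x[1:], x[:-1]))          # (x_{t+1}, x_t)
    singles = Counter(x[:-1])                       # x_t
    te = 0.0
    for (x_next, x_now, y_now), count in triples.items():
        p_joint = count / n
        p_given_xy = count / pairs_xy[(x_now, y_now)]
        p_given_x = pairs_xx[(x_next, x_now)] / singles[x_now]
        te += p_joint * math.log2(p_given_xy / p_given_x)
    return te


# Toy check: x copies y with a one-step delay, so information flows from y to x.
rng = random.Random(0)
y = [rng.random() for _ in range(2000)]
x = [0.5] + y[:-1]
te_y_to_x = transfer_entropy(source=y, target=x)
te_x_to_y = transfer_entropy(source=x, target=y)
```

In this toy setting `te_y_to_x` is large while `te_x_to_y` stays close to zero, which is the asymmetry the measure is meant to expose.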

On the other hand, while TE effectively captures nonlinear and asymmetric dependencies between time series, its application to real data may lead to overestimation or distorted interpretations, due to finite sample effects and the influence of noise. To address this, Marschinski and Kantz introduced the concept of ETE [42]. ETE is defined as shown in Equation (4), where the average TE value, calculated using randomized transfer entropy (RTE), is subtracted from the original TE in order to enhance the statistical significance of the TE value. The value of RTE is interpreted as a significance threshold, serving as a baseline to distinguish genuine information transfer from noise. To assess the statistical significance of the ETE value, we compute the Z-score based on the distribution of RTE, as shown in Equation (5). The Z-score measures how far the observed TE is from the mean of the shuffled TE values, normalized by their standard deviation. Only ETE values whose Z-score exceeds a preset threshold are considered statistically significant.

$$ETE_{Y \to X} = TE_{Y \to X} - \frac{1}{m} \sum_{r=1}^{m} TE_{Y_{\mathrm{shuffled}}^{(r)} \to X} \tag{4}$$

$$Z = \frac{TE_{Y \to X} - \mu_{RTE}}{\sigma_{RTE}} \tag{5}$$

where $\mu_{RTE}$ and $\sigma_{RTE}$ denote the mean and standard deviation of the transfer entropy values computed from m independently shuffled versions of Y to X. Shuffling removes the temporal structure of Y while preserving its marginal distribution, thus destroying the causal relationship between the variables [46]. The Z-score filtering step mitigates the risk of overestimating spurious causality due to noise or finite sample effects, ensuring that only statistically robust information flows are preserved in the final ETE matrix. Therefore, ETE adjusts the TE value to exclude information flows arising from mere chance or noise, reflecting only statistically significant information transmission. By considering both the direction and strength of information flow, while providing statistical control over randomness, ETE serves as a useful tool for financial time series analysis. As suggested by Marschinski and Kantz, multiple shuffle simulations are commonly used to stabilize the bias estimate [42]. While no fixed optimal number of shuffles is prescribed, several studies have used between 25 and 100 or more simulations [45,47,48]. In this study, the number of shuffle simulations was treated as a hyperparameter and hyperparameter tuning was conducted to identify the most effective value.
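The shuffle-based correction and the Z-score filter can be sketched as below. The code repeats a compact lag-1 TE estimator so that it runs on its own. The cut-off `z_min=2.0`, the default of 50 shuffles, and the use of the sample standard deviation are assumptions made for this illustration, not values taken from the study.

```python
import math
import random
import statistics
from collections import Counter


def _bins(series, n_bins=3):
    lo, hi = min(series), max(series)
    width = (hi - lo) / n_bins or 1.0   # guard against a constant series
    return [min(int((v - lo) / width), n_bins - 1) for v in series]


def transfer_entropy(source, target, n_bins=3):
    """Lag-1 plug-in TE from source to target, in bits."""
    x, y = _bins(target, n_bins), _bins(source, n_bins)
    n = len(x) - 1
    c_xxy = Counter(zip(x[1:], x[:-1], y[:-1]))
    c_xy = Counter(zip(x[:-1], y[:-1]))
    c_xx = Counter(zip(x[1:], x[:-1]))
    c_x = Counter(x[:-1])
    return sum(
        (c / n) * math.log2((c / c_xy[(b, d)]) / (c_xx[(a, b)] / c_x[b]))
        for (a, b, d), c in c_xxy.items()
    )


def effective_transfer_entropy(source, target, n_shuffles=50, z_min=2.0, seed=0):
    """Return (ete, z_score, is_significant) for the flow source -> target.

    z_min is a hypothetical cut-off chosen for this sketch.
    """
    rng = random.Random(seed)
    te = transfer_entropy(source, target)
    shuffled_te = []
    for _ in range(n_shuffles):
        surrogate = list(source)
        rng.shuffle(surrogate)   # destroys temporal order, keeps the marginal distribution
        shuffled_te.append(transfer_entropy(surrogate, target))
    mu = statistics.mean(shuffled_te)
    sigma = statistics.stdev(shuffled_te)
    ete = te - mu                                  # Equation (4)
    z = (te - mu) / sigma if sigma > 0 else 0.0    # Equation (5)
    return ete, z, z > z_min


# Toy data: x lags y by one step; noise is unrelated to x.
rng = random.Random(1)
y = [rng.gauss(0, 1) for _ in range(1000)]
x = [0.0] + y[:-1]
noise = [rng.gauss(0, 1) for _ in range(1000)]
ete_yx, z_yx, sig_yx = effective_transfer_entropy(y, x)
ete_nx, z_nx, sig_nx = effective_transfer_entropy(noise, x)
```

For the lagged pair the ETE stays large and the Z-score is far above the cut-off, whereas for the unrelated noise series the shuffle average cancels almost all of the raw TE.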

Meanwhile, to demonstrate the effectiveness of ETE, this study used traditional methods for measuring causality or correlation between two variables as benchmarks. One such method, Granger causality, evaluates whether past values of variable Y can predict future values of variable X, examining the potential causal relationship from Y to X [38]. To do this, a linear regression model, as shown in Equation (6), is set up, and the model’s predictive performance is tested by including past values of Y.

$$X_t = \alpha_0 + \sum_{i=1}^{p} \alpha_i X_{t-i} + \sum_{i=1}^{p} \beta_i Y_{t-i} + \varepsilon_t \tag{6}$$

where $\alpha_0$, $\alpha_i X_{t-i}$, $\beta_i Y_{t-i}$, $p$, and $\varepsilon_t$ represent the constant term, autoregressive terms, exogenous terms, lag order, and error term, respectively. If the coefficients $\beta_i$ are statistically different from zero, this indicates that Y Granger causes X. However, Granger causality inherently assumes linearity and requires that the time series satisfy the condition of weak stationarity.
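One common way to test whether the coefficients on the lagged Y terms are jointly zero is to compare the residual sum of squares of the regression with and without those terms. The sketch below computes the resulting F-statistic with ordinary least squares in NumPy. The function name and the toy data are assumptions, and the comparison against an F-distribution critical value is left out.

```python
import numpy as np


def granger_f_stat(x, y, p=1):
    """F-statistic for H0: all coefficients on lagged y are zero in Equation (6)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = len(x) - p
    target = x[p:]
    # Columns x_{t-1}, ..., x_{t-p} and y_{t-1}, ..., y_{t-p}
    lags_x = np.column_stack([x[p - i:len(x) - i] for i in range(1, p + 1)])
    lags_y = np.column_stack([y[p - i:len(y) - i] for i in range(1, p + 1)])
    ones = np.ones((n, 1))
    restricted = np.hstack([ones, lags_x])       # without past values of y
    full = np.hstack([ones, lags_x, lags_y])     # with past values of y

    def rss(design):
        coef, *_ = np.linalg.lstsq(design, target, rcond=None)
        resid = target - design @ coef
        return float(resid @ resid)

    rss_r, rss_f = rss(restricted), rss(full)
    dof = n - full.shape[1]
    return ((rss_r - rss_f) / p) / (rss_f / dof)


# Toy data: x depends linearly on the previous value of y.
rng = np.random.default_rng(0)
y = rng.normal(size=500)
x = np.empty(500)
x[0] = 0.0
x[1:] = 0.8 * y[:-1] + 0.1 * rng.normal(size=499)
f_y_to_x = granger_f_stat(x, y)   # tests whether y Granger causes x
f_x_to_y = granger_f_stat(y, x)   # tests the reverse direction
```

Because the test is built on a linear regression, it would miss a dependence that is purely nonlinear, which is the limitation noted above.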

Another benchmark, Pearson correlation, is a statistical measure that quantifies the degree to which two variables, X and Y, change together linearly. The correlation coefficient $\rho_{X,Y}$ is defined as shown in Equation (7).

$$\rho_{X,Y} = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\sum_{i=1}^{n} (x_i - \bar{x})^2} \, \sqrt{\sum_{i=1}^{n} (y_i - \bar{y})^2}} \tag{7}$$

where $\bar{x}$ and $\bar{y}$ represent the mean values of X and Y, respectively, and n denotes the length of the time series. A Pearson correlation coefficient close to 0 indicates a weak linear relationship, while a value near 1 or −1 indicates a strong positive or negative linear correlation, respectively. While Pearson correlation is useful for identifying simple linear relationships between variables, it has limitations: it cannot identify causal relationships, nor does it account for hidden common causes or non-linear structures.
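A direct transcription of Equation (7) is shown below, together with a helper that builds the pairwise matrix for several series. The helper name and the toy series are illustrative assumptions.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def correlation_matrix(series):
    """Pairwise coefficients for a list of equal-length series."""
    return [[pearson(a, b) for b in series] for a in series]


r_pos = pearson([1, 2, 3, 4], [2, 4, 6, 8])   # perfectly increasing together
r_neg = pearson([1, 2, 3, 4], [8, 6, 4, 2])   # perfectly opposite
matrix = correlation_matrix([[1, 2, 3, 4], [2, 1, 4, 3], [4, 3, 1, 2]])
```

The resulting matrix is symmetric by construction, so a graph built from it carries no edge direction.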

In contrast, TE extends the causal concept of Granger causality from an information-theoretic perspective, offering the advantages of capturing non-linear relationships and measuring the direction of information flow. While Pearson correlation is a simple tool for correlation analysis, Granger causality, TE, and, most notably, the ETE used in this study, are focused on analyzing temporal causal structures or information flow. Specifically, TE and ETE differentiate themselves from Granger causality by evaluating the significance of information flow without assuming linearity.

2.2. Causality Graph Neural Network

GNNs are deep learning techniques designed to perform computations on graph-structured data [49]. By leveraging the structural relationships between nodes, GNNs can effectively solve node prediction tasks. The core of GNN training lies in the message passing mechanism, in which each node aggregates information from its neighboring nodes and updates its embedding vector accordingly. By repeating this process multiple times, GNNs are able to capture the structural dependencies between nodes in depth. In this study, we propose a model that predicts the one-day-ahead realized volatility of stocks by applying GNNs with adjacency matrices constructed based on ETE. Specifically, we estimate the statistically significant causal influence of one asset’s past information on the future volatility of another asset using ETE. Based on these causal relationships, we construct a sparse adjacency matrix that reflects only meaningful links between assets. Unlike fully connected graphs, where all nodes are linked, this approach builds a graph that only incorporates actual causal links, which reduces the model complexity and helps to minimize noise during training.
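The construction of a sparse, directed adjacency matrix from pairwise ETE values can be sketched as follows. The inputs are assumed to be precomputed matrices in which entry `[j, i]` describes the flow from asset j to asset i. The cut-off `z_min`, the requirement that ETE be positive, and the removal of self-loops are assumptions made for this illustration.

```python
import numpy as np


def build_adjacency(ete, z_scores, z_min=2.0):
    """Keep the edge j -> i only when its ETE is positive and its Z-score exceeds z_min."""
    ete = np.asarray(ete, dtype=float)
    z_scores = np.asarray(z_scores, dtype=float)
    mask = (z_scores > z_min) & (ete > 0)
    np.fill_diagonal(mask, False)   # no self-loops in this sketch
    adjacency = np.where(mask, ete, 0.0)
    # Edge list in a (source, target) layout, as many GNN libraries expect.
    sources, targets = np.nonzero(adjacency)
    edge_index = np.stack([sources, targets])
    edge_weight = adjacency[sources, targets]
    return adjacency, edge_index, edge_weight


# Hypothetical ETE values and Z-scores for three assets.
ete = [[0.00, 0.12, 0.01],
       [0.02, 0.00, 0.30],
       [0.00, 0.05, 0.00]]
z = [[0.0, 4.1, 0.3],
     [1.2, 0.0, 6.0],
     [0.1, 2.5, 0.0]]
adjacency, edge_index, edge_weight = build_adjacency(ete, z)
```

Only three of the six possible directed edges survive in this example, and the weights for the pair (1, 2) differ by direction, which a correlation-based graph could not represent.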

Time series data not only contain numerical information but also embody crucial temporal structures, such as periodicity. In particular, financial time series often exhibit fractal structures, demonstrating statistical self-similarity across various time scales. This means that statistical properties such as variance or autocorrelation may be preserved across different temporal resolutions. This characteristic highlights the importance of volatility forecasting, since capturing such scale-invariant statistical patterns is essential for modeling financial uncertainty. To effectively capture such characteristics, single-scale feature extraction is often insufficient. Therefore, this study employs multiple convolutional filters with different receptive fields to extract multi-scale features from time series data. These features are then concatenated and used as the initial node embeddings. Each convolutional filter has a distinct receptive field with a shape of $1 \times k_i$, where $k_i$ is a predefined constant specifying the width of the receptive field for the i-th filter, and $i \in \{1, \ldots, q\}$, with q denoting the number of filters used. Given a time series input v, the resulting initial node embedding f is computed as shown in Equation (8).

$$f = \bigoplus_{i=1}^{q} \mathrm{ReLU}\left(v * C_{1 \times k_i}\right) \tag{8}$$

where ∗, ⨁, and $\mathrm{ReLU}$ denote the convolution, concatenation, and rectified linear unit activation function $\mathrm{ReLU}(x) = \max(0, x)$, respectively, and $C_{1 \times k_i}$ is the i-th convolutional filter. In this framework, multivariate time series data are transformed into a feature matrix $F \in \mathbb{R}^{n \times d}$, where n and d denote the number of variables and the number of features, respectively. The adjacency matrix A between nodes is constructed based on the causality-driven methods described earlier. The message passing process in the GNN follows Equation (9).

$$h_i^{(l+1)} = W_1^{(l)} h_i^{(l)} + W_2^{(l)} \sum_{j \in \mathcal{N}(i)} e_{j,i} \, h_j^{(l)} \tag{9}$$

where $W_1^{(l)}$ and $W_2^{(l)}$ are learnable weight matrices for the l-th layer, while $h_i^{(l)}$ and $h_j^{(l)}$ denote the hidden states of nodes i and j at layer l, respectively. $e_{j,i}$ represents the weight of the directed edge from node j to node i, indicating the causal influence of node j on node i. $\mathcal{N}(i)$ denotes the set of neighboring nodes of node i. In addition to integrating ETE into the GNN, this study also applied other causal inference methods, such as transfer entropy and Granger causality, as well as a correlation-based method, namely Pearson correlation, to construct the adjacency matrix, and compared their predictive performance.
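The two steps described in this subsection, multi-scale feature extraction and edge-weighted message passing, can be sketched in NumPy as follows. This is an illustration under stated assumptions rather than the model used in the experiments: the filter widths (2, 3, 5), the random weights, the hidden size of 8, and the input length are all hypothetical, and the layer uses one weight matrix for a node's own state and one for the weighted sum over its incoming neighbors, without any activation.

```python
import numpy as np


def multi_scale_embedding(v, kernels):
    """Concatenate ReLU(v * kernel) over filters with different widths.

    np.convolve flips the kernel (true convolution); deep learning layers usually
    apply cross-correlation, which differs only by that flip.
    """
    parts = [np.maximum(np.convolve(v, k, mode="valid"), 0.0) for k in kernels]
    return np.concatenate(parts)


def message_passing(h, adjacency, w_self, w_neigh):
    """One layer: new h_i = W1 h_i + W2 * (sum over j of edge weight j->i times h_j).

    adjacency[j, i] holds the weight of the directed edge j -> i.
    """
    aggregated = adjacency.T @ h   # row i: weighted sum over the sources j of node i
    return h @ w_self.T + aggregated @ w_neigh.T


rng = np.random.default_rng(0)
M = 20                                               # input window length (hypothetical)
series = rng.normal(size=(3, M))                     # three assets
kernels = [rng.normal(size=k) for k in (2, 3, 5)]    # illustrative filter widths
features = np.stack([multi_scale_embedding(v, kernels) for v in series])
d = features.shape[1]                                # 19 + 18 + 16 = 53 features per node

adjacency = np.array([[0.0, 0.12, 0.0],
                      [0.0, 0.0, 0.30],
                      [0.0, 0.05, 0.0]])
w_self = rng.normal(size=(8, d))
w_neigh = rng.normal(size=(8, d))
hidden = message_passing(features, adjacency, w_self, w_neigh)
```

Node 0 has no incoming edges in this toy graph, so its updated state depends only on its own features, while nodes 1 and 2 also receive weighted messages from their sources.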

2.3. Regime Detection Using Hurst Exponent

In this study, the Hurst exponent is used to analyze the fractal structure of time series data, identify structural changes, and define corresponding regimes. Reflecting the long-term memory and self-similarity of time series, the Hurst exponent represents its fractal characteristics and is widely recognized as an effective tool for interpreting the nonlinear and complex patterns of financial time series. In particular, rescaled range (R/S) analysis, which is employed to estimate the Hurst exponent, is a widely used method for quantitatively assessing the fractal characteristics of time series data [50].

Consider a time series $\{x_t\}_{t=1}^{T}$, which is partitioned into segments of length w, resulting in a set of segments $\{I_1, \ldots, I_K\}$. Each segment is defined as $I_j = \{x_{(j-1)w+1}, \ldots, x_{jw}\}$, where the total number of segments is $K = \lfloor T/w \rfloor$. By calculating the rescaled range for various scales w and analyzing the changes in the average values across these scales, the fractal scaling relationship of the time series can be determined. To accomplish this, the rescaled range value for each segment is determined by the procedure described below. Initially, the mean of each segment is calculated as given in Equation (10).

$$\bar{x}_j = \frac{1}{w} \sum_{t=1}^{w} x_{(j-1)w+t} \tag{10}$$

Subsequently, the cumulative deviation from the segment mean is computed as shown in Equation (11).

$$D_{j,k} = \sum_{t=1}^{k} \left(x_{(j-1)w+t} - \bar{x}_j\right), \quad k = 1, \ldots, w \tag{11}$$

Next, the range for each segment is calculated by determining the difference between the maximum and minimum values of the cumulative deviation, as shown in Equation (12).

$$R_j = \max_{1 \le k \le w} D_{j,k} - \min_{1 \le k \le w} D_{j,k} \tag{12}$$

Additionally, the standard deviation for each segment is calculated as shown in Equation (13).

$$S_j = \sqrt{\frac{1}{w} \sum_{t=1}^{w} \left(x_{(j-1)w+t} - \bar{x}_j\right)^2} \tag{13}$$

The rescaled range is defined as $R_j / S_j$, and the average rescaled range for each scale w is calculated by averaging this value over all segments, as shown in Equation (14).

$$(R/S)_w = \frac{1}{K} \sum_{j=1}^{K} \frac{R_j}{S_j} \tag{14}$$

The average value $(R/S)_w$ exhibits a specific pattern with respect to scale w, as shown in Equation (15), which generally follows a power law, indicating a fractal scaling relationship.

$$(R/S)_w = c \, w^{H} \tag{15}$$

where c is a constant and H is the Hurst exponent. Taking the logarithm of both sides yields a linear relationship, as shown in Equation (16).

$$\log (R/S)_w = \log c + H \log w \tag{16}$$

This can be interpreted as a linear regression model related to the fractal dimension. By fitting the relationship between $\log (R/S)_w$ and $\log w$ for multiple scales w using Ordinary Least Squares (OLS), the Hurst exponent can be estimated.
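The R/S procedure can be sketched in a few lines of NumPy. The set of scales and the synthetic test series are assumptions chosen for illustration. Segments with zero standard deviation are skipped, which is a practical guard added here rather than part of the procedure described above.

```python
import numpy as np


def rescaled_range(x, w):
    """Average R/S over non-overlapping segments of length w."""
    n_segments = len(x) // w
    values = []
    for j in range(n_segments):
        segment = x[j * w:(j + 1) * w]
        deviations = np.cumsum(segment - segment.mean())   # cumulative deviation
        r = deviations.max() - deviations.min()            # range
        s = segment.std()                                  # population standard deviation
        if s > 0:
            values.append(r / s)
    return float(np.mean(values))


def hurst_exponent(x, scales=(8, 16, 32, 64, 128)):
    """Slope of log(R/S) against log(w), fitted by ordinary least squares."""
    x = np.asarray(x, dtype=float)
    log_w = np.log(scales)
    log_rs = np.log([rescaled_range(x, w) for w in scales])
    slope, _intercept = np.polyfit(log_w, log_rs, 1)
    return float(slope)


rng = np.random.default_rng(0)
noise = rng.normal(size=4096)
h_noise = hurst_exponent(noise)             # uncorrelated increments
h_walk = hurst_exponent(np.cumsum(noise))   # strongly persistent series
```

On short scales the R/S estimator is known to be biased upward, so the estimate for uncorrelated noise typically lands somewhat above the theoretical value rather than exactly on it.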

The Hurst exponent serves as a key numerical indicator of the fractality of a time series and enables interpretation of its dynamic properties according to its value. When H is close to 0.5, the time series exhibits behavior similar to a random walk, implying the absence of serial dependence. A value of H greater than 0.5 indicates a persistent structure with strong fractal autocorrelation, whereas a value less than 0.5 reflects an anti-persistent structure, in which changes tend to occur in the opposite direction.

In this study, a Hurst exponent value of 0.5 served as the criterion for regime classification. The value 0.5 is commonly interpreted as the point where the time series behavior shifts from exhibiting persistent or anti-persistent characteristics to randomness, and it is often used to distinguish between regimes with long-term memory, random walk, and anti-persistent behavior [51,52]. A given time window is classified as an “Above” regime if the number of days on which the Hurst exponent exceeds 0.5 is greater than or equal to a specified threshold s. Since values slightly higher or lower than 0.5 can also produce meaningful results in empirical time series, several alternative thresholds near 0.5 were examined in a preliminary tuning phase. Additionally, a three-regime classification approach was also considered, in which a lower and an upper cutoff split the Hurst exponent values into three ranges: below the lower cutoff, between the two cutoffs, and above the upper cutoff. After a comprehensive evaluation of experimental results, a binary classification based on the 0.5 threshold was selected, as it provided the best trade-off between interpretability and predictive performance. Details regarding the observation window and the threshold s for regime classification are presented in Section 3.1.

3. Experiments and Data

3.1. Experiments

This study proposes the H-ETE-GNN model to predict the realized volatility of ten national ETFs by incorporating causal relationships across countries and a global regime detection mechanism. The ten ETFs follow the dataset configuration in Barua and Sharma (2021) [53]. They represent both developed and developing countries, providing a broad view of international equity markets, which supports the objective of modeling causal relationships across countries within the proposed GNN framework. We concentrated on national ETFs, rather than sectoral ETFs or other asset classes such as commodities or fixed income, in order to capture cross-country causal dynamics.

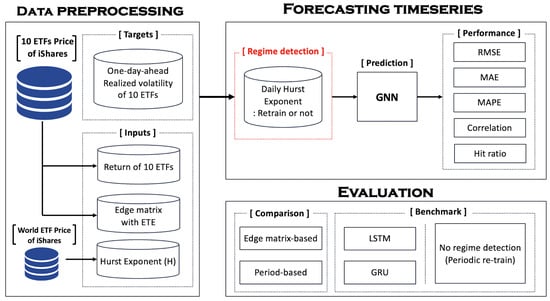

The overall experimental framework is presented in Figure 1. First, the close prices of the ten target national ETFs were collected to compute log-returns, which were used as inputs to construct the edge matrix, and the realized volatility values, which served as prediction targets. In addition, the log-return series of a representative world ETF was obtained, and the Hurst exponent was calculated using a sliding window approach. The window size was set to 250, since this setting produced a reasonable number of regime changes. With this window size, 47 regime changes were detected, while using 500 and 750 resulted in only 29 and 14 changes, respectively. These smaller counts may have been insufficient to adequately capture shifts in market conditions, so we chose 250, as it offered a more informative segmentation of the time series. To normalize the log-returns used as input features, min-max scaling was applied to rescale the values between zero and one. The GNN was trained using data from the most recent N days, which defined the training period. The edge matrix was also constructed based on these N days by estimating the directional causality among the ten national ETFs using the ETE method. For model training and evaluation, the training dataset was divided into two periods—training and validation—at a ratio of 7:3. A sliding window approach was employed during prediction, where the model took the most recent M days of information as input to forecast the next day’s realized volatility. Here, N refers to the length of the training period, and M denotes the input size of the model.

Figure 1.

Experiment framework using Hurst exponent with GNN.
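The data preparation described above (min-max scaling, an input window of M days, and a 7:3 chronological split) can be sketched as follows. This is a minimal illustration and not the authors' implementation: the function names are hypothetical, a single feature series stands in for the ten ETFs, and fitting the scaler on the whole series is an assumption, since the text does not say which span was used.

```python
from typing import List, Sequence, Tuple


def min_max_scale(values: Sequence[float]) -> List[float]:
    """Rescale values linearly to [0, 1] (assumption: scaler fitted on the given span)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant series carries no scale information; map it to zeros.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def make_windows(features: Sequence, targets: Sequence, m: int) -> List[Tuple[list, object]]:
    """Pair each block of m consecutive feature days with the next day's target."""
    samples = []
    for end in range(m, len(features)):
        # Inputs cover days end-m .. end-1; the target is the value on day `end`.
        samples.append((list(features[end - m:end]), targets[end]))
    return samples


def split_train_validation(samples: list, train_ratio: float = 0.7) -> Tuple[list, list]:
    """Chronological split: the earliest 70% for training, the rest for validation."""
    cut = int(len(samples) * train_ratio)
    return samples[:cut], samples[cut:]
```

Keeping the split chronological, rather than shuffling, avoids leaking future observations into the training portion.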

In the subsequent stage, if a regime change was detected, the GNN was retrained. The regime detection procedure was as follows. For the daily Hurst exponent of the world ETF, if the number of days with values exceeding 0.5 during the past 10 days was greater than or equal to a threshold s, the current period was classified as an “Above” regime. Conversely, if the number of days with values below 0.5 was greater than or equal to s, the period was classified as a “Below” regime. The threshold s served as a hyperparameter controlling the sensitivity of regime classification and was tuned through experimentation. When a regime shift was identified based on this criterion, the edge matrix was recalculated and the GNN was updated. Since the Hurst exponent reflects the memory and dependence structure in past observations, a detected regime shift indicates that structural changes had already been underway in the recent window. Therefore, although the detection point may coincide with the tail end of the previous regime, it already embeds early signs of the new regime’s characteristics, making it a reasonable point for fine-tuning the model. To reduce computational cost, the model was not fully retrained; instead, only the edge matrix was replaced, and the model was fine-tuned accordingly. If the regime remained unchanged, the previously trained model was used directly to predict the one-day-ahead realized volatility, without additional training.
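The counting rule above can be sketched as a small function. This is a hedged illustration, not the authors' code: the function names are hypothetical, the 10-day window is assumed to include the current day, and two tie-handling choices are assumptions (the previous regime is kept when neither count reaches s, and "Above" takes priority if both do, which can only happen when s is at most 5).

```python
from typing import List, Optional, Sequence


def classify_regime(hurst_window: Sequence[float], s: int,
                    threshold: float = 0.5,
                    previous: Optional[str] = None) -> Optional[str]:
    """Label a window "Above" or "Below" by counting days on each side of the threshold."""
    above = sum(1 for h in hurst_window if h > threshold)
    below = sum(1 for h in hurst_window if h < threshold)
    if above >= s:
        return "Above"
    if below >= s:
        return "Below"
    # Assumption: with no clear majority, the previous regime persists.
    return previous


def detect_regime_changes(hurst: Sequence[float], s: int,
                          lookback: int = 10,
                          threshold: float = 0.5) -> List[int]:
    """Return the day indices at which the regime label switches."""
    changes = []
    regime = None
    for t in range(lookback - 1, len(hurst)):
        window = hurst[t - lookback + 1:t + 1]
        new_regime = classify_regime(window, s, threshold, previous=regime)
        if regime is not None and new_regime != regime:
            # In the described pipeline, this is where the edge matrix would be
            # recomputed and the GNN fine-tuned.
            changes.append(t)
        regime = new_regime
    return changes
```

For example, ten days at 0.6 followed by ten days at 0.4 with s = 6 yields a single change on the day when the sixth sub-0.5 value enters the window.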

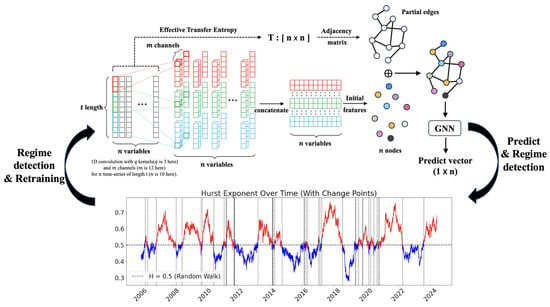

In this study, three multi-scale convolutional filters were used to extract features from the time series data during the initialization of the GNN model. The kernel lengths were set to 3, 5, and 7 to capture both short-term and long-term temporal patterns simultaneously, and the number of channels for each kernel was set to 12. The GNN consisted of three layers, allowing for three iterations of message passing. The model was retrained every time a regime change was detected via the Hurst exponent, as shown in Figure 2. The lower part of Figure 2 presents the Hurst exponent over time, where regimes are alternately marked in red and blue to indicate regime changes. The objective function of the GNN model was the mean squared error (MSE), and the Adam optimizer was used for weight optimization. ReLU was used as the activation function, and training ran for 100 epochs with early stopping to prevent overfitting. Hyperparameter tuning was conducted using Bayesian optimization [54], and the hyperparameters used for tuning are listed in Table 1. For benchmarking, the RNN model was tuned with the hyperparameters specified in Table 2, and the tuning results for the common hyperparameters M, N, and s are shown in Table 3.

Figure 2.

Proposed model framework using ETE-GNN with Hurst exponent.

Table 1.

Hyperparameter tuning for GNNs.

Table 2.

Hyperparameter tuning for RNNs.

Table 3.

Results of hyperparameter tuning.

For benchmarking, LSTM and Gated Recurrent Unit (GRU) models, which are widely used in time series forecasting, were adopted. LSTM, a variant of an RNN, effectively learns long-term dependencies through its input gate, forget gate, and output gate [55]. The GRU, proposed to alleviate the excessive complexity of LSTM while maintaining its performance, was used as well [56,57]. Since LSTM and GRU are not graph-based models, no separate causality matrix was used; instead, the past N-day log-return data of the ETFs were directly utilized to predict the realized volatility. During this process, regime detection and model retraining based on the Hurst exponent were also performed. This allowed the performance of the proposed H-ETE-GNN model to be compared with that of the LSTM and GRU models, and allowed the predictive value of a graph-based model that considers causality between countries to be evaluated. In addition, to assess the causality information encoded by ETE, benchmark GNN models were constructed using TE, Granger causality, and Pearson correlation to generate the edge matrix. When Granger causality was used to construct the edge matrix, the causality values were binarized into 0 and 1 based on a 5% significance level. In the case of Pearson correlation, only the magnitude of the correlation was considered by using the absolute correlation value. Furthermore, to evaluate the effectiveness of regime change detection, experiments were conducted on the aforementioned models without using the Hurst exponent, with the models being periodically retrained every N days during the training period.
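The two benchmark edge constructions described above (binarizing Granger results at the 5% level and taking absolute Pearson correlations) can be sketched as follows. This is an illustration under stated assumptions: the function names are hypothetical, the matrix of Granger p-values is assumed to come from a separate test that is not reproduced here, with entry [i][j] read as the p-value for "i causes j", and setting self-loops to zero is an assumption not stated in the text.

```python
import math
from typing import List, Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Sample Pearson correlation coefficient between two equal-length series."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(vx * vy)


def pearson_edge_matrix(series: Sequence[Sequence[float]]) -> List[List[float]]:
    """Undirected edge weights: absolute correlation, diagonal set to zero (assumption)."""
    k = len(series)
    return [[0.0 if i == j else abs(pearson(series[i], series[j]))
             for j in range(k)] for i in range(k)]


def granger_edge_matrix(p_values: Sequence[Sequence[float]],
                        alpha: float = 0.05) -> List[List[int]]:
    """Directed 0/1 edges: 1 where the Granger p-value falls below alpha."""
    k = len(p_values)
    return [[1 if i != j and p_values[i][j] < alpha else 0
             for j in range(k)] for i in range(k)]
```

The Pearson matrix is symmetric and dense by construction, whereas the Granger matrix is directed and sparse.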

Model performance was evaluated using Root Mean Squared Error (RMSE), Mean Absolute Error (MAE), Mean Absolute Percentage Error (MAPE), correlation, and hit ratio. The implementation was carried out in Python 3.8.20 with PyTorch 2.0.0, and the experiments were conducted on a system with an AMD Ryzen 9 4.5 GHz processor, 32 GB of RAM, and an NVIDIA RTX 4090 with 24 GB of memory.

3.2. Data

The data for each ETF used in this study are presented in Table 4. To comprehensively reflect the diverse stock price volatility across countries with differing macroeconomic conditions and market structures, 10 country ETFs representing both developed and emerging markets were selected. Since each ETF represents the overall movement of the corresponding country’s stock market, the ETFs are useful for an integrated analysis of the market dynamics observed across regions. For regime change detection, the Hurst exponent was calculated based on the time series of the iShares MSCI World ETF rather than individual countries. This ETF includes various regions and industrial sectors, reflecting global macroeconomic trends that extend beyond the market fluctuations of individual countries. By aggregating the stock markets of multiple developed nations into a single index, it provides a useful indicator for assessing the general direction of the global market, rather than focusing solely on specific countries. Therefore, the MSCI World index was used as the proxy series for computing the Hurst exponent [58]. If the Hurst exponent were calculated separately for each country, the regime detection for each ETF would vary due to the heterogeneous market characteristics of each nation. This would result in different model retraining points for each ETF, significantly increasing the complexity and making it difficult to intuitively identify a common regime change. Thus, while realized volatility was predicted for each individual country ETF, the regime change detection relied solely on the MSCI World ETF, which reflects the overall market trends.

Table 4.

List of MSCI Country ETFs.

The Hurst exponent estimate provides more meaningful interpretation when the time series is stationary [59]. Therefore, the close price was converted into log return. The sample period was from 7 February 2003 to 29 May 2024. The log return, used as the model’s input, and the realized volatility, which served as the prediction target, were calculated as shown in Equations (17) and (18).
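A standard form of these two quantities, consistent with the symbols described in the text, is given here as a reference sketch. The notation $r_t$ and $P_t$ is assumed, and realized volatility is assumed to be the square root of the sum of squared log returns over the past n days; the exact expressions used in the study may differ, for example by a scaling factor.

```latex
r_t = \ln\left(\frac{P_t}{P_{t-1}}\right) \tag{17}

RV_t = \sqrt{\sum_{i=0}^{n-1} r_{t-i}^{2}} \tag{18}
```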

where t refers to the specific date and n denotes the number of past observations. In this study, realized volatility was computed using the past 20 days of log returns. The log return at time t is denoted as $r_t$, and $P_t$ represents the closing price at time t. Table 5 presents the descriptive statistics of the log returns and realized volatilities for each ETF. To examine the normality of the distributions, a Jarque–Bera test was conducted [60], and the augmented Dickey–Fuller (ADF) test was applied to assess the stationarity of the data [61]. Most log returns exhibit negative skewness, indicating that the left tail of the distribution is longer and that large negative fluctuations are more probable. The kurtosis values are substantially higher than those of a normal distribution, which suggests heavy tails and implies that extreme variations occur more frequently than under the normality assumption, a well-known characteristic of financial return series. At the 1% significance level, the Jarque–Bera test rejected the null hypothesis of normality for all series, and the ADF test results indicated that all series were stationary.
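The computation of log returns and 20-day realized volatility can be sketched in a few lines. The function names are hypothetical, and the realized volatility form used here (square root of the sum of squared log returns over the window, without annualization) is an assumption, since the exact scaling is not recoverable from the text.

```python
import math
from typing import List, Sequence


def log_returns(prices: Sequence[float]) -> List[float]:
    """Log return r_t = ln(P_t / P_{t-1}) for each day after the first."""
    return [math.log(prices[t] / prices[t - 1]) for t in range(1, len(prices))]


def realized_volatility(returns: Sequence[float], n: int = 20) -> List[float]:
    """Rolling realized volatility over the past n returns (assumed form, see lead-in)."""
    out = []
    for t in range(n - 1, len(returns)):
        window = returns[t - n + 1:t + 1]
        out.append(math.sqrt(sum(r * r for r in window)))
    return out
```

With n = 20, the first realized volatility value becomes available on the twentieth return, so the target series is 19 observations shorter than the return series.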

Table 5.

Descriptive statistics of each ETF.

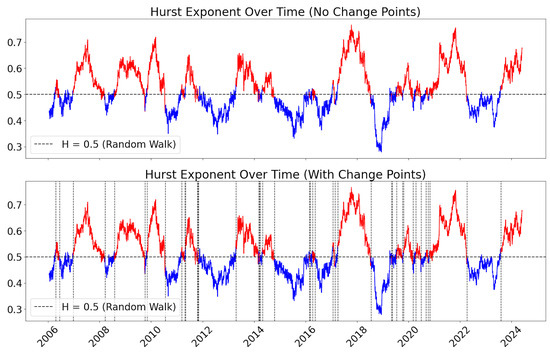

Figure 3 illustrates the daily Hurst exponent calculated using the World ETF. The series is color-coded in alternating red and blue to mark each regime. The regime sensitivity threshold s was set to 6, the value that yielded the best performance in the experiments. Each vertical dashed line in the figure marks a detected regime change, with a total of 47 changes observed. Consequently, the proposed model, H-ETE-GNN, was retrained 47 times.

Figure 3.

Result of Hurst exponent; each vertical line represents a regime change.

4. Results

To evaluate the predictive performance of the proposed model, three analytical approaches were employed. First, the validity of regime detection using the Hurst exponent was assessed to determine whether it contributed to improved forecasting accuracy. Second, the effectiveness of the GNN in capturing and analyzing the structural relationships among the ten ETFs was verified through ETE, a statistical measure that estimates the directional flow of information between time series. Finally, the results were compared against benchmark models, with particular attention paid to year-by-year performance differences and the impact of each factor on model predictions. The model’s predictive performance was evaluated using RMSE, MAE, MAPE, and correlation, as defined by the following equations. In addition, we report the hit ratio, which measures the percentage of times the predicted direction matched the actual direction.
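The standard definitions of these metrics are given below as a reference sketch. The notation is assumed, as is the percentage scaling of MAPE. The hit ratio form is also an assumption: direction is measured here relative to the previous actual value, and the study may define it differently.

```latex
\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^{2}}

\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|y_i - \hat{y}_i\right|

\mathrm{MAPE} = \frac{100}{n}\sum_{i=1}^{n}\left|\frac{y_i - \hat{y}_i}{y_i}\right|

\mathrm{Correlation} = \frac{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)\left(\hat{y}_i - \bar{\hat{y}}\right)}{\sqrt{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)^{2}\,\sum_{i=1}^{n}\left(\hat{y}_i - \bar{\hat{y}}\right)^{2}}}

\mathrm{Hit\ ratio} = \frac{1}{n-1}\sum_{i=2}^{n}\mathbb{1}\left[\operatorname{sign}\left(y_i - y_{i-1}\right) = \operatorname{sign}\left(\hat{y}_i - y_{i-1}\right)\right]
```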

where n, $y_i$, $\hat{y}_i$, $\bar{y}$, and $\bar{\hat{y}}$ represent the total number of samples, the real value, the predicted value, the mean of the real values, and the mean of the predicted values, respectively. The operator $\operatorname{sign}(\cdot)$ returns the sign of a value (i.e., 1 if positive, 0 if zero, and −1 if negative), and $\mathbb{1}[\cdot]$ is the indicator function, which returns 1 if the condition inside the brackets is true and 0 otherwise.
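The five evaluation metrics can be sketched in plain Python as follows. The function names are hypothetical, and the hit ratio compares the sign of the predicted change against the sign of the actual change, both measured from the previous actual value; that reading of "direction" is an assumption rather than a definition confirmed by the text.

```python
import math
from typing import Sequence


def rmse(y: Sequence[float], yhat: Sequence[float]) -> float:
    """Root mean squared error."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(y, yhat)) / len(y))


def mae(y: Sequence[float], yhat: Sequence[float]) -> float:
    """Mean absolute error."""
    return sum(abs(a - b) for a, b in zip(y, yhat)) / len(y)


def mape(y: Sequence[float], yhat: Sequence[float]) -> float:
    """Mean absolute percentage error, in percent (actual values must be non-zero)."""
    return 100.0 * sum(abs((a - b) / a) for a, b in zip(y, yhat)) / len(y)


def correlation(y: Sequence[float], yhat: Sequence[float]) -> float:
    """Pearson correlation between actual and predicted values."""
    n = len(y)
    my, mp = sum(y) / n, sum(yhat) / n
    cov = sum((a - my) * (b - mp) for a, b in zip(y, yhat))
    vy = sum((a - my) ** 2 for a in y)
    vp = sum((b - mp) ** 2 for b in yhat)
    return cov / math.sqrt(vy * vp)


def sign(v: float) -> int:
    """Return 1 if positive, 0 if zero, and -1 if negative."""
    return (v > 0) - (v < 0)


def hit_ratio(y: Sequence[float], yhat: Sequence[float]) -> float:
    """Share of days on which predicted and actual directions agree (assumed form)."""
    hits = sum(1 for i in range(1, len(y))
               if sign(y[i] - y[i - 1]) == sign(yhat[i] - y[i - 1]))
    return hits / (len(y) - 1)
```

Because realized volatility is strictly positive in practice, the division in MAPE is well defined for the targets considered here.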

4.1. Forecasting Performance by Model

To evaluate the effectiveness of regime detection using the Hurst exponent, Table 6 compares periodic retraining with Hurst-based regime retraining. ETE-GNN, TE-GNN, Granger-GNN, and Pearson-GNN denote models that employed ETE, TE, Granger causality, and Pearson correlation, respectively, to construct the edge matrix of the GNN. The comparison included both RNNs, specifically LSTM and GRU models, and the GNN models under the two retraining strategies. All experiments were repeated ten times, and both the average and standard deviation were reported to ensure the reliability of the results. The results indicate that regime detection using the Hurst exponent consistently led to improved performance in all GNN-based models. Notably, H-ETE-GNN achieved the best performance among all models. Under the periodic regime, Granger-GNN showed the lowest error rates among all models. While regime detection also improved the performance of LSTM and GRU, the degree of improvement was relatively modest compared to the GNN-based models. In addition, both LSTM and GRU exhibited relatively high RMSE and MAPE values, and their correlation coefficients remained comparatively low (see Table 6). Overall, regime detection based on the Hurst exponent proved effective across all GNN-based architectures, including H-ETE-GNN. Moreover, models that incorporated relational information among multiple time series outperformed those that relied solely on individual series. Among them, H-ETE-GNN, which utilized ETE, demonstrated the highest level of predictive accuracy. In terms of hit ratio, the Hurst-based TE-GNN achieved the highest value, but the Hurst-based ETE-GNN recorded a comparable score (see Table 6), with no statistically significant difference between the two models.

Table 6.

Comparison of Periodic changes vs. Hurst regimes; best results are highlighted in bold.

Table 7 presents the results of the one-tailed t-tests conducted to evaluate the statistical significance of performance differences between ETE-GNN and existing models. The table lists pairwise comparisons across three settings: between ETE-GNN and other models under the Periodic regime, under the Hurst-based regime detection, and between models with and without regime detection. In the Periodic regime, ETE-GNN consistently achieved a better performance than the RNN-based models across all metrics and showed statistically significant improvements over Pearson-GNN in terms of RMSE and correlation. In contrast, no significant differences were observed between ETE-GNN and Granger-GNN, indicating comparable performance. ETE-GNN showed superiority over TE-GNN only in RMSE and MAE. Under the Hurst-based regime, ETE-GNN outperformed LSTM, GRU, Pearson-GNN, and TE-GNN across all metrics, with strong statistical significance, and also showed clear superiority over Granger-GNN in MAE and hit ratio. In comparisons between the Hurst-based and Periodic settings, ETE-GNN showed significant improvements across all metrics, while LSTM, Pearson-GNN, and TE-GNN also exhibited meaningful improvements in specific metrics. These findings suggest that the Hurst-exponent-based regime detection strategy effectively contributed to improving the performance of ETE-GNN, while also providing measurable benefits for other models.

Table 7.

p-Value for pairwise comparison.

To examine whether splitting regimes based on the Hurst exponent around 0.5 was optimal, this study also explored a ternary regime detection scheme using three ranges of H values. Table 8 presents the experimental results for this scheme. Each model operated under identical experimental settings, applied to both the Periodic-change and Hurst-based regime detection frameworks. The ternary approach classified each time window into three regimes according to the Hurst exponent: the Below regime, when the Hurst value falls below a lower cutoff, indicating anti-persistent behavior; the Middle regime, when it lies between the lower and upper cutoffs; and the Above regime, when it exceeds the upper cutoff, indicating persistent trends. The difference in prediction performance between the binary and ternary regime detection methods remained small. The binary approach identified 47 regime changes, while the ternary approach identified 57, based on the hyperparameter settings that yielded the best performance in each case. Although the more fine-grained ternary classification aimed to capture a richer representation of market states, the experimental results did not indicate a significant performance gain over the simpler binary approach, and the ternary scheme introduced additional computational overhead. This study therefore adopted the binary regime detection method as the final strategy, considering both interpretability and computational efficiency.

Table 8.

Comparison of Periodic changes vs. Hurst regimes: Ternary Regimes; best results are highlighted in bold.

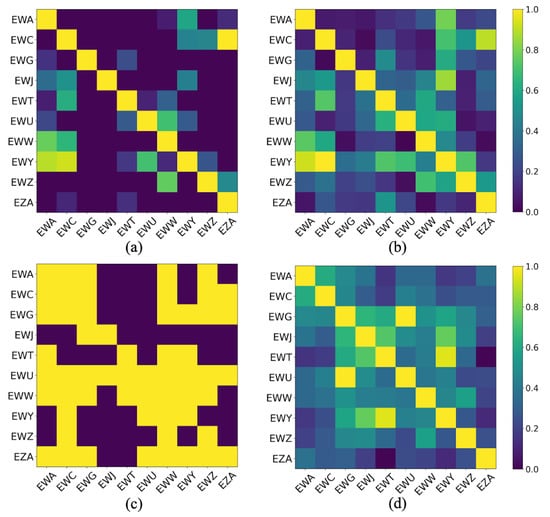

Figure 4 illustrates the edge matrices used at the initial training stage for each GNN model. The edge matrix based on Pearson correlation broadly captured the correlation structure among assets; however, its overly dense connectivity tended to degrade the prediction performance. In contrast, the matrix constructed using Granger causality strictly filtered the edges based on statistical significance, resulting in a highly sparse structure. While this sparsity prevented overfitting, it also removed potentially informative connections, limiting predictive gains. The adjacency matrix derived from TE fell between Pearson and ETE in terms of sparsity, reflecting meaningful information flow, while avoiding excessive linkage. The matrix based on ETE, which subtracted RTE from TE, further eliminated statistically insignificant edges, leading to a sparser but more informative structure. This enabled the model to more effectively capture essential information flow across assets. As a result, the model utilizing ETE demonstrated the highest predictive accuracy, followed closely by the model using Granger causality. These findings highlight that avoiding both overly dense and overly sparse structures, while accurately reflecting relationships among assets, is crucial for improving volatility forecasting performance.

Figure 4.

The edge matrices which were used in GNNs; (a) Effective Transfer Entropy matrix, (b) Transfer Entropy matrix, (c) Granger Causality matrix, (d) Pearson Correlation matrix.
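The relation ETE = TE − RTE used for the edge matrix in panel (a) can be illustrated with a small plug-in estimator. This is a sketch under stated assumptions and not the estimator used in the study: the three-bin quantile discretization, the lag of one day, the number of shuffles, and all function names are illustrative choices.

```python
import math
import random
from collections import Counter
from typing import List, Sequence


def discretize(series: Sequence[float], bins: int = 3) -> List[int]:
    """Map each value to a quantile-rank bin in 0..bins-1."""
    order = sorted(range(len(series)), key=lambda i: series[i])
    labels = [0] * len(series)
    for rank, idx in enumerate(order):
        labels[idx] = rank * bins // len(series)
    return labels


def transfer_entropy(source: Sequence[int], target: Sequence[int]) -> float:
    """Plug-in transfer entropy (bits) from source to target at lag 1."""
    triples = Counter(zip(target[1:], target[:-1], source[:-1]))
    pairs_ts = Counter(zip(target[:-1], source[:-1]))
    pairs_tt = Counter(zip(target[1:], target[:-1]))
    singles = Counter(target[:-1])
    n = len(target) - 1
    te = 0.0
    for (y1, y0, x0), c in triples.items():
        p_full = c / pairs_ts[(y0, x0)]              # p(y_{t+1} | y_t, x_t)
        p_self = pairs_tt[(y1, y0)] / singles[y0]    # p(y_{t+1} | y_t)
        te += (c / n) * math.log2(p_full / p_self)
    return te


def effective_transfer_entropy(source: Sequence[int], target: Sequence[int],
                               shuffles: int = 50, seed: int = 0) -> float:
    """ETE = TE minus the mean TE obtained from shuffled sources (RTE)."""
    rng = random.Random(seed)
    te = transfer_entropy(source, target)
    shuffled = list(source)
    total = 0.0
    for _ in range(shuffles):
        rng.shuffle(shuffled)  # destroys the temporal link, keeps the marginal
        total += transfer_entropy(shuffled, target)
    return te - total / shuffles
```

Subtracting the shuffled-source estimate removes the small-sample bias that makes raw TE positive even for unrelated series, which is why the ETE matrix ends up sparser than the TE matrix.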

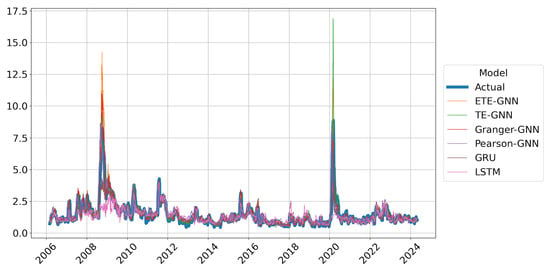

Figure 5 presents the predicted time series of models with Hurst-exponent-based regime detection, compared to the actual realized volatility. During periods of sharp volatility spikes, such as the 2008 global financial crisis and the 2020 COVID-19 pandemic, the predicted trends from the models diverged significantly. Across all periods, the GNN-based models (ETE-GNN, TE-GNN, Granger-GNN, and Pearson-GNN) generally provided predictions close to the actual values. In contrast, the RNN-based models (LSTM and GRU) tended to either overestimate or underestimate the volatility in certain periods, with this tendency being most pronounced during sharp increases in volatility.

Figure 5.

Forecast performance of realized volatility; All Hurst models.

4.2. Forecasting Performance by Period

To thoroughly evaluate the predictive performance of the proposed model, we examined the annual prediction results. Table 9 compares the annual RMSE values of models with Hurst-exponent-based regime detection. Overall, the proposed H-ETE-GNN demonstrated the best performance, but its results were less favorable during the periods of the 2008 financial crisis and the COVID-19 pandemic. This is likely attributable to the infrequent occurrence of regime changes during these periods. According to Figure 3, there were two regime changes between 2007 and 2008, while only one regime change occurred between 2021 and 2023. Over 19 years, there were 47 regime changes, or roughly 2.5 per year on average, so these periods were below average in terms of regime changes. If an incorrect network was learned and no regime change occurred, the prediction performance in those periods deteriorated. Furthermore, although ETE was introduced to address the shortcomings of TE, ETE has limitations in capturing extreme non-linear interactions [62]. During these periods, ETE’s failure to capture extreme non-linear patterns and the lack of regime changes resulted in suboptimal predictions. Meanwhile, the Granger-GNN model, which is relatively simple and effective in capturing linear relationships, performed better during these periods. In conclusion, the proposed H-ETE-GNN performed well during periods of low volatility, but its performance was relatively lower during the high-volatility periods of the 2007–2008 financial crisis and the 2020–2023 COVID-19 pandemic.

Table 9.

RMSE of prediction results with Hurst exponent by year; best results are highlighted in bold.

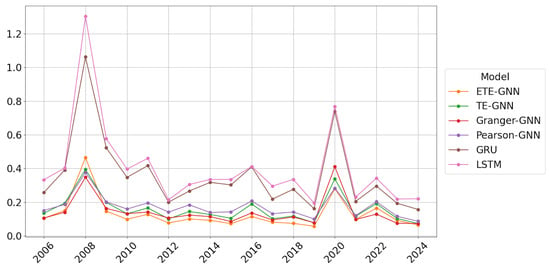

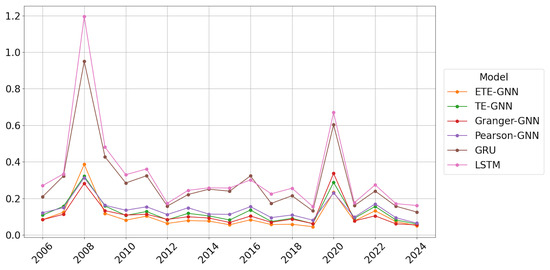

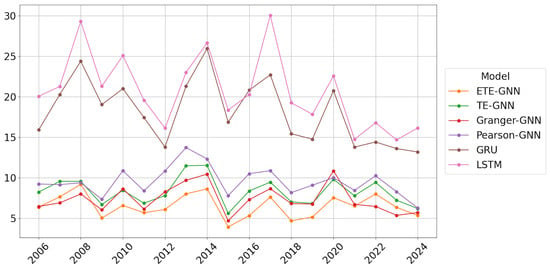

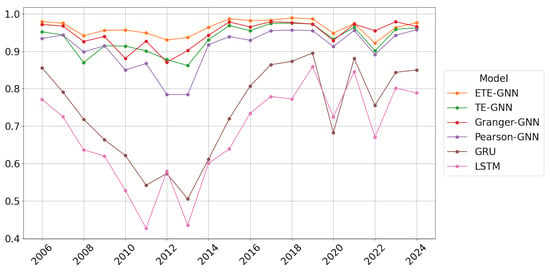

Figure 6, Figure 7, Figure 8 and Figure 9 present the annual prediction performance of each model, corresponding to RMSE, MAE, MAPE, and correlation metrics, respectively. For each model, the results of Hurst regime detection and periodic changes were averaged and displayed as annual charts. Overall, the GNN models exhibited superior predictive performance compared to the RNN models, with the RNN models showing a tendency to significantly increase errors during periods of sharp market fluctuations. The proposed H-ETE-GNN model demonstrated a stable prediction performance across most periods, indicating that ETE effectively captured the information flow between time series.

Figure 6.

RMSE of prediction results by year; mean of Hurst and NoHurst results.

Figure 7.

MAE of prediction results by year; mean of Hurst and NoHurst results.

Figure 8.

MAPE of prediction results by year; mean of Hurst and NoHurst results.

Figure 9.

Correlation between actual values and prediction results by year; mean of Hurst and NoHurst results.

Next, we examined the effectiveness of Hurst-exponent-based regime detection by analyzing annual prediction performance. Table 10 presents the annual RMSE results for H-ETE-GNN and ETE-GNN. In all years, except for 2008, 2012, and 2020, H-ETE-GNN demonstrated superior performance. In 2012, the difference between the two models was minimal, while in 2008 and 2020, ETE-GNN showed better performance. As mentioned earlier, this may occur in periods where regime changes are infrequent.

Table 10.

RMSE of H-ETE-GNN and ETE-GNN; best results are highlighted in bold.

To further assess the broad applicability of Hurst-exponent-based regime detection, Table 11 presents the annual RMSE from a collective perspective. For all RNN and GNN models, the average RMSE values under both Hurst regime detection and periodic changes were calculated. The results show that, in certain periods, periodic retraining slightly outperformed regime-based retraining, which may indicate that the advantages of regime detection are less clear in market environments where fluctuations are not extreme or during specific phases. Nevertheless, in general, the application of regime detection resulted in better performance. This suggests that the Hurst-exponent-based regime detection method effectively captured market phase changes, regardless of the predictive model used.

Table 11.

RMSE comparison: Hurst-exponent-based regime detection vs. Periodic changes; best results are highlighted in bold.

Finally, the effectiveness of GNNs considering causality between countries was examined. Table 12 compares the annual RMSE averages of GNN models and RNN models. In every year, the GNNs significantly outperformed the RNNs, demonstrating that GNNs are better at capturing the structural information of financial markets.

Table 12.

RMSE of GNN and RNN; best results are highlighted in bold.

5. Discussion and Conclusions

This paper introduces a hybrid model, H-ETE-GNN, which combines the ETE to estimate nonlinear inter-asset causality with GNNs, while dynamically reflecting market regime changes using the Hurst exponent. The primary contributions of this study are clearly outlined as follows: First, a framework is developed that effectively analyzes data structures reflecting inter-asset causality through an adjacency matrix composed of ETE. By utilizing this approach, GNNs can interpret the network structure of financial markets and systematically analyze the impact of interactions between nodes on prediction performance, unlike traditional RNN models based on sequential learning. Second, the study introduces a strategy that monitors the long-term dependency characteristics of financial markets using the Hurst exponent. By detecting regime changes based on this, the model dynamically retrains the ETE graph and parameters, demonstrating the potential for a prediction system that adapts flexibly to structural market fluctuations. Third, the effectiveness of the proposed H-ETE-GNN was validated by analyzing 19 years of data from 10 national ETFs. When compared to various benchmark models, the H-ETE-GNN showed superior performance in volatility forecasting, confirming its applicability. In addition to these contributions, the novelty of our work lies in the comprehensive comparison against benchmark models. In contrast to prior works that relied on linear causality measures, such as Granger causality or Pearson correlation, our approach highlighted the superior ability of the GNN model based on ETE to capture nonlinear and directional causality. Moreover, the incorporation of Hurst-exponent-based regime change detection adds significant value, enhancing the model’s adaptability to shifting market dynamics.

In this study, a series of benchmark experiments revealed that the GNN model based on ETE outperformed those based on TE, Granger causality, and Pearson correlation. This highlights that ETE’s ability to effectively capture nonlinear information flow contributes to improved prediction performance. Furthermore, when compared to RNN-based models under the same conditions, the GNN-based models, which reflected structural interactions between assets, generally demonstrated superior performance. Additionally, the strategy incorporating Hurst-exponent-based regime change detection outperformed Periodic retraining, underscoring the value of adapting models to long-term memory dependencies in the market.

The proposed H-ETE-GNN balances accuracy and computational efficiency by avoiding unnecessary retraining during stable market trends and retraining only at regime change points. This adaptive mechanism allows for more efficient deployment in real-world systems where computational resources are limited and market dynamics are highly variable. However, during periods of rapid market collapse, such as the 2008 financial crisis or the 2020 COVID-19 pandemic, the model’s performance temporarily deteriorated. This suggests that the current modeling approach, while effective under typical conditions, has limitations in capturing abrupt and extreme market behaviors. This can be attributed to the fact that the Hurst exponent operates under a monofractal assumption, which may not fully capture the asymmetric and complex nature of the market structure. Additionally, while ETE improves causal inference compared to traditional TE, it has limitations in detecting extreme, localized nonlinear interactions, potentially missing crucial regime changes. These findings imply that, although H-ETE-GNN offers a promising solution for volatility forecasting in complex markets, its current structure may need further enhancement to deal with sudden and extreme market fluctuations and rapidly changing dependency structures. Thus, future research should focus on developing algorithms that can sensitively incorporate event significance or extreme probabilities to enhance causal inference stability during extreme market conditions. Recent studies have suggested that asymmetric multifractal Hurst exponents positively impact volatility forecasting [4], and the potential use of Rényi-entropy-based Transfer Entropy to better capture key information flow has also been explored [63].
Building on these advancements, future work could focus on hybridizing H-ETE-GNN with asymmetric multifractal analysis and Rényi transfer entropy, thereby increasing its robustness and adaptability across diverse market regimes, including crises. Another promising future direction involves incorporating high-frequency financial data to estimate realized volatility more accurately. While our current model relies on daily-return-based volatility estimates, applying ETE and GNN techniques to realized volatility derived from intraday data may improve responsiveness and granularity, particularly during volatile or rapidly evolving market conditions. Additionally, future research could explore integrating past volatility values directly into the model architecture. While our current GNN framework is designed around return-based node features, incorporating volatility information may enhance prediction performance if implemented carefully. To avoid potential overfitting due to increased input dimensionality, a hybrid approach such as LSTM-GNN could be adopted to jointly capture temporal and cross-sectional dependencies. Such an extension lies beyond the scope of the present study but offers a promising avenue for future work.

Author Contributions

Conceptualization, P.C.; methodology, S.L.; software, S.L.; validation, S.L.; formal analysis, P.C.; investigation, P.C.; resources, P.C.; data curation, S.L.; writing—original draft preparation, P.C. and S.L.; writing—review and editing, P.C. and S.L.; visualization, S.L.; supervision, P.C.; project administration, P.C.; funding acquisition, P.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| GNN | graph neural network |
| ETE | effective transfer entropy |
| TE | transfer entropy |
| RNN | recurrent neural network |
| LSTM | long short-term memory |
| GRU | gated recurrent unit |
| ETF | exchange-traded fund |
| GCN | graph convolutional network |
| MI | mutual information |
| RTE | randomized transfer entropy |
| MSE | mean squared error |
| RMSE | root mean squared error |
| MAE | mean absolute error |
| MAPE | mean absolute percentage error |
| MDD | maximum drawdown |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).