1. Introduction

Complex physical systems often exhibit nonlocality, memory effects, and power-law dynamics, which traditional integer-order differential equations are inherently limited in describing. Fractional Partial Differential Equations (fPDEs) introduce non-integer-order derivative operators and thereby naturally capture long-range temporal memory and spatial nonlocality. They have thus found broad application across scientific and engineering domains.

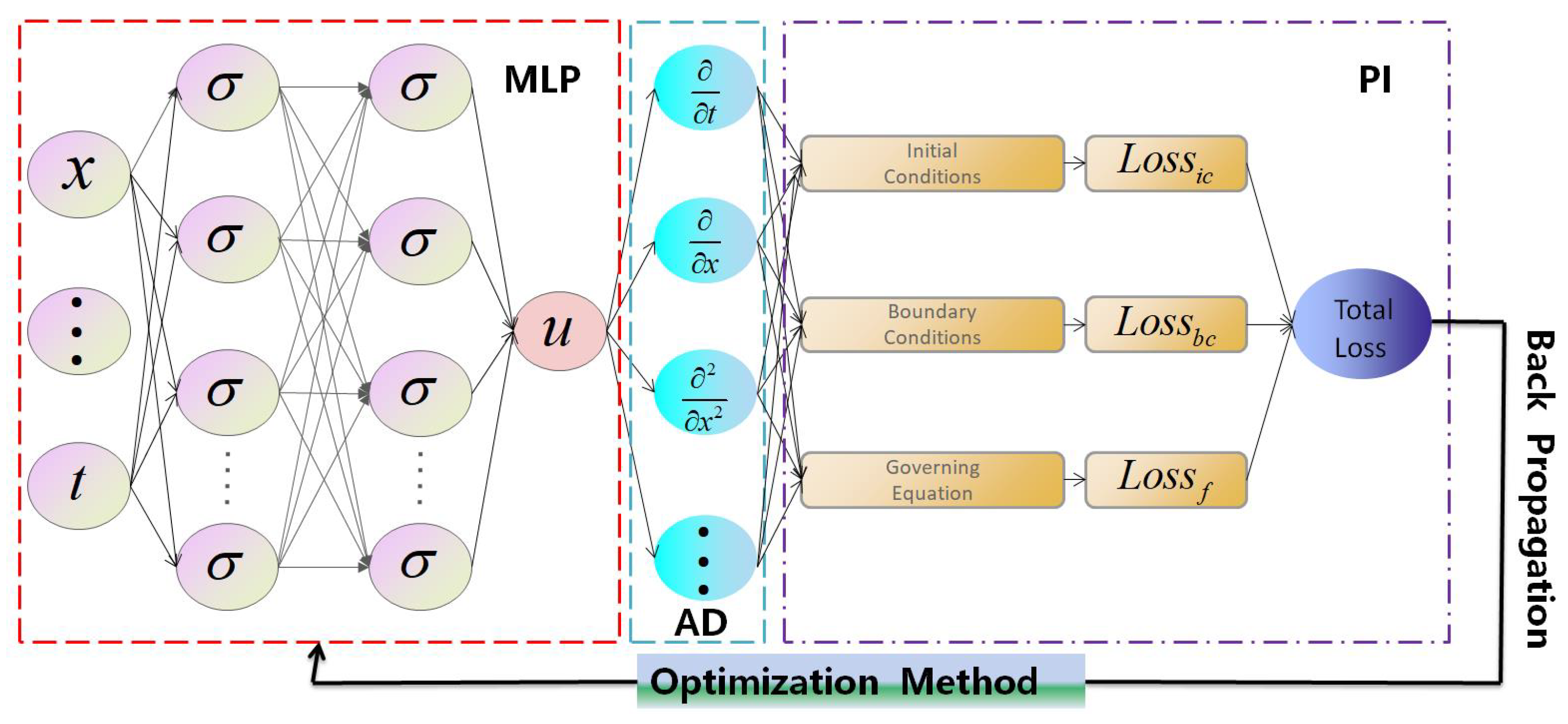

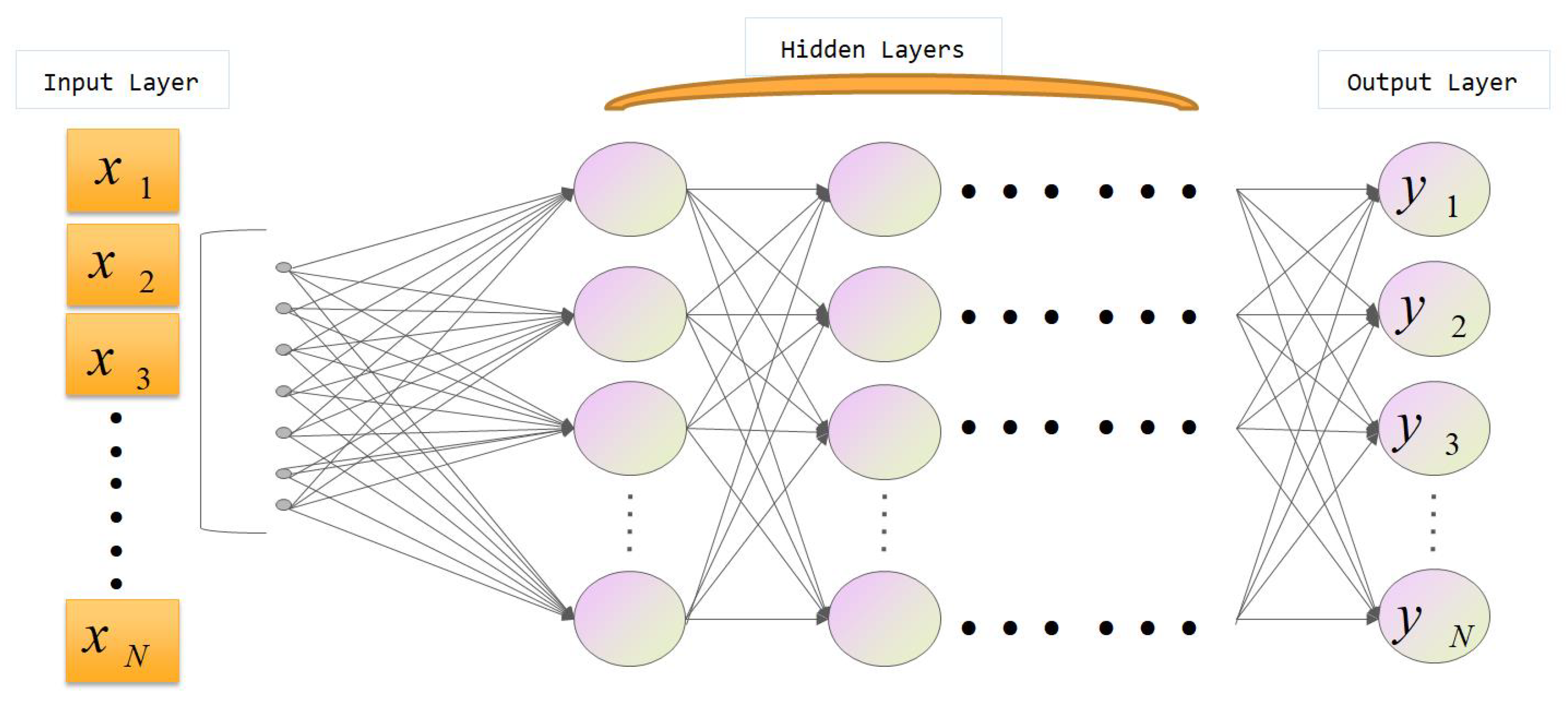

Solving fPDEs is more challenging than solving their integer-order counterparts, primarily because the nonlocal operators lead to high computational complexity and numerical instability. These issues have, to some extent, hindered the broader application of fPDEs. Although traditional Physics-Informed Neural Networks (PINNs) [1,2] have demonstrated strong expressive power in function approximation, their efficacy relies on the classical chain rule, which can be applied efficiently during forward and backward propagation in neural networks but is no longer valid in fractional calculus. This necessitates the development of novel physics-informed neural network architectures specifically tailored to fPDEs. Pang et al. [3] first extended the PINNs framework to fPDEs by proposing fractional Physics-Informed Neural Networks (fPINNs). This approach employs the Grünwald–Letnikov discretization and has successfully solved both forward and inverse problems for convection–diffusion equations. As an inherently data-driven method, fPINNs do not rely on fixed meshes or discrete nodes and thus offer flexibility in handling complex geometrical domains. In contrast to the extensive research on PINNs, efforts dedicated to improving and extending fPINNs remain relatively limited. Zhang et al. [4] proposed an Adaptive Weighted Auxiliary Output fPINN (AWAO–fPINNs), which enhances training stability by introducing auxiliary variables and a dynamic weighting mechanism. Yan et al. [5] introduced Laplace transform-based fPINNs (Laplace fPINNs), which avoid the large number of auxiliary points required by conventional methods and simplify the construction of the loss function. Furthermore, Guo et al. [6] developed Monte Carlo fPINNs (MC fPINNs), which employ a Monte Carlo sampling strategy to provide an unbiased estimate of fractional derivatives from the outputs of deep neural networks. These estimates are then used to construct soft penalty terms in the physical constraint loss, thereby reducing numerical bias.
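To make the Grünwald–Letnikov discretization mentioned above concrete, the following is a minimal sketch of the standard first-order formula on a uniform grid. It is not the implementation used in [3]; the function names `gl_weights` and `gl_derivative` and the test function $t^2$ are hypothetical choices for illustration. The formula approximates the Riemann–Liouville derivative, which coincides with the Caputo derivative of order between 0 and 1 when the function vanishes at the initial time.

```python
import math


def gl_weights(alpha, n):
    """Grünwald–Letnikov weights w_k = (-1)^k * binom(alpha, k) for k = 0..n."""
    w = [1.0]
    for k in range(1, n + 1):
        # Recurrence: w_k = w_{k-1} * (1 - (alpha + 1) / k)
        w.append(w[-1] * (1.0 - (alpha + 1.0) / k))
    return w


def gl_derivative(f, alpha, t, n):
    """First-order GL approximation of the order-alpha derivative of f at t.

    Uses n uniform steps of size tau = t / n on [0, t]:
        D^alpha f(t) ~ tau^(-alpha) * sum_{k=0}^{n} w_k * f(t - k * tau)
    """
    tau = t / n
    w = gl_weights(alpha, n)
    return sum(w[k] * f(t - k * tau) for k in range(n + 1)) / tau ** alpha


if __name__ == "__main__":
    alpha, t = 0.5, 1.0
    # Exact fractional derivative of t^2 (it vanishes at t = 0).
    exact = 2.0 * t ** (2.0 - alpha) / math.gamma(3.0 - alpha)
    approx = gl_derivative(lambda s: s * s, alpha, t, 1000)
    print("absolute error:", abs(approx - exact))
```

The sum runs over the entire history of the function on [0, t], which illustrates why nonlocal operators are more expensive to evaluate than integer-order derivatives.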

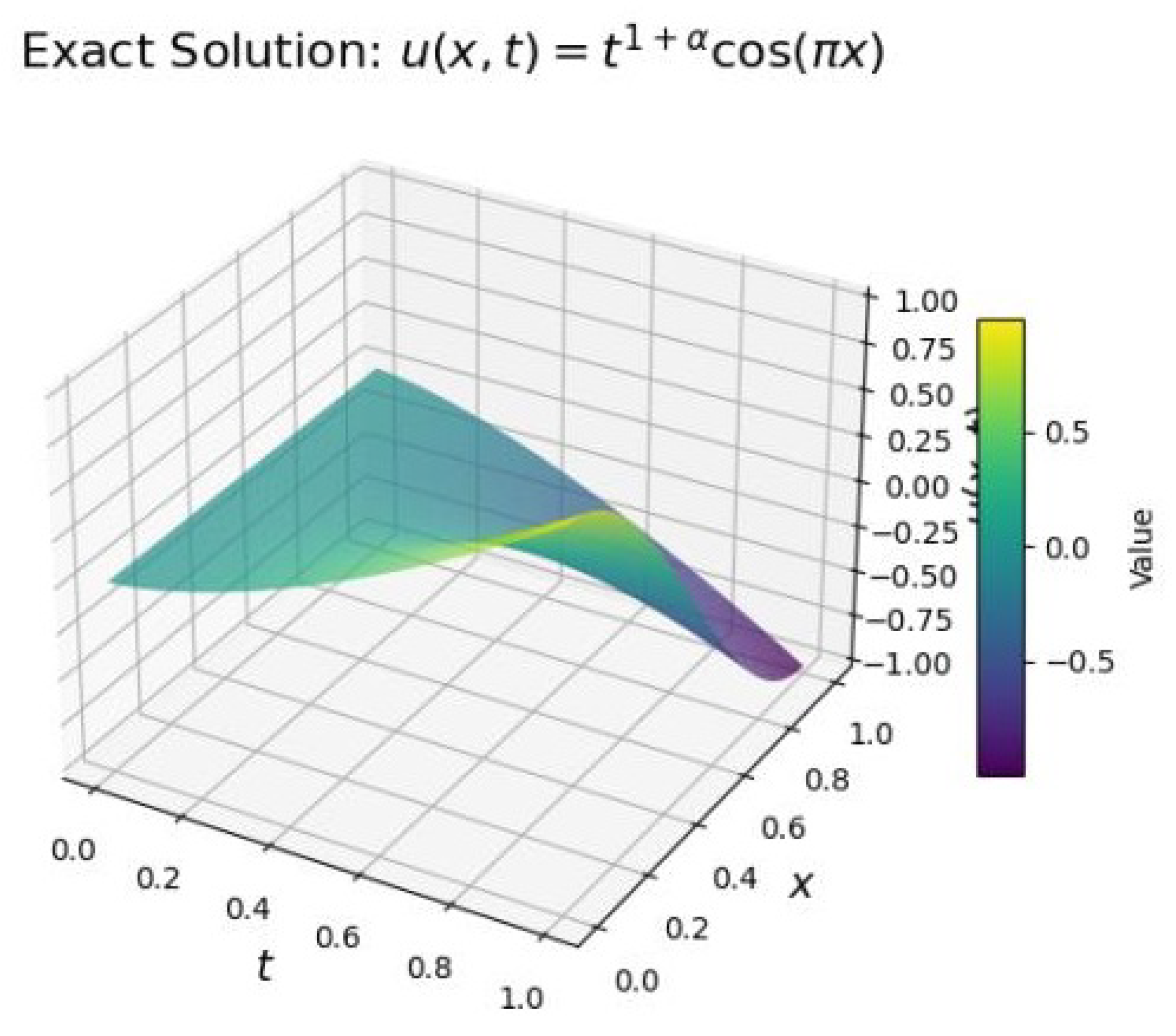

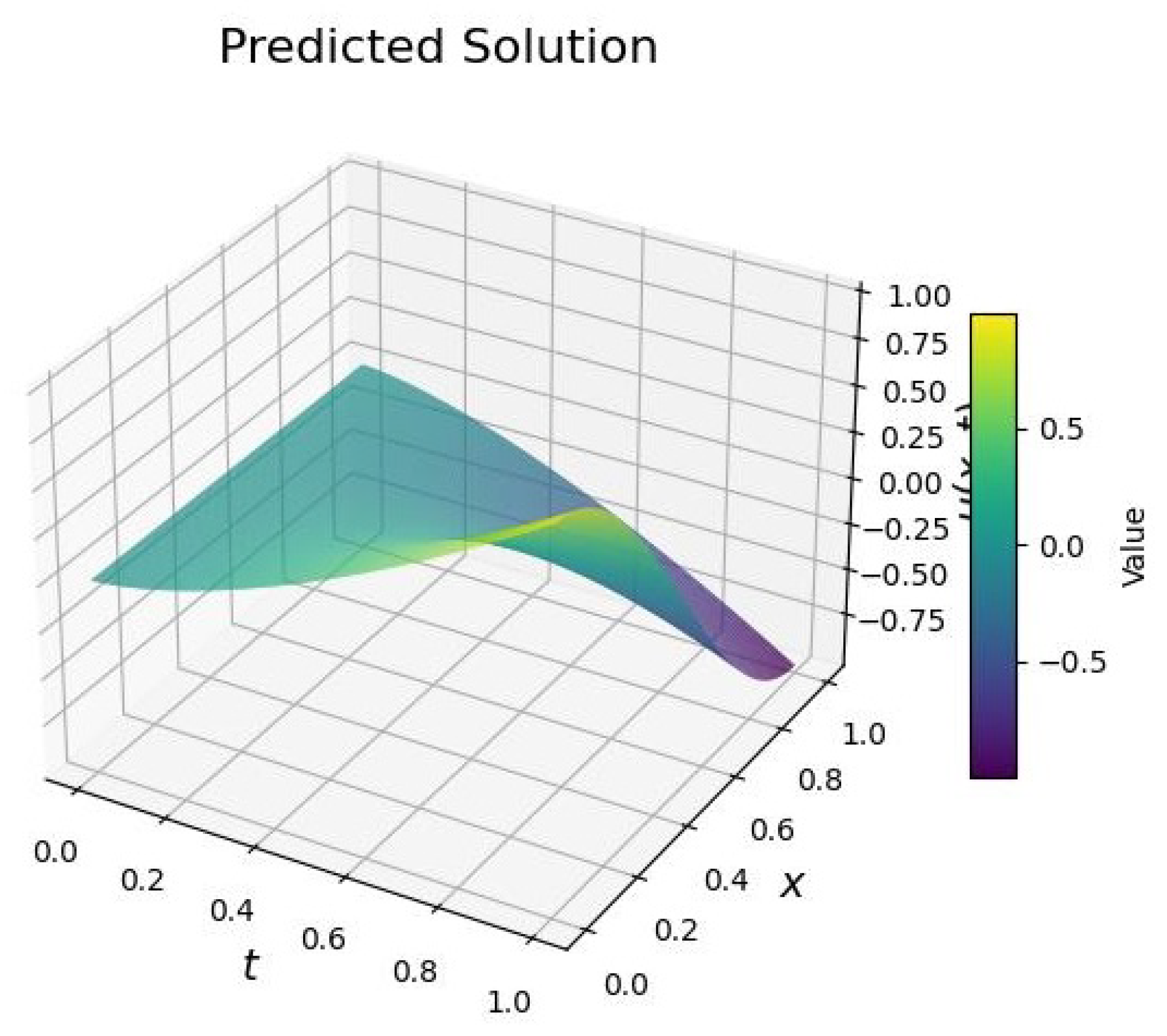

In this paper, we investigate a framework based on fPINNs, focusing on the fractional Allen–Cahn (f–AC) equation and the fractional Cahn–Hilliard (f–CH) equation. Both equations arise as gradient flows of the Ginzburg–Landau energy functional given in Equation (1). Taking the $L^2$ gradient flow and choosing the Caputo fractional derivative as the time derivative yields the f–AC equation, Equation (2). Taking the $H^{-1}$ gradient flow instead yields the f–CH equation, Equation (3). In these equations, the unknown is the phase variable, and the model parameter is the interface width parameter. In addition, consistent boundary conditions (such as periodic or homogeneous Neumann boundary conditions) and initial functions must be prescribed to close the system. The f–AC and f–CH equations extend their classical counterparts by replacing the time derivative with a fractional-order derivative (e.g., the Caputo derivative). This enables the effective characterization of nonlocal dynamical behaviors such as “memory effects” and “long-range interactions” in the system [7].
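For orientation, one commonly used form of the Ginzburg–Landau energy and of the two time-fractional gradient flows is sketched below. The symbols $u$, $\varepsilon$, $F$, $f$, $\Omega$, and ${}_{0}^{C}D_{t}^{\alpha}$, the choice of double-well potential, and the scaling of the energy are all assumptions made for illustration and may differ from Equations (1)–(3).

```latex
% Assumed notation: u = phase variable, \varepsilon = interface width,
% F = double-well potential, f = F'.
E(u) = \int_{\Omega} \left( \frac{\varepsilon^{2}}{2}\,|\nabla u|^{2} + F(u) \right) \mathrm{d}x,
\qquad F(u) = \frac{1}{4}\,(u^{2}-1)^{2}, \quad f(u) = F'(u) = u^{3} - u .

% L^2 gradient flow with a Caputo time derivative (f--AC type):
{}_{0}^{C}D_{t}^{\alpha} u = -\frac{\delta E}{\delta u} = \varepsilon^{2}\,\Delta u - f(u) .

% H^{-1} gradient flow with a Caputo time derivative (f--CH type):
{}_{0}^{C}D_{t}^{\alpha} u = \Delta\,\frac{\delta E}{\delta u}
  = \Delta\left( -\varepsilon^{2}\,\Delta u + f(u) \right) .
```

In this form, the variational derivative is $\delta E/\delta u = -\varepsilon^{2}\Delta u + f(u)$, so the second flow contains a fourth-order spatial operator while the first contains only a second-order one.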

A variety of accurate and stable discretization methods have been proposed for the numerical solution of the f–AC equation, including several time-stepping schemes, convolution quadrature, finite element methods, and spectral methods. Du and Yang [7] systematically analyzed the well-posedness and maximum principle of Equation (2) and proposed time discretization schemes, such as weighted convex splitting, for which they established unconditional stability and convergence. Liao and Tang [8] developed a variable-step Crank–Nicolson-type scheme that preserves energy stability and the maximum principle within the Riemann–Liouville derivative framework, and they were the first to establish a discrete transformation relationship between the Caputo and Riemann–Liouville fractional derivatives. Zhang and Gao [9] constructed a high-order finite difference scheme using the shifted fractional trapezoidal rule, which preserves energy dissipation and the maximum principle.

The numerical solution of the f–CH equation poses significantly greater challenges than that of the f–AC equation, owing to the coupling of the fourth-order spatial derivative with the fractional-order temporal derivative. Liu et al. [10] proposed an efficient algorithm combining finite differences with the Fourier spectral method, effectively mitigating the high memory consumption and computational complexity caused by the nonlocality of the fractional operator. They further observed that, under certain conditions, a larger fractional order leads to faster energy dissipation in the system. Zhang et al. [11] employed the discrete energy method to establish the stability and convergence of their proposed finite difference scheme in a discrete norm. Ran et al. [12] discretized the time-fractional derivative, treated the nonlinear term via a second-order convex splitting scheme, and applied a pseudo-spectral method for the spatial discretization. By incorporating an adaptive time-stepping strategy, this approach significantly reduces computational cost while preserving the energy stability of the system.

In the application of deep learning techniques to solve phase field models, Wight and Zhao [

13] proposed a modified PINN for solving the Allen–Cahn and Cahn–Hilliard equations. However, solving the fractional-order counterparts of Equations (

2) and (

3) is even more challenging compared to their integer-order versions. The nonlocal nature of fractional differential operators introduces full temporal or spatial domain coupling in numerical computations, substantially increasing the complexity of algorithm design and computational cost. fPINNs has provided an effective approach for solving the f–AC equation and the f–CH equation by incorporating fractional differential operators. For instance, Wang et al. [

14] proposed a hybrid spectral-enhanced fPINNs, which integrates fPINNs with a spectral collocation method to solve the time-fractional phase field model. By leveraging the high approximation accuracy of the spectral collocation method, this approach significantly reduces the number of approximation points required for discretizing the fractional operator, thereby lowering computational complexity and improving training efficiency. Compared to standard fPINNs, this framework demonstrates enhanced function approximation capability and higher numerical accuracy while maintaining physical consistency. Additionally, Wang et al. [

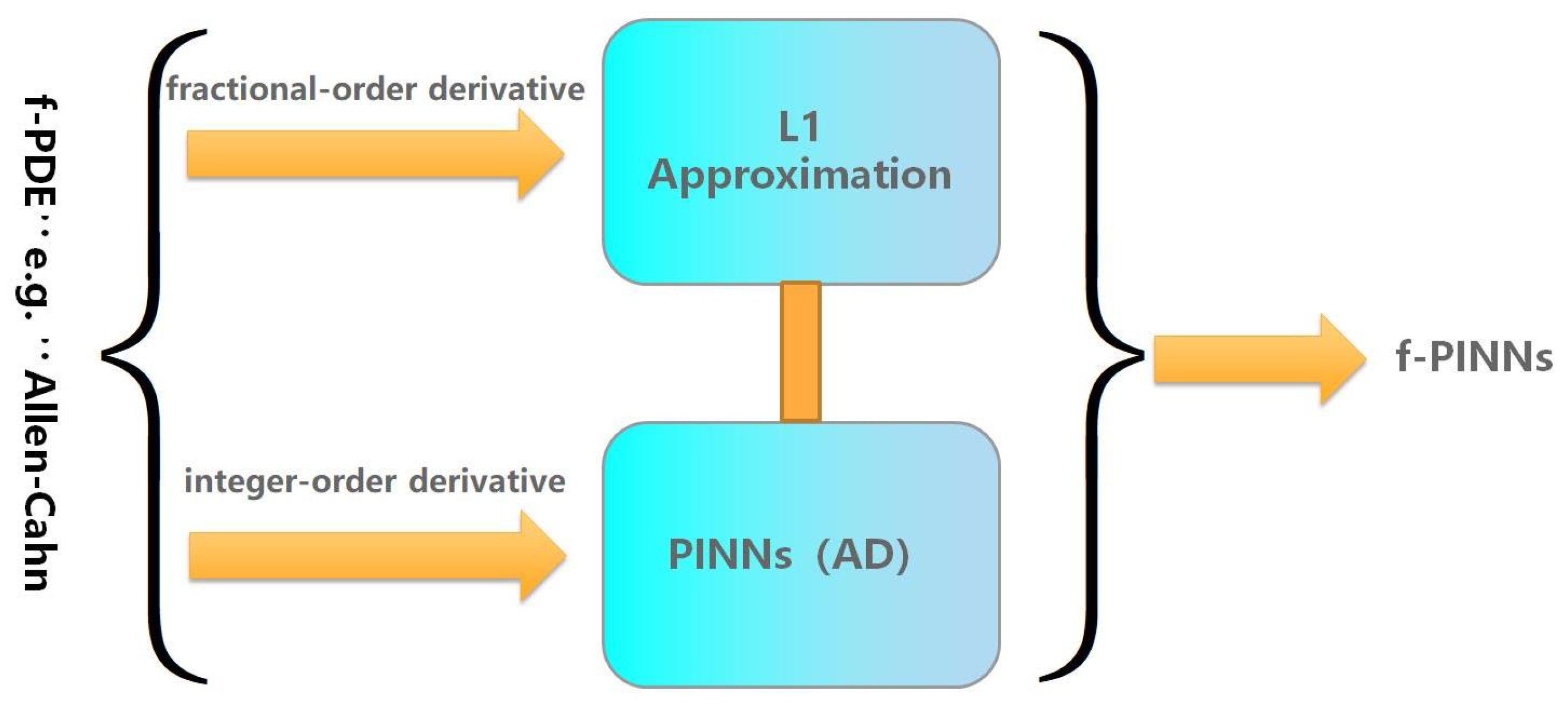

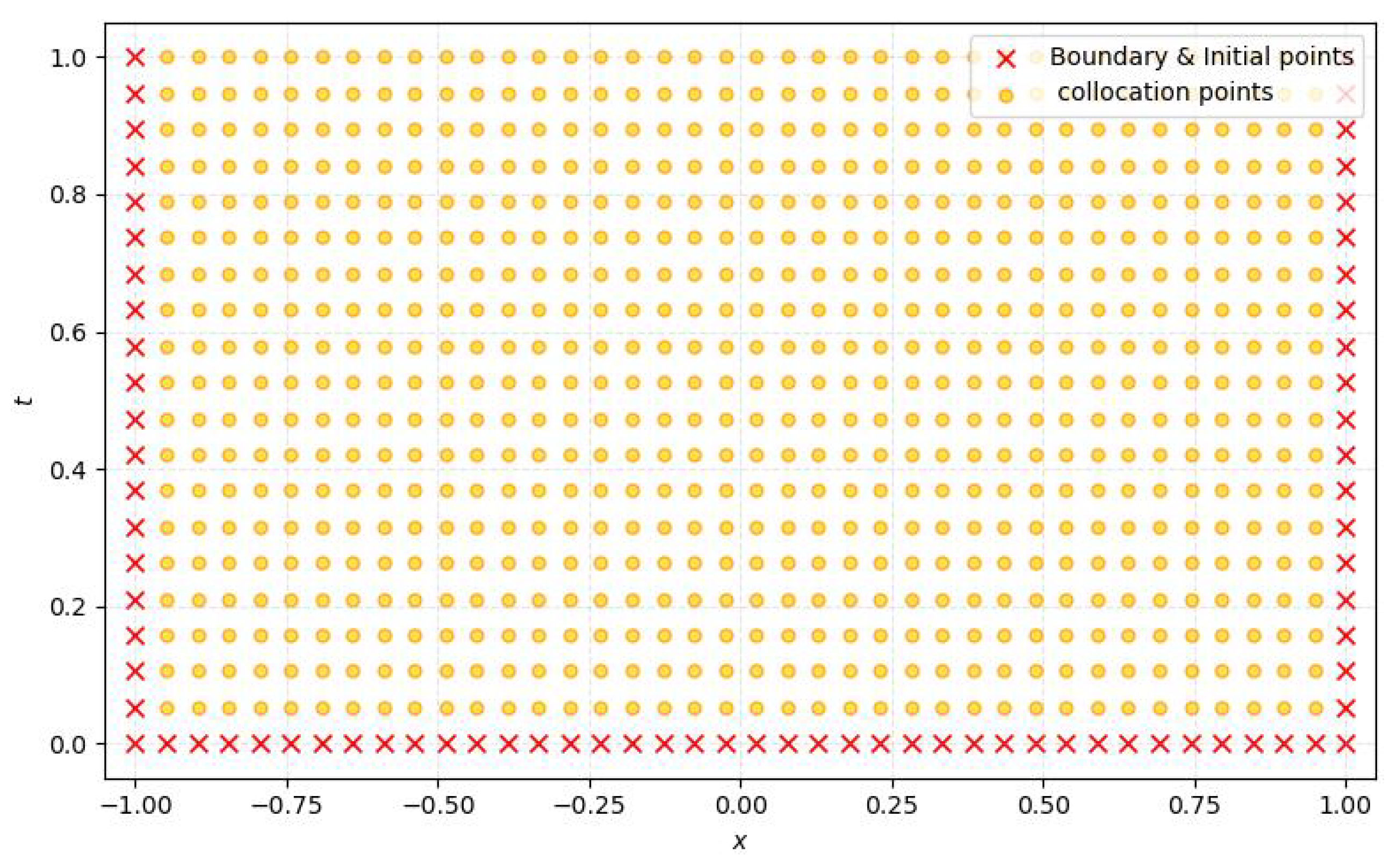

15] introduced a high-accuracy neural solver based on Hermite functions, which employs Hermite interpolation techniques to construct a high-order explicit approximation scheme for the fractional derivative. By constructing trial functions that inherently satisfy initial conditions, this method automatically embeds the initial conditions into the solution, simplifying the design of the loss function and reducing the complexity of the solution process. These studies indicate that deep neural network-based methods exhibit strong potential in solving fractional phase field models, offering a new, efficient, and flexible numerical paradigm for addressing challenges associated with nonlocality, high stiffness, and complex geometries. Specifically, we construct fPINNs based on the

discretization scheme to solve the corresponding fractional-order Equations (

2) and (

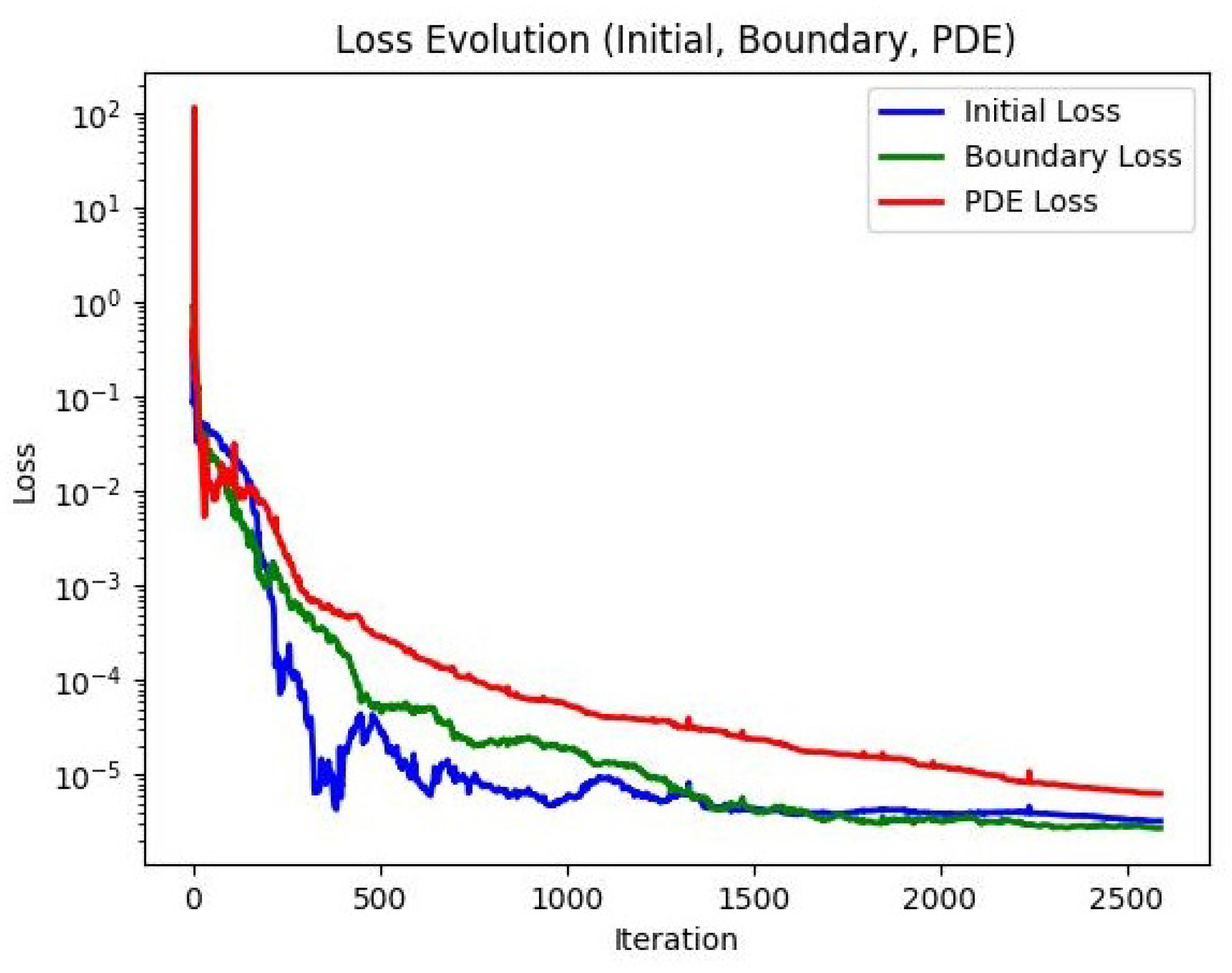

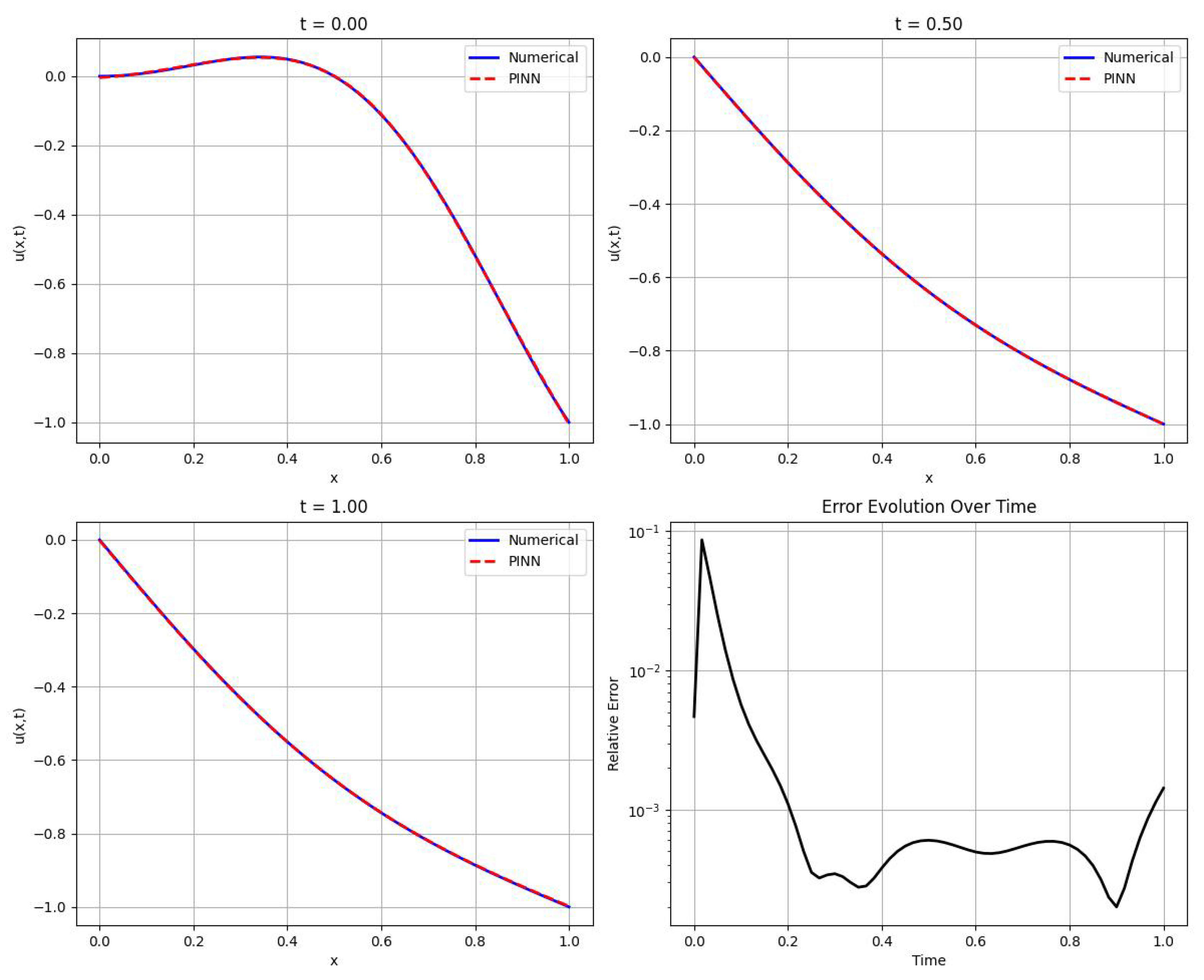

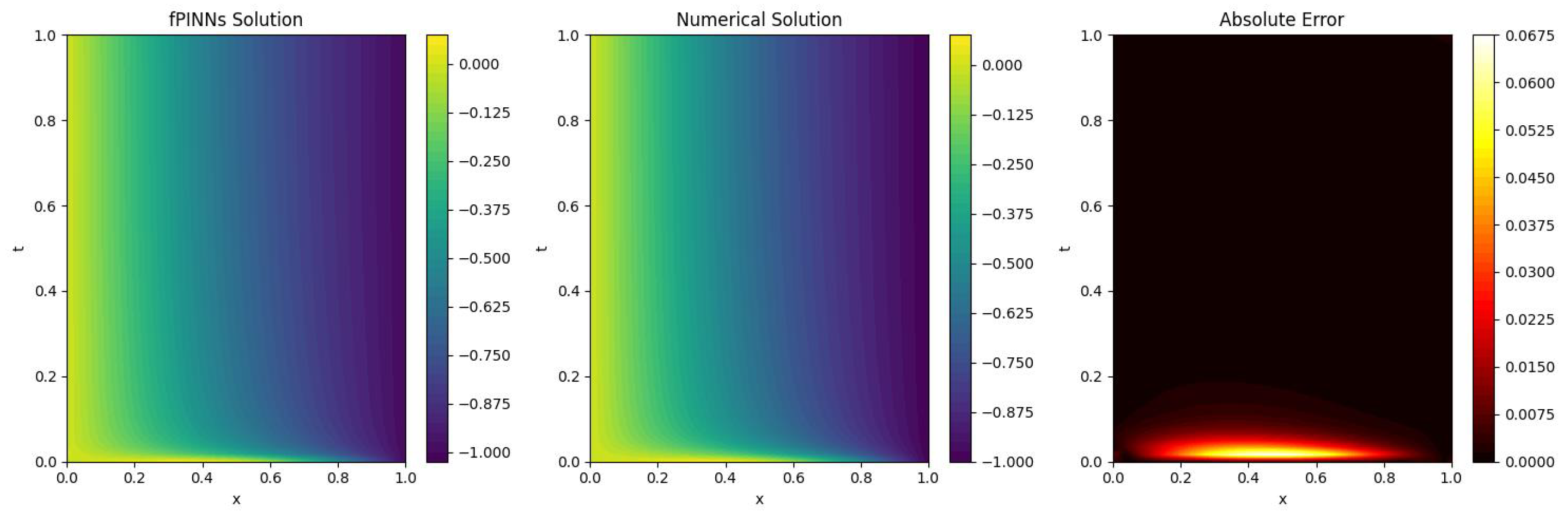

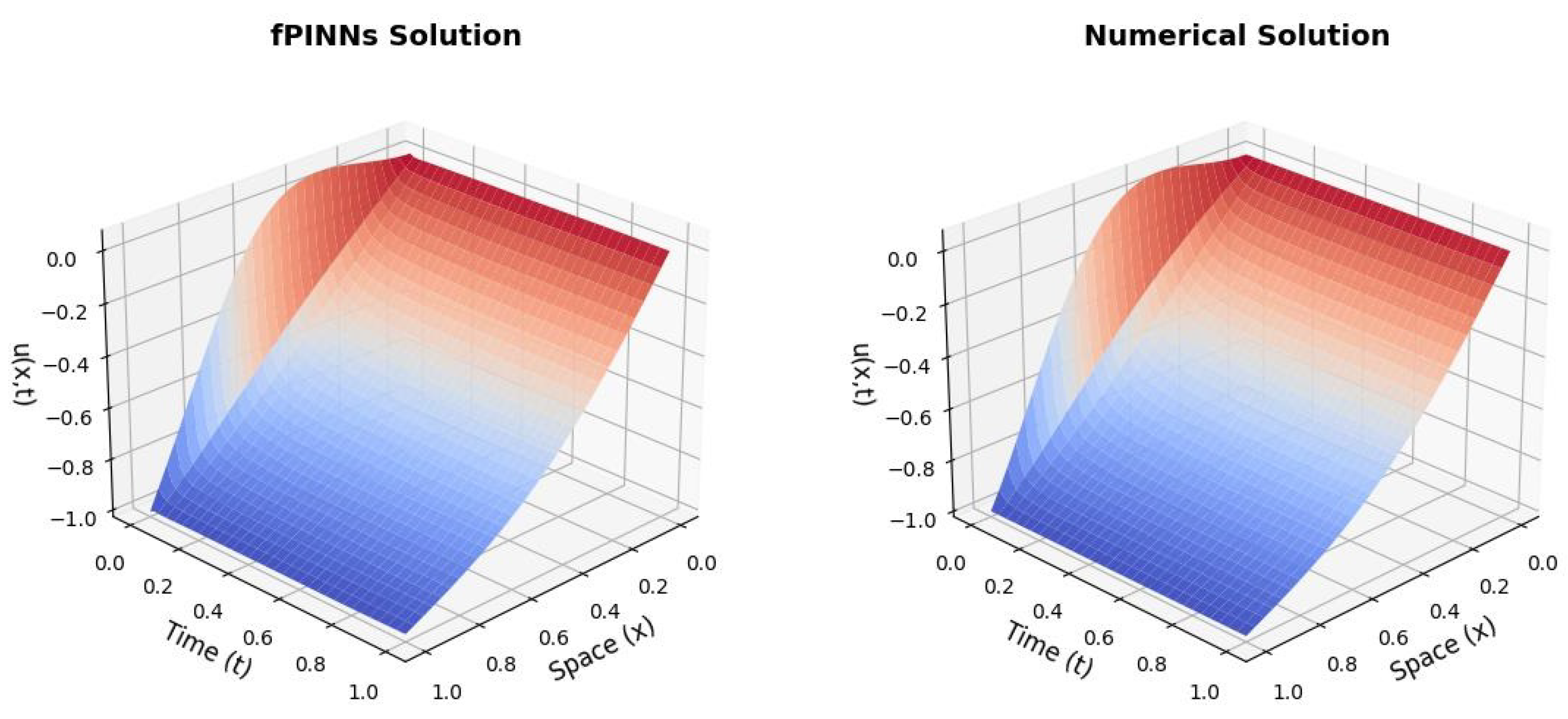

3), aiming to explore the applicability and effectiveness. Besides the standard fPINNs framework, we also consider three optimization strategies: adaptive non-uniform sampling, adaptive exponential moving average ratio loss weighting, and two-stage adaptive quasi-optimization to improve the accuracy of the original fPINNs. Correspondingly, some new improved fPINNs algorithms are proposed for solving Equations (

2) and (

3), and numerical examples also exhibit the efficiency and high accuracy of these algorithms.

The remainder of this paper is organized as follows. In

Section 2, we present the definition of the Caputo derivative and its interpolation approximation. In

Section 3, the fPINNs framework based on the

approximation for the f–AC equation is introduced. Upon the fPINNs framework, in

Section 4, we propose three improvement strategies and develop some new improved fPINNs algorithms by combining these strategies, namely f–A–PINNs, f–A–A–PINNs, and f–A–T–PINNs. In

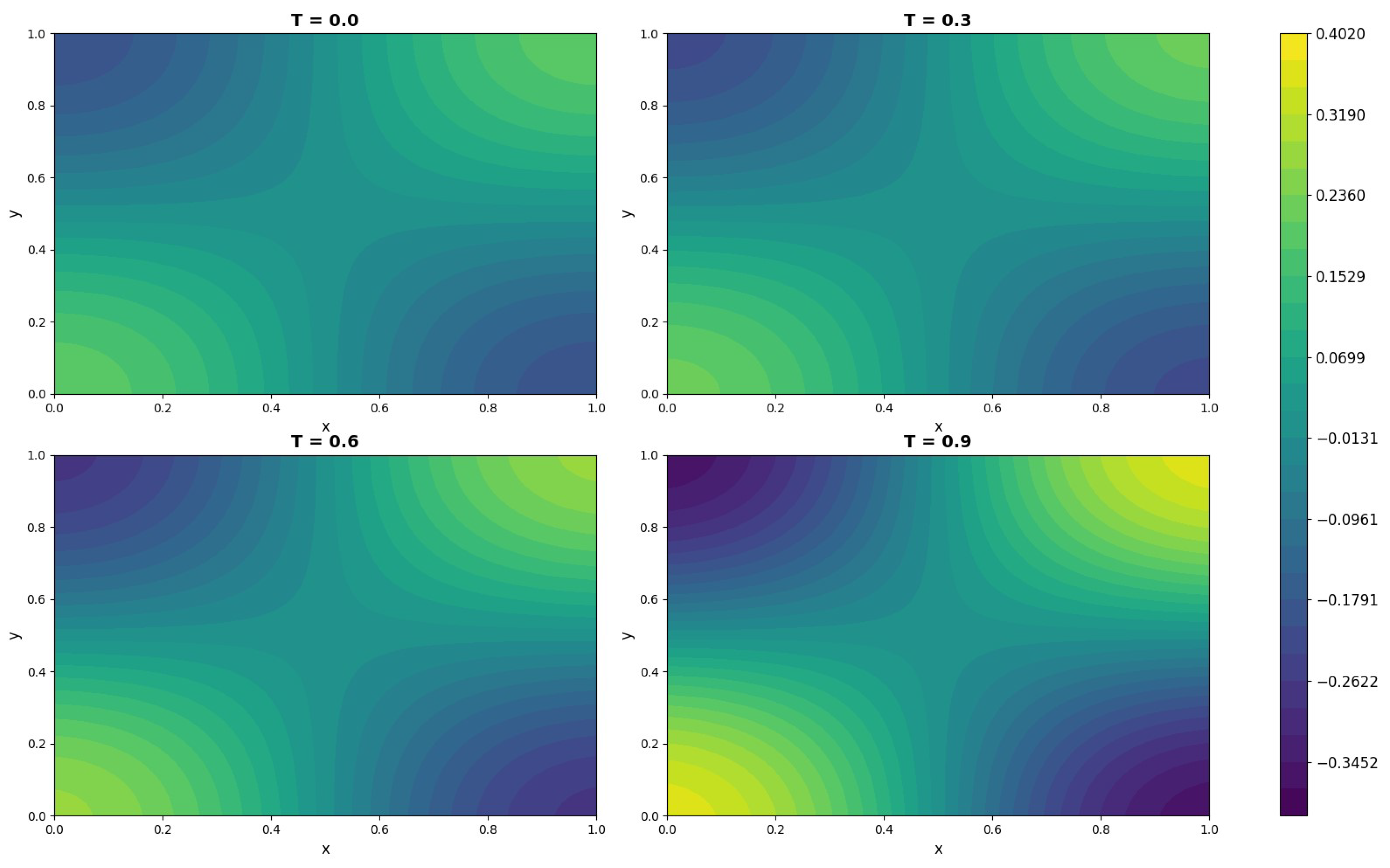

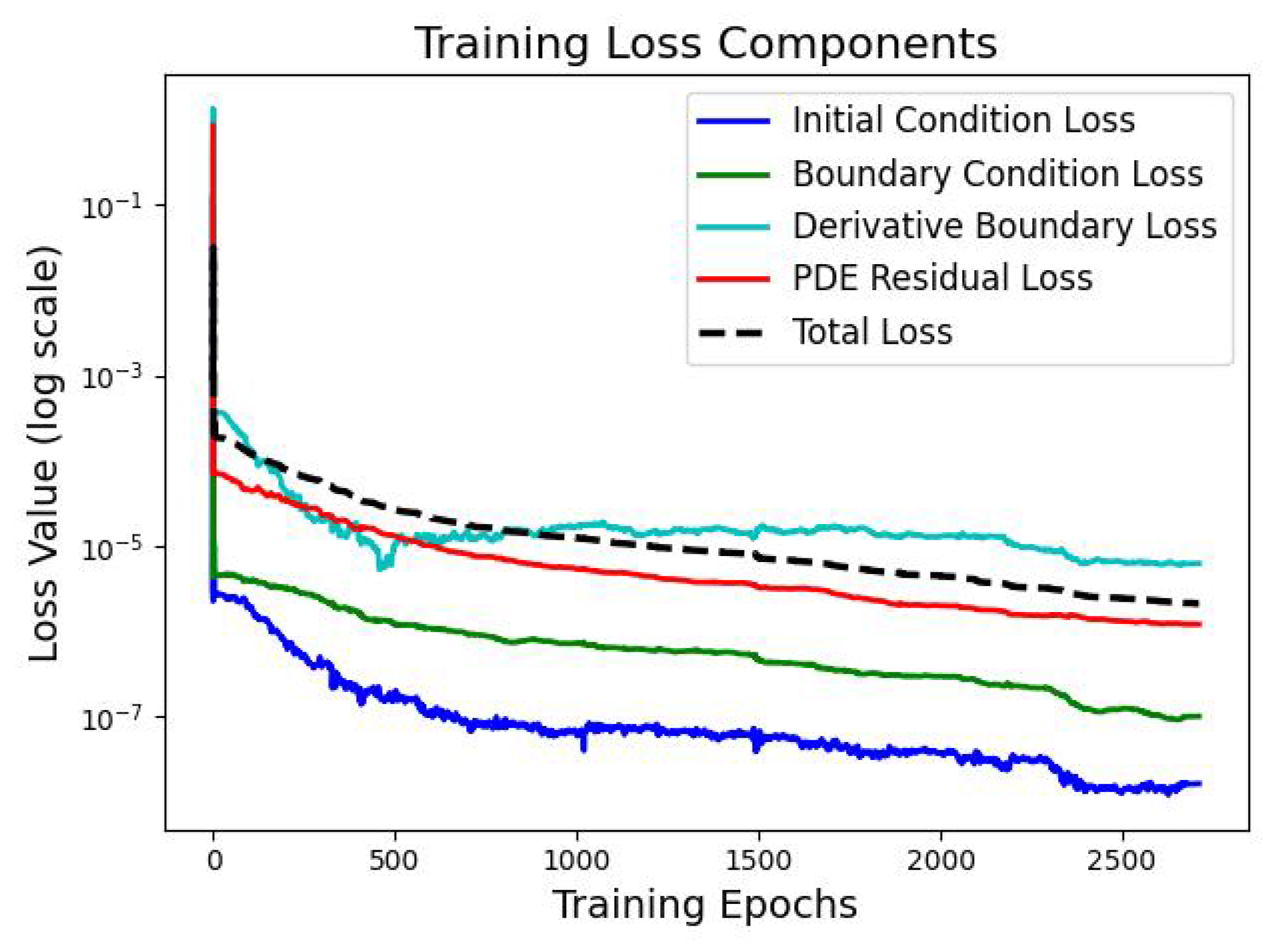

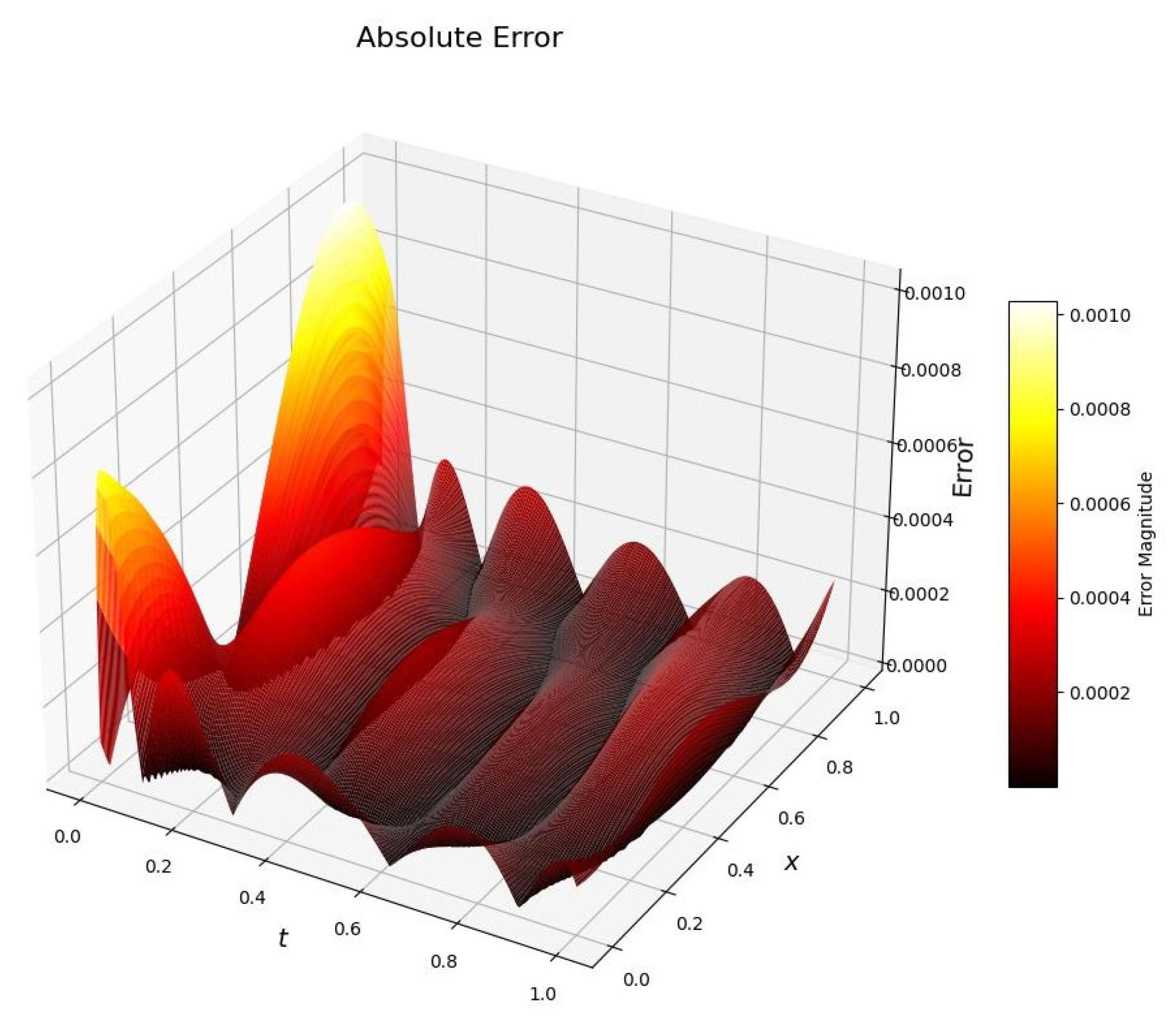

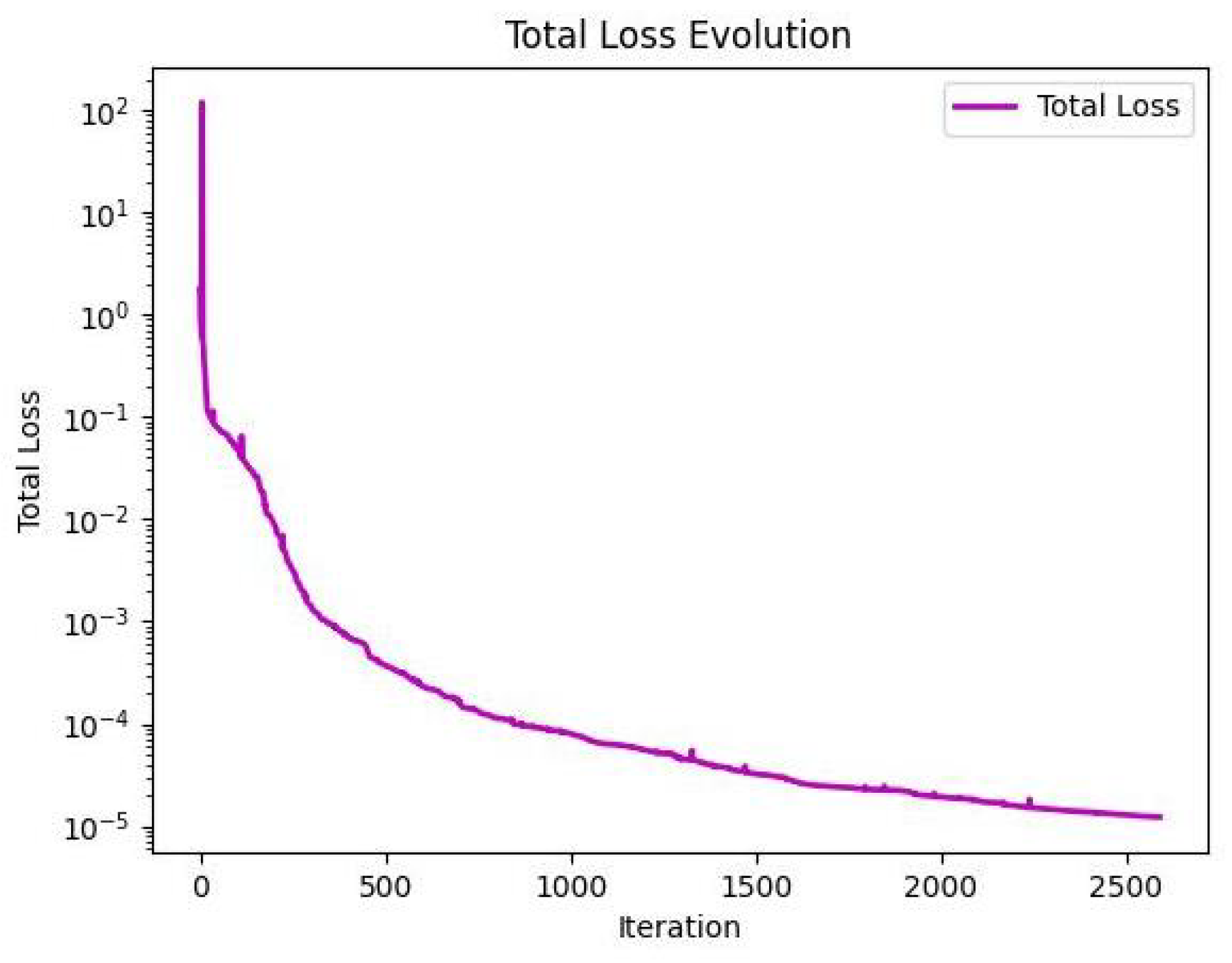

Section 5, numerical experiments and parameter stability tests are conducted for the f-AC equation using both the original fPINNs and the proposed improved algorithms. Furthermore, the most effective algorithm, f–A–T–PINNs, is applied to solve the 2D f-AC equation and the f–CH equation.

2. Caputo Derivative and Its Interpolation Approximation

Because the Caputo derivative is nonlocal and fractional-order derivatives are relatively complicated to compute numerically, robust and accurate numerical methods for solving fPDEs are highly desirable. The Caputo derivative is particularly advantageous for initial and boundary value problems arising in physics and engineering and is therefore widely employed. Accordingly, in this paper we adopt the Caputo fractional derivative to study the solution of fPDEs. In this section, we briefly introduce the definition of the Caputo fractional derivative and an interpolation-based approximation scheme for it.

Definition 1 ([16]). The Caputo derivative of order $\alpha > 0$ of a given function $f(t)$ is defined as

$${}_{0}^{C}D_{t}^{\alpha} f(t) = \frac{1}{\Gamma(n-\alpha)} \int_{0}^{t} \frac{f^{(n)}(s)}{(t-s)^{\alpha-n+1}}\,\mathrm{d}s,$$

where $n$ is a positive integer such that $n-1 < \alpha < n$.

The interpolation approximation of the fractional derivative is a crucial step in the finite difference method, and it provides a promising approach for solving fPDEs using deep neural networks (DNNs). In the following, we primarily introduce the interpolation approximation scheme for the Caputo fractional derivative of order $\alpha \in (0,1)$.
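As a quick check of the definition, the following worked example evaluates the Caputo derivative of a quadratic for an order between 0 and 1, so that $n = 1$ in the standard definition. The notation ${}_{0}^{C}D_{t}^{\alpha}$ and the test function $f(t) = t^{2}$ are chosen here for illustration only.

```latex
% Worked example, assuming 0 < \alpha < 1 (so n = 1) and f(t) = t^2.
\begin{aligned}
{}_{0}^{C}D_{t}^{\alpha}\, t^{2}
  &= \frac{1}{\Gamma(1-\alpha)} \int_{0}^{t} \frac{2s}{(t-s)^{\alpha}}\,\mathrm{d}s \\
  &= \frac{2\,t^{2-\alpha}}{\Gamma(1-\alpha)} \int_{0}^{1} r\,(1-r)^{-\alpha}\,\mathrm{d}r
     \qquad (s = t r) \\
  &= \frac{2\,t^{2-\alpha}}{\Gamma(1-\alpha)} \cdot
     \frac{\Gamma(2)\,\Gamma(1-\alpha)}{\Gamma(3-\alpha)}
   = \frac{2}{\Gamma(3-\alpha)}\, t^{2-\alpha} .
\end{aligned}
```

The middle integral is the Beta function $B(2, 1-\alpha)$. A closed form of this kind is convenient as a reference value when testing numerical approximations of the Caputo derivative.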

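For the Caputo derivative of order $\alpha \in (0,1)$, the most commonly used method is the $L1$ approximation, which is based on piecewise linear interpolation. Let $N$ be a positive integer and define the time step and the grid points accordingly. At each grid point, the integral in the definition of the Caputo derivative splits into a sum of integrals over the subintervals of the grid, which gives Equation (7). Performing linear interpolation of the function on each subinterval, we obtain an interpolation function together with an error function that involves the second derivative of the function at some point inside the subinterval.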
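The construction described above can be sketched in commonly used notation. The symbols $\tau$, $t_k$, $L_{1,k}$, and $\xi_k$, the interval $[0, T]$, and the uniform grid are assumptions made for illustration and need not match the notation of Equation (7).

```latex
% Assumed uniform grid on [0, T]
\tau = \frac{T}{N}, \qquad t_k = k\tau, \quad 0 \le k \le N .

% Caputo derivative (0 < \alpha < 1) at t_n, split over the subintervals
{}_{0}^{C}D_{t}^{\alpha} f(t_n)
  = \frac{1}{\Gamma(1-\alpha)} \sum_{k=1}^{n}
    \int_{t_{k-1}}^{t_k} \frac{f'(s)}{(t_n - s)^{\alpha}}\,\mathrm{d}s .

% Linear interpolant of f on [t_{k-1}, t_k] and its error
L_{1,k}(t) = \frac{t_k - t}{\tau}\, f(t_{k-1}) + \frac{t - t_{k-1}}{\tau}\, f(t_k),
\qquad
f(t) - L_{1,k}(t) = \frac{f''(\xi_k)}{2}\,(t - t_{k-1})(t - t_k),
\quad \xi_k \in (t_{k-1}, t_k) .
```

On each subinterval the derivative of the interpolant is the constant difference quotient $[f(t_k) - f(t_{k-1})]/\tau$, which is what makes the remaining integrals of the kernel computable in closed form.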

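Then, substituting the interpolation function for the original function in Equation (7) and evaluating the resulting integrals exactly, we obtain an approximation formula for the Caputo derivative at the grid points, which is often called the $L1$ formula or $L1$ approximation.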
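A minimal Python sketch of the resulting formula on a uniform grid is given below. It assumes the standard form $\frac{\tau^{-\alpha}}{\Gamma(2-\alpha)} \sum_{k=1}^{n} a_{n-k}\,[f(t_k) - f(t_{k-1})]$ with $a_l = (l+1)^{1-\alpha} - l^{1-\alpha}$. The function name `l1_caputo`, the symbols, and the test function $t^2$ are hypothetical choices for illustration, not taken from the paper.

```python
import math


def l1_caputo(f_vals, alpha, tau):
    """L1 approximation of the Caputo derivative of order alpha in (0, 1).

    f_vals holds [f(t_0), ..., f(t_n)] on a uniform grid t_k = k * tau.
    The return value approximates the derivative at the last point t_n.
    """
    if not 0.0 < alpha < 1.0:
        raise ValueError("alpha must lie in (0, 1)")
    n = len(f_vals) - 1
    if n < 1:
        raise ValueError("need at least two grid values")
    # Coefficients from integrating the kernel exactly on each subinterval:
    # a_l = (l + 1)^(1 - alpha) - l^(1 - alpha), l = 0, ..., n - 1
    a = [(l + 1) ** (1.0 - alpha) - l ** (1.0 - alpha) for l in range(n)]
    # Weighted sum of first differences over the whole history.
    total = sum(a[n - k] * (f_vals[k] - f_vals[k - 1]) for k in range(1, n + 1))
    return total / (tau ** alpha * math.gamma(2.0 - alpha))


if __name__ == "__main__":
    alpha, n_steps, t_end = 0.5, 200, 1.0
    tau = t_end / n_steps
    values = [(k * tau) ** 2 for k in range(n_steps + 1)]
    # Closed-form Caputo derivative of t^2 at t_end.
    exact = 2.0 * t_end ** (2.0 - alpha) / math.gamma(3.0 - alpha)
    print("absolute error:", abs(l1_caputo(values, alpha, tau) - exact))
```

For sufficiently smooth functions the error of this formula is known to decay like $\tau^{2-\alpha}$, and the formula reproduces the exact derivative of linear functions up to rounding, since linear interpolation is then exact.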