1. Introduction

Self-similar sets. A compact non-empty set $A$ in Euclidean space $\mathbb{R}^d$ is called self-similar if it is a finite union of pieces $A_1,\dots,A_m$ which are geometrically similar to $A$ [1]. Using contractive similitudes $f_1,\dots,f_m$ with $A_i = f_i(A)$, Hutchinson (cf. [2,3,4,5]) has characterized these sets as unique solutions of the equation

$$A = f_1(A)\cup f_2(A)\cup\dots\cup f_m(A). \qquad (1)$$

The set $F = \{f_1,\dots,f_m\}$ is called an iterated function system or IFS, and $A$ is the attractor of $F$.

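A contractive similitude $f$ from Euclidean $\mathbb{R}^d$ to itself fulfils

$$|f(x)-f(y)| = r\,|x-y| \quad\text{for all } x,y\in\mathbb{R}^d,$$

where the constant $r$ with $0<r<1$ is called the factor of $f$.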

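We assume that all maps in $F$ have the same factor $r$. In that case, we can write

$$f_i = g^{-1}\circ h_i, \quad i = 1,\dots,m, \qquad (2)$$

where $g^{-1}$ is a contractive linear map and $h_i(x) = s_i x + v_i$ is an isometry of $\mathbb{R}^d$. Here, $s_i$ denotes a linear map and $v_i$ a translation vector. With this notation, Equation (1) is turned into the so-called numeration form

$$gA = h_1(A)\cup h_2(A)\cup\dots\cup h_m(A).$$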

The maps $h_i$ and the matrix of $g$ are often considered as the digits and base of a numeration system. For the decimal system, we have $g = 10$ and $h_i(x) = x + i - 1$ for $i = 1,\dots,10$, and $A = [0,1]$.
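As a small illustration of digits and base, here is a Python sketch (ours, not part of the paper's MATLAB package). It assumes the decimal digits $h_i(x) = x + (i-1)$, $i = 1,\dots,10$, with base $g = 10$, so that $f_i(x) = (x + i - 1)/10$. With exact rational arithmetic, the control points $f_u(0)$ of the pieces of level $k$ turn out to be exactly the $k$-digit decimal fractions $0.u_1\dots u_k$ (digits written as $0,\dots,9$).

```python
from fractions import Fraction
from itertools import product

BASE = 10                  # the base g of the decimal system
TRANSLATIONS = range(10)   # v_i = i - 1 for i = 1, ..., 10, so h_i(x) = x + v_i

def f(v, x):
    """Contractive similitude f_i(x) = g^{-1}(x + v_i), computed exactly."""
    return Fraction(x + v, BASE)

def control_points(level):
    """Control points f_u(0) of all pieces A_u of the given level, in word order."""
    points = []
    for word in product(TRANSLATIONS, repeat=level):
        x = Fraction(0)
        for v in reversed(word):   # f_u = f_{u1} o ... o f_{uk}: apply f_{uk} first
            x = f(v, x)
        points.append(x)
    return points

pts = control_points(2)
print(len(pts), min(pts), max(pts))   # 100 0 99/100
```

The word $u = u_1u_2$ yields $u_1/10 + u_2/100$, so reading a word as a digit string is the same as locating the control point of its piece.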

We can assume that $v_1 = 0$. (Otherwise, replace $v_i$ by $v_i + s_i c - gc$ and consider the translated attractor $A - c$ with $c = f_1(c)$; cf. ([6] Section VI.A).) That is, $f_1(0) = 0$, so that zero is in $A$ and can be taken as a ’control point’ of the attractor. The idea of control points goes back to Thurston [7] and plays an important part in this paper.
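The normalization in the parenthesis can be carried out exactly. The sketch below is our own derivation, not code from the paper: assuming $f_i(x) = g^{-1}(s_i x + v_i)$, the fixed point $c$ of $f_1$ solves $(g - s_1)c = v_1$, and replacing each $v_i$ by $v_i + s_i c - gc$ yields the IFS $x\mapsto f_i(x+c) - c$, whose attractor is $A - c$ and whose first translation vanishes. The function names and the example data are hypothetical.

```python
from fractions import Fraction

def mat_vec(m, v):
    """Product of a 2x2 matrix (list of rows) and a 2-vector."""
    return [m[0][0] * v[0] + m[0][1] * v[1], m[1][0] * v[0] + m[1][1] * v[1]]

def solve2(m, b):
    """Solve the 2x2 linear system m c = b exactly by Cramer's rule."""
    det = m[0][0] * m[1][1] - m[0][1] * m[1][0]
    return [Fraction(b[0] * m[1][1] - m[0][1] * b[1], det),
            Fraction(m[0][0] * b[1] - b[0] * m[1][0], det)]

def normalize(g, s, v):
    """Move the fixed point c of f_1 to the origin (sketch, assumes f_i = g^{-1}(s_i x + v_i)).

    Returns c with f_1(c) = c, found from (g - s_1) c = v_1, and the new
    translations v_i + s_i c - g c; the first of them is the zero vector.
    """
    g_minus_s1 = [[g[r][k] - s[0][r][k] for k in range(2)] for r in range(2)]
    c = solve2(g_minus_s1, v[0])
    gc = mat_vec(g, c)
    new_v = []
    for s_i, v_i in zip(s, v):
        sc = mat_vec(s_i, c)
        new_v.append([v_i[k] + sc[k] - gc[k] for k in range(2)])
    return c, new_v

# Hypothetical data: g = 2I, a first map without rotation, a second map with a 90 degree rotation.
g = [[2, 0], [0, 2]]
s = [[[1, 0], [0, 1]], [[0, -1], [1, 0]]]
v = [[1, 1], [0, 0]]
c, new_v = normalize(g, s, v)   # c == [1, 1], new_v == [[0, 0], [-3, -1]]
```

Since $f_1$ is a contraction, $g - s_1 = g(I - g^{-1}s_1)$ is invertible, so the linear system always has a unique solution.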

Motivation. With these assumptions and additional properties, a comprehensive theory of the measure and dimension of self-similar fractals was established [2,4,5,8]. Nevertheless, there are few concrete examples of self-similar sets in the literature. Most of them, like the Sierpiński gasket, fractal squares, and post-critically finite fractals, have a fairly simple structure, or, like Barnsley’s fern [3], approximate natural phenomena with an unclear mathematical structure. The lack of examples has led to the widespread belief that magnifications of a self-similar set all look more or less the same.

This is not true. In a 2018 paper with Mekhontsev [6], we showed that even simple modifications of the Sierpiński gasket have a very rich interior structure which, in the presence of a separation condition, can be described by finite-state automata. Without a computer, this structure cannot be recognized. Mekhontsev [9] built the free IFStile package with excellent graphics, which visualizes and classifies thousands of examples within minutes. It has not been widely used, however, since it is sophisticated and not open source. There is a definite need for transparent software in fractal geometry.

Moreover, all traditional magnification algorithms for self-similar sets are limited by two constraints:

- (i)

Bounded number of digits. Suppose the diameter of A is one, and we use floating-point numbers with 16 decimal digits. Then, magnification of A by a factor such as $10^{20}$ is not possible, since, for instance, $1 + 10^{-20}$ equals $1$ in the computer.

- (ii)

Numerical error propagation. Each magnification step is expressed by certain operations, such as multiplication. If magnification steps are iterated, the inaccuracies of the operations will accumulate.
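Constraint (i) is easy to reproduce. In the Python lines below (our illustration; Python floats are IEEE double precision, and the values $0.3$ and $10^{-20}$ are arbitrary), a coordinate of order one absorbs a detail of relative size $10^{-20}$, whereas exact rational arithmetic retains it at the cost of ever larger integers.

```python
from fractions import Fraction

# A coordinate of order one, stored as a double (about 16 significant digits).
x = 0.3
detail = 1e-20                    # a feature 10^20 times smaller than the attractor
print(x + detail == x)            # True: the feature is rounded away

# Exact rational arithmetic keeps the feature, at the cost of growing integers.
xq = Fraction(3, 10)
detail_q = Fraction(1, 10**20)
print(xq + detail_q == xq)        # False
print((xq + detail_q - xq) * 10**20)   # 1
```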

Main contribution. In this note, we introduce the principle of virtual magnification, which solves problem (i) and speeds up the magnification procedure. The basic idea is to calculate with control points and directions of pieces instead of all their points. The principle works for arbitrary self-similar sets without the assumption of a separation condition.

Moreover, our algorithm will magnify without numerical error propagation, and thus also solve problem (ii), when we have integer data in (2). That is, $g$ and the $s_i$ must be given by integer matrices, and the $v_i$ must have integer coordinates. The $s_i$ must belong to a finite group $S$ of linear isometries such that $gSg^{-1} = S$. The $v_i$ could be rational, since the multiplication of all $v_i$ by the common denominator would change the size but not the shape of $A$. Most concrete examples in the literature fulfill these conditions.

The algorithm was implemented in MATLAB R2020b as an open-source program with 100 lines of code, which are appended as Supplementary Materials to this paper. The program was successfully tested with several other MATLAB versions as well as with Octave. It visualizes the interior structure of self-similar sets as a slow-motion film. For certain IFSs with integer data and open set condition, including most examples from the literature, you can run it almost as long as you want. Otherwise, a decisive constraint is the memory and speed of the computer. There is much room for improvement.

Exploration with our program shows that, except for the simplest cases, self-similar attractors have a very rich local structure. An IFS does not just generate a compact set $A$; it also generates a collection of photos of small places in $A$. The theory of scenery flow and uniform scaling, discussed in Section 3, says that magnification at different spots will lead to the same infinite collection, although in a different order. Our program is a practical way to sample photos from an IFS, showing the importance of the scenery flow.

Contents of the paper. Our program is written for attractors

A in the plane. Extensions are discussed in

Section 7. The simplest examples come from

and

with

While the program is based on matrix calculations, notation in the text will often use complex numbers, such as

and

instead of

The IFS data for

Figure 1 are

For this example, the function of the program will be explained in the next section. In Section 3, we discuss the well-known concept of scenery flow and our discrete version for self-similar sets. In Section 4, we formulate our principle of virtual magnification and operate with control points and neighbor maps. In Section 5, we explain the structure of the program. Our implementation puts transparency and simplicity over the efficiency and quality of figures. In the two final sections, we discuss the example data, possible extensions of the program, and open problems. We hope that readers will feel encouraged to conduct their own experiments. The study of fractal structures requires explorative computer work.

2. A Preview of the Program

Our MATLAB program scenery.m and a number of data files *.mat are the Supplementary Materials of this paper. If you have access to MATLAB or its free alternative Octave, you can start it from one of the data files that contain the IFS matrices g and H. The example in Figure 1 is obtained by loading the file B1-figure1.mat and executing the command scenery(g,H,6,5,w(1,:)). Details will be given in Section 5.

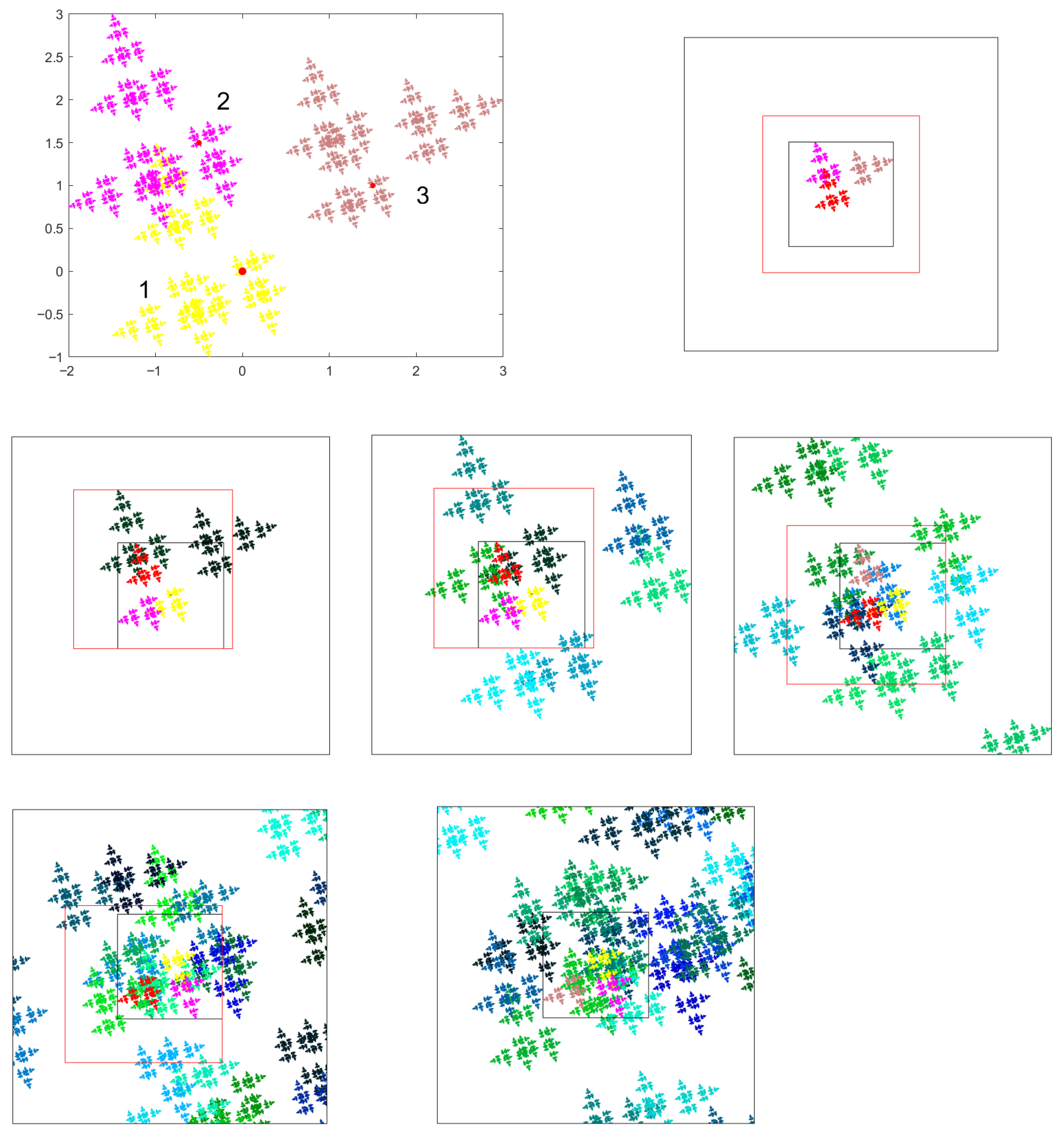

First, the fractal A is shown in full scale in a coordinate system in the upper left of Figure 1. If there are three pieces, the first is yellow, the second pink, and the third brown. We also draw the control point 0 as a red spot. Since we assume $v_1 = 0$, the control point of $A_1 = f_1(A)$ agrees with that of $A$, while the control points of $A_2$ and $A_3$ are $f_2(0) = g^{-1}v_2$ and $f_3(0) = g^{-1}v_3$, respectively. In the figure, A looks like a Cantor set, but two of the pieces do intersect, and this is a place where magnification gives more information.

Next,

A is moved to the inner subsquare of a square screen in such a way that the control point of

A coincides with the center of the square. One of the pieces is selected and colored red. On the upper right of

Figure 1 is

, which was yellow before. The red subpiece is the central piece in the next magnification. The red square indicates the window of this magnification. On the left of the middle row, we have drawn

as the central piece with three subpieces. The sets

are now neighbor pieces with random shades of dark color. Note that the central piece has the same control point as

A but another orientation. The brown subpiece of the central piece is already colored red in order to show that the third part

of

is the next central piece. The piece

of the right neighbor is outside the red square and will not be seen in the next magnification.

In the picture in the middle,

is the central reference piece, and its third subpiece

is selected for the next step and colored red. The blue neighbors below the central piece are

and

Only a small part of

is in the red square and will be visible in the next magnification. The choice of piece 3 does not change the orientation of the central piece since

in (

3). There is a slight shift in the picture since the focus goes from the control point of the piece to the control point of its subpiece.

In the next step, piece 2 is selected, which rotates the central piece. For the last magnification, we have again chosen piece 3. Thus, the central pieces in the last row are and In the last picture, 45 neighbors intersect the screen, and it is not clear anymore whether we have a Cantor set. This view could not be anticipated from the global picture on the upper left. In the present case, however, subsequent magnification again simplifies the structure and indicates that it is indeed a Cantor set.

3. Continuous and Discrete Magnification Flow

Furstenberg’s concept of a photo. Now, let us treat the magnification process more formally. We first slightly modify Furstenberg’s notion of a miniset [10]. Suppose we have an object $A\subseteq\mathbb{R}^d$, a mapping $\varphi:\mathbb{R}^d\to\mathbb{R}^n$, and a subset $W\subseteq\mathbb{R}^n$. Then, $\varphi(A)\cap W$ is called a photo of A on the screen W. This is a very nice abstraction of the everyday concept of a photo. Usually, $\mathbb{R}^n$ is the plane, although with 3D printing, we could have three-dimensional photos and, in theory, also n-dimensional ones [10]. In this paper, W is a square. The object A in our examples is part of the plane. It could also be in some higher-dimensional space. The mapping $\varphi$ represents the properties of the camera. In our case, $\varphi$ is a similitude. Usually, $A\cap\varphi^{-1}(W)$ is a small part of A, which is shown in standard size as the photo $\varphi(A)\cap W$. So, $\varphi$ will be an expanding similitude.

The scenery flow. Let $B(c,\varepsilon)$ denote the closed ball with center c and radius $\varepsilon$. For $c\in A$ and $\varepsilon>0$, the photos generated by $\varphi(x) = (x-c)/\varepsilon$ have been considered by many authors [10,11,12,13,14,15,16,17,18,19]. The photo is

$$\frac{1}{\varepsilon}\,(A - c)\cap B(0,1), \qquad (4)$$

a subset of the unit ball $B(0,1)$, which serves as a circular window. Some authors, in particular Furstenberg, take square windows. For a fixed c and variable $\varepsilon$, which tends to 0, these photos form a film which is called the magnification flow or scenery flow of A at c. It is appropriate to consider $\varepsilon$ on a logarithmic scale, taking $t = -\log\varepsilon$ as the parameter. The values $\varepsilon = r^k$, $k = 1, 2, \dots$, then go to equidistant values $t = k\log(1/r)$.
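Definition (4), read as the part of $(A - c)/\varepsilon$ that lies in the closed unit ball, is easy to evaluate when A is replaced by a finite point cloud. The following Python sketch (hypothetical names, not from the paper) computes such a photo and the logarithmic parameter $t = -\log\varepsilon$; for $\varepsilon = 2^{-k}$, the values of $t$ are equidistant with step $\log 2$.

```python
import math

def photo(points, c, eps):
    """Photo of a point cloud at centre c with radius eps.

    Returns the points of (A - c) / eps that lie in the closed unit ball B(0, 1),
    where A is approximated by the finite list `points` of (x, y) pairs.
    """
    result = []
    for x, y in points:
        p = ((x - c[0]) / eps, (y - c[1]) / eps)
        if math.hypot(p[0], p[1]) <= 1.0:
            result.append(p)
    return result

def flow_time(eps):
    """Logarithmic parameter t = -log(eps) of the scenery flow."""
    return -math.log(eps)

cloud = [(0.0, 0.0), (0.5, 0.0), (2.0, 0.0)]
print(photo(cloud, (0.0, 0.0), 0.5))   # [(0.0, 0.0), (1.0, 0.0)]
print([flow_time(0.5 ** k) for k in range(1, 4)])   # equidistant steps of log 2
```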

For self-similar sets, it was observed that on this logarithmic scale, the collection of photos of the scenery flow is independent of c in a measure-theoretic sense [11,15,19]. If we change the center point c, the magnification film will show the same pictures but in a different order. This ’uniform scaling property’ requires a limit from Furstenberg minisets to Furstenberg microsets. It was recently proved for much more general measures and used to solve various problems in geometric measure theory [12,14,17,18]. Our interpretation from a somewhat applied viewpoint is that self-similar sets in the 1990s were seen as models of certain sets in nature, like a fern or a maple leaf [3], and now appear as models of scale-free texture given by a collection of photos which share certain characteristic features.

The discrete magnification flow. It is good to know that our magnification experiments are connected with contemporary fractal research. We need not go into detail, however, since computers do not allow indefinite magnification with $\varepsilon\to 0$.

We consider a discrete version of the magnification flow. Instead of assuming a point c as the center for the magnification flow, we take a sequence $i_1 i_2 i_3\dots$ of indices in $\{1,\dots,m\}$ and the corresponding nested sequence $A_{i_1}\supseteq A_{i_1 i_2}\supseteq A_{i_1 i_2 i_3}\supseteq\dots$ of pieces of A of level 1, 2, 3, …, which in the limit would determine a unique point c of intersection. The center of magnification is slightly shifted at every step. When $A_u = f_u(A)$, with $u = i_1\dots i_k$, $f_u = f_{i_1}\circ\dots\circ f_{i_k}$, and control point $c_u = f_u(0)$, is taken as the reference piece in our program, the corresponding photo is given by

$$\frac{1}{r^k}\,(A - c_u)\cap W.$$

If we compare this with (4) for $\varepsilon = r^k$, only the reference point is different. Without loss of generality, we can assume that A has diameter one. Moreover, 0 is the control point and belongs to A. Then, the distance between 0 and c is at most one, and the distance between $c_u$ and c is at most $r^k$, since both points belong to the piece $A_u$ of diameter $r^k$. Consequently, the difference of the two reference points satisfies $|c_u - c|/r^k\le 1$. Thus, corresponding photos of the continuous and the discrete scenery flow at c differ only by a translation of length at most one. In our implementation of the discrete flow illustrated in Figure 1, the translation vector is between two points in the small black square. By calculating our picture in the larger black square, we included the translated photo of the continuous scenery flow for the small square. In Figure 1, the ratio of the side lengths of the large and small squares is 3. It can be increased so that the translation vector appears even smaller. This requires additional computer time and memory.
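The bound on the translation can be checked numerically. This sketch uses the Sierpiński gasket $f_i(x) = (x + v_i)/2$ with $v_i\in\{(0,0),(1,0),(0,1)\}$ as a hypothetical test case (not one of the paper's data files); its diameter is $\sqrt2$ rather than one, so the expected bound is $\sqrt2$. The limit point $c$ is approximated by the control point of a much deeper piece on the same branch, and all arithmetic before the final distance is exact.

```python
from fractions import Fraction
import math
import random

# Hypothetical test case: Sierpinski gasket f_i(x) = (x + v_i) / 2 with vertices
# (0,0), (1,0), (0,1); its control point 0 is a vertex and its diameter is sqrt(2).
V = [(0, 0), (1, 0), (0, 1)]
DIAM = math.sqrt(2)

def control_point(word):
    """c_u = f_u(0) = f_{u1}(f_{u2}(... f_{uk}(0))), computed exactly."""
    x, y = Fraction(0), Fraction(0)
    for i in reversed(word):
        x, y = (x + V[i][0]) / 2, (y + V[i][1]) / 2
    return x, y

rng = random.Random(1)
word = [rng.randrange(3) for _ in range(40)]
c = control_point(word)        # stands in for the limit point c of the nested pieces

offsets = []
for k in range(1, 30):
    cu = control_point(word[:k])
    dx, dy = (cu[0] - c[0]) * 2**k, (cu[1] - c[1]) * 2**k   # (c_u - c) / r^k with r = 1/2
    offsets.append(math.hypot(float(dx), float(dy)))

print(max(offsets) <= DIAM)    # True: the shift never exceeds diam(A)
```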

To summarize, the photos of our discrete setting include the photos of the established scenery flow for $\varepsilon = r^k$. It is possible to interpolate our photos numerically on the continuous scale of all $\varepsilon$. Thus, our method is indeed a visualization of the scenery flow, not for all k up to infinity, but only up to some finite level depending on the IFS and the capacity of the computer. The use of magnification factors $r^{-k}$ is motivated by our algorithm, which will now be presented. There is a mathematical benefit, too: for a finite type IFS with the open set condition, our discrete magnification flow is given by a finite number of photos repeated in random order [20].

4. The Principle of Virtual Magnification

Traditional algorithms of magnification calculate the fractal at smaller places and then magnify to the size of the screen. The key idea of the present paper is to represent the data at the small place by a much simpler data structure: the control points of the reference piece and its neighbors in the small place. The configuration of these control points is magnified to standard size. Then, around each standardized control point, we draw the corresponding piece. For this, we need only one numerical copy of $A$, which is given as a cloud of N points.

Let us demonstrate this idea with Figure 1. The global point cloud on the upper left was calculated by a classical IFS algorithm [3]. It is then transformed to fit into the small black square W at the upper right, with a control point at the center. This is our standard point cloud. No further IFS iteration is calculated. All pieces in subsequent magnifications are obtained as rotated and translated copies of the standard cloud.

The self-similarity of A allows the algorithm to determine the positions of the control points in a recursive way. We can forget the coordinates of the small place where we are. We only have to keep track of the relative positions of neighbor control points with respect to the control point of the reference piece. This requires much less work than the local calculation of small pieces of $A$.

When we have integer IFS data, the calculations of control points and corresponding directions involve fairly small integers. There will be no numerical errors. We may have numerical errors in the initial calculation of the standard point cloud, but these errors cannot accumulate. That is why we can magnify as long as the computer can handle the number of neighbors.

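To formulate the principle of virtual magnification in technical terms, we need the concept of a neighbor map, which has been used in various forms [6,7,21,22,23,24,25]. Suppose $u = u_1\dots u_k$ and $w = w_1\dots w_k$ are two words of length k from the alphabet $\{1,\dots,m\}$. Given the IFS F, there are similitudes $f_u = f_{u_1}\circ\dots\circ f_{u_k}$ and $f_w = f_{w_1}\circ\dots\circ f_{w_k}$ which generate the k-th level pieces $A_u = f_u(A)$ and $A_w = f_w(A)$ of the attractor A. The neighbor map from $A_u$ to $A_w$ then is

$$h_{u,w} = f_u^{-1}\circ f_w.$$

Note that $h_{u,w}$ does not map $A_u$ to $A_w$. This is accomplished by $f_w\circ f_u^{-1}$. The neighbor map $h_{u,w}$ maps the full-size attractor A to a neighboring set $h_{u,w}(A)$ of the same size such that the diagram formed by $h_{u,w}$, $f_u$, and $f_w$ commutes, that is, $f_u\circ h_{u,w} = f_w$. Thus, $h_{u,w}(A)$ has the same metric position with respect to A as $A_w$ has with respect to $A_u$, up to similarity. The conjugating map is $f_u$.

The following basic equation allows the calculation of neighbor maps of level $k+1$ from those of level k. For $i, j\in\{1,\dots,m\}$, the words $ui$ and $wj$ have a length of $k+1$, and

$$h_{ui,wj} = f_i^{-1}\circ h_{u,w}\circ f_j = h_i^{-1}\circ g\circ h_{u,w}\circ g^{-1}\circ h_j. \qquad (6)$$

Starting with the neighbor maps $h_{i,j} = h_i^{-1}\circ h_j$ of the first level, we can now inductively determine all neighbor maps. This has been accomplished in other papers [6,21,26]. However, there are three differences.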

- (i)

In other papers, only proper neighbor maps have been considered, that is, neighbors $h(A)$ which intersect $A$. The definition above includes all potential neighbors. Below, we choose as actual neighbor maps those h for which $|h(0)|\le C$, where C is a constant depending on A and our window W.

- (ii)

In other papers, it was assumed that the IFS fulfills the open set condition or weak separation condition in order to keep the number of proper neighbors finite. For visualization, this assumption is not needed. The computer can perform only finitely many steps, anyway.

- (iii)

The standardization forced by $f_u^{-1}$ concerns size, position and direction. In our magnification flow, we change size and position but keep the direction of pieces. In the program, we work with neighbor maps and restore the direction afterwards.
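Equation (6) can be evaluated in exact integer arithmetic. The sketch below assumes, as in our reading of (2), that $f_i = g^{-1}h_i$ with $h_i(x) = s_i x + v_i$, so that $f_i^{-1} = h_i^{-1}g$, $h_{i,j} = h_i^{-1}h_j$, and $h_{ui,wj} = h_i^{-1}\,g\,h_{u,w}\,g^{-1}\,h_j$. An isometry is stored as a pair $(s,t)$ of an orthogonal integer matrix and an integer vector. The function names are ours, not those of nbmaps.m; the only division, by $\det g$ in $gsg^{-1}$, is checked to be exact.

```python
from fractions import Fraction

# An isometry h(x) = s x + t is a pair (s, t): s a 2x2 integer matrix (tuple of rows),
# t an integer 2-vector. With integer data, s is orthogonal, so s^{-1} = s^T.

def mat_mul(a, b):
    return tuple(tuple(sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2))
                 for i in range(2))

def mat_vec(a, v):
    return tuple(sum(a[i][k] * v[k] for k in range(2)) for i in range(2))

def compose(h1, h2):
    """h1 o h2: x -> s1 (s2 x + t2) + t1."""
    (s1, t1), (s2, t2) = h1, h2
    u = mat_vec(s1, t2)
    return mat_mul(s1, s2), (u[0] + t1[0], u[1] + t1[1])

def inverse(h):
    """h^{-1}(y) = s^T (y - t) for an orthogonal matrix s."""
    s, t = h
    st = ((s[0][0], s[1][0]), (s[0][1], s[1][1]))
    u = mat_vec(st, t)
    return st, (-u[0], -u[1])

def conjugate(g, h):
    """g o h o g^{-1}: x -> (g s g^{-1}) x + g t, checked to stay integer."""
    s, t = h
    det = g[0][0] * g[1][1] - g[0][1] * g[1][0]
    adj = ((g[1][1], -g[0][1]), (-g[1][0], g[0][0]))   # g^{-1} = adj / det
    num = mat_mul(mat_mul(g, s), adj)
    q = [[Fraction(x, det) for x in row] for row in num]
    assert all(x.denominator == 1 for row in q for x in row), "g s g^{-1} is not integer"
    return tuple(tuple(int(x) for x in row) for row in q), mat_vec(g, t)

def first_level(digits, i, j):
    """Neighbor map h_{i,j} = h_i^{-1} o h_j of two first-level pieces."""
    return compose(inverse(digits[i]), digits[j])

def refine(g, digits, h, i, j):
    """Level k+1 from level k: h_{ui,wj} = h_i^{-1} o g o h_{u,w} o g^{-1} o h_j."""
    return compose(compose(inverse(digits[i]), conjugate(g, h)), digits[j])

I = ((1, 0), (0, 1))
g = ((2, 0), (0, 2))
digits = [(I, (0, 0)), (I, (1, 0)), (I, (0, 1))]   # Sierpinski gasket, 0-based indices
h12 = first_level(digits, 0, 1)                    # from A_1 to A_2: translation by (1, 0)
print(refine(g, digits, h12, 1, 0))                # (((1, 0), (0, 1)), (1, 0))
```

For the gasket data, the last line is the neighbor map from $A_{12}$ to $A_{21}$; a direct calculation with $f_{12}^{-1}f_{21}(x) = x + (1,0)$ confirms it.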

We assumed that the $h_i$ values are given by integer data,

$$h_i(x) = s_i x + v_i,$$

where the matrices $g$, $s_i$ and the vectors $v_i$ have integer entries, and the $s_i$ belong to a finite group S of isometries with $gSg^{-1} = S$. Then, a straightforward calculation of $h_{i,j}$ and of $h_{ui,wj}$ from $h_{u,w}$ by (6) shows the following.

Proposition 1. For integer data, all neighbor maps have the form $h(x) = sx + t$ with some integer matrix $s\in S$ and some integer vector t, which is the control point of the neighbor $h(A)$.

Since s describes the direction change from A to $h(A)$, a neighbor map describes what in geometry is called a flag: combined information on position and direction. The principle of virtual magnification says that instead of magnifying tens of thousands of points, we perform the calculation with tens of flags. Then, we transform one copy of our standard point cloud to each position and direction.
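The flag idea can be illustrated in a few lines: one standard cloud, many flags. The following helper is hypothetical and not taken from scenery.m; it maps the standard cloud by $x\mapsto sx + t$ for each flag $(s,t)$, so the control point 0 of the standard copy lands on $t$. The direction of the reference piece is ignored here.

```python
def place_copies(cloud, flags):
    """Draw one copy of the standard cloud for every flag (sketch).

    cloud : list of (x, y) points of the standard copy of A (control point 0)
    flags : list of (s, t), s a 2x2 integer isometry matrix and t an integer vector,
            describing neighbor maps h(x) = s x + t
    """
    copies = []
    for s, t in flags:
        copies.append([(s[0][0] * x + s[0][1] * y + t[0],
                        s[1][0] * x + s[1][1] * y + t[1]) for x, y in cloud])
    return copies

rot = ((0, -1), (1, 0))                        # rotation by 90 degrees
cloud = [(0.0, 0.0), (0.5, 0.0)]
print(place_copies(cloud, [(rot, (2, 0))]))    # [[(2.0, 0.0), (2.0, 0.5)]]
```

Only the flags are computed exactly; the floating-point cloud is touched once per drawing, so its rounding errors do not accumulate from one magnification to the next.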

5. The Algorithm and Its Implementation

The algorithm uses only elementary matrix operations. Here are the main steps.

- 1.

Initialization. We calculate a global point cloud x representing A by a classical IFS algorithm. We draw x on the whole screen and in the small square. Initial values of parameters include the iteration number and the total number of iterations. The word u and the list of neighbor maps are empty. The reference piece is $A$ itself.

- 2.

Choice of a subpiece. There are different options. The simplest is to take a random i uniformly from $\{1,\dots,m\}$. We replace the reference piece $A_u$ by its subpiece $A_{ui}$ by putting $u := ui$, and we save the direction matrix D of the new reference piece.

- 3.

Calculation of neighbors. For all neighbor maps $h = h_{u,w}$ in the list and all $j\in\{1,\dots,m\}$, we calculate the new neighbor map $h_{ui,wj}$ as in (6) and add the neighbor maps $h_{i,j}$ with $j\neq i$. Then, we remove the neighbor maps for which $h(A)$ cannot intersect the large square. This is our new list.

- 4.

Plotting the magnification. The cloud of the reference piece with its new direction is $Dx$. The clouds of the neighbor pieces are $D\,h(x)$ with h in the new list. If the total number of iterations has been reached, the job is completed. Otherwise, we increase the iteration number by one and go to 2.
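Steps 2 and 3 fit into a few lines if we specialize. The following Python sketch is not a transcription of scenery.m: it assumes a translation-only IFS $f_i(x) = (x + v_i)/R$ with an integer factor $R$ and integer vectors $v_i$, so that every neighbor map is a translation by an integer vector $t$ and (6) reduces to $t\mapsto Rt + v_j - v_i$. The screen is taken as $W = [-\rho a,\rho a]^2$, and neighbors are dropped when $|t| > a + \sqrt2\,\rho a$, following Proposition 2. Plotting (step 4) is omitted, and all names are hypothetical.

```python
import math
import random

def magnify(v, R, a, rho, steps, seed=0):
    """Virtual magnification (steps 2 and 3) for a translation-only integer IFS.

    Sketch assumptions: f_i(x) = (x + v_i) / R with integer R and integer v_i, so every
    neighbor map is a translation x -> x + t with an integer vector t.
    a   : radius of A around its control point 0
    rho : screen W = [-rho*a, rho*a]^2, chosen with rho >= R / (R - 1)
    Returns the chosen (0-based) indices and the number of neighbors after each step.
    """
    rng = random.Random(seed)
    m = len(v)
    bound = a + math.sqrt(2) * rho * a   # Proposition 2 with b = sqrt(2) * rho * a
    neighbors = []                        # step 1: reference piece A, empty list
    choices, counts = [], []
    for _ in range(steps):
        i = rng.randrange(m)              # step 2: uniform choice of a subpiece
        new = set()                       # a set merges identical maps (exact overlaps)
        for t in neighbors:               # step 3: h_{ui,wj} = h_i^{-1} g h_{u,w} g^{-1} h_j
            for j in range(m):
                new.add((R * t[0] + v[j][0] - v[i][0], R * t[1] + v[j][1] - v[i][1]))
        for j in range(m):                # plus the siblings h_{i,j} = h_i^{-1} h_j
            if j != i:
                new.add((v[j][0] - v[i][0], v[j][1] - v[i][1]))
        neighbors = sorted(t for t in new if math.hypot(t[0], t[1]) <= bound)
        choices.append(i)
        counts.append(len(neighbors))
    return choices, counts

# Hypothetical example: Sierpinski gasket with vertices (0,0), (1,0), (0,1), radius a = 1.
choices, counts = magnify([(0, 0), (1, 0), (0, 1)], R=2, a=1.0, rho=3, steps=20)
print(choices)
print(counts)
```

All translation vectors stay integers no matter how many steps are taken, and the culling keeps the list inside a fixed disk, so the loop can run for as many steps as desired without loss of precision.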

We now discuss the implementation of the steps in the appended MATLAB program scenery.m and verify the correctness of the algorithm.

The program is run by loading an IFS data file into the workspace and typing scenery(g,H,nit,np,3). Here, nit is the total number of magnifications and np is the length of pauses in seconds to study subsequent pictures. The last parameter determines the choice of subpieces in step 2. Various data files are provided. Each data file consists of a $2\times 2$ integer matrix g and a $2\times 3\times m$ integer array H which contains the digits $h_i$ as described in Section 1. The mapping $h_i(x) = s_i x + v_i$ with the $2\times 2$ matrix $s_i$ and the $2\times 1$ vector $v_i$ is represented by H(:,:,i) $= (s_i\ \ v_i)$. It is very simple to edit these data and produce your own data files.
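For readers who want to port the data files, the following Python sketch shows one way to read a digit. It assumes, as a reading of the command H(:,:,j)=[a b c; d e f] quoted in Section 7, that each $2\times 3$ slice stores the matrix $s_j$ in its first two columns and the translation $v_j$ in the third; the helper names are hypothetical.

```python
def digit_from_block(block):
    """Split a 2x3 block [a b c; d e f] into the matrix s and the translation v.

    Assumes the block stores [s v], so that h(x) = s x + v with
    s = [a b; d e] and v = (c, f).
    """
    (a, b, c), (d, e, f) = block
    return ((a, b), (d, e)), (c, f)

def apply_digit(block, x):
    """Evaluate h(x) = s x + v for a digit stored as a 2x3 block."""
    s, v = digit_from_block(block)
    return (s[0][0] * x[0] + s[0][1] * x[1] + v[0],
            s[1][0] * x[0] + s[1][1] * x[1] + v[1])

# A hypothetical digit: rotation by 90 degrees followed by translation by (2, 3).
H_j = [[0, -1, 2], [1, 0, 3]]
print(apply_digit(H_j, (1, 0)))   # (2, 4)
```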

Step 1 starts with the calculation of the cloud x by the procedure globa.m. A minimum size of N points is prescribed. This parameter can be adapted to the size of the screen and the capacity of the computer. Attractors A with larger Hausdorff dimensions require greater N values for graphical accuracy. We determine the minimum level L with $m^L\ge N$ and take the actual number as $m^L$. The point cloud x is the set of all $f_u(0)$ for words $u = u_1\dots u_L$ from our alphabet $\{1,\dots,m\}$, that is, all control points of pieces of level L. It is calculated by a double loop. See Barnsley [3] for related algorithms. As a matrix, x has dimension $2\times m^L$. The system automatically determines maximal and minimal coordinates of x and plots the global point cloud in a corresponding rectangle. We prefer a square window with the control point 0 of A in the center. The maximum length a of a vector in x is called the radius of $A$. In other words, a is the square root of the maximum of $x_{1j}^2 + x_{2j}^2$ over all columns j of x. The small square in the middle of our screen will be $[-a,a]^2$. It will include the point cloud x describing A as well as any rotation of x around 0.

The big square representing the screen is $W = [-\rho a,\rho a]^2$, where the ratio $\rho$ is a parameter which can be changed. We put $\rho = 3$. Moreover, we draw the red square which goes to the big square in the next step. Its radius (half of its side length) is $\rho a/R$, where R denotes the expanding factor of our IFS. The center of the red square is the control point of the subpiece which will be chosen in step 2. To ensure the correctness of step 3, the red square must be contained in W. Since the center of the red square is in the small black square, this condition is fulfilled if $a + \rho a/R\le\rho a$, or $\rho\ge R/(R-1)$. So, $\rho$ should be taken larger than $R/(R-1)$ but not too large because of the computational effort.
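The inequality for the screen ratio is simple enough to check directly. In this hypothetical helper, `rho` stands for the ratio of the big square $[-\rho a,\rho a]^2$ to the small square $[-a,a]^2$, and `R` for the expanding factor; the worst case is a red square of half-side $\rho a/R$ centered on the boundary of the small square, which gives $a + \rho a/R\le\rho a$, that is, $\rho\ge R/(R-1)$.

```python
import math

def min_ratio(R):
    """Smallest screen ratio rho for which the red square always fits: R / (R - 1)."""
    if R <= 1:
        raise ValueError("the expanding factor R must be larger than 1")
    return R / (R - 1)

def red_square_fits(R, rho, a=1.0):
    """Worst case: red square of half-side rho*a/R centred on the boundary of [-a, a]^2."""
    return a + rho * a / R <= rho * a

print(min_ratio(2))                          # 2.0, so rho = 3 leaves a margin for R = 2
print(round(min_ratio(math.sqrt(5)), 3))     # 1.809
print(red_square_fits(2, 3), red_square_fits(2, 1.5))   # True False
```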

For step 2, the simplest choice of a random

is uniform. This is realized by the command

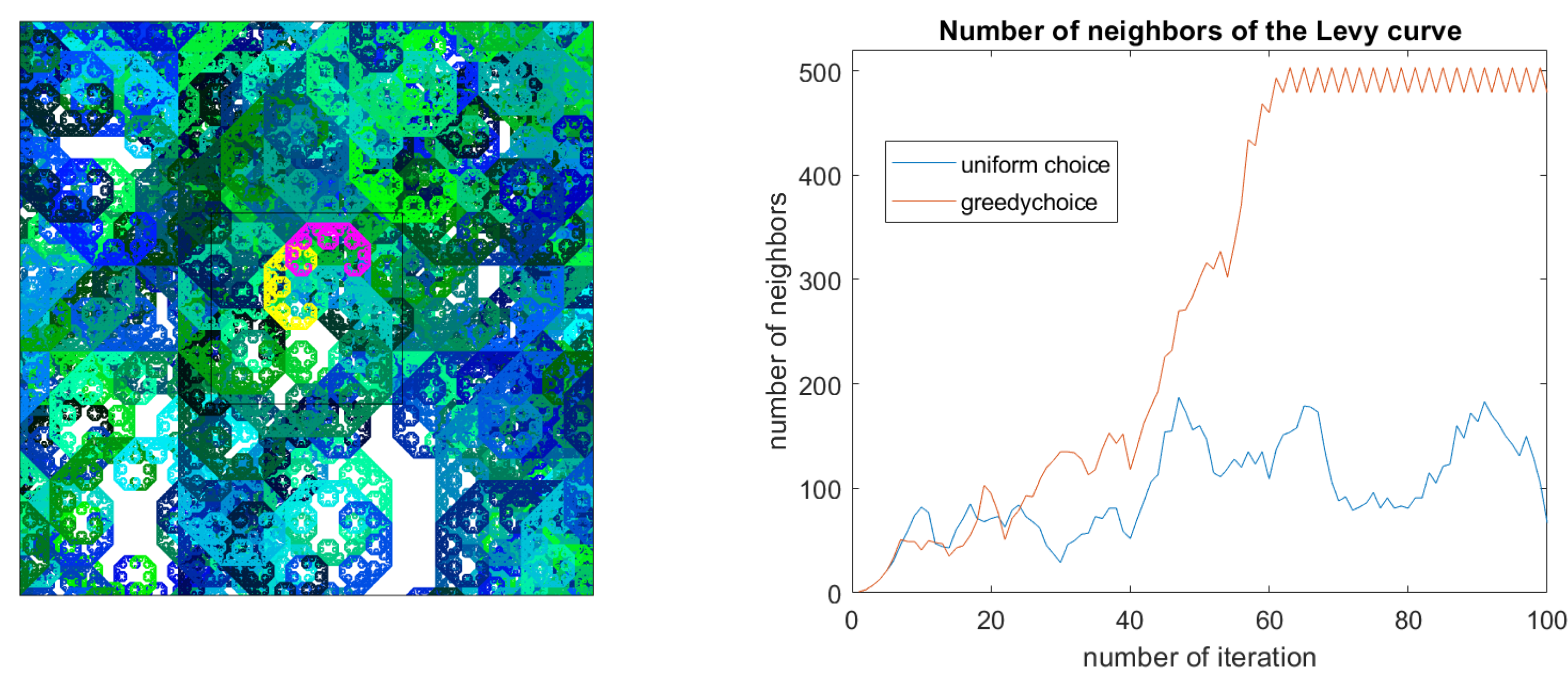

scenery(g,H,nit,np,0). Successive magnifications with uniform choice can be boringly repetitive, however. In particular, when the attractor contains islands as in

Figure 1, smaller islands will be regularly visited. For that reason, we wrote the empirical procedure

greedychoice which leads somewhat faster to interesting places with many neighbors. When the parameter

w in the command

scenery(g,H,nit,np,w) is larger than 0, subpieces with a greater number of neighbors will be favored. The weights will be the numbers of neighbors to the power of

(The window for those neighbors is chosen smaller than the large square, however.) In our experiments,

performed well, as shown in

Figure 2. A deterministic choice of subpiece i is also possible, taking the vector $w = (i_1,\dots,i_n)$ of indices as the last input parameter of scenery(g,H,nit,np,w). The program then produces

n iterations, and in the case

continues with random choices according to

The vector of the actually chosen $i_k$ is an output of the program scenery. Thus, an interesting magnification series can be repeated, extended and modified.
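The weighting rule can be sketched as follows. This is a hypothetical helper modelled on the description of greedychoice, not its code; in particular, the smaller window in which the paper counts neighbors is not reproduced, and the counts are simply passed in.

```python
import random

def greedy_choice(neighbor_counts, w, rng=random):
    """Choose a subpiece index with probability proportional to (neighbor count)^w.

    neighbor_counts[i] is the number of neighbors subpiece i would have;
    w = 0 reproduces the uniform choice (0 ** 0 == 1 in Python).
    Returns a 0-based index.
    """
    weights = [n ** w for n in neighbor_counts]
    if sum(weights) == 0:            # every count is zero: fall back to the uniform choice
        weights = [1] * len(neighbor_counts)
    return rng.choices(range(len(neighbor_counts)), weights=weights, k=1)[0]

rng = random.Random(0)
picks = [greedy_choice([1, 0, 5], 2, rng) for _ in range(1000)]
print(picks.count(1))   # 0: a subpiece without neighbors is never chosen when w > 0
```

With weights $1 : 0 : 25$ for $w = 2$, the third subpiece is chosen far more often than the first, which mimics the intended drift towards dense places.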

The direction matrix D of the reference piece is calculated recursively, starting with an initial unity matrix for Suppose the actual piece has direction D and the subpiece was selected. Since the new direction is Division by R is necessary to keep an isometry matrix. (For very large numbers of iterations, this calculation can be modified to minimize numerical errors.)

Step 3 is performed by the procedure nbmaps.m. We select the neighbor maps h for $A_u$ in such a way that they include all the sets $h(A)$ which hit the screen W. It is here that we move from potential neighbor sets to those whose control point is in the vicinity.

Proposition 2. Suppose $A\subseteq B(0,a)$ and $W\subseteq B(0,b)$, and h is a neighbor map with isometry matrix s and translation vector t, and $|t| > a + b$. Then, $h(A)\cap W = \emptyset$.

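This is easy to see. Since $h(A)\subseteq B(t,a)$, the existence of a point $y\in h(A)\cap W$ would contradict the triangle inequality for $0$, $y$, and $t$: we would have $|t|\le|t-y| + |y|\le a + b$.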

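To apply this to our squares, let a be the radius of A and $b = \sqrt2\,\rho a$, the radius of the disk circumscribing the screen $W = [-\rho a,\rho a]^2$. Then, the assumptions of the proposition are fulfilled for our attractor A and our screen W. Let t be the translation vector of a neighbor map h. If

$$|t| > a + b = a\,(1 + \sqrt2\,\rho), \qquad (7)$$

then $h(A)$ does not intersect W. So, all neighbor maps with this condition are cancelled from our list in each step. In two dimensions, this works well, although some remaining neighbor maps miss the screen, too.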
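Combining Proposition 2 with the square screen gives a one-line filter. This Python sketch assumes that A lies in the disk of radius $a$ around 0 and that the screen is $W = [-\rho a,\rho a]^2$, which lies in the disk of radius $b = \sqrt2\,\rho a$; both assumptions follow our reading of the setup, and the names are hypothetical.

```python
import math

def cull(neighbors, a, rho):
    """Drop neighbor maps h(x) = s x + t whose set h(A) cannot meet the screen.

    A lies in the disk B(0, a), the screen W = [-rho*a, rho*a]^2 lies in B(0, b) with
    b = sqrt(2) * rho * a, and h(A) lies in B(t, a); so |t| > a + b implies that h(A) misses W.
    """
    b = math.sqrt(2) * rho * a
    return [(s, t) for s, t in neighbors if math.hypot(t[0], t[1]) <= a + b]

I = ((1, 0), (0, 1))
kept = cull([(I, (1, 0)), (I, (5, 0)), (I, (6, 0))], a=1.0, rho=3)
print([t for _, t in kept])   # [(1, 0), (5, 0)]   (a + b is about 5.24)
```

The test is only sufficient for disjointness: a kept neighbor may still miss the screen, as noted in the text.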

When $A_u$ is drawn as a central piece, the list of neighbor maps $h_{u,w}$ comprises all neighbor sets $h_{u,w}(A)$ which intersect the screen W. Now, we select a subpiece $A_{ui}$ and draw the red square which goes to W in the next step. Since the red square is contained in W by the choice of $\rho$, the neighbor sets of $A_{ui}$ which meet the red square must be of the form $A_{wj}$, where $A_w$ is a neighbor of $A_u$ or $w = u$. So, we obtain the list of neighbor maps for $A_{ui}$ by applying Equation (6) to all maps h in the list for $A_u$ and all $j\in\{1,\dots,m\}$, together with the maps $h_{i,j}$, $j\neq i$. Again, we drop the neighbor maps which fulfill (7).

The number of remaining neighbors is saved in every step and is an output of the program. So, the full command is z=scenery(g,H,nit,np,w), where z is a matrix with two rows and nit columns. The upper row contains the indices $i_k$, and the lower row contains the number of neighbors at each step. These numbers give insight into the change of density of pieces during magnification and into the computational effort, as shown in Figure 2.

Step 4 of our algorithm needs no explanation. It involves matrix multiplications and graphics operations which in the presence of many neighbors require a lot of time. It is possible to skip this step and perform only the neighbor calculations. One may like to see only a few magnifications on screen and evaluate the other pictures statistically. In our version, step 4 is regularly performed. This allows us to correct the neighbor list and take only those neighbors for which the cloud includes points on our screen.

6. Remarks on the Program and the Data Files

A starting point for explorative work. MATLAB was designed 40 years ago as a toolbox for experiments with matrices with a very simple and intuitive language. This is exactly what we need here. The language has become a standard. Now, the system is slow, but it is still available at most universities, and there is a free version, Octave. Our procedures are small. You can easily change the data, the parameters or the whole procedure. If you prefer to program in another environment, like Julia, R, Python, C++, Maple etc., you can transfer our algorithm.

Instead of making the program fast and the graphics beautiful, we focused on simplicity, transparency, and accessibility. Our program contains no black box.

Six families of data. We recommend proceeding through the data files in lexicographic order. The letter A marks simple examples for which the magnification process can be easily followed. They can be run with and in order to gather some experience with the method. Later, you might prefer

The relatives of the Sierpiński gasket, mostly taken from [6] and including Figure 1, are distinguished by the letter B. They all have three maps, expanding factor 2, and Hausdorff dimension $\log 3/\log 2\approx 1.585$. The IFS values from [6] were taken without change, and some of them have the control point 0 outside $A$. This does not matter, however, as long as 0 and A, and thus all rotated copies of A, belong to the small black square. These examples perform well with large nit values and can be easily modified.

The data files marked with the letter C use as an expanding map so that pieces are rotated by 26.6° in each magnification step. These IFSs contain four maps. Their Hausdorff dimension is The symmetries are again 90° rotations. All IFSs designated by A, B, C are small integer data with the open set condition (OSC), permitting unlimited magnification. Since integer variables are not declared in our implementation, however, they are taken as floating-point numbers, which could result in significant error propagation for very large values. We checked that causes no problems. When there is a number at the end of the file name, it denotes the number of proper neighbors and indicates in some way the complexity of the IFS.

The other examples show that the program works well even for non-integer data and IFSs without the open set condition. There are some restrictions, so is recommended for the beginning. The family marked by D is related to the hexagonal lattice and rotations by multiples of 60°. D1 up to D3 have expanding map with and mappings, which yields the Hausdorff dimension They fulfill the OSC and provide very interesting, natural-looking patterns. D4 is a non-periodic tile with three pieces and In example D5, we have the same m and g but obviously not a tile. Here, two pieces of the second level coincide. To treat such ’exact overlaps’ efficiently, the program has to be modified so that it counts multiple occurrences of the same neighbor piece only once. All these examples on the hexagonal lattice can be mapped into integer data by a linear coordinate transformation.

The three examples designated by the letter E come from a Pisot number of degree four and integer data projected from four-dimensional space, as explained in ([26] Section 4). They all have exact overlaps. The final group of examples, marked with F, definitely do not have integer data. They have no exact overlaps and probably do not fulfill the OSC. For ’fatfireworks’, we replaced the factor $r = 1/2$ of the ’fireworks’ example in family B by $r = 1/\sqrt3$. If this IFS fulfilled the OSC, it would have to be a tile. Our program suggests that this is not the case. In F2 and F3, three incommensurable rotations were used together with both values $r = 1/2$ and $r = 1/\sqrt3$. In the ’fat’ case, we have no tile and thus no OSC. The ’slim’ case is hard to decide. In the final example, F4, we have replaced

by the affine map

The attractor is not self-similar, but the program calculates

If there were no rotations, magnifications would show line fibers, since the contractions in horizontal and vertical directions differ. With 90° rotations, the scenery flow shows patterns such as in the self-similar examples for many iterations. This observation was unexpected.

Simple and more complex cases. For integer data IFSs in two dimensions, the finite type condition holds ([26] Section 1). This means that there are only finitely many proper neighbor maps. We can use Proposition 1 and (7) to estimate directly the number of possible neighbor maps h which intersect our window W.

According to (7), the integer vector t is in the circle with radius $a(1 + \sqrt2\,\rho)$ around 0, where a is the radius of A and $\rho$ is the ratio of the side lengths of the big and small squares.

For our value

there are

Here,

denotes the number of possible symmetries

s, which is 4 for the data files with A, B, and C. So, we have 500 possible neighbor maps when the radius of A is 1, and 500 million when the radius of A is 1000 (or when the radius is one and the translations are not integers but decimals with three digits). The size of A matters a lot.

Although the upper bound in (8) need not coincide with the actual number of neighbor maps, it is a good rule that IFSs with small integers in the $v_i$ lead to a small radius of A and are tractable. Our data files A, B, and C belong to this class. It is possible to determine all neighbor maps and to organize them in the neighbor graph. From this automaton, topological properties of A can be derived, as we have shown in [6,26]. Sometimes, even a neighborhood graph can be established which collects the connections between all possible photos [20]. However, from n given neighbors, we can theoretically compose $2^n$ neighborhoods. So, the neighborhood automaton can be huge compared to our computer’s memory. If the radius of A is large, even the neighbor automaton may be too large. To evaluate such cases correctly, we probably have to apply statistical methods.

For integer data, the open set condition (OSC) is fulfilled if and only if all neighbor maps are different from the identity [2,4,26]. Thus, it could be desirable to display a warning or stop the program if $h = \mathrm{id}$ for some neighbor map. This can easily be accomplished. However, there are examples without an OSC where it can take hours to find such h values. On the other hand, there are examples with an OSC where a proof of the OSC may take hours. Our experience indicates that the question ’OSC or not OSC?’ should be replaced by ’low or high complexity?’, with a gradual change from one to the other. There are various ways to measure complexity. For our setting, a measurable parameter is the maximum number of neighbors on a ’photo’.

7. How to Continue

Improvements of the program and the database. The graphics can be improved if we represent $A$ as a pixel image and accumulate image intensities in a matrix. This graphical representation is necessary to study IFSs of the form (1) in which the maps have different contraction factors, or to study self-similar measures instead of self-similar sets. It is also desirable to have more interaction between users and the program. For larger examples, MATLAB has to be replaced by a faster system, such as Julia.
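One common way to obtain such an intensity image is random iteration (the ‘chaos game’), which we name plainly as a swapped-in technique rather than the authors' point cloud method. Randomly chosen maps, selected with probabilities $p_k$, are applied to a point, and the visits per pixel are counted. With unequal $p_k$ the counts approximate a self-similar measure, and the maps may have different contraction factors. In this sketch, the maps are the contractions $f_k$ themselves, each given as a hypothetical $2\times3$ array acting by $(x,y)\mapsto(ax+by+c,\,dx+ey+f)$, and the function name is ours.

```python
import random


def intensity_image(maps, probs, n_pixels, n_steps, box, seed=0):
    """Count chaos-game visits per pixel.

    maps  -- contractions ((a, b, c), (d, e, f)) acting as (x, y) -> (ax+by+c, dx+ey+f)
    probs -- selection probabilities p_k (weights of a self-similar measure)
    box   -- window (xmin, xmax, ymin, ymax) mapped onto n_pixels x n_pixels
    """
    rng = random.Random(seed)
    img = [[0] * n_pixels for _ in range(n_pixels)]
    xmin, xmax, ymin, ymax = box
    x, y = 0.0, 0.0
    for step in range(n_steps):
        (a, b, c), (d, e, f) = rng.choices(maps, weights=probs)[0]
        x, y = a * x + b * y + c, d * x + e * y + f
        if step < 20:  # skip early points, which may lie off A if the start is not in A
            continue
        i = int((y - ymin) / (ymax - ymin) * n_pixels)  # row index from y
        j = int((x - xmin) / (xmax - xmin) * n_pixels)  # column index from x
        if 0 <= i < n_pixels and 0 <= j < n_pixels:
            img[i][j] += 1
    return img
```

For the gasket with the three maps $(x,y)\mapsto\frac12(x,y)$, $\frac12(x,y)+(\frac12,0)$, $\frac12(x,y)+(0,\frac12)$ and the unit square as window, the upper right pixels stay empty because every point of $A$ satisfies $x+y\le 1$.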

Before you start programming, however, we urge you to test our simple package with your own IFS examples. Our data files are a tiny selection from a wide field. The command H(:,:,j)=[a b c; d e f]; will change the $j$th mapping in (2) to $(x,y)\mapsto(ax+by+c,\;dx+ey+f)$, or define a new mapping if $j$ exceeds the current number of mappings.
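To check what such a command does, and whether the new map is still an isometry, one can apply the $2\times3$ array in homogeneous coordinates and test $M^{T}M=I$ for its linear part $M=\begin{pmatrix}a&b\\d&e\end{pmatrix}$. This is a small Python sketch. It assumes, as the command suggests, that the first two columns hold the linear part and the third column the translation. `apply_map` and `is_isometry` are hypothetical helpers, not functions of the package.

```python
def apply_map(H, x, y):
    """Apply H = [[a, b, c], [d, e, f]] to (x, y), giving (ax+by+c, dx+ey+f)."""
    (a, b, c), (d, e, f) = H
    return a * x + b * y + c, d * x + e * y + f


def is_isometry(H, tol=1e-12):
    """True if the linear part [[a, b], [d, e]] is orthogonal, i.e. M^T M = I."""
    (a, b, _), (d, e, _) = H
    return (abs(a * a + d * d - 1) < tol      # first column has length 1
            and abs(b * b + e * e - 1) < tol  # second column has length 1
            and abs(a * b + d * e) < tol)     # columns are orthogonal
```

For example, `[[0, -1, 1], [1, 0, 0]]` is a rotation by 90° followed by a shift and passes the test, while the shear `[[1, 1, 0], [0, 1, 0]]` does not.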

Experiments slow down mainly because of an abundance of neighbors. There is an emergency stop if the number of neighbors exceeds 1000; this command at the end of the program can be removed or changed. In the case of many neighbors, one pragmatic way to modify the IFS is the command g=1.2*g, which increases the expansion factor and makes the attractor thinner. Feel free to find better solutions. It is not forbidden to perform numerical experiments with IFSs given by decimal numbers, without regard to integers or separation conditions, or with similarity maps with different contraction factors, or with affine contractions.
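The effect of g=1.2*g can be quantified. For $m$ maps with a common expansion factor $\lambda=|g|$, the similarity dimension $s=\log m/\log\lambda$ solves $m\lambda^{-s}=1$. It is an upper bound for the Hausdorff dimension of $A$ and equals it under the OSC. A short worked sketch follows; the function name is ours.

```python
import math


def similarity_dimension(m, expansion):
    """Solution s of m * expansion**(-s) = 1, i.e. s = log m / log expansion."""
    return math.log(m) / math.log(expansion)
```

For three maps, as in the Sierpiński gasket, $\lambda=2$ gives $s\approx1.585$, while $\lambda=2.4$ gives $s\approx1.255$, so the attractor becomes noticeably thinner.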

A change in the program is needed for IFSs with exact overlaps. Either identical neighbor maps must be eliminated or, when working with image intensities, they must be counted with multiplicity. Once this is settled, any complex Pisot number $g$ is a possible expansion factor for an IFS, and this can be treated as integer data, since $g$ is represented by an integer matrix $G$ in a $d$-dimensional space. The neighbor calculations are performed in the $d$-dimensional integer lattice and then projected to the two-dimensional eigenspace of $G$ that corresponds to the eigenvalue $g$. The point cloud is drawn in this plane. This method, implemented in Mekhontsev's IFStile package [9], is discussed in ([26] Section 3).
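One way to realize this projection is sketched here under our own assumptions; it is not taken from IFStile. Write $g$ as a root of a monic integer polynomial $x^d+c_{d-1}x^{d-1}+\dots+c_0$ and let $G$ be its companion matrix. Then $\pi(x)=\sum_{i=0}^{d-1}x_i g^i$ maps $\mathbb{Z}^d$ into the complex plane with $\pi(Gx)=g\,\pi(x)$. Thus $G$ becomes multiplication by $g$, and the kernel of $\pi$ is the sum of the other eigenspaces. The example polynomial $x^3+x+1$ is our own choice: its complex roots have modulus about 1.21 and its real root is about $-0.68$, so the complex root with positive imaginary part is a complex Pisot number. The roots are computed with the Durand–Kerner iteration, and all function names are hypothetical.

```python
def poly_roots(coeffs, iters=200):
    """Roots of x^n + coeffs[n-1] x^(n-1) + ... + coeffs[0] by Durand-Kerner iteration."""
    n = len(coeffs)

    def p(z):
        return z ** n + sum(c * z ** k for k, c in enumerate(coeffs))

    roots = [(0.4 + 0.9j) ** k for k in range(n)]  # standard distinct starting values
    for _ in range(iters):
        updated = []
        for i, z in enumerate(roots):
            denom = 1
            for j, w in enumerate(roots):
                if j != i:
                    denom *= z - w
            updated.append(z - p(z) / denom)
        roots = updated
    return roots


def companion(coeffs):
    """Integer companion matrix G with characteristic polynomial x^n + ... + coeffs[0]."""
    n = len(coeffs)
    G = [[0] * n for _ in range(n)]
    for i in range(1, n):
        G[i][i - 1] = 1            # G e_{i-1} = e_i (0-based basis vectors)
    for i in range(n):
        G[i][n - 1] = -coeffs[i]   # last column: -(c_0, ..., c_{n-1})
    return G


def mat_vec(G, x):
    """Integer matrix-vector product G x."""
    return [sum(G[i][j] * x[j] for j in range(len(x))) for i in range(len(G))]


def project(x, gamma):
    """Lattice vector x -> sum_i x_i gamma^i; satisfies project(G x) = gamma * project(x)."""
    return sum(xi * gamma ** i for i, xi in enumerate(x))
```

With `coeffs = [1, 1, 0]` and `gamma = max(poly_roots(coeffs), key=lambda z: z.imag)`, projecting lattice points with `project` places the point cloud in the plane where $G$ acts as multiplication by `gamma`.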

New classes of spaces. While two-dimensional self-similar sets have been investigated by many authors since 1990, there are almost no studies of the three-dimensional case. On the one hand, it is difficult to draw 3D fractals (see, however, [9] and Mandelbrot-type fractal demonstrations on various websites). On the other hand, there are very few integer-valued matrices of similitudes or isometries on $\mathbb{R}^3$.

Our program can easily be modified for the three-dimensional case. With $g=2$ and the symmetry group of the cube, we have a very rich family of examples. It is much more general than the Sierpiński relatives, since we can go from $m=3$ maps with dimension $\log 3/\log 2\approx 1.58$ up to $m=7$ maps with dimension $\log 7/\log 2\approx 2.8$. We expect new geometric insight from such examples, even for Cantor sets. The problem is to visualize and analyze the 3D constructions. With our point cloud method, we need less than twice the memory of the planar case for the same number of neighbors, and we will project the three-dimensional point clouds onto the observer's plane. This is a first step. A good 3D representation must include light sources and ray tracing, and working with intensities instead of point clouds may require hardware beyond a PC.
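Projecting a 3D point cloud onto the observer's plane amounts to an orthographic projection onto two unit vectors orthogonal to the viewing direction. The following is a minimal sketch. The parametrization by `azimuth` and `elevation` angles (in radians) and the function names are our own choices, not part of the program.

```python
import math


def view_frame(azimuth, elevation):
    """Orthonormal frame (right, up, view); view points from the origin to the observer."""
    ca, sa = math.cos(azimuth), math.sin(azimuth)
    ce, se = math.cos(elevation), math.sin(elevation)
    view = (ce * ca, ce * sa, se)
    right = (-sa, ca, 0.0)           # horizontal and orthogonal to view
    up = (-se * ca, -se * sa, ce)    # completes the orthonormal frame
    return right, up, view


def dot(u, v):
    return u[0] * v[0] + u[1] * v[1] + u[2] * v[2]


def project_cloud(points, azimuth, elevation):
    """Orthographic projection of 3D points to 2D screen coordinates (right, up)."""
    right, up, _ = view_frame(azimuth, elevation)
    return [(dot(p, right), dot(p, up)) for p in points]
```

Points on the line of sight project to the screen center. Depth along `view` is discarded here; it would be needed for the shading and ray tracing mentioned above.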

A similar remark concerns the study of graph-directed IFSs. Concrete examples have rarely been studied, and their potential, in particular for 3D constructions, has not been exploited. They are implemented in [9]. We expect that in 3D, self-similar graph-directed constructions go far beyond ordinary IFSs. This question concerns mathematical exploration rather than computer programming.

Theoretical problems. So far, we have no rigorous method to decide whether a self-similar construction represents a Cantor set, although there are theoretical results [27]. This is an annoying situation, especially in 3D, where our eyesight can be helpless. An algorithm for disconnectedness probably has to use the neighbor graph and thus would be applicable only to relatively simple IFSs. However, one can also think about computational methods for the case where the set is given as a finite set of 3D pixels. If an attractor is neither connected nor a Cantor set, one would like to know the structure of its non-trivial connected components. In the case of self-similar sets, we conjecture that they are given by a graph-directed IFS.
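Once a set is given as a finite collection of voxels, its connected components can at least be counted. The following is a minimal sketch, assuming integer voxel coordinates and 26-neighborhood adjacency (voxels sharing a face, an edge or a corner). It is a generic breadth-first search, not a decision procedure for Cantor sets. If the voxels are exactly the grid cubes that meet $A$, a connected $A$ gives one component at every resolution, whereas for a Cantor set the count grows without bound as the grid is refined. Counts over several resolutions can therefore only hint at the answer. The function name is hypothetical.

```python
from collections import deque
from itertools import product


def voxel_components(voxels):
    """Number of 26-connected components of a finite set of integer voxels (x, y, z)."""
    remaining = set(voxels)
    offsets = [d for d in product((-1, 0, 1), repeat=3) if d != (0, 0, 0)]
    count = 0
    while remaining:
        count += 1                          # start a new component
        queue = deque([remaining.pop()])
        while queue:
            x, y, z = queue.popleft()
            for dx, dy, dz in offsets:      # visit all 26 neighbors
                nb = (x + dx, y + dy, z + dz)
                if nb in remaining:
                    remaining.remove(nb)
                    queue.append(nb)
    return count
```

Two voxels touching only at a corner form one component, while voxels at distance 2 along an axis form two.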

In the family B of data files, there are four Cantor sets and four non-Cantor sets, which can be distinguished by magnification. We think the visualization can help understand Cantor sets and the structure of components.

All Cantor sets are homeomorphic by a classical theorem, but their metric structure differs. By eye, we can distinguish the photos of different IFSs. So, it is a challenge to find rigorous methods to describe and classify Cantor sets with a fairly homogeneous structure from a metric viewpoint.

Other questions are related to our algorithm. As Figure 2 demonstrates, our empirical greedy choice procedure usually leads to dense patterns faster than the uniform choice of subpieces. Is there a rigorous method that finds the densest patterns in self-similar constructions? This is connected with the dynamical interior of $A$, which is defined in [20] with the help of the neighborhood graph. In the case of tilings, it coincides with the topological interior. Lévy searched for a long time for an interior point in his curve, which he found in 1937. As Figure 2 indicates, we needed more than 50 steps of random magnification to have the window completely filled with neighbor curves. What is the fastest algorithm to find an interior point in a complicated self-similar tile? Which methods can extract geometric information from the ‘photos’? While colleagues in the sciences have their specific methods to describe soil, smoke and snow, we should have some methodology to understand simple mathematical model sets.