1. Introduction

Fixed point theory plays an important role in the study of iterated function systems and fractals. It is well known that the simplest fractals are self-similar sets, which can be viewed as fixed points of Hutchinson–Barnsley transformations. These transformations act on the hyperspace of compact subsets of the underlying space, equipped with the Pompeiu–Hausdorff distance. Studying these Hutchinson–Barnsley operators requires iterated function systems. Iterated function systems (IFSs) were first introduced and studied by Hutchinson [1] in 1981 and were later popularized by Barnsley [2] in 1988, as a natural generalization of the well-known Banach contraction principle [3] (which was further generalized by Nadler [4] to multi-valued mappings). IFSs provide a method for defining fractals as attractors of specific discrete dynamical systems and are highly effective in applications such as fractal image compression. They are also applied in computer graphics, quantum physics, wavelet analysis, and various other related fields. Mathematicians and computer scientists have subsequently shown considerable interest in IFSs, as indicated by research articles such as those by Barnsley and Demko [5] in 1985, Marchi [6] in 1999, Wicks [7] in 1991, Duvall et al. [8] in 1993, Block and Keesling [9] in 2002, Kieninger [10] in 2002, Fišer [11] in 2002, and Andres and Fišer [12] in 2003.

Since 1981, IFSs have given rise to a growing variety of fractals, and over the years they have evolved into powerful tools for creating and analyzing fractal structures. When constructing a fractal, established fixed point results in suitably designed spaces often serve as a source of inspiration and guidance. In [13], Kashyap et al. employed the Krasnoselskii fixed point theorem to construct a new fractal, reminiscent of the classical fractal set originally introduced by Mandelbrot [14]. Over the past two decades, many authors have extended IFS theory to generalized contractions, countable IFSs, multi-function systems, and more general spaces. For example, Georgescu [15] explored IFSs composed of generalized convex contractions in complete strong b-metric spaces; Gwóźdź-Lukawska and Jachymski [16] pioneered the application of the Hutchinson–Barnsley theory to infinite IFSs; Ioana and Mihail [17] studied IFSs consisting of φ-contractions; Klimek and Kosek [18] addressed generalized IFSs, multi-functions, and Cantor sets; Maślanka [19] investigated a typical compact set as the attractor of generalized IFSs of infinite order; Maślanka and Strobin [20] discussed generalized IFSs defined on the ℓ∞-sum of a metric space; and Dumitru [21] explored generalized IFSs involving Meir–Keeler functions. Torre et al. [22,23,24] applied the concept of IFSs to multi-functions.

Recently, Prithvi et al. [25,26,27] established generalized IFSs based on generalized Kannan mappings and provided some remarks on countable IFSs via the partial Hausdorff metric and on non-conventional IFSs. Verma and Priyadarshi [28] studied new types of fractal functions for general datasets. Amit et al. [29] introduced IFSs based on a class of non-stationary contractions. Ahmad et al. [30] worked on fractals of a generalized Hutchinson operator, while Rizwan et al. [31] presented fractals of two types of enriched Hutchinson–Barnsley operators. Sahu et al. [32] studied fractals of K-iterated function systems. Thangaraj et al. [33] generated fractals in controlled metric spaces via Kannan contractions. Chandra and Verma [34] constructed fractals using Kannan mappings and analyzed their smoothness.

Applications of IFSs to random sampling and Monte Carlo simulations, and to cryptographic algorithms, can be found in [35] and [36], respectively.

Let $(X, d)$ be a complete metric space and $T: X \to X$ be a self-map on it. Then $T$ is said to be a Banach contraction if there exists $\lambda \in [0, 1)$ such that

$$ d(Tx, Ty) \le \lambda\, d(x, y) \quad \text{for all } x, y \in X. \tag{1} $$

The constant $\lambda$ is called the contraction factor of $T$. Banach [3] proved that every Banach contraction on a complete metric space has a unique fixed point, say $x^{*}$, and that $T^{n}x_0 \to x^{*}$ as $n \to \infty$ for any initial guess $x_0 \in X$.
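To make the iteration in Banach's theorem concrete, here is a minimal Python sketch of the Picard iteration $x_{n+1} = Tx_n$, together with the standard a priori estimate $d(x_n, x^{*}) \le \frac{\lambda^{n}}{1-\lambda}\, d(x_1, x_0)$. The map $T(x) = x/2 + 1$ on $\mathbb{R}$ (contraction factor $1/2$, fixed point $2$) and the function names are hypothetical choices made only for illustration.

```python
def picard_iterate(T, x0, tol=1e-12, max_iter=10_000):
    """Iterate x_{n+1} = T(x_n) until two successive iterates differ by less than tol."""
    x = x0
    for n in range(1, max_iter + 1):
        x_next = T(x)
        if abs(x_next - x) < tol:
            return x_next, n
        x = x_next
    raise RuntimeError("Picard iteration did not converge within max_iter steps")


def a_priori_bound(lam, x0, x1, n):
    """Banach's a priori estimate: d(x_n, x*) <= lam**n / (1 - lam) * d(x1, x0)."""
    return lam ** n / (1 - lam) * abs(x1 - x0)


def T(x):
    """Hypothetical Banach contraction on the real line with factor 1/2 and fixed point 2."""
    return x / 2 + 1


fixed_point, steps = picard_iterate(T, x0=10.0)
print(fixed_point, steps)
```

For this affine map the a priori bound is attained exactly, since $x_n - 2 = 8/2^{n}$ when $x_0 = 10$.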

Following Banach’s theorem, Kannan [37] introduced a new class of mappings, known as Kannan contractions, which need not be continuous. Karapınar [38] introduced a new form of the Kannan contraction, known as an interpolative Kannan operator. Further results on this topic can be found in [39,40,41,42].

Simply put, an IFS is a complete metric space together with a finite collection of contraction maps on it. The Banach contraction theorem guarantees the existence and uniqueness of the fixed point of the transformation $W: \mathcal{H}(X) \to \mathcal{H}(X)$ defined in terms of these contractions; this fixed point is called the attractor of the IFS. We now recall some notions needed to study iterated function systems. If $(X, d)$ is a complete metric space, we denote by $\mathcal{H}(X)$ the family of all non-empty compact subsets of $X$, known as the Hausdorff space on $X$. The distance between a point $x \in X$ and a subset $B \in \mathcal{H}(X)$ is given by

$$ d(x, B) = \min\{ d(x, y) : y \in B \}. $$

The distance between two subsets $A, B \in \mathcal{H}(X)$ is given by

$$ d(A, B) = \max\{ d(x, B) : x \in A \}. $$

Finally, the Hausdorff distance between any two elements $A, B \in \mathcal{H}(X)$ is

$$ h(A, B) = \max\{ d(A, B),\, d(B, A) \}. $$

The function $h$ satisfies all properties of a metric and is thus known as the Hausdorff metric on $\mathcal{H}(X)$ induced by the metric $d$. The completeness of $(X, d)$ implies the completeness of $(\mathcal{H}(X), h)$.
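As a quick illustration of these three distances, here is a minimal Python sketch for finite (hence compact) subsets of the plane with the Euclidean metric; the function names are our own. It shows that $d(A, B)$ is not symmetric, which is why $h$ takes the maximum over both directions.

```python
import math


def point_to_set(x, B, d):
    """d(x, B) = min over y in B of d(x, y); B is a non-empty finite set."""
    return min(d(x, y) for y in B)


def set_to_set(A, B, d):
    """d(A, B) = max over x in A of d(x, B); not symmetric in general."""
    return max(point_to_set(x, B, d) for x in A)


def hausdorff(A, B, d):
    """Hausdorff distance h(A, B) = max{d(A, B), d(B, A)}."""
    return max(set_to_set(A, B, d), set_to_set(B, A, d))


euclid = math.dist  # Euclidean metric on points given as coordinate tuples
A = [(0.0, 0.0)]
B = [(1.0, 0.0), (2.0, 0.0)]
print(set_to_set(A, B, euclid), set_to_set(B, A, euclid), hausdorff(A, B, euclid))
```

Here $d(A, B) = 1$ but $d(B, A) = 2$, so $h(A, B) = 2$.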

Lemma 1. Suppose

is a metric space and

. Then, the following statements hold:

- (i)

;

- (ii)

;

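- (iii)

If $\{A_i\}_{i=1}^{n}$ and $\{B_i\}_{i=1}^{n}$ are two finite collections of sets in $\mathcal{H}(X)$, then

$$ h\Big(\bigcup_{i=1}^{n} A_i,\ \bigcup_{i=1}^{n} B_i\Big) \le \max_{1 \le i \le n} h(A_i, B_i). $$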

Using the above notions, we define an IFS on $X$ as follows:

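Definition 1. Suppose $(X, d)$ is a complete metric space and $\{w_i : X \to X,\ i = 1, 2, \ldots, N\}$ is a finite family of continuous contraction mappings with contraction factors $s_i \in [0, 1)$. Then $\{X; w_1, w_2, \ldots, w_N\}$ is called a (hyperbolic) iterated function system.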

The main result in this direction, due to Barnsley [2], is stated as follows:

Theorem 1. Let $\{X; w_1, \ldots, w_N\}$ be an IFS with contraction factors $s_i$ for all $i \in \{1, \ldots, N\}$. Then the transformation $W: \mathcal{H}(X) \to \mathcal{H}(X)$ defined by

$$ W(B) = \bigcup_{i=1}^{N} w_i(B), \quad B \in \mathcal{H}(X), $$

is also a contraction on $(\mathcal{H}(X), h)$ with contraction factor $s = \max\{s_1, \ldots, s_N\}$. Furthermore, $W$ has a unique attractor $A \in \mathcal{H}(X)$ such that

$$ A = W(A) = \bigcup_{i=1}^{N} w_i(A), $$

and $A = \lim_{n \to \infty} W^{n}(B)$ for any initial choice $B \in \mathcal{H}(X)$. Here, $W^{n}$ denotes the $n$-fold composition $W \circ W \circ \cdots \circ W$.

In practice, the mappings used in an IFS are typically affine transformations. The dynamics of the iterates of each individual transformation may not be particularly interesting, but the combined action of the whole collection of contraction mappings often produces striking results. For example, in two dimensions, consider an IFS $\{\mathbb{R}^2; w_1, w_2, w_3\}$ consisting of three affine contractions, each with contraction factor $1/2$. The fixed points of these contractions lie at the vertices of an equilateral triangle, which may not be particularly interesting; however, the corresponding attractor of the IFS is the Sierpinski gasket, illustrated in Figure 1.

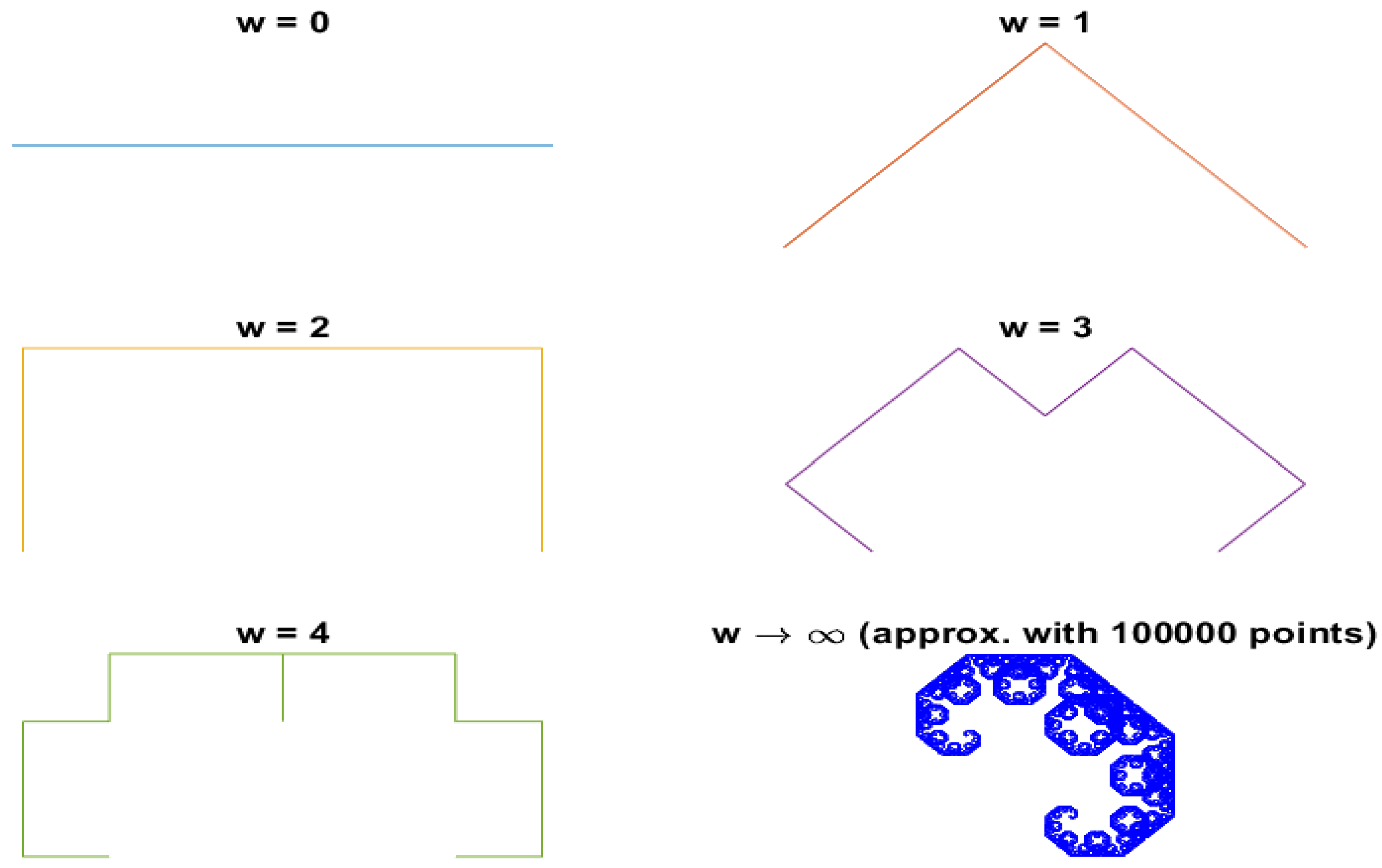
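The explicit maps of this example were lost in extraction, so the sketch below assumes a common choice: $w_1(p) = p/2$, $w_2(p) = p/2 + (1/2, 0)$, and $w_3(p) = p/2 + (1/4, \sqrt{3}/4)$, whose fixed points are the vertices $(0,0)$, $(1,0)$, and $(1/2, \sqrt{3}/2)$. It applies the operator $W$ of Theorem 1 deterministically to $B_0 = \{(0,0)\}$ and prints the contraction estimate $h(W^{n+1}(B_0), W^{n}(B_0)) \le s^{n}\, h(W(B_0), B_0)$ with $s = 1/2$. Finite point sets are compact, so every iterate stays in $\mathcal{H}(\mathbb{R}^2)$.

```python
import math

SQRT3 = math.sqrt(3.0)

# Assumed maps: w_i(p) = p / 2 + t_i (contraction factor s = 1/2).
# Their fixed points are (0, 0), (1, 0) and (1/2, sqrt(3)/2).
TRANSLATIONS = [(0.0, 0.0), (0.5, 0.0), (0.25, SQRT3 / 4)]


def w(i, p):
    """Apply the i-th similarity map to the point p = (x, y)."""
    tx, ty = TRANSLATIONS[i]
    return (p[0] / 2 + tx, p[1] / 2 + ty)


def hutchinson(B):
    """W(B) = w_1(B) ∪ w_2(B) ∪ w_3(B) for a finite set B stored as a list."""
    return [w(i, p) for i in range(3) for p in B]


def iterate(B, n):
    """Return W^n(B)."""
    for _ in range(n):
        B = hutchinson(B)
    return B


def hausdorff(A, B):
    """Hausdorff distance between finite planar point sets (Euclidean metric)."""
    d_AB = max(min(math.dist(a, b) for b in B) for a in A)
    d_BA = max(min(math.dist(a, b) for a in A) for b in B)
    return max(d_AB, d_BA)


B0 = [(0.0, 0.0)]
for n in range(5):
    gap = hausdorff(iterate(B0, n + 1), iterate(B0, n))
    bound = 0.5 ** n * hausdorff(hutchinson(B0), B0)
    print(n, gap, bound)  # successive iterates approach each other geometrically
```

Plotting `iterate(B0, 8)` (6561 points) with any scatter-plot routine gives a picture close to Figure 1.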

This fractal, characterized by self-similarity and a recursive structure, emerges as the limit of the iterated mappings and illustrates the complexity inherent in fractal geometry. Let us now explore another example: the Dragon Curve, a classic object of fractal geometry. This structure emerges from the interplay of two affine mappings, each of which modifies the pattern through its own geometric action. The first mapping combines a diagonal reflection with a scaling, while the second combines a reflection with a translation. Together, these maps produce the Dragon Curve fractal: as the number of iterations increases, simple line segments evolve into intricate patterns that repeat across a range of scales. The fractal generated by this IFS is shown in Figure 2.
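The matrices of the two maps did not survive in this text, so the sketch below assumes the standard Heighway dragon maps from the literature, written in complex form as $f_1(z) = (1+i)z/2$ and $f_2(z) = 1 - (1-i)z/2$; these may differ from the maps intended above. Both are similarities with contraction factor $1/\sqrt{2}$. The code applies the Hutchinson operator $n$ times to the single point $0$, keeping duplicates, so the result has $2^{n}$ entries.

```python
def f1(z):
    """First dragon map: rotate by 45 degrees and scale by 1/sqrt(2)."""
    return (1 + 1j) * z / 2


def f2(z):
    """Second dragon map: rotate by 135 degrees, scale by 1/sqrt(2), then shift by 1."""
    return 1 - (1 - 1j) * z / 2


def dragon_points(n, start=0j):
    """Return W^n({start}) as a list of 2**n complex numbers (duplicates kept)."""
    pts = [start]
    for _ in range(n):
        pts = [f(z) for f in (f1, f2) for z in pts]
    return pts


pts = dragon_points(12)
xs = [z.real for z in pts]
ys = [z.imag for z in pts]
print(len(pts), min(xs), max(xs), min(ys), max(ys))
```

Since $|f_1(z)| \le |z|/\sqrt{2}$ and $|f_2(z)| \le 1 + |z|/\sqrt{2}$, every iterate starting from $0$ stays in the disk of radius $1/(1 - 1/\sqrt{2})$; passing `xs` and `ys` to a scatter plot shows the curve.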

The given IFS consists of two mappings. Such a system can serve as a tool for producing pseudo-random numbers: by repeatedly applying these mappings to given initial data, one obtains values whose properties resemble those of random sequences. Although the resulting sequence is deterministic and hence not truly random, this scheme is useful in many settings that require randomness, such as random sampling techniques, Monte Carlo simulations [35], and cryptographic algorithms [36].

Monte Carlo simulations are computational methods that employ repeated random sampling to approximate complex mathematical and physical phenomena. They are widely used to model systems with uncertain variables and to assess risks in areas such as finance, engineering, and physics. By generating and examining many random samples, Monte Carlo methods can estimate probabilities and outcomes in cases where analytical solutions are not available. Cryptographic algorithms, on the other hand, are designed to protect data through encryption and decryption, ensuring confidentiality, integrity, and authenticity. These algorithms, which include both symmetric-key and asymmetric-key methods, are crucial for safeguarding sensitive information and securing digital communications. Monte Carlo simulations are also employed in cryptography, for example, in key generation and algorithm validation.

The resulting graph displays the progression of the sequence generated by the IFS. The generation of pseudo-random numbers is central to mathematics and to related fields such as numerical optimization, simulation, statistical analysis, and cryptography. Pseudo-random numbers allow researchers to introduce uncertainty and variability into mathematical models, to study how systems behave under different conditions, and to make probabilistic predictions. Thus, the use of IFSs to produce pseudo-random numbers is relevant to both mathematical research and computational workflows.

In 1969, Nadler [4] generalized the fixed point theorem of [3] from single-valued to multi-valued mappings. We use the following lemma from [4].

Lemma 2 ([

4]).

Suppose

is a metric space,

and

. Then for each

, there exists

, such that we have the following:

In 1969, Kannan [37] established a fixed point theorem for Kannan operators, which need not be continuous. A mapping $T: X \to X$ is called a Kannan contraction mapping if there exists $Y \in [0, 1/2)$ such that the following inequality holds:

$$ d(Tx, Ty) \le Y\,\big[\, d(x, Tx) + d(y, Ty) \,\big] \quad \text{for all } x, y \in X. \tag{2} $$

This discovery led to further research into contractive-type conditions that do not require the continuity of $T$. Kannan’s theorem has been generalized in many ways by several authors, extending the scope of metric fixed point theory in various directions.
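A minimal sketch, assuming the hypothetical map $T(x) = x/4$ for $0 \le x < 1$ and $T(1) = 1/8$ on $X = [0, 1]$ with the usual metric. This $T$ is discontinuous at $1$, yet a short hand computation shows that it satisfies (2) with constant $1/3$: for $x, y < 1$ one uses $|x - y| \le x + y$, and when one point equals $1$ the left-hand side is at most $1/8$ while the right-hand side is at least $7/24$. The code estimates the smallest admissible constant on a grid (numerical evidence only, not a proof) and runs the Picard iteration toward the fixed point $0$.

```python
def T(x):
    """Hypothetical Kannan map on [0, 1]: x/4 for x < 1 and 1/8 at x = 1 (discontinuous at 1)."""
    return x / 4 if x < 1 else 1 / 8


def kannan_ratio(T, points):
    """Largest d(Tx, Ty) / (d(x, Tx) + d(y, Ty)) over sampled pairs with a positive denominator."""
    best = 0.0
    for x in points:
        for y in points:
            denom = abs(x - T(x)) + abs(y - T(y))
            if denom > 0:
                best = max(best, abs(T(x) - T(y)) / denom)
    return best


grid = [k / 200 for k in range(201)]  # sample points of [0, 1], including 0 and 1

x = 1.0
for _ in range(60):
    x = T(x)  # Picard iterates 1/8, 1/32, ... tend to the unique fixed point 0

print(kannan_ratio(T, grid), x)
```

The estimated constant stays below $1/2$, as required in (2), even though $T$ is not continuous.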

Recently, Karapınar [38] extended the scope of Kannan contraction mappings by introducing a new class known as interpolative Kannan-type contraction mappings.

Definition 2 ([

38]).

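A mapping $T: X \to X$ is termed an interpolative Kannan-type contraction if there exist $\lambda \in [0, 1)$ and $\alpha \in (0, 1)$ such that the following condition holds:

$$ d(Tx, Ty) \le \lambda\, [d(x, Tx)]^{\alpha}\, [d(y, Ty)]^{1-\alpha} \quad \text{for all } x, y \in X \setminus \operatorname{Fix}(T), \tag{3} $$

where $\operatorname{Fix}(T) = \{x \in X : Tx = x\}$. To highlight the constants $\lambda$ and $\alpha$ involved in (3), we refer to an interpolative Kannan-type contraction mapping as a $(\lambda, \alpha)$–IKC. Reference [38] demonstrated that any interpolative Kannan-type contraction mapping defined on a complete metric space possesses a unique fixed point.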
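The following sketch, written with the constants denoted $\lambda$ and $\alpha$ as above, computes for a given $\alpha$ the smallest $\lambda$ for which (3) holds on a finite set. The toy map on $X = \{1, 2, 3\}$ with $T1 = 2$, $T2 = 2$, $T3 = 1$ is a hypothetical example chosen for illustration and is not taken from the source; its only fixed point is $2$, and for $\alpha \in (0, 1)$ one finds $\lambda = \max\{2^{\alpha-1}, 2^{-\alpha}\} < 1$.

```python
def ikc_lambda(X, T, d, alpha):
    """Smallest lambda with d(Tx, Ty) <= lambda * d(x, Tx)**alpha * d(y, Ty)**(1 - alpha)
    for all x, y in the finite set X that are not fixed points of T.

    X is a list of points, T a dict mapping each point to its image, d a metric.
    """
    non_fixed = [x for x in X if T[x] != x]
    lam = 0.0
    for x in non_fixed:
        for y in non_fixed:
            rhs = d(x, T[x]) ** alpha * d(y, T[y]) ** (1 - alpha)  # positive off Fix(T)
            lam = max(lam, d(T[x], T[y]) / rhs)
    return lam


def d(a, b):
    """Usual metric on the real line."""
    return abs(a - b)


X = [1, 2, 3]
T = {1: 2, 2: 2, 3: 1}  # hypothetical toy map with Fix(T) = {2}
lam = ikc_lambda(X, T, d, alpha=0.5)
print(lam)  # about 0.7071, i.e. 2 ** -0.5
```

Because the returned value is below $1$, this $T$ is a $(\lambda, 1/2)$–IKC with $\lambda = 2^{-1/2}$; pairs involving the fixed point are excluded, exactly as in (3).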

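The multi-valued version of the above definition is as follows:

Definition 3. A map $T: X \to \mathcal{H}(X)$ is called a multi-valued interpolative Kannan-type contraction if there exist $\lambda \in [0, 1)$ and $\alpha \in (0, 1)$ such that the following holds:

$$ h(Tx, Ty) \le \lambda\, [d(x, Tx)]^{\alpha}\, [d(y, Ty)]^{1-\alpha} \quad \text{for all } x, y \in X \setminus \operatorname{Fix}(T), \tag{4} $$

where $\operatorname{Fix}(T) = \{x \in X : x \in Tx\}$. To highlight the constants involved in (4), we refer to a multi-valued interpolative Kannan-type contraction as a $(\lambda, \alpha)$–MIKC.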

Recently, Sahu et al. [32] studied IFSs consisting of Kannan contraction mappings. They defined the concept of a K-IFS (KIFS) by selecting finitely many Kannan contractions $w_1, \ldots, w_N$ on a complete metric space $(X, d)$, and obtained the following results, including a version of the famous Collage Theorem:

Theorem 2 ([

32])

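Consider a continuous Y–Kannan mapping $w$ on $(X, d)$. Then the mapping $w: \mathcal{H}(X) \to \mathcal{H}(X)$, defined by $w(B) = \{w(x) : x \in B\}$ for every $B \in \mathcal{H}(X)$, is also a Y–Kannan mapping on $(\mathcal{H}(X), h)$.

Theorem 3 ([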

32]).

Consider a Y–KIFS $\{X; w_1, \ldots, w_N\}$. Then the Hutchinson operator $W: \mathcal{H}(X) \to \mathcal{H}(X)$ defined by $W(B) = \bigcup_{i=1}^{N} w_i(B)$ for all $B \in \mathcal{H}(X)$ is a continuous Y–Kannan mapping on the complete metric space $(\mathcal{H}(X), h)$. Its unique fixed point, also referred to as the attractor $A \in \mathcal{H}(X)$ of the KIFS, satisfies $A = W(A) = \bigcup_{i=1}^{N} w_i(A)$ and is given by $A = \lim_{n \to \infty} W^{n}(B)$ for any $B \in \mathcal{H}(X)$.

Building on these findings, Sahu et al. [32] presented a Collage Theorem for KIFSs. This theorem is an important tool in the theory of IFSs built from Kannan contraction mappings, as it helps describe the dynamics of systems governed by these operators.

Theorem 4 ([

32]).

In a complete metric space

, take

as a fixed set in

, with

and the iterated function system (KIFS)

, with a contractivity factor Y. If

is the attractor of the given KIFS and then

This inequality relates the distance between the given set and the attractor to the factors Y and ϵ, thereby measuring how closely the attractor approximates that set.

This study introduces a new iterated function system and a new iterated multi-function system, called IKC-IFS and IKC-IMS, respectively, for interpolative Kannan operators, intended to cover a broader class of mappings. We also establish a version of the Collage Theorem for interpolative Kannan contractions. Additionally, we establish the well-posedness of the attractor within the framework of IKC-IMS. The classical Hutchinson operator is based on strict contraction mappings, and in many practical scenarios these mappings may not meet such strict criteria. The interpolative Kannan operator addresses this by allowing more general contraction conditions, thus accommodating a wider range of mappings.

2. Main Results

We begin by stating and proving several propositions that illustrate the relationship between the self-mappings

for

and the contraction pair

. Additionally, we will prove the uniqueness of the fixed point of

, if such a fixed point exists.

Proposition 1. Let

be a continuous

–IKC, where

is a metric space. Then

satisfies the following expression for all

and

:

Furthermore, in the limiting case,

Proof. By the definition of

–IKC, we have the following:

In the limiting case, we obtain the following:

since

as

. □

Next, we prove that every continuous interpolative Kannan operator on a complete metric space satisfies inequality (6).

Proposition 2. Let

be a continuous

–IKC on the complete metric space

, and

. Then, we have the following:

Proof. Since

, we have

and

By using the continuity of

, the triangle inequality, and Proposition 1, we obtain the following:

□

Next, we state and prove the following lemma, which plays a crucial role in our main findings:

Lemma 3. If η,

,

, and

are non-negative real numbers, then we have the following:

Proof. To prove that

, where

and

are non-negative real numbers, we use case analysis based on the values of

and

. Let

and

. We need to analyze the maximum values in two steps: first for the pairs

and

, and then for the products

and

.

Case 1: and

In this case,

and

. So,

. Since

is one of the elements being compared in

, it follows that

and, hence,

.

Case 2: and

In this case,

and

. So,

. Since

(because

) and

(because

), it follows that

and, hence,

.

Case 3: and

In this case,

and

. So,

. Since

(because

) and

(because

), it follows that

and, hence,

.

Case 4: and

In this case,

and

. So,

. Since

and

, it follows that

and, hence,

.

Hence, in all cases, we show the following:

Thus, the inequality is proven for all non-negative real numbers

and

. □

Next, we generalize the above lemma to finitely many numbers as follows:

Lemma 4. For any non-negative real numbers

,

, where

, the following inequality holds:

Proof. The proof is straightforward and follows from Lemma 3 by induction on the number of terms. □

Next, we define the interpolative Kannan iterated function system and the interpolative Kannan iterated multi-function system, both of which are built from interpolative Kannan contraction operators. As noted in the Introduction, the traditional Hutchinson operator depends on strict contraction mappings, which many mappings arising in practice fail to satisfy; interpolative Kannan operators relax these requirements and accommodate mappings that satisfy more general contraction conditions.

Definition 4. Let

be a metric space and

be a finite collection of interpolative Kannan contraction operators (IKCs) with contraction pair

. Then, we call

, a

–IKC-IFS or simply IKC-IFS.

Similarly, the IMS for the multi-valued interpolative Kannan contraction operators is defined as follows:

Definition 5. Suppose

is a metric space and

is a finite collection of multi-valued interpolative Kannan contraction operators (MIKCs) with the contraction pair

. Then, we call

, a

–IKC-IMS.

Our first result, stated below, sets the stage for the subsequent analysis.

Theorem 5. Let

be a continuous

–IKC. Then

is defined by

,

, and maps

into itself, and

is also a

–IKC on

.

Proof. Since

is a continuous mapping,

maps compact subsets of

into compact subsets of

. That is,

maps

into itself.

Secondly, we have the following:

Similarly,

Thus, using (7) and (8), we obtain the following:

This shows that

is also a

–IKC. □

We now define the Hutchinson operator and the Hutchinson-like operator associated with interpolative Kannan mappings as follows:

Definition 6. Let

be a metric space and

, where

, be a finite collection of

–IKCs. Then the transformation

is defined by the following:

It is called the Hutchinson operator equipped with IKCs.

Definition 7. Let

be a metric space and

, where

is a finite collection of

–MIKCs. Then the transformation

is defined by the following:

It is called the Hutchinson-like operator associated with MIKCs. In the following, we present some results about the Hutchinson operator formed by interpolative Kannan operators.

Theorem 6. Suppose

is a metric space and

, where

is a finite collection of

–IKCs. Then the transformation

given by the following:

is also a

–IKC on

, with

and

.

Proof. In light of Lemmas 1 and 4, we have the following:

Thus,

is also a

–IKC, where

. □

By Theorem 6, the Hutchinson operator equipped with interpolative Kannan contractions is itself an interpolative Kannan contraction. We therefore simply call it the

–IKC–Hutchinson operator, where

and

.

Theorem 7. Consider the

–IKC-IFS

on a complete metric space

and the

–IKC

defined by

where

. Then,

has a unique attractor of the given IFS, say

, satisfying

, and is given by

for any initial choice of

.

Proof. Let

be an arbitrary point and define the sequence

. By Theorem 6,

is a

–IKC, where

. Then, we have the following:

Letting

, we obtain

.

Next, we show that

is a Cauchy sequence in

. More precisely, for

, we obtain the following:

This implies that

is a Cauchy sequence, and by the completeness of

, we can find

such that

as

.

Next, we show that

. Using the definition of

–IKC, we have the following:

Taking

, we obtain

, which yields

, and

is an attractor of the

–IFS. To show uniqueness, suppose, to the contrary, that there exists another attractor

with

. Then, by definition, we have the following:

This is a contradiction. Hence, the fixed point of

or the attractor of the

–IFS is unique. □

Example 1. Consider a system of two operators defined by the following:

and

on

with the usual metric. Suppose that both operators

and

are Kannan contractions, that is, that they satisfy the Kannan inequality (2). First, consider

with

and

. Then,

and

. For some

, inequality (2) implies the following:

This leads to a contradiction, so

is not a Kannan mapping. A similar argument can be applied to

by choosing

and

. It can be verified that both

and

satisfy inequality (3) with

and

. Hence, these operators form an interpolative Kannan iterated function system (IKC-IFS). Consequently, the results of Sahu et al. [32] cannot be applied to this example. On the other hand, the corresponding

–IKC–Hutchinson operator is defined by the following:

The attractor of the IKC–Hutchinson operator is the set

.

Next, we present some results for the Hutchinson-like operator associated with an iterated multi-function system.

Theorem 8. Suppose

is a metric space and

, where

is a finite collection of

–MIKCs. Then the transformation

given by the following:

is also a

–MIKC on

, where

and

.

Proof. By Lemmas 1 and 4, we obtain the following:

Thus,

is also a

–MIKC, where

. □

Theorem 9. Consider the

–IKC-IMS

on a complete metric space

and the

–IKC

defined by the following:

where

. Then

has a unique attractor of the given IMS, say

, satisfying

and is given by

, for any initial choice of

.

Proof. Let

be an arbitrary point and construct the sequence

as follows: Suppose

such that

. By Lemma 2, we can find

such that

By induction, we can establish the sequence

such that we have the following:

Letting

, we obtain

.

Next, we show that

is a Cauchy sequence in

. More precisely, for

, we obtain the following:

This implies that

is a Cauchy sequence, and by the completeness of

, we can find

such that

as

.

Next, we show that

. Using the definition of

–IKC, we obtain the following:

Taking

, we obtain

, which yields

, and

is an attractor of the

–IMS. □

Iterated function systems (IFSs) and Hutchinson–Barnsley operators associated with IKCs provide a framework for generating fractals and self-similar structures. IFSs use simple recursive transformations to generate intricate patterns, which makes them useful in areas such as computer graphics, image compression, and the modeling of natural phenomena. The IKC condition guarantees the existence of unique fractal attractors for these systems, which makes this combination valuable for understanding geometric structures and their applications across scientific and engineering fields.

Example 2. Let

be the usual metric. We define two multi-valued mappings as follows:

and

Then both mappings are multi-valued interpolative Kannan mappings with contraction pairs

and

, respectively. Then the corresponding

–IKC–Hutchinson-like operator is defined by the following:

The attractor of the IKC–Hutchinson-like operator is the set

.

Finally, building on the preceding results, we establish a version of the Collage Theorem for iterated function systems involving interpolative Kannan contractions. Given an

–IFS, we know how to derive its attractor. But what about the reverse process? If we select a compact set of interest, such as a fractal, can we identify an

–IFS whose attractor approximates the chosen set? The answer is yes, as stated in the following theorem:

Theorem 10. Let

be a complete metric space,

a

-

–IFS and

be the corresponding

–IKC transformation with attractor

. If

and

are given such that the following holds:

then

or, equivalently,

Proof. By the triangle inequality, Theorem 7, and inequality (13), we obtain the following:

or equivalently,

□
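For comparison, the classical Collage Theorem for a hyperbolic IFS (see Barnsley [2]) states that $h(L, A) \le h(L, W(L))/(1 - s)$, where $s$ is the contraction factor of $W$. The sketch below checks this classical Banach-contraction bound numerically; it does not implement the interpolative Kannan bound of Theorem 10. The example is hypothetical: the two Cantor maps $x/3$ and $x/3 + 2/3$ on $[0, 1]$ (so $s = 1/3$), a uniform grid as the target set $L$, and $W^{8}(\{0\})$ as a finite approximation of the attractor.

```python
def hausdorff_1d(A, B):
    """Hausdorff distance between finite subsets of the real line."""
    d_AB = max(min(abs(a - b) for b in B) for a in A)
    d_BA = max(min(abs(a - b) for a in A) for b in B)
    return max(d_AB, d_BA)


# Hypothetical Banach IFS on [0, 1]: the two Cantor maps, contraction factor S = 1/3.
MAPS = (lambda x: x / 3, lambda x: x / 3 + 2 / 3)
S = 1 / 3


def W(B):
    """Hutchinson operator W(B) = w_1(B) ∪ w_2(B) on finite sets."""
    return [w(x) for w in MAPS for x in B]


L = [i / 300 for i in range(301)]  # target set: a uniform grid on [0, 1]
eps = hausdorff_1d(L, W(L))        # collage error h(L, W(L))

A_approx = [0.0]
for _ in range(8):                 # W^8({0}) approximates the Cantor attractor
    A_approx = W(A_approx)

print(eps, hausdorff_1d(L, A_approx), eps / (1 - S))
```

Here the collage error is about $1/6$ (the grid point $0.5$ lies in the middle gap of $W(L)$), and the distance from $L$ to the approximate attractor stays below the bound $\epsilon/(1 - s) = 1/4$.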