Structured Doubling Algorithm for a Class of Large-Scale Discrete-Time Algebraic Riccati Equations with High-Ranked Constant Term

Abstract

1. Introduction

2. Structured Doubling Algorithm

2.1. Iteration Scheme

2.2. Convergence

| Algorithm 1. Structured SDA for DAREs. | |
|---|---|
| Input: | , , S, B, , H and tolerances and , and ; |
| Output: | , , , and normalized relative residual ; |
| Preprocess: | Compute , , , , and the economic QR decomposition of . |
| Iteration: | Set , , , , , , , and ; |
| For , do until convergence: | |
| Compute the relative residual as in (11). | |
| If , set , , and ; Exit; | |
| End If | |
| Compute | |
| ; | |
| ; | |
| ; | |
| Obtain in (8) with preprocessed matrices. | |
| , | |
| , | |
| , | |
| Set . | |
| End Do | |
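
The sketch below is not the structured recursion of Algorithm 1. It shows, under stated assumptions, the classical dense SDA recursion that large-scale variants typically reorganize into low-rank or structured form. The sketch assumes the DARE has the form $X = A^\top X (I + GX)^{-1} A + H$ with symmetric positive semidefinite $G$ and $H$. It also assumes that the stabilizing solutions the convergence theory requires exist; this is not checked. The function name `sda_dare`, the default tolerance and the stopping rule (relative change in $H_k$) are illustrative choices, not the paper's.

```python
import numpy as np


def sda_dare(A, G, H, tol=1e-13, max_iter=60):
    """Classical dense SDA sketch for X = A^T X (I + G X)^{-1} A + H.

    Assumptions (not checked): G and H are symmetric positive
    semidefinite and the iteration converges. Returns (X, iterations).
    """
    n = A.shape[0]
    I = np.eye(n)
    # astype(float) returns copies, so the caller's arrays are untouched
    Ak, Gk, Hk = A.astype(float), G.astype(float), H.astype(float)
    for k in range(1, max_iter + 1):
        W = I + Gk @ Hk                   # I + G_k H_k
        WA = np.linalg.solve(W, Ak)       # (I + G_k H_k)^{-1} A_k
        WG = np.linalg.solve(W, Gk)       # (I + G_k H_k)^{-1} G_k
        A_next = Ak @ WA                  # A_{k+1}
        G_next = Gk + Ak @ WG @ Ak.T      # G_{k+1}
        H_next = Hk + Ak.T @ Hk @ WA      # H_{k+1}, which tends to X
        # Re-symmetrize to suppress rounding drift
        G_next = 0.5 * (G_next + G_next.T)
        H_next = 0.5 * (H_next + H_next.T)
        scale = max(np.linalg.norm(H_next), np.finfo(float).tiny)
        change = np.linalg.norm(H_next - Hk) / scale
        Ak, Gk, Hk = A_next, G_next, H_next
        if change < tol:
            return Hk, k
    return Hk, max_iter
```

For a quick check, the scalar instance $a = g = h = 1$ reduces the equation to $x^2 - x - 1 = 0$. Its positive root is the golden ratio, so `sda_dare(np.array([[1.0]]), np.array([[1.0]]), np.array([[1.0]]))` should return approximately 1.618. Linear solves are used instead of explicit inverses of $I + G_k H_k$, which is the usual choice for both cost and accuracy.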

3. Computational Issues

3.1. Residual and Stop Criterion
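
Algorithm 1 stops once a relative residual, defined in (11), falls below a tolerance. The sketch below is a hedged illustration of such a stop criterion. It evaluates one common normalized residual for a DARE assumed to have the form $X = A^\top X (I + GX)^{-1} A + H$, using Frobenius norms. The exact normalization in (11) may differ, and the function name `normalized_dare_residual` is hypothetical.

```python
import numpy as np


def normalized_dare_residual(A, G, H, X):
    """Normalized relative residual of X for X = A^T X (I + G X)^{-1} A + H.

    Assumed (hypothetical) definition, not necessarily equation (11):
        ||X - T - H||_F / (||X||_F + ||T||_F + ||H||_F),
    where T = A^T X (I + G X)^{-1} A.
    """
    n = A.shape[0]
    # Form (I + G X)^{-1} A with a linear solve, not an explicit inverse
    T = A.T @ X @ np.linalg.solve(np.eye(n) + G @ X, A)
    num = np.linalg.norm(X - T - H)
    den = np.linalg.norm(X) + np.linalg.norm(T) + np.linalg.norm(H)
    return num / den
```

Normalizing by the sizes of the individual terms keeps the measure between 0 and 1 and makes it insensitive to the overall scaling of the problem. An iteration would then stop once this value drops below the chosen tolerance.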

3.2. Complexity Analysis
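
The preprocessing step of Algorithm 1 includes an economic (thin) QR decomposition. The sketch below assumes, hypothetically, that the factorized matrix is a tall $n \times m$ factor $F$ with $m$ much smaller than $n$. It uses NumPy's `numpy.linalg.qr` with `mode="reduced"`. The point for the complexity analysis is storage: $Q$ is kept as $n \times m$ rather than $n \times n$, and the cost of the factorization grows only linearly in $n$ for fixed $m$. The sizes below are illustrative, not taken from the experiments.

```python
import numpy as np


def economic_qr(F):
    """Thin QR of a tall factor F (n x m, n >= m): F = Q R.

    Q is n x m with orthonormal columns and R is m x m upper triangular,
    so storage is n*m + m*m numbers instead of n*n for a full Q.
    """
    Q, R = np.linalg.qr(F, mode="reduced")
    return Q, R


# Usage with a hypothetical 5000 x 20 factor
rng = np.random.default_rng(0)
F = rng.standard_normal((5000, 20))
Q, R = economic_qr(F)
print(Q.shape, R.shape)              # (5000, 20) (20, 20)
print(Q.size + R.size, 5000 * 5000)  # 100400 25000000
```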

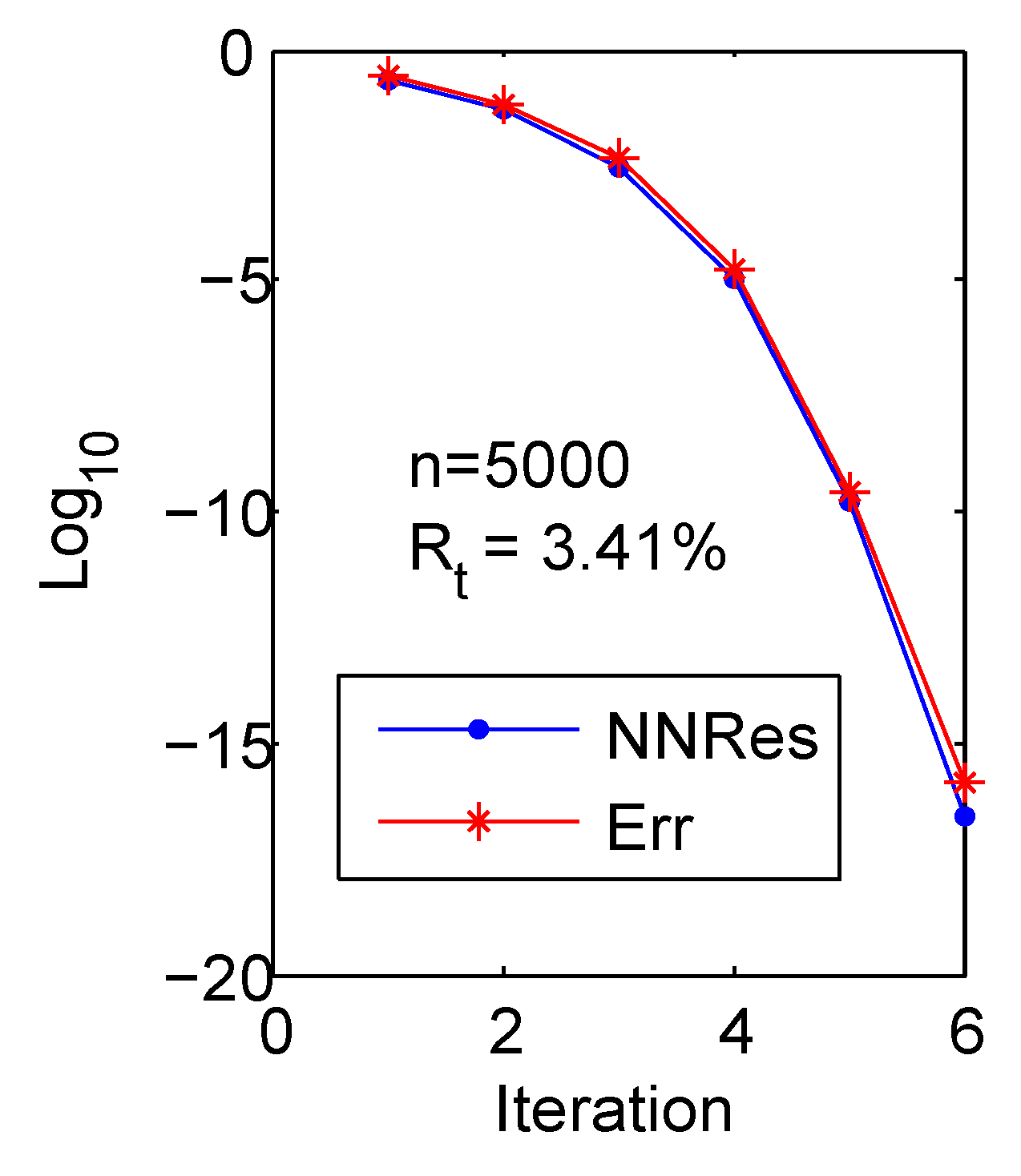

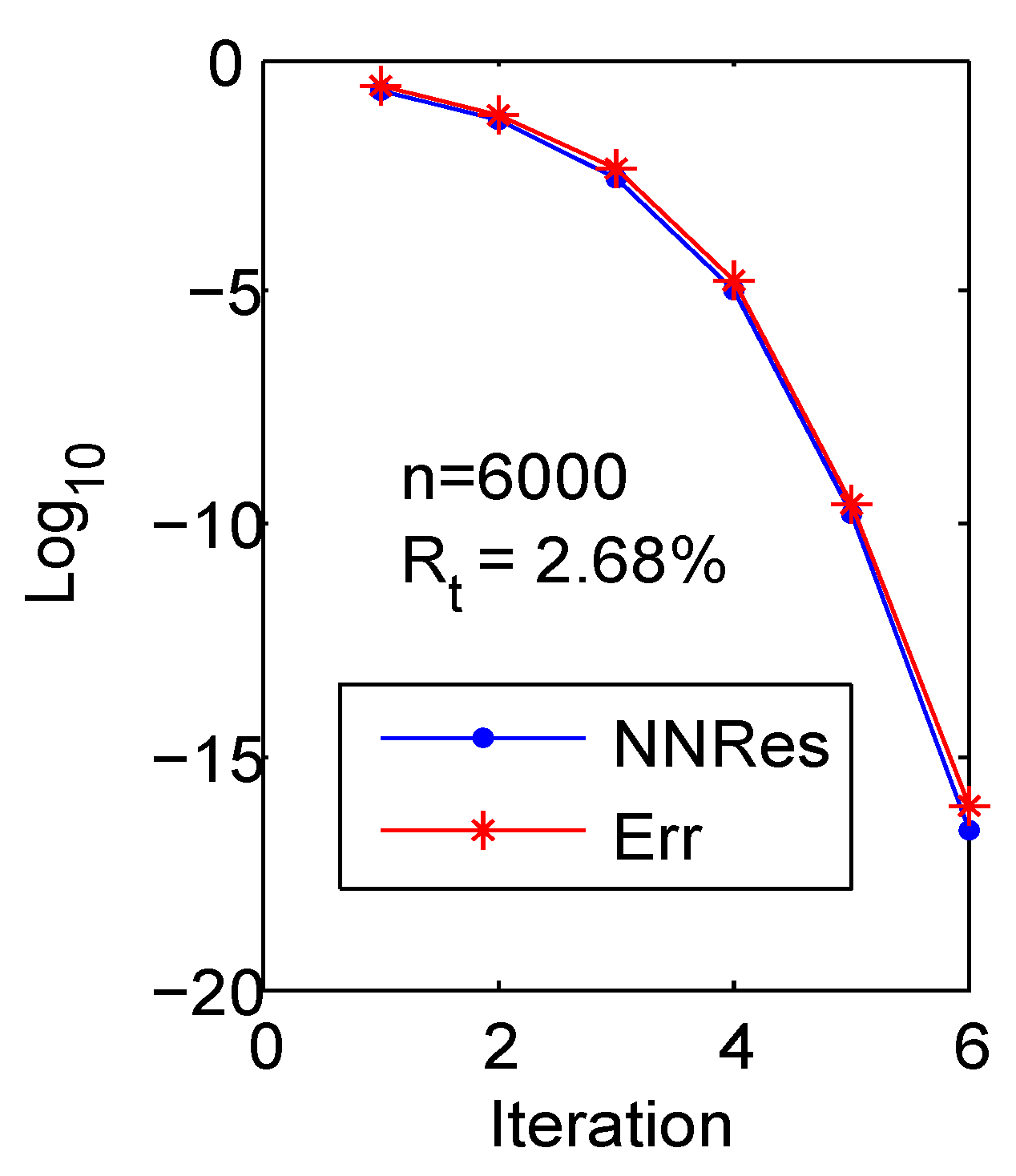

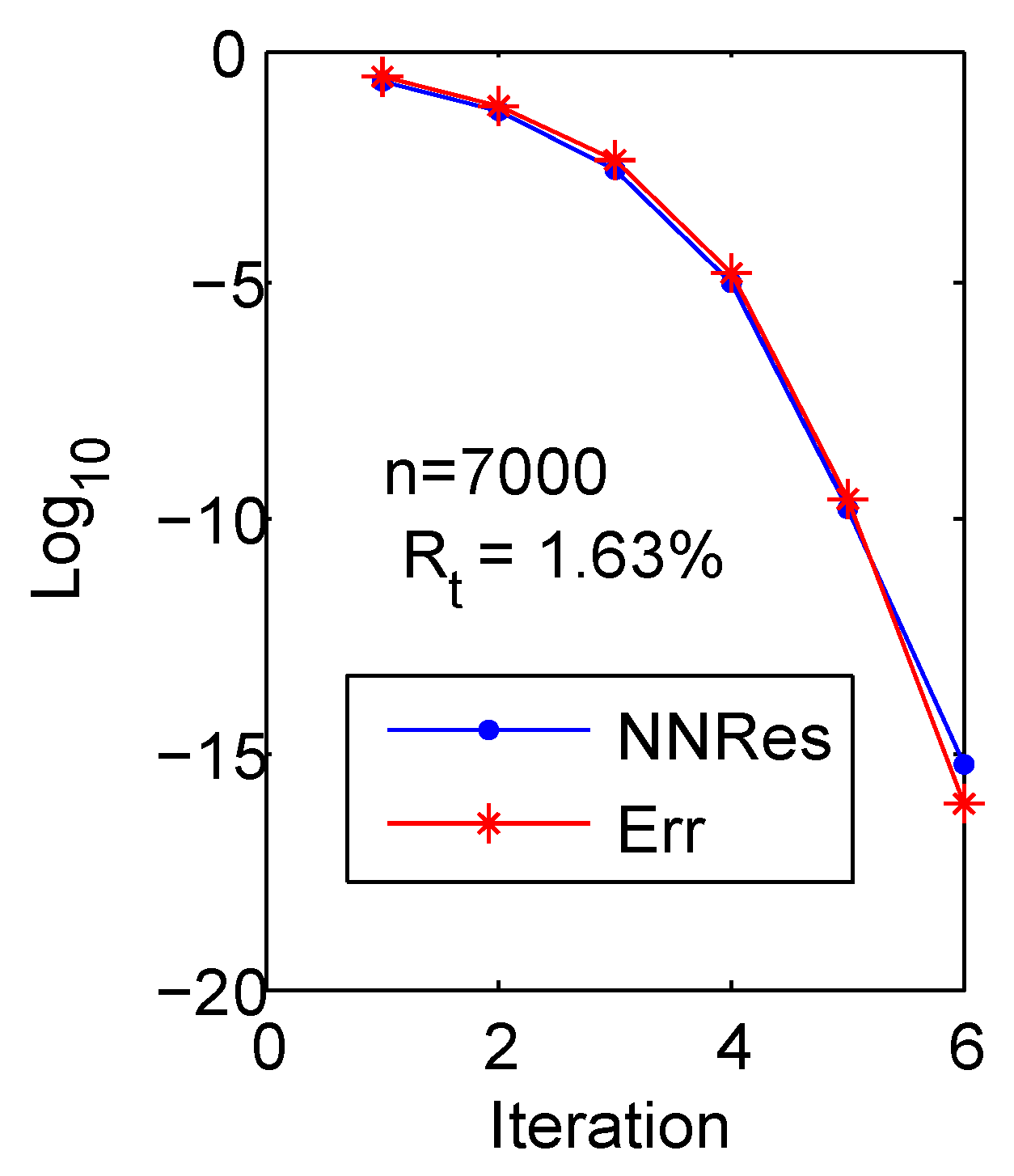

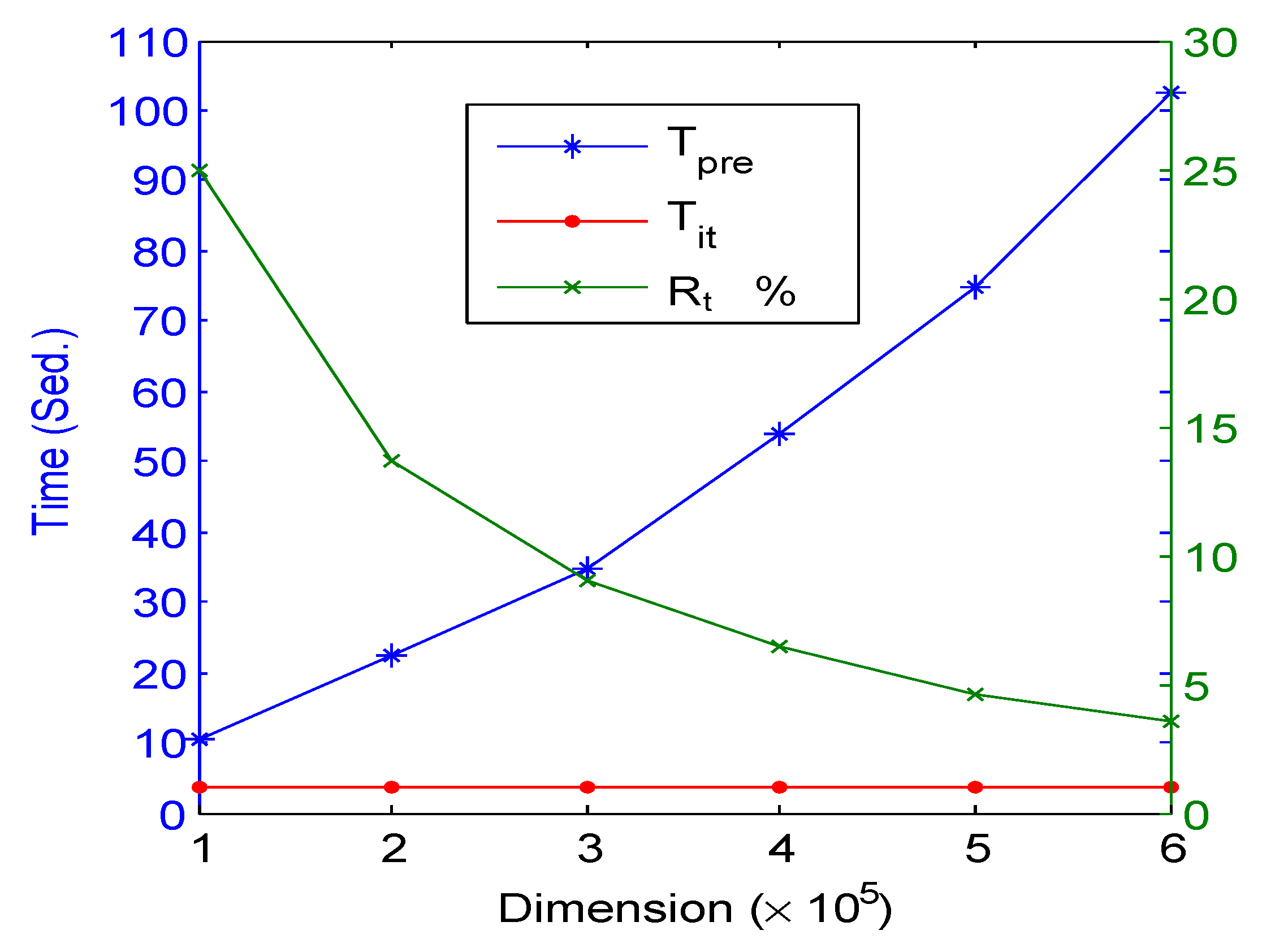

4. Numerical Experiments

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Items | Flops | Memory |
|---|---|---|
| , | | |
| , , | | |
| , | | |
| Total | | |

| Items | Flops | Memory |
|---|---|---|
| , | | |
| , , | | |
| — | | |
| Total | | |

| n | 1000 | 3000 | 5000 |
|---|---|---|---|

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yu, B.; Jiang, C.; Dong, N. Structured Doubling Algorithm for a Class of Large-Scale Discrete-Time Algebraic Riccati Equations with High-Ranked Constant Term. Fractal Fract. 2023, 7, 193. https://doi.org/10.3390/fractalfract7020193
