A Fractional-Order Fidelity-Based Total Generalized Variation Model for Image Deblurring

Abstract

1. Introduction

2. Proposed Model

2.1. Review of TGV

2.2. The Proposed Model

2.3. Discrete Implementations of Gradient and Divergence

3. Algorithms

3.1. Augmented Lagrangian Algorithm

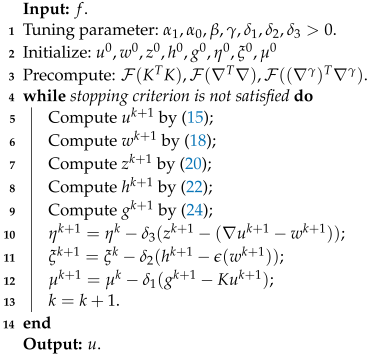

Algorithm 1: FTGV-ADMM algorithm to solve the proposed model.

3.2. Primal-Dual Algorithm

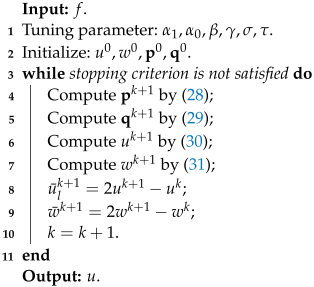

Algorithm 2: FTGV-PD algorithm to solve the proposed model.

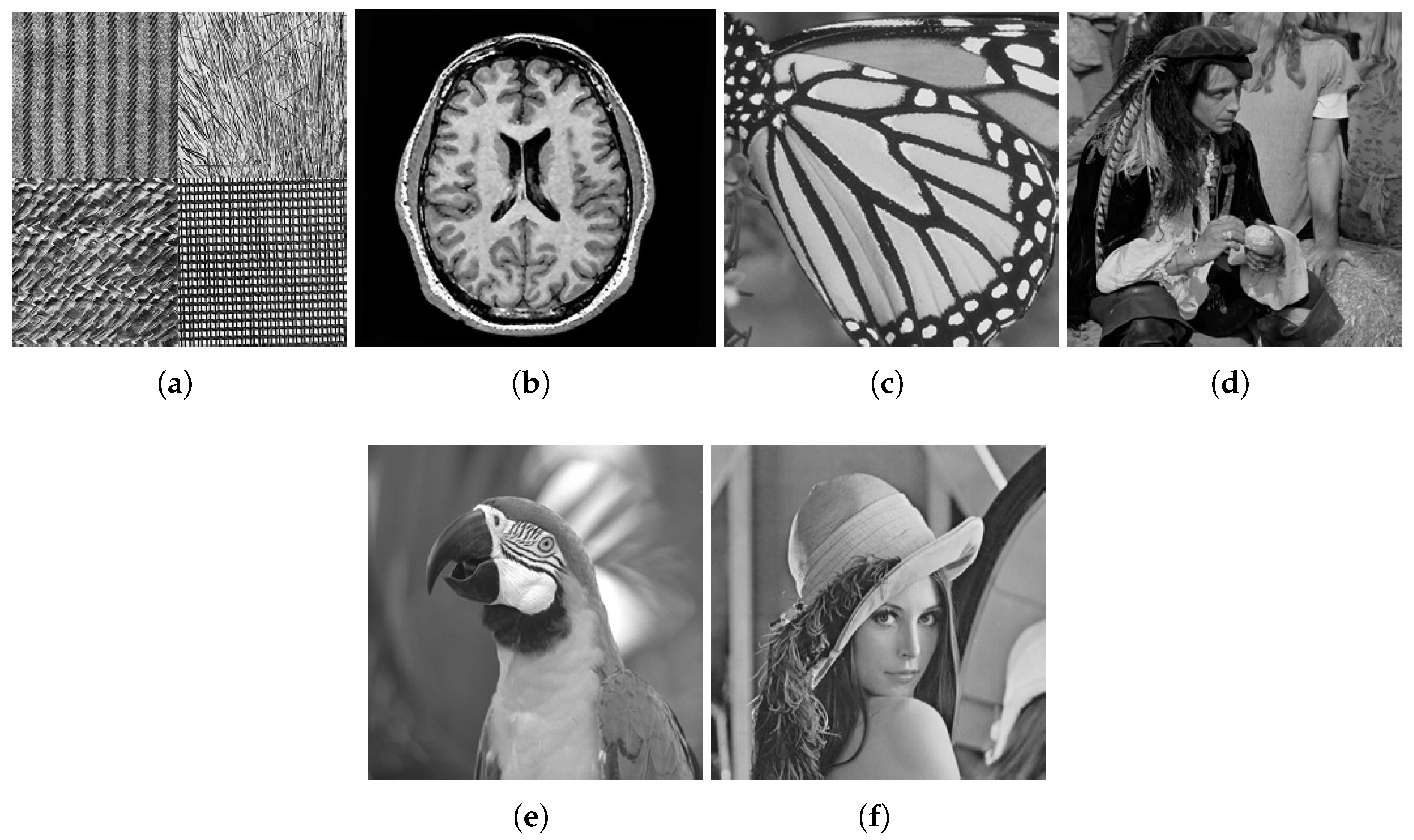

4. Numerical Experiments

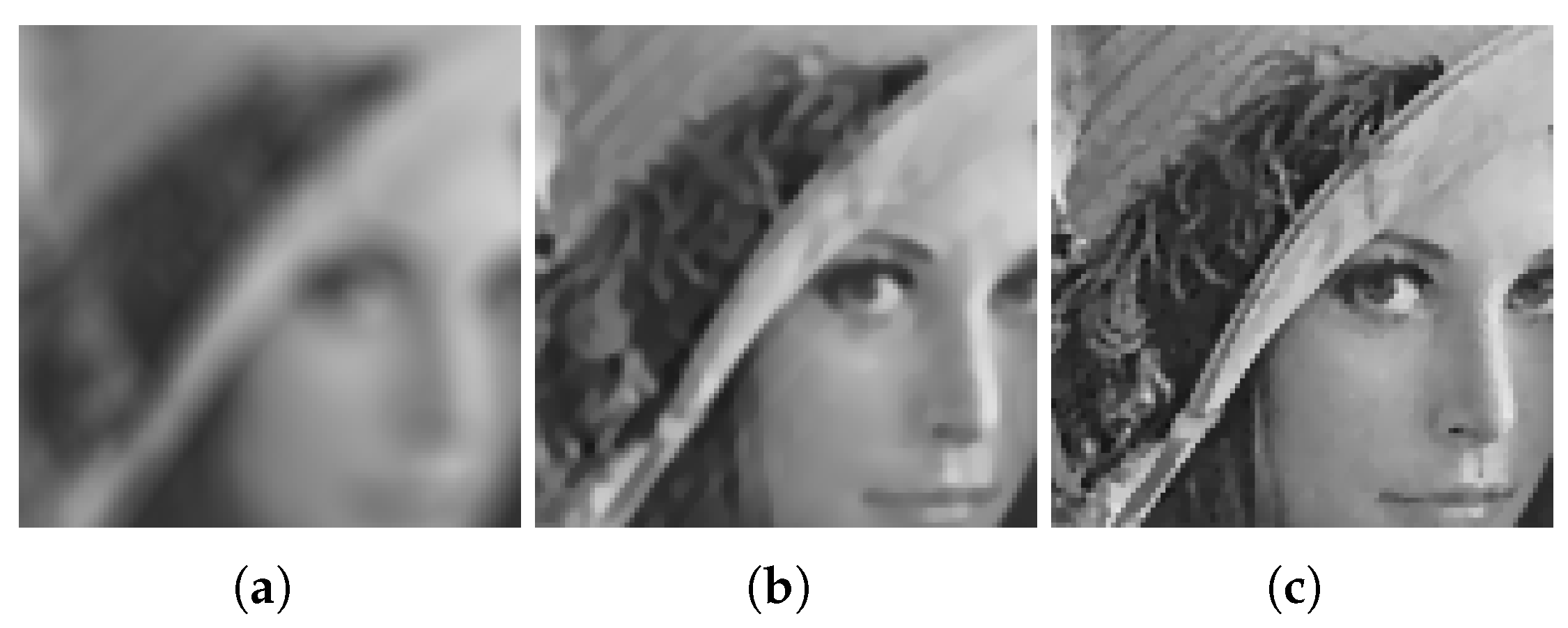

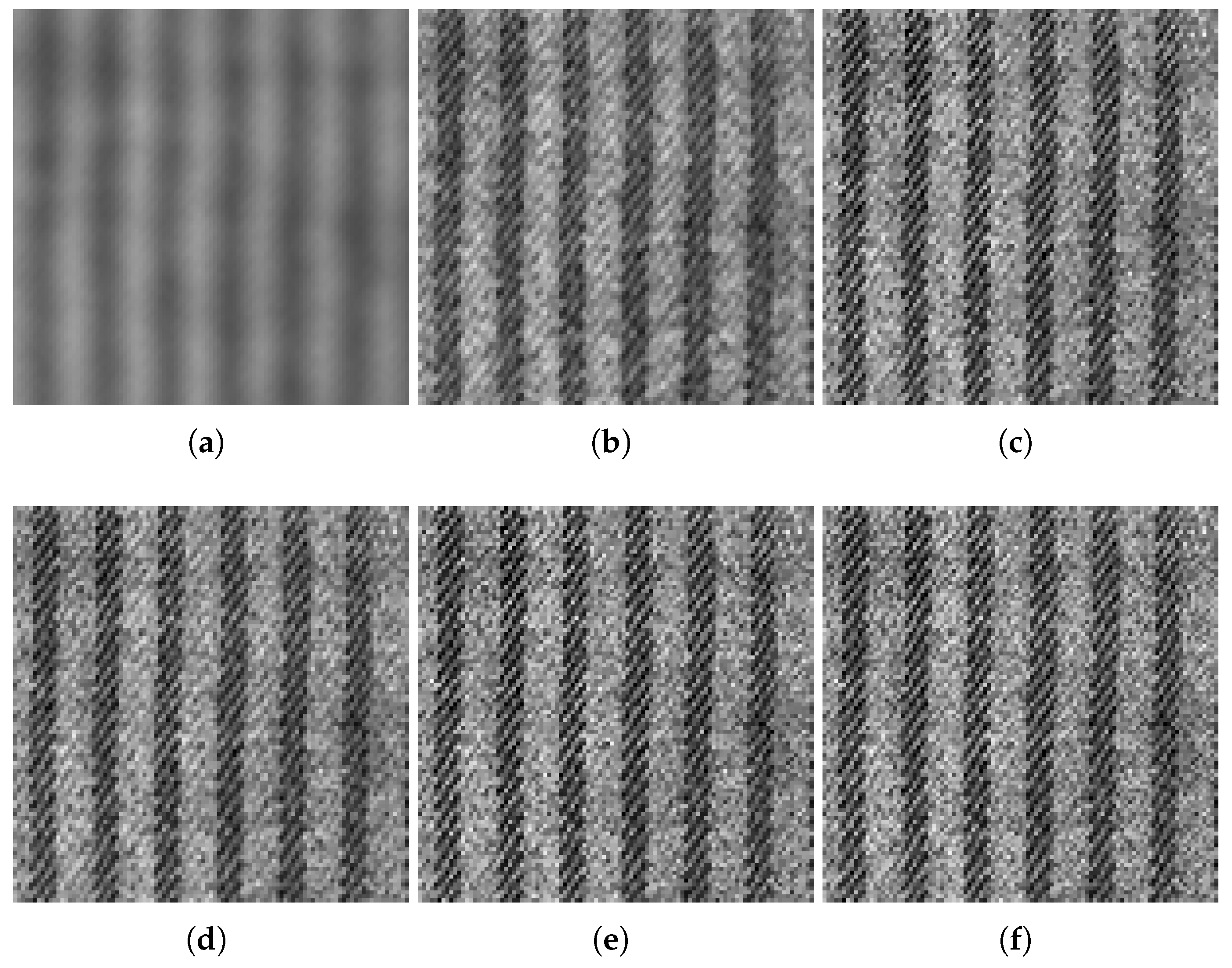

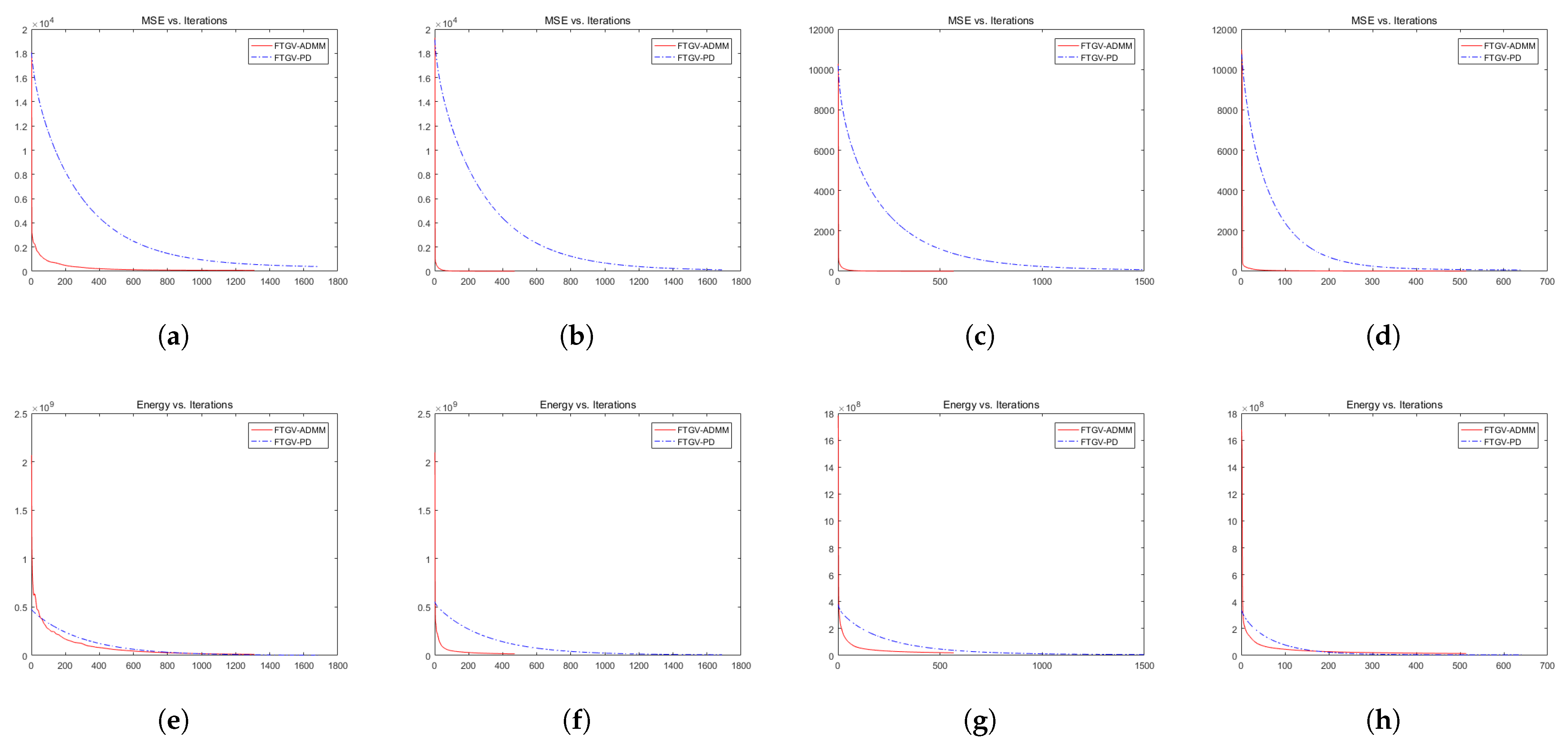

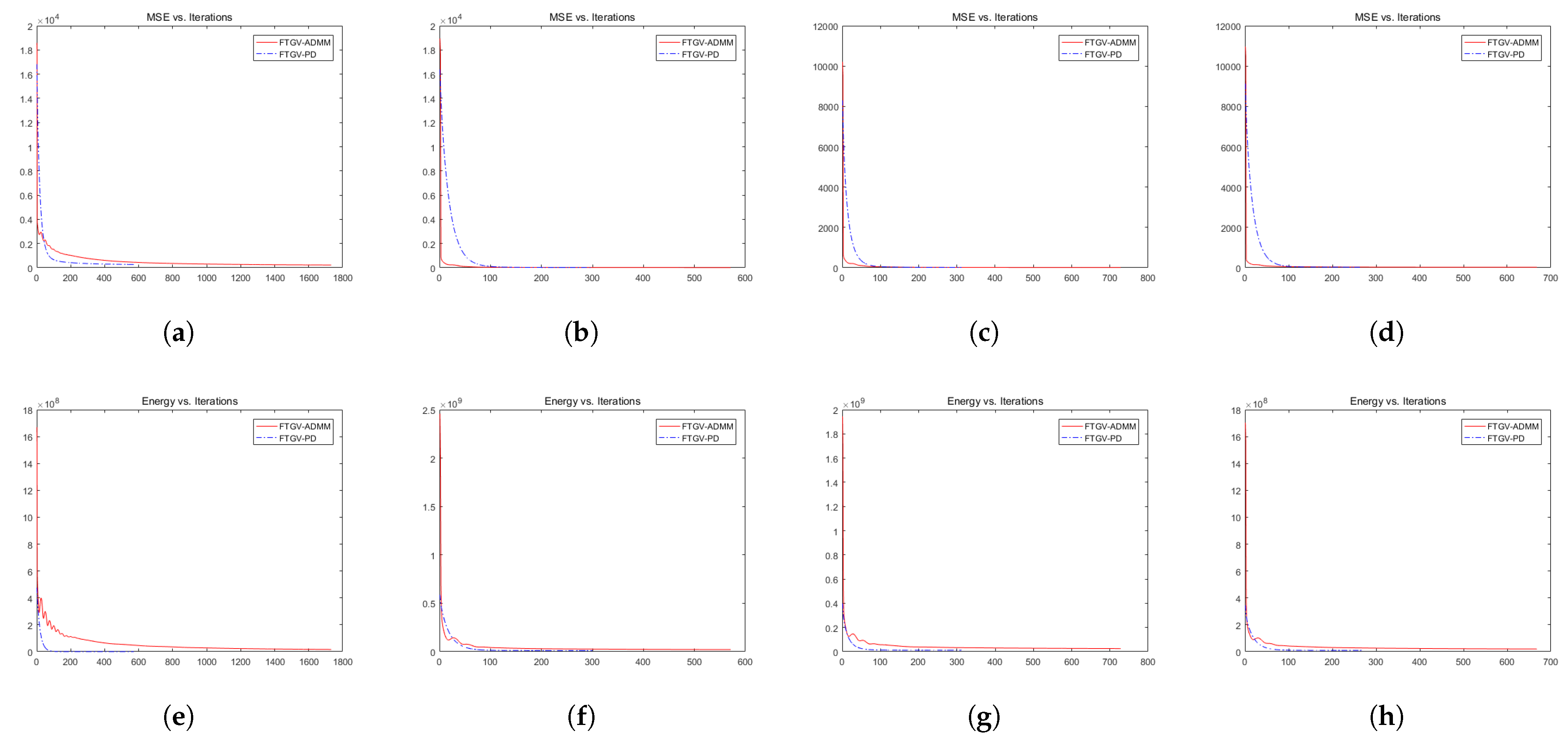

4.1. Comparison of Proposed Algorithms

4.2. Comparison of Other TGV-Based Methods

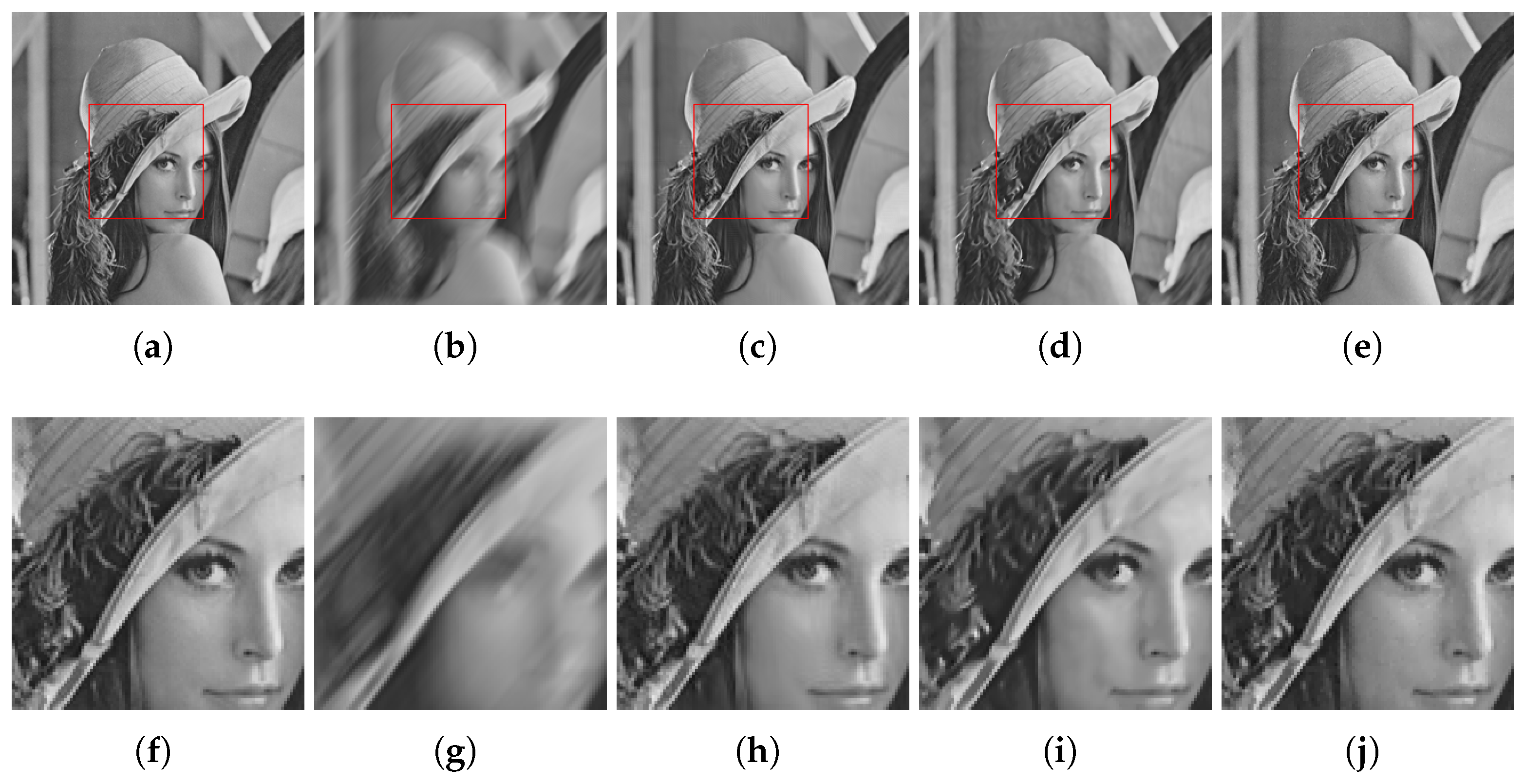

4.3. Comparison with BM3D and NLTV

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Model | Image Type | I | II | III |
|---|---|---|---|---|
| TGV | All images | (1,2,35) | (1,2,35) | (1,2,35) |
| APE-TGV | All images | (1,3,0.3) | (1,3,0.3) | (1,3,0.3) |
| D-TGV | Texture image | (1,2,2000) | (1,2,2000) | (1,2,2000) |
| | Other images | (5,10,2000) | (5,10,2000) | (5,10,2000) |
| FTGV-ADMM | All images | (0.1,0.2,0.7,5000) | (0.1,0.2,0.7,5000) | (0.1,0.2,0.7,5000) |
| FTGV-PD | Texture image | (0.1,0.2,0.8,150) | (0.1,0.2,0.8,2500) | (0.1,0.2,0.8,2500) |
| | Other images | (5,10,0.8,150) | (10,20,0.8,2500) | (10,20,0.8,2500) |

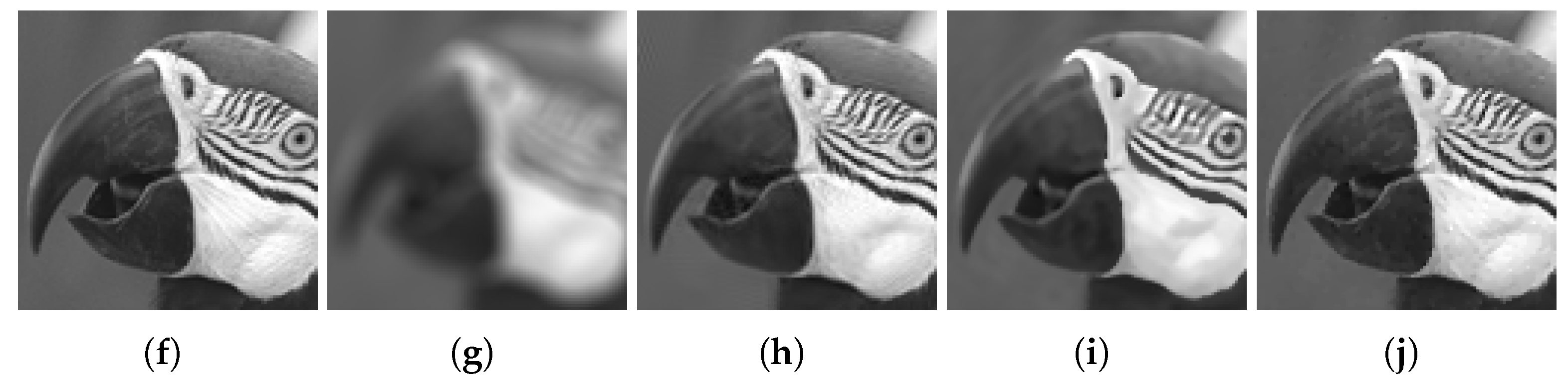

| Figure | Methods | I (PSNR/SSIM) | II (PSNR/SSIM) | III (PSNR/SSIM) |
|---|---|---|---|---|
| Texture | TGV | 22.59/0.8937 | 18.91/0.7436 | 19.82/0.6964 |
| | APE-TGV | 28.34/0.9729 | 25.83/0.9335 | 24.01/0.8763 |
| | D-TGV | 29.03/0.9776 | 24.17/0.9162 | 22.77/0.8588 |
| | FTGV-ADMM | /0.9080 | | |
| | FTGV-PD | 24.75/0.9442 | 26.70/0.9502 | 24.75/ |
| Butterfly | TGV | 26.74/0.8826 | 27.30/0.9069 | 25.93/0.8759 |
| | APE-TGV | 37.01/0.9814 | 34.29/0.9747 | 33.12/0.9668 |
| | D-TGV | 35.72/0.9665 | 34.20/0.9627 | 31.70/0.9477 |
| | FTGV-ADMM | /0.9812 | /0.9741 | |
| | FTGV-PD | 26.38/0.9285 | 34.67/0.9681 | 32.58/0.9571 |

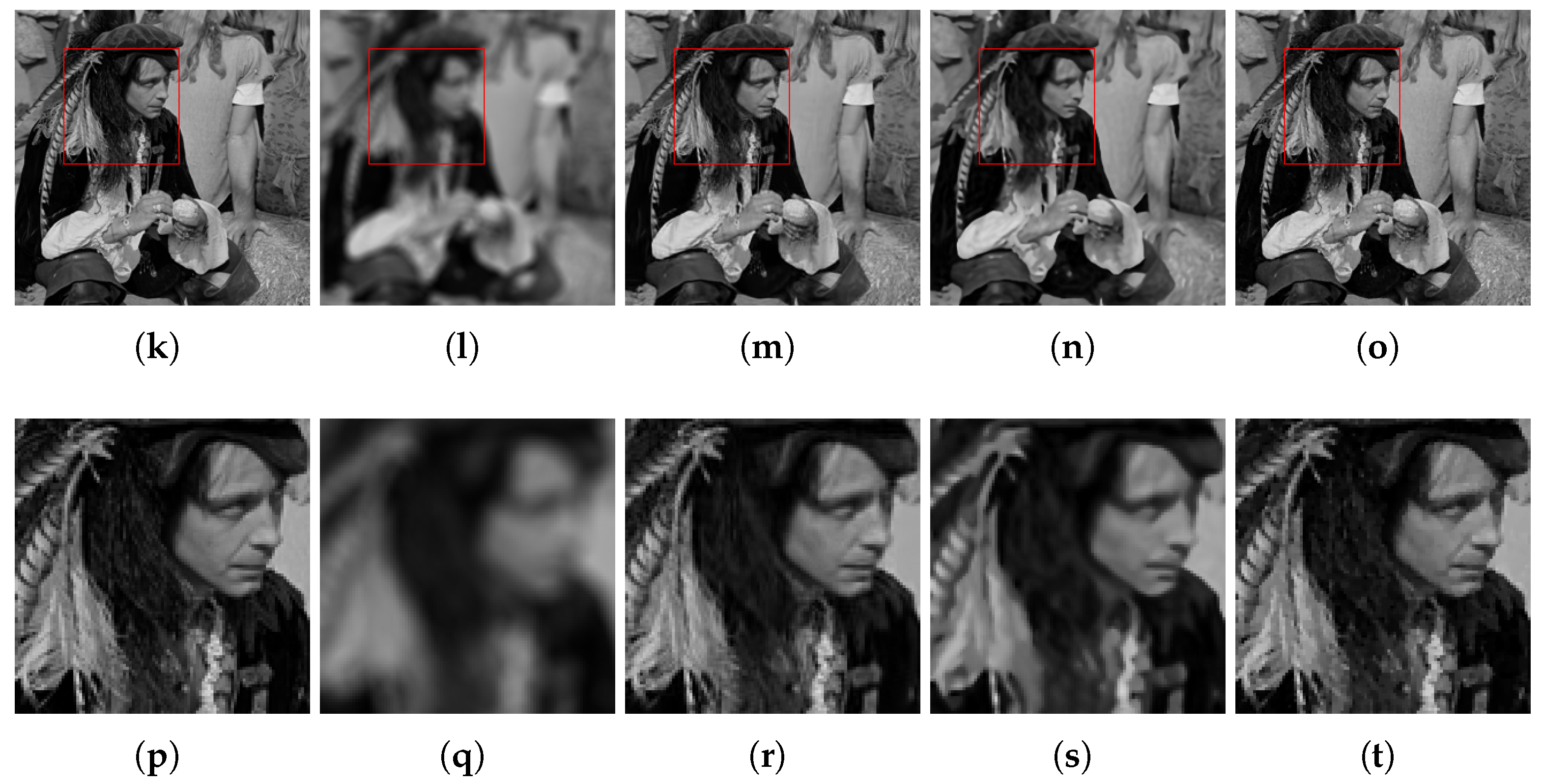

| Figure | Methods | I (PSNR/SSIM) | II (PSNR/SSIM) | III (PSNR/SSIM) |
|---|---|---|---|---|
| Brain | TGV | 30.45/0.8173 | 28.96/0.9219 | 28.26/0.8959 |
| | APE-TGV | 39.90/0.9899 | 37.07/ | 34.53/ |
| | D-TGV | 38.72/0.9097 | 36.67/0.9583 | 33.76/0.9512 |
| | FTGV-ADMM | /0.9747 | /0.9721 | /0.9729 |
| | FTGV-PD | 29.67/0.7967 | 37.11/0.9753 | 34.26/0.9656 |
| Man | TGV | 28.57/0.8566 | 27.24/0.8229 | 26.54/0.8000 |
| | APE-TGV | 35.71/0.9641 | 33.26/0.9409 | 31.42/0.9225 |
| | D-TGV | 36.50/0.9643 | 33.78/0.9444 | 31.98/0.9228 |
| | FTGV-ADMM | | | |
| | FTGV-PD | 29.28/0.8657 | 33.35/0.9395 | 31.66/0.9169 |

| Figure | Methods | PSNR | SSIM |
|---|---|---|---|
| Figure 9 | BM3D | 36.67 | 0.9563 |
| | NLTV | 33.76 | 0.9392 |
| | FTGV-ADMM | 38.66 | 0.9761 |
| Figure 10 | BM3D | 37.68 | 0.9575 |
| | NLTV | 35.31 | 0.9467 |
| | FTGV-ADMM | 38.65 | 0.9717 |
| Figure 11 | BM3D | 32.21 | 0.9183 |
| | NLTV | 29.12 | 0.8251 |
| | FTGV-ADMM | 34.28 | 0.9492 |
| Figure 12 | BM3D | 33.65 | 0.9580 |
| | NLTV | 30.47 | 0.9219 |
| | FTGV-ADMM | 38.48 | 0.9812 |
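The PSNR values reported in the tables above follow the standard peak signal-to-noise ratio, PSNR = 10 log10(peak² / MSE). The sketch below is a minimal illustration of that formula, not the authors' evaluation code. The function name `psnr`, the NumPy dependency, and the default peak value of 255 (8-bit intensity range) are assumptions; the scaling convention actually used in the experiments is not stated here.

```python
import numpy as np


def psnr(reference, restored, peak=255.0):
    """Peak signal-to-noise ratio in dB between two images of equal shape.

    `peak` is the maximum possible intensity (assumed 255 for 8-bit images;
    use 1.0 for images scaled to [0, 1]).
    """
    reference = np.asarray(reference, dtype=np.float64)
    restored = np.asarray(restored, dtype=np.float64)
    if reference.shape != restored.shape:
        raise ValueError("images must have the same shape")
    # Mean squared error over all pixels.
    mse = np.mean((reference - restored) ** 2)
    if mse == 0.0:
        # Identical images: PSNR is unbounded.
        return float("inf")
    return 10.0 * np.log10(peak ** 2 / mse)


# Hypothetical example: every pixel is off by one gray level, so MSE = 1
# and PSNR = 20 * log10(255), roughly 48.13 dB.
clean = np.zeros((4, 4))
noisy = np.ones((4, 4))
example_value = psnr(clean, noisy)
```

A higher PSNR means a smaller mean squared error relative to the intensity range, which is why the tables treat larger values as better. SSIM is a separate structural measure and is not reproduced in this sketch.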

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Gao, J.; Sun, J.; Guo, Z.; Yao, W. A Fractional-Order Fidelity-Based Total Generalized Variation Model for Image Deblurring. Fractal Fract. 2023, 7, 756. https://doi.org/10.3390/fractalfract7100756
