Abstract

In this paper, we focus on the numerical solution of Caputo-type fractional differential equations. A high-order predictor–corrector method is derived by applying the quadratic interpolation polynomial approximation for the integral function. In order to deal with the weak singularity of the solution near the initial time of the fractional differential equations caused by the fractional derivative, graded meshes are used for time discretization. The error analysis of the predictor–corrector method is carefully investigated under suitable conditions on the data. Moreover, an efficient sum-of-exponentials (SOE) approximation to the kernel function is designed to reduce the computational cost. Lastly, several numerical examples are presented to support our theoretical analysis.

Keywords: high-order predictor–corrector method; graded meshes; fractional differential equations; sum-of-exponentials approximation; error analysis

MSC: 65L05; 65L12; 65L70

1. Introduction

There has been growing interest in the study of fractional differential equations (FDEs) over the last few decades; see [1,2] and the references therein. Obtaining exact solutions of FDEs can be very challenging, especially for general right-hand-side functions. Thus, there is a need to develop numerical methods for FDEs, for which extensive work has been conducted. One idea is to directly approximate the fractional derivative operators, e.g., [3,4,5]. Another idea is to first transform the FDEs into integral form and then use numerical schemes to solve the resulting integral equation; see, e.g., [6,7,8,9,10,11,12,13,14,15]. There are also some other numerical methods for FDEs, such as the variational iteration [16], Adomian decomposition [17], finite-element [18], and spectral [19] methods.

Adams methods are among the most studied families of implicit–explicit linear multistep methods. They play a major role in the numerical treatment of various differential equations. Therefore, great interest has been devoted to generalizing Adams methods to FDEs, especially the Adams-type predictor–corrector method. For example, Diethelm et al. [7,8,9,10] suggested the numerical approximation of FDEs using the Adams-type predictor–corrector method on uniform meshes. Deng [20] exploited the short memory principle of fractional calculus and further applied the Adams-type predictor–corrector method to the numerical solution of FDEs on uniform meshes. Nguyen and Jang [21] studied a new Adams-type predictor–corrector method on uniform meshes for solving FDEs by introducing a new prediction stage that has the same order of accuracy as the correction stage. Zhou et al. [22] considered a fast second-order Adams-type predictor–corrector method on graded meshes to solve a nonlinear time-fractional Benjamin–Bona–Mahony–Burgers equation.
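For orientation, the classical Adams-type predictor–corrector scheme on a uniform mesh, in the form usually associated with Diethelm, Ford and Freed [9], can be sketched as follows. This is only an illustration under the assumptions of a scalar problem with order 0 < alpha < 1 and initial value y0; it is not the high-order method developed in this paper, and the function name `fractional_adams_pece` and its parameters are hypothetical.

```python
import math
import numpy as np

def fractional_adams_pece(f, alpha, y0, T, N):
    """Classical fractional Adams PECE sketch on a uniform mesh (0 < alpha < 1).

    Approximates y(t) = y0 + 1/Gamma(alpha) * int_0^t (t-s)^(alpha-1) f(s, y(s)) ds.
    """
    h = T / N
    t = np.linspace(0.0, T, N + 1)
    y = np.empty(N + 1)
    fv = np.empty(N + 1)          # stored right-hand-side values f(t_j, y_j)
    y[0] = y0
    fv[0] = f(t[0], y0)
    c_pred = h**alpha / math.gamma(alpha + 1)
    c_corr = h**alpha / math.gamma(alpha + 2)
    for n in range(N):
        j = np.arange(n + 1)
        # Predictor weights: product rectangle rule.
        b = (n + 1 - j)**alpha - (n - j)**alpha
        # Corrector weights: product trapezoidal rule.
        a = ((n - j + 2)**(alpha + 1) + (n - j)**(alpha + 1)
             - 2.0 * (n - j + 1)**(alpha + 1))
        a[0] = n**(alpha + 1) - (n - alpha) * (n + 1)**alpha
        y_pred = y0 + c_pred * np.dot(b, fv[:n + 1])
        y[n + 1] = y0 + c_corr * (f(t[n + 1], y_pred) + np.dot(a, fv[:n + 1]))
        fv[n + 1] = f(t[n + 1], y[n + 1])
    return t, y
```

Each step sums over the whole history, so the cost grows quadratically with the number of steps; this is the burden that fast algorithms aim to reduce.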

Solutions to FDEs typically exhibit a weak singularity at the initial time. Several techniques have been developed to handle this, such as using nonuniform grids to keep errors small near the singularity [5,12,13,23,24,25], employing correction terms to recover the theoretical accuracy [6,15,26,27], or applying a simple change of variable to derive a new, equivalent time-rescaled FDE [28,29].
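To illustrate the nonuniform-grid idea, the sketch below builds a graded mesh of the commonly used form t_j = T (j/N)^r, which clusters points near the initial time when r > 1 and reduces to a uniform mesh when r = 1. The formula is the standard choice in the literature and is stated here as an assumption; the function name `graded_mesh` is hypothetical.

```python
import numpy as np

def graded_mesh(T, N, r):
    """Return the N+1 mesh points t_j = T * (j/N)**r, j = 0, ..., N."""
    j = np.arange(N + 1)
    return T * (j / N)**r

# Example: with r = 2 the first step is much smaller than the last one.
t = graded_mesh(1.0, 10, 2.0)
steps = np.diff(t)
print(steps[0], steps[-1])
```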

In this paper, our goals are to construct high-order numerical methods and deal with the singularity of the solution of FDEs. Motivated by the above research, we follow the predictor–corrector method proposed in [21] and apply graded meshes to solve the following FDEs

where is a real number; denotes the fractional derivative in the Caputo sense, which is defined for all functions w that are absolutely continuous on by (e.g., [1])

To ensure the existence and uniqueness of the solution of Problem (1) (e.g., [8], Theorems 2.1, 2.2), we assume that the continuous function f fulfills the Lipschitz condition with respect to its second argument on a suitable set G, i.e., for any ,

where is the Lipschitz constant independent of and . Equation (1) can be rewritten as the following Volterra integral equation (e.g., [8])

The following regularity assumptions on the solution are also used for our proposed method:

Moreover, it is known from ([30], Section 2) or ([10], Theorem 2.1) that the analytical solution of (1) can be written as the sum of a singular part and a regular part; see the following lemma where for each , .

Lemma 1 ([10], Theorem 2.1).

- (a) Suppose that . Then, there exist some constants and a function such that

- (b) Suppose that . Then, there exist some constants , and a function , such that

From the above lemma, when , there are some constants , such that

Then

where are some constants. Therefore, assumptions (5) are reasonable, and we can also obtain for that

The computational work and storage of the predictor–corrector method remain very high due to the nonlocality of the fractional derivatives. Therefore, fast methods that reduce the computational cost and storage have also been investigated. For example, on the basis of an efficient sum-of-exponentials (SOE) approximation for the kernel function , Jiang et al. [31] introduced a fast evaluation of the Caputo fractional derivative on the interval with a uniform absolute error , where and is the time step size. One can also refer to [32,33,34,35]. In the present paper, we also use this SOE technique to construct the corresponding fast predictor–corrector method for (1).
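The idea behind an SOE approximation can be illustrated with a simple construction. For a power kernel t^(-beta) with 0 < beta < 1 (an assumed form chosen for illustration), the identity t^(-beta) = (1/Gamma(beta)) * int_0^inf exp(-t s) s^(beta-1) ds, combined with the substitution s = exp(x) and the trapezoidal rule, gives a sum of exponentials. This is a textbook-style sketch and not the optimized quadrature of [31], which uses far fewer terms; the function names, step size and truncation limits are hypothetical choices.

```python
import math
import numpy as np

def soe_power_kernel(beta, h=0.25, x_min=-60.0, x_max=15.0):
    """Nodes s_l and weights w_l with t**(-beta) ~ sum_l w_l * exp(-s_l * t).

    Trapezoidal rule in x for (1/Gamma(beta)) * int exp(beta*x - t*exp(x)) dx.
    The truncation limits are chosen for t roughly in [1e-3, 1].
    """
    x = np.arange(x_min, x_max + h, h)
    nodes = np.exp(x)
    weights = h * np.exp(beta * x) / math.gamma(beta)
    return nodes, weights

def soe_eval(t, nodes, weights):
    """Evaluate the sum of exponentials at the time points t."""
    t = np.atleast_1d(np.asarray(t, dtype=float))
    return np.exp(-np.outer(t, nodes)) @ weights

nodes, weights = soe_power_kernel(0.5)
t = np.linspace(1e-3, 1.0, 200)
max_err = np.max(np.abs(soe_eval(t, nodes, weights) - t**(-0.5)))
print(len(nodes), max_err)
```

The approximation is only accurate away from t = 0, which is why a cut-off time appears in statements of SOE error bounds.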

The rest of this paper is organized as follows. In Section 2, we formulate the high-order predictor–corrector method for (1). In Section 3, we discuss the error estimates of the predictor–corrector method. In Section 4, we propose a fast algorithm for the presented predictor–corrector method. Several numerical examples are given in Section 5 to illustrate the computational flexibility and verify the error estimates of the proposed methods. A brief conclusion is given in Section 6.

Notation: In this paper, notation C is used to denote a generic positive constant that is always independent of mesh size, but may take different values at different occurrences.

2. High-Order Predictor–Corrector Method

In order to handle the weak singularity of the solution of (1), we consider the graded meshes

where constant mesh grading is chosen by the user. One can obtain that

The discretized version of (4) at is given as

To construct the high-order predictor–corrector method for (1), on each small interval , we denote the linear interpolation polynomial and quadratic interpolation polynomial of a function as and , respectively, i.e.,

and

Set

For the calculation of the predictor formula of (8), we do not use the unknown value when computing . We distinguish three cases for n:

- When , we use to approximate on interval .

- When , we use to approximate on intervals and .

- When , we use to approximate on the first small interval , to approximate on each interval and to approximate on the last small interval .
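The linear and quadratic interpolation polynomials used in the cases above are ordinary Lagrange interpolants on nonuniform nodes. A minimal sketch, assuming two nodes for the linear case and three nodes for the quadratic case, is given below; the helper name `lagrange_interp` is hypothetical and the code does not reproduce the paper's quadrature weights.

```python
def lagrange_interp(nodes, values, s):
    """Evaluate at s the Lagrange polynomial through (nodes[i], values[i]).

    Two nodes give the linear interpolant, three nodes the quadratic one.
    """
    total = 0.0
    for i, (ti, vi) in enumerate(zip(nodes, values)):
        basis = 1.0
        for k, tk in enumerate(nodes):
            if k != i:
                basis *= (s - tk) / (ti - tk)
        total += vi * basis
    return total

# Quadratic interpolation on nonuniform (graded-like) nodes.
nodes = [0.0, 0.1, 0.4]
values = [g**2 for g in nodes]
print(lagrange_interp(nodes, values, 0.25))
```

Because the nodes are not equally spaced on a graded mesh, the basis polynomials must be built from the actual node positions rather than from a fixed step size.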

Then, it follows from (8) that

where , for a function , and

and, for ,

For the corrector formula of (8), we use to approximate on the first small interval , and to approximate on intervals . Hence, we can obtain from (8) that

where

We denote the preliminary approximation of from (12) as (used in (15)) and the final approximation of from (15) as . Then, with (12) and (15), our predictor–corrector method for Problem (1) can be derived as follows:

where and .

Remark 1.

We use the same approximation of the integral ds, which carries the greatest computational burden, in both the predictor Formula (12) and the corrector Formula (15). This reduces the overall cost of the predictor–corrector method. In addition, even though our predictor–corrector method (17) can be viewed as a generalization of the predictor–corrector method presented in [21], unlike that method, ours does not need the values of and to start up the scheme.

3. Error Estimates of the Predictor–Corrector Method

In this section, we study the error analysis of the predictor–corrector method (17). For this, we first introduce some lemmas that are used in the analysis.

Lemma 2 ([11], Lemma 3.3). Assume that with . Let . Assume also that the sequence satisfies

Then, one has

Proof.

A simple deduction from the expression of and in (9) and (10) gives

Then, from (11), we have for and that

Again, one can obtain that

Moreover,

Hence, for , one has from (14) that

Similar to the above inequalities, we can obtain other cases of the bound of ; that is,

In addition, by using (18)–(20), we obtain

Therefore, the proof is now completed. □

For and , define

Lemma 4.

Let . Suppose that for . For , we define

and

Then, we have

The proof of the above lemma is somewhat lengthy and is given in Appendix A. Set

On the basis of the above preliminaries, a convergence criterion of the predictor–corrector method (17) can be stated as follows.

Theorem 1.

Proof.

We can obtain from (8), (12), (15) and (17) that

and

By using (1), (5), (6) and Lemma 4, we have

It follows from (3) and Lemma 3 that

and

Then, we obtain from (22)–(27) that

and

Substituting (28) into (29) gives

for a fixed constant with the use of Lemma 3. Applying Lemma 2 to (30) gives

The proof is, thus, complete. □

4. Construction of the Fast Algorithm

Due to the nonlocality of the fractional derivatives, our predictor–corrector method (17) also requires high computational work and storage. In order to overcome this difficulty, inspired by Jiang et al. [31], in this section we use the corresponding sum-of-exponentials (SOE) technique to improve the computational efficiency of the predictor–corrector method (17). Before deriving the fast predictor–corrector method, we give the following lemma for the SOE approximation.

Lemma 5 ([31], Section 2.1). For the given , an absolute tolerance error ϵ, a cut-off time and a final time T, there exist a positive integer , positive quadrature nodes , and corresponding positive weights () such that

where

We now describe the fast predictor–corrector method. From (12), we obtain

where for and

By using a recursive relation, one has that

where

Similarly, we have from (15) that

The prediction-stage and correction-stage approximations of are denoted by and , respectively. Set and . Then, we obtain the fast predictor–corrector method for Problem (1) from (31)–(33):

The next result is the fundamental convergence bound for our fast predictor–corrector method (34).

Lemma 6.

Let . Suppose that for . For , we define

and

Then, we have

Proof.

We can obtain that

where we used Lemmas 4 and 5. The proof of the bound of is similar. □

The following theorem can easily be obtained by repeating the proof of Theorem 1.

5. Numerical Examples

We present some numerical examples to check the convergence orders and the efficiency of the proposed predictor–corrector method (17) and fast predictor–corrector method (34). For convenience, we denote these two methods as PCM and fPCM, respectively.

Example 1.

Consider the following FDEs with :

The exact solution of (35) is , where

is the Mittag-Leffler function.
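For readers who want to evaluate such a reference solution, the two-parameter Mittag-Leffler series E_{alpha,beta}(z) = sum_{k>=0} z^k / Gamma(alpha k + beta) can be summed directly when |z| is moderate and alpha is not too small. The sketch below is a naive truncated series under those assumptions, not a robust evaluator; whether the example uses the one- or two-parameter form is not assumed here, and the function name `mittag_leffler` is hypothetical.

```python
import math

def mittag_leffler(z, alpha, beta=1.0, max_terms=200, tol=1e-16):
    """Truncated power series for E_{alpha,beta}(z); real z of moderate size."""
    total = 0.0
    for k in range(max_terms):
        # exp(-lgamma(.)) avoids overflow of Gamma for large arguments.
        term = z**k * math.exp(-math.lgamma(alpha * k + beta))
        total += term
        if k > 0 and abs(term) < tol * max(1.0, abs(total)):
            break
    return total

# Sanity checks against classical special cases.
print(mittag_leffler(1.0, 1.0), math.e)            # E_{1,1}(z) = exp(z)
print(mittag_leffler(-1.0, 2.0), math.cos(1.0))    # E_{2,1}(-z^2) = cos(z)
```

For large |z| the series suffers from cancellation and slow convergence, so a dedicated algorithm should be used in that regime.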

Since

that is, behaves as . Set and . Through Theorems 1 and 2, we have

for PCM (17) and fPCM (34), respectively.

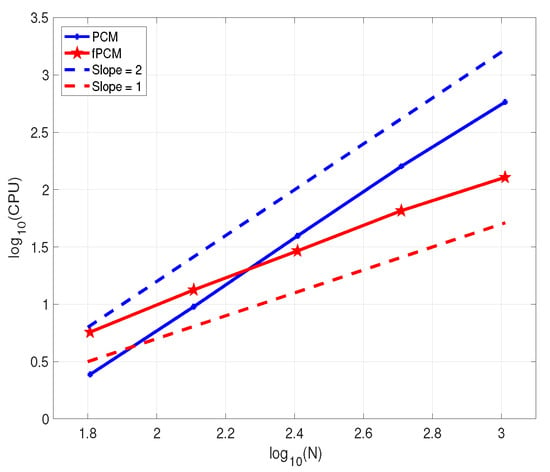

In our calculations, for fPCM, we take . In addition, to present the results, we define to measure the convergence order of the methods, where can be or . Applying PCM and fPCM to Problem (35) with different and r yields a series of numerical solutions. For simplicity, Table 1 displays only the maximal nodal errors, convergence orders, and CPU times in seconds of PCM and fPCM for Problem (35) with . “EOC” in each column of p denotes the expected order of convergence given in (36), and “CPU” denotes the total CPU time in seconds that each method takes to solve (35). As Table 1 shows, PCM and fPCM have almost the same maximal nodal errors and convergence orders because, as shown in (36), the influence of the SOE approximation error is negligible when the tolerance is chosen to be very small. In terms of CPU time, Figure 1 shows that fPCM takes less time than PCM, and this advantage becomes more pronounced as the number of time steps N increases. When N is rather small, however, fPCM is no more efficient than PCM. Moreover, Figure 1 shows that the CPU time of PCM scales like , whereas that of fPCM scales only like .
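The cost saving of an SOE-based method rests on the fact that a history integral with an exponential kernel can be updated from one time level to the next instead of being re-summed over all previous steps. The sketch below illustrates this recursion for a single exponential mode on a nonuniform mesh, assuming the integrand is piecewise linear between mesh points. The names `exp_history`, `g` and `s` are hypothetical, and the code is an illustration of the principle rather than the authors' implementation.

```python
import numpy as np

def exp_history(t, g, s):
    """H[n] = int_0^{t_n} exp(-s*(t_n - u)) g(u) du for piecewise-linear g.

    Uses H[n] = exp(-s*tau_n) * H[n-1] + (integral over the last interval),
    so every step costs O(1) regardless of how long the history is.
    """
    H = np.zeros(len(t))
    for n in range(1, len(t)):
        tau = t[n] - t[n - 1]
        decay = np.exp(-s * tau)
        phi0 = (1.0 - decay) / s          # int of the kernel over the last step
        phi1 = (1.0 - phi0 / tau) / s     # same, weighted by the hat function at t_n
        H[n] = decay * H[n - 1] + g[n - 1] * (phi0 - phi1) + g[n] * phi1
    return H

# Check against the closed form for g(u) = u on a graded mesh.
t = (np.arange(51) / 50.0)**2
s = 3.0
exact = t / s - (1.0 - np.exp(-s * t)) / s**2
print(np.max(np.abs(exp_history(t, t, s) - exact)))
```

With one such recursion per exponential mode, the work per time step is proportional to the number of modes rather than to the number of previous steps.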

Table 1.

Maximal nodal errors, convergence orders, and CPU times of PCM and fPCM for Problem (35) with .

Figure 1.

Total number of time steps N versus CPU times of PCM and fPCM in log–log scale for Problem (35) with .

Example 2.

Consider the following Benjamin–Bona–Mahony–Burgers equation:

where the function f and the initial-boundary value conditions are determined by the exact solution .

Similarly to (4), Equation (37) can be rewritten as the following integrodifferential equation.

where

Let M be a positive integer. Set for . By applying the centered difference schemes and to numerically approximate and , respectively, we can obtain the corresponding PCM and fPCM for (37).
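Centered difference approximations of first and second spatial derivatives can be sketched as follows, assuming a uniform spatial grid with spacing h and values stored in a one-dimensional array; the function name `centered_differences` is hypothetical and boundary points are left to the boundary conditions.

```python
import numpy as np

def centered_differences(u, h):
    """Second-order centered approximations of u_x and u_xx at interior points."""
    ux = (u[2:] - u[:-2]) / (2.0 * h)
    uxx = (u[2:] - 2.0 * u[1:-1] + u[:-2]) / h**2
    return ux, uxx

# Check on u(x) = sin(x) over [0, pi] with M = 200 subintervals.
M = 200
x = np.linspace(0.0, np.pi, M + 1)
ux, uxx = centered_differences(np.sin(x), np.pi / M)
print(np.max(np.abs(ux - np.cos(x[1:-1]))), np.max(np.abs(uxx + np.sin(x[1:-1]))))
```

Both approximations are second-order accurate in h for smooth functions, which is the spatial order one would expect to observe in the tables.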

One can check that

behaves as . For , set , where and are the predictor–corrector method solution and the fast predictor–corrector method solution of (37). Set and . Similarly to [22], we use discrete norm to calculate the errors. Let and . Then, one has

for PCM and fPCM, respectively.

The numerical results are given in Table 2 and Table 3, where the convergence orders in time and space are calculated with

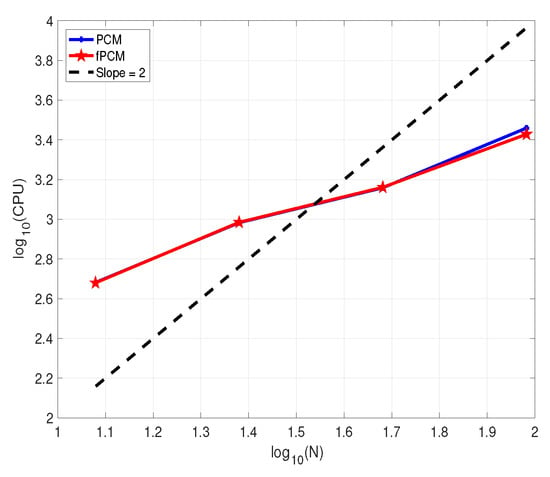

respectively, and can be or . In the fPCM, we set . Tables 2 and 3 show that PCM and fPCM have almost the same accuracy. In terms of CPU time, Table 3 and Figure 2 show that fPCM offers no advantage when N is small, but its advantage becomes clear as N grows.
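Observed convergence orders of this kind are commonly computed from the errors on successively refined meshes. A minimal sketch, assuming the number of steps is multiplied by a fixed ratio (2 by default) between consecutive runs, is shown below; the function name `observed_orders` is hypothetical, and the exact formula used for the tables may differ.

```python
import math

def observed_orders(errors, ratio=2.0):
    """Observed orders log(E_k / E_{k+1}) / log(ratio) for consecutive runs."""
    return [math.log(e0 / e1) / math.log(ratio)
            for e0, e1 in zip(errors, errors[1:])]

# Errors that shrink by a factor of 4 per refinement indicate second order.
print(observed_orders([1.0e-2, 2.5e-3, 6.25e-4]))
```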

Table 2.

Maximal nodal errors and convergence orders of PCM and fPCM for Problem (37) with and .

Table 3.

Maximal nodal errors, convergence orders, and CPU times of PCM and fPCM for Problem (37) with , and .

Figure 2.

Total number of time steps N versus CPU times of PCM and fPCM in log–log scale for Problem (37) with and .

6. Concluding Remarks

A fast high-order predictor–corrector method was constructed for solving fractional differential equations. Graded meshes were used for time discretization to deal with the weak singularity of the solution near the initial time. Several numerical examples were presented to support our theoretical analysis. Since predictor–corrector methods fail on stiff problems (see [6], Section 5), our fast high-order predictor–corrector method shares this limitation. In future work, we will try to construct implicit–explicit methods by using the technique of our predictor–corrector method to solve stiff fractional differential equations or time-fractional partial differential equations.

Author Contributions

Methodology, Y.Z.; writing—original draft preparation, X.S.; writing—review and editing, X.S. and Y.Z. All authors have read and agreed to the published version of the manuscript.

Funding

The work of Yongtao Zhou is supported in part by the China Postdoctoral Science Foundation under grant 2021M690322, and the National Natural Science Foundation of China for Young Scientists under grant 12101037.

Data Availability Statement

Not applicable.

Acknowledgments

The authors sincerely thank the reviewers for their constructive comments to improve the manuscript.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Abbreviations

The following abbreviations are used in this manuscript:

| SOE | Sum of exponentials |
| FDEs | Fractional differential equations |
| PCM | Predictor–corrector method |
| fPCM | Fast predictor–corrector method |

Appendix A. Proof of Lemma 4

Proof.

By using , for

and, for

We similarly derive

We first consider the estimate of . When , with the use of (7) and (A1), we obtain

When , it follows from (7), (A1), and (A2) that

For some

Then, when , one obtains from , (A1), (A2) and (A4) that

For , we can obtain from (7) that

For , recalling (7) and noting that, for

Therefore

where the well-known convergence result for the series

was used. For , with the use of (7), one obtains for that

Then, one sees that

For , again by using (7), one can obtain that

Substituting (A6)–(A9) into (A5) gives

References

1. Diethelm, K. The Analysis of Fractional Differential Equations; Lecture Notes in Mathematics; Springer: Berlin, Germany, 2010; Volume 2004, pp. viii+247.

2. Jin, B.; Lazarov, R.; Zhou, Z. Numerical methods for time-fractional evolution equations with nonsmooth data: A concise overview. Comput. Methods Appl. Mech. Engrg. 2019, 346, 332–358.

3. Chen, H.; Stynes, M. Error analysis of a second-order method on fitted meshes for a time-fractional diffusion problem. J. Sci. Comput. 2019, 79, 624–647.

4. Kopteva, N.; Meng, X. Error analysis for a fractional-derivative parabolic problem on quasi-graded meshes using barrier functions. SIAM J. Numer. Anal. 2020, 58, 1217–1238.

5. Stynes, M.; O’Riordan, E.; Gracia, J.L. Error analysis of a finite difference method on graded meshes for a time-fractional diffusion equation. SIAM J. Numer. Anal. 2017, 55, 1057–1079.

6. Cao, W.; Zeng, F.; Zhang, Z.; Karniadakis, G.E. Implicit-explicit difference schemes for nonlinear fractional differential equations with nonsmooth solutions. SIAM J. Sci. Comput. 2016, 38, A3070–A3093.

7. Diethelm, K.; Freed, A.D. The FracPECE subroutine for the numerical solution of differential equations of fractional order. Forsch. Und Wiss. Rechn. 1998, 1999, 57–71.

8. Diethelm, K.; Ford, N.J. Analysis of fractional differential equations. J. Math. Anal. Appl. 2002, 265, 229–248.

9. Diethelm, K.; Ford, N.J.; Freed, A.D. A predictor-corrector approach for the numerical solution of fractional differential equations. Nonlinear Dyn. 2002, 29, 3–22.

10. Diethelm, K.; Ford, N.J.; Freed, A.D. Detailed error analysis for a fractional Adams method. Numer. Algorithms 2004, 36, 31–52.

11. Li, C.; Yi, Q.; Chen, A. Finite difference methods with non-uniform meshes for nonlinear fractional differential equations. J. Comput. Phys. 2016, 316, 614–631.

12. Liu, Y.; Roberts, J.; Yan, Y. Detailed error analysis for a fractional Adams method with graded meshes. Numer. Algorithms 2018, 78, 1195–1216.

13. Liu, Y.; Roberts, J.; Yan, Y. A note on finite difference methods for nonlinear fractional differential equations with non-uniform meshes. Int. J. Comput. Math. 2018, 95, 1151–1169.

14. Lubich, C. Fractional linear multistep methods for Abel-Volterra integral equations of the second kind. Math. Comp. 1985, 45, 463–469.

15. Zhou, Y.; Suzuki, J.L.; Zhang, C.; Zayernouri, M. Implicit-explicit time integration of nonlinear fractional differential equations. Appl. Numer. Math. 2020, 156, 555–583.

16. Inc, M. The approximate and exact solutions of the space-and time-fractional Burgers equations with initial conditions by variational iteration method. J. Math. Anal. Appl. 2008, 345, 476–484.

17. Jafari, H.; Daftardar-Gejji, V. Solving linear and nonlinear fractional diffusion and wave equations by Adomian decomposition. Appl. Math. Comput. 2006, 180, 488–497.

18. Jin, B.; Lazarov, R.; Pasciak, J.; Zhou, Z. Error analysis of a finite element method for the space-fractional parabolic equation. SIAM J. Numer. Anal. 2014, 52, 2272–2294.

19. Zayernouri, M.; Karniadakis, G.E. Discontinuous spectral element methods for time- and space-fractional advection equations. SIAM J. Sci. Comput. 2014, 36, B684–B707.

20. Deng, W. Short memory principle and a predictor-corrector approach for fractional differential equations. J. Comput. Appl. Math. 2007, 206, 174–188.

21. Nguyen, T.B.; Jang, B. A high-order predictor-corrector method for solving nonlinear differential equations of fractional order. Fract. Calc. Appl. Anal. 2017, 20, 447–476.

22. Zhou, Y.; Li, C.; Stynes, M. A fast second-order predictor-corrector method for a nonlinear time-fractional Benjamin-Bona-Mahony-Burgers equation. 2022; submitted to Numer. Algorithms.

23. Zhou, Y.; Stynes, M. Block boundary value methods for linear weakly singular Volterra integro-differential equations. BIT Numer. Math. 2021, 61, 691–720.

24. Zhou, Y.; Stynes, M. Block boundary value methods for solving linear neutral Volterra integro-differential equations with weakly singular kernels. J. Comput. Appl. Math. 2022, 401, 113747.

25. Zhou, B.; Chen, X.; Li, D. Nonuniform Alikhanov linearized Galerkin finite element methods for nonlinear time-fractional parabolic equations. J. Sci. Comput. 2020, 85, 39.

26. Lubich, C. Discretized fractional calculus. SIAM J. Math. Anal. 1986, 17, 704–719.

27. Zeng, F.; Zhang, Z.; Karniadakis, G.E. Second-order numerical methods for multi-term fractional differential equations: Smooth and non-smooth solutions. Comput. Methods Appl. Mech. Engrg. 2017, 327, 478–502.

28. Li, D.; Sun, W.; Wu, C. A novel numerical approach to time-fractional parabolic equations with nonsmooth solutions. Numer. Math. Theory Methods Appl. 2021, 14, 355–376.

29. She, M.; Li, D.; Sun, H.W. A transformed L1 method for solving the multi-term time-fractional diffusion problem. Math. Comput. Simul. 2022, 193, 584–606.

30. Lubich, C. Runge-Kutta theory for Volterra and Abel integral equations of the second kind. Math. Comp. 1983, 41, 87–102.

31. Jiang, S.; Zhang, J.; Zhang, Q.; Zhang, Z. Fast evaluation of the Caputo fractional derivative and its applications to fractional diffusion equations. Commun. Comput. Phys. 2017, 21, 650–678.

32. Li, D.; Zhang, C. Long time numerical behaviors of fractional pantograph equations. Math. Comput. Simul. 2020, 172, 244–257.

33. Yan, Y.; Sun, Z.Z.; Zhang, J. Fast evaluation of the Caputo fractional derivative and its applications to fractional diffusion equations: A second-order scheme. Commun. Comput. Phys. 2017, 22, 1028–1048.

34. Liao, H.L.; Tang, T.; Zhou, T. A second-order and nonuniform time-stepping maximum-principle preserving scheme for time-fractional Allen-Cahn equations. J. Comput. Phys. 2020, 414, 109473.

35. Ran, M.; Lei, X. A fast difference scheme for the variable coefficient time-fractional diffusion wave equations. Appl. Numer. Math. 2021, 167, 31–44.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).