Distributed Optimization for Fractional-Order Multi-Agent Systems Based on Adaptive Backstepping Dynamic Surface Control Technology

Abstract

1. Introduction

- (1) Compared with [19,20,21,22,23], where neural network (NN)-based adaptive backstepping dynamic surface control (DSC) algorithms were proposed for the consensus problem of multi-agent systems (MASs), we solve the distributed optimization problem for fractional-order MASs (FOMASs) with unmeasured states. To this end, we construct a Lyapunov function for the control strategy based on the penalty function and the negative gradient method. The proposed distributed control method ensures that all agents' outputs asymptotically reach consensus at the optimal solution of the global objective function, rather than tracking a reference trajectory.

- (2)

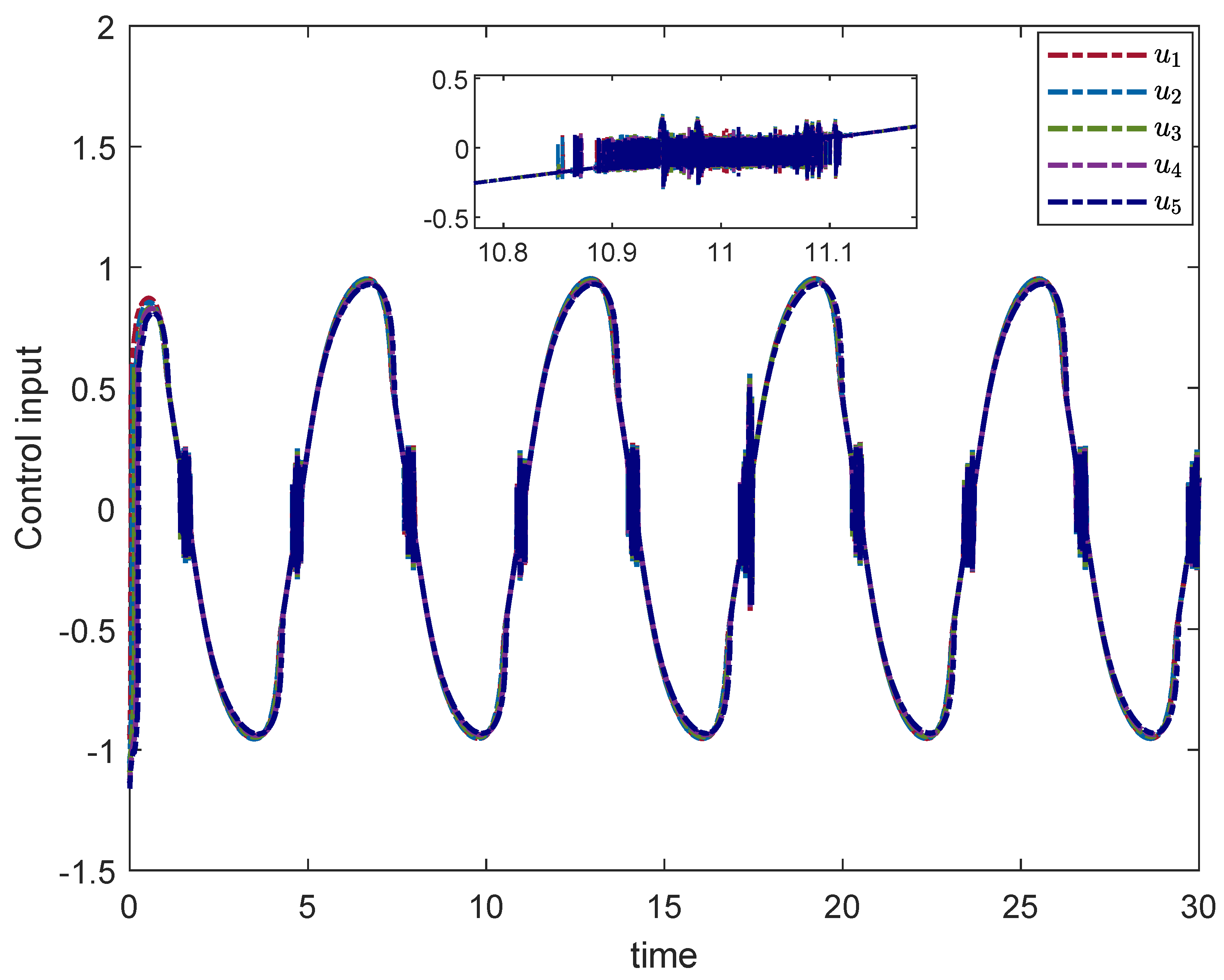

- Different from [12,16], in which the distributed optimization algorithms are developed for first-order and second-order MASs, this article mainly focuses on the distributed optimization problem for fractional high-order MASs with unmeasured states and the unknown nonlinear functions. To overcome this challenge, we propose the distributed optimized adaptive backstepping controller based on the fractional DSC method, which is widely used in fractional high-order MASs, and the controller we propose has excellent performance in fractional-order MASs, which will be reflected in the Simulation section when comparing with [16].

- (3) In contrast to [17], which investigates an adaptive backstepping protocol for the distributed optimization of integer-order MASs with each agent modeled in strict-feedback form, this article addresses FOMASs with unmeasured states. Unknown nonlinear functions are also considered and are approximated by NNs.
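The penalty-function and negative-gradient idea mentioned in the contributions above can be illustrated with a minimal Python sketch. It is not the fractional-order controller of this article: it assumes integer-order single-integrator agents, quadratic local costs, an undirected ring graph, and a plain Euler discretization. The names `ring_laplacian`, `penalized_gradient_flow`, `a`, `b`, and `weight` are hypothetical and chosen only for this illustration. With a finite penalty weight, the agents settle near the global optimum rather than exactly on it.

```python
import numpy as np


def ring_laplacian(n):
    """Laplacian of an undirected ring graph with n >= 3 nodes (assumed topology)."""
    lap = 2.0 * np.eye(n)
    for i in range(n):
        lap[i, (i + 1) % n] -= 1.0
        lap[i, (i - 1) % n] -= 1.0
    return lap


def penalized_gradient_flow(a, b, lap, weight, steps=20000):
    """Euler-discretized negative gradient flow of the penalized cost
    P(x) = sum_i 0.5 * a_i * (x_i - b_i)^2 + 0.5 * weight * x^T L x.
    The quadratic local costs are an assumption made for this sketch."""
    x = np.zeros_like(b, dtype=float)
    # Step size below the inverse of an upper bound on the Hessian's largest eigenvalue.
    step = 1.0 / (a.max() + weight * np.linalg.eigvalsh(lap).max())
    for _ in range(steps):
        # Local gradient plus the penalty term that couples neighbouring agents.
        grad = a * (x - b) + weight * (lap @ x)
        x = x - step * grad
    return x


# Hypothetical data: four agents with local costs 0.5 * a_i * (x - b_i)^2.
a = np.array([1.0, 2.0, 3.0, 4.0])
b = np.array([4.0, 3.0, 2.0, 1.0])
lap = ring_laplacian(4)

# Minimizer of the global objective sum_i f_i(x) under exact consensus.
x_star = float(np.sum(a * b) / np.sum(a))

# Agents' states after running the penalized negative gradient flow.
x_pen = penalized_gradient_flow(a, b, lap, weight=100.0)

print("global optimum:", x_star)
print("agent states:  ", x_pen)
```

Increasing `weight` tightens the agreement among agents but also forces a smaller step size in this discretization, which is one reason the penalty weight is treated as a design parameter in such schemes.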

2. Preliminaries

2.1. Fractional Calculus

2.2. Graph Theory

2.3. Fractional-Order Nonlinear Multi-Agent System

2.4. Convex Analysis

2.5. Problem Formulation

3. Main Results

3.1. State Observer Design

3.2. Controller Design

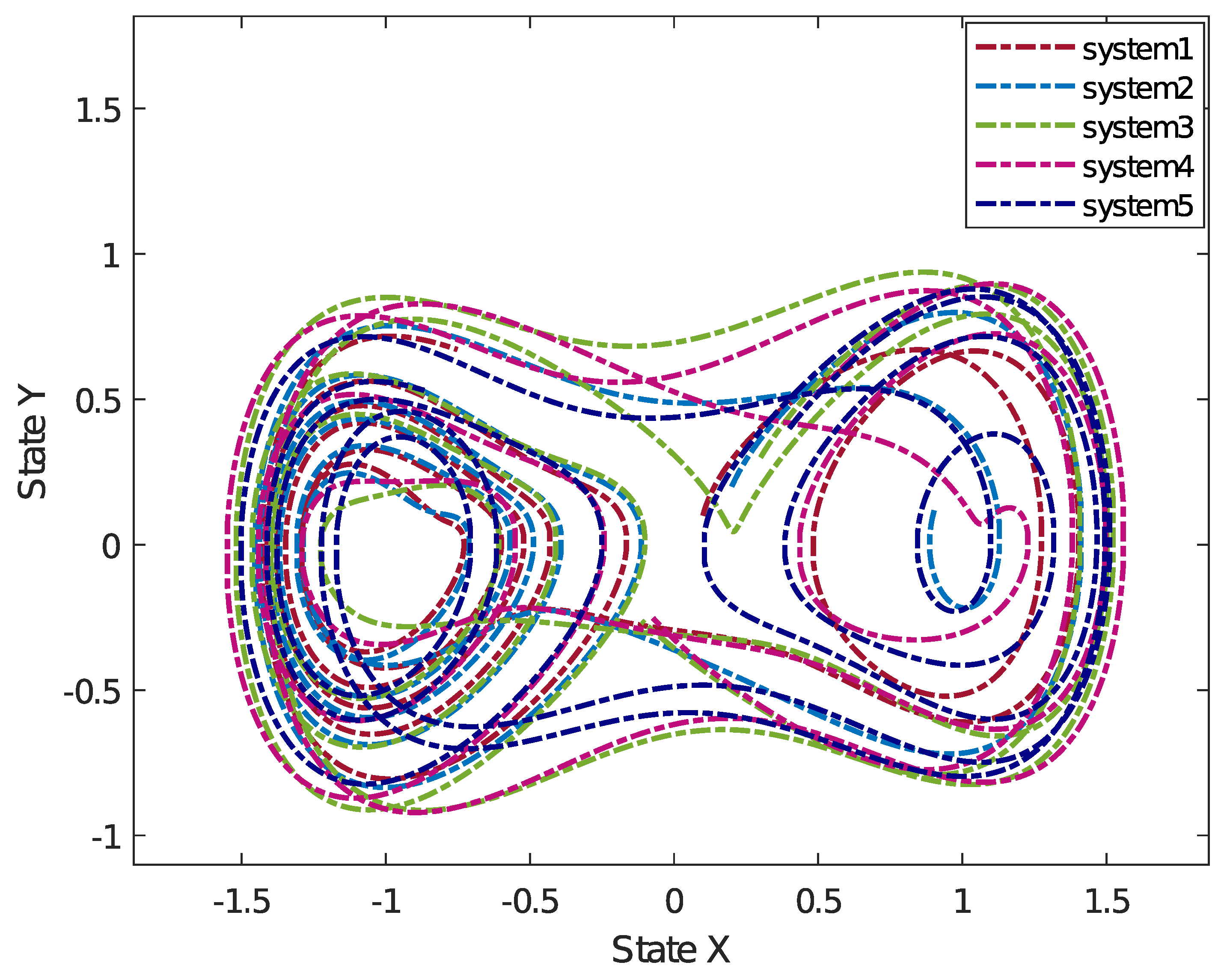

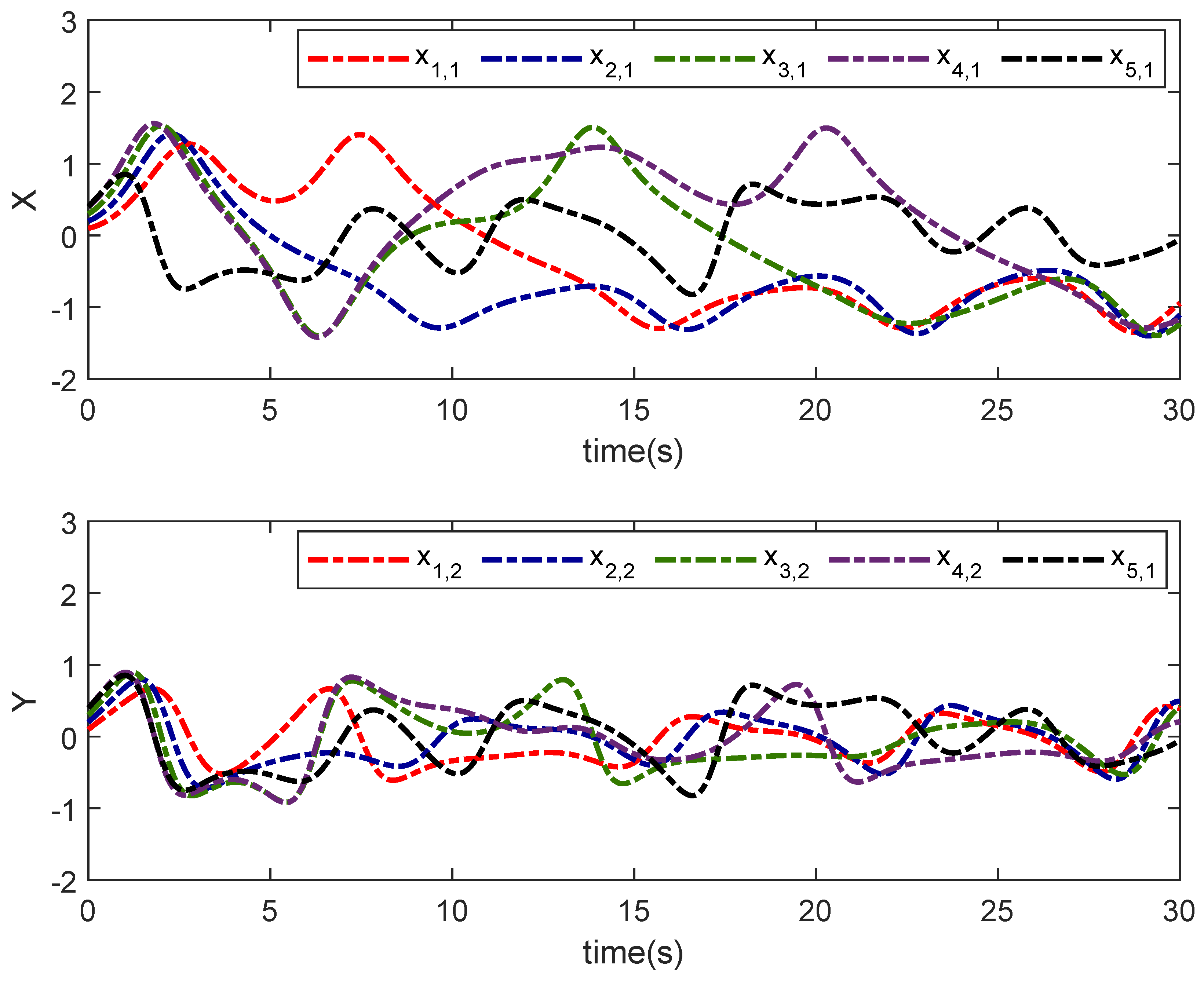

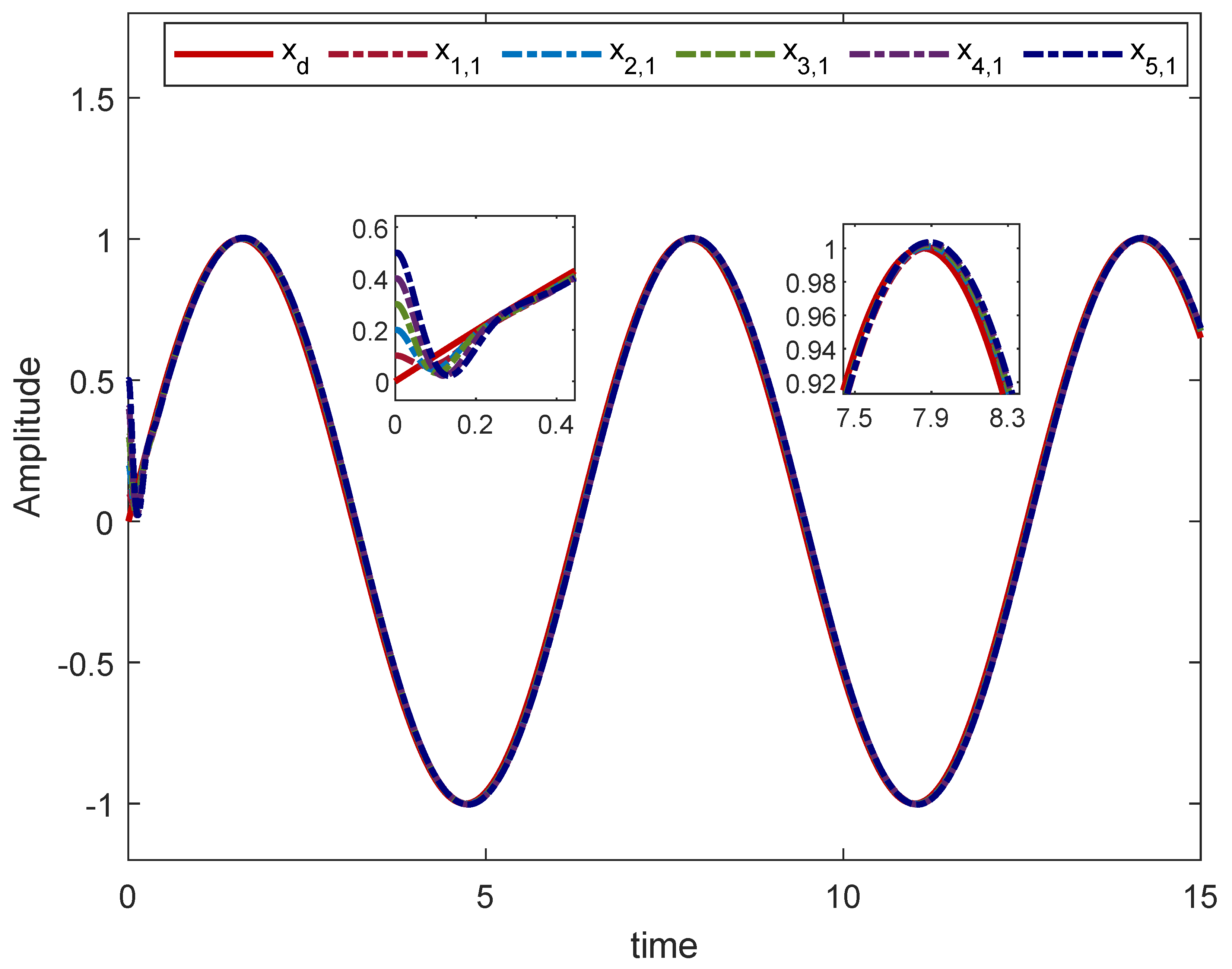

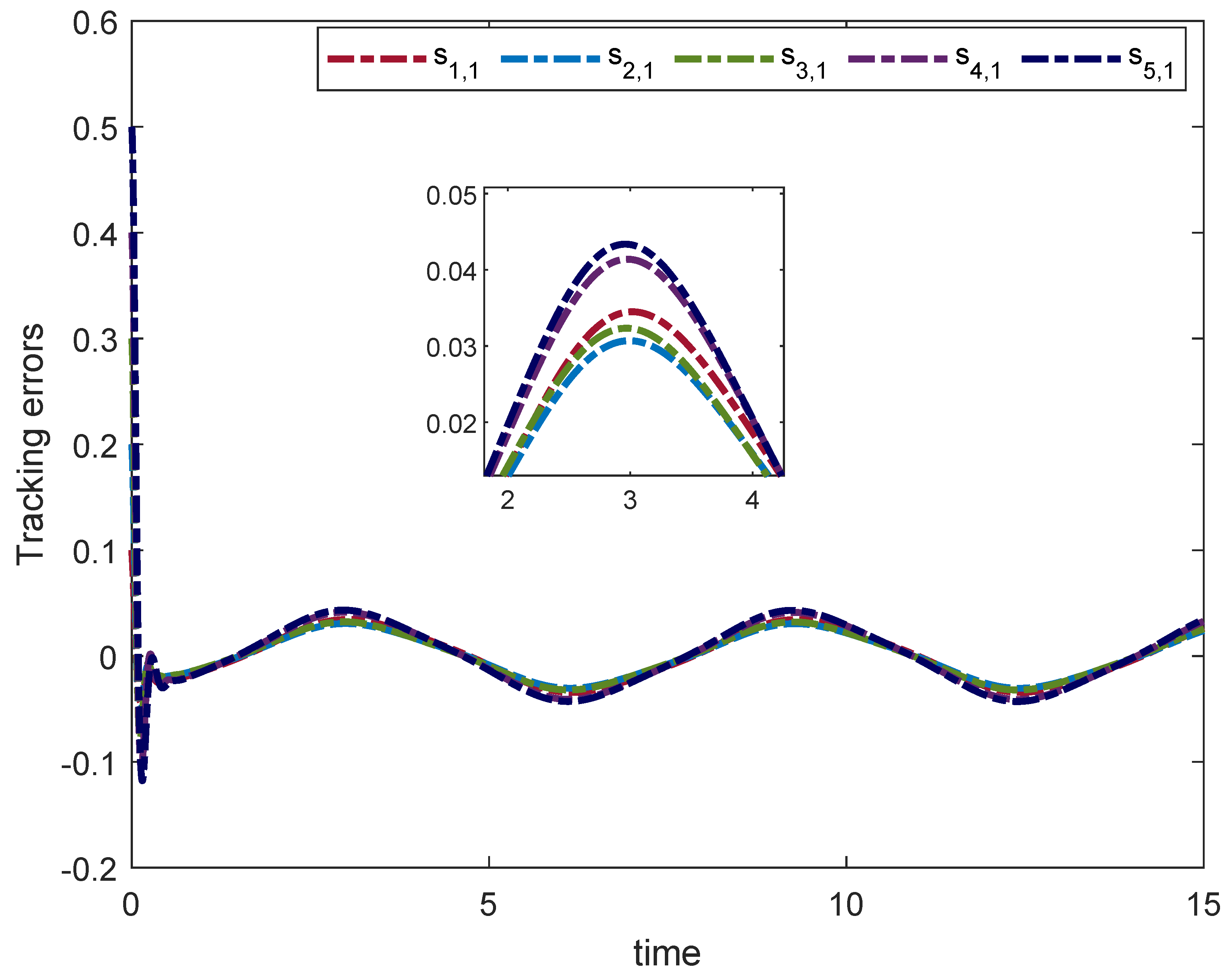

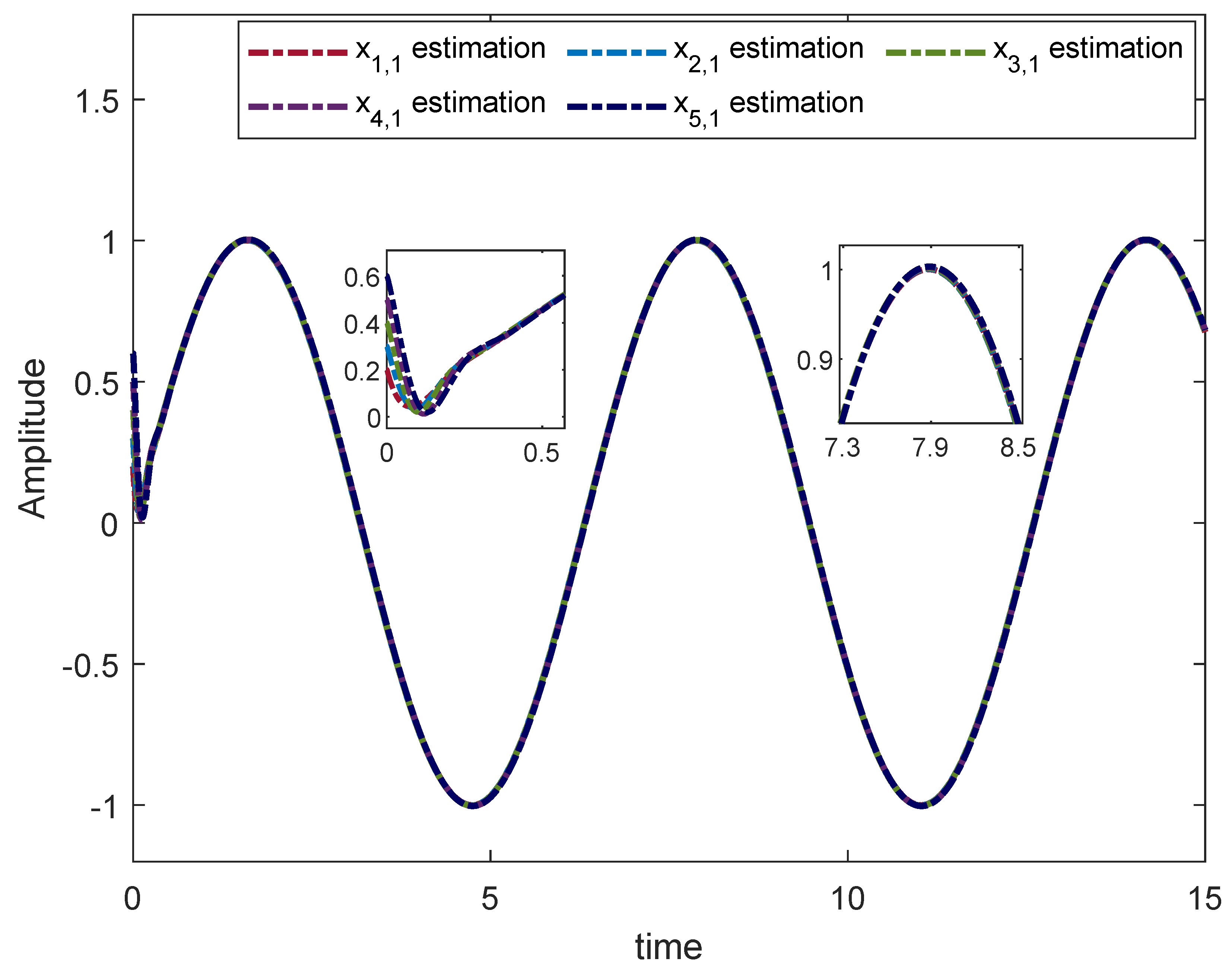

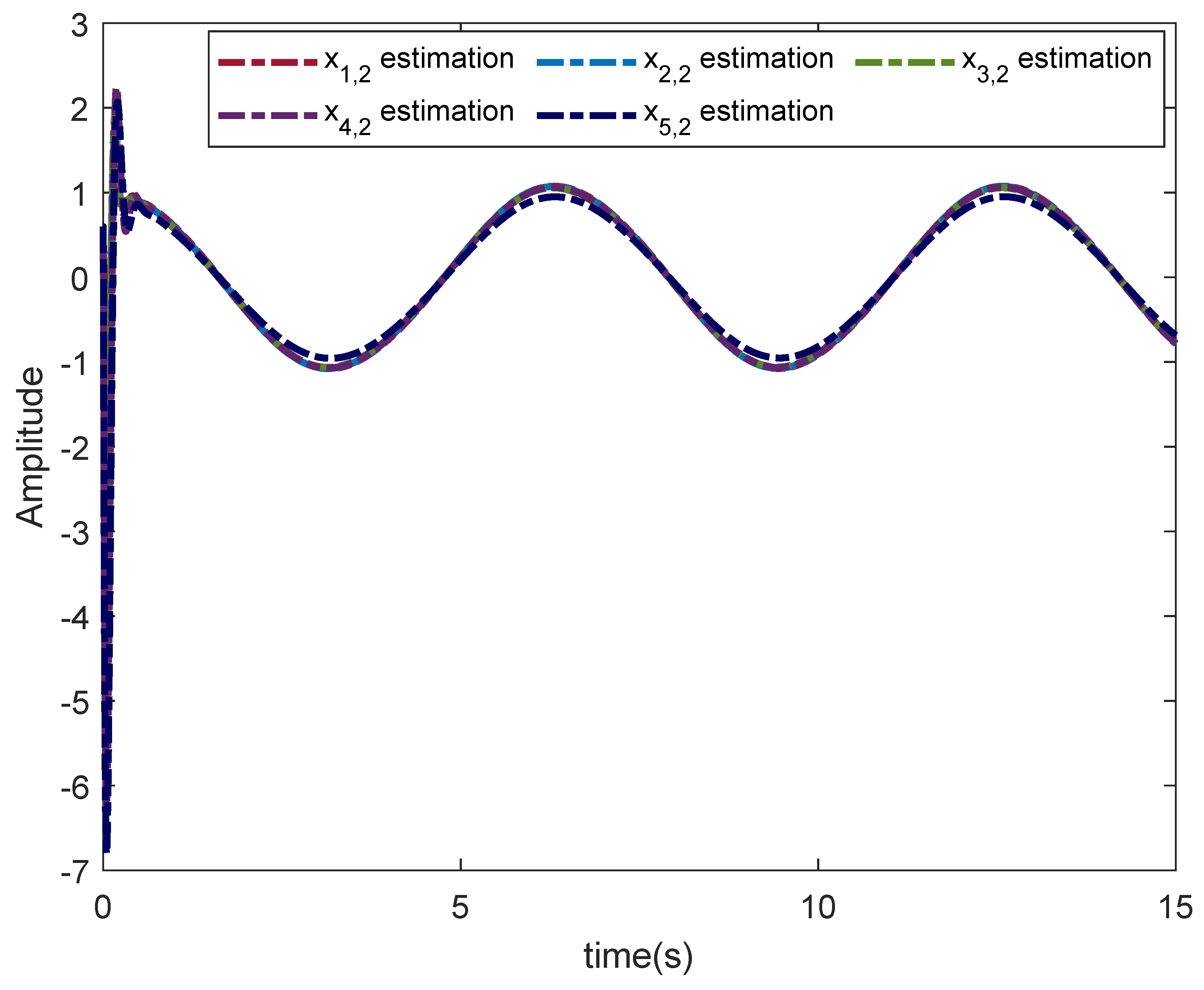

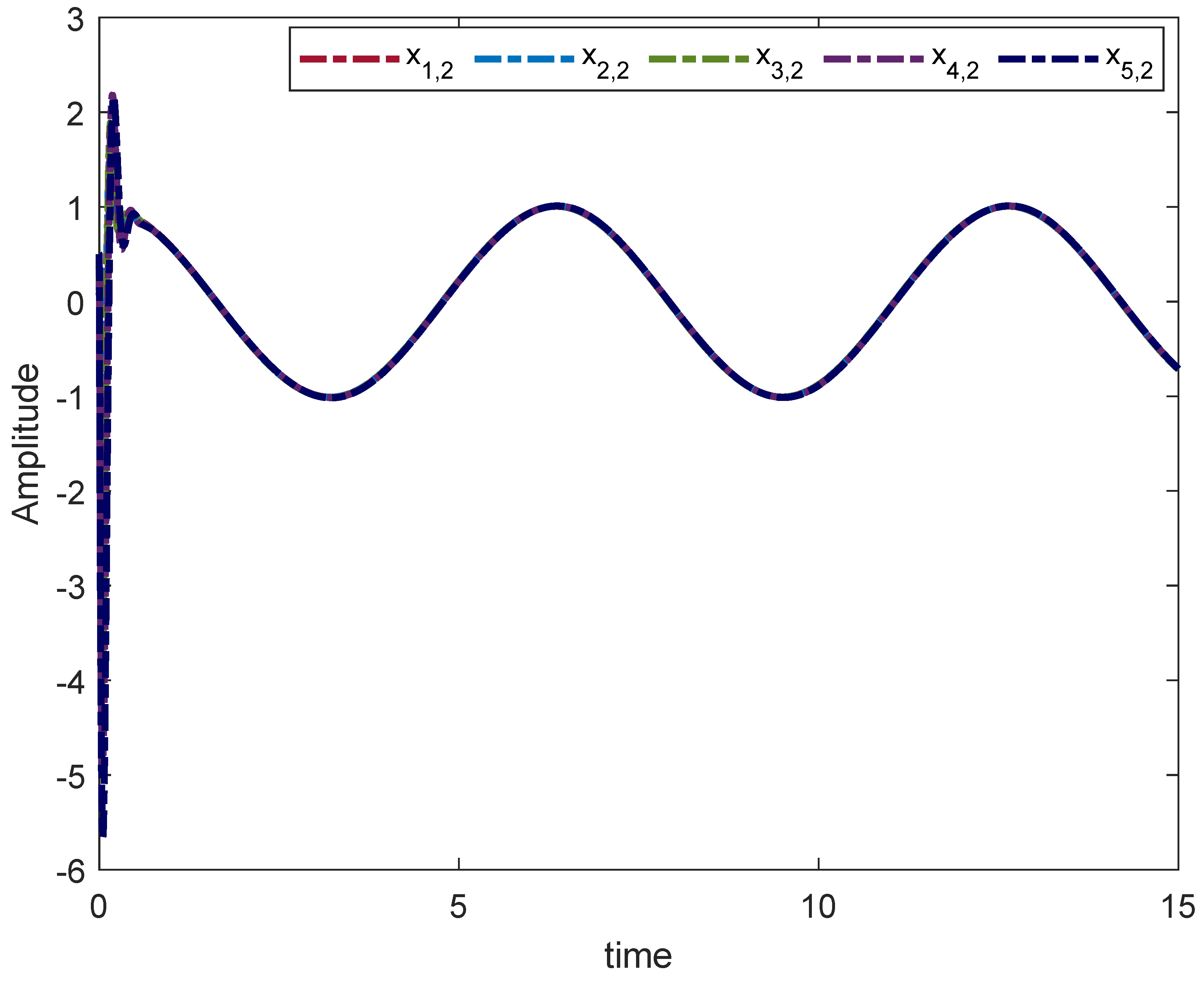

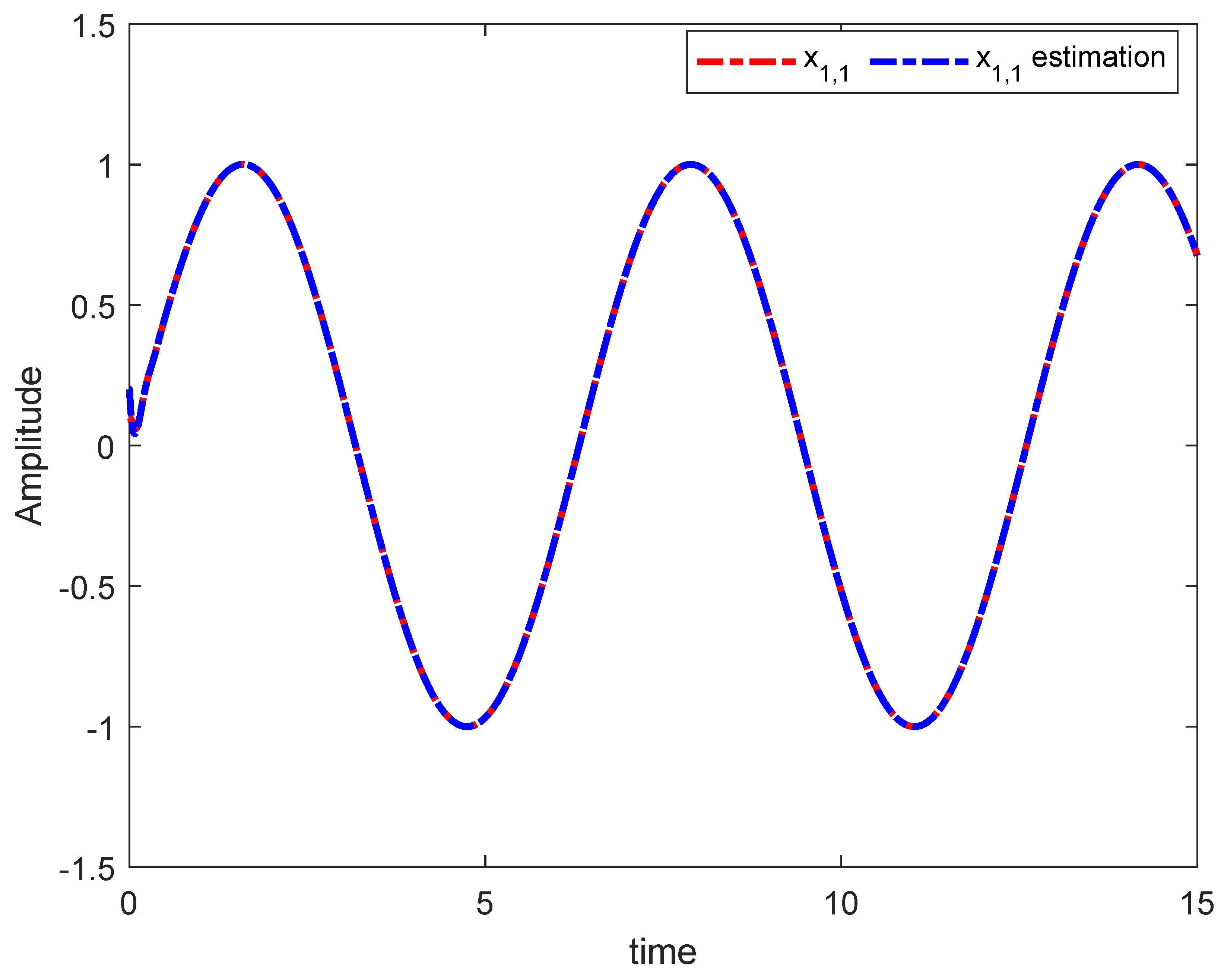

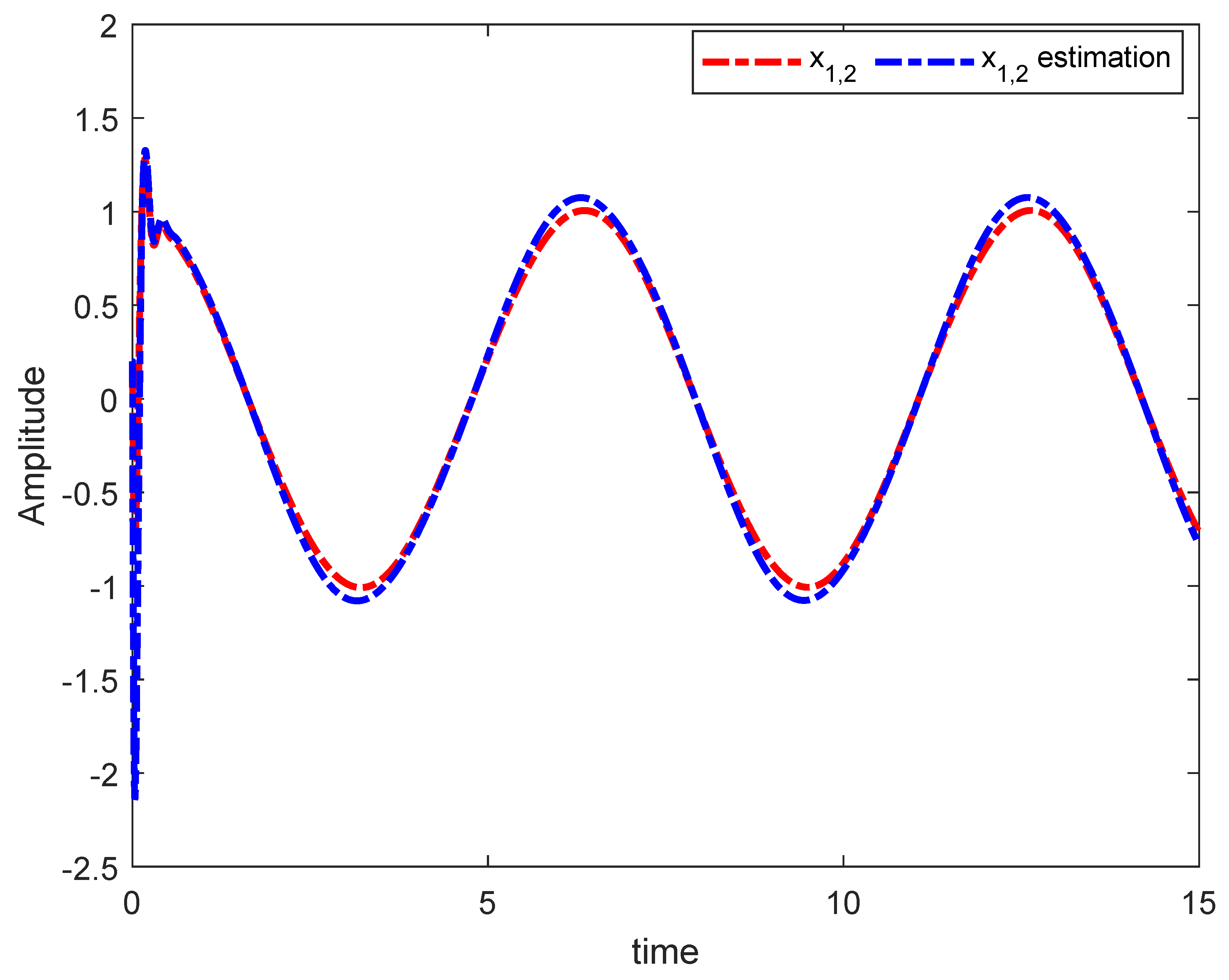

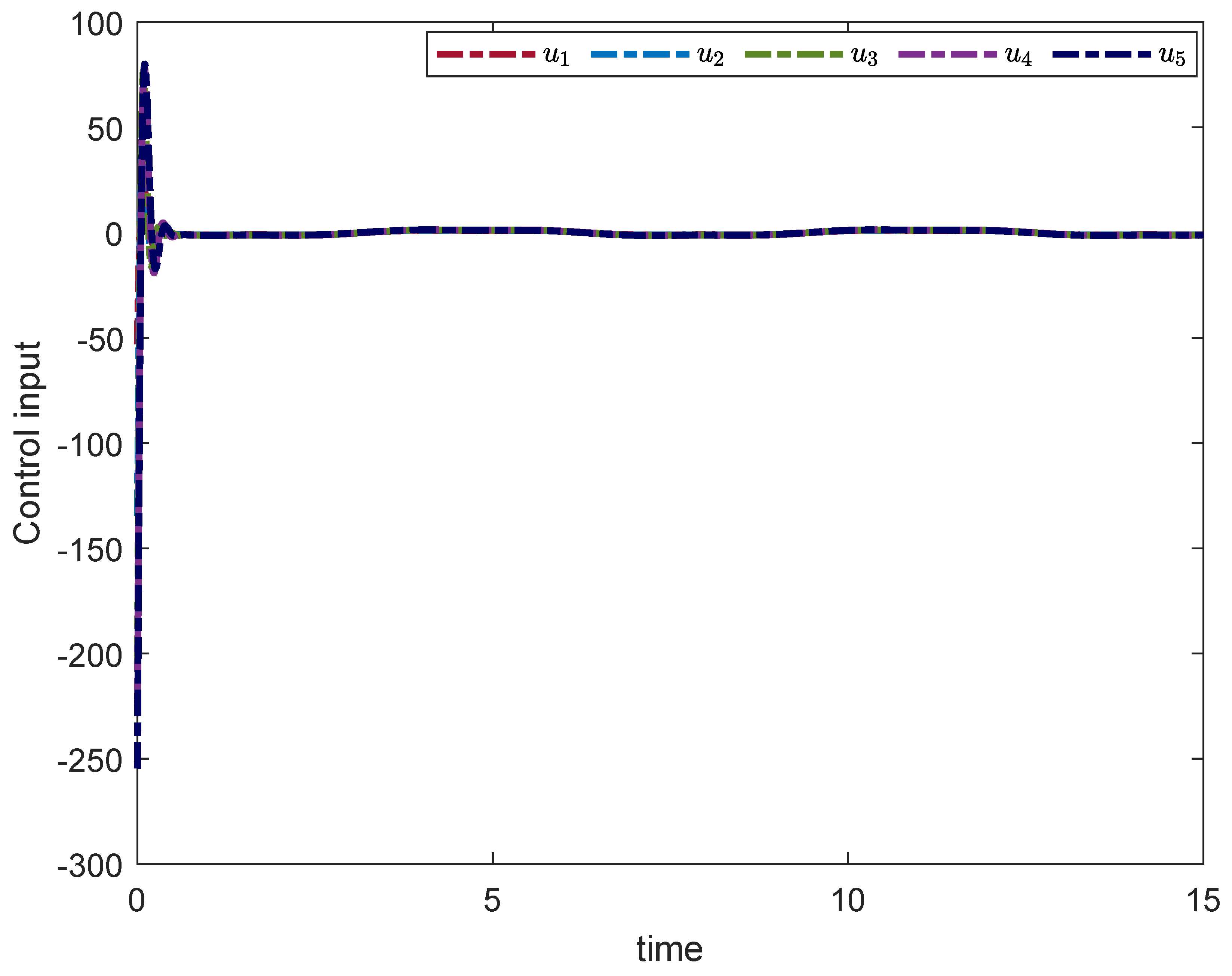

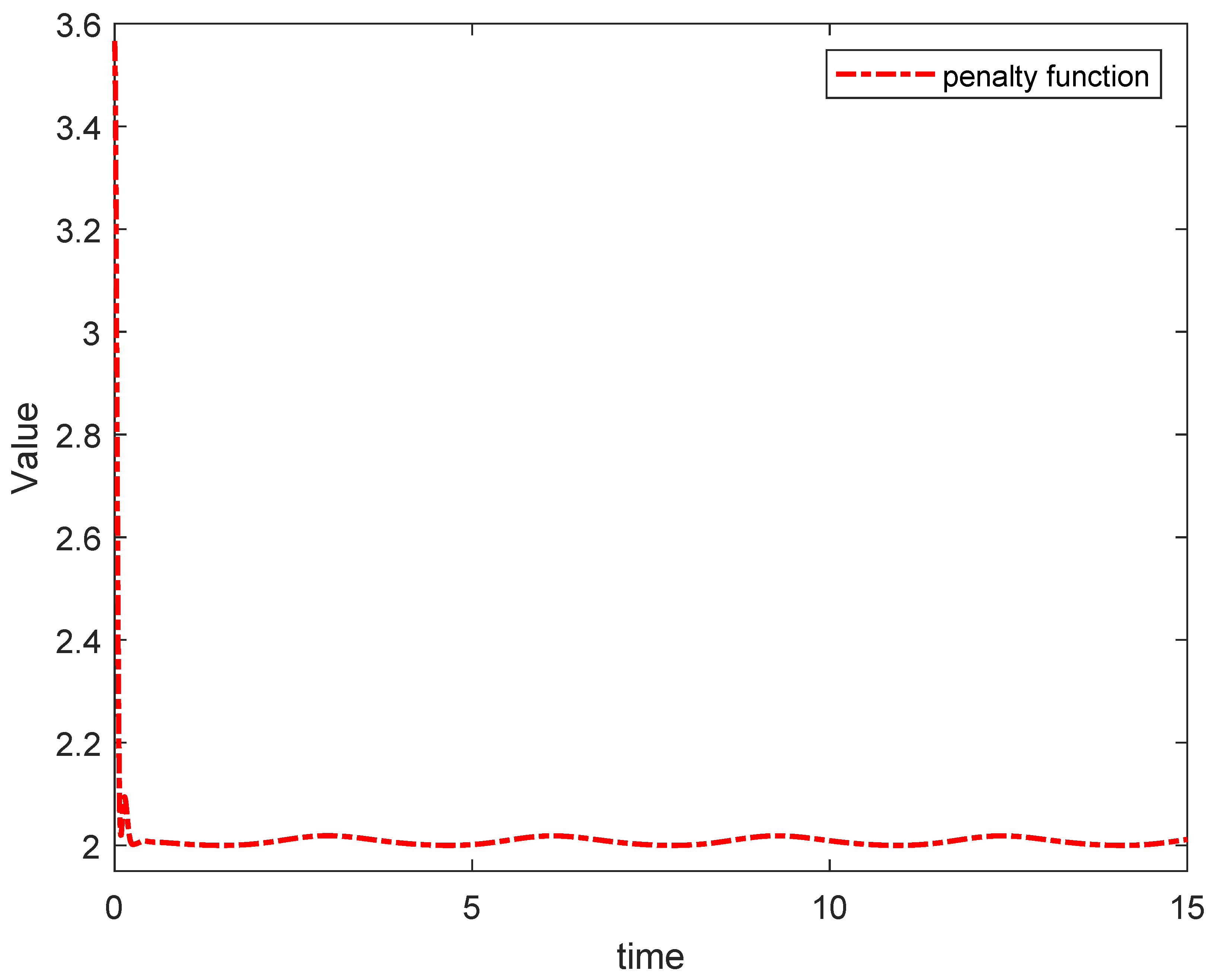

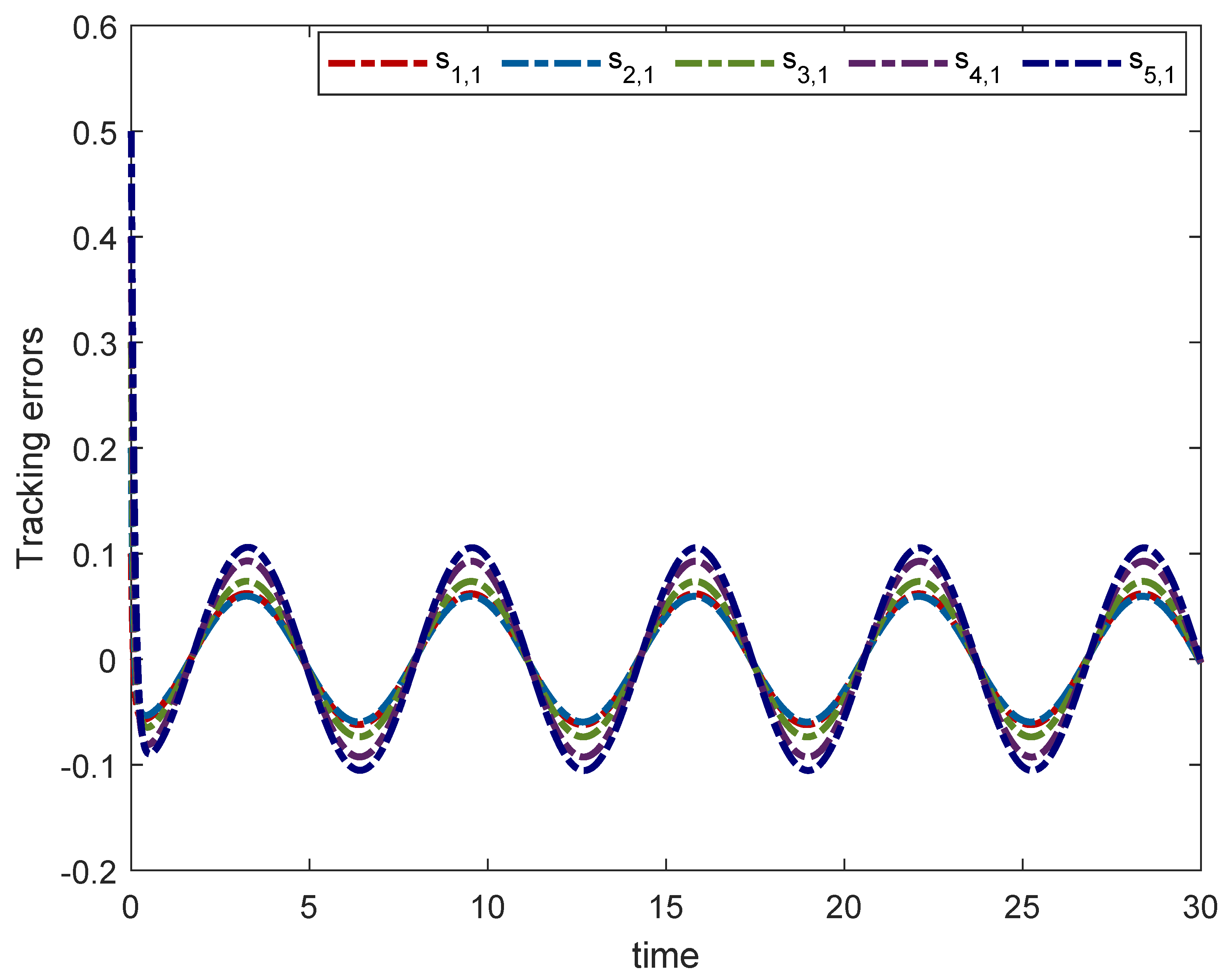

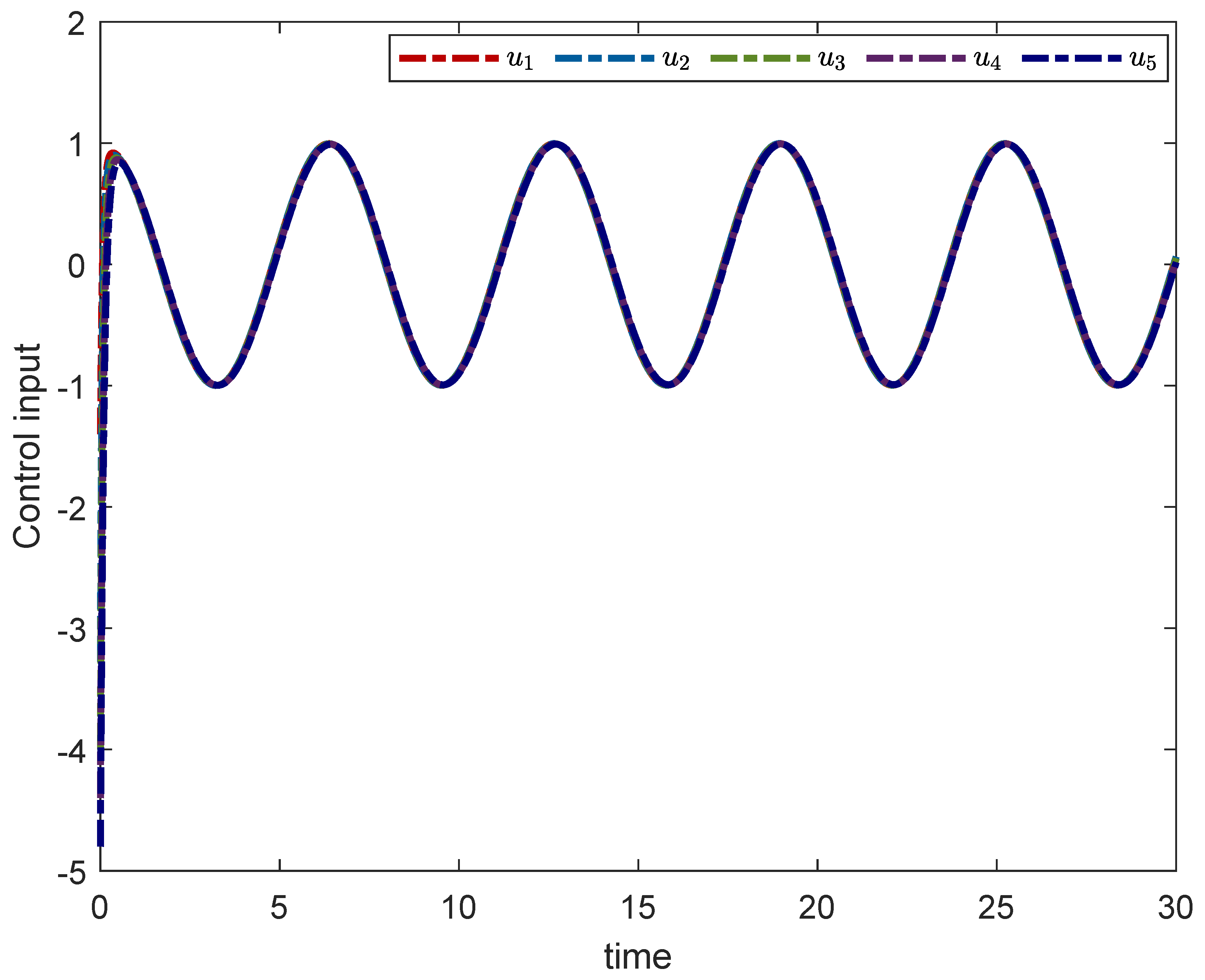

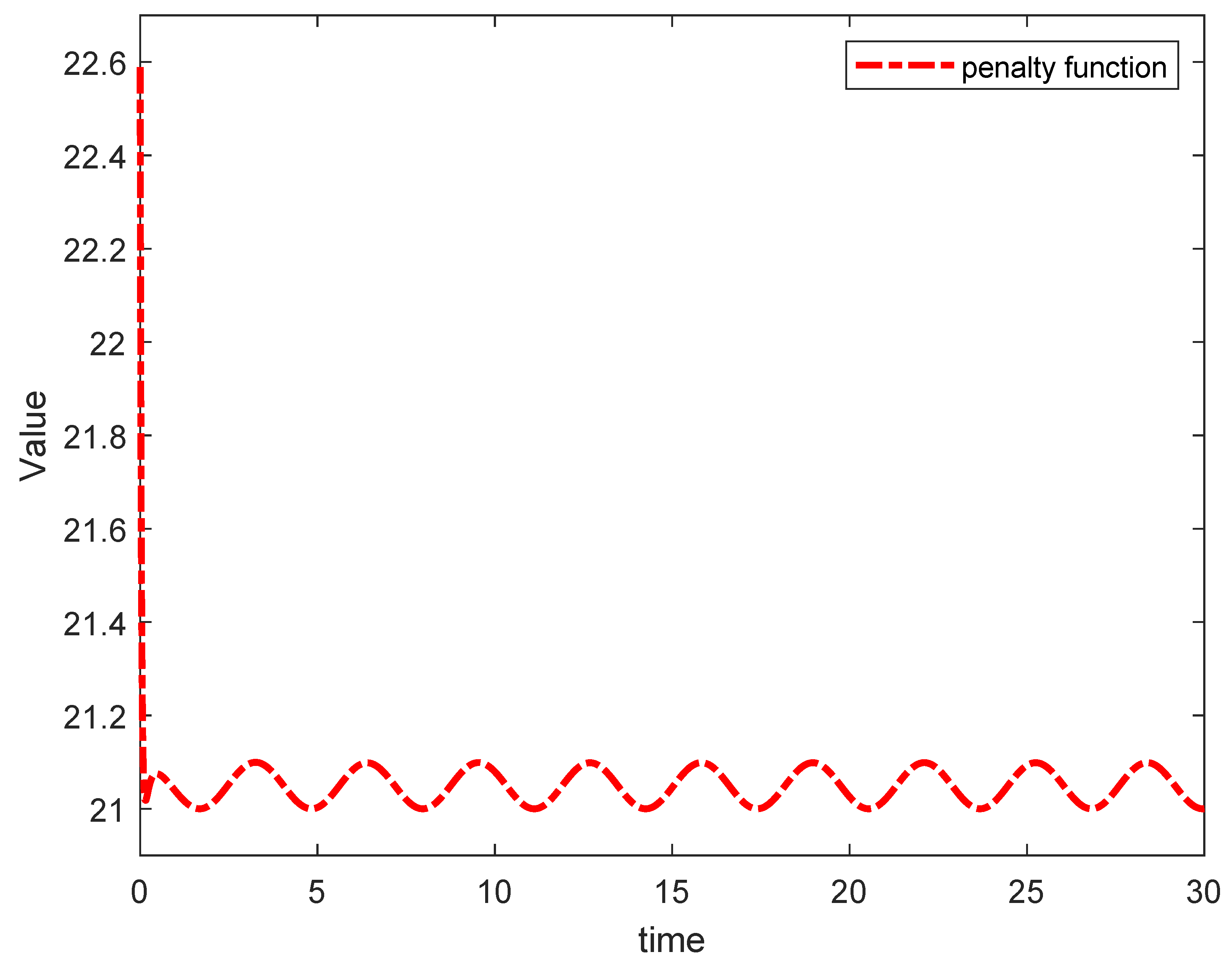

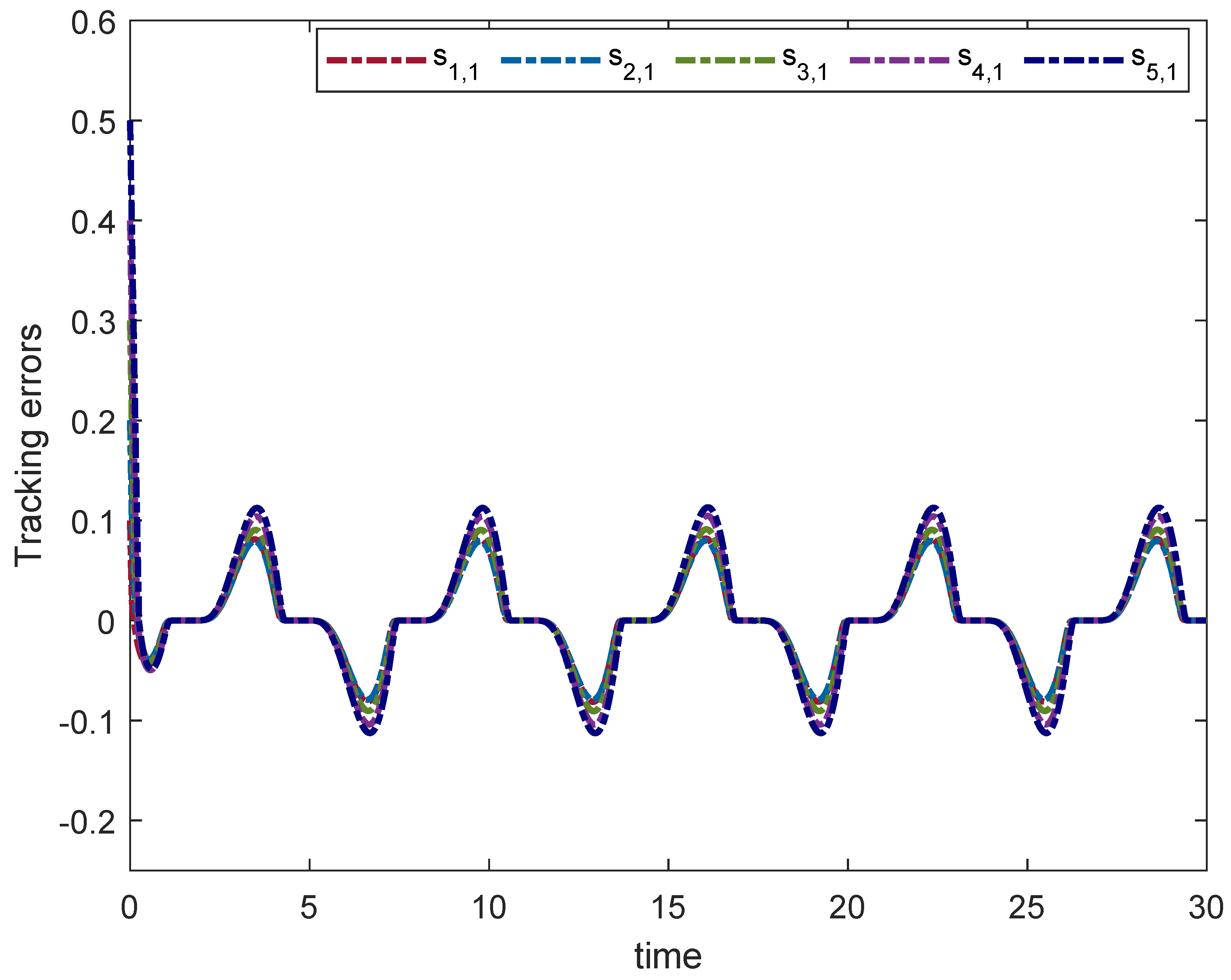

4. Simulation

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Wang, Z.; Wang, L.; Zhang, H.; Vlacic, L.; Chen, Q. Distributed Formation Control of Nonholonomic Wheeled Mobile Robots Subject to Longitudinal Slippage Constraints. IEEE Trans. Syst. Man Cybern. Syst. 2021, 51, 2992–3003.

- Klaimi, J.; Rahim-Amoud, R.; Merghem-Boulahia, L.; Jrad, A. A novel loss-based energy management approach for smart grids using multi-agent systems and intelligent storage systems. Sustain. Cities Soc. 2018, 39, 344–357.

- Taboun, M.S.; Brennan, R.W. An Embedded Multi-Agent Systems Based Industrial Wireless Sensor Network. Sensors 2017, 17, 2112.

- Cao, D.; Zhao, J.; Hu, W.; Ding, F.; Huang, Q.; Chen, Z.; Blaabjerg, F. Data-Driven Multi-Agent Deep Reinforcement Learning for Distribution System Decentralized Voltage Control with High Penetration of PVs. IEEE Trans. Smart Grid 2021, 12, 4137–4150.

- Jiménez, A.C.; García-Díaz, V.; Bolaños, S. A Decentralized Framework for Multi-Agent Robotic Systems. Sensors 2018, 18, 417.

- Chen, T.; Yuan, J.; Yang, H. Event-triggered adaptive neural network backstepping sliding mode control of fractional-order multi-agent systems with input delay. J. Vib. Control 2021, 10775463211036827.

- Yuan, J.; Chen, T. Observer-based adaptive neural network dynamic surface bipartite containment control for switched fractional order multi-agent systems. Int. J. Adapt. Control Signal Process. 2022, 36, 1619–1646.

- Yuan, J.; Chen, T. Switched Fractional Order Multiagent Systems Containment Control with Event-Triggered Mechanism and Input Quantization. Fractal Fract. 2022, 6, 77.

- Zilun, H.; Jianying, Y. Distributed optimal formation algorithm for multi-satellites system with time-varying performance function. Int. J. Control 2018, 93, 1015–1026.

- Chen, T.; Shan, J. Continuous constrained attitude regulation of multiple spacecraft on SO3. Aerosp. Sci. Technol. 2020, 99, 105769.1–105769.15.

- Chen, T.; Shan, J. Distributed spacecraft attitude tracking and synchronization under directed graphs. Aerosp. Sci. Technol. 2021, 109, 106432.

- Wang, X.; Li, S.; Wang, G. Distributed optimization for disturbed second-order multiagent systems based on active antidisturbance control. IEEE Trans. Neural Netw. Learn. Syst. 2019, 31, 2104–2117.

- Guo, G.; Kang, J. Distributed Optimization of Multiagent Systems Against Unmatched Disturbances: A Hierarchical Integral Control Framework. IEEE Trans. Syst. Man Cybern. Syst. 2022, 52, 3556–3567.

- Pilloni, A.; Franceschelli, M.; Pisano, A.; Usai, E. Sliding Mode-Based Robustification of Consensus and Distributed Optimization Control Protocols. IEEE Trans. Autom. Control 2021, 66, 1207–1214.

- Liu, Y.; Yang, G.H. Distributed Robust Adaptive Optimization for Nonlinear Multiagent Systems. IEEE Trans. Syst. Man Cybern. Syst. 2021, 51, 1046–1053.

- Feng, Z.; Hu, G.; Cassandras, C.G. Finite-time distributed convex optimization for continuous-time multiagent systems with disturbance rejection. IEEE Trans. Control Netw. Syst. 2019, 7, 686–698.

- Qin, Z.; Liu, T.; Jiang, Z.P. Adaptive backstepping for distributed optimization. Automatica 2022, 141, 110304.

- Chen, X.; Zhao, L.; Yu, J. Adaptive neural finite-time bipartite consensus tracking of nonstrict feedback nonlinear coopetition multi-agent systems with input saturation. Neurocomputing 2020, 397, 168–178.

- Shen, Q.; Shi, P. Distributed command filtered backstepping consensus tracking control of nonlinear multiple-agent systems in strict-feedback form. Automatica 2015, 53, 120–124.

- Lin, Z.; Liu, Z.; Zhang, Y.; Chen, C. Command filtered neural control of multi-agent systems with input quantization and unknown control direction. Neurocomputing 2021, 430, 47–57.

- Liu, Y.; Zhang, H.; Li, Q.; Liang, H. Practical fixed-time bipartite consensus control for nonlinear multi-agent systems: A barrier Lyapunov function-based approach. Inf. Sci. 2022, 607, 519–536.

- Li, P.; Wu, X.; Chen, X.; Qiu, J. Distributed adaptive finite-time tracking for multi-agent systems and its application. Neurocomputing 2022, 481, 46–54.

- Zhao, L.; Yu, J.; Lin, C. Command filter based adaptive fuzzy bipartite output consensus tracking of nonlinear coopetition multi-agent systems with input saturation. ISA Trans. 2018, 80, 187–194.

- Mousavi, A.; Markazi, A.H.; Khanmirza, E. Adaptive fuzzy sliding-mode consensus control of nonlinear under-actuated agents in a near-optimal reinforcement learning framework. J. Frankl. Inst. 2022, 359, 4804–4841.

- Yoo, S.J. Distributed Consensus Tracking for Multiple Uncertain Nonlinear Strict-Feedback Systems Under a Directed Graph. IEEE Trans. Neural Netw. Learn. Syst. 2013, 24, 666–672.

- Distributed adaptive coordination control for uncertain nonlinear multi-agent systems with dead-zone input. J. Frankl. Inst. 2016, 353, 2270–2289.

- Wu, Z.; Zhang, T.; Xia, X.; Hua, Y. Finite-time adaptive neural command filtered control for non-strict feedback uncertain multi-agent systems including prescribed performance and input nonlinearities. Appl. Math. Comput. 2022, 421, 126953.

- Qu, F.; Tong, S. Observer-based fuzzy adaptive quantized control for uncertain nonlinear multiagent systems. Int. J. Adapt. Control Signal Process. 2019, 33, 567–585.

- Li, Y.M.; Li, K.; Tong, S. An Observer-Based Fuzzy Adaptive Consensus Control Method for Nonlinear Multi-Agent Systems. IEEE Trans. Fuzzy Syst. 2022, 30, 4667–4678.

- Wang, W.; Tong, S. Observer-Based Adaptive Fuzzy Containment Control for Multiple Uncertain Nonlinear Systems. IEEE Trans. Fuzzy Syst. 2019, 27, 2079–2089.

- Li, Y.; Qu, F.; Tong, S. Observer-Based Fuzzy Adaptive Finite-Time Containment Control of Nonlinear Multiagent Systems with Input Delay. IEEE Trans. Cybern. 2021, 51, 126–137.

- Wu, Y.; Ma, H.; Chen, M.; Li, H. Observer-Based Fixed-Time Adaptive Fuzzy Bipartite Containment Control for Multiagent Systems with Unknown Hysteresis. IEEE Trans. Fuzzy Syst. 2022, 30, 1302–1312.

- Chen, C.; Ren, C.E.; Tao, D. Fuzzy Observed-Based Adaptive Consensus Tracking Control for Second-Order Multiagent Systems with Heterogeneous Nonlinear Dynamics. IEEE Trans. Fuzzy Syst. 2016, 24, 906–915.

- Zhao, L.; Yu, J.; Lin, C. Distributed adaptive output consensus tracking of nonlinear multi-agent systems via state observer and command filtered backstepping. Inf. Sci. 2019, 478, 355–374.

- Gao, Z.; Zhang, H.; Wang, Y.; Mu, Y. Time-varying output formation-containment control for homogeneous/heterogeneous descriptor fractional-order multi-agent systems. Inf. Sci. 2021, 567, 146–166.

- Lin, W.; Peng, S.; Fu, Z.; Chen, T.; Gu, Z. Consensus of fractional-order multi-agent systems via event-triggered pinning impulsive control. Neurocomputing 2022, 494, 409–417.

- Zhang, X.; Chen, S.; Zhang, J.X. Adaptive sliding mode consensus control based on neural network for singular fractional order multi-agent systems. Appl. Math. Comput. 2022, 434, 127442.

- Gong, P.; Lan, W.; Han, Q.L. Robust adaptive fault-tolerant consensus control for uncertain nonlinear fractional-order multi-agent systems with directed topologies. Automatica 2020, 117, 109011.

- Cheng, Y.; Hu, T.; Li, Y.; Zhong, S. Consensus of fractional-order multi-agent systems with uncertain topological structure: A Takagi-Sugeno fuzzy event-triggered control strategy. Fuzzy Sets Syst. 2021, 416, 64–85.

- Shahvali, M.; Azarbahram, A.; Naghibi-Sistani, M.B.; Askari, J. Bipartite consensus control for fractional-order nonlinear multi-agent systems: An output constraint approach. Neurocomputing 2020, 397, 212–223.

- Zhu, W.; Li, W.; Zhou, P.; Yang, C. Consensus of fractional-order multi-agent systems with linear models via observer-type protocol. Neurocomputing 2017, 230, 60–65.

- Chen, S.; An, Q.; Zhou, H.; Su, H. Observer-based consensus for fractional-order multi-agent systems with positive constraint. Neurocomputing 2022, 501, 489–498.

- Li, Y.; Chen, Y.; Podlubny, I. Mittag–Leffler stability of fractional order nonlinear dynamic systems. Automatica 2009, 45, 1965–1969.

- Podlubny, I. An introduction to fractional derivatives, fractional differential equations, to methods of their solution and some of their applications. Math. Sci. Eng. 1999, 198, 340.

- Duarte-Mermoud, M.A.; Aguila-Camacho, N.; Gallegos, J.A.; Castro-Linares, R. Using general quadratic Lyapunov functions to prove Lyapunov uniform stability for fractional order systems. Commun. Nonlinear Sci. Numer. Simul. 2015, 22, 650–659.

- Wang, Y.; Xie, L.; De Souza, C.E. Robust control of a class of uncertain nonlinear systems. Syst. Control Lett. 1992, 19, 139–149.

- Li, Z.; Duan, Z. Cooperative Control of Multi-Agent Systems: A Consensus Region Approach; CRC Press: Boca Raton, FL, USA, 2017.

- Zou, Y.; Zheng, Z. A robust adaptive RBFNN augmenting backstepping control approach for a model-scaled helicopter. IEEE Trans. Control Syst. Technol. 2015, 23, 2344–2352.

- Huang, J.T. Global tracking control of strict-feedback systems using neural networks. IEEE Trans. Neural Netw. Learn. Syst. 2012, 23, 1714–1725.

- Wang, D.; Huang, J. Neural network-based adaptive dynamic surface control for a class of uncertain nonlinear systems in strict-feedback form. IEEE Trans. Neural Netw. 2005, 16, 195–202.

- Yu, J.; Shi, P.; Dong, W.; Chen, B.; Lin, C. Neural network-based adaptive dynamic surface control for permanent magnet synchronous motors. IEEE Trans. Neural Netw. Learn. Syst. 2014, 26, 640–645.

- Bernstein, A.; Dall’Anese, E.; Simonetto, A. Online primal-dual methods with measurement feedback for time-varying convex optimization. IEEE Trans. Signal Process. 2019, 67, 1978–1991.

- Huang, B.; Zou, Y.; Meng, Z.; Ren, W. Distributed time-varying convex optimization for a class of nonlinear multiagent systems. IEEE Trans. Autom. Control 2019, 65, 801–808.

- Yi, X.; Li, X.; Xie, L.; Johansson, K.H. Distributed online convex optimization with time-varying coupled inequality constraints. IEEE Trans. Signal Process. 2020, 68, 731–746.

- Hu, Z.; Yang, J. Distributed finite-time optimization for second order continuous-time multiple agents systems with time-varying cost function. Neurocomputing 2018, 287, 173–184.

- Deepika, D.; Kaur, S.; Narayan, S. Uncertainty and disturbance estimator based robust synchronization for a class of uncertain fractional chaotic system via fractional order sliding mode control. Chaos Solitons Fractals 2018, 115, 196–203.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yang, X.; Zhao, W.; Yuan, J.; Chen, T.; Zhang, C.; Wang, L. Distributed Optimization for Fractional-Order Multi-Agent Systems Based on Adaptive Backstepping Dynamic Surface Control Technology. Fractal Fract. 2022, 6, 642. https://doi.org/10.3390/fractalfract6110642
