Federated Learning with Adversarial Optimisation for Secure and Efficient 5G Edge Computing Networks

Abstract

1. Introduction

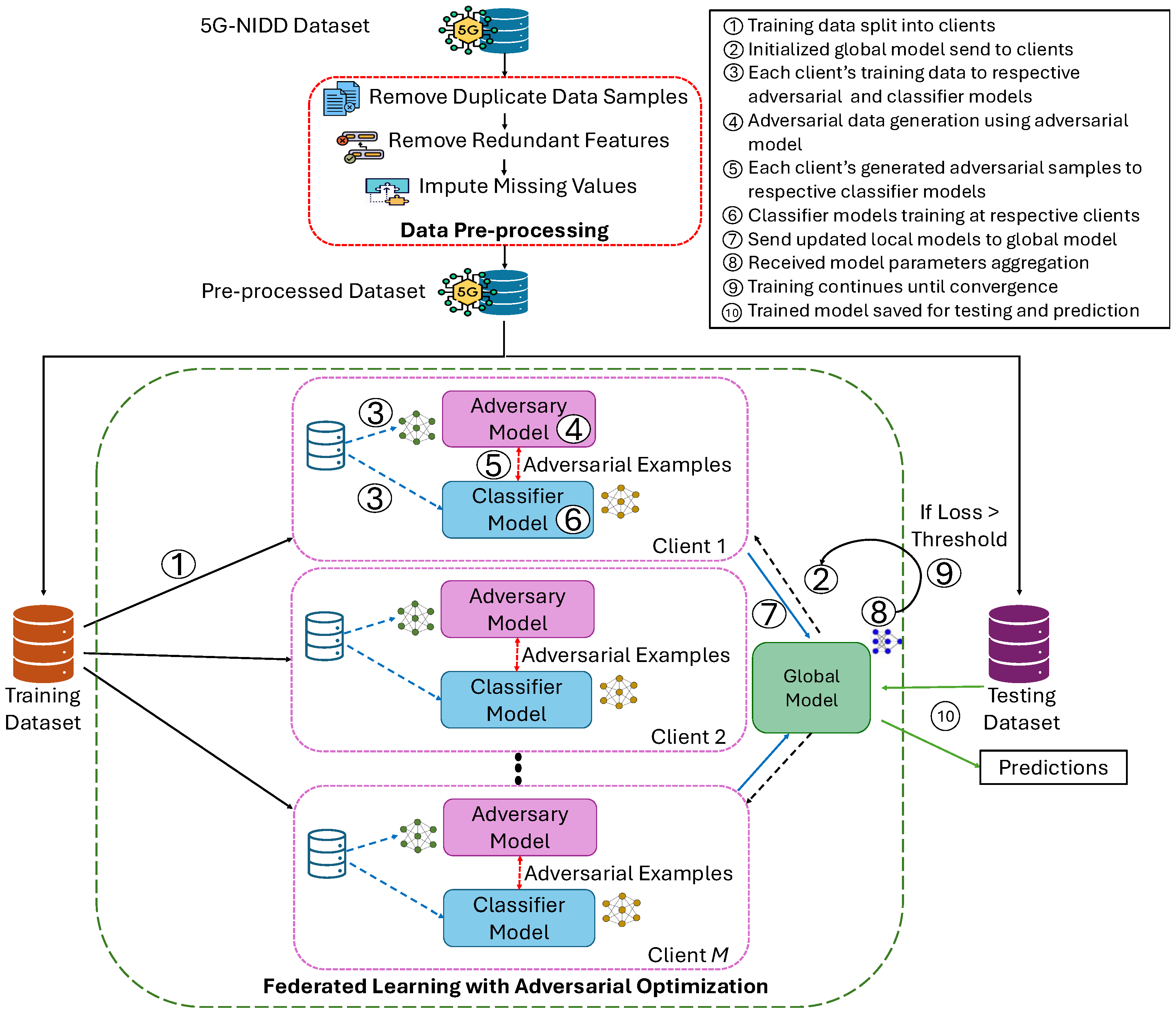

- This paper proposes a novel federated learning (FL) framework with adversarial optimisation to strengthen the security of 5G edge computing networks. By jointly training a classifier model and an adversary model, the proposed algorithm improves the robustness of the FL model against adversarial attacks, supporting more secure and private FL training across edge devices.

- To train the proposed framework, the adversary model uses the Fast Gradient Sign Method (FGSM) to iteratively generate stronger perturbations based on the classifier model’s responses. This improves the resilience of the model in privacy-sensitive 5G edge computing networks.

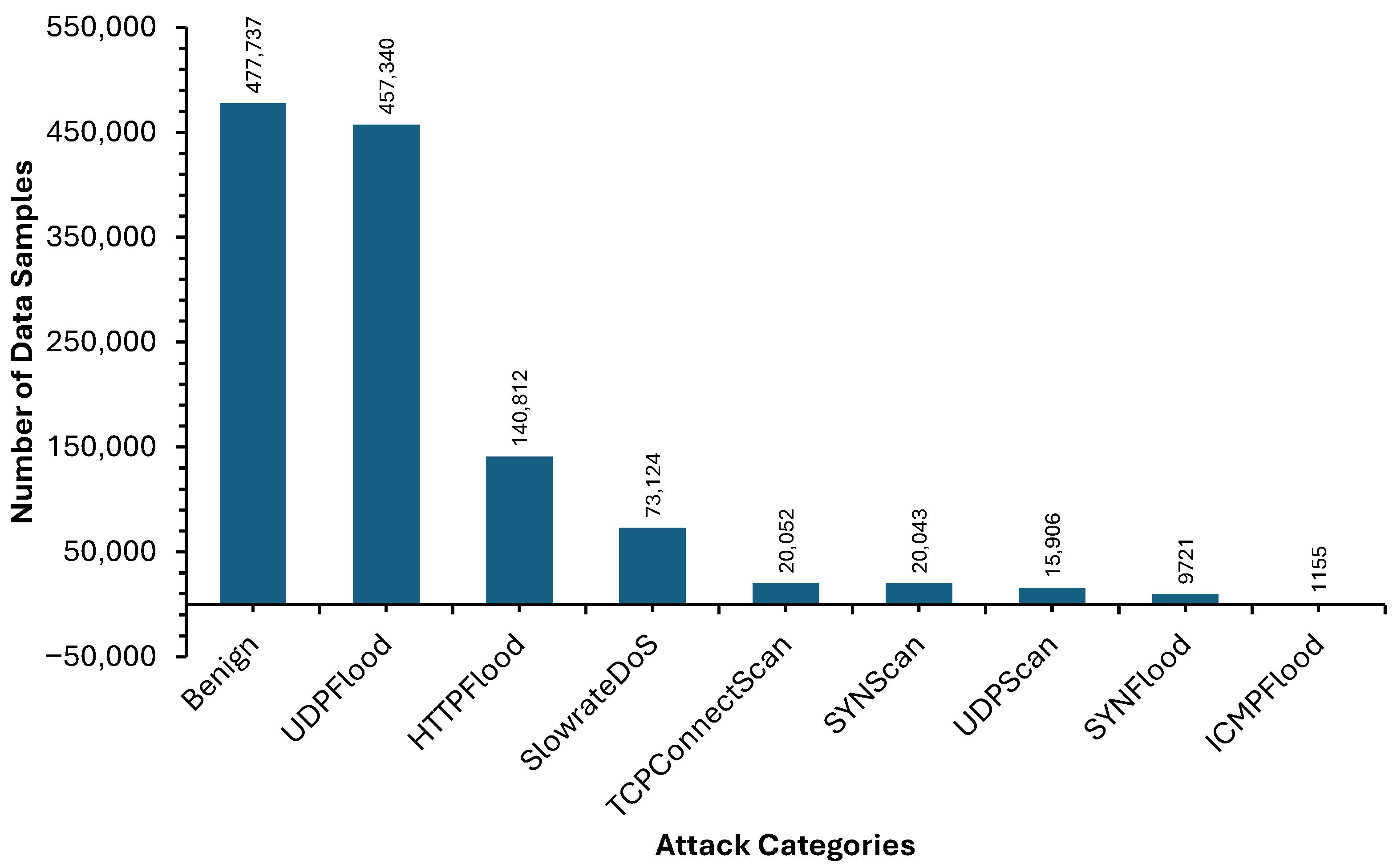

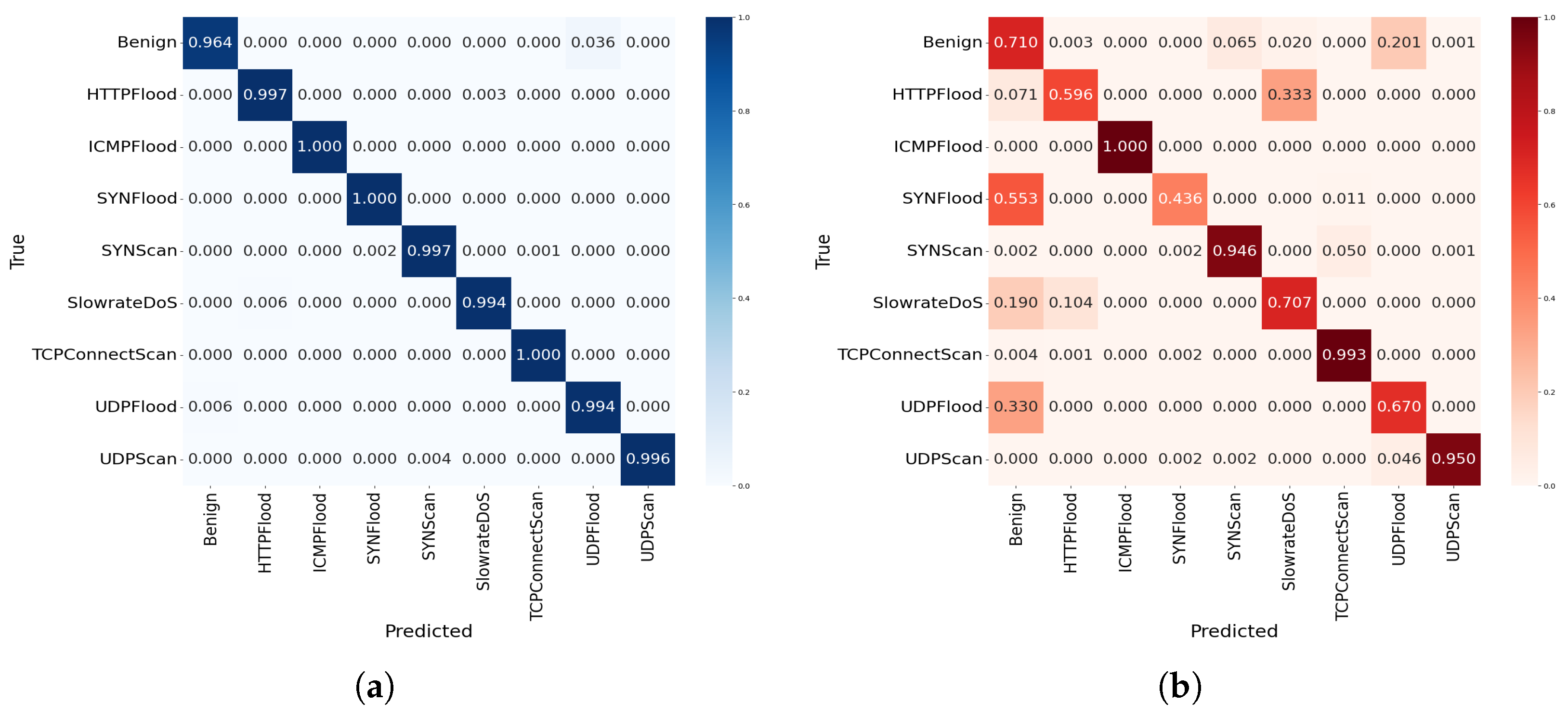

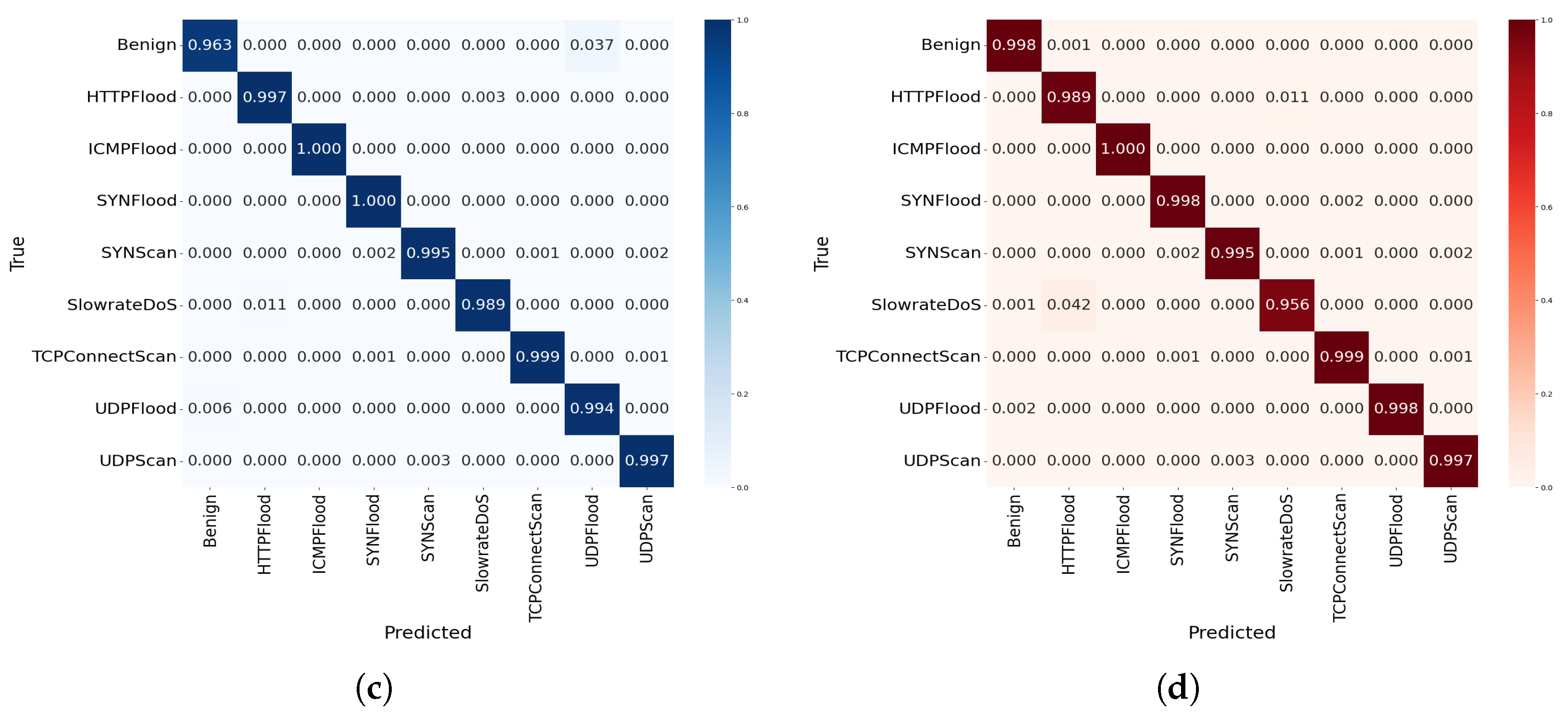

- For performance evaluation, extensive simulations have been performed on the 5G-Network Intrusion Detection Dataset (5G-NIDD) [10], which validate the adaptability of the proposed algorithm to 5G edge computing networks, including 5G-enabled IoT. A comprehensive experimental analysis offers insights into the proposed FL with adversarial optimisation algorithm in terms of accuracy, scalability, computational efficiency and time efficiency.

- To demonstrate generalisability beyond 5G edge computing, the proposed model is further validated on the IDS-IoT-2024 [28] and CICIDS2017 [29] datasets. The results indicate that the proposed method generalises across diverse FL-based intrusion detection domains.
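The FGSM step referred to above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes a binary logistic-regression classifier (so the input gradient of the binary cross-entropy loss has the closed form (p - y)w), features min-max scaled to [0, 1], and hypothetical toy weights and data. Each call moves every feature by at most ε in the direction that increases the loss, and the loop evaluates accuracy over perturbation budgets in the 0.01 to 0.3 range used in the evaluation tables.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def fgsm(x, y, w, b, eps):
    """FGSM: x_adv = clip(x + eps * sign(grad_x L(x, y)), 0, 1).

    Assumes a binary logistic-regression classifier p = sigmoid(x @ w + b)
    with binary cross-entropy loss and features min-max scaled to [0, 1].
    """
    p = sigmoid(x @ w + b)                  # predicted probability of class 1
    grad_x = (p - y)[:, None] * w[None, :]  # dL/dx of binary cross-entropy
    x_adv = x + eps * np.sign(grad_x)       # one L-infinity step of size eps
    return np.clip(x_adv, 0.0, 1.0)         # keep features in the scaled range


def accuracy(x, y, w, b):
    # Predict class 1 when the logit is positive (equivalent to p > 0.5).
    return float(np.mean((x @ w + b > 0) == (y == 1)))


# Toy classifier and data (hypothetical; not taken from the paper).
rng = np.random.default_rng(0)
w, b = np.array([3.0, -3.0, 2.0]), -1.0
x = rng.uniform(size=(500, 3))
y = (x @ w + b > 0).astype(float)  # labels the classifier gets right on clean data

# Accuracy on FGSM-perturbed inputs for a range of perturbation budgets.
results = {eps: accuracy(fgsm(x, y, w, b, eps), y, w, b)
           for eps in (0.0, 0.01, 0.05, 0.1, 0.2, 0.3)}
for eps, acc in results.items():
    print(f"eps={eps:<4} accuracy={acc:.3f}")
```

Because this toy model is linear, a single FGSM step is already the worst-case perturbation inside the clipped ε-box, so accuracy can only fall as ε grows. For the nonlinear classifiers typical of intrusion detection this no longer holds, which is why repeating the step iteratively, as the adversary model above does, generally produces stronger attacks.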

2. Related Work

3. System Model and Problem Formulation

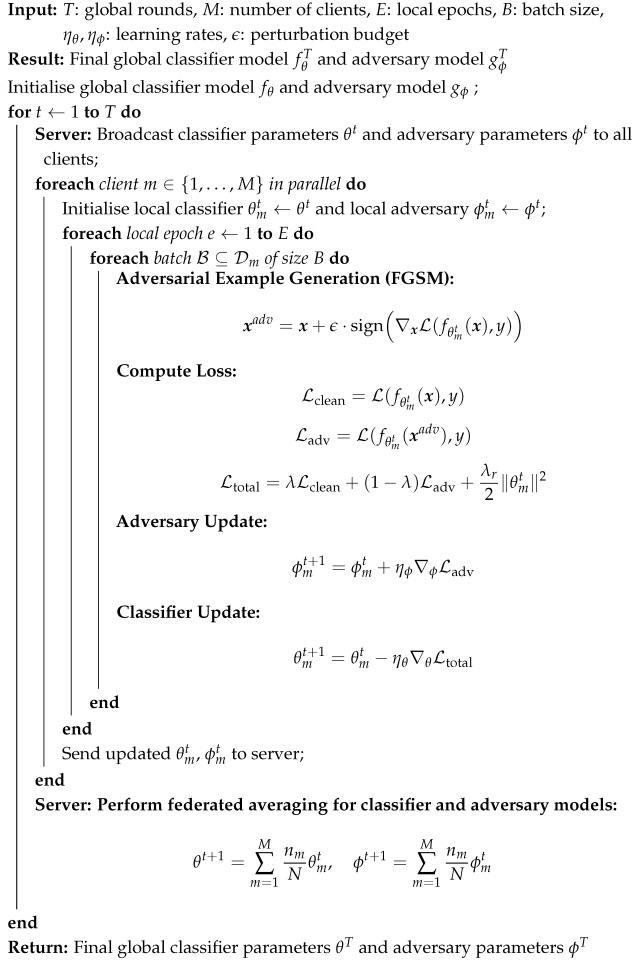

4. Proposed Federated Learning with Adversarial Optimisation Algorithm

Algorithm 1: Federated Learning with Adversarial Optimisation
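A rough, hypothetical sketch of one training round of federated learning with adversarial optimisation, as named in Algorithm 1: each client regenerates FGSM examples against its current local model, trains on clean and adversarial samples together, and the server combines the client models with sample-weighted averaging in the style of FedAvg [12]. The logistic-regression model, learning rate, epoch count, ε = 0.1 and the synthetic client data are all assumptions made for illustration, not the paper's settings.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def fgsm(x, y, w, b, eps):
    # Single-step FGSM against a logistic-regression classifier (analytic input gradient).
    grad_x = (sigmoid(x @ w + b) - y)[:, None] * w[None, :]
    return np.clip(x + eps * np.sign(grad_x), 0.0, 1.0)


def local_adversarial_update(w, b, x, y, eps=0.1, lr=0.5, epochs=20):
    """Hypothetical client step: full-batch gradient descent on clean + FGSM examples."""
    w = w.copy()
    for _ in range(epochs):
        x_adv = fgsm(x, y, w, b, eps)   # attacks regenerated against the current model
        xs = np.vstack([x, x_adv])
        ys = np.concatenate([y, y])
        err = sigmoid(xs @ w + b) - ys  # dL/dz of binary cross-entropy
        w -= lr * xs.T @ err / len(ys)
        b -= lr * err.mean()
    return w, b


def fedavg(updates, sizes):
    """Server step (assumed FedAvg-style): average parameters weighted by sample counts."""
    total = float(sum(sizes))
    w = sum(n * wk for (wk, _), n in zip(updates, sizes)) / total
    b = sum(n * bk for (_, bk), n in zip(updates, sizes)) / total
    return w, b


def federated_round(w, b, clients, eps=0.1):
    """One round: broadcast (w, b), train locally with adversarial examples, aggregate."""
    updates = [local_adversarial_update(w, b, x, y, eps) for x, y in clients]
    return fedavg(updates, [len(y) for _, y in clients])


# Toy run: three clients holding different amounts of synthetic data (hypothetical).
rng = np.random.default_rng(1)
true_w, true_b = np.array([3.0, -3.0, 2.0]), -1.0
clients = []
for n in (50, 80, 120):
    x = rng.uniform(size=(n, 3))
    clients.append((x, (x @ true_w + true_b > 0).astype(float)))

w, b = np.zeros(3), 0.0
for _ in range(10):
    w, b = federated_round(w, b, clients)

x_all = np.vstack([x for x, _ in clients])
y_all = np.concatenate([y for _, y in clients])
clean_acc = float(np.mean((x_all @ w + b > 0) == (y_all == 1)))
print(f"clean accuracy after 10 rounds: {clean_acc:.3f}")
```

Regenerating the FGSM examples inside every local epoch keeps the attacks matched to the model being trained, which is meant to mirror the iterative interaction between the adversary and classifier models; a fixed, precomputed adversarial set would quickly become stale as the weights change.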

4.1. Dataset Description and Pre-Processing

4.2. Training of Federated Learning with Adversarial Optimisation Model

4.3. Testing of the Trained Federated Learning with Adversarial Optimisation Model

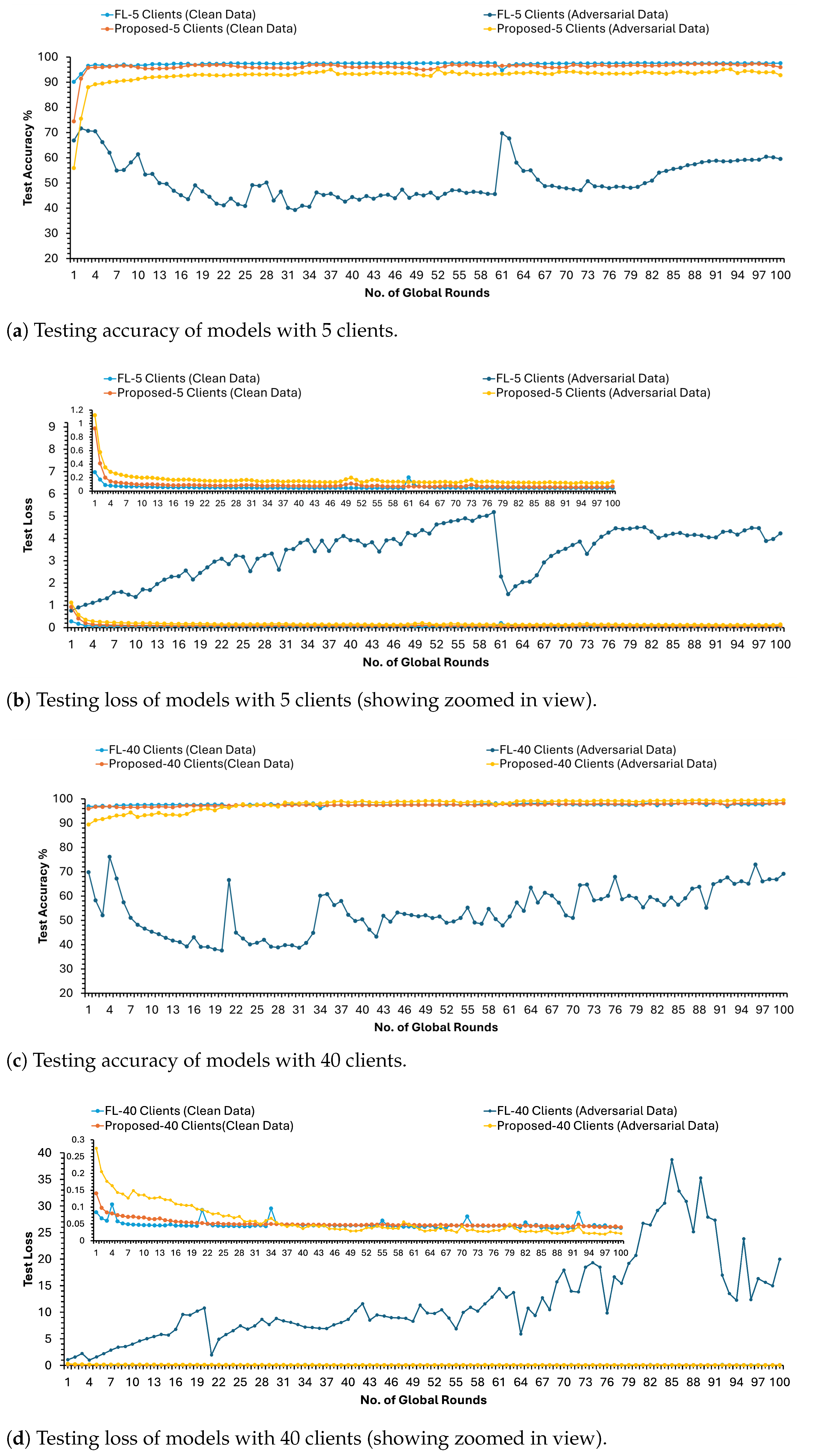

5. Performance Evaluation

Implementation of Federated Learning with Adversarial Optimisation Model

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| 5G-NIDD | 5G-Network Intrusion Detection Dataset |
| FGSM | Fast Gradient Sign Method |
| FL | Federated Learning |
| FLOPS | Floating-point Operations Per Second |
| GPU | Graphics Processing Unit |
| IID | Independent and Identically Distributed |
| IIoT | Industrial Internet of Things |
| PGD | Projected Gradient Descent |
| C&W | Carlini and Wagner |

References

1. Hassan, N.; Yau, K.L.A.; Wu, C. Edge computing in 5G: A review. IEEE Access 2019, 7, 127276–127289.

2. Lee, J.; Solat, F.; Kim, T.Y.; Poor, H.V. Federated learning-empowered mobile network management for 5G and beyond networks: From access to core. IEEE Commun. Surv. Tutor. 2024, 26, 2176–2212.

3. Nowroozi, E.; Haider, I.; Taheri, R.; Conti, M. Federated learning under attack: Exposing vulnerabilities through data poisoning attacks in computer networks. IEEE Trans. Netw. Serv. Manag. 2025, 22, 822–831.

4. Han, G.; Ma, W.; Zhang, Y.; Liu, Y.; Liu, S. BSFL: A blockchain-oriented secure federated learning scheme for 5G. J. Inf. Secur. Appl. 2025, 89, 103983.

5. Feng, Y.; Guo, Y.; Hou, Y.; Wu, Y.; Lao, M.; Yu, T.; Liu, G. A survey of security threats in federated learning. Complex Intell. Syst. 2025, 11, 1–26.

6. Rao, B.; Zhang, J.; Wu, D.; Zhu, C.; Sun, X.; Chen, B. Privacy inference attack and defense in centralized and federated learning: A comprehensive survey. IEEE Trans. Artif. Intell. 2024, 6, 333–353.

7. Tahanian, E.; Amouei, M.; Fateh, H.; Rezvani, M. A Game-theoretic Approach for Robust Federated Learning. Int. J. Eng. Trans. A Basics 2021, 34, 832–842.

8. Guo, Y.; Qin, Z.; Tao, X.; Dobre, O.A. Federated Generative-Adversarial-Network-Enabled Channel Estimation. Intell. Comput. 2024, 3, 0066.

9. Grierson, S.; Thomson, C.; Papadopoulos, P.; Buchanan, B. Min-max training: Adversarially robust learning models for network intrusion detection systems. In Proceedings of the 2021 14th International Conference on Security of Information and Networks (SIN), Virtual, 15–17 December 2021; IEEE: New York, NY, USA, 2021; Volume 1, pp. 1–8.

10. Samarakoon, S.; Siriwardhana, Y.; Porambage, P.; Liyanage, M.; Chang, S.Y.; Kim, J.; Kim, J.; Ylianttila, M. 5G-NIDD: A Comprehensive Network Intrusion Detection Dataset Generated over 5G Wireless Network. arXiv 2022, arXiv:2212.01298.

11. Bonawitz, K.; Eichner, H.; Grieskamp, W.; Huba, D.; Ingerman, A.; Ivanov, V.; Kiddon, C.; Konečný, J.; Mazzocchi, S.; McMahan, B.; et al. Towards federated learning at scale: System design. Proc. Mach. Learn. Syst. 2019, 1, 374–388.

12. McMahan, B.; Moore, E.; Ramage, D.; Hampson, S.; y Arcas, B.A. Communication-efficient learning of deep networks from decentralized data. In Proceedings of the International Conference on Artificial Intelligence and Statistics, Fort Lauderdale, FL, USA, 20–22 April 2017; pp. 1273–1282.

13. Bagdasaryan, E.; Veit, A.; Hua, Y.; Estrin, D.; Shmatikov, V. How to backdoor federated learning. In Proceedings of the International Conference on Artificial Intelligence and Statistics, Online, 26–28 August 2020; pp. 2938–2948.

14. Lyu, L.; Yu, H.; Yang, Q. Threats to federated learning: A survey. arXiv 2020, arXiv:2003.02133.

15. Abadi, M.; Chu, A.; Goodfellow, I.; McMahan, H.B.; Mironov, I.; Talwar, K.; Zhang, L. Deep learning with differential privacy. In Proceedings of the 2016 ACM SIGSAC Conference on Computer and Communications Security, Vienna, Austria, 24–28 October 2016; pp. 308–318.

16. Bonawitz, K.; Ivanov, V.; Kreuter, B.; Marcedone, A.; McMahan, H.B.; Patel, S.; Ramage, D.; Segal, A.; Seth, K. Practical secure aggregation for privacy-preserving machine learning. In Proceedings of the 2017 ACM SIGSAC Conference on Computer and Communications Security, Dallas, TX, USA, 30 October–3 November 2017; pp. 1175–1191.

17. Phong, L.T.; Aono, Y.; Hayashi, T.; Wang, L.; Moriai, S. Privacy-preserving deep learning via additively homomorphic encryption. IEEE Trans. Inf. Forensics Secur. 2017, 13, 1333–1345.

18. Nasr, M.; Shokri, R.; Houmansadr, A. Machine learning with membership privacy using adversarial regularization. In Proceedings of the 2018 ACM SIGSAC Conference on Computer and Communications Security, Toronto, ON, Canada, 15–19 October 2018; pp. 634–646.

19. Zhang, J.; Li, B.; Chen, C.; Lyu, L.; Wu, S.; Ding, S.; Wu, C. Delving into the adversarial robustness of federated learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; Volume 37, pp. 11245–11253.

20. Nguyen, T.D.; Nguyen, T.; Le Nguyen, P.; Pham, H.H.; Doan, K.D.; Wong, K.S. Backdoor attacks and defenses in federated learning: Survey, challenges and future research directions. Eng. Appl. Artif. Intell. 2024, 127, 107166.

21. Wang, X.; Garg, S.; Lin, H.; Hu, J.; Kaddoum, G.; Piran, M.J.; Hossain, M.S. Toward accurate anomaly detection in Industrial Internet of Things using hierarchical federated learning. IEEE Internet Things J. 2021, 9, 7110–7119.

22. Wang, X.; Hu, J.; Lin, H.; Garg, S.; Kaddoum, G.; Piran, M.J.; Hossain, M.S. QoS and privacy-aware routing for 5G-enabled industrial Internet of Things: A federated reinforcement learning approach. IEEE Trans. Ind. Inform. 2021, 18, 4189–4197.

23. Wang, X.; Hu, J.; Lin, H.; Liu, W.; Moon, H.; Piran, M.J. Federated learning-empowered disease diagnosis mechanism in the Internet of Medical Things: From the privacy-preservation perspective. IEEE Trans. Ind. Inform. 2022, 19, 7905–7913.

24. McCarthy, A.; Ghadafi, E.; Andriotis, P.; Legg, P. Defending against adversarial machine learning attacks using hierarchical learning: A case study on network traffic attack classification. J. Inf. Secur. Appl. 2023, 72, 103398.

25. McCarthy, A.; Ghadafi, E.; Andriotis, P.; Legg, P. Functionality-preserving adversarial machine learning for robust classification in cybersecurity and intrusion detection domains: A survey. J. Cybersecur. Priv. 2022, 2, 154–190.

26. White, J.; Legg, P. Evaluating Data Distribution Strategies in Federated Learning: A Trade-Off Analysis Between Privacy and Performance for IoT Security. In International Conference on Cyber Security, Privacy in Communication Networks; Springer: Singapore, 2023; pp. 17–37.

27. White, J.; Legg, P. Federated learning: Data privacy and cyber security in edge-based machine learning. In Data Protection in a Post-Pandemic Society: Laws, Regulations, Best Practices and Recent Solutions; Springer: Cham, Switzerland, 2023; pp. 169–193.

28. Koppula, M.; Leo Joseph, L.M.I. A Real-World Dataset “IDSIoT2024” for Machine Learning/Deep Learning Based Cyber Attack Detection System for IoT Architecture. In Proceedings of the 2025 3rd International Conference on Intelligent Data Communication Technologies and Internet of Things (IDCIoT), Bengaluru, India, 5–7 February 2025; pp. 1757–1764.

29. Sharafaldin, I.; Lashkari, A.H.; Ghorbani, A.A.; Ribeiro, E.A. CICIDS2017 (Cleaned and Preprocessed Version). Dataset. Available online: https://www.kaggle.com/datasets/ericanacletoribeiro/cicids2017-cleaned-and-preprocessed (accessed on 31 July 2025).

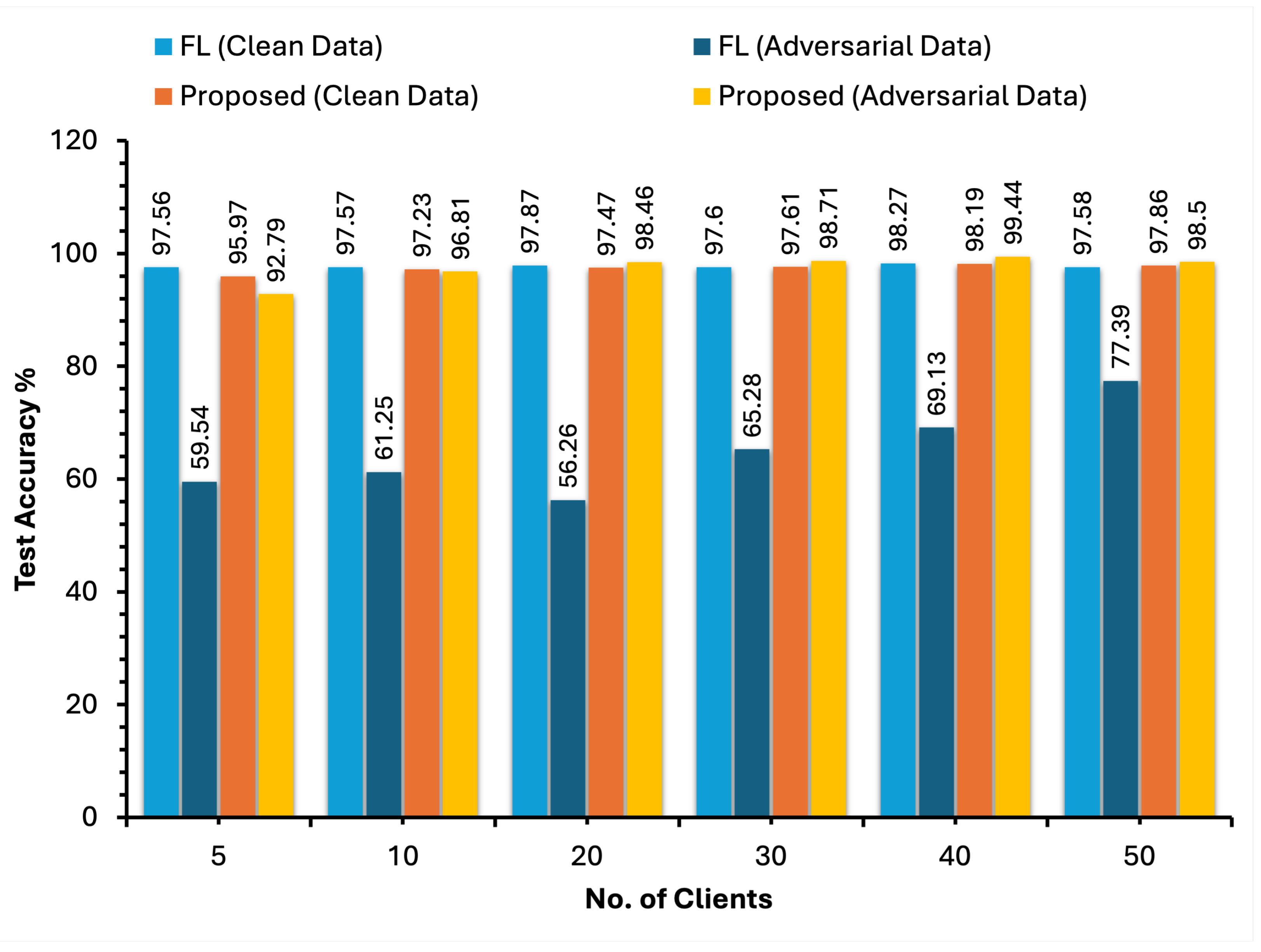

| Clients | Strategy | Precision (Clean) | Recall (Clean) | F1 (Clean) | Precision (Adversarial) | Recall (Adversarial) | F1 (Adversarial) |
|---|---|---|---|---|---|---|---|
| 5 | FL | 98% | 98% | 98% | 62% | 60% | 57% |
| 5 | Proposed | 96% | 96% | 96% | 93% | 93% | 93% |
| 10 | FL | 98% | 98% | 98% | 67% | 61% | 63% |
| 10 | Proposed | 97% | 97% | 97% | 97% | 97% | 97% |
| 20 | FL | 98% | 98% | 98% | 60% | 56% | 56% |
| 20 | Proposed | 97% | 97% | 97% | 98% | 98% | 98% |
| 30 | FL | 98% | 98% | 98% | 66% | 65% | 64% |
| 30 | Proposed | 98% | 98% | 98% | 99% | 99% | 99% |
| 40 | FL | 98% | 98% | 98% | 72% | 69% | 70% |
| 40 | Proposed | 98% | 98% | 98% | 99% | 99% | 99% |

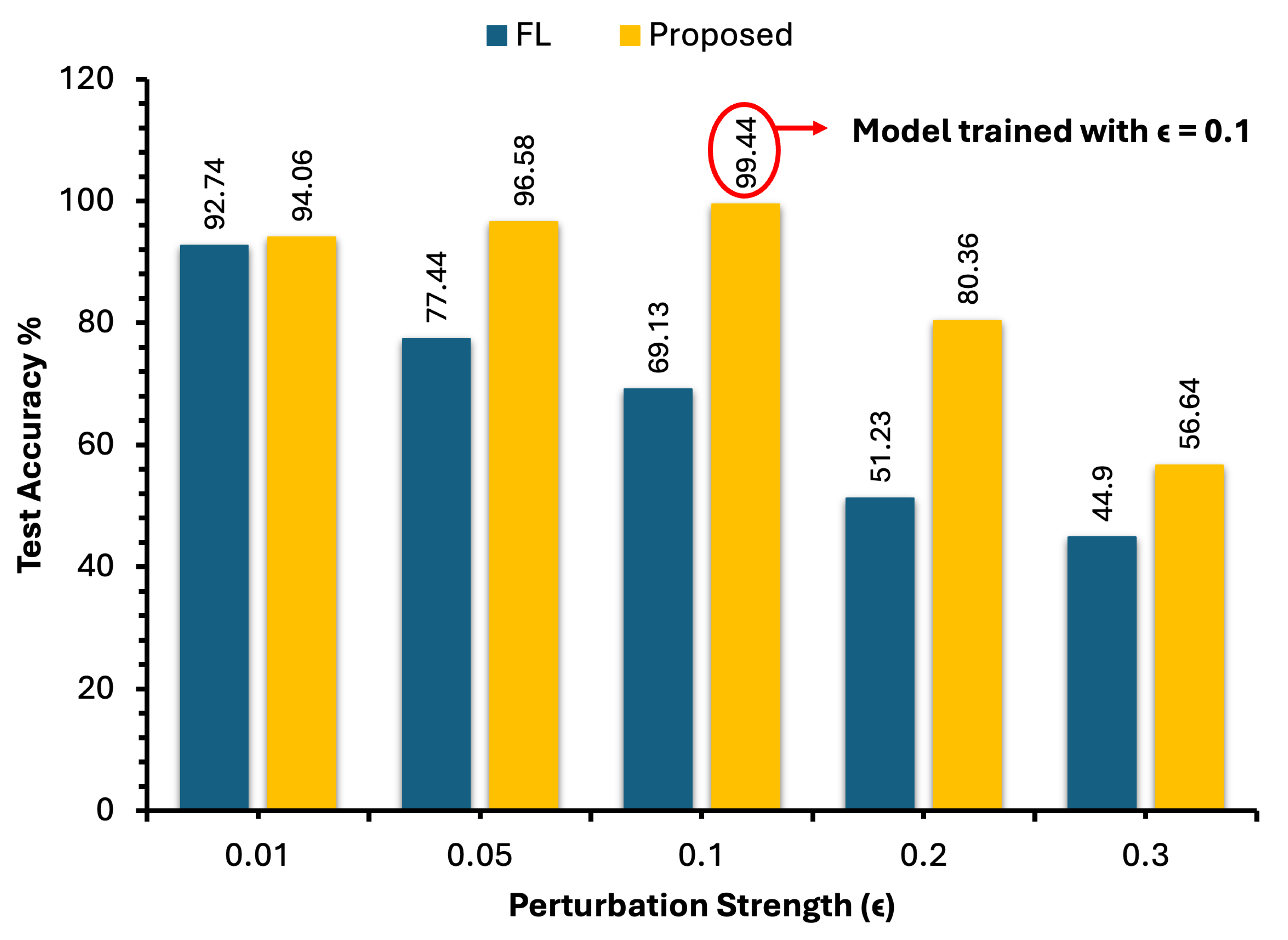

| Perturbation (ε) | Strategy | Precision | Recall | F1 |
|---|---|---|---|---|
| 0.01 | FL | 93% | 93% | 93% |
| 0.01 | Proposed | 94% | 94% | 94% |
| 0.05 | FL | 78% | 77% | 78% |
| 0.05 | Proposed | 97% | 97% | 97% |
| 0.1 | FL | 72% | 69% | 70% |
| 0.1 | Proposed | 99% | 99% | 99% |
| 0.2 | FL | 54% | 51% | 52% |
| 0.2 | Proposed | 80% | 81% | 80% |
| 0.3 | FL | 48% | 45% | 46% |
| 0.3 | Proposed | 61% | 57% | 54% |

| Performance Parameter | Test Data | Algorithm | 5G-NIDD [10] | IDS-IoT-2024 [28] | CICIDS2017 [29] |
|---|---|---|---|---|---|
| Accuracy | Clean | Proposed | 97.23% | 98.63% | 97.95% |
| Accuracy | Clean | Standard FL | 97.57% | 99.25% | 98.8% |
| Accuracy | | Proposed | 96.19% | 98.33% | 97.17% |
| Accuracy | | Standard FL | 95.22% | 97.66% | 90.83% |
| Accuracy | | Proposed | 96.04% | 98.13% | 97.12% |
| Accuracy | | Standard FL | 78.99% | 56.44% | 79.05% |
| Accuracy | | Proposed | 96.81% | 97.77% | 96.81% |
| Accuracy | | Standard FL | 61.25% | 45.88% | 60.86% |
| Accuracy | | Proposed | 78.55% | 93.68% | 93.01% |
| Accuracy | | Standard FL | 49.77% | 34.56% | 51.14% |
| Accuracy | | Proposed | 50.10% | 64.84% | 85.82% |
| Accuracy | | Standard FL | 43.89% | 28.52% | 47.09% |
| Precision | Clean | Proposed | 97% | 99% | 98% |
| Precision | Clean | Standard FL | 98% | 99% | 99% |
| Precision | | Proposed | 96% | 98% | 97% |
| Precision | | Standard FL | 95% | 98% | 90% |
| Precision | | Proposed | 96% | 98% | 97% |
| Precision | | Standard FL | 79% | 64% | 85% |
| Precision | | Proposed | 97% | 98% | 97% |
| Precision | | Standard FL | 67% | 56% | 80% |
| Precision | | Proposed | 83% | 94% | 92% |
| Precision | | Standard FL | 55% | 47% | 70% |
| Precision | | Proposed | 59% | 77% | 84% |
| Precision | | Standard FL | 49% | 44% | 66% |
| Recall | Clean | Proposed | 97% | 99% | 98% |
| Recall | Clean | Standard FL | 98% | 99% | 99% |
| Recall | | Proposed | 96% | 98% | 97% |
| Recall | | Standard FL | 95% | 98% | 91% |
| Recall | | Proposed | 96% | 98% | 97% |
| Recall | | Standard FL | 79% | 56% | 79% |
| Recall | | Proposed | 97% | 98% | 97% |
| Recall | | Standard FL | 61% | 46% | 61% |
| Recall | | Proposed | 79% | 94% | 93% |
| Recall | | Standard FL | 50% | 35% | 51% |
| Recall | | Proposed | 50% | 65% | 86% |
| Recall | | Standard FL | 44% | 29% | 47% |
| F1-Score | Clean | Proposed | 97% | 99% | 98% |
| F1-Score | Clean | Standard FL | 98% | 99% | 99% |
| F1-Score | | Proposed | 96% | 98% | 97% |
| F1-Score | | Standard FL | 95% | 98% | 90% |
| F1-Score | | Proposed | 96% | 98% | 97% |
| F1-Score | | Standard FL | 79% | 59% | 82% |
| F1-Score | | Proposed | 97% | 98% | 97% |
| F1-Score | | Standard FL | 63% | 48% | 68% |
| F1-Score | | Proposed | 78% | 94% | 92% |
| F1-Score | | Standard FL | 51% | 37% | 59% |
| F1-Score | | Proposed | 46% | 66% | 85% |
| F1-Score | | Standard FL | 45% | 31% | 55% |
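In the table above, every Recall entry equals the corresponding Accuracy entry rounded to a whole percentage (for example 97.23% and 97%, or 28.52% and 29%). That pattern is what one would expect if precision, recall and F1 are support-weighted averages over classes, because weighted recall reduces to accuracy: Σ_c (n_c/N)(TP_c/n_c) = Σ_c TP_c/N. The averaging scheme is not stated in this section, so this is an inference. The minimal NumPy sketch below, using hypothetical toy labels, computes the support-weighted metrics and makes the identity easy to check.

```python
import numpy as np


def weighted_metrics(y_true, y_pred):
    """Return (accuracy, precision, recall, F1), each class weighted by its support."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    n = len(y_true)
    precision = recall = f1 = 0.0
    for c in np.unique(y_true):
        tp = np.sum((y_pred == c) & (y_true == c))
        support = np.sum(y_true == c)    # n_c: true samples of class c
        predicted = np.sum(y_pred == c)  # samples predicted as class c
        p = tp / predicted if predicted else 0.0
        r = tp / support
        f = 2 * p * r / (p + r) if p + r else 0.0
        weight = support / n             # n_c / N
        precision += weight * p
        recall += weight * r             # accumulates to (sum_c TP_c) / N
        f1 += weight * f
    accuracy = float(np.mean(y_true == y_pred))
    return accuracy, float(precision), float(recall), float(f1)


# Toy labels (hypothetical): three classes, six samples.
acc, prec, rec, f1 = weighted_metrics([0, 0, 0, 1, 1, 2], [0, 0, 1, 1, 2, 2])
print(f"accuracy={acc:.4f} precision={prec:.4f} recall={rec:.4f} f1={f1:.4f}")
# accuracy=0.6667 precision=0.7500 recall=0.6667 f1=0.6778
```

Weighted precision and F1 carry no such identity, which is consistent with those rows occasionally differing from accuracy by more than rounding. scikit-learn's `precision_recall_fscore_support(y_true, y_pred, average="weighted")` should give the same weighted values; a macro average would not reduce to accuracy.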

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zafar, S.; White, J.; Legg, P. Federated Learning with Adversarial Optimisation for Secure and Efficient 5G Edge Computing Networks. Big Data Cogn. Comput. 2025, 9, 238. https://doi.org/10.3390/bdcc9090238