1. Introduction

Empathetic response generation refers to the capacity of dialogue systems to perceive and comprehend a user’s circumstances and to formulate appropriate responses with the user as the focal point [1]. It has been suggested that the generation of empathetic responses has potential in areas such as counseling [2] and emotional support [3], particularly those that require greater user experience and satisfaction. Although most approaches endeavor to regulate emotional responses by assigning explicit emotional labels to generators [4,5,6,7], the capacity of chatbots to engage in empathetic conversations in open domains without explicit emotional labels remains a formidable challenge. Consequently, how to enable dialogue systems to autonomously generate empathetic responses remains an open research question.

The critical components in empathetic response generation encompass the ability to perceive, comprehend, and respond to the user’s conversational state, as well as the facilitation of sustained dialogue. Recent studies have sought to cultivate empathy by integrating external knowledge, such as commonsense knowledge and emotional vocabulary knowledge, to augment the expression of empathy. However, two main challenges remain. (1) These methods rely on discrete sentiment words within utterances to identify and convey emotions, overlooking the continuous emotional transitions of users in context. This oversight may result in biased evaluations of users’ emotional needs. (2) Existing methods demonstrate limited ability to understand and process semantic information, leading to the generation of less engaging responses. Consequently, human–computer dialogue lacks the sustainability observed in human-to-human interactions. As illustrated in Figure 1, the response “I am glad to hear that!” indicates that the dialogue system does not properly understand the user’s emotion, which is also not conducive to an ongoing dialogue.

In response to the aforementioned challenges, we propose a novel framework for empathetic response generation, termed emotional transition prompt and dual-semantic contrastive learning (EPDC). EPDC comprises three main components: an emotional transition prompt learning module, a dual-semantic contrastive learning module, and an emotional state learning module. Specifically, the emotional transition prompt learning module calculates the transition of users’ emotional polarity during the conversation to reduce the bias of discrete sentiment prediction. The dual-semantic contrastive learning module captures high-order semantic information at varying levels of semantic granularity, thereby improving the coherence and sustainability of the dialogue. The emotional state learning module integrates users’ emotional and cognitive states at the utterance level to improve the overall quality of the generated responses. We conduct comprehensive experiments on the benchmark dataset EMPATHETICDIALOGUES. The experimental results demonstrate the superior performance of EPDC compared to the baselines, as evidenced by both automatic and manual evaluations. In addition, our findings indicate that EPDC can effectively guide users toward a subsequent conversational turn based on the ongoing dialogue topic.

In summary, the contributions of this paper are as follows:

We propose the EPDC framework, a novel method for generating empathetic responses that explicitly considers users’ emotional and cognitive states.

We innovatively incorporate the changes in users’ emotional polarity during the conversation as contextual prompts to eliminate the bias of discrete emotion prediction.

To the best of our knowledge, we are the first to address sustainability challenges in empathetic dialogue systems through dual-semantic contrastive learning.

Extensive experiments and analyses are conducted to validate the effectiveness of EPDC and its robustness across diverse embedding dimensions.

2. Related Work

In this section, we review related work from the perspectives of both empathetic response generation models and contrastive learning text generation models.

2.1. Empathetic Response Generation Models

Emotional response generation is a precursor to empathetic response generation, and such models rely on predefined emotional labels or keywords to guide the content of the target output [7,8,9,10,11]. Such modelling has been fruitful as increasingly large corpora with detailed annotations become available. For example, Zhou et al. [4] develop an external memory module to select whether the decoded word is an emotional word or a generic word, in order to generate responses for five specific emotional labels. Based on this, EACM [12] adds an emotion selector that enables the model to pick the appropriate emotional responses. Furthermore, to address the tendency toward generic responses in emotional dialogues, Shen and Feng [7] introduce curriculum learning and dual learning, thereby enhancing the diversity of responses.

However, existing empathetic response generation models focus on the user’s emotion at the last turn of conversation to predict the emotional category of the generated response [13,14,15,16,17,18,19]. For example, Majumder et al. [16] propose that chatbots should be capable of mimicry, considering polarity-based emotion clustering and emotion mimicry. Lin et al. [14] improve response quality by incorporating expert feedback across different emotion categories. Moreover, Li et al. [18] and Sabour et al. [19] attempt to introduce external commonsense knowledge to obtain richer user information, thereby improving the model’s empathetic capabilities.

Recent research efforts have attempted to make dialogue systems more empathetic through various approaches. Wang et al. [20] adopt self-imagine and other-imagine perspectives using heterogeneous graphs, enabling the model to better empathize with users. Yang et al. [21] introduce a dependency tree to capture the correlation between emotion and semantics, and propose a dynamic correlation graph convolutional network to guide the generation of empathetic responses. To improve emotion perception, Fu et al. [22] integrate an emotion correlation-aware aggregation approach with both soft and hard decision strategies. Similarly, Su et al. [23] extract personal status and discourse topics as hidden sentiment signals to achieve more accurate emotion recognition.

Although the above works have focused on enhancing the empathetic capabilities of dialogue models, most of them have neglected the fact that the user’s emotion during the conversation is a continuously changing process, which can result in emotional bias in the generated responses. In addition, their ability to infer subtle emotional intensity is limited. Therefore, we propose the EPDC model to attenuate this emotional bias by establishing the user’s emotional transition prompt with historical emotional states in the context, enabling more accurate reasoning about the user’s current emotional needs.

2.2. Contrastive Learning Text Generation Models

Contrastive learning, which produces sample representations by narrowing the distance between positive pairs and expanding the distance between negative pairs, has been widely applied in text generation tasks [24,25]. For instance, Pan et al. [26] utilize contrastive loss to explicitly project different languages to a shared semantic space and improve the performance of machine translation. Both Su et al. [27] and An et al. [28] present contrastive frameworks for text generation, with the former working on enhancing text diversity and the latter focusing on constructing sequence-level contrast examples. Furthermore, MCCL [29] contrasts the target response with negative responses, enabling the chatbot to distinguish and avoid contradictory response patterns.

Recently, contrastive learning has been applied to deeper textual relations and large language models. Dan et al. [30] employ contrastive learning to generate text that follows specific comparative logic relations, effectively improving the model’s comprehension of Chinese. Sen et al. [31] integrate contrastive learning into the decoding stage of large language models to balance the coherence and diversity of the response. Conversely, Zhang et al. [32] utilize the text generation ability of large language models to augment the sample size for contrastive learning.

The aforementioned models benefit from the higher-order features between the generated text and the target text captured by contrastive learning, which enhances the generative power of the model. However, the essence of human dialogue lies in the semantic understanding of the context, which is the basis for dialogue to take place. Hence, in this paper we employ dual-semantic contrastive learning to capture the higher-order features of the input context and thereby enhance the semantic understanding ability of the model. At the same time, the dual-semantic design allows the model to augment positive samples and expand the semantic space without external resources. We further aim for the model to generate empathetic responses that can lead to new turns of dialogue.

3. Approach

In this section, we first formally define the task of empathetic response generation in multi-turn dialogue systems. We then introduce the EPDC model, which consists of four main components: the emotional transition prompt learning module (see Section 3.2), the dual-semantic contrastive learning module (see Section 3.3), the emotional state learning module (see Section 3.4), and the empathetic response generation module (see Section 3.5).

The dialogue D between two speakers can be represented as a sequence of utterances. D is then divided into two parts: the conversation context C and the target response W. Each utterance of the context contains a sequence of tokens of arbitrary length. The emotion category e is obtained through empathy knowledge learning. The task of empathetic response generation is thus to compute the probability of generating the response W given the conversation context C.
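As an illustration, under the standard autoregressive assumption this probability can be factorized token by token. The notation below is an assumption introduced here for readability (with $w_t$ the $t$-th token of the response and $m$ its length) and may differ from the exact formulation used by EPDC:

```latex
P(W \mid C) \;=\; \prod_{t=1}^{m} P\left(w_t \mid w_{<t},\, C\right)
```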

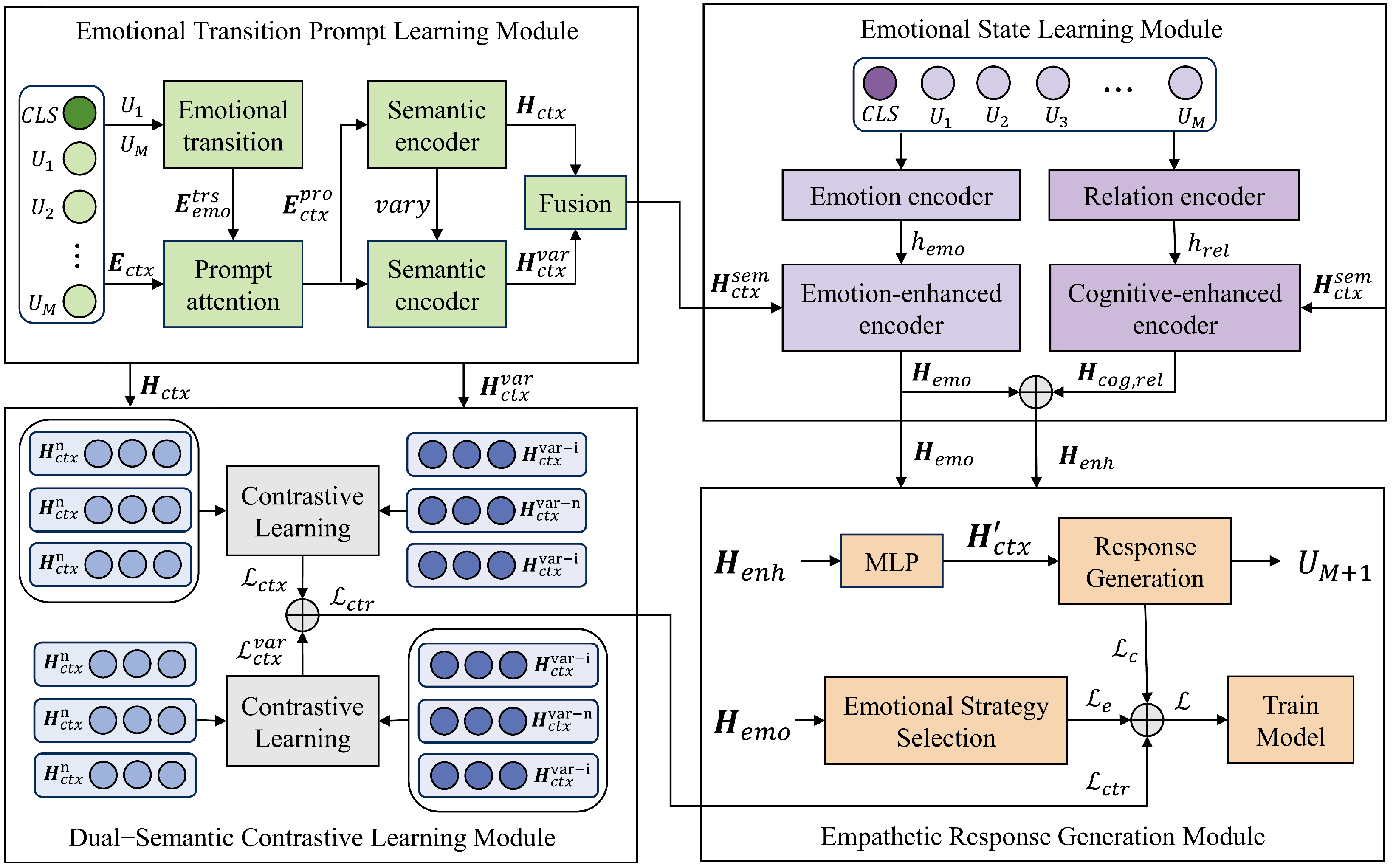

3.1. Overview

Figure 2 presents an overview of the proposed model, EPDC, which is built on the standard Transformer [33]. First, the context embedding enhanced with the emotional transition prompt is produced by an attention mechanism and sent into two parallel semantic encoders to obtain two context hidden representations. These two representations are then input into the dual-semantic contrastive learning module to obtain the contrastive loss. Subsequently, we use the emotional state signal and the cognitive state signal generated by the emotional state learning module to improve the semantic representation. Finally, the empathetic responses are generated by calculating the scores on all candidate words based on this refined representation.

3.2. Emotional Transition Prompt Learning Module

Following previous works [16,17,19], we concatenate each sentence in the dialogue history C into a long sequence of words and use a special token as the starting marker of the context input, where the symbol ⊕ represents the concatenation operation. Similar to Devlin et al. [34], we utilize the final hidden representation of this special token as the representation of the entire sequence.

To mitigate the bias in discrete sentiment prediction, we introduce continuous sentiment cues by encoding the sentiment polarity transitions of users within the context of the conversation as prompts, which offer superior controllability over the generation process [35,36]. On the one hand, we first use a word embedding layer and a position embedding layer to obtain the word embedding and position embedding of the sequence C. In multi-turn dialogue settings, it is important to distinguish whether an utterance comes from the speaker or the listener. Therefore, we also incorporate a state embedding into the input context C. The vector representation of the dialogue history is the sum of these three types of embeddings, and its shape is determined by the number of words in the sequence C and the embedding dimension.

On the other hand, based on the change in emotional polarity scores, we also use an embedding layer to generate the user’s emotional transition prompt. This step involves a preset threshold, and the change in polarity is calculated based on the user’s first utterance and current utterance, whose emotional polarity scores are produced by a sentiment scoring function [37]. This function begins by calculating the sentiment values of all sentiment-bearing words in the input text. These values are then aggregated via a weighted summation and normalized to produce positive, negative, neutral, and compound scores. Notably, the compound score is used as the measure of emotional polarity.
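To make the idea of a polarity transition concrete, the following is a minimal sketch and not the exact rule used by EPDC. It assumes that the transition is the difference between the compound score of the current utterance and that of the first utterance, and that a threshold maps this difference to one of three discrete categories. The function name, the three-way scheme, and the default threshold of 0.1 are all hypothetical.

```python
def polarity_transition(first_score: float, current_score: float,
                        threshold: float = 0.1) -> int:
    """Map the change in compound polarity to a discrete transition id.

    Assumed (hypothetical) scheme:
      0 = stable, 1 = shift toward positive, 2 = shift toward negative.
    Scores are expected to be compound polarity values in [-1, 1].
    """
    delta = current_score - first_score
    if delta > threshold:
        return 1
    if delta < -threshold:
        return 2
    return 0


# Hand-picked compound scores standing in for the sentiment scorer's output.
print(polarity_transition(-0.6, 0.5))   # user started negative, now positive
print(polarity_transition(0.7, -0.4))   # user started positive, now negative
print(polarity_transition(0.2, 0.25))   # change is within the threshold
```

The resulting integer could then index an embedding table to produce a prompt vector, which is consistent with the embedding-layer step described above.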

We then integrate the emotional transition prompt into the conversation context embedding using an attention weight and a preset weight coefficient. The resulting prompt-enhanced context embedding is fed into two context-level semantic encoders to generate contextual representations at different granularities. The two resulting representations have the same dimension, which is determined by the hidden size of these two encoders. It is important to note that both encoders are derived from the Transformer [33], and the only difference between the pair is the number of heads in the multi-head attention mechanism. This design allows EPDC to capture finer-grained and more diverse semantic relationships [38], thereby mitigating potential biases that could arise from a single attention mechanism.

Finally, we merge the two contextual representations of different granularity using two weight matrices and a ReLU activation function.

3.3. Dual-Semantic Contrastive Learning Module

To enhance the semantic comprehension of the model, we devise a contrastive objective to distinguish higher-order semantic representations at different levels of granularity. Our “dual-semantic” approach allows the model to deeply understand the historical semantics while maintaining a degree of creativity. Specifically, contrastive learning with a single positive sample helps the model stay close to the positive semantic samples but can also weaken its generalization ability. Dual contrastive learning, in which there are two positive samples, expands the semantic learning space of the model to a certain extent, making the model more capable of understanding and applying historical semantics [39,40]. The experimental results in Section 5 also suggest that the responses generated by EPDC not only fit the historical dialogues, but also lead to the next turn of dialogue.

Given the batch size N, we denote the semantic representations generated by the two context encoders as two views. From the perspective of the first view, we consider the corresponding representation in the second view as the positive sample and the remaining representations in the batch as the negative samples. The contrastive objective in the first view is then defined using a score function that measures similarity, applied to the positive samples and the negative samples, respectively. The contrastive objective of the second view is defined similarly, and the overall contrastive loss combines the objectives of the two views.
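One common way to write such a two-view objective is the InfoNCE form, sketched below in plain Python. This is an illustration under stated assumptions rather than the exact EPDC loss: the score function is taken to be cosine similarity with a temperature, the negatives for sample i are the other-view representations of the other samples in the batch, and the two view losses are simply summed. The function names and the temperature value are hypothetical, and all vectors are assumed to be non-zero.

```python
import math


def cosine(u, v):
    """Cosine similarity between two non-zero vectors given as lists."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def view_loss(anchors, others, temperature=0.1):
    """InfoNCE loss from one view: others[i] is the positive for anchors[i],
    and others[j] with j != i act as in-batch negatives."""
    total = 0.0
    for i, anchor in enumerate(anchors):
        logits = [cosine(anchor, o) / temperature for o in others]
        peak = max(logits)  # subtract the max for numerical stability
        log_denominator = peak + math.log(sum(math.exp(x - peak) for x in logits))
        total += log_denominator - logits[i]
    return total / len(anchors)


def dual_contrastive_loss(view_a, view_b, temperature=0.1):
    """Sum of the two directional losses (assumed combination rule)."""
    return (view_loss(view_a, view_b, temperature)
            + view_loss(view_b, view_a, temperature))


view_a = [[1.0, 0.0], [0.0, 1.0]]
aligned = [[0.9, 0.1], [0.1, 0.9]]      # each row matches its counterpart
mismatched = [[0.1, 0.9], [0.9, 0.1]]   # rows are swapped
print(dual_contrastive_loss(view_a, aligned))
print(dual_contrastive_loss(view_a, mismatched))
```

When the two views agree on which samples belong together, the loss is close to zero; when the rows are swapped, it is large, which is the behaviour a contrastive objective is meant to reward and penalize.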

3.4. Emotional State Learning Module

3.4.1. Knowledge Extraction Encoders

Inspired by ATOMIC [41], we extract five commonsense relations ([xReact], [xWant], [xNeed], [xIntent], [xEffect]) from each utterance in the dialogue context C, where “[xReact]” indicates the user’s emotional state and the remaining four relations stand for the user’s cognitive state. We therefore use two independent encoders, an emotion encoder and a relation encoder, to obtain hidden vectors of commonsense knowledge, where the commonsense inferences for each relation are produced by COMET [42]. Considering that the emotional states are represented by discrete affective words (e.g., sad, excited, happy, angry, surprised) and the cognitive states are expressed as sentences, we take the average of the emotion encoder output and the relation encoder output of the implicit state, respectively.

3.4.2. Knowledge-Enhanced Encoders

Similarly to Majumder et al. [16], we integrate commonsense relation representations with the contextual representation at the utterance level and then feed them to the knowledge-enhanced encoders to obtain commonsense-refined context representations for each relation. Both affective and cognitive knowledge are important components in refining the user’s state, and we expect the model to be able to generate appropriate empathetic responses based on a mixture of them. Consequently, we combine the emotion-enhanced contextual representation with the cognition-enhanced contextual representations.

3.5. Empathetic Response Generation Module

3.5.1. Emotional Strategy Selection

In practice, the speaker’s sentiment changes as the dialogue progresses. To learn the user’s historical emotional states within the dialogue context C, we take the mean of the emotion-enhanced contextual representation along its second dimension and use the result for classification. To generate the emotion category distribution, we feed this averaged vector into a linear layer and apply the Softmax operation, where s is the total number of emotion labels available in the dataset. We update the weight parameters by minimizing the cross-entropy loss between the predicted emotion category distribution and the ground-truth label.
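The classification step described above can be sketched in plain Python as mean pooling followed by a linear layer, a softmax, and a cross-entropy loss. This is only an illustration: the pooling axis is assumed to be the token axis, the bias term is omitted, and the weight matrix and toy inputs are hypothetical.

```python
import math


def mean_pool(token_vectors):
    """Average a list of token vectors into a single vector."""
    count = len(token_vectors)
    dim = len(token_vectors[0])
    return [sum(vec[k] for vec in token_vectors) / count for k in range(dim)]


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    norm = sum(exps)
    return [e / norm for e in exps]


def emotion_distribution(token_vectors, weight):
    """Pool the representation, apply a linear layer (one row of `weight`
    per emotion label), and normalize with softmax."""
    pooled = mean_pool(token_vectors)
    logits = [sum(w * x for w, x in zip(row, pooled)) for row in weight]
    return softmax(logits)


def cross_entropy(probs, gold_index):
    """Negative log-probability assigned to the ground-truth label."""
    return -math.log(probs[gold_index])


# Toy example: two token vectors of size 2 and s = 3 emotion labels.
tokens = [[1.0, 0.0], [3.0, 2.0]]
weight = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]
probs = emotion_distribution(tokens, weight)
print(probs)
print(cross_entropy(probs, 0))
```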

3.5.2. Response Generation

To generate the target empathetic response based on the knowledge-enhanced context, we adopt the Transformer decoder following previous work [16,18]. The decoder takes as input the embeddings of the tokens that have been generated before time t, together with the final representation of the dialogue context, which is produced by a Multi-Layer Perceptron (MLP). In this computation, ⊙ denotes element-wise multiplication, and ReLU is used as the activation function.

We then use the negative log-likelihood as the generation loss function. Finally, we combine all the losses for model training, where each loss is weighted by a hyperparameter that we use to optimize our model. The sensitivity of the model to these weights is analyzed in Section 5.5.
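For reference, a generation loss and a combined objective of this kind are commonly written as follows. The symbols here are assumptions introduced for illustration and are not taken from the original equations: $\mathcal{L}_{gen}$, $\mathcal{L}_{emo}$ and $\mathcal{L}_{cl}$ denote the generation, emotion classification and contrastive losses, and $\gamma_1$, $\gamma_2$, $\gamma_3$ denote their weights. The weighted-sum form is itself an assumption about how the losses are combined.

```latex
\mathcal{L}_{gen} = -\sum_{t=1}^{m} \log P\left(w_t \mid w_{<t},\, C\right),
\qquad
\mathcal{L} = \gamma_1 \mathcal{L}_{gen} + \gamma_2 \mathcal{L}_{emo} + \gamma_3 \mathcal{L}_{cl}
```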

4. Experimental Setup

In this section, we provide a comprehensive overview of our experimental setup.

4.1. Research Questions

Our study aims to address the following key research questions that are central to the understanding of EPDC’s effectiveness on empathetic response generation:

RQ1: Does our proposed model, EPDC, outperform existing baselines in empathetic response generation?

RQ2: Is modeling the user’s emotional transition throughout the conversation and the introduction of dual-semantic contrastive learning really effective?

RQ3: How does varying the embedding dimension impact the performance across all models?

RQ4: What is the influence of context length on the response generation capabilities of our model?

RQ5: How do the hyperparameters in the loss function affect the performance of EPDC?

4.2. Dataset

Our experiments are carried out on the publicly available EMPATHETICDIALOGUES dataset [13], which serves as a standard benchmark for evaluating empathetic response generation. Specifically, EMPATHETICDIALOGUES contains nearly 25k open-domain two-party dialogues and 32 uniformly distributed emotion labels. The listener infers the emotional needs of the speaker from what the speaker says and responds empathetically. Following Rashkin et al. [13], we use the 8:1:1 train/valid/test split, and the last utterance of each sample is an empathetic response.
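As a small illustration of the 8:1:1 ratio only, the sketch below partitions a list of dialogues into train, validation and test portions. Whether the dialogues are shuffled, and with which seed, is not stated in this section, so the shuffling step, the seed and the function name are assumptions.

```python
import random


def split_dialogues(dialogues, seed=0):
    """Shuffle and split items into train/valid/test with an 8:1:1 ratio."""
    items = list(dialogues)
    random.Random(seed).shuffle(items)  # fixed seed for reproducibility
    n_train = len(items) * 8 // 10
    n_valid = len(items) // 10
    train = items[:n_train]
    valid = items[n_train:n_train + n_valid]
    test = items[n_train + n_valid:]
    return train, valid, test


train, valid, test = split_dialogues(range(100))
print(len(train), len(valid), len(test))
```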

4.3. Baselines for Comparison

To establish a solid foundation for our comparative analysis, we employ seven distinct baseline models that represent a spectrum of approaches in the field of empathetic response generation:

Transformer [33]: The original Transformer model, trained in an encoder–decoder framework.

Multi-TRS [13]: A version of the Transformer model optimized for the emotional conversation task.

MoEL [43]: A jointly trained model with a separate decoder for each type of emotion, whose outputs are combined to generate responses.

MIME [16]: A Transformer-based model that mimics user emotions and classifies them into negative and positive polarity categories.

EmpDG [17]: A multi-resolution interactive empathetic dialogue model that proposes an empathetic generator to produce responses and adds two discriminators for optimization. However, the discriminators utilize information from future rounds in the dialogue, so this module is removed in our experiments for fairness.

CEM [19]: A dialogue model that introduces commonsense knowledge, which is used to enhance cognitive understanding of user scenarios and further enhances empathetic expression in the generated responses.

KEMP [18]: A model that incorporates emotion-related concepts into the encoding and decoding process through an emotional context graph, which enhances the emotion-dependency capabilities of dialogue systems.

4.4. Evaluation Metrics

Our evaluation framework incorporates a dual-pronged approach, encompassing both automatic evaluations and human evaluations.

4.4.1. Automatic Evaluations

Following [2,16,19,44], we adopt four metrics to automatically evaluate the performance of EPDC and the baselines, i.e., Perplexity, Distinct-1, Distinct-2 and Accuracy. Perplexity (PPL) explicitly measures the model’s ability to account for the syntactic structure of both the dialogue and individual utterances [45]. A lower PPL indicates a more fluent sequence of words, suggesting higher-quality text generation. Distinct-1 and Distinct-2 are often used to measure the diversity of generated responses by computing the ratio of distinct unigrams and bigrams, respectively. Higher values for these metrics indicate greater diversity and less generic output. Accuracy measures whether the emotion category of the response is the same as the ground-truth emotion label.
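The two generation metrics can be sketched as follows. This is a minimal illustration that assumes whitespace-tokenized responses and natural-log token probabilities. Evaluation scripts used in practice may differ in tokenization and in whether Distinct is computed per response or over the whole test set (the corpus-level variant is shown here).

```python
import math


def distinct_n(responses, n):
    """Ratio of unique n-grams to total n-grams over tokenized responses."""
    total = 0
    unique = set()
    for tokens in responses:
        for i in range(len(tokens) - n + 1):
            unique.add(tuple(tokens[i:i + n]))
            total += 1
    return len(unique) / total if total else 0.0


def perplexity(token_log_probs):
    """Exponential of the mean negative log-probability per token."""
    return math.exp(-sum(token_log_probs) / len(token_log_probs))


responses = [["i", "am", "sorry"], ["i", "am", "glad"]]
print(distinct_n(responses, 1))  # 4 unique unigrams out of 6
print(distinct_n(responses, 2))  # 3 unique bigrams out of 4
print(perplexity([math.log(0.25)] * 4))
```

A model that assigns probability 0.25 to every token has a perplexity of about 4, which matches the intuition that it is choosing uniformly among four options at each step.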

4.4.2. Human Evaluations

Following [16,17,18], we randomly select 100 samples from EPDC and the baselines; we then ask three professional annotators to compare the responses based on three criteria, i.e., Empathy, Relevance and Fluency. Empathy measures whether the sentiment of the response fits the dialogue scenario; Relevance evaluates whether the response conforms to the historical topic; Fluency measures the grammatical correctness and readability of the generated responses. We divide the evaluation index into five levels from 5 to 1, representing strongly agree, agree, not necessarily, disagree, and strongly disagree, respectively.

4.5. Implementation Details

This subsection provides a detailed account of our implementation. All models are implemented in PyTorch 1.12.1 and trained with the Adam optimizer [46,47]. We use pre-trained GloVe vectors [48] with four embedding dimensions (i.e., 50, 100, 200, 300), where the hidden dimension is set to 300. The initial learning rate of EPDC is 0.0001, and the maximum decoding step is 30, while all the training parameters of the baseline models follow the configuration reported in the corresponding article. All the models are trained on a single NVIDIA GeForce RTX 3090 GPU using a batch size of 16. Early stopping is applied when training EPDC.

5. Results and Discussion

5.1. Overall Performance (RQ1)

To answer RQ1, we investigate the semantic quality and emotional accuracy of the responses generated by EPDC and the baselines in terms of automatic evaluations and human evaluations. As shown in Table 1, our proposed method, EPDC, consistently achieves state-of-the-art results across all metrics. This demonstrates its effectiveness in generating empathetic responses in multi-turn dialogues. In particular, EPDC improves over the best-performing baseline by up to approximately 2.57% in Perplexity and 9.90% in Accuracy.

5.1.1. Automatic Evaluation Results

We find that the methods with independent sentiment knowledge analysis modules (CEM, KEMP, EPDC) have better performance, which illustrates the auxiliary role of sentiment analysis for response generation. The emotional transition prompt learning module in EPDC can particularly enhance the role of sentiment analysis, and the historical emotional state of the user through the conversation is also considered in EPDC, which provides a more comprehensive signal for prediction. Moreover, KEMP performs poorly on the diversity metrics, which may be attributed to its excessive focus on emotional signals during the generation process. In contrast, EPDC commendably balances the semantic quality and emotional accuracy of responses, which indicates that our dual-semantic contrastive learning module can learn multi-level semantic representations that better reflect user emotions, through the two different fine-grained semantic features.

5.1.2. Human Evaluation Results

As shown in Table 1, EPDC outperforms the baselines across the three human evaluation indicators. This illustrates that EPDC enhances the model’s ability to imitate human language and create more human-like responses. Among them, the improvement in Empathy is the most significant, which shows that modeling the user’s emotional transition over the dialogue context can better achieve empathy for the user. KEMP has the highest Empathy score among the baseline models, which is consistent with the results we obtained in the automatic evaluation. In terms of Relevance, CEM, KEMP, and EPDC achieve better results than the other models. This is likely attributable to their shared use of COMET to analyze commonsense relationships in the context, allowing them to generate responses that are more relevant to the conversation topic. We find no obvious difference among models in terms of Fluency, presumably because the responses generated by the Transformer are already fluent and grammatical.

5.2. Ablation Study (RQ2)

To answer RQ2, we conduct ablation experiments to determine the individual contributions of each component in our model. The following variants are developed:

w/o ETP: Removing the emotional transition prompt learning module.

w/o DCL: Removing the dual-semantic contrastive learning module.

w/o KEM: Removing the emotional knowledge extraction encoder and the emotion-enhanced encoder.

w/o CEM: Removing the user’s emotional state over the conversation history (Equation 19) and replacing it with a substitute representation.

As shown in Table 2, we set up controlled experiments to verify the contribution of each component. First, we analyze the results for the w/o ETP and w/o DCL variants. When ETP is removed, the Accuracy decreases significantly, indicating that the emotional transition prompt has a significant impact on emotion perception. When DCL is removed, both Perplexity and Distinct increase significantly, indicating that high-order semantic features benefit the information expression and syntactic quality of the response at some cost to diversity. Next, we examine the w/o KEM and w/o CEM variants. The similar Accuracy scores for both variants indicate that it is effective and necessary to consider the user’s emotional state over the conversation history. Their scores on the other three indicators are not significantly different, indicating that there is no noticeable noise attached to the emotional information extracted from the commonsense relationships.

5.3. Impact of Embedding Dimension (RQ3)

To answer RQ3, we analyze the performance of all models under four different embedding dimensions, i.e., 50, 100, 200 and 300. Figure 3 illustrates the results of the four best-performing models (Appendix A shows the detailed automatic evaluation results).

In most cases, EPDC outperforms all baselines across embedding dimensions and metrics, with the exception of Distinct-2 at one dimension setting. This confirms the robustness of EPDC across different embedding dimensions. In particular, EPDC has the most obvious advantage at dimension 100, indicating that EPDC learns rich and useful dialogue information from low-dimensional pre-trained vectors. Compared to the baselines, EPDC has the most stable results, which demonstrates its generalization ability. The embedding dimension has the largest impact on the performance of KEMP, whose Perplexity and Accuracy scores show completely opposite trends. This may be due to the fact that KEMP repeatedly strengthens emotional signals during the generation stage, weakening the guiding role of semantic signals in the generation process, which is consistent with our analysis of the automatic evaluation results. Additionally, as the embedding dimension increases, MIME and EPDC maintain consistent trends, probably because they both focus on the variability between positive and negative samples. It is also worth noting that CEM’s Perplexity scores are very unstable, probably because its parameters are better suited to higher embedding dimensions and it may overfit at lower dimensions. Therefore, we do not include it as a comparison in Figure 3.

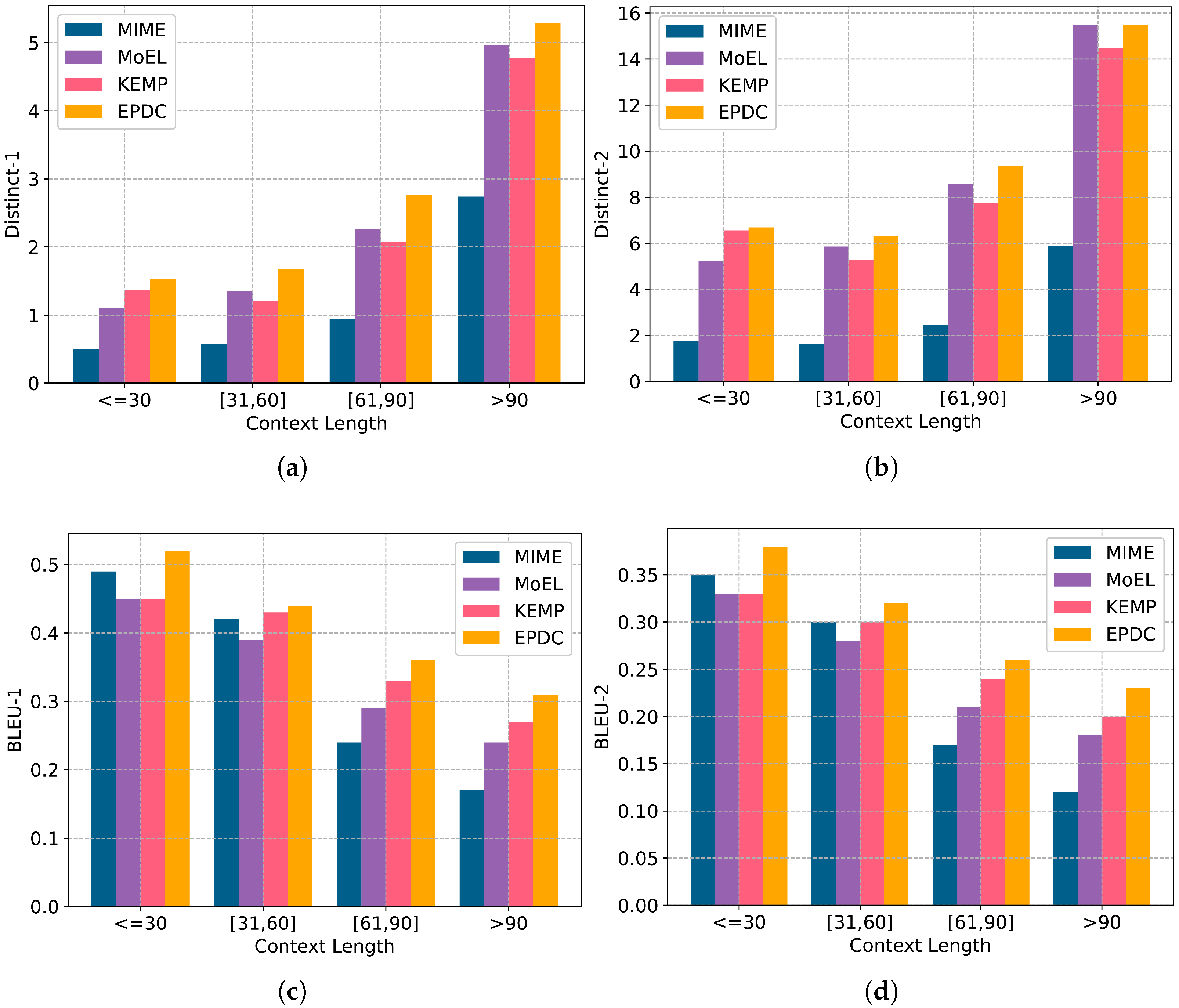

5.4. Impact of Context Length (RQ4)

To answer RQ4, we investigate the performance of EPDC and three baselines (i.e., MIME, MoEL and KEMP) on test samples of different context lengths (measured by word count). We split the test samples into groups according to their context length and present the distribution of test samples by context length in Table 3. While longer texts can be more informative, samples with more than 60 words make up only a minority. Following [49,50], we evaluate the model performance in terms of Distinct-1, Distinct-2, BLEU-1 and BLEU-2, respectively. BLEU-1 and BLEU-2 measure the word overlap between the generated response and the ground truth. A higher BLEU score indicates that the generated response is closer to the ground truth. The experimental results are shown in Figure 4.
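To illustrate what BLEU-1 and BLEU-2 measure, the following is a simplified sentence-level sketch with a single reference, clipped n-gram precision, uniform weights and a brevity penalty, but no smoothing. Reported scores are usually computed at corpus level with an established toolkit, so this function (whose name and signature are hypothetical) is for intuition only.

```python
import math
from collections import Counter


def ngram_counts(tokens, n):
    """Count the n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def bleu(candidate, reference, max_n):
    """Unsmoothed sentence-level BLEU up to max_n with one reference."""
    if not candidate:
        return 0.0
    log_precision = 0.0
    for n in range(1, max_n + 1):
        cand = ngram_counts(candidate, n)
        ref = ngram_counts(reference, n)
        # Clip each candidate n-gram count by its count in the reference.
        overlap = sum(min(count, ref[gram]) for gram, count in cand.items())
        total = sum(cand.values())
        if total == 0 or overlap == 0:
            return 0.0
        log_precision += math.log(overlap / total) / max_n
    # Brevity penalty discourages candidates shorter than the reference.
    if len(candidate) >= len(reference):
        penalty = 1.0
    else:
        penalty = math.exp(1 - len(reference) / len(candidate))
    return penalty * math.exp(log_precision)


reference = ["i", "am", "sorry"]
candidate = ["i", "am", "glad"]
print(bleu(candidate, reference, 1))  # unigram precision 2/3
print(bleu(candidate, reference, 2))  # geometric mean of 2/3 and 1/2
```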

Generally, EPDC outperforms all baselines across every context length for all metrics, which confirms its robustness in handling dialogues of varying lengths. Especially for contexts with a length of no more than 30, EPDC’s advantage over the baselines on the BLEU scores is larger than for other context lengths, demonstrating its effectiveness in short contexts. The high-order semantic features extracted by dual-semantic contrastive learning enable EPDC to make full use of the dialogue information in short contexts. MIME is the worst-performing baseline in terms of Distinct scores, which is consistent with its overall performance shown in Table 1. Additionally, as the context length increases, almost all models show an increase in Distinct scores (except KEMP in Distinct-1) and a decrease in BLEU scores. This means that as the context length grows, it becomes increasingly hard for dialogue systems to balance similarity to the ground truth against sentence diversity when generating responses. This phenomenon is likely due to the fact that longer contexts enrich the dialogue information while also introducing noise.

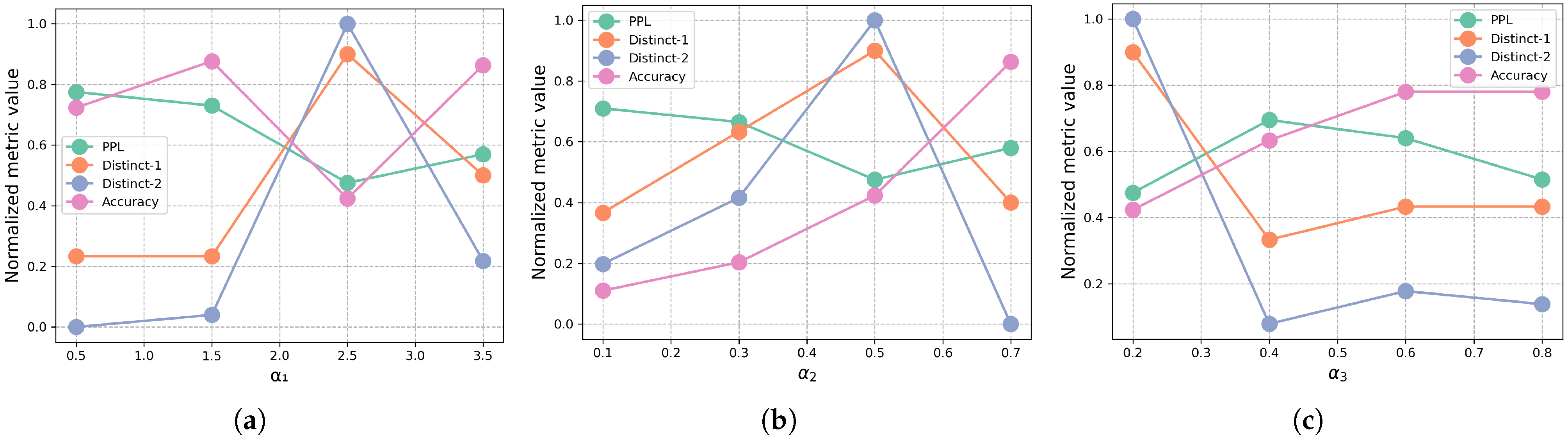

5.5. Hyperparameter Analysis (RQ5)

To answer RQ5, we perform a sensitivity analysis on the hyperparameters of the loss function, as shown in Figure 5. Regarding the context loss hyperparameter, we observe that as its value increases, the overall performance of EPDC continues to improve until it reaches optimal results in terms of both Perplexity and Distinct. However, if its value exceeds 2.5, the model performance degrades, indicating that excessive focus on context may disrupt the balance between fluency and diversity.

Regarding the emotion loss hyperparameter, we observe that increasing its value leads to a steady improvement in emotion prediction accuracy. This finding indicates that EPDC is effective in modeling sentiment components. However, beyond a certain value, the Distinct metrics drop noticeably, suggesting that a strong focus on sentiment characteristics can affect the model’s ability to generate diverse responses.

Regarding the dual-semantic contrastive learning hyperparameter, , we observe that perplexity and distinct metrics vary synchronously within the range of 0.2 to 0.6. When , the automatic evaluation metrics plateaued, indicating that the contrastive learning effect saturated around this threshold.
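The sensitivity analysis above can be pictured as varying one loss weight at a time while the others stay fixed. The sketch below assumes an additive objective in which the generation loss is combined with weighted context, emotion, and contrastive losses; that additive form, the field names in `LossWeights`, the helper `one_at_a_time`, and all numeric values are assumptions made for illustration and are not taken from the training code.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class LossWeights:
    # Hypothetical field names; the paper's own symbols are not reproduced here.
    context: float
    emotion: float
    contrastive: float


def total_loss(gen_loss, ctx_loss, emo_loss, cl_loss, w):
    """Assumed additive objective: generation loss plus weighted auxiliary losses."""
    return (gen_loss
            + w.context * ctx_loss
            + w.emotion * emo_loss
            + w.contrastive * cl_loss)


def one_at_a_time(base, name, values):
    """Vary a single weight over `values` while holding the others at `base`."""
    return [replace(base, **{name: v}) for v in values]


# Illustrative values only; they are not the settings reported in Figure 5.
base = LossWeights(context=1.0, emotion=1.0, contrastive=0.4)
context_grid = one_at_a_time(base, "context", [0.5, 1.5, 2.5, 3.5])
```

Each configuration in `context_grid` would then be trained and evaluated separately, so that any change in perplexity, Distinct, or accuracy can be attributed to the one weight that was varied.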

5.6. Case Study

Table 4 lists the responses generated by EPDC and the baselines. In the first case, EPDC generates a response that resonates with the user’s sadness by replying with “too bad”. At the same time, it guides the user to the next turn of conversation around the context topic, which none of the baseline models manages to do. This may be attributed to the dual-semantic contrastive learning module, which provides higher-order semantic features.

In the second case, while all models generate responses with the appropriate emotion “sorry”, only EmpDG, CEM, and EPDC truly understand the dialogue context. This is likely because these models all apply external knowledge. However, EPDC accurately expresses its wishes to the wife of the interlocutor, which also closely matches the ground truth, indicating EPDC’s superior generative ability. Furthermore, by comparing the two cases, we find that a longer context helps models obtain richer information and generate emotional responses that are more closely tied to the dialogue history.

In the third case, only EPDC generates a response that matches the “nostalgic” sentiment, while the other models are influenced by “nice” and “tough times” in the user’s current utterance and express improper emotions. This demonstrates the necessity and effectiveness of the emotional transition prompt in EPDC. In terms of semantic coherence, only the response generated by EPDC also fits the dialogue context, which illustrates that positive samples in contrastive learning play a powerful auxiliary role in the model’s semantic understanding.

6. Conclusions

In this work, we propose an empathetic response generation model based on emotional transition prompt and dual-semantic contrastive learning (EPDC), which produces emotionally accurate, topically relevant, and meaningful responses. Empirical validation on a publicly available conversational dataset demonstrates the efficacy of EPDC, showcasing its superiority over existing empathetic response generation baselines in terms of both automatic and human evaluations. Furthermore, EPDC demonstrates the capacity to guide the subsequent turn of dialogue, thereby contributing to the sustainability of human–machine interactions.

Future research could explore leveraging large language models to enhance response diversity while maintaining emotion accuracy, as referenced in [51]. Additionally, our efforts will be directed towards the development of personalized dialogue systems tailored to meet the diverse needs of individual users.

Author Contributions

Conceptualization, H.C.; methodology, Y.M.; software, Y.M.; validation, T.S.; formal analysis, Y.Z.; writing—original draft preparation, Y.M.; writing—review and editing, Y.Z. and T.S.; funding acquisition, T.S.; supervision, H.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Natural Science Foundation of Henan under Grant No. 252300420989.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The dataset presented in this study is included in the article. Further inquiries can be directed to the corresponding author.

Acknowledgments

We are especially grateful to the participants for completing the human evaluation during this work. We also acknowledge the creators of the EMPATHETICDIALOGUES dataset, which contains rich sentiment annotations but may reflect cultural biases inherent in the original data. We also recognize the importance of responsible use of this dataset and will continue to work to mitigate potential biases and misuse in future research.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A. The Automatic Evaluation Results of Different Dimensions

Table A1.

The automatic evaluation results of all models when the embedding dimension .

| Models | Perplexity | Distinct-1 | Distinct-2 | Accuracy (%) |
|---|---|---|---|---|
| Transformer | 38.05 | 0.48 | 2.12 | - |
| Multi-TRS | 37.46 | 0.49 | 2.24 | 35.01 |
| MoEL | 37.69 | 0.44 | 2.10 | 32.41 |
| MIME | 36.92 | 0.47 | 1.91 | 29.71 |
| EmpDG | 37.94 | 0.45 | 2.00 | 30.54 |
| CEM | 36.94 | 0.66 | 2.99 | 36.67 |
| KEMP | 36.89 | 0.55 | 2.29 | 37.55 |
| EPDC | 35.95 | 0.67 | 3.01 | 41.27 |

Table A2.

The automatic evaluation results of all models when the embedding dimension .

| Models | Perplexity | Distinct-1 | Distinct-2 | Accuracy (%) |
|---|---|---|---|---|
| Transformer | 37.24 | 0.42 | 1.72 | - |
| Multi-TRS | 37.74 | 0.53 | 2.40 | 32.43 |
| MoEL | 37.62 | 0.51 | 2.66 | 34.75 |
| MIME | 37.02 | 0.46 | 1.83 | 31.25 |
| EmpDG | 37.75 | 0.50 | 2.21 | 31.72 |
| CEM | 132.04 | 0.07 | 0.10 | 34.5 |
| KEMP | 42.50 | 0.60 | 2.95 | 26.79 |
| EPDC | 35.63 | 0.71 | 2.98 | 38.31 |

Table A3.

The automatic evaluation results of all models when the embedding dimension .

| Models | Perplexity | Distinct-1 | Distinct-2 | Accuracy (%) |
|---|---|---|---|---|
| Transformer | 37.23 | 0.54 | 2.48 | - |
| Multi-TRS | 37.87 | 0.48 | 2.00 | 32.22 |
| MoEL | 37.42 | 0.48 | 2.37 | 34.10 |
| MIME | 36.56 | 0.53 | 2.41 | 32.31 |
| EmpDG | 37.03 | 0.47 | 1.97 | 28.66 |
| CEM | 291.13 | 0.42 | 0.70 | 38.92 |
| KEMP | 40.61 | 0.27 | 0.91 | 24.05 |
| EPDC | 35.34 | 0.81 | 3.71 | 39.85 |

Table A4.

The automatic evaluation results of all models when the embedding dimension .

| Models | Perplexity | Distinct-1 | Distinct-2 | Accuracy (%) |
|---|---|---|---|---|
| Transformer | 38.60 | 0.47 | 1.94 | - |
| Multi-TRS | 37.90 | 0.48 | 2.02 | 29.55 |
| MoEL | 38.02 | 0.63 | 3.67 | 33.34 |
| MIME | 37.04 | 0.50 | 2.35 | 28.32 |
| EmpDG | 37.90 | 0.38 | 1.41 | 22.42 |
| CEM | 246.75 | 0.27 | 0.47 | 37.37 |
| KEMP | 38.68 | 0.47 | 2.39 | 24.36 |
| EPDC | 36.22 | 0.67 | 2.92 | 37.79 |
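As a reading aid for the Perplexity column in the tables above, perplexity is conventionally the exponential of the average negative log-likelihood that a model assigns to the reference tokens, so lower values are better. The sketch below illustrates that standard definition; the function name `perplexity` and the toy probabilities are hypothetical, and the evaluation script used for the reported numbers may differ in details such as tokenization.

```python
import math


def perplexity(token_log_probs):
    """exp of the mean negative log-likelihood over reference tokens (natural log)."""
    if not token_log_probs:
        raise ValueError("need at least one token log-probability")
    return math.exp(-sum(token_log_probs) / len(token_log_probs))


# Hypothetical example: a model assigning probability 0.25 to each of four tokens.
ppl = perplexity([math.log(0.25)] * 4)
```

In this toy case the result is approximately 4, matching the intuition that the model is as uncertain as a uniform choice among four tokens at every step.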

Appendix B. Comparison with Large Language Models

Given the competitive advantage of large language models (LLMs) in current dialogue systems, we conduct comparative experiments using EPDC against LLaMA-7b [52] and GPT-4 [53]. As shown in Table A5, LLMs generally outperform small-scale emotional dialogue generation models in terms of response fluency, diversity, and emotional accuracy. However, the integration of EPDC with LLMs yields further performance improvements, demonstrating EPDC’s positive contribution to strengthening the emotional dialogue proficiency of LLMs.

It is worth noting that the accuracy of LLaMA-7b is not reported because it has not been fine-tuned on emotion-labeled dialogue datasets and therefore exhibits poor performance in emotion prediction. For integration with LLMs, we concatenate the emotion vector with the final context representation, both generated by EPDC, and use the result as input to the LLMs. GPT-4 is accessed via the OpenAI API, while LLaMA is deployed on an NVIDIA GeForce RTX 4090 GPU.

Table A5.

The automatic evaluation results of EPDC and large language models when the embedding dimension . Bold values indicate the best performance in each column.

| Models | Perplexity | Distinct-1 | Distinct-2 | Accuracy (%) |
|---|---|---|---|---|
| LLaMA-7b | 14.19 | 1.95 | 7.38 | - |
| LLaMA-7b + EPDC | 13.57 | 2.02 | 8.12 | 44.15 |
| GPT-4 | 7.03 | 2.64 | 16.89 | 46.38 |
| GPT-4 + EPDC | **6.65** | **2.77** | **17.25** | **47.11** |

References

1. Higashinaka, R.; Dohsaka, K.; Isozaki, H. Effects of self-disclosure and empathy in human-computer dialogue. In Proceedings of the IEEE Workshop on Spoken Language Technology, Goa, India, 15–18 December 2008; pp. 109–112.
2. Mao, Y.; Cai, F.; Guo, Y.; Chen, H. Incorporating emotion for response generation in multi-turn dialogues. Appl. Intell. 2022, 52, 7218–7229.
3. Vakulenko, S.; Kanoulas, E.; de Rijke, M. A Large-scale Analysis of Mixed Initiative in Information-Seeking Dialogues for Conversational Search. ACM Trans. Inf. Syst. 2021, 39, 49:1–49:32.
4. Zhou, H.; Huang, M.; Zhang, T.; Zhu, X.; Liu, B. Emotional Chatting Machine: Emotional Conversation Generation with Internal and External Memory. In Proceedings of the 32nd AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; pp. 730–739.
5. Wang, K.; Wan, X. SentiGAN: Generating Sentimental Texts via Mixture Adversarial Networks. In Proceedings of the 27th International Joint Conference on Artificial Intelligence, Stockholm, Sweden, 13–19 July 2018; pp. 4446–4452.
6. Song, Z.; Zheng, X.; Liu, L.; Xu, M.; Huang, X. Generating Responses with a Specific Emotion in Dialog. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; pp. 3685–3695.
7. Shen, L.; Feng, Y. CDL: Curriculum Dual Learning for Emotion-Controllable Response Generation. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 556–566.
8. Ghosh, S.; Chollet, M.; Laksana, E.; Morency, L.; Scherer, S. Affect-LM: A Neural Language Model for Customizable Affective Text Generation. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics, Vancouver, Canada, 30 July–4 August 2017; pp. 634–642.
9. Zhou, X.; Wang, W.Y. MojiTalk: Generating Emotional Responses at Scale. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics, Melbourne, Australia, 15–20 July 2018; pp. 1128–1137.
10. Li, J.; Sun, X. A Syntactically Constrained Bidirectional-Asynchronous Approach for Emotional Conversation Generation. In Proceedings of the Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 31 October–4 November 2018; pp. 678–683.
11. Colombo, P.; Witon, W.; Modi, A.; Kennedy, J.; Kapadia, M. Affect-Driven Dialog Generation. In Proceedings of the Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Minneapolis, MN, USA, 2–7 June 2019; pp. 3734–3743.
12. Wei, W.; Liu, J.; Mao, X.; Guo, G.; Zhu, F.; Zhou, P.; Hu, Y. Emotion-aware Chat Machine: Automatic Emotional Response Generation for Human-like Emotional Interaction. In Proceedings of the 28th ACM International Conference on Information and Knowledge Management, Beijing, China, 3–7 November 2019; pp. 1401–1410.
13. Rashkin, H.; Smith, E.M.; Li, M.; Boureau, Y. Towards Empathetic Open-domain Conversation Models: A New Benchmark and Dataset. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; pp. 5370–5381.
14. Lin, Z.; Xu, P.; Winata, G.I.; Siddique, F.B.; Liu, Z.; Shin, J.; Fung, P. CAiRE: An End-to-End Empathetic Chatbot. In Proceedings of the 34th AAAI Conference on Artificial Intelligence, Online, 7–12 February 2020; pp. 13622–13623.
15. Zhong, P.; Zhang, C.; Wang, H.; Liu, Y.; Miao, C. Towards Persona-Based Empathetic Conversational Models. In Proceedings of the Conference on Empirical Methods in Natural Language Processing, Online, 16–20 November 2020; pp. 6556–6566.
16. Majumder, N.; Hong, P.; Peng, S.; Lu, J.; Ghosal, D.; Gelbukh, A.F.; Mihalcea, R.; Poria, S. MIME: MIMicking Emotions for Empathetic Response Generation. In Proceedings of the Conference on Empirical Methods in Natural Language Processing, Online, 16–20 November 2020; pp. 8968–8979.
17. Li, Q.; Chen, H.; Ren, Z.; Ren, P.; Tu, Z.; Chen, Z. EmpDG: Multi-resolution Interactive Empathetic Dialogue Generation. In Proceedings of the 28th International Conference on Computational Linguistics, Barcelona, Spain, 8–13 December 2020; pp. 4454–4466.
18. Li, Q.; Li, P.; Ren, Z.; Ren, P.; Chen, Z. Knowledge Bridging for Empathetic Dialogue Generation. In Proceedings of the 36th AAAI Conference on Artificial Intelligence, Online, 22 February–1 March 2022; pp. 10993–11001.
19. Sabour, S.; Zheng, C.; Huang, M. CEM: Commonsense-Aware Empathetic Response Generation. In Proceedings of the 36th AAAI Conference on Artificial Intelligence, Online, 22 February–1 March 2022; pp. 11229–11237.
20. Wang, X.; Liu, Z.; Liu, T.; Fang, Z. Empathetic Response Generation With Self and Other-Imagine Graph. IEEE Trans. Comput. Soc. Syst. 2024, 11, 7801–7813.
21. Yang, Z.; Ren, Z.; Wang, Y.; Zhu, X.; Chen, Z.; Cai, T.; Wu, Y.; Su, Y.; Ju, S.; Liao, X. Exploiting Emotion-Semantic Correlations for Empathetic Response Generation. arXiv 2024, arXiv:2402.17437.
22. Fu, F.; Zhang, L.; Wang, Q.; Mao, Z. E-CORE: Emotion Correlation Enhanced Empathetic Dialogue Generation. In Proceedings of the Conference on Empirical Methods in Natural Language Processing, Singapore, 6–10 December 2023; pp. 10568–10586.
23. Su, Y.; Wei, Y.; Nie, W.; Zhao, S.; Liu, A. Dynamic Causal Disentanglement Model for Dialogue Emotion Detection. IEEE Trans. Affect. Comput. 2025, 16, 1–14.
24. Gao, T.; Yao, X.; Chen, D. SimCSE: Simple Contrastive Learning of Sentence Embeddings. In Proceedings of the Conference on Empirical Methods in Natural Language Processing, Online and Punta Cana, Dominican Republic, 7–11 November 2021; pp. 6894–6910.
25. Krishna, K.; Chang, Y.; Wieting, J.; Iyyer, M. RankGen: Improving Text Generation with Large Ranking Models. In Proceedings of the Conference on Empirical Methods in Natural Language Processing, Abu Dhabi, United Arab Emirates, 7–11 December 2022; pp. 199–232.
26. Pan, X.; Wang, M.; Wu, L.; Li, L. Contrastive Learning for Many-to-many Multilingual Neural Machine Translation. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing, Online, 1–6 August 2021; pp. 244–258.
27. Su, Y.; Lan, T.; Wang, Y.; Yogatama, D.; Kong, L.; Collier, N. A Contrastive Framework for Neural Text Generation. In Proceedings of the 36th Conference on Neural Information Processing Systems, New Orleans, LA, USA, 28 November–9 December 2022.
28. An, C.; Feng, J.; Lv, K.; Kong, L.; Qiu, X.; Huang, X. CoNT: Contrastive Neural Text Generation. In Proceedings of the 36th Conference on Neural Information Processing Systems, New Orleans, LA, USA, 28 November–9 December 2022.
29. Li, W.; Kong, J.; Liao, B.; Cai, Y. Mitigating Contradictions in Dialogue Based on Contrastive Learning. In Proceedings of the Findings of the Association for Computational Linguistics, Dublin, Ireland, 22–27 May 2022; pp. 2781–2788.
30. Dan, Y.; Tian, J.; Zhou, J.; Yan, M.; Zhang, J.; Chen, Q.; He, L. Modeling Comparative Logical Relation with Contrastive Learning for Text Generation. In Proceedings of the 12th China National Conference on Chinese Computational Linguistics, Chengdu, China, 12–16 October 2024; Volume 15362, pp. 107–119.
31. Sen, J.; Pandey, R.; Waghela, H. Context-Enhanced Contrastive Search for Improved LLM Text Generation. arXiv 2025, arXiv:2504.21020.
32. Zhang, R.; Wang, Y.; Yang, Y. Generation-driven Contrastive Self-training for Zero-shot Text Classification with Instruction-following LLM. In Proceedings of the 18th Conference of the European Chapter of the Association for Computational Linguistics, St. Julian’s, Malta, 17–22 March 2024; pp. 659–673.
33. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is All you Need. In Proceedings of the 31st Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 5998–6008.
34. Devlin, J.; Chang, M.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186.
35. Li, X.L.; Liang, P. Prefix-Tuning: Optimizing Continuous Prompts for Generation. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing, Online, 1–6 August 2021; pp. 4582–4597.
36. Ghazvininejad, M.; Karpukhin, V.; Gor, V.; Celikyilmaz, A. Discourse-Aware Soft Prompting for Text Generation. In Proceedings of the Conference on Empirical Methods in Natural Language Processing, Abu Dhabi, United Arab Emirates, 7–11 December 2022; pp. 4570–4589.
37. Hutto, C.J.; Gilbert, E. VADER: A Parsimonious Rule-Based Model for Sentiment Analysis of Social Media Text. In Proceedings of the 18th International AAAI Conference on Weblogs and Social Media, Ann Arbor, MI, USA, 1–4 June 2014; Volume 8, pp. 216–225.
38. Voita, E.; Talbot, D.; Moiseev, F.; Sennrich, R.; Titov, I. Analyzing Multi-Head Self-Attention: Specialized Heads Do the Heavy Lifting, the Rest Can Be Pruned. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; pp. 5797–5808.
39. Tang, H.; Zhao, G.; Wu, Y.; Qian, X. Multi-Sample based Contrastive Loss for Top-k Recommendation. arXiv 2021, arXiv:2109.00217.
40. Zhao, K.; Wu, Q.; Cai, X.; Tsuruoka, Y. Leveraging Multi-lingual Positive Instances in Contrastive Learning to Improve Sentence Embedding. In Proceedings of the 18th Conference of the European Chapter of the Association for Computational Linguistics, St. Julian’s, Malta, 17–22 March 2024; pp. 976–991.
41. Sap, M.; Bras, R.L.; Allaway, E.; Bhagavatula, C.; Lourie, N.; Rashkin, H.; Roof, B.; Smith, N.A.; Choi, Y. ATOMIC: An Atlas of Machine Commonsense for If-Then Reasoning. In Proceedings of the 33rd AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; pp. 3027–3035.
42. Bosselut, A.; Rashkin, H.; Sap, M.; Malaviya, C.; Celikyilmaz, A.; Choi, Y. COMET: Commonsense Transformers for Automatic Knowledge Graph Construction. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; pp. 4762–4779.
43. Lin, Z.; Madotto, A.; Shin, J.; Xu, P.; Fung, P. MoEL: Mixture of Empathetic Listeners. In Proceedings of the Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing, Hong Kong, China, 3–7 November 2019; pp. 121–132.
44. Ling, Y.; Cai, F.; Chen, H.; de Rijke, M. Leveraging Context for Neural Question Generation in Open-domain Dialogue Systems. In Proceedings of the 29th The Web Conference, Taipei, Taiwan, 20–24 April 2020; pp. 2486–2492.
45. Serban, I.V.; Sordoni, A.; Bengio, Y.; Courville, A.C.; Pineau, J. Building End-To-End Dialogue Systems Using Generative Hierarchical Neural Network Models. In Proceedings of the 30th AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016; pp. 3776–3784.
46. Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. In Proceedings of the 3rd International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015.
47. Zhang, J.; Zhang, R.; Xu, L.; Lu, X.; Yu, Y.; Xu, M.; Zhao, H. FasterSal: Robust and Real-Time Single-Stream Architecture for RGB-D Salient Object Detection. IEEE Trans. Multim. 2025, 27, 2477–2488.
48. Pennington, J.; Socher, R.; Manning, C.D. Glove: Global Vectors for Word Representation. In Proceedings of the Conference on Empirical Methods in Natural Language Processing, Doha, Qatar, 25–29 October 2014; pp. 1532–1543.
49. Lin, H.; Feng, S.; Geishauser, C.; Lubis, N.; van Niekerk, C.; Heck, M.; Ruppik, B.M.; Vukovic, R.; Gasic, M. EmoUS: Simulating User Emotions in Task-Oriented Dialogues. In Proceedings of the 46th International ACM SIGIR Conference on Research and Development in Information Retrieval, Taipei, Taiwan, 23–27 July 2023; pp. 2526–2531.
50. Liu, L.; Huang, J.X. Prompt Learning to Mitigate Catastrophic Forgetting in Cross-lingual Transfer for Open-domain Dialogue Generation. In Proceedings of the 46th International ACM SIGIR Conference on Research and Development in Information Retrieval, Taipei, Taiwan, 23–27 July 2023; pp. 2287–2292.
51. Zhao, W.X.; Zhou, K.; Li, J.; Tang, T.; Wang, X.; Hou, Y.; Min, Y.; Zhang, B.; Zhang, J.; Dong, Z.; et al. A Survey of Large Language Models. arXiv 2023, arXiv:2303.18223.
52. Touvron, H.; Lavril, T.; Izacard, G.; Martinet, X.; Lachaux, M.; Lacroix, T.; Rozière, B.; Goyal, N.; Hambro, E.; Azhar, F.; et al. LLaMA: Open and Efficient Foundation Language Models. arXiv 2023, arXiv:2302.13971.
53. Brown, T.B.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language Models are Few-Shot Learners. In Proceedings of the 34th Conference on Neural Information Processing Systems, Virtual, 6–12 December 2020.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).