The following provides a brief introduction to the methods and neural network architectures involved:

5.2. Results and Analysis

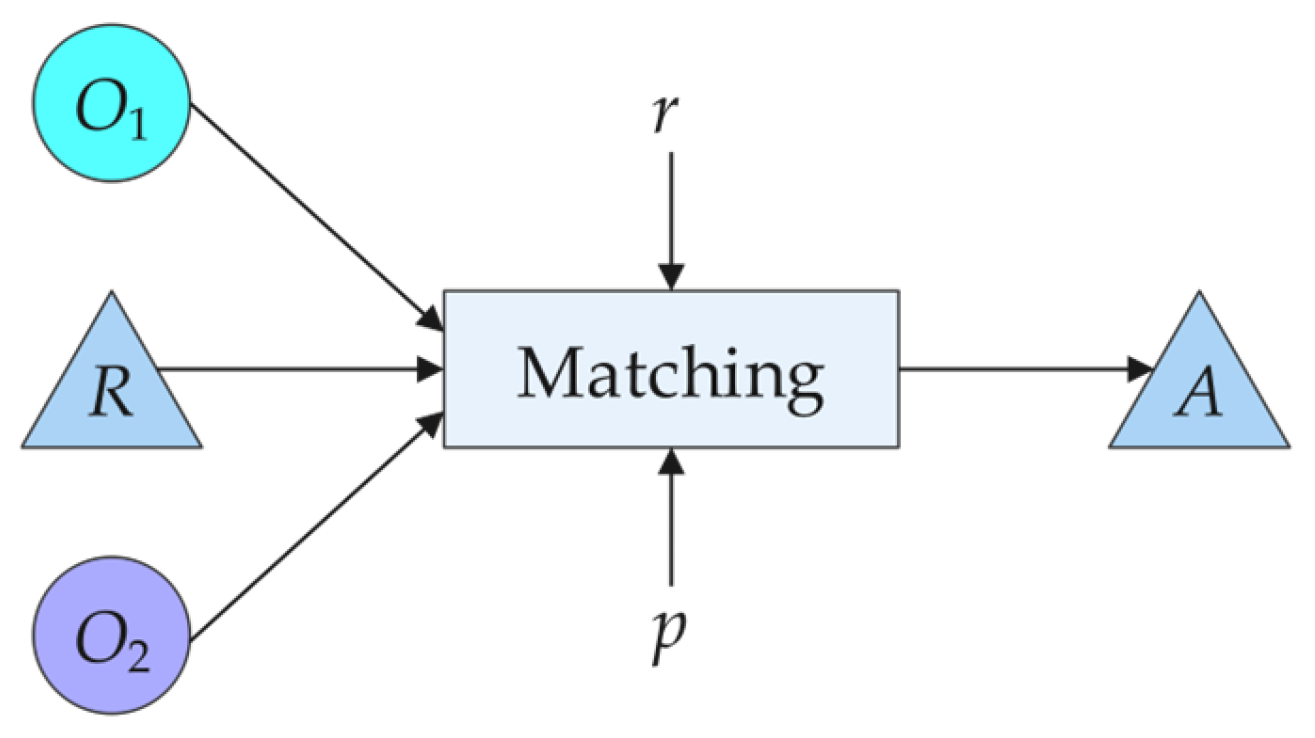

As shown in Table 3, among the 31 test cases, the proposed method achieved equal or higher precision in 139 out of 155 comparisons with the five comparison methods, and was slightly outperformed by AML and Transformer in only 8 cases. This demonstrates that the proposed method achieves more accurate entity alignment in most cases. AML exhibits slightly better precision in some cases because it uses dozens of similarity measurement techniques to enhance matching accuracy. However, the interference among these techniques results in lower recall and increased computational complexity. Similarly, the Transformer-based model leverages multi-head self-attention to capture global semantic dependencies and achieves high precision under a relatively strict matching threshold. However, it exhibits limited sensitivity to local sequential patterns and structural perturbations, such as word order variations or incomplete annotations, due to its weak inductive bias toward positional continuity and token order. As a result, many truly correct entity pairs are missed, leading to a significant decline in recall. In contrast, RNNs possess inherent sequential processing capabilities, making them more suitable for modeling local dependencies and maintaining sensitivity to structural variations in input sequences. Combined with a relatively high matching threshold, this strategy effectively improves the confidence of alignment results. Although the precision of the proposed method is slightly lower than that of some baseline methods, its sequential modeling capability enhances robustness in handling entity pairs with word order variations, structural perturbations, or partial lexical overlap, thereby contributing to improved recall.

In Table 4, the recall of the proposed method is relatively lower in cases with scrambled labels and no comments. This is primarily because such benchmark cases rely heavily on the implicit semantics of entity IDs, which are typically meaningless random strings in real-world scenarios. The proposed method intentionally avoids ID-based alignment and instead focuses on aligning based on meaningful label and comment information. In cases where labels are scrambled and comments are missing, the RNN-based semantic model struggles to extract stable semantic features, and the N-gram character-level method may also be ineffective due to the lack of common character fragments. Nonetheless, the proposed method jointly models both labels and comments to capture semantic relationships more comprehensively, and applies an N-gram strategy to complement the alignment of entities that cannot be matched through semantic similarity alone. As a result, the proposed method demonstrates strong generality in various heterogeneous conditions, such as no hierarchies, missing properties, and altered hierarchies, and achieves an average recall that is 11.69% higher than that of LogMap, the second-best performing method.

Table 5 presents Friedman’s test on the alignment quality in terms of F-measure (computed rank in parentheses). Firstly, from the perspective of F-measure, the proposed method demonstrates consistently stable overall performance across all 31 test cases. In particular, it maintains a high F-measure (ranging from 0.81 to 0.82) even in complex scenarios involving multiple overlapping types of ontology heterogeneity (e.g., Cases 248–262), where the performance of competing methods tends to fluctuate significantly, and in some cases, their F-measure drops to 0. Furthermore, from the comparative results across different types of heterogeneous scenarios, the proposed method achieves the highest ranking in three task categories: terminological heterogeneity (Cases 201–202), structural heterogeneity (Cases 221–247), and complex heterogeneity involving both terminological and structural differences (Cases 248–262), with F-measure improvements of 6.5%, 19.88%, and 17.87% over the second-best method, respectively. In terminological heterogeneity scenarios, explicit modeling of labels and comments, combined with pre-trained word embeddings, effectively enhances the perception of semantic differences. In structural heterogeneity scenarios, the contextual modeling capability of RNNs effectively captures hierarchical relationships between entities. In composite heterogeneity scenarios, the synergy of semantic modeling and character-level N-gram matching ensures semantic accuracy while addressing shallow differences such as spelling and abbreviations, thereby comprehensively improving matching performance. Overall, the proposed method achieves the highest average F-measure, outperforming the second-best method by 18.18%, which strongly validates not only its robustness and generality but also highlights its potential as a reliable solution for ontology alignment tasks across diverse and complex domains.
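For reference, the three quality measures used throughout this section can be computed from a produced alignment and a reference alignment as in the minimal sketch below. The function name and the toy entity identifiers are illustrative assumptions and do not come from the benchmark.

```python
def alignment_quality(found, reference):
    """Return (precision, recall, f_measure) for two collections of entity pairs."""
    found, reference = set(found), set(reference)
    correct = len(found & reference)              # pairs that also occur in the reference
    precision = correct / len(found) if found else 0.0
    recall = correct / len(reference) if reference else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0             # no correct pair: F-measure is 0
    f_measure = 2 * precision * recall / (precision + recall)
    return precision, recall, f_measure


# Toy alignments (hypothetical identifiers).
reference = {("a1", "b1"), ("a2", "b2"), ("a3", "b3"), ("a4", "b4")}
found = {("a1", "b1"), ("a2", "b2"), ("a3", "b9")}
precision, recall, f_measure = alignment_quality(found, reference)
```

In this toy case, two of the three produced pairs are correct and two of the four reference pairs are recovered, so precision is 2/3 and recall is 1/2. A method that returns no correct pair receives an F-measure of 0, which is the situation described above for some competing methods.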

Secondly, Friedman’s test [44] was applied to evaluate the alignment quality in terms of F-measure, aiming to determine whether significant differences exist among the participating methods. Specifically, under the null hypothesis, Friedman’s test assumes that all evaluated methods exhibit equivalent performance. To reject the null hypothesis, indicating statistically significant performance differences, the computed statistic $\chi_r^2$ must meet or exceed the critical value from the reference chi-square distribution table. This study adopts a significance level of $\alpha = 0.05$, and with six participating methods in the matching tasks, the degrees of freedom correspond to 5, with the critical chi-square value being $\chi_{0.05}^2 = 11.07$. As indicated by the mean ranks in the last row of Table 5, the proposed method achieves an average rank of 1.74 across the 31 test cases, lower than that of every other method, demonstrating its superior average performance. Across the entire set of tasks, Friedman’s test statistic $\chi_r^2$ substantially exceeds the critical value of 11.07, providing strong evidence to reject the null hypothesis of no significant performance differences among methods.
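The decision rule above can be illustrated with a minimal sketch that ranks the methods per test case and computes the Friedman statistic from the mean ranks. The scores below are made-up toy values rather than the entries of Table 5, the function names are hypothetical, and the usual correction of the statistic for ties is omitted.

```python
def average_ranks(scores):
    """Rank one test case (higher score is better, rank 1 is best); ties share the mean rank."""
    order = sorted(range(len(scores)), key=lambda j: -scores[j])
    ranks = [0.0] * len(scores)
    pos = 0
    while pos < len(order):
        end = pos
        while end + 1 < len(order) and scores[order[end + 1]] == scores[order[pos]]:
            end += 1
        shared = (pos + end) / 2 + 1              # mean of the 1-based positions pos+1..end+1
        for j in order[pos:end + 1]:
            ranks[j] = shared
        pos = end + 1
    return ranks


def friedman_statistic(score_rows):
    """Return (statistic, mean_ranks) for rows of per-case scores, one column per method."""
    n = len(score_rows)                            # number of test cases
    k = len(score_rows[0])                         # number of methods
    rank_rows = [average_ranks(row) for row in score_rows]
    mean_ranks = [sum(row[j] for row in rank_rows) / n for j in range(k)]
    spread = sum(r * r for r in mean_ranks) - k * (k + 1) ** 2 / 4
    return 12 * n / (k * (k + 1)) * spread, mean_ranks


# Toy F-measures: 3 test cases, 6 methods, identical ordering in every case.
toy_scores = [
    [0.95, 0.90, 0.85, 0.80, 0.75, 0.70],
    [0.91, 0.88, 0.80, 0.79, 0.60, 0.50],
    [0.99, 0.70, 0.65, 0.60, 0.55, 0.10],
]
CRITICAL_VALUE = 11.07                             # chi-square, 5 degrees of freedom, alpha = 0.05
statistic, mean_ranks = friedman_statistic(toy_scores)
reject_null = statistic >= CRITICAL_VALUE
```

With six methods ordered identically in all three toy cases, the statistic is about 15, which exceeds 11.07, so the null hypothesis would be rejected for this toy data.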

Based on the Friedman test results indicating significant performance differences among the evaluated methods, this study further applies the Holm test [45] to assess whether the differences in alignment quality between each of the compared methods and the proposed method are statistically significant. Given that the proposed method achieves the lowest average rank and thus demonstrates the best overall performance, using it as the reference method for multiple comparisons is statistically justified. The Holm test proceeds as follows. First, the $z$-values are calculated to represent the ranking differences between each of the compared methods and the reference method. Then, based on the standard normal distribution, these $z$-values are used to derive the unadjusted $p$-values. Next, these $p$-values are compared against the adjusted significance thresholds under the significance level $\alpha = 0.05$, computed as $\alpha / (m - i + 1)$, where $m$ is the number of methods compared with the reference method and $i$ denotes the rank position of the unadjusted $p$-value among all comparisons when sorted in ascending order. This allows us to determine whether the observed performance differences are statistically significant relative to the proposed method.
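The steps above can be sketched as follows. The sketch assumes the commonly used standard error $\sqrt{k(k+1)/(6N)}$ for a difference of mean ranks (with $k$ methods and $N$ test cases) and a two-sided $p$-value. Neither choice is confirmed by the text, the function name is hypothetical, and the mean ranks are toy values rather than those of Table 5.

```python
import math


def holm_against_control(mean_ranks, control, n_cases, alpha=0.05):
    """Compare every method with `control` via z-scores and Holm's step-down thresholds."""
    k = len(mean_ranks)                                 # all methods, including the control
    std_err = math.sqrt(k * (k + 1) / (6 * n_cases))    # assumed standard error of a rank difference
    rows = []
    for name, rank in mean_ranks.items():
        if name == control:
            continue
        z = (rank - mean_ranks[control]) / std_err
        p = math.erfc(abs(z) / math.sqrt(2))            # two-sided p-value (assumption)
        rows.append({"method": name, "z": z, "p": p})
    rows.sort(key=lambda row: row["p"])                 # ascending unadjusted p-values
    m = len(rows)
    rejecting = True
    for i, row in enumerate(rows, start=1):
        row["threshold"] = alpha / (m - i + 1)
        # Step-down rule: once one comparison fails, all later ones are retained.
        rejecting = rejecting and row["p"] <= row["threshold"]
        row["reject"] = rejecting
    return rows


# Toy mean ranks for six methods over 31 test cases (illustrative only).
toy_ranks = {"Proposed": 1.5, "A": 3.0, "B": 3.5, "C": 4.0, "D": 4.5, "E": 4.5}
report = holm_against_control(toy_ranks, control="Proposed", n_cases=31)
```

With five comparisons, the thresholds run from 0.01 for the smallest $p$-value to 0.05 for the largest, so the method closest in rank to the reference method is tested against the full significance level.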

Table 6 presents the statistical analysis results of the Holm test. The results show that the proposed method is statistically superior to all other ontology matching methods. All comparison methods yield $p$-values that are lower than their corresponding Holm-adjusted significance thresholds, indicating that these methods are statistically different from the proposed method in terms of alignment performance. For instance, LogMap, which has the lowest $z$-value among the baselines, yields a $p$-value of 0.0156, which is below its threshold of 0.05. Similarly, the other methods, namely AML, LogMapBio, Transformer, and PhenoMF, produce extremely low unadjusted $p$-values, all well below their corresponding Holm-adjusted thresholds. These small $p$-values confirm that the differences in alignment quality between the proposed method and the compared methods are statistically significant at the 5% level. The consistency of these results across the compared methods reinforces the statistical advantage of the proposed method, thereby validating the effectiveness of its design for the ontology matching task.

To comprehensively evaluate the practical usability and computational cost of each ontology matching method, a comparative analysis of runtime across all benchmark cases was conducted, as presented in Table 7.

As shown in Table 7, the proposed method achieves the highest F-measure while requiring only 116 s of execution time, which is lower than all other methods, demonstrating its superior runtime efficiency. Furthermore, to jointly evaluate the matching quality and processing speed of each method, we adopt an efficiency-effectiveness trade-off metric, namely the F-measure per second. As shown in the fourth column of the table, the proposed method achieves a score of 0.0078, which is approximately 1.2 times that of AML (0.0064) and 2.3 times that of LogMap (0.0034), and considerably higher than those of LogMapBio, PhenoMF, and Transformer. These results indicate that the proposed method not only achieves high alignment accuracy but also maintains strong practicality and scalability, making it well-suited for efficient matching in large-scale ontology matching tasks.
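As a small worked check, the trade-off metric is simply the F-measure divided by the runtime in seconds, and the ratios quoted above follow directly from the reported scores. The function name is a hypothetical helper, and only the three scores stated in the text are used.

```python
def f_measure_per_second(f_measure, runtime_seconds):
    """Efficiency-effectiveness trade-off: alignment quality per second of runtime."""
    if runtime_seconds <= 0:
        raise ValueError("runtime must be positive")
    return f_measure / runtime_seconds


# F-measure-per-second scores as reported in the text.
reported_scores = {"Proposed": 0.0078, "AML": 0.0064, "LogMap": 0.0034}
ratios = {
    name: reported_scores["Proposed"] / score
    for name, score in reported_scores.items()
    if name != "Proposed"
}
```

Rounded to one decimal place, the ratios are 1.2 for AML and 2.3 for LogMap.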

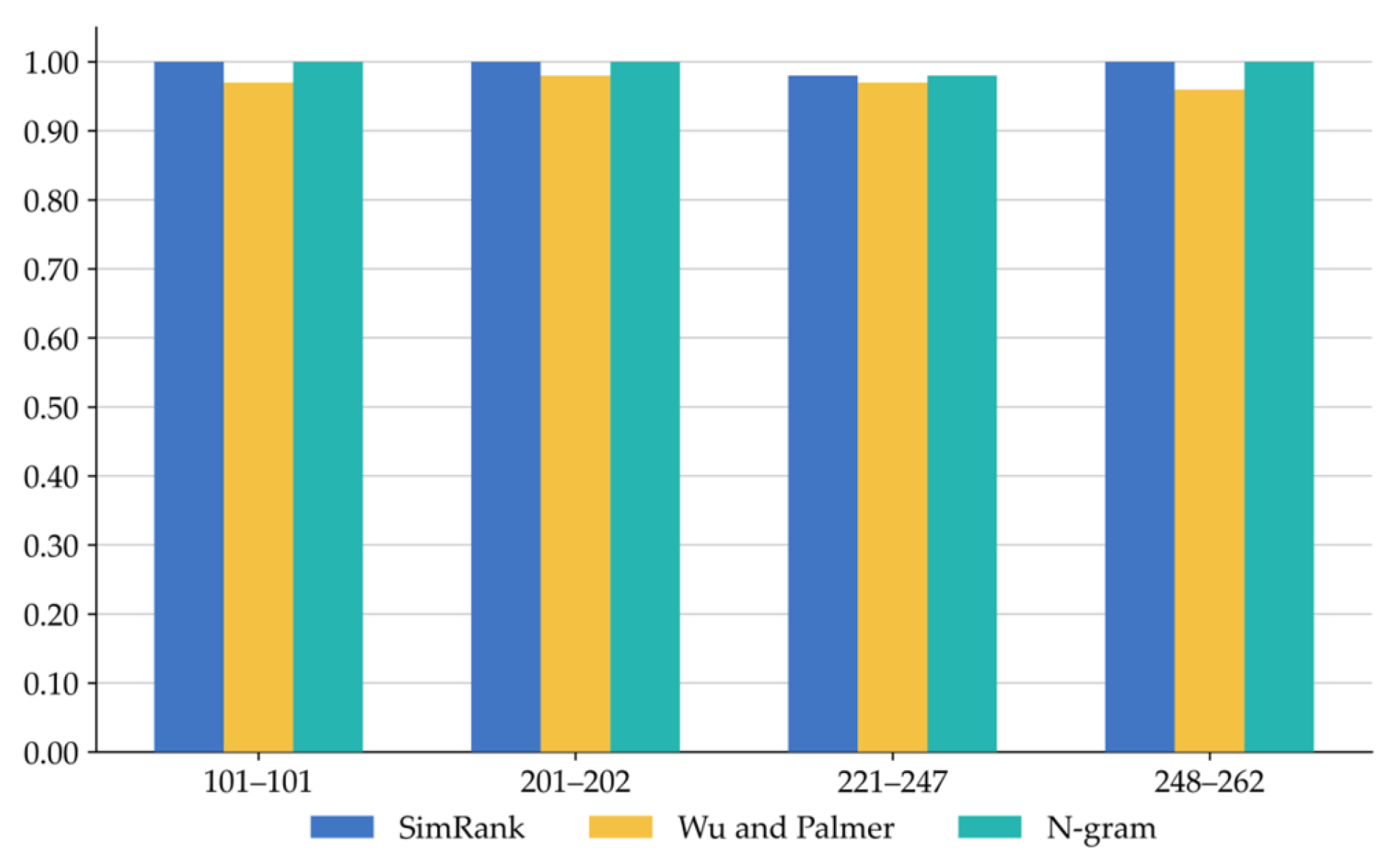

Figure 5, Figure 6 and Figure 7 present a comparison of the RNN-based hybrid ontology matching methods across four types of heterogeneity cases in the benchmark, in terms of precision, recall, and F-measure, respectively. As shown in Figure 5, all three methods perform well under a high filtering threshold. However, since some of the relevant words for the entities are missing in WordNet, Wu-Palmer fails to provide effective similarity measurements, resulting in lower rankings across all four types of cases. Additionally, in the case of semantically ambiguous entities, all the methods often fail to achieve precise alignment. For example, according to the reference alignment, the source entity (ID: Conference, label: The location of an event, comment: An event presenting work.) is matched to the target entity (ID: Conference, label: Conference, comment: A scientific conference). However, because our method performs sequential matching within the similarity matrix, it first matches the source entity to another target entity (ID: ScientificMeeting, label: Scientific meeting, comment: An event presenting work). Although the newly identified entity pair shares an identical comment, and the target entity’s comment in the reference alignment is semantically close to the label of the newly identified target entity, the reference alignment does not include this newly identified entity pair. This makes it difficult to identify the matched pairs in the reference alignment under the sequential matching setup.

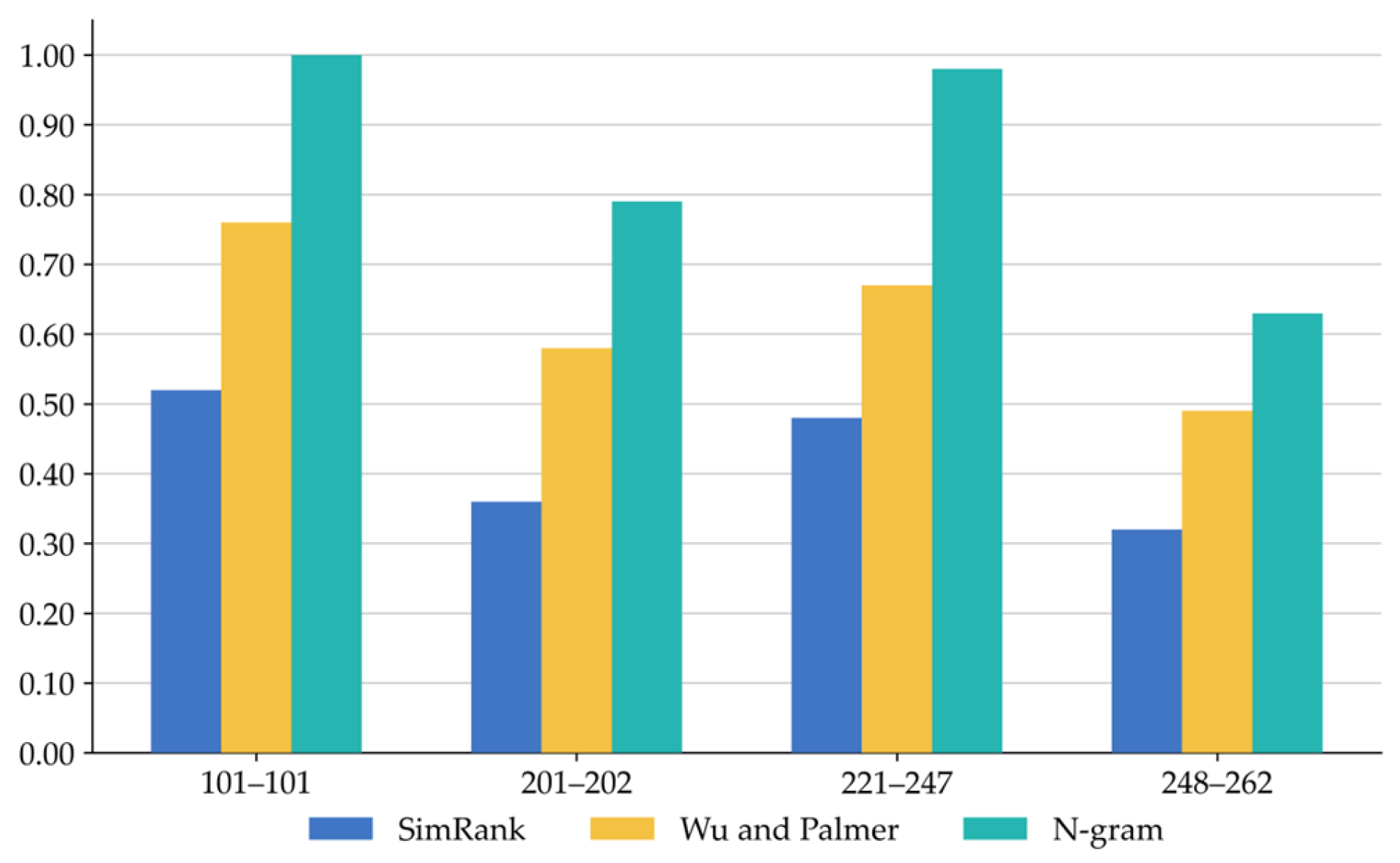

Figure 6 shows the comparison of recall. Due to the lack of neighboring entities for data properties and object properties in the ontologies, SimRank is unable to compute similarity values for them. In contrast, our method fully leverages the co-occurrence probability of common substrings in the entity pairs to capture the surface-level feature associations between sequence fragment information, thereby maximizing the discovery of potential alignments and improving recall.
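The exact co-occurrence measure used by the N-gram component is not reproduced here. As a minimal sketch of the underlying idea, the example below assumes character trigrams compared with the Dice coefficient. The function names, the window size of 3, and the lowercasing step are all illustrative assumptions.

```python
def char_ngrams(text, n=3):
    """Return the set of character n-grams of `text` (lowercased, surrounding spaces removed)."""
    text = text.lower().strip()
    if len(text) < n:
        return {text} if text else set()          # short strings count as a single fragment
    return {text[i:i + n] for i in range(len(text) - n + 1)}


def ngram_similarity(a, b, n=3):
    """Dice coefficient over shared character n-grams, in the range [0, 1]."""
    grams_a, grams_b = char_ngrams(a, n), char_ngrams(b, n)
    if not grams_a or not grams_b:
        return 0.0
    return 2 * len(grams_a & grams_b) / (len(grams_a) + len(grams_b))


near_match = ngram_similarity("Conference", "Conferences")   # shares 8 of 8 and 9 trigrams
no_match = ngram_similarity("abc", "xyz")                     # no common trigram
```

Because such a measure needs only the two strings themselves, it remains applicable to data properties and object properties that have no neighboring entities, which is where a structure-based measure such as SimRank has nothing to work with.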

Figure 7 presents the F-measure values of the three hybrid methods across the four dataset types. The proposed method achieves the best matching results on each case set, which reflects the effectiveness and generality of the method. Taken together with Figure 5 and Figure 6, the superior F-measure of our method is mainly attributed to its high recall, which is enabled by the N-gram’s ability to identify as many entities as possible based on character-level information, while the high filtering threshold ensures precision, resulting in an overall boost in F-measure. Compared to the SimRank and Wu-Palmer hybrid methods, the proposed method improves the F-measure by 18.46%. This further indicates that syntax-based similarity measurement methods are more effective than those based on structure and semantics in handling surface-level features, thereby improving the quality of the matching results.

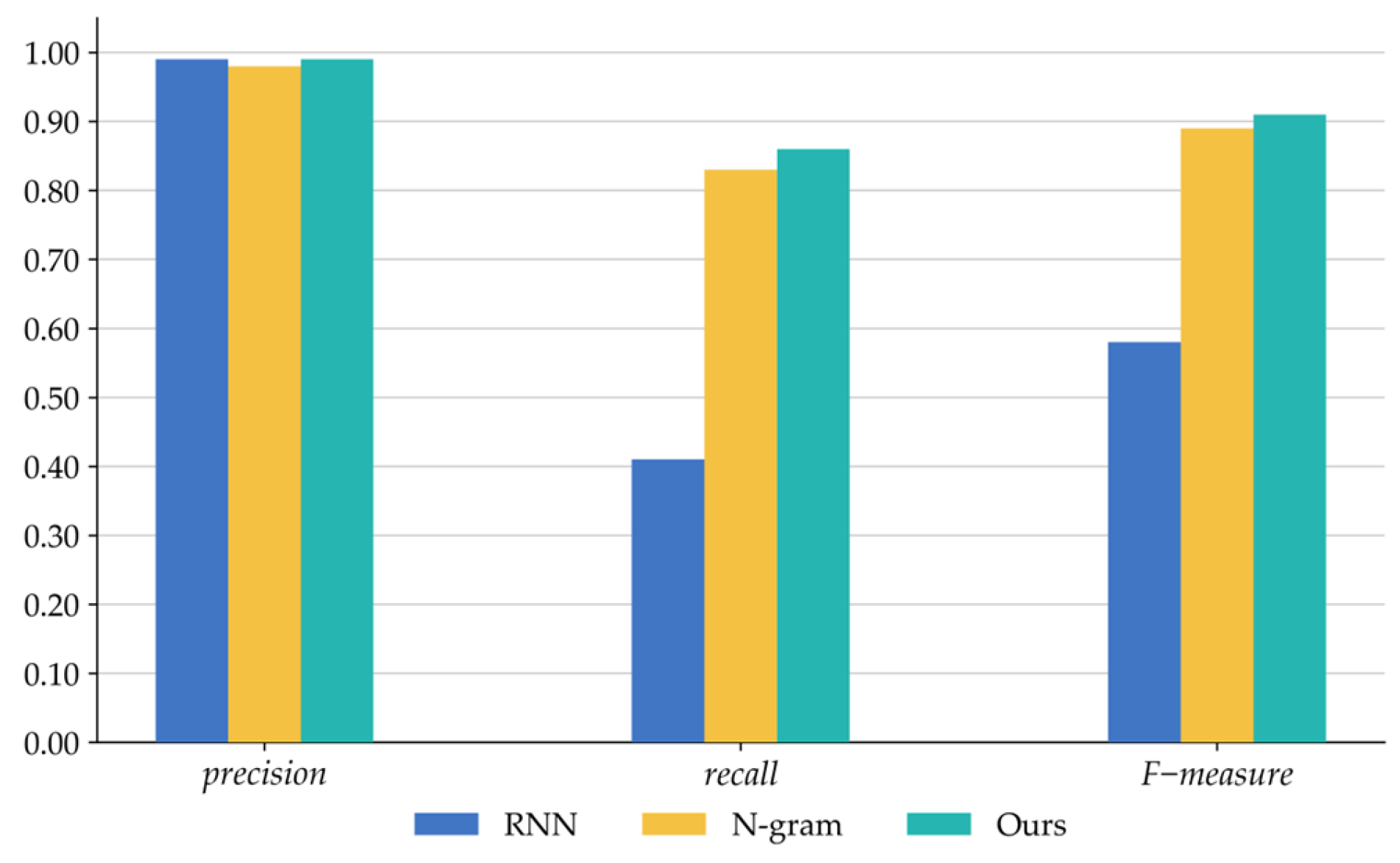

As shown in Figure 8, the ablation results indicate that the RNN achieves higher precision, while the N-gram effectively improves recall. These advantages stem from their respective strengths: the RNN leverages its capacity to model complex semantic dependencies in sequential data, enabling the capture of deeper semantic relationships between entities and thereby enhancing precision; in contrast, the N-gram excels at detecting local character patterns within entity texts, allowing identification of additional potential matches and a significant increase in recall. In comparison, the proposed hybrid method maintains a high precision while improving recall, resulting in the optimal overall F-measure. These findings demonstrate that combining sequence modeling with syntactic features facilitates more accurate and comprehensive ontology matching.
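The division of labor observed in the ablation can be illustrated with a minimal two-stage sketch: pairs are first accepted from semantic scores under a high threshold, and only the residual unmatched entities are passed to a surface-level measure. The function name, the greedy one-to-one selection, both threshold values, and the toy scores are assumptions made for illustration. The two similarity functions are placeholders for the RNN-based and N-gram-based measures.

```python
def hybrid_match(sources, targets, semantic_sim, surface_sim,
                 semantic_threshold=0.9, surface_threshold=0.8):
    """Match by semantic scores first, then by surface scores on the unmatched residue."""
    matches = []
    free_sources, free_targets = list(sources), list(targets)
    stages = (
        (semantic_sim, semantic_threshold, "semantic"),
        (surface_sim, surface_threshold, "surface"),
    )
    for sim, threshold, stage in stages:
        # Score every remaining pair and visit candidates from best to worst.
        candidates = sorted(
            ((sim(s, t), s, t) for s in free_sources for t in free_targets),
            reverse=True,
        )
        for score, s, t in candidates:
            if score < threshold:
                break                              # all remaining candidates are below the threshold
            if s in free_sources and t in free_targets:
                matches.append((s, t, stage))
                free_sources.remove(s)
                free_targets.remove(t)
    return matches


# Toy similarity tables standing in for the two measures (hypothetical values).
semantic_scores = {("Paper", "Article"): 0.95, ("Author", "Writer"): 0.93}
surface_scores = {("ConfMember", "Conf_Member"): 0.86}
result = hybrid_match(
    ["Paper", "Author", "ConfMember"],
    ["Article", "Writer", "Conf_Member"],
    lambda s, t: semantic_scores.get((s, t), 0.0),
    lambda s, t: surface_scores.get((s, t), 0.0),
)
```

In this toy run the semantic stage alone yields two pairs, and the surface stage recovers a third pair that the semantic scores miss, which mirrors the recall gain attributed to the N-gram component.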

Furthermore, to provide an in-depth assessment of the computational efficiency of the standalone RNN model and the proposed method (RNN + N-gram), two commonly used variants of RNN, namely LSTM and GRU, are included in the comparison. Both their standalone configurations and their integrations with the N-gram module are analyzed in terms of time and space complexity. This results in a total of six methods being evaluated: RNN, LSTM, GRU, RNN with N-gram, LSTM with N-gram, and GRU with N-gram. In addition, the metric F-measure per second is computed for all methods to jointly reflect their performance in alignment quality and computational efficiency. A summary of the comparative results is provided in Table 8.

As shown in Table 8, when processing a total of $T$ entity pairs, the RNN model’s primary computational cost stems from sequence embedding and sequence modeling. For each pair of entities, two textual fields (i.e., label and comment) are processed independently. Given that the maximum input sequence length is $L$, the embedding dimension is $d$, and the hidden representation dimension is $h$, the overall time complexity of the RNN model can be expressed as $O(T \cdot L \cdot (d \cdot h + h^2))$, where $d \cdot h$ accounts for input-to-hidden transformations and $h^2$ corresponds to hidden-to-hidden recurrent operations. In the proposed method, based on the RNN matching results, an additional N-gram comparison is introduced to measure the similarity of residual unmatched entity pairs. The time complexity of this step is governed by $G$, $l$, and $k$, where $G$ denotes the number of residual unmatched entity pairs, $l$ is the average character length of each textual field (label or comment), and $k$ is the window size used in the N-gram process. Since this module is applied only to a relatively small number of unmatched pairs (i.e., $G < T$), the additional time cost remains limited. This is further supported by the runtime comparison shown in the table, where the proposed method introduces only a 3 s increase in runtime, indicating that the computational overhead is relatively low and confirming a favorable trade-off between performance and efficiency.

Similarly, for the standalone LSTM model, each time step involves four computational submodules: the input gate, forget gate, output gate, and candidate state update. Although, strictly speaking, only three of them are gating mechanisms, all four submodules involve independent matrix multiplication operations. As a result, each time step requires four matrix multiplications, making the overall time complexity approximately four times that of the RNN, i.e., $O(4 \cdot T \cdot L \cdot (d \cdot h + h^2))$. Building on this, the LSTM + N-gram method incorporates an additional character-level N-gram module to further match residual unmatched entity pairs. The time complexity of this additional step is the same as that of the N-gram step in the RNN + N-gram method. Although this module introduces additional computational overhead, its impact remains limited since it also only operates on a relatively small subset of unmatched pairs (i.e., $G < T$). For the standalone GRU model, each time step consists of the update gate, reset gate, and candidate hidden state computation, which correspond to three matrix multiplications. Therefore, the time complexity of GRU is approximately three times that of the RNN, i.e., $O(3 \cdot T \cdot L \cdot (d \cdot h + h^2))$. Similarly, the GRU + N-gram method extends the GRU-based model with the same N-gram module, resulting in a total time complexity equal to that of the GRU plus the cost of the N-gram step.
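The relative costs described above can be made concrete with a rough operation count. The sketch below counts one input-to-hidden and one hidden-to-hidden product per submodule and per time step, and ignores embedding lookups, biases, and element-wise operations. The function name, the default of four sequences per pair (two entities, each with a label and a comment), and all numeric settings are illustrative assumptions.

```python
# Number of matrix-multiplying submodules per time step, as described in the text.
SUBMODULES = {"RNN": 1, "LSTM": 4, "GRU": 3}


def recurrent_macs(model, pairs, max_len, embed_dim, hidden_dim, sequences_per_pair=4):
    """Rough multiply-accumulate count for encoding all entity pairs with one model."""
    per_step = SUBMODULES[model] * (embed_dim * hidden_dim + hidden_dim * hidden_dim)
    return pairs * sequences_per_pair * max_len * per_step


# Hypothetical settings: 1000 pairs, sequences of up to 20 tokens, d = 300, h = 128.
settings = dict(pairs=1000, max_len=20, embed_dim=300, hidden_dim=128)
costs = {model: recurrent_macs(model, **settings) for model in SUBMODULES}
lstm_ratio = costs["LSTM"] / costs["RNN"]
gru_ratio = costs["GRU"] / costs["RNN"]
```

Under these assumptions the LSTM and GRU counts are exactly four and three times the RNN count, which is the ordering the complexity analysis predicts for runtime.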

In terms of space complexity, the RNN model primarily involves the storage of input sequences, the embedding matrix, and model parameters. The total space complexity is therefore the sum of three terms: the memory used for storing the input sequences; $V \cdot d$ for the embedding matrix, where $V$ denotes the vocabulary size; and $d \cdot h + h^2$ for the weight parameters in the RNN cell, covering input-to-hidden and hidden-to-hidden connections. For the proposed method, the additional space cost mainly arises from storing the unmatched entity pairs and their temporary processing results, which is approximately $O(G)$. However, since the N-gram matching process is conducted in a pairwise manner and does not require the construction or storage of large-scale matrices, this additional memory overhead is minimal and does not significantly increase the overall memory consumption. In summary, while the proposed hybrid method introduces a slight increase in computational complexity, it substantially improves the recall and overall performance of ontology alignment, demonstrating a favorable balance between effectiveness and resource cost.

In comparison, for the standalone LSTM model, the space complexity increases due to the additional parameters required by its four submodules: the input gate, forget gate, output gate, and candidate state update. Each of these submodules requires distinct sets of weight matrices for input and recurrent connections, along with corresponding biases. As a result, the parameter term of the overall space complexity becomes $4(d \cdot h + h^2)$, approximately four times the parameter-related memory of the RNN model. In the LSTM + N-gram configuration, the added N-gram component incurs a temporary memory cost of approximately $O(G)$, similar to the RNN + N-gram setting. However, since the matching is restricted to a small number of unmatched entity pairs and no large-scale matrices are constructed, the memory overhead remains minor. For the GRU model, which combines the reset gate, update gate, and candidate hidden state into a more compact structure, the space complexity is moderately reduced compared to LSTM. Specifically, it requires three sets of input and recurrent weight matrices and biases, yielding a parameter term of $3(d \cdot h + h^2)$ in the total space complexity. As with the LSTM + N-gram, the GRU + N-gram method introduces an additional $O(G)$ memory cost due to the character-level N-gram computation.
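The parameter-related part of this comparison can be checked by counting cell parameters directly. The sketch below assumes one input weight matrix, one recurrent weight matrix, and one bias vector per submodule. Implementations differ in how biases are stored, so the exact figures are indicative only, and the function name and dimensions are hypothetical.

```python
# Submodules that each own an input matrix, a recurrent matrix, and a bias vector.
SUBMODULES = {"RNN": 1, "LSTM": 4, "GRU": 3}


def cell_parameters(model, embed_dim, hidden_dim):
    """Parameter count of a single recurrent cell (one bias vector per submodule assumed)."""
    per_submodule = embed_dim * hidden_dim + hidden_dim * hidden_dim + hidden_dim
    return SUBMODULES[model] * per_submodule


def embedding_parameters(vocab_size, embed_dim):
    """Size of the embedding matrix shared by all three models."""
    return vocab_size * embed_dim


# Hypothetical dimensions: d = 300, h = 128.
counts = {model: cell_parameters(model, 300, 128) for model in SUBMODULES}
```

For these dimensions the counts are 54,912 for the RNN, 219,648 for the LSTM, and 164,736 for the GRU. The embedding matrix is identical across the three models, so it does not affect their relative memory cost.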

As shown in the fourth column of Table 8, the F-measure comparison on the benchmark dataset demonstrates that the RNN model outperforms its two variants, LSTM and GRU, in terms of alignment quality. This indicates that RNN is more effective at capturing medium-range semantic dependencies, thereby enhancing matching performance. The fifth column presents the runtime comparison, where the RNN model exhibits significantly lower processing time than LSTM and GRU, which corroborates the above analysis of time complexity and further confirms its computational efficiency during the matching process. A closer examination of the runtime of the three hybrid methods reveals that the additional time cost introduced by the N-gram module is approximately 3 to 4 s across all variants, suggesting that the primary runtime differences are attributable to the complexity of the underlying neural models. This further supports the conclusion that RNN can efficiently model semantic features with lower computational overhead. The final column reports the F-measure per second metric, which jointly reflects the effectiveness and efficiency of each method. The proposed method achieves a 39.29% improvement over the second-best hybrid variant (i.e., LSTM + N-gram), indicating that it not only maintains high alignment quality but also offers superior computational efficiency, making it highly practical and scalable for ontology matching tasks.