The Importance of AI Data Governance in Large Language Models

Abstract

1. Introduction

1.1. How Are Data Crucial to Build LLM Performance?

- In the absence of strong data governance, a main issue in LLMs is “hallucination” when the model generates an output response to an input query.

- Another vital issue is “data misuse”, which leads to ethical violations when data usage policies are unclear or data are used without authorization.

- “Bias in data” is a major concern because it leads to biased behavior in LLMs.

- The lack of a data governance framework leads to “data breaches and weak data security”, which increases the risk of adversarial attacks (backdoor attacks, data poisoning attacks, model inversion attacks, transfer-based black-box attacks, etc.).

- The lack of data governance frameworks also raises “ethical implications and legal concerns” for LLMs.

- LLMs can fail when deployed in a production pipeline; preventing such failures requires a strong LLMOps pipeline supported by a solid data governance approach.

1.2. Addressing Data Misuse, Biases, and Ethical Challenges in the Digital Era of LLMs

1.3. Problem Statement

1.4. Objectives of the Survey

- One paper proposed an AI data governance framework in the context of LLMs to improve the detection of suspicious transactions (money laundering and anomaly detection in financial transactions) [76].

- Another work describes an AI-driven intelligent data framework that substantially improves operational efficiency, compliance accuracy, and data integrity for future AI-based work [77].

- One study critiques model-focused policies and argues for centering AI governance in LLMs on data, which it considers more effective for model performance [78].

- One study recommends a robust data governance framework for AI-enabled healthcare systems to address ethical challenges and privacy concerns and thereby build trust among users of healthcare services [79].

- AI data governance frameworks can automate data quality management in the banking sector to improve model performance [80].

- Applying data-centric governance throughout the model learning lifecycle supports the deployment of AI systems: it reduces the risk of deployment failure, shortens the deployment process, and improves solution design [81].

- Integrating an AI-driven data governance framework into a banking system makes data more accurate, reliable, and secure, which creates trust and accountability in the financial sector [82].

- As AI rapidly becomes part of daily life, automation is reducing many manual tasks. Trust in AI systems is therefore needed, and it can be addressed through co-governance techniques such as regulation, standards, and principles. The use of data governance frameworks also improves AI maturity [83].
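To make the idea of automated data-quality management in the list above more concrete, the following is a minimal sketch of a quality gate that counts records with missing required fields and exact duplicate records. It is an illustration only and is not taken from any of the cited frameworks; the names `QualityReport` and `check_quality`, the field names `id` and `amount`, and the sample records are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class QualityReport:
    """Summary of a simple quality check (hypothetical structure)."""
    total: int
    missing: int
    duplicates: int

    @property
    def passed(self) -> bool:
        # The gate passes only if no record is incomplete or duplicated.
        return self.missing == 0 and self.duplicates == 0


def check_quality(records, required_fields):
    """Count records with missing required fields and exact duplicates."""
    seen = set()
    missing = 0
    duplicates = 0
    for record in records:
        # A field counts as missing if it is absent, None, or an empty string.
        if any(record.get(field) in (None, "") for field in required_fields):
            missing += 1
        # Two records are duplicates if all key/value pairs are identical.
        key = tuple(sorted(record.items()))
        if key in seen:
            duplicates += 1
        else:
            seen.add(key)
    return QualityReport(total=len(records), missing=missing, duplicates=duplicates)


# Hypothetical transaction records used only for illustration.
records = [
    {"id": "t1", "amount": 10.0},
    {"id": "t1", "amount": 10.0},   # exact duplicate of the first record
    {"id": "t2", "amount": None},   # missing amount
]
report = check_quality(records, ["id", "amount"])
print(report, report.passed)
```

In a real pipeline, a report of this kind would typically be logged and used to block or flag a dataset before training or fine-tuning; the thresholds and checks would depend on the governance policy in force.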

2. Foundations of AI Data Governance

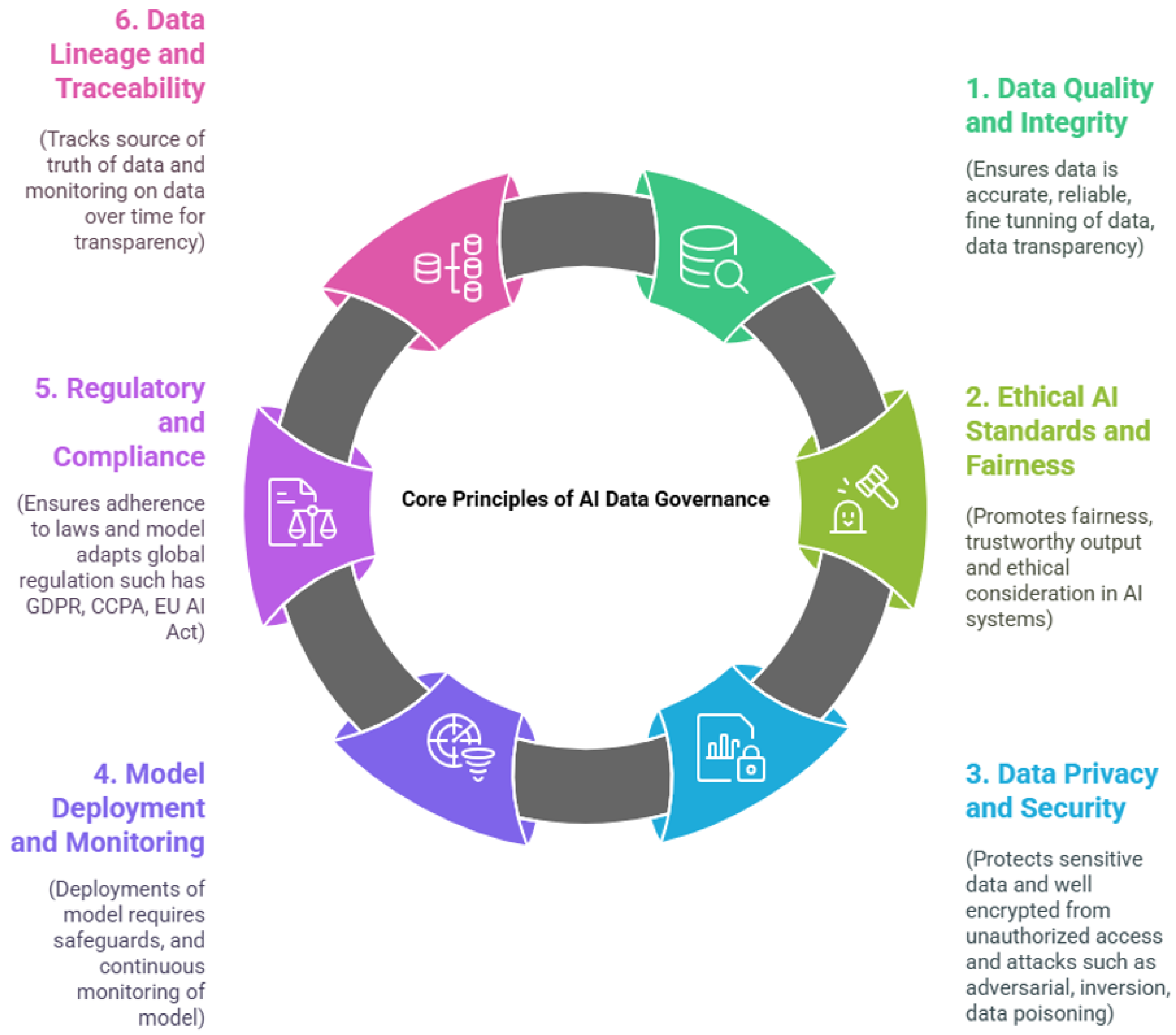

2.1. Core Principles of AI Data Governance Relevance to LLMs

2.2. Core Principles of AI Data Governance

2.2.1. Data Quality and Integrity

2.2.2. Ethical AI Standards and Fairness

2.2.3. Data Privacy and Security

2.2.4. Model Deployment and Monitoring

2.2.5. Regulatory and Compliance

2.2.6. Data Lineage and Traceability

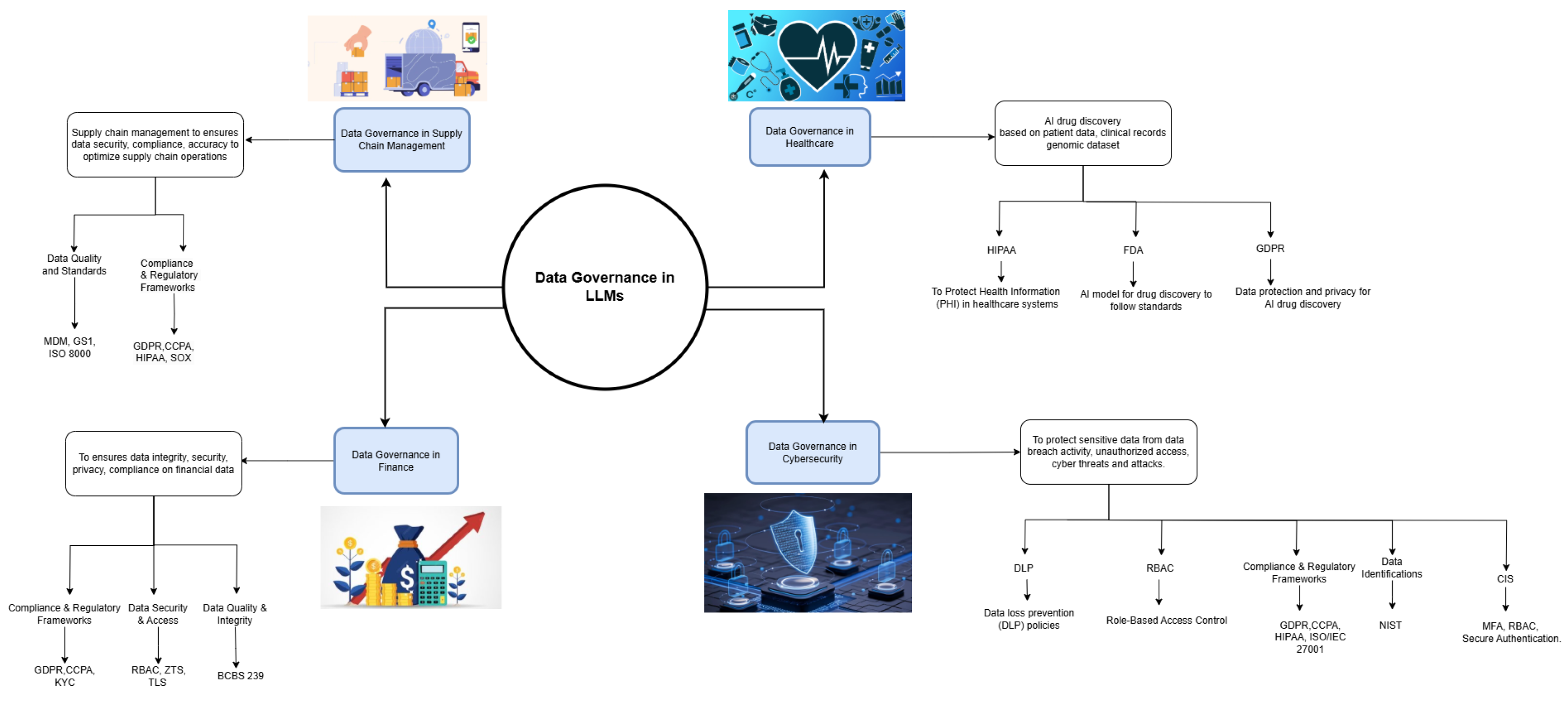

3. Use of AI Data Governance in Various Domains

3.1. AI Data Governance in Supply Chain Management

3.2. AI Data Governance in Healthcare

3.3. AI Data Governance in Cybersecurity

3.4. AI Data Governance in Finance

3.5. Domain-Specific Strategies and Challenges in LLM Data Governance

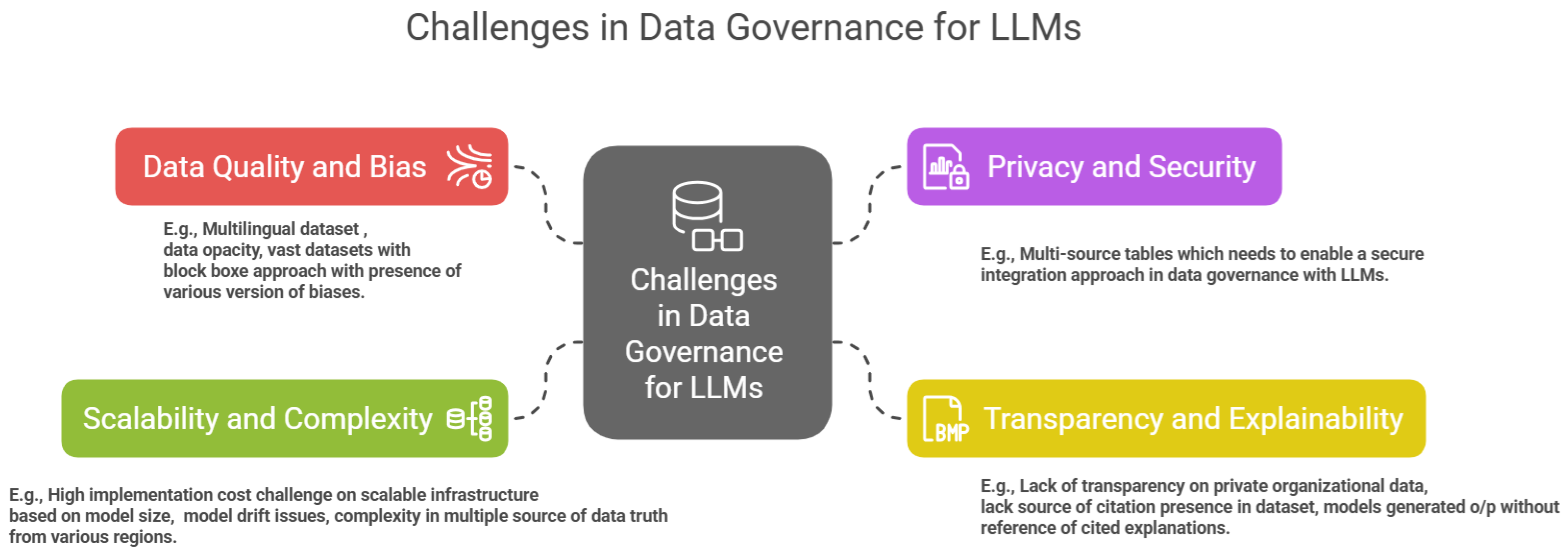

4. Challenges in Data Governance for LLMs

4.1. Data Quality and Bias

4.2. Privacy and Security

4.3. Transparency and Explainability

4.4. Scalability and Complexity

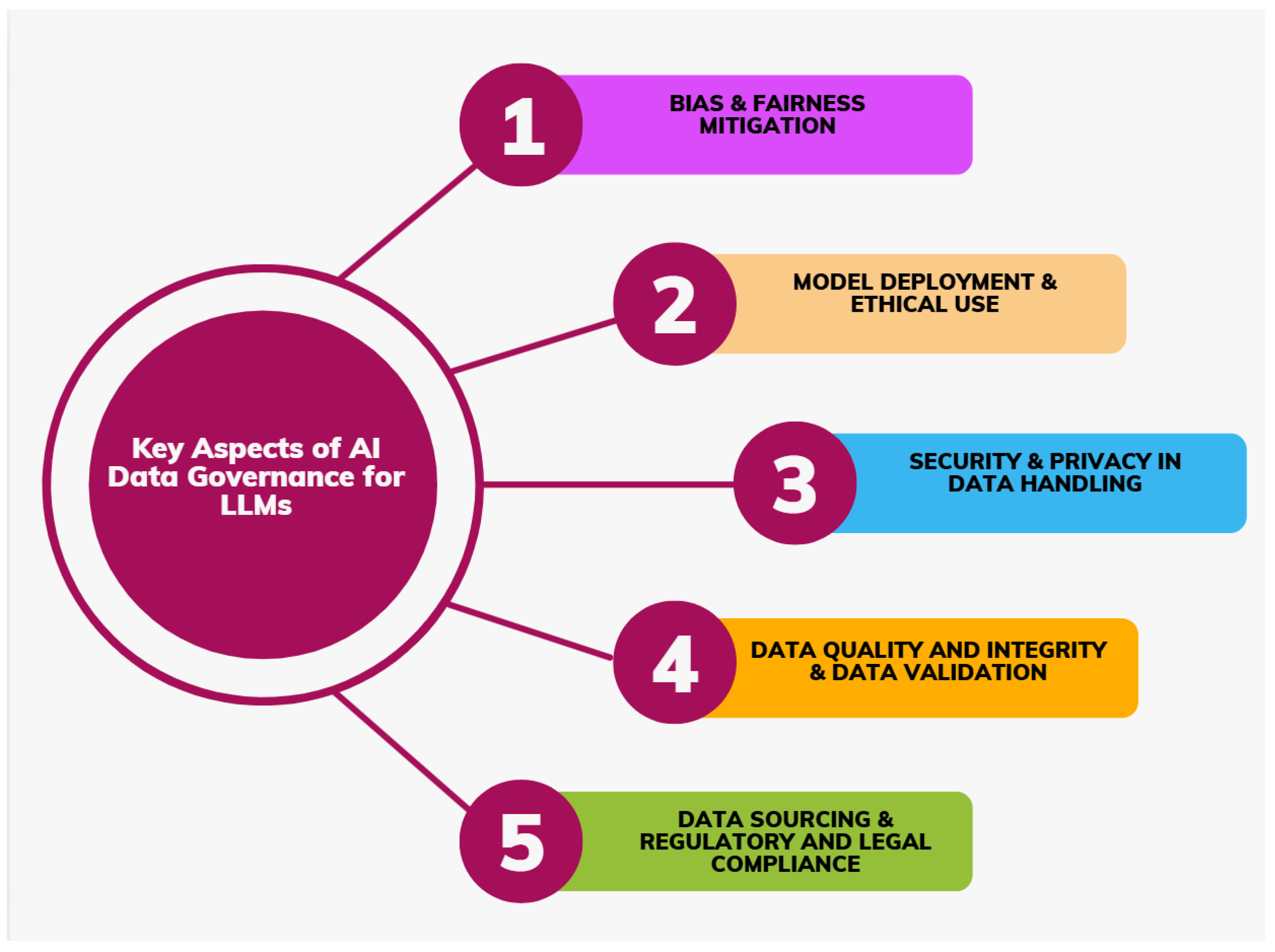

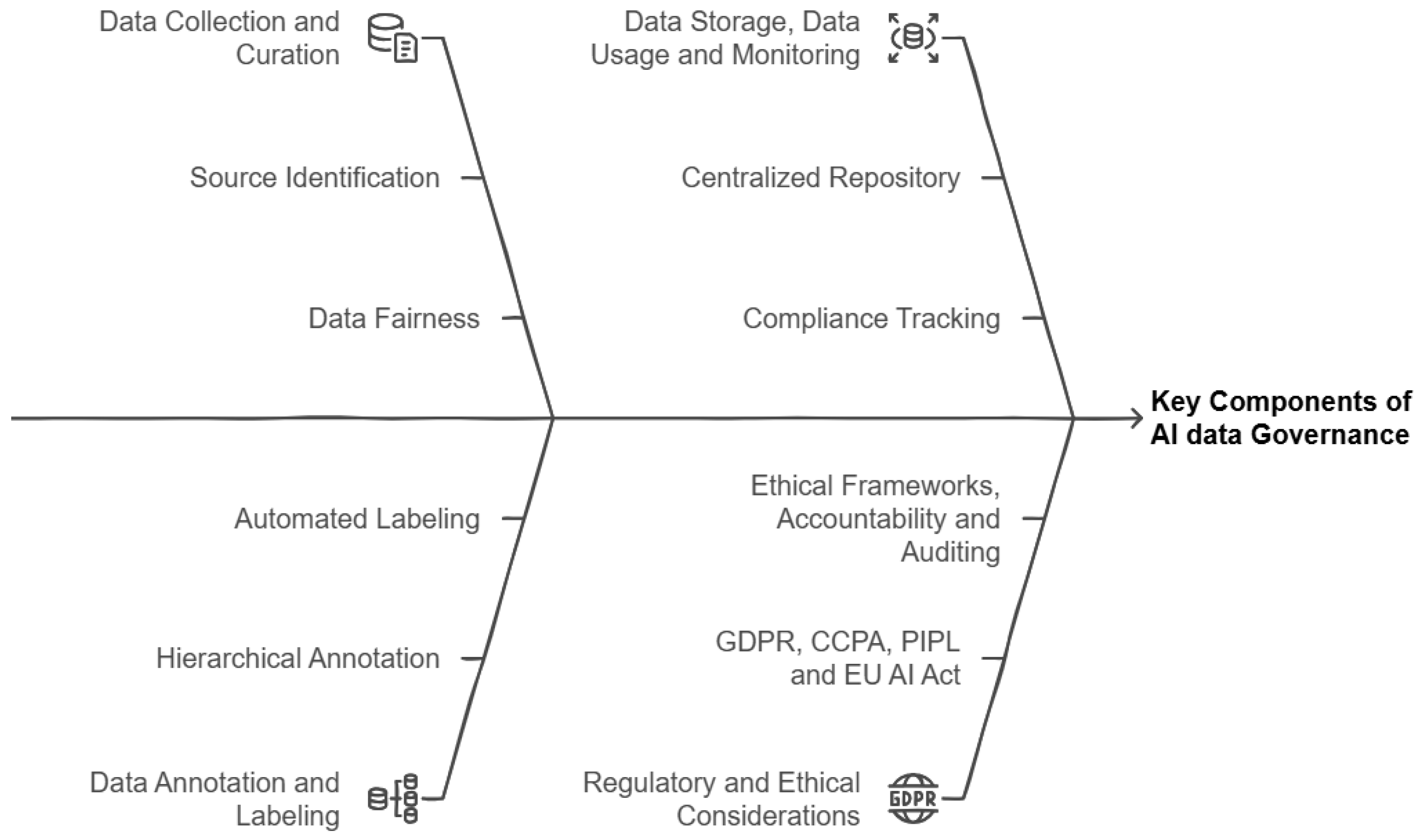

5. Key Components of AI Data Governance for LLMs

5.1. Data Collection and Curation

5.2. Data Annotation and Labeling

5.3. Data Storage and Management

5.4. Data Usage and Monitoring

5.5. Regulatory and Ethical Considerations

Global Regulatory Landscape

5.6. Comparative Insights of GDPR, CCPA, and PIPL in Practice for LLM Governance

5.6.1. Ethical Frameworks

5.6.2. Accountability and Auditing

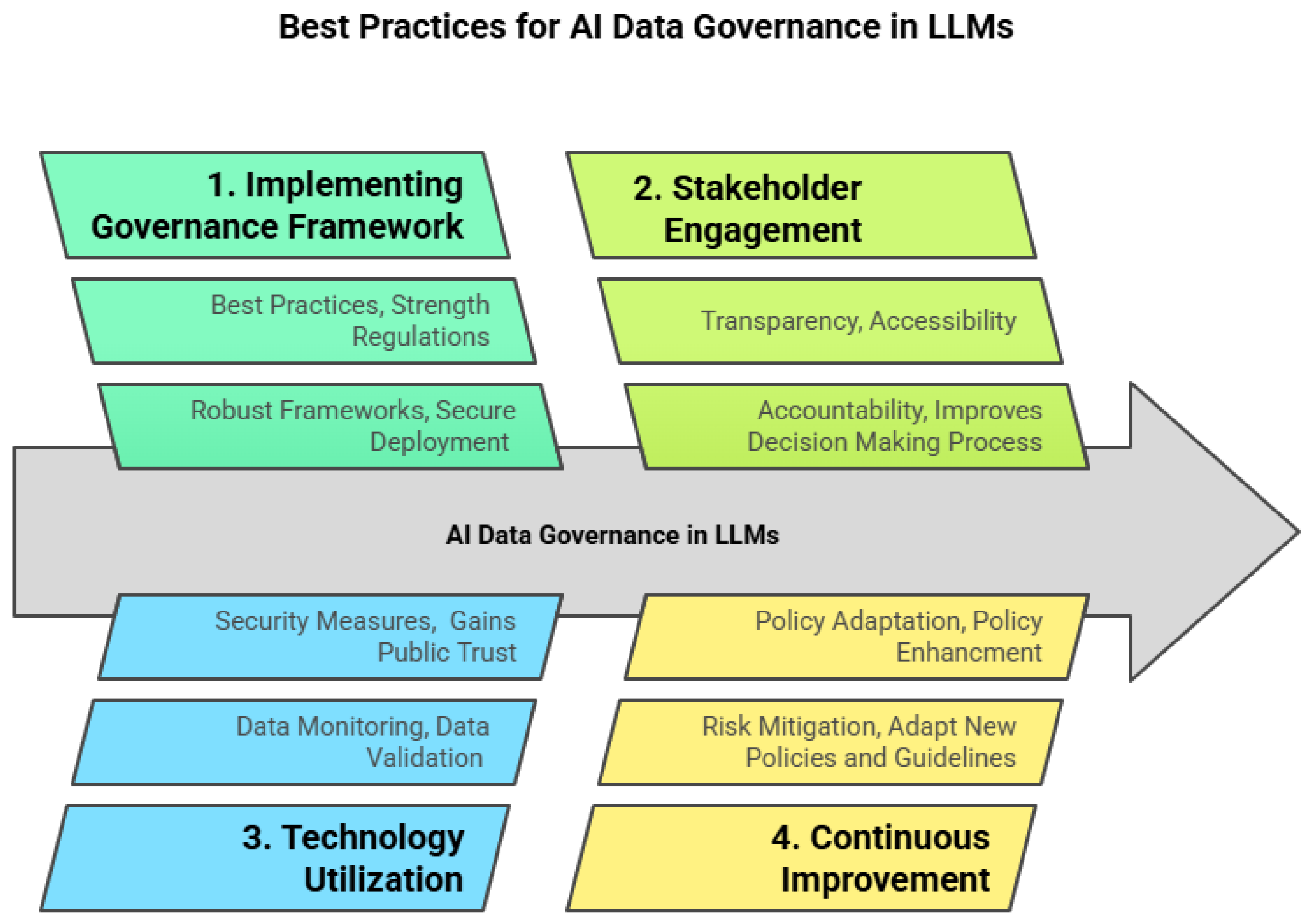

6. Best Practices for AI Data Governance in LLMs

6.1. Developing a Governance Framework

6.2. Stakeholder Engagement

6.3. Leveraging Technology

6.4. Continuous Improvement

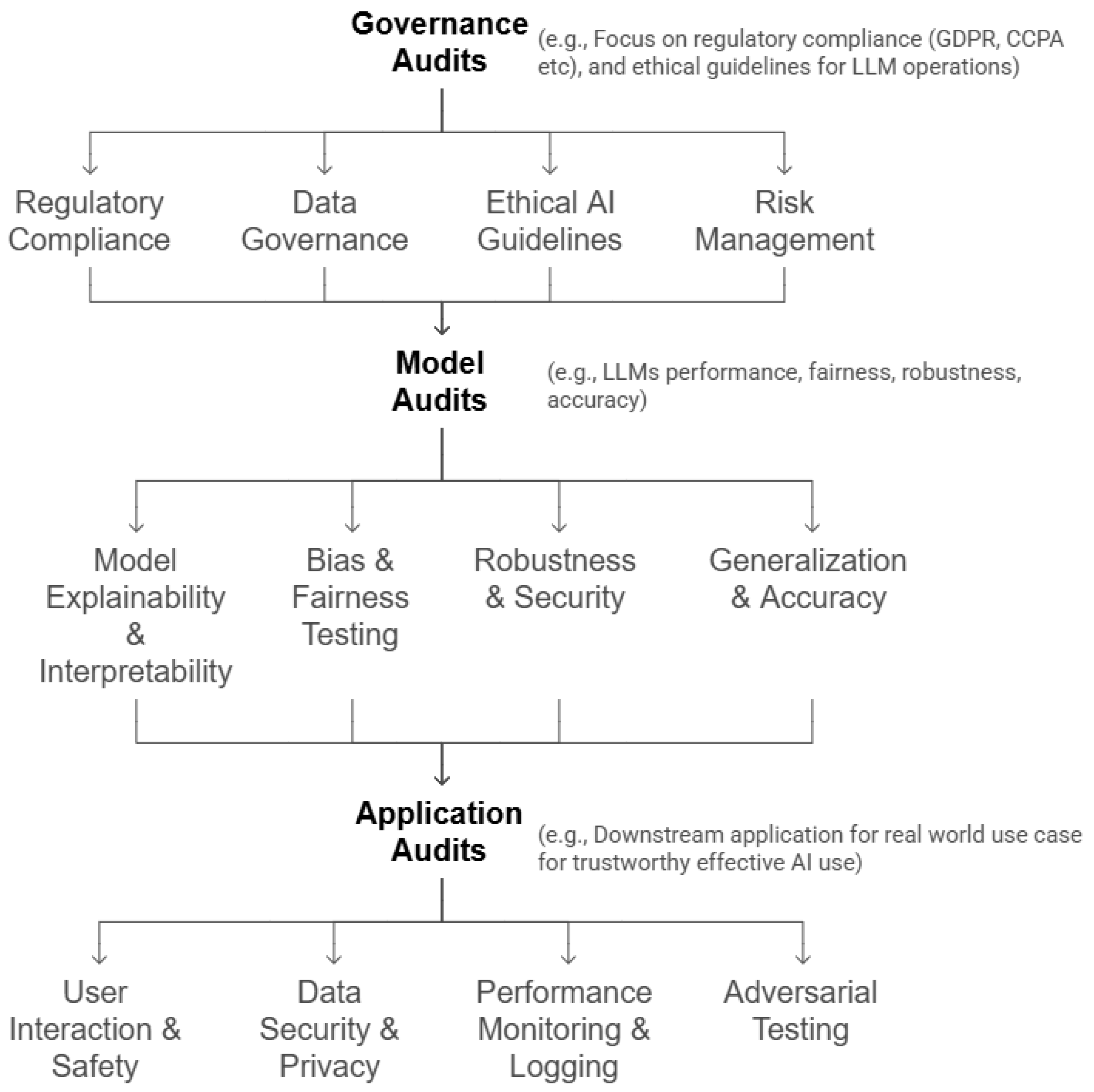

6.5. Tools and Metrics for Auditing Governance Quality in LLM Pipelines with Lifecycle Phases

7. Case Studies and Real-World Applications on Implementing Data Governance in LLMs

8. Open Challenges and Future Research Opportunities

8.1. Scalability of Data Governance Framework

8.2. Security Risks and Data Breaches

8.3. Data Privacy and Compliance

8.4. Data Provenance and Traceability

8.5. Human–AI Collaboration in Data Governance

8.6. Data Quality and Bias Mitigation

8.7. Gaps and Potential Future Research Directions

9. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| GDPR | General Data Protection Regulation |
| HIPAA | Health Insurance Portability and Accountability Act |
| CCPA | California Consumer Privacy Act |
| FCRA | Fair Credit Reporting Act |
| MDM | Master data management |
| FSA | Financial sentiment analysis |
| IT | Information Technology |
| OECD | Organisation for Economic Co-operation and Development |
| ENISA | European Union Agency for Cybersecurity |
| NIST | National Institute of Standards and Technology |
| CRS | Conversational recommender system |
| BCBS | Basel Committee on Banking Supervision |
| SCM | Supply chain management |
| LLMDB | LLM-enhanced data management paradigm |
| FDA | Food and Drug Administration |
| MLOps | Machine learning operations |
| LLMOps | Large language model operations |
| GPU | Graphics Processing Unit |
| TPU | Tensor Processing Unit |
| LLM | Large language model |
| AIRMF | AI risk management framework |
| DLP | Data Loss Prevention |
| RBAC | Role-Based Access Control |
| ISO/IEC | International Organization for Standardization/International Electrotechnical Commission |
| MFA | Multi-Factor Authentication |
| KYC | Know Your Customer |
| TLS | Transport Layer Security |
| ML | Machine learning |
| ZTS | Zero Trust Security |
| GS1 | Global Standards for supply chain and data management |
| SOX | Sarbanes–Oxley Act |
| PHI | Protected Health Information |
| CTI | Cyber threat intelligence |
| EHRs | Electronic health records |
| EU | European Union |
| PIPL | Personal Information Protection Law |
| CPRA | California Privacy Rights Act |
| AI | Artificial intelligence |
| SOC | Service Organization Control |
| TKG | Telecom Knowledge Governance |
| RAG | Retrieval-Augmented Generation |
| LGPD | Lei Geral de Proteção de Dados (General Data Protection Law) |
| HHH | Helpful, honest, and harmless |
| PII | Personally identifiable information |
| DVD | Data validation document |
| EAI | Explainable artificial intelligence |
| WHO | World Health Organization |
| AU | African Union |
| DPK | Data Prep Kit |
| DLG | Data lineage graphs |
| ETHOS | Ethical Technology and Holistic Oversight System |
| DataBOM | Data Bill of Materials |
| HAIRA | Healthcare AI governance readiness assessment |
| GRC | Governance, risk, and compliance |
| SCC | Standard Contractual Clauses |

References

- Haque, M.A. LLMs: A Game-Changer for Software Engineers? arXiv 2024, arXiv:2411.00932.

- Meduri, S. Revolutionizing Customer Service: The Impact of Large Language Models on Chatbot Performance. Int. J. Sci. Res. Comput. Sci. Eng. Inf. Technol. 2024, 10, 721–730.

- Pahune, S.; Chandrasekharan, M. Several categories of large language models (llms): A short survey. arXiv 2023, arXiv:2307.10188.

- Vavekanand, R.; Karttunen, P.; Xu, Y.; Milani, S.; Li, H. Large Language Models in Healthcare Decision Support: A Review. SSRN. 2024. Available online: https://ssrn.com/abstract=4892593 (accessed on 22 May 2025).

- Veigulis, Z.P.; Ware, A.D.; Hoover, P.J.; Blumke, T.L.; Pillai, M.; Yu, L.; Osborne, T.F. Identifying Key Predictive Variables in Medical Records Using a Large Language Model (LLM). Research Square. 2024. Available online: https://www.researchsquare.com/article/rs-4957517/v1 (accessed on 22 May 2025).

- Yuan, M.; Bao, P.; Yuan, J.; Shen, Y.; Chen, Z.; Xie, Y.; Zhao, J.; Li, Q.; Chen, Y.; Zhang, L.; et al. Large language models illuminate a progressive pathway to artificial intelligent healthcare assistant. Med. Plus 2024, 1, 100030.

- Zhang, K.; Meng, X.; Yan, X.; Ji, J.; Liu, J.; Xu, H.; Zhang, H.; Liu, D.; Wang, J.; Wang, X.; et al. Revolutionizing Health Care: The Transformative Impact of Large Language Models in Medicine. J. Med. Internet Res. 2025, 27, e59069.

- Acosta, J.N.; Falcone, G.J.; Rajpurkar, P.; Topol, E.J. Multimodal biomedical AI. Nat. Med. 2022, 28, 1773–1784.

- Huang, K.; Altosaar, J.; Ranganath, R. Clinicalbert: Modeling clinical notes and predicting hospital readmission. arXiv 2019, arXiv:1904.05342.

- Lee, J.; Yoon, W.; Kim, S.; Kim, D.; Kim, S.; So, C.H.; Kang, J. BioBERT: A pre-trained biomedical language representation model for biomedical text mining. Bioinformatics 2020, 36, 1234–1240.

- Santos, T.; Tariq, A.; Das, S.; Vayalpati, K.; Smith, G.H.; Trivedi, H.; Banerjee, I. PathologyBERT-pre-trained vs. a new transformer language model for pathology domain. In Proceedings of the AMIA Annual Symposium Proceedings, Washington, DC, USA, 29 April 2023; Volume 2022, p. 962.

- Christophe, C.; Kanithi, P.K.; Raha, T.; Khan, S.; Pimentel, M.A. Med42-v2: A suite of clinical llms. arXiv 2024, arXiv:2408.06142.

- Wu, S.; Irsoy, O.; Lu, S.; Dabravolski, V.; Dredze, M.; Gehrmann, S.; Kambadur, P.; Rosenberg, D.; Mann, G. Bloomberggpt: A large language model for finance. arXiv 2023, arXiv:2303.17564.

- Araci, D. FinBERT: Financial Sentiment Analysis with Pre-trained Language Models. arXiv 2019, arXiv:1908.10063.

- Yang, H.; Liu, X.Y.; Wang, C.D. Fingpt: Open-source financial large language models. arXiv 2023, arXiv:2306.06031.

- Zhao, Z.; Welsch, R.E. Aligning LLMs with Human Instructions and Stock Market Feedback in Financial Sentiment Analysis. arXiv 2024, arXiv:2410.14926.

- Yu, Y.; Yao, Z.; Li, H.; Deng, Z.; Cao, Y.; Chen, Z.; Suchow, J.W.; Liu, R.; Cui, Z.; Xu, Z.; et al. Fincon: A synthesized llm multi-agent system with conceptual verbal reinforcement for enhanced financial decision making. arXiv 2024, arXiv:2407.06567.

- Shah, S.; Ryali, S.; Venkatesh, R. Multi-Document Financial Question Answering using LLMs. arXiv 2024, arXiv:2411.07264.

- Wei, Q.; Yang, M.; Wang, J.; Mao, W.; Xu, J.; Ning, H. Tourllm: Enhancing llms with tourism knowledge. arXiv 2024, arXiv:2407.12791.

- Banerjee, A.; Satish, A.; Wörndl, W. Enhancing Tourism Recommender Systems for Sustainable City Trips Using Retrieval-Augmented Generation. arXiv 2024, arXiv:2409.18003.

- Wang, J.; Shalaby, A. Leveraging Large Language Models for Enhancing Public Transit Services. arXiv 2024, arXiv:2410.14147.

- Zhang, Z.; Sun, Y.; Wang, Z.; Nie, Y.; Ma, X.; Sun, P.; Li, R. Large language models for mobility in transportation systems: A survey on forecasting tasks. arXiv 2024, arXiv:2405.02357.

- Zhai, X.; Tian, H.; Li, L.; Zhao, T. Enhancing Travel Choice Modeling with Large Language Models: A Prompt-Learning Approach. arXiv 2024, arXiv:2406.13558.

- Mo, B.; Xu, H.; Zhuang, D.; Ma, R.; Guo, X.; Zhao, J. Large language models for travel behavior prediction. arXiv 2023, arXiv:2312.00819.

- Nie, Y.; Kong, Y.; Dong, X.; Mulvey, J.M.; Poor, H.V.; Wen, Q.; Zohren, S. A Survey of Large Language Models for Financial Applications: Progress, Prospects and Challenges. arXiv 2024, arXiv:2406.11903.

- Papasotiriou, K.; Sood, S.; Reynolds, S.; Balch, T. AI in Investment Analysis: LLMs for Equity Stock Ratings. In Proceedings of the 5th ACM International Conference on AI in Finance, Brooklyn, NY, USA, 14–17 November 2024; pp. 419–427.

- Fatemi, S.; Hu, Y.; Mousavi, M. A Comparative Analysis of Instruction Fine-Tuning LLMs for Financial Text Classification. arXiv 2024, arXiv:2411.02476.

- Gebreab, S.A.; Salah, K.; Jayaraman, R.; ur Rehman, M.H.; Ellaham, S. Llm-based framework for administrative task automation in healthcare. In Proceedings of the 2024 12th International Symposium on Digital Forensics and Security (ISDFS), San Antonio, TX, USA, 29–30 April 2024; pp. 1–7.

- Cascella, M.; Montomoli, J.; Bellini, V.; Bignami, E. Evaluating the feasibility of ChatGPT in healthcare: An analysis of multiple clinical and research scenarios. J. Med. Syst. 2023, 47, 33.

- Palen-Michel, C.; Wang, R.; Zhang, Y.; Yu, D.; Xu, C.; Wu, Z. Investigating LLM Applications in E-Commerce. arXiv 2024, arXiv:2408.12779.

- Fang, C.; Li, X.; Fan, Z.; Xu, J.; Nag, K.; Korpeoglu, E.; Kumar, S.; Achan, K. Llm-ensemble: Optimal large language model ensemble method for e-commerce product attribute value extraction. In Proceedings of the 47th International ACM SIGIR Conference on Research and Development in Information Retrieval, New York, NY, USA, 14–18 July 2024; pp. 2910–2914.

- Yin, M.; Wu, C.; Wang, Y.; Wang, H.; Guo, W.; Wang, Y.; Liu, Y.; Tang, R.; Lian, D.; Chen, E. Entropy law: The story behind data compression and llm performance. arXiv 2024, arXiv:2407.06645.

- Kumar, R.; Kakde, S.; Rajput, D.; Ibrahim, D.; Nahata, R.; Sowjanya, P.; Kumar, D. Pretraining Data and Tokenizer for Indic LLM. arXiv 2024, arXiv:2407.12481.

- Lu, K.; Liang, Z.; Nie, X.; Pan, D.; Zhang, S.; Zhao, K.; Chen, W.; Zhou, Z.; Dong, G.; Zhang, W.; et al. Datasculpt: Crafting data landscapes for llm post-training through multi-objective partitioning. arXiv 2024, arXiv:2409.00997.

- Choe, S.K.; Ahn, H.; Bae, J.; Zhao, K.; Kang, M.; Chung, Y.; Pratapa, A.; Neiswanger, W.; Strubell, E.; Mitamura, T.; et al. What is Your Data Worth to GPT? LLM-Scale Data Valuation with Influence Functions. arXiv 2024, arXiv:2405.13954.

- Jiao, F.; Ding, B.; Luo, T.; Mo, Z. Panda llm: Training data and evaluation for open-sourced chinese instruction-following large language models. arXiv 2023, arXiv:2305.03025.

- Gan, Z.; Liu, Y. Towards a Theoretical Understanding of Synthetic Data in LLM Post-Training: A Reverse-Bottleneck Perspective. arXiv 2024, arXiv:2410.01720.

- Wood, D.; Lublinsky, B.; Roytman, A.; Singh, S.; Adam, C.; Adebayo, A.; An, S.; Chang, Y.C.; Dang, X.H.; Desai, N.; et al. Data-Prep-Kit: Getting your data ready for LLM application development. arXiv 2024, arXiv:2409.18164.

- Liu, Z. Cultural Bias in Large Language Models: A Comprehensive Analysis and Mitigation Strategies. J. Transcult. Commun. 2024, 3, 224–244.

- Khola, J.; Bansal, S.; Punia, K.; Pal, R.; Sachdeva, R. Comparative Analysis of Bias in LLMs through Indian Lenses. In Proceedings of the 2024 IEEE International Conference on Electronics, Computing and Communication Technologies (CONECCT), Bangalore, India, 12–14 July 2024; pp. 1–6.

- Talboy, A.N.; Fuller, E. Challenging the appearance of machine intelligence: Cognitive bias in LLMs and Best Practices for Adoption. arXiv 2023, arXiv:2304.01358.

- Faridoon, A.; Kechadi, M.T. Healthcare Data Governance, Privacy, and Security-A Conceptual Framework. In Proceedings of the EAI International Conference on Body Area Networks, Milan, Italy, 5–6 February 2024; Springer: Berlin/Heidelberg, Germany, 2024; pp. 261–271.

- Gavgani, V.Z.; Pourrasmi, A. Data Governance Navigation for Advanced Operations in Healthcare Excellence. Depiction Health 2024, 15, 249–254.

- Raza, M.A. Cyber Security and Data Privacy in the Era of E-Governance. Soc. Sci. J. Adv. Res. 2024, 4, 5–9.

- Du, X.; Xiao, C.; Li, Y. Haloscope: Harnessing unlabeled llm generations for hallucination detection. arXiv 2024, arXiv:2409.17504.

- Li, R.; Bagade, T.; Martinez, K.; Yasmin, F.; Ayala, G.; Lam, M.; Zhu, K. A Debate-Driven Experiment on LLM Hallucinations and Accuracy. arXiv 2024, arXiv:2410.19485.

- Liu, X. A Survey of Hallucination Problems Based on Large Language Models. Appl. Comput. Eng. 2024, 97, 24–30.

- Reddy, G.P.; Pavan Kumar, Y.V.; Prakash, K.P. Hallucinations in Large Language Models (LLMs). In Proceedings of the 2024 IEEE Open Conference of Electrical, Electronic and Information Sciences (eStream), Vilnius, Lithuania, 25 April 2024; pp. 1–6.

- Zhui, L.; Fenghe, L.; Xuehu, W.; Qining, F.; Wei, R. Ethical considerations and fundamental principles of large language models in medical education. J. Med. Internet Res. 2024, 26, e60083.

- Shah, S.B.; Thapa, S.; Acharya, A.; Rauniyar, K.; Poudel, S.; Jain, S.; Masood, A.; Naseem, U. Navigating the Web of Disinformation and Misinformation: Large Language Models as Double-Edged Swords. IEEE Access 2024, 1.

- Ma, T. LLM Echo Chamber: Personalized and automated disinformation. arXiv 2024, arXiv:2409.16241.

- Pahune, S.; Akhtar, Z. Transitioning from MLOps to LLMOps: Navigating the Unique Challenges of Large Language Models. Information 2025, 16, 87.

- Tie, J.; Yao, B.; Li, T.; Ahmed, S.I.; Wang, D.; Zhou, S. LLMs are Imperfect, Then What? An Empirical Study on LLM Failures in Software Engineering. arXiv 2024, arXiv:2411.09916.

- Menshawy, A.; Nawaz, Z.; Fahmy, M. Navigating Challenges and Technical Debt in Large Language Models Deployment. In Proceedings of the 4th Workshop on Machine Learning and Systems—EuroMLSys ’24, New York, NY, USA, 22 April 2024; pp. 192–199.

- Chen, T. Challenges and Opportunities in Integrating LLMs into Continuous Integration/Continuous Deployment (CI/CD) Pipelines. In Proceedings of the 2024 5th International Seminar on Artificial Intelligence, Networking and Information Technology (AINIT), Nanjing, China, 29–31 March 2024; pp. 364–367.

- Hu, S.; Wang, P.; Yao, Y.; Lu, Z. “I Always Felt that Something Was Wrong”: Understanding Compliance Risks and Mitigation Strategies when Professionals Use Large Language Models. arXiv 2024, arXiv:2411.04576.

- Berger, A.; Hillebrand, L.; Leonhard, D.; Deußer, T.; De Oliveira, T.B.F.; Dilmaghani, T.; Khaled, M.; Kliem, B.; Loitz, R.; Bauckhage, C.; et al. Towards Automated Regulatory Compliance Verification in Financial Auditing with Large Language Models. In Proceedings of the 2023 IEEE International Conference on Big Data (BigData), Sorrento, Italy, 15–18 December 2023; pp. 4626–4635.

- Fakeyede, O.G.; Okeleke, P.A.; Hassan, A.; Iwuanyanwu, U.; Adaramodu, O.R.; Oyewole, O.O. Navigating data privacy through IT audits: GDPR, CCPA, and beyond. Int. J. Res. Eng. Sci. 2023, 11, 184–192.

- Aaronson, S.A. Data Dysphoria: The Governance Challenge Posed by Large Learning Models. 2023. Available online: https://ssrn.com/abstract=4554580 (accessed on 23 May 2025).

- Cheong, I.; Caliskan, A.; Kohno, T. Envisioning legal mitigations for LLM-based intentional and unintentional harms. In Proceedings of the 1st Workshop on Generative AI and Law (ICML 2022), Honolulu, HI, USA, 2022.

- Glukhov, D.; Han, Z.; Shumailov, I.; Papyan, V.; Papernot, N. Breach By A Thousand Leaks: Unsafe Information Leakage in ‘Safe’ AI Responses. arXiv 2024, arXiv:2407.02551.

- Madhavan, D. Enterprise Data Governance: A Comprehensive Framework for Ensuring Data Integrity, Security, and Compliance in Modern Organizations. Int. J. Sci. Res. Comput. Sci. Eng. Inf. Technol. 2024, 10, 731–743.

- Rejeleene, R.; Xu, X.; Talburt, J. Towards trustable language models: Investigating information quality of large language models. arXiv 2024, arXiv:2401.13086.

- Yang, J.; Wang, Z.; Lin, Y.; Zhao, Z. Problematic Tokens: Tokenizer Bias in Large Language Models. In Proceedings of the 2024 IEEE International Conference on Big Data (BigData), Washington, DC, USA, 15–18 December 2024; pp. 6387–6393.

- Balloccu, S.; Schmidtová, P.; Lango, M.; Dušek, O. Leak, cheat, repeat: Data contamination and evaluation malpractices in closed-source LLMs. arXiv 2024, arXiv:2402.03927.

- Abdelnabi, S.; Fay, A.; Cherubin, G.; Salem, A.; Fritz, M.; Paverd, A. Are you still on track!? Catching LLM Task Drift with Activations. arXiv 2024, arXiv:2406.00799.

- Würsch, M.; David, D.P.; Mermoud, A. Monitoring Emerging Trends in LLM Research. In Large Language Models in Cybersecurity; Springer: Berlin/Heidelberg, Germany, 2024; pp. 153–161.

- Mannapur, S.B. Machine Learning Drift Detection and Concept Drift Analysis: Real-time Monitoring and Adaptive Model Maintenance. Int. J. Sci. Res. Comput. Sci. Eng. Inf. Technol. 2024, 11.

- Pai, Y.T.; Sun, N.E.; Li, C.T.; Lin, S.d. Incremental Data Drifting: Evaluation Metrics, Data Generation, and Approach Comparison. ACM Trans. Intell. Syst. Technol. 2024, 15, 1–26.

- Sharma, V.; Mousavi, E.; Gajjar, D.; Madathil, K.; Smith, C.; Matos, N. Regulatory framework around data governance and external benchmarking. J. Leg. Aff. Disput. Resolut. Eng. Constr. 2022, 14, 04522006.

- Vardia, A.S.; Chaudhary, A.; Agarwal, S.; Sagar, A.K.; Shrivastava, G. Cloud Security Essentials: A Detailed Exploration. Emerg. Threat. Countermeas. Cybersecur. 2025, 14, 413–432.

- Sainz, O.; Campos, J.A.; García-Ferrero, I.; Etxaniz, J.; de Lacalle, O.L.; Agirre, E. Nlp evaluation in trouble: On the need to measure llm data contamination for each benchmark. arXiv 2023, arXiv:2310.18018.

- Perełkiewicz, M.; Poświata, R. A Review of the Challenges with Massive Web-mined Corpora Used in Large Language Models Pre-Training. arXiv 2024, arXiv:2407.07630.

- Jiao, J.; Afroogh, S.; Xu, Y.; Phillips, C. Navigating llm ethics: Advancements, challenges, and future directions. arXiv 2024, arXiv:2406.18841.

- Peng, B.; Chen, K.; Li, M.; Feng, P.; Bi, Z.; Liu, J.; Niu, Q. Securing large language models: Addressing bias, misinformation, and prompt attacks. arXiv 2024, arXiv:2409.08087.

- Mhammad, A.F.; Agarwal, R.; Columbo, T.; Vigorito, J. Generative & Responsible AI—LLMs Use in Differential Governance. In Proceedings of the 2023 International Conference on Computational Science and Computational Intelligence (CSCI), Las Vegas, NV, USA, 13–25 December 2023; pp. 291–295.

- Kumari, B. Intelligent Data Governance Frameworks: A Technical Overview. Int. J. Sci. Res. Comput. Sci. Eng. Inf. Technol. 2024, 10, 141–154.

- Gupta, R.; Walker, L.; Corona, R.; Fu, S.; Petryk, S.; Napolitano, J.; Darrell, T.; Reddie, A.W. Data-Centric AI Governance: Addressing the Limitations of Model-Focused Policies. arXiv 2024, arXiv:2409.17216.

- Arigbabu, A.T.; Olaniyi, O.O.; Adigwe, C.S.; Adebiyi, O.O.; Ajayi, S.A. Data governance in AI-enabled healthcare systems: A case of the project nightingale. Asian J. Res. Comput. Sci. 2024, 17, 85–107.

- Yandrapalli, V. AI-Powered Data Governance: A Cutting-Edge Method for Ensuring Data Quality for Machine Learning Applications. In Proceedings of the 2024 Second International Conference on Emerging Trends in Information Technology and Engineering (ICETITE), Vellore, India, 22–23 February 2024; pp. 1–6. Available online: https://ieeexplore.ieee.org/abstract/document/10493601 (accessed on 23 May 2025).

- McGregor, S.; Hostetler, J. Data-centric governance. arXiv 2023, arXiv:2302.07872.

- Nathan, C.; Alalyani, A.; Serbanoiu, A.A.; Khan, D. AI-Powered Data Governance: Ensuring Integrity in Banking’s Technological Frontier. ResearchGate. 2023. Available online: https://www.researchgate.net/publication/373296863_AI-Powered_Data_Governance_Ensuring_Integrity_in_Banking%27s_Technological_Frontier (accessed on 23 May 2025).

- Tjondronegoro, D.W. Strategic AI Governance: Insights from Leading Nations. arXiv 2024, arXiv:2410.01819.

- Schiff, D.; Biddle, J.; Borenstein, J.; Laas, K. What’s next for ai ethics, policy, and governance? A global overview. In Proceedings of the AAAI/ACM Conference on AI, Ethics, and Society, New York, NY, USA, 7–9 February 2020; pp. 153–158.

- Arnold, G.; Ludwick, S.; Mohsen Fatemi, S.; Krause, R.; Long, L.A.N. Policy entrepreneurship for transformative governance. In European Policy Analysis; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2024.

- Jakubik, J.; Vössing, M.; Kühl, N.; Walk, J.; Satzger, G. Data-centric artificial intelligence. In Business Information Systems Engineering; Springer: Berlin/Heidelberg, Germany, 2024; Volume 66, pp. 507–515.

- Majeed, A.; Hwang, S.O. Technical analysis of data-centric and model-centric artificial intelligence. IT Prof. 2024, 25, 62–70.

- Gisolfi, N. Model-Centric Verification of Artificial Intelligence. Ph.D. Thesis, Carnegie Mellon University, Pittsburgh, PA, USA, 13 January 2022; pp. 1–237. Available online: https://www.ri.cmu.edu/app/uploads/2022/01/CMU-RI-TR-22-02.pdf (accessed on 23 May 2025).

- Currie, N. Risk Based Approaches to Artificial Intelligence. Crowe Data Management. 2019. Available online: https://www.crowe.com/-/media/Crowe/LLP/folio-pdf/Risk-Approaches-to-AI.pdf (accessed on 23 May 2025).

- Lütge, C.; Hohma, E.; Boch, A.; Poszler, F.; Corrigan, C. On a Risk-Based Assessment Approach to AI Ethics Governance. Institute for Ethics in Artificial Intelligence, Technical University of Munich. 2022. Available online: https://www.ieai.sot.tum.de/wp-content/uploads/2022/06/IEAI-White-Paper-on-Risk-Management-Approach_2022-FINAL.pdf (accessed on 23 May 2025).

- Lee, C.A.; Chow, K.; Chan, H.A.; Lun, D.P.K. Decentralized governance and artificial intelligence policy with blockchain-based voting in federated learning. Front. Res. Metrics Anal. 2023, 8, 1035123.

- Pencina, M.J.; McCall, J.; Economou-Zavlanos, N.J. A federated registration system for artificial intelligence in health. JAMA 2024, 332, 789–790.

- Lim, H.Y.F. Regulatory compliance. In Artificial Intelligence; Edward Elgar Publishing: London, UK, 17 March 2022; pp. 85–108. Available online: https://www.elgaronline.com/edcollchap/edcoll/9781800371712/9781800371712.00017.xml (accessed on 23 May 2025).

- Aziza, O.R.; Uzougbo, N.S.; Ugwu, M.C. The impact of artificial intelligence on regulatory compliance in the oil and gas industry. World J. Adv. Res. Rev. 2023, 19, 1559–1570.

- Eitel-Porter, R. Beyond the promise: Implementing ethical AI. AI Ethics 2021, 1, 73–80.

- Daly, A.; Hagendorff, T.; Hui, L.; Mann, M.; Marda, V.; Wagner, B.; Wang, W.; Witteborn, S. Artificial intelligence governance and ethics: Global perspectives. arXiv 2019, arXiv:1907.03848.

- Sidhpurwala, H.; Mollett, G.; Fox, E.; Bestavros, M.; Chen, H. Building Trust: Foundations of Security, Safety and Transparency in AI. arXiv 2024, arXiv:2411.12275.

- Singh, K.; Saxena, R.; Kumar, B. AI Security: Cyber Threats and Threat-Informed Defense. In Proceedings of the 2024 8th Cyber Security in Networking Conference (CSNet), Paris, France, 4–6 December 2024; pp. 305–312.

- Bowen, G.; Sothinathan, J.; Bowen, R. Technological Governance (Cybersecurity and AI): Role of Digital Governance. In Cybersecurity and Artificial Intelligence: Transformational Strategies and Disruptive Innovation; Springer: Berlin/Heidelberg, Germany, 2024; pp. 143–161.

- Savaş, S.; Karataş, S. Cyber governance studies in ensuring cybersecurity: An overview of cybersecurity governance. Int. Cybersecur. Law Rev. 2022, 3, 7–34.

- Lal, S.; Singh, B.; Kaunert, C. Role of Artificial Intelligence (AI) and Intellectual Property Rights (IPR) in Transforming Drug Discovery and Development in the Life Sciences: Legal and Ethical Concerns. Libr. Prog. Libr. Sci. Inf. Technol. Comput. 2024, 44, 7070. Available online: https://openurl.ebsco.com/EPDB%3Agcd%3A11%3A19455179/detailv2?sid=ebsco%3Aplink%3Ascholar&id=ebsco%3Agcd%3A180917877&crl=c&link_origin=scholar.google.com (accessed on 23 May 2025).

- Mirakhori, F.; Niazi, S.K. Harnessing the AI/ML in Drug and Biological Products Discovery and Development: The Regulatory Perspective. Pharmaceuticals 2025, 18, 47.

- Price, W.; Nicholson, I. Distributed governance of medical AI. SMU Sci. Tech. L Rev. 2022, 25, 3.

- Han, Y.; Tao, J. Revolutionizing Pharma: Unveiling the AI and LLM Trends in the Pharmaceutical Industry. arXiv 2024, arXiv:2401.10273.

- Tripathi, S.; Gabriel, K.; Tripathi, P.K.; Kim, E. Large language models reshaping molecular biology and drug development. Chem. Biol. Drug Des. 2024, 103, e14568.

- Liu, J.; Wang, C.; Liu, S. Applications of Large Language Models in Clinical Practice: Path, Challenges, and Future Perspectives. OSF Preprints. Center for Open Science. 2024. Available online: https://osf.io/preprints/osf/82bjd_v1 (accessed on 23 May 2025).

- Dou, Y.; Zhao, X.; Zou, H.; Xiao, J.; Xi, P.; Peng, S. ShennongGPT: A Tuning Chinese LLM for Medication Guidance. In Proceedings of the 2023 IEEE International Conference on Medical Artificial Intelligence (MedAI), Beijing, China, 18–19 November 2023; pp. 67–72. [Google Scholar]

- Zhao, H.; Liu, Z.; Wu, Z.; Li, Y.; Yang, T.; Shu, P.; Xu, S.; Dai, H.; Zhao, L.; Mai, G.; et al. Revolutionizing finance with llms: An overview of applications and insights. arXiv 2024, arXiv:2401.11641. [Google Scholar]

- Li, Y.; Wang, S.; Ding, H.; Chen, H. Large language models in finance: A survey. In Proceedings of the fourth ACM International Conference on AI in Finance, Brooklyn, NY, USA, 27–29 November 2023; pp. 374–382. [Google Scholar]

- Kong, Y.; Nie, Y.; Dong, X.; Mulvey, J.M.; Poor, H.V.; Wen, Q.; Zohren, S. Large Language Models for Financial and Investment Management: Models, Opportunities, and Challenges. J. Portf. Manag. 2024, 51, 211–231. Available online: https://web.media.mit.edu/~xdong/paper/jpm24c.pdf (accessed on 23 May 2025). [CrossRef]

- Yuan, Z.; Wang, K.; Zhu, S.; Yuan, Y.; Zhou, J.; Zhu, Y.; Wei, W. FinLLMs: A Framework for Financial Reasoning Dataset Generation with Large Language Models. arXiv 2024, arXiv:2401.10744. [Google Scholar] [CrossRef]

- Febrian, G.F.; Figueredo, G. KemenkeuGPT: Leveraging a Large Language Model on Indonesia’s Government Financial Data and Regulations to Enhance Decision Making. arXiv 2024, arXiv:2407.21459. [Google Scholar]

- Clairoux-Trepanier, V.; Beauchamp, I.M.; Ruellan, E.; Paquet-Clouston, M.; Paquette, S.O.; Clay, E. The use of large language models (LLM) for cyber threat intelligence (CTI) in cybercrime forums. arXiv 2024, arXiv:2408.03354. [Google Scholar]

- Shafee, S.; Bessani, A.; Ferreira, P.M. Evaluation of LLM chatbots for OSINT-based cyber threat awareness. arXiv 2024, arXiv:2401.15127. [Google Scholar] [CrossRef]

- Wang, F. Using large language models to mitigate ransomware threats. Preprints. 2023. Available online: https://www.preprints.org/manuscript/202311.0676/v1 (accessed on 23 May 2025).

- Zangana, H.M. Harnessing the Power of Large Language Models. In Application of Large Language Models (LLMs) for Software Vulnerability Detection; IGI Global: Hershey, PA, USA, 2024; ISBN 9798369393130. [Google Scholar]

- Yang, J.; Chi, Q.; Xu, W.; Yu, H. Research on adversarial attack and defense of large language models. Appl. Comput. Eng. 2024, 93, 105–113. [Google Scholar] [CrossRef]

- Abdali, S.; Anarfi, R.; Barberan, C.; He, J. Securing Large Language Models: Threats, Vulnerabilities and Responsible Practices. arXiv 2024, arXiv:2403.12503. [Google Scholar]

- Ashiwal, V.; Finster, S.; Dawoud, A. LLM-based vulnerability sourcing from unstructured data. In Proceedings of the 2024 IEEE European Symposium on Security and Privacy Workshops (EuroS&PW), Vienna, Austria, 8–12 July 2024; pp. 634–641. [Google Scholar]

- Srivastava, S.K.; Routray, S.; Bag, S.; Gupta, S.; Zhang, J.Z. Exploring the Potential of Large Language Models in Supply Chain Management: A Study Using Big Data. J. Glob. Inf. Manag. (JGIM) 2024, 32, 1–29. [Google Scholar] [CrossRef]

- Wang, S.; Zhao, Y.; Hou, X.; Wang, H. Large language model supply chain: A research agenda. ACM Trans. Softw. Eng. Methodol. 2024, 9, 123–145. Available online: https://dl.acm.org/doi/abs/10.1145/3708531 (accessed on 23 May 2025). [CrossRef]

- Xu, W.; Xiao, J.; Chen, J. Leveraging large language models to enhance personalized recommendations in e-commerce. In Proceedings of the 2024 International Conference on Electrical, Communication and Computer Engineering (ICECCE), Kuala Lumpur, Malaysia, 30–31 October 2024; pp. 1–6. [Google Scholar]

- Zhu, J.; Lin, J.; Dai, X.; Chen, B.; Shan, R.; Zhu, J.; Tang, R.; Yu, Y.; Zhang, W. Lifelong personalized low-rank adaptation of large language models for recommendation. arXiv 2024, arXiv:2408.03533. [Google Scholar]

- Mohanty, I. Recommendation Systems in the Era of LLMs. In Proceedings of the 15th Annual Meeting of the Forum for Information Retrieval Evaluation, Panjim, India, 15–18 December 2023; pp. 142–144. [Google Scholar]

- Li, C.; Deng, Y.; Hu, H.; Kan, M.Y.; Li, H. Incorporating External Knowledge and Goal Guidance for LLM-based Conversational Recommender Systems. arXiv 2024, arXiv:2405.01868. [Google Scholar]

- Alhafni, B.; Vajjala, S.; Bannò, S.; Maurya, K.K.; Kochmar, E. LLMs in education: Novel perspectives, challenges, and opportunities. arXiv 2024, arXiv:2409.11917. [Google Scholar]

- Leinonen, J.; MacNeil, S.; Denny, P.; Hellas, A. Using Large Language Models for Teaching Computing. In Proceedings of the 55th ACM Technical Symposium on Computer Science Education V. 2, Portland, OR, USA, 20–23 March 2024; p. 1901. [Google Scholar]

- Zdravkova, K.; Dalipi, F.; Ahlgren, F. Integration of Large Language Models into Higher Education: A Perspective from Learners. In Proceedings of the 2023 International Symposium on Computers in Education (SIIE), Setúbal, Portugal, 16–18 November 2023; pp. 1–6. [Google Scholar]

- Jadhav, D.; Agrawal, S.; Jagdale, S.; Salunkhe, P.; Salunkhe, R. AI-Driven Text-to-Multimedia Content Generation: Enhancing Modern Content Creation. In Proceedings of the 2024 8th International Conference on I-SMAC (IoT in Social, Mobile, Analytics and Cloud) (I-SMAC), Kirtipur, Nepal, 3–5 October 2024; pp. 1610–1615. [Google Scholar]

- Li, S.; Li, X.; Chiariglione, L.; Luo, J.; Wang, W.; Yang, Z.; Mandic, D.; Fujita, H. Introduction to the Special Issue on AI-Generated Content for Multimedia. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 6809–6813. [Google Scholar] [CrossRef]

- Yao, Y.; Duan, J.; Xu, K.; Cai, Y.; Sun, Z.; Zhang, Y. A survey on large language model (LLM) security and privacy: The good, the bad, and the ugly. High-Confid. Comput. 2024, 4, 100211, ISSN 2667-2952. [Google Scholar] [CrossRef]

- Nazi, Z.A.; Peng, W. Large language models in healthcare and medical domain: A review. Informatics 2024, 11, 57. [Google Scholar] [CrossRef]

- Huang, J.; Chang, K.C.C. Citation: A key to building responsible and accountable large language models. arXiv 2023, arXiv:2307.02185. [Google Scholar]

- Liu, Y.; Yao, Y.; Ton, J.F.; Zhang, X.; Guo, R.; Cheng, H.; Klochkov, Y.; Taufiq, M.F.; Li, H. Trustworthy LLMs: A survey and guideline for evaluating large language models’ alignment. arXiv 2023, arXiv:2308.05374. [Google Scholar]

- Carlini, N.; Tramèr, F.; Wallace, E.; Jagielski, M.; Herbert-Voss, A.; Lee, K.; Roberts, A.; Brown, T.; Song, D.; Erlingsson, Ú.; et al. Extracting Training Data from Large Language Models. In Proceedings of the 30th USENIX Security Symposium, Online, 11–13 August 2021; pp. 2633–2650, ISBN 978-1-939133-24-3. Available online: https://www.usenix.org/conference/usenixsecurity21/presentation/carlini-extracting (accessed on 23 May 2025).

- Pan, X.; Zhang, M.; Ji, S.; Yang, M. Privacy risks of general-purpose language models. In Proceedings of the 2020 IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 18–21 May 2020; pp. 1314–1331. [Google Scholar]

- Zimelewicz, E.; Kalinowski, M.; Mendez, D.; Giray, G.; Santos Alves, A.P.; Lavesson, N.; Azevedo, K.; Villamizar, H.; Escovedo, T.; Lopes, H.; et al. ML-enabled systems model deployment and monitoring: Status quo and problems. In Proceedings of the International Conference on Software Quality, Vienna, Austria, 23–25 April 2024; Springer: Cham, Switzerland, 2024; pp. 112–131. [Google Scholar]

- Bodor, A.; Hnida, M.; Najima, D. From Development to Deployment: An Approach to MLOps Monitoring for Machine Learning Model Operationalization. In Proceedings of the 2023 14th International Conference on Intelligent Systems: Theories and Applications (SITA), Casablanca, Morocco, 22–23 November 2023; pp. 1–7. [Google Scholar] [CrossRef]

- Roberts, T.; Tonna, S.J. Extending the Governance Framework for Machine Learning Validation and Ongoing Monitoring. In Risk Modeling: Practical Applications of Artificial Intelligence, Machine Learning, and Deep Learning; Wiley: Hoboken, NJ, USA, 2022; Chapter 7. [Google Scholar] [CrossRef]

- Nogare, D.; Silveira, I.F. Experimentation, Deployment and Monitoring Machine Learning Models: Approaches for Applying MLOps. Revistaft 2024, 28, 55. [Google Scholar] [CrossRef]

- Mehdi, A.; Bali, M.K.; Abbas, S.I.; Singh, M. Unleashing the Potential of Grafana: A Comprehensive Study on Real-Time Monitoring and Visualization. In Proceedings of the 2023 14th International Conference on Computing Communication and Networking Technologies (ICCCNT), Delhi, India, 6–8 July 2023; pp. 1–8. [Google Scholar] [CrossRef]

- Samudrala, L.N.R. Automated SLA Monitoring in AWS Cloud Environments—A Comprehensive Approach Using Dynatrace. J. Artif. Intell. Cloud Comput. 2024, 2, 1–6. [Google Scholar] [CrossRef]

- Menon, V.; Jesudas, J.; S.B, G. Model monitoring with Grafana and Dynatrace: A comprehensive framework for ensuring ML model performance. Int. J. Adv. Res. 2024, 12, 54–63. Available online: https://www.researchgate.net/publication/381030637_MODEL_MONITORING_WITH_GRAFANA_AND_DYNATRACE_A_COMPREHENSIVE_FRAMEWORK_FOR_ENSURING_ML_MODEL_PERFORMANCE (accessed on 23 May 2025). [CrossRef]

- Yadav, S. Balancing Profitability and Risk: The Role of Risk Appetite in Mitigating Credit Risk Impact. Int. Sci. J. Econ. Manag. 2024, 3, 1–7, ISSN 2583-6129. Available online: https://isjem.com/download/balancing-profitability-and-risk-the-role-of-risk-appetite-in-mitigating-credit-risk-impact/ (accessed on 23 May 2025). [CrossRef]

- Anil, V.K.S.; Babatope, A. Data privacy, security, and governance: A global comparative analysis of regulatory compliance and technological innovation. Glob. J. Eng. Technol. Adv. 2024, 21, 190–202. [Google Scholar] [CrossRef]

- Zhang, S.; Ye, L.; Yi, X.; Tang, J.; Shui, B.; Xing, H.; Liu, P.; Li, H. “Ghost of the past”: Identifying and resolving privacy leakage from LLM’s memory through proactive user interaction. arXiv 2024, arXiv:2410.14931. [Google Scholar]

- Asthana, S.; Mahindru, R.; Zhang, B.; Sanz, J. Adaptive PII Mitigation Framework for Large Language Models. arXiv 2025, arXiv:2501.12465. [Google Scholar]

- Kalinin, M.; Poltavtseva, M.; Zegzhda, D. Ensuring the Big Data Traceability in Heterogeneous Data Systems. In Proceedings of the 2023 International Russian Automation Conference (RusAutoCon), Sochi, Russia, 10–16 September 2023; pp. 775–780. [Google Scholar] [CrossRef]

- Falster, D.; FitzJohn, R.G.; Pennell, M.W.; Cornwell, W.K. Versioned data: Why it is needed and how it can be achieved (easily and cheaply). PeerJ Prepr. 2017, 5, e3401v1. [Google Scholar]

- Mirchandani, S.; Xia, F.; Florence, P.; Ichter, B.; Driess, D.; Arenas, M.G.; Rao, K.; Sadigh, D.; Zeng, A. Large language models as general pattern machines. arXiv 2023, arXiv:2307.04721. [Google Scholar]

- Chen, Y.; Zhao, Y.; Li, X.; Zhang, J.; Long, J.; Zhou, F. An open dataset of data lineage graphs for data governance research. Vis. Inform. 2024, 8, 1–5. [Google Scholar] [CrossRef]

- Kramer, S.G. Artificial Intelligence in the Supply Chain: Legal Issues and Compliance Challenges. J. Supply Chain. Manag. Logist. Procure. 2024, 7, 139–148. [Google Scholar] [CrossRef]

- Hausenloy, J.; McClements, D.; Thakur, M. Towards Data Governance of Frontier AI Models. arXiv 2024, arXiv:2412.03824. [Google Scholar]

- Liu, Y.; Zhang, D.; Xia, B.; Anticev, J.; Adebayo, T.; Xing, Z.; Machao, M. Blockchain-Enabled Accountability in Data Supply Chain: A Data Bill of Materials Approach. In Proceedings of the 2024 IEEE International Conference on Blockchain (Blockchain), Copenhagen, Denmark, 19–22 August 2024; pp. 557–562. [Google Scholar]

- Azari, M.; Arif, J.; Moustabchir, H.; Jawab, F. Navigating Challenges and Leveraging Future Trends in AI and Machine Learning for Supply Chains. In AI and Machine Learning Applications in Supply Chains and Marketing; Masengu, R., Tsikada, C., Garwi, J., Eds.; IGI Global Scientific Publishing: Hershey, PA, USA, 2025; pp. 257–282. [Google Scholar] [CrossRef]

- Hussein, R.; Zink, A.; Ramadan, B.; Howard, F.M.; Hightower, M.; Shah, S.; Beaulieu-Jones, B.K. Advancing Healthcare AI Governance: A Comprehensive Maturity Model Based on Systematic Review. medRxiv 2024. [Google Scholar]

- Singh, B.; Kaunert, C.; Jermsittiparsert, K. Managing Health Data Landscapes and Blockchain Framework for Precision Medicine, Clinical Trials, and Genomic Biomarker Discovery. In Digitalization and the Transformation of the Healthcare Sector; Wickramasinghe, N., Ed.; IGI Global Scientific Publishing: Hershey, PA, USA, 2025; pp. 283–310. [Google Scholar] [CrossRef]

- Hassan, M.; Borycki, E.M.; Kushniruk, A.W. Artificial intelligence governance framework for healthcare. Healthc. Manag. Forum 2024, 38, 125–130. [Google Scholar] [CrossRef]

- Chakraborty, A.; Karhade, M. Global AI Governance in Healthcare: A Cross-Jurisdictional Regulatory Analysis. arXiv 2024, arXiv:2406.08695. [Google Scholar]

- Kim, J.; Kim, S.Y.; Kim, E.A.; Sim, J.; Lee, Y.; Kim, H. Developing a Framework for Self-regulatory Governance in Healthcare AI Research: Insights from South Korea. Asia-Pac. Biotech Res. (ABR) 2024, 16, 391–406. [Google Scholar] [CrossRef] [PubMed]

- Olimid, A.P.; Georgescu, C.M.; Olimid, D.A. Legal Analysis of EU Artificial Intelligence Act (2024): Insights from Personal Data Governance and Health Policy. Access Just. E. Eur. 2024, 7, 120. [Google Scholar]

- Kolade, T.M.; Aideyan, N.T.; Oyekunle, S.M.; Ogungbemi, O.S.; Dapo-Oyewole, D.L.; Olaniyi, O.O. Artificial Intelligence and Information Governance: Strengthening Global Security, through Compliance Frameworks, and Data Security. Asian J. Res. Comput. Sci. 2024, 17, 36–57. [Google Scholar] [CrossRef]

- Mbah, G.O.; Evelyn, A.N. AI-powered cybersecurity: Strategic approaches to mitigate risk and safeguard data privacy. World J. Adv. Res. Rev. 2024, 24, 310–327. [Google Scholar] [CrossRef]

- Folorunso, A.; Adewumi, T.; Adewa, A.; Okonkwo, R.; Olawumi, T.N. Impact of AI on cybersecurity and security compliance. Glob. J. Eng. Technol. Adv. 2024, 21, 167–184. [Google Scholar] [CrossRef]

- Jabbar, H.; Al-Janabi, S.; Syms, F. AI-Integrated Cyber Security Risk Management Framework for IT Projects. In Proceedings of the 2024 International Jordanian Cybersecurity Conference (IJCC), Amman, Jordan, 17–18 December 2024; pp. 76–81. [Google Scholar] [CrossRef]

- Muhammad, M.H.B.; Abas, Z.B.; Ahmad, A.S.B.; Sulaiman, M.S.B. AI-Driven Security: Redefining Security Information Systems within Digital Governance. Int. J. Res. Inf. Secur. Syst. (IJRISS) 2024, 8090245, 2923–2936. [Google Scholar] [CrossRef]

- Effoduh, J.; Akpudo, U.; Kong, J. Toward a trustworthy and inclusive data governance policy for the use of artificial intelligence in Africa. Data Policy 2024, 6, e34. [Google Scholar] [CrossRef]

- Jyothi, V.E.; Sai Kumar, D.L.; Thati, B.; Tondepu, Y.; Pratap, V.K.; Praveen, S.P. Secure Data Access Management for Cyber Threats using Artificial Intelligence. In Proceedings of the 2022 6th International Conference on Electronics, Communication and Aerospace Technology, Coimbatore, India, 1–3 December 2022; pp. 693–697. [Google Scholar] [CrossRef]

- Boggarapu, N.B. Modernizing Banking Compliance: An Analysis of AI-Powered Data Governance in a Hybrid Cloud Environment. CSEIT 2024, 10, 2434. [Google Scholar] [CrossRef]

- Akokodaripon, D.; Alonge-Essiet, F.O.; Aderoju, A.V.; Reis, O. Implementing Data Governance in Financial Systems: Strategies for Ensuring Compliance and Security in Multi-Source Data Integration Projects. CSI Trans. ICT Res. 2024, 5, 1631. [Google Scholar] [CrossRef]

- Chukwurah, N.; Ige, A.B.; Adebayo, V.I.; Eyieyien, O.G. Frameworks for Effective Data Governance: Best Practices, Challenges, and Implementation Strategies Across Industries. Comput. Sci. IT Res. J. 2024, 5, 1666–1679. [Google Scholar] [CrossRef]

- Li, Y.; Yu, X.; Koudas, N. Data Acquisition for Improving Model Confidence. Proc. ACM Manag. Data 2024, 2, 1–25. [Google Scholar] [CrossRef]

- Barrenechea, O.; Mendieta, A.; Armas, J.; Madrid, J.M. Data Governance Reference Model to streamline the supply chain process in SMEs. In Proceedings of the 2019 IEEE XXVI International Conference on Electronics, Electrical Engineering and Computing (INTERCON), Lima, Peru, 12–14 August 2019; pp. 1–4. [Google Scholar]

- Aghaei, R.; Kiaei, A.A.; Boush, M.; Vahidi, J.; Barzegar, Z.; Rofoosheh, M. The Potential of Large Language Models in Supply Chain Management: Advancing Decision-Making, Efficiency, and Innovation. arXiv 2025, arXiv:2501.15411. [Google Scholar]

- Tse, D.; Chow, C.K.; Ly, T.P.; Tong, C.Y.; Tam, K.W. The challenges of big data governance in healthcare. In Proceedings of the 2018 17th IEEE International Conference on Trust, Security and Privacy in Computing and Communications/12th IEEE International Conference on Big Data Science and Engineering (TrustCom/BigDataSE), New York, NY, USA, 1–3 August 2018; pp. 1632–1636. [Google Scholar]

- Tripathi, S.; Mongeau, K.; Alkhulaifat, D.; Elahi, A.; Cook, T.S. Large language models in health systems: Governance, challenges, and solutions. Acad. Radiol. 2025, 32, 1189–1191. [Google Scholar] [CrossRef] [PubMed]

- Salman, A.; Creese, S.; Goldsmith, M. Position Paper: Leveraging Large Language Models for Cybersecurity Compliance. In Proceedings of the 2024 IEEE European Symposium on Security and Privacy Workshops (EuroS&PW), Vienna, Austria, 8–12 July 2024; pp. 496–503. [Google Scholar]

- McIntosh, T.R.; Susnjak, T.; Liu, T.; Watters, P.; Xu, D.; Liu, D.; Nowrozy, R.; Halgamuge, M.N. From COBIT to ISO 42001: Evaluating cybersecurity frameworks for opportunities, risks, and regulatory compliance in commercializing large language models. Comput. Secur. 2024, 144, 103964. [Google Scholar] [CrossRef]

- Tavasoli, A.; Sharbaf, M.; Madani, S.M. Responsible Innovation: A Strategic Framework for Financial LLM Integration. arXiv 2025, arXiv:2504.02165. [Google Scholar]

- Chu, Z.; Guo, H.; Zhou, X.; Wang, Y.; Yu, F.; Chen, H.; Xu, W.; Lu, X.; Cui, Q.; Li, L.; et al. Data-centric financial large language models. arXiv 2023, arXiv:2310.17784. [Google Scholar]

- Zhou, X.; Zhao, X.; Li, G. LLM-Enhanced Data Management. arXiv 2024, arXiv:2402.02643. [Google Scholar]

- Gorti, A.; Chadha, A.; Gaur, M. Unboxing Occupational Bias: Debiasing LLMs with US Labor Data. In Proceedings of the AAAI Symposium Series, Arlington, Virginia, 20 August 2024; AAAI Press: Washington, DC, USA, 2024; Volume 4, pp. 48–55. [Google Scholar]

- de Dampierre, C.; Mogoutov, A.; Baumard, N. Towards Transparency: Exploring LLM Trainings Datasets through Visual Topic Modeling and Semantic Frame. arXiv 2024, arXiv:2406.06574. [Google Scholar]

- Yang, J.; Wang, Z.; Lin, Y.; Zhao, Z. Global Data Constraints: Ethical and Effectiveness Challenges in Large Language Model. arXiv 2024, arXiv:2406.11214. [Google Scholar]

- Li, C.; Zhuang, Y.; Qiang, R.; Sun, H.; Dai, H.; Zhang, C.; Dai, B. Matryoshka: Learning to Drive Black-Box LLMs with LLMs. arXiv 2024, arXiv:2410.20749. [Google Scholar]

- Alber, D.A.; Yang, Z.; Alyakin, A.; Yang, E.; Rai, S.; Valliani, A.A.; Zhang, J.; Rosenbaum, G.R.; Amend-Thomas, A.K.; Kurland, D.B.; et al. Medical large language models are vulnerable to data-poisoning attacks. Nat. Med. 2025, 31, 618–626. [Google Scholar] [CrossRef]

- Wu, F.; Cui, L.; Yao, S.; Yu, S. Inference Attacks in Machine Learning as a Service: A Taxonomy, Review, and Promising Directions. arXiv 2024, arXiv:2406.02027. [Google Scholar]

- Subramaniam, P.; Krishnan, S. Intent-Based Access Control: Using LLMs to Intelligently Manage Access Control. arXiv 2024, arXiv:2402.07332. [Google Scholar]

- Mehra, T. The Critical Role of Role-Based Access Control (RBAC) in securing backup, recovery, and storage systems. Int. J. Sci. Res. Arch. 2024, 13, 1192–1194. [Google Scholar] [CrossRef]

- Li, L.; Chen, H.; Qiu, Z.; Luo, L. Large Language Models in Data Governance: Multi-source Data Tables Merging. In Proceedings of the 2024 IEEE International Conference on Big Data (BigData), Washington, DC, USA, 15–18 December 2024; pp. 3965–3974. [Google Scholar]

- Kayali, M.; Wenz, F.; Tatbul, N.; Demiralp, Ç. Mind the Data Gap: Bridging LLMs to Enterprise Data Integration. arXiv 2024, arXiv:2412.20331. [Google Scholar]

- Erdem, O.; Hassett, K.; Egriboyun, F. Evaluating the Accuracy of Chatbots in Financial Literature. arXiv 2024, arXiv:2411.07031. [Google Scholar]

- Ruke, A.; Kulkarni, H.; Patil, R.; Pote, A.; Shedage, S.; Patil, A. Future Finance: Predictive Insights and Chatbot Consultation. In Proceedings of the 2024 4th Asian Conference on Innovation in Technology (ASIANCON), Pimpri Chinchwad, India, 23–25 August 2024; pp. 1–5. [Google Scholar] [CrossRef]

- Zheng, Z. ChatGPT-style Artificial Intelligence for Financial Applications and Risk Response. Int. J. Comput. Sci. Inf. Technol. 2024, 3, 179–186. [Google Scholar] [CrossRef]

- Kushwaha, P.K.; Kumar, R.; Kumar, S. AI Health Chatbot using ML. Int. J. Sci. Res. Eng. Manag. (IJSREM) 2024, 8, 1–5. [Google Scholar] [CrossRef]

- Hassani, S. Enhancing Legal Compliance and Regulation Analysis with Large Language Models. In Proceedings of the 2024 IEEE 32nd International Requirements Engineering Conference (RE), Reykjavik, Iceland, 24–28 June 2024; pp. 507–511. [Google Scholar] [CrossRef]

- Kumar, B.; Roussinov, D. NLP-based Regulatory Compliance–Using GPT 4.0 to Decode Regulatory Documents. arXiv 2024, arXiv:2412.20602. [Google Scholar]

- Kaur, P.; Kashyap, G.S.; Kumar, A.; Nafis, M.T.; Kumar, S.; Shokeen, V. From Text to Transformation: A Comprehensive Review of Large Language Models’ Versatility. arXiv 2024, arXiv:2402.16142. [Google Scholar]

- Zhu, H. Architectural Foundations for the Large Language Model Infrastructures. arXiv 2024, arXiv:2408.09205. [Google Scholar]

- Koppichetti, R.K. Framework of Hub and Spoke Data Governance Model for Cloud Computing. J. Artif. Intell. Cloud Comput. 2024, 2, 1–4. Available online: https://onlinescientificresearch.com/articles/framework-of-hub-and-spoke-data-governance-model-for-cloud-computing.pdf (accessed on 23 May 2025). [CrossRef]

- Li, D.; Sun, Z.; Hu, X.; Hu, B.; Zhang, M. CMT: A Memory Compression Method for Continual Knowledge Learning of Large Language Models. arXiv 2024, arXiv:2412.07393. [Google Scholar] [CrossRef]

- Folorunso, A.; Babalola, O.; Nwatu, C.E.; Ukonne, U. Compliance and Governance issues in Cloud Computing and AI: USA and Africa. Glob. J. Eng. Technol. Adv. 2024, 21, 127–138. [Google Scholar] [CrossRef]

- Alsaigh, R.; Mehmood, R.; Katib, I.; Liang, X.; Alshanqiti, A.; Corchado, J.M.; See, S. Harmonizing AI governance regulations and neuroinformatics: Perspectives on privacy and data sharing. Front. Neuroinformatics 2024, 18, 1472653. [Google Scholar] [CrossRef]

- Zhang, C.; Zhong, H.; Zhang, K.; Chai, C.; Wang, R.; Zhuang, X.; Bai, T.; Qiu, J.; Cao, L.; Fan, J.; et al. Harnessing Diversity for Important Data Selection in Pretraining Large Language Models. arXiv 2024, arXiv:2409.16986. [Google Scholar]

- Rajasegar, R.; Gouthaman, P.; Ponnusamy, V.; Arivazhagan, N.; Nallarasan, V. Data Privacy and Ethics in Data Analytics. In Data Analytics and Machine Learning: Navigating the Big Data Landscape; Springer: Berlin/Heidelberg, Germany, 2024; Volume 145, pp. 195–213. [Google Scholar] [CrossRef]

- Pang, J.; Wei, J.; Shah, A.P.; Zhu, Z.; Wang, Y.; Qian, C.; Liu, Y.; Bao, Y.; Wei, W. Improving data efficiency via curating LLM-driven rating systems. arXiv 2024, arXiv:2410.10877. [Google Scholar]

- Seedat, N.; Huynh, N.; van Breugel, B.; van der Schaar, M. Curated LLM: Synergy of LLMs and data curation for tabular augmentation in ultra low-data regimes. In Proceedings of the International Conference on Learning Representations (ICLR) 2024, Vienna, Austria, 7–11 May 2024. Available online: https://openreview.net/forum?id=ynguffsGfa (accessed on 23 May 2025).

- Oktavia, T.; Wijaya, E. Strategic Metadata Implementation: A Catalyst for Enhanced BI Systems and Organizational Effectiveness. Hightech Innov. J. 2025, 6, 21–41. [Google Scholar] [CrossRef]

- Walshe, T.; Moon, S.Y.; Xiao, C.; Gunawardana, Y.; Silavong, F. Automatic Labelling with Open-source LLMs using Dynamic Label Schema Integration. arXiv 2025, arXiv:2501.12332. [Google Scholar]

- Cholke, P.C.; Patankar, A.; Patil, A.; Patwardhan, S.; Phand, S. Enabling Dynamic Schema Modifications Through Codeless Data Management. In Proceedings of the 2024 IEEE Region 10 Symposium (TENSYMP), New Delhi, India, 27–29 September 2024; pp. 1–9. [Google Scholar]

- Strome, T. Data governance best practices for the AI-ready airport. J. Airpt. Manag. 2024, 19, 57–70. [Google Scholar] [CrossRef]

- Suhra, R. Unified Data Governance Strategy for Enterprises. Int. J. Comput. Appl. 2024, 186, 36–41. Available online: https://www.ijcaonline.org/archives/volume186/number50/transforming-enterprise-data-management-through-unified-data-governance/ (accessed on 23 May 2025).

- Aiyankovil, K.G.; Lewis, D.; Hernandez, J. Mapping Data Governance Requirements Between the European Union’s AI Act and ISO/IEC 5259: A Semantic Analysis. In Proceedings of the NeXt-generation Data Governance Workshop, Amsterdam, The Netherlands, 17–19 September 2024. [Google Scholar]

- Aiyankovil, K.G.; Lewis, D. Harmonizing AI Data Governance: Profiling ISO/IEC 5259 to Meet the Requirements of the EU AI Act. Front. Artif. Intell. Appl. 2024, 395, 363–365. [Google Scholar] [CrossRef]

- Gupta, P.; Parmar, D.S. Sustainable Data Management and Governance Using AI. World J. Adv. Eng. Technol. Sci. 2024, 13, 264–274. [Google Scholar] [CrossRef]

- Idemudia, C.; Ige, A.; Adebayo, V.; Eyieyien, O. Enhancing data quality through comprehensive governance: Methodologies, tools, and continuous improvement techniques. Comput. Sci. IT Res. J. 2024, 5, 1680–1694. [Google Scholar] [CrossRef]

- Comeau, D.S.; Bitterman, D.S.; Celi, L.A. Preventing unrestricted and unmonitored AI experimentation in healthcare through transparency and accountability. Npj Digit. Med. 2025, 8, 42. [Google Scholar] [CrossRef]

- Organisation for Economic Co-operation and Development. Towards an Integrated Health Information System in the Netherlands; Technical Report; Organisation for Economic Co-operation and Development (OECD): Paris, France, 2022. [Google Scholar]

- Musa, M.B.; Winston, S.M.; Allen, G.; Schiller, J.; Moore, K.; Quick, S.; Melvin, J.; Srinivasan, P.; Diamantis, M.E.; Nithyanand, R. C3PA: An Open Dataset of Expert-Annotated and Regulation-Aware Privacy Policies to Enable Scalable Regulatory Compliance Audits. arXiv 2024, arXiv:2410.03925. [Google Scholar]

- Eshbaev, G. GDPR vs. Weakly Protected Parties in Other Countries. Uzb. J. Law Digit. Policy 2024, 2, 55–65. [Google Scholar] [CrossRef]

- Borgesius, F.Z.; Asghari, H.; Bangma, N.; Hoepman, J.H. The GDPR’s Rules on Data Breaches: Analysing Their Rationales and Effects. SCRIPTed 2023, 20, 352. [Google Scholar] [CrossRef]

- Musch, S.; Borrelli, M.C.; Kerrigan, C. Bridging Compliance and Innovation: A Comparative Analysis of the EU AI Act and GDPR for Enhanced Organisational Strategy. J. Data Prot. Priv. 2024, 7, 14–40. [Google Scholar] [CrossRef]

- Aziz, M.A.B.; Wilson, C. Johnny Still Can’t Opt-out: Assessing the IAB CCPA Compliance Framework. Proc. Priv. Enhancing Technol. 2024, 2024, 349–363. [Google Scholar] [CrossRef]

- Rao, S.D. The Evolution of Privacy Rights in the Digital Age: A Comparative Analysis of GDPR and CCPA. Int. J. Law 2024, 2, 40. [Google Scholar] [CrossRef]

- Harding, E.L.; Vanto, J.J.; Clark, R.; Hannah Ji, L.; Ainsworth, S.C. Understanding the scope and impact of the California Consumer Privacy Act of 2018. J. Data Prot. Priv. 2019, 2, 234–253. [Google Scholar] [CrossRef]

- Charatan, J.; Birrell, E. Two Steps Forward and One Step Back: The Right to Opt-out of Sale under CPRA. Proc. Priv. Enhancing Technol. 2024, 2024, 91–105. [Google Scholar] [CrossRef]

- Wang, G. Administrative and Legal Protection of Personal Information in China: Disadvantages and Solutions. In Courier of Kutafin Moscow State Law University (MSAL); MGIMO University Press: Moscow, Russia, 2024; pp. 189–197. ISSN 2311-5998. [Google Scholar] [CrossRef]

- Yang, L.; Lin, Y.; Chen, B. Practice and Prospect of Regulating Personal Data Protection in China. Laws 2024, 13, 78. [Google Scholar] [CrossRef]

- Bolatbekkyzy, G. Comparative Insights from the EU’s GDPR and China’s PIPL for Advancing Personal Data Protection Legislation. Gron. J. Int. Law 2024, 11, 129–146. [Google Scholar] [CrossRef]

- Yalamati, S. Ensuring Ethical Practices in AI and ML Toward a Sustainable Future. In Artificial Intelligence and Machine Learning for Sustainable Development, 1st ed.; CRC Press: Boca Raton, FL, USA, 2024; p. 15. [Google Scholar] [CrossRef]

- Zhu, M.; Zhang, W.; Xu, C. Ethical Governance and Implementation Paths for Global Marine Science Data Sharing. Front. Mar. Sci. 2024, 11, 1421252. [Google Scholar] [CrossRef]

- Sharma, K.; Kumar, P.; Özen, E. Ethical Considerations in Data Analytics: Challenges, Principles, and Best Practices. In Data Alchemy in the Insurance Industry; Taneja, S., Kumar, P., Reepu, Kukreti, M., Özen, E., Eds.; Emerald Publishing Limited: Leeds, UK, 2024; pp. 41–48. [Google Scholar] [CrossRef]

- McNicol, T.; Carthouser, B.; Bongiovanni, I.; Abeysooriya, S. Improving Ethical Usage of Corporate Data in Higher Education: Enhanced Enterprise Data Ethics Framework. Inf. Technol. People 2024, 37, 2247–2278. [Google Scholar] [CrossRef]

- Kottur, R. Responsible AI Development: A Comprehensive Framework for Ethical Implementation in Contemporary Technological Systems. Comput. Sci. Inf. Technol. 2024, 10, 1553–1561. [Google Scholar] [CrossRef]

- Sharma, R.K. Ethics in AI: Balancing innovation and responsibility. Int. J. Sci. Res. Arch. 2025, 14, 544–551. [Google Scholar] [CrossRef]

- Díaz-Rodríguez, N.; Del Ser, J.; Coeckelbergh, M.; de Prado, M.L.; Herrera-Viedma, E.; Herrera, F. Connecting the dots in trustworthy Artificial Intelligence: From AI principles, ethics, and key requirements to responsible AI systems and regulation. Inf. Fusion 2023, 99, 101896. [Google Scholar] [CrossRef]

- Zisan, T.I.; Pulok, M.M.K.; Borman, D.; Barmon, R.C.; Asif, M.R.H. Navigating the Future of Auditing: AI Applications, Ethical Considerations, and Industry Perspectives on Big Data. Eur. J. Theor. Appl. Sci. 2024, 2, 324–332. [Google Scholar] [CrossRef]

- Sari, R.; Muslim, M. Accountability and Transparency in Public Sector Accounting: A Systematic Review. AMAR Account. Manag. Rev. 2023, 3, 1440. [Google Scholar] [CrossRef]

- Felix, S.; Morais, M.G.; Fonseca, J. The role of internal audit in supporting the implementation of the general regulation on data protection—Case study in the intermunicipal communities of Coimbra and Viseu. In Proceedings of the 2018 13th Iberian Conference on Information Systems and Technologies (CISTI), Caceres, Spain, 13–16 June 2018; pp. 1–7. [Google Scholar] [CrossRef]

- Weaver, L.; Imura, P. System and Method of Conducting Self Assessment for Regulatory Compliance. U.S. Patent App. 14/497,436, 31 March 2016. [Google Scholar]

- Brenner, J. ISO 27001: Risk management and compliance. Risk Manag. 2007, 54, 24. [Google Scholar]

- Malatji, M. Management of enterprise cyber security: A review of ISO/IEC 27001:2022. In Proceedings of the 2023 International Conference on Cyber Management and Engineering (CyMaEn), Bangkok, Thailand, 26–27 January 2023; pp. 117–122. [Google Scholar]

- Segun-Falade, O.D.; Leghemo, I.M.; Odionu, C.S.; Azubuike, C. A Review on [Insert Paper Topic]. Int. J. Sci. Res. Arch. 2024, 12, 2984–3002. [Google Scholar] [CrossRef]

- Janssen, M.; Brous, P.; Estevez, E.; Barbosa, L.S.; Janowski, T. Data governance: Organizing data for trustworthy Artificial Intelligence. Gov. Inf. Q. 2020, 37, 101493. [Google Scholar] [CrossRef]

- Olateju, O.; Okon, S.U.; Olaniyi, O.O.; Samuel-Okon, A.D.; Asonze, C.U. Exploring the Concept of Explainable AI and Developing Information Governance Standards for Enhancing Trust and Transparency in Handling Customer Data. J. Eng. Res. Rep. 2024, 26, 244–268. Available online: https://journaljerr.com/index.php/JERR/article/view/1206 (accessed on 23 May 2025).

- Friha, O.; Ferrag, M.A.; Kantarci, B.; Cakmak, B.; Ozgun, A.; Ghoualmi-Zine, N. LLM-based edge intelligence: A comprehensive survey on architectures, applications, security and trustworthiness. IEEE Open J. Commun. Soc. 2024, 5, 5799–5856. Available online: https://ieeexplore.ieee.org/abstract/document/10669603 (accessed on 23 May 2025). [CrossRef]

- Leghemo, I.M.; Azubuike, C.; Segun-Falade, O.D.; Odionu, C.S. Data Governance for Emerging Technologies: A Conceptual Framework for Managing Blockchain, IoT, and AI. J. Eng. Res. Rep. 2025, 27, 247–267. [Google Scholar] [CrossRef]

- O’Sullivan, K.; Lumsden, J.; Anderson, C.; Black, C.; Ball, W.; Wilde, K. A Governance Framework for Facilitating Cross-Agency Data Sharing. Int. J. Popul. Data Sci. 2024, 9. [Google Scholar] [CrossRef]

- Bammer, G. Stakeholder Engagement. In Sociology, Social Policy and Education 2024; Edward Elgar Publishing: Bingley, UK, 2024; pp. 487–491. [Google Scholar] [CrossRef]

- Demiris, G. Stakeholder Engagement for the Design of Generative AI Tools: Inclusive Design Approaches. Innov. Aging 2024, 8, 585–586. [Google Scholar] [CrossRef]

- Siew, R. Stakeholder Engagement. In Sustainability Analytics Toolkit for Practitioners; Palgrave Macmillan: Singapore, 2023. [Google Scholar] [CrossRef]

- Arora, A.; Vats, P.; Tomer, N.; Kaur, R.; Saini, A.K.; Shekhawat, S.S.; Roopak, M. Data-Driven Decision Support Systems in E-Governance: Leveraging AI for Policymaking. In Artificial Intelligence: Theory and Applications; Lecture Notes in Networks and Systems; Sharma, H., Chakravorty, A., Hussain, S., Kumari, R., Eds.; Springer: Singapore, 3 January 2024; Volume 844. [Google Scholar] [CrossRef]

- Luo, J.; Luo, X.; Chen, X.; Xiao, Z.; Ju, W.; Zhang, M. SemiEvol: Semi-supervised Fine-tuning for LLM Adaptation. arXiv 2024, arXiv:2410.14745. [Google Scholar]

- Uuk, R.; Brouwer, A.; Schreier, T.; Dreksler, N.; Pulignano, V.; Bommasani, R. Effective Mitigations for Systemic Risks from General-Purpose AI. SSRN. 2024. Available online: https://ssrn.com/abstract=5021463 (accessed on 23 May 2025).

- Nadeem, M.; Bethke, A.; Reddy, S. StereoSet: Measuring stereotypical bias in pretrained language models. arXiv 2020, arXiv:2004.09456. [Google Scholar]

- Robinson, R. Assessing gender bias in medical and scientific masked language models with StereoSet. arXiv 2021, arXiv:2111.08088. [Google Scholar]

- Bird, S.; Dudík, M.; Edgar, R.; Horn, B.; Lutz, R.; Milan, V.; Sameki, M.; Wallach, H.; Walker, K. Fairlearn: A Toolkit for Assessing and Improving Fairness in AI. Microsoft Research Technical Report MSR-TR-2020-32. 2020. Available online: https://www.microsoft.com/en-us/research/wp-content/uploads/2020/05/Fairlearn_WhitePaper-2020-09-22.pdf (accessed on 23 May 2025).

- Saleiro, P.; Kuester, B.; Hinkson, L.; London, J.; Stevens, A.; Anisfeld, A.; Rodolfa, K.T.; Ghani, R. Aequitas: A bias and fairness audit toolkit. arXiv 2018, arXiv:1811.05577. [Google Scholar]

- Rella, B.P.R. MLOps and DataOps integration for scalable machine learning deployment. Int. J. Multidiscip. Res. 2022, 4, 20. [Google Scholar]

- TruEra. TruEra Monitoring Delivers Important ML Model Insights Fast. 2023. Available online: https://truera.com/ai-quality-education/ml-monitoring/truera-ml-monitoring-delivers-important-ml-model-insights/ (accessed on 18 May 2025).

- Bate, A.B.d.A.R. Auditable Data Provenance in Streaming Data Processing. Master’s Thesis, Instituto Superior Técnico, University of Lisbon, Lisbon, Portugal, 2023. [Google Scholar]

- Zaharia, M.; Chen, A.; Davidson, A.; Ghodsi, A.; Hong, S.A.; Konwinski, A.; Murching, S.; Nykodym, T.; Ogilvie, P.; Parkhe, M.; et al. Accelerating the machine learning lifecycle with MLflow. IEEE Data Eng. Bull. 2018, 41, 39–45. [Google Scholar]

- Gadepally, V.; Kepner, J. Technical Report: Developing a Working Data Hub. arXiv 2020, arXiv:2004.00190. [Google Scholar]

- Alvarez-Romero, C.; Martínez-García, A.; Bernabeu-Wittel, M.; Parra-Calderón, C.L. Health data hubs: An analysis of existing data governance features for research. Health Res. Policy Syst. 2023, 21, 70. [Google Scholar] [CrossRef]

- Gade, K.R. Data Quality Metrics for the Modern Enterprise: A Data Analytics Perspective. MZ J. Artif. Intell. 2024, 1. Available online: https://mzresearch.com/index.php/MZJAI/article/view/416 (accessed on 23 May 2025).

- Yang, W.; Fu, R.; Amin, M.B.; Kang, B. Impact and influence of modern AI in metadata management. arXiv 2025, arXiv:2501.16605. [Google Scholar]

- Muppalaneni, R.; Inaganti, A.C.; Ravichandran, N. AI-Enhanced Data Loss Prevention (DLP) Strategies for Multi-Cloud Environments. J. Comput. Innov. Appl. 2024, 2, 1–13. [Google Scholar]

- Naik, S. Cloud-Based Data Governance: Ensuring Security, Compliance, and Privacy. Eastasouth J. Inf. Syst. Comput. Sci. 2023, 1, 69–87. [Google Scholar] [CrossRef]

- AIMultiple Research Team. Data Governance Case Studies. 2024. Available online: https://research.aimultiple.com/data-governance-case-studies/ (accessed on 14 March 2025).

- Google Cloud. Data Governance in Generative AI—Vertex AI. 2024. Available online: https://cloud.google.com/vertex-ai/generative-ai/docs/data-governance (accessed on 14 March 2025).

- Microsoft. AI Principles and Approach. 2024. Available online: https://www.microsoft.com/en-us/ai/principles-and-approach (accessed on 14 March 2025).

- Microsoft. Introducing Modern Data Governance for the Era of AI. 2024. Available online: https://azure.microsoft.com/en-us/blog/introducing-modern-data-governance-for-the-era-of-ai/ (accessed on 14 March 2025).

- Majumder, S.; Bhattacharjee, A.; Kozhaya, J.N. Enhancing AI Governance in Financial Industry through IBM watsonx.governance. TechRxiv. 30 March 2024. Available online: https://www.techrxiv.org/doi/full/10.36227/techrxiv.171177466.65923432 (accessed on 14 March 2025).

- Schneider, J.; Kuss, P.; Abraham, R.; Meske, C. Governance of generative artificial intelligence for companies. arXiv 2024, arXiv:2403.08802. [Google Scholar]

- Mökander, J.; Schuett, J.; Kirk, H.R.; Floridi, L. Auditing large language models: A three-layered approach. AI Ethics 2024, 4, 1085–1115. [Google Scholar] [CrossRef]

- Cai, H.; Wu, S. TKG: Telecom Knowledge Governance Framework for LLM Application. Res. Sq. 2023. [Google Scholar] [CrossRef]

- Asthana, S.; Zhang, B.; Mahindru, R.; DeLuca, C.; Gentile, A.L.; Gopisetty, S. Deploying Privacy Guardrails for LLMs: A Comparative Analysis of Real-World Applications. arXiv 2025, arXiv:2501.12456. [Google Scholar]

- Mamalis, M.; Kalampokis, E.; Fitsilis, F.; Theodorakopoulos, G.; Tarabanis, K. A Large Language Model Agent Based Legal Assistant for Governance Applications. ResearchGate. 2024. Available online: https://www.researchgate.net/publication/383360660_A_Large_Language_Model_Agent_Based_Legal_Assistant_for_Governance_Applications (accessed on 23 May 2025).

- Zhao, L. Artificial Intelligence and Law: Emerging Divergent National Regulatory Approaches in a Changing Landscape of Fast-Evolving AI Technologies. In Law 17 Oct 2023; Edward Elgar Publishing: Bingley, UK, 2023; pp. 369–399. [Google Scholar] [CrossRef]

- Imam, N.M.; Ibrahim, A.; Tiwari, M. Explainable Artificial Intelligence (XAI) Techniques To Enhance Transparency In Deep Learning Models. IOSR J. Comput. Eng. (IOSR-JCE) 2024, 26, 29–36. [Google Scholar] [CrossRef]

- Butt, A.; Junejo, A.Z.; Ghulamani, S.; Mahdi, G.; Shah, A.; Khan, D. Deploying Blockchains to Simplify AI Algorithm Auditing. In Proceedings of the 2023 IEEE 8th International Conference on Engineering Technologies and Applied Sciences (ICETAS), Bahrain, Bahrain, 25–27 October 2023; pp. 1–6. [Google Scholar] [CrossRef]

- Yang, F.; Abedin, M.Z.; Qiao, Y.; Ye, L. Toward Trustworthy Governance of AI-Generated Content (AIGC): A Blockchain-Driven Regulatory Framework for Secure Digital Ecosystems. IEEE Trans. Eng. Manag. 2024, 71, 14945–14962. [Google Scholar] [CrossRef]

- Zhao, Y. Audit Data Traceability and Verification System Based on Blockchain Technology and Deep Learning. In Proceedings of the 2024 International Conference on Telecommunications and Power Electronics (TELEPE), Frankfurt, Germany, 29–31 May 2024; pp. 77–82. [Google Scholar] [CrossRef]

- Chaffer, T.J.; von Goins II, C.; Cotlage, D.; Okusanya, B.; Goldston, J. Decentralized Governance of Autonomous AI Agents. arXiv 2024, arXiv:2412.17114. [Google Scholar]

- Nweke, O.C.; Nweke, G.I. Legal and Ethical Conundrums in the AI Era: A Multidisciplinary Analysis. Int. Law Res. Arch. 2024, 13, 1–10. [Google Scholar] [CrossRef]

- Van Rooy, D. Human–machine collaboration for enhanced decision-making in governance. Data Policy 2024, 6, e60. [Google Scholar] [CrossRef]

- Abeliuk, A.; Gaete, V.; Bro, N. Fairness in LLM-Generated Surveys. arXiv 2025, arXiv:2501.15351. [Google Scholar]

- Alipour, S.; Sen, I.; Samory, M.; Mitra, T. Robustness and Confounders in the Demographic Alignment of LLMs with Human Perceptions of Offensiveness. arXiv 2024, arXiv:2411.08977. [Google Scholar]

- Agarwal, S.; Muku, S.; Anand, S.; Arora, C. Does Data Repair Lead to Fair Models? Curating Contextually Fair Data To Reduce Model Bias. In Proceedings of the 2022 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 3–8 January 2022; pp. 3898–3907. [Google Scholar] [CrossRef]

- Simpson, S.; Nukpezah, J.; Brooks, K.; Pandya, R. Parity benchmark for measuring bias in LLMs. AI Ethics 2024. [Google Scholar] [CrossRef]

| Application | References |
|---|---|
| Cultural and algorithmic biases in LLMs | [39,40,41] |
| Data privacy and security concerns | [42,43,44] |
| Hallucination in LLMs | [45,46,47,48] |
| Ethical implications and misinformation | [49,50,51] |
| Failed deployment of LLMs | [52,53,54,55] |
| Regulatory compliance and legal concerns | [56,57,58] |
| Unintended destructive outputs | [59,60,61] |
| Lack of data validation and data quality control | [62,63,64,65] |
| Performance degradation from data evolution and drift | [66,67,68,69] |

| Aspect | Description |
|---|---|
| Data lifecycle management | Intelligent data governance is applied across the AI model's end-to-end lifecycle, from the development phase through the end of the deployment phase. |
| Regulatory compliance and legal frameworks | A scalable and flexible governance model adapts to global regulations such as GDPR, CCPA, HIPAA, the AI Act, and the AI RMF. |
| Ethical and fair AI practices | AI data governance ensures that AI models and systems operate transparently and fairly, without discriminating on attributes such as race, gender, religion, or age. |
| Data privacy and security | Intelligent data governance uses data privacy and encryption mechanisms to mitigate data breaches. It also helps prevent cyber threats and attacks (e.g., adversarial, model inversion, inference, and data poisoning attacks). |
| Data quality, integrity, and validation | Data quality, integrity, and validation are essential elements of data governance. These three factors directly affect the trustworthiness of AI models. |
| Data lineage and traceability | Data lineage and traceability are vital components of data governance: they help auditors trace data usage and support debugging and root cause analysis. |
| More secure end-to-end model deployment | This data governance approach supports secure and confident deployment of AI models through various pipelines (DevOps, MLOps, and LLMOps), covering the initial phase, robust model training, testing and validation, deployment, and post-deployment. |
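
The data lineage and traceability aspect in the table above can be illustrated with a minimal sketch. It assumes a hypothetical append-only `LineageLog` in which each pipeline step stores hashes of its inputs and outputs plus the hash of the previous entry, so an auditor can detect later tampering. The class, function, and step names are illustrative assumptions and do not come from any of the cited frameworks.

```python
import hashlib
import json
from dataclasses import dataclass, field


def fingerprint(records):
    """Return a SHA-256 digest of a list of JSON-serializable records."""
    payload = json.dumps(records, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


@dataclass
class LineageLog:
    """Hypothetical append-only log; each entry links to the previous entry's hash."""
    entries: list = field(default_factory=list)

    def record(self, step, inputs, outputs):
        prev = self.entries[-1]["entry_hash"] if self.entries else None
        body = {
            "step": step,
            "input_hash": fingerprint(inputs),
            "output_hash": fingerprint(outputs),
            "prev_hash": prev,
        }
        # The entry hash covers the four fields above, including the back-link.
        body["entry_hash"] = fingerprint([body])
        self.entries.append(body)
        return body

    def verify(self):
        """Return True if no entry was altered or reordered after logging."""
        prev = None
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "entry_hash"}
            if entry["prev_hash"] != prev:
                return False
            if fingerprint([body]) != entry["entry_hash"]:
                return False
            prev = entry["entry_hash"]
        return True


# Illustrative two-step pipeline: ingest raw records, then drop empty texts.
raw = [{"id": 1, "text": "Hello"}, {"id": 2, "text": ""}]
cleaned = [r for r in raw if r["text"]]

log = LineageLog()
log.record("ingest", [], raw)
log.record("clean", raw, cleaned)
print(log.verify())
```

Because the output hash of one step equals the input hash of the next, an auditor can follow a dataset through the pipeline; a production system would also need durable storage and access control, which this sketch omits.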

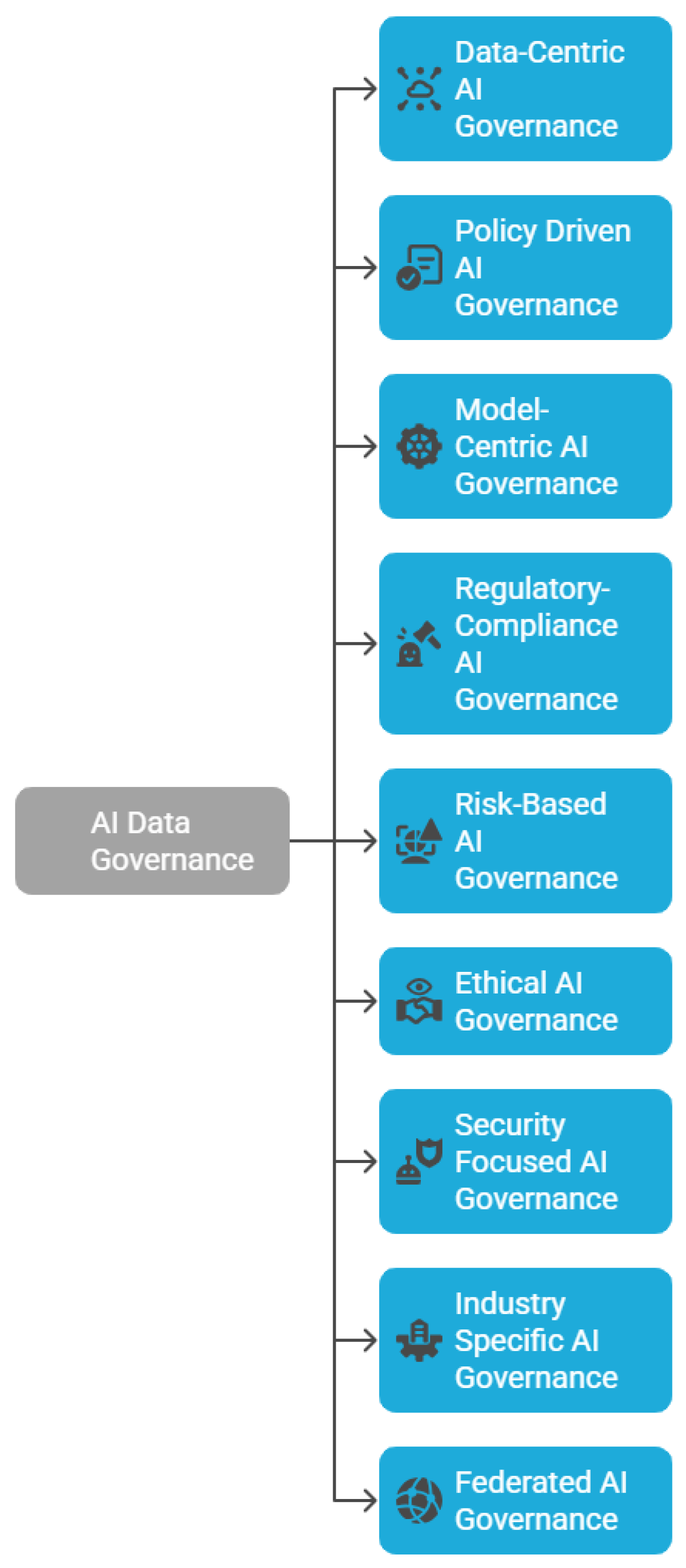

| Types of AI Governance | Focus | Key Aspects | References |
|---|---|---|---|
| Policy-driven governance | Regulations and compliance: focuses on policies and data protection laws | GDPR, HIPAA, AI Act, and CCPA | [84,85] |
| Data-centric governance | Data quality and integrity: ensures data fairness, accuracy, and bias mitigation | Master data management, data encryption, and third-party data sharing policies | [78,81,86] |
| Model-centric governance | Model explainability: focuses on the model’s lifecycle from the initial phase to the secure deployment phase | Model lifecycle management (MLOps and LLMOps) and model performance and accuracy | [86,87,88] |
| Risk-based governance | AI risk management: identifies potential AI risks (e.g., algorithmic bias, data privacy breaches, and security vulnerabilities) and applies data governance controls | Financial and operational risk, security and cyber risk management, and algorithmic risk management | [89,90] |
| Federated AI governance | Decentralized AI systems: AI model training with secure confidential data (e.g., training an AI model without sharing confidential patient data) | Decentralized model governance and accountability, and security and trust in federated systems | [91,92] |
| Regulatory-compliance governance | Adherence to laws: ensures models do not violate rights, privacy, or laws | Healthcare AI must comply with HIPAA regulations | [93,94] |
| Ethical AI governance | Fairness and bias prevention: identifies biased, unfair, and other discriminatory metrics | Transparency and explainability, ethical guidelines and frameworks (e.g., OECD AI principles), and safety and robustness | [95,96] |
| Security-focused governance | AI cybersecurity and attacks: protects models from various attacks (e.g., model inversion and prompt injection) | Model security and integrity, Cybersecurity Act (e.g., NIST and ENISA), and secure AI model development and post-deployment security | [97,98,99,100] |
| Industry-specific governance | Domain-based AI rules: ensures compliance is aligned with domain-specific regulation (e.g., pharma and healthcare domains) | AI-driven drug discovery follows FDA rules, healthcare AI must comply with HIPAA, and finance with GDPR | [101,102,103] |
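
The ethical AI governance row above refers to identifying biased or discriminatory metrics. As one hedged illustration, the sketch below computes the demographic parity difference, a common group-fairness measure defined as the gap between the highest and lowest positive-prediction rates across groups. The function names and the toy data are assumptions made for illustration; they are not taken from the surveyed papers.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Fraction of positive (1) predictions per group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Largest gap in selection rate between any two groups (0 means parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Toy data (hypothetical): binary model decisions for two groups, "a" and "b".
preds = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = selection_rates(preds, groups)
gap = demographic_parity_difference(preds, groups)
print(rates, gap)
```

Here group "a" receives positive predictions three times out of four and group "b" once out of four, so the gap is 0.5. A governance policy might flag any model whose gap exceeds an agreed threshold, though the appropriate metric and threshold depend on the application.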

| Domain | Governance Strategy | Outcomes | Challenges | References |
|---|---|---|---|---|
| Supply chain | Enforcement of data quality (e.g., accurate inventory levels and logistics data), transparency (e.g., routing optimizations), and model monitoring (e.g., drift and hallucination) | Better processes and fewer mistakes | Managing different data sources and keeping track of data history | [172,173,174] |