Abstract

The present study proposes an adaptive augmented reality (AR) architecture specifically designed to enhance real-time operator assistance and occupational safety in industrial environments representative of Industry 4.0. The proposed system addresses key challenges in AR adoption, such as the need for dynamic personalisation of instructions based on operator profiles and the mitigation of technical and cognitive barriers. The architecture integrates theoretical modelling, modular design, and real-time adaptability to match instruction complexity with user expertise and environmental conditions. A working prototype was implemented using Microsoft HoloLens 2, Unity 3D, and Vuforia and validated in a controlled industrial scenario involving predictive maintenance and assembly tasks. The experimental results demonstrated statistically significant improvements in task completion time, error rates, perceived cognitive load, operational efficiency, and safety indicators in comparison with conventional methods. The findings underscore the system’s capacity to enhance both performance and consistency while also strengthening risk mitigation in complex operational settings. The study thus contributes a scalable and modular AR framework with built-in safety and adaptability mechanisms, demonstrating practical benefits for human–machine interaction in Industry 4.0. The present study is subject to certain limitations, including validation in a simulated environment, which limits the direct extrapolation of the results to real industrial scenarios; further evaluation in various operational contexts is required to verify the overall scalability and applicability of the proposed system. It is recommended that future research explore the long-term ergonomics, scalability, and integration of emerging technologies in decision support within adaptive AR systems.

1. Introduction

The advent of Industry 4.0 in recent years has been a significant driver of the adoption of advanced technologies that facilitate digital integration in industrial processes. In this context, augmented reality (AR) has become a pivotal instrument for technical assistance and operator training, markedly enhancing efficiency and safety in industrial settings. AR overlays virtual information on the physical environment, thereby providing operators with direct access to real-time data within their field of view. This facilitates the execution of complex tasks without reliance on external information sources [1]. The technology has been demonstrated to be particularly useful in reducing errors and increasing productivity by providing visual guides adapted to specific tasks [2,3]. This capacity has positioned AR as a transformative technology not only in industry but also in other domains such as education, healthcare, marketing, and entertainment, where its implementation has revealed domain-specific challenges and design patterns [4].

Industry 4.0 is founded upon the principles of digitisation and connectivity, utilising technologies such as the Internet of Things (IoT), artificial intelligence (AI), and cloud computing, which facilitate optimised decision-making and enhanced operational efficiency [5]. In this context, AR is identified as a key enabler for human–machine interaction, with the potential to significantly contribute to maintenance and training assistance. This is achieved through contextualised data visualisation, which improves understanding of complex tasks. As indicated in a report by MarketsandMarkets [6], the global AR market is projected to expand from USD 15.3 billion in 2020 to USD 77.0 billion in 2025, exhibiting a compound annual growth rate (CAGR) of 38.1%. This underscores the significance of AR in contemporary industrial operations.

The advent of AR is effecting a transformation in the manner in which industrial workers are trained and assisted in their daily tasks. AR reduces the cognitive load placed upon the worker by providing real-time visual information superimposed upon the physical environment, which eliminates the need to consult manuals or external devices [7]. In maintenance, assembly, and disassembly tasks, AR systems have been demonstrated to markedly enhance accuracy and efficiency by enabling operators to follow step-by-step instructions displayed directly on components, accelerating processes, reducing failures, and enhancing occupational safety [8].

A number of studies have demonstrated the capacity of AR to facilitate the transfer of expert knowledge to novice workers. This is achieved by providing a platform for experts to collaborate remotely with operators, thereby enhancing both training and on-site problem-solving [9,10]. Furthermore, AR has been demonstrated to confer substantial benefits in task performance and reduced mental workload compared to conventional training techniques [11]. Nevertheless, despite the advantages that AR offers, the adoption of this technology still encounters technical challenges and difficulties in adapting to the specific requirements of users and the work environment [12].

One of the principal challenges associated with the implementation of AR in industrial contexts is the necessity to personalise the user experience. Recent studies have indicated that AR systems should be adaptive, offering the appropriate amount and type of information to the operator in accordance with their level of experience and the complexity of the task [13]. In this regard, adaptive solutions permit the adjustment of data visualisation in accordance with user preferences, thereby enhancing acceptability and mitigating the risk of cognitive overload [14]. Operators with greater experience require less information, whereas those who are less experienced benefit from detailed guidance. The capacity of AR systems to modify the content of instructions in real time, contingent on the worker’s progress and the complexity of the task, has demonstrated efficacy as a strategy to enhance performance [15]. Furthermore, technologies such as eye tracking and cognitive effort monitoring have optimised the personalisation of AR, ensuring that the information provided is always the most appropriate for each situation [16].

Another crucial aspect of AR in industrial settings is its capacity to enhance occupational safety through the provision of visual alerts regarding potential hazards in the physical environment. This is of particular importance in sectors where conditions can be hazardous and the risk of accidents is high, such as mining, construction, and heavy manufacturing [17]. The integration of safety alerts into the field of vision represents a significant advancement in occupational safety, as it not only enhances accident prevention but also reduces response time to critical situations.

Furthermore, AR has the potential to enhance preventive maintenance by furnishing operators with comprehensive data regarding equipment status and prospective failures, thereby enabling them to anticipate issues and implement proactive solutions before they materialise. This represents a significant benefit of AR in industrial contexts, where unanticipated downtime can result in substantial financial implications [18,19].

Despite the progress demonstrated, it is essential to establish a critical comparison between AR and other emerging technologies associated with Industry 4.0, such as digital twins, wearable robotics, and virtual reality (VR). Digital twins are distinguished by their capacity to execute precise simulations in synchrony with real-time data, thereby establishing them as pivotal instruments for predictive analysis and the enhancement of intricate processes [20]. VR, conversely, facilitates total immersion in virtual environments, thereby enabling training in safe and controlled contexts [21]. In contrast, AR is distinguished by its capacity to superimpose digital information on the physical environment in real time, thereby facilitating direct, contextualised, and dynamic operational assistance in real-life work scenarios. Recent studies [22] have demonstrated that, in technical tasks such as industrial maintenance or component assembly, AR outperforms both traditional methodologies and certain VR applications in terms of operational accuracy, error reduction, and time efficiency.

Nevertheless, the implementation of AR solutions on an industrial scale is constrained by technical limitations that must be surmounted to ensure their viability and performance in challenging contexts. The primary challenge is the latency associated with rendering and interaction with virtual objects, which can negatively impact the user’s perceptual synchronisation. In dynamic scenarios where tasks require immediate responses, latencies of between 300 and 500 milliseconds (ms) have been reported [23], values that compromise both the fluidity of the experience and operational safety. Another critical aspect is the precision in the superposition of digital elements onto physical components. This spatial precision is of particular relevance in sectors such as aerospace, where millimetre tolerances are of pivotal significance [24]. Consequently, calibration, tracking, and spatial anchoring systems must be sufficiently robust against variations in environmental conditions, such as changes in lighting or movements of the user and the object. A lack of reliability in alignment has been shown to induce operational errors, affect system efficiency, and significantly increase the cognitive load on the operator.

Notwithstanding the considerable potential offered by AR in industrial environments, its large-scale implementation continues to face significant technical challenges. These include the need to design interfaces that are not only ergonomic but also intuitive, capable of minimising distractions in complex or adverse operating conditions [25,26]. Moreover, the precision in the location and superposition of virtual elements on physical components is a critical requirement, especially in sectors that demand high quality and safety standards, such as the automotive industry [27]. In this context, the strengthening of spatial tracking and anchoring systems, together with the development of advanced methodologies for the dynamic personalisation of visual content, is emerging as a priority strategic line to consolidate the advancement of AR within the framework of Industry 4.0 [28].

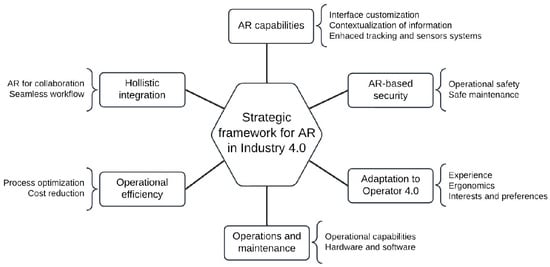

In light of these challenges, there is a clear need for an architectural framework that can address the technical barriers and facilitate a safe and efficient implementation of AR in Industry 4.0. Such an architecture should encompass the customisation of interfaces, the incorporation of AR-based safety mechanisms, and enhancements to tracking and sensor systems, with the objective of guaranteeing the precision and contextualisation of information. This approach would not only enhance the user experience but also make a substantial contribution to safety and productivity in industrial environments. At the nexus of these challenges lies a strategic framework that integrates AR capabilities and responds to the requirements of maintenance and the Operator 4.0 profile. This framework must be aligned with the needs of personalisation, contextualisation, and technical requirements, adapting to variable operator profiles and tasks [29,30] (Figure 1).

Figure 1.

Strategic framework for implementation of AR in Industry 4.0.

The principal objective of this study is to devise and implement an adaptive AR architecture with the aim of optimising real-time operational assistance and reinforcing safety in industrial environments that are characteristic of Industry 4.0. This architectural model seeks to address the existing limitations in the literature regarding the implementation of AR. It proposes a model that facilitates the efficient integration of this technology in industrial processes while allowing the dynamic customisation of instructions and operational guides according to the operator’s skills and the characteristics of the environment.

The proposed adaptive AR architecture aims to improve operational efficiency by reducing execution times and errors in tasks such as predictive maintenance and the assembly and connection of components. It also aims to reduce the cognitive load perceived by operators by providing information directly in their field of vision. Additionally, the objective is to reinforce industrial safety by integrating visual alerts and interactive guides to facilitate the early detection and prevention of risks while promoting technological acceptance through the design of intuitive, ergonomic interfaces that are tailored to the capabilities and experience of users.

In alignment with the technological advancements and challenges outlined in the existing literature, this study examines the potential of adaptive AR models to overcome technical, operational, and standardisation barriers, offering a scalable solution that aligns with the customisation and precision demands of Industry 4.0. The validation of the proposed architectural model is conducted through the development and implementation of a functional prototype, utilising AR devices such as the HoloLens 2 (Microsoft Corporation, Redmond, WA, USA) and integrating cutting-edge technologies such as Unity 3D 2022.3.10f1 (Unity Technologies, San Francisco, CA, USA), Vuforia Engine 10.16 (PTC Inc., Boston, MA, USA), and industrial sensors for real-time monitoring.

2. Technological Advances and Challenges of AR in Industrial Contexts

2.1. AR Applications in Industry 4.0

AR has emerged as a transformative technology within Industry 4.0, playing a crucial role in real-time monitoring and industrial safety. The capacity of AR to create an interactive visual environment enables operators to receive real-time instructions, data, and alerts superimposed on the physical environment, thereby facilitating the execution of complex tasks and reducing cognitive load [31]. This technology has been demonstrated to be effective in a number of specific applications, including operator training, predictive maintenance, assembly tasks, and data visualisation. It enables a rapid and safe response in situations that require a high level of responsiveness and present a significant industrial risk [32].

The application of AR in industrial contexts shares foundational principles with implementations in other sectors, where immersive technologies have been increasingly adopted to support training, engagement, and task performance [33]. This cross-sectoral perspective lends further credence to the notion that the efficacy of AR is contingent upon its capacity to adapt to the demands of users and tasks in real time.

The efficacy of AR systems in facilitating complex industrial tasks, such as equipment maintenance and operation, has been substantiated by numerous studies. Operators can be guided by step-by-step visual instructions in their field of vision, thereby reducing the likelihood of errors that may arise from relying on physical manuals or external devices [34,35]. The implementation of devices such as smart glasses that display interactive instructions has been demonstrated to reduce the probability of operational errors by providing continuous feedback and eliminating the necessity to switch between information sources. This is particularly pertinent in sectors where safety and quality are of paramount importance, such as manufacturing and assembly [36,37,38]. Moreover, in the context of training, AR supports the simulation of intricate scenarios within a safe and controlled setting, allowing operators to practise tasks without exposure to physical hazards [39,40].

Moreover, AR has been demonstrated to be an efficacious instrument in the domain of predictive maintenance, furnishing prompt insight into the condition of equipment and the identification of prospective failures, thus empowering operators to undertake preventive interventions [41]. This proactive approach contributes to a significant reduction in unplanned downtime, thereby optimising operational efficiency and minimising associated costs, particularly in industrial sectors where outages can generate considerable economic impacts.

2.2. Main Challenges and Constraints

Despite the advantages it offers, the adoption of AR in Industry 4.0 is hindered by significant technical and operational barriers. The high development costs of these systems and the difficulty of integration in complex and varied industrial environments represent the most significant obstacles [42]. The accuracy of tracking systems and the superimposition of virtual elements on the physical environment are critical aspects that present significant challenges, particularly in sectors where accuracy is essential for operational safety, such as aeronautics [43]. Technical limitations in the alignment and stability of superimposed visual elements affect the effectiveness of AR in applications that require high precision.

A further significant challenge is the lack of standardisation in the development and evaluation of AR systems. The heterogeneity of industrial environments and needs hinders the creation of universal applications, since it is difficult to implement AR solutions that can be transferred between sectors without significant adjustments to software or hardware [44]. Furthermore, the heterogeneity in operator skill and experience levels represents an additional challenge for developers, who must design ergonomic and flexible interfaces capable of adjusting to varying working conditions and user profiles [45].

In terms of occupational safety, AR has the potential to enhance working conditions by projecting real-time visual alerts directly into the operators’ field of vision, alerting them to potential hazards and facilitating a prompt response to dangerous situations. However, the safe implementation of these systems necessitates the use of intuitive interfaces and interactive verification protocols to guarantee compliance with safety measures and prevent potential omissions [46]. It is therefore imperative that these systems are designed with great care in order to mitigate the risk of critical steps being omitted as a result of overreliance on technology. Such an omission could negate the safety benefits that AR offers.

2.3. AR Adaptive Assistance Models

The importance of personalisation in AR assistance has increased in recent years [47], indicating the necessity to modify visual content and information density in accordance with the operator’s proficiency level and task context. Adaptive assistance models in AR facilitate the real-time adjustment of the interface, thereby optimising performance and reducing cognitive load while enhancing safety [48].

Adaptive AR models rely on methodologies that create personalised experiences based on user progress and task complexity. For instance, real-time adaptation in railway maintenance [49] employs AR to guide operators through complex processes by adjusting visual content. The flexibility of these systems has also been applied in the field of visualisation and assistance in industrial operations [50]. Such systems provide appropriate guidance for each situation and minimise operator errors by supplying the exact amount of information needed at each stage of the task.

This type of adaptive assistance in AR represents a significant advancement in terms of usability and acceptance of the technology [51], particularly in the context of Industry 4.0, where personalisation, precision, and safety are paramount, and the capacity to adapt content to the operator’s capabilities and progress has become crucial to optimise performance and ensure the efficacy of AR in industrial applications.

3. Adaptive Architecture Proposal for AR Assistance in Industry 4.0

Industry 4.0 signifies a profound transformation in industrial production and monitoring systems through the integration of sophisticated technologies such as AR. In this context, the implementation of an adaptive AR architecture becomes a crucial element to enhance precision, efficiency, and safety in the execution of industrial tasks. This architectural design is intended to facilitate real-time operational assistance, thereby enabling operators to interact with dynamic environments in an effective and safe manner while simultaneously reducing the risks associated with human error and enhancing the user experience in industrial settings.

The proposed architecture responds to the specific challenges of Industry 4.0, where the integration of digitalisation and automation with systems that promote both adaptability and real-time operational safety is a necessity [29]. A fundamental tenet of this architectural framework is its capacity to adapt the level of detail in AR instructions in a dynamic manner, contingent on the operator’s proficiency and the specific attributes of the operational milieu. To illustrate, less experienced operators receive comprehensive and detailed guidance, which reduces the cognitive load, while more experienced operators access more concise and focused representations, thus optimising operational efficiency and accuracy in the execution of critical tasks.
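The tenet described above can be illustrated with a minimal sketch. It assumes a hypothetical three-level proficiency scale and an illustrative `Step` structure; none of these names come from the prototype, and the rule that hazard notes are shown at every level is an assumption made for the example rather than a documented behaviour of the system.

```python
from dataclasses import dataclass
from enum import Enum


class Proficiency(Enum):
    """Hypothetical proficiency scale (assumption, not from the paper)."""
    NOVICE = 1
    INTERMEDIATE = 2
    EXPERT = 3


@dataclass(frozen=True)
class Step:
    summary: str                    # concise cue, shown to every operator
    details: tuple[str, ...] = ()   # extra sub-steps for less experienced operators
    hazard_note: str = ""           # safety text; assumed never to be hidden


def render_step(step: Step, proficiency: Proficiency) -> list[str]:
    """Return the text lines to overlay for one step at a given proficiency."""
    lines = [step.summary]
    if proficiency is Proficiency.NOVICE:
        lines.extend(step.details)        # full guidance
    elif proficiency is Proficiency.INTERMEDIATE:
        lines.extend(step.details[:1])    # illustrative: first sub-step only
    if step.hazard_note:
        lines.append("WARNING: " + step.hazard_note)
    return lines
```

For example, `render_step(Step("Replace filter", ("Open panel", "Unscrew cap"), "Hot surface"), Proficiency.EXPERT)` yields only the summary and the warning, whereas the same step rendered for a novice also lists both sub-steps.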

The architectural design incorporates principles such as information reuse, dynamic updating, and bidirectional interaction with the physical environment, thereby ensuring the system’s flexibility and facilitating its deployment in a diverse range of industrial settings. The reuse of information ensures that AR guides can be adapted to different platforms and configurations without the necessity for extensive customisation. Furthermore, the dynamic loading of data enables continuous updating without interruption to ongoing operations. Moreover, real-time bidirectional interaction enables the system to adapt continuously to changing conditions in the operational environment, thereby optimising the user experience and task execution efficiency.

The architectural lifecycle is structured into four principal phases: planning, enrichment, execution, and review. These phases guarantee that the system not only adapts to the requirements of each environment but also undergoes continuous evolution in response to feedback derived from operational data. During the execution phase, the system collates pertinent data, including operational time and error rates, which are then used to modify the AR instructions in real time in order to reflect the specific requirements of the operator and the environment. In the review phase, these metrics are analysed with a view to identifying potential avenues for improvement. This enables the constant evolution of operational strategies and ensures the reliability and dynamism of the system. This iterative and adaptive approach is fundamental to meeting the accuracy, safety, and efficiency standards required in the context of Industry 4.0.
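The four-phase lifecycle can be sketched as a small state machine. This is a hedged illustration only: the class and field names (`Lifecycle`, `TaskRecord`, `duration_s`) are hypothetical, and the assumption that the review phase wraps back to planning reflects the iterative character described in the text rather than a documented implementation detail.

```python
from dataclasses import dataclass, field
from enum import Enum
from statistics import mean


class Phase(Enum):
    PLANNING = "planning"
    ENRICHMENT = "enrichment"
    EXECUTION = "execution"
    REVIEW = "review"


@dataclass
class TaskRecord:
    task_id: str
    duration_s: float   # operational time for the task
    errors: int         # errors observed during the task


@dataclass
class Lifecycle:
    phase: Phase = Phase.PLANNING
    records: list[TaskRecord] = field(default_factory=list)

    def advance(self) -> Phase:
        """Move to the next phase; review wraps back to planning (assumption)."""
        order = list(Phase)
        self.phase = order[(order.index(self.phase) + 1) % len(order)]
        return self.phase

    def log(self, record: TaskRecord) -> None:
        """Collect operational metrics; only allowed during execution."""
        if self.phase is not Phase.EXECUTION:
            raise RuntimeError("metrics are only collected during execution")
        self.records.append(record)

    def review(self) -> dict[str, float]:
        """Summarise the collected metrics; only allowed during review."""
        if self.phase is not Phase.REVIEW:
            raise RuntimeError("summary is only produced during review")
        if not self.records:
            return {"mean_duration_s": 0.0, "errors_per_task": 0.0}
        return {
            "mean_duration_s": mean(r.duration_s for r in self.records),
            "errors_per_task": sum(r.errors for r in self.records) / len(self.records),
        }
```

Restricting `log` and `review` to their own phases keeps the separation between data collection and analysis explicit, which is one possible way to make the iterative cycle auditable.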

In light of the aforementioned considerations, the following subsections will provide a detailed examination of the technical and methodological rationale underlying this proposal. Firstly, the fundamental principles that underpin the design of AR systems for real-time monitoring will be discussed, with particular emphasis on the pillars of adaptability, user-centred design, and operational safety. Subsequently, a particular architectural framework for real-time operational assistance based on AR will be presented. This will address the modular structure of the framework, the dynamics of interaction with operators and the system’s ability to customise instructions according to the specific needs of the industrial context.

3.1. Design Principles of AR Systems for Real-Time Monitoring

The design of an AR system for implementation in Industry 4.0 must be based on principles that ensure efficient and safe integration into the industrial environment. This is particularly important in contexts where there is considerable variability in operator experience and skill, and the risks associated with human error are significant. The proposed architectural framework is founded upon three fundamental principles: adaptability, user-centred design, and operational safety. These principles not only inform the structuring of the AR modules and components but also seek to optimise the operator’s interaction with the digital tools, prioritising real-time operational assistance that is flexible and adapted to both the user’s needs and the characteristics of the industrial environment.

Adaptability is a fundamental tenet of the architectural design of AR systems intended for deployment in industrial settings. The necessity for adaptability arises from the inherent diversity of operator skills and experience, which demands a flexible system capable of dynamically adjusting the level of detail and complexity of instructions. To illustrate, AR systems must be capable of tailoring the quantity and nature of information presented to the user. For those with less experience, the system must furnish comprehensive and visually detailed guidance, whereas for more seasoned operators, it is vital to provide more summarised and straightforward representations that prioritise efficiency and avoid superfluous redundancies.

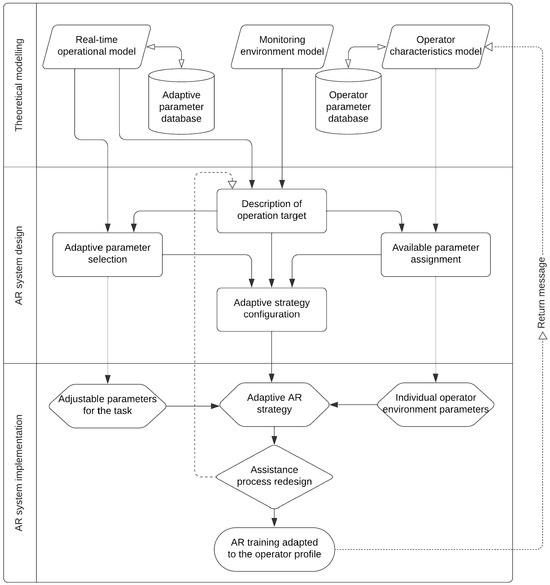

In this context, a comprehensive methodology for the modelling, design, and implementation of an AR-based adaptive assistance system is presented in Figure 2. The methodology is structured around three fundamental phases. The initial phase, designated theoretical modelling, is concerned with the construction of conceptual models that delineate the pivotal operational parameters and contextual characteristics of the industrial milieu. This enables the collation and organisation of pertinent data for adaptive personalisation. The second phase, designated as AR system design, entails the organisation of the aforementioned data and the development of adaptive strategies that align operator capabilities with the specific demands of the task. These strategies comprise dynamic parameter setting and the exact definition of operational objectives, thereby ensuring that instructions are both contextually pertinent and tailored to the specific requirements of each operator. Finally, the adaptive implementation phase enables the system to adjust the presented AR guidance in real time, utilising continuous feedback processes to optimise the interaction between the operator and the system. This facilitates the maximisation of operational efficiency and safety in the work environment.

Figure 2.

Proposed methodology for modelling, designing, and implementing an AR-based adaptive assistance system.

The initial phase, theoretical modelling, serves to establish conceptual and operational foundations through the implementation of three interdependent models. The real-time operations model defines the principal operational parameters that permit adjustments to tasks in accordance with the evolving conditions of the environment. This provides the initial basis for operational customisation. The environment monitoring model gathers specific data from the operating environment and organises it in a database comprising tools, work objects, and environmental conditions, thereby ensuring an adequate flow of information for contextual analysis. Finally, the operator characteristics model categorises users according to their competencies and experience, generating indicators that serve as a foundation for the personalisation of AR instructions, thereby facilitating the adaptation of the system to different user profiles. These models interact through specialised databases, such as the adaptive parameters database and the operator characteristics database, which serve as repositories of information to feed subsequent stages of the process.
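The three models of the theoretical modelling phase can be pictured as simple data structures. The following sketch is illustrative: all field names and the categorisation thresholds (five years, one year) are assumptions chosen for the example, not values reported in the study.

```python
from dataclasses import dataclass, field


@dataclass
class OperationParameters:
    """Real-time operations model: parameters that tasks are adjusted against."""
    task_id: str
    target_duration_s: float
    max_errors: int


@dataclass
class EnvironmentSnapshot:
    """Environment monitoring model: tools, work objects, ambient conditions."""
    tools: list[str]
    work_objects: list[str]
    conditions: dict[str, float]   # e.g. {"lux": 300.0, "noise_db": 72.0}


@dataclass
class OperatorProfile:
    """Operator characteristics model: competencies and experience."""
    operator_id: str
    years_experience: float
    certified_tasks: set[str] = field(default_factory=set)

    def category(self, task_id: str) -> str:
        """Derive an indicator for personalisation (thresholds are hypothetical)."""
        if task_id in self.certified_tasks and self.years_experience >= 5:
            return "expert"
        if self.years_experience >= 1:
            return "intermediate"
        return "novice"
```

Here the category is computed per task, so an operator certified for assembly may still be treated as intermediate for an unfamiliar maintenance procedure.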

The second phase, designated as AR system design, entails the organisation of data obtained in the modelling stage and the configuration of adaptive strategies through a structured flow. The selection of adaptive parameters identifies the critical elements that require adjustment to optimise the interaction between operator capabilities and task demands, thereby ensuring efficient alignment between available resources and operational objectives. Subsequently, the description of the target operation defines the principal elements and characteristics of the operational process, thereby providing the foundation for the configuration of adaptive strategies. The aforementioned strategies, which are delineated in the adaptive configuration stage, integrate the selected parameters and the operator’s characteristics, thereby generating a bespoke model that considers both the user’s constraints and strengths.

The final phase, the implementation of the AR system, is concerned with dynamic, real-time interaction with the operator. This involves the integration of continuous feedback processes, which allow instructions to be adjusted as the operation progresses. The adaptive approach in AR entails the application of bespoke settings to modify both the visual and textual content displayed to the operator, thereby ensuring its alignment with their real-time performance. The system can thus respond dynamically to the operator’s requirements, with instructions being adapted based on key performance indicators such as error rate and execution time. The redesigned assistance process permits the modification of operating procedures based on these metrics, thereby optimising not only safety but also task productivity. The last step of this phase is the provision of training tailored to the specific profile of the operator, conducted with AR guides that are dynamically adjusted with the objective of enhancing efficiency and reducing operational errors.
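One way to picture the feedback process is a rule that raises or lowers the instruction detail level after each step, driven by error count and execution time. The sketch below is an assumption-laden illustration: the three-level scale and the `slow_factor` and `fast_factor` thresholds are hypothetical and are not taken from the prototype.

```python
from dataclasses import dataclass

MIN_LEVEL, MAX_LEVEL = 0, 2   # 0 = concise cues, 2 = fully detailed guidance


@dataclass
class StepOutcome:
    duration_s: float   # measured execution time of the step
    expected_s: float   # reference time for the step (must be > 0)
    errors: int         # errors detected during the step


def next_detail_level(
    level: int,
    outcome: StepOutcome,
    slow_factor: float = 1.5,   # hypothetical threshold for "too slow"
    fast_factor: float = 0.8,   # hypothetical threshold for "comfortably fast"
) -> int:
    """Raise detail after errors or slow steps; lower it after a fast, clean step."""
    ratio = outcome.duration_s / outcome.expected_s
    if outcome.errors > 0 or ratio > slow_factor:
        level += 1
    elif ratio < fast_factor:
        level -= 1
    # Clamp to the supported range of detail levels.
    return max(MIN_LEVEL, min(MAX_LEVEL, level))
```

Errors take priority over speed in this sketch, so a fast but faulty step still increases the level of guidance.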

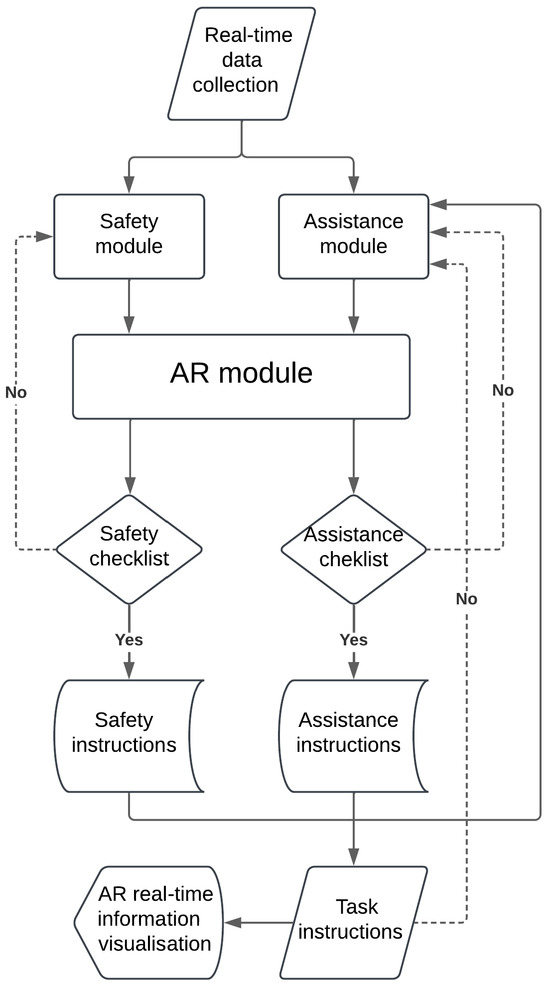

Building on this methodology, a structured AR-based real-time assistance system is presented (Figure 3). This system integrates safety and operational assistance modules, which guide the user through each step of the workflow. The design commences with the collection of real-time data, which are then fed into two principal modules: the safety module, which is responsible for ensuring that all regulations are adhered to before work commences, and the assistance module, which analyses the operator’s preparedness to commence the tasks. These modules are interconnected with the AR module, which is tasked with centralising operations and presenting the operator with adaptive, interactive checklists that control each phase of the process.

Figure 3.

Structure of the real-time AR-based assistance system.

The operational flow commences with the interaction of the safety module, which generates an interactive safety checklist. This ensures that the operator has completed all actions necessary to comply with safety standards before proceeding to the subsequent module. Upon successful completion of this stage, the system initiates the assistance checklist, which is designed to confirm the operator’s readiness to perform the designated tasks. These processes are supported by centralised databases that store instructions and adaptive content, which are customised according to the operator’s profile and the characteristics of the environment.
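The gating behaviour of this flow, in which the readiness checklist is only reached once every safety item has been confirmed, can be sketched as follows. The `Checklist` class, the `current_stage` function, and the sample item texts are hypothetical names introduced for illustration only.

```python
from dataclasses import dataclass, field


@dataclass
class Checklist:
    name: str
    items: dict[str, bool] = field(default_factory=dict)   # item text -> confirmed?

    def confirm(self, item: str) -> None:
        """Mark one item as confirmed by the operator."""
        if item not in self.items:
            raise KeyError(item)
        self.items[item] = True

    def pending(self) -> list[str]:
        """Items that still block progression, in their original order."""
        return [text for text, done in self.items.items() if not done]

    def complete(self) -> bool:
        return not self.pending()


def current_stage(safety: Checklist, readiness: Checklist) -> str:
    """Safety gates readiness; readiness gates the task itself."""
    if not safety.complete():
        return "safety"
    if not readiness.complete():
        return "readiness"
    return "task"
```

Because `current_stage` is recomputed from the checklist state rather than stored, an item that is un-confirmed later (for example, after a sensor reports removed protective equipment) would automatically return the operator to the safety stage.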

Subsequently, the AR module displays safety and assistance instructions in real time, which are presented by AR devices directly in the operator’s field of vision. The instructions, which include visual guides and specific procedures, are adapted dynamically based on performance data and operating conditions. This allows the content to be adjusted according to the contextual needs and metrics of the operator, such as their level of experience or real-time performance.
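One way to picture this adaptation is as a rule that starts from the operator's experience level and increases the amount of guidance when recent performance degrades. The sketch below is an assumption-laden illustration: the three detail levels, the thresholds, and the names `OperatorState` and `select_detail_level` are hypothetical and are not taken from the prototype.

```python
from dataclasses import dataclass


@dataclass
class OperatorState:
    experience: str     # "novice", "intermediate" or "expert"
    error_rate: float   # errors per completed step over a recent window
    time_ratio: float   # actual step time divided by expected step time


# Ordered from least to most guidance (hypothetical levels).
LEVELS = ["concise", "standard", "detailed"]


def select_detail_level(state: OperatorState,
                        max_error_rate: float = 0.2,
                        max_time_ratio: float = 1.3) -> str:
    """Pick an instruction detail level from profile and live metrics."""
    base = {"expert": 0, "intermediate": 1, "novice": 2}[state.experience]
    struggling = (state.error_rate > max_error_rate
                  or state.time_ratio > max_time_ratio)
    if struggling:
        # Escalate by one level, capped at the most detailed one.
        base = min(base + 1, len(LEVELS) - 1)
    return LEVELS[base]
```

For example, an expert who is working within the expected time and error limits would receive concise prompts, whereas the same expert exceeding the error threshold would be moved to standard guidance.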

The incorporation of these modules is crucial for reducing risk and enhancing operational efficiency. For instance, validating each phase through interactive checklists markedly diminishes the probability of human error and enforces a regulatory compliance check before the operator can progress. Furthermore, the iterative flow of the system enables AR to serve not only as a visualisation tool but also as a comprehensive control and assistance system, capable of responding dynamically to the demands of the environment.

3.2. Architecture Proposal for Real-Time AR Assistance in Industry 4.0

The proposed architecture for AR assistance is founded upon principles of scalability, security, and modularity. This design permits the efficient integration of multiple interdependent components that work together to provide a contextualised assistance system. As previously stated, the modular design incorporates real-time interaction with the instruction database, integration of assistance and safety modules, and dynamic deployment of information tailored to the operational environment and operator profile.

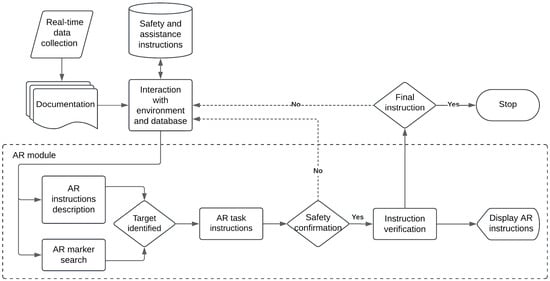

The system is structured around the following four principal modules: virtual data, real-world interaction, safety, and real-time assistance. Each module fulfils a distinct function within the adaptive assistance chain, collectively ensuring the integrated delivery of personalised, dynamic, and real-time accessible operational and safety instructions, thereby optimising user experience while minimising operational risks (Figure 4).

Figure 4.

Industrial adaptive assistance AR module workflow.

The workflow of the AR module and its interaction with the instruction database form the foundation of the real-time assistance system designed for industrial environments. They facilitate the organised collection, processing, deployment, and verification of virtual content, thereby ensuring accurate synchronisation and safe execution of operations. The initial process is concerned with the real-time collection of data, which are then processed and stored in a structured documentation system that serves as a central repository for operational and safety instructions. Subsequently, the data are integrated into the environment and database interaction component, thereby enabling instructions to be synchronised with the specific conditions of the operation.

The AR instructions description sub-module plays a pivotal role within the AR module flow, as it presents detailed textual and visual information that contextualises the operator with respect to the task parameters. This is achieved by organising and displaying structured content, including diagrams, instructions, and visual markers. This allows the operator to become familiar with the specific requirements before commencing the operation, which is of paramount importance in minimising ambiguity and reducing the margin of error, particularly in complex tasks.

The AR marker search sub-module serves to extend the interaction capabilities of the system by implementing image processing and pattern recognition, which enable the precise location and tracking of visual markers in the operator’s physical environment. This sub-module establishes a correspondence between virtual instructions and the operating environment, thereby facilitating the precise superimposition of virtual elements on physical components.

The process culminates in the instruction verification sub-module, which bears responsibility for the sequential validation of each step through the utilisation of interactive checklists. This ensures that each action performed complies with the established requirements before advancing to the subsequent step and providing real-time confirmations. It is crucial to emphasise the significance of these validation systems in risk management. In conjunction with the prompt feedback of data, this enables the guidelines and instructions provided to the operator to be adjusted dynamically in accordance with the performance metrics of the task being undertaken by the operator. In this manner, the assistance provided is optimised and efficient, and safe execution is facilitated in accordance with the principles of adaptive design.

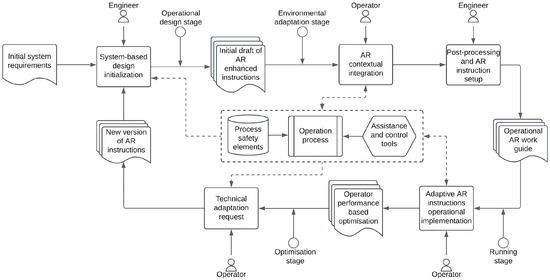

This modular and scalable approach integrates interdependent components, thereby facilitating efficient collaboration and the delivery of contextualised operational assistance in real time. The system’s capacity to adapt both the content and level of assistance in response to the challenges of Industry 4.0, which is characterised by variability in operator experience and task complexity, represents a significant advantage. The system follows an adaptive lifecycle, as illustrated in Figure 5, comprising four main phases: operational design, environmental adaptation, execution, and optimisation. These iterative stages ensure the constant evolution of the system by incorporating real-time feedback and updating work instructions according to the dynamic conditions of the operating environment and operator performance. This enables the system to remain efficient and relevant to the changing demands of the industrial environment.

Figure 5.

Adaptive lifecycle for real-time AR assistance.

The initial phase, operational design, establishes the fundamental basis of the system by defining the preliminary requirements and configuration of the AR instructions. At this juncture, the engineers utilise the system-based design initialisation module to develop an initial draft of AR-enriched instructions. This preliminary version comprises a fundamental structure with task descriptions, technical parameters, and reference models, incorporating placeholders for specific content to be adapted in subsequent stages. This enables the system to be flexible and adaptable, ensuring that it can be rapidly adjusted to different operational contexts without the necessity for extensive redesigns.

The second phase, environmental adaptation, incorporates a contextual integration process in which the system captures specific data from the operating environment using AR devices. The data obtained, including 3D models, reference images, and site data, are integrated in real time through the AR contextual integration module. This stage is crucial for the customisation and enhancement of the instructions, ensuring the accurate and contextual overlay of virtual elements on the physical environment. The personalisation achieved at this stage serves to reduce the cognitive load on the operator while also improving operational accuracy by providing adaptive visual guidance that is specific to the actual working conditions.

In the third phase, execution, the system deploys the adapted instructions in real time using the “Adaptive AR instructions operational implementation” module. During this phase, the operator’s performance is monitored using metrics such as operating time, error rate, and compliance with safety protocols. This continuous monitoring enables the system to adjust the operational guidelines in response to operator behaviour and environmental demands. Furthermore, the “Process safety elements” and “Assistance and control tools” modules are integrated to enforce adherence to safety protocols and to facilitate prompt technical assistance, thereby supporting a secure and productive work environment.

The final phase, optimisation, comprises a comprehensive analysis of the data collected during execution through the “Operator performance-based optimisation” module. This analysis is conducted in collaboration with engineers and operators, who work together to adjust instructions and improve operating procedures. This process reaches its conclusion with the generation of new iterations of AR-enhanced instructions through the “New version of AR instructions” module, which are then integrated back into the system, thereby restarting the iterative cycle. This ensures that the system evolves proactively, adapting to the changing demands of Industry 4.0 and promoting constant improvement in safety, efficiency, and operational adaptability.
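The four-phase lifecycle can be read as a cyclic state machine in which each pass through optimisation produces a new version of the AR instructions. The following sketch illustrates only that control flow; the `Phase` enumeration and the `run_cycles` helper are hypothetical names, and the version counter stands in for the output of the “New version of AR instructions” module.

```python
from enum import Enum


class Phase(Enum):
    OPERATIONAL_DESIGN = 1
    ENVIRONMENTAL_ADAPTATION = 2
    EXECUTION = 3
    OPTIMISATION = 4


def next_phase(phase: Phase) -> Phase:
    """Advance to the following phase, wrapping back to design at the end."""
    order = list(Phase)
    return order[(order.index(phase) + 1) % len(order)]


def run_cycles(n_steps: int, start: Phase = Phase.OPERATIONAL_DESIGN):
    """Yield (instruction_version, phase) for n_steps consecutive phases."""
    version, phase = 1, start
    for _ in range(n_steps):
        yield version, phase
        if phase is Phase.OPTIMISATION:
            version += 1  # optimisation emits a new instruction version
        phase = next_phase(phase)
```

Running five steps therefore visits all four phases with version 1 and re-enters operational design with version 2.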

The proposed adaptive AR architecture represents a substantial advancement over existing models by incorporating real-time dynamic personalisation, operator profile-based adjustments, and modular scalability. In contrast to conventional approaches, which frequently depend on static instruction sets, our architecture utilises adaptive instructions and continuous feedback mechanisms to adapt the information presented to operators according to their skill levels and the operational context. This adaptability has the potential to reduce cognitive load, enhance operational efficiency, and improve safety measures, addressing the critical demands of Industry 4.0 environments. The architecture’s scalability and flexibility are ensured by its modular design, allowing for deployment in a variety of industrial scenarios while maintaining consistent performance and ease of use.

4. Implementing AR in Industrial Scenarios

The proposed AR architecture was tested experimentally through the development and implementation of a working prototype in a simulated industrial environment. This environment was specifically designed to focus on predictive maintenance operations and the assembly of an asynchronous induction motor. For the purposes of visualisation and interaction with the AR system, Microsoft HoloLens 2 devices were employed, selected on the basis of their capacity to provide an immersive and hands-free experience, which is of particular importance in industrial environments where mobility and safety are of paramount concern.

4.1. System Configuration and Experimental Environment

The system was developed using Unity 3D as the graphics engine, complemented by the Vuforia SDK for AR object recognition and tracking, and was deployed on the Microsoft HoloLens 2 devices described above.

The asynchronous induction motor is a widely utilised component in industry, primarily due to its robust construction and energy-efficient operation. The motor has a nominal power of 5 kW, operates at a voltage of 400 V and a frequency of 50 Hz, with a synchronous speed of 1500 rpm, a power factor of 0.85, and an efficiency of 92%. The motor is equipped with a star/delta connection, an insulation class of F, and an IP55 protection rating, rendering it well-suited for deployment in challenging industrial settings. Furthermore, the design and implementation of the system complied with safety and risk reduction standards for industrial machinery, as evidenced by compliance with international standards such as ISO 12100:2010 [52] on machine safety and ISO 13849-1:2023 [53] on safety control systems.
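As a plausibility check on these nameplate values, the pole count and the approximate rated line current can be derived from standard three-phase relations. The calculation below is a sketch under two assumptions that the text does not state explicitly: that 5 kW is the rated mechanical output power and that 400 V is the line-to-line voltage.

```latex
% Pole count p implied by the synchronous speed n_s at supply frequency f
n_s = \frac{120\,f}{p}
\quad\Rightarrow\quad
p = \frac{120 \times 50\ \text{Hz}}{1500\ \text{rpm}} = 4

% Rated line current from output power, efficiency and power factor
I_L = \frac{P_\text{out}}{\sqrt{3}\,V_{LL}\,\eta\,\cos\varphi}
    = \frac{5000\ \text{W}}{\sqrt{3}\times 400\ \text{V}\times 0.92 \times 0.85}
    \approx 9.2\ \text{A}
```

Under these assumptions the motor is a four-pole machine drawing roughly 9 A per phase at rated load, which gives a sense of scale for the current-monitoring channel described later.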

The asynchronous induction motor was selected as the primary component for this study due to its widespread industrial application, robust construction, and energy efficiency. This choice provides an ideal foundation for validating the adaptive AR architecture in a controlled environment, allowing for detailed analysis of critical parameters such as temperature, vibration, and electrical current. While this study constitutes an initial experimental validation, the proposed methodology is inherently scalable and can be extended to more complex systems and dynamic industrial settings in future research.

4.2. Testing of the Prototype and Implemented Functionalities

The objective of the design and implementation of the AR system prototype was to incorporate a set of advanced functionalities that would allow for the integral optimisation of industrial processes. The aforementioned functionalities were selected and developed in accordance with an approach based on the identification of operational needs, with the objective of optimising efficiency, precision, and safety in the activities carried out by operators. The principal technical and operational characteristics of the system are outlined below.

The experimental protocol comprised five consecutive days of testing, with controlled illuminance and an eye–object distance of 70 ± 5 cm. The sampling interval for all analogue channels was set at 100 ms, and the end-to-end latency of the data channel was 412 ± 28 ms.

Object recognition and tracking: The Vuforia SDK was employed to implement feature recognition and real-time tracking of the motor and its components, thereby enabling the precise superimposition of virtual elements on the physical environment.

Visualisation of adaptive instructions in AR: The system comprises modules that present detailed and adaptive instructions, including interactive 3D models, animations, and explanatory texts, directly in the operator’s field of vision.

Real-time monitoring and analysis of data: Sensors were integrated into the system to capture critical motor parameters, including temperature, vibration, and electrical current. The data were processed and analysed in real time, thereby facilitating the implementation of predictive maintenance strategies. This implementation is aligned with ISO 13374-1:2016 [54] on condition monitoring and diagnostic systems, which provides a consistent structure for data processing and the generation of timely alerts.

Natural interaction: HoloLens 2 enables the operator to interact with the system through gestures and voice commands, thereby facilitating an intuitive user experience and reducing the need for supplementary input devices.

Moreover, the recommendations set forth in ISO 9241-960:2017 [55] regarding touch and gesture interaction were taken into account with the aim of guaranteeing optimal usability and efficient user experience. Figure 6 depicts the operator engaging with the AR system prototype, wherein virtual elements are superimposed on the asynchronous induction motor.

Figure 6.

Testing the first prototype using Microsoft HoloLens 2.

4.3. Implementation of Intelligent Predictive Maintenance

The implementation of intelligent predictive maintenance in the system integrates sensors and processing capabilities that enable real-time analysis of critical motor signals, continuously monitoring key parameters such as temperature, vibration, and electrical current against reference values defined according to the motor’s technical specifications. This allows potential anomalies to be identified before they become major failures.

To validate this functionality, a physical laboratory setup was developed. In this setup, all sensors were connected to a LabJack U3-HV (LabJack Corporation, Lakewood, CO, USA) data acquisition system (DAQ) (Figure 7). The device was selected on the basis of its compatibility with ±10 V analogue input signals in high-voltage mode (HV), its flexibility for integration into custom development environments, and its USB connectivity, which facilitates data transmission. The DAQ provided four independent analogue input channels (AIN0–AIN3), with each sensor module connected to its own channel to monitor a specific operational parameter of the motor: temperature, vibration, or current.

Figure 7.

Experimental setup of the predictive maintenance system.

The temperature was monitored using a PT100 resistance temperature detector (RTD) compliant with IEC 60751:2022 [56]. The sensor exhibits a nominal resistance of 100 Ω at 0 °C and class A accuracy, with a tolerance of ±(0.15 + 0.002·|t|) °C, where t represents the measured temperature in degrees Celsius. While the PT100 sensor offers a full range from −200 °C to +850 °C, the system was configured for operation between −50 °C and +250 °C, aligning with industrial conditions. In order to guarantee compatibility with the U3-HV’s analogue input range, the PT100 was connected via a MAX31865 signal conditioning module. This module converted the sensor’s resistive output into a stable direct current (DC) voltage.
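For reference, the resistance of a PT100 element and its class A tolerance band can be computed from the standard Callendar–Van Dusen relation and the IEC 60751 tolerance formula ±(0.15 + 0.002·|t|) °C. The sketch below uses the standard coefficients for platinum RTDs; the function names are introduced here for illustration, and the code says nothing about how the conditioning module in the setup scales its output.

```python
# Callendar-Van Dusen coefficients for standard platinum RTDs (IEC 60751).
R0 = 100.0       # resistance in ohms at 0 degC for a PT100
A = 3.9083e-3
B = -5.775e-7
C = -4.183e-12   # applies only below 0 degC


def pt100_resistance(t_c: float) -> float:
    """Nominal PT100 resistance in ohms at temperature t_c (degC)."""
    ratio = 1.0 + A * t_c + B * t_c ** 2
    if t_c < 0:
        ratio += C * (t_c - 100.0) * t_c ** 3
    return R0 * ratio


def class_a_tolerance(t_c: float) -> float:
    """Class A tolerance in degC (plus or minus) at temperature t_c."""
    return 0.15 + 0.002 * abs(t_c)
```

At 100 °C this gives a nominal resistance of about 138.51 Ω and a tolerance of ±0.35 °C; at the lower end of the configured range (−50 °C) the tolerance is ±0.25 °C.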

Vibration signals were acquired using a triaxial piezoelectric accelerometer with IEPE (Integrated Electronics Piezo-Electric) technology, in accordance with the specifications defined by ISO 20816-3:2020 [57] for vibration monitoring in rotating machinery. The sensor has a sensitivity of 100 mV/g, a measurement range of ±50 g peak, and a frequency response from 0.5 Hz to 10 kHz. Because IEPE sensors output an AC signal and require constant-current excitation, an IEPE signal conditioner was integrated into the system. This module supplies the requisite excitation current and converts the AC signal into a properly referenced DC voltage within the input limits of the U3-HV. This ensured that dynamic vibration data could be accurately captured, thereby enabling the early detection of mechanical faults such as imbalance, misalignment, or bearing failure.
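The stated sensitivity of 100 mV/g fixes the conversion between sensor voltage and acceleration, and an RMS value over a window of samples is a common summary for condition monitoring. The sketch below illustrates that arithmetic only. It assumes a linear sensor response and a window of raw AC samples with a known DC offset; how the signal conditioner in the actual setup maps its output to acceleration is not specified in the text, and the function names are hypothetical.

```python
import math

SENSITIVITY_V_PER_G = 0.100  # 100 mV/g, as stated for the accelerometer


def volts_to_g(samples_v, offset_v=0.0):
    """Convert voltage samples to acceleration in g, removing a DC offset."""
    return [(v - offset_v) / SENSITIVITY_V_PER_G for v in samples_v]


def rms(values):
    """Root-mean-square of a non-empty sequence of numbers."""
    return math.sqrt(sum(x * x for x in values) / len(values))


# Illustrative window: one period of a 0.2 V amplitude sine, i.e. 2 g peak.
window_v = [0.2 * math.sin(2 * math.pi * k / 100) for k in range(100)]
vibration_rms_g = rms(volts_to_g(window_v))
```

For the sinusoidal test window the RMS acceleration is the peak value divided by √2, approximately 1.41 g.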

For current monitoring, a solid-core toroidal current transformer (CT) with a 100:5 A transformation ratio and accuracy class 0.5 was utilised, in accordance with IEC 61869-2:2019 [58]. This CT can measure primary currents of up to 120 A and delivers an AC current output that is proportional to the motor load. In order to adapt the signal for DAQ input, a burden resistor was connected across the CT’s secondary winding, thereby converting the current into an AC voltage signal. This voltage was then passed through an active signal conditioning circuit, which rectified and filtered the waveform to produce a stable DC signal compatible with the U3-HV’s analogue inputs. This configuration facilitated precise and uninterrupted monitoring of the motor’s electrical current, thereby enabling the identification of anomalies such as overloads or phase imbalances.
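The scaling from primary current to burden voltage follows directly from the 100:5 A ratio and Ohm's law. The sketch below shows that relationship in both directions for an ideal CT. The burden resistance is not given in the text, so the 0.2 Ω used in the example is purely an assumed value, and the function names are introduced here for illustration; the gain of the subsequent rectifier and filter stage is not modelled.

```python
CT_RATIO = 100.0 / 5.0  # primary amperes per secondary ampere (100:5 A)


def secondary_current(primary_a: float) -> float:
    """Secondary current in A for a given primary current (ideal CT)."""
    return primary_a / CT_RATIO


def burden_voltage(primary_a: float, burden_ohm: float) -> float:
    """RMS voltage across the burden resistor for a given primary current."""
    return secondary_current(primary_a) * burden_ohm


def primary_from_voltage(v_rms: float, burden_ohm: float) -> float:
    """Invert the chain: recover primary current from the burden voltage."""
    return v_rms / burden_ohm * CT_RATIO


# Example with an assumed 0.2 ohm burden and a 10 A primary current.
example_v = burden_voltage(10.0, burden_ohm=0.2)
```

With these assumed values, 10 A in the primary produces 0.5 A in the secondary and 0.1 V across the burden resistor.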

The DAQ was integrated into the Unity 3D development platform through a modular software architecture capable of real-time data acquisition, processing, and visualisation. A bespoke Python 3.11.5 (Python Software Foundation, Wilmington, DE, USA) script was developed to collect sensor data from the DAQ and transmit it at 10 Hz using a local TCP/IP socket connection (Appendix A). This script utilised the LabJack LJM (LabJack Management Library).
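The authors' script is given in Appendix A. Purely to illustrate the transport pattern described here, the following sketch frames each sample as one line of JSON and sends it over a stream socket. It is not the authors' implementation: the field names, the newline framing, and the helper functions are assumptions, no LabJack calls are made, and a real sender would pace its transmissions at 100 ms intervals to reach 10 Hz.

```python
import json
import socket


def encode_sample(temperature_c, vibration_g, current_a, timestamp_s):
    """Serialise one sample as a newline-terminated JSON message."""
    payload = {
        "timestamp_s": timestamp_s,
        "temperature_c": temperature_c,
        "vibration_g": vibration_g,
        "current_a": current_a,
    }
    return (json.dumps(payload) + "\n").encode("utf-8")


def decode_stream(buffer: bytes):
    """Split a byte buffer into decoded samples plus the unconsumed tail."""
    *lines, rest = buffer.split(b"\n")
    return [json.loads(line) for line in lines if line], rest


def demo_roundtrip():
    """Send two samples through a local socket pair and decode them."""
    sender, receiver = socket.socketpair()
    try:
        sender.sendall(encode_sample(41.5, 0.12, 9.2, 0.0))
        sender.sendall(encode_sample(41.6, 0.11, 9.3, 0.1))
        sender.shutdown(socket.SHUT_WR)  # signal end of stream
        data = b""
        while chunk := receiver.recv(4096):
            data += chunk
    finally:
        sender.close()
        receiver.close()
    samples, _ = decode_stream(data)
    return samples
```

Newline framing matters because TCP is a byte stream: a receiver may see half a message or several messages in one read, and `decode_stream` returns the incomplete tail so that it can be prepended to the next read.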

Within the Unity 3D framework, a network listener component decoded the incoming JSON-formatted data, which were then used to dynamically update the graphical interface embedded within the 3D environment. The interface incorporated real-time graphs, numeric readouts, and visual indicators that reflected the motor’s operating state. Utilising Unity’s AR rendering capabilities, the sensor data were spatially aligned with physical elements in the operator’s field of view through the Microsoft HoloLens 2, thereby enabling intuitive and contextualised interaction with the monitored equipment.

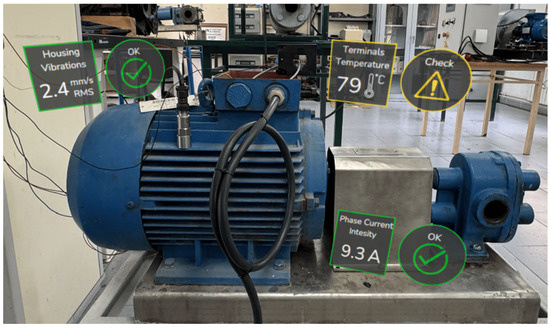

When the motor temperature reaches 79 °C (well below the 155 °C limit allowed for insulation class F), the AR system generates a visual alert that is superimposed on the operator’s field of vision. The purpose of this alert is to inform the operator of possible operational anomalies, such as electrical overload, loss of contact at the terminals, or deficiencies in the ventilation system. Additionally, the system provides a set of targeted corrective measures based on the context, thereby facilitating the prompt and precise intervention of the operator. Figure 8 shows how the AR system visualises the critical motor parameters in real time, emphasising relevant operational states and alerting the operator to any deviations or abnormalities identified.
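The alert logic described here amounts to comparing the measured temperature against an early-warning threshold that sits well below the insulation limit. A minimal sketch follows; the 79 °C and 155 °C values and the list of possible causes come from the text, whereas the three status labels, the separate "critical" level, and the function names are assumptions made for illustration.

```python
ALERT_THRESHOLD_C = 79.0   # early-warning level used by the AR alert
CLASS_F_LIMIT_C = 155.0    # maximum allowed for insulation class F

# Possible causes named in the text for an elevated motor temperature.
POSSIBLE_CAUSES = (
    "electrical overload",
    "loss of contact at the terminals",
    "deficiencies in the ventilation system",
)


def temperature_status(temp_c: float) -> str:
    """Classify a motor temperature reading (labels are hypothetical)."""
    if temp_c >= CLASS_F_LIMIT_C:
        return "critical"
    if temp_c >= ALERT_THRESHOLD_C:
        return "alert"
    return "normal"


def alert_payload(temp_c: float):
    """Return the content an AR overlay could show, or None if normal."""
    status = temperature_status(temp_c)
    if status == "normal":
        return None
    return {"status": status, "temperature_c": temp_c,
            "check": list(POSSIBLE_CAUSES)}
```

Placing the warning threshold far below the insulation limit leaves a margin of 76 °C in which the operator can investigate before the winding insulation is at risk.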

Figure 8.

Implementing intelligent predictive maintenance control for asynchronous induction motors.

The design and development of the functions for signal processing and alert generation were conducted in accordance with the specifications set forth in IEC 61499-1:2022 [59], which ensures the implementation of a modular and scalable architecture within the control system. Furthermore, the ISO 17359:2018 [60] guidelines were incorporated, which establish the principles for monitoring and diagnosing machine conditions, ensuring efficient and reliable management of predictive maintenance, and promoting the safety and optimisation of industrial processes.

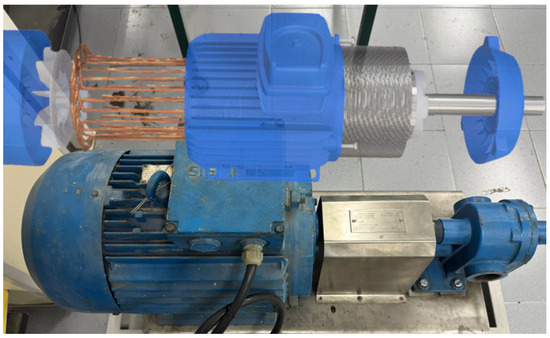

4.4. Assistance with Connection and Assembly Process

During the connection and assembly process, the AR system provides the operator with comprehensive instructions and 3D visualisations of the parts and components to be assembled. This approach enables the operator to perceive the assembly sequence, dimensional tolerances, and tightening torques required for each bolt or joint with clarity and precision, based on specific technical data. To illustrate, during the coupling of the rotor to the stator, the system not only indicates the correct position of the component but also verifies, through sensors and machine vision technology, that alignment and fit comply with the defined parameters. This reduces the probability of errors and significantly improves the quality of the connection and assembly.
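Verifying that a measured quantity complies with "the defined parameters" reduces to a tolerance check of each measurement against its nominal value. The sketch below illustrates one way to express this; the parameter names, the nominal values, and the tolerances are hypothetical placeholders and do not come from the motor's technical data.

```python
def within_tolerance(measured: float, nominal: float, tol: float) -> bool:
    """True if the measurement lies within nominal plus or minus tol."""
    return abs(measured - nominal) <= tol


def failed_parameters(measurements: dict, spec: dict) -> list:
    """Names of specified parameters that are missing or out of tolerance."""
    return [
        name
        for name, (nominal, tol) in spec.items()
        if name not in measurements
        or not within_tolerance(measurements[name], nominal, tol)
    ]


# Hypothetical specification: name -> (nominal value, allowed deviation).
SPEC = {
    "bolt_torque_nm": (25.0, 1.5),
    "air_gap_mm": (0.50, 0.05),
}
```

An assembly step would be confirmed only when `failed_parameters` returns an empty list; treating a missing measurement as a failure prevents a step from passing simply because a sensor reading was not received.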

Figure 9 illustrates the capacity of the AR system to facilitate the visualisation of virtual elements and the coordination of actions through the system. The image depicts the assistance provided during the motor assembly process. To support the quality and ergonomics of the assembly process, the ISO 6385:2016 [61] guidelines on the ergonomic design of work tasks were followed so that the assembly could be performed in a safe and efficient manner.

Figure 9.

Assembly process of the asynchronous induction motor in AR.

5. Analysis and Discussion

A comparative study was conducted to evaluate the performance and effectiveness of the AR system, using two methodological approaches applied to different groups of operators. The control group (CG) completed the assigned tasks using conventional methods, specifically printed manuals and standard operating procedures, while the experimental group (EG) performed the same tasks using the AR system implemented on HoloLens 2 devices, which offered functionalities such as virtual element overlay, adaptive instructions, and real-time monitoring. The decision to use printed manuals as a reference is a pragmatic one, given their ubiquity as a standard tool in industrial environments. This choice provides a baseline for assessing the tangible improvements brought about by AR in terms of efficiency, accuracy, and safety. This methodological approach enables a clear and quantifiable analysis of the specific advantages of AR over traditional methods, paving the way for future, more extensive comparative studies. While the present study focuses on printed manuals as the standard method of comparison, it acknowledges the importance of broader analyses. Subsequent research endeavours will encompass evaluations of advanced digital technologies and hybrid systems, thereby offering a more comprehensive assessment of the impact of AR in contemporary industrial settings.

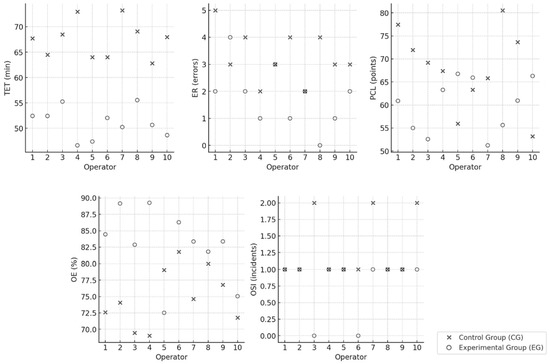

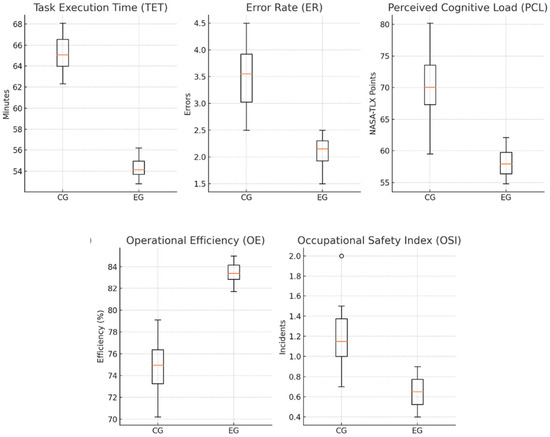

Ten operators were selected for each group, with equivalent levels of experience across groups to ensure comparability of the results. During the performance of the connection, maintenance, and assembly tasks, the following key variables were evaluated: task execution time (TET), which corresponds to the total time spent completing the assigned activities; error rate (ER), measured as the number of errors made during execution; and perceived cognitive load (PCL), assessed using the NASA Task Load Index (NASA-TLX), a standardised questionnaire widely used to measure the mental workload associated with work tasks. The operational efficiency (OE) is calculated as the ratio between the expected standard time and the actual time spent executing the task. The occupational safety index (OSI) is a count of the number of incidents or near misses that occur during the performance of tasks. To visualise the dispersion of performance between individuals, corrected scatter plots are shown for each of these variables (Figure 10). The plots show consistent trends that favour the EG, which employed the AR system, on all indicators. In particular, lower values of TET, ER, PCL, and OSI and higher values of OE are observed in comparison to the CG, which utilised conventional documentation.

Figure 10.

Corrected scatter plots of operator performance for each measured variable.
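The operational efficiency defined above can be written compactly as a ratio. The symbols and the numerical example below are introduced here for illustration only and are hypothetical; they are not measured values from the experiment.

```latex
% Operational efficiency as the ratio of expected standard time to actual time
\mathrm{OE} = \frac{T_\text{std}}{T_\text{act}} \times 100\,\%

% Hypothetical example: a task with a 45 min standard time completed in 54 min
\mathrm{OE} = \frac{45\ \text{min}}{54\ \text{min}} \times 100\,\% \approx 83.3\,\%
```

Values below 100% indicate that the task took longer than the standard time, so a higher OE corresponds to execution closer to the expected duration.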

The quantitative results of the experiment are summarised in Table 1, which provides a detailed statistical comparison between the two groups. The mean and standard deviation values are reported, and the significance of the differences was assessed using two-sample t-tests with a threshold of p < 0.05. Furthermore, the analysis incorporates effect size estimates based on Cohen’s d, thereby facilitating a more profound interpretation of the practical significance of the observed differences.
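For readers who wish to reproduce this kind of comparison on their own data, the sketch below computes a pooled-variance two-sample t statistic and Cohen's d using only the Python standard library. The text does not state which t-test variant or effect size estimator was applied, so the pooled form is an assumption, and the two sample lists are hypothetical values, not data from the study. Obtaining a p-value additionally requires the t distribution, which is not part of the standard library.

```python
import math
from statistics import mean, variance


def pooled_sd(a, b):
    """Pooled standard deviation of two independent samples."""
    na, nb = len(a), len(b)
    return math.sqrt(((na - 1) * variance(a) + (nb - 1) * variance(b))
                     / (na + nb - 2))


def cohens_d(a, b):
    """Cohen's d: mean difference expressed in pooled standard deviations."""
    return (mean(a) - mean(b)) / pooled_sd(a, b)


def student_t(a, b):
    """Pooled-variance two-sample t statistic and its degrees of freedom."""
    standard_error = pooled_sd(a, b) * math.sqrt(1 / len(a) + 1 / len(b))
    return (mean(a) - mean(b)) / standard_error, len(a) + len(b) - 2


# Hypothetical samples for illustration only.
control = [2.0, 4.0, 6.0]
experimental = [1.0, 2.0, 3.0]
d = cohens_d(control, experimental)
t_stat, dof = student_t(control, experimental)
```

Note that effect sizes recomputed from rounded means and standard deviations can differ from values derived from raw observations or from a different estimator.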

Table 1.

Detailed comparison of results between the traditional method and the AR system.

Table 1 compares the CG with the EG and shows statistically significant improvements in the EG across all assessed variables (p < 0.05). TET was reduced by 16.7%, from 65.2 ± 5.1 min in the CG to 54.3 ± 4.0 min in the EG, with a Cohen’s d of 1.21, indicating a very large effect size. The ER declined by 40.0%, from 3.5 ± 1.2 to 2.1 ± 0.9 errors per task (d = 1.19). The PCL fell by 17.2%, from 70.3 ± 9.7 to 58.2 ± 8.3 points on the NASA-TLX scale (d = 1.25), also reflecting a very large effect. OE increased by 10.7%, from 75.0 ± 5.0% in the CG to 83.0 ± 4.0% in the EG, a substantial effect (d = 1.08). Finally, the OSI improved by 41.7%, decreasing from 1.2 ± 0.7 to 0.7 ± 0.3 incidents (d = 0.85), which is also regarded as a substantial effect. These results indicate not only the statistical significance of the AR system’s impact on task performance and safety but also its substantial practical relevance, supporting its effectiveness in reducing errors, workload, and risk while enhancing efficiency and consistency in industrial operations.
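The percentage changes quoted in this paragraph follow from the reported group means as a relative change with respect to the control group. The short sketch below reproduces that arithmetic; the dictionary keys and the function name are introduced here for illustration, while the means are those given in the text.

```python
# Reported group means: (control group, experimental group).
REPORTED_MEANS = {
    "TET (min)": (65.2, 54.3),
    "ER (errors per task)": (3.5, 2.1),
    "PCL (NASA-TLX points)": (70.3, 58.2),
    "OE (%)": (75.0, 83.0),
    "OSI (incidents)": (1.2, 0.7),
}


def relative_change_pct(control: float, experimental: float) -> float:
    """Change of the experimental mean relative to the control mean, in %."""
    return (experimental - control) / control * 100.0


changes = {
    name: round(relative_change_pct(cg, eg), 1)
    for name, (cg, eg) in REPORTED_MEANS.items()
}
```

Negative values denote reductions (TET, ER, PCL, OSI) and the positive value denotes the increase in OE; note that the OE figure is a relative change of the percentage, not a difference in percentage points.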

In order to facilitate a more profound interpretative analysis of the results, Figure 11 presents a set of boxplots that compare the distributional characteristics of each evaluated variable across the CG and the EG. These graphical representations provide a valuable complement to the statistical findings that have been discussed previously, as they offer a visual illustration of the central tendency, dispersion, and the presence of outliers within each group. It is noteworthy that the EG demonstrates more compact interquartile ranges and diminished overall variability in comparison to the CG, suggesting a higher degree of consistency and repeatability in task performance. This distributional homogeneity lends support to the hypothesis that the AR-based system contributes not only to improved average performance but also to the standardisation of outcomes, minimising deviations and mitigating the occurrence of extreme values.

Figure 11.

Boxplot comparison of evaluated variables between groups. The orange line represents the median value for each group.

The results of the statistical analysis demonstrate that the observed differences between the CG and EG are statistically significant for all variables under evaluation. The reduction in TET and ER indicates that the AR system enhances efficiency and accuracy in the execution of complex industrial tasks. The reduction in PCL indicates that the AR system reduces the mental workload on operators; this may be due to the presentation of contextualised and easily accessible information in their field of view. This finding is consistent with previous studies that have highlighted the ability of AR to facilitate the understanding and execution of complex tasks [62,63]. The observed increase in OE and reduction in OSI indicate that the AR system not only enhances performance but also contributes to a safer work environment, reducing the occurrence of incidents and near misses. These findings are consistent with research indicating the benefits of AR in improving safety and productivity in industrial settings [64,65,66,67].

The results illustrate the significant potential of AR to optimise industrial processes and improve efficiency, accuracy, and safety in a wide range of operations. The proposed AR system has been designed with scalability in mind, ensuring adaptability in various industrial environments. Its modular architecture allows for flexible integration into existing operational systems, catering to different levels of task complexity and customisation requirements. This adaptability makes the system applicable in areas such as manufacturing, logistics, and predictive maintenance without requiring extensive reconfiguration.

However, it is important to note that large-scale implementation of this technology requires consideration of several additional factors to ensure its successful integration. Ensuring the compatibility of the AR system with existing infrastructure and management platforms is critical, requiring meticulous planning and the development of customised technical adaptations.

In addition, comprehensive training and adaptation programmes for operators are essential. Such training not only facilitates a smooth transition to this new technology but also ensures that users feel confident and competent in its operation.

From an economic perspective, while the initial implementation costs can be substantial due to hardware procurement, IoT sensor integration, and software customisation, these costs are justified by the long-term operational benefits. These include reductions in task execution time, lower error rates, and increased safety, all of which contribute to measurable productivity gains. In addition, the system’s ability to minimise downtime through predictive maintenance ensures continuous operation and operational efficiency. This proactive approach supports a favourable return on investment (ROI), as the benefits of increased efficiency, reduced operational risks, and optimised resource utilisation accrue over time. Regular upgrades and maintenance of the system’s software and hardware are also essential to maintain its effectiveness and operational security, ensuring that its long-term benefits are fully realised.

The findings of the present research (a 17% reduction in cognitive load, a 10.7% increase in operational efficiency, and a 41.7% decrease in the incident rate) are comparable to, and in some cases exceed, the improvements reported in the most recent specialised literature, which lends support to the proposed approach. For instance, a 15% reduction in NASA-TLX scores was observed when HoloLens 2 was used in electronic assembly [62]. Similarly, a 9% increase in packaging line efficiency was achieved through the implementation of adaptive guides [63], and a 35% reduction in incident rates during heavy machinery operations was facilitated by AR [64]. Earlier literature had hypothesised the positive effects of contextual information overlays on reducing search time and errors [8,35]. However, their impact on cognitive load and safety had not been precisely quantified. The present study extends this knowledge by demonstrating not only improvements in task performance but also a significant increase in inter-operator consistency, a finding that aligns with previous conclusions regarding the importance of adaptive strategies for users with diverse levels of expertise [36].

From an applied perspective, the results of this study highlight that dynamic content personalisation constitutes a pivotal leverage point for industrial AR. This capability is underpinned by two enabling factors: firstly, the deployment of ultra-low-latency 5G infrastructures, which allow real-time model updates and telemetry transmission [68]; and secondly, the integration of AR systems with digital twins, which enables synchronous alignment of physical and virtual asset states [69]. The convergence of these technologies opens avenues for high-value industrial applications, including predictive maintenance enhanced by federated learning at the network edge, which has been reported to reduce unplanned downtime by up to 25% [18]; expert remote assistance for geographically distributed operations, achieving productivity gains exceeding 12% [63]; and on-site training based on simulations of infrequent failures, leading to shorter training cycles and enhanced procedural knowledge retention [62].

However, the pervasive adoption of AR as a universal interface for industrial operations introduces novel risk vectors. Recent studies have highlighted that the incorporation of additional sensors and wireless communication channels expands the system’s attack surface, thereby necessitating multilayered defence mechanisms that address both the device level and the IT/OT integration layer [46,66,70]. Moreover, the mounting reliance on operational data necessitates the integration of artificial intelligence-based predictive analytics to filter, prioritise, and contextualise events effectively, as evidenced in applications involving machine tools [18] and situated visualisation systems [41,71]. In view of these challenges, future research should prioritise the development of cybersecurity protocols tailored to collaborative AR environments, in addition to longitudinal evaluations of ergonomic and cognitive impacts associated with large-scale and long-duration deployments.

6. Conclusions

The present study has developed an adaptive AR architecture with the objective of enhancing real-time operator assistance and occupational safety within the context of Industry 4.0. The approach combines theoretical modelling with practical implementation in controlled experimental scenarios, thereby demonstrating the effectiveness of AR in optimising industrial processes. The primary novelty of this study lies in the integration of dynamic customisation, continuous feedback, and modularity, elements that have rarely been found in combination in previous approaches.

The proposed architecture is predicated on three fundamental principles: adaptability, user-centred design, and operational safety. Its capacity to customise instructions dynamically and to facilitate intuitive interaction supports its applicability in a variety of industrial environments. A series of experimental trials, conducted with Microsoft HoloLens 2 devices together with Unity 3D and Vuforia, has yielded positive results, validating the efficacy of the proposed approach in predictive maintenance tasks and in the assembly and connection of asynchronous induction motors.

The findings of the comparative study demonstrate significant improvements of the AR-based system over conventional methods, as evidenced by shorter execution times, fewer errors, and lower cognitive load for operators. Greater efficiency, reduced occupational risks, and improved task accuracy have also been achieved. The system’s capacity to adapt the complexity of instructions to operator proficiency and environmental variables has been instrumental in reducing errors and enhancing performance.

Furthermore, the integration of advanced functionalities, including predictive maintenance and anomaly detection, has enhanced operational efficiency and safety, in accordance with international standards. The integrated sensors have been instrumental in promoting proactive decision-making, thereby minimising unplanned downtime. It is anticipated that the further incorporation of technologies such as IoT and AI-based predictive models will extend the system’s functionality in the future.

Author Contributions

Conceptualisation, G.M.M. and F.d.C.V.; methodology, G.M.M. and F.d.C.V.; software, G.M.M.; validation, G.M.M. and F.d.C.V.; formal analysis, G.M.M. and F.d.C.V.; investigation, G.M.M. and F.d.C.V.; resources, G.M.M. and F.d.C.V.; data curation, G.M.M. and F.d.C.V.; writing—original draft preparation, G.M.M. and F.d.C.V.; writing—review and editing, G.M.M. and F.d.C.V.; visualisation, G.M.M. and F.d.C.V.; supervision, F.d.C.V. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

No new data were created or analysed in this study. Data sharing is not applicable to this article.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A. Real-Time LabJack–Unity Data Acquisition Script

Algorithm A1. Python script for real-time acquisition and transmission of LabJack U3-HV data to Unity via TCP/IP socket.

```python
import json
import socket
import time

from labjack import ljm


def main():
    # Open the first LabJack device found, on any connection type.
    try:
        handle = ljm.openS("ANY", "ANY", "ANY")
        print("LabJack device opened successfully.")
    except Exception as e:
        print("Error opening LabJack device:", e)
        return

    # Analog inputs mapped below to temperature, vibration, and current.
    channels = ["AIN0", "AIN1", "AIN2"]

    # TCP server on localhost; Unity connects to it as a client.
    HOST, PORT = "127.0.0.1", 5055
    server_socket = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server_socket.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    try:
        server_socket.bind((HOST, PORT))
        server_socket.listen(1)
        print(f"Waiting for Unity to connect at {HOST}:{PORT} ...")
    except Exception as e:
        print("Error setting up TCP socket:", e)
        server_socket.close()
        ljm.close(handle)
        return

    # Block until Unity connects.
    try:
        conn, addr = server_socket.accept()
        print(f"Connection established with Unity: {addr}")
    except Exception as e:
        print("Error accepting connection:", e)
        server_socket.close()
        ljm.close(handle)
        return

    try:
        while True:
            # Read all three channels in a single call.
            try:
                readings = ljm.eReadNames(handle, len(channels), channels)
            except Exception as e:
                print("Error reading from LabJack channels:", e)
                break

            data = {
                "Temperature": round(readings[0], 2),
                "Vibration": round(readings[1], 2),
                "Current": round(readings[2], 2),
                "timestamp": time.time(),
            }

            # One JSON object per line, so the receiver can split on "\n".
            message = json.dumps(data) + "\n"
            try:
                conn.sendall(message.encode("utf-8"))
            except Exception as e:
                print("Connection error while sending data:", e)
                break

            # Pause 0.1 s between samples (roughly 10 messages per second).
            time.sleep(0.1)
    except KeyboardInterrupt:
        print("Manual interrupt detected. Stopping data transmission.")
    finally:
        print("Closing connection and LabJack device.")
        try:
            conn.close()
        except Exception:
            pass
        server_socket.close()
        ljm.close(handle)


if __name__ == "__main__":
    main()
```
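For bench testing without the headset, the receiving side of Algorithm A1 can be approximated by a small Python client. The sketch below is not the Unity implementation used in the study. It is a stand-in under the assumption that the receiver only needs to split the newline-delimited JSON stream into records, and the function names `parse_lines` and `receive` and the parameter `max_records` are hypothetical. The host and port are those used in Algorithm A1.

```python
import json
import socket


def parse_lines(buffer: bytes):
    """Split a byte buffer into complete JSON records and the unconsumed tail."""
    records = []
    while b"\n" in buffer:
        line, buffer = buffer.split(b"\n", 1)
        if line.strip():
            records.append(json.loads(line.decode("utf-8")))
    return records, buffer


def receive(host="127.0.0.1", port=5055, max_records=10):
    """Connect to the acquisition script and return up to max_records readings."""
    readings = []
    buffer = b""
    with socket.create_connection((host, port), timeout=5.0) as sock:
        while len(readings) < max_records:
            chunk = sock.recv(4096)
            if not chunk:  # the server closed the connection
                break
            buffer += chunk
            records, buffer = parse_lines(buffer)
            readings.extend(records)
    return readings[:max_records]


if __name__ == "__main__":
    # Requires the acquisition script to be running and waiting for a client.
    for r in receive():
        print(r["timestamp"], r["Temperature"], r["Vibration"], r["Current"])
```

Because TCP delivers a byte stream rather than discrete messages, a single `recv` call may return part of a record or several records at once. Buffering the incoming bytes and splitting on the newline terminator keeps each JSON object intact before it is decoded.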

References

- Fukuyama, H.; Tsionas, M.; Tan, Y. Dynamic network data envelopment analysis with a sequential structure and behavioural-causal analysis: Application to the Chinese banking industry. Eur. J. Oper. Res. 2023, 307, 1360–1373.

- Höhl, W. Official survey data and virtual worlds—Designing an integrative and economical open source production pipeline for xR-applications in small and medium-sized enterprises. Big Data Cogn. Comput. 2020, 4, 26.

- Choi, S.; Park, J.S. Development of augmented reality system for productivity enhancement in offshore plant construction. J. Mar. Sci. Eng. 2021, 9, 209.

- Villagran-Vizcarra, D.C.; Luviano-Cruz, D.; Pérez-Domínguez, L.A.; Méndez-González, L.C.; Garcia-Luna, F. Applications Analyses, Challenges and Development of Augmented Reality in Education, Industry, Marketing, Medicine, and Entertainment. Appl. Sci. 2023, 13, 2766.

- Peres, R.S.; Jia, X.; Lee, J.; Sun, K.; Colombo, A.W.; Barata, J. Industrial artificial intelligence in industry 4.0—Systematic review, challenges and outlook. IEEE Access 2020, 8, 220121–220139.

- Markets and Markets. Augmented Reality Market with COVID-19 Impact Analysis by Offering (Hardware and Software), Device Type (Head-Mounted Display, Head-Up Display), Application (Consumer, Commercial, Enterprise, Automotive), and Region—Global Forecast to 2027; Markets and Markets Research Private Ltd.: Pune, India, 2022.

- Sharma, A.; Mehtab, R.; Mohan, S.; Shah, M.K.M. Augmented reality—An important aspect of Industry 4.0. Ind. Robot 2022, 49, 428–441.

- Wang, X.; Ong, S.K.; Nee, A.Y.C. A comprehensive survey of augmented reality assembly research. Adv. Manuf. 2016, 4, 1–22.

- Santi, G.M.; Ceruti, A.; Liverani, A.; Osti, F. Augmented reality in industry 4.0 and future innovation programs. Technologies 2021, 9, 33.

- Eswaran, M.; Gulivindala, A.K.; Inkulu, A.K.; Bahubalendruni, M.R. Augmented reality-based guidance in product assembly and maintenance/repair perspective: A state of the art review on challenges and opportunities. Expert Syst. Appl. 2023, 213, 118983.

- Alessa, F.M.; Alhaag, M.H.; Al-Harkan, I.M.; Ramadan, M.Z.; Alqahtani, F.M. A neurophysiological evaluation of cognitive load during augmented reality interactions in various industrial maintenance and assembly tasks. Sensors 2023, 23, 7698.

- Morales Méndez, G.; del Cerro Velázquez, F. Augmented Reality in Industry 4.0 Assistance and Training Areas: A Systematic Literature Review and Bibliometric Analysis. Electronics 2024, 13, 1147.

- Gramouseni, F.; Tzimourta, K.D.; Angelidis, P.; Giannakeas, N.; Tsipouras, M.G. Cognitive assessment based on electroencephalography analysis in virtual and augmented reality environments, using head mounted displays: A systematic review. Big Data Cogn. Comput. 2023, 7, 163.

- Carvalho, A.V.; Chouchene, A.; Lima, T.M.; Charrua-Santos, F. Cognitive manufacturing in industry 4.0 toward cognitive load reduction: A conceptual framework. Appl. Syst. Innov. 2020, 3, 55.

- Ghasemi, Y.; Jeong, H.; Choi, S.H.; Park, K.B.; Lee, J.Y. Deep learning-based object detection in augmented reality: A systematic review. Comput. Ind. 2022, 139, 103661.

- Wu, S.; Chen, H.; Hou, L.; Zhang, G.K.; Li, C.Q. Using eye-tracking to measure worker situation awareness in augmented reality. Autom. Constr. 2024, 165, 105582.

- Baroroh, D.K.; Chu, C.H.; Wang, L. Systematic literature review on augmented reality in smart manufacturing: Collaboration between human and computational intelligence. J. Manuf. Syst. 2021, 61, 696–711.

- Liu, C.; Zhu, H.; Tang, D.; Nie, Q.; Zhou, T.; Wang, L.; Song, Y. Probing an intelligent predictive maintenance approach with deep learning and augmented reality for machine tools in IoT-enabled manufacturing. Robot. Comput.-Integr. Manuf. 2022, 77, 102357.

- Shakhovska, N.; Yakovyna, V.; Mysak, M.; Mitoulis, S.A.; Argyroudis, S.; Syerov, Y. Real-time monitoring of road networks for pavement damage detection based on preprocessing and neural networks. Big Data Cogn. Comput. 2024, 8, 136.

- Tao, F.; Sui, F.; Liu, A.; Qi, Q.; Zhang, M.; Song, B.; Guo, Z.; Lu, S.C.-Y.; Nee, A.Y. Digital twin-driven product design framework. Int. J. Prod. Res. 2019, 57, 3935–3953.

- Gavish, N.; Gutiérrez, T.; Webel, S.; Rodríguez, J.; Peveri, M.; Bockholt, U.; Tecchia, F. Evaluating virtual reality and augmented reality training for industrial maintenance and assembly tasks. Interact. Learn. Environ. 2015, 23, 778–798.

- Pujiono, I.P.; Asfahani, A.; Rachman, A. Augmented Reality (AR) and Virtual Reality (VR): Recent Developments and Applications in Various Industries. Innov. J. Soc. Sci. Res. 2024, 4, 1679–1690.

- Hazarika, A.; Rahmati, M. Towards an evolved immersive experience: Exploring 5G-and beyond-enabled ultra-low-latency communications for augmented and virtual reality. Sensors 2023, 23, 3682.

- Devagiri, J.S.; Paheding, S.; Niyaz, Q.; Yang, X.; Smith, S. Augmented Reality and Artificial Intelligence in industry: Trends, tools, and future challenges. Expert Syst. Appl. 2022, 207, 118002.

- Vortmann, L.M.; Putze, F. Attention-aware brain computer interface to avoid distractions in augmented reality. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020; pp. 1–8.

- Kim, H.; Kim, T.; Lee, M.; Kim, G.J.; Hwang, J.I. CIRO: The effects of visually diminished real objects on human perception in handheld augmented reality. Electronics 2021, 10, 900.

- Boboc, R.G.; Gîrbacia, F.; Butilă, E.V. The application of augmented reality in the automotive industry: A systematic literature review. Appl. Sci. 2020, 10, 4259.

- Gattullo, M.; Scurati, G.W.; Fiorentino, M.; Uva, A.E.; Ferrise, F.; Bordegoni, M. Towards augmented reality manuals for industry 4.0: A methodology. Robot. Comput.-Integr. Manuf. 2019, 56, 276–286.

- Masood, T.; Egger, J. Adopting augmented reality in the age of industrial digitalisation. Comput. Ind. 2020, 115, 103112.