Introducing a Novel Fast Neighbourhood Component Analysis–Deep Neural Network Model for Enhanced Driver Drowsiness Detection

Abstract

1. Introduction

- Introducing an innovative approach that integrates fast neighbourhood component analysis (FNCA) and deep neural networks (DNNs) to enhance the detection of driver drowsiness using electroencephalogram (EEG) data.

- Improving the FNCA + DNN model and comparing it with recent models using the benchmark driver drowsiness dataset SEED-VIG [12].

2. Related Works

2.1. Classical and Traditional Machine Learning Approaches

2.2. Deep-Learning-Based Models

2.3. Hybrid and Multimodal Systems

2.4. Attention Mechanisms and Optimization-Enhanced Techniques

2.5. Benchmarking and Comparative Evaluation

3. Methodology

3.1. EEG Data Analysis and Feature Extraction

3.1.1. EEG Data

- Channel selection: Data from eight electrodes (Cz, Fz, T7, T8, C3, C4, PO7, and PO8) were selected from the publicly available dataset (see Table 2). These sites were chosen because the underlying brain regions are involved in cognitive processing, sensory integration, and the maintenance of alertness, all of which are crucial for accurately identifying shifts in drowsiness.

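- Sampling frequency: The dataset used in our study was recorded at a sampling frequency of fs = 500 Hz. This rate is high enough to capture the relevant brain activity: by the Nyquist criterion it resolves components up to 250 Hz, well above the EEG frequency bands used for drowsiness detection.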

3.1.2. Feature Extraction from Frequency Bands

| Algorithm 1: EEG Spectral Feature Extraction for Drowsiness Detection |
|---|
| Input: |
| Process: |
| Output: |
| Features: A vector containing power spectral features for classification or analysis. |
| End |
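The steps of Algorithm 1 are not spelled out here, but the feature table later in the paper lists alpha and beta power per channel (Alpha_Cz, Beta_Cz, and so on). The Python sketch below shows one common way to compute such features. It assumes SciPy's Welch PSD estimator, band edges of 8–13 Hz (alpha) and 13–30 Hz (beta), and absolute rather than relative band power; none of these choices is confirmed by the text, and `band_power` and `extract_features` are hypothetical helper names.

```python
import numpy as np
from scipy.signal import welch

FS = 500  # sampling frequency reported in Section 3.1.1 (Hz)
CHANNELS = ["Cz", "Fz", "T7", "T8", "C3", "C4", "PO7", "PO8"]
# Assumed band edges in Hz; the paper does not state the exact limits.
BANDS = {"Alpha": (8.0, 13.0), "Beta": (13.0, 30.0)}


def band_power(signal, band, fs=FS):
    """Absolute power of `signal` inside `band`, from a Welch PSD estimate."""
    # 2-second segments give a 0.5 Hz frequency resolution at 500 Hz.
    nperseg = min(len(signal), 2 * fs)
    freqs, psd = welch(signal, fs=fs, nperseg=nperseg)
    df = freqs[1] - freqs[0]
    low, high = band
    in_band = (freqs >= low) & (freqs < high)
    # Rectangle-rule integration of the PSD over the band.
    return float(np.sum(psd[in_band]) * df)


def extract_features(epoch):
    """Map an (n_channels, n_samples) epoch to named band-power features.

    Rows of `epoch` are assumed to follow the order in CHANNELS. Keys follow
    the Alpha_<channel>, Beta_<channel> naming of the feature table.
    """
    features = {}
    for channel, signal in zip(CHANNELS, epoch):
        for band_name, band in BANDS.items():
            features[f"{band_name}_{channel}"] = band_power(signal, band)
    return features
```

For example, calling `extract_features` on an 8 × 2000 array (4 s at 500 Hz) returns a dictionary with the 16 keys of the feature table, in the same column order. Welch averaging over overlapping segments trades some frequency resolution for a lower-variance estimate, which suits short EEG windows.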

3.2. EEG Data Labelling

- T_low = P_25(X),

- T_mid = P_50(X),

- T_high = P_75(X),
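The three labelling thresholds are the 25th, 50th, and 75th percentiles of a quantity X that this section does not define further. The minimal NumPy sketch below assumes X is a 1-D array of per-window scores and uses NumPy's default linear interpolation for the percentiles; both are assumptions. It computes the thresholds and assigns each window to one of the four intervals they delimit. The helper names are hypothetical, and the mapping of those intervals to the Alert, Intermediate, and Drowsy labels is deliberately left out because it is not stated here.

```python
import numpy as np


def percentile_thresholds(x):
    """Return (T_low, T_mid, T_high) = (P25, P50, P75) of the values in x."""
    t_low, t_mid, t_high = np.percentile(x, [25, 50, 75])
    return t_low, t_mid, t_high


def bin_by_thresholds(x, thresholds):
    """Assign each value to a bin index 0..3 delimited by the three thresholds.

    Bin i holds values v with thresholds[i-1] <= v < thresholds[i]
    (np.digitize with right=False). How bins map to class labels is
    not specified in this section and is left to the caller.
    """
    return np.digitize(x, np.asarray(thresholds))
```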

3.3. Data Pre-Processing and Training

3.4. Fast Neighbourhood Component Analysis (FNCA)

Data Transformed with FNCA

- Designing the DNN Model: The network consists of an input layer, several hidden layers, and one output layer. The size of the input layer equals the number of FNCA-transformed features. The hidden layers use ReLU activation functions to introduce nonlinearity and increase the model's representational capacity. Finally, the output layer applies the Softmax function to convert the network outputs into class probabilities, from which the final classification is made.

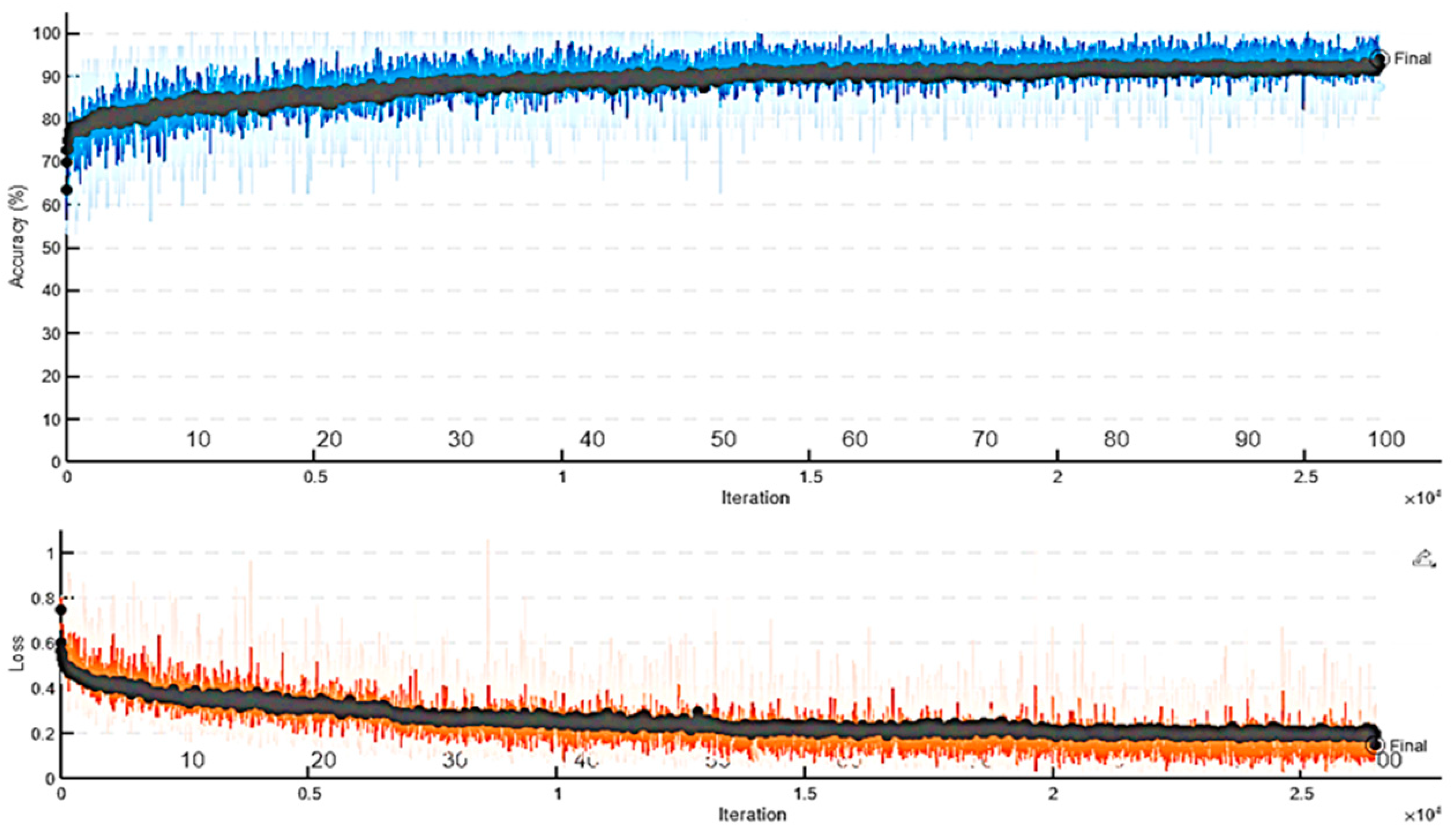

- Training: The DNN weights are optimized with the Adam optimizer. We tune the learning rate, apply L2 regularization to reduce overfitting, and adjust the mini-batch size to keep training stable. Adam was chosen because it adapts the learning rate for each parameter, which typically yields faster and more reliable convergence.

- Evaluation: Our main metric for evaluating the classifier is classification accuracy, i.e., the proportion of correct predictions among all predictions made:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

- TP (True Positives): Correctly predicted positive cases.

- TN (True Negatives): Correctly predicted negative cases.

- FP (False Positives): Incorrectly predicted positive cases.

- FN (False Negatives): Incorrectly predicted negative cases.
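To make the metric concrete for a three-class problem (Alert, Intermediate, Drowsy), the sketch below builds a confusion matrix and reads accuracy off its diagonal. With more than two classes, the TP/TN/FP/FN counts are assumed to be defined per class by treating that class as positive and the others as negative (one-vs-rest); overall accuracy is then the trace of the matrix divided by the number of predictions. The helper names are hypothetical.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, n_classes):
    """Rows = true class, columns = predicted class."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    for t, p in zip(y_true, y_pred):
        cm[t, p] += 1
    return cm


def accuracy(cm):
    """Correct predictions (the diagonal) divided by all predictions."""
    return np.trace(cm) / cm.sum()


def binary_counts(cm, positive=1):
    """TP, TN, FP, FN for one class treated as positive (one-vs-rest)."""
    tp = cm[positive, positive]
    fn = cm[positive, :].sum() - tp  # true class missed
    fp = cm[:, positive].sum() - tp  # other classes predicted as `positive`
    tn = cm.sum() - tp - fn - fp
    return tp, tn, fp, fn
```

In the two-class case the trace equals TP + TN, so `accuracy(cm)` reduces to (TP + TN)/(TP + TN + FP + FN).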

| Algorithm 2: FNCA-DNN Hybrid Model for Driver Drowsiness Classification |
|---|
| Input: |
| Process: |
| Output: |

4. Results and Analysis

4.1. Analysis of FNCA + DNN for Driver Drowsiness Detection

4.2. An Improved FNCA + DNN Model for Driver Drowsiness Detection Using EEG Signals

4.3. Cross-Subject Evaluation Strategy

4.4. Real-Time Inference and Latency Performance

4.5. Ten-Fold Cross-Validation Results

4.6. Understanding the Attention Mechanism and Feature Importance

4.7. Comparison with State-of-the-Art Methods on the SEED-VIG Dataset

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| DA | Data augmentation |
| DD | Drowsiness detection |
| DNN | Deep neural network |
| EEG | Electroencephalogram |
| FAWT | Flexible analytic wavelet transform |
| FNCA | Fast neighbourhood component analysis |
| KNN | K-nearest neighbours |
| NCA | Neighbourhood component analysis |
| NHTSA | National Highway Traffic Safety Administration |
| pBCI | Passive brain–computer interface |
| PCA | Principal component analysis |
| PSD | Power spectral density |
| RF | Random forest |
| SVM | Support vector machine |
| CNN | Convolutional neural network |
| LSTM | Long short-term memory |
| DL | Deep learning |
| LP | Low-pass |
| HP | High-pass |
| RBF | Radial basis function |
| PFC | Prefrontal cortex |
| FC | Frontal cortex |
| OC | Occipital cortex |
| EBI | Eye blink interval |
| QML | Quantum machine learning |
| CNOT | Controlled-NOT gate |
| CZ | Controlled-Z gate |
| iSWAP | Imaginary SWAP gate |

References

- Albadawi, Y.; Takruri, M.; Awad, M. A Review of Recent Developments in Driver Drowsiness Detection Systems. Sensors 2022, 22, 2069.

- Al-Gburi, S.; Kanar, A.; Al-Sammak, I.; Marghescu, C.C.; Khattab, M. Analyzing Different Models Driver Behavior Detection Using EEG Data. In Proceedings of the 15th International Conference on Communications (COMM), Bucharest, Romania, 3–4 October 2024; pp. 1–5.

- Knapik, M.; Cyganek, B. Driver’s Fatigue Recognition Based on Yawn Detection in Thermal Images. Neurocomputing 2019, 338, 274–292.

- Liu, W.; Qian, J.; Yao, Z.; Jiao, X.; Pan, J. Convolutional Two-Stream Network Using Multi-Facial Feature Fusion for Driver Fatigue Detection. Future Internet 2019, 11, 115.

- You, F.; Gong, Y.; Tu, H.; Liang, J.; Wang, H. A Fatigue Driving Detection Algorithm Based on Facial Motion Information Entropy. J. Adv. Transp. 2020, 2020, 1–17.

- Fouad, I.A. A Robust and Efficient EEG-Based Drowsiness Detection System Using Different Machine Learning Algorithms. Ain Shams Eng. J. 2023, 14, 101895.

- Al-Gburi, S.H.; Al-Sammak, K.A.; Marghescu, I.; Oprea, C.C. State of the Art in Drivers’ Attention Monitoring—A Systematic Literature Review. Karbala Int. J. Mod. Sci. 2023, 9, 14–26.

- Arakawa, T. Trends and Future Prospects of the Drowsiness Detection and Estimation Technology. Sensors 2021, 21, 7921.

- Drowsy Driving. Available online: https://www.nhtsa.gov/risky-driving/drowsy-driving (accessed on 27 December 2024).

- Drowsiness. Available online: https://medlineplus.gov/ency/article/003208.htm (accessed on 27 December 2024).

- Yang, W.; Wang, K.; Zuo, W. Fast Neighborhood Component Analysis. Neurocomputing 2012, 83, 31–37.

- Zheng, W.-L.; Lu, B.-L. A Multimodal Approach to Estimating Vigilance Using EEG and Forehead EOG. J. Neural Eng. 2017, 14, 026017.

- Ramzan, M.; Khan, H.U.; Awan, S.M.; Ismail, A.; Ilyas, M.; Mahmood, A. A Survey on State-of-the-Art Drowsiness Detection Techniques. IEEE Access 2019, 7, 61904–61919.

- Drivers Are Falling Asleep Behind the Wheel. Available online: https://www.nsc.org/road/safety-topics/fatigued-driver (accessed on 27 December 2024).

- Suni, E. Drowsy Driving: Dangers and How to Avoid It|Sleep Foundation; Sleep Foundation: Arlington, VA, USA, 2022.

- Doudou, M.; Bouabdallah, A.; Berge-Cherfaoui, V. Driver Drowsiness Measurement Technologies: Current Research, Market Solutions, and Challenges. Int. J. Intell. Transp. Syst. Res. 2020, 18, 297–319.

- Fu, B.; Boutros, F.; Lin, C.-T.; Damer, N. A Survey on Drowsiness Detection—Modern Applications and Methods. IEEE Trans. Intell. Veh. 2024, 1–23.

- Wu, P.; Song, L.; Meng, X. Temporal Analysis of Cellphone-Use-Involved Crash Injury Severities: Calling for Preventing Cellphone-Use-Involved Distracted Driving. Accid. Anal. Prev. 2022, 169, 106625.

- Al-Gburi, S.H.; Al-Sammak, K.A.; Alheeti, K.M.A.; Suciu, G.; Abdulqader, A.G. Driver Behavior Assessment with Different ML Models Using EEG and Physiological Data—A Comparative Study. In Proceedings of the 2024 16th International Conference on Electronics, Computers and Artificial Intelligence (ECAI), Iasi, Romania, 27–28 June 2024; IEEE: New York, NY, USA, 2024.

- Korotcov, A.; Tkachenko, V.; Russo, D.P.; Ekins, S. Comparison of Deep Learning with Multiple Machine Learning Methods and Metrics Using Diverse Drug Discovery Data Sets. Mol. Pharm. 2017, 14, 4462–4475.

- Sikander, G.; Anwar, S. Driver Fatigue Detection Systems: A Review. IEEE Trans. Intell. Transp. Syst. 2019, 20, 2339–2352.

- Mohammed Hashim, B.A.; Amutha, R. Human Activity Recognition Based on Smartphone Using Fast Feature Dimensionality Reduction Technique. J. Ambient Intell. Humaniz. Comput. 2021, 12, 2365–2374.

- Subasi, A.; Saikia, A.; Bagedo, K.; Singh, A.; Hazarika, A. EEG-Based Driver Fatigue Detection Using FAWT and Multiboosting Approaches. IEEE Trans. Industr. Inform. 2022, 18, 6602–6609.

- Ren, Z.; Li, R.; Chen, B.; Zhang, H.; Ma, Y.; Wang, C.; Lin, Y.; Zhang, Y. EEG-Based Driving Fatigue Detection Using a Two-Level Learning Hierarchy Radial Basis Function. Front. Neurorobot. 2021, 15, 618408.

- Shahbakhti, M.; Beiramvand, M.; Nasiri, E.; Far, S.M.; Chen, W.; Sole-Casals, J.; Wierzchon, M.; Broniec-Wojcik, A.; Augustyniak, P.; Marozas, V. Fusion of EEG and Eye Blink Analysis for Detection of Driver Fatigue. IEEE Trans. Neural Syst. Rehabil. Eng. 2023, 31, 2037–2046.

- Cui, J.; Lan, Z.; Sourina, O.; Muller-Wittig, W. EEG-Based Cross-Subject Driver Drowsiness Recognition with an Interpretable Convolutional Neural Network. IEEE Trans. Neural Netw. Learn. Syst. 2023, 34, 7921–7933.

- Fang, W.; Tang, L.; Pan, J. AGL-Net: An Efficient Neural Network for EEG-Based Driver Fatigue Detection. J. Integr. Neurosci. 2023, 22, 146.

- Siddhad, G.; Dey, S.; Roy, P.P.; Iwamura, M. Awake at the Wheel: Enhancing Automotive Safety Through EEG-Based Fatigue Detection; Springer: Berlin/Heidelberg, Germany, 2024.

- Arif, S.; Munawar, S.; Ali, H. Driving Drowsiness Detection Using Spectral Signatures of EEG-Based Neurophysiology. Front. Physiol. 2023, 14, 1153268.

- Yaacob, S.; Izzati Affandi, N.A.; Krishnan, P.; Rasyadan, A.; Yaakop, M.; Mohamed, F. Drowsiness Detection Using EEG and ECG Signals. In Proceedings of the 2020 IEEE 2nd International Conference on Artificial Intelligence in Engineering and Technology (IICAIET), Kota Kinabalu, Malaysia, 26–27 September 2020; IEEE: New York, NY, USA, 2020.

- Miao, Z.; Zhao, M.; Zhang, X.; Ming, D. LMDA-Net: A Lightweight Multi-Dimensional Attention Network for General EEG-Based Brain–Computer Interfaces and Interpretability. NeuroImage 2023, 276, 120209.

- Ding, Y.; Robinson, N.; Zhang, S.; Zeng, Q.; Guan, C. TSception: Capturing Temporal Dynamics and Spatial Asymmetry from EEG for Emotion Recognition. IEEE Trans. Affect. Comput. 2023, 14, 2238–2250.

- Liu, Z.; Mao, H.; Wu, C.-Y.; Feichtenhofer, C.; Darrell, T.; Xie, S. A ConvNet for the 2020s. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 11976–11986.

- Lins, I.D.; Araújo, L.M.M.; Maior, C.B.S.; Ramos, P.M.d.S.; Moura, M.J.d.C.; Ferreira-Martins, A.J.; Chaves, R.; Canabarro, A. Quantum Machine Learning for Drowsiness Detection with EEG Signals. Process Saf. Environ. Prot. 2024, 186, 1197–1213.

- Cui, J.; Yuan, L.; Li, R.; Wang, Z.; Yang, D.; Jiang, T. Benchmarking EEG-Based Cross-Dataset Driver Drowsiness Recognition with Deep Transfer Learning. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. 2023, 2023, 1–6.

- Hidalgo Rogel, J.M.; Martínez Beltrán, E.T.; Quiles Pérez, M.; López Bernal, S.; Martínez Pérez, G.; Huertas Celdrán, A. Studying Drowsiness Detection Performance While Driving through Scalable Machine Learning Models Using Electroencephalography. Cognit. Comput. 2024, 16, 1253–1267.

- Lan, Z.; Zhao, J.; Liu, P.; Zhang, C.; Lyu, N.; Guo, L. Driving Fatigue Detection Based on Fusion of EEG and Vehicle Motion Information. Biomed. Signal Process. Control 2024, 92, 106031.

- Xiang, C.; Fan, X.; Bai, D.; Lv, K.; Lei, X. A Resting-State EEG Dataset for Sleep Deprivation. Sci. Data 2024, 11, 427.

- Manohare, M.; Rajasekar, E.; Parida, M. Analysing Change in Brain Waves Due to Heterogeneous Road Traffic Noise Exposure Using Electroencephalography Measurements. Noise Health 2023, 25, 36–54.

- Stancin, I.; Frid, N.; Cifrek, M.; Jovic, A. EEG Signal Multichannel Frequency-Domain Ratio Indices for Drowsiness Detection Based on Multicriteria Optimization. Sensors 2021, 21, 6932.

- Borghini, G.; Astolfi, L.; Vecchiato, G.; Mattia, D.; Babiloni, F. Measuring Neurophysiological Signals in Aircraft Pilots and Car Drivers for the Assessment of Mental Workload, Fatigue and Drowsiness. Neurosci. Biobehav. Rev. 2014, 44, 58–75.

- Eoh, H.J.; Chung, M.K.; Kim, S.-H. Electroencephalographic Study of Drowsiness in Simulated Driving with Sleep Deprivation. Int. J. Ind. Ergon. 2005, 35, 307–320.

- Yi Wen, T.; Mohd Aris, S.A. Electroencephalogram (EEG) Stress Analysis on Alpha/Beta Ratio and Theta/Beta Ratio. Indones. J. Electr. Eng. Comput. Sci. 2020, 17, 175.

- Irudayasamy, A.; Ganesh, D.; Natesh, M.; Rajesh, N.; Salma, U. Big Data Analytics on the Impact of Omicron and Its Influence on Unvaccinated Community through Advanced Machine Learning Concepts. Int. J. Syst. Assur. Eng. Manag. 2022, 15, 346–355.

- Romero-Laiseca, M.A.; Delisle-Rodriguez, D.; Cardoso, V.; Gurve, D.; Loterio, F.; Posses Nascimento, J.H.; Krishnan, S.; Frizera-Neto, A.; Bastos-Filho, T. A Low-Cost Lower-Limb Brain-Machine Interface Triggered by Pedaling Motor Imagery for Post-Stroke Patients Rehabilitation. IEEE Trans. Neural Syst. Rehabil. Eng. 2020, 28, 988–996.

- Zhao, G.; Wu, Y. An Efficient Kernel-Based Feature Extraction Using a Pull–Push Method. Appl. Soft Comput. 2020, 96, 106584.

- Wang, F.; Zhang, H.; Wang, K.; Zuo, W. Fast neighbourhood component analysis with spatially smooth regulariser for robust noisy face recognition. IET Biom. 2014, 3, 278–290.

- Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A simple way to prevent neural networks from overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.

- Lawhern, V.J.; Solon, A.J.; Waytowich, N.R.; Gordon, S.M.; Hung, C.P.; Lance, B.J. EEGNet: A compact convolutional neural network for EEG-based brain–computer interfaces. J. Neural Eng. 2018, 15, 056013.

- Ioffe, S.; Szegedy, C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. In Proceedings of the 32nd International Conference on Machine Learning (ICML), Lille, France, 6–11 July 2015; pp. 448–456.

- Roy, Y.; Banville, H.; Albuquerque, I.; Gramfort, A.; Falk, T.H.; Faubert, J. Deep learning-based electroencephalography analysis: A systematic review. J. Neural Eng. 2019, 16, 051001.

- Schirrmeister, R.T.; Springenberg, J.T.; Fiederer, L.D.J.; Glasstetter, M.; Eggensperger, K.; Tangermann, M.; Hutter, F.; Burgard, W.; Ball, T. Deep learning with convolutional neural networks for EEG decoding and visualization. Hum. Brain Mapp. 2017, 38, 5391–5420.

- Zhang, C.; Eskandarian, A. A Survey and Tutorial of EEG-Based Brain Monitoring for Driver State Analysis. IEEE/CAA J. Autom. Sin. 2021, 8, 1222–1242.

| Criteria | Proposed Model (FNCA + DNN) | Recent Works ([8,21,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37]) | Key Advantage of the Proposed Model |
|---|---|---|---|
| FNCA for Metric Learning | ✓ | ✗ (None use FNCA; Ref. [25] uses NCA) | Optimizes feature space for classification via metric learning, enhancing k-NN/discriminative power. |
| DNN Architecture | ✓ (4 FC layers + BatchNorm/Dropout) | ✓ (Refs. [25,27,31,32,33,34,35,36] use DNNs) | Balances depth and simplicity with regularization for robust EEG feature extraction. |
| Multi-Band EEG Analysis | ✓ (Explicit 5-band EEG features) | ✗ (Refs. [24,29] use spectral bands; others lack structured multi-band focus) | Captures nuanced neural activity across bands for early drowsiness detection. |
| Attention Mechanisms | ✓ | ✗ (Only Ref. [31] uses attention) | Focuses on salient EEG regions, suppressing noise and improving feature relevance. |
| Real-Time Applicability | ✓ | ✓ (Refs. [25,27,36] emphasize real-time) | Lightweight DNN + FNCA enables efficient edge deployment. |
| Hybrid Data Integration | ✗ (EEG-only) | ✓ (Ref. [37] combines EEG + vehicle data) | Maintains focus on EEG-centric detection for simplicity and cost-effectiveness. |
| Cross-Dataset Generalization | ✗ | ✓ (Ref. [35] uses transfer learning) | Future work can extend to cross-dataset validation. |

| Channel | Description | Measurement Unit |
|---|---|---|
| Cz | Central (midline) electrode measurement | Microvolts (µV) |
| Fz | Frontal (midline) electrode measurement | Microvolts (µV) |
| T7 | Temporal, left electrode measurement | Microvolts (µV) |
| T8 | Temporal, right electrode measurement | Microvolts (µV) |
| C3 | Central, left electrode measurement | Microvolts (µV) |
| C4 | Central, right electrode measurement | Microvolts (µV) |
| PO7 | Parieto-occipital, left electrode measurement | Microvolts (µV) |
| PO8 | Parieto-occipital, right electrode measurement | Microvolts (µV) |

| Alpha_Cz | Beta_Cz | Alpha_Fz | Beta_Fz | Alpha_T7 | Beta_T7 | Alpha_T8 | Beta_T8 | Alpha_C3 | Beta_C3 | Alpha_C4 | Beta_C4 | Alpha_PO7 | Beta_PO7 | Alpha_PO8 | Beta_PO8 | Label |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0.4747 | 0.1345 | 0.7986 | 0.3456 | 0.1020 | 0.0531 | 0.2378 | 0.0727 | 0.1687 | 0.0476 | 0.3673 | 0.0926 | 1.2582 | 0.2520 | 1.2379 | 0.2862 | Intermediate |
| 0.7368 | 0.5476 | 0.7238 | 0.9066 | 0.1572 | 0.1638 | 0.2621 | 0.3266 | 0.2076 | 0.2051 | 0.3065 | 0.4058 | 1.7552 | 1.0129 | 1.6653 | 1.0814 | Alert |
| 0.2203 | 0.1948 | 0.4749 | 0.4552 | 0.0816 | 0.0760 | 0.0948 | 0.1087 | 0.1005 | 0.0840 | 0.1070 | 0.1622 | 0.6020 | 0.3342 | 0.6578 | 0.3274 | Alert |
| 2.0105 | 0.0457 | 0.8869 | 0.0445 | 5.9531 | 0.5587 | 3.2653 | 0.5525 | 2.3442 | 0.1974 | 2.0768 | 0.1599 | 4.9304 | 0.1937 | 7.8594 | 0.1390 | Drowsy |

| Layer | Parameters |
|---|---|
| Input Layer | Input size: number of features |
| Fully Connected Layer 1 | Number of neurons: 100 (learns complex patterns by connecting all inputs to 100 hidden neurons) |
| ReLU Layer 1 | Activation function: ReLU |
| Fully Connected Layer 2 | Number of neurons: 100 |
| ReLU Layer 2 | Activation function: ReLU |
| Fully Connected Layer 3 | Number of neurons: number of unique classes |
| Softmax Layer | Activation function: Softmax |
| Classification Layer | Classification layer |
| Training Options | Optimization algorithm: Adam (adaptive learning-rate optimizer; balances speed and stability of convergence) |
| | Maximum epochs: 30 |
| | Mini-batch size: 128 (processes 128 samples per gradient update; balances memory use and convergence) |
| | Initial learning rate: 1 × 10⁻³ (initial step size for weight updates; a small value helps avoid overshooting minima) |
| | L2 regularization: 1 × 10⁻⁴ (penalizes large weights to reduce overfitting) |
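For readers who want to experiment with the configuration in the table above, the sketch below wires a dimensionality-reduction step and a two-hidden-layer ReLU network into one scikit-learn pipeline with the listed training options. It is an approximation under stated assumptions, not the authors' implementation: scikit-learn's `NeighborhoodComponentsAnalysis` implements standard NCA, used here as a stand-in for FNCA [11]; the `StandardScaler` step is an added assumption; and `MLPClassifier`'s `alpha` is an L2 penalty whose scaling may differ from the table's L2 regularization factor. `build_nca_mlp` is a hypothetical helper name.

```python
from sklearn.neighbors import NeighborhoodComponentsAnalysis
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler


def build_nca_mlp(n_components=None, random_state=0):
    """Standardize -> NCA projection -> ReLU MLP with the table's options.

    NOTE: standard NCA is a stand-in for FNCA here (assumption).
    """
    nca = NeighborhoodComponentsAnalysis(n_components=n_components,
                                         random_state=random_state)
    mlp = MLPClassifier(
        hidden_layer_sizes=(100, 100),  # fully connected layers 1 and 2
        activation="relu",              # ReLU layers 1 and 2
        solver="adam",                  # optimization algorithm
        learning_rate_init=1e-3,        # initial learning rate
        batch_size=128,                 # mini-batch size
        max_iter=30,                    # with adam, this counts epochs
        alpha=1e-4,                     # L2 penalty (toolkit-specific scaling)
        random_state=random_state,
    )
    # For multi-class targets, MLPClassifier uses a softmax output layer.
    return make_pipeline(StandardScaler(), nca, mlp)
```

Typical use is `model = build_nca_mlp().fit(X_train, y_train)` followed by `model.predict(X_test)`, where each row of `X` holds the band-power features of one window. With only 30 epochs scikit-learn may emit a `ConvergenceWarning`, which is expected for a short training budget.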

| Learning Rate | Epochs | Mini-Batch Accuracy | Validation Accuracy |
|---|---|---|---|
| 0.0010 | 30 | 86.72% | 83.67% |
| 0.0010 | 50 | 88.28% | 83.59% |
| 0.0010 | 40 | 89.84% | 82.80% |
| 0.010 | 30 | 81.25% | 81.43% |

| Layer Name | Type | Units/Filters | Activation | Dropout Rate |
|---|---|---|---|---|
| inputLayer | Feature Input Layer | Input Size | - | - |
| FC1 | Fully Connected Layer | 256 | - | - |
| BN1 | Batch Normalization | - | - | - |
| ReLU1 | ReLU Activation | - | ReLU | - |
| FC2 | Fully Connected Layer | 128 | - | - |
| BN2 | Batch Normalization | - | - | - |
| ReLU2 | ReLU Activation | - | ReLU | - |
| FC3 | Fully Connected Layer | 64 | - | - |
| BN3 | Batch Normalization | - | - | - |
| ReLU3 | ReLU Activation | - | ReLU | - |
| dropout1 | Dropout Layer | - | - | 0.3 |
| FC4 | Fully Connected Layer | 32 | - | - |
| BN4 | Batch Normalization | - | - | - |
| ReLU4 | ReLU Activation | - | ReLU | - |
| dropout2 | Dropout Layer | - | - | 0.2 |
| FC_output | Fully Connected Layer | numClasses | - | - |
| Softmax | Softmax Layer | numClasses | Softmax | - |
| OutputLayer | Classification Layer | - | - | - |
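The "lightweight" claim for the fully connected architectures can be checked with a simple parameter count. The sketch below counts trainable parameters for both the baseline (100–100) and the improved (256–128–64–32 with batch normalization) networks. It assumes 16 inputs (8 channels × alpha/beta power, i.e., that FNCA keeps the input dimensionality) and 3 output classes; both are assumptions, since the tables only state "Input Size" and "numClasses". `count_trainable_params` is a hypothetical helper.

```python
def count_trainable_params(n_inputs, hidden_widths, n_classes, batch_norm):
    """Trainable parameters of a fully connected classifier.

    Each dense layer has fan_in * fan_out weights plus fan_out biases.
    Each batch-normalization layer adds a learnable scale and shift per unit
    (its running mean/variance are statistics, not trainable parameters).
    ReLU, dropout, and softmax layers add no parameters.
    """
    total = 0
    fan_in = n_inputs
    for width in hidden_widths:
        total += fan_in * width + width
        if batch_norm:
            total += 2 * width
        fan_in = width
    total += fan_in * n_classes + n_classes  # output layer
    return total


# Assumed sizes: 16 input features and 3 classes.
baseline = count_trainable_params(16, [100, 100], 3, batch_norm=False)
improved = count_trainable_params(16, [256, 128, 64, 32], 3, batch_norm=True)
print(baseline, improved)  # prints "12103 48643"
```

Under these assumptions both networks stay well below 10⁵ trainable parameters; the first dense layer's share grows linearly with the number of FNCA output features, so the count should be recomputed for the actual input size.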

| Training Set | Test Set | Accuracy (Proposed) | Accuracy KNN [37], 2024 |
|---|---|---|---|
| Train Subject 1 | Test Subject 21 | 52.77% | 35% |
| Train Subjects 1–11 | Test Subject 21 | 72.09% | 51% |
| Train Subjects 1–19 | Test Subject 21 | 77.18% | 60% |
| Train Subject 4 | Test Subject 21 | 74.92% | - |
| Train Subject 4 | Test Subject 8 | 81.47% | - |
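The cross-subject configurations above train on one group of subjects and test on a disjoint one, so no window from the test subject is seen during training. A minimal NumPy sketch of such a split is shown below, assuming each feature window carries a subject ID in a parallel array `subjects` (a hypothetical data layout); `subject_split` is likewise a hypothetical helper.

```python
import numpy as np


def subject_split(X, y, subjects, train_ids, test_ids):
    """Split samples by subject so that no subject appears in both sets.

    X: (n_windows, n_features) array, y: (n_windows,) labels,
    subjects: (n_windows,) subject ID of each window.
    """
    subjects = np.asarray(subjects)
    train_ids, test_ids = set(train_ids), set(test_ids)
    if train_ids & test_ids:
        raise ValueError("a subject cannot be in both the train and test sets")
    train_mask = np.isin(subjects, list(train_ids))
    test_mask = np.isin(subjects, list(test_ids))
    return X[train_mask], y[train_mask], X[test_mask], y[test_mask]
```

For instance, `subject_split(X, y, subjects, range(1, 12), [21])` corresponds to the "Train Subjects 1–11 / Test Subject 21" configuration.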

| Fold | Accuracy (%) |
|---|---|
| Fold 1 | 90.03 |
| Fold 2 | 90.44 |
| Fold 3 | 90.71 |
| Fold 4 | 89.63 |
| Fold 5 | 89.9 |
| Fold 6 | 91.79 |
| Fold 7 | 89.5 |
| Fold 8 | 90.58 |
| Fold 9 | 89.5 |
| Fold 10 | 91.78 |
| Mean ± Std | 90.386 ± 0.81 |
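The summary row can be reproduced from the ten per-fold values. The short NumPy check below shows that the reported ± 0.81 matches the population standard deviation (`ddof=0`, dividing by n); the sample standard deviation (`ddof=1`, dividing by n − 1), which is sometimes reported for cross-validation folds, is about 0.85.

```python
import numpy as np

# Per-fold accuracies (%) from the ten-fold cross-validation table.
fold_acc = np.array([90.03, 90.44, 90.71, 89.63, 89.90,
                     91.79, 89.50, 90.58, 89.50, 91.78])

mean = fold_acc.mean()
std_population = fold_acc.std()    # ddof=0: divides by n
std_sample = fold_acc.std(ddof=1)  # ddof=1: divides by n - 1

print(f"{mean:.3f} ± {std_population:.2f} (population)")  # prints "90.386 ± 0.81 (population)"
print(f"{mean:.3f} ± {std_sample:.2f} (sample)")          # prints "90.386 ± 0.85 (sample)"
```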

| Reference | Year | Classifier | Accuracy (%) |
|---|---|---|---|
| [33], [29] | 2022, 2025 | TSception | 83.15 ± 0.36 |
| [34], [29] | 2022, 2025 | ConvNeXt | 81.95 ± 0.61 |
| [32], [29] | 2023, 2025 | LMDA | 81.06 ± 0.99 |
| [29] | 2025 | NLMDA-Net | 83.71 ± 0.30 |
| [36] | 2023 | EDJAN transfer learning | 76 |
| [28] | 2023 | CNN + LSTM | 85.1 ± 0.5 |
| [28] | 2023 | ATT + CNN + LSTM | 85.6 ± 0.3 |
| [28] | 2023 | Ghost + LSTM | 86.6 ± 0.4 |
| [28] | 2023 | ATT + Ghost + LSTM | 87.3 ± 0.2 |
| Proposed model (12 subjects) | - | FNCA + DNN | 94.29 |
| Proposed model (21 subjects) | - | FNCA + DNN | 90.386 ± 0.81 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Al-Gburi, S.H.; Al-Sammak, K.A.; Marghescu, I.; Oprea, C.C.; Drăgulinescu, A.-M.C.; Suciu, G.; Alheeti, K.M.A.; Alduais, N.A.M.; Al-Sammak, N.A.H. Introducing a Novel Fast Neighbourhood Component Analysis–Deep Neural Network Model for Enhanced Driver Drowsiness Detection. Big Data Cogn. Comput. 2025, 9, 126. https://doi.org/10.3390/bdcc9050126
