Enhancing Deep Learning Sustainability by Synchronized Multi Augmentation with Rotations and Multi-Backbone Architectures

Abstract

1. Introduction

2. Background and Related Work

- limited computational power of EI devices compared with centralized server-based solutions or high-end graphics processing units (GPUs),

- limited Random Access Memory (RAM) and storage capacity on EI systems,

- the need for energy-efficient operation and low power consumption to extend battery life,

- real-time processing requirements, since EI applications often require low latency for real-time or near-real-time operation.

3. Materials and Methods

3.1. Dataset

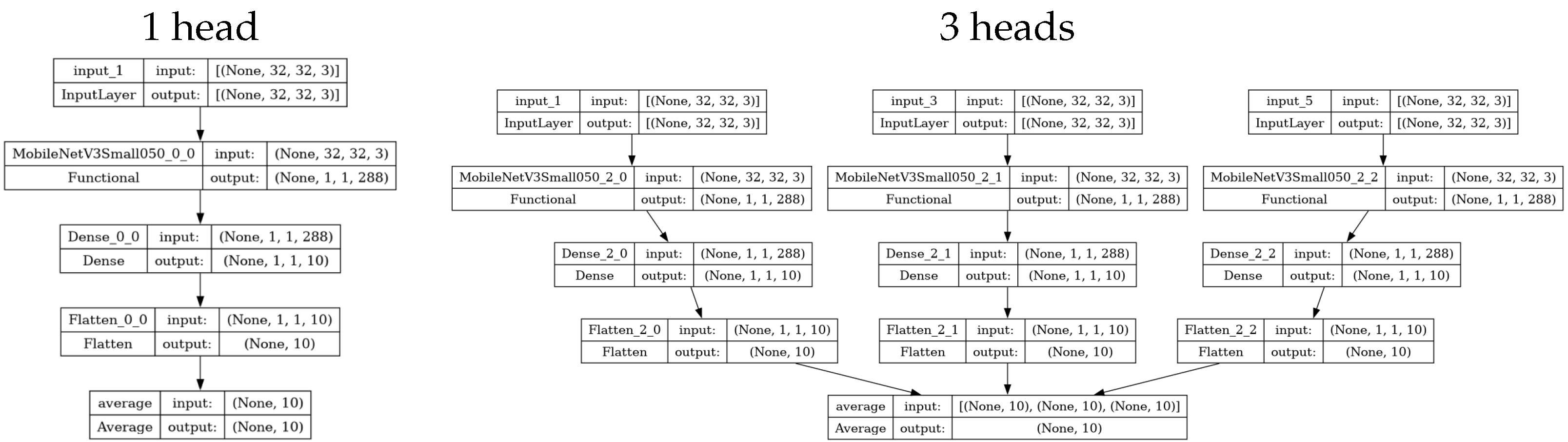

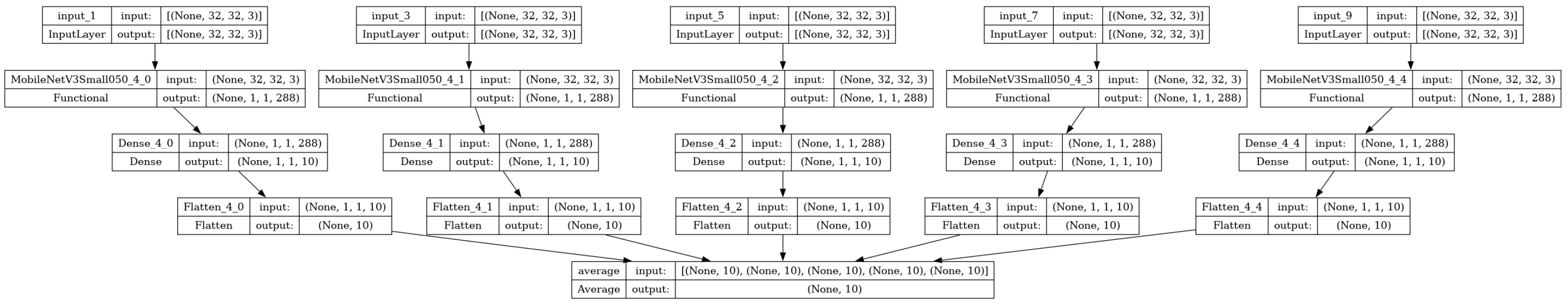

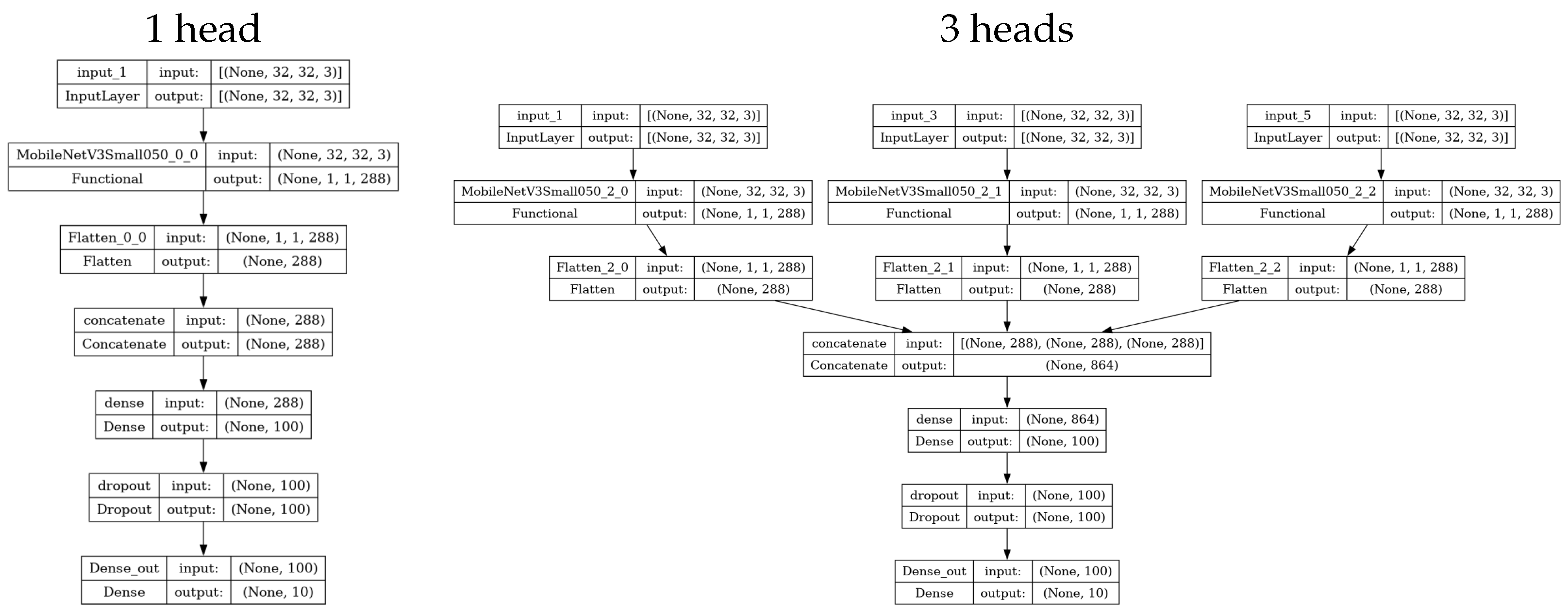

3.2. Baseline Models

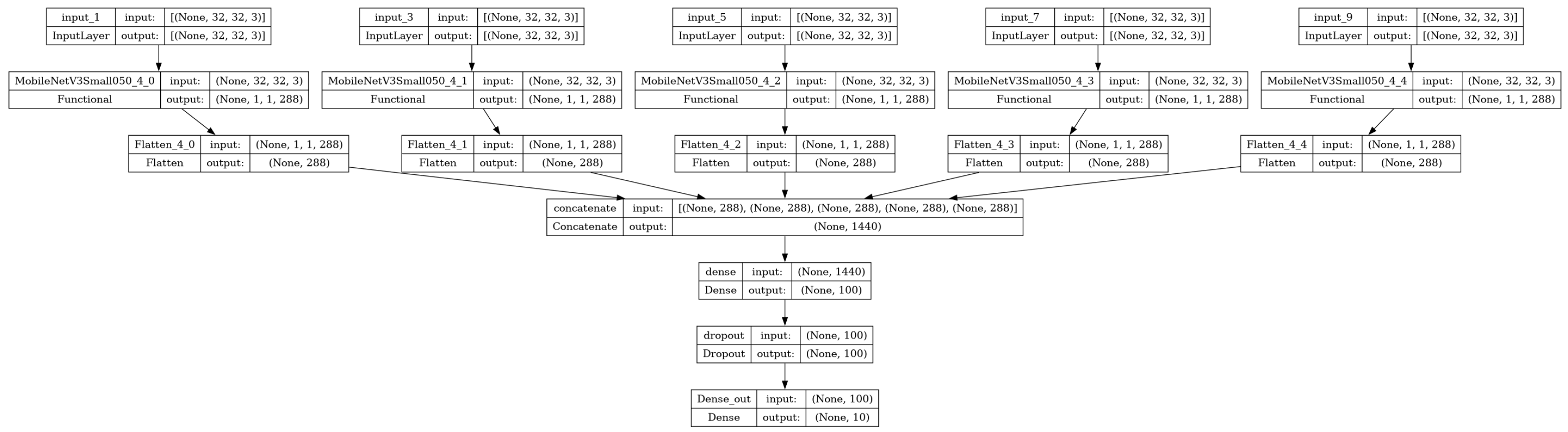

3.3. Multi-Backbone Models

3.4. Metrics

- Minimal validation loss: The lowest value of the loss function observed during validation, reflecting the model’s best convergence and generalization capacity.

- Maximal validation accuracy: The highest classification accuracy achieved on the validation set, representing the best rate of correctly predicted instances.

- Validation accuracy at minimal loss: The accuracy recorded at the point when the validation loss reached its minimum, helping to assess the model’s predictive performance at optimal convergence.

- Maximal validation Area Under the Curve (AUC): The highest area under the Receiver Operating Characteristic (ROC) curve, as defined in [30]. Both the micro-averaged AUC (a single AUC computed over all pooled one-vs-rest class decisions) and the macro-averaged AUC (the unweighted mean of the per-class AUCs) are reported: the former weights every individual prediction equally, while the latter weights every class equally.

- Model size: The total number of parameters and the corresponding memory usage (in megabytes), providing a measure of the model’s complexity and storage requirements.
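The metrics above can be computed from per-epoch validation logs and model predictions. The sketch below is a minimal, dependency-free illustration, not the authors' evaluation code. It assumes Keras-style history keys `val_loss` and `val_accuracy`, computes ROC AUC through the rank (Mann-Whitney) formulation, which equals the area under the ROC curve, and assumes 32-bit weights and 1 MB = 2^20 bytes for model size. All function names are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple


def summarize_history(history: Dict[str, List[float]]) -> Dict[str, float]:
    """Pick the validation metrics listed above from per-epoch logs.

    The keys "val_loss" and "val_accuracy" are an assumption (Keras-style),
    not necessarily the keys used in the original experiments.
    """
    val_loss = history["val_loss"]
    val_acc = history["val_accuracy"]
    # Epoch index with the lowest validation loss (first such epoch on ties).
    best = min(range(len(val_loss)), key=lambda i: val_loss[i])
    return {
        "min_val_loss": val_loss[best],
        "max_val_accuracy": max(val_acc),
        "val_accuracy_at_min_loss": val_acc[best],
    }


def binary_auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """ROC AUC as the probability that a random positive outscores a random
    negative; ties count as one half. Needs both classes to be present."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs both positive and negative samples")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def micro_macro_auc(
    probs: Sequence[Sequence[float]], y_true: Sequence[int], num_classes: int
) -> Tuple[float, float]:
    """One-vs-rest AUC for a multiclass classifier, returned as (micro, macro).

    micro: one AUC over all (sample, class) decisions pooled together.
    macro: unweighted mean of the per-class AUCs.
    """
    per_class: List[float] = []
    pooled_scores: List[float] = []
    pooled_labels: List[int] = []
    for c in range(num_classes):
        scores = [row[c] for row in probs]
        labels = [1 if y == c else 0 for y in y_true]
        per_class.append(binary_auc(scores, labels))
        pooled_scores.extend(scores)
        pooled_labels.extend(labels)
    micro = binary_auc(pooled_scores, pooled_labels)
    macro = sum(per_class) / num_classes
    return micro, macro


def model_size_mb(num_params: int, bytes_per_param: int = 4) -> float:
    """Weight memory only, assuming float32 (4 bytes) and 1 MB = 2**20 bytes."""
    return num_params * bytes_per_param / 2**20
```

As a toy illustration (not data from the paper): with `val_loss = [0.9, 0.5, 0.6]` and `val_accuracy = [0.60, 0.80, 0.85]`, the minimal loss occurs at the second epoch, so the accuracy at minimal loss is 0.80 while the maximal accuracy is 0.85. This gap is why both quantities are tracked separately. In practice, scikit-learn's `roc_auc_score` on one-hot (binarized) labels with `average="micro"` or `average="macro"` would be the more usual choice than the quadratic loop above.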

3.5. Workflow

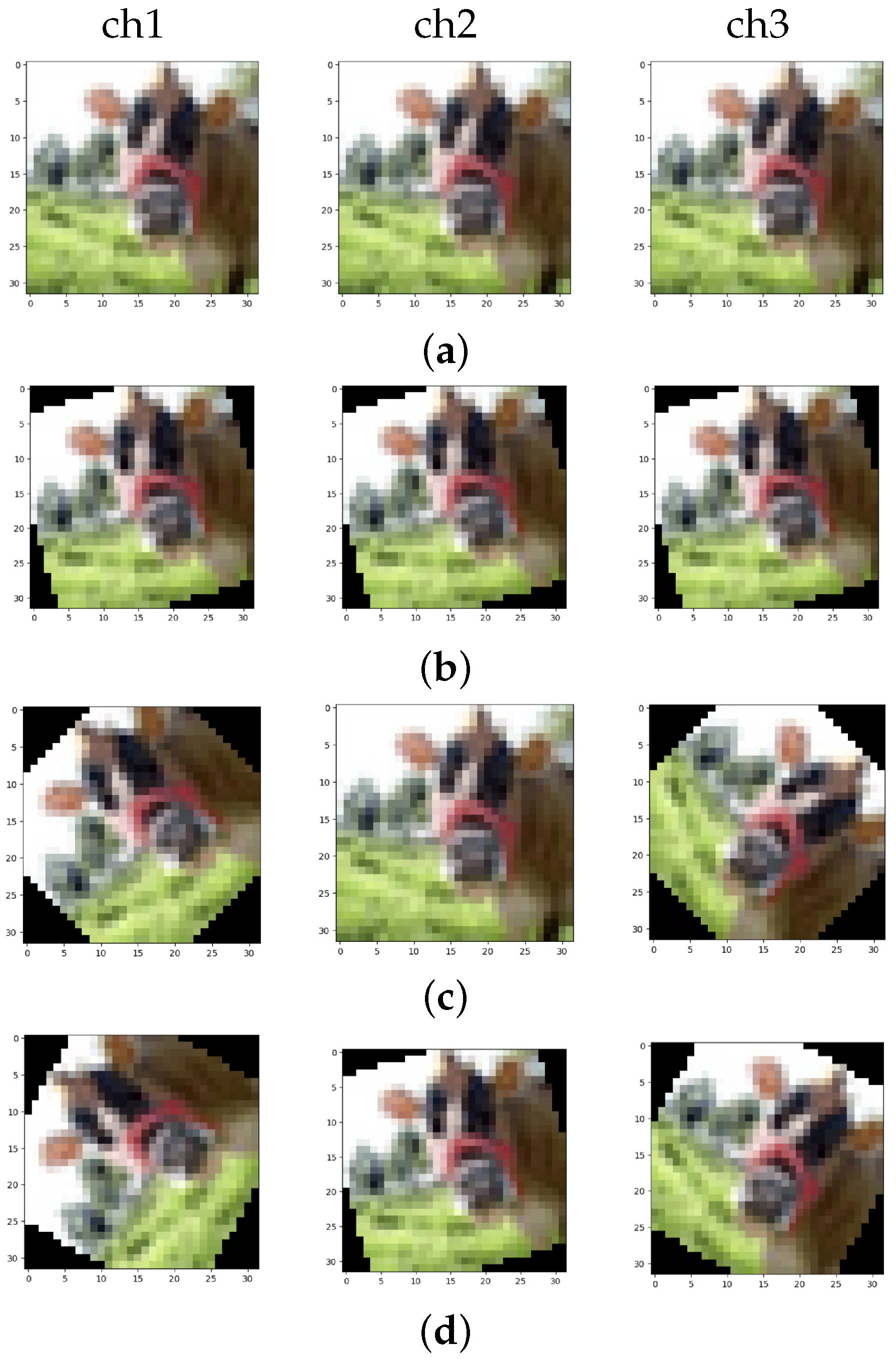

- “simulation” (Figure 6b and Figure 7b): Each channel receives the same original image, rotated by the same random angle . This configuration simulates image capture with identical random camera orientations across all channels. All images are rotated counterclockwise around their center by a random angle between −90 and 90 degrees.

- “SMA + simulation” (Figure 6d and Figure 7d): Each channel receives a combination of the “SMA” and “simulation” rotations; namely, each k-th channel in the configuration with channels receives a version of the original image rotated by the angle of Equation (1) (“SMA”) plus the same random angle (“simulation”), so that the resulting angles span the range .
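The per-channel rotation angles of the “simulation” and “SMA + simulation” configurations can be sketched as follows. This is an illustrative sketch under stated assumptions, not the authors' code. The shared angle is drawn uniformly from [−90, 90] degrees (the text gives only the range, so the uniform distribution is an assumption). The SMA angle of Equation (1) is not reproduced in this section, so it is passed in as a caller-supplied function. `hypothetical_sma_angle` is an invented, evenly spaced placeholder and not the authors' formula.

```python
import random
from typing import Callable, List

# Bound of the shared random rotation from the text: between -90 and 90 degrees.
MAX_SHARED_ANGLE = 90.0


def draw_shared_angle(rng: random.Random) -> float:
    """One random counterclockwise angle (degrees) shared by all channels.

    ASSUMPTION: uniform distribution; the text only states the range.
    """
    return rng.uniform(-MAX_SHARED_ANGLE, MAX_SHARED_ANGLE)


def simulation_angles(n_channels: int, rng: random.Random) -> List[float]:
    """'simulation': every channel is rotated by the same random angle."""
    shared = draw_shared_angle(rng)
    return [shared] * n_channels


def sma_plus_simulation_angles(
    n_channels: int,
    sma_angle: Callable[[int, int], float],
    rng: random.Random,
) -> List[float]:
    """'SMA + simulation': channel k gets its own SMA angle plus one shared
    random angle. `sma_angle(k, n_channels)` stands in for Equation (1)."""
    shared = draw_shared_angle(rng)
    return [sma_angle(k, n_channels) + shared for k in range(n_channels)]


def hypothetical_sma_angle(k: int, n_channels: int) -> float:
    """HYPOTHETICAL placeholder, NOT Equation (1): evenly spaced angles over
    a full turn, using a 0-based channel index k."""
    return 360.0 * k / n_channels
```

Each resulting angle could then be applied with Pillow's `Image.rotate(angle)`, which rotates an image counterclockwise about its center by default (Pillow is listed in the references). Drawing the shared angle once per sample, rather than once per channel, is what keeps the channels synchronized in both configurations.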

4. Results

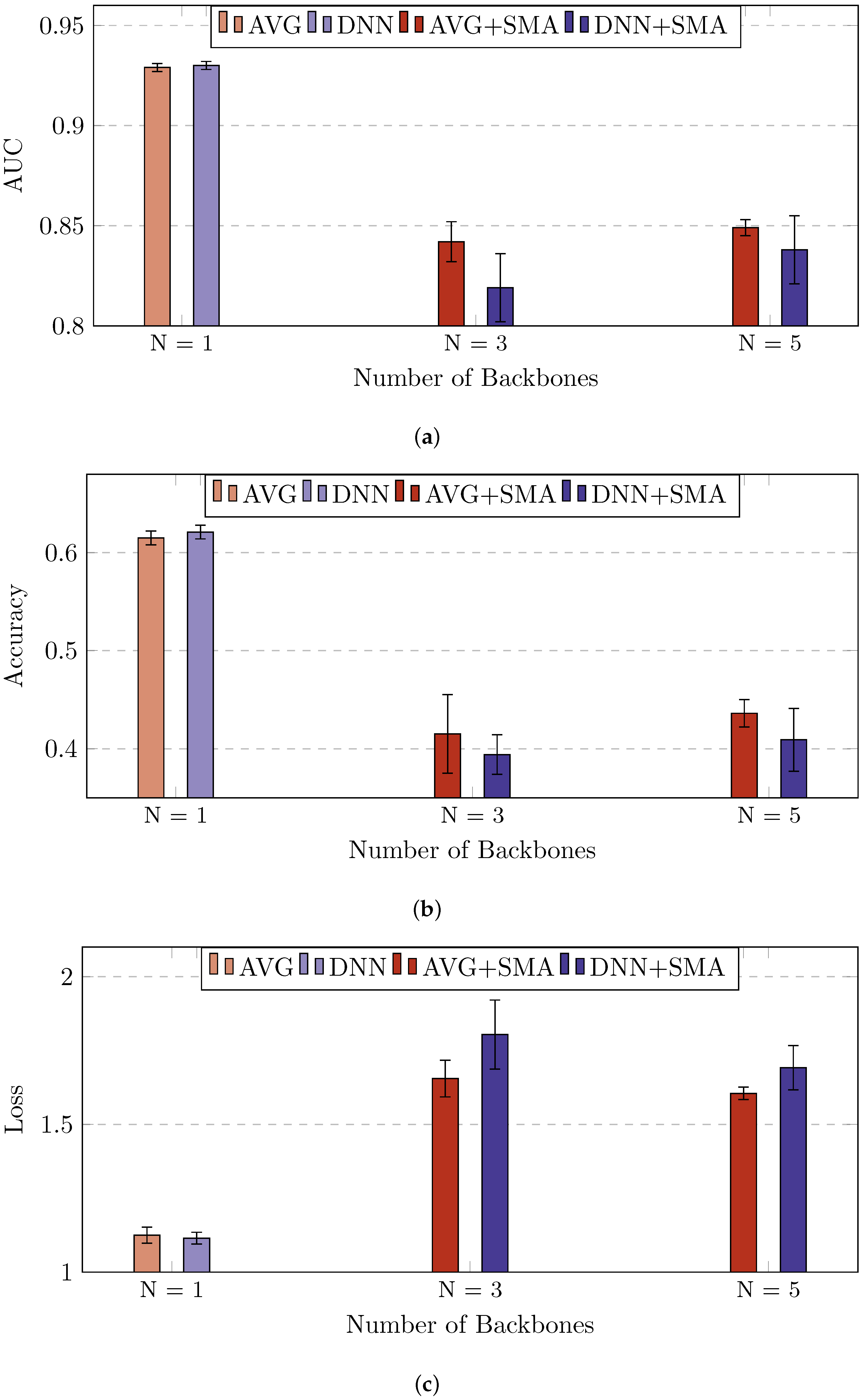

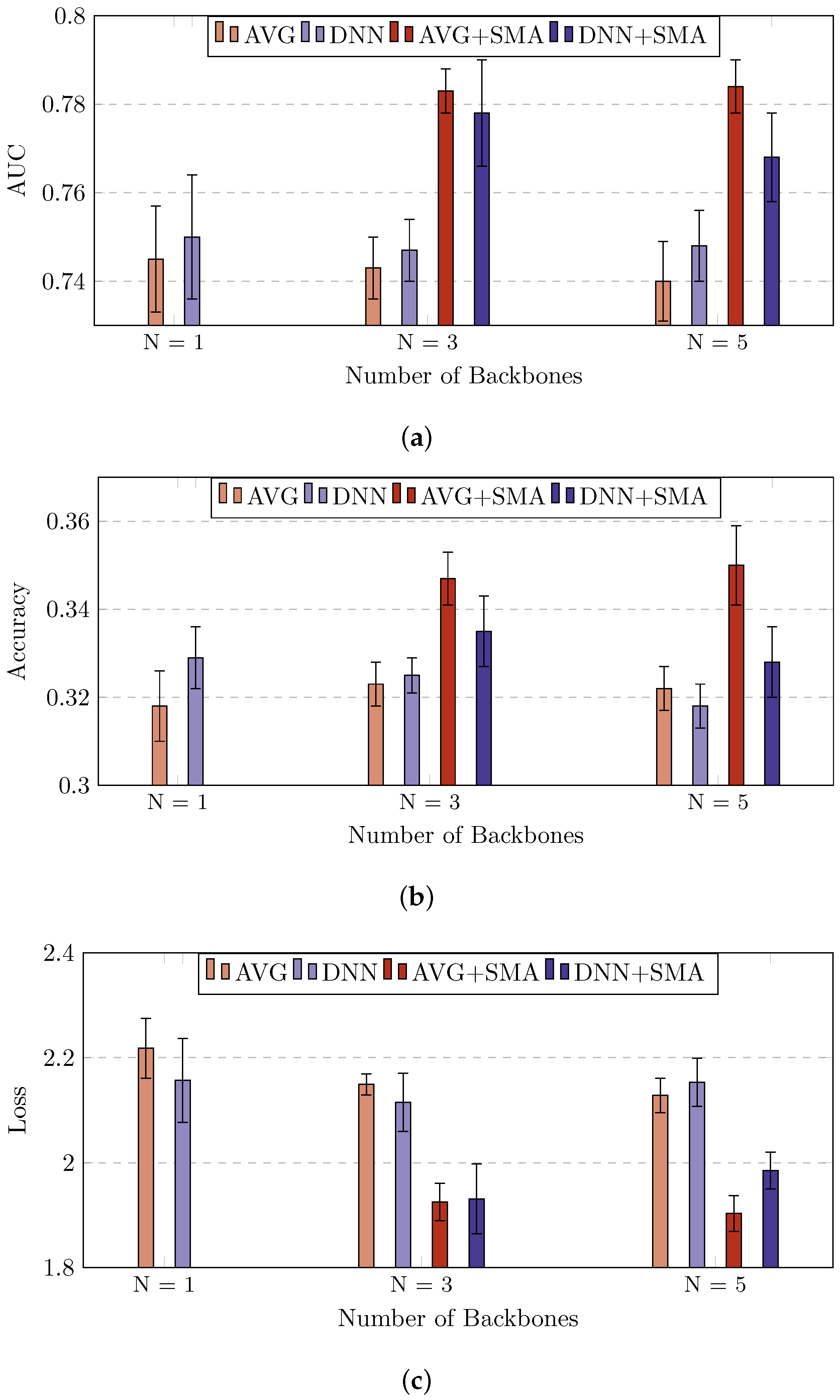

4.1. Validation on the “SMA” Dataset

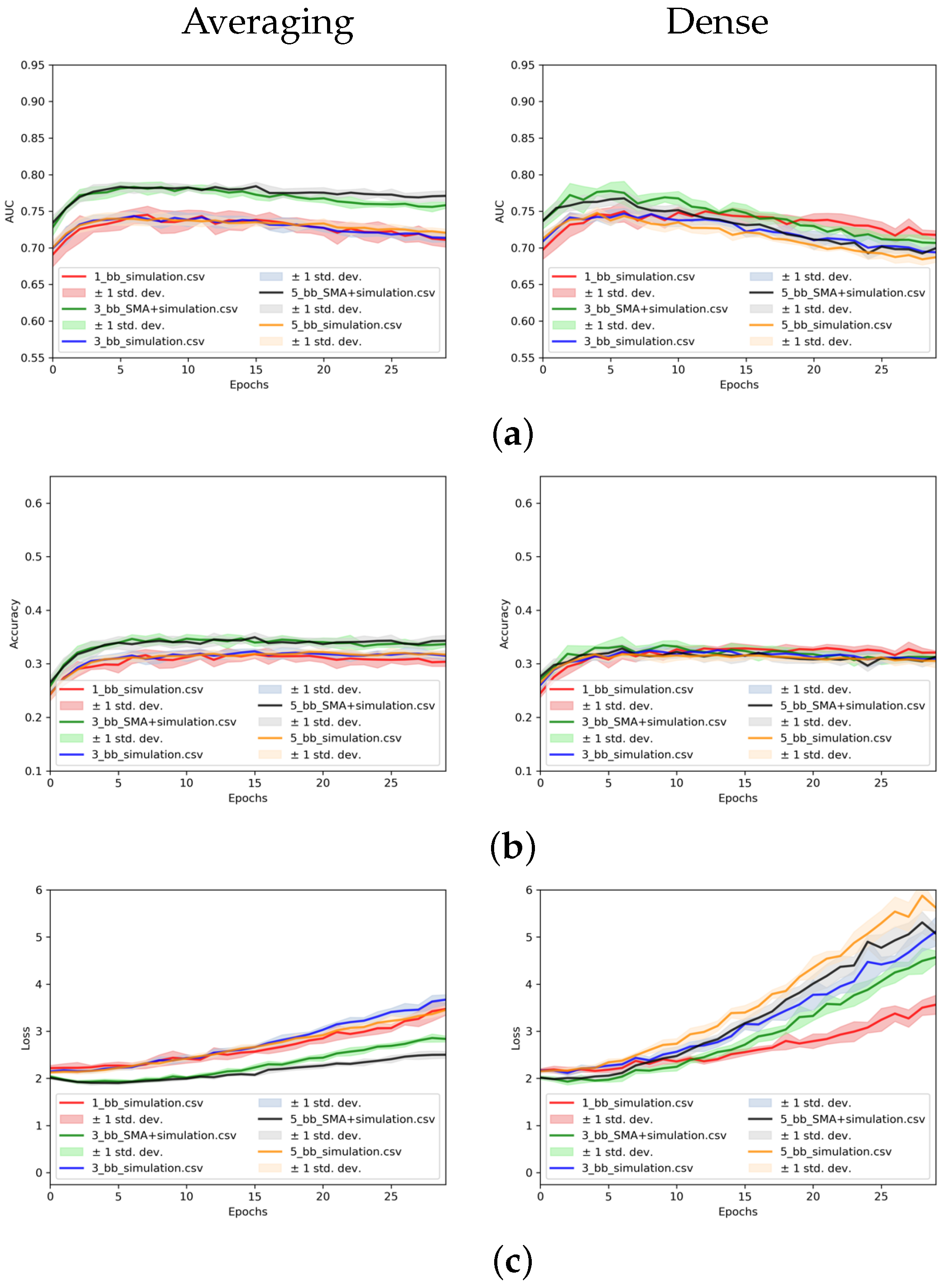

4.2. Validation on the “Simulation” and “SMA + Simulation” Datasets

5. Discussion

- comparing with DA during training techniques,

- validating models on larger datasets and real images with varying light conditions or camera settings,

- estimating the impact of different sensor settings and camera sensor types for SMAs.

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Correction Statement

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| AP | Average Precision |
| AUC | Area Under the Curve |
| CLIP | Contrastive Language-Image Pretraining |
| CNN | Convolutional Neural Network |
| CPU | Central Processing Unit |
| CV | Computer Vision |
| DA | Data Augmentation |
| DCSA | Dual-path Compressed Sensing Attention |
| DKDMN | Data- and Knowledge-driven Deep Multi-view Network |
| DL | Deep Learning |
| DNN | Deep Neural Network |
| EC | Edge Computing |
| EI | Edge Intelligence |
| FedPCL | Federated Prototype-wise Contrastive Learning |
| FL | Federated Learning |
| GPU | Graphics Processing Unit |
| HECNNet | Hybrid Ensemble CNN Network |
| HSI | Hyperspectral Image Classification |
| IoT | Internet of Things |
| IOU | Intersection over Union |
| ISP | Image Signal Processing |
| MB | Multi-Backbone |
| MBBNet | Multi-BackBone Network |
| MBICF | MB Integration Classification Framework |
| MBMT-Net | MB Multi-Task Network |
| ML | Machine Learning |
| NN | Neural Network |
| PIL | Python Imaging Library |
| QPS | Queries Per Second |
| RAM | Random Access Memory |
| RGB | Red Green Blue |
| ROC | Receiver Operating Characteristic |
| SDR | Standard Dynamic Range |
| SMA | Synchronized Multi Augmentation |
| SOTA | State-Of-The-Art |
| TTA | Test Time Augmentation |
| ViT | Vision Transformer |

References

1. Kelley, H.J. Gradient theory of optimal flight paths. Ars J. 1960, 30, 947–954.

2. Ivakhnenko, A.; Lapa, V. Cybernetic Predicting Devices. 1966. Available online: https://apps.dtic.mil/sti/citations/AD0654237 (accessed on 8 April 2025).

3. Linnainmaa, S. Taylor expansion of the accumulated rounding error. BIT Numer. Math. 1976, 16, 146–160.

4. Fukushima, K. Neural network model for a mechanism of pattern recognition unaffected by shift in position-Neocognitron. IEICE Tech. Rep. 1979, 62, 658–665.

5. Williams, R. Complexity of Exact Gradient Computation Algorithms for Recurrent Neural Networks; Technical Report NU-CCS-89-27; Northeastern University, College of Computer Science: Boston, MA, USA, 1989.

6. Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

7. Schmidhuber, J. Deep learning in neural networks: An overview. Neural Netw. 2015, 61, 85–117.

8. LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

9. Schmidhuber, J. The 2010s: Our Decade of Deep Learning/Outlook on the 2020s. SwissCognitive, The Global AI Hub, 2020. Available online: https://swisscognitive.ch/2020/03/11/the-2010s-our-decade-of-deep-learning-outlook-on-the-2020s/ (accessed on 8 April 2025).

10. Howard, A.; Sandler, M.; Chu, G.; Chen, L.C.; Chen, B.; Tan, M.; Wang, W.; Zhu, Y.; Pang, R.; Vasudevan, V.; et al. Searching for MobileNetV3. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1314–1324.

11. Krizhevsky, A.; Hinton, G. Learning Multiple Layers of Features from Tiny Images; 2009. Available online: http://www.cs.utoronto.ca/~kriz/learning-features-2009-TR.pdf (accessed on 8 April 2025).

12. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1097–1105.

13. Gordienko, N.; Gordienko, Y.; Stirenko, S. Synchronized Multi-Augmentation with Multi-Backbone Ensembling for Enhancing Deep Learning Performance. Appl. Syst. Innov. 2025, 8, 18.

14. Ciaparrone, G.; Bardozzo, F.; Priscoli, M.D.; Kallewaard, J.L.; Zuluaga, M.R.; Tagliaferri, R. A comparative analysis of multi-backbone Mask R-CNN for surgical tools detection. In Proceedings of the IEEE International Joint Conference on Neural Networks (IJCNN), Glasgow, UK, 19–24 July 2020; pp. 1–8.

15. Tiwari, R.G.; Maheshwari, H.; Agarwal, A.K.; Jain, V. HECNNet: Hybrid Ensemble Convolutional Neural Network Model with Multi-Backbone Feature Extractors for Soybean Disease Classification. In Proceedings of the 2024 IEEE 2nd International Conference on Intelligent Data Communication Technologies and Internet of Things (IDCIoT), Bengaluru, India, 4–6 January 2024; pp. 813–818.

16. Ouyang, Z.; Niu, J.; Ren, T.; Li, Y.; Cui, J.; Wu, J. MBBNet: An edge IoT computing-based traffic light detection solution for autonomous bus. J. Syst. Archit. 2020, 109, 101835.

17. Ciubotariu, G.; Czibula, G. MBMT-net: A multi-task learning based convolutional neural network architecture for dense prediction tasks. IEEE Access 2022, 10, 125600–125615.

18. Shin, J.; Kaneko, Y.; Miah, A.S.M.; Hassan, N.; Nishimura, S. Anomaly Detection in Weakly Supervised Videos Using Multistage Graphs and General Deep Learning Based Spatial-Temporal Feature Enhancement. IEEE Access 2024, 12, 65213–65227.

19. Zhang, J.; Zhao, F.; Liu, H.; Yu, J. Data and knowledge-driven deep multiview fusion network based on diffusion model for hyperspectral image classification. Expert Syst. Appl. 2024, 249, 123796.

20. Ye, M.; Wu, Z.; Chen, C.; Du, B. Channel Augmentation for Visible-Infrared Re-Identification. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 2299–2315.

21. Cubuk, E.D.; Zoph, B.; Mane, D.; Vasudevan, V.; Le, Q.V. AutoAugment: Learning augmentation strategies from data. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 113–123.

22. Ljungbergh, W.; Johnander, J.; Petersson, C.; Felsberg, M. Raw or cooked? Object detection on raw images. In Scandinavian Conference on Image Analysis; Springer: Cham, Switzerland, 2023; pp. 374–385.

23. Xu, R.; Chen, C.; Peng, J.; Li, C.; Huang, Y.; Song, F.; Yan, Y.; Xiong, Z. Toward raw object detection: A new benchmark and a new model. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 13384–13393.

24. Datta, G.; Liu, Z.; Yin, Z.; Sun, L.; Jaiswal, A.R.; Beerel, P.A. Enabling ISPless Low-Power Computer Vision. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 2–7 January 2023; pp. 2429–2438.

25. Taran, V.; Gordienko, Y.; Rokovyi, O.; Alienin, O.; Kochura, Y.; Stirenko, S. Edge intelligence for medical applications under field conditions. Adv. Artif. Syst. Logist. Eng. 2022, 135, 71–80.

26. Polukhin, A.; Gordienko, Y.; Jervan, G.; Stirenko, S. Edge Intelligence Resource Consumption by UAV-based IR Object Detection. In Proceedings of the 2023 Workshop on UAVs in Multimedia: Capturing the World from a New Perspective, Ottawa, ON, Canada, 29 October–3 November 2023.

27. Krizhevsky, A. The CIFAR-10 Dataset. 2009. Available online: https://www.cs.toronto.edu/~kriz/cifar.html (accessed on 28 January 2024).

28. Howard, A.G. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

29. Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

30. Bradley, A.P. The use of the area under the ROC curve in the evaluation of machine learning algorithms. Pattern Recognit. 1997, 30, 1145–1159.

31. leondgarse; Awsaf; Cleres, D.; Haghpanah, M.A. leondgarse/keras_cv_attention_models: Cspnext_pretrained (cspnext). 2024. Available online: https://zenodo.org/records/10499598 (accessed on 8 April 2025).

32. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

33. Gordienko, N. SMA Code Examples and Results of Training SMA Model. 2024. Available online: https://www.kaggle.com/code/pepsissalom/spatial-synchronized-multi-augmentation (accessed on 8 April 2025).

34. Dieleman, S.; Van den Oord, A.; Korshunova, I.; Burms, J.; Degrave, J.; Pigou, L.; Buteneers, P. Classifying plankton with deep neural networks. Blog Entry 2015, 3, 4.

35. Clark, A. Pillow (PIL Fork) Documentation. 2015. Available online: https://buildmedia.readthedocs.org/media/pdf/pillow/latest/pillow.pdf (accessed on 8 April 2025).

36. Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

| N_b | Validation Dataset | Fusion | Accuracy | AUC | Loss |
|---|---|---|---|---|---|
| 1 | original | - |  |  |  |
| 3 | SMA | Averaging |  |  |  |
| 5 | SMA | Averaging |  |  |  |
| 1 | original | - |  |  |  |
| 3 | SMA | Dense |  |  |  |
| 5 | SMA | Dense |  |  |  |

| N | Validation Dataset | Fusion | Accuracy | AUC | Loss |
|---|---|---|---|---|---|
| 1 | simulation | - |  |  |  |
| 3 | simulation + SMA | Averaging |  |  |  |
| 3 | simulation | Averaging |  |  |  |
| 5 | simulation + SMA | Averaging |  |  |  |
| 5 | simulation | Averaging |  |  |  |
| 1 | simulation | - |  |  |  |
| 3 | simulation + SMA | Dense |  |  |  |
| 3 | simulation | Dense |  |  |  |
| 5 | simulation + SMA | Dense |  |  |  |
| 5 | simulation | Dense |  |  |  |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Gordienko, N.; Gordienko, Y.; Stirenko, S. Enhancing Deep Learning Sustainability by Synchronized Multi Augmentation with Rotations and Multi-Backbone Architectures. Big Data Cogn. Comput. 2025, 9, 115. https://doi.org/10.3390/bdcc9050115
