1. Introduction

Point-of-Interest (POI) recommendation plays a vital role in location-based services (LBS), enabling users to efficiently discover potential destinations based on their historical trajectories. Among traditional POI recommendation techniques, Collaborative Filtering (CF) stands out as one of the most classical approaches [1]. By analyzing user–POI interaction histories, CF uncovers similarities among users or POIs to predict user interests. This method is simple to implement, offers strong interpretability, and performs effectively in data-rich scenarios by capturing collaborative patterns. However, CF suffers from several inherent limitations. First, interaction data are often sparse, with many users or POIs lacking sufficient histories, which undermines generalization in cold-start and long-tail settings. Second, CF neglects rich contextual information such as temporal patterns, geographical constraints, and semantic attributes of POIs, all of which play critical roles in shaping user mobility and preference decisions [2]. Consequently, a pressing challenge in POI recommendation lies in integrating spatio-temporal features and semantic correlations to improve accuracy.

To address these limitations, Graph Neural Networks (GNNs) have emerged as an effective approach in recommendation systems [3,4]. GNNs excel at modeling high-order dependencies between nodes and their neighbors in graph-structured data. Through iterative message passing, GNNs aggregate multi-hop neighborhood features to learn expressive user and POI representations [5,6]. This capability makes GNNs well-suited for modeling complex user–POI–context interaction networks: they help alleviate sparsity and cold-start issues while capturing spatio-temporal dynamics and semantic correlations, enhancing both accuracy and interpretability. Nevertheless, applying GNNs to POI recommendation remains challenging. First, user interests shift rapidly over time and across locations, and static graph modeling fails to fully capture these spatio-temporal dynamics. Second, POI semantic attributes and contextual features are high-dimensional and structurally diverse, making it difficult to align them with user behavior sequences. Third, deep GNN architectures are prone to over-smoothing, where the representations of distinct users or POIs converge and lose discriminative power [7]. Hence, designing GNN-based methods that jointly model spatio-temporal dependencies and semantic attributes while remaining efficient and interpretable is a central challenge in POI recommendation research.

Although GNN-based methods have achieved notable success in POI recommendation, most existing approaches remain confined to single-perspective modeling. Some methods focus primarily on structural relations in user–POI interaction graphs but fail to capture temporal and spatial dynamics effectively [8,9]. Others emphasize semantic integration while neglecting the profound impact of spatio-temporal signals on user mobility behavior [10]. Moreover, discrepancies and semantic gaps across heterogeneous feature channels hinder effective alignment of multimodal information within a unified representation space [11,12,13].

In particular, recent contrastive learning-based recommender models (e.g., LightGCL [5], MDGCL [14]) predominantly operate under a single-view or dual-view setting, focusing on either interaction–semantic or interaction–temporal alignment. These methods do not explicitly model spatio-temporal and semantic dependencies simultaneously in a unified, interaction-aware manner. Similarly, transformer–graph hybrid approaches (e.g., TransGNN [15]) and trajectory-based next-POI prediction models (e.g., STP-Rec [16], GETNext [17]) integrate multi-modal signals to some extent, but they lack a unified dual-channel contrastive alignment mechanism that captures both local sequential dynamics (micro level) and global semantic correlations (macro level) within a single optimization objective. Specifically, existing approaches do not jointly optimize spatio-temporal embeddings and semantic representations through dual-channel contrastive alignment to enhance representation diversity and mitigate over-smoothing. In contrast, our method introduces complementary contrastive objectives at both the micro and macro levels, enabling the model to capture fine-grained behavioral shifts while embedding higher-order semantic dependencies, which distinguishes it from prior multi-view GNN-contrastive methods.

Research Gap and Contribution. Although recent contrastive learning-based approaches have enhanced graph representations [5,6,18], most operate under a single-view or loosely coupled dual-view paradigm. These methods mainly reinforce local structures or modality-specific information but fail to model coordinated interactions between spatio-temporal dynamics and semantic knowledge. Existing hybrid GNN–contrastive models (e.g., LightGCL [5], TransGNN [15], STP-Rec [16]) typically align only two perspectives, which limits representation diversity and can allow one view to dominate. To address these limitations, we propose a dual-channel contrastive alignment framework. Micro-level objectives refine short-term mobility patterns, while macro-level alignment consolidates semantic and long-range correlations. The two channels are jointly optimized to provide complementary contextual signals, improving representation quality across heterogeneous modalities. We argue that dual-channel alignment is advantageous because it captures both sequential dependencies and semantic structures within one optimization objective, which counteracts over-smoothing and preserves view-specific diversity. To our knowledge, this is the first work to integrate spatio-temporal and multidimensional semantic knowledge through coordinated dual-channel contrastive learning, resulting in stronger generalization for POI recommendation.

To overcome these challenges, we propose a novel framework: Spatio-Temporal and Semantic Dual-Channel Contrastive Alignment for POI Recommendation (S2DCRec). Unlike existing approaches, our framework jointly models spatio-temporal dynamics and multi-dimensional semantic dependencies within a unified contrastive optimization scheme, where micro-level alignment refines sequential mobility patterns and macro-level alignment consolidates high-order semantic abstraction across POIs. Building upon recent advances in contrastive representation learning [5,19] and dynamic graph reasoning [20,21], we construct an integrated architecture that captures both local and global dependencies for robust POI recommendation.

The proposed framework consists of two core components. First, the Multi-Graphs Embedding Module constructs three complementary graph views: collaborative interaction graphs, spatio-temporal graphs, and semantic knowledge graphs. Each graph view is processed using customized GNN encoders to generate high-quality embeddings, capturing complex topological structures and multi-scale neighborhood dependencies. This design ensures effective aggregation of nodes and preserves both local and global structural information. Second, the Contrastive Alignment on Spatio-Temporal and Semantic Dual-Channel performs fine-grained contrastive learning in two stages. At the micro level, collaborative interaction embeddings are aligned with spatio-temporal embeddings to capture short-term and local dependencies. At the macro level, these embeddings are further integrated with semantic embeddings to consolidate high-order semantic and structural correlations among POIs. This dual-channel contrastive alignment enables the model to learn complementary contextual signals from both sequential and semantic perspectives, improving representation quality for POI recommendation.

Our contributions in this work can be summarized as follows.

We emphasize the importance of jointly modeling spatio-temporal dynamics and semantic correlations in POI recommendation. With the design of a dual-channel contrastive alignment model, this work provides a novel perspective for effectively incorporating heterogeneous contextual signals into graph-based recommender systems;

We propose a new model, S2DCRec, that adopts a dual-channel design to integrate spatio-temporal and semantic information. Specifically, we construct a multi-graph embedding module where customized GNN encoders generate complementary representations from collaborative, spatio-temporal, and semantic graphs. Moreover, a dual-channel contrastive alignment mechanism is designed to fuse micro-level spatio-temporal dependencies with macro-level high-order semantic structures, achieving cross-channel enhancement and information complementarity;

Extensive experiments are conducted on two benchmark datasets, Foursquare NYC and Yelp. The results demonstrate that S2DCRec significantly outperforms state-of-the-art baselines, validating its effectiveness in POI recommendation.

3. Problem Formulation

In this section, we first introduce three essential forms of structured data, namely the Collaborative Interaction Graph constructed from user–item interactions, the Spatio-Temporal Graph capturing temporal and geographical dynamics, and the Semantic Knowledge Graph encoding auxiliary knowledge. We then formalize the recommendation task as a spatio-temporal–semantic dual-channel contrastive alignment problem. The frequently used notations are explained in Table 1.

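Collaborative Interaction Graph. Let $\mathcal{U} = \{u_1, \dots, u_M\}$ and $\mathcal{V} = \{v_1, \dots, v_N\}$ denote the sets of $M$ users and $N$ POIs (Points-of-Interest), respectively. The user–POI interaction data are represented as an implicit feedback matrix $\mathbf{Y} \in \{0,1\}^{M \times N}$, where each entry $y_{uv}$ indicates the implicit preference of user $u$ toward POI $v$. Specifically, we define

$$
y_{uv} =
\begin{cases}
1, & \text{if an interaction between user } u \text{ and POI } v \text{ is observed},\\
0, & \text{otherwise}.
\end{cases}
$$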

In addition, each interaction is associated with rich contextual information, such as temporal attributes (e.g., timestamp, day of the week, hour) and spatial attributes (e.g., geographical coordinates, city, neighborhood).
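As a minimal sketch of how the implicit feedback matrix $\mathbf{Y}$ could be populated, the snippet below assumes check-ins arrive as (user, POI) identifier pairs. The function name `build_implicit_matrix` and the toy data are hypothetical and not part of the original method.

```python
from typing import Dict, List, Tuple


def build_implicit_matrix(
    checkins: List[Tuple[str, str]],
) -> Tuple[List[List[int]], Dict[str, int], Dict[str, int]]:
    """Map (user, POI) check-in pairs to a binary M x N matrix Y."""
    user_index: Dict[str, int] = {}
    poi_index: Dict[str, int] = {}
    for user, poi in checkins:
        # Assign row/column indices in order of first appearance.
        user_index.setdefault(user, len(user_index))
        poi_index.setdefault(poi, len(poi_index))
    Y = [[0] * len(poi_index) for _ in range(len(user_index))]
    for user, poi in checkins:
        # Repeated visits still give y_uv = 1 (implicit, binary feedback).
        Y[user_index[user]][poi_index[poi]] = 1
    return Y, user_index, poi_index


# Hypothetical toy check-ins.
checkins = [("u1", "cafe"), ("u1", "museum"), ("u2", "cafe"), ("u1", "cafe")]
Y, users, pois = build_implicit_matrix(checkins)
print(Y)  # [[1, 1], [1, 0]]
```

Repeated check-ins collapse to a single 1, which is what makes the feedback binary; visit counts and timestamps would instead be carried as the contextual attributes mentioned above.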

Spatio-Temporal Graph. Apart from user–POI interactions, we construct a heterogeneous spatio-temporal knowledge graph

$$
\mathcal{G} = \{(h, r, t) \mid h, t \in \mathcal{E},\ r \in \mathcal{R}\},
$$

where $h$, $r$, and $t$ denote the head entity, relation, and tail entity of a knowledge triple, respectively. $\mathcal{E}$ is the set of entities containing POIs, users, temporal features, and spatial features, and $\mathcal{R}$ is the set of semantic and spatio-temporal relations.

An example triple, $(v_i, \text{located\_in}, \text{city}_i)$, indicates that POI $v_i$ is geographically located in $\text{city}_i$. A temporal triple $(v_i, \text{peak\_hour}, \text{hour}_{18})$ denotes that the typical visitation peak of POI $v_i$ is at 18:00. Such spatio-temporal triples enrich the semantic context of POIs and users. Additionally, entities can be associated with semantic triples (e.g., $(v_i, \text{has\_category}, \text{restaurant})$), which further profile the nature of POIs.
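The triples above can be stored as plain (head, relation, tail) tuples and indexed by head entity. The following sketch uses the example entities from the text plus a hypothetical second POI `v2`; it only illustrates one possible data layout, not the paper's storage format.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Triple = Tuple[str, str, str]  # (head h, relation r, tail t)

# Example triples from the text; "v2" is an extra illustrative POI.
triples: List[Triple] = [
    ("v1", "located_in", "city1"),
    ("v1", "peak_hour", "hour18"),
    ("v1", "has_category", "restaurant"),
    ("v2", "has_category", "restaurant"),
]


def build_neighbors(kg: List[Triple]) -> Dict[str, List[Tuple[str, str]]]:
    """Index each head entity by its outgoing (relation, tail) pairs."""
    neighbors: Dict[str, List[Tuple[str, str]]] = defaultdict(list)
    for h, r, t in kg:
        neighbors[h].append((r, t))
    return dict(neighbors)


entities = {h for h, _, _ in triples} | {t for _, _, t in triples}  # entity set E
relations = {r for _, r, _ in triples}                              # relation set R
nbrs = build_neighbors(triples)
print(sorted(relations))
print(nbrs["v2"])  # [('has_category', 'restaurant')]
```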

Semantic Knowledge Graph. We construct a heterogeneous semantic knowledge graph linking users, POIs, and related entities (spatial, temporal, semantic) via multi-hop relations. Typical paths, such as $u \rightarrow v \xrightarrow{r} e$ with $r \in \mathcal{R}$ and $e \in \mathcal{E}$, preserve complete relational chains from user to POI to entities. This structure enriches contextual representation and supports reasoning over spatial, temporal, and semantic attributes for better recommendation.
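The user-to-POI-to-entity chains can be enumerated by joining visit records with POI attribute triples. This sketch assumes visits are given as a mapping from each user to a set of POIs; `user_poi_entity_paths` and the toy data are hypothetical.

```python
from typing import Dict, List, Set, Tuple


def user_poi_entity_paths(
    visits: Dict[str, Set[str]],
    poi_triples: List[Tuple[str, str, str]],
) -> List[Tuple[str, str, str, str]]:
    """Enumerate two-hop chains u -> v -(r)-> e as (u, v, r, e) tuples."""
    by_poi: Dict[str, List[Tuple[str, str]]] = {}
    for v, r, e in poi_triples:
        by_poi.setdefault(v, []).append((r, e))
    paths = []
    for u in sorted(visits):            # sorted for a deterministic order
        for v in sorted(visits[u]):
            for r, e in by_poi.get(v, []):
                paths.append((u, v, r, e))
    return paths


visits = {"u1": {"v1"}, "u2": {"v1", "v2"}}
poi_triples = [("v1", "has_category", "restaurant"), ("v2", "located_in", "city1")]
for path in user_poi_entity_paths(visits, poi_triples):
    print(path)
```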

Problem Statement. Given a user–POI interaction matrix $\mathbf{Y}$ and a spatio-temporal semantic knowledge graph $\mathcal{G}$, our goal is to learn a predictive function that estimates the likelihood of interaction between a user $u$ and a POI $v$. Formally, the model outputs $\hat{y}_{uv} = \mathcal{F}(u, v \mid \mathbf{Y}, \mathcal{G}; \Theta)$, where $\Theta$ denotes the model parameters; $\hat{y}_{uv}$ quantifies the probability that user $u$ will visit or interact with POI $v$.

In the following, we introduce the Spatio-Temporal and Semantic Dual-Channel Contrastive Alignment framework for POI recommendation (S2DCRec).

4. Methodology

In this section, we introduce S2DCRec, an innovative POI recommendation framework that leverages a dual-channel contrastive alignment mechanism to comprehensively capture both spatio-temporal distributions and multi-dimensional semantic correlations of POIs. As illustrated in Figure 1, the proposed framework consists of two key components.

The first component is the Multi-Graphs Embedding Module Generation, which consists of three essential units: a collaborative interaction embedding module, a spatio-temporal embedding module, and a semantic embedding module. Corresponding to these heterogeneous perspectives, we construct a Collaborative Interaction Graph, a Spatio-Temporal Graph, and a Semantic Knowledge Graph. To encode these multi-view structures, we employ a LightGCN Encoder [8], a GraphTrans Encoder, and a Long-range Connection GNN (LTGNN) Encoder [26], respectively. Together, these encoders produce three complementary types of embeddings that capture the topological characteristics and multi-scale neighborhood dependencies of POI graphs while efficiently aggregating task-relevant contextual signals.

The second component is the Contrastive Alignment on Spatio-Temporal and Semantic Dual-Channel. Specifically, the collaborative interaction embedding module and the spatio-temporal embedding module jointly produce micro-level representations, on which fine-grained contrastive learning is conducted in the spatio-temporal channel. These micro-level representations are then integrated with the output of the semantic embedding module to form macro-level representations, on which contrastive alignment is performed in the semantic channel to capture higher-order correlations between structure and semantics.

The dual-channel alignment enhances node representation diversity by simultaneously integrating micro-level (local) and macro-level (global) information. Consider the single-channel alternatives. Using only the micro-level channel relies solely on neighbor information, which can cause node embeddings to become overly similar in deep networks (over-smoothing). Using only the macro-level channel captures global semantic and long-range dependencies but ignores local structural features. The dual-channel fusion preserves local node features while introducing complementary global information; this mitigates over-smoothing, improves the discriminative power and diversity of representations, and strengthens the model's ability to capture complex graph structures and semantic patterns. This makes it an effective multi-scale feature fusion strategy [5,14,19].
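The over-smoothing argument can be illustrated with a toy experiment (not one from the paper): stacking parameter-free neighbour-averaging layers on a small connected graph shrinks the gap between node embeddings layer by layer, which is the loss of discriminability that the macro-level channel is meant to counteract. The graph, embeddings, and depth below are arbitrary choices for illustration.

```python
from typing import List

Matrix = List[List[float]]


def mean_aggregate(adj: List[List[int]], emb: Matrix) -> Matrix:
    """One parameter-free layer: each node takes the mean of itself and its neighbours."""
    out = []
    for i, nbrs in enumerate(adj):
        group = [i] + nbrs  # self-loop plus neighbours
        out.append([sum(emb[j][d] for j in group) / len(group) for d in range(len(emb[i]))])
    return out


def max_spread(emb: Matrix) -> float:
    """Largest per-dimension gap between any two node embeddings."""
    return max(max(col) - min(col) for col in zip(*emb))


adj = [[1], [0, 2], [1, 3], [2]]  # toy path graph 0-1-2-3
emb = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0]]
spreads = []
for depth in range(1, 33):
    emb = mean_aggregate(adj, emb)
    spreads.append(max_spread(emb))
print([round(spreads[d - 1], 4) for d in (1, 2, 8, 32)])
```

Because each layer replaces a node by a convex combination of itself and its neighbours, the per-dimension spread can never grow, and on a connected graph with self-loops it decays toward zero.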

4.1. Multi-Graphs Embedding Module Generation

In this stage, we begin by constructing the Semantic Knowledge Graph based on the original User–POI–Entities tripartite graph, which systematically captures the macro-level semantic associations among users, points of interest (POIs), and their multi-dimensional attribute entities. Subsequently, we partition the semantic graph into two complementary subgraphs: (1) the User–POI Subgraph, designed to model the direct interaction relationships between users and POIs, which we denote as the Collaborative Interaction Graph; and (2) the POI–Entities Subgraph, designed to characterize the structural relations between POIs and their multi-faceted attribute entities, which we regard as the Spatio-Temporal Graph.

To effectively encode the structural characteristics of these three views, we employ tailored graph encoders: a Long-range Connection GNN Encoder, a LightGCN Encoder, and a GraphTrans Encoder. These correspondingly give rise to three core embedding modules—namely, the semantic embedding module, the collaborative interaction embedding module, and the spatio-temporal embedding module. Together, they lay the representational foundation for the subsequent spatio-temporal and semantic dual-channel contrastive alignment learning.

The three graph views interact in a hierarchical manner: the collaborative interaction graph and the spatio-temporal graph are first fused at the micro-level to capture fine-grained user–POI relationships and sequential dynamics. These fused micro-level embeddings are then aligned with the Semantic Knowledge Graph at the macro-level through dual-channel contrastive learning, ensuring that high-level semantic dependencies and long-range interactions complement the detailed spatio-temporal patterns. This hierarchical interaction enables the model to jointly capture both local and global relational structures, facilitating more accurate POI representation learning.

4.1.1. Collaborative Interaction Embedding Module

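We begin by constructing three unified graph structures, following the process outlined below. First, leveraging a proposed relation-aware aggregation mechanism, we recursively learn the $K$-step representations of items from the knowledge graph $\mathcal{G}$. The update rule is defined as

$$
\mathbf{e}_i^{(k+1)} = \frac{1}{|\mathcal{N}_i|} \sum_{(r, v) \in \mathcal{N}_i} \phi\big(\mathbf{e}_r, \mathbf{e}_v^{(k)}\big),
$$

where $\mathbf{e}_i^{(k)}$ and $\mathbf{e}_v^{(k)}$ denote the $k$-th layer representations of item $i$ and entity $v$, respectively, and $\mathcal{N}_i = \{(r, v) \mid (i, r, v) \in \mathcal{G}\}$ is the set of relation–entity pairs linked to item $i$. The path composition function is defined as

$$
\phi\big(\mathbf{e}_r, \mathbf{e}_v^{(k)}\big) = \mathbf{e}_r \odot \mathbf{e}_v^{(k)},
$$

where $\odot$ denotes element-wise interaction. For each triplet $(i, r, v)$, a relation-specific message $\phi(\mathbf{e}_r, \mathbf{e}_v^{(k)})$ is designed, where the relation $r$ is modeled via projection or rotation operators to capture distinct semantic meanings of the triplet.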
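A minimal sketch of one relation-aware propagation step, assuming the element-wise composition $\mathbf{e}_r \odot \mathbf{e}_v^{(k)}$ as the relation-specific message and a plain mean over an item's (relation, entity) neighbours. Projection- or rotation-based operators would replace the element-wise product; all names and values are hypothetical.

```python
from typing import Dict, List, Tuple

Vector = List[float]


def relation_aware_step(
    item_neighbors: Dict[str, List[Tuple[str, str]]],  # item -> [(relation, entity)]
    rel_emb: Dict[str, Vector],
    ent_emb: Dict[str, Vector],
) -> Dict[str, Vector]:
    """One propagation step: mean over (r, v) of the message e_r ⊙ e_v."""
    out: Dict[str, Vector] = {}
    for item, pairs in item_neighbors.items():
        if not pairs:
            continue  # items without KG neighbours are skipped in this sketch
        acc = [0.0] * len(rel_emb[pairs[0][0]])
        for r, v in pairs:
            # Element-wise product: a relation-specific rescaling of the entity vector.
            acc = [a + x * y for a, x, y in zip(acc, rel_emb[r], ent_emb[v])]
        out[item] = [a / len(pairs) for a in acc]
    return out


rel_emb = {"has_category": [1.0, 0.0], "located_in": [0.5, 2.0]}
ent_emb = {"restaurant": [2.0, 4.0], "city1": [2.0, 1.0]}
item_neighbors = {"v1": [("has_category", "restaurant"), ("located_in", "city1")]}
print(relation_aware_step(item_neighbors, rel_emb, ent_emb))  # {'v1': [1.5, 1.0]}
```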

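Subsequently, an item–item similarity graph is constructed based on the cosine similarity of the $K$-step item representations $\mathbf{e}_i^{(K)}$:

$$
S_{ij} = \frac{\big(\mathbf{e}_i^{(K)}\big)^{\top} \mathbf{e}_j^{(K)}}{\big\|\mathbf{e}_i^{(K)}\big\|\,\big\|\mathbf{e}_j^{(K)}\big\|}.
$$

To reduce computational complexity and mitigate noise, the fully connected POI–POI graph is sparsified using $k$-nearest neighbors (KNN), keeping only the top-$k$ entries of each row:

$$
\hat{S}_{ij} =
\begin{cases}
S_{ij}, & \text{if } S_{ij} \in \text{top-}k(\mathbf{S}_{i}),\\
0, & \text{otherwise}.
\end{cases}
$$

Normalization is applied to alleviate issues such as gradient explosion or vanishing:

$$
\tilde{\mathbf{S}} = \mathbf{D}^{-1/2}\, \hat{\mathbf{S}}\, \mathbf{D}^{-1/2},
$$

where $\mathbf{D}$ is the diagonal degree matrix of $\hat{\mathbf{S}}$.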
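The similarity, sparsification, and normalization steps can be sketched in plain Python as follows. Excluding self-similarity from the KNN candidates and using row sums as degrees are assumptions of this sketch, and the embeddings are toy values.

```python
import math
from typing import List

Matrix = List[List[float]]


def cosine_similarity_matrix(emb: Matrix) -> Matrix:
    """S_ij = <e_i, e_j> / (||e_i|| ||e_j||); zero vectors get norm 1 to avoid division by zero."""
    norms = [math.sqrt(sum(x * x for x in row)) or 1.0 for row in emb]
    n = len(emb)
    return [[sum(a * b for a, b in zip(emb[i], emb[j])) / (norms[i] * norms[j])
             for j in range(n)] for i in range(n)]


def knn_sparsify(S: Matrix, k: int) -> Matrix:
    """Keep the k largest entries of each row and zero the rest.

    Excluding the diagonal (self-similarity) is an assumption of this sketch.
    """
    n = len(S)
    out = [[0.0] * n for _ in range(n)]
    for i in range(n):
        others = sorted((j for j in range(n) if j != i), key=lambda j: S[i][j], reverse=True)
        for j in others[:k]:
            out[i][j] = S[i][j]
    return out


def sym_normalize(S: Matrix) -> Matrix:
    """D^{-1/2} S D^{-1/2}, where D holds the row sums (degrees) of S."""
    inv_sqrt = [1.0 / math.sqrt(d) if d > 0 else 0.0 for d in (sum(row) for row in S)]
    n = len(S)
    return [[inv_sqrt[i] * S[i][j] * inv_sqrt[j] for j in range(n)] for i in range(n)]


# Toy 2-d item embeddings: items 0 and 1 are near-duplicates, item 2 is orthogonal to item 0.
emb = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
S_tilde = sym_normalize(knn_sparsify(cosine_similarity_matrix(emb), k=1))
for row in S_tilde:
    print([round(x, 3) for x in row])
```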

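The Collaborative Interaction Graph View is encoded via LightGCN, which operates on the collaborative interaction graph to capture high-order collaborative dependencies. Its output embeddings reflect structural interactions and short-term user preferences, forming the micro-level collaborative channel for contrastive alignment. Aggregation at the $k$-th layer is given by

$$
\mathbf{e}_u^{(k+1)} = \sum_{i \in \mathcal{N}_u} \frac{1}{\sqrt{|\mathcal{N}_u|\,|\mathcal{N}_i|}}\, \mathbf{e}_i^{(k)}, \qquad
\mathbf{e}_i^{(k+1)} = \sum_{u \in \mathcal{N}_i} \frac{1}{\sqrt{|\mathcal{N}_u|\,|\mathcal{N}_i|}}\, \mathbf{e}_u^{(k)},
$$

where $\mathbf{e}_i^{(k)}$ and $\mathbf{e}_u^{(k)}$ represent the embeddings of POI $i$ and user $u$, and $\mathcal{N}_i$, $\mathcal{N}_u$ denote their corresponding neighbor sets.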
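One LightGCN layer over a bipartite user–POI graph can be sketched as follows, using LightGCN's standard symmetric normalization $1/\sqrt{|\mathcal{N}_u|\,|\mathcal{N}_i|}$ with no weight matrix and no nonlinearity. The dictionaries and toy data are hypothetical; a full encoder would stack several such layers and combine their outputs.

```python
import math
from typing import Dict, List, Set, Tuple

Vector = List[float]


def lightgcn_layer(
    user_emb: Dict[str, Vector],
    poi_emb: Dict[str, Vector],
    user_nbrs: Dict[str, Set[str]],  # N_u: POIs visited by user u
    poi_nbrs: Dict[str, Set[str]],   # N_i: users who visited POI i
) -> Tuple[Dict[str, Vector], Dict[str, Vector]]:
    """One LightGCN layer: normalised neighbour sum, no weight matrix, no nonlinearity."""

    def aggregate(targets: Dict[str, Set[str]], src_emb: Dict[str, Vector],
                  src_nbrs: Dict[str, Set[str]]) -> Dict[str, Vector]:
        out = {}
        for node, nbrs in targets.items():
            acc = [0.0] * len(next(iter(src_emb.values())))
            for s in nbrs:
                w = 1.0 / math.sqrt(len(nbrs) * len(src_nbrs[s]))  # 1/sqrt(|N_u||N_i|)
                acc = [a + w * x for a, x in zip(acc, src_emb[s])]
            out[node] = acc
        return out

    return aggregate(user_nbrs, poi_emb, poi_nbrs), aggregate(poi_nbrs, user_emb, user_nbrs)


user_nbrs = {"u1": {"cafe", "museum"}, "u2": {"cafe"}}
poi_nbrs = {"cafe": {"u1", "u2"}, "museum": {"u1"}}
user_emb = {"u1": [1.0, 0.0], "u2": [0.0, 1.0]}
poi_emb = {"cafe": [1.0, 1.0], "museum": [2.0, 0.0]}
new_user, new_poi = lightgcn_layer(user_emb, poi_emb, user_nbrs, poi_nbrs)
print(new_poi["museum"])  # approximately [0.7071, 0.0]
```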

4.1.2. Spatio-Temporal Embedding Module

The Spatio-Temporal Embedding Module constructs spatio-temporal graph views from POI–entity associations and encodes them via the proposed GraphTrans module, which integrates the complementary strengths of GNNs and Transformers. Attention-guided neighbor sampling is employed to reduce computational complexity. GraphTrans operates on user sequential behavior (time-ordered POI visits) to model spatio-temporal dynamics, and the resulting sequential dependency embeddings are used in the micro-level spatio-temporal channel, enabling alignment of temporal patterns across users and POIs.

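For any node $i$, its sampled neighborhood is defined as

$$
\mathcal{N}_s(i) = \big\{\, j \mid j \in \text{top-}s(\mathbf{S}_{i}) \big\},
$$

where $\mathbf{S}_{i}$ denotes the $i$-th row of the similarity matrix.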

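Shortest-path-hop-based positional encoding (SPE) is applied:

$$
\mathbf{p}_i = \mathrm{MLP}\big(\mathbf{P}_{i}\big),
$$

where $\mathbf{P}$ is the shortest-path hop matrix, $\mathbf{P}_{i}$ is its $i$-th row, and MLP is a two-layer feedforward network.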
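Two preprocessing steps of GraphTrans described above, similarity-based neighbour sampling and shortest-path hop computation, can be sketched as follows. Excluding the node itself from its own sample is an assumption, the two-layer MLP that maps hop counts to positional encodings is omitted, and the graph, similarity values, and function names are hypothetical.

```python
from collections import deque
from typing import Dict, List


def sample_neighbors(sim_row: List[float], i: int, s: int) -> List[int]:
    """Indices of the s nodes most similar to node i (node i itself excluded)."""
    others = sorted((j for j in range(len(sim_row)) if j != i),
                    key=lambda j: sim_row[j], reverse=True)
    return others[:s]


def shortest_path_hops(adj: Dict[int, List[int]], source: int, n: int) -> List[int]:
    """Row `source` of the shortest-path hop matrix via BFS; -1 marks unreachable nodes."""
    hops = [-1] * n
    hops[source] = 0
    queue = deque([source])
    while queue:
        u = queue.popleft()
        for v in adj.get(u, []):
            if hops[v] == -1:          # first visit in BFS is the shortest hop count
                hops[v] = hops[u] + 1
                queue.append(v)
    return hops


adj = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}   # toy path graph 0-1-2-3; node 4 isolated
sim_row_0 = [1.0, 0.2, 0.9, 0.5, 0.1]          # hypothetical similarities of node 0
sampled = sample_neighbors(sim_row_0, i=0, s=2)
P0 = shortest_path_hops(adj, source=0, n=5)
print(sampled, [P0[j] for j in sampled])       # [2, 3] [2, 3]
```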

GraphTrans encoder with multi-head attention:

GNN-based message passing:

Random-walk-based neighbor sampling: this step computes the neighbor sampling probability based on random walks, which measures the relational weight between node $i$ and its neighbor $j$.

Aggregated representation at layer $k$:

4.1.3. Semantic Embedding Module

The Semantic Embedding Module is constructed on the original User–POI–Entity tripartite graph, forming a Semantic Knowledge Graph View. To address the limitations of conventional GNNs in modeling long-distance dependencies, we design an improved Long-range Connection GNN (LRC-GNN). LRC-GNN operates on the semantic knowledge graph to capture multi-dimensional semantic correlations and long-range dependencies. The semantically enriched embeddings form the macro-level semantic channel, which is aligned with the micro-level fused embeddings to ensure cross-channel semantic consistency.

Aggregation at layer $k$:

where

, and

This module effectively captures multi-hop semantic interactions and strengthens the representation learning of POIs.

4.2. Contrastive Alignment on Spatio-Temporal and Semantic Dual-Channel

This component aims to integrate fine-grained spatio-temporal representations with high-level semantic information via a dual-channel contrastive alignment strategy. Specifically, we first perform joint modeling of the Collaborative Interaction Embedding Module and the Spatio-Temporal Embedding Module. By fusing multi-layer representations from the two views through weighted aggregation, we obtain fine-grained microscopic POI representations. These representations are subsequently projected into a contrastive learning space, where a microscopic-level contrastive loss $\mathcal{L}_{\mathrm{micro}}$ is defined to achieve discriminative alignment and enhancement within the spatio-temporal channel. Further, the microscopic representations are fused with the outputs of the Semantic Embedding Module, generating macroscopic representations that encode high-order dependencies within the semantic space and global graph topology. These macroscopic embeddings are then mapped into a contrastive space to define the macroscopic-level loss $\mathcal{L}_{\mathrm{macro}}$. Through contrastive alignment in the semantic channel, this process facilitates cross-level semantic collaboration and complementary information exchange, thereby improving the accuracy of POI recommendation.

To further illustrate the necessity of dual-channel alignment, consider two POIs $v_1$ and $v_2$ that belong to the same category (e.g., coffee shops) and are therefore closely related in the semantic space. However, if $v_1$ is frequently visited in combination with transportation hubs while $v_2$ appears mainly in residential areas, their local interaction structures and spatio-temporal dynamics differ significantly. Micro-level alignment enables the model to discriminate between such locally distinct behavioral patterns, whereas macro-level alignment ensures that the embeddings preserve their high-level semantic similarity. By jointly optimizing both levels, the model captures not only fine-grained sequential dependencies but also long-range semantic correlations, leading to more precise and context-aware POI representations.

4.2.1. Contrastive Alignment on Spatio-Temporal Channel

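Within the spatio-temporal channel, we first derive multi-layer embeddings of users and POIs from the Collaborative Interaction Graph. At each layer $k$, the Collaborative Interaction Embedding Module produces embeddings $\mathbf{e}_u^{(k)}$ and $\mathbf{e}_i^{(k)}$ for user $u$ and POI $i$, respectively. Since lower layers emphasize local neighborhood patterns while higher layers capture broader structural relations, we aggregate embeddings across all layers (from $0$ to $K$) to preserve multi-scale structural information:

$$
\mathbf{e}_u^{c} = \sum_{k=0}^{K} \mathbf{e}_u^{(k)}, \qquad \mathbf{e}_i^{c} = \sum_{k=0}^{K} \mathbf{e}_i^{(k)}.
$$

Here, $\mathbf{e}_u^{c}$ and $\mathbf{e}_i^{c}$ represent the cross-layer collaborative embeddings of users and POIs, respectively, which serve as inputs for subsequent contrastive alignment. Similarly, in the Spatio-Temporal Embedding Module, the representation of entity $i$ at the $k$-th layer is denoted as $\mathbf{e}_i^{st,(k)}$. To capture both short-range and long-range spatio-temporal dependencies, we perform layer-wise summation to obtain the cross-layer spatio-temporal embedding:

$$
\mathbf{e}_i^{st} = \sum_{k=0}^{K} \mathbf{e}_i^{st,(k)}.
$$

Here, $\mathbf{e}_i^{st}$ denotes the microscopic spatio-temporal representation of entity $i$, which participates in cross-channel contrastive learning.

Given $\mathbf{e}_i^{c}$ and $\mathbf{e}_i^{st}$, we conduct microscopic-level cross-view contrastive learning to encourage consistent and discriminative representation alignment. To map embeddings into the contrastive space, we apply a multi-layer perceptron (MLP) with a single hidden layer:

$$
\mathbf{z}_i^{c} = \mathbf{W}_2\, \eta\big(\mathbf{W}_1 \mathbf{e}_i^{c} + \mathbf{b}_1\big) + \mathbf{b}_2, \qquad
\mathbf{z}_i^{st} = \mathbf{W}_2\, \eta\big(\mathbf{W}_1 \mathbf{e}_i^{st} + \mathbf{b}_1\big) + \mathbf{b}_2,
$$

where $\mathbf{W}_1, \mathbf{W}_2$ and $\mathbf{b}_1, \mathbf{b}_2$ are trainable parameters, and $\eta(\cdot)$ denotes the ELU activation function. Following prior studies, we define positives and negatives as follows: for a given node in one view, its corresponding embedding in the other view is regarded as the positive sample, while the embeddings of all other nodes are treated as negative samples. Based on this, the microscopic contrastive loss is formulated as

$$
\mathcal{L}_{\mathrm{micro}} = -\frac{1}{N} \sum_{i=1}^{N} \log
\frac{\exp\big(\operatorname{sim}(\mathbf{z}_i^{c}, \mathbf{z}_i^{st})/\tau\big)}
{\sum_{j=1}^{N} \exp\big(\operatorname{sim}(\mathbf{z}_i^{c}, \mathbf{z}_j^{st})/\tau\big)},
$$

where $\operatorname{sim}(\cdot,\cdot)$ denotes the cosine similarity function, and $\tau$ is the temperature parameter. Specifically, the denominator term can be decomposed as

$$
\sum_{j=1}^{N} \exp\big(\operatorname{sim}(\mathbf{z}_i^{c}, \mathbf{z}_j^{st})/\tau\big)
= \sum_{j \neq i} \exp\big(\operatorname{sim}(\mathbf{z}_i^{c}, \mathbf{z}_j^{st})/\tau\big)
+ \exp\big(\operatorname{sim}(\mathbf{z}_i^{c}, \mathbf{z}_i^{st})/\tau\big),
$$

where the first part corresponds to inter-view negative pairs, while the last term denotes the positive pair.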
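The micro-level objective can be sketched end to end in plain Python: a layer-wise readout, the single-hidden-layer projection with ELU, and an InfoNCE loss in which node $i$'s embedding in the other view is the positive and all other nodes are inter-view negatives. The identity projection weights and toy embeddings are assumptions for illustration; in training, the weights are learned.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def layer_sum(layers: List[Vector]) -> Vector:
    """Cross-layer readout: element-wise sum of the layer-0..K embeddings."""
    return [sum(vals) for vals in zip(*layers)]


def elu(x: float, alpha: float = 1.0) -> float:
    return x if x > 0 else alpha * (math.exp(x) - 1.0)


def project(e: Vector, W1: Matrix, b1: Vector, W2: Matrix, b2: Vector) -> Vector:
    """Single-hidden-layer MLP: z = W2 · ELU(W1 · e + b1) + b2."""
    h = [elu(sum(w * x for w, x in zip(row, e)) + b) for row, b in zip(W1, b1)]
    return [sum(w * x for w, x in zip(row, h)) + b for row, b in zip(W2, b2)]


def cosine(a: Vector, b: Vector) -> float:
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    return sum(x * y for x, y in zip(a, b)) / (na * nb)


def info_nce(z1: List[Vector], z2: List[Vector], tau: float) -> float:
    """Mean over i of -log( exp(sim(z1_i, z2_i)/tau) / sum_j exp(sim(z1_i, z2_j)/tau) )."""
    total = 0.0
    for i, zi in enumerate(z1):
        logits = [cosine(zi, zj) / tau for zj in z2]
        m = max(logits)  # log-sum-exp shift for numerical stability
        log_denominator = m + math.log(sum(math.exp(l - m) for l in logits))
        # The denominator holds the positive pair (j == i) and all inter-view negatives (j != i).
        total += log_denominator - logits[i]
    return total / len(z1)


# Toy check with identity projection weights (hypothetical values).
I2 = [[1.0, 0.0], [0.0, 1.0]]
zero = [0.0, 0.0]
e_c = [layer_sum([[0.5, 0.0], [0.5, 0.0]]), layer_sum([[0.0, 0.5], [0.0, 0.5]])]
e_st = [[2.0, 0.0], [0.0, 3.0]]
z_c = [project(e, I2, zero, I2, zero) for e in e_c]
z_st = [project(e, I2, zero, I2, zero) for e in e_st]
print(round(info_nce(z_c, z_st, tau=0.5), 4))  # 0.1269
```

Swapping the order of `z_st` so that positives no longer match raises the loss, which is the behavior the objective rewards against.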

4.2.2. Contrastive Alignment on Semantic Channel

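In the semantic channel, we leverage the Semantic Embedding Module to obtain multi-layer representations of POIs and their associated entities. Specifically, at the $k$-th layer, $\mathbf{e}_i^{s,(k)}$ denotes the embedding of POI $i$, while $\mathbf{e}_v^{s,(k)}$ represents the embedding of semantic entity $v$; user embeddings $\mathbf{e}_u^{s,(k)}$ are defined analogously, since users are also nodes of the semantic knowledge graph. Since embeddings at different layers of the semantic knowledge graph capture semantic dependencies at varying granularities, from fine-grained local relations to global structural semantics, we aggregate the representations from layer $0$ to layer $K$ to construct holistic semantic embeddings:

$$
\mathbf{e}_u^{s} = \sum_{k=0}^{K} \mathbf{e}_u^{s,(k)}, \qquad \mathbf{e}_i^{s} = \sum_{k=0}^{K} \mathbf{e}_i^{s,(k)}.
$$

Here, $\mathbf{e}_u^{s}$ denotes the macroscopic semantic representation of user $u$, while $\mathbf{e}_i^{s}$ denotes that of POI $i$. Similar to the spatio-temporal channel in Section 4.2.1, these representations serve as inputs for cross-channel contrastive alignment, thereby jointly aligning semantic features with spatio-temporal features across multiple granularities.

Once macroscopic and microscopic representations are obtained, we project them into a shared contrastive space using a multi-layer perceptron (MLP):

$$
\mathbf{z}_i^{\mathrm{ma}} = \mathbf{W}_2\, \eta\big(\mathbf{W}_1 \mathbf{e}_i^{s} + \mathbf{b}_1\big) + \mathbf{b}_2, \qquad
\mathbf{z}_i^{\mathrm{mi}} = \mathbf{W}_2\, \eta\big(\mathbf{W}_1 \mathbf{e}_i^{\mathrm{mi}} + \mathbf{b}_1\big) + \mathbf{b}_2,
$$

where $\mathbf{e}_i^{\mathrm{mi}}$ denotes the microscopic representation of POI $i$ from the spatio-temporal channel, $\mathbf{W}_1, \mathbf{W}_2$ and $\mathbf{b}_1, \mathbf{b}_2$ are trainable parameters, and $\eta(\cdot)$ denotes the ELU activation function.

Following the same positive–negative sampling strategy as in the microscopic contrastive learning, for each POI $i$, the semantic-level contrastive losses are defined as

$$
\mathcal{L}_i^{\mathrm{ma}} = -\log
\frac{\exp\big(\operatorname{sim}(\mathbf{z}_i^{\mathrm{ma}}, \mathbf{z}_i^{\mathrm{mi}})/\tau\big)}
{\sum_{j=1}^{N} \exp\big(\operatorname{sim}(\mathbf{z}_i^{\mathrm{ma}}, \mathbf{z}_j^{\mathrm{mi}})/\tau\big)},
\qquad
\mathcal{L}_i^{\mathrm{mi}} = -\log
\frac{\exp\big(\operatorname{sim}(\mathbf{z}_i^{\mathrm{mi}}, \mathbf{z}_i^{\mathrm{ma}})/\tau\big)}
{\sum_{j=1}^{N} \exp\big(\operatorname{sim}(\mathbf{z}_i^{\mathrm{mi}}, \mathbf{z}_j^{\mathrm{ma}})/\tau\big)},
$$

where $\operatorname{sim}(\cdot,\cdot)$ is the cosine similarity function, $\tau$ is the temperature hyperparameter, and $\exp$ denotes the exponential function.

In particular, the denominator of $\mathcal{L}_i^{\mathrm{ma}}$ can be decomposed as

$$
\sum_{j=1}^{N} \exp\big(\operatorname{sim}(\mathbf{z}_i^{\mathrm{ma}}, \mathbf{z}_j^{\mathrm{mi}})/\tau\big)
= \sum_{j \neq i} \exp\big(\operatorname{sim}(\mathbf{z}_i^{\mathrm{ma}}, \mathbf{z}_j^{\mathrm{mi}})/\tau\big)
+ \exp\big(\operatorname{sim}(\mathbf{z}_i^{\mathrm{ma}}, \mathbf{z}_i^{\mathrm{mi}})/\tau\big),
$$

where the first sum denotes inter-view negative pairs, while the remaining term corresponds to the positive pair.

Analogous formulations apply to users $u$, yielding $\mathcal{L}_u^{\mathrm{ma}}$ and $\mathcal{L}_u^{\mathrm{mi}}$. Finally, the macroscopic contrastive loss is defined as

$$
\mathcal{L}_{\mathrm{macro}} = \frac{1}{2N} \sum_{i=1}^{N} \big(\mathcal{L}_i^{\mathrm{ma}} + \mathcal{L}_i^{\mathrm{mi}}\big)
+ \frac{1}{2M} \sum_{u=1}^{M} \big(\mathcal{L}_u^{\mathrm{ma}} + \mathcal{L}_u^{\mathrm{mi}}\big).
$$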
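A hedged sketch of the macro-level objective, assuming it symmetrizes the InfoNCE term in both directions (macro to micro and micro to macro) and averages the POI part and the user part with weight one half each. The projected embeddings are toy values, and `macro_loss` is a hypothetical name.

```python
import math
from typing import List

Vector = List[float]


def cosine(a: Vector, b: Vector) -> float:
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    return sum(x * y for x, y in zip(a, b)) / (na * nb)


def info_nce(anchor: List[Vector], other: List[Vector], tau: float) -> float:
    """Mean over nodes of the InfoNCE term with (anchor[i], other[i]) as the positive pair."""
    total = 0.0
    for i, zi in enumerate(anchor):
        logits = [cosine(zi, zj) / tau for zj in other]
        m = max(logits)  # log-sum-exp shift for numerical stability
        total += m + math.log(sum(math.exp(l - m) for l in logits)) - logits[i]
    return total / len(anchor)


def macro_loss(z_ma_poi: List[Vector], z_mi_poi: List[Vector],
               z_ma_user: List[Vector], z_mi_user: List[Vector], tau: float) -> float:
    """(1/2N) sum_i (L_i^ma + L_i^mi) + (1/2M) sum_u (L_u^ma + L_u^mi)."""
    poi_term = 0.5 * (info_nce(z_ma_poi, z_mi_poi, tau) + info_nce(z_mi_poi, z_ma_poi, tau))
    user_term = 0.5 * (info_nce(z_ma_user, z_mi_user, tau) + info_nce(z_mi_user, z_ma_user, tau))
    return poi_term + user_term


basis = [[1.0, 0.0], [0.0, 1.0]]  # perfectly aligned toy views
print(round(macro_loss(basis, basis, basis, basis, tau=0.5), 4))  # 0.2539
```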

4.3. Prediction and Multi-Task Training

After completing multi-layer aggregation within the three modules and optimizing the learned representations through multi-level cross-module contrastive learning, we obtain multiple embeddings for each user and POI. Specifically, user $u$ is represented by its collaborative and semantic embeddings $\mathbf{e}_u^{c}$ and $\mathbf{e}_u^{s}$, while POI $i$ is represented by its collaborative, spatio-temporal, and semantic embeddings $\mathbf{e}_i^{c}$, $\mathbf{e}_i^{st}$, and $\mathbf{e}_i^{s}$. To integrate these heterogeneous representations, we perform both summation and concatenation operations and introduce learnable coefficients $\alpha$ and $\beta$ that control the relative contribution of each module. This yields the final user and POI embeddings, denoted as $\mathbf{e}_u^{*}$ and $\mathbf{e}_i^{*}$, respectively.

The predicted score for a user–POI pair is then computed as the inner product of the final embeddings:

$$
\hat{y}_{ui} = \big(\mathbf{e}_u^{*}\big)^{\top} \mathbf{e}_i^{*}.
$$

The use of inner product maintains the simplicity and efficiency of the widely adopted dot-product scoring mechanism in recommendation tasks, while naturally adapting to the fused representations across different views. This unified scoring function effectively captures the alignment between user interests and POI characteristics.
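The text states only that summation, concatenation, and two learnable coefficients (called `alpha` and `beta` here) are combined; the exact arrangement is not recoverable from this section. The sketch below shows one hypothetical arrangement under which the inner product is well defined: the two micro-level POI views are summed, and the semantic view is concatenated on both sides. It should not be read as the paper's Equation (25), and the coefficients are plain floats rather than trained parameters.

```python
from typing import List

Vector = List[float]


def fuse_user(e_c: Vector, e_s: Vector, alpha: float) -> Vector:
    # Hypothetical: concatenate the collaborative view with the alpha-weighted semantic view.
    return e_c + [alpha * x for x in e_s]


def fuse_poi(e_c: Vector, e_st: Vector, e_s: Vector, alpha: float, beta: float) -> Vector:
    # Hypothetical: sum the two micro-level views (beta weights the spatio-temporal one),
    # then concatenate the alpha-weighted semantic view so dimensions match fuse_user.
    micro = [c + beta * st for c, st in zip(e_c, e_st)]
    return micro + [alpha * x for x in e_s]


def score(user_vec: Vector, poi_vec: Vector) -> float:
    """Inner-product score between fused user and POI embeddings."""
    if len(user_vec) != len(poi_vec):
        raise ValueError("fused user and POI embeddings must have the same size")
    return sum(a * b for a, b in zip(user_vec, poi_vec))


u = fuse_user([1.0, 0.0], [0.0, 1.0], alpha=0.5)                      # [1.0, 0.0, 0.0, 0.5]
v = fuse_poi([1.0, 1.0], [1.0, 0.0], [2.0, 2.0], alpha=0.5, beta=0.5)  # [1.5, 1.0, 1.0, 1.0]
print(score(u, v))  # 2.0
```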

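To jointly optimize recommendation and self-supervised objectives, we adopt a multi-task learning strategy. For the knowledge-graph-enhanced recommendation task, we employ the pairwise Bayesian Personalized Ranking (BPR) loss to reconstruct user–item interaction data, which encourages higher prediction scores for observed items than for unobserved ones:

$$
\mathcal{L}_{\mathrm{BPR}} = \sum_{(u, i, j) \in \mathcal{O}} -\ln \sigma\big(\hat{y}_{ui} - \hat{y}_{uj}\big),
$$

where $\mathcal{O} = \{(u, i, j) \mid (u, i) \in \mathcal{O}^{+},\ (u, j) \in \mathcal{O}^{-}\}$ is the training dataset consisting of observed interactions $\mathcal{O}^{+}$ and unobserved interactions $\mathcal{O}^{-}$, and $\sigma(\cdot)$ denotes the sigmoid function.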
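The BPR objective can be sketched as follows. Drawing one uniformly random unobserved POI per observed interaction is a common choice but an assumption here, as are the helper names; writing $-\ln\sigma(x)$ in its softplus form avoids overflow for large score gaps.

```python
import math
import random
from typing import Callable, Dict, List, Set, Tuple


def neg_log_sigmoid(x: float) -> float:
    """-ln σ(x) = ln(1 + e^{-x}), evaluated without overflow for large |x|."""
    if x >= 0:
        return math.log1p(math.exp(-x))
    return -x + math.log1p(math.exp(x))


def sample_triples(observed: Dict[int, Set[int]], n_pois: int,
                   rng: random.Random) -> List[Tuple[int, int, int]]:
    """Pair every observed (u, i) with one uniformly drawn unobserved POI j."""
    triples = []
    for u, pos in observed.items():
        if len(pos) >= n_pois:
            continue  # no unobserved POI exists for this user
        for i in sorted(pos):
            j = rng.randrange(n_pois)
            while j in pos:
                j = rng.randrange(n_pois)
            triples.append((u, i, j))
    return triples


def bpr_loss(triples: List[Tuple[int, int, int]], score: Callable[[int, int], float]) -> float:
    """Sum over (u, i, j) of -ln σ(ŷ_ui - ŷ_uj)."""
    return sum(neg_log_sigmoid(score(u, i) - score(u, j)) for u, i, j in triples)


observed = {0: {0, 1}, 1: {2}}                      # hypothetical training interactions
triples = sample_triples(observed, n_pois=4, rng=random.Random(0))
uninformed = bpr_loss(triples, lambda u, i: 0.0)    # equal scores: ln 2 per triple
print(len(triples), round(uninformed, 4))
```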

Finally, the BPR loss is integrated with both micro-level and macro-level contrastive objectives to form the overall training objective:

where

is the set of model parameters, and the coefficients satisfy

. By tuning

,

, and

, we can flexibly control the relative importance of recommendation accuracy and self-supervised alignment.

To further motivate the learnable weights $\alpha$ and $\beta$ in Equation (25): these parameters allow the model to dynamically balance the contributions of the different embedding modules (collaborative, spatio-temporal, and semantic). Compared with fixed weighting, learnable coefficients enable adaptive fusion of micro-level and macro-level representations, which can improve recommendation accuracy across diverse user behaviors and POI characteristics. Because $\alpha$ and $\beta$ are optimized jointly with the overall training objective $\mathcal{L}$, the model can adjust the relative importance of fine-grained sequential patterns and high-level semantic correlations according to the data distribution.

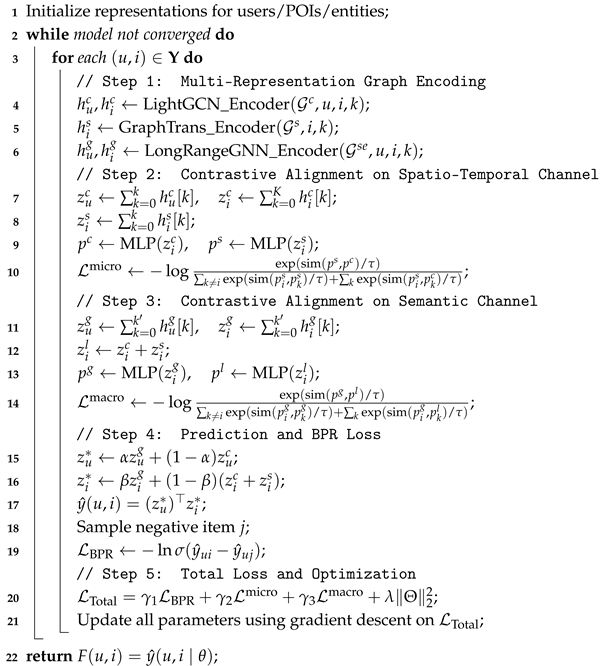

The complete algorithm pseudocode is presented as Algorithm 1, which details the steps for multi-graph embedding, dual-channel contrastive alignment, and parameter optimization.

To facilitate reproducibility, including under sparse-interaction or cold-start conditions, all relevant hyperparameters (including the temperature $\tau$, the number of negative samples, and the projection dimensions) are reported in Section 5.2.3 (Evaluation Metrics and Parameter Settings).

Table 2 summarizes all key variables and symbols used in the S2DCRec framework, providing a clear reference for understanding the embeddings, contrastive alignment, and prediction components.

| Algorithm 1: S2DCRec Algorithm. |
|---|
| Input: interaction matrix $\mathbf{Y}$; knowledge graph $\mathcal{G}$; collaborative graph; spatio-temporal graph; semantic graph; sampling mapping; trainable parameters and encoder weights; hyperparameters |
| Output: prediction score function |
| ![Bdcc 09 00322 i001]() |

4.4. Encoder Functional Overview

To help readers intuitively understand the hierarchical relational encoding and dual-channel contrastive alignment in S2DCRec, we summarize the roles of each encoder and its contributions to capturing collaborative, spatio-temporal, and semantic dependencies in heterogeneous POI graphs. The table below provides a clear overview of how each encoder contributes to micro-level and macro-level representation learning.

The role of each encoder in S2DCRec is summarized as follows (see Table 3). LightGCN operates on the collaborative interaction graph to capture high-order collaborative dependencies, producing structural embeddings that reflect short-term user preferences and constitute the micro-level collaborative channel for contrastive alignment. GraphTrans models user sequential behaviors based on time-ordered POI visits to extract spatio-temporal dynamics, and the resulting sequential dependency embeddings serve as the micro-level spatio-temporal channel, enabling alignment of temporal patterns across users and POIs. LRC-GNN operates on the semantic knowledge graph to capture multi-dimensional semantic correlations and long-range dependencies, generating semantically enriched embeddings that form the macro-level semantic channel, which is further aligned with the micro-level fused embeddings to ensure cross-channel semantic consistency.

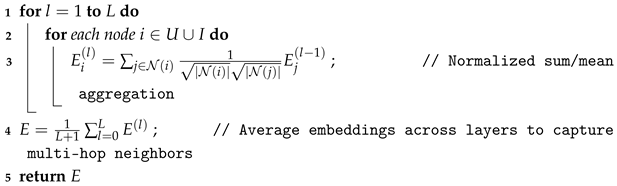

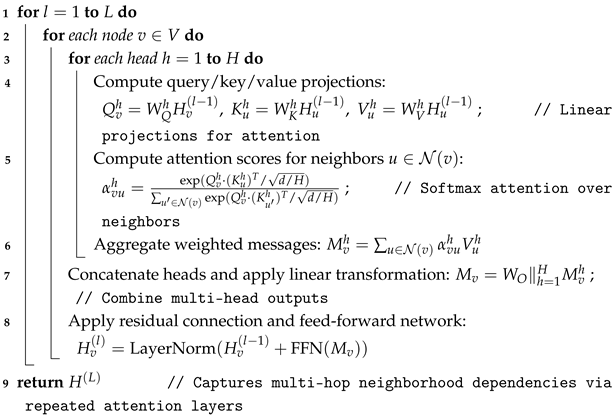

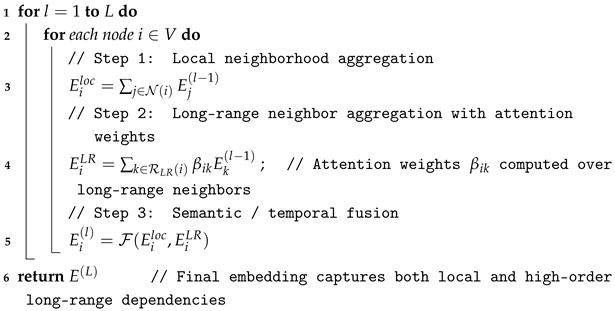

Algorithm 2 and Algorithm 3 illustrate the operations of LightGCN and GraphTrans, respectively. Algorithm 4 presents the Long-Range Connection GNN (LRC-GNN) for modeling long-range dependencies.

| Algorithm 2: LightGCN Propagation Process. |
|---|
| Input: interaction graph, initial embeddings, number of layers $L$ |
| Output: final node embeddings $\mathbf{E}$ |
| ![Bdcc 09 00322 i002]() |

| Algorithm 3: GraphTrans Message Passing with Multi-Head Attention. |
|---|
| Input: graph, initial embeddings, number of layers $L$, attention heads $H$, parameters |
| Output: node embeddings |
| ![Bdcc 09 00322 i003]() |

This comparison highlights the evolution of the three encoders: LightGCN performs simple neighbor aggregation without attention, capturing local collaborative signals efficiently; GraphTrans introduces multi-head attention to model higher-order, attention-weighted neighbor interactions, enhancing the representation of complex relational structures; LRC-GNN explicitly incorporates long-range connections and semantic/temporal fusion, enabling high-order dependency modeling beyond immediate neighbors. Together, these encoders justify the dual-channel design of S2DCRec, which integrates micro-level collaborative and spatio-temporal dependencies with macro-level semantic alignment, supporting multi-graph, multi-scale, and dual-channel information enhancement for accurate POI recommendation.

Algorithm 4: LRC-GNN with Explicit Long-Range Connections.

Input: graph G, initial embeddings, long-range edge set, fusion operator, number of layers L.

Output: final embeddings.
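To illustrate how explicit long-range edges change message passing in Algorithm 4, the sketch below keeps local and long-range neighbors in separate adjacency lists, mean-aggregates each, and combines them with a fusion operator. The gated fusion `gated_fuse` and its weight `gamma` are hypothetical placeholders for the paper's fusion operator, whose exact form is not given in this section.

```python
def mean_vector(vectors, dim):
    """Element-wise mean; a zero vector when there are no messages."""
    if not vectors:
        return [0.0] * dim
    return [sum(v[k] for v in vectors) / len(vectors) for k in range(dim)]


def gated_fuse(h_self, h_local, h_long, gamma=0.5):
    """Hypothetical fusion operator: half self state, half a gamma-gated mix of local and long-range messages."""
    return [0.5 * s + 0.5 * ((1 - gamma) * a + gamma * b) for s, a, b in zip(h_self, h_local, h_long)]


def lrc_gnn_propagate(local_edges, long_range_edges, init_emb, num_layers, fuse=gated_fuse):
    def adjacency(edge_list):
        adj = {n: [] for n in init_emb}
        for a, b in edge_list:
            adj[a].append(b)
            adj[b].append(a)
        return adj

    local_adj, long_adj = adjacency(local_edges), adjacency(long_range_edges)
    dim = len(next(iter(init_emb.values())))
    emb = {n: list(v) for n, v in init_emb.items()}
    for _ in range(num_layers):
        # The comprehension reads the previous layer's `emb` before it is rebound.
        emb = {
            n: fuse(emb[n],
                    mean_vector([emb[m] for m in local_adj[n]], dim),
                    mean_vector([emb[m] for m in long_adj[n]], dim))
            for n in emb
        }
    return emb


init = {"a": [1.0], "b": [0.0], "c": [0.0]}
print(lrc_gnn_propagate([("a", "b"), ("b", "c")], [("a", "c")], init, 1)["c"])  # [0.25]
print(lrc_gnn_propagate([("a", "b"), ("b", "c")], [], init, 1)["c"])            # [0.0]
```

In the toy chain a, b, c with one long-range edge (a, c), node c receives a's signal after a single layer only when the long-range edge is present; with local edges alone it would need two hops.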

4.5. Graph Statistics and Preprocessing

Node and Edge Statistics. Table 4 summarizes the key statistics of the constructed semantic, spatio-temporal, and collaborative graphs for Foursquare NYC and Yelp. Nodes represent users, POIs/items, and additional entities such as POI categories or attributes; edges encode the different relation types among these nodes. Reporting the number of nodes, edges, and relation types facilitates reproducibility of the dataset construction pipeline.

POI Feature Extraction and Normalization. POI categories were extracted from the raw dataset and mapped to a standardized taxonomy. Temporal information, such as hours and days of the week, was discretized into fixed intervals, and location coordinates were normalized to a consistent spatial scale. This ensures that features across different graphs are compatible and comparable.
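A small sketch of the feature preprocessing described above, assuming (hypothetically) that timestamps are discretized into day-of-week and fixed-width hour slots and that coordinates are rescaled to [0, 1] by min–max normalization. The slot width, normalization scheme, and function names are illustrative choices, not the settings used in the paper.

```python
from datetime import datetime


def time_slot(ts, hours_per_slot=4):
    """Map a timestamp to (day_of_week, hour_slot); Monday is 0, slot width is illustrative."""
    return ts.weekday(), ts.hour // hours_per_slot


def minmax_normalize(coords):
    """Rescale (lat, lon) pairs to [0, 1] per axis; a constant axis maps to 0.0."""
    lats = [lat for lat, _ in coords]
    lons = [lon for _, lon in coords]

    def scale(x, lo, hi):
        return 0.0 if hi == lo else (x - lo) / (hi - lo)

    lat_lo, lat_hi = min(lats), max(lats)
    lon_lo, lon_hi = min(lons), max(lons)
    return [(scale(lat, lat_lo, lat_hi), scale(lon, lon_lo, lon_hi)) for lat, lon in coords]


print(time_slot(datetime(2012, 4, 3, 18, 30)))  # (1, 4): Tuesday, 16:00-19:59 slot
```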

Handling Missing Relations and Incomplete Triples. During graph construction, missing relations or incomplete triples were filtered out. For multi-relational edges where partial information existed, only valid triples were retained to maintain consistency. This preprocessing ensures reliable downstream representation learning.
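The filtering step can be sketched as follows, assuming each raw record is a (head, relation, tail) tuple and that a triple is kept only when it has all three fields, non-empty endpoints, and a relation from a known set. The relation names follow the examples in Section 5.2.1; the function name is hypothetical.

```python
def filter_triples(raw_triples, valid_relations):
    """Drop incomplete records; keep (head, relation, tail) triples with a known relation type."""
    kept = []
    for record in raw_triples:
        if len(record) != 3:
            continue  # truncated record, e.g. a missing tail entity
        head, relation, tail = record
        if head in (None, "") or tail in (None, "") or relation not in valid_relations:
            continue  # missing endpoint or unknown relation
        kept.append((head, relation, tail))
    return kept


raw = [("u1", "checkin_at", "p1"), ("u2", "checkin_at", None), ("p1", "has_category"), ("u1", "likes", "p3")]
print(filter_triples(raw, {"checkin_at", "has_category", "friends_with"}))  # [('u1', 'checkin_at', 'p1')]
```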

To better reflect the sparsity and user activity level of our filtered datasets, we compute two simple statistics: average interactions (i.e., user-POI edges) per user, and approximate density of the user-POI matrix. The results for our Foursquare NYC and Yelp datasets are shown in Table 5.

In this table, total user-POI edges denote the number of edges in the collaborative interaction graph after preprocessing. Avg. interactions per user are calculated as (user-POI edges)/(#Users), reflecting the typical user activity level. Approx. matrix density is computed as (user-POI edges)/(#Users × #POIs); here, we conservatively approximate #POIs ≈ #Users, since the actual number of POIs after filtering is close to the number of users.

This subsection provides all necessary statistics and preprocessing details for reproducing the multi-graph construction pipeline, including node and edge counts, detailed relation types, feature normalization, and missing data handling.

5. Experiment

We conduct extensive experiments on two public datasets to answer the following research questions:

RQ1: How does S2DCRec perform compared with existing models?

RQ2: Do the key components of S2DCRec truly contribute to its performance?

RQ3: How do different hyperparameter settings affect the performance of S2DCRec?

RQ4: How effectively does S2DCRec capture spatio-temporal and semantic characteristics of POIs in recommendation?

5.1. Evaluation Metrics

We evaluate S2DCRec and baseline models using standard metrics commonly adopted in search and recommendation systems. Specifically, we report Precision@K, Recall@K, F1@K, and AUC, defined as follows:

Precision@K: Represents the proportion of correctly recommended relevant items among the top-K results returned by the model.

Recall@K: Denotes the proportion of relevant items that are successfully retrieved within the top-K recommendations.

F1@K: The harmonic mean of Precision@K and Recall@K, balancing both recommendation accuracy and coverage.

AUC: Measures the probability that a randomly selected positive sample receives a higher predicted score than a randomly selected negative sample, reflecting the overall ranking ability of the model.

Here, the indicator function used in computing AUC returns 1 if its condition (a positive sample scored above a negative sample) holds and 0 otherwise. In our experiments, we adopt a chronological split, using the earliest 80% of interactions for training and the most recent 20% for testing in the next-location (next check-in) prediction task. Because all models are evaluated with these widely adopted metrics on the same chronological split, the comparison across models is consistent; we therefore report results from this single split rather than confidence intervals or standard deviations.

5.2. Experiment Settings

5.2.1. Dataset

We evaluate the effectiveness of S2DCRec on two benchmark datasets: Yelp and Foursquare NYC. These datasets originate from different domains, are publicly accessible, and differ in scale and sparsity, which strengthens the generality of the evaluation.

Yelp: This dataset is collected from the Yelp platform and contains five types of data: business information, user information, user reviews, check-in records, and tips. It includes approximately 45,545 users, 13,524 POIs, and 1.28 million reviews and check-ins.

Foursquare NYC: This dataset consists of real-world spatio-temporal check-in data collected from the Foursquare platform in New York City, encompassing multi-dimensional information such as users, locations, categories, and timestamps. It includes approximately 2275 users, 31,125 POIs, and 227,428 check-in records.

User, POI, category, and temporal information from both Yelp and Foursquare NYC can be uniformly transformed into knowledge graph triples, such as (User, checkin_at, POI) to represent user check-ins, (POI, has_category, Category) to denote POI–category associations, and (User, friends_with, User) to capture social relations. In this way, the raw heterogeneous data are converted into structured entity–relation representations, enabling joint modeling in knowledge graph construction and recommendation tasks. For both datasets, POIs or businesses that users actually visited or reviewed are treated as positive samples, while unvisited or unreviewed POIs are sampled as negatives either randomly or under category/geographical constraints. The basic statistics of the two datasets are summarized in Table 6.
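The conversion and negative sampling described above might look as follows. This sketch assumes raw records are already available as (user, POI) check-ins, a POI-to-category map, and friendship pairs; it implements uniform sampling and an optional category constraint, while a geographical constraint (e.g., restricting candidates by distance) is omitted. All names are hypothetical.

```python
import random


def build_triples(checkins, poi_category, friendships):
    """Turn raw records into (head, relation, tail) triples using the relation names from the text."""
    triples = [(u, "checkin_at", p) for u, p in checkins]
    triples += [(p, "has_category", c) for p, c in poi_category.items()]
    triples += [(u, "friends_with", v) for u, v in friendships]
    return triples


def sample_negatives(visited, all_pois, n, poi_category=None, category=None, rng=random):
    """Sample up to n unvisited POIs uniformly; optionally restrict them to one category."""
    pool = [p for p in all_pois if p not in visited]
    if poi_category is not None and category is not None:
        pool = [p for p in pool if poi_category.get(p) == category]
    return rng.sample(pool, min(n, len(pool)))


cats = {"p1": "Cafe", "p2": "Bar", "p3": "Cafe", "p4": "Bar"}
print(len(build_triples([("u1", "p1"), ("u1", "p2")], cats, [("u1", "u2")])))  # 7
print(sample_negatives({"p1", "p2"}, list(cats), 2, cats, "Cafe"))             # ['p3']
```

Passing a seeded `random.Random` instance as `rng` makes the sampled negatives reproducible across runs.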

5.2.2. Baselines

To evaluate the effectiveness of the proposed S2DCRec framework, we compare it against representative recommendation methods grouped by modeling strategy: collaborative filtering (CTR-based), deep learning-based, spatio-temporal, and graph neural network (GNN)-based models. The details are as follows:

Collaborative Filtering/CTR-based:

- BPRMF [1]: A classical collaborative filtering method that applies pairwise matrix factorization to implicit feedback and is optimized with the BPR loss.

Deep Learning-based:

- NCF [27]: Leverages a multi-layer perceptron (MLP) to nonlinearly combine user and item embeddings, enabling the modeling of complex interaction patterns.
- TALLRec [28]: An LLM-enhanced recommender that combines prompt engineering with parameter-efficient fine-tuning to capture semantic signals in user–item interactions.

Spatio-Temporal Modeling:

- STGCN [24]: Integrates graph convolutional networks with temporal sequence modeling to capture spatial dependencies and temporal patterns in user check-in behaviors.
- GeoSAN [25]: Combines geographical proximity with self-attention to jointly model spatio-temporal behavioral patterns and semantic correlations of POIs.
- STP-Rec [16]: Integrates trajectory modeling with time-aware Transformers to enhance both accuracy and interpretability in POI recommendation.

Graph Neural Network (GNN)-based:

- LightGCN [8]: A lightweight GCN-based model that iteratively aggregates user and item neighborhood information to obtain higher-order representations.
- KGRec [23]: A knowledge graph-enhanced recommendation method employing relation-aware neighbor aggregation, improving interpretability and multi-hop reasoning capability.
- TransGNN [15]: Combines GNNs with Transformers to capture both global dependencies and local structural patterns, achieving more effective representation learning for recommendation.

5.2.3. Evaluation Metrics and Parameter Settings

We evaluate the proposed approach under two experimental scenarios: (1) CTR prediction task, where the trained model is applied to each interaction in the test set, and two widely used metrics, AUC and F1, are adopted to assess the prediction performance. (2) Top-K recommendation task, where for each user in the test set, the trained model selects the top-K items with the highest predicted click probabilities, and we measure the quality of the recommendation set using Recall@K.

We implement S2DCRec and all baseline methods in PyTorch 2.1.0 and carefully tune key hyperparameters. To ensure fairness, the embedding dimension of all models is fixed to 64, so that performance differences can be attributed to model design rather than embedding capacity, and embedding parameters are initialized with the Xavier method. The models are trained with the Adam optimizer and a batch size of 4096. Optimal hyperparameter settings are determined by grid search over the learning rate and the L2 regularization coefficient. For all baseline methods, the optimal hyperparameters are determined either through empirical validation or by following the configurations reported in the original papers. In addition, we investigated the sensitivity of S2DCRec to key hyperparameters, including the temperature parameter and the embedding dimension d. The results indicate that the model is robust across temperature values of 0.05–0.1 and embedding dimensions of 32–128, with only minor performance variations, demonstrating the stability of S2DCRec under different hyperparameter settings. All relevant parameter settings are summarized in Table 7.

5.3. Performance Comparison (RQ1)

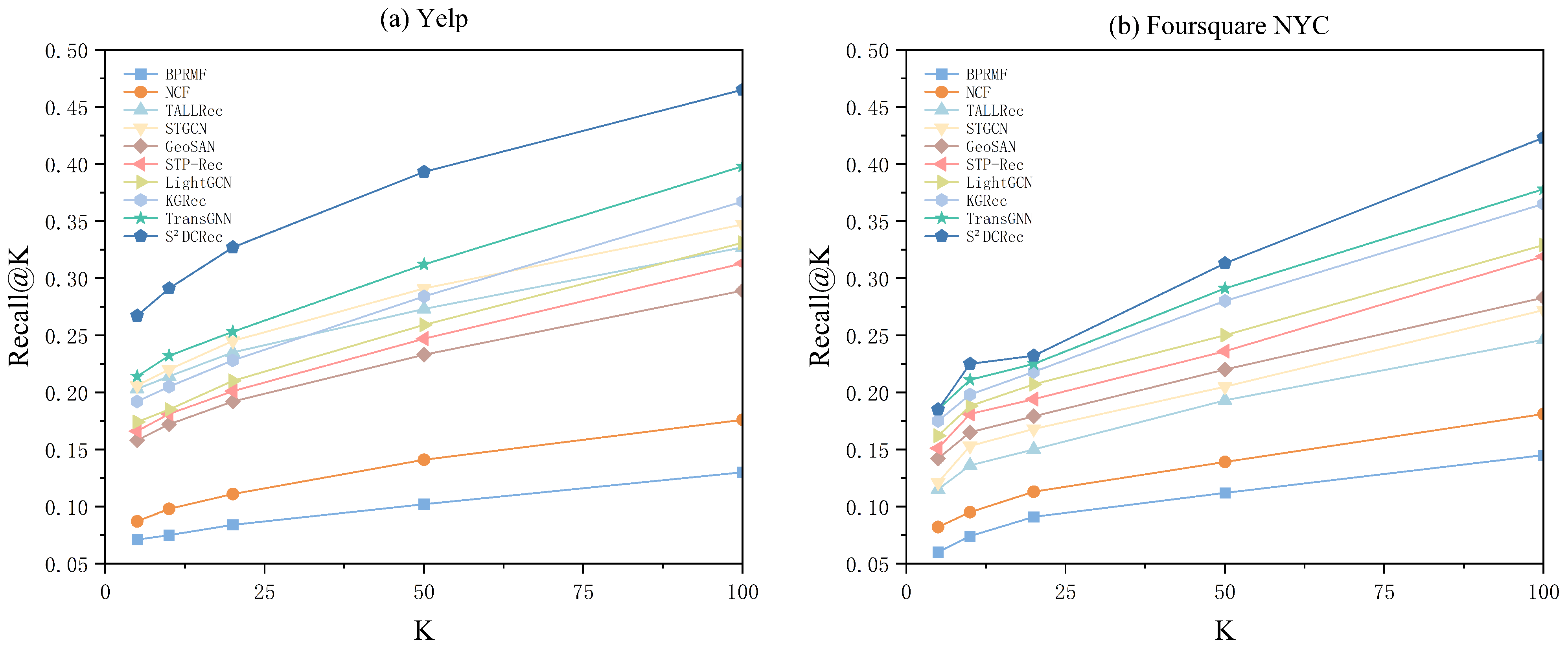

We present the performance comparison in Table 8 and Figure 2, where statistical significance tests are conducted between S2DCRec and the strongest baselines. Several key findings emerge from the results.

First, S2DCRec consistently achieves the best performance across all four evaluation metrics, surpassing all baseline methods. Specifically, it improves AUC by 4.43% on Foursquare NYC and 2.69% on Yelp, while yielding F1 gains of 4.04% and 3.01%, respectively.

Compared with matrix factorization baselines, S2DCRec achieves 5.65–12.27% improvements, as it integrates spatio-temporal and semantic information and enhances user–POI discrimination via contrastive alignment, thereby alleviating the limitations of models that rely solely on interaction matrices.

Against deep nonlinear models, S2DCRec shows 4.18–9.08% gains, benefiting from multi-channel fusion that extends nonlinear expressiveness, while contrastive learning improves representation generalization to better capture complex user–POI interaction patterns.

For spatio-temporal GCN and attention-based models, the improvements range from 2.96% to 7.01%, owing to the ability of S2DCRec to simultaneously capture temporal dependencies and semantic correlations, with contrastive alignment further reinforcing feature separability.

Relative to higher-order GCN and KG-based methods, S2DCRec achieves 2.96–9.59% gains, as its dual-channel alignment significantly strengthens the expressiveness and discriminability of user–POI representations, enabling more effective exploitation of both graph structures and knowledge graph information.

Second, Figure 2 illustrates the Recall@K results. As expected, Recall@K increases steadily for all models as the recommendation list length K grows, since longer lists naturally cover more user-preferred POIs. Importantly, S2DCRec consistently outperforms all baselines across all K values, with its advantage becoming more pronounced as K increases. In short-list settings (K = 5–10), S2DCRec already shows a clear lead: compared with the second-best model, TransGNN, it achieves improvements of 24.8% and 11.9% on Foursquare NYC and Yelp, respectively, highlighting its strong ability to capture users' core interests. In medium-to-long lists, the margin further enlarges, and S2DCRec yields relative gains of 16.8% and 12.0% over TransGNN on the two datasets, showing its superiority in covering long-tail POIs and improving overall recommendation accuracy.

Taken together, these results demonstrate that the dual-channel fusion of spatio-temporal and semantic information, coupled with contrastive alignment, not only enhances user–POI representation discriminability, but also enables the model to robustly capture both short-term preferences and long-tail interests. Consequently, S2DCRec achieves consistent and substantial improvements across diverse recommendation scenarios.

5.4. Ablation Studies (RQ2)

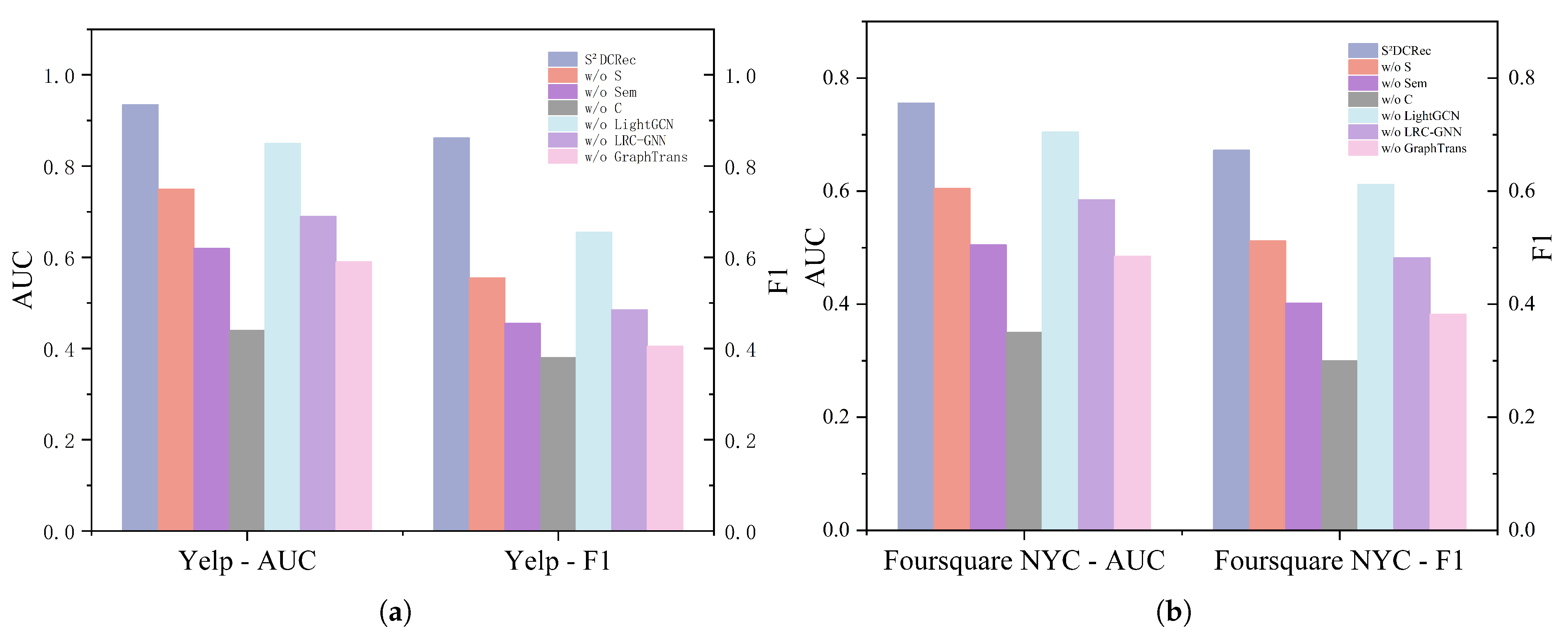

As illustrated in Figure 3, we conduct ablation studies to investigate the contribution of each major component in S2DCRec by comparing it with the following variants:

- S2DCRec w/o S removes the spatio-temporal channel and retains only the semantic channel for representation learning;
- S2DCRec w/o Sem removes the semantic channel and relies solely on the spatio-temporal channel for modeling;
- S2DCRec w/o C discards the contrastive alignment module, training the two channels independently;
- S2DCRec w/o LightGCN removes the LightGCN encoder;
- S2DCRec w/o LRC-GNN removes the Long-Range Connection GNN encoder;
- S2DCRec w/o GraphTrans removes the GraphTrans encoder.

Figure 3 reports the performance of S2DCRec and its variants on the POI datasets, from which we derive the following observations. Removing either the spatio-temporal channel (w/o S) or the semantic channel (w/o Sem) results in substantial performance degradation, indicating that capturing both the spatio-temporal dependencies between users and locations and the semantic attributes of POIs is essential for POI recommendation. Furthermore, removing the contrastive alignment module (w/o C) also yields a notable drop, demonstrating that cross-channel alignment is crucial for effectively fusing spatio-temporal and semantic features into more discriminative representations.

The ablation on GNN encoders further shows that the LightGCN encoder plays a pivotal role in modeling collaborative filtering relations, the long-range connection GNN encoder is particularly important for capturing long-distance dependencies, and the GraphTrans encoder significantly enhances global semantic modeling. Overall, the complete S2DCRec consistently achieves the best performance across metrics, validating that contrastive alignment between spatio-temporal and semantic channels, coupled with the synergy of multiple GNN encoders, is highly effective in exploiting multi-view information for POI recommendation.

5.5. Sensitivity Analysis (RQ3)

To further investigate the sensitivity of our model to key hyperparameters, we systematically analyze the impact of seven hyperparameters (two loss weights, the aggregation depths of the two channels, and three channel weight parameters) on recommendation performance using the Foursquare NYC and Yelp datasets. The results reveal consistent patterns across settings, providing useful insights for model optimization.

5.5.1. Impact of Loss Weights and Aggregation Depths

Figure 4 illustrates the effects of the two loss weights and the aggregation depths of the two channels on S2DCRec. When tuning the contrastive loss weight, performance exhibits a rise-then-fall trend as the weight increases from 0 to 1, with the best results achieved at an intermediate value on both datasets. This indicates that properly balancing the local and global contrastive objectives substantially enhances representation learning, whereas overemphasizing the local contrastive loss neglects global structural information and harms performance. The weight that controls the trade-off between the contrastive and recommendation losses in multi-task training also plays a pivotal role: the model performs best at an intermediate value, confirming the importance of maintaining equilibrium, since excessively small or large values bias the model toward a single objective and degrade performance. In terms of aggregation depth, the semantic channel performs best at a moderate depth, beyond which deeper propagation introduces noise and hampers generalization. Similarly, for the spatio-temporal channel, a moderate depth effectively captures long-range dependencies, while deeper layers lead to overfitting and noise accumulation. Overall, Figure 4 highlights the necessity of carefully configuring the two loss weights and the aggregation depths to stabilize training and enhance representation quality.
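The following sketch shows one plausible form of the objectives discussed above: a BPR loss for recommendation, an InfoNCE contrastive loss with temperature tau (0.1 here, within the 0.05–0.1 range reported as robust in Section 5.2.3), and a combined objective in which a weight `lam` in [0, 1] mixes the local and global contrastive terms and `beta` weighs contrast against recommendation. The combination formula, the use of cosine similarity, and all names are assumptions for illustration; the paper's exact loss definitions are not reproduced in this section.

```python
import math


def bpr_loss(pos_score, neg_score):
    """-log(sigmoid(pos - neg)), computed in a numerically stable way."""
    x = pos_score - neg_score
    return math.log1p(math.exp(-x)) if x >= 0 else -x + math.log1p(math.exp(x))


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def info_nce(anchor, positive, negatives, tau=0.1):
    """InfoNCE: cross-entropy of the positive pair against the negatives, temperature tau."""
    logits = [cosine(anchor, positive) / tau] + [cosine(anchor, n) / tau for n in negatives]
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[0]


def total_loss(l_rec, l_local, l_global, lam, beta):
    """Hypothetical objective: lam mixes local/global contrastive terms; beta weighs them against l_rec."""
    return l_rec + beta * (lam * l_local + (1.0 - lam) * l_global)
```

Under this form, `lam` near 1 corresponds to overemphasizing the local contrastive term and `beta` near 0 reduces the model to plain BPR training, the two extremes the sensitivity curves penalize.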

5.5.2. Impact of Channel Weight Parameters

Regarding the three channel weight parameters, which govern the relative contributions of the BPR loss, the micro-level contrastive loss, and the macro-level contrastive loss in the final objective, we conduct combinational experiments on both datasets over a grid of weight combinations. As shown in Figure 5, when the BPR weight is large, the model tends to overfit the BPR loss, underutilizing micro- and macro-level contrastive information and leading to slight drops in AUC and F1. When the micro-level weight takes moderate values, micro-level information in the spatio-temporal channel is effectively leveraged, allowing the model to capture fine-grained spatio-temporal distributions of POIs and improve Recall@K. When the macro-level weight falls within an appropriate range under the constraint, macro-level semantic information is fully exploited, enabling the model to encode category- and tag-level associations and enhance overall recommendation accuracy. A comprehensive comparison reveals that the best results on both datasets are obtained when the three components achieve a balanced contribution, at which point the integration of micro- and macro-level representations is most effective.
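The combinational experiments can be sketched as an enumeration of weight triples on a grid. Assuming (hypothetically) that the constraint is that the three weights sum to 1 and that the grid step is 0.1, there are 66 combinations; in an actual study, each would be used to train and evaluate the model. The step size and function names are illustrative.

```python
def simplex_grid(step=0.1):
    """All (w_bpr, w_micro, w_macro) on a grid of width `step` whose entries sum to 1."""
    n = round(1 / step)
    return [(i / n, j / n, (n - i - j) / n) for i in range(n + 1) for j in range(n + 1 - i)]


def weighted_objective(weights, l_bpr, l_micro, l_macro):
    w_bpr, w_micro, w_macro = weights
    return w_bpr * l_bpr + w_micro * l_micro + w_macro * l_macro


grid = simplex_grid(0.1)
print(len(grid))  # 66
```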

In summary, the sensitivity analysis reveals a common rise-then-fall trend under hyperparameter tuning: excessive reliance on a single module undermines global representation ability, whereas balanced configurations fully exploit the strengths of the dual-channel contrastive alignment. Under the optimal configuration identified above, S2DCRec achieves the best performance across all metrics, verifying its effectiveness and robustness in POI recommendation.

5.6. Visualization (RQ4)

To address RQ4, we further conduct visualization experiments to illustrate the representational power of S2DCRec across the spatio-temporal and semantic channels. We select two POI categories from the Yelp dataset and project their embeddings into a low-dimensional space under three configurations: the spatio-temporal channel, the semantic channel, and the fused dual-channel representation, as shown in Figure 6.

In the spatio-temporal channel visualization (Figure 6a), POI embeddings are primarily generated from check-in time and geographical location. The two categories exhibit a relatively loose distribution with considerable overlap, making it difficult to form clear boundaries. This suggests that while spatio-temporal patterns capture coarse geographical proximity, they are insufficient for fine-grained category discrimination.

In the semantic channel visualization (Figure 6b), POIs are encoded using category, functional attributes, and other high-order semantic features. Compared to the spatio-temporal channel, the embeddings are more compact, and inter-class boundaries begin to emerge, though some ambiguous regions remain. This indicates that semantic information complements spatio-temporal signals by enhancing POI separability.

In the fused channel visualization (Figure 6c), S2DCRec aligns and integrates representations from both spatio-temporal and semantic channels. Here, the two categories form tight, well-separated clusters with clearly defined boundaries and minimal overlap, demonstrating that the fused representation captures both local spatio-temporal dynamics and global semantic relationships, substantially enhancing discriminability and recommendability.

Collectively, the visualization results in Figure 6 provide intuitive evidence of S2DCRec's effectiveness in POI recommendation: the spatio-temporal channel ensures modeling of geographical and temporal patterns, the semantic channel strengthens high-level semantic understanding, and their fusion yields highly discriminative POI embeddings that empower downstream recommendation tasks.

5.7. Discussion and Efficiency Analysis

Discussion. To interpret the performance improvement of S2DCRec, we compare it with key baseline models, including LightGCN, TransGNN, and STP-Rec. Experimental results show that S2DCRec consistently outperforms these baselines on both the Yelp and Foursquare NYC datasets in terms of HR@10. Notably, HR@10 scores on Yelp are higher than on Foursquare NYC, which is expected given the denser user–POI interactions and richer semantic information in Yelp. The dual-channel architecture enables complementary learning: the spatio-temporal channel captures micro-level sequential patterns, while the semantic channel enhances macro-level contextual understanding, and their combination produces highly discriminative representations that improve recommendation accuracy.

The performance advantage can be further explained by the two alignment levels: the micro-level contrastive alignment captures fine-grained sequential behaviors, while the macro-level alignment preserves high-level semantic correlations among POIs. This dual-channel design mitigates over-smoothing because each channel enforces complementary constraints on the embeddings. The micro-level channel emphasizes local neighborhood distinctions by contrasting nearby POIs in spatio-temporal sequences, preventing embeddings from becoming too similar within local regions; the macro-level channel maintains global semantic differentiation by pulling semantically similar POIs together while keeping dissimilar ones apart. Together, these mechanisms balance local and global information and avoid the representation collapse that typically leads to over-smoothing in GNN-based models. We also include illustrative examples from the datasets, demonstrating how S2DCRec better models long-range dependencies and maintains semantic consistency compared to existing baselines.

Complexity and Runtime Analysis. The theoretical time complexity of S2DCRec is determined by the number of layers L and the computational costs of the LightGCN, GraphTrans, and LRC-GNN encoders.

As shown in Table 9, we further compare the average training time per epoch and HR@10 across both datasets.

The table shows that S2DCRec achieves higher HR@10 on both datasets while maintaining moderate computational cost. Compared with TransGNN, training time increases slightly (≈3.3%), while HR@10 improves by ≈7.3% on Yelp and ≈6.8% on Foursquare NYC. These results indicate that the dual-channel design enhances performance without introducing prohibitive computational overhead. The higher HR@10 on Yelp is consistent with the denser interactions and richer semantic information in this dataset, whereas the improvement on Foursquare NYC demonstrates the model's effectiveness on sparser data.

6. Conclusions

In this work, we addressed the task of Point-of-Interest (POI) recommendation by exploring dual-channel spatio-temporal and semantic contrastive alignment within a self-supervised learning paradigm. We proposed S2DCRec, an innovative framework composed of (1) a multi-graph embedding module that jointly learns representations from the collaborative interaction graph, the spatio-temporal graph, and the semantic knowledge graph; and (2) a cross-channel contrastive alignment mechanism that performs micro-level sequential and neighborhood dependency learning and macro-level semantic alignment. This strategy captures complex structural dependencies and multi-dimensional semantic relations among POIs, resulting in more expressive representations. To validate our approach, we conducted comprehensive experiments on two benchmark datasets (Foursquare NYC and Yelp) under widely adopted experimental settings. The results show that S2DCRec consistently outperforms multiple state-of-the-art baselines across multiple evaluation metrics, and further analysis confirms that the dual-channel contrastive learning mechanism enhances effectiveness under sparse data conditions and improves modeling of spatio-temporal dynamics and semantic information.

Overall, the main strengths of our work lie in the unified multi-graph modeling strategy, the novel contrastive alignment mechanism, and the demonstrated performance gains in real-world scenarios, which highlight the value of our method for self-supervised POI recommendation. We also acknowledge several limitations of our current approach. The method's effectiveness partially depends on the quality and completeness of the underlying graphs, which may limit robustness on noisy or incomplete datasets. Moreover, scaling the dual-channel contrastive learning framework to extremely large datasets may require further optimization or approximation techniques to maintain computational efficiency, which is the main scalability limitation of the framework.

For future work, we plan to extend S2DCRec in several directions. We aim to integrate additional contextual signals, such as social influence, temporal preference evolution, and dynamic user behavior patterns. We also intend to explore dynamic graph learning and incremental updating strategies to better adapt to evolving user–POI interactions. Furthermore, incorporating large-scale foundation models, multi-agent collaborative strategies, or cross-domain knowledge transfer could enhance representation learning and broaden applicability to other recommendation domains, including e-commerce and social networks. Future work will also investigate multi-city datasets and the integration of textual or contextual attributes to further improve scalability and cross-region generalization. These extensions will further strengthen the efficiency and general applicability of the proposed framework.