1. Introduction

Security Operations Centers (SOCs) play a key role in organizations’ ability to manage cyber risks in real time. They act as operational hubs where threats are detected, analyzed, and addressed as they occur [1]. Traditionally, research on SOCs has focused on technical optimization and process design, with emphasis on detection tools, incident workflows, and the work of expert analysts [2,3]. This line of research has improved our understanding of how SOCs protect digital systems and has clarified performance bottlenecks, but it tends to fragment the sociotechnical nature of SOC operations by treating technology, processes, and people as discrete components rather than as interdependent elements [4,5].

More recent studies have started to explore organizational and sociotechnical factors that affect SOC performance [4]. One stream of research examines how AI and automation influence incident detection and response [5,6,7]. For example, explainable AI has commonly been used to increase transparency in alerts and to support trust in automated decisions [8]. These developments demonstrate that technology and human expertise increasingly co-evolve rather than substitute for each other. Together, these studies suggest that SOC performance depends not on any single element (AI, automation, or human expertise) but on their continuous interaction as an integrated sociotechnical system. Another stream of research investigates how SOC teams work under pressure using complex tools [9,10]. This includes studies on burnout and alert fatigue [11] and research introducing new models of human-AI teaming that combine automation with expert judgment in more flexible ways [11,12].

While we know much about how SOCs deal with complexity and limited resources [3,4,5,13,14,15], there is far less understanding of how they manage ongoing internal organizational tensions. These tensions include the need for expediency versus the need for authority, and the push for consistent processes versus the requirement for adaptability to specific situations. Most research treats these as design problems that can be addressed through improved tools or procedures [15]. In contrast, this study treats such contradictions as enduring paradoxes that must be continuously reconciled rather than permanently resolved.

Following Smith and Lewis, we define paradox as “contradictory yet interrelated elements that exist simultaneously and persist over time” [16]. This view helps explain why problems in SOC coordination cannot simply be solved through automation or additional resources. This aligns with earlier work in organizational theory, which sees paradoxes as potential sources of innovation and learning, not just obstacles [17]. Paradox Theory thus provides a dynamic lens to understand how SOC teams cope with simultaneous pressures for speed, control, flexibility, and reliability in high-velocity environments.

Compared to alternative perspectives such as Ambidexterity and Contingency Theories, Paradox Theory is particularly well suited to this context. Ambidexterity frameworks emphasize sequential or structural separation of conflicting demands (e.g., exploration versus exploitation), while Contingency Theory assumes that optimal structures can be designed to fit specific environments. However, SOC operations require simultaneous management of competing logics: acting fast while maintaining oversight, and adapting responses while enforcing standardization. Paradox Theory explicitly addresses this coexistence of contradictions and thus provides a richer lens for examining how SOC professionals navigate these enduring tensions in real time.

This study addresses the following two interrelated research questions:

How do Security Operations Centers (SOCs) experience and navigate paradoxical tensions in incident response?

What role do AI, automation, and human expertise play in reconciling these tensions in practice?

To answer these questions, we draw on data from a multi-case study of SOCs in both public and private organizations. Our analysis surfaced two key tensions shaping incident response: (1) expediency versus authority and (2) adaptability versus consistency. These tensions are not temporary trade-offs, but persistent structural conditions that professionals must continuously manage.

This paper makes several contributions to research on cybersecurity and organizational paradox. First, by bringing Paradox Theory into the SOC domain, we demonstrate how ongoing tensions, not one-time trade-offs, shape daily operations. Second, we propose a five-layer model that explains how human routines, AI, and automation work together to manage these tensions. Third, we show that traditional ambidexterity solutions, such as separating tasks between teams or over time, are often not feasible in SOCs because the conflicting demands must be addressed at once. Lastly, we introduce the concept of Ambidextrous Integration, which describes how SOCs handle competing demands within the same workflows in real time.

Finally, this study anticipates two forms of contribution. From a theoretical standpoint, it extends Paradox Theory to AI-enabled organizational routines by illustrating how paradox reconciliation occurs through sociotechnical integration rather than structural separation. From a managerial standpoint, it offers actionable insight into how CISOs and SOC leaders can embed AI-driven processes that enhance responsiveness without eroding oversight or compliance. Together, these implications establish the relevance of paradox-based thinking for both academic inquiry and cybersecurity practice.

2. Theoretical Background

Research on cybersecurity incident response and Security Operations Centers (SOCs) has traditionally focused on two leading perspectives: technical optimization and organizational implementation [2,3]. The first perspective emphasizes automation, threat detection, and response efficiency, and frames SOC performance primarily as a problem of architectural design and computational improvement. This includes work on intrusion detection systems (IDS), SIEM platforms, and threat intelligence protocols designed to enhance detection accuracy and reduce false positives [5,6,7,8]. The second perspective investigates the socio-organizational dynamics of new technology adoption, highlighting resistance to change, knowledge fragmentation, and analyst fatigue [9,10,11,14]. Together, these perspectives explain how detection and response tools operate, but rarely show how the human and technological dimensions interact as part of an integrated system.

However, both perspectives overlook a fundamental aspect of SOC operations: the contradictory demands that security teams must manage simultaneously. These tensions are not occasional design flaws but enduring organizational features [18]. For example, SOCs must respond to incidents with speed and agility (expediency and ability to adapt) while simultaneously following predefined protocols and governance structures (authority and consistency). Likewise, they must tailor responses to clients’ or business units’ needs, yet maintain standardized, repeatable practices that ensure efficiency and compliance. Recent empirical work shows that such contradictions persist even in mature SOCs, suggesting that they are not temporary inefficiencies but embedded design features [4,15]. Hence, SOC operations are shaped by paradoxical tensions that cannot be permanently resolved but must be continually managed. This change in understanding is aligned with organizational theory, where paradoxes are perceived as sources of learning and adaptive capability, especially in fast-paced, high-stakes environments like cybersecurity [16,17,19].

To clarify the theoretical rationale, this study adopts Paradox Theory because it explicitly theorizes the simultaneous coexistence of opposing organizational demands rather than their temporal or structural separation. Alternative frameworks, such as Organizational Ambidexterity or Contingency Theory, describe sequential or structural differentiation but rarely capture the concurrent, real-time negotiation of contradictions that defines SOC work. Paradox Theory is therefore better suited to our phenomenon, as SOC analysts must uphold procedural authority while improvising rapid responses under uncertainty, managing both sides at once, rather than alternating between them over time.

Within this framing, organizations do not eliminate tensions once and for all but continuously navigate and regulate them through balancing mechanisms. This balancing is operationalized through two reconciliation strategies based on the paradox literature and our empirical findings: (1) Dynamic Equilibrium and (2) Iterative Integration [16,17]. Dynamic Equilibrium captures the ability of SOCs to address divergent demands simultaneously without leaning towards one side (both/and balance perspective), while Iterative Integration reflects the need for constant refinement and adjustment, especially in variable technical settings (continuous adjustment perspective).

However, while Dynamic Equilibrium emerges directly from Paradox Theory, Iterative Integration is an empirically derived insight from this study. Our case study data revealed that SOCs manage paradoxes not only through balance but also through repeated learning, adjustment, and procedural refinement over time. Iterative Integration, therefore, extends Paradox Theory by capturing how paradox navigation unfolds through recursive operational practices in AI-enabled SOC routines.

To complement this perspective, we also draw on the concept of Organizational Ambidexterity, which offers models describing how organizations manage contradictory demands over time. Ambidexterity has been classically operationalized through structural separation (e.g., exploration in R&D, exploitation in operations), temporal cycling (e.g., alternating priorities gradually), or contextual balancing (e.g., empowering people to switch between competing logics) [20,21]. While widely applied in innovation and strategy research, these forms of ambidexterity are less explored in cybersecurity operations and prove limited for capturing simultaneous tensions.

Our empirical study of SOCs reveals that such classic modes of ambidexterity do not fully address the simultaneity and entanglement of tensions in real-time cyber defense. As a result, we use these foundations to develop the notion of Ambidextrous Integration: a configuration in which automation tools, analyst routines, and decision structures are not separated but combined in fluid, co-performing ways. This concept emerges from our data and contributes to both paradox and ambidexterity literature by showing that integration, rather than alternation or separation, becomes the dominant mode of tension management in AI-supported SOCs.

To consolidate these theoretical distinctions and clarify conceptual boundaries, Table 1 compares Organizational Ambidexterity, Paradox Theory, and our proposed construct of Ambidextrous Integration. This comparative synthesis shows that while ambidexterity emphasizes alternation between conflicting goals and Paradox Theory emphasizes their coexistence, Ambidextrous Integration theorizes how these contradictions are enacted and reconciled in real time through the joint agency of humans and AI within SOC operations.

This positioning clarifies that our contribution is not to reassert alternation (ambidexterity) or remain purely interpretive (paradox), but to theorize how SOCs enact reconciliation through integrated, real-time coupling of human expertise and AI-enabled automation.

In this study, AI and automation are not treated as sources of tension but as enablers of paradox reconciliation. AI-powered detection systems and auto-mitigation protocols help sustain Dynamic Equilibrium by accelerating incident response while protecting decision integrity. Meanwhile, adaptive machine learning supports Iterative Integration, allowing SOCs to align with leaders’ priorities and regulatory changes. Thus, SOC analysts and AI-enabled automation function as co-performers within a sociotechnical system that unites human judgment and computational precision at the point of execution.

4. Findings

Analyzing data from security operations centers across public, private, and managed service contexts, we identified two recurring and deeply rooted tensions in the management of incident response: the Response Expediency–Authority paradox and the Adaptability–Consistency paradox. Both reflect opposing demands that SOC professionals face in their daily work. Rather than episodic problems, these tensions are enduring features of SOC practice that require continuous management.

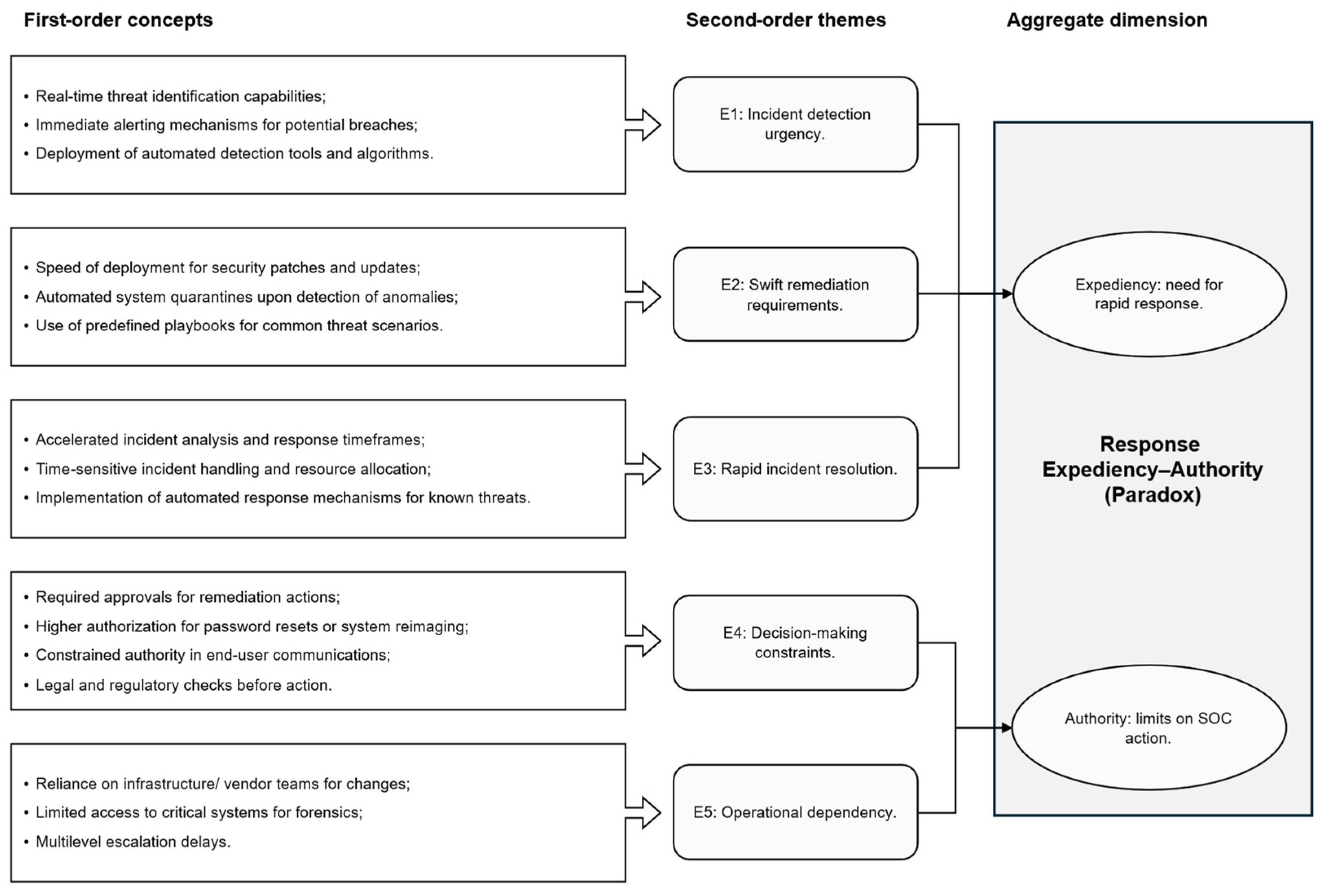

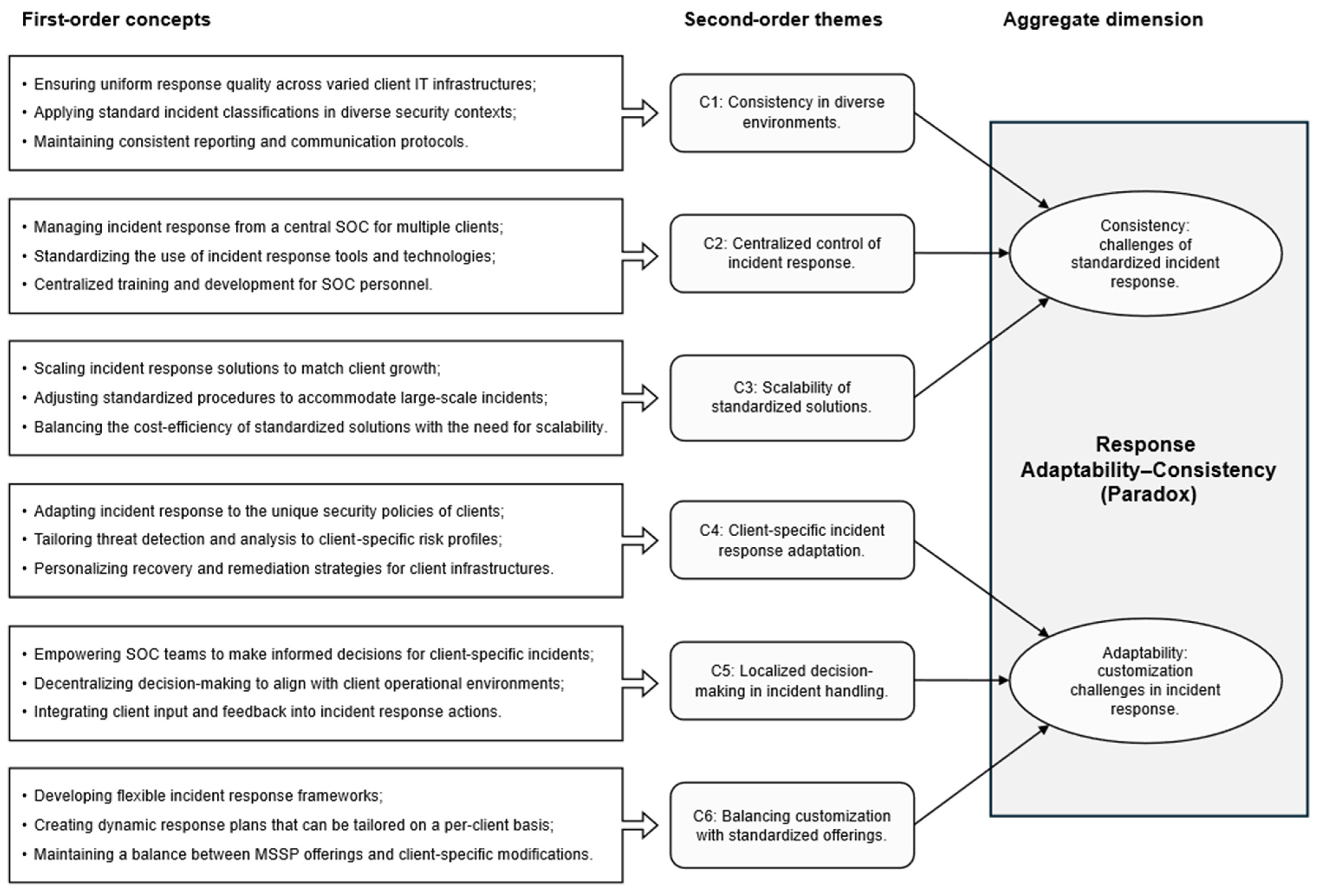

To structure the analysis, we first identified recurring first-order codes and second-order themes that converged into these two paradoxes. Figure 1 and Figure 2 illustrate the underlying data structure for each paradox, showing how analyst accounts, observed routines, and organizational conditions interlock. For readability, we number themes and use those numbers in the text (Expediency–Authority: E1–E5; Adaptability–Consistency: C1–C6). Each paradox is presented below in an integrated sequence: each subsection begins with empirical patterns (participant evidence), followed by a short analytical interpretation (paradox mechanisms) that links to Paradox Theory.

Cross-case analysis revealed that while these tensions appeared across all settings, their expression varied with organizational characteristics. In the multinational CPG organization, pressure for rapid resolution was magnified by production-line dependencies and global coordination requirements. In the public-sector case, authority demands dominated due to regulation and multi-agency oversight. The MSSP exhibited both tensions concurrently: service-level agreements required tailored, time-sensitive responses, while standardization ensured consistency across heterogeneous client environments.

We defer theory building on “Ambidextrous Integration” to Section 5 and focus here strictly on empirical patterns and brief paradox interpretations.

4.1. Response Expediency Versus Authority Paradox

The Response Expediency vs. Authority paradox is illustrated in Figure 1. In all cases, incidents unfolded under a double clock: technical time compressed by automation and organizational time paced by approvals. We observed five interdependent patterns (E1–E5): incident-detection urgency, swift remediation requirements, rapid incident resolution, decision-making constraints, and operational dependency [36,37,38].

E1. Incident-detection urgency (tempo compression): AI and automation accelerated anomaly surfacing and alert triage. SIEM correlations and behavioral models flagged patterns that would otherwise exceed human attention. As one responder noted, “Removing any human error decision-making, managing … high-volume alerts received … monitoring … 24/7 … reaction time … an AI can react much faster than a human analyst” (MST, page 8, 19:52). AI and automation thus magnify this tension: they enhance detection and remediation speeds but do not automatically grant the SOC the authority to act.

E2. Swift remediation requirements (orchestration load): This urgency is further visible in swift remediation requirements. A CISO explained the breadth of coordination across identities, servers, and application owners: “These activities are communicated to the relevant stakeholders to do specific actions. It might be an administrator of active directory … responsible for the user access management … the team that is managing a specific server that required patches … each case is quite unique” (FPA, page 3, 06:49). Automation pre-generated tasks and deadlines, yet it did not eliminate the complexity of human coordination: orchestration still depended on agreement across silos.

E3. Rapid incident resolution (analysis bottlenecks): One analyst reflected that, “Identifying an incident is quite effective … the most difficult part is addressing the incident … time is against you … the attacker continues escalating the privileges … lateral movement … you need to link whether this is the same incident or the same attack … to address it, it takes much more effort” (DMA, page 6, 13:07). Tools reconstructed attack paths and suggested root causes, yet resolution still depended on real-time analysis and strategic choices: final decisions hinged on root-cause confirmation, containment scope, and business risk.

E4. Decision-making constraints (authorization topology): Speed is met with the reality of decision-making constraints. Escalation ladders, risk validation, and regulatory checks slowed execution, especially in the public sector with multi-agency oversight; similar delays arose in the CPG case through plant-level approvals.

E5. Operational dependency (business primacy): The head of platforms described production risks from isolating SAP servers: “For example, you have a server that is sharing their SAP on one production plant. Maybe these guys, they feel that isolate one server instead of all of them and search for them if something has happened to the rest of the servers is not the right way, because this will turn off the production of the plant—so the business is going to challenge the decision to do a deep dive across all of their servers and they would like to accept only the partial system downtime and not full system downtime” (TST, page 4, 09:12). Authority for impactful remediation often sat with operations rather than the SOC. As another expert summarized, fully automatic response remained rare; the CISO explained, “The majority of the response … implementation … remediation activities is taken by the admins … engineers” (FPA, page 5, 9:18). Another expert added “For the response, … it is very risky to provide directly to an assistant the approval to … isolate something, except if it is … 100% confirmed that the threat actor is there and needs to be isolated. So currently, I haven’t seen any organization … given the authority to respond to incidents automatically, except from the ones that the Endpoint Detection Response (EDRs) are blocking them by default, … taking actions afterwards. It is something that the EDR has not detected on the first place and it goes as an action after detecting specific indicators of compromise. I haven’t seen to allow automated actions in any organization” (TST, page 7, 23:04). EDRs act on known patterns; ambiguous cases still require human approval.
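The authorization pattern described in E4 and E5 can be sketched as a simple gate. This is only an illustrative sketch, not a mechanism reported by the participants: the field names, the `gate` function, and the rule that combines "known pattern" with "non-critical asset" are assumptions made for the example.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    AUTO_EXECUTE = "auto_execute"
    NEEDS_APPROVAL = "needs_approval"


@dataclass(frozen=True)
class ProposedAction:
    """A containment step proposed by tooling (all fields are hypothetical)."""
    name: str
    matches_known_ioc: bool        # EDR-style match on a known pattern
    business_critical_asset: bool  # e.g., a server backing a production plant


def gate(action: ProposedAction) -> Decision:
    """Assumed rule: act automatically only on known patterns that do not
    touch business-critical assets; everything else goes to a human approver."""
    if action.matches_known_ioc and not action.business_critical_asset:
        return Decision.AUTO_EXECUTE
    return Decision.NEEDS_APPROVAL


# Usage: a known-bad file on a workstation versus isolation of a production server.
block_file = ProposedAction("block known-bad hash", True, False)
isolate_server = ProposedAction("isolate production server", True, True)
ambiguous = ProposedAction("disable suspicious account", False, False)
decisions = [gate(a) for a in (block_file, isolate_server, ambiguous)]
```

In this sketch only the first action is executed without approval; the other two are routed to a human decision-maker, which mirrors the observation that ambiguous or business-impacting cases still require approval.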

Having outlined these five recurring empirical patterns, we next interpret how they collectively express the core paradox between Expediency and Authority in SOC operations. Across cases, automation compressed “signal time,” but authorization kept “action time” sequential. This misalignment between technical tempo and institutional rhythm exemplifies a core dynamic described in Paradox Theory. The paradox thus stems from a tempo mismatch coupled with distributed authority: the SOC is accountable for speed while control over impactful actions is shared. Paradox Theory suggests that such enduring contradictions cannot be resolved outright. Instead, they must be managed through what is referred to as “Dynamic Equilibrium”, in which actors continuously hold both demands, such as rapid response and proper oversight, through situated balancing rather than resolution.

The following example from the CPG case illustrates how this paradox materializes during real-time incident response. During lateral movement on a production asset, automated correlation raised priority within minutes. Containment required plant approval; the SOC proposed targeted isolation, whereas operations opted for partial shutdown to preserve output. The incident was contained, but only after a negotiated scope.

From these empirical and analytical insights, we derive two propositions that capture the mechanisms through which expediency and authority are balanced in practice. Proposition 1 (Tempo Mismatch): When automation increases detection speed without commensurate authorization redesign, decision latency becomes the principal bottleneck. Proposition 2 (Distributed Authority): The greater the operational dependency on business systems, the more remediation rights shift away from the SOC, reinforcing the paradox.
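Proposition 1 can be illustrated with a small piece of arithmetic. The sketch below assumes, purely for illustration, that time-to-containment is the sum of a detection phase and an authorization phase; the function name and all numbers are hypothetical and are not drawn from the case data.

```python
def decision_latency_share(detection_min: float, authorization_min: float) -> float:
    """Fraction of time-to-containment spent waiting for authorization,
    under the simplifying assumption that the two phases are sequential."""
    total = detection_min + authorization_min
    if total <= 0:
        raise ValueError("total time must be positive")
    return authorization_min / total


# Hypothetical numbers: authorization takes 60 minutes in both scenarios,
# while automation cuts detection from 120 minutes to 5 minutes.
manual = decision_latency_share(detection_min=120, authorization_min=60)
automated = decision_latency_share(detection_min=5, authorization_min=60)

print(f"authorization share, manual detection:    {manual:.0%}")
print(f"authorization share, automated detection: {automated:.0%}")
```

With these assumed figures, authorization accounts for roughly one third of the elapsed time under manual detection and more than nine tenths once detection is automated, even though the approval process itself has not changed.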

These observations show the limitations of classical ambidexterity models. To explain how SOCs navigate this tension, we propose the concept of Ambidextrous Integration. A fuller account of reconciliation mechanisms is developed in Section 5 (Ambidextrous Integration).

4.2. Incident Response Adaptability-Consistency Paradox

The Incident Response Adaptability–Consistency paradox is illustrated in Figure 2. Across all three cases, SOCs faced a persistent contradiction between the need for standardized, repeatable processes and the simultaneous requirement to adapt to context-specific client environments. The paradox emerged from the coexistence of two opposing, yet interdependent logics: the demand for consistency in incident-handling quality and the pressure for adaptability to heterogeneous infrastructures, policies, and risk appetites. We observed six interlinked patterns (C1–C6): consistency in diverse environments, centralized control, scalability of standardized solutions, client-specific adaptation, localized decision-making, and structured customization.

C1. Consistency in diverse environments (baseline discipline): MSSPs relied on uniform operational playbooks to guarantee service reliability across clients. As one of the Microsoft Sentinel cloud security architects mentioned, “SOC processes are really fixed... little flexibility would be needed.” (EAL, Section 5, 14:46). Standard classifications and common reporting practices created a stable foundation for auditability and control.

C2. Centralized control (stability under churn): Turnover and rotating staff challenged continuity. Central triage models, standardized templates, and AI-suggested investigation steps helped maintain baseline quality despite personnel changes. As one incident coordinator reflected, “when the incident is open … we are gathering all the information, building exactly the whole structure of the investigation...” (BMI, page 3, 04:13). The same participant pointed to staff retention as the main difficulty: “Main challenges usually are, if it happens, is the retention of the people within the organization. So this is the one thing, when the peoples there are leaving and joining the company” (BMI, page, 05:57).

C3. Scalability of standardized solutions (growth pressure): Expanding client portfolios revealed limits in one-size-fits-all playbooks. As an engineer noted, “The first thing that we always do is to make sure that the customer is following common standard procedure” (BMI, page 15:09). Yet predefined standards often require adjustment to new infrastructures or SLAs. Automation and AI mitigated this tension by adapting response thresholds to client parameters and enforcing consistent policy application across heterogeneous environments.

C4. Client-specific adaptation (bounded tailoring): SOCs personalized containment and remediation procedures according to client-specific policies and contractual obligations. “There is a standard process in our company but … for each individual customer the process is changed” (EAL, page 4, 10:59). AI-enabled playbooks facilitated such differentiation, allowing analysts to activate or skip predefined steps depending on regulatory and business contexts.

C5. Localized decision-making (dual ownership): While escalation remained centrally orchestrated, the authority to act often resided with business owners. One participant described, “When we are working with incidents … the incident owners or the business owners are the ones that sometimes need to be convinced that we should be setting down parts of operations in order to have the containment and eradication steps” (DMA, page 5, 11:50). Even when automation provided structured recommendations or projected impact scores, final approvals depended on operational leaders.

C6. Structured customization (bending rules without breaking them): Managers described their approach as flexible yet disciplined: “For all customers … our starting point … is our standard … whenever customers would like to customize … process, way of working … we are making sure … it will not affect … quality … effort and costs” (BMI, page 8, 28:56). Configurable templates and AI-driven orchestration tools embedded this flexibility within guardrails, ensuring that local variations remained traceable and controlled.

Having outlined the six recurring empirical patterns, we now turn to interpret how these data exemplify the underlying paradoxical mechanism. The coexistence of standardization and adaptation exemplifies a core paradoxical dynamic in SOC practice. Across cases, automation served as a mediator that stabilized uniformity while enabling bounded flexibility. Configurable systems, parameterized thresholds, and modular playbooks allowed SOCs to operate within a dynamic equilibrium, where variation occurred inside controlled limits. In Paradox Theory terms, SOCs did not alternate between stability and flexibility but held both demands simultaneously through ongoing recalibration. The paradox thus became a governable tension rather than a problem to solve.

The following brief MSSP vignette illustrates how this paradox materializes in daily SOC operations and how automation mediates its reconciliation. A phishing triage engine applied the same classifier across multiple clients but routed containment actions differently according to SLA tiers and regulatory requirements. Senior analysts overrode automated defaults for high-exposure accounts, preserving both the common baseline and client-specific obligations.
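The routing logic in this vignette can be sketched in a few lines. The sketch is a simplified illustration under stated assumptions: the tier names, the containment action labels, the keyword-based `classify` stand-in, and the `analyst_override` parameter are all hypothetical and do not describe the MSSP's actual tooling.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical mapping from SLA tier to default containment action.
CONTAINMENT_BY_TIER = {
    "standard": "quarantine_email",
    "premium": "quarantine_email_and_reset_credentials",
    "regulated": "quarantine_email_and_notify_compliance",
}


@dataclass(frozen=True)
class Client:
    name: str
    sla_tier: str  # must be a key of CONTAINMENT_BY_TIER


def classify(email_text: str) -> bool:
    """Shared baseline for every client: a toy keyword rule standing in
    for a real phishing classifier."""
    lowered = email_text.lower()
    return "verify your password" in lowered or "urgent wire transfer" in lowered


def route(client: Client, email_text: str,
          analyst_override: Optional[str] = None) -> Optional[str]:
    """Same classifier for all clients; containment differs by SLA tier,
    and a senior analyst may override the automated default."""
    if not classify(email_text):
        return None  # not flagged, so no containment action
    if analyst_override is not None:
        return analyst_override
    return CONTAINMENT_BY_TIER[client.sla_tier]


# Usage: one suspicious message, two clients, one analyst override.
message = "Please verify your password immediately"
default_a = route(Client("client-a", "standard"), message)
default_b = route(Client("client-b", "regulated"), message)
overridden = route(Client("client-a", "standard"), message,
                   analyst_override="isolate_mailbox_pending_review")
```

The classifier is the consistent element, the tier table is the bounded adaptation, and the override keeps human judgment in the loop for high-exposure accounts.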

Based on this analysis, we derive two theoretical propositions that capture how SOCs balance adaptability and consistency through automation and distributed decision-making. Proposition 3 (Embedded Flexibility): When automation defines boundaries for permissible variation, SOCs can achieve simultaneous standardization and adaptation through configurable processes. Proposition 4 (Dual Accountability): Where decision authority remains distributed between the SOC and business units, localized judgment endures, making adaptation structurally necessary even under strong standardization.

These findings further demonstrate that SOCs manage paradoxes through continuous reconciliation rather than resolution. A fuller explanation of the mechanisms sustaining this equilibrium is developed in Section 5 (Ambidextrous Integration).

5. Navigating Paradox and Enabling Ambidextrous Integration in Security Operations Centers

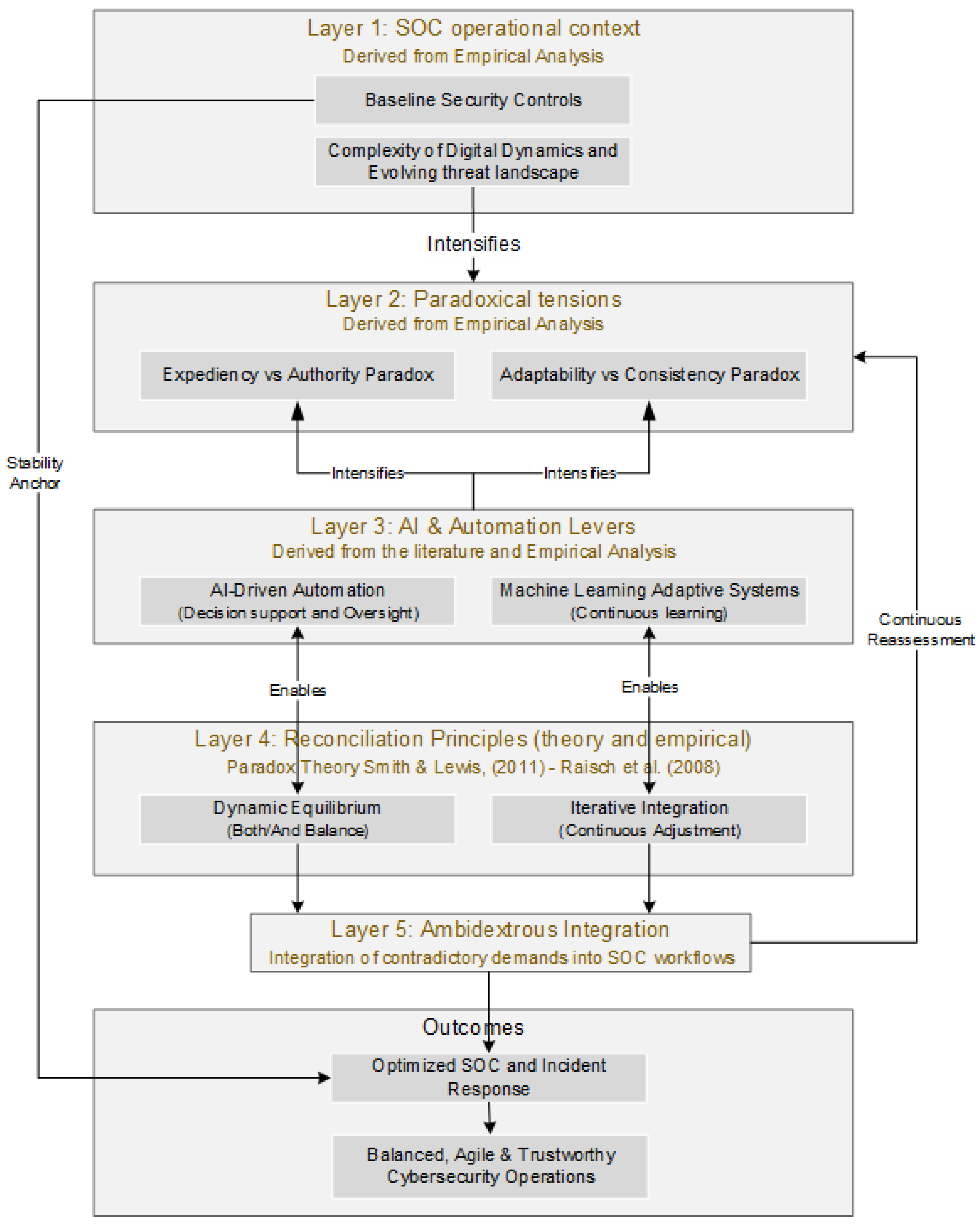

This section presents the conceptual model (depicted in Figure 3) developed from empirical data and grounded in Paradox Theory [16,39]. The conceptual model describes how SOCs navigate two interdependent tensions, (1) expediency versus authority and (2) adaptability versus consistency, that emerged as recurrent organizational paradoxes across the three case studies. Whereas prior SOC literature tends to separate technological, procedural, and human challenges [3,14], this study reconceptualizes these as persistent organizational contradictions requiring continuous reconciliation rather than discrete technical fixes [13].

This model does not aim to solve the tensions but to explain how SOCs manage them over time by integrating competing demands into the same operational space. The model builds layer by layer on second- and third-order dimensions derived from empirical data and advances theoretical understanding of how paradoxes are not eliminated but continuously balanced in practice.

To ensure analytical transparency and align terminology across the paper, we use the exact second-order theme labels from Figure 1 and Figure 2 in the mapping displays in Table 3 (Panels A and B) and in the cross-case matrix (Table 4). This provides one-to-one traceability from data (first-order evidence), themes (second-order), and aggregate paradoxes to the conceptual model. Additionally, Table 5 anchors each conceptual layer in concrete empirical examples drawn from the three cases, illustrating how the theoretical mechanisms materialize in practice.

To strengthen the analytic transparency of the model, we explicitly link the Gioia data structure to five theoretical layers representing how empirical observations evolve into theoretical abstraction. The mapping between first-order concepts, second-order themes, and aggregate dimensions was iteratively refined through constant comparison across the three cases until no new themes emerged. Table 3 summarizes this bridge from empirical context (Layer 1) to reconciliation mechanisms (Layers 4–5), demonstrating that the model was progressively abstracted rather than preconceived.

To demonstrate how the conceptual layers are grounded in the data, Table 3 (Panel A) maps the five second-order themes from Figure 1 to their aggregate dimension (Response Expediency vs. Authority) and to the specific model mechanisms each theme activates. This makes explicit how case evidence informs Layer 2 (tensions) and triggers Layer 3 levers and Layer 4 reconciliation.

Complementing Panel A, Table 3 (Panel B) presents the second-order themes for the Adaptability vs. Consistency paradox matching Figure 2 (e.g., “Consistency in diverse environments,” “Centralized control of incident response”). The right-hand columns show how these themes anchor Layer 2 while channeling toward Iterative Integration (Layer 4) or Ambidextrous Integration (Layer 5).

To assess the robustness of these patterns across organizational settings, Table 4 provides a cross-case presence/intensity matrix. Using a descriptive coding legend (●, ●●, ●●●) and source tags (INT/OBS/DOC), the matrix supports analytic replication by showing where each second-order theme is most salient (CPG SOC, MSSP, public body) and which data streams underpin it.

Across the three cases, the expression of paradoxical tensions varied according to organizational mandate and structure. In the MSSP SOC, the Expediency–Authority paradox was most salient because multi-client engagements required rapid triage under contractual time constraints while maintaining layered approval chains. In contrast, the public body SOC emphasized consistency through centralized oversight, which ensured compliance but slowed incident containment. The CPG SOC occupied a middle position, balancing automation-enabled agility with strong business-risk governance. These contextual variations confirm that paradoxes are systemic yet contingent, requiring reconciliation mechanisms that are adaptive to institutional context.

Together, the mappings in Table 3 and the cross-case profile in Table 4 establish the empirical spine of the conceptual model, justifying a five-layer account that proceeds from operational context (Layer 1) to paradoxical tensions (Layer 2), to technology levers (Layer 3), to reconciliation principles (Layer 4), and, finally, to Ambidextrous Integration (Layer 5).

To anchor Figure 3 in practice before detailing each layer, Table 5 provides one empirical instantiation per layer and case (CPG, MSSP, Public), with source anchors (INT/OBS/DOC).

As summarized across Table 3, Table 4, and Table 5, the conceptual model integrates data-derived themes, cross-case variation, and case-specific instantiations. This triangulation establishes the foundation for the five-layer exposition that follows, detailing how SOCs transform paradoxical tensions into a dynamic capability of Ambidextrous Integration.

Layer 1—SOC Operational Context

Each layer of the model is grounded in the collected empirical data presented in our Gioia tables and Findings Sections. The first layer, “SOC operational context”, identifies three foundational elements that shape how tensions emerge and escalate within Security Operations Centers: baseline security controls; the complexity of digital dynamics; and the evolving threat landscape.

These components work together in a dual role. They provide the necessary structure for stability, such as standardized rules and control mechanisms, while also intensifying the demands of real-time response. For example, baseline security controls guide procedural integrity but can slow down action in fast-moving situations. In the public-body SOC, rigid baseline controls ensured compliance with governmental audit requirements but delayed escalation when incidents spanned multiple agencies, demonstrating how structural stability heightened the need for flexibility. This illustrates that stability and agility co-evolve rather than substitute for each other, an early manifestation of paradoxical coupling.

The complexity of digital dynamics, such as multi-vendor environments, interconnected systems, and cloud infrastructure, makes incident diagnosis and containment more difficult. In the MSSP SOC, for example, continuous client onboarding required analysts to reconcile standardized templates with diverse customer infrastructures, exemplifying how operational complexity amplifies paradoxical demands. The evolving threat landscape, with increasingly sophisticated and fast-moving attacks, places constant urgency on SOC performance. Together, these conditions form the contextual layer that sustains rather than causes paradoxical tension.

Across cases, this environment both supports and challenges operations, amplifying internal contradictions that set the stage for the paradoxes described in the next layer of the model.

Layer 2—Paradoxical Tensions

The second layer, “Paradoxical tensions”, introduces the core paradoxical tensions that structure SOC decision-making. These tensions do not arise as isolated problems but are systemic contradictions embedded in SOC workflows. They build the theoretical basis of the conceptual model as they clarify the dual demands that must be continuously balanced rather than resolved.

The first tension, Expediency versus Authority, captures the contradiction between the need for rapid action and the requirement for oversight. In the MSSP SOC, analysts described situations where automated triage recommended containment within seconds, yet escalation procedures demanded managerial validation, creating friction that vividly embodied the Expediency–Authority paradox.

The second tension, Adaptability versus Consistency, emerges from the need to customize incident response actions to specific client environments while keeping processes stable and auditable. In the CPG SOC, global playbooks had to be locally adapted for plants subject to strict operational-technology constraints, showing how flexibility and consistency must coexist. Likewise, the public-body SOC faced continuous adjustments between national standards and department-specific protocols, further illustrating this persistent duality.

In the model, these paradoxes represent the engine of SOC functioning, continually generating the need for reconciliation rather than resolution. They serve as the reference point for evaluating whether AI, automation, and organizational routines facilitate or intensify the paradoxical dynamics that follow. Having outlined the central tensions that energize SOC activity, the subsequent layer examines how AI and automation mechanisms mediate these opposing demands rather than resolve them.

Layer 3—AI and Automation Levers

The third layer, “AI and automation levers”, in the conceptual model introduces the technological mechanisms that influence how paradoxical tensions are managed in SOCs. Rather than eliminating contradictions, these tools act as enablers that help organizations live with and respond to paradoxes more effectively.

The first lever, AI-driven automation, enhances the speed and scale of SOC operations while introducing new oversight challenges. Tools such as automated alert triage, AI-guided playbooks, and real-time isolation mechanisms increase responsiveness and minimize manual effort. However, the same capabilities also raise concerns about authority and validation, as automated decisions may outpace traditional escalation paths. In this way, AI-driven automation contributes to both sides of the Expediency–Authority paradox. At the MSSP SOC, automated triage substantially reduced detection time but simultaneously created validation bottlenecks, as analysts waited for managerial sign-off before containment, making visible the Expediency–Authority tension in practice.

The second lever, adaptive machine learning systems, allows continuous learning by tuning detection and response strategies from emerging data. These systems modify rules, surface patterns from different incidents, and support analysts in refining actions over time. In the CPG SOC, for instance, adaptive correlation models were retrained after each malware outbreak, improving detection accuracy yet challenging consistency with global playbooks. Such adaptive mechanisms exemplify the iterative learning component of paradox navigation.

In the conceptual model, this layer serves as a pivot: it connects the tensions from Layer 2 with the reconciliation practices in Layer 4. These AI and automation levers do not offer resolution, but they expand the operational bandwidth of SOCs, making it possible for the teams to work within paradox rather than eliminate it. Their effectiveness is determined not only by their technical design but also by how they are embedded into human workflows, decision rights, and structures that reflect accountability.

Layer 4—Reconciliation Principles

The fourth layer of the model explains how SOCs manage the tensions identified in Layer 2. It introduces two core reconciliation principles: Dynamic Equilibrium and Iterative Integration. These principles represent how organizations cope with opposing demands without resolving them, but rather by keeping both active over the course of time.

Dynamic Equilibrium comes directly from Paradox Theory [16,39]. It describes the capacity of organizations to sustain contradictory goals simultaneously. In SOC operations, this means that responses to incidents should be quick (expediency) while ensuring decisions follow proper supervision and governance (authority). Rather than favoring one side over the other or alternating between them, SOCs build joint processes in which speed and control are both maintained. This requires ongoing adjustment and mutual coordination between automation tools and human actors.

In contrast, Iterative Integration is an insight grounded in our empirical findings. It refers to the constant fine-tuning of routines over time, especially as a response to evolving threats, changing technologies, or client-specific needs. Unlike dynamic equilibrium, which emphasizes holding tensions together in the moment, Iterative Integration captures how SOCs adjust playbooks, escalation matrices, and detection rules over multiple incidents. This type of learning process allows SOCs to balance between being adaptive and consistent by embedding change into routines without losing structure. Together, these principles translate the abstract notion of paradox management into observable organizational practice.

These two mechanisms provide a more realistic path to paradox navigation than traditional models of ambidexterity. Classical ambidexterity, as defined in organizational theory [

21], relies on either structural separation (delegating opposing objectives to different teams or units) or temporal separation (alternating between goals over time). However, our findings suggest that SOCs face both sets of demands simultaneously, within the same time frame and operational space. Therefore, separating tasks by team or time does not address the persistent and interrelated nature of these tensions adequately. SOCs need mechanisms working within a unified system, not by division.

For example, in the Expediency versus Authority paradox, analysts are expected to take immediate containment action, such as isolating compromised assets, while awaiting formal approval from business units or risk managers. Such steps cannot be temporally sequenced because delays in containment increase risk, nor can they be structurally assigned to different units without creating decision bottlenecks. The analyst must act fast and defer to oversight at the same time. This concurrent demand highlights why traditional separation models fall short in the SOC environment and why integration within the same workflow is essential.

Similarly, in the Adaptability versus Consistency paradox, SOC teams often customize playbooks to accommodate specific customer infrastructures or compliance requirements. Yet, these customizations must still follow a common baseline of operational quality and reporting. Temporally separating these tasks (e.g., customizing first, standardizing later) risks causing errors or delays, while structurally assigning standardization and customization to separate teams leads to misalignment and rework. Only by embedding both goals, tailoring and standardizing, within a single coherent response process can SOCs maintain both flexibility and procedural reliability.

Together, Dynamic Equilibrium and Iterative Integration can explain how SOCs actively manage paradoxical tensions not by eliminating contradictions, but by enabling systems that can operate under both logics at once. These principles lay the groundwork for Ambidextrous Integration, the fifth layer of our model, which captures how organizations embed these reconciliation mechanisms into the core structure of incident response [16,19].

Layer 5—Ambidextrous Integration

Ambidextrous Integration (Layer 5) is the central contribution of this conceptual model and represents our theoretical synthesis. Empirically, this mechanism was evident across all three cases. In the CPG SOC, containment procedures were pre-integrated with business-approval logic so that analysts could isolate assets while automatically triggering managerial notification, an illustration of real-time reconciliation between speed and control. In the public-body SOC, customized playbooks were embedded within standardized national frameworks, ensuring both contextual adaptation and procedural consistency. At the MSSP SOC, automation thresholds were configured collaboratively with clients, institutionalizing flexibility within governance boundaries. This new construct is defined here as the simultaneous embedding of dual logics within day-to-day SOC workflows: both demands are fulfilled within the same workflow (e.g., being fast and thorough at once, rather than choosing between them during incident response). In the organizational theory literature, ambidexterity describes an organization’s ability to manage conflicting demands, such as exploration and exploitation [21]. This is typically achieved through temporal separation (performing one task first, then the other) or structural separation (creating different teams or units to handle each logic). However, such methods may not be efficient in time-sensitive, high-risk environments like SOCs.

Ambidextrous Integration, as introduced in this study, departs from these separation-based strategies. It describes the real-time coexistence of conflicting demands, such as expediency and authority, or adaptability and consistency, within the same operational process.

Ambidextrous Integration requires that SOC teams internalize paradoxical demands into a shared, cohesive workflow such that competing targets like speed and control, or adaptability and consistency, are no longer treated as sequential phases or distributed tasks but are instead managed simultaneously. This model of working relies on embedded routines, automation, and decision structures that allow these contradictions to be held and managed in the moment, rather than resolved in advance or deferred across teams.

This layer goes further than traditional views of ambidexterity as it proposes that in SOC environments, competing demands are not only managed side by side but structurally embedded into core operational routines. Rather than toggling between customization and standardization, SOCs intentionally design modular workflows that embed both. Adaptation is not external to the standard; it is modularized and designed into it.
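The idea of adaptation being "modularized and designed into" the standard can be illustrated with a minimal sketch. It is not drawn from any of the studied SOCs: the step names, the `Step` class, and the `build_playbook` helper are all hypothetical, and the sketch assumes that local modules may only be attached at named baseline steps and may never remove one.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    name: str
    baseline: bool = True  # True for steps every site must run and audit


# Hypothetical global baseline; the step names are illustrative only.
BASELINE = (Step("triage"), Step("contain"), Step("report"))


def build_playbook(baseline, extensions):
    """Insert local modules after named baseline steps; never drop a baseline step."""
    known = {step.name for step in baseline}
    unknown = set(extensions) - known
    if unknown:
        # A local module must attach to an existing baseline step.
        raise ValueError(f"no such extension point: {sorted(unknown)}")
    playbook = []
    for step in baseline:
        playbook.append(step)
        playbook.extend(
            Step(name, baseline=False) for name in extensions.get(step.name, ())
        )
    return playbook


# Hypothetical local adaptation for a plant with operational-technology constraints.
plant_playbook = build_playbook(
    BASELINE, {"contain": ["confirm_safe_shutdown_window"]}
)
step_names = [step.name for step in plant_playbook]
```

In this sketch the baseline sequence stays intact and auditable in every variant, while the local module lives inside the same playbook rather than in a separate, later customization phase.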

Similarly, incident containment actions are not treated as isolated technical interventions. They are embedded within predefined authority boundaries that allow rapid response while safeguarding governance. This ensures that decisions balancing speed and risk are made without compromising accountability. As a result, decision rights, thresholds for escalation, and automation triggers are all pre-integrated into response routines.
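A minimal sketch of what pre-integrated decision rights, escalation thresholds, and notification triggers could look like follows. It is an assumption-laden illustration, not a description of the studied SOCs' tooling: the criticality scale, the `AuthorityBoundary` fields, and the role name are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AuthorityBoundary:
    max_auto_criticality: int  # highest asset criticality analysts may isolate unaided
    approver_role: str         # role notified on every containment action


@dataclass(frozen=True)
class ContainmentDecision:
    isolate_now: bool
    escalate_for_approval: bool
    notify: str


def decide_containment(asset_criticality, boundary):
    """Resolve speed and oversight in one step instead of two sequential phases."""
    within_boundary = asset_criticality <= boundary.max_auto_criticality
    return ContainmentDecision(
        isolate_now=within_boundary,
        escalate_for_approval=not within_boundary,
        notify=boundary.approver_role,  # oversight is informed in both branches
    )


# Hypothetical boundary: criticality is scored 1 (low) to 5 (high).
boundary = AuthorityBoundary(max_auto_criticality=3, approver_role="business_risk_manager")
routine = decide_containment(2, boundary)
critical = decide_containment(5, boundary)
```

Within the boundary, isolation and managerial notification happen in the same step; above it, the case is escalated. The threshold itself is the kind of parameter that the feedback loop described in this section would revisit as conditions change.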

The model also emphasizes a feedback loop between Ambidextrous Integration and Paradoxical Tensions. As new threats emerge, technologies evolve, or organizational roles shift, the reconciliation mechanisms must be revisited. In several cases, ambiguity in responsibilities, unclear escalation matrices, or absence of continuous standby support led to renewed tension. These examples highlight that paradox reconciliation is never final; ongoing assessment and redesign of routines and structures are needed.

This recurrent nature reinforces the idea that Ambidextrous Integration is not a frozen state but a dynamic capability. It grows via constant refinement, whereby SOC teams evaluate whether the embedded processes still effectively manage contradictory demands. When they do not, the system is adapted—not to resolve the paradox, but to better hold its opposing forces in place under new conditions.

Outcome Layer—Sustained Paradox Navigation as Operational Capability

The bottom of the model presents the outcomes: optimized SOC and incident response capabilities. These are not static achievements, but emergent properties of continuously navigating paradoxical demands in a dynamic, sociotechnical environment.

Rather than viewing performance outcomes as final deliverables, the model conceptualizes them as recurring effects of sustained paradox reconciliation. This reframing positions SOC agility, trustworthiness, and balance as dynamic capabilities achieved through the continuous orchestration of competing demands, not through their elimination.

In contrast to traditional models that assume fixed configurations of technology, roles, and policies (e.g., defining automation rules once or standardizing escalation procedures), this approach follows a temporal and adaptive logic. Drawing from Paradox Theory [16,39], the model emphasizes the need to revisit and recalibrate SOC practices as threats evolve, technologies advance, and organizational structures change.

This outcome layer thus closes the loop; it shows that successful SOC performance stems not from resolving contradictions, but from developing the routines and structures to hold them in productive tension. Paradox navigation becomes an embedded organizational capability, continuously enabling SOCs to adapt without losing control and to scale without sacrificing contextual sensitivity.

In this way, the model moves beyond a descriptive mapping of tensions to propose a theoretical mechanism of Ambidextrous Integration as the foundation for ongoing effectiveness. What emerges is a self-renewing system where performance depends on the institutionalization of paradox reconciliation, not its one-time resolution.

In summary, the conceptual model illustrates how Security Operations Centers navigate enduring organizational paradoxes through layered mechanisms that couple speed with control and flexibility with structure. Rather than resolving contradictions, SOCs institutionalize them as part of daily routines, transforming paradox management into an operational capability. This insight extends Paradox Theory into the cybersecurity domain by showing how Dynamic Equilibrium and Ambidextrous Integration function in real time within sociotechnical systems. Building on this foundation, the following Discussion section elaborates how these mechanisms advance theoretical understanding of paradox navigation and inform practical design principles for resilient, AI-enabled security operations.

6. Discussion and Conclusions

6.1. Contributions

This study makes a novel academic contribution by revealing, theorizing, and reconciling the persistent organizational tensions that are present in SOCs’ incident response practices. While prior SOC research has focused on technical tool deployment or on mitigating human capacity issues, this study brings to light the deeper interdependence between technology (AI, automation), human judgment, and governance context. For instance, Tilbury and Flowerday [14] show that automated detection and response tools in SOCs are often introduced with limited attention to their impact on human analysts. Similarly, Patterson [13] highlights that organizations tend to conduct superficial post-incident learning, rarely embedding lessons learned into operational routines. These examples show that prior literature treated tensions among technology, institutions, and professionals as trade-offs to manage, such as speed versus caution or efficiency versus control. In contrast, our findings indicate that these tensions are persistent contradictions that must be continuously balanced over time.

We apply Paradox Theory [16,39] to reframe these tensions not as implementation problems but as paradoxes that require ongoing, iterative reconciliation. Specifically, the study identifies two paradoxes in SOC operations: Expediency versus Authority and Adaptability versus Consistency. These tensions, not previously theorized as enduring organizational paradoxes in cybersecurity, move the discussion beyond describing SOC complexity toward understanding how operations are dynamically maintained in real time.

By introducing these paradoxes into high-velocity, high-risk digital environments, this study extends the theory’s applicability beyond traditional corporate or innovation contexts. These paradoxes emerge not only from technical processes, but also from institutional expectations around who has decision-making authority, how procedures are followed, and how accurate action must be across diverse environments. AI and automation tools often speed up detection and response, but can simultaneously dilute accountability. For example, when automated decisions escalate an incident without human validation, SOCs must decide who is responsible. Similarly, automation workflows can standardize operations but may conflict with client-specific adaptation needs. These dynamics bring paradoxes to the surface, forcing SOCs to tackle them directly rather than treat them as secondary concerns.

To explain how SOCs manage competing demands, we introduce the concept of Ambidextrous Integration. Unlike traditional views of ambidexterity [21], which separate opposing goals within different teams or phases, Ambidextrous Integration embeds both sides of the paradox, such as rapid response and oversight, into the same workflow. This approach reflects the reality of the SOC ecosystem, where expediency and precision must coexist. It also extends the application of Paradox Theory [16] by demonstrating how contradictions can be managed through integration rather than separation.

The conceptual model developed in this study offers a framework for understanding how SOCs achieve resilience by navigating tensions without eliminating them. AI, automation, and SOC analyst expertise are not presented as competing forces, but as balancing mechanisms that help reconcile conflicting goals. Empirically, this study extensively analyzes SOC operations across three distinct organizational contexts: a leading CPG company, a global MSSP, and a governmental agency. The findings show how SOCs use layered authority, flexible procedures, and hybrid governance to reconcile operational paradoxes in practice.

Theoretically, this study contributes to three domains. First, it advances Paradox Theory by situating paradox navigation within sociotechnical systems, emphasizing that technological acceleration and institutional rigidity can coexist as mutually enabling forces. Second, it extends organizational studies of cybersecurity by shifting focus from technical or human limitations to systemic tensions as drivers of learning and adaptation. Third, it introduces Ambidextrous Integration as a novel construct that captures how SOCs embed contradictory demands into unified routines, thereby operationalizing Paradox Theory in high-velocity, high-stakes environments.

Practically, this study provides actionable guidance for SOC leaders, CISOs, and cybersecurity practitioners. It demonstrates that paradoxes such as speed versus control or standardization versus flexibility cannot be eliminated through new tools or restructuring but must be managed through deliberate design. SOCs can strengthen resilience by embedding Ambidextrous Integration into their workflows: aligning automation triggers with human approval paths, modularizing playbooks to balance client specificity with procedural consistency, and codifying accountability for algorithmic actions. This perspective shifts managerial focus from implementing discrete technologies to cultivating dynamic coordination capabilities across human and machine actors. Such an approach informs how organizations can sustain operational agility, regulatory compliance, and trust in increasingly automated environments, turning paradox navigation into a strategic competency rather than a recurring problem.

6.2. Limitations and Further Research

As with any interpretive study, several boundary conditions delimit the scope of these findings and indicate fruitful directions for future research. Despite the rigorous steps taken to ensure that the findings are reliable and valid, the study’s inductive qualitative design offers depth of insight at the cost of broader generalizability. This interpretive orientation is consistent with paradox research traditions that privilege depth of meaning over breadth of prediction [16,17].

The three organizations examined in this study, two operational SOCs and one MSSP, capture diverse but not exhaustive manifestations of SOC and incident-response dynamics. While these cases reflect significant heterogeneity in ownership model, scale, and governance (public, private, and hybrid service models), they do not encompass all possible SOC archetypes or industrial environments. Expanding the scope to companies of different sizes, with fewer than 20,000 or more than 120,000 employees (for instance, small and medium-sized enterprises or large multinationals), and operating in different industries would enhance generalizability. Future comparative work could also integrate cross-regional or cross-sectoral cases (e.g., finance, healthcare, or critical infrastructure) to account for cultural, regulatory, and infrastructural variations in paradox navigation and to explore how institutional environments shape paradox enactment.

We recommend that future studies apply quantitative or mixed-methods designs to complement and validate our qualitative insights. Such work could operationalize constructs like expediency, authority, adaptability, and consistency through measurable indicators and test their relationships statistically. Longitudinal designs could also trace how reconciliation mechanisms evolve with AI maturity and trust calibration over time.

Additionally, while this study identified paradoxical tensions as persistent organizational features, their manifestation may evolve as technology matures. The long-term impact of AI and automation integration in SOC operations requires exploration, particularly with respect to how dynamic reconciliation mechanisms evolve and scale. Future studies should examine whether Ambidextrous Integration stabilizes as a durable capability or generates new paradoxes as SOCs mature technologically.

As automation reshapes the human–machine interface, it may introduce novel paradoxes or reconfigure existing ones, altering the balance between control, accountability, and trust. Such dynamics may, for example, shift the distribution of decision authority or accountability when automated agents act semi-autonomously. Future research could also test the identified paradoxical tensions and the proposed conceptual model in other high-reliability sectors, such as energy, critical infrastructure service providers, or national-security operations, to assess their transferability across organizational contexts.

Additionally, it would be valuable for scholars to examine the potential challenges associated with AI and automation, as highlighted by the head of network security: “fault cannot be thrown on the AI, you cannot sue it … You cannot ask for penalties because AI did something wrong … deleting the configuration of 50 sites … gonna be a person needs … who sits behind it” (TDO, page 7, 09:40). This empirical insight underscores the accountability gap created by autonomous systems, revealing an under-theorized paradox between human liability and machine agency. Hence, future research could further explore the trust–accountability paradox in AI-enabled environments and develop governance mechanisms to mitigate the “responsibility vacuum” arising when algorithmic actions cause harm.

Moreover, future studies could also explore how paradoxical tensions manifest in different governance models (e.g., decentralized vs. centralized SOCs), and how reconciliation mechanisms may vary by organizational maturity, sector, or threat exposure. Such comparative inquiries would refine the boundary conditions of Paradox Theory and extend its relevance to high-reliability, technology-intensive domains.

Overall, these future directions would advance both Paradox Theory and cybersecurity practice by clarifying how paradox-navigation capabilities can be institutionalized, scaled, and governed in increasingly automated environments. By treating paradox not as a problem to solve but as a capability to cultivate, future research can further illuminate how organizations sustain resilience amid technological acceleration and institutional complexity.

6.3. Conclusions

The systematic analysis of three real-world organizational case studies reveals how Security Operations Centers (SOCs) sustain resilience by learning to live with, rather than eliminate, contradictory demands. Through the joint orchestration of artificial intelligence (AI), automation, and human expertise, SOCs transform persistent tensions into productive sources of coordination and learning. Rather than resolving tensions, SOCs continuously balance competing logics, acting swiftly while preserving oversight and tailoring responses while maintaining standardization, through the dynamic embedding of automation and AI alongside SOC operator judgment.

Most participants viewed AI as a catalyst that enhances speed and scalability while reaffirming the irreplaceable value of human judgment in context-sensitive decisions. This interplay between automation and expertise positions paradox navigation as an organizational capability rather than a technical trade-off.

Practically, the findings highlight the need for closer collaboration among SOC leaders, CISOs, and researchers to design governance mechanisms, playbooks, and training systems that institutionalize paradox navigation. By aligning automation triggers with human oversight and clarifying accountability in AI-driven actions, organizations can preserve both agility and integrity in high-velocity threat environments.

Theoretically, the study advances Paradox Theory within sociotechnical systems by showing how technological acceleration and institutional control can coexist as mutually enabling forces. The construct of Ambidextrous Integration synthesizes this dynamic, demonstrating how SOCs embed speed with oversight and adaptability with consistency within unified operational routines.

Although most participants expressed enthusiasm about AI, some underscored the emerging risks surrounding accountability and trust when automated systems act semi-autonomously. Future work should investigate how these paradoxes of control, liability, and explainability evolve across sectors as AI autonomy increases.

Overall, this synthesis underscores that SOC effectiveness arises not from resolving contradictions but from institutionalizing their productive balance. By embedding paradox navigation into sociotechnical routines, organizations can transform tension into a continual source of resilience, adaptability, and learning in cybersecurity operations. This integrative perspective reframes paradox not as a constraint but as a dynamic capability that sustains performance under technological and institutional complexity.